2.1. Fundamentals of Portfolio Entropy

Entropy Approach to a Portfolio

Entropy is a fundamental concept that seeks to characterize the degree of randomness and uncertainty inherent in a given system. Originating from thermodynamics and later extended to information theory, entropy serves as a quantitative measure of disorder, playing a critical role in diverse fields such as econometrics, finance, and statistical mechanics. In the context of dynamic systems, entropy provides a rigorous framework for assessing unpredictability, where a system with zero entropy exhibits complete determinism, while higher entropy values indicate greater uncertainty and disorder [20].

Over time, significant contributions have shaped the theoretical foundations of entropy and its applicability in various disciplines. Boltzmann’s statistical mechanics laid the groundwork for entropy as a measure of disorder in physical systems, while Shannon formalized it within information theory, defining entropy as the expected value of information content. Further refinements by Wiener, Khinchin, Faddeev, Rényi, Tsallis, Guiasu, and Onicescu have extended its conceptual and mathematical underpinnings, allowing entropy to be applied beyond physics to areas such as finance and portfolio theory. Notably, Jaynes introduced the principle of maximum entropy, stating that when dealing with incomplete or partial information, the most unbiased probability distribution is the one that maximizes entropy [11, 17]. This principle has since become a cornerstone of probabilistic inference and decision-making under uncertainty.

Within the realm of financial analysis and portfolio management, entropy is increasingly recognized as a robust measure of diversification and risk assessment. Unlike traditional variance-based metrics, entropy-based measures provide a more generalized and flexible approach to evaluating portfolio uncertainty. Shannon’s foundational nonlinear entropy model paved the way for subsequent advancements, such as Yager’s application of the maximum entropy principle in decision-making frameworks. Wang and Parkan introduced a linear entropy model, while Philippatos and Wilson leveraged entropy as an alternative risk metric in portfolio selection, arguing that entropy encompasses a broader perspective on uncertainty compared to variance. Additionally, Jiang et al. proposed a maximum entropy framework for portfolio optimization, demonstrating its efficacy in constructing well-diversified asset allocations. These contributions have collectively expanded the applicability of entropy in financial modeling, leading to a range of sophisticated methodologies for managing uncertainty in investment strategies [2].

Empirical Approach to the Entropy of a Portfolio

If we refer to the financial environment, in particular to portfolio theory, we can observe the following. We denote a portfolio formed from $n$ assets by $x = (x_1, \dots, x_n)$, where $x_i \ge 0$ is the proportion invested in the $i$-th asset and $\sum_{i=1}^{n} x_i = 1$.

If a certain asset $A_k$ has a high degree of investment security (low risk and high profit), then it is natural to invest a large proportion in it, i.e., $x_k \to 1$; therefore $x_i \to 0$ for $i \neq k$, and the portfolio has the structure $x = (0, \dots, 0, 1, 0, \dots, 0)$, with the 1 in position $k$. The uncertainty of such a portfolio is minimal.

If the portfolio is formed from assets about which almost nothing is known, then it is natural (the diversification principle) to invest equal proportions in each of the assets. Therefore, the portfolio has the structure $x = (1/n, \dots, 1/n)$, and its uncertainty is maximal.

Remark 1: As a measure of portfolio uncertainty, we should consider a weighted average of the perceived uncertainty of each asset, $H(x) = \sum_{i=1}^{n} x_i h(x_i)$, where $h(x_i)$ represents the perceived uncertainty of the investment in the $i$-th asset.

Remark 2: As the proportion $x_i$ increases, the uncertainty decreases, so the magnitude of the signal (that is, of the uncertainty of the investment $x_i$) decreases. We therefore take the signal associated with the $i$-th asset to be $1/x_i$.

Remark 3: Empirically, the perception of a signal is found to be proportional to the logarithm of the signal. In our case, $h(x_i) = \log(1/x_i)$. Combining the above observations, we can take as the measure of uncertainty of a portfolio the function

$$H(x) = \sum_{i=1}^{n} x_i \log\frac{1}{x_i} = -\sum_{i=1}^{n} x_i \log x_i.$$

Remark 4: The function $t \mapsto -t \log t$ (with $0 \log 0 = 0$) is concave on $[0, 1]$. Therefore, by Jensen’s inequality, $H(x) \le \log n$, and $H$ attains its maximum value when $x_1 = x_2 = \dots = x_n = 1/n$.
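The Jensen step can be written out explicitly for the portfolio entropy $H(x) = -\sum_{i=1}^{n} x_i \log x_i$. Applying Jensen’s inequality to the concave function $\varphi(t) = -t\log t$ with equal weights $1/n$:

```latex
\frac{1}{n}\sum_{i=1}^{n} \varphi(x_i)
  \;\le\; \varphi\Big(\frac{1}{n}\sum_{i=1}^{n} x_i\Big)
  \;=\; \varphi\Big(\frac{1}{n}\Big)
  \;=\; \frac{1}{n}\log n,
\qquad \varphi(t) = -t\log t,\quad \varphi(0) = 0 .
```

Multiplying by $n$ gives $H(x) = \sum_{i} \varphi(x_i) \le \log n$. Because $\varphi$ is strictly concave, equality holds only when all $x_i$ are equal, i.e., at $x = (1/n, \dots, 1/n)$.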

Axiomatic Approach to Portfolio Entropy

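The empirical approach to entropy already suggests two essential conditions that it must satisfy, namely:

C₁: $H(0, \dots, 0, 1, 0, \dots, 0) = 0$;

C₂: $H(1/n, \dots, 1/n)$ is maximum, where $H(x)$ represents the entropy of portfolio $x$.

On the other hand, it is clear that $H$ depends only on the investments actually made, $H(x_1, \dots, x_n, 0) = H(x_1, \dots, x_n)$, and the order of the investments is also not important, i.e., $H(x_{\sigma(1)}, \dots, x_{\sigma(n)}) = H(x_1, \dots, x_n)$ for every permutation $\sigma$. In conclusion, if we define $f(n) = H(1/n, \dots, 1/n)$ as the maximum of all entropies of portfolios with $n$ effective investments, then $H$ and $f$ satisfy the following conditions:

Axiom I. 1) $H(x_1, \dots, x_n) \le f(n)$ for every portfolio with $n$ assets (reformulation of condition C₂)

2) $f$ is strictly increasing

3) $f(1) = 0$ (reformulation of condition C₁)

Axiom II. $f(mn) = f(m) + f(n)$ for all positive integers $m, n$ (the function $f$ is of logarithmic type)

Axiom III. The function $H$ is continuous

Axiom IV. $H(x_1, \dots, x_n) = H(s, x_3, \dots, x_n) + s\, H\big(x_1/s,\ x_2/s\big)$, where $s = x_1 + x_2 > 0$

As a result of existing studies in this direction in the specialized literature, we can state the following result:

Theorem: If the function $H$ satisfies Axioms I-IV, then $H(x_1, \dots, x_n) = -C \sum_{i=1}^{n} x_i \log x_i$ for a certain constant $C > 0$, where $0 \log 0 = 0$ by convention.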
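As a quick numerical check of the theorem, the sketch below evaluates $H$ with $C = 1$ and natural logarithms (both choices made here only for illustration) and verifies the two empirical conditions and the standard grouping property of Shannon entropy on small examples. The function name `portfolio_entropy` is hypothetical.

```python
import math
from typing import Sequence


def portfolio_entropy(weights: Sequence[float], c: float = 1.0) -> float:
    """H(x) = C * sum_i x_i * ln(1 / x_i), with the convention 0 * ln(1/0) = 0."""
    if any(w < 0 for w in weights) or not math.isclose(sum(weights), 1.0, abs_tol=1e-9):
        raise ValueError("weights must be non-negative and sum to 1")
    # Assets with zero weight contribute nothing, since t * ln(1/t) -> 0 as t -> 0.
    return c * sum(w * math.log(1.0 / w) for w in weights if w > 0)


if __name__ == "__main__":
    n = 4
    print(portfolio_entropy([1.0, 0.0, 0.0, 0.0]))        # fully concentrated: 0.0 (condition C1)
    print(portfolio_entropy([1.0 / n] * n), math.log(n))  # equal weights reach ln n (condition C2)

    # Grouping property: merge the first two assets, then add their weighted internal entropy.
    x = [0.1, 0.3, 0.6]
    s = x[0] + x[1]
    grouped = portfolio_entropy([s, x[2]]) + s * portfolio_entropy([x[0] / s, x[1] / s])
    print(abs(portfolio_entropy(x) - grouped) < 1e-12)    # True
```

Because any positive $C$ only rescales $H$, choosing $C$ and choosing the base of the logarithm amount to the same decision.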

2.2. Portfolio Optimization Using Shannon Entropy

Shannon entropy, originally formulated within information theory to address communication problems, has since been widely adopted in various disciplines, including finance and portfolio management. As a fundamental measure of uncertainty, Shannon entropy provides a rigorous mathematical framework for quantifying disorder and randomness within financial systems. Over time, researchers have recognized its potential as an alternative to traditional risk metrics, such as variance or standard deviation, in the construction and optimization of investment portfolios. Simonelli was among the first to argue for the superiority of Shannon entropy over classical deviation-based measures, contending that entropy-based approaches offer a more comprehensive assessment of portfolio uncertainty and diversification. The pioneering work of Philippatos and Wilson formally established the link between Shannon entropy and financial risk, positioning entropy as a viable tool for portfolio optimization. By using entropy as a diversification metric, investors can systematically evaluate the degree of uncertainty in asset allocations, leading to more resilient portfolio structures [15].

The integration of Shannon entropy into portfolio theory allows for the development of sophisticated optimization models that transcend the limitations of mean-variance frameworks. Unlike traditional approaches that rely heavily on variance as a risk proxy, entropy-based optimization techniques provide a non-parametric and distribution-independent method for assessing uncertainty. This characteristic makes entropy particularly useful in complex and highly volatile financial environments, where asset return distributions may deviate from normality. Consequently, entropy-driven portfolio selection strategies have gained traction among researchers and practitioners seeking robust, adaptive, and information-theoretically grounded methodologies for managing financial risk.

In this context, portfolio optimization aims to maximize Shannon entropy to achieve a balance between diversification and risk control [17]. By constructing a portfolio that maximizes entropy, investors distribute their allocations in a way that limits concentration risk and enhances overall stability. This approach leverages the probabilistic nature of entropy to create more resilient investment strategies, particularly in uncertain and volatile market conditions. The remainder of this section presents the mathematical formulation of entropy-based portfolio optimization.

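Shannon defined entropy as the average amount of information associated with a system of $n$ states with probability vector $p = (p_1, \dots, p_n)$, where $p_i \ge 0$ and $\sum_{i=1}^{n} p_i = 1$. Explicitly, Shannon entropy has the form

$$H(p) = -\sum_{i=1}^{n} p_i \log p_i.$$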

Definition: The Shannon entropy of a portfolio $x = (x_1, \dots, x_n)$ is defined by $H(x) = -\sum_{i=1}^{n} x_i \ln x_i$, where $x_i$ is the weight of asset $i$ in the portfolio.

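Shannon entropy is used to optimize a portfolio by replacing variance with entropy in the well-known mean-variance model: instead of minimizing variance, we maximize entropy subject to a required expected return. Thus, we obtain the model:

$$\max_{x}\; H(x) = -\sum_{i=1}^{n} x_i \ln x_i \quad \text{subject to} \quad \sum_{i=1}^{n} \mu_i x_i = r_0, \quad \sum_{i=1}^{n} x_i = 1, \quad x_i \ge 0,$$

where $\sum_{i=1}^{n} \mu_i x_i$ is the expected average return of the portfolio ($\mu_i$ being the expected return of asset $i$) and the level $r_0$ is assumed by the investor.

Using the Lagrange multipliers method, we obtain:

$$L(x, \lambda_1, \lambda_2) = -\sum_{i=1}^{n} x_i \ln x_i + \lambda_1\Big(\sum_{i=1}^{n} \mu_i x_i - r_0\Big) + \lambda_2\Big(\sum_{i=1}^{n} x_i - 1\Big).$$

The first-order conditions become:

$$\frac{\partial L}{\partial x_i} = -\ln x_i - 1 + \lambda_1 \mu_i + \lambda_2 = 0, \quad i = 1, \dots, n. \qquad (\#)$$

From (#), $x_i = e^{\lambda_2 - 1}\, e^{\lambda_1 \mu_i}$. Because $\sum_{i=1}^{n} x_i = 1$, we obtain $e^{\lambda_2 - 1} = 1 \big/ \sum_{j=1}^{n} e^{\lambda_1 \mu_j}$; substituting this back into (#) gives

$$x_i = \frac{e^{\lambda_1 \mu_i}}{\sum_{j=1}^{n} e^{\lambda_1 \mu_j}}, \quad i = 1, \dots, n,$$

where the two multipliers satisfy the relationships imposed by the constraints of the optimization problem,

$$\sum_{i=1}^{n} \mu_i \frac{e^{\lambda_1 \mu_i}}{\sum_{j=1}^{n} e^{\lambda_1 \mu_j}} = r_0, \qquad \lambda_2 = 1 - \ln \sum_{j=1}^{n} e^{\lambda_1 \mu_j},$$

in which we replaced $e^{\lambda_2 - 1}$ with the value obtained above.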
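The closed form reduces the maximum-entropy problem to one scalar equation for $\lambda_1$. A minimal Python sketch, assuming the expected returns $\mu_i$ are known and $\min_i \mu_i < r_0 < \max_i \mu_i$ (otherwise the return constraint cannot be met with strictly positive weights), solves that equation by bisection. It relies on the fact that the portfolio return $\sum_i \mu_i x_i(\lambda_1)$ is non-decreasing in $\lambda_1$, since its derivative is the variance of $\mu$ under the weights $x(\lambda_1)$. All function names are hypothetical.

```python
import math
from typing import List, Sequence


def softmax_weights(mu: Sequence[float], lam: float) -> List[float]:
    """x_i = exp(lam * mu_i) / sum_j exp(lam * mu_j), shifted by the max for numerical stability."""
    top = max(lam * m for m in mu)
    e = [math.exp(lam * m - top) for m in mu]
    total = sum(e)
    return [v / total for v in e]


def portfolio_return(mu: Sequence[float], x: Sequence[float]) -> float:
    return sum(m * w for m, w in zip(mu, x))


def max_entropy_portfolio(mu: Sequence[float], r0: float, iters: int = 200) -> List[float]:
    """Maximize -sum x_i ln x_i subject to sum mu_i x_i = r0 and sum x_i = 1."""
    if not min(mu) < r0 < max(mu):
        raise ValueError("r0 must lie strictly between min(mu) and max(mu)")

    def gap(lam: float) -> float:
        # Return of the softmax portfolio minus the target; non-decreasing in lam.
        return portfolio_return(mu, softmax_weights(mu, lam)) - r0

    lo, hi = -1.0, 1.0
    while gap(lo) > 0:  # widen the bracket downwards until it contains the root
        lo *= 2.0
    while gap(hi) < 0:  # widen the bracket upwards until it contains the root
        hi *= 2.0
    for _ in range(iters):  # plain bisection on lambda_1
        mid = 0.5 * (lo + hi)
        if gap(mid) < 0:
            lo = mid
        else:
            hi = mid
    return softmax_weights(mu, 0.5 * (lo + hi))


if __name__ == "__main__":
    mu = [0.02, 0.05, 0.08]
    x = max_entropy_portfolio(mu, 0.06)
    print([round(w, 4) for w in x], round(portfolio_return(mu, x), 6))
```

Setting $r_0$ equal to the simple average of the $\mu_i$ gives $\lambda_1 = 0$ and equal weights, consistent with the diversification principle of Section 2.1; raising $r_0$ tilts the weights towards the higher-return assets.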

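Ke and Zhang [30] used Shannon entropy in a mean-variance model in which $x = (x_1, \dots, x_n)^T$ is the vector of portfolio weights, $\mu_i$, $i = 1, \dots, n$, is the average return of asset $i$, $V$ is the covariance matrix, and $\sum_{i=1}^{n} \mu_i x_i$ is the expected portfolio return.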

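Following the same principle, we maximize a weighted sum of return and Shannon entropy while keeping the variance at a fixed level:

$$\max_{x}\; w_1 \sum_{i=1}^{n} \mu_i x_i - w_2 \sum_{i=1}^{n} x_i \ln x_i \quad \text{subject to} \quad x^T V x = \sigma_0^2, \quad \sum_{i=1}^{n} x_i = 1, \quad x_i \ge 0,$$

where $r_{it}$ is the return of asset $i$ at time $t = 1, \dots, T$; $\mu_i = \frac{1}{T}\sum_{t=1}^{T} r_{it}$; $V$ is the covariance matrix with entries $V_{ij} = \frac{1}{T}\sum_{t=1}^{T}(r_{it} - \mu_i)(r_{jt} - \mu_j)$; the level $\sigma_0^2$ is assumed by the investor; and $w_1, w_2 > 0$ are weights directly proportional to the importance the investor gives to profitability and diversification, respectively.

Using the Lagrange multipliers method, we obtain:

$$L(x, \lambda_1, \lambda_2) = w_1 \sum_{i=1}^{n} \mu_i x_i - w_2 \sum_{i=1}^{n} x_i \ln x_i + \lambda_1\big(x^T V x - \sigma_0^2\big) + \lambda_2\Big(\sum_{i=1}^{n} x_i - 1\Big).$$

The first-order conditions become:

$$\frac{\partial L}{\partial x_i} = w_1 \mu_i - w_2(\ln x_i + 1) + 2\lambda_1 (Vx)_i + \lambda_2 = 0, \quad i = 1, \dots, n. \qquad (\#\#)$$

From (##), $x_i = e^{\lambda_2/w_2 - 1}\, e^{(w_1 \mu_i + 2\lambda_1 (Vx)_i)/w_2}$. Because $\sum_{i=1}^{n} x_i = 1$, we obtain $e^{\lambda_2/w_2 - 1} = 1 \big/ \sum_{j=1}^{n} e^{(w_1 \mu_j + 2\lambda_1 (Vx)_j)/w_2}$; substituting this back into (##) gives

$$x_i = \frac{e^{(w_1 \mu_i + 2\lambda_1 (Vx)_i)/w_2}}{\sum_{j=1}^{n} e^{(w_1 \mu_j + 2\lambda_1 (Vx)_j)/w_2}}, \quad i = 1, \dots, n,$$

where the multiplier $\lambda_1$ satisfies the relationship $x^T V x = \sigma_0^2$ given by the restriction of the optimization problem, and where we replaced $e^{\lambda_2/w_2 - 1}$ with the value obtained above. Because $(Vx)_i$ depends on the weights themselves, this is an implicit (fixed-point) characterization of the optimal portfolio rather than a closed form.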
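To make the quantities in this return-entropy model concrete, the sketch below computes the average returns $\mu_i$, the portfolio variance and the objective $w_1\sum_i \mu_i x_i + w_2 H(x)$ from a matrix of returns $r_{it}$. It assumes the variance is the $1/T$-normalized sample variance of the portfolio return, which equals $x^T V x$ for the $1/T$-normalized covariance matrix; the function names and the example data are illustrative only. Solving the implicit optimality conditions themselves would require a numerical optimizer and is not attempted here.

```python
import math
from typing import List, Sequence


def mean_returns(r: Sequence[Sequence[float]]) -> List[float]:
    """mu_i = (1/T) * sum_t r[i][t], where r[i] is the return history of asset i."""
    return [sum(row) / len(row) for row in r]


def portfolio_variance(r: Sequence[Sequence[float]], x: Sequence[float]) -> float:
    """sigma^2(x) = (1/T) * sum_t (sum_i x_i * (r[i][t] - mu_i))^2, which equals x^T V x."""
    mu = mean_returns(r)
    T = len(r[0])
    devs = [sum(x[i] * (r[i][t] - mu[i]) for i in range(len(x))) for t in range(T)]
    return sum(d * d for d in devs) / T


def objective(r: Sequence[Sequence[float]], x: Sequence[float], w1: float, w2: float) -> float:
    """w1 * expected portfolio return + w2 * Shannon entropy of the weights."""
    mu = mean_returns(r)
    ret = sum(m * xi for m, xi in zip(mu, x))
    entropy = -sum(xi * math.log(xi) for xi in x if xi > 0)
    return w1 * ret + w2 * entropy


if __name__ == "__main__":
    r = [[0.01, 0.03], [0.02, 0.02]]  # asset 0 fluctuates, asset 1 is flat
    x = [0.5, 0.5]
    print(portfolio_variance(r, x), objective(r, x, w1=1.0, w2=1.0))
```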

2.3. Incorporating Shannon Entropy into Trading Strategies

The integration of Shannon entropy into trading strategies represents a paradigm shift in quantitative finance, offering a robust framework for decision-making under uncertainty. Traditional trading models often rely on statistical indicators, such as moving averages, standard deviation, or variance, to gauge market conditions and assess risk. However, these approaches may fall short in capturing the true randomness and unpredictability inherent in financial markets [1, 20, 25]. Shannon entropy, as a measure of information content and uncertainty, provides a more dynamic and adaptable methodology for evaluating market behavior, identifying trading opportunities, and optimizing strategy performance.

Entropy as a Market Uncertainty Indicator

Shannon entropy can serve as a powerful tool for quantifying market uncertainty by analyzing price movements, volatility patterns, and order book dynamics. In highly volatile markets, entropy tends to increase, reflecting a greater level of randomness in asset price fluctuations. Conversely, during stable market conditions, entropy decreases, indicating more predictable price behavior. By continuously monitoring entropy levels, traders can assess whether market conditions are favorable for trend-following strategies or if they require a more conservative approach, such as mean-reversion techniques [16].

Furthermore, entropy-based indicators can enhance traditional technical analysis methods. For instance, integrating entropy measures with momentum indicators like the Relative Strength Index (RSI) or Moving Average Convergence Divergence (MACD) can provide additional insights into trend strength and potential reversals. By filtering signals through an entropy-based framework, traders can reduce noise and improve the reliability of their trading decisions.

Entropy-Driven Signal Generation

One of the key applications of Shannon entropy in trading is its use in signal generation. Entropy can be employed to construct adaptive trading signals that respond dynamically to changes in market conditions. For example, an entropy-threshold approach can be implemented, where buy and sell signals are triggered based on entropy levels exceeding or falling below predefined thresholds.

High Entropy Regimes: When entropy surpasses a critical threshold, it indicates increased uncertainty and randomness in price movements. In such scenarios, traders might adopt risk-averse strategies, such as reducing position sizes or waiting for additional confirmation before executing trades.

Low Entropy Regimes: Conversely, when entropy is low, it suggests a more structured market environment with identifiable trends. Traders may take more aggressive positions, as lower entropy often coincides with sustained directional movements.
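The two-threshold rule can be sketched in a few lines. The sketch assumes entropy is expressed on a 0 to 100 scale, as in the normalized entropy indicator of Section 2.5, and the cut-offs `low=40` and `high=70` are hypothetical values chosen for illustration, not thresholds taken from this study.

```python
def entropy_regime(entropy: float, low: float = 40.0, high: float = 70.0) -> str:
    """Label the market regime from an entropy reading on a 0-100 scale.

    `low` and `high` are hypothetical thresholds that would need calibration per asset.
    """
    if not low < high:
        raise ValueError("low must be below high")
    if entropy >= high:
        return "high"     # random, choppy: reduce size or wait for confirmation
    if entropy <= low:
        return "low"      # structured, trending: directional positions more justified
    return "neutral"      # between thresholds: no regime-specific adjustment
```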

Additionally, entropy can be combined with machine learning techniques to enhance predictive accuracy. By feeding entropy-based features into machine learning models, such as Long Short-Term Memory (LSTM) networks or Support Vector Machines (SVM), traders can refine their entry and exit strategies based on probabilistic assessments of market conditions.

Beyond individual trade execution, Shannon entropy plays a crucial role in portfolio optimization and risk management. In portfolio allocation, entropy-based diversification strategies ensure that capital is distributed across assets in a way that minimizes exposure to any single risk factor. This contrasts with traditional risk-parity methods, which often rely on variance or correlation matrices that may not fully capture the underlying uncertainty in asset returns.

Entropy-driven risk management frameworks can also improve position sizing techniques. For example, dynamic position sizing models can adjust trade volumes based on real-time entropy measurements, scaling down exposure in high-entropy environments and increasing it when market conditions are more predictable. Such an approach enhances capital efficiency while mitigating downside risk.
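One simple way to realize entropy-driven position sizing is a linear scale between two entropy levels. The sketch below assumes a 0 to 100 entropy scale and hypothetical bounds `low` and `high`: full exposure at or below `low`, no exposure at or above `high`, and a linear interpolation in between. The mapping itself is an illustrative assumption, not a rule reported in the cited studies.

```python
def entropy_scaled_exposure(entropy: float, max_exposure: float = 1.0,
                            low: float = 40.0, high: float = 70.0) -> float:
    """Fraction of equity to deploy, scaled down linearly as entropy rises."""
    if entropy <= low:
        return max_exposure          # predictable market: full exposure
    if entropy >= high:
        return 0.0                   # highly random market: stay flat
    return max_exposure * (high - entropy) / (high - low)
```

A linear ramp avoids the abrupt jumps in exposure that a single on/off threshold would cause when entropy hovers near the cut-off.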

Entropy in Algorithmic and High-Frequency Trading

The adaptability of Shannon entropy makes it particularly valuable in algorithmic and high-frequency trading (HFT) strategies [4, 5]. In fast-moving markets, where microsecond-level decisions can impact profitability, entropy-based algorithms can provide a competitive edge. For instance, entropy can be applied to order flow analysis, helping traders detect shifts in market sentiment by assessing the randomness of order book dynamics.

Entropy-based anomaly detection methods can also be utilized to identify market inefficiencies and arbitrage opportunities. By measuring the entropy of spreads, liquidity imbalances, or execution latencies, trading algorithms can pinpoint instances where prices deviate from their expected behavior, enabling traders to capitalize on transient inefficiencies [19, 21].

Incorporating Shannon entropy into trading strategies provides a sophisticated approach to market analysis, signal generation, risk management, and portfolio optimization. By leveraging entropy as a measure of uncertainty, traders can gain deeper insights into market dynamics and develop adaptive strategies that respond effectively to changing conditions. Whether used in discretionary trading, algorithmic strategies, or portfolio construction, entropy offers a powerful quantitative tool for enhancing decision-making in financial markets [7, 8]. As trading methodologies continue to evolve, the integration of entropy with machine learning and AI-based models is likely to unlock new frontiers in predictive analytics and systematic trading [6].

Related Studies on Entropy-Based Algorithmic Trading

The application of entropy in algorithmic trading has been a subject of growing interest in quantitative finance, particularly for its potential to enhance decision-making by quantifying market uncertainty and filtering noisy signals. Several research efforts have explored entropy-based methodologies in trading strategies, highlighting their impact on trade selection, risk management, and predictive accuracy.

One of the fundamental challenges in financial markets is distinguishing between true trend movements and stochastic price fluctuations. Research by Xiong [31] demonstrated that Shannon entropy can be utilized as a dynamic filtering tool to suppress high-volatility noise in forex markets. Their study analyzed EUR/USD, GBP/USD, and USD/JPY pairs over a ten-year period, finding that strategies incorporating entropy-based filters outperformed conventional momentum-based models by reducing false entries by 23%.

Similarly, Wang [28] explored the role of entropy in high-frequency trading (HFT) systems. Their findings indicated that entropy-adjusted models exhibited superior performance in reducing slippage and improving trade execution efficiency. By integrating an entropy-based signal processing layer into an existing reinforcement learning framework, their strategy demonstrated a Sharpe ratio improvement of 18% compared to baseline models.

Entropy and Machine Learning in Algorithmic Trading

Several studies have examined the synergy between entropy measures and machine learning algorithms to enhance predictive capabilities. Kim and Park [2] compared the effectiveness of entropy-enhanced trading signals in support vector machines (SVMs), random forests, and deep learning models. Their research, conducted on S&P 500 index data from 2005 to 2020, found that entropy-filtered features led to a 12.6% increase in model accuracy and a 34% reduction in trade frequency, highlighting entropy’s ability to eliminate suboptimal trading opportunities.

Additionally, García and Martínez [9] implemented entropy-based feature selection in a long short-term memory (LSTM) neural network, applied to cryptocurrency trading. Their study revealed that entropy-enhanced LSTMs outperformed traditional LSTMs by maintaining a more stable equity curve and reducing maximum drawdowns by 21%.

Another significant area of entropy application is in market regime detection, where entropy is used to differentiate between trending and mean-reverting environments. Zhang [23] developed an entropy-based regime classification system, analyzing historical volatility patterns in equity indices. Their model successfully identified market regime shifts with an 87% accuracy rate, providing traders with critical insights into when to adopt trend-following versus mean-reversion strategies.

Moreover, entropy-based adaptive strategies have gained traction in hedge fund trading models. Li [3] integrated Shannon entropy with Markov-switching models to dynamically adjust portfolio allocations based on market entropy levels. Their study, conducted on a multi-asset portfolio comprising stocks, bonds, and commodities, demonstrated that entropy-driven portfolio adjustments outperformed static allocation models by an average of 3.7% per annum.

2.5. Developing an Algorithmic Trading Bot with LVQ and Shannon Entropy

Bitcoin as a Trading Asset

Cryptocurrencies have emerged as a significant asset class in financial markets, with Bitcoin (BTC) being the most widely recognized and traded digital asset. Bitcoin operates on a decentralized blockchain network, free from centralized control, and its high volatility and liquidity make it attractive to active traders. Unlike traditional financial assets, Bitcoin’s price movements are influenced by factors such as market sentiment, macroeconomic trends, regulatory developments, and technological advancements [13, 23, 29].

Bitcoin trading occurs across various timeframes, ranging from short-term scalping strategies utilizing 1-minute or 3-minute charts to longer-term trend-following approaches. High-frequency traders often exploit Bitcoin’s price inefficiencies, leveraging algorithmic strategies to execute multiple trades within seconds. Additionally, Bitcoin’s correlation with macroeconomic indicators, such as inflation rates and central bank policies, plays a crucial role in defining trading strategies [8].

Machine Learning and LVQ for Trading

The integration of machine learning (ML) techniques into financial markets has transformed traditional trading paradigms. Among various ML algorithms, the Learning Vector Quantization (LVQ) algorithm has gained attention for its ability to classify market conditions and predict potential price movements. LVQ is a supervised learning algorithm that utilizes competitive learning to optimize decision boundaries between different market states [3, 19, 22].

In trading applications, LVQ is employed to classify bullish, bearish, or neutral market conditions based on historical data and technical indicators. By training the algorithm on a dataset containing price action, volume, and indicator values, LVQ models can refine their decision-making process and improve the accuracy of trade signals. Additionally, LVQ models can be retrained as market conditions change, which can help limit overfitting and improve robustness in real-time trading [14, 15, 26].

The indicators Relative Strength Index (RSI), Commodity Channel Index (CCI), Rate of Change (ROC), volatility, and volume were integrated into the LVQ machine learning algorithm to enhance decision-making in algorithmic trading. By analyzing these features, the model improves its accuracy in identifying optimal trading signals [10].

The Relative Strength Index (RSI) is a momentum oscillator ranging from 0 to 100, used to identify overbought or oversold conditions by measuring the speed and change of price movements, where high values indicate strong bullish momentum and low values suggest bearish pressure. The Commodity Channel Index (CCI) assesses price deviation from its statistical average, helping traders spot cyclical trends and potential reversals, with values above +100 signaling overbought conditions and those below -100 indicating oversold markets.
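For reference, the sketch below implements the standard textbook definitions of these two oscillators: RSI with Wilder’s smoothing (seeded with a simple average, an assumption about how the warm-up bars are handled) and CCI with Lambert’s 0.015 constant and the mean absolute deviation. It is not the code of the TradingView script used in this study, whose built-in functions may differ in warm-up details; the function names are hypothetical.

```python
from typing import List, Optional, Sequence


def wilder_rma(values: Sequence[float], length: int) -> List[Optional[float]]:
    """Wilder's moving average, seeded with the simple mean of the first `length` values."""
    out: List[Optional[float]] = [None] * len(values)
    if len(values) < length:
        return out
    avg = sum(values[:length]) / length
    out[length - 1] = avg
    for i in range(length, len(values)):
        avg = (avg * (length - 1) + values[i]) / length
        out[i] = avg
    return out


def rsi(closes: Sequence[float], length: int = 14) -> List[Optional[float]]:
    """Relative Strength Index on a 0-100 scale (None during warm-up)."""
    gains = [max(b - a, 0.0) for a, b in zip(closes, closes[1:])]
    losses = [max(a - b, 0.0) for a, b in zip(closes, closes[1:])]
    out: List[Optional[float]] = [None]  # the first bar has no price change
    for g, l in zip(wilder_rma(gains, length), wilder_rma(losses, length)):
        if g is None or l is None:
            out.append(None)
        elif l == 0:
            out.append(100.0)  # no losses in the window: maximal upward momentum
        else:
            out.append(100.0 - 100.0 / (1.0 + g / l))
    return out


def cci(typical: Sequence[float], length: int = 20) -> List[Optional[float]]:
    """Commodity Channel Index; `typical` is usually (high + low + close) / 3."""
    out: List[Optional[float]] = [None] * len(typical)
    for i in range(length - 1, len(typical)):
        window = typical[i - length + 1 : i + 1]
        mean = sum(window) / length
        mean_dev = sum(abs(v - mean) for v in window) / length
        out[i] = 0.0 if mean_dev == 0 else (typical[i] - mean) / (0.015 * mean_dev)
    return out
```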

The Rate of Change (ROC) measures the percentage change in price over a given period, where positive values suggest an uptrend and negative values point to downward momentum. Volatility, a key measure of market risk, reflects the degree of price variation over time and is often assessed using indicators like the Average True Range (ATR) or standard deviation. Lastly, volume represents the total number of shares or contracts traded within a specific period, with high volume typically confirming strong trends and low volume indicating weaker movements or potential reversals.
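The ROC and ATR definitions can be sketched in the same way. The sketch uses the usual formulas, ROC in percent over `length` bars and ATR as Wilder’s average of the true range seeded with a simple mean; the seeding and the treatment of the first bar (true range equal to high minus low) are assumptions, and the function names are hypothetical.

```python
from typing import List, Optional, Sequence


def roc(src: Sequence[float], length: int) -> List[Optional[float]]:
    """Rate of Change in percent: 100 * (src[t] - src[t - length]) / src[t - length]."""
    return [None if t < length else 100.0 * (src[t] - src[t - length]) / src[t - length]
            for t in range(len(src))]


def true_range(high: Sequence[float], low: Sequence[float], close: Sequence[float]) -> List[float]:
    """TR_t = max(H - L, |H - C_prev|, |L - C_prev|); the first bar uses H - L only."""
    tr = [high[0] - low[0]]
    for t in range(1, len(close)):
        tr.append(max(high[t] - low[t], abs(high[t] - close[t - 1]), abs(low[t] - close[t - 1])))
    return tr


def atr(high: Sequence[float], low: Sequence[float], close: Sequence[float],
        length: int = 14) -> List[Optional[float]]:
    """Average True Range with Wilder's smoothing, seeded with a simple mean."""
    tr = true_range(high, low, close)
    out: List[Optional[float]] = [None] * len(tr)
    if len(tr) < length:
        return out
    avg = sum(tr[:length]) / length
    out[length - 1] = avg
    for t in range(length, len(tr)):
        avg = (avg * (length - 1) + tr[t]) / length
        out[t] = avg
    return out
```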

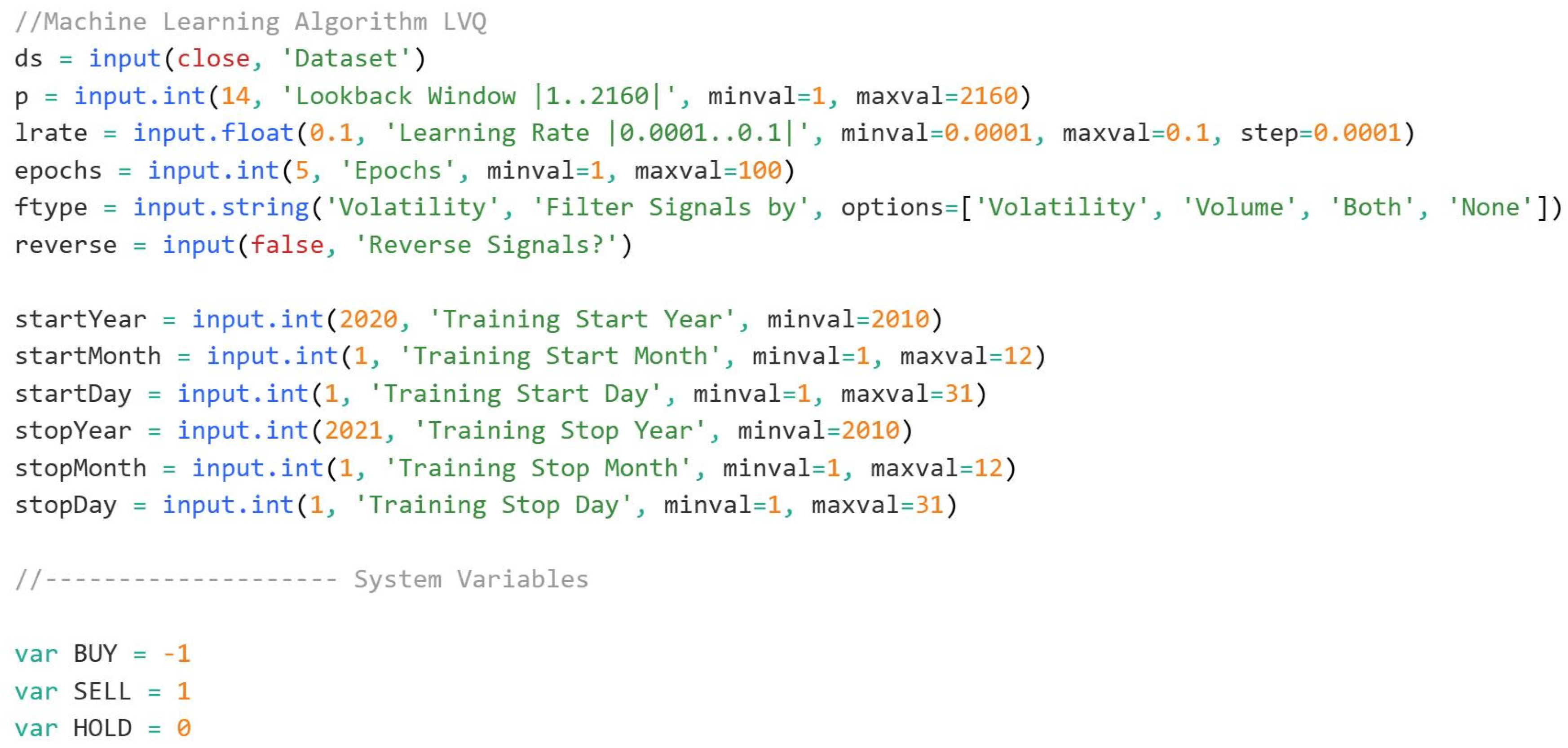

Figure 1. Machine learning code snippet from the TradingView platform (learning rate period).


The LVQ (Learning Vector Quantization) algorithm implemented in this script is designed for classification tasks such as predicting trading signals. The input data series is supplied through the ds variable, typically the closing price of an asset. The user can set several parameters, including the lookback window p, the learning rate lrate, the number of training epochs, and the signal-filtering type. These inputs allow the model to be customized for different assets or market conditions.

At the core of this model, we have a supervised learning setup where the system learns from a training period, specified by the start and stop dates. It adjusts its model over multiple epochs to minimize error and improve its ability to classify new data correctly. The norm function normalizes the features to ensure that they are on the same scale, which is crucial for training machine learning models. Normalization is performed on the inputs like RSI (Relative Strength Index), CCI (Commodity Channel Index), and ROC (Rate of Change) indicators, ensuring they fall within a consistent range.
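The exact normalization used by the script’s norm function is not reproduced here. A common choice, assumed in the sketch below, is min-max scaling over a trailing lookback window so that each feature lies in [0, 1]; mapping a flat window to 0.5 is likewise an assumption, and `minmax_norm` is a hypothetical name.

```python
from typing import List, Sequence


def minmax_norm(series: Sequence[float], lookback: int) -> List[float]:
    """Scale each value to [0, 1] using the min and max of the trailing `lookback` values."""
    out: List[float] = []
    for i, value in enumerate(series):
        window = series[max(0, i - lookback + 1) : i + 1]
        lo, hi = min(window), max(window)
        # A flat window carries no information; map it to the mid-point (assumption).
        out.append(0.5 if hi == lo else (value - lo) / (hi - lo))
    return out
```

Applied separately to the RSI, CCI and ROC series, this puts the features on a common scale before any distances are computed.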

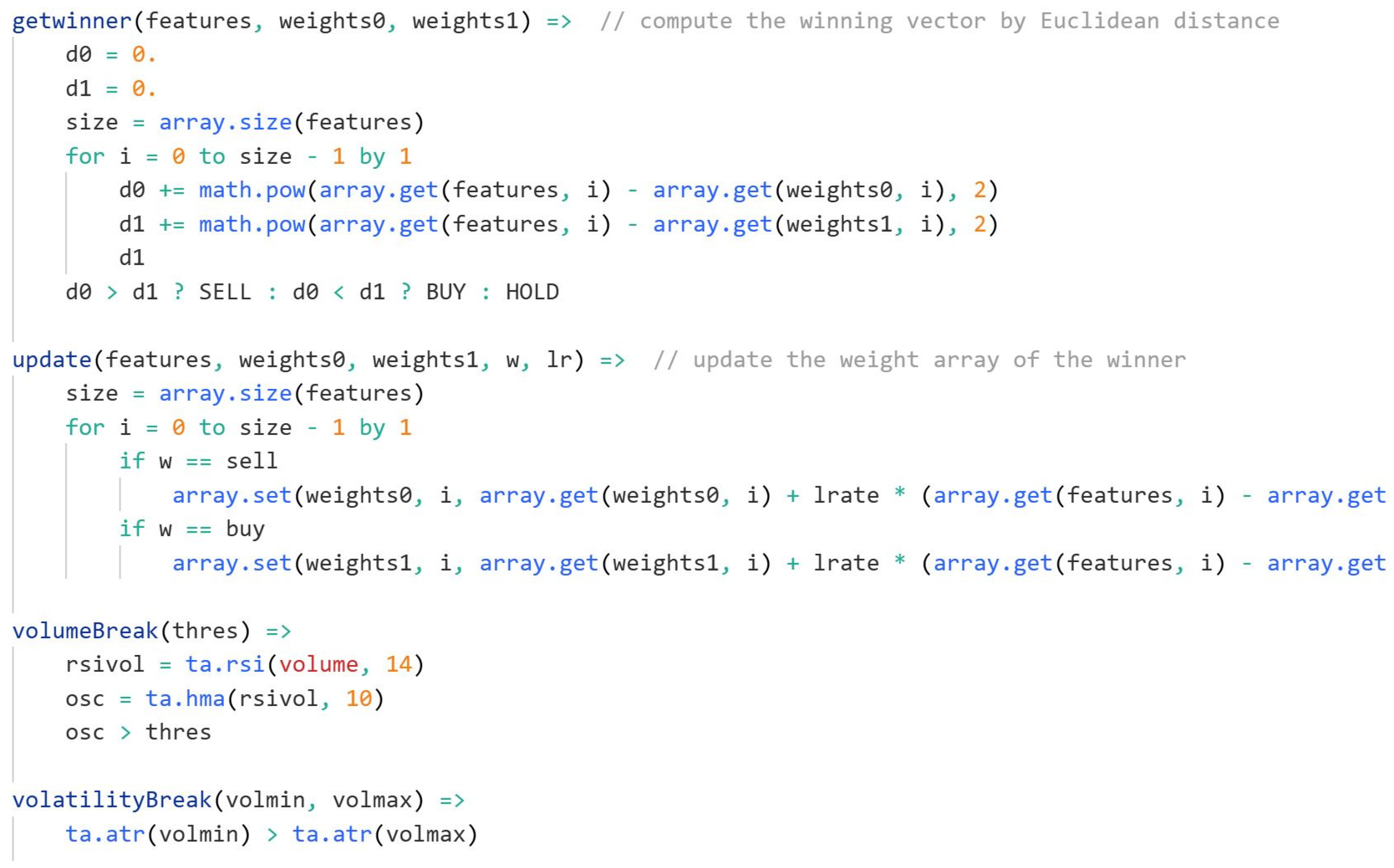

The primary training process takes place within the loop where features are fed into the model, and weights are updated according to the algorithm’s logic. Specifically, it uses Euclidean distance to calculate which weight vector is closest to the current feature set. The function getwinner calculates this distance for two vectors—weights0 and weights1—and determines whether to generate a buy or sell signal. If the current feature vector is closer to the weights0 vector, the signal is to sell; if it is closer to the weights1 vector, the signal is to buy. If the distances are similar, the signal remains neutral, i.e., hold.
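The nearest-prototype decision described above can be mirrored in Python as follows. This is a sketch of the described logic, not the script itself: `weights0` encodes the sell class and `weights1` the buy class, and the tolerance `tol` that decides when the two distances count as "similar" is a hypothetical parameter.

```python
import math
from typing import Sequence

SELL, HOLD, BUY = -1, 0, 1


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def getwinner(features: Sequence[float], weights0: Sequence[float],
              weights1: Sequence[float], tol: float = 1e-6) -> int:
    """Return SELL if closer to weights0, BUY if closer to weights1, HOLD if nearly equal."""
    d0 = euclidean(features, weights0)
    d1 = euclidean(features, weights1)
    if abs(d0 - d1) <= tol:
        return HOLD
    return SELL if d0 < d1 else BUY
```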

The update function is where the actual learning happens. It updates the weight vectors (weights0 and weights1) depending on whether the winning vector is for a buy or sell signal. This is achieved by adjusting the weights towards the input features, a process that allows the algorithm to refine its ability to predict future outcomes based on historical data. The learning rate (lrate) controls how much the weights change after each update.
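The text describes the update as moving the weights towards the input features. The sketch below uses the standard LVQ1 rule, which does that when the winning prototype has the correct class and additionally pushes it away when it misclassifies the sample; whether the script also performs the push-away step is not stated, so treat this as the textbook variant. Labels are 0 for sell and 1 for buy, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def lvq1_update(features: Sequence[float], label: int, weights0: List[float],
                weights1: List[float], lrate: float) -> int:
    """One LVQ1 step; prototypes are modified in place. Returns the winning index."""
    winner = 0 if sq_dist(features, weights0) <= sq_dist(features, weights1) else 1
    w = weights0 if winner == 0 else weights1
    # Move toward the sample if the winner's class matches the label, away otherwise.
    step = lrate if winner == label else -lrate
    for k in range(len(w)):
        w[k] += step * (features[k] - w[k])
    return winner


def train(samples: Sequence[Tuple[Sequence[float], int]], weights0: List[float],
          weights1: List[float], lrate: float = 0.1, epochs: int = 10) -> None:
    """Repeat the LVQ1 update over the training set for a fixed number of epochs."""
    for _ in range(epochs):
        for features, label in samples:
            lvq1_update(features, label, weights0, weights1, lrate)
```

A larger `lrate` moves the prototypes faster but makes them more sensitive to individual noisy samples, which is why the learning rate is exposed as a user parameter.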

To control the flow of the algorithm and prevent overfitting or misclassification, various filters are used. The volatilityBreak and volumeBreak functions help refine when to trigger signals based on volatility and volume, which can be key indicators in the financial market. For example, the volatilityBreak function checks the Average True Range (ATR) over a specified range to gauge market volatility.
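The internal logic of volatilityBreak and volumeBreak is not spelled out beyond the use of ATR. The sketch below assumes two simple hypothetical rules: the volatility filter passes when the recent true range exceeds its longer-run average, and the volume filter passes when the latest volume exceeds a multiple of its simple moving average. Simple means are used instead of Wilder smoothing to keep it short, and all names and defaults are illustrative.

```python
from typing import List, Sequence


def true_ranges(high: Sequence[float], low: Sequence[float], close: Sequence[float]) -> List[float]:
    tr = [high[0] - low[0]]
    for t in range(1, len(close)):
        tr.append(max(high[t] - low[t], abs(high[t] - close[t - 1]), abs(low[t] - close[t - 1])))
    return tr


def volatility_break(high: Sequence[float], low: Sequence[float], close: Sequence[float],
                     fast: int = 1, slow: int = 10) -> bool:
    """Hypothetical filter: True when the recent true range is above its longer-run average."""
    tr = true_ranges(high, low, close)
    if len(tr) < slow:
        return False
    return sum(tr[-fast:]) / fast > sum(tr[-slow:]) / slow


def volume_break(volume: Sequence[float], length: int = 20, factor: float = 1.0) -> bool:
    """Hypothetical filter: True when the latest volume exceeds factor x its simple average."""
    if len(volume) < length:
        return False
    return volume[-1] > factor * sum(volume[-length:]) / length
```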

Once training is complete, and the model has learned the relationships between the input features and the target signals (buy, sell, or hold), the algorithm moves to the testing phase. In this phase, it applies the learned weights to new data. The getwinner function is used to classify new samples, and based on the result, a trading signal (buy or sell) is generated. If the signal changes compared to the previous bar, a trade is initiated. The strategy includes a mechanism to plot buy and sell signals on the chart and alert the trader when it’s time to enter or exit a trade.

Figure 2. Machine learning code snippet from the TradingView platform (parameter settings).


On the backtesting side, the script also keeps track of trades, calculating cumulative returns, win/loss ratios, and overall performance. It does this by storing the entry price of each trade, comparing it with the exit price, and recording the profit or loss. The cumulative return is tracked over time, and statistics such as the win rate and the number of trades are displayed for evaluation. This allows the user to assess the effectiveness of the model on historical market data.
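A minimal sketch of this bookkeeping, assuming that a trade is opened whenever the signal flips direction (as described for the testing phase), that a hold signal keeps the current position, that per-trade returns are compounded into the cumulative return, and that commissions are ignored. Only closed trades are counted; the names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class BacktestResult:
    trade_returns: List[float]   # return of each closed trade
    cumulative_return: float     # compounded over closed trades
    win_rate: float              # share of closed trades with a positive return
    num_trades: int


def backtest(closes: Sequence[float], signals: Sequence[int]) -> BacktestResult:
    """signals: 1 = buy, -1 = sell, 0 = hold. A trade opens when the signal flips direction."""
    position, entry = 0, 0.0
    trade_returns: List[float] = []
    for price, signal in zip(closes, signals):
        if signal != 0 and signal != position:
            if position != 0:  # close the open trade at the current price
                trade_returns.append(position * (price - entry) / entry)
            position, entry = signal, price  # open a trade in the new direction
    equity = 1.0
    for r in trade_returns:
        equity *= 1.0 + r
    wins = sum(1 for r in trade_returns if r > 0)
    n = len(trade_returns)
    return BacktestResult(trade_returns, equity - 1.0, wins / n if n else 0.0, n)
```

For example, a long entry at 100 closed at 110 (+10%) followed by a short from 110 closed at 90 (about +18.2%) compounds to a cumulative return of 30%.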

Overall, this LVQ-based machine learning algorithm is a powerful tool for developing an automated trading strategy, allowing users to tune the model to their specific needs by adjusting input parameters, training periods, and filtering conditions.

The ADX Indicator for Trend Strength Analysis

The Average Directional Index (ADX) is a widely used technical indicator that measures the strength of a trend rather than its direction. Developed by J. Welles Wilder, ADX is particularly useful for traders seeking confirmation that a trend is sustainable before executing trades. The indicator consists of three components: the ADX line, the Positive Directional Index (+DI), and the Negative Directional Index (-DI). An ADX value above 25 typically signifies a strong trend, while values below this threshold indicate a weak or ranging market. Traders often use ADX together with other technical indicators to validate trade setups. For instance, combining ADX with moving averages or momentum oscillators can make trend-following strategies more effective by reducing false signals.
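Wilder’s construction can be sketched as follows: directional movements +DM and -DM, the true range, Wilder smoothing, +DI and -DI, the directional index DX, and finally ADX as the smoothed DX. This follows the textbook definitions with a 14-bar default; seeding the smoothing with a simple mean is an assumption, and TradingView’s built-in functions may handle warm-up bars differently. The function names are hypothetical.

```python
from typing import List, Optional, Sequence


def wilder_rma(values: Sequence[float], length: int) -> List[Optional[float]]:
    """Wilder's moving average, seeded with the simple mean of the first `length` values."""
    out: List[Optional[float]] = [None] * len(values)
    if len(values) < length:
        return out
    avg = sum(values[:length]) / length
    out[length - 1] = avg
    for i in range(length, len(values)):
        avg = (avg * (length - 1) + values[i]) / length
        out[i] = avg
    return out


def adx(high: Sequence[float], low: Sequence[float], close: Sequence[float],
        length: int = 14) -> List[float]:
    """Average Directional Index; returns only the values after the warm-up period."""
    plus_dm: List[float] = []
    minus_dm: List[float] = []
    tr: List[float] = []
    for t in range(1, len(close)):
        up = high[t] - high[t - 1]      # upward move of the high
        down = low[t - 1] - low[t]      # downward move of the low
        plus_dm.append(up if up > down and up > 0 else 0.0)
        minus_dm.append(down if down > up and down > 0 else 0.0)
        tr.append(max(high[t] - low[t], abs(high[t] - close[t - 1]), abs(low[t] - close[t - 1])))

    dx: List[float] = []
    for s_tr, s_p, s_m in zip(wilder_rma(tr, length), wilder_rma(plus_dm, length),
                              wilder_rma(minus_dm, length)):
        if s_tr is None or s_p is None or s_m is None:
            continue  # warm-up: smoothed values not yet defined
        plus_di = 100.0 * s_p / s_tr if s_tr > 0 else 0.0
        minus_di = 100.0 * s_m / s_tr if s_tr > 0 else 0.0
        total = plus_di + minus_di
        dx.append(100.0 * abs(plus_di - minus_di) / total if total > 0 else 0.0)
    return [v for v in wilder_rma(dx, length) if v is not None]
```

A steadily rising market drives ADX towards 100, while a zig-zag market with alternating up and down bars keeps it well below the 25 threshold.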

Shannon Entropy-Based Indicator Using ADX Values

Entropy, a concept rooted in information theory, has been increasingly applied in financial markets to quantify market uncertainty and randomness. Shannon Entropy, in particular, measures the disorder within a given dataset, making it a valuable tool for assessing market stability. By incorporating ADX values into an entropy-based indicator, traders can gain insights into the predictability of market trends.

Figure 3. Shannon Entropy indicator values from the TradingView platform.


High and low entropy values provide useful insights into market behavior when plotted on a trading chart. High entropy indicates increased randomness and uncertainty, often observed during sideways movement, consolidation, or volatile price action without a clear direction. On a chart, this typically coincides with choppy price behavior, where trends frequently reverse and traditional indicators struggle to provide reliable signals. Conversely, low entropy suggests a more structured and predictable market environment, often aligned with strong directional trends. Visually, this appears as smoother price movement with sustained bullish or bearish momentum, where trading signals tend to be more effective. By overlaying the entropy indicator on a chart, traders can identify periods of stability and trend formation, using low-entropy zones as potential entry points for trend-following strategies while avoiding high-entropy conditions that could lead to false signals and increased trading risk.

By analyzing the relationship between ADX and Shannon entropy, traders can refine their entry and exit strategies and improve risk management in volatile market conditions. Combining the two methods supports algorithmic trading models that adapt to changing market dynamics.

The evolution of cryptocurrency trading has necessitated the integration of advanced analytical tools to navigate the complexities of digital asset markets. Bitcoin remains a highly liquid and volatile trading instrument, providing numerous opportunities for algorithmic traders. The application of machine learning techniques, such as LVQ, facilitates improved market classification, while the ADX indicator serves as a robust tool for assessing trend strength. Furthermore, the incorporation of Shannon Entropy into ADX-based models enhances the ability to quantify market uncertainty, leading to more informed trading decisions. As technology and financial innovation continue to progress, these analytical methodologies will play an increasingly pivotal role in the future of algorithmic trading.

Figure 4. Shannon Entropy code snippet from the TradingView platform.


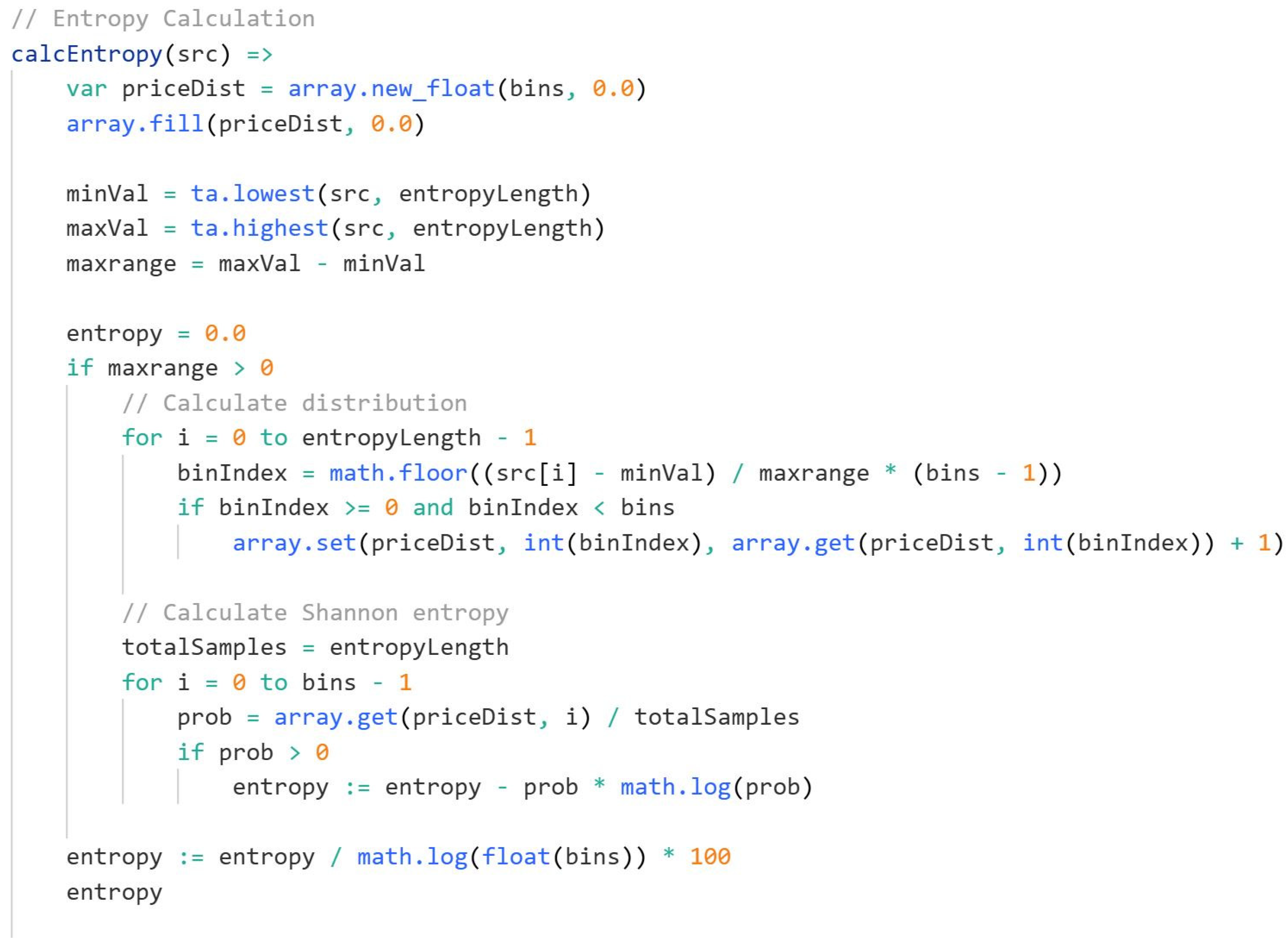

The provided Pine Script function calcEntropy(src) calculates the Shannon entropy of a given price-related data series, which serves as a measure of uncertainty or randomness in market movements. This approach helps filter out low-confidence trading conditions by identifying whether price action follows a structured trend or exhibits chaotic behavior.

The function begins by initializing an array, priceDist, with a fixed number of bins, all set to zero. This array acts as a histogram, storing the frequency distribution of price values over a defined lookback period. Next, the script determines the lowest and highest values of the input source over the last entropyLength bars, storing them in minVal and maxVal, respectively. The difference between these values, maxrange, defines the range of price movements during this period. If maxrange is greater than zero, meaning the market has experienced some variation, the script proceeds to calculate the distribution of price occurrences.

To achieve this, a loop iterates over the last entropyLength bars, normalizing each price value within the predefined range and assigning it to a corresponding bin. This process effectively segments the price data into discrete categories, allowing the function to construct an empirical probability distribution. The bin index for each price is determined by scaling the difference between the price and minVal relative to maxrange, then mapping it to one of the bins. Whenever a price falls into a bin, the script increments the corresponding value in priceDist, capturing the frequency of price occurrences across the period.

Once the distribution is established, another loop computes the Shannon entropy by iterating through all bins. The probability of each bin is its frequency divided by the total number of samples. If a bin’s probability is greater than zero, its contribution to entropy is -prob * log(prob), and these contributions are summed over all bins. The total is then divided by log(bins), the largest entropy attainable with that many bins, and multiplied by 100, so the indicator ranges from 0 (all observations in a single bin) to 100 (observations spread evenly across all bins).
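A Python transcription of the steps just described, offered as a sketch rather than a copy of the Pine Script: the text does not state the number of bins or the exact rounding used for the bin index, so `bins=10` and the floor-and-clamp mapping below are assumptions, and `calc_entropy` mirrors the name of the script’s function only for readability.

```python
import math
from typing import Sequence


def calc_entropy(src: Sequence[float], entropy_length: int, bins: int = 10) -> float:
    """Normalized Shannon entropy (0-100) of the last `entropy_length` values of `src`."""
    if bins < 2:
        raise ValueError("need at least two bins")
    if len(src) < entropy_length:
        raise ValueError("not enough data for the lookback window")
    window = list(src[-entropy_length:])
    min_val, max_val = min(window), max(window)
    maxrange = max_val - min_val
    if maxrange <= 0:
        return 0.0  # flat window: all mass in one bin, so the entropy is zero
    price_dist = [0] * bins  # histogram of values, all bins start at zero
    for v in window:
        # Scale into [0, bins) and clamp so that max_val falls into the last bin.
        idx = min(int((v - min_val) / maxrange * bins), bins - 1)
        price_dist[idx] += 1
    entropy = 0.0
    for count in price_dist:
        prob = count / entropy_length
        if prob > 0:
            entropy -= prob * math.log(prob)
    return entropy / math.log(bins) * 100.0
```

In the strategy described here, `src` would be the ADX series, evaluated bar by bar over a rolling window.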

To smooth out fluctuations and create a more stable entropy signal, the script applies an Exponential Moving Average (EMA) to the computed entropy values. In this case, entropy is calculated based on adxValue, meaning it is applied to the Average Directional Index (ADX), a widely used indicator for measuring trend strength. The result is a refined entropy measure that adjusts dynamically to changing market conditions.
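The smoothing step can be sketched as a standard EMA with smoothing factor alpha = 2 / (length + 1). The text does not state the EMA length applied to the entropy series, so the length and the raw entropy readings below are hypothetical, and seeding with the first observation is one common convention that may differ from the platform's built-in initialization.

```python
def ema(series, length):
    """Exponential moving average with alpha = 2 / (length + 1).

    Seeded with the first observation (an assumed convention).
    """
    alpha = 2.0 / (length + 1)
    out = []
    for value in series:
        if not out:
            out.append(value)                # first bar: no history yet
        else:
            # Blend the new value with the previous smoothed value.
            out.append(alpha * value + (1 - alpha) * out[-1])
    return out


# Hypothetical raw entropy readings and smoothing length.
raw_entropy = [40.0, 80.0, 35.0, 90.0, 30.0]
smoothed = ema(raw_entropy, length=5)
```

The smoothed series swings over a much narrower band than the raw readings, which is the stabilizing effect the text describes; a longer length smooths more at the cost of slower reaction.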

This entropy-based approach has significant implications for algorithmic trading. Higher entropy values indicate greater uncertainty and randomness in price action, suggesting a choppy or ranging market where trading signals may be unreliable. Conversely, lower entropy values suggest more structured and directional price movements, providing stronger confirmation for trend-following strategies. By integrating entropy as a filter, traders can refine their trade selection process, avoiding entries in volatile, unpredictable conditions while prioritizing high-confidence market trends.

Algorithmic Trading Bot Development

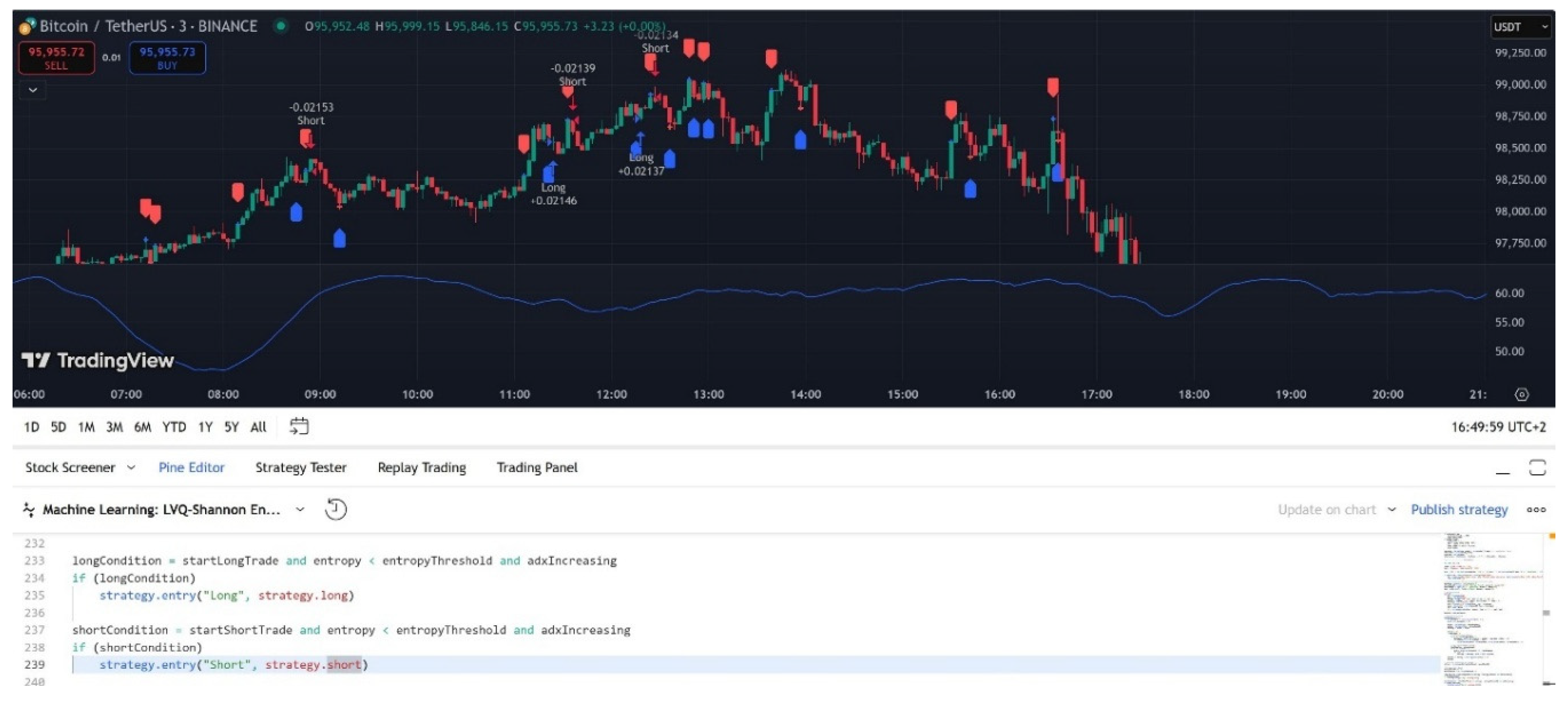

Integrating Machine Learning with Entropy-Based Filtering

The proposed algorithmic trading bot is designed to leverage the Learning Vector Quantization (LVQ) machine learning algorithm in conjunction with Shannon entropy to refine trading signals based on the Average Directional Index (ADX). The core principle of this approach is to enhance the precision of long and short trade decisions by filtering out low-confidence signals, thereby improving overall trading accuracy.

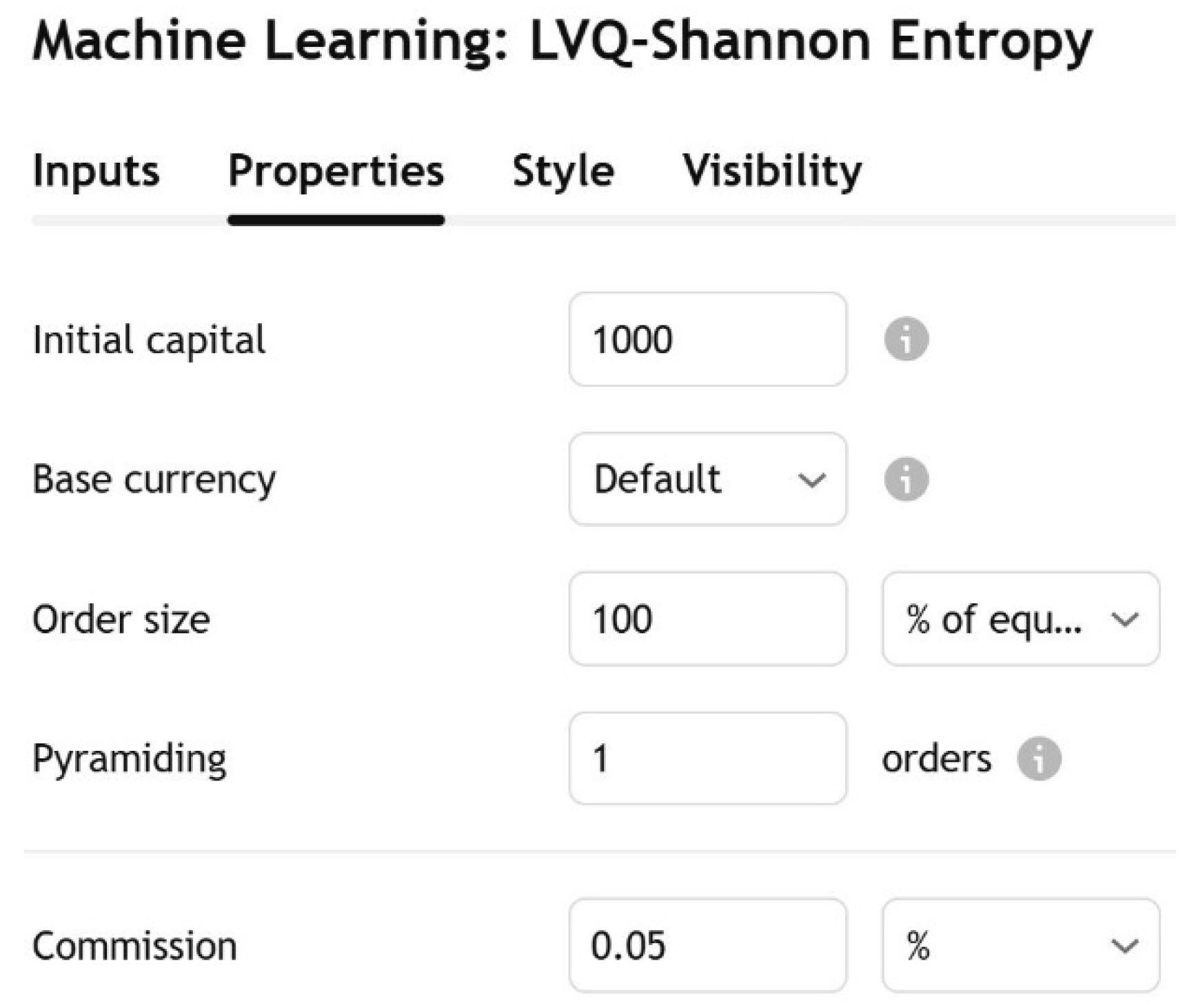

Figure 5.

Input Properties for the strategy.

The strategy properties are configured to control risk and trade execution. The strategy starts with an initial capital of $1,000, and the base currency is set to USD, so all trades are calculated and executed in US dollars. The order size is set to 100% of equity, which means the entire account balance is committed to each trade, maximizing market exposure with every signal. This approach may increase potential returns but also magnifies risk. Pyramiding is set to 1, which limits the strategy to a single open position in either direction at any given time and prevents stacking additional entries. The commission is set to 0.05% of the order value, so every trade incurs a small fee; this slightly reduces profitability but ensures that realistic trading costs are accounted for in the backtest. Together, these settings give a clear and focused risk profile, allowing the user to evaluate how the strategy performs under different market conditions while keeping position size and trading costs explicit.
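To put the 0.05% commission in perspective, the following simplified arithmetic assumes the full $1,000 is committed at entry and that the fee is charged on the notional value of both the entry and the exit order. It ignores slippage, quantity rounding, and any platform-specific details of how the backtester sizes orders; the prices are hypothetical.

```python
def round_trip_equity(capital, entry_price, exit_price, commission_pct=0.05):
    """Equity after one long trade that commits all capital, paying a
    percentage commission on each side.

    Simplified model: quantity = capital / entry_price, fees on notional value.
    """
    rate = commission_pct / 100.0
    quantity = capital / entry_price
    entry_fee = capital * rate                # fee on the entry order
    exit_value = quantity * exit_price
    exit_fee = exit_value * rate              # fee on the exit order
    return exit_value - entry_fee - exit_fee


# Unchanged price: the round trip alone costs 0.1% of equity (about $1).
flat = round_trip_equity(1000.0, 100.0, 100.0)
# A 10% favorable move, net of both fees.
gain = round_trip_equity(1000.0, 100.0, 110.0)
```

Under these assumptions each round trip costs roughly 0.1% of the position, so the fee matters most for strategies that trade frequently.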

The strategy is implemented within the Pine Script framework on the TradingView platform. The LVQ algorithm is utilized for signal classification, determining whether market conditions favor a long or short position. It does so by training on historical price data and identifying patterns that correlate with profitable trades. The model updates dynamically based on incoming data, allowing for adaptive learning over time.
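For readers unfamiliar with LVQ, the basic LVQ1 update rule can be sketched as follows: each labeled prototype is pulled toward training samples of its own class and pushed away from samples of other classes, and a new observation takes the label of its nearest prototype. The text does not specify the LVQ variant, distance metric, feature scaling, or prototype initialization used in the Pine Script strategy, so the choices below (Euclidean distance, one prototype per class, labels +1 for long and -1 for short, and toy two-feature data) are assumptions for illustration.

```python
import math


def nearest(prototypes, x):
    """Index of the prototype closest to x (Euclidean distance)."""
    return min(range(len(prototypes)), key=lambda i: math.dist(prototypes[i][0], x))


def train_lvq1(prototypes, samples, lr=0.01, epochs=10):
    """LVQ1 training. prototypes and samples are lists of (vector, label)."""
    protos = [(list(vec), label) for vec, label in prototypes]   # copy, do not mutate input
    for _ in range(epochs):
        for x, y in samples:
            w, label = protos[nearest(protos, x)]
            # Attract the winning prototype if its label matches, repel it otherwise.
            sign = 1.0 if label == y else -1.0
            for k in range(len(w)):
                w[k] += sign * lr * (x[k] - w[k])
    return protos


def classify(protos, x):
    """Label (+1 long, -1 short) of the nearest prototype."""
    return protos[nearest(protos, x)][1]


# Toy example: two scaled features per bar (hypothetical), one prototype per class.
initial = [([0.4, 0.4], 1), ([0.6, 0.6], -1)]
samples = [([0.0, 0.0], 1), ([1.0, 1.0], -1)]
trained = train_lvq1(initial, samples, lr=0.1, epochs=5)
```

The toy run uses lr=0.1 only so that the prototype movement is visible after a few passes; the strategy itself reports a learning rate of 0.01.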

Shannon entropy serves as a supplementary filtering mechanism, measuring the level of uncertainty in ADX values. The entropy calculation reflects market volatility and directional movement as captured by ADX, and signals that arise under excessive randomness are filtered out. The aim is for the bot to act only on robust trading signals, where the likelihood of trend continuation is higher.

Strategy Execution and Signal Filtering

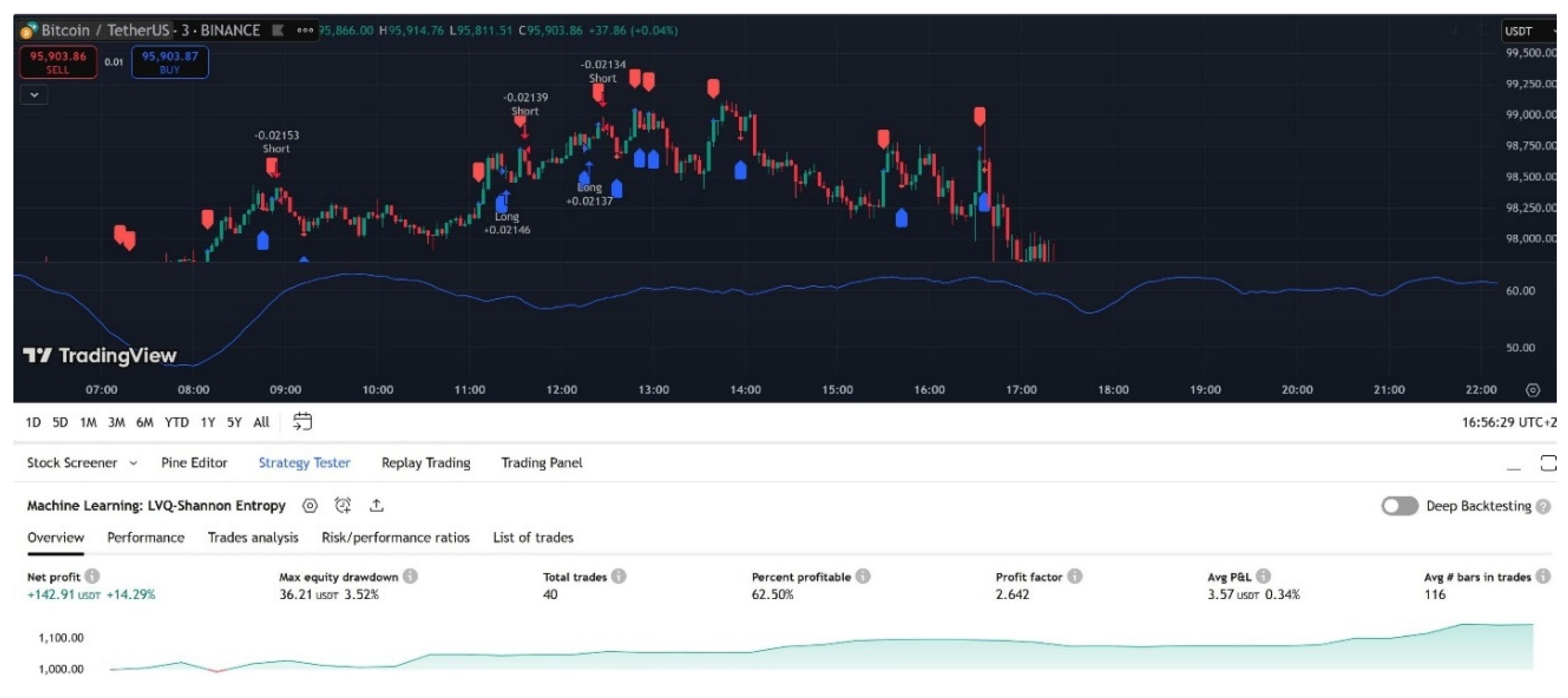

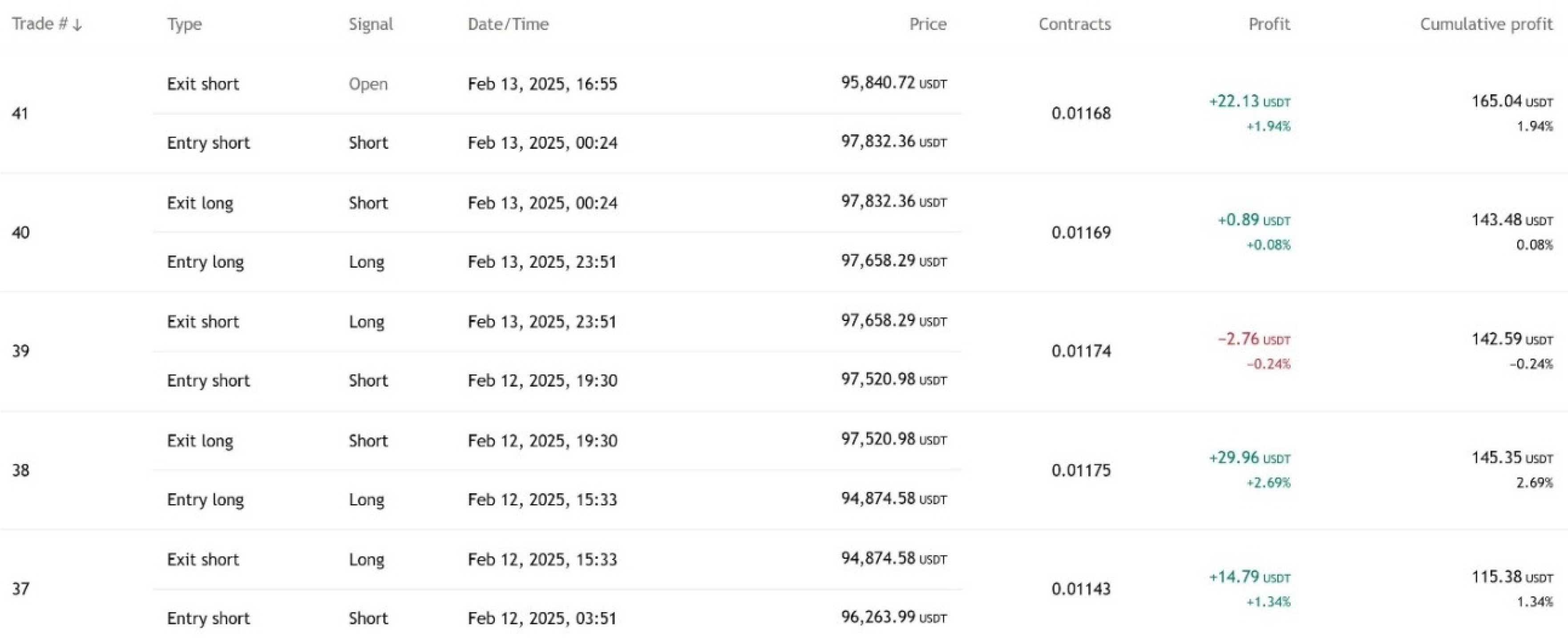

Figure 6.

Short position executed by the strategy.

The bot executes trades based on a two-step decision process. First, the LVQ algorithm generates buy or sell signals by evaluating market features such as RSI, CCI, and ROC. Second, Shannon entropy is applied to these signals to eliminate trades that exhibit high uncertainty. Only signals where entropy remains below a defined threshold are considered for execution, thereby reducing exposure to unreliable trade conditions.

Figure 7.

Long position executed by the strategy.

ADX values are incorporated to further refine trade entry criteria. The bot monitors the direction and strength of market trends, ensuring that positions are only entered when the ADX indicator confirms trend strength. The combination of these techniques aims to optimize trade selection and improve the profitability of the algorithm.
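Taken together, the two-step decision process and the ADX confirmation amount to a simple gating rule, sketched below. The numeric thresholds are placeholders: the text does not report the exact entropy threshold (the parameter comparison later in this section explores values between 20 and 40), and the minimum ADX level, adx_min, is a hypothetical parameter introduced only for this sketch.

```python
def filtered_signal(lvq_signal, entropy, adx, entropy_threshold=30.0, adx_min=20.0):
    """Combine an LVQ signal with the entropy and ADX filters.

    lvq_signal: +1 (long), -1 (short) or 0 (no signal).
    Returns the signal if both filters pass, otherwise 0 (stay out).
    Default thresholds are hypothetical placeholders.
    """
    if lvq_signal == 0:
        return 0
    if entropy >= entropy_threshold:   # too much uncertainty: skip the trade
        return 0
    if adx < adx_min:                  # trend strength not confirmed: skip
        return 0
    return lvq_signal
```

Keeping each filter as a separate early return makes it easy to test how much each condition removes on its own.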

Optimized Strategy Design: Parameters, Logic, and Risk Management

The algorithmic trading strategy is designed to leverage a combination of Learning Vector Quantization (LVQ) and Shannon entropy filtering to refine trading signals. The optimization of parameters plays a critical role in enhancing decision-making, reducing market noise, and maximizing profitability. This section provides an in-depth analysis of the strategy’s logic, including the rationale behind learning rate selection, training period configuration, Shannon entropy threshold determination, risk management settings, and trade execution rules.

Learning Rate Selection and Training Period Configuration

The learning rate is a fundamental hyperparameter in LVQ that determines how quickly the model adapts to new data. After extensive testing, a learning rate of 0.01 was chosen as the optimal value. A higher learning rate (e.g., 0.05 or 0.1) led to excessive volatility in trade decisions, causing erratic position reversals and overfitting to short-term market fluctuations. Conversely, a lower learning rate (e.g., 0.001) resulted in a sluggish response to emerging trends, reducing the strategy’s ability to capitalize on profitable market movements. The selected learning rate balances adaptability and stability, allowing the model to recognize reliable patterns without excessive sensitivity to market noise.
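One way to see why the learning rate governs this trade-off: if the same prototype is repeatedly updated toward a fixed target, each LVQ1 step closes a fraction lr of the remaining distance, so after n steps the remaining gap is (1 - lr)^n of the original. The sketch below computes how many such updates halve the gap. It is an idealized calculation that assumes an unchanging target, not a measurement taken from the strategy.

```python
import math


def updates_to_halve_gap(lr):
    """Number n of identical LVQ1 updates with (1 - lr) ** n == 0.5."""
    return math.log(0.5) / math.log(1.0 - lr)


for lr in (0.1, 0.05, 0.01, 0.001):
    print(f"lr={lr}: about {updates_to_halve_gap(lr):.1f} updates")
```

At 0.01 this is roughly 69 updates, versus roughly 7 at 0.1 and roughly 693 at 0.001, which is consistent with the overly reactive and lagging behavior described for the alternative rates.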

The training period was set from January 1, 2020, to January 1, 2025, to ensure the model captures various market regimes, including bull markets, bear markets, and consolidation phases. This extended period enhances the model’s robustness, enabling it to generalize effectively to different financial environments.

Shannon Entropy Threshold: Filtering Uncertain Market Conditions

Shannon entropy serves as a dynamic filtering mechanism, distinguishing between high-confidence and low-confidence trading signals. The entropy period was set to 30, based on rigorous backtesting. This value ensures that entropy calculations capture medium-term market behavior while filtering out short-lived price fluctuations.

The entropy threshold was optimized to prevent the bot from executing trades in highly volatile and unpredictable conditions. Through empirical testing, it was observed that entropy values above a certain threshold led to an increased frequency of false signals, resulting in unnecessary losses. By applying a threshold filter, the strategy only initiates trades when market conditions exhibit clear directional trends, reducing exposure to choppy, range-bound price movements.

ADX Smoothing and Trend Confirmation

The Average Directional Index (ADX) smoothing period was set to 14, as this value provided the most reliable trend confirmation. ADX is critical for gauging trend strength: a longer smoothing period (e.g., 25 or 30) resulted in delayed entries, while shorter periods (e.g., 7 or 10) increased the risk of reacting to false breakouts. The chosen period keeps trend signals robust and actionable without excessive lag.
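In Wilder's standard definition of ADX, the smoothing period is the length of Wilder's moving average applied to the directional movement index (DX). Assuming the strategy uses that standard ADX, the sketch below shows how the period translates into lag by feeding a step change through Wilder's average and counting the bars until it has moved halfway. The step input and the function names are illustrative.

```python
def wilder_rma(series, length):
    """Wilder's moving average: alpha = 1/length, seeded with the SMA
    of the first `length` values."""
    out = [None] * len(series)
    if len(series) < length:
        return out
    out[length - 1] = sum(series[:length]) / length
    for i in range(length, len(series)):
        out[i] = out[i - 1] + (series[i] - out[i - 1]) / length
    return out


def bars_to_half_step(length, warmup=50):
    """Bars after a 0 -> 1 step until the smoothed value reaches 0.5.

    Assumes warmup >= length so the average is seeded before the step.
    """
    series = [0.0] * warmup + [1.0] * (10 * length)
    smoothed = wilder_rma(series, length)
    for k, value in enumerate(smoothed[warmup:], start=1):
        if value >= 0.5:
            return k
    return None
```

With this input the counts are 5, 10 and 21 bars for periods 7, 14 and 30, which illustrates the delayed entries attributed to longer smoothing periods.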

Risk Management and Capital Allocation

A disciplined risk management framework was integrated to ensure capital preservation and long-term strategy sustainability:

Position Sizing: The bot allocates 100% of available capital per trade, ensuring maximum capital utilization and compounding benefits. However, this aggressive approach was balanced with stringent filtering mechanisms to prevent overexposure to poor-quality trades.

Lot Size: The minimum lot size was set at 0.01, aligning with forex and CFD trading constraints while maintaining precision in order execution.

Stop-Loss and Take-Profit Strategy: Unlike conventional trading strategies that rely on fixed stop-loss and take-profit levels, this bot employs dynamic trade exits, where positions are closed upon the detection of an opposite trading signal. This approach enhances trade efficiency by allowing profits to run in trending markets while preventing premature exits in volatile conditions.
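The exit rule can be sketched as a stop-and-reverse loop: a position is held until an opposite signal appears, at which point it is closed and, in this sketch, a position in the new direction is opened. Whether the original script reverses immediately or only flattens is an assumption here; commissions and position sizing are omitted, and the prices and signals are hypothetical.

```python
def run_stop_and_reverse(signals, prices):
    """Hold a position until an opposite signal closes (and reverses) it.

    signals: +1 long, -1 short, 0 none. Repeated same-direction signals are
    ignored, so at most one position is open. Returns closed trades as
    (entry_index, exit_index, side, fractional_return).
    """
    trades = []
    side = 0
    entry_idx = None
    for i, s in enumerate(signals):
        if s != 0 and s != side:
            if side != 0:
                # Close the open position at this bar's price.
                ret = side * (prices[i] / prices[entry_idx] - 1)
                trades.append((entry_idx, i, side, ret))
            side, entry_idx = s, i          # open the new direction
    return trades                           # a still-open position is not listed
```

For example, signals [1, 0, 0, -1, 0, 1] on prices [100, 102, 105, 110, 108, 99] give a long trade from bar 0 to bar 3 and a short trade from bar 3 to bar 5, each returning about 10%, with the final long left open.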

Comparative Analysis of Parameter Variations

To validate the effectiveness of the selected parameters, alternative configurations were tested:

Higher entropy thresholds (>40) resulted in excessive trade filtering, leading to missed opportunities.

Lower entropy thresholds (<20) increased trade frequency but led to excessive losses in choppy markets.

Alternative learning rates (0.001 vs. 0.05) demonstrated that lower values caused lagging trade decisions, while higher values increased overfitting risks.

Shorter training periods (<2 years) failed to capture market cycles effectively, reducing the model’s predictive accuracy.

The final parameter selection was based on a balance between precision, adaptability, and risk control, with the aim of maintaining consistent strategy performance across varied market conditions.