1. Intro

Let $n\in\mathbb{N}$ and suppose function $f:A\to\mathbb{R}$, where $A\subseteq\mathbb{R}^n$ and f are Borel. We want a satisfying average for all pathological f taking finite values only. The problem is that the expected value of certain examples of f (§2.1, §2.2), w.r.t. the Hausdorff measure in its dimension, is undefined (§2.3). To fix this, we take the expected value of a sequence of bounded functions converging to f (§2.3.2); however, depending on the sequence of bounded functions chosen, the expected value could be one of several values (thm. 1). Hence, we define a leading question (§3.1) which chooses sequences of bounded functions with the same satisfying and finite expected value, such that the term “satisfying" is explained rigorously.

Note, the leading question (§3.1) was inspired by two problems (i.e., informal versions of thm. 2 and 5):

- (1)

If F is the set of all f where the expected value of f w.r.t. the Hausdorff measure in its dimension is finite, then F is shy [13]. If F is shy, we say “almost no" element of the ambient function space lies in F (§2.4).

- (2)

If F is the set of all f where two sequences of bounded functions that converge to f have different expected values, then F is prevalent [13]. If F is prevalent, we say “almost all" elements of the ambient function space lie in F (§2.4).

In §5, we clarify the leading question (§3.1) by applying its rigorous definitions to specific examples (§5.2.1). We also define a “measure" (§5.3.1, §5.3.2) of the sequence of the bounded functions’ graphs. This is crucial for defining a satisfying expected value, where the “measure" is defined by:

- (1)

Covering each graph with minimal, pairwise disjoint sets of equal Hausdorff measure (§Appendix 8.1);

- (2)

Taking a sample point from each set in the cover (§Appendix 8.2);

- (3)

Taking a “pathway of line segments", starting with an arbitrary sample point and moving to the sample point with the smallest Euclidean distance from it (when more than one point has the smallest Euclidean distance, take either of those points). Next, repeat this process until the pathway intersects with every sample point once (§Appendix 8.3.1);

- (4)

Taking the length of each line segment in the pathway and removing the outliers, i.e., lengths more than a constant C times the interquartile range of the segment lengths, in the limit described in §5.3.1 step 3b (§Appendix 8.3.2);

- (5)

Multiplying the remaining lengths by a constant to get a probability distribution (§Appendix 8.3.3);

- (6)

Taking the Shannon entropy of that probability distribution (§Appendix 8.3.4);

- (7)

Maximizing the entropy w.r.t. all pathways (§Appendix 8.3.5).

We give examples of how to apply the “measure" (§5.3.3-§5.3.5), then define the actual rate of expansion of the sequence of bounded functions’ graphs (§5.4).

Finally, we answer the leading question in §6. Since the answer is complicated, is likely incorrect, and the leading question might not admit a unique expected value, it is best to keep refining the leading question (§3.1) rather than worrying about an immediate solution.

2. Formalizing the Intro

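Let $n\in\mathbb{N}$ and suppose function $f:A\to\mathbb{R}$, where $A\subseteq\mathbb{R}^n$ and f are Borel. Let $\dim_{\mathrm{H}}(\cdot)$ be the Hausdorff dimension, where $\mathcal{H}^{\dim_{\mathrm{H}}(\cdot)}(\cdot)$ is the Hausdorff measure in its dimension on the Borel σ-algebra.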

We want a unique, satisfying average for each of the following functions (§2.1, §2.2) taking finite values only. We explain the method of averaging in later sections, starting from §2.3.1.

2.1. First Special Case of f

If the graph of f is G, we want an explicit f where:

- (1)

The function f is everywhere surjective [1] (i.e., f is defined on a topological space where its restriction to any non-empty open subset is surjective).

- (2)

The graph G has zero Hausdorff measure in its dimension [3].

2.1.1. Potential Answer

If

, using this post [3]:

Consider a Cantor set

with Hausdorff dimension 0 [

4]. Now consider a countable disjoint union

such that each

is the image of

C by some affine map and every open set

contains

for some

m. Such a countable collection can be obtained, e.g., by letting

be contained in the biggest connected component of

(with the center of

being the middle point of the component).

Note that

has Hausdorff dimension 0, so

has Hausdorff dimension one [

2].

Now, let

such that

is a bijection

for all

m (all of them can be constructed from a single bijection

, which can be obtained without choice, although it may be ugly to define) and outside

let

g be defined by

, where

has a graph with Hausdorff dimension 2 [

14] (this doesn’t require choice either).

Then the function g has a graph with Hausdorff dimension 2 and is everywhere surjective, but its graph has Lebesgue measure 0 because it is a graph (so it admits uncountably many disjoint vertical translates).

Note, we can make the construction with union of

rather explicit as follows. Split the binary expansion of

x into strings whose sizes are powers of two, say

becomes

. If this sequence eventually contains only strings of the form

or

, say after

, then send it to

, where

. Otherwise, send it to the explicit continuous function

h given by the linked article [

14]. This will give you something from

Finally, compose an explicit (reasonable) bijection from to . In your case, the construction can be easily adapted so that the or target space is actually , then compose with .

In case we cannot obtain a unique, satisfying average (§3.1) from §2.1.1, consider the following:

2.2. Second Special Case of f

Suppose, we define

, where

, such that:

In the next section, we state why we want §2.1 and §2.2.

2.3. Attempting to Analyze/Average f

Suppose the expected value of f w.r.t. the Hausdorff measure in its dimension is:

Then, using §2.1, the integral of f w.r.t. the Hausdorff measure in its dimension is undefined: i.e., the graph of f has Hausdorff dimension 2 with zero 2-d Hausdorff measure (§2.1.1). Hence, the expected value of f is undefined.

Moreover, observe that in §2.2,

f is nowhere continuous and defined on a countably infinite set, which means that, depending on the enumeration of

A or the sequence

, where the expected value of

f (when it exists) is:

the expected value

is any number from

to

. Hence, we need a specific enumeration that gives a unique, satisfying, and finite expected value, and we must generalize this process to nowhere-continuous functions defined on uncountable domains.

Thus, we want the “expected value of chosen sequences of bounded functions converging to f with the same satisfying and finite expected value", which we describe rigorously in later sections; first, however, consider the following definitions, beginning with §2.3.1:

2.3.1. Definition of Sequences of Functions Converging to f

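Let $n\in\mathbb{N}$ and suppose function $f:A\to\mathbb{R}$, where $A\subseteq\mathbb{R}^n$ and f are Borel.

The sequence of functions $(f_r)_{r\in\mathbb{N}}$, where $f_r:A_r\to\mathbb{R}$ and $(A_r)_{r\in\mathbb{N}}$ is a sequence of sets, converges to f when:

For any $x\in A$, there exists a sequence $x_r\in A_r$ s.t. $x_r\to x$ and $f_r(x_r)\to f(x)$.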

2.3.2. Expected Value of Sequences of Functions Converging to f

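Hence, suppose:

- $(f_r)_{r\in\mathbb{N}}$ converges to f (§2.3.1),
- $|\cdot|$ is the absolute value,
- $\dim_{\mathrm{H}}(\cdot)$ is the Hausdorff dimension,
- $\mathcal{H}^{\dim_{\mathrm{H}}(\cdot)}(\cdot)$ is the Hausdorff measure in its dimension on the Borel σ-algebra,
- the integral is defined w.r.t. the Hausdorff measure in its dimension.

The expected value of $(f_r)_{r\in\mathbb{N}}$ is a real number $\mathbb{E}[f_r]$ when the following is true:

$$\forall\epsilon>0\;\exists N\in\mathbb{N}\;\forall r\geq N:\quad\left|\frac{1}{\mathcal{H}^{\dim_{\mathrm{H}}(A_r)}(A_r)}\int_{A_r} f_r\,d\mathcal{H}^{\dim_{\mathrm{H}}(A_r)}-\mathbb{E}[f_r]\right|<\epsilon;$$

when no such $\mathbb{E}[f_r]$ exists, $\mathbb{E}[f_r]$ is infinite or undefined.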
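As a sanity check, consider the simplest setting, assuming the expected value of §2.3.2 is the limit of the averages of f_r over A_r w.r.t. the Hausdorff measure in the dimension of A_r. When every A_r is finite, that dimension is 0 and the Hausdorff measure is the counting measure, so each average is an ordinary mean. The sketch below uses a hypothetical f = sign on the integers (not one of the functions of §2.1 or §2.2) and two hypothetical sequences of sets, both increasing to all of ℤ, to show how the limit can depend on the sequence chosen:

```python
from fractions import Fraction


def sign(x: int) -> int:
    """Hypothetical example function with values -1, 0 or 1."""
    return (x > 0) - (x < 0)


def finite_average(f, points) -> Fraction:
    """Integral of f w.r.t. counting measure (H^0) divided by the
    counting measure of the finite set, i.e. the plain mean."""
    pts = list(points)
    return Fraction(sum(f(p) for p in pts), len(pts))


def symmetric_sets(r: int):
    # A_r = {-r, ..., r}
    return range(-r, r + 1)


def lopsided_sets(r: int):
    # A_r = {-r, ..., 2r}; these sets also increase to all of Z
    return range(-r, 2 * r + 1)


for r in (10, 100, 1000):
    print(r, float(finite_average(sign, symmetric_sets(r))),
          float(finite_average(sign, lopsided_sets(r))))
```

Taking x_r = x once x ∈ A_r, both sequences converge to f in the sense of §2.3.1, yet the first gives averages equal to 0 for every r while the second gives r/(3r+1), which tends to 1/3. This is the kind of non-uniqueness that thm. 1 formalizes.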

2.3.3. The Set of All Bounded Functions/Sets

Let

and suppose the function

, where

A and

f are Borel. Then, we define the following:

is the set of all bounded Borel functions in a function space

is the set of all bounded Borel subsets of a set

For example,

is the set of all bounded Borel

and

is the set of all bounded Borel subsets of

. Note, however:

Theorem 1. For all , suppose and . There exists a , where and (§2.3.2)

For example, the expected values of the sequences of bounded functions converging to f (§2.3.1, §2.3.2) in §2.1 and §2.2 satisfy thm. 1. For simplicity, we illustrate this with §2.2.

2.3.4. Example Illustrating Theorem 1

For the second case of Borel

(§

2.2), where

, and:

suppose:

and

where for

,

and for

Note, for all

:

Hence,

and

. Also, the set-theoretic limit of

and

is

: i.e.,

where:

(We’re unsure how to prove the set-theoretic limits; however, a mathematician specializing in limits should be able to check.)

Therefore, (thm. 1).

Now, suppose we want to average

and

, which we denote

and

. Note, this is the same as computing the following (i.e., the cardinality is

and the absolute value is

):

Thus, if we assume

in eq. 8, using [9]:

The sum counts the number of fractions with an even denominator and an odd numerator in set , after canceling all possible factors of 2 in the fraction. Let us consider the first case. We can write:

where

counts the fractions

in

that are not counted in

, i.e., for which

. This is the case when the denominator is odd after the cancellation of the factors of 2, i.e., when the numerator

c has a number of factors of 2 greater than or equal to that of

, which we will denote by

a.k.a the 2-valuation of

,

[

11]. That means,

c must be a multiple of

. The number of such

c with

is simply the length of that interval, equal to

, divided by

. Thus,

This obviously tends to zero, proving

Last, we need to show

in eq.

9, where

, proving theorem 1.

Concerning the second case [

9], it is again simpler to consider the complementary set of

such that the denominator is odd when all possible factors of 2 are canceled. We can see that for

, and these obviously include all those we had for smaller

v. The “new" elements in

with

are those that have the denominator

when written in lowest terms. Their number is equal to the number of

,

which is given by Euler’s totient function φ. Since we also consider negative fractions, we have to multiply this by 2. Including

, we have

. There is no simple explicit expression for this (cf. oeis:A99957 [

12]), but we know that

[

12]. On the other hand, the total number of all elements of

is

, since each time we increase

v by 1, we have the additional fractions with the new denominator

and the numerators are coprime with

d, again with the sign + or −. From oeis:A002088 [

10] we know that

, so

, which finally gives

as desired.

Hence, and proving thm.1.
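The second counting argument can be checked numerically. The sketch below assumes, following the description above, that the v-th set consists of 0 together with the fractions ±c/d in lowest terms with 1 ≤ c ≤ d ≤ v, so that each denominator d contributes 2φ(d) elements; the function names are ours. It computes φ with a sieve and reports the share of elements whose reduced denominator is odd:

```python
def totients(limit: int) -> list:
    """Euler's totient phi(d) for 0 <= d <= limit, computed with a sieve."""
    phi = list(range(limit + 1))
    for p in range(2, limit + 1):
        if phi[p] == p:  # untouched so far, hence p is prime
            for m in range(p, limit + 1, p):
                phi[m] -= phi[m] // p  # multiply by (1 - 1/p)
    return phi


def odd_denominator_share(v: int) -> float:
    """Share of {0} and {+-c/d in lowest terms, 1 <= c <= d <= v}
    whose reduced denominator d is odd (0 = 0/1 counts as odd)."""
    phi = totients(v)
    total = 1 + 2 * sum(phi[1:])
    odd = 1 + 2 * sum(phi[d] for d in range(1, v + 1, 2))
    return odd / total


for v in (10, 100, 1000):
    print(v, round(odd_denominator_share(v), 4))
```

The share approaches 2/3, as the known asymptotics Σ_{d≤v} φ(d) ~ 3v^2/π^2 (oeis:A002088) and Σ_{d≤v, d odd} φ(d) ~ 2v^2/π^2 predict; equivalently, the share of even reduced denominators tends to 1/3.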

Thus, consider:

2.4. Definition of Prevalent and Shy Sets

A Borel set $S\subseteq X$ is said to be prevalent if there exists a Borel measure $\mu$ on X such that:

- (1)

$0<\mu(C)<\infty$ for some compact subset C of X, and

- (2)

the set $S+x$ has full $\mu$-measure (that is, the complement of $S+x$ has measure zero) for all $x\in X$.

More generally, a subset F of X is prevalent if F contains a prevalent Borel set.

Moreover, a subset of X is shy if its complement is prevalent. Hence:

If F is prevalent, we say “almost every" element of X lies in F.

If F is shy, we say “almost no" element of X lies in F.

2.5. Motivation for Averaging §2.1 and §2.2

If $\mathbb{E}[f]$ is the expected value of f w.r.t. the Hausdorff measure in its dimension, consider the following problems:

Theorem 2. If F is the set of all f where the expected value of f is finite, then F is shy (§2.4).

Note 3 (Proof that theorem 2 is true).

We follow the argument presented in Example 3.6 of this paper [13]: take the space of measurable functions over A, let P denote the one-dimensional subspace of that space consisting of constant functions (assuming the Lebesgue measure on A), and consider the set of measurable functions over A without finite integral. Take the Lebesgue measure over P as the probe; then, for any fixed measurable function over A:

Since P is one-dimensional, the set of measurable functions over A without finite integral is 1-prevalent, so its complement is shy.

Note 4 (Way of Approaching Theorem 2). For all , suppose that and . If is the set of all , where there exists and such that and is finite (§2.3.2), then F should be prevalent (§2.4) or neither prevalent nor shy (§2.4).

Theorem 5. For all , suppose and . When is the set of all , where and , then F is prevalent (§2.4).

Note 6 (Possible method for proving theorem 5). For all , suppose and . Therefore, suppose is the set of all whose lines of symmetry intersect at one point, where if , then . In addition, is the set of symmetric which clearly forms a shy subset of . Since , we have proven that Q is also shy (i.e., a subset of a shy set is also shy). Since the complement of the shy set Q is prevalent, is prevalent, such that for all , and . If this is correct, we have partially proven thm. 5.

Note 7 (Way of Approaching Theorem 5). Suppose and are arbitrary sets, such that for all , and . If is the set of all , where and is unique, then F should be prevalent (§2.4).

Since thm. 2 and 5 are true, we need to address both theorems at once with the following:

2.5.1. Approach

Suppose and are arbitrary sets, such that for all , and . If is the collection of all , where and is unique, satisfying (§3) and finite, then F should be:

- (1)

a prevalent (§2.4) subset of

- (2)

if not prevalent (§2.4), then a neither prevalent nor shy (§2.4) subset of

.

3. Attempt to Define “Satisfying" in The Approach of §2.5.1

3.1. Leading Question

To define “satisfying" in the blockquote of §2.5.1, we ask the leading question:

Suppose there exist arbitrary sets and , where for all :

- (A)

and

- (B)

and

- (C)

is the sequence of the graph of each

- (D)

□ is the logical symbol for “it’s necessary"

- (E)

C is the chosen center point of (e.g., the origin)

- (F)

E is the fixed, expected rate of expansion of

w.r.t center point

C: e.g.,

(§

3.1.C, §

3.1.E)

- (G)

is the actual rate of expansion of

w.r.t center point

C (§3.1.C, §

3.1.E, §5.4)

Does there exist a unique choice function which chooses a unique set and , where for all , and , such that:

- (1)

(§

2.3.1)

- (2)

For all

, where for all

and

, assuming

, the “measure" (§

5.3.1, §

5.3.2) of

(§

3.1.C) must increase at a rate linear or superlinear to that of

(§

3.1.C)

- (3)

is unique and finite (§

2.3.2)

- (4)

-

For some

and

satisfying (1), (2) and (3), when

f is unbounded (i.e., skip (4) when

f is bounded), for all sets

and

, where

,

,

, and

in (1), (2) and (3), s.t.

(§

2.3.1, §

2.3.2, §

3.1.D), note that for any

, where

and

satisfy (1), (2) and (3):

If the absolute value is

and the

-th coordinate of

C (§

3.1.E) is

,

(§

2.3.1, §

2.3.2)

If

, then for all linear

, where

and the Big-O notation is

, there exists a function

, where (§

3.1.F-G):

such that:

In simpler terms, “the rate of divergence" of

(§

3.1.F-G) is

less than or equal to “the rate of divergence" of

(§

3.1.F-G).

- (5)

-

When F is the set of all , where a choice function chooses a collection and , such that and satisfy (1), (2), (3) and (4), then F should be:

- (a)

a prevalent (§2.4) subset of

- (b)

if not (5a), then a neither prevalent nor shy (§2.4) subset of

- (6)

Out of all choice functions which satisfy (1), (2), (3), (4) and (5), we choose the one with the simplest form, meaning for each choice function fully expanded, we take the one with the fewest variables/numbers?

(In case this is unclear, see §5.)

We are convinced that

in §3.1 crit. 3 is neither unique nor satisfying enough to answer the approach of §2.5.1. Still, adjustments are possible by changing the criteria or by adding new criteria to the question.

4. Question Regarding My Work

Most readers don’t have time to address everything in my research; hence, I ask the following:

Is there a research paper which already addresses the ideas I’m working on? (Unpublished papers, such as mine [7], don’t count.)

5. Clarifying §3

Revisit §3.1 after reading §5, and consider the following:

Is there a simpler version of the definitions below?

5.1. Example of Sequences of Bounded Functions Converging to f (§2.3.1)

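The sequence of bounded functions $(f_r)_{r\in\mathbb{N}}$, where $f_r:A_r\to\mathbb{R}$ and $(A_r)_{r\in\mathbb{N}}$ is a sequence of bounded sets, converges to Borel $f:A\to\mathbb{R}$ when:

For any $x\in A$ there exists a sequence $x_r\in A_r$ s.t. $x_r\to x$ and $f_r(x_r)\to f(x)$ (see [5] for info).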

Example (Example of §2.3.1).

If and , where , then an example of , such that is:

- (1)

- (2)

for

Example (More Complex Example). If and , where , then an example of , such that is:

- (1)

- (2)

for

5.2. Expected Value of Bounded Sequence of Functions

The expected value of

is a real number

, when the following is true:

otherwise when no such

exists,

is infinite or undefined.

5.2.1. Example

Using example 0.1, when where:

- (1)

- (2)

for

If we assume

:

To prove eq. 19 is true, recall:

Hence, for all

Since eq. 19 is true,

. Note, if we simply took the average of

f from

, using the improper integral, the expected value:

is

(when

,

, and

) or

(when

,

, and

), making

undefined. (However, using eq.

12-, we get the

instead of an undefined value.)

5.3. Defining the “Measure"

5.3.1. Preliminaries

We define the “measure" of

, in §5.3.2, which is the sequence of the graph of each

(§

2.3.1). To understand this “measure", continue reading.

- (1)

For every

, “over-cover"

with minimal, pairwise disjoint sets of equal

measure. (We denote the equal measures

, where the former sentence is defined

: i.e.,

enumerates all collections of these sets covering

. In case this step is unclear, see §

Appendix 8.1.)

- (2)

For every

,

r and

, take a sample point from each set in

. The set of these points is “the sample" which we define

: i.e.,

enumerates all possible samples of

. (If this is unclear, see §

Appendix 8.2.)

- (3)

-

For every , r, and ,

- (a)

Take a “pathway" of line segments: we start with a line segment from arbitrary point

of

to the sample point with the smallest

-dimensional Euclidean distance to

(i.e., when more than one sample point has the smallest

-dimensional Euclidean distance to

, take either of those points). Next, repeat this process until the “pathway" intersects with every sample point once. (In case this is unclear, see §

Appendix 8.3.1.)

- (b)

Take the set of the length of all segments in (3a), except for lengths that are outliers (i.e., for any constant

, the outliers are more than

C times the interquartile range of the length of all line segments as

). Define this

. (If this is unclear, see §

Appendix 8.3.2.)

- (c)

Multiply remaining lengths in the pathway by a constant so they add up to one (i.e., a probability distribution). This will be denoted

. (In case this is unclear, see §

Appendix 8.3.3)

- (d)

Take the Shannon entropy [

8] of step (3c). We define this:

which will be

shortened to

. (If this is unclear, see §

Appendix 8.3.4.)

- (e)

Maximize the entropy w.r.t all "pathways". This we will denote:

(In case this is unclear, see §Appendix 8.3.5.)

- (4)

Therefore, the

maximum entropy, using (1) and (2) is:

5.3.2. What Am I Measuring?

We define

and

, which respectively are sequences of the graph for each of the bounded functions

and

(§

2.3.1). Hence, for

constant and

cardinality

- (a)

Also, using (2) and (3e) of

5.3.1, suppose:

then (using

) we also get:

- (b)

Using (2) and (3e) of

5.3.1, suppose:

then (using

) we get

- (1)

If using

and

we have:

then

what I’m measuring from increases at a rate

superlinear to that of

.

- (2)

If using equations

and

(where, using

and

, we swap

r with

v and

with

) we get:

then

what I’m measuring from increases at a rate

sublinear to that of

.

- (3)

-

If using equations , , , and , we both have:

- (a)

or are equal to zero, one or

- (b)

or are equal to zero, one or

then what I’m measuring from increases at a rate linear to that of .

5.3.3. Example of The “Measure" of Increasing at Rate Super-Linear to That of

Suppose, we have function

, where

, and:

such that:

and

where for

,

and

Hence, when

is:

and

is:

Note, the following:

-

Since

and

is countably infinite, there exists minimum

which is 1. Therefore, we don’t need

. We also maximize

(§

5.3.1 step 3e) by the following procedure:

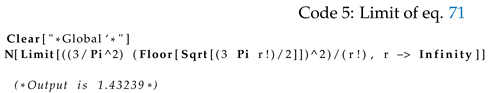

- (1)

For every

, group

into elements with an even numerator when simplified: i.e.,

which we call

, and group

into elements with an odd denominator when simplified: i.e.,

which we call

- (2)

Arrange the points in from least to greatest and take the 2-d Euclidean distance between each pair of consecutive points in . In this case, since all points lie on , take the absolute difference between the x-coordinates of then call this . (Note, this is similar to §5.3.1 step 3a).

- (3)

Repeat step (2) for , then call this . (Note, all points of lie on .)

- (4)

Remove any outliers from (i.e., d is the 2-d Euclidean distance between points and ). Note, in this case, and should be outliers (i.e., for any , the lengths of are more than C times the interquartile range of the lengths of ) leaving us with .

- (5)

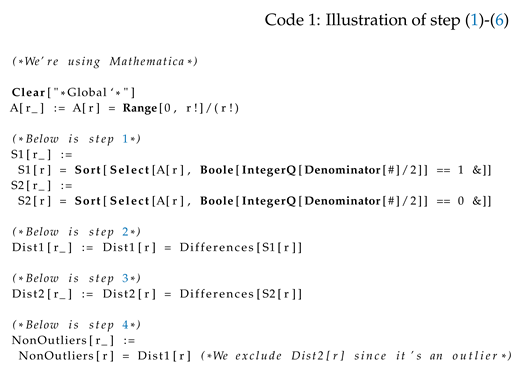

Multiply the remaining lengths in the pathway by a constant so they add up to one. (See P[r] of code 1 for an example)

- (6)

Take the entropy of the probability distribution. (See entropy[r] of code 1 for an example.)
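Steps (1) to (3) only use the order of the points on a line, so they can be sketched without the code images. The sketch below is a hypothetical stand-in: the point set (reduced fractions c/d in [0, 1] with d ≤ r) is our assumption, not the set used in code 1, and the default split is by the parity of the reduced denominator; since step (1) phrases one group through the numerator, the grouping predicate is left as a parameter. Steps (4) to (6) are the generic outlier, normalization and entropy steps of §5.3.1 and are not repeated here.

```python
from fractions import Fraction


def hypothetical_points(r: int) -> set:
    """Hypothetical point set: reduced fractions c/d in [0, 1] with d <= r."""
    return {Fraction(c, d) for d in range(1, r + 1) for c in range(d + 1)}


def has_even_denominator(p: Fraction) -> bool:
    """Default grouping predicate (an assumption): reduced denominator is even."""
    return p.denominator % 2 == 0


def split_groups(points, in_first_group=has_even_denominator):
    """Step (1): split the points into two groups, each sorted from least to greatest."""
    first = sorted(p for p in points if in_first_group(p))
    second = sorted(p for p in points if not in_first_group(p))
    return first, second


def consecutive_gaps(sorted_points):
    """Steps (2)-(3): for points on a line, the distance between consecutive
    points is the absolute difference of their x-coordinates."""
    return [b - a for a, b in zip(sorted_points, sorted_points[1:])]


even, odd = split_groups(hypothetical_points(4))
print([str(g) for g in consecutive_gaps(even)])
print([str(g) for g in consecutive_gaps(odd)])
```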

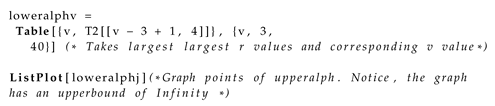

We can illustrate this process with the following code:

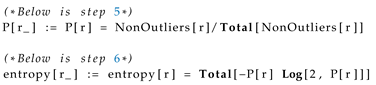

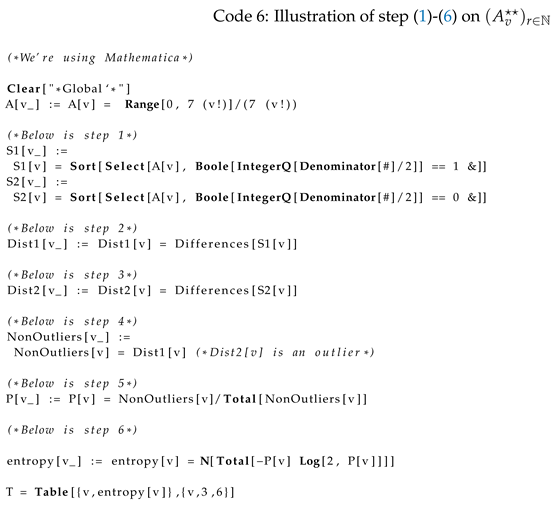

Taking Table[{r,entropy[r]},{r,3,8}], we get:

and notice when:

- (1)

- (2)

- (3)

the output of code 2 can be defined:

Hence, since

:

and

(I need help proving this):

Hence,

entropy[r] is the same as:

Using this post [

15], we assume an approximation of

Table[entropy[v],{v,3,Infinity}] or

is:

Hence, using §

5.3.2 (a) and §

5.3.2 (2), take

(where

is Euler’s Totient function) to compute the following:

where:

- (1)

-

For every , we find a , where , but the absolute value of is minimized. In other words,

for every

, we want

where:

Finally, since

, we wish to prove

within §

5.3.2 crit. 1:

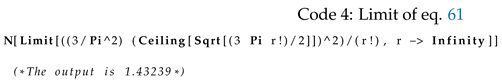

where, using Mathematica, we find that the limit is greater than one:

Also, using §

5.3.2 (b) and §

5.3.2 (1), take

(where

is Euler’s Totient function) computing the following:

where:

- (1)

For every

, we find a

, where

, but the absolute value of

is minimized. In other words, for every

, we want

where:

Finally, since

, we wish to prove

within §

5.3.2 crit. 1:

where, using Mathematica, we find that the limit is greater than one:

Hence, since the limits in eq. 70 and eq. 60 are greater than one and less than

: i.e.,

what we’re measuring from increases at a rate

superlinear to that of

(i.e., §5.3.2 crit. 1).

5.3.4. Example of The “Measure" from Increasing at a Rate Sub-Linear to That of

Using our previous example, we can use the following theorem:

Theorem 8.

If what we’re measuring from increases at a rate superlinear to that of , then what we’re measuring from increases at a rate sublinear to that of

Hence, in our definition of super-linear (§

5.3.2 crit. 1), swap

for

and for

regarding

and

(i.e.,

and

) and notice thm. 8 is true when:

5.3.5. Example of The “Measure" from Increasing at a Rate Linear to That of

Suppose, we have function

, where

, and:

such that:

and

where for

,

and

Hence, when

is:

and

is:

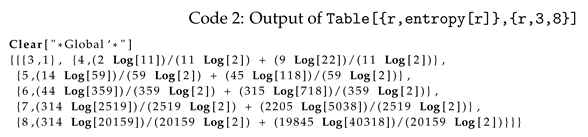

We already know, using eq. 50:

Also, using §

5.3.3 steps 1-6 on

:

where the output is

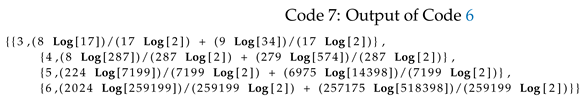

Notice when:

- (1)

- (2)

- (3)

the output of code 7 can be defined:

Hence, since

:

since

(this is proven in [

16]):

Hence,

entropy[r] is the same as:

Therefore, using §

5.3.2 (b) and §

5.3.2 (44a), take

to compute the following:

where:

- (1)

For every

, we find a

, where

, but the absolute value of

is minimized. In other words, for every

, we want

where:

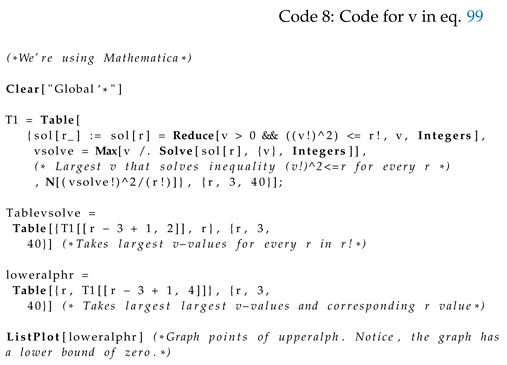

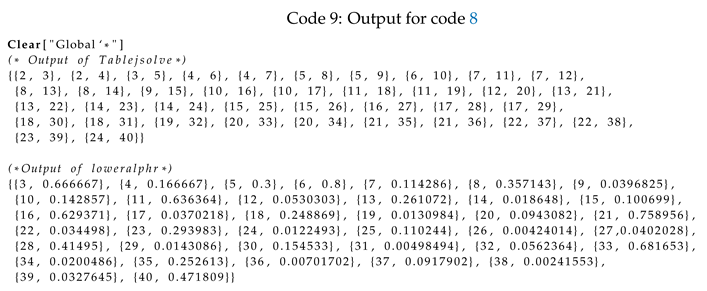

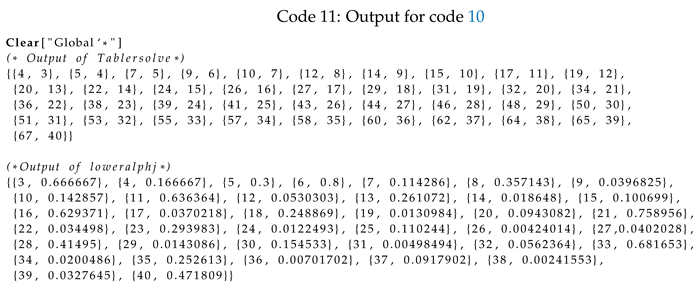

To solve for v, we try the following code:

Note, the output is:

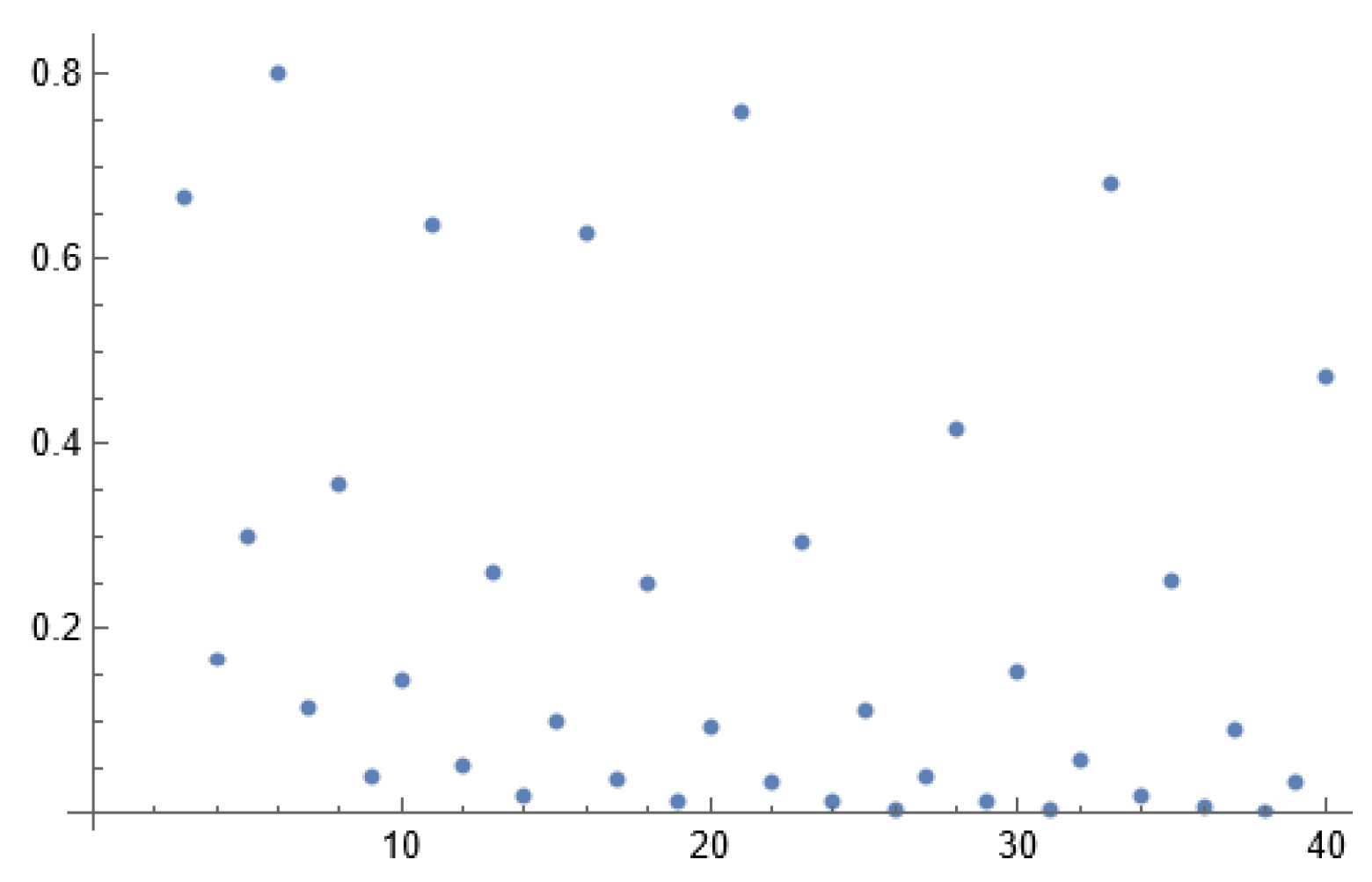

Figure 1.

Plot of loweralphr.


Finally, since the lower bound of

loweralphr is zero, we have shown:

Next, using §

5.3.2 (b) and §

5.3.2 (3b), take

and swap

and

with

and

, to compute the following:

where:

- (1)

For every

, we find a

, where

, but the absolute value of

is minimized. In other words, for every

, we want

where:

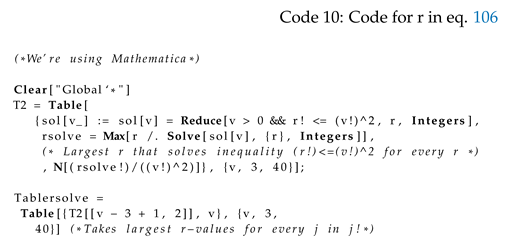

To solve for r, we try the following code:

Note, the output is:

Figure 2.

Plot of loweralphj.


Since the lower bound of

loweralphj is zero, we have shown:

Hence, using eq.

100 and

107, since

both:

- (1)

or are equal to zero, one or

- (2)

or are equal to zero, one or

then what I’m measuring from increases at a rate linear to that of .

5.4. Defining The Actual Rate of Expansion of Sequence of Bounded Sets

5.4.1. Definition of Actual Rate of Expansion of Sequence of Bounded Sets

Suppose,

is the sequence of the graph for each function

(§

2.3.1). When

is the Euclidean distance between points

and a “chosen" center point is

, where

the

actual rate of expansion is:

Note, there are cases of

when

isn’t fixed and

(i.e.,

the expected, fixed rate of expansion).

5.4.2. Example

Suppose, we have

, where

and

, such that

and for

:

Hence, when

is:

such that

, note the farthest point of

from

C is either

or

. Hence, to compute

, we can take

or

:

and the actual rate of expansion is:
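The example above relies on the point of G_r farthest from C. A minimal sketch of that distance for a finite graph follows; the function f(x) = x, the sets A_r = {-r, ..., r} and the center C = (0, 0) are hypothetical choices, and the sketch does not reproduce the formula of §5.4.1, only the farthest-point distance that the example uses.

```python
import math


def graph_points(f, domain):
    """Finite graph {(x, f(x)) : x in domain} as 2-D points."""
    return [(x, f(x)) for x in domain]


def farthest_distance(points, center):
    """Largest Euclidean distance from the chosen center C to a point of the set."""
    return max(math.dist(p, center) for p in points)


center = (0.0, 0.0)
for r in range(1, 6):
    g_r = graph_points(lambda x: x, range(-r, r + 1))
    print(r, farthest_distance(g_r, center))
```

For this hypothetical graph the farthest distance is √2·r, so it increases by √2 each time r increases by 1.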

5.5. Reminder

See if §3.1 is easier to understand.

6. My Attempt At Answering The Approach of §2.5.1

6.1. Choice Function

Suppose we define the following:

- (1)

If

(§

2.3.3) and

(§

2.3.3) are arbitrary sets, then

and

satisfy (1), (2), (3), (4) and (5) of the

leading question in §3.1

- (2)

For all , and

Further note, from §

5.3.2 (a), if we take:

and from §

5.3.2 (b), we take:

Then, §5.3.1 (2), eq. 121, and eq. 122 are:

6.2. Approach

We manipulate the definitions of §5.3.2 (a) and §5.3.2 (b) to solve (1), (2), (3), (4) and (5) of the leading question in §3.1.

6.3. Potential Answer

6.3.1. Preliminaries (Definition of T)

Suppose

is the sequence of the graph on each function

(§

2.3.1). Then, when:

We define an explicit injective , where , such that:

- (1)

If , then

- (2)

If , then

- (3)

If , then

6.3.2. Question

Does T exist? If so, how do we define it?

Hence, using

,

,

,

E,

(§

5.4), and

, such that with the absolute value function

, ceiling function

, and nearest integer function

, we define:

where

,

E, and

T are “removed" when

, the choice function which answers the

leading question in §3.1 could be the following (we explain the reasoning behind this choice function in §6.4):

Theorem 9.

If we define:

where for , we define to be the same as when swapping “" with “" (for eq. 121 & 122) and sets with (for eq. 121–128), then for constant and variable , if:

and:

where for all , there exists a and (§6.1 crit. 1) such that, for all and (§6.1 crit. 2), whenever:

where is the ceiling function, E is the fixed rate of expansion, Γ is the gamma function, n is the dimension of , is the Hausdorff dimension of set , and is the area of the smallest -dimensional box that contains , then:

& the choice function is:

such that satisfies eq. 133 & eq. 134. (Note, we want and ) where the expected value answering the approach of §2.5.1 using the leading question (§3.1) is

6.4. Explaining the Choice Function and Evidence The Choice Function Is Credible

Notice, before reading the program in code 12, that without the “c"-terms in eq. 133 and eq. 134:

- (1)

The choice function in eq.

133 and eq. 134 is zero, when

what I’m measuring from

(§

5.3.2 criteria 1) increases at a rate superlinear to that of

, where

.

- (2)

The choice function in eq.

133 and eq. 134 is zero, when for a given

and

there doesn’t exist

c where eq. 131 is satisfied or

.

- (3)

-

When

c does exist, suppose:

- (a)

When

, then:

- (b)

When

, then:

Hence, for each sub-criterion under crit. (3), if we subtract the limit value from the corresponding limit, then eq. 133 and eq. 134 are zero. (We do this using the “c"-term in eq. 133 and 134.) However, when the exponents of the “c"-terms aren’t equal to

, the limits of eq. 133 and 134 aren’t equal to zero. In fact, we want this whenever we swap

with

. Moreover, we define function

(i.e., eq. 132), where:

- i.

When

, then eq.

133 and 134 without the “c"-terms are zero. (The “c"-terms approach zero and still allow eq.

133 and 134 to equal zero.)

- ii.

When

, then

is zero which makes eq.

133 and 134 equal zero.

- iii.

-

Here are some examples of the numerator of

(eq.

132):

- A.

When , , and , the numerator of is

- B.

When , , and , the numerator of is

- C.

When

,

, and

, the numerator of

is the ceiling of the constant

times the volume of an

n-dimensional ball with finite radius: i.e.,

- D.

When

,

, and

, the numerator of

is the ceiling of the volume of the

n-dimensional ball: i.e.,

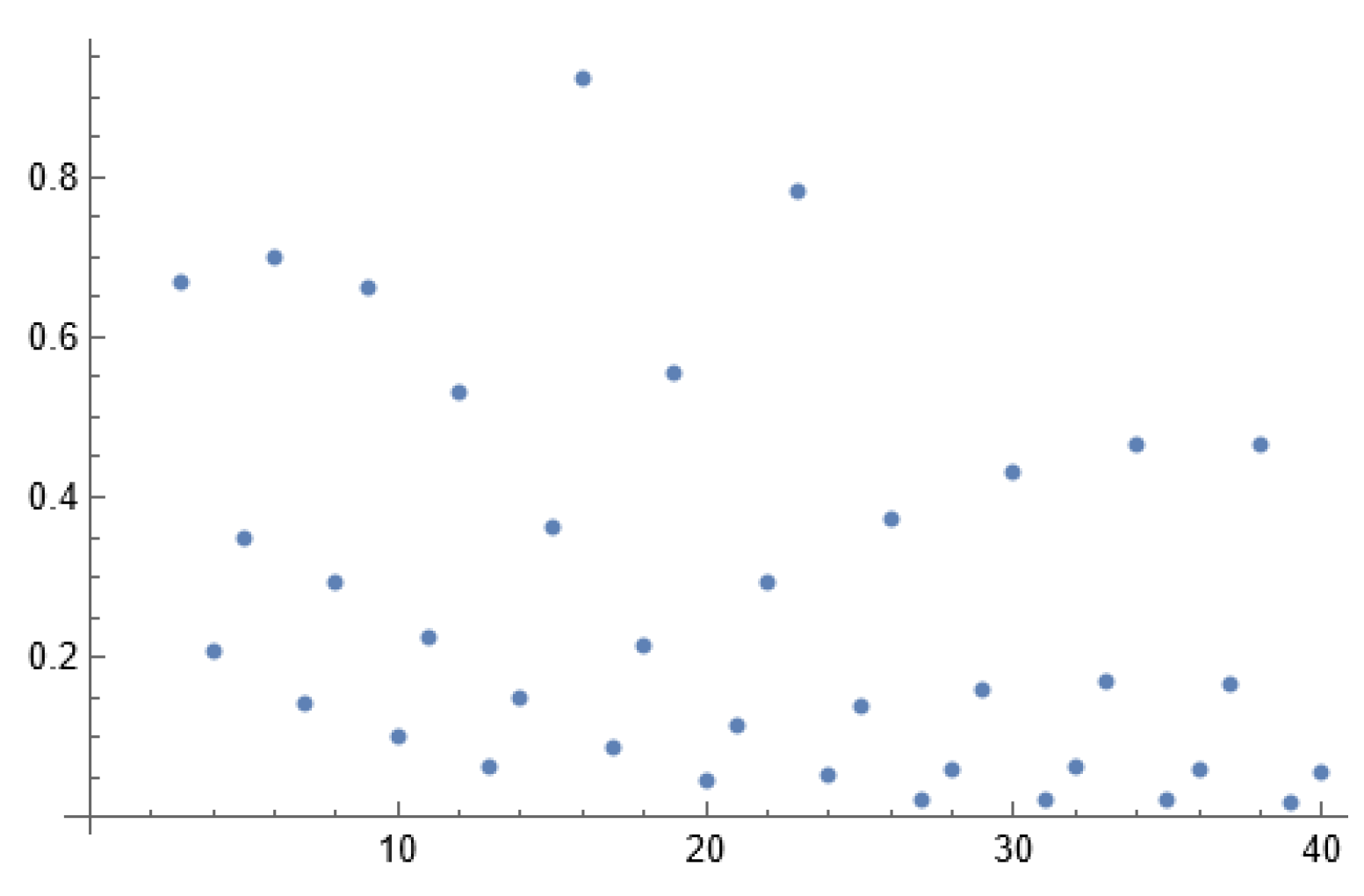
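Examples C and D use the volume of an n-dimensional ball, which is where the gamma function in thm. 9 enters: V_n(R) = π^(n/2) R^n / Γ(n/2 + 1). A minimal sketch of the two ceiling expressions follows; the radius R and the constant c are free parameters here, since their values in eq. 132 are not recoverable from the text.

```python
import math


def ball_volume(n: int, radius: float) -> float:
    """Volume of the n-dimensional Euclidean ball of the given radius."""
    return math.pi ** (n / 2) * radius ** n / math.gamma(n / 2 + 1)


def ceil_scaled_ball_volume(c: float, n: int, radius: float) -> int:
    """ceil(c * V_n(R)): example C for a general constant c, example D for c = 1."""
    return math.ceil(c * ball_volume(n, radius))


for n in (2, 3, 4):
    print(n, ball_volume(n, 1.0), ceil_scaled_ball_volume(1.0, n, 1.0))
```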

Now, consider the code for eq.

133 and eq. 134. (Note, the set theoretic limit of

is the graph of function

.) In this example,

, and:

such that:

the ceiling function is

, and:

such for

,

and

Hence, when

is:

and

is:

Note the following (we leave it to mathematicians to work out LengthS1, LengthS2, Entropy1 and

for other

A and

f in code 12).

6.4.1. Evidence with Programming

7. Questions

- (1)

Does §6 answer the leading question in §3.1?

- (2)

Using thm. 9, when f is defined in §2.1, does have a finite value?

- (3)

Using thm. 9, when f is defined in §2.2, does have a finite value?

- (4)

If there’s no time to check questions 1, 2 and 3, see §4.

8. Appendix of §5.3.1

8.1. Example of §5.3.1, step 1

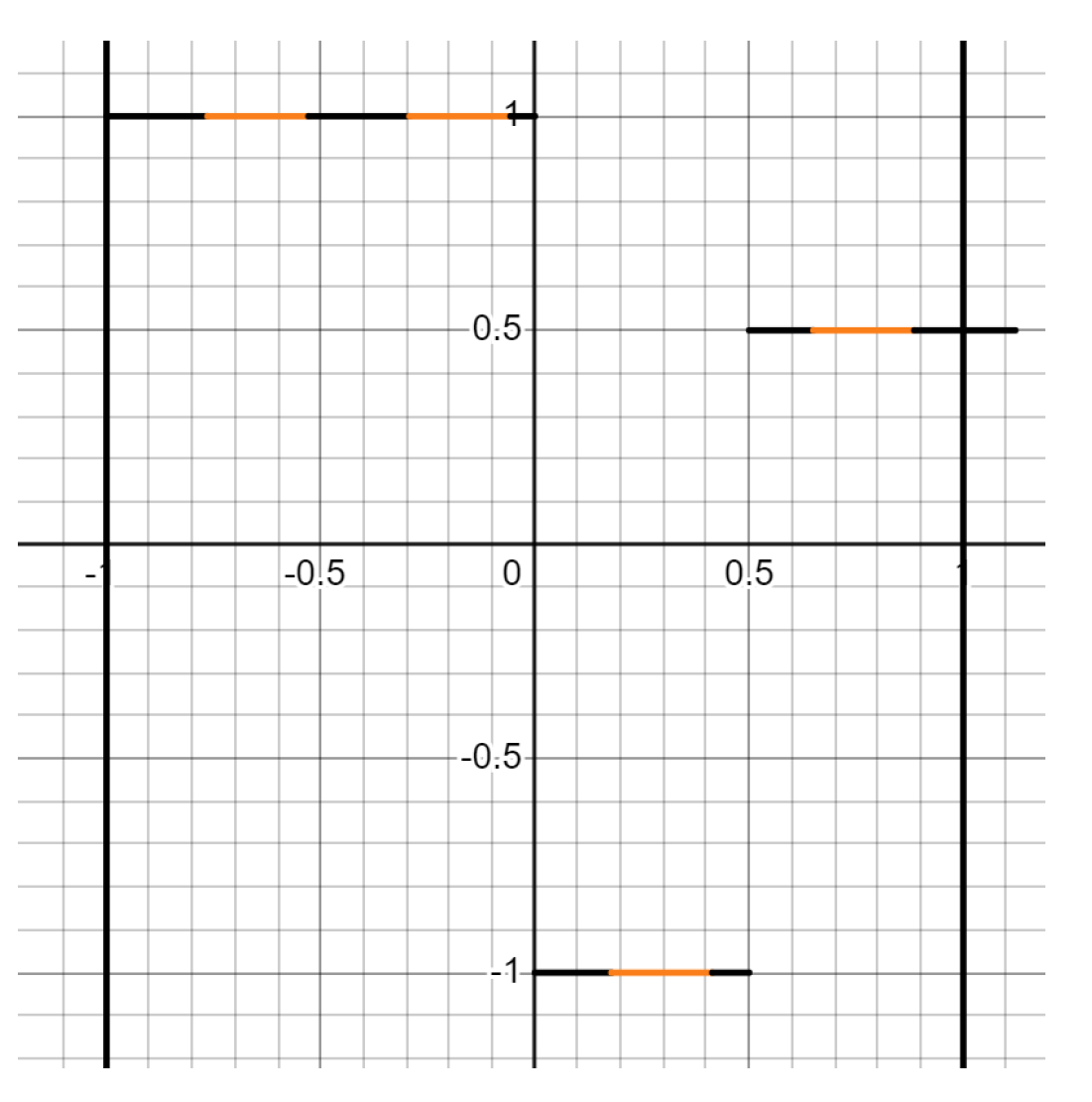

Suppose

- (1)

- (2)

When defining

:

- (3)

Then one example of

, using §

5.3.1 step 1, (where

) is:

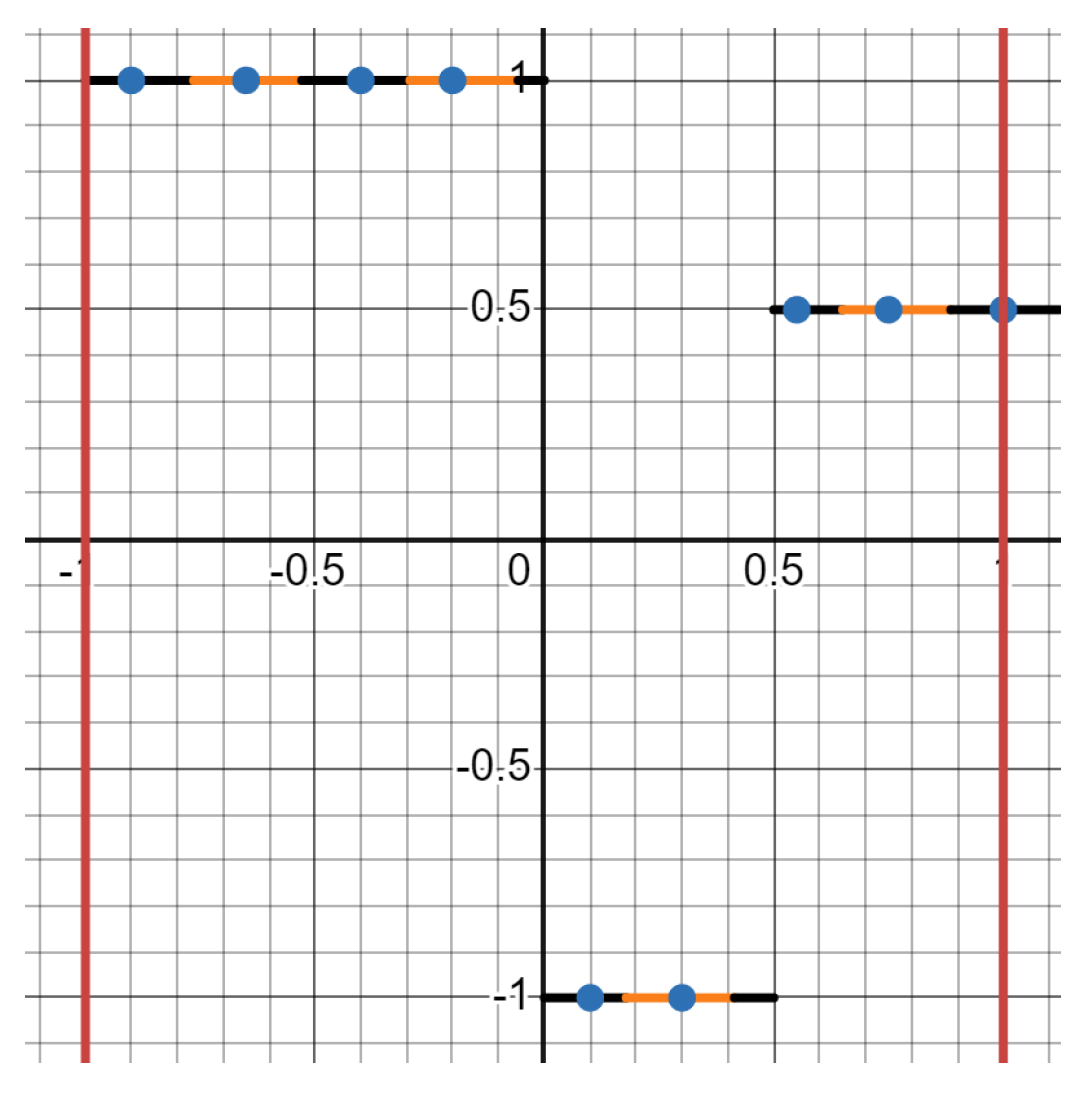

Note, the length of each partition is

, where the borders could be approximated as:

which is illustrated using

alternating orange/black lines of equal length covering

(i.e., the black vertical lines are the smallest and largest

x-coordinates of

).

(Note, the alternating covers in

Figure 3 satisfy step (1) of §

5.3.1, because the Hausdorff measure

in its dimension of the covers is

and there are 9 covers over-covering

: i.e.,

Figure 3.

The alternating orange & black lines are the “covers" and the vertical lines are the boundaries of .


Definition 1 (

Minimum Covers of Measure covering ).

We can compute the minimum covers of , using the formula:

where

).
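For the one-dimensional situation of Figure 3 (a set spread along an interval [a, b] of x-coordinates), a minimal sketch of an over-cover by pairwise disjoint intervals of equal length ε follows. It assumes the set is dense in [a, b], so that the disjoint covers must have total length at least b - a; then ceil((b - a)/ε) intervals (at least one) are necessary and sufficient. This is our reading and not necessarily the formula of Definition 1. The covers are half-open except the last, which is closed so that b is covered.

```python
import math
from fractions import Fraction


def minimal_cover_count(a, b, eps) -> int:
    """Fewest pairwise disjoint intervals of length eps whose union contains [a, b]."""
    if eps <= 0 or b < a:
        raise ValueError("need eps > 0 and a <= b")
    return max(1, math.ceil((b - a) / eps))


def over_cover(a, b, eps):
    """Covers [a + k*eps, a + (k+1)*eps) for k = 0..m-1, the last one closed.

    Returned as (left, right, right_is_closed) triples; the last cover may
    extend past b, which is what makes it an "over-cover".
    """
    m = minimal_cover_count(a, b, eps)
    return [(a + k * eps, a + (k + 1) * eps, k == m - 1) for k in range(m)]


print(over_cover(Fraction(0), Fraction(1), Fraction(2, 9)))
```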

Note there are other examples of for different . Here is another case:

which can be defined (see eq.

146 for comparison):

In the case of

, there are uncountably many

different covers which can be used. For instance, when

(i.e.,

) consider:

When

and

, we get

Figure 4 and when

and

, we get

Figure 3

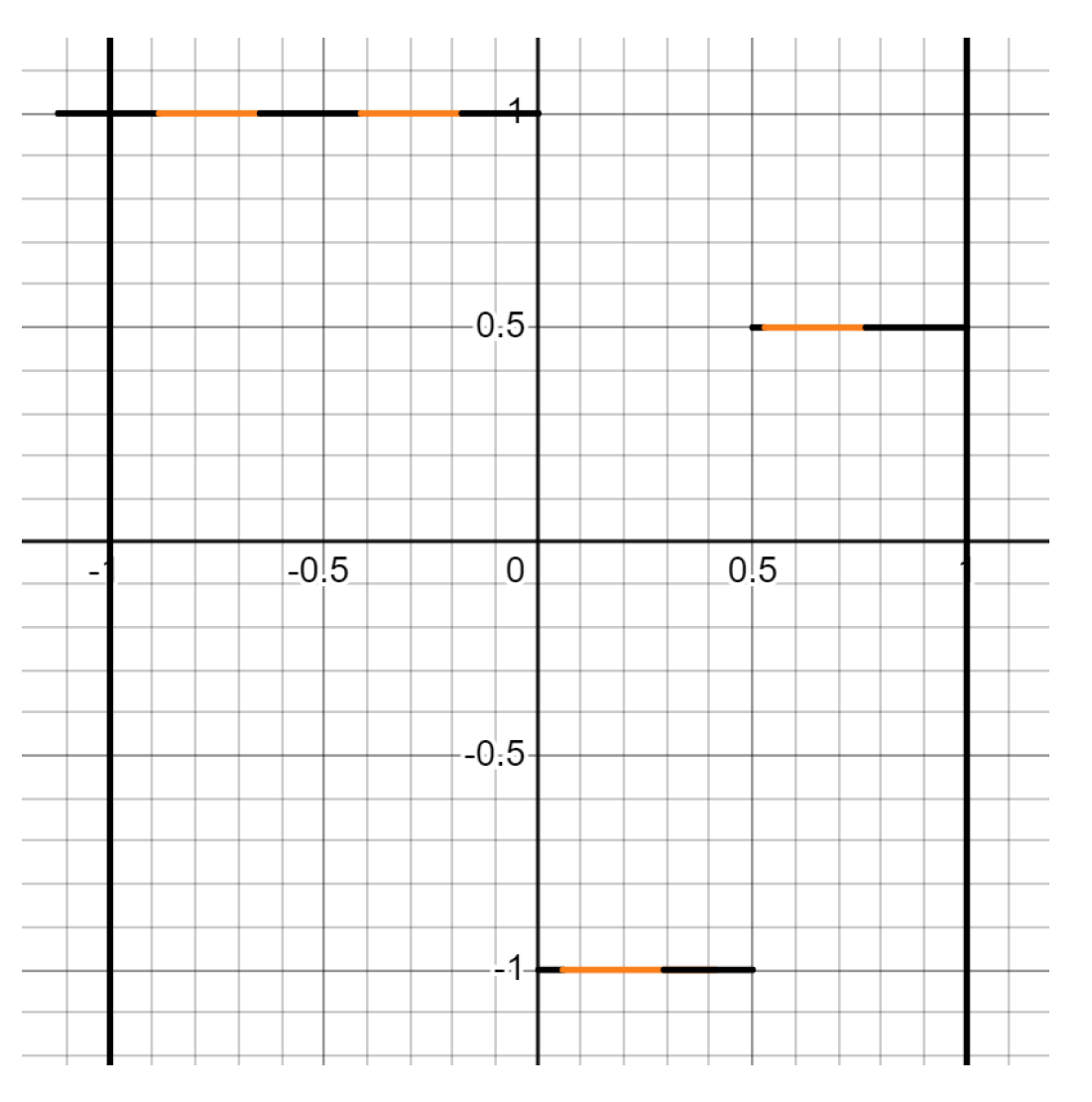

Figure 4.

This is similar to

Figure 3, except the start-points of the covers are shifted all the way to the left.


8.2. Example of §5.3.1, step 2

Suppose:

- (1)

- (2)

When defining

: i.e.,

- (3)

- (4)

- (5)

, using eq. 147 and Figure 3, which is approximately

Then, an example of

is:

Below, we illustrate the sample: i.e., the set of all blue points

in each orange and black line of covering

:

Figure 5.

The blue points are the “sample points", the alternating black and orange lines are the “covers", and the red lines are the smallest & largest x-coordinates .

Note, there are multiple samples that can be taken, as long as one sample point is taken from each cover in .
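Step 2 can be sketched as follows, assuming finitely many points (x, y), covers given as half-open x-intervals, and a seeded random choice inside each cover; any other rule that takes exactly one point per cover would serve equally well. Covers containing no point are skipped, which is our assumption since the text does not address empty covers. The point set and covers below are hypothetical.

```python
import random
from fractions import Fraction


def sample_one_per_cover(points, covers, rng):
    """Take one point (x, y) whose x-coordinate lies in each half-open cover [lo, hi)."""
    sample = []
    for lo, hi in covers:
        members = [p for p in points if lo <= p[0] < hi]
        if members:  # skip covers that contain no point of the set
            sample.append(rng.choice(members))
    return sample


# Hypothetical set: points of y = x at x = 0, 1/10, ..., 1.
points = [(Fraction(k, 10), Fraction(k, 10)) for k in range(11)]
eps = Fraction(1, 4)
covers = [(k * eps, (k + 1) * eps) for k in range(5)]
print(sample_one_per_cover(points, covers, random.Random(0)))
```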

8.3. Example of §5.3.1, step 3

Suppose

- (1)

- (2)

When defining

:

- (3)

- (4)

- (5)

, using eq. 147 and Figure 3, is approximately

- (6)

, using eq. 152, is:

Therefore, consider the following process:

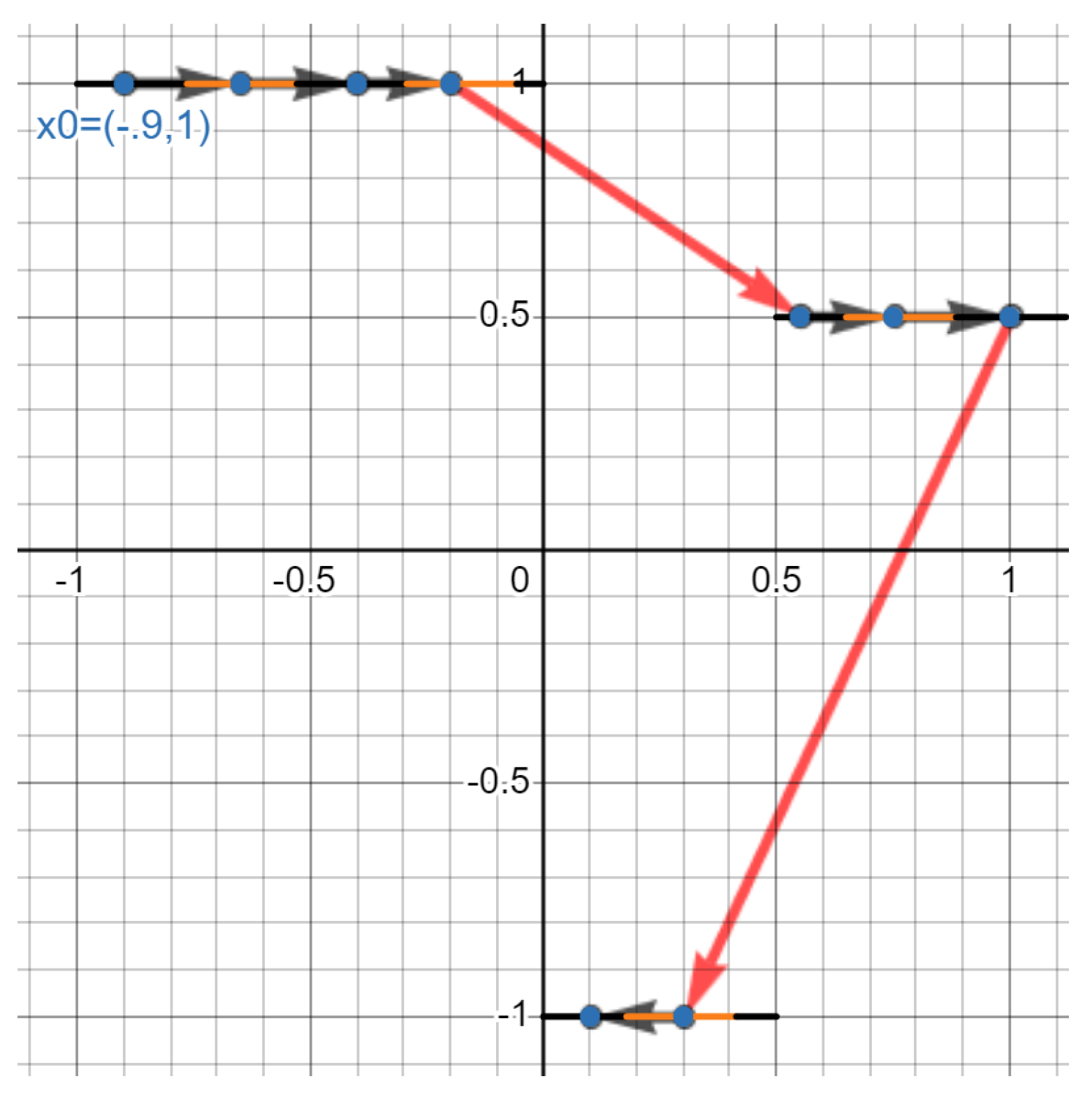

8.3.1. Step 3a

If

is:

suppose

. Note, the following:

- (1)

is the next point in the “pathway" since it’s a point in with the smallest 2-d Euclidean distance to instead of .

- (2)

is the third point since it’s a point in with the smallest 2-d Euclidean distance to instead of and .

- (3)

is the fourth point since it’s a point in with the smallest 2-d Euclidean distance to instead of , , and .

- (4)

we continue this process, where the “pathway" of is:

Note 10.

If more than one point has the minimum 2-d Euclidean distance from , , , etc., take all potential pathways: e.g., using the sample in eq. 156, if , then since and have the smallest Euclidean distance to , take two pathways:

and also:
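The pathway construction of Step 3a, including the branching described in Note 10, can be sketched as a depth-first search that grows each pathway by one nearest unvisited point at a time. This is a minimal sketch rather than the author's code: `nearest_neighbor_pathways` is a hypothetical name, points are tuples of coordinates, and ties are detected with a small floating-point tolerance `tol` (an assumption; the text treats ties as exact).

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def nearest_neighbor_pathways(sample: Sequence[Point], start: int,
                              tol: float = 1e-12) -> List[List[int]]:
    """Return every nearest-neighbor pathway, as lists of indices into
    `sample`, that starts at sample[start] and visits each point once.

    When several unvisited points tie for the smallest Euclidean distance,
    one branch is kept for each of them (cf. Note 10).
    """
    finished: List[List[int]] = []
    stack: List[List[int]] = [[start]]      # partial pathways still growing
    while stack:
        path = stack.pop()
        if len(path) == len(sample):
            finished.append(path)
            continue
        current = sample[path[-1]]
        visited = set(path)
        unvisited = [i for i in range(len(sample)) if i not in visited]
        dists = {i: math.dist(current, sample[i]) for i in unvisited}
        best = min(dists.values())
        for i in unvisited:
            if math.isclose(dists[i], best, rel_tol=tol, abs_tol=tol):
                stack.append(path + [i])    # one branch per tied nearest point
    return finished


# Two points tie at distance 1 from the origin, so two pathways result.
pts = [(0.0, 0.0), (1.0, 0.0), (-1.0, 0.0)]
print(sorted(nearest_neighbor_pathways(pts, 0)))
# [[0, 1, 2], [0, 2, 1]]
```

Returning index lists rather than points keeps repeated sample points distinguishable.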

8.3.2. Step 3b

Next, take the length of all line segments in each pathway. In other words, suppose

is the

n-dimensional Euclidean distance between points

. Using the pathway in eq. 157, we want:

The distances of its line segments can be approximated as:

Also, we see that the outliers [6] are

and

(i.e., notice that the outliers are more prominent for

). Therefore, remove

and

from our set of lengths:

This is illustrated in Figure 6:

Figure 6.

The black arrows are the parts of the “pathway” whose lengths aren’t outliers. The lengths of the red arrows in the pathway are outliers.


Hence, when

, using §5.3.1, step 3b, and eq. 156, we note:
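Step 3b turns a pathway into the lengths of its line segments and then discards outliers. The text identifies the outliers by citing a general reference [6] without fixing a rule, so the sketch below assumes Tukey's fences (lengths outside [Q1 - 1.5 IQR, Q3 + 1.5 IQR]) as one common criterion; it need not flag the same lengths as the worked example. `segment_lengths` and `remove_outliers` are hypothetical names.

```python
import math
import statistics
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def segment_lengths(sample: Sequence[Point], path: Sequence[int]) -> List[float]:
    """Euclidean length of each line segment along a pathway of indices."""
    return [math.dist(sample[a], sample[b]) for a, b in zip(path, path[1:])]


def remove_outliers(lengths: Sequence[float], k: float = 1.5) -> List[float]:
    """Drop lengths outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR].

    Assumption: the source does not fix an outlier rule; Tukey's fences are
    one common choice. With fewer than four lengths nothing is removed.
    """
    if len(lengths) < 4:
        return list(lengths)
    q1, _, q3 = statistics.quantiles(lengths, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [x for x in lengths if low <= x <= high]


print(segment_lengths([(0, 0), (3, 4), (3, 5)], [0, 1, 2]))  # [5.0, 1.0]
print(remove_outliers([1, 1, 1, 1, 1, 1, 1, 10]))            # [1, 1, 1, 1, 1, 1, 1]
```

The multiplier `k` controls how aggressive the removal is; a larger `k` keeps more of the long segments.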

8.3.3. Step 3c

To convert the set of distances in eq. 159 into a probability distribution, we take:

Then divide each element in

by 1.35, which gives us the probability distribution:

Hence,
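Step 3c normalizes the retained lengths so that they sum to 1. Assuming the divisor 1.35 in the worked example is the sum of the retained lengths from Step 3b (that sum is not reproduced here), the step is a plain division by the total; `to_distribution` is a hypothetical helper.

```python
from typing import List, Sequence


def to_distribution(lengths: Sequence[float]) -> List[float]:
    """Divide each retained length by their total, so the results sum to 1."""
    total = sum(lengths)
    if total <= 0:
        raise ValueError("need at least one positive length")
    return [x / total for x in lengths]


print(to_distribution([1.0, 3.0]))  # [0.25, 0.75]
```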

8.3.4. Step 3d

Take the Shannon entropy of eq. 161:

We shorten

to

, giving us:
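For Step 3d, the Shannon entropy of a distribution p is H(p) = -∑ p_i log p_i. The logarithm base used in the worked example is not visible here, so the sketch assumes base 2 as a default and exposes it as a parameter; zero probabilities are skipped, following the usual convention 0 log 0 = 0. `shannon_entropy` is a hypothetical name.

```python
import math
from typing import Sequence


def shannon_entropy(probs: Sequence[float], base: float = 2.0) -> float:
    """H(p) = -sum p_i * log_base(p_i); terms with p_i == 0 contribute 0."""
    return sum(-p * math.log(p, base) for p in probs if p > 0)


print(shannon_entropy([0.5, 0.5]))
print(shannon_entropy([0.25] * 4))
```

A uniform distribution over m outcomes gives the maximum value log_base(m), so pathways with evenly spaced segments score highest.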

8.3.5. Step 3e

Take the entropy of the sample with respect to all pathways:

In other words, we’ll compute:

We do this by repeating §8.3.1-§8.3.4 for different

(i.e., in the equation that has multiple values; see Note 10).

Hence, since the largest value among eqs. 164-172 is

:
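Putting Steps 3a-3e together, the entropy of a sample is the largest pathway entropy found. The sketch below assumes that the quantity varied in Step 3e is the starting sample point and that every tie-branch of Note 10 is included. It also assumes Tukey's fences for outliers, base-2 logarithms, and a small floating-point tolerance for ties; all function names are hypothetical.

```python
import math
import statistics
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def pathways(sample: Sequence[Point], start: int,
             tol: float = 1e-12) -> List[List[int]]:
    """All nearest-neighbor pathways from sample[start] (Step 3a, Note 10)."""
    done: List[List[int]] = []
    stack: List[List[int]] = [[start]]
    while stack:
        path = stack.pop()
        if len(path) == len(sample):
            done.append(path)
            continue
        here = sample[path[-1]]
        seen = set(path)
        rest = [i for i in range(len(sample)) if i not in seen]
        dist = {i: math.dist(here, sample[i]) for i in rest}
        best = min(dist.values())
        # Branch once for every unvisited point tied for the nearest distance.
        stack.extend(path + [i] for i in rest
                     if math.isclose(dist[i], best, rel_tol=tol, abs_tol=tol))
    return done


def drop_outliers(xs: Sequence[float], k: float = 1.5) -> List[float]:
    """Step 3b outlier removal, assuming Tukey's fences."""
    if len(xs) < 4:
        return list(xs)
    q1, _, q3 = statistics.quantiles(xs, n=4)
    low, high = q1 - k * (q3 - q1), q3 + k * (q3 - q1)
    return [x for x in xs if low <= x <= high]


def pathway_entropy(sample: Sequence[Point], path: Sequence[int],
                    base: float = 2.0) -> float:
    """Steps 3b-3d for one pathway: lengths, outliers, normalize, entropy."""
    lengths = [math.dist(sample[a], sample[b]) for a, b in zip(path, path[1:])]
    kept = drop_outliers(lengths)
    total = sum(kept)
    if total == 0:
        return 0.0  # no positive lengths: treated as zero entropy (assumption)
    return sum(-(x / total) * math.log(x / total, base) for x in kept if x > 0)


def sample_entropy(sample: Sequence[Point], base: float = 2.0) -> float:
    """Step 3e: largest pathway entropy over every start point and tie-branch."""
    return max(pathway_entropy(sample, path, base)
               for start in range(len(sample))
               for path in pathways(sample, start))


print(sample_entropy([(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]))
```

In this small example the pathways starting at either end have two equal segments, so they attain the maximum; the pathways starting in the middle have unequal segments and lower entropy. The search over tie-branches can grow quickly, so this brute-force version is only meant for small samples such as the one in §8.3.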

References

- Claudio Bernardi and Claudio Rainaldi. Everywhere surjections and related topics: Examples and counterexamples. Le Matematiche, 73(1):71–88, 2018. https://www.researchgate.net/publication/325625887_Everywhere_surjections_and_related_topics_Examples_and_counterexamples.

- Pablo Shmerkin (https://mathoverflow.net/users/11009/pablo shmerkin). Hausdorff dimension of r x x. MathOverflow. https://mathoverflow.net/q/189274.

- Arbuja (https://mathoverflow.net/users/87856/arbuja). Is there an explicit, everywhere surjective whose graph has zero Hausdorff measure in its dimension? MathOverflow. https://mathoverflow.net/q/476471.

- JDH (https://math.stackexchange.com/users/413/jdh). Uncountable sets of Hausdorff dimension zero. Mathematics Stack Exchange. https://math.stackexchange.com/q/73551.

- SBF (https://math.stackexchange.com/users/5887/sbf). Convergence of functions with different domain. Mathematics Stack Exchange. https://math.stackexchange.com/q/1063261.

- Renze John. Outlier. https://en.m.wikipedia.org/wiki/Outlier.

- Bharath Krishnan. Bharath Krishnan’s ResearchGate profile. https://www.researchgate.net/profile/Bharath-Krishnan-4.

- Robert M. Gray. Entropy and Information Theory. Springer, New York, 2nd edition, 2011. https://ee.stanford.edu/~gray/it.pdf.

- MFH. Prove the following limits of a sequence of sets? Matchmaticians, 2023. https://matchmaticians.com/questions/hinaeh.

- OEIS Foundation Inc. A002088. The On-Line Encyclopedia of Integer Sequences, 1991. https://oeis.org/A002088.

- OEIS Foundation Inc. A011371. The On-Line Encyclopedia of Integer Sequences, 1999. https://oeis.org/A011371.

- OEIS Foundation Inc. A099957. The On-Line Encyclopedia of Integer Sequences, 2005. https://oeis.org/A099957.

- William Ott and James A. Yorke. Prevalence. Bulletin of the American Mathematical Society, 42(3):263–290, 2005. https://www.ams.org/journals/bull/2005-42-03/S0273-0979-05-01060-8/S0273-0979-05-01060-8.pdf.

- T.F. Xie and S.P. Zhou. On a class of fractal functions with graph Hausdorff dimension 2. Chaos, Solitons & Fractals, 32(5):1625–1630, 2007. https://www.sciencedirect.com/science/article/pii/S0960077906000129.

- ydd. Finding the asymptotic rate of growth of a table of value? Mathematica Stack Exchange. https://mathematica.stackexchange.com/a/307050/34171.

- ydd. How to find a closed form for this pattern (if it exists)? Mathematica Stack Exchange. https://mathematica.stackexchange.com/a/306951/34171.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).