1. Introduction

Linear classifiers are often favored over nonlinear models, such as neural networks, for certain tasks due to their comparable performance in high-dimensional data spaces, faster training speeds, reduced tendency to overfit, and greater interpretability in decision-making ([

1,

2]). Notably, linear classifiers have demonstrated performance on par with convolutional neural networks (CNNs) in medical classification tasks, such as predicting Alzheimer’s disease from structural or functional brain MRI images ([

3,

4]).

These linear classifiers can be categorized into several types based on the principles they use to define the decision boundary or classification discriminant, as described below, where N is the number of samples, M is the number of features and k denotes an iteration term:

The minimum distance classifier (MDC) ([

5]) which is a prototype-based classifier that assigns points according to the perpendicular bisector boundary between the centroids of two groups. This classifier has

training time complexity.

Fisher’s linear discriminant analysis (LDA, specifically refer to Fisher’s LDA in this study) is a variance-based classifier which can be trained in cubic time complexity

. While faster implementations like spectral regression discriminant analysis (SRDA) ([

6]) claim lower training time complexity, their efficiency depends on specific conditions, such as a sufficiently small iterative term and sparsity in the data. These constraints limit SRDA’s applicability in real-world classification tasks.

Support vector machine (SVM) ([

7]) with a linear kernel is a maximum-margin classifier, which has a training time complexity of

. Fast implementations include Liblinear (referred to as fast SVM) and SVM-SGD, which use coordinate descent and stochastic gradients respectively, achieving quasi-quadratic time complexity

.

Perceptron ([

8]) is a misclassification-triggered ruled-based classifier. Its training time complexity is

.

Logistic regression (LR) ([

9]) is a statistics-based classifier. It can be trained using either maximum likelihood estimation (MLE) or iteratively reweighted least squares, with time complexity of

and

respectively, and with

using the same coordinate descent technique in Liblinear.

Among linear classifiers, MDC offers the lowest training time complexity but suffers from limited performance due to its overly simplified decision boundary. Widely used methods such as LDA and SVM are often favored for their strong predictive capabilities. However, these methods can be computationally expensive, particularly for large-scale datasets. Hence, achieving both high scalability and strong predictive performance remains a challenging tradeoff, highlighting the need for new approaches that balance these competing demands.

To address this challenge, this paper makes the following 3 key contributions:

A geometric theoretical framework for classifiers: (See

Appendix A.1 for explanation of the term theoretical framework.) This study introduces a geometric framework, geometric discriminant analysis (GDA), to unify certain linear classifiers under a common theoretical model. GDA leverages a special type of centroid discriminant basis (CDB0), a vector connecting the centroids of two classes, which serves as the foundation for constructing classifier decision boundaries. The GDA framework adjusts the CDB0 through geometric corrections under various constraints, enabling the derivation of classifiers with desirable properties. Notably, we show that: MDC is a special case of GDA, where geometric corrections are not applied to CDB0; linear discriminant analysis (LDA) is a special case of GDA, where the CDB0 is corrected by maximizing the projection variance ratio.

A high-performance and scalable linear geometric classifier: Building upon the GDA framework, we propose centroid discriminant analysis (CDA), a novel geometric classifier that iteratively adjusts the CDB through performance-dependent rotations on 2D planes. These rotations are optimized via Bayesian optimization, enhancing the decision boundary’s adaptability efficiently. CDA achieves lower training time complexity (quadratic) and is more efficient than LDA and SVM. Experimental evaluations on 27 real-world datasets of standard images, medical images and chemical property data, reveal that CDA consistently outperforms LDA, SVM and and LR in predictive performance, scalability, and stability.

Nonlinear geometric classification via kernel method: For complex data where linear models are not enough, CDA supports nonlinear classification via kernel method. We demonstrated with challenging image and chemical datasets that kernel CDA improved over linear CDA and outperformed kernel SVM. Nonetheless, while kernel CDA offers greater expressiveness and improved capability, linear CDA remains highly valuable for real-world tasks due to its superior training efficiency, interpretability, and reduced risk of overfitting.

More importantly, we emphasize that CDA not only achieves robust predictive performance but also offers superior computational efficiency. Unlike traditional methods such as LDA and SVM, which typically exhibit cubic time complexity, CDA operates with quadratic complexity, resulting in significantly faster runtimes in practice. These advantages make CDA particularly attractive for real-world applications, where scalability, interpretability, and efficiency are essential. As linear classifiers remain widely used across scientific domains for their transparency and speed, CDA represents a valuable advancement for practitioners seeking reliable and computationally lightweight solutions. Lastly, the GDA theoretical framework, from which CDA is derived, may inspire new classifiers under the definition of different geometric constraints.

2. Geometric Discriminant Analysis (GDA)

In this study, we propose a generalized geometric theoretical framework for centroid-based linear classifiers. In geometry, the generalized definition of centroid is the weighted average of points. For binary classification problem, training a linear classifier involves finding a discriminant (perpendicular to the decision boundary) and a bias. In GDA, we focus on the centroid discriminant basis (CDB) which is defined as the unit vector from the centroid of negative class to positive class. Specifically, we focus on a particular discriminant termed as CDB0, which is constructed from centroids with uniform sample weights. GDA theoretical framework incorporates all the classifiers whose classification discriminant is CDB0 adjusted by geometric corrections on CDB0, which is described in details in the following using an instance with LDA. Moreover, in the GDA theoretical framework, the classifier discriminants are scaling-invariant, which is explained in

Appendix A.2. Thus, throughout,

denotes a generic constant independent of the variable of interest.

Without loss of generality, assume a two-dimensional space (see

Appendix D for any-dimensional proofs). We derive the GDA theoretical framework as a generalization of Fisher’s LDA (hereafter referred to simply as LDA) and includes it under a certain geometric constraint. In LDA, the linear discriminant (LD) is derived as the maximization of between-class variance to within-class variance:

where

and

are the means of negative and positive class,

and

are their covariance matrices,

is the projection coefficient. The maximum is obtained when

([

10]), or

, where

is the normalized LDA discriminant in the GDA theoretical framework with a normalizing constant

,

N is the total number of samples.

is the sum of within-class covariance matrices of each class

:

And the inverse of the covariance matrix

is:

Where

is the adjugate of

. Let

denote the unit vector of

, then

, and

. Further,

where

is a correction matrix that acts as the second-order term associated with the sum of covariance matrices. Since

is the unit vector constructed from centroids with uniform sample weights (i.e., arithmetic means), it can be written as

As in the covariance matrix,

, and let

,

, the linear discriminant in Eq.

4 can be compressed into the following general form (

Figure A1a-c, general case):

Since from Equation (

5),

can be decomposed into the basis

and a correction on the basis, we write out

to indicate that

is a geometrical discriminant (GD), a discriminant geometrically modified from

. This geometrical modification can be intuitively interpreted as performing rotations on

.

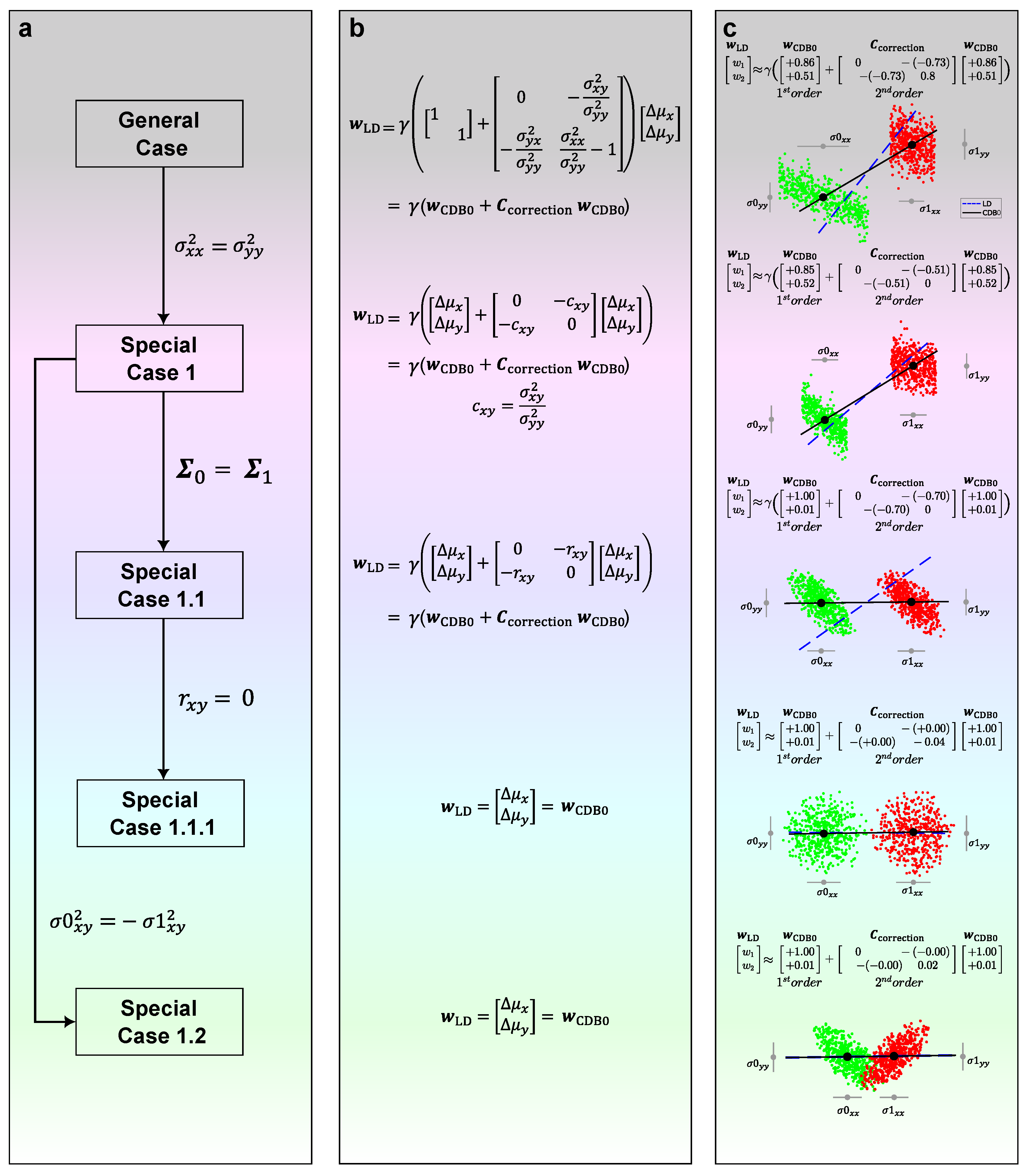

Starting from Equation (

5), we have the following special cases to consider, which represent different forms of geometrical modification applied to

:

Special case 1. If we assume two variables have the same variance (

), then

. Equation (

5) becomes (

Figure A1, special case 1):

From Equation (

6), there are two special cases:

Special case 1.1. If two covariance matrices are same (

), then the following also holds:

where

is the Pearson correlation coefficient (PCC) between x and y of the samples in each class. Equation (

6) becomes (

Figure A1, special case 1.1):

Special case 1.1.1. From Equation (

7), if there is no correlation between x and y variables (e.g.,

), then the equation becomes (

Figure A1, special case 1.1.1):

which is equivalent to MDC method except for the bias. The second equal mark is from the fact that

is already a unit vector, thus

.

Special case 1.2. From Equation (

6), if two classes are symmetric about one variable, i.e.,

, then

. In this case, the obtained discriminant is the same as in Special case 1.1.1 (

Figure A1, special case 1.2):

Figure A1c shows how LDA applies geometric modifications to

as a shape adjustment for different shapes of data. When two covariance matrices are similar (Special case 1.1), the correction term acts only when the variables

x and

y have a certain extent of correlation and increases with this correlation (

Figure A1, the third row). Interestingly, when further assumptions are made that two variables have no correlation (Special case 1.1.1), or when two classes are symmetric about one variable (Special case 1.2), we can see from Equations (

8)-(equation

9) that

will approach

, which is indeed what we observe in the last two rows of

Figure A1. Videos showing these special cases using 2D simulated data can be found from this link

1.

The demonstrations of all these cases for higher-dimensional space are in the

Appendix D.

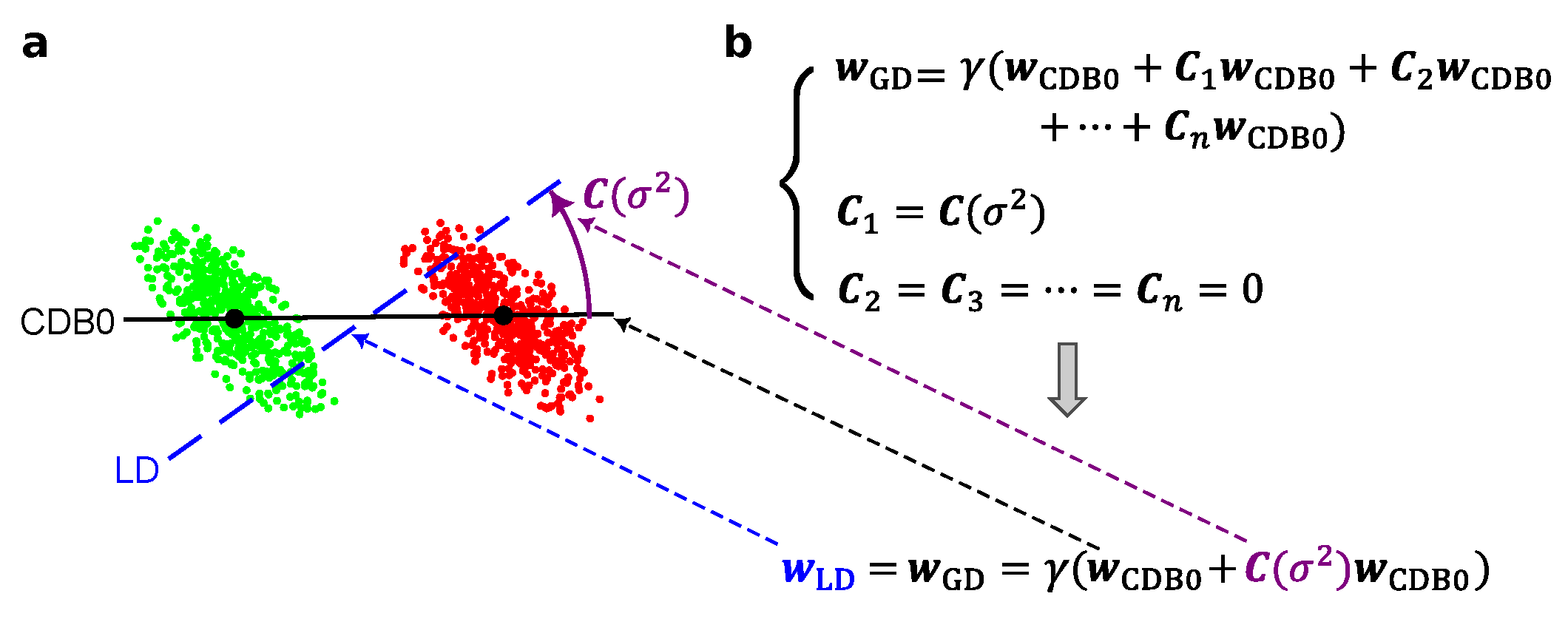

From the above derivation, Equation (

5)-(equation

9) show that the solution of LDA can be represented by

superimposed with a geometric correction on

, and the geometric correction term is obtained under the constraint of Equation (

1), which solves the maximization of the projection variance ratio. Without loss of generality, the conclusion can be extended to other linear classifiers with different constraints imposed on the geometric correction term, for instance MDC, where the correction terms are all zero.

Here, we propose the generalized GDA theoretical framework in which not only the geometric correction term can impose any constraint based on different principles, but also any number of correction terms can act together on

to create the classification discriminant, given by:

Figure 1 shows that LDA is derived by the general GD equation imposing one variance-based geometric correction in

Figure 1b, shown in

Figure 1a using an instance of 2d artificial data with specific covariance.

3. Centroid Discriminant Analysis (CDA)

Based on the GDA theoretical framework, in this study we propose an efficient geometric centroid-based linear classifier named centroid discriminant analysis (CDA), then introduce the extension to nonlinear classification via kernel method in

Section 5. As described by Eq.

10 in the GDA thoery, the model of a geometric classifier can be expressed by the basis CDB0 imposed by geometric corrections on this basis. We first give the conclusion that the final discriminant of CDA after

n rotations is in the form

, where

is the geometric correction operator term,

is the identity operator, and

is the operator of a single CDA rotation). The complete derivation of CDA in GDA theoretical framework can be found in

Appendix E.

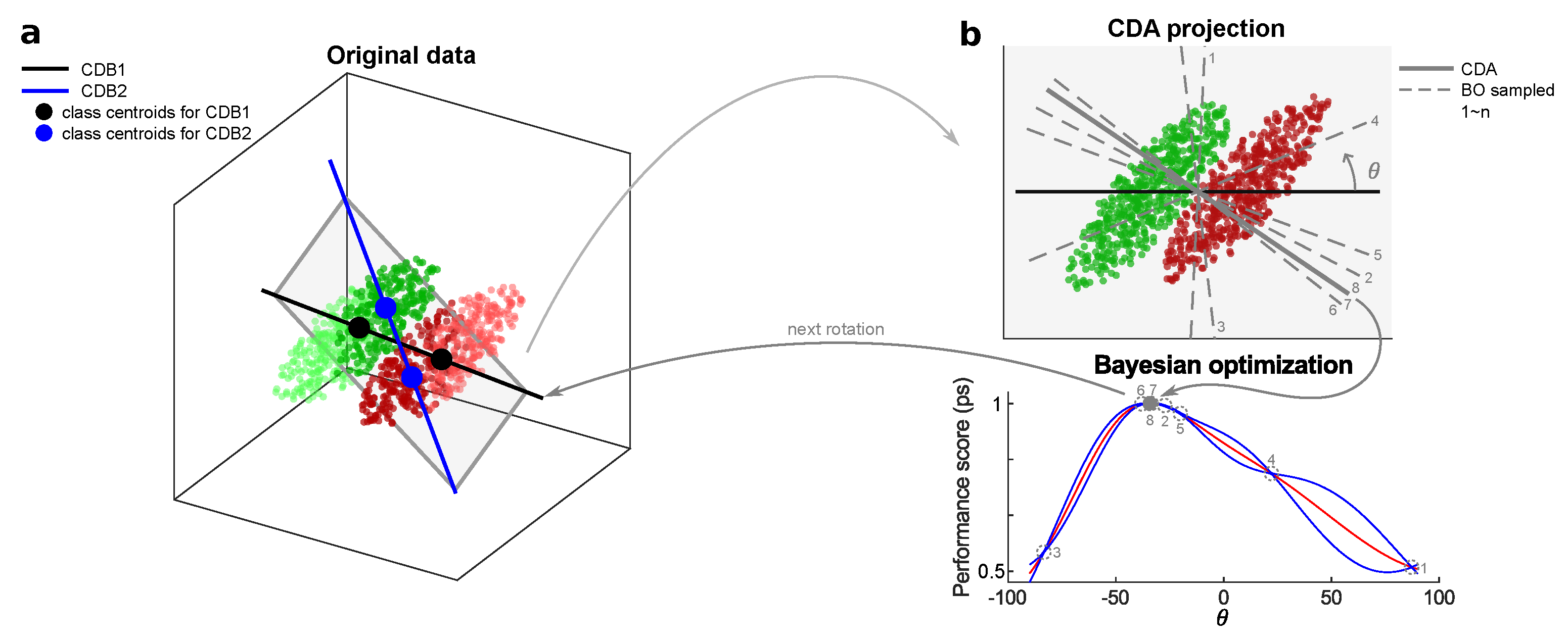

From the geometric point of view, CDA is built on the basis CDB0 in the GDA theoretical framework, then subjected to a series of geometric constraints guiding the rotations of the discriminant CDB in high-dimensional spaces. CDA follows a performance-dependent training that starting from CDB0 involves the iterative calculation of new adjusted centroid discriminant lines whose optimal rotations on the associated 2D planes are searched via Bayesian optimization. The following parts together with

Figure A2 describe the CDA workflow, mechanisms and principles in detail.

Performance-associated CDB Classifier: In GDA theoretical framework, CDB is defined as the unit vector pointing from the geometric centroid of the negative class to positive class. The geometric centroid is defined in a general sense which considers the weights of the data. The space of CDB consists of unit vectors obtained with all possible weights.

Apart from the discriminant line, a bias is further required to realize classification by offering a decision boundary for data projected onto the discriminant. We perform a search for this bias by checking the middle points of every two consecutive sorted projections. Thus, given

N samples, there are

candidates. We name the best candidate as optimal operating point (OOP) and select OOP according to a performance-score, defined as

(see

Appendix F for definitions), where pos and neg means evaluating the metric for each class. The performance score is a comprehensive metric that simultaneously considers sensitivity, recall, and specificity, providing a fair evaluation that accounts for biased models trained on imbalanced data, owing to the more conservative AC-score metric ([

11]). With this OOP search strategy, any vector in the space of CDB is associated with a performance-score. The OOP search can be performed efficiently in

time (see Algorithm A3 for pseudocode). Importantly, during each rotation within the 2D plane, CDA explicitly selects the direction that maximizes the performance score, and progressively refines this choice through continuous rotations. Because the optimization target is transparent at every step, CDA offers an inherently explainable learning process.

CDA as Consecutive Geometric Rotations of CDB in 2D planes: Our idea is to start the optimization path from CDB0, continuously rotate the classification discriminant on 2D planes on which there is a high probability of having a classification discriminant with better performance. To construct such a 2D plane with another vector, a key observation is that the samples, whose projections onto CDB are close to the decision boundary (i.e. OOP), should have more weights, because these samples are prone to overlapping with samples from the other class, causing misclassification. Thus, we compute another CDB using centroids with shifted sample weights toward OOP (see next part). On the plane formed by these two CDBs, the best rotation is found by Bayesian optimization. For clarity, we have the following definition: the first vector in each rotation is termed as CDB1, the second vector in each rotation is termed as CDB2, the CDB searched with the best performance is termed as CDA, and at the end of each rotation CDA becomes CDB1 for the next rotation. CDA rotation is used to refer to this rotation process.

Figure A2a shows the diagram for the first CDA rotation, where CDB1 equals to CDB0 calculated using uniform sample weights. The CDA training stops when meeting any of the two criteria: (1) Reach the maximum 50 iterations. (2) the coefficient of variation (CV) of the last 10 performance-score is less than a threshold, indicating that the training has converged (see Algorithms A1, A2 and A4 for pseudocode).

Sample Weights Update Strategy: Given CDB1 and the associated OOP in each CDA rotation, the distance of all projections to OOP can be obtained by , which is then reversed by , in align with the purpose that points close to decision boundary should have larger weights. Since only the relative information of sample weights is important, they are L2-normalized and decay smoothly from previous sample weights by , where ⊙ indicates the Hadamard (element-wise) product. Specifically, in the first CDA rotation, the CDB1 is obtained with uniform sample weights, which corresponds to CDB0 in the GDA theoretical framework.

Bayesian Optimization (BO): The CDA rotation aims to search for a unit vector CDB with the best performance-score, which can be efficiently realized by BO ([

12]). BO is a statistical-based technique to estimate the global minimum of a function with as fewer evaluations as possible. CDA leverages this high-efficiency characteristic of BO to achieve fast training. BO has

time complexity when it searches a single parameter, where

Z is the number of sampling-and-evaluations. CDA employs a strategy that grows BO sampling times from 4 to the maximum 10 with CDA iterations (see

Appendix C.1).

Figure A2b shows an instance of BO working process. Algorithm A5 shows the pseudocode for the CDA rotation as the black box function to optimize by BO.

Finalization: As a finalization step, on the best plane CDA refines the discriminant with a null-model statistical test using 100 random CDB lines drawn from the plane (see

Appendix C.2 and Algorithm A6).

Multiclass Prediction: Error-correcting output codes (ECOC) ([

13]) is a vote/penalization-based method to make multiclass predictions from trained binary classifiers. To predict the class of a new sample, multiple scores can be obtained from the set of trained binary classifiers. These scores are interpreted as they either vote for or are against a particular class. Depending on the coding matrix and the loss function chosen, ECOC takes the class with the highest overall reward or lowest overall penalty as the predicted class. In this study, the coding matrix for one-versus-one training scheme is selected, as it creates more linear separability ([

14,

15]). For the type of loss function, hinge-loss is chosen, as our internal tests suggested that this loss leads to the best classification performance for all tested linear classifiers.

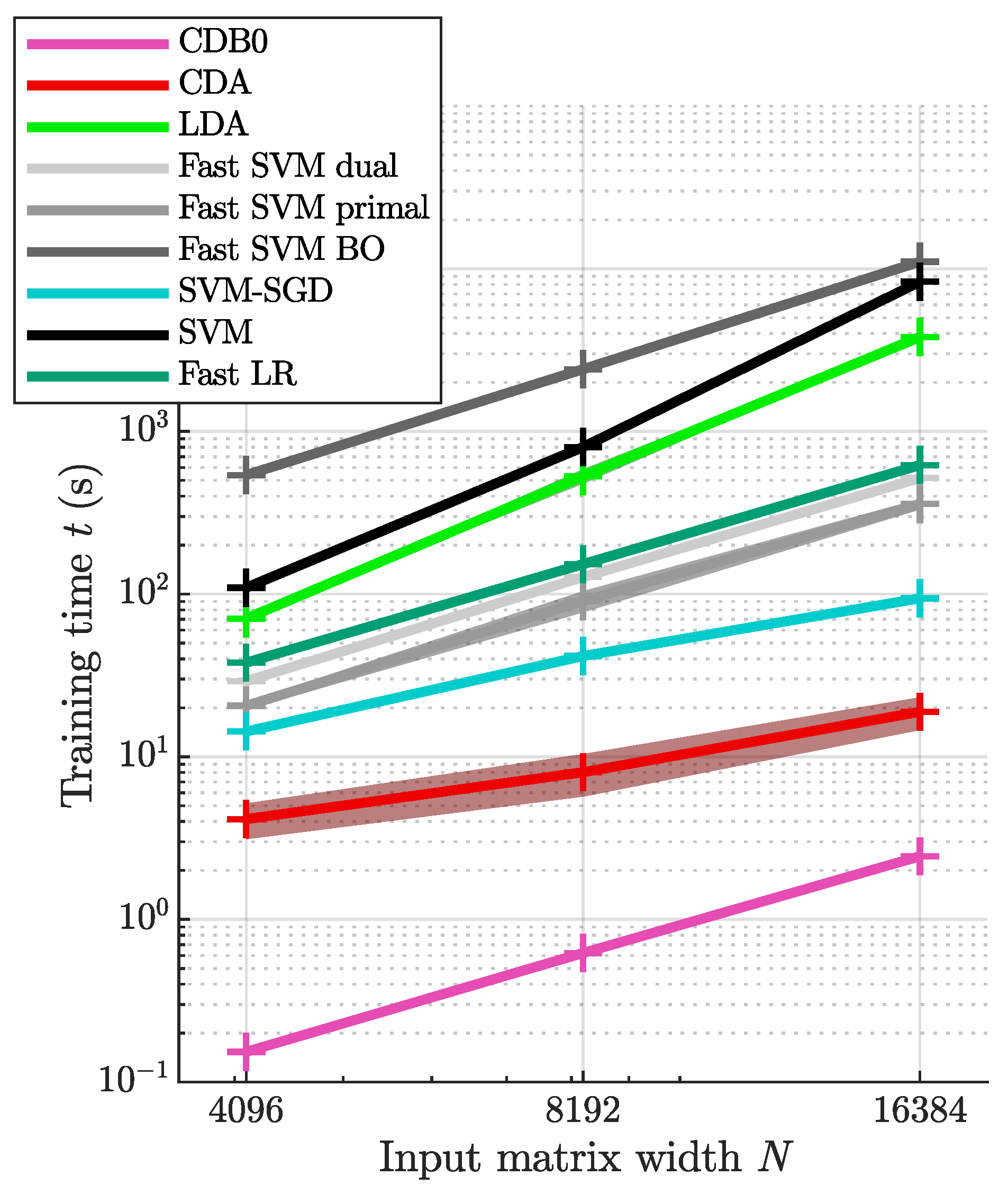

4. Experimental Evidences on Linear Classification of Real Data

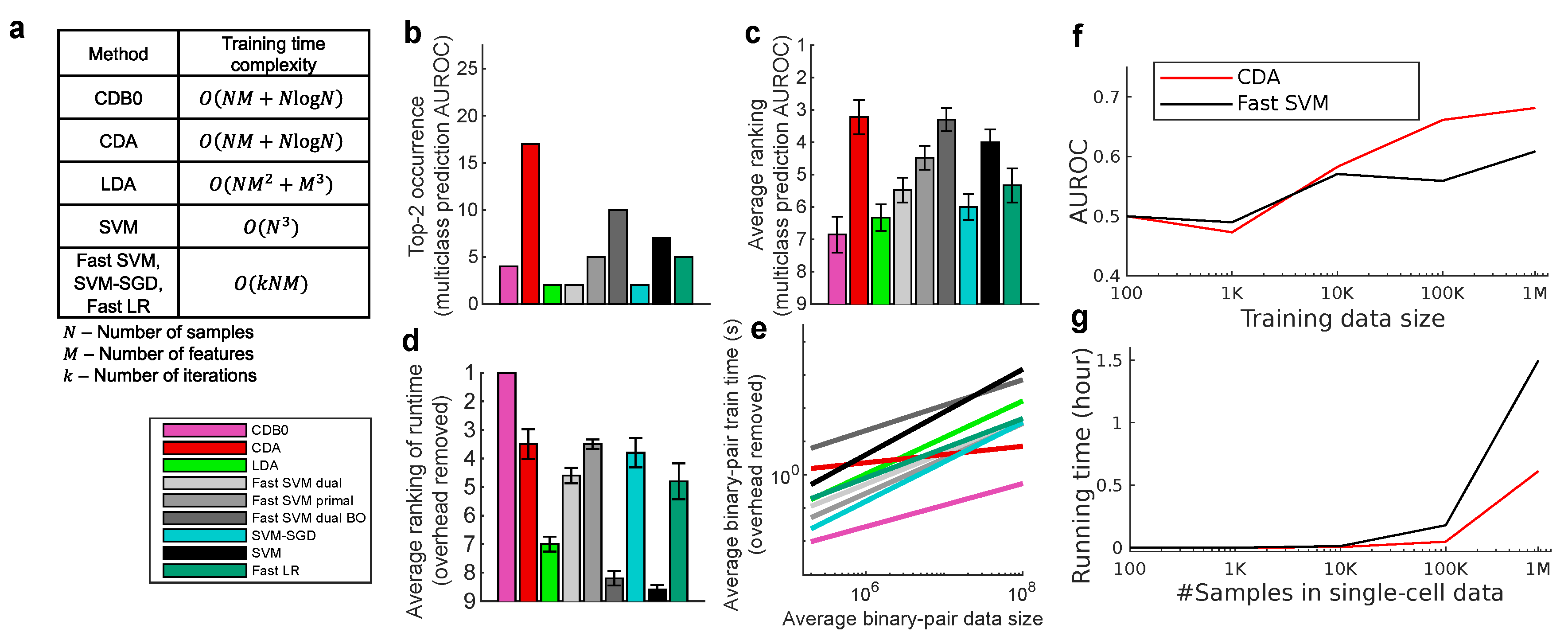

In this section, we compared the proposed CDA with other linear classifiers including LDA, SVM and LR. The 5 tested SVM variants includes the original SVM, dual and primal fast SVM implemented by Liblinear, SVM-SGD, and one fast SVM with BO hyperparameter search. LR uses fast implementation by Liblinear (fast SVM and fast LR refers to the dual version unless otherwise specified). Additionally, we include the baseline method, CDB0 (equivalent to MDC equipped with OOP bias), to quantify the improvements made by CDA over a simplistic centroid-based classification approach. Their theoretical time complexity is shown in

Figure 2a. Experiment details are in

Appendix I.

Classification Performance on Real Data: We assessed linear classification performance across 27 datasets, including standard image classification ([

16,

17,

18,

19,

20,

21,

22,

23,

24]), medical image classification ([

25]), and chemical property prediction tasks ([

26]). These datasets represent a broad range of real-world applications and varying data sizes, enabling evaluations for both training speed and predictive performance. Image data were used in their original value flattened to 1d vectors; chemical formula were processed by simplified molecular input line entry system (SMILES) tokenized encoding (see

Appendix J). Each dataset was split into a 4:1 ratio for training and test sets. The final model for each method was an unweighted ensemble of the five cross-validated models on the training set.

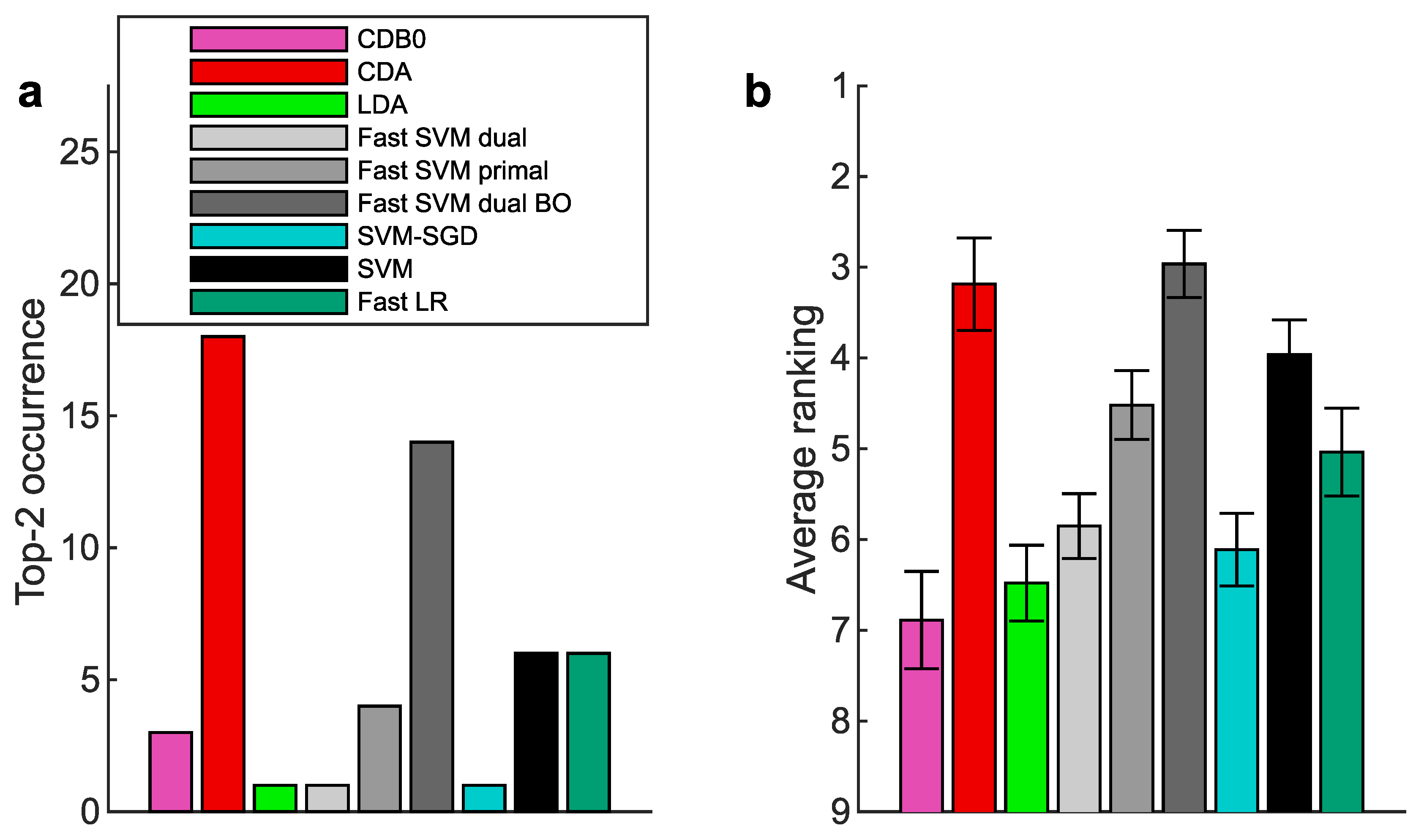

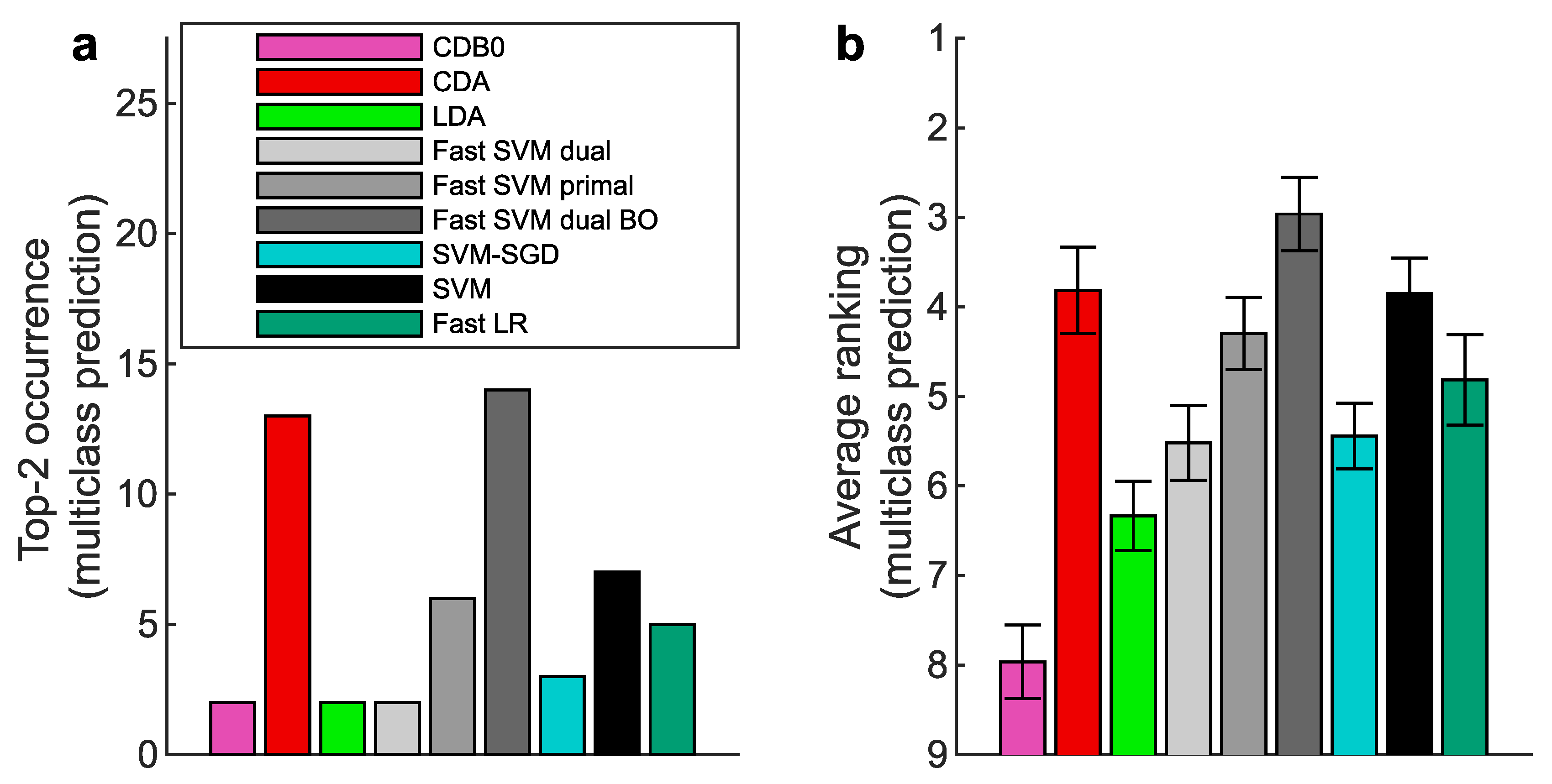

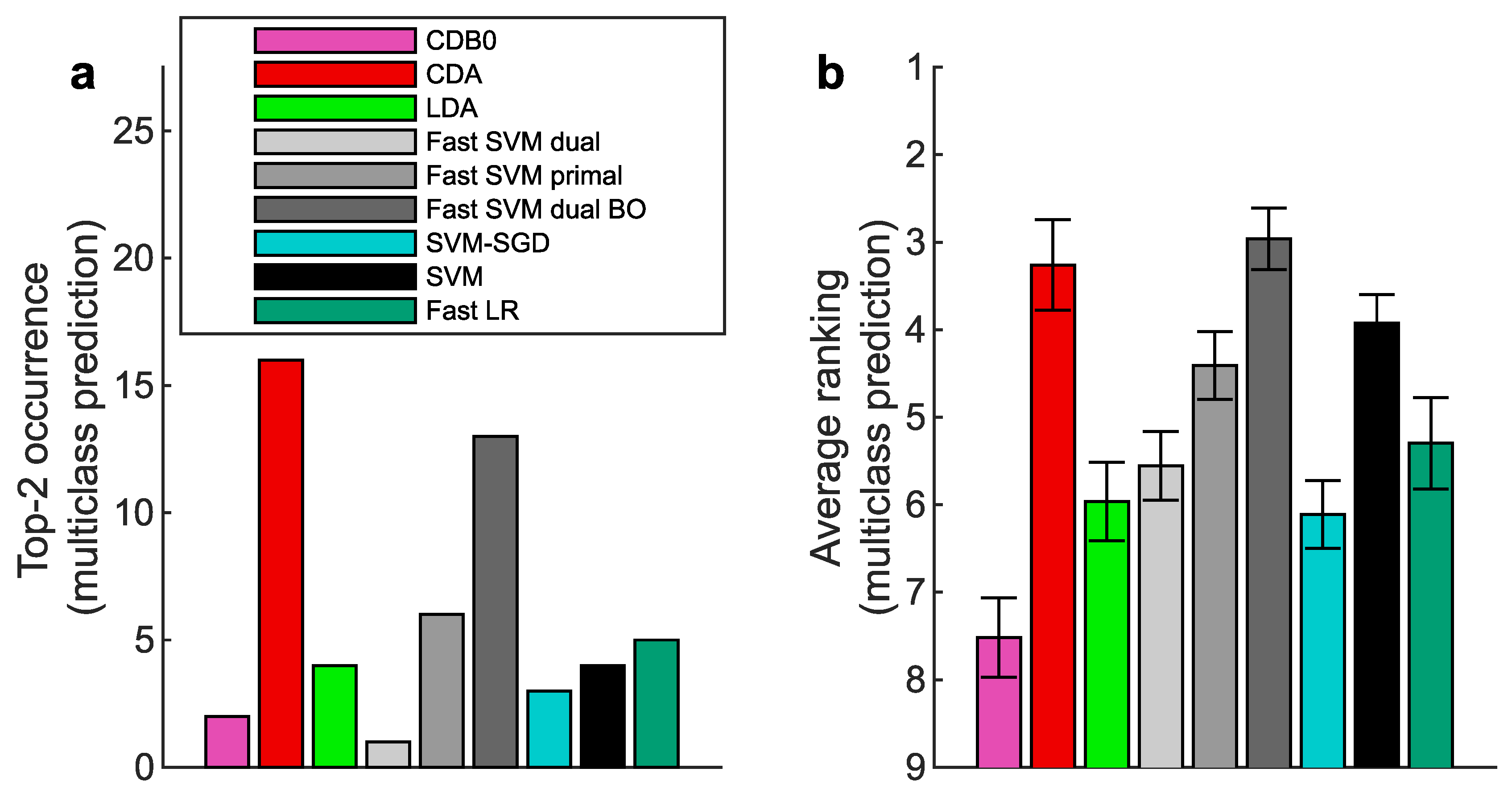

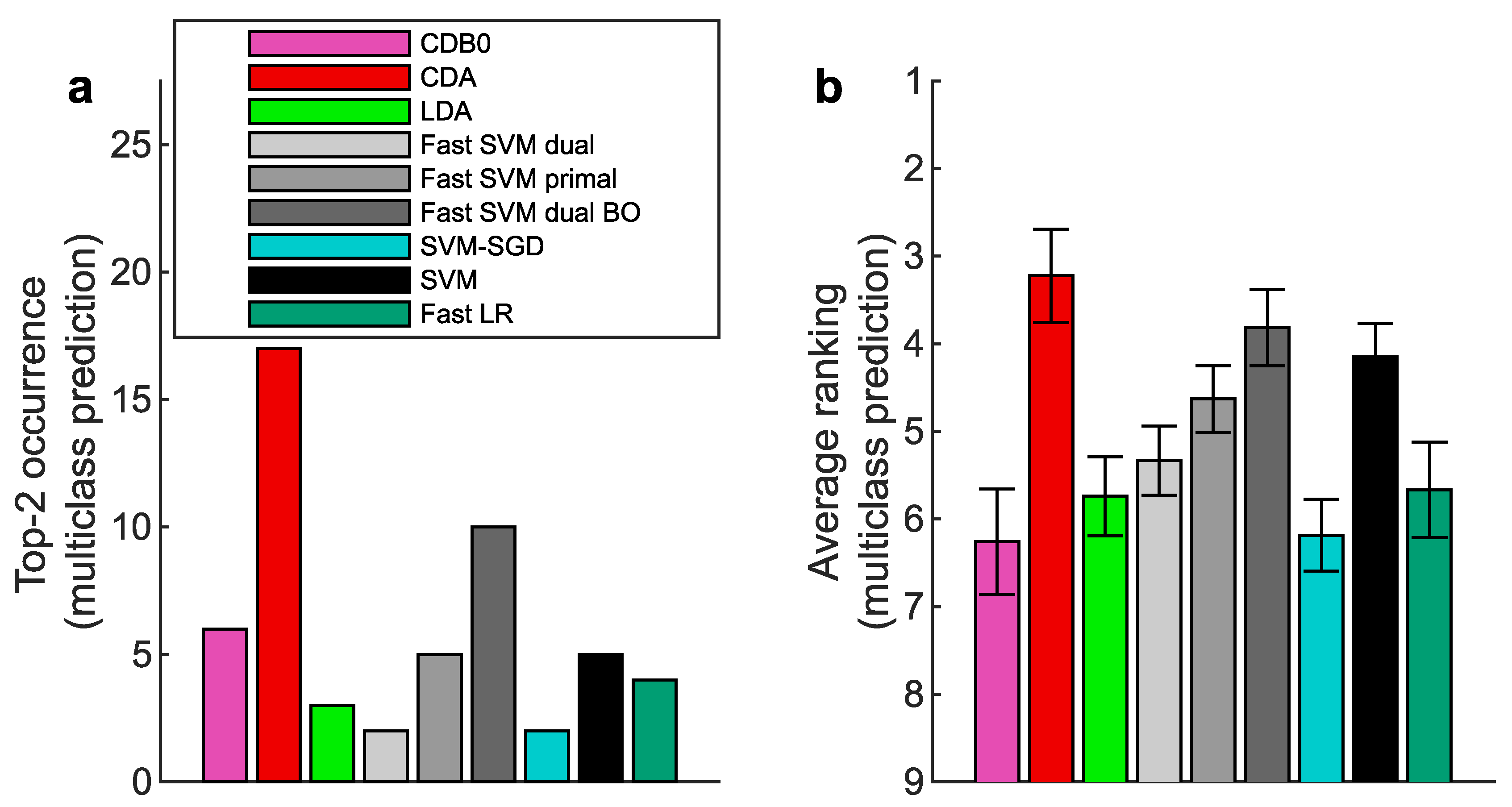

Figure 2b-e show the test set multiclass prediction performance. In

Figure 2b, CDA achieved a top-2 occurrence of AUROC on 17 out of 27 datasets, outperforming all the other linear classifiers, indicating its stability and competitiveness.

Figure 2c shows that CDA achieved the highest average ranking around 3.3, followed by fast SVM BO and SVM, however, their extremely low average ranking of training speed in

Figure 2d indicates the impracticality for large-scale datasets (Panel (d) shows results on large datasets with average class-pair data size

, because in practice we care more about the time consumption on large datasets rather than small ones). See

Appendix K-

Appendix L for binary classification results, ranking on other metrics, and complete performance tables.

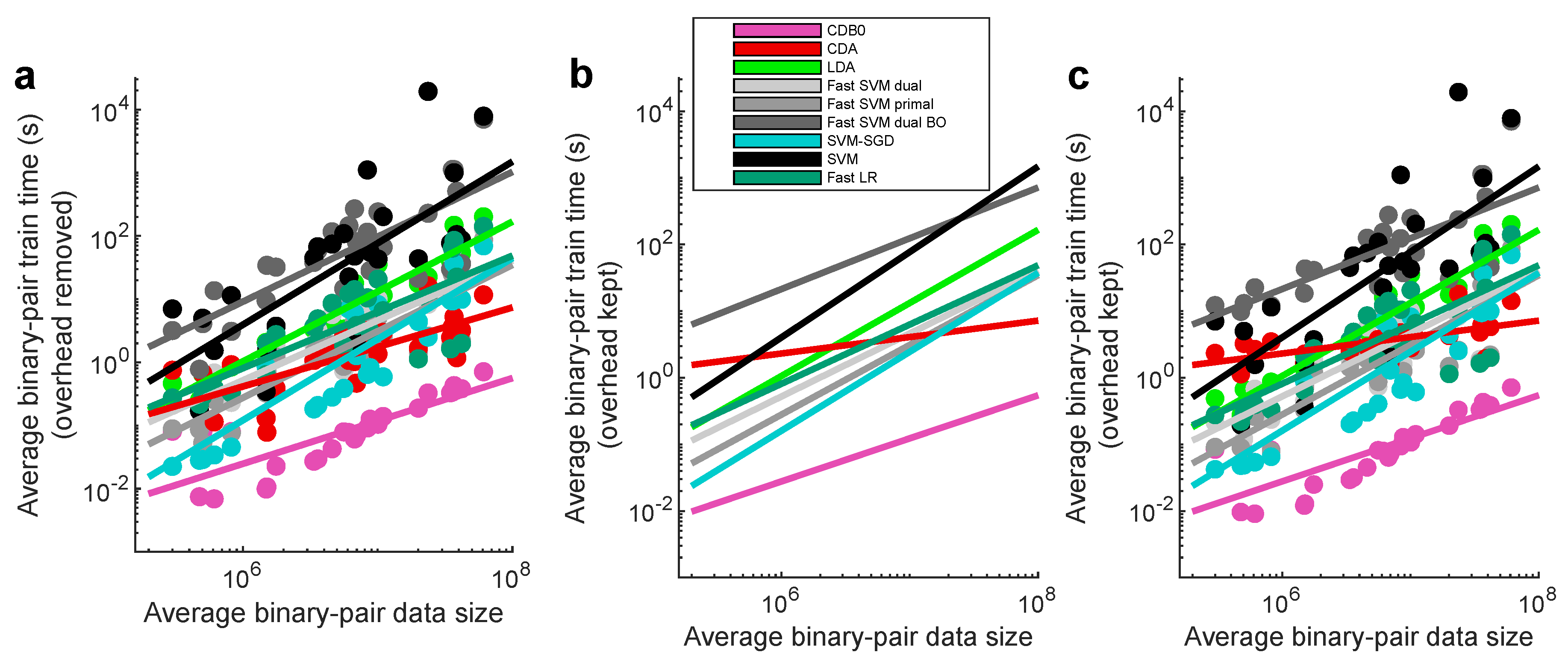

In

Figure 2e we performed linear regressions between training times and data sizes averaged across binary pairs within each dataset with overhead deducted (See

Figure A5a for original data points, and

Figure A5b-c for results with overhead kept). The regression results show that CDB0 exhibits the best scalability due to its simplicity. SVM primal and SVM-SGD are fast for small data size, however, CDA outperforms them when data size gets large. CDA not only improves significantly over CDB0 in performance but also retains similar scalability. This improvement is driven by three key components: generalized centroids with non-uniform weights, sample weight shift strategy, and rotations by Bayesian optimization. The weighted average number of iterations required by CDA per dataset was 29.33 - a small constant that does not contribute to time complexity. In contrast, the iterations required by SVM variants increase significantly with more challenging datasets to achieve a reasonable classification performance. Considering the diversity of tested datasets, CDA demonstrates itself as a generic classifier with strong performance and scalability, making it applicable to large-scale classification tasks across various domains.

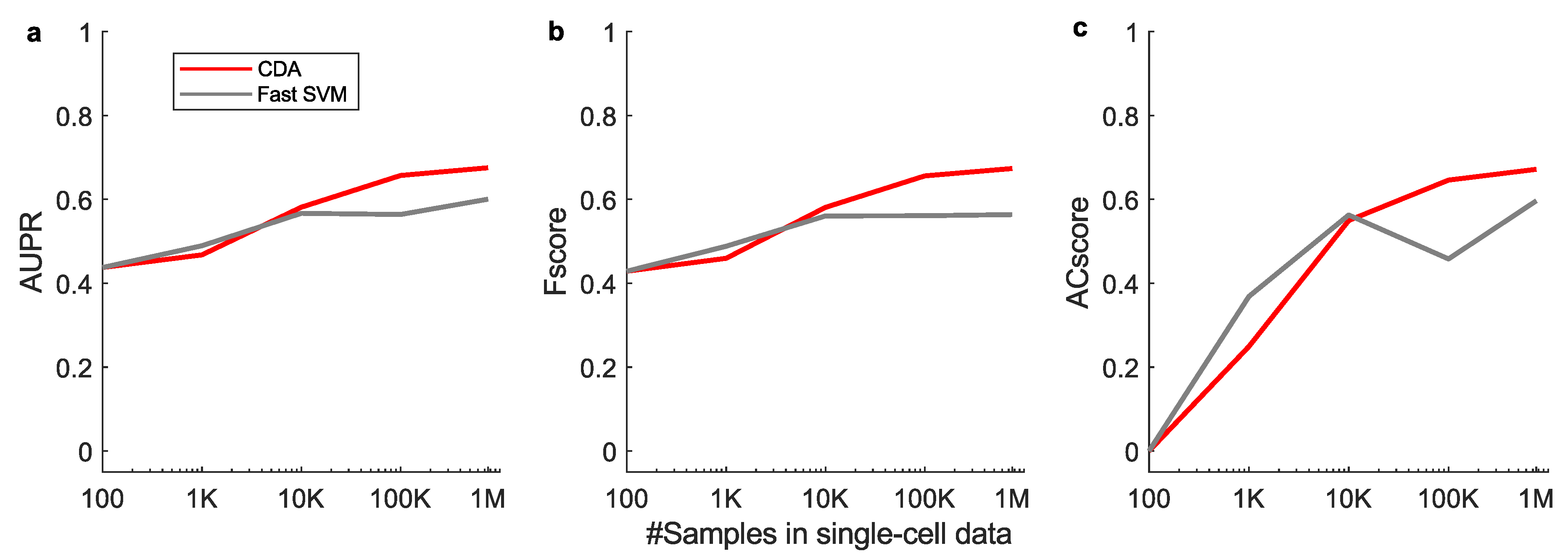

We further test CDA on a large-scale dataset, the 1.3-million-cell mouse brain dataset ([

27]), with the largest two classes (See Section.

Appendix G for details). The results show that with growing sample sizes, CDA outperforms fast SVM on both classification AUROC (

Figure 2f and Appendix

Figure A3), and training speed (

Figure 2g). These results indicate that linear CDA is even more efficient and scalable than the flagship SVM method in efficiency. Hence, CDA as a fast approach has the potential to drive large-scale real-world applications in fields such as biomedicine and autonomous driving.

In addition, we made a comparison between CDA with two prevalent neural network architectures - MLP and ResNet. See

Appendix M.1 for this comparison. In addition, we found that it is feasible to incorporate neural networks in CDA, using their extracted features to train CDA, which improves significantly over directly training on the original data if the data is complex. This combined approach and the associated experiment is discussed in

Appendix M.2.

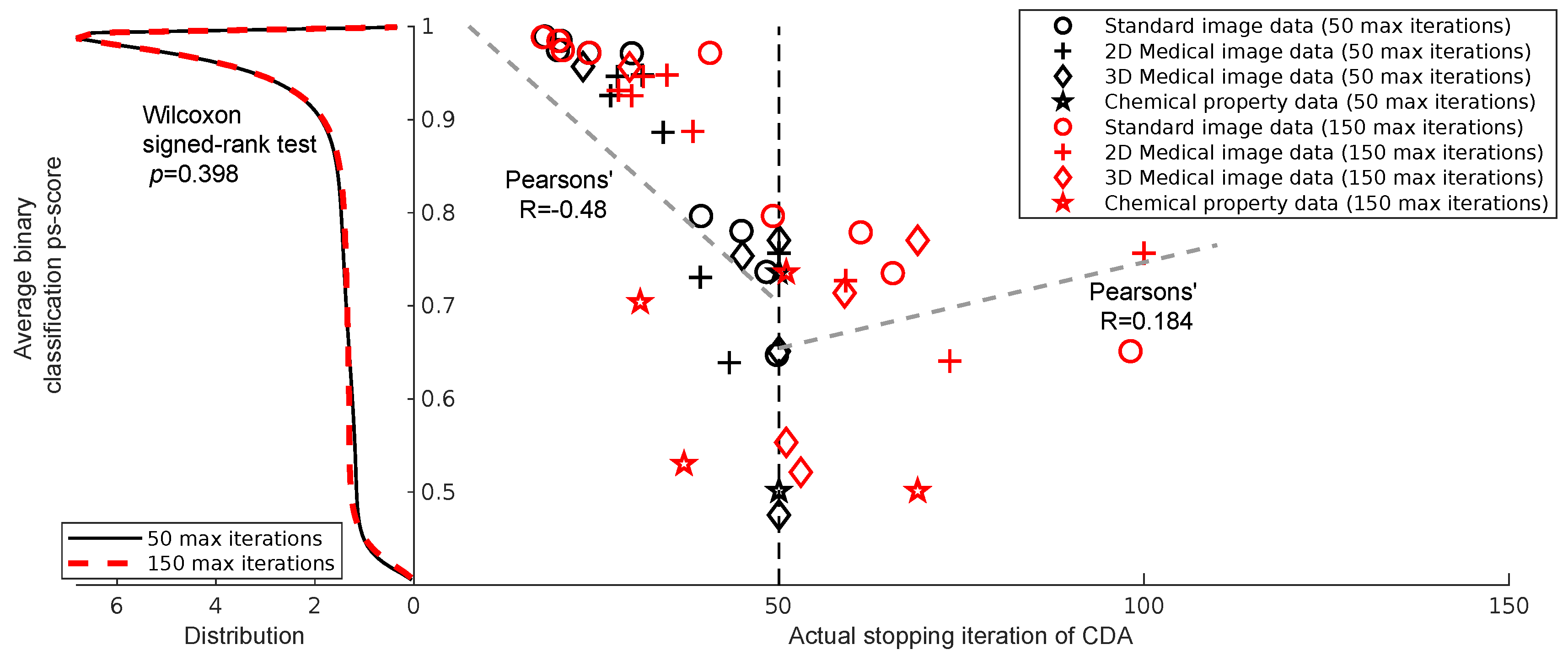

CDA’s Convergence Property: We analyzed the relationship between the actual stopping iteration of CDA and the average binary classification performance (ps-score) across different datasets and iteration limits. The results revealed two distinct regimes. In the right half of

Figure 3, for tasks converging before 50 iterations, we observed a significant negative correlation (Pearson’s R = –0.48), indicating that datasets with lower performance required more iterations to converge. This highlights the importance of allowing at least 50 iterations, as early stopping before this point may prevent convergence for more challenging tasks. In contrast, for tasks exceeding 50 iterations, the correlation was weak and positive (Pearson’s R = 0.184), suggesting that beyond this threshold, the number of iterations no longer plays a significant role in ensuring convergence. This indicates that once 50 iterations are reached, CDA can stabilize regardless of the underlying task performance.

To further validate this observation, in the left half of

Figure 3, we compared the distributions of ps-scores obtained under maximum iteration limits of 50 and 150. The estimated probability densities were nearly identical, and the Wilcoxon signed-rank test confirmed no significant difference between the two conditions (p=0.398). This result supports the conclusion that extending the maximum number of iterations beyond 50 does not provide systematic benefits in terms of classification performance.

5. Preliminary Test on Nonlinear Kernel CDA

When data exhibit strong nonlinearity, linear classifiers often underperform. To address this problem, models must exploit these nonlinear patterns to enhance expressiveness and improve classification performance. In fact, linear CDA can be naturally extended to a nonlinear variant using kernel method while retaining efficiency (see

Appendix C.4). The main computational bottleneck lies in the kernel matrix computation, which is a common cost shared by all kernel-based classifiers.

To compare the performance of nonlinear CDA and other nonlinear classifiers, we performed tests on two challenging datasets, image dataset SVHN and chemical property dataset ClinTox (see

Appendix J for data processing). We choose these datasets because they are difficult to classify with linear classifiers, and we are interested in to what extent can nonlinear classifiers improve over linear ones. We used a subset of SVHN samples (24,000) due to the time limitation to test with kernel method. We compared linear CDA, nonlinear gaussian CDA (nCDA), linear SVM, nonlinear gaussian SVM (nSVM). For kernel methods, we performed hyperparameter search for the gaussian parameter

. The data were divided into train, validation and test set, where on the validation set the hyperparameter was tuned. The training specifics can be found in

Appendix I.2. The multiclass test set results (on ClinTox binary results since it is a binary task) in

Table 1 show that nonlinear kernel CDA outperforms gaussian SVM and linear methods on both the SVHN subset and ClinTox on all classification metrics. Despite the fact that gaussian CDA improves substantially over linear CDA in tasks such as the SVHN image classification, we emphasize that linear CDA is still very useful and important, with high efficiency and low resource requirement in computation, among other merits.

6. Conclusions and Discussions

Linear classifiers, while inherently simpler, are favored in certain contexts due to their reduced tendency to overfit and their interpretability in decision-making. However, achieving both high scalability and robust predictive performance simultaneously remains a significant challenge.

In this study, the introduction of the Geometric Discriminant Analysis (GDA) framework marks a notable step forward in addressing this challenge. By leveraging the geometric properties of centroids – a fundamental concept in multiple disciplines – GDA provides a unifying framework for certain linear classifiers. The core innovation lies in a special type of Centroid Discriminant Basis (CDB0), which serves as the foundation for deriving discriminants. These discriminants, when augmented with geometric corrections under varying constraints, extend the theoretical flexibility of GDA. Notably, Minimum Distance Classifier (MDC) and Linear Discriminant Analysis (LDA) are shown to be a subset of this broader framework, demonstrating how they converge to CDB0 under specific conditions. This theoretical generalization not only validates the GDA framework but also sets the stage for novel classifier designs.

A key practical contribution of this work is the Centroid Discriminant Analysis (CDA), a specialized implementation of GDA. CDA employs geometric rotations of the CDB within planes defined by centroid-vectors with shifted sample weights. These rotations, combined with Bayesian optimization techniques, enhance the method’s scalability, achieving quadratic time complexity . Across diverse datasets—including standard images, medical images, and chemical property classifications—CDA demonstrated superior performance in scalability, predictive performance, and stability. We emphasize that CDA not merely robustly outperforms most of existing linear classification methods, but crucially, it achieves this with superior computational efficiency. Specifically, CDA exhibits a quadratic time complexity in the worst-case scenario, compared to the cubic complexity typical of established methods such as LDA and SVM, with significantly shorter runtimes that highlight its practical advantage in real-world applications. These findings hold particular relevance for the broader machine learning community, as linear classifiers remain widely used across numerous scientific domains where interpretability, scalability, and computational efficiency are critical. In such contexts, practitioners often prefer models that are not only robust and fast but also transparent and easy to deploy in real-world decision-making scenarios.

In cases where the data exhibit complex nonlinear structures that linear classifiers cannot adequately capture, linear CDA can be extended to a nonlinear form via kernel methods. This generalization significantly broadens the applicability of CDA by enabling it to handle nonlinear patterns in the data. Together, linear and kernel-based CDA form a complementary toolkit for classification tasks. A practical strategy is to begin with linear CDA to see whether the predictions are satisfying, which offers fast and interpretable results. Our preliminary results also suggest that extending CDA with nonlinear kernels is a promising direction for future research, since the variety of available kernels broadens the opportunity to tailor the mapping of original data onto higher-dimensional spaces according to the specific structure of the data - where it may become more easily separable.

In conclusion, the GDA framework and its CDA implementation represent a paradigm shift in classifier design, combining the interpretability of geometric principles with state-of-the-art computational efficiency. This work not only advances the field of classification but also lays groundwork for innovative approaches to supervised learning.

Appendix A. Supplementary: GDA Theoretical Framework

Appendix A.1. Difference Between Theory and Theoretical Framework

We emphasize that GDA does not constitute a new theory, but rather a theoretical framework. A theory in data science and machine learning provides a foundational explanation often accompanied by rigorous mathematical derivations, such as performance bounds or convergence guarantees. In contrast, a theoretical framework offers a structured set of concepts and assumptions, typically formalized through equations, that guide the design of methods and the interpretation of results - without necessarily proving theoretical performance guarantees. Within this clarified scope, GDA serves as the framework under which we define and motivate an efficient geometric, centroid-based linear classification method: Centroid Discriminant Analysis (CDA), which is discussed in

Section 3.

Appendix A.2. Scaling Invariance of the Discriminants in the GDA Framework

GDA is a geometrical projection-based theoretical framework. In this framework, the discriminant (the high-dimensional vector orthogonal to the classification boundary) of the involved classifiers (including CDA), is normalized to a unit vector before projecting data. The final classification depends solely on these projections; scaling the discriminant length by any factor (including terms like

in the derivation of GDA by LDA) uniformly scales all projections without changing their relative positions. Consequently, the optimal threshold (which can be found by search) and classification results remain unchanged. This normalization is explicitly implemented in CDA (see, for example,

Appendix C.3, Algorithm 1, line 4: “Normalize

…”), ensuring that only the direction is refined during iterations.

This property distinguishes GDA and CDA from approaches like SVM, where the magnitude of the discriminant vector is tied to the margin width during training. However, even for SVM, once the model is trained, scaling and b together leaves predictions unchanged because the decision function is homogeneous in and b. (y: predicted class; : Heaviside function; : sample; b: bias). In CDA training, magnitude carries no interpretive meaning - only direction matters. These also reflect that GDA reinterprets certain existing classifiers from the geometric projection perspective, which is an innovation that inherently differs from their own interpretations.

Figure A1.

The GDA theoretical framework in 2 dimensions showing how the relation between LD and CDB0 evolves under specific conditions. (a) The relations between LD and CDB0 proceeding from the general case to different special cases under the conditions shown along each arrow. (b) The specific expressions of LD in terms of CDB0 and corrections corresponding to each case in column (a). (c) Binary classifications models of LD (blue dashed) and CDB0 (black solid) on different 2D data corresponding to each case in column (a). The lines show the direction instead of the unit vector of the discriminants. LD: Linear Discriminant; CDB0: Centroid Discriminant Basis 0. denotes a generic normalizing factor.

Figure A1.

The GDA theoretical framework in 2 dimensions showing how the relation between LD and CDB0 evolves under specific conditions. (a) The relations between LD and CDB0 proceeding from the general case to different special cases under the conditions shown along each arrow. (b) The specific expressions of LD in terms of CDB0 and corrections corresponding to each case in column (a). (c) Binary classifications models of LD (blue dashed) and CDB0 (black solid) on different 2D data corresponding to each case in column (a). The lines show the direction instead of the unit vector of the discriminants. LD: Linear Discriminant; CDB0: Centroid Discriminant Basis 0. denotes a generic normalizing factor.

Appendix A.3. GDA Demonstration with 2D Artificial Data

Appendix B. CDA Schematic Diagram

Figure A2.

Diagram for the first CDA rotation. (a) CDB1 is obtained from specific sample weights. CDB2 is obtained from centroids with a shifted sample weights toward the decision boundary. Darker points represent larger sample weights. CDB1 and CDB2 form a 2d plane on which BO is performed to estimate the best classification discriminant. (b) The process of BO to estimate the best discriminant. The rotation angle from CDB1 is the only independent variable to search in BO. The estimated optimal line CDA servers as the new CDB1 in the next CDA rotation. CDB: Centroid Discriminant Basis; CDA: Centroid Discriminant Analysis.

Figure A2.

Diagram for the first CDA rotation. (a) CDB1 is obtained from specific sample weights. CDB2 is obtained from centroids with a shifted sample weights toward the decision boundary. Darker points represent larger sample weights. CDB1 and CDB2 form a 2d plane on which BO is performed to estimate the best classification discriminant. (b) The process of BO to estimate the best discriminant. The rotation angle from CDB1 is the only independent variable to search in BO. The estimated optimal line CDA servers as the new CDB1 in the next CDA rotation. CDB: Centroid Discriminant Basis; CDA: Centroid Discriminant Analysis.

Appendix C. The CDA Algorithm

Appendix C.1. Bayesian Optimization

One factor related to the effectiveness of CDA is the estimation with varying precision in BO. We set the number of BO sampling times to as default parameter during the CDA rotation, where is the -th rotation. During the first few CDA rotations, the BO estimation has relatively less precision. This gives CDA enough randomness to first enter a large region in which there are more discriminants with high performance, under the hypothesis that regions close to global optimum or suboptimum have more high-performance solutions that are more likely to be randomly selected. Starting from this large region, BO precision is gradually enhanced to refine the search in or close to this region in terms of Euclidean distance, and force CDA to converge. The upper limit of 10 CDA rotations still creates a small level of imprecision, in order to make CDA escape from small-size local minimum, to acquire higher performance, and improve training efficiency.

Appendix C.2. Refining CDA with statistical examination on 2D plane

In CDA, the plane associated with the best performance is selected, but it is uncertain whether there are higher-performance discriminants on this plane, since the precision might not be high enough with 10 BO samplings and even lower for less samplings. To determine whether the BO is precise enough, a statistical examination using p-value with respect to null-model is performed. On this best plane, we generate 100 random CDBs, run BO in turn for 10, 20 and to the maximum 30 BO samplings, until the p-value of training performance-score of the BO-estimated CDB with regard to the null model is 0, otherwise using the best random CDB. In this way, we are able to give a confidence level of the BO estimated discriminant in the best CDA rotation plane. Algorithm A6 shows the pseudocode for the refining finalization step.

Appendix C.3. Linear CDA Pseudocode

This study deals with a supervised binary classification problem on labeled data

with

N samples and

M features. The samples consist of positive and negative class data

and

with sizes

and

, respectively, and the corresponding labels are tokenized as 1 and 0, respectively. The sample features are flattened to 1D if they have higher dimensions, such as image data. The following section introduces CDA in pseudo-code. CDA rotations, BO sampling and p-value computing involve constant numbers, thus, they do not contribute to the time complexity.

|

Algorithm A1 CDA Main Algorithm (CDA)

|

Input: data matrix , labels

Initialize

Compute

Normalize

Initialize

for to 50 do

Compute

Normalize

Set Number of BO sampling

if then

end if

if coefficient of variation of the last 10 then

break {Early stop convergence} end if

Update

end for Compute

Output:

|

|

Algorithm A2 Update Sample Weights (updateSampleWeights)

|

Input: data matrix , labels , sample weights , vector

Initialize

Compute

Compute

Compute

Update is Hadamard product}

Output:

|

|

Algorithm A3 Search Optimal Operating Point (searchOOP)

|

Input: projected points , labels

Sort sort according to } Initialize initial confusion matrix

for to do

Update scan each label and adjust cm Compute

end for Compute

Compute

Compute

Output:

|

|

Algorithm A4 Approximate Optimal Line by BO (CdaRotation)

|

Input: lines , data matrix , labels , total BO iteration

Compute

Normalize

Estimate ,

Compute

Compute

Get the mean of with 5-fold cross-validation

Output:

|

|

Algorithm A5 Evaluate the Line with Rotation Angle (evaluateRotation)

|

Input: Rotation angle , lines , data matrix , labels

Compute

Compute

Get mean with 5-fold cross-validation scheme

Output:

|

|

Algorithm A6 Refine on the Best Model (refineOnBestPlane)

|

Input: and its orthogonal vector , data matrix , labels

for to 100 do

Randomly choose

Compute

Compute

Get mean with 5-fold cross-validation

end for Sort sort returning position indices Compute

Initialize

while and do

Increment

Compute

Sort sort returning position indices Compute

end while

if then

Compute

Set

end if

Output:

|

Appendix C.4. Nonlinear kernel CDA

The CDA algorithm involves computing the CDBs using the equation provided in

Appendix C.3, Algorithm 1:

Combining this with the normalization step for each CDB

, the equation can be rewritten as:

where

is the vector of sample weights;

for all

is the weight sum division for each class, and

maps sample index

i to class index

c;

are the tokenized labels (

or

);

k is the factor to normalize each CDB as a unit vector.

For the rotated CDBs used in Bayesian optimization (Appendix B, Algorithms 4–5), they can be expressed as linear combinations of CDB1 and orthogonalized CDB2, mixing their corresponding vectors. Thus, the sample coefficient is tracked throughout the training process for each CDB.

The data projection can be expressed as:

With this expression, the data projection can incorporate kernel methods to realize nonlinear classification, shown by the following equation.

In fact, kernel methods implicitly projects data into a high-dimensional space, then applying linear classifiers in the transformed feature space.

Appendix D. Deriving LDA in the GDA Theoretical Framework for m-Dimensions (m>2)

In the GDA theoretical framework, we have demonstrated that linear discriminant (LD) can be expressed by the basis CDB0 with different geometric corrections on CDB0 under different conditions, as shown in

Figure A1. The conclusions are not confined only to the 2-dimensional case but also applicable to the

m-dimensional case (

). Here we derive the generalization of the LD coefficient to the

m-dimensional case. The discriminant of LDA is given by

, where

is a constant, and it is assumed that the within-class covariance matrix

is invertible, and

is the sum of covariance matrices of individual classes. Denote the elements in

,

, and

by

,

, and

for all

, respectively.

Special Case 1. Assume that pairwise features have the same covariance

, and all pairwise features have the same covariance

(In experiments, we relax the conditions to have similar variance and similar covariance). Then,

, where

is the identity matrix, and

is the all-one matrix. According to the Woodbury matrix identity, we have

, where

is the matrix with all ones except on the diagonal. Thus,

, which corresponds to the row for special case 1 in

Figure A1.

Special Case 1.1. Based on the assumptions in Special Case 1, we further assume that the two covariance matrices are similar, i.e.,

, so that

for all

. We further have

, where

is the Pearson correlation coefficient (PCC) between features

i and

j. Based on the assumptions made in this special case, all pairwise PCCs are the same, i.e.,

for all

. Thus,

, which shows that the geometric correction part is related to feature correlations. Accordingly,

, which corresponds to the row for special case 1.1 in

Figure A1.

Special Case 1.1.1. Based on Special Case 1.1, assume further that all pairwise features have no correlations (i.e.,

), then according to the equation,

. Thus,

, where

since

is already a unit vector, which corresponds to the row for special case 1.1.1 in

Figure A1. By these derivations, we show how LDA converges to CDB0 under specific conditions of the data.

Appendix E. Deriving CDA in the GDA Theoretical Framework

In this section, we give the mathematical demonstration of how CDA is formulated in the GDA theoretical framework. Since the correction matrix for LDA in

Section 2 is a special case of a linear operator, we extend to a more general correction term deriving CDA in the GDA theoretical framework. The construction of the geometric discriminant

can be realized by continuously rotating CDB0 in planes that satisfy several constraints, shown by:

where

is the CDA rotation operator,

, in which

is the identity operator. This equation matches with Equation (

10) and involves only one correction term, where

because both the CDB0 and CDA are unit vectors and

is an overall matrix of high-dimensional rotation. For a given dataset

are fixed, it is simplified by:

, which defines the linear operator that maps the line CDB1 to CDA in each CDA iteration and maps the sample weights

to the updated sample weights.

In Equation (

A5), the second equality holds because

obtained at the end of each rotation is used as

in the next rotation. The fifth equality shows that the final CDA line can be written in a form that only depends on variables of the first iteration. This is because, in the first CDA rotation, the initial sample weights

are uniform, and CDB1 is the same as CDB0 of the GDA theoretical framework. Thus, from the sixth equality,

are omitted. The last three equalities show that the final CDA discriminant is a subcase of the generalized GDA theoretical framework.

The operator

can be decomposed as two sequential operators:

where

is the linear operator that maps CDB1 to CDB2 and updates sample weights by

which corresponds to

Appendix C.3 Algorithm 2.

is the linear operator that maps two vectors CDB1 and CDB2 to the BO-estimated discriminant on the plane spanned by CDB1 and CDB2, and updates samples weights.

performs the mapping to CDB2 through the following equation:

The operator

does the mapping to CDA through the following equation:

Where the first two equations find the unit orthogonal vector to CDB1 by the Gram-Schmidt process. The orthogonalization process ensures an efficient search in the space of CDBs during the rotation. The last two equations estimate the optimal rotation angle using Bayesian optimization and the corresponding discriminant.

is the operator that outputs the performance score given a classification discriminant, which projects all data onto the discriminant and performs the OOP search of the score.

Appendix F. Classification Performance Evaluation

The datasets used in this study span standard image classification, medical image classification, and chemical property classification, most of which involve multiclass data. To comprehensively assess classification performance across these diverse tasks, we employed four key metrics: AUROC, AUPR, F-score, and AC-score. Accuracy was excluded due to its limitations in reflecting true performance, especially on imbalanced data. Though data augmentation can make data balanced, it introduces uncertainty due to the augmentation strategy and the data quality.

Since we do not prioritize any specific class (as designing a dataset-specific metric is beyond this study’s scope), we perform two evaluations—one assuming each class as positive—and take the average. This approach applies to AUPR and F-score, whereas AUROC and AC-score, being symmetric about class labels, require only a single evaluation.

For multiclass prediction, a C-dimensional confusion matrix is obtained by comparing predicted labels with true labels. This is converted to C binary confusion matrices by each time taking one class as positive and all the others as negative, and the final evaluation is the average of evaluations on these individual confusion matrices.

To interpret this evaluation scheme, consider the MNIST dataset and the AUPR metric as an example. In binary classification, for the pair of digits "1" and "2", it calculates the sensitivity-recall for detecting "1" and the sensitivity-recall for detecting "2", then takes the average. For other pairs, the calculation follows the same pattern. In the multiclass scenario, it considers the sensitivity-recall for detecting "1" and the sensitivity-recall for detecting "not 1", then takes the average. The same approach applies to other pairs.

Appendix Individual Metrics

(a) AUROC

Area Under the Receiver Operating Characteristic curve involves the true positive rate (TPR) and false positive rate (FPR) as follows:

This measure indicates how well the model distinguishes between classes. A high score means that the model can identify most positive cases with few false positives.

(b) AUPR

Area Under the Precision-Recall curve considers two complementary indices: precision (

) and recall (

), defined as:

AUPR measures whether the classifier retrieves most positive cases.

(c) F-score

F-score (using F1-score in this study) is the harmonic mean of precision and recall which contribute equally:

(d) AC-score

An accuracy-related metric AC-score ([

11]) addresses the limitations of traditional accuracy for imbalanced data. It imposes a stronger penalty for deviations from optimal performance on imbalanced data and is defined as:

This metric assigns equal importance to both classes and penalizes imbalanced performance by ensuring that if one class is poorly classified, the overall score remains low. Thus, AC-score provides a more conservative but reliable evaluation of classifier performance.

Appendix G. Experiment on Linear Classification of Large-Scale Data

There are real-world problems where the samples are at million-level in addition to plenty of features, posing a significant challenge to train classifiers in reasonable time. One of these problems is omics data classification such as single-cell sequencing data. We collected the 1.3-million-cell mouse brain dataset ([

27]) and took the largest two classes to create a binary classification task (757,526 samples and 27,998 features), with varying number of samples by random subsampling till all samples were included. We compared CDA and fast SVM (dual optimizer), which is one of the most efficient and scalable linear SVM, with regard to performance and speed. Original data of sequencing counts were used, and the training and test sets were created using a 4:1 split. The results show that with growing sample sizes, linear CDA outperforms fast SVM not only on classification performance AUROC (

Figure 2a and Appendix

Figure A3), but also on the single-core training speed (

Figure 2b). These results indicate that linear CDA is even more efficient and scalable than the flagship SVM method in efficiency. Hence, CDA as a fast approach has the potential to drive large-scale real-world applications in fields such as biomedicine and autonomous driving.

Figure A3.

Test set performance with varying sizes of training samples in single-cell mouse brain data, evaluated by (a) AUPR, (b) Fscore and (c) ACscore. CDA: Centroid Discriminant Analysis; SVM: Support Vector Machine; AUPR: Area under precision-recall curve; ACscore: Accuracy score.

Figure A3.

Test set performance with varying sizes of training samples in single-cell mouse brain data, evaluated by (a) AUPR, (b) Fscore and (c) ACscore. CDA: Centroid Discriminant Analysis; SVM: Support Vector Machine; AUPR: Area under precision-recall curve; ACscore: Accuracy score.

Appendix H. Supplemental Training Speed Results

Figure A4.

The training time with constant overhead kept. The training time of linear classifiers with increasing input matrix sizes. The number of features were set the same as number of samples N. Shaded area represents standard error. Log-scale is used for both axes to reveal the scalability of algorithms. CDB0: Centroid Discriminant Basis 0; CDA: Centroid Discriminant Analysis; LDA: Linear Discriminant Analysis; SVM: Support Vector Machine; BO: Bayesian Optimization; SGD: Stochastic Gradient Descent; LR: Logistic Regression.

Figure A4.

The training time with constant overhead kept. The training time of linear classifiers with increasing input matrix sizes. The number of features were set the same as number of samples N. Shaded area represents standard error. Log-scale is used for both axes to reveal the scalability of algorithms. CDB0: Centroid Discriminant Basis 0; CDA: Centroid Discriminant Analysis; LDA: Linear Discriminant Analysis; SVM: Support Vector Machine; BO: Bayesian Optimization; SGD: Stochastic Gradient Descent; LR: Logistic Regression.

Figure A5.

Running time results of linear classifiers on 27 real datasets. (a) Linear regression fit of the running time against data sizes, together with original running time data shown by the circles. Overhead is removed. (b-c) Running time and speed results with overhead kept. (b) Linear regression fit of the running time against data sizes. (c) The same as (b) with original running time data shown by the circles. The log-log scale is applied then linear regression is performed in (a-c) to reflect scaling behavior. CDB0: Centroid Discriminant Basis 0; CDA: Centroid Discriminant Analysis; LDA: Linear Discriminant Analysis; SVM: Support Vector Machine; BO: Bayesian Optimization; SGD: Stochastic Gradient Descent; LR: Logistic Regression.

Figure A5.

Running time results of linear classifiers on 27 real datasets. (a) Linear regression fit of the running time against data sizes, together with original running time data shown by the circles. Overhead is removed. (b-c) Running time and speed results with overhead kept. (b) Linear regression fit of the running time against data sizes. (c) The same as (b) with original running time data shown by the circles. The log-log scale is applied then linear regression is performed in (a-c) to reflect scaling behavior. CDB0: Centroid Discriminant Basis 0; CDA: Centroid Discriminant Analysis; LDA: Linear Discriminant Analysis; SVM: Support Vector Machine; BO: Bayesian Optimization; SGD: Stochastic Gradient Descent; LR: Logistic Regression.

Appendix I. Implementation Details

All data samples were converted into 1d representations without feature extraction or standardization throughout the paper, as the focus of this study is to compare the general performance of various classifiers on common tasks, rather than optimizing feature extraction for specific applications such as image classification.

Appendix I.1. Linear Classifiers

Linear classifiers compared in this study include LDA, SVM, and fast SVM. Data were input to classifiers in their original values without standardization.

For CDA, the threshold for the coefficient of variation (CV) of performance-score was set to 0.001. 5-fold cross-validation is applied on the OOP search.

For LDA, the MATLAB toolbox function fitcdiscr was applied, with pseudolinear as the discriminant type, which solves the pseudo-inverse with SVD to adapt to poorly conditioned covariance matrices.

For SVM, the MATLAB toolbox function fitcsvm was applied, with sequential minimal optimization (SMO) as the optimizer. The cost function used the L2-regularized (margin) L1-loss (misclassification) form. The misclassification cost coefficient was set to by default. The maximum number of iterations was linked to the number of training samples in the binary pair as . The convergence criteria were set to the default values.

For fast SVM, the LIBLINEAR MATLAB mex function was applied. For the dual form SVM using dual coordinate descent (DCD), the cost function used the L2-regularized (margin) L1-loss (misclassification) form; For the primal form SVM, both regularization and loss are in L2 form. The misclassification cost coefficient was set to by default. A bias term was added, and correspondingly, the data had an additional dimension with a value of 1. The convergence criteria were set to the default values. Training epochs were set to 300 in general tasks and 100 in large-scale single-cell test.

For SVM-SGD, the passes of all training data are 10; batchsize is 10; learning rate is .

Hyperparameter tuning was performed for fast SVM to achieve the its best performance. CDA, as well as CDB0, do not require parameter tuning.

Speed tests and performance test were from different computational conditions. To obtain classification performance in a reasonable time span, GPU was enabled for SVM, and multicore was enabled for CDA and LDA. To compare speed fairly, all linear classifiers used single-core computing mode.

Appendix I.2. Nonlinear Classifiers

For the experiments on the nonlinear kernel-based approaches, on SVHN subset and chemical Clintox, the data were first divided into train+validation and test set with 5:1 and 4:1 ratio respectively, then the train+validation set was further divided into train set and validation set with a 4:1 ratio.

The gaussian kernel parameter sigma was tuned on the validation set by Bayesian optimization with 30 sampling. For the tuned gaussian parameter, on SVHN subset gaussian CDA has and gaussian SVM has ; on ClinTox gaussian CDA has and gaussian SVM has , where in gaussian kernel .

For CDA, the threshold for the coefficient of variation (CV) of performance-score was set to 0.001. For SVM, libSVM was implemented with L2-regularized (margin) L1-loss (misclassification) cost function and with default misclassification cost coefficient .

Appendix J. Dataset Description

The datasets tested in this study encompass standard image classification, medical image classification, and chemical property prediction, described in details in Table. 1.

Table A1.

Dataset description

Table A1.

Dataset description

| Dataset |

#Samples |

#Features |

#Classes |

Balancedness |

Modality/source |

Classification task |

| Standard images |

| MNIST |

70000 |

400 |

10 |

imbalanced |

image |

digits |

| USPS |

9298 |

256 |

10 |

imbalanced |

image |

digits |

| EMNIST |

145600 |

784 |

26 |

balanced |

image |

letters |

| CIFAR10 |

60000 |

3072 |

10 |

balanced |

image |

objects |

| SVHN |

99289 |

3072 |

10 |

imbalanced |

image |

house numbers |

| flower |

3670 |

1200 |

5 |

imbalanced |

image |

flowers |

| GTSRB |

26635 |

1200 |

43 |

imbalanced |

image |

traffic signs |

| STL10 |

13000 |

2352 |

10 |

balanced |

image |

objects |

| FMNIST |

70000 |

784 |

10 |

balanced |

image |

fashion objects |

| Medical images |

| dermamnist |

10015 |

2352 |

7 |

imbalanced |

dermatoscope |

dermal diseases |

| pneumoniamnist |

5856 |

784 |

2 |

imbalanced |

chest X-Ray |

pneumonia |

| retinamnist |

1600 |

2352 |

5 |

imbalanced |

fundus camera |

diabetic retinopathy |

| breastmnist |

780 |

784 |

2 |

imbalanced |

breast ultrasound |

breast diseases |

| bloodmnist |

17092 |

2352 |

8 |

imbalanced |

blood cell microscope |

blood diseases |

| organamnist |

58830 |

784 |

11 |

imbalanced |

abdominal CT |

human organs |

| organcmnist |

23583 |

784 |

11 |

imbalanced |

abdominal CT |

human organs |

| organsmnist |

25211 |

784 |

11 |

imbalanced |

abdominal CT |

human organs |

| organmnist3d |

1472 |

21952 |

11 |

imbalanced |

abdominal CT |

human organs |

| nodulemnist3d |

1633 |

21952 |

2 |

imbalanced |

chest CT |

nodule malignancy |

| fracturemnist3d |

1370 |

21952 |

3 |

imbalanced |

chest CT |

fracture types |

| adrenalmnist3d |

1584 |

21952 |

2 |

imbalanced |

shape from abdominal CT |

adrenal gland mass |

| vesselmnist3d |

1908 |

21952 |

2 |

imbalanced |

shape from brain MRA |

aneurysm |

| synapsemnist3d |

1759 |

21952 |

2 |

imbalanced |

electron microscope |

excitatory/inhibitory |

| Chemical formula |

| bace |

1513 |

198 |

2 |

imbalanced |

chemical formula |

BACE1 enzyme |

| BBBP |

2050 |

400 |

2 |

imbalanced |

chemical formula |

blood-brain barrier permeability |

| clintox |

1484 |

339 |

2 |

imbalanced |

chemical formula |

clinical toxicity |

| HIV |

41127 |

575 |

2 |

imbalanced |

chemical formula |

HIV drug activity |

| Large-scale single-cell sequencing data |

| Mouse brain |

1306127 |

27998 |

10 |

imbalanced |

single-cell sequencing |

cell type |

Data processing: Image data were used in their original value flattened to 1d vectors; chemical formula were processed by simplified molecular input line entry system (SMILES) tokenized encoding. SMILES is a line notation for describing the structure of chemical entities using short ASCII strings. Chemical formula were stacked and aligned from the left since they have different SMILES lengths. The strings were mapped to natural numbers using a predefined dictionary, and those missing string positions were filled with 0. On these converted data we performed different classifiers.

Appendix K. Supplemental Performances on Real Datasets of linear CDA

Appendix K.1. Binary classification

Figure A6.

Binary classification performance on 27 real datasets according to AUROC. (a) Top-2 occurrences of algorithms. (b) Average ranking of algorithms. Error bars represent standard errors. CDB0: Centroid Discriminant Basis 0; CDA: Centroid Discriminant Analysis; LDA: Linear Discriminant Analysis; SVM: Support Vector Machine; LR: Logistic Regression; BO: Bayesian Optimization

Figure A6.

Binary classification performance on 27 real datasets according to AUROC. (a) Top-2 occurrences of algorithms. (b) Average ranking of algorithms. Error bars represent standard errors. CDB0: Centroid Discriminant Basis 0; CDA: Centroid Discriminant Analysis; LDA: Linear Discriminant Analysis; SVM: Support Vector Machine; LR: Logistic Regression; BO: Bayesian Optimization

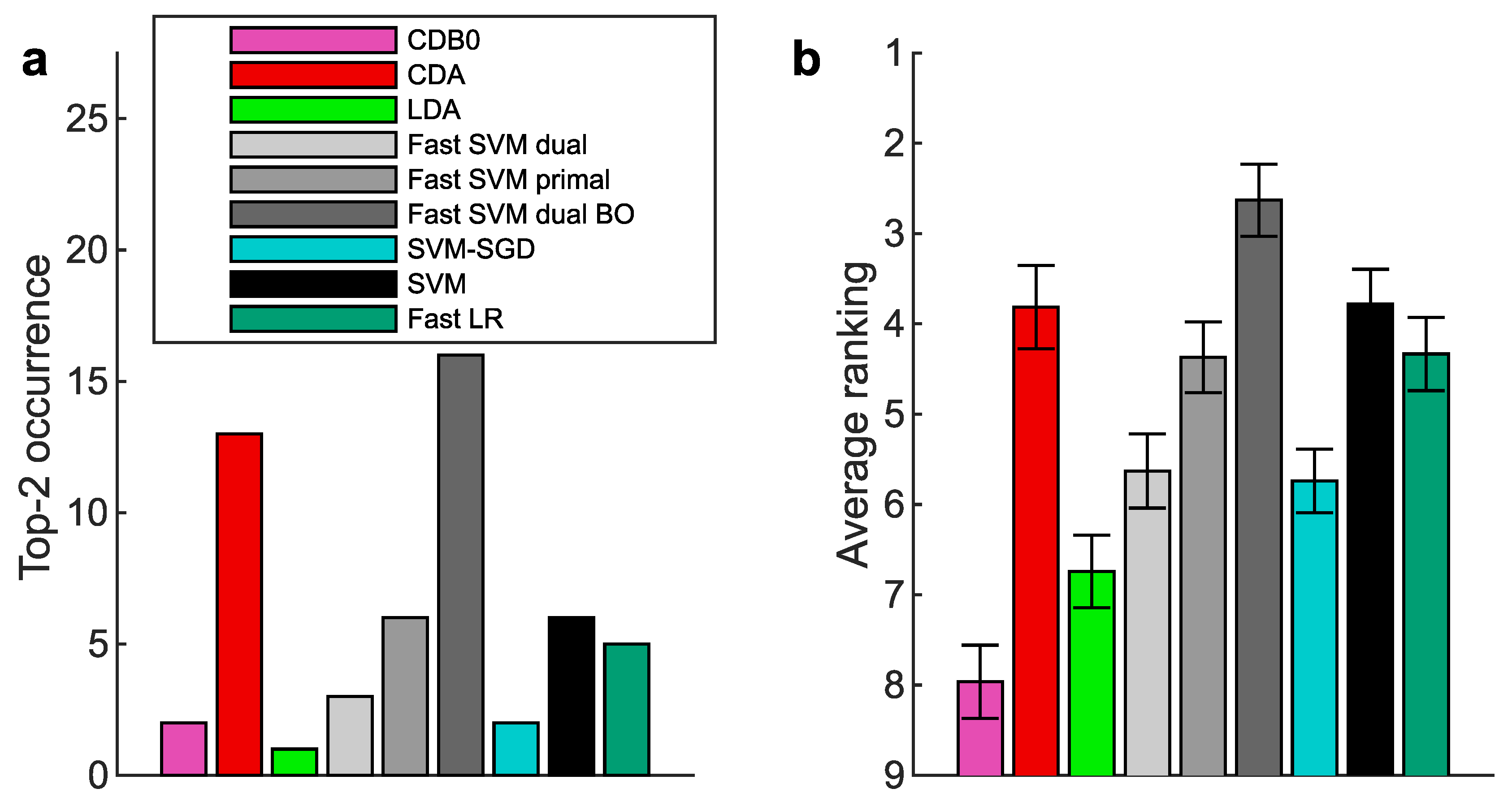

Figure A7.

Binary classification performance on 27 real datasets according to AUPR. (a) Top-2 occurrences of algorithms. (b) Average ranking of algorithms. Error bars represent standard errors. CDB0: Centroid Discriminant Basis 0; CDA: Centroid Discriminant Analysis; LDA: Linear Discriminant Analysis; SVM: Support Vector Machine; LR: Logistic Regression; BO: Bayesian Optimization

Figure A7.

Binary classification performance on 27 real datasets according to AUPR. (a) Top-2 occurrences of algorithms. (b) Average ranking of algorithms. Error bars represent standard errors. CDB0: Centroid Discriminant Basis 0; CDA: Centroid Discriminant Analysis; LDA: Linear Discriminant Analysis; SVM: Support Vector Machine; LR: Logistic Regression; BO: Bayesian Optimization

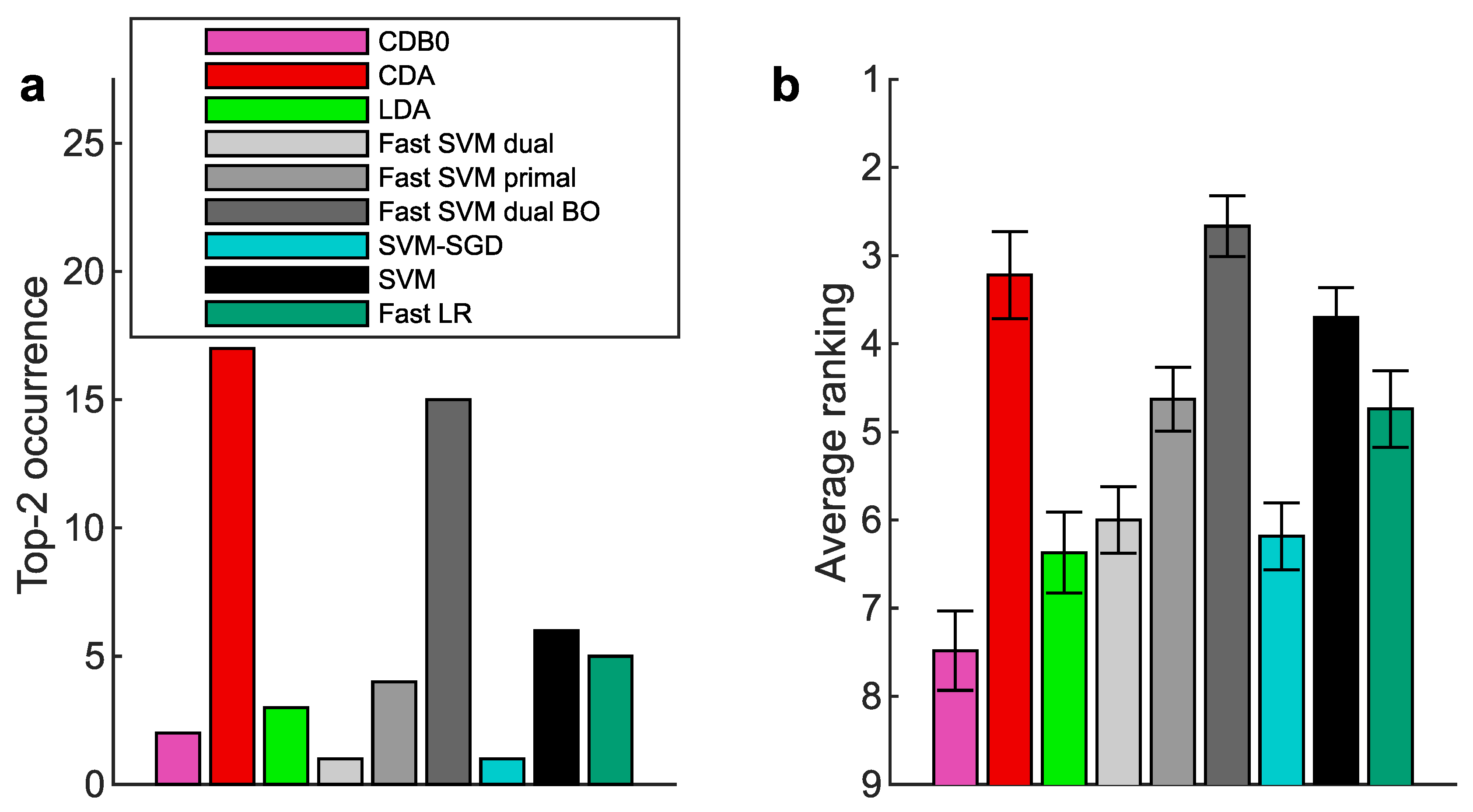

Figure A8.

Binary classification performance on 27 real datasets according to F-score. (a) Top-2 occurrences of algorithms. (b) Average ranking of algorithms. Error bars represent standard errors. CDB0: Centroid Discriminant Basis 0; CDA: Centroid Discriminant Analysis; LDA: Linear Discriminant Analysis; SVM: Support Vector Machine; LR: Logistic Regression; BO: Bayesian Optimization

Figure A8.

Binary classification performance on 27 real datasets according to F-score. (a) Top-2 occurrences of algorithms. (b) Average ranking of algorithms. Error bars represent standard errors. CDB0: Centroid Discriminant Basis 0; CDA: Centroid Discriminant Analysis; LDA: Linear Discriminant Analysis; SVM: Support Vector Machine; LR: Logistic Regression; BO: Bayesian Optimization

Figure A9.

Binary classification performance on 27 real datasets according to AC-score. (a) Top-2 occurrences of algorithms. (b) Average ranking of algorithms. Error bars represent standard errors. CDB0: Centroid Discriminant Basis 0; CDA: Centroid Discriminant Analysis; LDA: Linear Discriminant Analysis; SVM: Support Vector Machine; LR: Logistic Regression; BO: Bayesian Optimization

Figure A9.

Binary classification performance on 27 real datasets according to AC-score. (a) Top-2 occurrences of algorithms. (b) Average ranking of algorithms. Error bars represent standard errors. CDB0: Centroid Discriminant Basis 0; CDA: Centroid Discriminant Analysis; LDA: Linear Discriminant Analysis; SVM: Support Vector Machine; LR: Logistic Regression; BO: Bayesian Optimization

Appendix K.2. Multiclass prediction

Figure A10.

Multiclass prediction performance on 27 real datasets according to AUPR. (a) Top-2 occurrences of algorithms. (b) Average ranking of algorithms. Error bars represent standard errors. CDB0: Centroid Discriminant Basis 0; CDA: Centroid Discriminant Analysis; LDA: Linear Discriminant Analysis; SVM: Support Vector Machine; LR: Logistic Regression; BO: Bayesian Optimization

Figure A10.

Multiclass prediction performance on 27 real datasets according to AUPR. (a) Top-2 occurrences of algorithms. (b) Average ranking of algorithms. Error bars represent standard errors. CDB0: Centroid Discriminant Basis 0; CDA: Centroid Discriminant Analysis; LDA: Linear Discriminant Analysis; SVM: Support Vector Machine; LR: Logistic Regression; BO: Bayesian Optimization

Figure A11.

Multiclass prediction performance on 27 real datasets according to F-score. (a) Top-2 occurrences of algorithms. (b) Average ranking of algorithms. Error bars represent standard errors. CDB0: Centroid Discriminant Basis 0; CDA: Centroid Discriminant Analysis; LDA: Linear Discriminant Analysis; SVM: Support Vector Machine; LR: Logistic Regression; BO: Bayesian Optimization

Figure A11.

Multiclass prediction performance on 27 real datasets according to F-score. (a) Top-2 occurrences of algorithms. (b) Average ranking of algorithms. Error bars represent standard errors. CDB0: Centroid Discriminant Basis 0; CDA: Centroid Discriminant Analysis; LDA: Linear Discriminant Analysis; SVM: Support Vector Machine; LR: Logistic Regression; BO: Bayesian Optimization

Figure A12.

Multiclass prediction performance on 27 real datasets according to AC-score. (a) Top-2 occurrences of algorithms. (b) Average ranking of algorithms. Error bars represent standard errors. CDB0: Centroid Discriminant Basis 0; CDA: Centroid Discriminant Analysis; LDA: Linear Discriminant Analysis; SVM: Support Vector Machine; LR: Logistic Regression; BO: Bayesian Optimization

Figure A12.

Multiclass prediction performance on 27 real datasets according to AC-score. (a) Top-2 occurrences of algorithms. (b) Average ranking of algorithms. Error bars represent standard errors. CDB0: Centroid Discriminant Basis 0; CDA: Centroid Discriminant Analysis; LDA: Linear Discriminant Analysis; SVM: Support Vector Machine; LR: Logistic Regression; BO: Bayesian Optimization

Appendix L. Full Classification Performance on Real Datasets of linear CDA

In the below tables we give full classification performance on 27 real datasets based on 4 metrics with binary classification (Table. 1-4) and multiclass prediction (Table. 5-8). In multiclass prediction, the performances on datasets with only two classes were filled with the one of binary classification.

Appendix L.1. Binary classification

Table A2.

AUROC (Binary Classification Performance)

Table A2.

AUROC (Binary Classification Performance)

| Dataset |

CDB0 |

CDA |

LDA |

Fast SVM dual |

Fast SVM primal |

Fast SVM BO |

SVM SGD |

SVM |

Fast LR |

| Standard images |

| MNIST |

0.957±0.004 |

0.985±0.002 |

0.981±0.002 |

0.985±0.002 |

0.986±0.002 |

0.986±0.002 |

0.986±0.002 |

0.986±0.002 |

0.986±0.002 |

| USPS |

0.966±0.005 |

0.989±0.001 |

0.982±0.002 |

0.99±0.002 |

0.99±0.002 |

0.99±0.001 |

0.991±0.001 |

0.99±0.002 |

0.991±0.001 |

| EMNIST |

0.928±0.003 |

0.972±0.001 |

0.964±0.001 |

0.97±0.001 |

0.97±0.001 |

0.973±0.001 |

0.97±0.002 |

0.97±0.001 |

0.97±0.002 |

| CIFAR10 |

0.696±0.01 |

0.797±0.01 |

0.741±0.01 |

0.754±0.01 |

0.784±0.01 |

0.807±0.01 |

0.762±0.01 |

0.787±0.01 |

0.757±0.02 |

| SVHN |

0.528±0.003 |

0.667±0.005 |

0.555±0.003 |

0.55±0.004 |

0.578±0.004 |

0.592±0.005 |

0.537±0.003 |

0.591±0.004 |

0.57±0.008 |

| flower |

0.703±0.02 |

0.739±0.02 |

0.571±0.01 |

0.71±0.03 |

0.705±0.03 |

0.754±0.03 |

0.722±0.03 |

0.71±0.03 |

0.734±0.02 |

| GTSRB |

0.767±0.003 |

0.972±0.001 |

0.942±0.002 |

0.995±0.0004 |

0.995±0.0003 |

0.995±0.0004 |

0.99±0.0006 |

0.995±0.0004 |

0.994±0.0004 |

| STL10 |

0.723±0.02 |

0.781±0.02 |

0.667±0.01 |

0.758±0.02 |

0.757±0.02 |

0.791±0.02 |

0.766±0.02 |

0.761±0.02 |

0.712±0.03 |

| FMNIST |

0.937±0.01 |

0.975±0.006 |

0.973±0.006 |

0.976±0.006 |

0.976±0.006 |

0.978±0.005 |

0.975±0.006 |

0.976±0.006 |

0.976±0.006 |

| Medical images |

| ermamnist |

0.682±0.01 |

0.753±0.02 |

0.684±0.02 |

0.676±0.02 |

0.708±0.02 |

0.698±0.02 |

0.608±0.02 |

0.712±0.02 |

0.663±0.03 |

| pneumoniamnist |

0.837±0 |

0.933±0 |

0.912±0 |

0.941±0 |

0.943±0 |

0.941±0 |

0.942±0 |

0.941±0 |

0.944±0 |

| retinamnist |

0.63±0.03 |

0.662±0.04 |

0.616±0.02 |

0.631±0.03 |

0.626±0.02 |

0.632±0.03 |

0.615±0.03 |

0.622±0.03 |

0.619±0.03 |

| breastmnist |

0.66±0 |

0.763±0 |

0.703±0 |

0.726±0 |

0.757±0 |

0.705±0 |

0.734±0 |

0.709±0 |

0.688±0 |

| bloodmnist |

0.89±0.02 |

0.947±0.01 |

0.898±0.02 |

0.951±0.01 |

0.955±0.01 |

0.955±0.01 |

0.946±0.01 |

0.957±0.01 |

0.955±0.01 |

| organamnist |

0.897±0.009 |

0.948±0.008 |

0.95±0.008 |

0.928±0.01 |

0.953±0.008 |

0.958±0.008 |

0.939±0.01 |

0.957±0.008 |

0.954±0.008 |

| organcmnist |

0.89±0.01 |

0.925±0.01 |

0.908±0.01 |

0.895±0.01 |

0.913±0.01 |

0.928±0.01 |

0.911±0.01 |

0.919±0.01 |

0.902±0.02 |

| organsmnist |

0.831±0.01 |

0.886±0.01 |

0.866±0.01 |

0.842±0.02 |

0.871±0.01 |

0.888±0.01 |

0.853±0.02 |

0.88±0.01 |

0.844±0.03 |

| organmnist3d |

0.924±0.01 |

0.957±0.008 |

0.953±0.008 |

0.965±0.007 |

0.966±0.007 |

0.966±0.007 |

0.962±0.007 |

0.966±0.007 |

0.937±0.02 |

| nodulemnist3d |

0.715±0 |

0.781±0 |

0.732±0 |

0.687±0 |

0.702±0 |

0.735±0 |

0.691±0 |

0.724±0 |

0.743±0 |

| fracturemnist3d |

0.671±0.06 |

0.556±0.03 |

0.525±0.04 |

0.576±0.007 |

0.578±0.008 |

0.6±0.004 |

0.592±0.004 |

0.612±0.007 |

0.592±0.01 |

| adrenalmnist3d |

0.653±0 |

0.756±0 |

0.692±0 |

0.697±0 |

0.619±0 |

0.647±0 |

0.637±0 |

0.665±0 |

0.641±0 |

| vesselmnist3d |

0.605±0 |

0.685±0 |

0.681±0 |

0.61±0 |

0.648±0 |

0.628±0 |

0.604±0 |

0.6±0 |

0.584±0 |

| synapsemnist3d |

0.539±0 |

0.544±0 |

0.508±0 |

0.518±0 |

0.527±0 |

0.518±0 |

0.525±0 |

0.539±0 |

0.517±0 |

| Chemical formula |

| bace |

0.621±0 |

0.705±0 |

0.684±0 |

0.618±0 |

0.677±0 |

0.697±0 |

0.639±0 |

0.637±0 |

0.693±0 |

| BBBP |

0.711±0 |

0.743±0 |

0.693±0 |

0.667±0 |

0.707±0 |

0.71±0 |

0.646±0 |

0.697±0 |

0.712±0 |

| clintox |

0.65±0 |

0.575±0 |

0.543±0 |

0.517±0 |

0.515±0 |

0.519±0 |

0.508±0 |

0.514±0 |

0.515±0 |

| HIV |

0.6±0 |

0.616±0 |

0.537±0 |

0.51±0 |

0.506±0 |

0.51±0 |

0.505±0 |

0.506±0 |

0.51±0 |

Table A3.

AUPR (Binary Classification Performance)

Table A3.

AUPR (Binary Classification Performance)

| Dataset |

CDB0 |

CDA |

LDA |

Fast SVM dual |

Fast SVM primal |

Fast SVM BO |

SVM SGD |

SVM |

Fast LR |

| Standard images |

| MNIST |

0.957±0.004 |

0.985±0.002 |

0.981±0.002 |

0.985±0.002 |

0.986±0.002 |

0.986±0.002 |

0.986±0.002 |

0.986±0.002 |

0.986±0.002 |

| USPS |

0.966±0.005 |

0.989±0.001 |

0.983±0.002 |

0.99±0.002 |

0.99±0.002 |

0.99±0.001 |

0.991±0.001 |

0.99±0.002 |

0.991±0.001 |

| EMNIST |

0.928±0.003 |

0.972±0.001 |

0.964±0.001 |

0.97±0.001 |

0.97±0.001 |

0.973±0.001 |

0.97±0.002 |

0.97±0.001 |

0.97±0.002 |

| CIFAR10 |

0.697±0.01 |

0.797±0.01 |

0.741±0.01 |

0.762±0.01 |

0.784±0.01 |

0.807±0.01 |

0.774±0.01 |

0.787±0.01 |

0.757±0.02 |

| SVHN |

0.528±0.003 |

0.682±0.006 |

0.559±0.003 |

0.577±0.005 |

0.604±0.004 |

0.638±0.006 |

0.566±0.005 |

0.634±0.006 |

0.587±0.009 |

| flower |

0.704±0.02 |

0.741±0.02 |

0.571±0.01 |

0.712±0.03 |

0.707±0.03 |

0.759±0.03 |

0.73±0.03 |

0.712±0.03 |

0.736±0.02 |

| GTSRB |

0.757±0.003 |

0.973±0.001 |

0.934±0.002 |

0.995±0.0003 |

0.996±0.0003 |

0.996±0.0003 |

0.991±0.0005 |

0.995±0.0003 |

0.995±0.0003 |

| STL10 |

0.724±0.02 |

0.782±0.02 |

0.667±0.01 |

0.759±0.02 |

0.757±0.02 |

0.791±0.02 |

0.772±0.02 |

0.762±0.02 |

0.713±0.03 |

| FMNIST |

0.937±0.01 |

0.975±0.006 |

0.973±0.006 |

0.976±0.006 |

0.976±0.006 |

0.978±0.005 |

0.975±0.006 |

0.976±0.006 |

0.977±0.006 |

| Medical images |

| dermamnist |

0.653±0.01 |

0.743±0.02 |

0.681±0.02 |

0.729±0.02 |

0.746±0.02 |

0.744±0.02 |

0.646±0.03 |

0.752±0.02 |

0.717±0.03 |

| pneumoniamnist |

0.817±0 |

0.931±0 |

0.922±0 |

0.937±0 |

0.944±0 |

0.946±0 |

0.927±0 |

0.945±0 |

0.946±0 |

| retinamnist |

0.614±0.03 |

0.649±0.03 |

0.612±0.02 |

0.641±0.02 |

0.634±0.02 |

0.637±0.03 |

0.622±0.03 |

0.632±0.03 |

0.631±0.03 |

| breastmnist |

0.653±0 |

0.759±0 |

0.69±0 |

0.743±0 |

0.766±0 |

0.718±0 |

0.726±0 |

0.725±0 |

0.736±0 |

| bloodmnist |

0.889±0.02 |

0.947±0.01 |

0.895±0.02 |

0.953±0.01 |

0.955±0.01 |

0.957±0.01 |

0.945±0.01 |

0.957±0.01 |

0.956±0.01 |

| organamnist |

0.902±0.009 |

0.95±0.007 |

0.951±0.008 |

0.929±0.01 |

0.953±0.008 |

0.959±0.008 |

0.941±0.01 |

0.957±0.008 |

0.955±0.008 |

| organcmnist |

0.901±0.009 |

0.931±0.009 |

0.908±0.01 |

0.893±0.01 |

0.911±0.01 |

0.932±0.009 |

0.913±0.01 |

0.918±0.01 |

0.902±0.02 |

| organsmnist |

0.839±0.01 |

0.892±0.01 |

0.867±0.01 |

0.84±0.02 |

0.87±0.01 |

0.894±0.01 |

0.86±0.02 |

0.88±0.01 |

0.845±0.03 |

| organmnist3d |

0.924±0.009 |

0.958±0.008 |

0.954±0.008 |

0.965±0.007 |

0.966±0.007 |

0.967±0.007 |

0.963±0.007 |

0.967±0.007 |

0.937±0.02 |

| nodulemnist3d |

0.7±0 |

0.771±0 |

0.745±0 |

0.695±0 |

0.709±0 |

0.749±0 |

0.711±0 |

0.732±0 |

0.752±0 |

| fracturemnist3d |

0.663±0.05 |

0.566±0.02 |

0.531±0.05 |

0.583±0.003 |

0.586±0.008 |

0.606±0.004 |

0.6±0.006 |

0.618±0.01 |

0.608±0.01 |

| adrenalmnist3d |

0.65±0 |

0.774±0 |

0.705±0 |

0.708±0 |

0.627±0 |

0.663±0 |

0.657±0 |

0.689±0 |

0.665±0 |

| vesselmnist3d |

0.582±0 |

0.671±0 |

0.694±0 |

0.627±0 |

0.7±0 |

0.692±0 |

0.668±0 |

0.646±0 |

0.661±0 |

| synapsemnist3d |

0.537±0 |

0.542±0 |

0.544±0 |

0.533±0 |

0.558±0 |

0.533±0 |

0.538±0 |

0.572±0 |

0.546±0 |

| Chemical formula |

| bace |

0.62±0 |

0.704±0 |

0.685±0 |

0.643±0 |

0.679±0 |

0.699±0 |

0.652±0 |

0.64±0 |

0.695±0 |

| BBBP |

0.701±0 |

0.747±0 |

0.734±0 |

0.712±0 |

0.743±0 |

0.751±0 |

0.701±0 |

0.723±0 |