Submitted: 31 December 2024

Posted: 03 January 2025


Abstract

Keywords:

1. Introduction

- We propose and implement a benchmark for x86_64 breakpoint approaches that measures the execution performance (timing) of VMI-based breakpoint implementations.

- We provide preliminary results of running our breakpoint benchmark workloads against the VMI-based breakpoints implemented in SmartVMI.

2. Background

2.1. Breakpoint basics

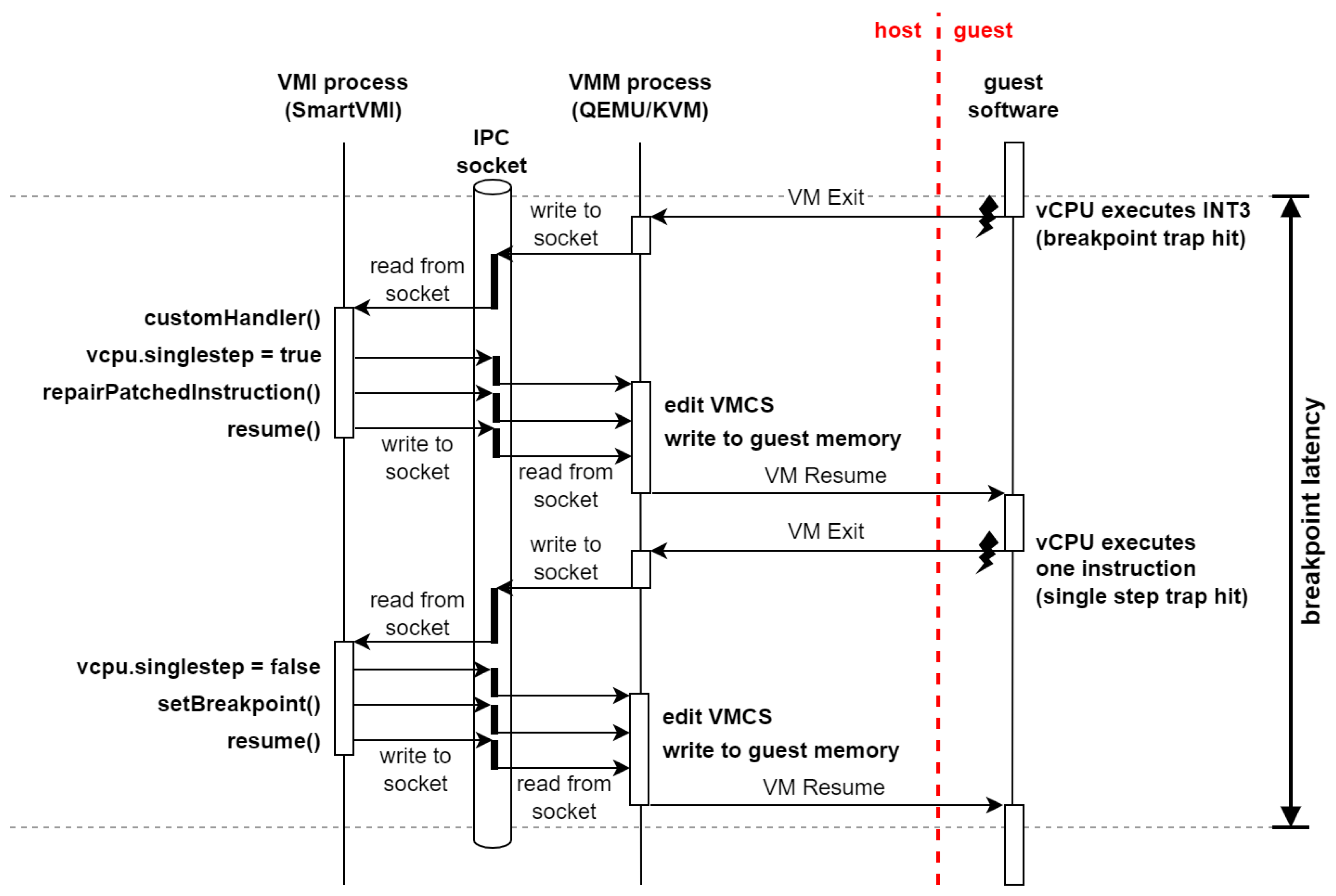

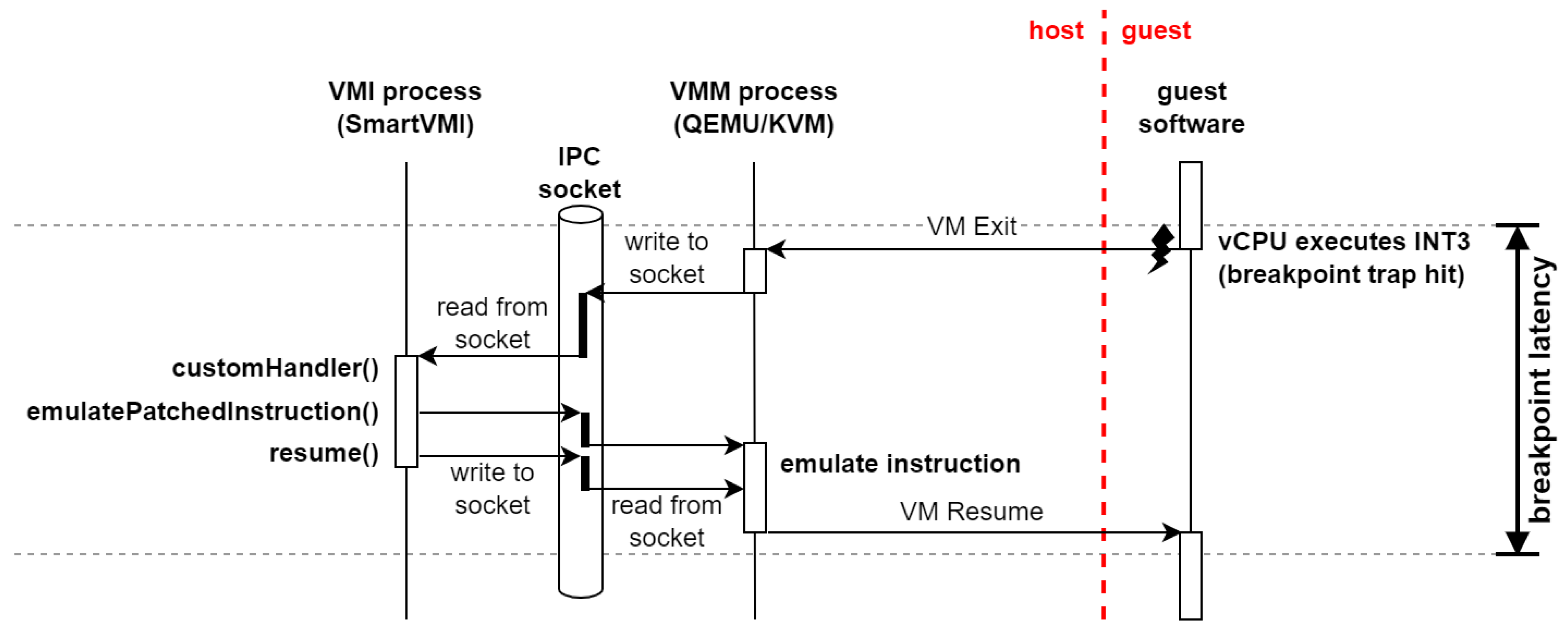

2.2. Breakpoint Handling Approaches for VMI Debugging

2.3. Breakpoint Invocation Optimization

3. Benchmarking Design

- Is it faster to use instruction emulation or perform EPT switches and single-stepping?

- How expensive is it to handle reads with EPT violations and read emulations?

- What is the performance penalty for the whole system when a VM exit happens on every context switch to manage breakpoint statuses?

3.1. Workloads

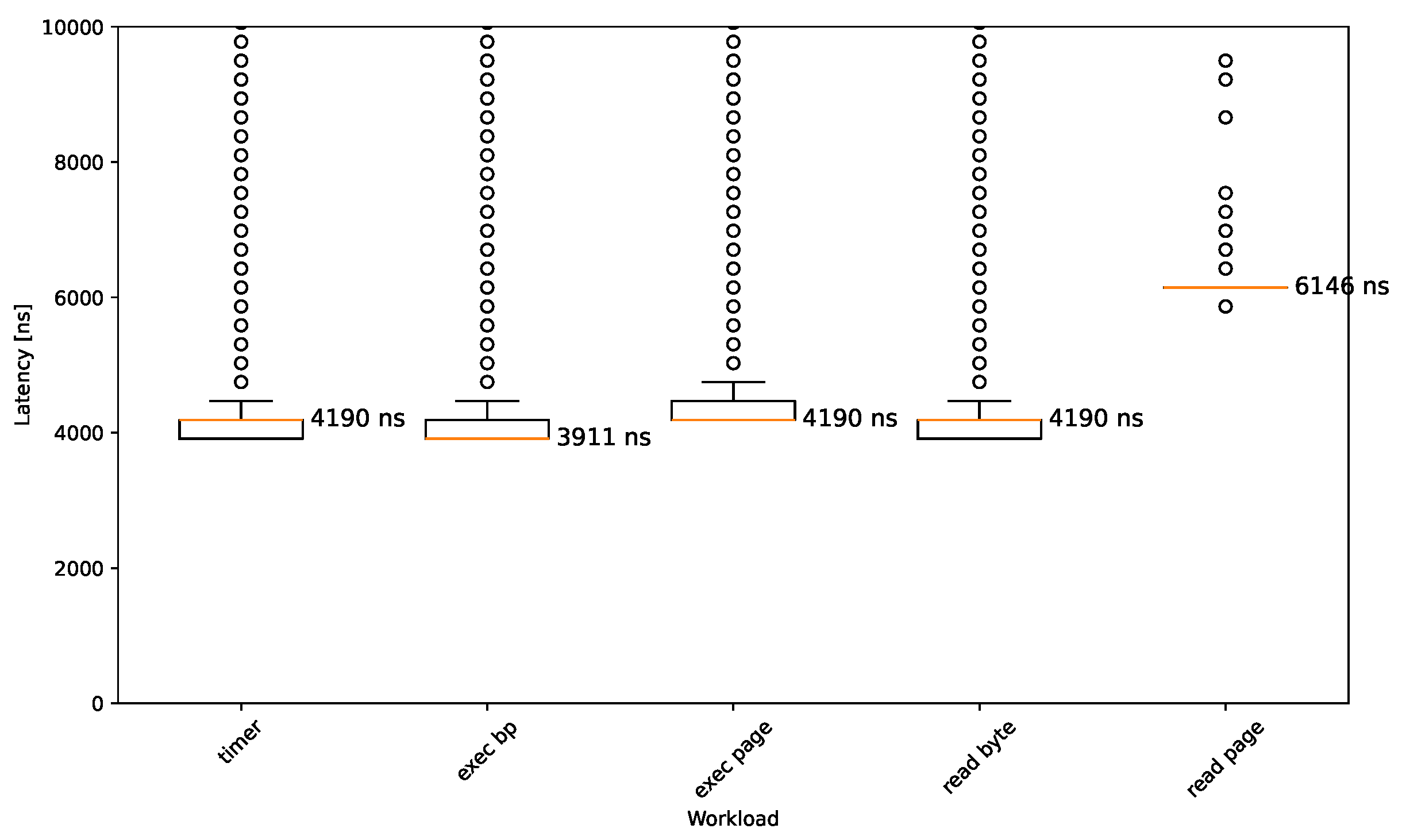

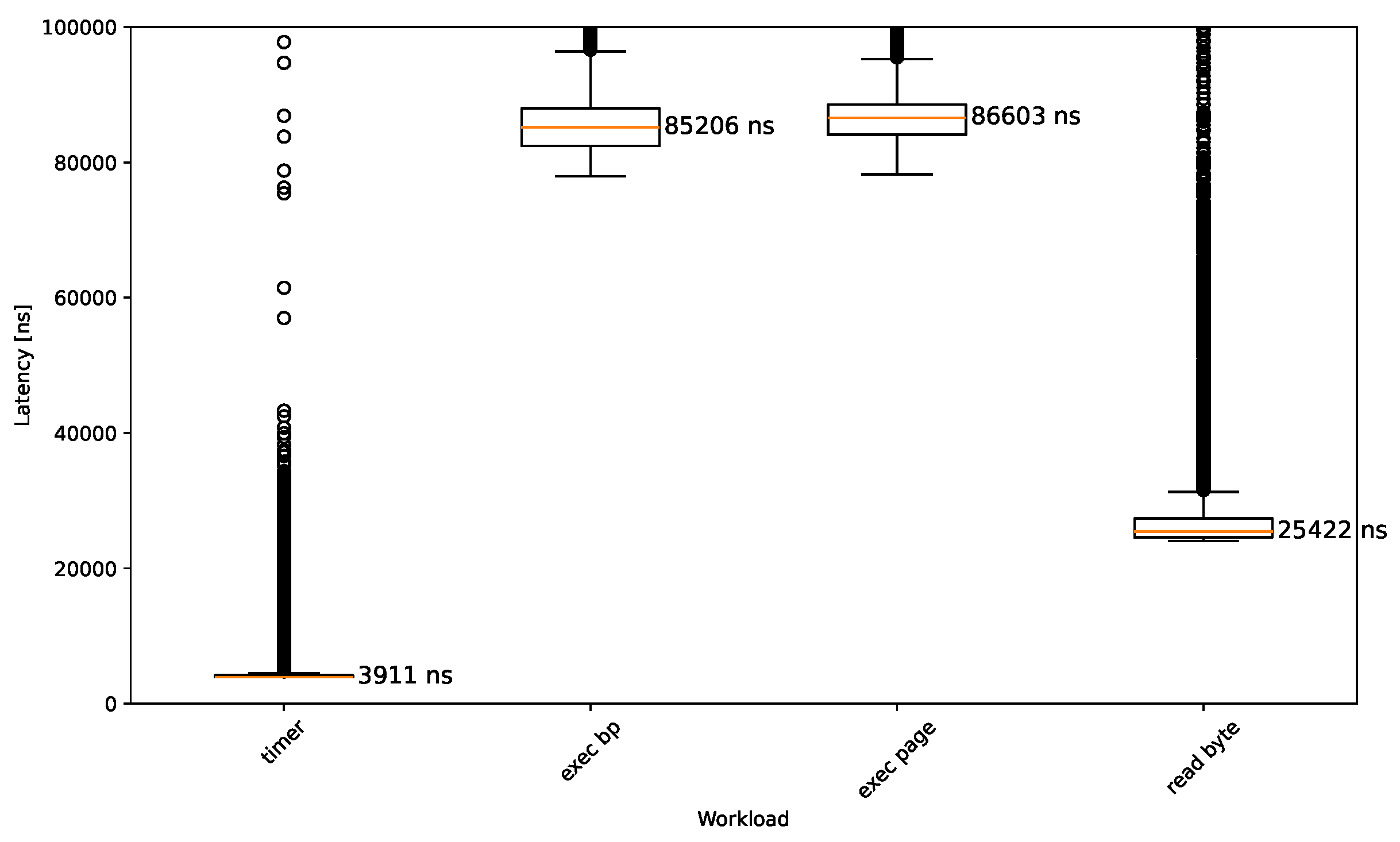

- Breakpoint execution: This workload is designed to measure how long the VMI infrastructure takes to handle a breakpoint. Several factors contribute to this latency: VM exits, processing in the hypervisor, communication between the hypervisor and the VMI application, processing in the VMI application, and VM entries.

- Breakpoint execution + additional instructions: Most of the approaches introduced do not add latency to instructions on which no breakpoint is placed. However, altp2m effectively also breakpoints every other instruction on the same page as the target instruction. Since the previous workload does not capture this, this workload measures the latency of executing the breakpoint together with additional instructions located on the same page.

- Reading the breakpoint: Using EPT permissions to control read accesses to breakpoint locations causes overhead for reasons similar to those of breakpoint execution. This workload quantifies that latency by reading from the exact memory location where a breakpoint is placed.
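The first three workloads can be pictured as timing loops around a single target function. The following is a minimal sketch under stated assumptions, not bpbench's actual code: the names `bp_target`, `time_bp_execution`, and `time_bp_read` are hypothetical, timing uses POSIX `clock_gettime(CLOCK_MONOTONIC)` (whereas bpbench runs in a Windows 10 guest), and `__attribute__((noinline))` assumes GCC or Clang on x86_64. The VMI tool is assumed to place its breakpoint on the first instruction of `bp_target`; without a breakpoint installed, the loops measure the baseline.

```c
#define _POSIX_C_SOURCE 199309L
#include <stddef.h>
#include <stdint.h>
#include <time.h>

/* Hypothetical breakpoint target: the VMI tool is assumed to place its
 * breakpoint on the first instruction of this function. The loop executes
 * `extra` rounds of instructions from the same function, which are assumed
 * (likely for a small function, but not guaranteed) to share the
 * breakpoint's page. extra = 0 corresponds to plain breakpoint execution. */
__attribute__((noinline)) uint64_t bp_target(uint64_t x, unsigned extra) {
    for (unsigned i = 0; i < extra; i++)
        x = x * 6364136223846793005ULL + 1442695040888963407ULL;
    return x;
}

/* Calling through a volatile pointer keeps the compiler from inlining or
 * specializing the target, so every iteration really executes it. */
static uint64_t (*volatile target_fn)(uint64_t, unsigned) = bp_target;

static uint64_t now_ns(void) {
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (uint64_t)ts.tv_sec * 1000000000ULL + (uint64_t)ts.tv_nsec;
}

/* Breakpoint execution (+ additional instructions): average time per call. */
double time_bp_execution(size_t iters, unsigned extra) {
    uint64_t acc = 0;
    uint64_t start = now_ns();
    for (size_t i = 0; i < iters; i++)
        acc = target_fn(acc, extra);
    uint64_t end = now_ns();
    volatile uint64_t sink = acc; /* keep the result observable */
    (void)sink;
    return iters ? (double)(end - start) / (double)iters : 0.0;
}

/* Reading the breakpoint: load the first byte of bp_target, i.e. the exact
 * breakpoint address. Converting a function address to a data pointer is
 * implementation-defined but works with GCC/Clang on x86_64. */
double time_bp_read(size_t iters) {
    const volatile unsigned char *bp =
        (const volatile unsigned char *)(uintptr_t)target_fn;
    unsigned sum = 0;
    uint64_t start = now_ns();
    for (size_t i = 0; i < iters; i++)
        sum += *bp;
    uint64_t end = now_ns();
    volatile unsigned sink = sum;
    (void)sink;
    return iters ? (double)(end - start) / (double)iters : 0.0;
}
```

Running each function once with and once without the breakpoint installed, and subtracting the two averages, gives an estimate of the per-event overhead that the VMI setup adds.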

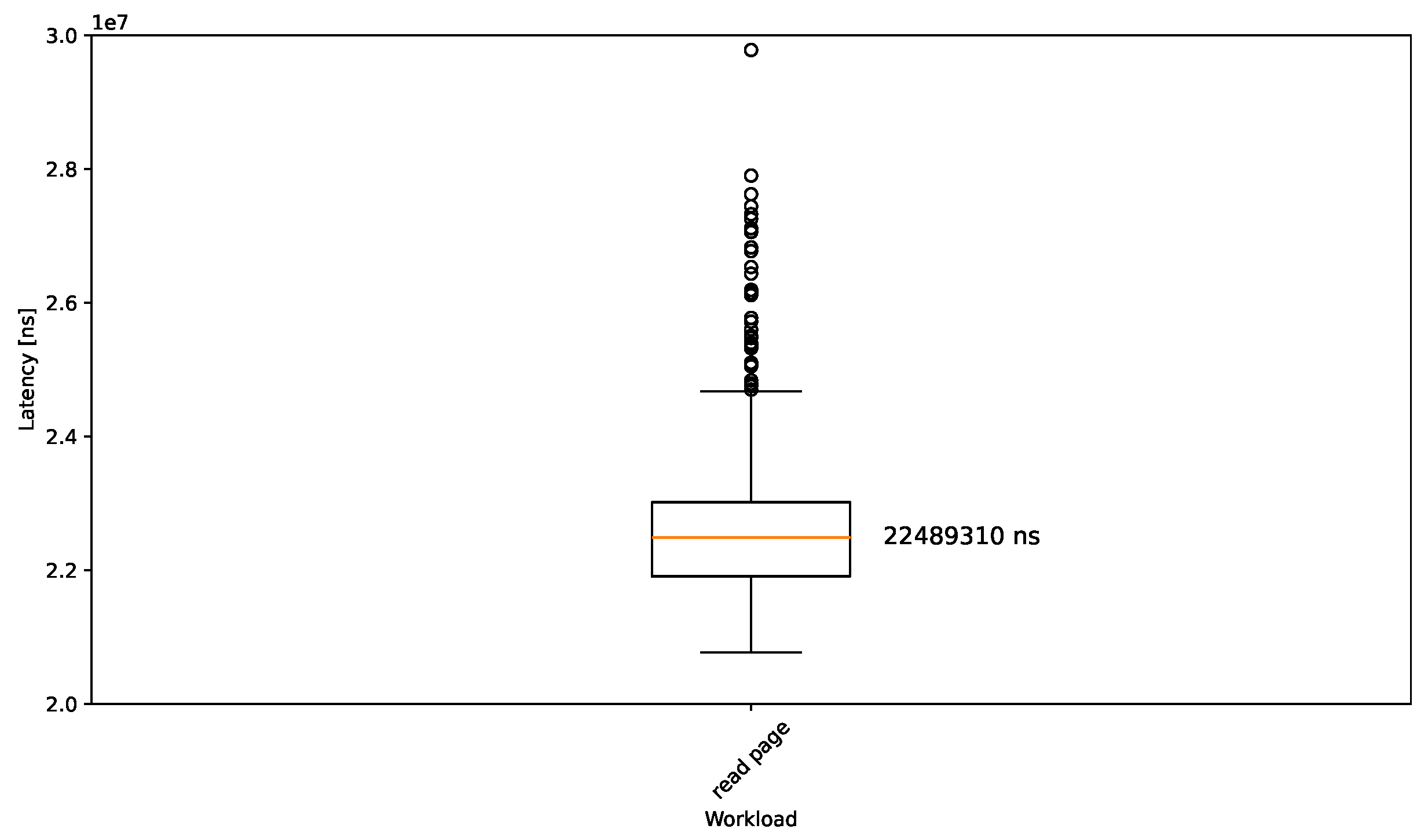

- Reading a page with a breakpoint: EPT read control has page granularity, so all approaches relying on it behave the same regardless of whether the breakpoint itself or any other address on the same page is read. The results should therefore show the same trend as the previous workload. The main purpose of this workload is to mimic the behavior of Microsoft’s PatchGuard.
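A PatchGuard-like scan of the breakpoint's page might look like the following minimal sketch. It assumes 4 KiB pages and GCC or Clang on x86_64; `page_bp_target`, `page_base`, and `checksum_page_of` are hypothetical names, and the rotate-and-xor checksum only stands in for whatever integrity check PatchGuard actually performs. Because EPT read control is page-granular, every read in the loop hits the protected page, no matter which byte holds the breakpoint.

```c
#include <stdint.h>

/* Assumed page size: 4 KiB (large pages are ignored in this sketch). */
#define PAGE_SIZE_4K ((uintptr_t)4096)

/* Hypothetical target: a breakpoint is assumed to sit somewhere on the page
 * that contains this function. */
__attribute__((noinline)) int page_bp_target(int x) { return x * 3 + 1; }

/* Round an address down to the start of its 4 KiB page. */
uintptr_t page_base(uintptr_t addr) { return addr & ~(PAGE_SIZE_4K - 1); }

/* Read every byte of the page containing `addr` and fold it into a simple
 * checksum, loosely imitating a PatchGuard-style integrity scan. The whole
 * page is mapped because mappings have page granularity. */
uint32_t checksum_page_of(uintptr_t addr) {
    const volatile unsigned char *p =
        (const volatile unsigned char *)page_base(addr);
    uint32_t sum = 0;
    for (uintptr_t i = 0; i < PAGE_SIZE_4K; i++)
        sum = (sum << 5 | sum >> 27) ^ p[i]; /* rotate left by 5, then xor */
    return sum;
}
```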

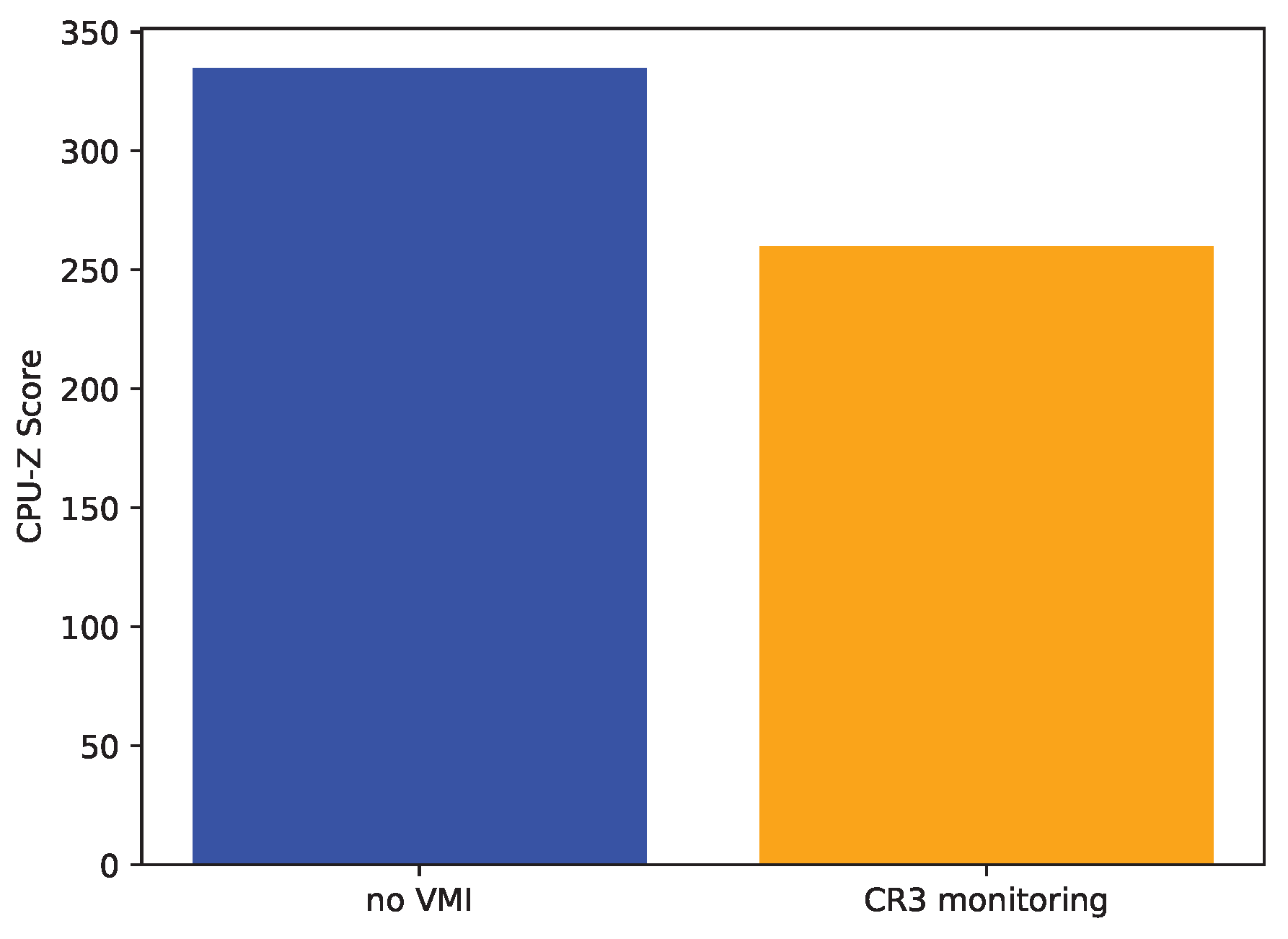

- CPU workload with and without CR3 monitoring: The final workload is not concerned with breakpoints themselves. To implement process-specific breakpoints, context switches have to be detected and handled by intercepting every write to the CR3 register. A CPU performance benchmark is therefore run both with and without VMI monitoring CR3 writes; the difference in performance is the minimal cost of processing context switches with VMI. Note that the chosen benchmark likely affects the measurable overhead: performing only calculations causes no additional context switches, while repeatedly invoking system calls, e.g., to write to a file, inevitably triggers context switches.
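The comparison can be illustrated with a relative-overhead calculation and two contrasting workloads. This is a hypothetical sketch, not part of bpbench: it assumes a "higher is better" benchmark score (such as the scores CPU-Z reports) and uses POSIX `write(2)` for the system-call-heavy case; `cr3_overhead_pct`, `compute_only`, and `syscall_heavy` are illustrative names.

```c
#define _POSIX_C_SOURCE 200809L
#include <stddef.h>
#include <stdint.h>
#include <unistd.h>

/* Relative slowdown in percent of a score measured with CR3-write
 * monitoring, compared with a run without VMI. Assumes higher is better. */
double cr3_overhead_pct(double baseline_score, double monitored_score) {
    if (baseline_score <= 0.0)
        return 0.0; /* not meaningful for a non-positive baseline */
    return (baseline_score - monitored_score) / baseline_score * 100.0;
}

/* Compute-only loop: issues no system calls, so it causes no additional
 * context switches by itself. */
uint64_t compute_only(uint64_t n) {
    uint64_t x = 1;
    for (uint64_t i = 0; i < n; i++)
        x = x * 2862933555777941757ULL + 3037000493ULL;
    return x;
}

/* System-call-heavy loop: one write(2) of a single byte per iteration, e.g.
 * to a file. Per the workload description, repeated system calls such as
 * file writes trigger context switches, so CR3 monitoring should cost more
 * here than in compute_only. Returns the number of successful writes. */
size_t syscall_heavy(int fd, size_t n) {
    const char byte = 'x';
    size_t written = 0;
    for (size_t i = 0; i < n; i++)
        if (write(fd, &byte, 1) == 1)
            written++;
    return written;
}
```

For example, a monitored score of 90 against a baseline of 100 gives `cr3_overhead_pct(100.0, 90.0)`, i.e., roughly 10 %.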

3.2. Implementation Considerations

3.3. The bpbench Benchmarking Application

4. Initial Measurement Results

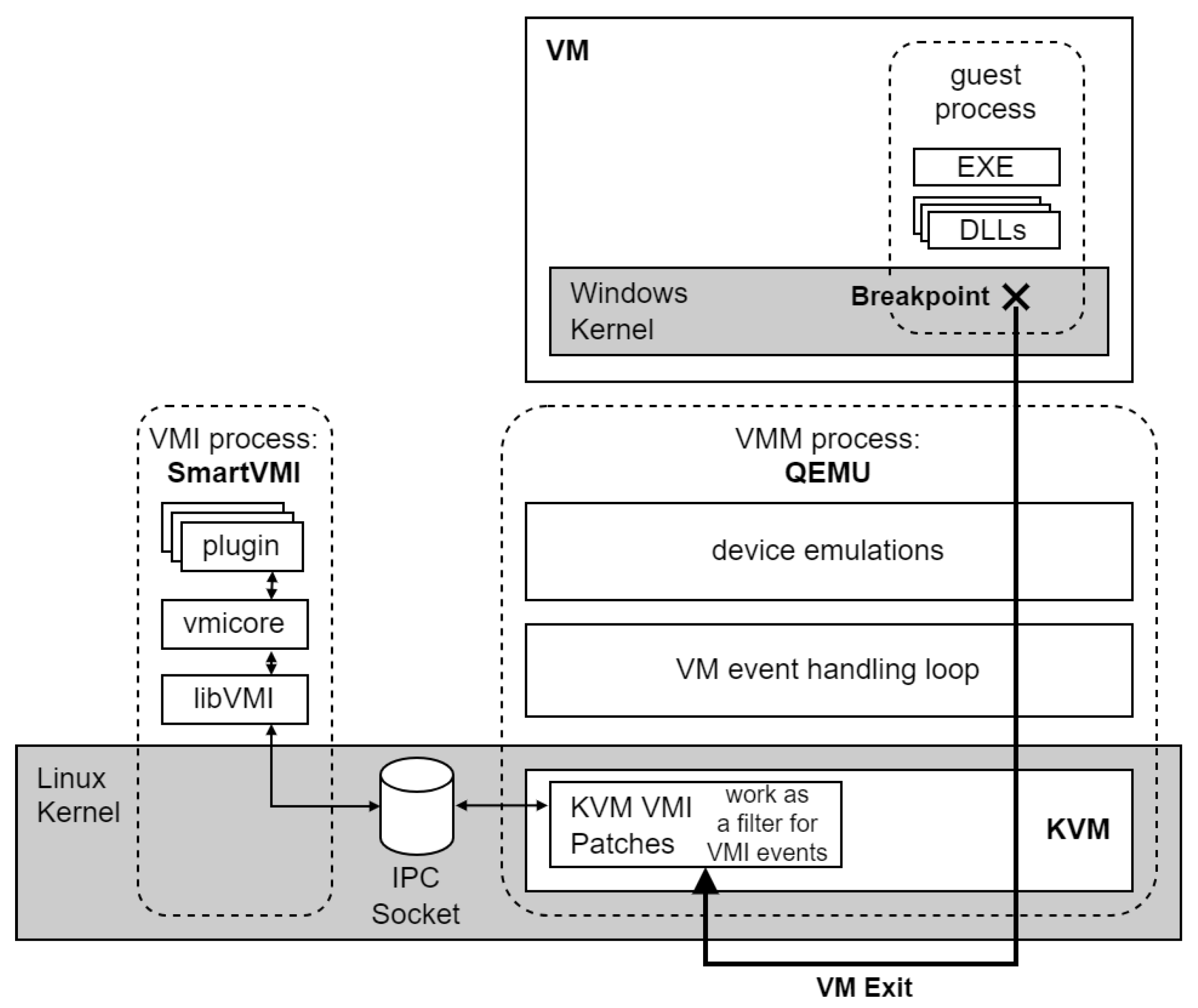

4.1. VMI Setup

4.2. Breakpoint Execution and Reading

4.3. CR3 Write Monitoring

5. Related Work

6. Conclusions

6.1. Summary

6.2. Future Work

Author Contributions

Funding

Data Availability Statement

Use of Artificial Intelligence

Conflicts of Interest

References

- Garfinkel, T.; Rosenblum, M.; et al. A virtual machine introspection based architecture for intrusion detection. In Proceedings of the NDSS, San Diego, CA, 2003, Vol. 3, pp. 191–206.

- Baek, H.W.; Srivastava, A.; Van der Merwe, J. CloudVMI: Virtual Machine Introspection as a Cloud Service. In Proceedings of the 2014 IEEE International Conference on Cloud Engineering. IEEE, 3 2014, pp. 153–158. [CrossRef]

- Jiang, X.; Wang, X.; Xu, D. Stealthy malware detection through VMM-based ‘out-of-the-box’ semantic view. In Proceedings of the 14th ACM conference on Computer and communications security. ACM, 10 2007, Vol. 10, CCS07, pp. 128–138. [CrossRef]

- Dinaburg, A.; Royal, P.; Sharif, M.; Lee, W. Ether: malware analysis via hardware virtualization extensions. In Proceedings of the 15th ACM conference on Computer and communications security. ACM, 10 2008, CCS08, pp. 51–62. [CrossRef]

- Willems, C.; Hund, R.; Holz, T. CXPInspector: Hypervisor-based, hardware-assisted system monitoring. Technical report, Ruhr-Universität Bochum, 2013.

- Dolan-Gavitt, B.; Leek, T.; Zhivich, M.; Giffin, J.; Lee, W. Virtuoso: Narrowing the Semantic Gap in Virtual Machine Introspection. In Proceedings of the 2011 IEEE Symposium on Security and Privacy. IEEE, 5 2011, pp. 297–312. [CrossRef]

- Jain, B.; Baig, M.B.; Zhang, D.; Porter, D.E.; Sion, R. SoK: Introspections on Trust and the Semantic Gap. In Proceedings of the 2014 IEEE Symposium on Security and Privacy, 5 2014, pp. 605–620. ISSN: 2375-1207. [CrossRef]

- Dangl, T.; Taubmann, B.; Reiser, H.P. RapidVMI: Fast and multi-core aware active virtual machine introspection. In Proceedings of the 16th International Conference on Availability, Reliability and Security, New York, NY, USA, 8 2021; ARES ’21, pp. 1–10. [CrossRef]

- Lengyel, T.K.; Maresca, S.; Payne, B.D.; Webster, G.D.; Vogl, S.; Kiayias, A. Scalability, fidelity and stealth in the DRAKVUF dynamic malware analysis system. In Proceedings of the 30th Annual Computer Security Applications Conference. ACM, 12 2014, ACSAC ’14, pp. 386–395. [CrossRef]

- Roccia, T. Evolution of Malware Sandbox Evasion Tactics – A Retrospective Study, 2019.

- Lengyel, T.K. Stealthy Monitoring with Xen altp2m. 2016. Available online: https://xenproject.org/blog/stealthy-monitoring-with-xen-altp2m/ (accessed on 17 December 2024).

- Qiu, J.; Yadegari, B.; Johannesmeyer, B.; Debray, S.; Su, X. Identifying and Understanding Self-Checksumming Defenses in Software. In Proceedings of the 5th ACM Conference on Data and Application Security and Privacy, New York, NY, USA, 3 2015; CODASPY ’15, pp. 207–218. [CrossRef]

- Sonawane, S.; Onofri, D. Malware Analysis: GuLoader Dissection Reveals New Anti-Analysis Techniques and Code Injection Redundancy. 2022. Available online: https://www.crowdstrike.com/en-us/blog/guloader-dissection-reveals-new-anti-analysis-techniques-and-code-injection-redundancy/ (accessed on 19 December 2024).

- Blum, D. PatchGuard and Windows security, 2007.

- Lengyel, T. DRAKVUF Black-box Binary Analysis. 2014. Available online: https://github.com/tklengyel/drakvuf (accessed on 19 December 2024).

- Vasudevan, A.; Yerraballi, R. Stealth Breakpoints. In Proceedings of the 21st Annual Computer Security Applications Conference (ACSAC’05). IEEE, 2005, pp. 381–392. [CrossRef]

- Wahbe, R. Efficient data breakpoints. ACM SIGPLAN Notices 1992, 27, 200–212. [CrossRef]

- Wahbe, R.; Lucco, S.; Graham, S.L. Practical data breakpoints: Design and implementation. In Proceedings of the ACM SIGPLAN 1993 conference on Programming language design and implementation. ACM, 6 1993, Vol. 28, PLDI93, pp. 1–12. [CrossRef]


| Approach | BP Trigger | Handling of the Original Instruction | Stealth |
|---|---|---|---|
| Usermode debugging | int3 | Single-stepping (TF) | None |
| SmartVMI with Single-Stepping | int3 | Single-stepping (MTF) | EPT read permission (PageGuard) |
| Instruction Emulation | int3 | Instruction emulation | EPT read permission |
| altp2m | EPT violation | EPT switch + Single-stepping (MTF) | Invisible by design |
| altp2m with int3 (DRAKVUF) | int3 | EPT switch + Single-stepping (MTF) | EPT read permission |
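Read together with the workload descriptions in Section 3.1, the table suggests which workloads each approach intercepts beyond the breakpoint execution itself. The following C sketch encodes that reading; all type and function names are hypothetical, the `ept_read_control` flag mirrors the Stealth column, and `same_page_exec_traps` reflects the statement that, among the approaches introduced, only altp2m also traps other instructions on the breakpoint's page.

```c
#include <stdbool.h>

/* The five approaches from the comparison table. */
typedef enum {
    USERMODE_DEBUGGING,
    SMARTVMI_SINGLE_STEP,
    INSTRUCTION_EMULATION,
    ALTP2M,
    ALTP2M_INT3_DRAKVUF,
    APPROACH_COUNT
} bp_approach;

typedef struct {
    const char *name;
    bool int3_trigger;          /* BP Trigger column: int3 vs. EPT violation */
    bool ept_switch;            /* original instruction handled via EPT switch */
    bool ept_read_control;      /* Stealth column relies on EPT read permission */
    bool same_page_exec_traps;  /* other instructions on the page also trap */
} bp_traits;

static const bp_traits TRAITS[APPROACH_COUNT] = {
    [USERMODE_DEBUGGING]    = {"Usermode debugging", true, false, false, false},
    [SMARTVMI_SINGLE_STEP]  = {"SmartVMI with Single-Stepping", true, false, true, false},
    [INSTRUCTION_EMULATION] = {"Instruction Emulation", true, false, true, false},
    [ALTP2M]                = {"altp2m", false, true, false, true},
    [ALTP2M_INT3_DRAKVUF]   = {"altp2m with int3 (DRAKVUF)", true, true, true, false},
};

/* Reading the breakpoint or its page is only intercepted where stealth is
 * implemented with EPT read permissions. */
bool reads_intercepted(bp_approach a) { return TRAITS[a].ept_read_control; }

/* Do additional instructions on the breakpoint's page add intercepts on top
 * of the breakpoint itself? */
bool extra_instructions_intercepted(bp_approach a) {
    return TRAITS[a].same_page_exec_traps;
}

const char *approach_name(bp_approach a) { return TRAITS[a].name; }
```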

| Category | Host | VM |
|---|---|---|
| CPU | Intel Core i5 7300U | 1 vCPU |
| RAM | 2 × 4 GB DDR4 | 4 GB |
| OS | NixOS 24.05 | Windows 10 Pro 22H2 |
| Kernel version | 5.4.241 | Build 19045.2965 |
| Other software | QEMU 4.2.11, SmartVMI | bpbench, CPU-Z 2.13.0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).