1. Introduction

AI, a transformative technology in the realm of medicine, is fundamentally the process by which computational programs emulate human cognitive functions, notably learning and problem-solving [

1]. In cardiology, AI applications have been particularly groundbreaking. For instance, the utilization of AI in analyzing data from seemingly straightforward devices like electrocardiograms (ECGs) has transformed these tools into potent predictive instruments [

2]. Additionally, AI's integration into cardiovascular imaging has significantly enhanced care delivery, influencing every stage of cardiac imaging from acquisition to final reporting [

3,

4,

5]. AI technologies, particularly machine learning (ML) and deep learning (DL), address the operator dependence and subjectivity of conventional image interpretation by offering more consistent and objective methods for evaluation. ML, a subset of AI that focuses on algorithms that allow systems to learn from and make predictions based on data, and DL, a specialized branch of ML that uses multi-layered neural networks to analyze complex patterns, help minimize human errors and reduce variability between operators [

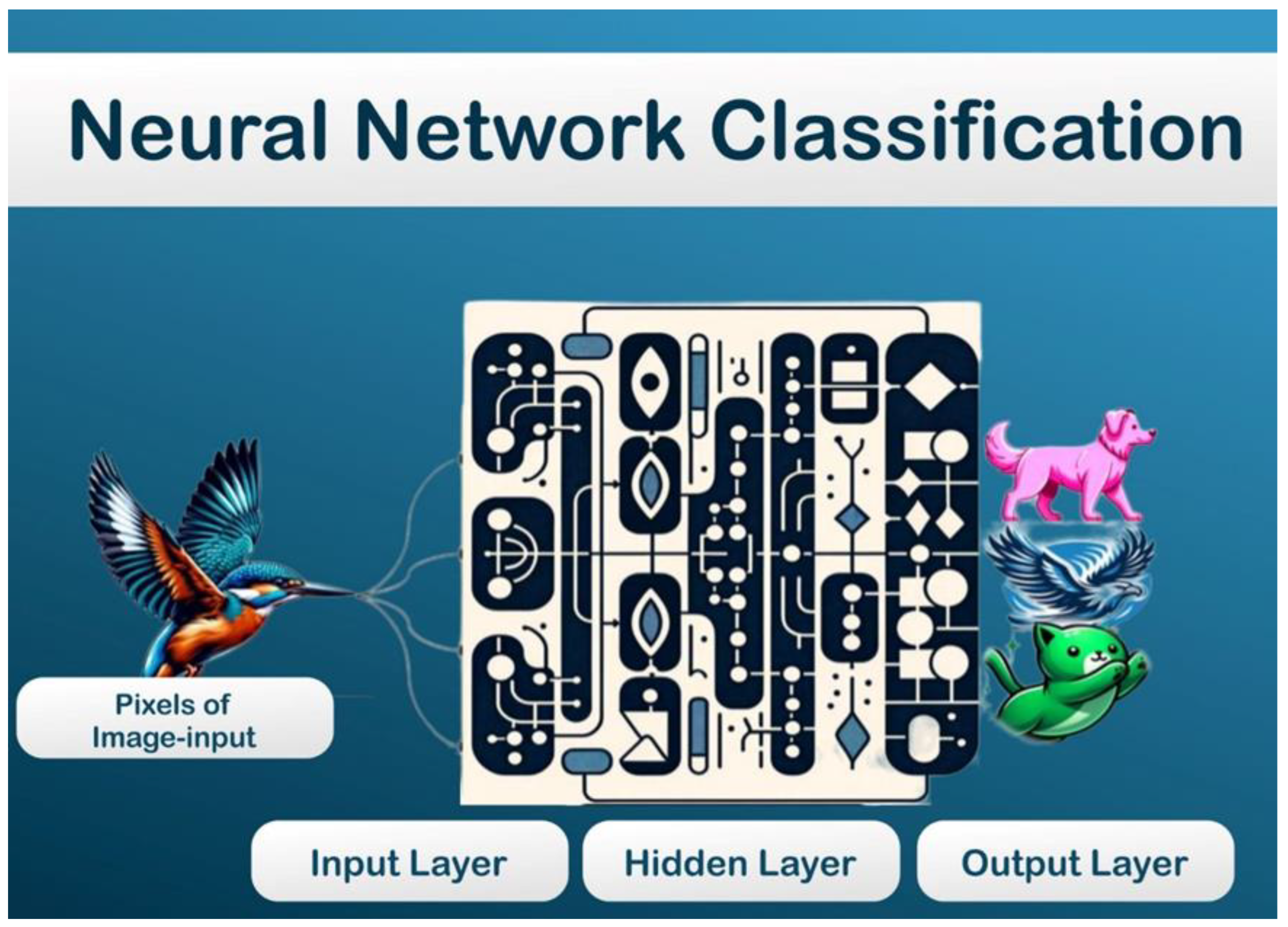

8]. DL builds on neural networks, an architecture loosely modeled on the human brain, which learn higher-level features from data and can thereby read, learn, and perform complex tasks (

Figure 1). In its simplest form, the neural network framework has three layers: an input layer that receives the data, a hidden (intermediate) layer that processes the weighted signals flowing from the input layer, and an output layer that produces the result (

Figure 2).
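The three-layer structure described above can be illustrated with a minimal sketch of a forward pass. The weights, biases, layer sizes, and the sigmoid activation are hypothetical choices made only for illustration; they are not taken from any model discussed in this review.

```python
import math


def sigmoid(x):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs, weights, biases):
    """Fully connected layer: one weighted sum plus bias per unit, then sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(unit_weights, inputs)) + bias)
        for unit_weights, bias in zip(weights, biases)
    ]


def forward(inputs, hidden_weights, hidden_biases, output_weights, output_biases):
    """Input layer -> hidden layer -> output layer."""
    hidden = dense_layer(inputs, hidden_weights, hidden_biases)
    return dense_layer(hidden, output_weights, output_biases)


# Hypothetical weights for 3 inputs, 2 hidden units, and 1 output unit.
HIDDEN_WEIGHTS = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]
HIDDEN_BIASES = [0.0, 0.1]
OUTPUT_WEIGHTS = [[1.2, -0.7]]
OUTPUT_BIASES = [0.05]

prediction = forward([0.6, 0.1, 0.9], HIDDEN_WEIGHTS, HIDDEN_BIASES,
                     OUTPUT_WEIGHTS, OUTPUT_BIASES)
print(prediction)  # a list with a single value between 0 and 1
```

In a trained network the weights would be learned from data rather than fixed by hand, and DL models stack many such hidden layers.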

AI's role in echocardiography, a critical diagnostic tool in cardiology, is a testament to its revolutionary impact. Echocardiography, vital for real-time imaging and detection of cardiac anomalies, has traditionally been hampered by its dependence on the operator's skill and subjective interpretation of images. This reliance often leads to variability in diagnoses and a susceptibility to errors [

6,

7].

In critical care scenarios, where time and accuracy are paramount, AI's ability to rapidly and automatically analyze echocardiography data becomes invaluable. The technology promises to enhance the efficiency and accuracy of manual tracings, offering a more standardized evaluation of echocardiographic images and videos. This advancement is particularly beneficial in situations where quick analyses are necessary due to hemodynamic instability in patients [

8,

9,

10].

AI's integration into echocardiography and broader cardiology represents a significant stride towards more efficient, accurate, and standardized cardiac care. Its ability to minimize human errors, reduce inter-operator variability, and provide rapid, automated analyses is set to revolutionize the field. The current and future applications of AI encompass image acquisition, interpretation, diagnosis, and prognosis evaluation. As AI continues to evolve, its role in enhancing echocardiographic evaluations and overall cardiac care is expected to expand, ushering in a new era of advanced, technology-driven medical practice.

In light of these advancements and challenges, the purpose of this paper is to examine the growing role of AI in echocardiography and its broader implications in cardiology. We provide an overview of how AI-driven technologies are reshaping cardiac diagnostics and treatment planning, and we discuss how AI can enhance the accuracy, efficiency, and standardization of echocardiographic assessments while preserving the critical balance between technological innovation and the indispensable expertise of medical professionals. Furthermore, we consider the future trajectory of AI in cardiac imaging and its potential to improve patient care and outcomes in cardiology.

2. AI Technologies in Echocardiography

ML and DL, subsets of AI, have revolutionized echocardiography by enabling automated image analysis and interpretation. The application of ML in echocardiography can be understood through the three primary types of ML: supervised learning, unsupervised learning, and reinforcement learning. Each of these methodologies has distinct characteristics and potential applications within echocardiography, although our literature search did not identify specific examples for every type directly from this field. The following overview describes how these ML types could contribute to echocardiography, drawing on general principles and applications in related fields:

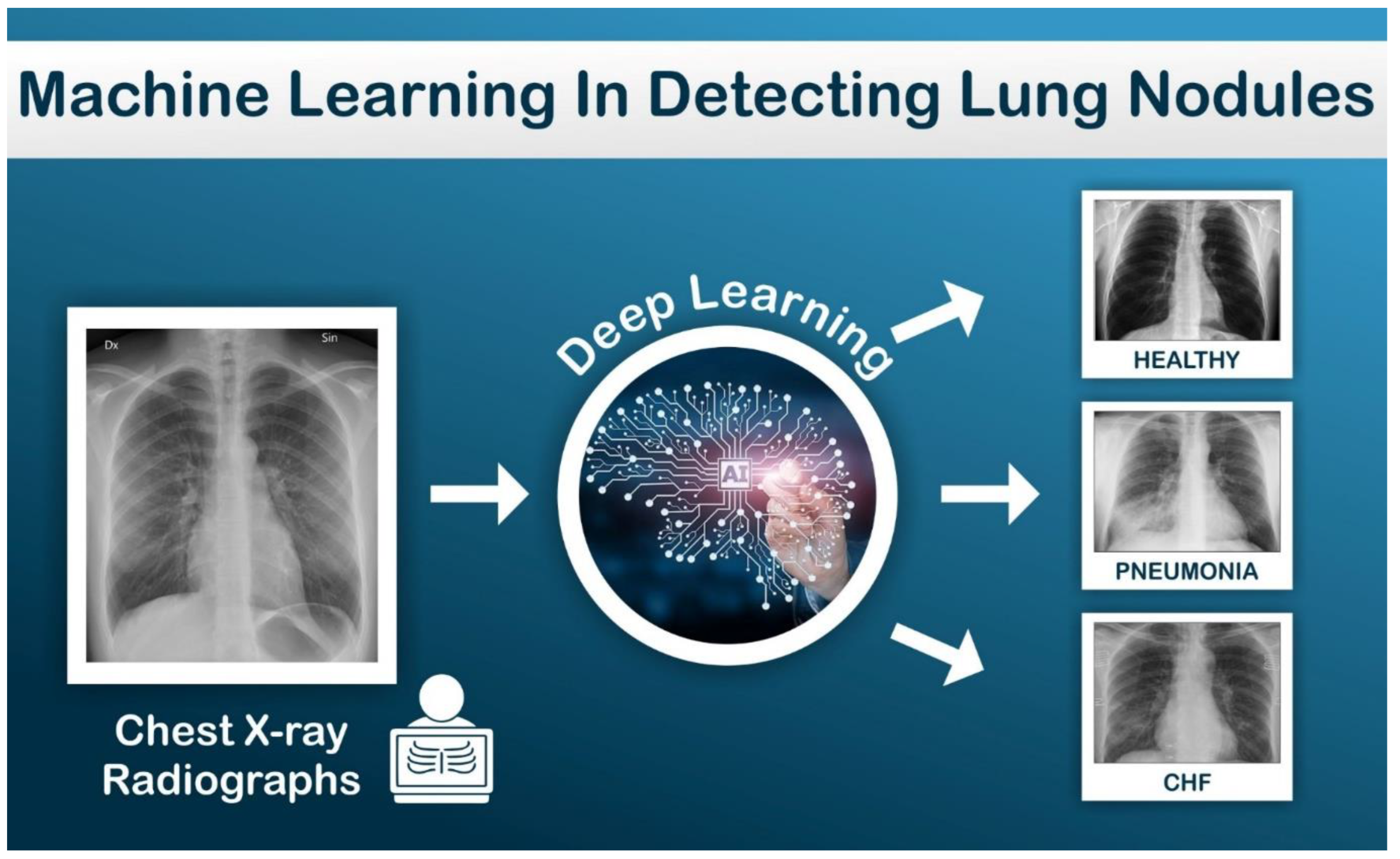

Supervised Learning: Supervised learning trains a model on known input and output data so that it can predict outputs for new data. During training, the model's parameters are adjusted so that its predictions match the known outputs. Example: automated detection of lung nodules and pneumonia on chest X-rays, using parameters learned from previously interpreted images (

Figure 3). This approach could be utilized in echocardiography for tasks such as the automatic classification of echocardiographic images into pathological categories or for predicting outcomes based on echocardiographic data. Over time, these models can make predictions on unseen data, a process that could significantly streamline the interpretation of echocardiographic images and enhance diagnostic accuracy [

4,

5,

6].
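As an illustration of the supervised setting, the following minimal sketch trains a nearest-centroid classifier on labeled examples and then predicts labels for unseen cases. The two features (wall thickness in mm and ejection fraction in %), the labels, and all values are hypothetical and serve only to show the train-then-predict pattern.

```python
def fit_centroids(features, labels):
    """Learn one mean feature vector (centroid) per label from labeled data."""
    sums, counts = {}, {}
    for x, y in zip(features, labels):
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, value in enumerate(x):
            acc[i] += value
        counts[y] = counts.get(y, 0) + 1
    return {y: [s / counts[y] for s in acc] for y, acc in sums.items()}


def predict(centroids, x):
    """Assign the label whose centroid is closest to the new example."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(x, centroid))
    return min(centroids, key=lambda label: squared_distance(centroids[label]))


# Hypothetical training set: (wall thickness in mm, ejection fraction in %).
train_x = [[9, 62], [10, 60], [8, 65], [16, 35], [15, 40], [17, 30]]
train_y = ["normal", "normal", "normal", "abnormal", "abnormal", "abnormal"]

model = fit_centroids(train_x, train_y)
print(predict(model, [11, 58]))
print(predict(model, [15, 38]))
```

Real echocardiographic classifiers typically learn from images with CNNs rather than from two hand-picked measurements, but the workflow of fitting on labeled data and predicting on new data is the same.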

Unsupervised Learning: Unsupervised learning is a type of algorithm that learns patterns from unlabeled data. These algorithms discover hidden patterns or data groupings without the need for human intervention. In contrast to supervised learning, there is no predicted outcome; the only goal is to identify patterns in the data. In the context of echocardiography, unsupervised learning techniques could be employed to discover patterns or groupings within echocardiographic data without predefined labels.

Example: identifying myocarditis in a subgroup of systolic heart failure patients.

This method could be particularly useful for identifying novel phenotypes of cardiac diseases or for clustering patients based on subtle echocardiographic features, potentially uncovering new insights into cardiovascular health and disease mechanisms [

4,

5,

6].
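A minimal sketch of unsupervised grouping is shown below, using k-means clustering on unlabeled data. The two features (a strain value in % and a filling-pressure index) and all numbers are hypothetical; the centroids are initialized from the first k points purely to keep the example deterministic.

```python
def squared_distance(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points, k, iterations=20):
    """Group points into k clusters without using any labels."""
    centroids = [list(p) for p in points[:k]]  # simple deterministic start
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Step 1: assign every point to its nearest centroid.
        assignments = [
            min(range(k), key=lambda j: squared_distance(p, centroids[j]))
            for p in points
        ]
        # Step 2: move each centroid to the mean of its assigned points.
        for j in range(k):
            members = [p for p, a in zip(points, assignments) if a == j]
            if members:
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return assignments, centroids


# Hypothetical unlabeled measurements: (strain in %, filling-pressure index).
patients = [(-20, 8), (-19, 9), (-21, 7), (-12, 15), (-11, 16), (-13, 14)]
clusters, centers = kmeans(patients, k=2)
print(clusters)
print(centers)
```

The algorithm is never told which patients belong together; any clinical meaning of the resulting groups has to be established afterwards.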

Reinforcement Learning: This type of learning differs significantly from supervised learning as it focuses on learning from the consequences of actions rather than from direct input/output pairs [

4,

5,

6]. Reinforcement learning trains models to make decisions or take actions within an environment so as to maximize some notion of cumulative reward. Although direct applications in echocardiography were not identified, reinforcement learning could potentially be applied to optimize the acquisition of echocardiographic images or to automate the adjustment of imaging parameters in real time for optimal image quality.
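The idea of learning from the consequences of actions can be sketched with an epsilon-greedy bandit, one of the simplest reinforcement learning settings. Everything in this example is hypothetical: the gain settings, the simulated image-quality reward, and the noise level are invented for illustration and do not describe any real ultrasound system.

```python
import random

GAIN_SETTINGS = [30, 40, 50, 60, 70]
# Hypothetical "true" image quality for each gain setting (unknown to the agent).
TRUE_QUALITY = {30: 0.2, 40: 0.6, 50: 0.9, 60: 0.5, 70: 0.1}


def simulated_image_quality(gain, rng):
    """Stand-in for a measured quality score: true value plus small noise."""
    return TRUE_QUALITY[gain] + rng.uniform(-0.05, 0.05)


def learn_best_gain(steps=200, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    value = {g: 0.0 for g in GAIN_SETTINGS}  # running reward estimate per action
    pulls = {g: 0 for g in GAIN_SETTINGS}
    for step in range(steps):
        if step < len(GAIN_SETTINGS):
            gain = GAIN_SETTINGS[step]          # try every setting once
        elif rng.random() < epsilon:
            gain = rng.choice(GAIN_SETTINGS)    # explore
        else:
            gain = max(GAIN_SETTINGS, key=lambda g: value[g])  # exploit
        reward = simulated_image_quality(gain, rng)
        pulls[gain] += 1
        value[gain] += (reward - value[gain]) / pulls[gain]  # incremental mean
    best = max(GAIN_SETTINGS, key=lambda g: value[g])
    return best, value


best_gain, estimates = learn_best_gain()
print(best_gain)
```

The agent is never given the correct setting; it discovers it only through the rewards that follow its own choices, which is the key difference from supervised learning.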

While these descriptions provide a theoretical foundation for the integration of ML in echocardiography, specific examples for each learning type remain to be explored further in the literature. The potential applications mentioned offer a glimpse into how each type of ML could enhance echocardiographic analysis and interpretation, highlighting the need for targeted research in this area to fully realize these benefits. These technologies process large datasets of echocardiographic images, learning to identify patterns and anomalies indicative of cardiac diseases. For instance, convolutional neural networks (CNNs), a type of DL, are particularly adept at image recognition tasks and have been extensively used in echocardiography to enhance diagnostic accuracy. The rapid expansion in data storage capacity and computational capabilities is significantly closing the gap between advanced clinical informatics discoveries and their application in cardiovascular clinical practice. Cardiovascular imaging, in particular, offers an extensive amount of data ripe for detailed analysis, yet it demands a high level of expertise for nuanced interpretation, a skill set possessed by only a select few. DL has demonstrated considerable potential, especially in image recognition, computer vision, and video classification. Echocardiographic data, characterized by a low signal-to-noise ratio, present classification challenges. However, the application of sophisticated DL frameworks has the potential to revolutionize this field.

The study by Narula et al. (2016) explored the potential of ML models to enhance cardiac phenotypic recognition by analyzing features of cardiac tissue deformation. The objective was to evaluate the diagnostic capabilities of an ML framework that utilizes speckle-tracking echocardiographic data for the automated differentiation between hypertrophic cardiomyopathy (HCM) and physiological hypertrophy observed in athletes (ATH). The study employed expert-annotated speckle-tracking echocardiographic datasets from 77 ATH and 62 HCM patients to develop an automated system. An ensemble ML model, comprising three different algorithms (support vector machines, random forests, and artificial neural networks), was constructed. A majority voting method, along with K-fold cross-validation, was used for making definitive predictions. Feature selection via an information gain (IG) algorithm indicated that volume was the most effective predictor for distinguishing HCM from ATH (IG = 0.24), followed by mid-left ventricular segmental (IG = 0.134) and average longitudinal strain (IG = 0.131). The ensemble ML model demonstrated enhanced sensitivity and specificity in comparison to traditional measures such as the early-to-late diastolic transmitral velocity ratio (p < 0.01), average early diastolic tissue velocity (e′) (p < 0.01), and strain (p = 0.04). Adjusted analysis in younger HCM patients, compared with ATH with left ventricular wall thickness >13 mm, revealed that the automated model maintained equal sensitivity but increased specificity relative to the traditional measures [

11].
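Two of the techniques mentioned in this study, majority voting across several classifiers and feature ranking by information gain, can be sketched generically as follows. This is not the authors' implementation; the threshold-based split, the toy feature values, and the example votes are assumptions made for illustration.

```python
import math
from collections import Counter


def majority_vote(predictions):
    """Return the label predicted by most of the individual classifiers."""
    return Counter(predictions).most_common(1)[0][0]


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total)
                for n in Counter(labels).values())


def information_gain(feature_values, labels, threshold):
    """Entropy reduction obtained by splitting the labels at a feature threshold."""
    left = [y for x, y in zip(feature_values, labels) if x <= threshold]
    right = [y for x, y in zip(feature_values, labels) if x > threshold]
    weighted = sum(len(part) / len(labels) * entropy(part)
                   for part in (left, right) if part)
    return entropy(labels) - weighted


# Hypothetical votes from three classifiers for one patient.
print(majority_vote(["HCM", "ATH", "HCM"]))

# Hypothetical feature that separates the two classes perfectly at 2.5.
labels = ["ATH", "ATH", "HCM", "HCM"]
print(information_gain([1, 2, 3, 4], labels, threshold=2.5))
```

A feature with higher information gain separates the classes more cleanly, which is the sense in which volume ranked above the strain measures in the study.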

The findings suggest that ML algorithms can aid in distinguishing physiological from pathological patterns of hypertrophic remodeling. This research represents a step towards developing a real-time, machine-learning-based system for the automated interpretation of echocardiographic images, which could potentially assist novice readers with limited experience. These technologies could enable clinicians and researchers to automate tasks traditionally performed by humans and facilitate the extraction of valuable clinical information from the vast volumes of collected imaging data. The vision extends to the development of contactless echocardiographic examinations, a particularly relevant advancement in the era of social distancing and public health uncertainties brought about by the pandemic. This review delves into the cutting-edge DL techniques and architectures pertinent to image and video classification, and it explores future research trajectories in echocardiography within the contemporary context [

11].

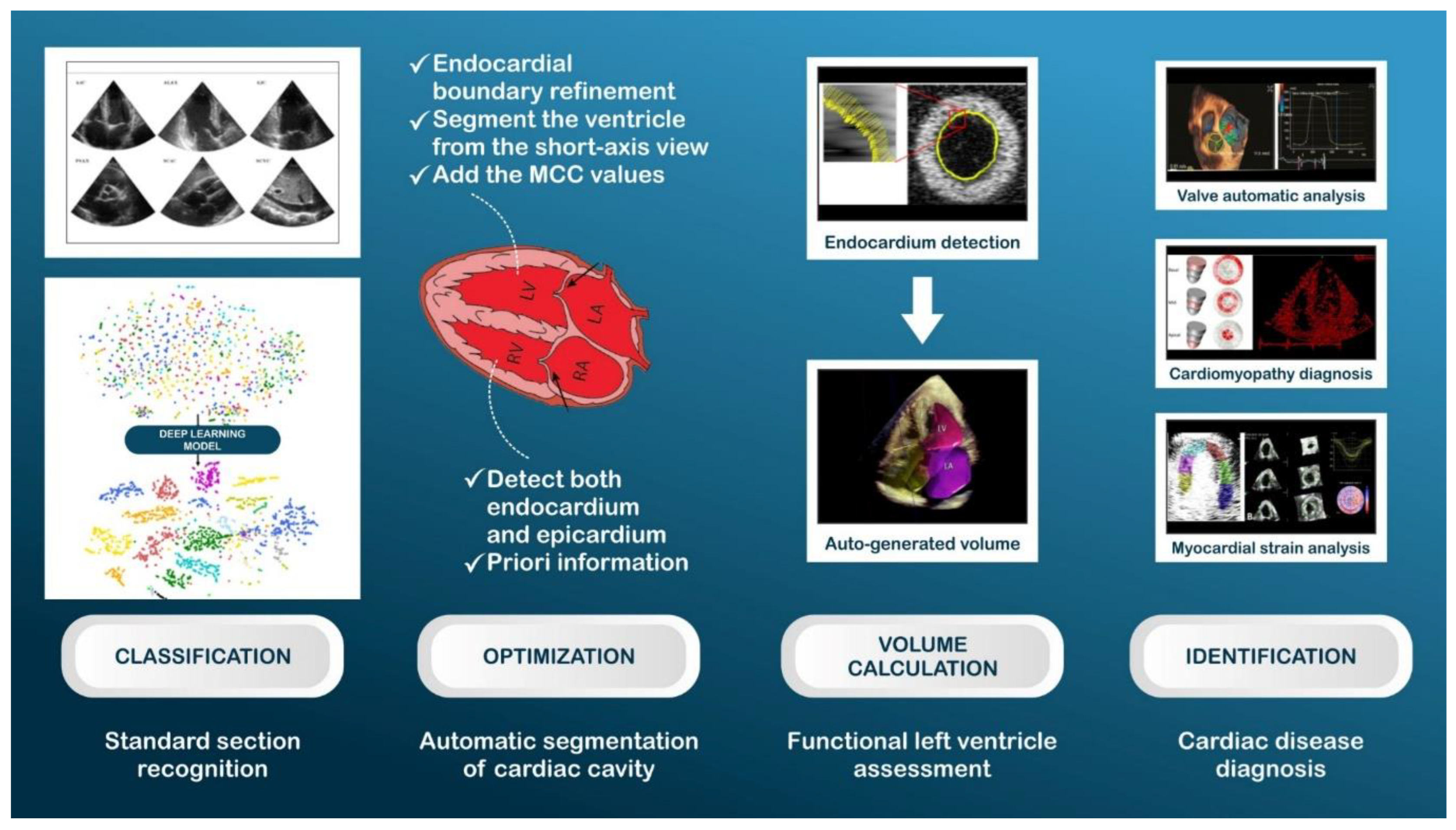

AI significantly improves image acquisition in echocardiography. AI algorithms can assist sonographers in acquiring standard echocardiographic views, thus reducing variability and ensuring consistency in image quality. Automated border detection and image segmentation techniques also facilitate more accurate and reproducible measurements of cardiac structures and functions. A recent study explored the development of an AI system to automate stress echocardiography analysis, aiming to enhance clinician interpretation in diagnosing coronary artery disease, a leading global cause of mortality and morbidity. Employing an automated image processing pipeline, the study extracted novel geometric and kinematic features from a large, multicenter dataset and trained an ML classifier to identify severe coronary artery disease. The results showed high classification accuracy, with significant improvements in inter-reader agreement and clinician confidence when AI classifications were used, underscoring the potential of AI in refining diagnostic processes in stress echocardiography [

12]. Echocardiography utilizes ultrasound technology to produce high-resolution images of the heart and surrounding structures, making it a widely used imaging technique in cardiovascular medicine (

Figure 4). By employing a CNN on a large new dataset, one study demonstrated that deep learning can be applied to echocardiography to identify local cardiac structures, estimate cardiac function, and predict systemic phenotypes that modify cardiovascular risk but are not easily identifiable by human interpretation (Figure 4). The deep learning model, EchoNet, accurately identified the presence of pacemaker leads (AUC = 0.89), enlarged left atrium (AUC = 0.86), and left ventricular hypertrophy (AUC = 0.75), and estimated left ventricular end-systolic and end-diastolic volumes (R² = 0.74 and R² = 0.70) and ejection fraction (R² = 0.50). It also predicted systemic phenotypes such as age (R² = 0.46), sex (AUC = 0.88), weight (R² = 0.56), and height (R² = 0.33) [

12].
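The two metrics used to report these results, the area under the ROC curve (AUC) for yes/no findings and the coefficient of determination (R²) for continuous estimates, can be computed as in the sketch below. The scores and labels are hypothetical toy values, not data from the study.

```python
def roc_auc(scores, labels):
    """AUC as the probability that a positive case outranks a negative one."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives for n in negatives
    )
    return wins / (len(positives) * len(negatives))


def r_squared(actual, predicted):
    """Fraction of the variance in the actual values explained by the predictions."""
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


# Hypothetical model scores for four studies (1 = finding present).
print(roc_auc([0.9, 0.2, 0.8, 0.1], [1, 1, 0, 0]))
# Hypothetical volume estimates compared with reference values.
print(r_squared([1.0, 2.0, 3.0], [2.0, 2.0, 2.0]))
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation, while an R² of 0 means the predictions explain no more variance than always predicting the mean.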

Automatic semantic segmentation in 2D echocardiography is crucial for assessing various cardiac functions and improving the diagnosis of cardiac diseases. However, two main challenges have persisted: the lack of an effective feature enhancement approach for capturing contextual features and the lack of label coherence in category prediction for individual pixels. Liu et al. (2021) proposed a deep learning model, the Deep Pyramid Local Attention Neural Network (PLANet), to improve the segmentation performance of automatic methods in 2D echocardiography. Specifically, the authors introduced a pyramid local attention module to enhance features by capturing supporting information within compact and sparse neighboring contexts. They also developed a label coherence learning mechanism that promotes prediction consistency for pixels and their neighbors by guiding the learning with explicit supervision signals. The proposed PLANet was extensively evaluated on the Cardiac Acquisitions for Multi-Structure Ultrasound Segmentation (CAMUS) dataset and the sub-EchoNet-Dynamic dataset, which are two large-scale, public 2D echocardiography datasets. The experimental results showed that PLANet outperformed traditional and deep learning-based segmentation methods on geometrical and clinical metrics [

13]. Moreover, PLANet can complete the segmentation of heart structures in 2D echocardiography in real-time, indicating its potential to assist cardiologists accurately and efficiently. Interpretation analysis confirms that EchoNet focuses on key cardiac structures when performing tasks explainable to humans and highlights regions of interest when predicting systemic phenotypes that are challenging for human interpretation. ML applied to echocardiography images can streamline repetitive tasks in the clinical workflow, provide preliminary interpretations in regions with a shortage of qualified cardiologists, and predict phenotypes that are difficult for human evaluation.

AI-driven systems can classify echocardiographic views, such as the parasternal long-axis or four-chamber views, with high accuracy. This classification is crucial for standardizing echocardiographic examinations and ensuring comprehensive cardiac evaluation. These systems use ML algorithms trained on large datasets to recognize and categorize different echocardiographic views.

One study explored the potential of left ventricular pressure-volume (LV-PV) loops, derived non-invasively from echocardiography, as a comprehensive tool for classifying heart failure (HF) in greater detail. LV-PV loops are crucial for hemodynamic evaluation in both healthy and diseased cardiovascular systems. The authors employed a time-varying elastance model to establish non-invasive PV loops, extracting left ventricular volume curves from apical four-chamber views in echocardiographic videos. Subsequently, 16 cardiac structure and function parameters were automatically derived from these PV loops. Using six ML methods, the authors categorized four distinct HF classes and identified the most effective classifier for feature ranking.

Handheld ultrasound devices have been developed to facilitate imaging in new clinical settings, but quantitative assessment has been challenging. In echocardiography, quantifying the left ventricular ejection fraction (LVEF) typically involves identifying endocardial boundaries manually or through automation, followed by model-based calculation of end-systolic and end-diastolic LV volumes. Recent advancements in AI have led to computer algorithms that can almost fully automate the detection of endocardial boundaries and the measurement of LV volumes and function. However, boundary identification remains error-prone, limiting accuracy in certain patients. One group therefore hypothesized that a fully automated ML algorithm could bypass border detection and estimate the degree of ventricular contraction directly, much as a human expert would after training on tens of thousands of images.
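For reference, the conventional volume-based LVEF calculation mentioned above reduces to a single formula once the end-diastolic volume (EDV) and end-systolic volume (ESV) are known. The sketch below assumes the volumes have already been measured; the function name and the example values are illustrative.

```python
def ejection_fraction(edv_ml, esv_ml):
    """LVEF in percent: the share of the end-diastolic volume ejected per beat."""
    if edv_ml <= 0 or not 0 <= esv_ml <= edv_ml:
        raise ValueError("expected 0 <= ESV <= EDV and EDV > 0")
    return 100.0 * (edv_ml - esv_ml) / edv_ml


# Hypothetical volumes in millilitres.
print(ejection_fraction(120.0, 50.0))
print(ejection_fraction(100.0, 65.0))
```

Because both volumes depend on where the endocardial border is drawn, any boundary error propagates directly into the LVEF, which is the motivation for approaches that avoid explicit border detection.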

A ML algorithm was developed and trained to automatically estimate LVEF using a database of over 50,000 echocardiographic studies, including multiple apical 2- and 4-chamber views (AutoEF, BayLabs). Testing was conducted on an independent group of 99 patients, comparing their automated EF values with reference values obtained by averaging measurements from three experts using a conventional volume-based technique. Inter-technique agreement was assessed using linear regression and Bland-Altman analysis, while consistency was evaluated by the mean absolute deviation among automated estimates from different combinations of apical views. The sensitivity and specificity of detecting EF ≤35% were also calculated and compared side-by-side against the same reference standard to those obtained from conventional EF measurements by clinical readers. Automated estimation of LVEF was feasible in all 99 patients. AutoEF values demonstrated high consistency (mean absolute deviation = 2.9%) and excellent agreement with the reference values: r = 0.95, bias = 1.0%, limits of agreement = ±11.8%, with a sensitivity of 0.90 and specificity of 0.92 for detecting EF ≤35%. This was comparable to clinicians' measurements: r = 0.94, bias = 1.4%, limits of agreement = ±13.4%, sensitivity 0.93, specificity 0.87 [

14].
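The agreement statistics reported here (bias and limits of agreement) come from Bland-Altman analysis, which can be sketched as follows. The paired EF values are hypothetical, and the use of 1.96 standard deviations for the limits is the conventional choice rather than a detail confirmed for this particular study.

```python
import statistics


def bland_altman(method_a, method_b):
    """Return bias and 95% limits of agreement between two paired measurements."""
    differences = [a - b for a, b in zip(method_a, method_b)]
    bias = statistics.mean(differences)
    sd = statistics.stdev(differences)  # sample standard deviation
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


# Hypothetical paired EF values (%): automated estimate vs expert reference.
automated = [55, 40, 62, 30, 48]
reference = [54, 42, 60, 31, 47]

bias, lower, upper = bland_altman(automated, reference)
print(bias, lower, upper)
```

The bias shows whether one method reads systematically higher than the other, while the width of the limits shows how far individual measurements can be expected to disagree.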

In a study by Aldaas et al. (2019) conducted at the University of California, San Diego, 70 patients scheduled for echocardiography were enrolled. Each patient underwent a standard echocardiography examination by an experienced sonographer and handheld ultrasound examinations by both a novice and an experienced sonographer. The results showed a positive correlation between LVEFs obtained from the standard transthoracic echocardiogram and the handheld device in the hands of both novice and experienced sonographers. The sensitivity and specificity for detecting reduced LVEF (<50%) were 69% and 96% for the novice, and 64% and 98% for the experienced sonographer. For detecting severely reduced LVEF (<35%), the sensitivity and specificity were 67% and 97% for the novice, and 56% and 93% for the experienced sonographer. However, when limited to recordings of at least adequate quality, the sensitivity and specificity improved to 100% for both the novice and the experienced sonographer. These data demonstrate that the handheld ultrasound device paired with novel software can provide a clinically useful estimate of LVEF when the images are of adequate quality, and that novice examiners can obtain results similar to those of experienced sonographers [

15]. The study by Liu et al. (2023) examined the varying contributions of the parameters derived from LV-PV loops to HF classification. The results were promising, with PV loops successfully acquired in 1076 cases. Using LVEF alone for HF classification yielded an accuracy of 91.67%. Incorporating additional parameters from ML-derived LV-PV loops increased this accuracy to 96.57%, an improvement of about 4.9 percentage points. Notably, the additional parameters significantly enhanced the classification of non-HF controls and heart failure with preserved ejection fraction (HFpEF). In conclusion, the study demonstrates the efficacy of classifying HF using machine-derived, non-invasive LV-PV loops, which holds significant promise for improving HF diagnosis and management in clinical settings. Furthermore, the findings highlight the importance of ventriculo-arterial (VA) coupling and ventricular efficiency as key factors in the ML-based HF classification model, alongside LVEF [

16].
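The sensitivity and specificity figures quoted above for detecting reduced LVEF with a handheld device follow from a simple comparison against the reference examination. The sketch below uses hypothetical paired LVEF values and the <50% cutoff from the text; it is not data from either study.

```python
def sensitivity_specificity(reference_lvef, test_lvef, cutoff):
    """Compare a test method with the reference for detecting LVEF below a cutoff."""
    tp = fn = tn = fp = 0
    for ref, test in zip(reference_lvef, test_lvef):
        reference_positive = ref < cutoff
        test_positive = test < cutoff
        if reference_positive and test_positive:
            tp += 1
        elif reference_positive:
            fn += 1
        elif test_positive:
            fp += 1
        else:
            tn += 1
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical paired LVEF values (%): standard echo vs handheld estimate.
standard = [30, 45, 55, 60, 48, 65]
handheld = [32, 52, 58, 49, 44, 66]

sens, spec = sensitivity_specificity(standard, handheld, cutoff=50)
print(sens, spec)
```

Sensitivity is the share of truly reduced LVEFs that the test method flags, and specificity is the share of truly normal LVEFs that it correctly leaves unflagged.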

AI has been developed for echocardiography, but until recently it had not been tested under blinding and randomization. In one study, the authors conducted a blinded, randomized non-inferiority clinical trial (ClinicalTrials.gov ID: NCT05140642; no outside funding) to compare AI versus sonographer initial assessment of LVEF and evaluate the impact of AI on the interpretation workflow [

17]. The primary endpoint was the change in LVEF between initial AI or sonographer assessment and final cardiologist assessment, assessed by the proportion of studies with substantial change (more than 5% change). Out of 3,769 echocardiographic studies screened, 274 were excluded due to poor image quality. The proportion of studies with substantial changes was 16.8% in the AI group and 27.2% in the sonographer group (difference of -10.4%, 95% confidence interval: -13.2% to -7.7%, P < 0.001 for non-inferiority, P < 0.001 for superiority). The mean absolute difference between final cardiologist assessment and independent previous cardiologist assessment was 6.29% in the AI group and 7.23% in the sonographer group (difference of -0.96%, 95% confidence interval: -1.34% to -0.54%, P < 0.001 for superiority). The AI-guided workflow saved time for both sonographers and cardiologists, and cardiologists were unable to distinguish between initial assessments by AI versus the sonographer (blinding index of 0.088). For patients undergoing echocardiographic quantification of cardiac function, initial assessment of LVEF by AI was non-inferior to assessment by sonographers [

17].
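The comparison of the two arms rests on a difference between proportions with a confidence interval. A minimal sketch of that calculation, using a simple Wald interval, is given below. The group sizes are hypothetical (the text does not report them), and the trial's own statistical method may differ; only the 16.8% and 27.2% rates are taken from the text.

```python
import math


def proportion_difference_ci(events_a, n_a, events_b, n_b, z=1.96):
    """Difference of two proportions (a minus b) with a Wald confidence interval."""
    p_a = events_a / n_a
    p_b = events_b / n_b
    difference = p_a - p_b
    standard_error = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    return difference, difference - z * standard_error, difference + z * standard_error


# Hypothetical arms of 1000 studies each, with 16.8% and 27.2% substantial changes.
diff, ci_low, ci_high = proportion_difference_ci(168, 1000, 272, 1000)
print(diff, ci_low, ci_high)
```

In a non-inferiority design, the upper bound of this interval is compared with a prespecified margin; an interval lying entirely below zero additionally supports superiority.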

In conclusion, the ML algorithm for volume-independent LVEF estimation is highly feasible and similar in accuracy to conventional volume-based measurements when compared with reference values provided by an expert panel. Recently, software algorithms have been designed to provide rapid measurements of LVEF with minimal operator input.

Segmentation of cardiac chambers is a vital step in quantifying cardiac structure and function. AI algorithms, particularly those based on DL, can delineate cardiac chambers from echocardiographic images, enabling automated quantification of parameters like ventricular volumes and ejection fraction. These algorithms are trained to differentiate between normal and pathological cardiac structures. Beyond echocardiography, a recent study developed a fully automated model for whole heart segmentation (encompassing all four cardiac chambers and great vessels) in Computed Tomography Pulmonary Angiography (CTPA), a crucial diagnostic tool for suspected pulmonary vascular diseases such as pulmonary hypertension and pulmonary embolism. Traditional CTPA evaluations rely on visual assessments and manual measurements, which suffer from poor reproducibility. The authors created a nine-structure semantic segmentation model of the heart and great vessels, developed on a cohort of 200 patients (split 80/20/100 for training, validation, and internal testing) and further tested on 20 external patients. The reference segmentations were performed by expert cardiothoracic radiologists, and a failure analysis was conducted in 1,333 patients with diverse pulmonary vascular conditions. The segmentation process utilized deep learning via a CNN, and the resulting volumetric imaging biomarkers were correlated with invasive hemodynamics in the test cohort. Dice Similarity Coefficients (DSC) ranged from 0.58 to 0.93 across both internal and external test cohorts. The lowest DSC values were observed for the left and right ventricular myocardium, areas in which significant interobserver variability was also noted. Notably, the right ventricular myocardial volume showed a strong correlation with mean pulmonary artery pressure. 
The deep learning-derived volumetric biomarkers demonstrated higher or equivalent correlation with invasive hemodynamics compared to manual segmentations, indicating their potential utility in cardiac assessment and prediction of invasive hemodynamics in CTPA. The model also showed excellent generalizability across different vendors and hospitals, with similar performance in the external test cohort and low failure rates in mixed pulmonary vascular disease patients. This study successfully presents a fully automated segmentation approach for CTPA, achieving high accuracy and low failure rates. The deep learning volumetric biomarkers derived from this model hold promise for enhancing cardiac assessments and predicting invasive hemodynamics in pulmonary vascular diseases [

18].
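The Dice Similarity Coefficient used to score these segmentations measures the overlap between a predicted mask and a reference mask. A minimal sketch on small binary masks follows; the masks are hypothetical toy examples.

```python
def dice_coefficient(mask_a, mask_b):
    """Dice = 2 * |A and B| / (|A| + |B|) for two binary masks of equal shape."""
    pixels_a = {(r, c) for r, row in enumerate(mask_a)
                for c, value in enumerate(row) if value}
    pixels_b = {(r, c) for r, row in enumerate(mask_b)
                for c, value in enumerate(row) if value}
    if not pixels_a and not pixels_b:
        return 1.0  # two empty masks agree perfectly
    return 2 * len(pixels_a & pixels_b) / (len(pixels_a) + len(pixels_b))


# Hypothetical 3x3 masks: model prediction vs expert annotation.
predicted = [[0, 1, 1],
             [0, 1, 1],
             [0, 0, 0]]
annotated = [[0, 1, 1],
             [0, 1, 0],
             [0, 0, 0]]

print(dice_coefficient(predicted, annotated))
```

A value of 1 indicates identical masks and 0 indicates no overlap. Thin structures such as the myocardium tend to score lower because a shift of a few pixels removes a large share of the overlap.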

AI also aids in the functional assessment of the heart. By analyzing echocardiographic images, AI can detect subtle changes in cardiac function, which might be overlooked by human eyes. This is particularly useful in the early detection of conditions like heart failure or cardiomyopathies. AI algorithms can also predict patient outcomes based on echocardiographic parameters, offering valuable insights for patient management.

Precise evaluation of cardiac function is pivotal for diagnosing cardiovascular diseases, screening for cardiotoxic effects, and guiding clinical management in critically ill patients. Traditional human assessments, despite extensive training, are limited to sampling a few cardiac cycles and exhibit significant variability among observers. Addressing this limitation, a recent study presented EchoNet-Dynamic, a video-based deep learning algorithm that outperforms human experts in critical tasks such as segmenting the left ventricle, estimating ejection fraction, and diagnosing cardiomyopathy. The model, trained on echocardiogram videos, achieves high accuracy in left ventricle segmentation (Dice similarity coefficient of 0.92), predicts ejection fraction with a mean absolute error of 4.1%, and reliably detects heart failure with reduced ejection fraction (area under the curve of 0.97). Furthermore, in an external dataset from a different healthcare system, EchoNet-Dynamic maintained its high performance, predicting ejection fraction with a mean absolute error of 6.0% and classifying heart failure with reduced ejection fraction with an area under the curve of 0.96. A prospective evaluation, including repeated human measurements, confirmed that the model's variance is comparable to, or lower than, that of human experts. By analyzing multiple cardiac cycles, the model can swiftly detect subtle variations in ejection fraction, offering a more consistent evaluation than human analysis and establishing a foundation for real-time, precise cardiovascular disease diagnosis. To foster further advancements in this field, the authors have also made a dataset of 10,030 annotated echocardiogram videos publicly available [

19]. AI has been increasingly integrated into echocardiography, yet its effectiveness had not been thoroughly evaluated under conditions of blinding and randomization. To address this gap, one study conducted a blinded, randomized non-inferiority clinical trial (ClinicalTrials.gov ID: NCT05140642, without external funding) to compare AI with traditional sonographer assessments in determining LVEF. The primary endpoint was the change in LVEF between the initial AI or sonographer assessment and the final evaluation by a cardiologist, measured as the proportion of studies with a substantial change (defined as more than a 5% shift). Out of 3,769 echocardiographic studies screened, 274 were excluded due to suboptimal image quality. The trial found that substantial changes in LVEF assessment occurred in 16.8% of cases in the AI group, compared to 27.2% in the sonographer group, a difference of -10.4% (95% confidence interval: -13.2% to -7.7%, P < 0.001 for non-inferiority, P < 0.001 for superiority). Furthermore, the mean absolute difference between the final cardiologist assessment and an independent prior cardiologist assessment was lower in the AI group (6.29%) than in the sonographer group (7.23%), with a difference of -0.96% (95% confidence interval: -1.34% to -0.54%, P < 0.001 for superiority). This AI-guided approach not only saved time for both sonographers and cardiologists but also maintained the integrity of blinding, as cardiologists could not reliably distinguish between initial assessments made by AI or sonographers (blinding index of 0.088). The study concluded that, for patients undergoing echocardiographic evaluation of cardiac function, AI's initial assessment of LVEF is non-inferior to that performed by a sonographer [

20].
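The two outcome measures of this trial, the proportion of studies with a substantial change and the mean absolute difference between assessments, can be computed as in the sketch below. The paired LVEF values are hypothetical; only the 5% threshold comes from the text.

```python
def substantial_change_rate(initial_lvef, final_lvef, threshold=5.0):
    """Share of studies whose LVEF changed by more than the threshold."""
    changed = sum(
        1 for first, last in zip(initial_lvef, final_lvef)
        if abs(last - first) > threshold
    )
    return changed / len(initial_lvef)


def mean_absolute_difference(values_a, values_b):
    """Average size of the disagreement between two sets of paired values."""
    return sum(abs(a - b) for a, b in zip(values_a, values_b)) / len(values_a)


# Hypothetical LVEF values (%): initial assessment vs final cardiologist read.
initial = [55, 40, 60, 35]
final = [57, 48, 60, 29]

print(substantial_change_rate(initial, final))
print(mean_absolute_difference(initial, final))
```

A lower substantial-change rate means the cardiologist had to revise the initial assessment less often, which is how the trial operationalized the quality of the first read.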

Strain imaging in echocardiography, which assesses myocardial deformation, benefits significantly from AI. AI algorithms enhance the accuracy and reproducibility of strain measurements, which are important in the prognosis and management of various cardiac conditions. Recently, one study tested a novel AI method that automatically measures global longitudinal strain (GLS) using deep learning. It included 200 patients with varying levels of left ventricular function. The AI successfully identified standard apical views, timed cardiac events, and measured GLS with high accuracy and speed, showing potential to facilitate GLS implementation in clinical practice [

20]. Another study aims to validate the performance of an AI-based tool in estimating LVEF and left ventricular GLS from echocardiographic scans. Conducted at Hippokration General Hospital in Greece, it involves two phases with 120 participants. The study will compare the AI tool's accuracy and time efficiency against cardiologists of varying experience levels [

21].
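For context, longitudinal strain expresses the relative change in myocardial length between end-diastole and end-systole. The sketch below applies the standard definition to hypothetical contour lengths; how an AI tool traces and tracks the myocardium to obtain these lengths is outside the scope of this example.

```python
def longitudinal_strain(length_end_diastole, length_end_systole):
    """Strain in percent: relative length change from end-diastole to end-systole."""
    if length_end_diastole <= 0:
        raise ValueError("end-diastolic length must be positive")
    return 100.0 * (length_end_systole - length_end_diastole) / length_end_diastole


# Hypothetical myocardial contour lengths in millimetres.
print(longitudinal_strain(100.0, 80.0))
```

Because the myocardium shortens during systole, the value is negative, and values closer to zero indicate reduced deformation.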

Doppler echocardiography, essential for evaluating blood flow and pressures within the heart, has also been augmented by AI. AI algorithms can automatically measure Doppler parameters, thus expediting the diagnostic process and enhancing precision. Yang et al. (2022) developed a cutting-edge DL framework to analyze echocardiographic videos autonomously for identifying valvular heart diseases (VHDs) [

22]. Despite the advancements in DL for echocardiogram interpretation, its application in analyzing color Doppler videos for diagnosing VHDs remained unexplored. The research team meticulously crafted a three-stage DL framework that not only classifies echocardiographic views but also detects the presence of VHDs such as mitral stenosis (MS), mitral regurgitation (MR), aortic stenosis (AS), and aortic regurgitation (AR), and quantifies key severity metrics. The algorithm underwent extensive training, validation, and testing using studies from five hospitals, supplemented by a real-world dataset of 1,374 consecutive echocardiograms. The results were impressive, showing high disease classification accuracy and limits of agreement (LOA) between the DL algorithm and physician estimates within acceptable ranges compared to two experienced physicians across multiple metrics. This innovative DL algorithm holds great promise in automating and streamlining the clinical workflow for echocardiographic screening of VHDs and accurately quantifying disease severity, marking a significant advancement in the integration of AI in echocardiographic diagnostics [

22].

While AI technologies have shown promising results in echocardiography, challenges remain in terms of algorithm standardization, data privacy, and the need for large, diverse datasets for training. Future research should focus on addressing these challenges and exploring the integration of AI with 3D echocardiography and other advanced imaging techniques. AI technologies, particularly ML and DL, are reshaping echocardiography, enhancing image acquisition, standard view classification, structural quantification, and functional assessment. As AI continues to evolve, its integration into echocardiography promises to significantly improve diagnostic accuracy, efficiency, and patient care in cardiology.

3. Current Applications of AI in Echocardiography

The integration of AI in echocardiography has been extensively validated through various case studies and clinical trials, illuminating its transformative impact on cardiovascular diagnostics. Asch et al. (2019) [

14] innovatively crafted a computer vision model adept at estimating LVEF, skillfully mirroring the expertise of specialists across a vast dataset of 50,000 studies. Their findings revealed a noteworthy correlation and uniformity between the automated measurements and traditional human assessments [

23]. Complementing this, Reynaud et al. (2021) [

23] introduced a transformative model based on the self-attention mechanism, proficient in analyzing echocardiographic videos regardless of length, pinpointing end-diastole (ED) and end-systole (ES) with precision, and accurately determining LVEF.
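
Once end-diastolic and end-systolic frames are known, LVEF follows from the standard definition, LVEF = (EDV − ESV) / EDV × 100. The sketch below assumes a per-frame LV volume curve is already available (for example from a segmentation model) and simply takes the largest and smallest volumes as ED and ES. It illustrates the arithmetic only and is not the method of Asch et al. or Reynaud et al.; the function name and the volume values are hypothetical.

```python
def ef_from_volume_curve(volumes_ml):
    """Return (ed_frame, es_frame, lvef_percent) from per-frame LV volumes in mL."""
    if len(volumes_ml) < 2:
        raise ValueError("need at least two frames")
    frames = range(len(volumes_ml))
    # End-diastole: frame with the largest volume; end-systole: the smallest.
    ed_frame = max(frames, key=lambda i: volumes_ml[i])
    es_frame = min(frames, key=lambda i: volumes_ml[i])
    edv, esv = volumes_ml[ed_frame], volumes_ml[es_frame]
    if edv <= 0:
        raise ValueError("end-diastolic volume must be positive")
    return ed_frame, es_frame, 100.0 * (edv - esv) / edv


# Hypothetical volume curve over one cardiac cycle (illustration only).
curve = [118.0, 120.0, 101.0, 74.0, 52.0, 48.0, 63.0, 95.0, 115.0]
ed, es, lvef = ef_from_volume_curve(curve)
print(f"ED frame={ed}, ES frame={es}, LVEF={lvef:.1f}%")
```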

Zhang et al. (2018) [

24] unveiled the inaugural fully automated multitasking echocardiogram interpretation system, engineered to streamline clinical diagnosis and treatment. This groundbreaking model successfully categorized 23 distinct views, meticulously segmented cardiac structures, evaluated LVEF, and diagnosed three specific cardiac conditions: hypertrophic cardiomyopathy, cardiac amyloid, and pulmonary arterial hypertension. Building upon this, in 2022, Tromp et al. [

25] developed a comprehensive automated AI workflow. This system, adept at classifying, segmenting, and interpreting both two-dimensional and Doppler modalities, drew upon extensive international and interracial datasets, exhibiting remarkable efficacy in assessing LVEF with an area under the receiver operating characteristic curve (AUC) ranging from 0.90 to 0.92.
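
Because AUC values recur throughout this section, it may help to recall what the number measures: the probability that a randomly chosen positive case receives a higher model score than a randomly chosen negative case, with ties counted as one half. The sketch below computes this directly by pairwise comparison. It is a generic illustration, not the evaluation code of any cited study, and the scores are hypothetical.

```python
def auc(scores_pos, scores_neg):
    """Area under the ROC curve via pairwise comparison of scores."""
    if not scores_pos or not scores_neg:
        raise ValueError("both classes need at least one score")
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0   # positive case ranked above the negative case
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(scores_pos) * len(scores_neg))


# Hypothetical model scores for diseased and non-diseased studies.
diseased = [0.9, 0.8, 0.6, 0.4]
healthy = [0.7, 0.5, 0.3, 0.2, 0.1]
print(f"AUC={auc(diseased, healthy):.2f}")
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation, which is the scale on which the values reported in this section should be read.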

These studies shed light on the quantification of LVEF in routine medical imaging practices. However, Point-of-Care Ultrasonography (POCUS), prevalent in emergency and critical care scenarios, takes a slightly different route. POCUS, defined as the acquisition, interpretation, and immediate clinical application of ultrasound imaging conducted bedside by clinicians instead of radiologists or cardiologists [

26], facilitates direct patient-clinician interactions, thereby enhancing the accuracy of diagnoses and treatments. Its ease of portability and operation renders it invaluable for prompt diagnosis in emergency and critically ill patients. Nevertheless, the effective deployment of POCUS hinges on clinicians' proficiency in utilizing the device and interpreting the data, necessitating standardized training. To bridge this gap, the focus has shifted towards AI. In 2020, the FDA greenlighted two pioneering products from Caption Health: Caption Guidance and Caption Interpretation. Caption Guidance demonstrated its capability to efficiently guide novices lacking ultrasonographic experience to capture diagnostic views after brief training [

27], while Caption Interpretation aided doctors in the automatic measurement of LVEF [

14]. These products were then amalgamated to forge a novel AI algorithm within the POCUS framework [

26], enabling seamless image acquisition, quality assessment, and fully automated LVEF measurements, thereby assisting doctors in swiftly and accurately collecting and analyzing images.

Collectively, these advancements underscore the clinical value of AI algorithms. They not only improve the precision of LVEF assessments but also simplify and streamline the diagnostic and treatment processes, significantly cutting down on time and labor costs in medical imaging.

Comparative analyses have consistently shown that AI can match or surpass traditional echocardiographic methods.

Knackstedt et al. (2015) conducted a comprehensive multicenter study to evaluate the efficacy of an automated endocardial border detection method using a vendor-independent software package (Auto LV, TomTec-Arena 1.2, TomTec Imaging Systems, Unterschleissheim, Germany) [

28]. This software employed a ML algorithm specifically designed for image analysis. The automated technique demonstrated notable reproducibility and was found to be comparable to manual tracings in determining 2D ejection fraction, LV volumes, and global longitudinal strain [

28]. Additionally, this correlation remained consistent when image quality was rated as good or moderate. However, a slight decrease in correlation was observed with poorer image quality. The study also revealed that the results of automated global longitudinal strain maintained good agreement and correlation [

28].

Expanding the scope beyond global longitudinal strain (GLS) and LV volumes, a study by Zhang et al. (2018) showcased the potential of convolutional neural networks in precisely identifying echocardiographic views and providing specific measurements such as LV mass and wall thickness [

24]. In their research, a convolutional neural network model was specifically developed for the classification of echocardiographic views. Utilizing data from the segmentation model, chamber dimensions were accurately calculated in alignment with established echocardiographic guidelines [

24]. This study highlights the advanced capabilities of AI in enhancing the precision and efficiency of echocardiographic analysis. The superior efficiency of AI in processing and interpreting vast datasets has been highlighted as a significant advantage over conventional echocardiographic methods, which are often time-consuming and subject to inter-operator variability.

AI algorithms have shown strong diagnostic performance in echocardiography, and their integration into echocardiographic diagnostics has led to predictive models with high reported accuracy. The 2021 ESC guidelines (1) categorize heart failure into three distinct types: heart failure with reduced ejection fraction (LVEF ≤ 40%), heart failure with mildly reduced ejection fraction (LVEF 41–49%), and heart failure with preserved ejection fraction (LVEF ≥ 50%). These classifications reflect varying degrees of left ventricular systolic and diastolic dysfunction. Despite these distinctions, a sensitive prediction model based on echocardiographic indicators that can effectively stage heart failure in patients with analogous characteristics or forecast major adverse cardiac events has been lacking. Addressing this gap, Tokodi et al. (2020) [

28] employed topological data analysis (TDA) to amalgamate echocardiographic parameters of left ventricular structures and functions into a cohesive patient similarity network. This TDA network adeptly represents geometric data structures, mapping similarities across multiple echocardiographic parameters, preserving essential data features, and capturing the topological intricacies of high-dimensional data. The findings were significant: the TDA network adeptly segregated patients into four distinct regions based on nine echocardiographic parameters. It exhibited commendable performance in dynamically evaluating disease progression and predicting major adverse cardiac events, with cardiac function progressively worsening from region one to region four. This observation underscores the efficacy of AI algorithms in extracting latent features from echocardiographic data, thereby bolstering early and precise diagnosis in the realm of cardiac healthcare.
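
The LVEF cut-offs quoted above from the 2021 ESC guidelines can be written as a simple rule. The sketch below encodes only those three thresholds; it assumes the patient already has a clinical diagnosis of heart failure (LVEF alone does not establish one), and it treats fractional values between 40% and 41% as mildly reduced, which is an assumption of this example rather than something stated in the text. The function name and category labels are chosen here for illustration.

```python
def hf_category(lvef_percent):
    """Map LVEF (%) to the ESC 2021 heart failure category quoted in the text."""
    if not 0 <= lvef_percent <= 100:
        raise ValueError("LVEF must be between 0 and 100 percent")
    if lvef_percent <= 40:
        return "HFrEF"    # reduced ejection fraction
    if lvef_percent < 50:
        return "HFmrEF"   # mildly reduced ejection fraction
    return "HFpEF"        # preserved ejection fraction


for value in (35, 45, 55):
    print(value, hf_category(value))
```

A single-threshold rule like this captures none of the structure that the patient similarity network described above is meant to recover, which is the motivation for the multi-parameter approach.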

The strain parameters derived from the post-processing of echocardiography are crucial in accurately reflecting myocardial systolic and diastolic deformation, playing a pivotal role in the early detection and prognosis of cardiac dysfunction. Nonetheless, these high-dimensional datasets often encompass redundant information, presenting considerable challenges in data mining and interpretation. The efficacy of their clinical application is frequently impeded by the limited feature extraction capabilities of conventional analysis techniques, thereby restricting the provision of comprehensive information necessary for clinicians to make swift and precise clinical decisions. It is in this context that AI emerges as a game-changer, boasting robust feature extraction capacities and excelling in the analysis of intricate, high-dimensional data, thus finding extensive application in the differential diagnosis of diseases.

Narula et al. (2016) developed a composite model integrating Support Vector Machine (SVM), Random Forest (RF), and Artificial Neural Network (ANN) with echocardiographic images from 77 patients with physiological myocardial hypertrophy and 62 patients with hypertrophic cardiomyopathy. Leveraging strain parameters, this model adeptly differentiated between physiological and pathological myocardial hypertrophy patterns and identified hypertrophic cardiomyopathy with a sensitivity of 0.96 and specificity of 0.77 [

11].

The study by Sengupta et al. (2016) explored the potential of a cognitive computing tool to learn and recall multidimensional attributes of speckle-tracking echocardiography (STE) datasets from patients with known constrictive pericarditis (CP) and restrictive cardiomyopathy (RCM), akin to the way clinical judgment associates a patient's profile with memories of prototypical patients. Combined echocardiographic and clinical data from 50 CP patients and 44 RCM patients were used to create an associative memory classifier (AMC)-based ML algorithm. The STE data were normalized against 47 controls without structural heart disease, and the diagnostic area under the receiver operating characteristic curve (AUC) of the AMC was assessed for differentiating CP from RCM. Using only the STE variables, the AMC attained a diagnostic AUC of 89.2%, improving to 96.2% when four additional echocardiographic variables were included. By comparison, the AUCs of early diastolic mitral annular velocity and left ventricular longitudinal strain were 82.1% and 63.7%, respectively. Additionally, the AMC showed better accuracy and shorter learning curves than other ML approaches, with accuracy approaching 90% after a training fraction of 0.3 and stabilizing at higher training fractions. This study showed the viability of a cognitive ML approach for learning and remembering patterns seen during echocardiographic evaluations. It therefore suggests that integrating ML algorithms into cardiac imaging may support standardized assessments and good-quality interpretations, particularly for novice readers with limited experience [

30].

Zhang et al. (2021) conducted a study encompassing 217 patients with coronary heart disease and 207 controls. By integrating various classification methods through stacked learning strategies and incorporating two-dimensional speckle tracking echocardiographic and clinical parameters, they constructed a predictive model for coronary heart disease. This integrated model combined the strengths of multiple classification approaches, yielding a classification accuracy of 87.7% in the test set, with a sensitivity of 0.903, specificity of 0.843, and an AUC of 0.904, significantly surpassing the performance of any single model [

31].

Beyond direct utilization of GLS data, researchers like Loncaric et al. (2021) have delved into exploring disease phenotypes based on strain curves. In their study involving 189 hypertensive patients and 97 controls, an unsupervised ML algorithm was developed to automatically categorize patterns in strain and mitral and aortic valve pulse Doppler velocity curves throughout cardiac cycles [

32]. The algorithm effectively classified hypertension into four distinct functional phenotypes. Similarly, Yahav et al. (2020) created a fully automated ML algorithm focused on strain curves, successfully categorizing them into physiological, non-physiological, or uncertain categories with an impressive classification accuracy of 86.4% [

33].

These advancements underscore AI's diagnostic prowess and further cement its role as an indispensable adjunct in echocardiographic evaluation.

AI has exhibited extraordinary proficiency in the identification of specific cardiac conditions, achieving diagnostic milestones that were previously unattainable.

Haimovich et al. (2023) adeptly trained a CNN model using data from both 12-lead and single-lead electrocardiograms to distinguish cardiac diseases associated with left ventricular (LV) hypertrophy. They conducted a retrospective analysis of a substantial cohort of 50,709 patients from the Enterprise Warehouse of Cardiology dataset, categorizing them into groups with cardiac amyloidosis (n = 304), hypertrophic cardiomyopathy (HCM) (n = 1056), hypertension (n = 20,802), aortic stenosis (n = 446), and other causes (n = 4766) [

34]. The DL model, trained on 12-lead electrocardiograms with a derivation cohort of 34,258 individuals and validated on 16,451 individuals, achieved an Area Under the Receiver Operating Characteristic Curve (AUROC) of 0.96 [95% CI, 0.94–0.97] for cardiac amyloidosis, 0.92 [95% CI, 0.90–0.94] for HCM, 0.90 [95% CI, 0.88–0.92] for aortic stenosis, 0.76 [95% CI, 0.76–0.77] for hypertensive LV hypertrophy, and 0.69 [95% CI, 0.68–0.71] for other LV hypertrophy etiologies. Furthermore, the CNN model trained on single-lead waveforms also demonstrated notable accuracy in discriminating between LV hypertrophy etiologies, with an AUROC of 0.90 [95% CI, 0.88–0.92] for both cardiac amyloidosis and HCM [

34].

In a parallel endeavor, an AI model focusing on electrocardiograms was developed to identify pediatric patients with HCM. Tested on 300 pediatric patients with confirmed echocardiographic and clinical diagnoses of HCM and 18,439 healthy controls, the CNN model achieved exceptional diagnostic performance with an AUC of 0.98 (95% CI 0.98–0.99), with sensitivity of 92%, specificity of 95%, positive predictive value of 22%, and a negative predictive value of 99%. The DL models maintained robust diagnostic efficacy across both sexes and in both genotype-positive and genotype-negative HCM patients [

35].
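
The low positive predictive value alongside high sensitivity and specificity in the pediatric HCM cohort is a consequence of low disease prevalence, and it can be reproduced approximately from the figures quoted above. The sketch below applies Bayes' rule to the reported sensitivity (92%), specificity (95%), and the cohort composition (300 cases, 18,439 controls); because these inputs are rounded, the result only approximates the reported 22% and 99%. The function name is chosen here for illustration.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) from sensitivity, specificity and disease prevalence."""
    for name, value in (("sensitivity", sensitivity),
                        ("specificity", specificity),
                        ("prevalence", prevalence)):
        if not 0 < value < 1:
            raise ValueError(f"{name} must be strictly between 0 and 1")
    # Expected fractions of the tested population in each outcome cell.
    true_pos = sensitivity * prevalence
    false_neg = (1 - sensitivity) * prevalence
    true_neg = specificity * (1 - prevalence)
    false_pos = (1 - specificity) * (1 - prevalence)
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


# Cohort composition and test characteristics quoted in the text.
prevalence = 300 / (300 + 18439)
ppv, npv = predictive_values(0.92, 0.95, prevalence)
print(f"prevalence={prevalence:.3%}, PPV={ppv:.1%}, NPV={npv:.1%}")
```

With a prevalence of under 2%, false positives from the large control group outnumber true positives even at 95% specificity, which is why such a model is better suited to ruling disease out than to confirming it.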

Exploring the prediction of LV hypertrophy, a ML model was developed combining clinical, laboratory, and echocardiographic data. In their retrospective monocentric study, the authors evaluated 591 LV hypertrophy patients. After partitioning them into training and testing sets (75%:25%), they developed three distinct ML models: decision tree, Random Forest (RF), and Support Vector Machine (SVM). The SVM model exhibited superior diagnostic performance, with an AUROC of 0.90 (0.85–0.94), sensitivity of 0.31 (0.17–0.44), specificity of 0.96 (0.91–0.99), and accuracy of 0.80 (0.75–0.85) in the testing set [

35].

Hwang et al. (2022) advanced this field by developing a CNN long short-term memory DL algorithm, aiding in differentiating common etiologies of LV hypertrophy, including hypertensive heart disease, HCM, and cardiac amyloidosis (41). They achieved average AUC values of 0.962, 0.982, and 0.996 in the test set for these diseases, respectively. Notably, the diagnostic accuracy for the DL algorithm (92.3%) was significantly higher than that of echocardiography specialists (80.0% and 80.6%) [

36].

Baeßler et al. (2018) took a novel approach by employing a ML model using radiomics data from Cardiac Magnetic Resonance (CMR) images to find myocardial tissue alterations in HCM. They retrospectively analyzed radiomics features from T1 mapping images of 32 patients with known HCM and compared them with 30 healthy individuals, achieving an AUC of 0.95, with a diagnostic sensitivity of 91% and a specificity of 93% [

37].

Lastly, Soto et al. (2022) developed a multimodal fusion network integrating electrocardiogram and echocardiogram data to ascertain the etiology of LV hypertrophy. Trained on over 18,000 combined occurrences of tests from 2728 patients, the LVH-fusion model achieved an AUC of 0.92 (95% CI [0.862–0.965]), an F1-score of 0.73 (95% CI [0.585–0.842]), a sensitivity of 0.73 (95% CI [0.562–0.882]), and a specificity of 0.96 (95% CI [0.929–0.985]) [

38].

DCM is characterized by LV dilatation and systolic dysfunction that are not solely attributable to coronary artery disease or altered loading conditions, such as hypertension, congenital heart disease, and valve disease (1). DCM presents as a heterogeneous myocardial disease with varying natural histories and patient outcomes. The advent of AI has enabled the identification of underlying causes of LV dilatation and the stratification of patients based on distinct pathophysiological mechanisms [

39].

Shrivastava et al. (2021) evaluated the efficacy of an AI-based model using electrocardiogram data as a screening tool. They analyzed 16,471 individuals, comprising 421 DCM patients and 16,025 control subjects, using a CNN trained with 12-lead electrocardiograms. The model demonstrated exceptional diagnostic performance with an AUC of 0.955, sensitivity of 98.8%, specificity of 44.8%, negative predictive value of 100%, and positive predictive value of 1.8% for detecting ejection fractions ≤45% [

40].

A radiomics-based ML approach was recently explored to distinguish between DCM, HCM, and healthy patients. Among the tested ML models, Random Forest (RF) with minimum redundancy maximum relevance achieved outstanding performance, exhibiting an accuracy of 91.2% and average AUC values of 0.936 for HCM, 0.966 for DCM, and 0.938 for healthy controls in the test set [

41].

Tayal et al. (2022) proposed a ML-based approach using profile regression mixture modeling to categorize patients with DCM into distinct sub-phenotypes. They utilized a multiparametric dataset including demographic, clinical, genetic, Cardiac Magnetic Resonance (CMR), and proteomics parameters. The model was tested on a derivation cohort of 426 patients from the National Institute for Health Research Royal Brompton Hospital Cardiovascular Biobank project, identifying three novel DCM subtypes: profibrotic metabolic, mild nonfibrotic, and biventricular impairment. These subtypes were further validated in a cohort of 239 DCM patients from the Maastricht Cardiomyopathy Registry [

39]. The profibrotic metabolic cluster exhibited mid-wall Late Gadolinium Enhancement (LGE) in all cases, a prevalence of diabetes, and primarily NYHA functional class II symptoms. The mild nonfibrotic group, comprising 249 patients, showed no myocardial fibrosis and generally milder symptoms, with many in NYHA functional class I. The biventricular impairment group included 27 patients with more severe symptoms, half of whom were in NYHA functional class III or IV [

39].

Furthermore, Zhou et al. (2023) developed an ML model based on clinical and echocardiography data to differentiate between ischemic and non-ischemic causes of LV dilatation, a crucial distinction in patient management. Evaluating 200 patients with dilated LV, they split the data into training and testing sets and developed four ML models: RF, Logistic Regression (LR), neural network, and XGBoost. The XGBoost model performed best, with a sensitivity of 72%, specificity of 78%, accuracy of 75%, F-score of 0.73, and AUC of 0.934. This model also differentiated between etiologies in an external validation cohort, with a sensitivity of 64%, specificity of 93%, and an AUC of 0.804 [

42].

Arrhythmogenic Right Ventricular Cardiomyopathy (ARVC) is distinguished by right ventricular dilatation and/or dysfunction, histological and electrocardiographic abnormalities, as defined by the Task Force criteria (1,56). Non-Dilated Left Ventricular Cardiomyopathy (NDLVC) is a newly recognized category, characterized by left ventricular systolic impairment (either regional or global), with non-ischemic myocardial scarring or fatty replacement (1). This includes a diverse group of patients previously categorized under various cardiomyopathy classifications but not meeting the Task Force criteria (1). AI-based models showed promise in diagnosing these subtypes of cardiomyopathy [

43,

44]. Zhang et al. (2023) developed a ML algorithm to distinguish between arrhythmogenic and dilated cardiomyopathy using myocardial sample datasets, with the RF model performing best, achieving an AUC of 0.86 [

43]. Similarly, Bleijendaal et al. (2021) developed ML and DL models based on electrocardiographic features to diagnose Phospholamban p.Arg14del cardiomyopathy, with the AI models outperforming expert cardiologists in accuracy and sensitivity [

45].

Papageorgiou et al. (2022) developed an ML model using electrocardiograms to discriminate patients with ARVC, employing a CNN and achieving high accuracy, specificity, and sensitivity [

44]. RCM is characterized by non-dilated left or right ventricles with normal wall thickness and normal or reduced volume (1). Differentiating RCM from constrictive pericarditis (CP) can be challenging; however, Chao et al. (2023) have demonstrated the potential of AI in addressing this challenge. They trained a ResNet50 deep learning model using transthoracic echocardiography studies to differentiate between CP and RCM. With a dataset of 381 patients, the model achieved a high AUC of 0.97 in distinguishing CP from cardiac amyloidosis (CA), a representative disease of RCM. Interpretation of the model using GradCAM emphasized the significance of the ventricular septal area in the diagnosis. This AI approach shows promise in enhancing the identification of CP and improving clinical workflow efficiency, leading to timely intervention and improved patient outcomes [

46]. Sengupta et al. (2016) designed an ML approach combining clinical and echocardiographic data to discriminate between RCM and constrictive pericarditis, achieving high accuracy and AUC [

47].

Additionally, AI models have been proposed for identifying myocardial iron deposits in thalassemia, aiding early recognition of myocardial dysfunction. Taleie et al. (2023) [

48] and Asmarian et al. (2022) [

49] developed ML models to discriminate between beta-thalassemia major patients with and without myocardial iron overload, demonstrating good diagnostic performance. For Fabry disease, an AI-based approach using health record data successfully identified affected patients among a large cohort [

50]. Eckstein et al. (2022) developed a supervised ML model integrating various strain parameters to identify cardiac amyloidosis, with the non-linear SVM model showing excellent performance [

51].

When it comes to other cardiac syndromes associated with cardiomyopathy phenotypes, Cau et al. (2023) designed an ML model to identify Takotsubo syndrome in patients with acute chest pain, outdoing clinical reader diagnoses in terms of accuracy, sensitivity, and specificity [

52]. An ML approach based on radiomics data was also developed to differentiate LV non-compaction from other cardiomyopathy phenotypes, achieving outstanding diagnostic performance [

53]. These AI and ML approaches represent significant advancements in the diagnosis and differentiation of various cardiomyopathy subtypes, offering enhanced accuracy and efficiency in clinical practice.

These advancements not only underscore the precision of AI in cardiac diagnosis but also its potential in prognostication, paving the way for a more preventative approach in cardiology. The applications of AI in echocardiography not only demonstrate its current capabilities but also lay the groundwork for future exploration and integration of AI into routine cardiac care.

4. Challenges and Limitations of AI in Echocardiography

AI in echocardiography has heralded a new era of diagnostic precision and patient care. However, it is not without its challenges, particularly in the realm of image quality and analysis. Echocardiographic images are inherently complex and subject to a wide range of variabilities, stemming from patient anatomy, operator expertise, and equipment quality. AI models, although robust, often struggle with inconsistencies in image acquisition. This can lead to suboptimal analysis, where AI algorithms may either miss subtle pathologies or misinterpret normal variations as abnormalities. A study by Dey et al. (2020) highlights the ability of AI to enhance image interpretation, automate measurements, and predict clinical outcomes in cardiovascular care. However, it also points out the challenges related to the variability in image quality. One key finding is that AI algorithms often struggle with images that deviate from the norms of the datasets they were trained on, leading to inconsistencies in diagnosis. This limitation emphasizes the necessity for comprehensive training datasets to improve the AI's accuracy and reliability in different clinical scenarios [

54]. Furthermore, the quality of the training data profoundly impacts the AI's performance. In another study, Madani and colleagues (2018) demonstrated the power of deep learning in classifying echocardiographic images. Their research, published in NPJ Digital Medicine, employed a CNN model to classify views in echocardiogram videos. One of the key findings was the model's high accuracy in view classification, showcasing the potential for AI to reduce the workload of clinicians by automating routine tasks. However, the study also emphasized the potential limitations in generalization if the model is trained on a non-diverse dataset. This finding is crucial in the context of algorithmic bias and the need for balanced training data [

55].

The integration of AI in echocardiography also raises significant ethical considerations. One of the primary concerns is algorithmic bias, where AI systems may exhibit biased decision-making due to skewed training datasets. For instance, if an AI model is predominantly trained on data from a particular demographic, its accuracy may diminish when applied to other populations. A recent study observed that models trained on datasets from specific populations might not perform equally well on other populations, potentially leading to diagnostic inaccuracies [

56]. This study is a crucial reminder of the ethical considerations necessary in AI development and deployment, stressing the importance of varied training datasets to mitigate bias [

56]. This could lead to disparities in diagnosis and treatment, exacerbating existing healthcare inequalities. Additionally, there are concerns regarding patient data security, given the sensitivity of medical data. Therefore, ensuring that AI systems in echocardiography adhere to stringent data protection standards is crucial to maintain patient trust and ethical integrity [

57]. Furthermore, there is an ongoing debate about the accountability of AI decisions in medical settings, raising questions about responsibility in cases of misdiagnosis or treatment errors. Ensuring ethical AI deployment necessitates accountable algorithms with clear human oversight mechanisms.
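
One practical way to surface the algorithmic bias discussed above is to report model performance separately for each demographic subgroup rather than only in aggregate. The sketch below computes per-group sensitivity from labeled predictions. It is a generic illustration, not a method from the cited studies; the group labels, record layout, and function name are hypothetical.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Per-group sensitivity from (group, true_label, predicted_label) records.

    Labels are 1 for disease present and 0 for disease absent. Groups with
    no diseased cases are omitted because sensitivity is undefined for them.
    """
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    for group, truth, predicted in records:
        if truth != 1:
            continue  # sensitivity only considers diseased cases
        if predicted == 1:
            true_pos[group] += 1
        else:
            false_neg[group] += 1
    groups = set(true_pos) | set(false_neg)
    return {g: true_pos[g] / (true_pos[g] + false_neg[g]) for g in groups}


# Hypothetical predictions for two demographic groups (illustration only).
records = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0),
    ("group_a", 0, 0),
    ("group_b", 1, 1), ("group_b", 1, 0),
    ("group_b", 0, 0), ("group_b", 0, 1),
]
for group, value in sorted(sensitivity_by_group(records).items()):
    print(f"{group}: sensitivity={value:.2f}")
```

A large gap between groups in such a report is a signal that the training data may under-represent some populations; the same breakdown can be applied to specificity or AUC.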

Another significant challenge of AI in echocardiography is its interoperability with existing healthcare systems. For AI to be effectively integrated into clinical practice, it must seamlessly interact with various healthcare technologies, including electronic health records (EHRs), imaging software, and other diagnostic tools [

58]. Although the cited study focused on arrhythmia detection rather than echocardiography, it illustrates the high potential of AI in cardiology. A significant finding was the AI's ability to analyze vast amounts of data with high accuracy, which has implications for echocardiography, where AI can be used to interpret complex imaging data efficiently. Often, healthcare systems operate on heterogeneous platforms with varying degrees of technological advancement, which can hinder the efficient integration of AI tools. The lack of standardization across systems can also pose challenges in data exchange and interpretation, potentially leading to errors or delays in patient care. Furthermore, the successful adoption of AI in echocardiography requires significant training and adaptation by healthcare professionals. There is a need for comprehensive training programs to familiarize clinicians with AI tools and their implications for clinical practice. A recent study highlighted the importance of interoperability between AI systems and existing healthcare infrastructures, emphasizing the need for AI tools to integrate seamlessly with current clinical workflows. This integration is crucial in echocardiography, where AI must work in tandem with existing diagnostic tools and electronic health records [

59]. Overcoming these challenges in interoperability is essential for the effective use of AI in echocardiography, ultimately enhancing clinical outcomes.

5. Integration of AI with Other Imaging Modalities:

The integration of AI in medical imaging has revolutionized how we approach diagnostics, particularly in the realm of cardiovascular care. Echocardiography, when combined with other imaging modalities like CT and MRI, creates synergies that can significantly enhance diagnostic accuracy and patient outcomes. Echocardiography provides detailed functional information, while CT and MRI offer superior spatial resolution and tissue characterization. The fusion of these modalities, guided by AI algorithms, can lead to a more holistic view of cardiovascular diseases. For instance, the review by Hahn and colleagues [

60] focused on assessing the severity of tricuspid regurgitation using imaging techniques. They emphasized the importance of integrating echocardiography with other imaging modalities for a more comprehensive assessment. The key finding was that echocardiography provides critical functional information, while the addition of CT/MRI could enhance anatomical understanding, which is crucial for procedural planning and decision-making. This review highlights the complementarity of different imaging modalities, suggesting that a multimodal approach, potentially enhanced by AI, could lead to more accurate diagnoses and better patient outcomes in valvular heart diseases [

60]. AI algorithms can integrate and analyze data from these disparate sources, offering insights that might be missed by sole human interpretation. This synergy improves the accuracy of diagnoses and assists in tailoring individualized treatment plans.

AI's role in enhancing CT and MRI imaging is substantial. In CT, AI algorithms have shown proficiency in automating tasks such as calcium scoring, which is pivotal in assessing coronary artery disease. Previous studies explored the use of AI in automating calcium scoring in cardiac CT [

61,

62]. The significant finding from these studies was that AI algorithms could accurately and efficiently perform calcium scoring across CT images from various vendors. This automation represents a significant advancement in evaluating coronary artery disease, demonstrating AI's potential to standardize and expedite diagnostic processes while maintaining high accuracy. This development is pivotal in cardiac imaging, as it could lead to quicker, more reliable assessments of cardiac risk factors [

61,

62]. Similarly, in cardiac MRI, AI has been instrumental in automating the quantification of left ventricular volumes and ejection fraction, traditionally time-consuming tasks [

63]. The authors found that AI algorithms could accurately automate these measurements, which are also subject to inter-observer variability. This automation streamlines the workflow and potentially improves diagnostic accuracy in cardiac MRI. Their research emphasizes the utility of AI in enhancing the efficiency and reliability of cardiac imaging analysis. These advancements not only improve efficiency but also reduce variability in interpretation. Moreover, AI-powered tools have shown promise in identifying subtle pathological features in CT and MRI images that might escape manual analysis, thereby aiding in early disease detection and intervention. The integration of AI with CT and MRI is transforming cardiac imaging into a more precise, efficient, and predictive practice, ultimately improving patient care and outcomes.

Multi-modality imaging, augmented by AI, is becoming increasingly vital in cardiac diagnosis. AI's ability to aggregate and analyze data from imaging sources such as echocardiography, CT, and MRI allows for a fuller understanding of complex cardiovascular conditions. For example, in cardiomyopathies, echocardiography can provide information on functional parameters, MRI can assess myocardial fibrosis, and CT can evaluate the coronary arteries. AI can integrate these data streams into a single diagnostic picture, which is crucial for complex clinical decision-making. A previous study examined the role of multi-modality imaging in diagnosing and managing cardiomyopathies. The authors found that combining data from echocardiography, MRI, and CT could offer a more comprehensive view of cardiomyopathies, aiding accurate diagnosis and treatment planning. This study highlights the potential of AI to integrate and analyze data from multiple imaging modalities, leading to a more thorough understanding of complex cardiac conditions and supporting precision medicine [

64]. This integrated approach can lead to more accurate diagnoses, better risk stratification, and optimized treatment strategies, highlighting the transformative potential of AI in cardiac imaging.

AI's integration into cardiac imaging extends beyond diagnostics to influence clinical decision-making. AI models can predict disease progression, response to therapies, and even long-term patient outcomes by analyzing comprehensive multi-modal imaging data [

65]. A key finding of this study was that AI can not only enhance diagnostic processes but also predict disease progression and patient outcomes by analyzing complex imaging data. These predictive insights enable clinicians to make more informed decisions, potentially leading to personalized and preemptive treatment strategies. Furthermore, AI can assist in identifying patients who would benefit most from specific interventions, thereby optimizing resource usage and improving care delivery. The study highlighted a shift from reactive to proactive, data-driven healthcare, in which AI-driven insights guide clinical decisions to enhance patient outcomes.

This comprehensive exploration into the integration of AI with various imaging modalities underlines the transformative impact AI has on enhancing cardiac imaging, diagnostics, and clinical decision-making. The synergy between different modalities, augmented by AI's analytical prowess, is paving the way for a new era in cardiovascular care.

6. Role of AI in Clinical Pathways and Treatment Planning

The advent of AI in healthcare has initiated a paradigm shift in clinical pathways and treatment planning. AI's integration into these areas is redefining the landscape of patient care, making it more data-driven, efficient, and personalized.

AI's influence on cardiologists' decision-making is profound and multifaceted. AI algorithms can analyze volumes of patient data, including medical histories, diagnostic images, and genetic information, that exceed what a clinician can review unaided. Seetharam et al. (2019) extensively explore the implications of AI and ML in healthcare. A key finding is the capacity of AI to process and analyze complex medical data, enabling clinicians to make more informed decisions. The study emphasizes that AI can identify patterns and correlations in large datasets, including patient records, that humans could not discern quickly [

66]. This capability leads to more accurate diagnoses and personalized treatment plans, significantly improving patient outcomes. This deep and nuanced analysis enables cardiologists to make more informed decisions, grounded in a comprehensive understanding of each patient's unique clinical profile. AI tools, such as predictive analytics, can forecast potential disease progression and response to treatments, allowing cardiologists to tailor their therapeutic strategies to individual patients [

67,

68,

69,

70,

71,

72,

73,

74]. For instance, AI algorithms can predict adverse cardiac events, helping cardiologists to preemptively modify treatment plans to prevent such outcomes [

75]. Baldassarre et al. (2022) reviewed state-of-the-art applications of AI in cardiovascular imaging. They reported that AI algorithms, particularly in imaging, could predict future cardiac events by identifying subtle patterns not easily visible to the human eye. The review also noted the potential of AI to automate routine tasks in imaging analysis, thereby reducing workload and enhancing diagnostic accuracy. This automation and predictive capability are crucial for early intervention and personalized treatment in cardiac care [

75]. This proactive approach to patient management is transforming cardiology from a reactive to a predictive discipline, enhancing the quality and precision of care.

The future of AI in clinical settings holds considerable promise. One key direction is the development of integrated AI systems that can interact seamlessly with electronic health records (EHRs), diagnostic tools, and telemedicine platforms. These integrated systems would enable real-time analysis of patient data, facilitating immediate and informed clinical decisions. Additionally, there is an ongoing effort to develop AI models that are not only predictive but also prescriptive, suggesting optimal treatment pathways based on individual patient profiles. Another development is the use of AI in personalized medicine, particularly in pharmacogenomics, where AI can help determine the most effective medication based on a patient's genetic makeup. These advancements are poised to make clinical care increasingly personalized, effective, and efficient.

The impact of AI on patient care and outcomes is substantial and far-reaching. AI-enhanced diagnostics and predictive analytics lead to earlier detection and intervention, which is crucial in managing chronic diseases like heart failure [

77,

78,

79,

80]. AI's ability to provide personalized treatment recommendations can significantly improve patient adherence to treatment plans, which is a critical factor in chronic disease management. Moreover, AI-powered telemedicine and remote monitoring systems enable continuous patient care outside the hospital setting, improving patient convenience and reducing hospital readmissions [

79,

81]. These advancements not only enhance the quality of patient care but also have the potential to reduce healthcare costs by optimizing resource utilization and preventing adverse health outcomes.

Based on these study findings, AI's role in clinical pathways and treatment planning is pivotal, offering new dimensions to cardiologists' decision-making, and significantly impacting patient care and outcomes. The integration of AI in healthcare is not just a technological upgrade but a comprehensive transformation towards a more data-driven, efficient, and patient-centric approach to medical care.

7. Improvements in Diagnostic Accuracy and Efficiency

The enhancement in diagnostic accuracy and efficiency, fueled by advancements in medical technology and methodologies, has been pivotal in elevating patient safety and the overall quality of care. Modern diagnostic tools and procedures, underpinned by innovative technologies such as AI and ML, have enabled healthcare providers to detect diseases earlier and with greater precision. For instance, AI-powered imaging analysis has shown remarkable proficiency in identifying anomalies that might escape human detection, thus aiding in early intervention and potentially reducing the risk of severe disease progression [

82]. Obermeyer and Emanuel (2016) discuss the transformative impact of big data and ML on clinical medicine, with a particular focus on improving diagnostic accuracy. They highlight how ML algorithms, trained on large datasets, can predict patient outcomes and disease progression with remarkable accuracy. This predictive ability supports early intervention, thus enhancing patient safety and care quality [

82]. The study emphasizes that leveraging big data in healthcare can lead to more informed clinical decisions, reducing the incidence of misdiagnosis and improving treatment outcomes, which in turn increases patient safety. Additionally, the use of predictive analytics in diagnostics helps identify patients at high risk of certain conditions, thereby facilitating proactive management and tailored care [

83]. These improvements in diagnostic processes contribute substantially to higher standards of patient care and safety.

The strides made in diagnostic accuracy and efficiency also have significant economic implications, contributing to the cost-effectiveness and accessibility of healthcare. Advanced diagnostic technologies, while requiring initial investment, can lead to substantial long-term savings by reducing unnecessary procedures and hospital readmissions. For example, precise diagnostics can eliminate the need for redundant testing, thus cutting healthcare costs. A recent study in sickle cell disease demonstrated that a rapid point-of-care diagnostic platform could effectively determine disease severity and guide treatment decisions. This tool represents a significant advancement in personalized medicine, as it allows quick and accurate assessment of patient conditions, leading to timely and appropriate treatment. This innovation not only improves the quality of care for patients with sickle cell disease but also showcases the potential for similar tools in other medical conditions [

84]. Furthermore, the integration of digital technologies in diagnostics has enabled remote monitoring and telehealth services, making healthcare more accessible, especially in underserved regions [

85]. By facilitating early and accurate disease detection, these technologies help in avoiding expensive and extensive treatments at later disease stages, thus aligning with cost-effective healthcare models. The ongoing development of portable and user-friendly diagnostic devices also plays a significant role in democratizing healthcare access, allowing patients in remote areas to benefit from advanced diagnostic tools.

Looking forward, the trajectory of improvements in diagnostic accuracy and efficiency points towards a future brimming with innovations and transformative practices. One of the most promising areas is the continued integration of AI and ML in diagnostic procedures. Future advancements are expected to focus on refining these technologies to handle more complex and subtle medical conditions. Additionally, there is a growing trend towards personalized medicine, where diagnostics are tailored to individual genetic profiles, offering the potential for highly targeted and effective treatment strategies. Another exciting prospect is the development of smart diagnostic devices, which could provide real-time health monitoring and instant diagnostic insights, thereby revolutionizing the way healthcare is delivered. The integration of blockchain technology in managing patient data could also enhance the security and efficiency of diagnostic processes. These innovations, together with continued research and development, promise to further elevate the standards of diagnostic accuracy and efficiency, significantly impacting global healthcare practices.

These advancements, ranging from big data analytics and ML to telemedicine and blockchain technology, are crucial in enhancing patient safety and quality of care. They enable early and precise detection of diseases, tailored treatment approaches, and improved management of high-risk patients. Additionally, these technologies contribute to the cost-effectiveness and accessibility of healthcare, particularly in managing chronic diseases and in remote patient monitoring. Looking ahead, the continued evolution of AI, along with personalized medicine and secure data management, promises to further revolutionize healthcare, making it more efficient, patient-centric, and adaptable to future challenges.

8. Emerging Trends in AI and Cardiac Imaging

The integration of AI in cardiac imaging is paving the way for a new era in personalized medicine and prognostic modeling. AI's ability to analyze large datasets, recognize patterns, and learn from outcomes is revolutionizing how cardiologists approach patient care. Personalized medicine, tailored to the individual characteristics, risks, and preferences of each patient, is becoming increasingly achievable with AI-driven insights derived from cardiac imaging data.

In prognostic modeling, AI algorithms are being developed to predict patient outcomes more accurately. Johnson et al. (2018) provided a comprehensive overview of the role of AI in cardiology. A key finding of this study is the potential of AI to significantly enhance diagnostic accuracy and patient management in cardiology. The authors highlighted how AI algorithms, especially deep learning models, are increasingly used to interpret complex cardiac imaging data, such as echocardiograms and MRIs. They also noted AI's ability to integrate and analyze vast amounts of patient data, leading to more personalized and precise treatments. For example, AI could help identify patients at risk of conditions such as atrial fibrillation or heart failure earlier than traditional methods. This study underscored AI's transformative potential in cardiac care, particularly in improving diagnostics, treatment personalization, and patient outcome prediction [