Submitted: 09 April 2025

Posted: 09 April 2025


Abstract

Keywords:

I. Introduction

Main Contributions

- Proposed an Enhanced Grey Wolf Optimizer (SCGWO): A novel improvement of the GWO algorithm is presented that combines sinusoidal chaotic mapping for population initialization with a transverse-longitudinal crossover strategy, strengthening both global exploration and local exploitation.

- Introduced a Dynamic Weight Adjustment Mechanism: The contributions of the α, β, and δ wolves to each position update are weighted adaptively, favoring exploration in early iterations and faster convergence in later ones.

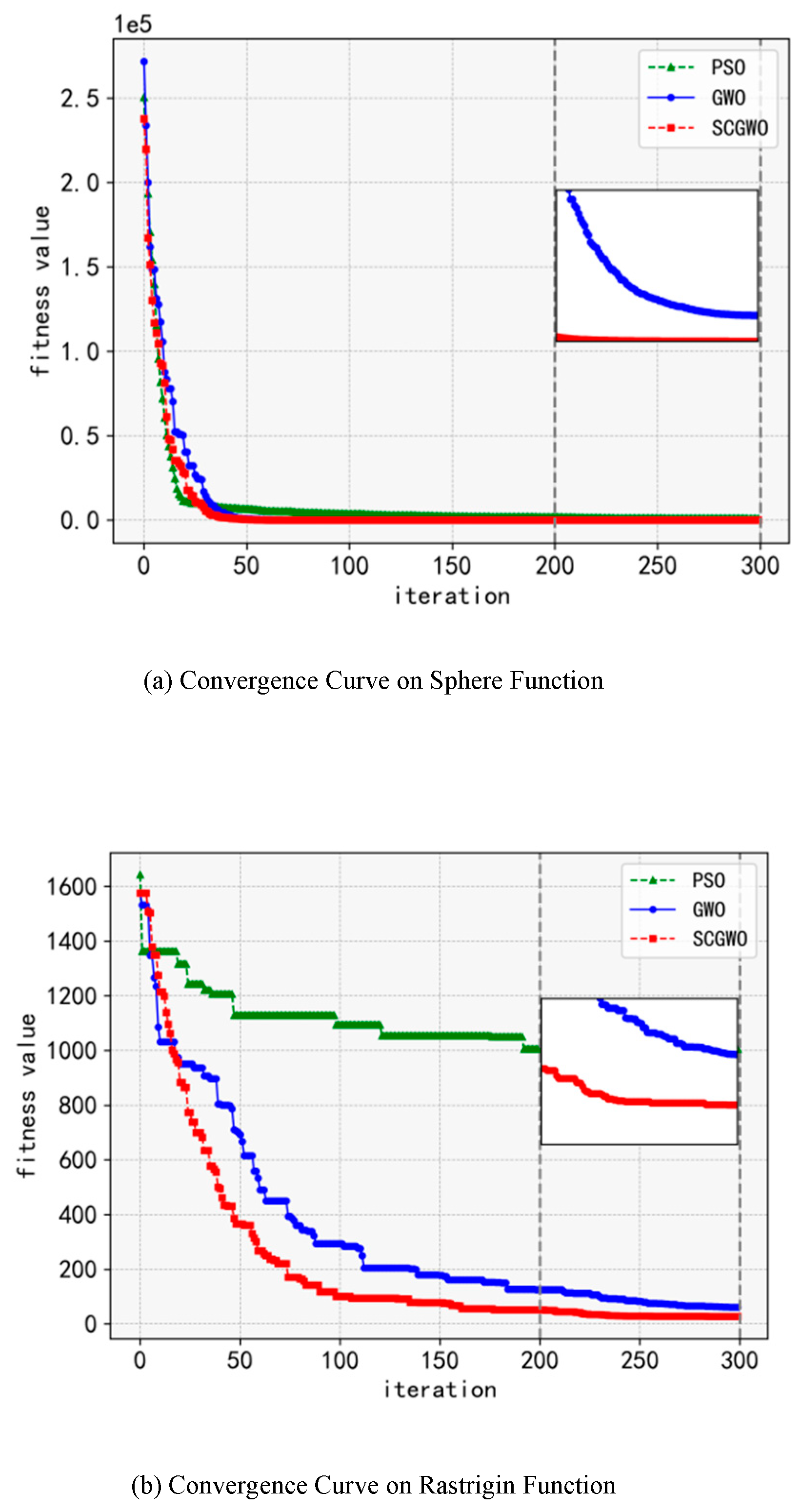

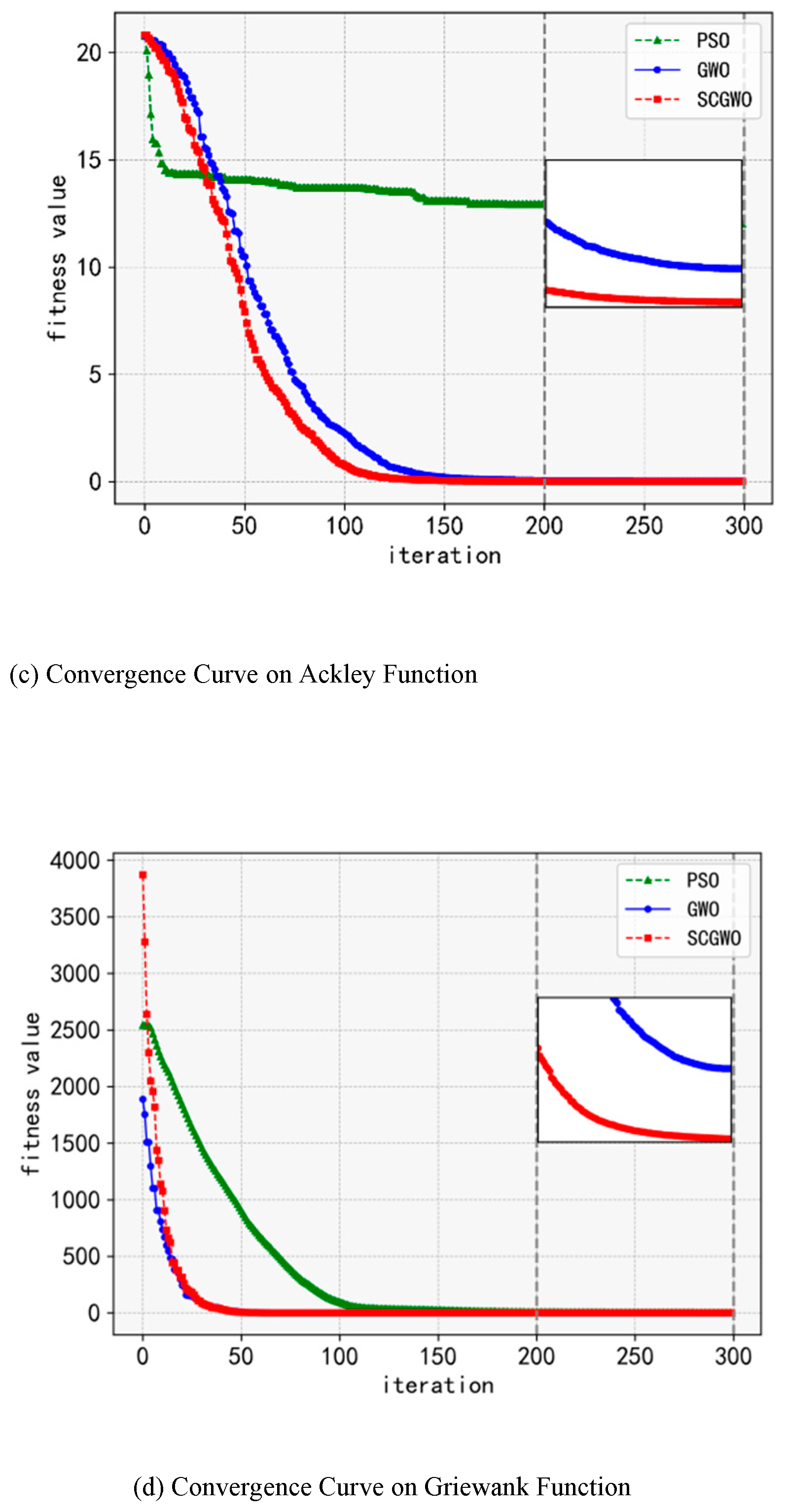

- Evaluated on Comprehensive Benchmark Functions: The proposed SCGWO is rigorously tested on 10 complex benchmark functions, demonstrating superior performance in terms of convergence speed, solution accuracy, and robustness when compared to the classic GWO.

- Validated through Real-World Application: The effectiveness of SCGWO is further validated by applying it to hyperparameter optimization of a random forest model, where it achieves lower test-set MAE and RMSE than both the default hyperparameters and the standard GWO.
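The dynamic weight mechanism is described above only qualitatively. The following minimal sketch shows one hypothetical way to realize it, assuming a linear schedule in which the three leader weights start equal and shift toward the α wolf as the iterations progress. The names `dynamic_weights` and `weighted_leader_move`, and the schedule itself, are illustrative assumptions rather than the paper's formula.

```python
from typing import List, Tuple


def dynamic_weights(t: int, max_iter: int) -> Tuple[float, float, float]:
    """Hypothetical linear schedule for the alpha, beta and delta weights.

    At t = 0 all three leaders count equally (1/3 each), which keeps the pack
    spread out; at t = max_iter the alpha wolf carries 2/3 of the weight and
    the delta wolf none, pulling the pack toward the current best solution.
    The three weights always sum to 1.
    """
    s = min(max(t / max_iter, 0.0), 1.0)  # normalized progress in [0, 1]
    return (1.0 + s) / 3.0, 1.0 / 3.0, (1.0 - s) / 3.0


def weighted_leader_move(x1: List[float], x2: List[float], x3: List[float],
                         t: int, max_iter: int) -> List[float]:
    """Combine the alpha-, beta- and delta-guided candidates X1, X2, X3.

    This replaces the plain average (X1 + X2 + X3) / 3 of the canonical GWO.
    """
    w_a, w_b, w_d = dynamic_weights(t, max_iter)
    return [w_a * a + w_b * b + w_d * c for a, b, c in zip(x1, x2, x3)]
```

Under this schedule, candidates X1 = 1, X2 = 4 and X3 = 7 combine to 4 at the first iteration and to 2 at t = max_iter, illustrating the shift from averaging the leaders toward following α.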

II. Related Work

A. Grey Wolf Optimizer (GWO)
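For reference, the canonical GWO update that SCGWO builds on can be sketched as follows. The control parameter a decreases linearly from 2 to 0; for each leader p in {α, β, δ}, a wolf at X draws A = 2a·r1 - a and C = 2·r2, computes D = |C·X_p - X|, and moves to the mean of the three points X_p - A·D. This is a pure-Python sketch: the function name `gwo`, the elitist retention of the three best positions found so far, and the clipping to the search box are our own implementation choices.

```python
import random
from typing import Callable, List, Tuple

Vector = List[float]


def gwo(f: Callable[[Vector], float], lb: float, ub: float, dim: int,
        n_wolves: int = 20, max_iter: int = 200, seed: int = 0) -> Tuple[Vector, float]:
    """Minimize f over the box [lb, ub]^dim with the canonical GWO update."""
    rng = random.Random(seed)
    wolves = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n_wolves)]
    leaders: List[Tuple[float, Vector]] = []  # best three (fitness, position) seen so far

    for t in range(max_iter):
        # Alpha, beta and delta are the three best positions found so far.
        pool = leaders + [(f(w), w) for w in wolves]
        pool.sort(key=lambda item: item[0])
        leaders = [(val, pos[:]) for val, pos in pool[:3]]

        a = 2.0 - 2.0 * t / max_iter  # decreases linearly from 2 toward 0
        for i in range(n_wolves):
            new_pos = []
            for d in range(dim):
                guided_sum = 0.0
                for _, leader in leaders:
                    A = 2.0 * a * rng.random() - a  # |A| > 1 favors exploration
                    C = 2.0 * rng.random()
                    D = abs(C * leader[d] - wolves[i][d])
                    guided_sum += leader[d] - A * D  # component of X1, X2 or X3
                # Average of the three leader-guided moves, clipped to the box
                new_pos.append(min(max(guided_sum / 3.0, lb), ub))
            wolves[i] = new_pos

    best_val, best_pos = min(leaders + [(f(w), w) for w in wolves],
                             key=lambda item: item[0])
    return best_pos, best_val
```

On the 5-dimensional Sphere function, for example `gwo(lambda x: sum(v * v for v in x), -100.0, 100.0, dim=5)`, the returned best value should be close to zero.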

B. Improvements to GWO

III. Enhanced Grey Wolf Optimizer (SCGWO)

A. Sinusoidal Chaos Mapping for Population Initialization
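The exact map and parameters are not given here. A common form of the sinusoidal chaotic map in the chaotic-metaheuristics literature is x_{k+1} = a·x_k^2·sin(π·x_k) with a = 2.3 and x_0 = 0.7, and the sketch below assumes it. For these parameters the orbit stays inside a sub-interval of (0, 1) rather than covering it, so the sketch min-max rescales the chaotic sequence before mapping it onto [lb, ub]; that rescaling step, and the function names, are our own assumptions.

```python
import math
from typing import List


def sinusoidal_sequence(length: int, x0: float = 0.7, a: float = 2.3) -> List[float]:
    """Iterate the sinusoidal chaotic map x_{k+1} = a * x_k^2 * sin(pi * x_k)."""
    seq, x = [], x0
    for _ in range(length):
        x = a * x * x * math.sin(math.pi * x)
        seq.append(x)
    return seq


def chaotic_population(n_wolves: int, dim: int, lb: float, ub: float,
                       x0: float = 0.7, a: float = 2.3) -> List[List[float]]:
    """Initialize n_wolves positions in [lb, ub]^dim from one chaotic sequence."""
    seq = sinusoidal_sequence(n_wolves * dim, x0, a)
    # For a = 2.3 the orbit occupies only part of (0, 1), so min-max rescale it
    # to [0, 1] before mapping onto the search box (an assumption of this sketch).
    lo, hi = min(seq), max(seq)
    span = (hi - lo) or 1.0
    return [
        [lb + (seq[i * dim + d] - lo) / span * (ub - lb) for d in range(dim)]
        for i in range(n_wolves)
    ]
```

Compared with uniform random sampling, the deterministic chaotic sequence gives a reproducible initial population whose coordinates are generated by an ergodic orbit rather than independent draws.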

B. Transverse-Longitudinal Crossover Strategy
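The operators are not defined here. One well-known formulation of paired horizontal (transverse) and vertical (longitudinal) crossover comes from the crisscross optimization algorithm: horizontal crossover blends the same dimension of two randomly paired individuals, vertical crossover blends two different dimensions within one individual, and a child replaces its parent only if it is fitter. The sketch below assumes that formulation; the function names and the default vertical-crossover probability `p_v = 0.6` are hypothetical choices.

```python
import random
from typing import Callable, List

Vector = List[float]


def horizontal_crossover(pop: List[Vector], f: Callable[[Vector], float],
                         lb: float, ub: float, rng: random.Random) -> None:
    """Transverse step: pair individuals at random and blend each shared dimension.

    Children replace their parents in place only when they have lower fitness.
    """
    order = list(range(len(pop)))
    rng.shuffle(order)
    for k in range(0, len(order) - 1, 2):
        i, j = order[k], order[k + 1]
        child_i, child_j = [], []
        for xi, xj in zip(pop[i], pop[j]):
            r1, r2 = rng.random(), rng.random()
            c1, c2 = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)
            # The c-terms can push outside the box, so clip each coordinate.
            child_i.append(min(max(r1 * xi + (1 - r1) * xj + c1 * (xi - xj), lb), ub))
            child_j.append(min(max(r2 * xj + (1 - r2) * xi + c2 * (xj - xi), lb), ub))
        if f(child_i) < f(pop[i]):
            pop[i] = child_i
        if f(child_j) < f(pop[j]):
            pop[j] = child_j


def vertical_crossover(pop: List[Vector], f: Callable[[Vector], float],
                       rng: random.Random, p_v: float = 0.6) -> None:
    """Longitudinal step: blend two different dimensions of the same individual.

    Assumes every dimension shares the same bounds, so the convex blend stays
    in range without normalization.
    """
    for i, x in enumerate(pop):
        if len(x) < 2 or rng.random() > p_v:
            continue
        d1, d2 = rng.sample(range(len(x)), 2)
        r = rng.random()
        child = x[:]
        child[d1] = r * x[d1] + (1 - r) * x[d2]
        if f(child) < f(x):
            pop[i] = child
```

Because both steps use greedy acceptance, no individual's fitness can worsen; horizontal crossover mainly spreads information between wolves, while vertical crossover can help a wolf escape when a single dimension is stuck.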

IV. Simulations and Results

A. Experiment Setup

B. Results and Discussion

V. Random Forest Hyperparameter Optimization

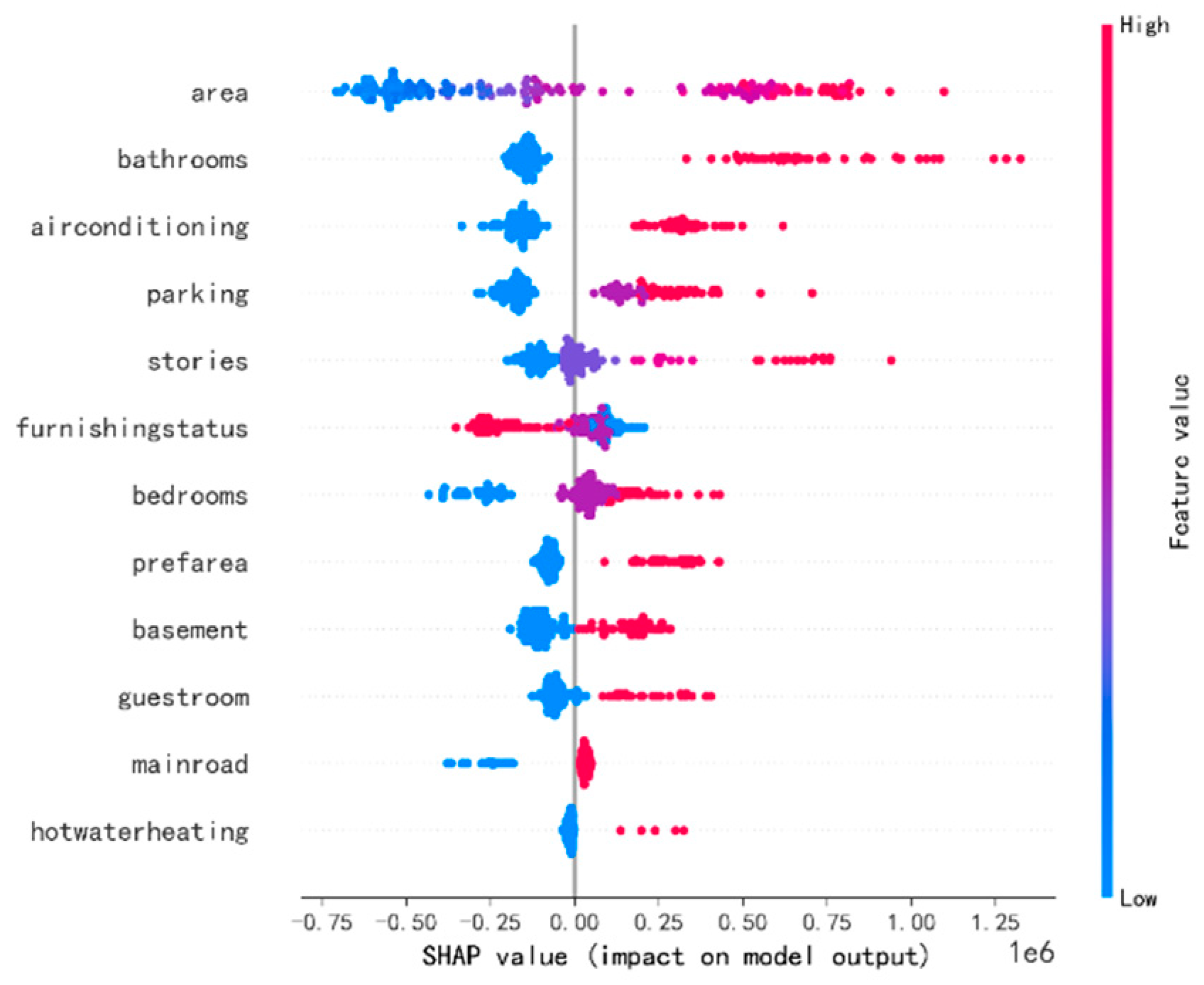
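The text does not state which random forest hyperparameters were tuned or over what ranges. The sketch below shows one common way to connect a continuous optimizer to this task, assuming scikit-learn's `RandomForestRegressor`: each wolf position in [0, 1]^3 is decoded into integer values for `n_estimators`, `max_depth` and `min_samples_split`, and its fitness is the mean 5-fold cross-validated RMSE. The search space, the names `SEARCH_SPACE`, `decode` and `rf_fitness`, and the use of cross-validation are hypothetical.

```python
from typing import Dict, Sequence, Tuple

# Hypothetical search space: the tuned hyperparameters and their ranges are
# assumptions; the keys are standard RandomForestRegressor arguments.
SEARCH_SPACE: Dict[str, Tuple[int, int]] = {
    "n_estimators": (50, 500),
    "max_depth": (2, 30),
    "min_samples_split": (2, 20),
}


def decode(position: Sequence[float]) -> Dict[str, int]:
    """Map a wolf position in [0, 1]^3 to integer hyperparameters."""
    params = {}
    for z, (name, (lo, hi)) in zip(position, SEARCH_SPACE.items()):
        z = min(max(z, 0.0), 1.0)  # clip stray coordinates into [0, 1]
        params[name] = int(round(lo + z * (hi - lo)))
    return params


def rf_fitness(position: Sequence[float], X, y, cv: int = 5) -> float:
    """Mean cross-validated RMSE of a forest built from `position` (lower is better)."""
    # Imported here so that decode() can be used without scikit-learn installed.
    from sklearn.ensemble import RandomForestRegressor
    from sklearn.model_selection import cross_val_score

    model = RandomForestRegressor(random_state=0, **decode(position))
    scores = cross_val_score(model, X, y, cv=cv,
                             scoring="neg_root_mean_squared_error")
    return float(-scores.mean())
```

Any box-constrained minimizer over [0, 1]^3, including a GWO variant, can then use `rf_fitness` as its objective. Scoring on cross-validation folds rather than on the training set keeps the optimizer from simply rewarding overfit forests.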

VI. SHAP Analysis
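As background for this section, SHAP attributes a model's prediction to its input features using Shapley values. The sketch below computes them exactly by enumerating every feature coalition and filling the absent features from a baseline input, which is only one of several conventions for defining a "missing" feature. The cost is exponential in the number of features, so this only illustrates the definition; for tree ensembles such as random forests, the `shap` package's `TreeExplainer` computes these values efficiently. The function name `shapley_values` and the baseline convention are assumptions.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence


def shapley_values(f: Callable[[List[float]], float], x: Sequence[float],
                   baseline: Sequence[float]) -> List[float]:
    """Exact Shapley attribution of f(x) relative to f(baseline)."""
    n = len(x)

    def value(subset: set) -> float:
        # Features in the coalition take their values from x, the rest from baseline.
        return f([x[k] if k in subset else baseline[k] for k in range(n)])

    phi = []
    for i in range(n):
        others = [k for k in range(n) if k != i]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                s = set(coalition)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi
```

For a linear model the attribution of feature i reduces to w_i·(x_i - b_i), and for any model the values sum to f(x) - f(baseline), which is the additivity property that SHAP summary plots rely on.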

VII. Future Work

VIII. Conclusion


| Function | Name | Search Range | Dimension | Optimal Value |
|---|---|---|---|---|
| F1 | Sphere | [-100, 100] | 30 | 0 |
| F2 | Schwefel 2.22 | [-10, 10] | 30 | 0 |
| F3 | Schwefel 1.2 | [-100, 100] | 30 | 0 |
| F4 | Schwefel 2.21 | [-100, 100] | 30 | 0 |
| F5 | Rosenbrock | [-30, 30] | 30 | 0 |
| F6 | Step | [-100, 100] | 30 | 0 |
| F7 | Rastrigin | [-5.12, 5.12] | 30 | 0 |
| F8 | Ackley | [-32, 32] | 30 | 0 |
| F9 | Griewank | [-600, 600] | 30 | 0 |
| F10 | Penalized | [-50, 50] | 30 | 0 |
| F11 | Michalewicz | [-100, 100] | 30 | 0 |
| F12 | Levy | [-10, 10] | 30 | 0 |
| F13 | Easom | [-100, 100] | 30 | 0 |
| F14 | Bird | [-100, 100] | 30 | 0 |
| F15 | Rotated Hyper-Ellipsoid | [-30, 30] | 30 | 0 |
| F16 | Weierstrass | [-100, 100] | 30 | 0 |
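For readers reproducing the experiments, the standard textbook definitions of four of the listed functions are sketched below. The table does not state whether shifted, rotated or otherwise modified variants were used, so these common forms are an assumption.

```python
import math
from typing import Sequence


def sphere(x: Sequence[float]) -> float:
    """F1: sum of squares; global minimum 0 at the origin."""
    return sum(v * v for v in x)


def rastrigin(x: Sequence[float]) -> float:
    """F7: highly multimodal; global minimum 0 at the origin."""
    return 10.0 * len(x) + sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) for v in x)


def ackley(x: Sequence[float]) -> float:
    """F8: nearly flat outer region around a deep central basin; minimum 0 at the origin."""
    n = len(x)
    mean_sq = sum(v * v for v in x) / n
    mean_cos = sum(math.cos(2.0 * math.pi * v) for v in x) / n
    return -20.0 * math.exp(-0.2 * math.sqrt(mean_sq)) - math.exp(mean_cos) + 20.0 + math.e


def griewank(x: Sequence[float]) -> float:
    """F9: the product term couples the dimensions; minimum 0 at the origin."""
    s = sum(v * v for v in x) / 4000.0
    p = math.prod(math.cos(v / math.sqrt(i)) for i, v in enumerate(x, start=1))
    return s - p + 1.0
```

Sphere is unimodal and mainly tests convergence speed, whereas Rastrigin, Ackley and Griewank contain many local minima and therefore test an optimizer's ability to escape them.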

| Method | MAE (train) | RMSE (train) | MAE (test) | RMSE (test) |
|---|---|---|---|---|
| Default | 285109 | 398584 | 957916 | 1357261 |
| GWO | 413922 | 547712 | 922632 | 1262471 |
| SCGWO | 448753 | 616299 | 917518 | 1238437 |
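The tuned models have higher training errors but lower test errors than the default configuration; one plausible reading is that tuning reduced overfitting. The short calculation below quantifies the differences using only the values in the table; `relative_reduction` is a helper introduced for illustration.

```python
# Error values copied from the table above (same units as the table).
results = {
    "Default": {"mae_train": 285109, "rmse_train": 398584, "mae_test": 957916, "rmse_test": 1357261},
    "GWO": {"mae_train": 413922, "rmse_train": 547712, "mae_test": 922632, "rmse_test": 1262471},
    "SCGWO": {"mae_train": 448753, "rmse_train": 616299, "mae_test": 917518, "rmse_test": 1238437},
}


def relative_reduction(baseline: float, new: float) -> float:
    """Percentage by which `new` is lower than `baseline` (positive = improvement)."""
    return 100.0 * (baseline - new) / baseline


for ref in ("Default", "GWO"):
    for metric in ("mae_test", "rmse_test"):
        pct = relative_reduction(results[ref][metric], results["SCGWO"][metric])
        print(f"SCGWO vs {ref}, {metric}: {pct:.2f}% lower")

# Ratio of test to train RMSE as a rough indicator of the generalization gap.
for name, r in results.items():
    print(f"{name}: test/train RMSE ratio = {r['rmse_test'] / r['rmse_train']:.2f}")
```

With these values, SCGWO's test RMSE is 8.75% below the default model's and 1.90% below GWO's, and its test MAE is 4.22% and 0.55% lower, respectively. The test-to-train RMSE ratio falls from about 3.4 for the default model to about 2.0 for SCGWO.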

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).