1. Introduction

Time series forecasting is a critical challenge with significant practical implications across many domains, such as economics, energy, weather, traffic monitoring, and healthcare [1,2,3,4]. Economics, in particular, has a longstanding interest in accurately predicting economic time series. Financial markets, which inherently generate time series data, have been studied extensively over the past decades, primarily to uncover deeper insights into market trends. As researchers gain a better understanding of market behavior, they can develop more effective investment strategies and thereby improve financial decision-making. However, the efficient market hypothesis (EMH) [5] posits that asset prices reflect all available information, which in theory renders them unpredictable because new information is assimilated instantly. Despite this, empirical forecasting studies suggest that financial markets combine efficient and inefficient behavior and are therefore, to some extent, predictable [6,7].

Time series forecasting primarily aims to project future values from historical data, often by exploiting long-term or short-term patterns that recur in the data. Over the years, the field has explored various methodologies to capture these patterns, including linear and nonlinear time series models and artificial intelligence techniques. In the economic domain, however, the characteristics of the data complicate the application of these models. First, financial data are highly volatile and can exhibit extreme value changes, which undermines the assumption that patterns remain stable over time [8]. Second, compared with other types of time series, financial data typically exhibit more complex seasonality and more variable trends [9]. This variability is often exacerbated by sudden, unpredictable events, which further complicates the forecasting task. Together, these factors make economic forecasting particularly challenging.

Approaches to economic time series forecasting range from econometric theory and statistical methodologies to artificial intelligence models. Statistical methods such as the autoregressive integrated moving average (ARIMA) model [10,11] and the semi-functional partial linear model [12] have been employed in this field. These models rest on a solid mathematical foundation and offer strong interpretability [13], but their predictions are often imprecise because economic data are nonlinear and irregular.

In response to the complexity of economic time series, neural networks and support vector machines (SVMs) have shown considerable success in modeling nonlinear relationships, offering robust alternatives to traditional linear models [14]. Recurrent neural networks (RNNs) and long short-term memory (LSTM) networks have become particularly prevalent because their feedback connections maintain an internal memory that lets them extract temporal dependencies from time series data [15,16]. More recently, transformer-based models have been applied to this task; their attention mechanisms learn global context and long-term dependencies, deepening the analysis of time series [17,18].

As deep learning expands its application in time series forecasting, natural language processing (NLP) techniques are increasingly transforming economic prediction. Textual data, which are available in many real-world economic forecasting applications, often contain nuanced information that may go beyond what numerical financial series alone provide [19]. Recent studies have therefore begun to use textual data in economic forecasting models to capture these additional insights. For instance, some models analyze large volumes of financial news to identify sentiment and factual information that may affect investor behavior and market trends [20].

In financial market forecasting, Farimani et al. [21] propose generating sentiment-based features over time and combining them with time series data to improve predictions. Similarly, Reis Filho et al. [22] focus on agricultural commodity prices, using a low-dimensional representation of domain-specific text enriched with selected keywords; this addresses high dimensionality and data sparsity and improves forecast accuracy. Baranowski et al. [23] take a different approach, constructing a tone shock measure from European Central Bank communications to predict monetary policy decisions. In cryptocurrency forecasting, Erfanian et al. [24] apply machine learning methods such as SVR, OLS, ensemble learning, and MLP to examine how macroeconomic, microeconomic, technical, and blockchain indicators relate to Bitcoin prices over short and long horizons.

These studies demonstrate the potential of NLP to enhance time series forecasting by incorporating textual data alongside traditional economic indicators. However, most existing approaches reduce textual data to specific aspects, such as sentiment polarity, tone, or keyword-based representations, neglecting the rich, nuanced information embedded in the full text. This narrow scope limits their ability to capture the nonlinear and qualitative effects of news and other unstructured data sources. Incorporating full news content into financial forecasting enriches the input with additional context, which can improve the adaptability and accuracy of predictive models and lets them better reflect the multifaceted, real-world interactions that drive market behavior. A more comprehensive framework is therefore needed, one that leverages both unstructured textual data and structured time series to establish a robust and realistic forecasting methodology.

This paper introduces a novel approach to economic time series forecasting that integrates textual information directly into the predictive framework as an additional variable. Unlike sentiment-centric methods, this approach fully leverages the richness of textual data, such as economic news and reports, to extract predictive signals absent from numerical data alone. By treating textual data as an extension of time series variables, the proposed method provides a unified framework for modeling the interplay between textual and numerical inputs. The primary contributions of this study are as follows:

(1) Multimodal Attention-Based Integration: The proposed method employs an inverted attention mechanism to directly model interactions between textual data and multivariate time series. By treating text as an additional variable, the model captures intricate relationships between historical price movements and textual information, enabling more accurate and robust economic forecasts.

(2) Seamless Integration of Textual and Numerical Data: This study uses temporally matched historical price and news datasets to construct multimodal datasets. By combining the contextual depth of textual data with the temporal patterns of price data, the proposed method enhances forecasting performance.

(3) Comprehensive Experimental Validation: Extensive experiments on economic datasets demonstrate the effectiveness of the proposed approach. Results highlight the model’s ability to outperform traditional forecasting techniques by leveraging both textual and numerical inputs, offering deeper insights into market dynamics.
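To make contribution (1) more concrete, the following is a minimal NumPy sketch of treating text as an additional variate token in an inverted (iTransformer-style) embedding. It is not the authors' implementation: the function names, the random weights, the single attention head, the 64-dimensional token size, the 768-dimensional text embedding (assumed here because it is the hidden size of BERT-base encoders such as FinBERT), and the choice to project only the price tokens to the forecast horizon are all illustrative assumptions. The full iTransformer also stacks several blocks with layer normalization and feed-forward layers, which are omitted.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def build_tokens(prices, text_emb, w_price, w_text):
    """Inverted embedding: one token per price variate plus one token for the news text.

    prices:   (L, N) lookback window with L time steps and N price variates
    text_emb: (E,) embedding of the news text from a pretrained language encoder
    w_price:  (L, D) maps each variate's entire history to a D-dim token
    w_text:   (E, D) maps the text embedding into the same D-dim token space
    """
    price_tokens = prices.T @ w_price            # (N, D)
    text_token = (text_emb @ w_text)[None, :]    # (1, D): text as an extra "variate"
    return np.concatenate([price_tokens, text_token], axis=0)  # (N + 1, D)

def variate_attention(tokens, w_q, w_k, w_v):
    # Single-head scaled dot-product attention across variate tokens, so the
    # (N + 1) x (N + 1) weight matrix relates price variates and the text token.
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    weights = softmax(q @ k.T / np.sqrt(k.shape[-1]), axis=-1)
    return weights @ v, weights

def forecast_head(mixed, n_price, w_out):
    # Assumption: only the price-variate tokens are projected to the H-step horizon.
    return (mixed[:n_price] @ w_out).T           # (H, N)

# Illustrative shapes: 96-day window, 3 price series, 768-dim text embedding, 12-day horizon.
rng = np.random.default_rng(0)
L, N, E, D, H = 96, 3, 768, 64, 12
tokens = build_tokens(rng.standard_normal((L, N)), rng.standard_normal(E),
                      rng.standard_normal((L, D)) / np.sqrt(L),
                      rng.standard_normal((E, D)) / np.sqrt(E))
mixed, weights = variate_attention(tokens, *(rng.standard_normal((D, D)) / np.sqrt(D) for _ in range(3)))
forecast = forecast_head(mixed, N, rng.standard_normal((D, H)) / np.sqrt(D))
print(tokens.shape, weights.shape, forecast.shape)  # (4, 64) (4, 4) (12, 3)
```

The key design point the sketch illustrates is that attention operates over variates rather than time steps, so the text token can attend to, and be attended by, each price series directly.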

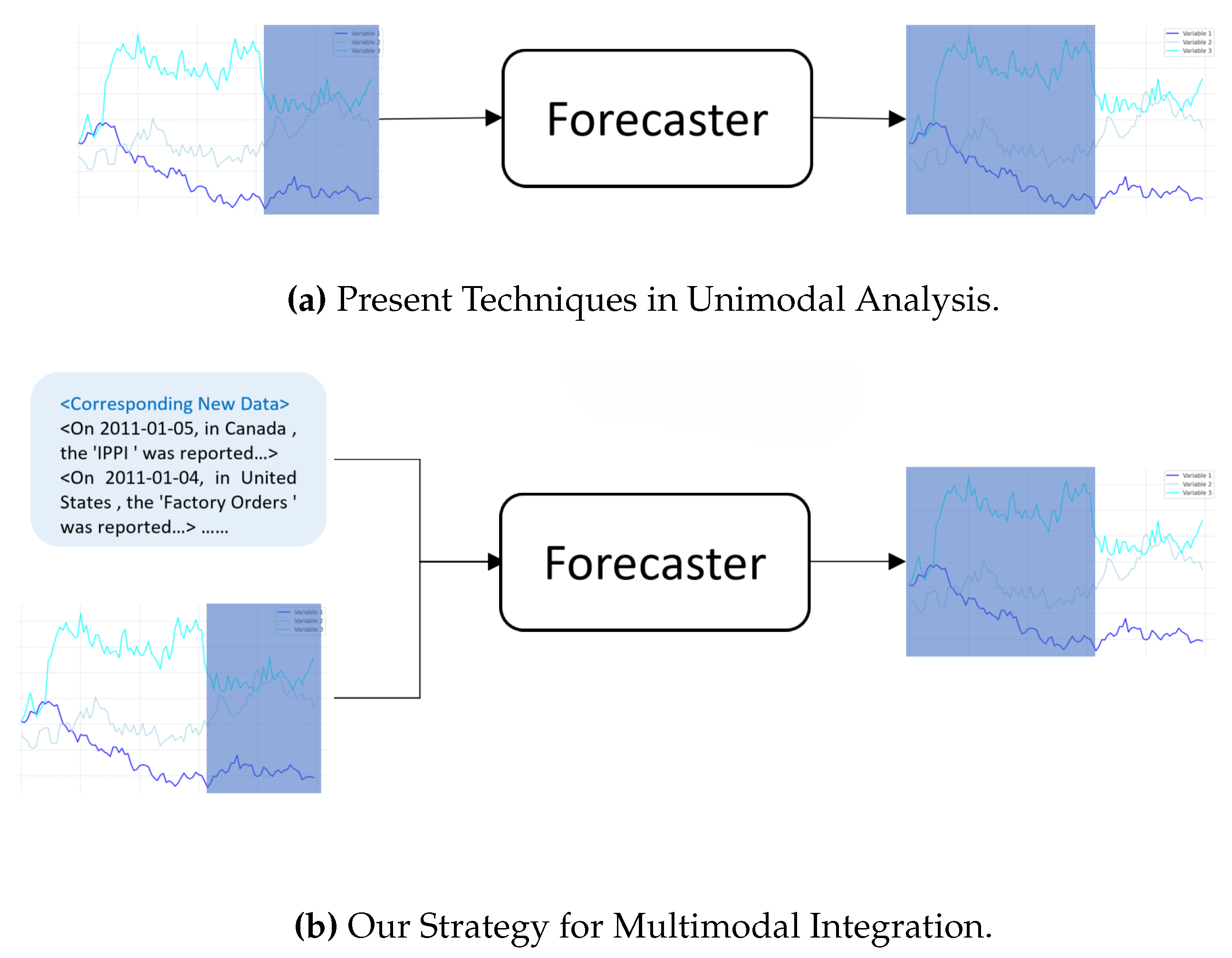

Figure 1 compares (a) the existing unimodal approach with (b) our multimodal learning method, illustrating how integrating text lets the model draw on diverse data sources and extract additional predictive information for time series forecasting.

The remainder of this paper is organized as follows. Section 2 provides a detailed exploration of existing methods in time series forecasting. Section 3 describes the proposed method and outlines the framework in detail. Section 4 discusses the datasets, presents the experimental results, and explores some notable findings. Finally, Section 5 concludes the paper.

4. Experiment

4.1. Datasets

In the economic domain, despite the abundance of news text and historical price data, publicly available datasets that directly pair these two types of information are scarce, especially for analyzing the impact of textual news on economic indicators. To validate the proposed approach, we therefore constructed two specialized datasets.

The first dataset targets gold price forecasting and captures the interactions between key economic events in major global economies (Canada, Japan, the US, Russia, the European Union, and China) and fluctuations in gold prices. Covering the period from January 2019 to December 2023, it reflects how economic news from these influential regions affects the volatile gold market.¹ The second dataset targets forex forecasting and compiles relevant economic information² from the US, Britain, Japan, and other developed countries. It includes news on all available indicators, covering past economic data³ and volatility assessments, and thus provides a comprehensive view of global forex dynamics.

The original structured data, illustrated in Table 1, could not be used directly because their tabular format is incompatible with language models, which are primarily designed to process and generate natural language text. Therefore, for both datasets, the construction process involved the following steps:

Data Extraction and Transformation: We extracted raw data from existing public sources, comprising the date of the economic event, the country, event type, and associated economic indicators (actual and previous values).

Descriptive Text Generation: For each record, detailed descriptive text was generated using a predefined template. This template incorporates the event’s date, name, the current reported indicator value, and its comparison to the previous value. For example, for January 1, 2019, the entry reads: “On 01/01/19, the Caixin Manufacturing PMI for December was reported at 49.70, down from the previous value of 50.20, indicating a decrease of 0.50.” This approach not only provides the numerical data but also emphasizes the significance of the change in indicators.

Change Calculation: To highlight the importance of fluctuations in economic indicators, we calculated the difference between reported values and previous values, incorporating this variance directly into each event’s textual description.

Alignment with Historical Prices: We aligned these textual descriptions with corresponding historical price data in the time dimension. For dates on which no event-related text was available, we inserted a placeholder phrase (“no news”) to maintain dataset completeness and temporal continuity.
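The text-generation, change-calculation, and alignment steps above can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the template wording is modeled on the single example sentence given, and the helper names (`split_value`, `describe_event`, `align_with_prices`), the handling of unit suffixes such as "K", the wording for unchanged values, and the joining of several same-day releases are hypothetical assumptions.

```python
import re

MONTHS = {"JAN": "January", "FEB": "February", "MAR": "March", "APR": "April",
          "MAY": "May", "JUN": "June", "JUL": "July", "AUG": "August",
          "SEP": "September", "OCT": "October", "NOV": "November", "DEC": "December"}

def split_value(raw):
    # Hypothetical helper: separates the number from an optional unit suffix such as "K".
    m = re.fullmatch(r"\s*(-?\d+(?:\.\d+)?)\s*([A-Za-z%]*)\s*", raw)
    if m is None:
        raise ValueError(f"cannot parse indicator value: {raw!r}")
    return float(m.group(1)), m.group(2)

def describe_event(day, event, current, previous):
    """Render one indicator release as text, modeled on the paper's example sentence."""
    *name_parts, month = event.split()       # "Caixin Manufacturing PMI DEC" -> name + "DEC"
    name, period = " ".join(name_parts), MONTHS.get(month.upper(), month)
    cur, unit = split_value(current)
    prev, _ = split_value(previous)
    diff = cur - prev                        # the "change calculation" step
    head = f"On {day}, the {name} for {period} was reported at {cur:.2f}{unit}"
    if diff == 0:
        return f"{head}, unchanged from the previous value of {prev:.2f}{unit}."
    direction, noun = ("up", "an increase") if diff > 0 else ("down", "a decrease")
    return (f"{head}, {direction} from the previous value of {prev:.2f}{unit}, "
            f"indicating {noun} of {abs(diff):.2f}{unit}.")

def align_with_prices(price_dates, events):
    """Pair each price date with its event text; dates without events get "no news"."""
    by_date = {}
    for day, text in events:
        by_date.setdefault(day, []).append(text)
    # Assumption: several releases on the same date are joined with a space.
    return [(d, " ".join(by_date[d]) if d in by_date else "no news") for d in price_dates]
```

Applied to the first row of Table 1, `describe_event("01/01/19", "Caixin Manufacturing PMI DEC", "49.7", "50.2")` reproduces the example sentence quoted above.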

Ultimately, we developed two datasets tailored to financial market analysis. The first dataset focuses exclusively on gold prices, consisting of 1352 samples. The second dataset encompasses foreign exchange indices from three regions, totaling 2347 samples. Each dataset offers a structured compilation of the relevant financial data paired with detailed economic event descriptions to enhance the analysis of market behaviors and trends.

4.2. Evaluation Metrics

To assess forecasting performance, we adopt four evaluation metrics: Mean Squared Error (MSE), Root Mean Square Error (RMSE), Mean Absolute Error (MAE), and Mean Absolute Percentage Error (MAPE). MSE squares the errors and therefore penalizes large errors heavily, which makes it sensitive to outliers. RMSE, the square root of MSE, expresses the error magnitude in the units of the original data. MAE weights all errors linearly, so it is less dominated by a few large errors, while MAPE expresses errors as a percentage of the actual values, indicating the average relative deviation. These metrics are defined as follows:

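$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$$

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|$$

$$\mathrm{MAPE} = \frac{100\%}{n}\sum_{i=1}^{n}\left|\frac{y_i - \hat{y}_i}{y_i}\right|$$

where $y_i$ and $\hat{y}_i$ denote the actual and predicted values, respectively, and $n$ is the total number of samples.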
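A minimal NumPy sketch of the four metrics as defined above; the function name `forecast_metrics` is illustrative, and it assumes the actual and predicted values are passed as equally shaped arrays. MAPE is undefined when any actual value is zero, and it becomes unstable when actual values are close to zero, which matters if the series are standardized before evaluation.

```python
import numpy as np

def forecast_metrics(y_true, y_pred):
    """Return MSE, RMSE, MAE, and MAPE (in percent) for paired arrays."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_true - y_pred
    mse = float(np.mean(err ** 2))
    return {
        "MSE": mse,
        "RMSE": float(np.sqrt(mse)),
        "MAE": float(np.mean(np.abs(err))),
        # Relative error per sample, averaged and expressed as a percentage.
        "MAPE": float(np.mean(np.abs(err / y_true)) * 100.0),
    }
```

For example, actual values `[1, 2, 4]` and predictions `[1, 1, 5]` give MSE = MAE = 2/3 and MAPE = 25%.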

4.3. Experimental Settings

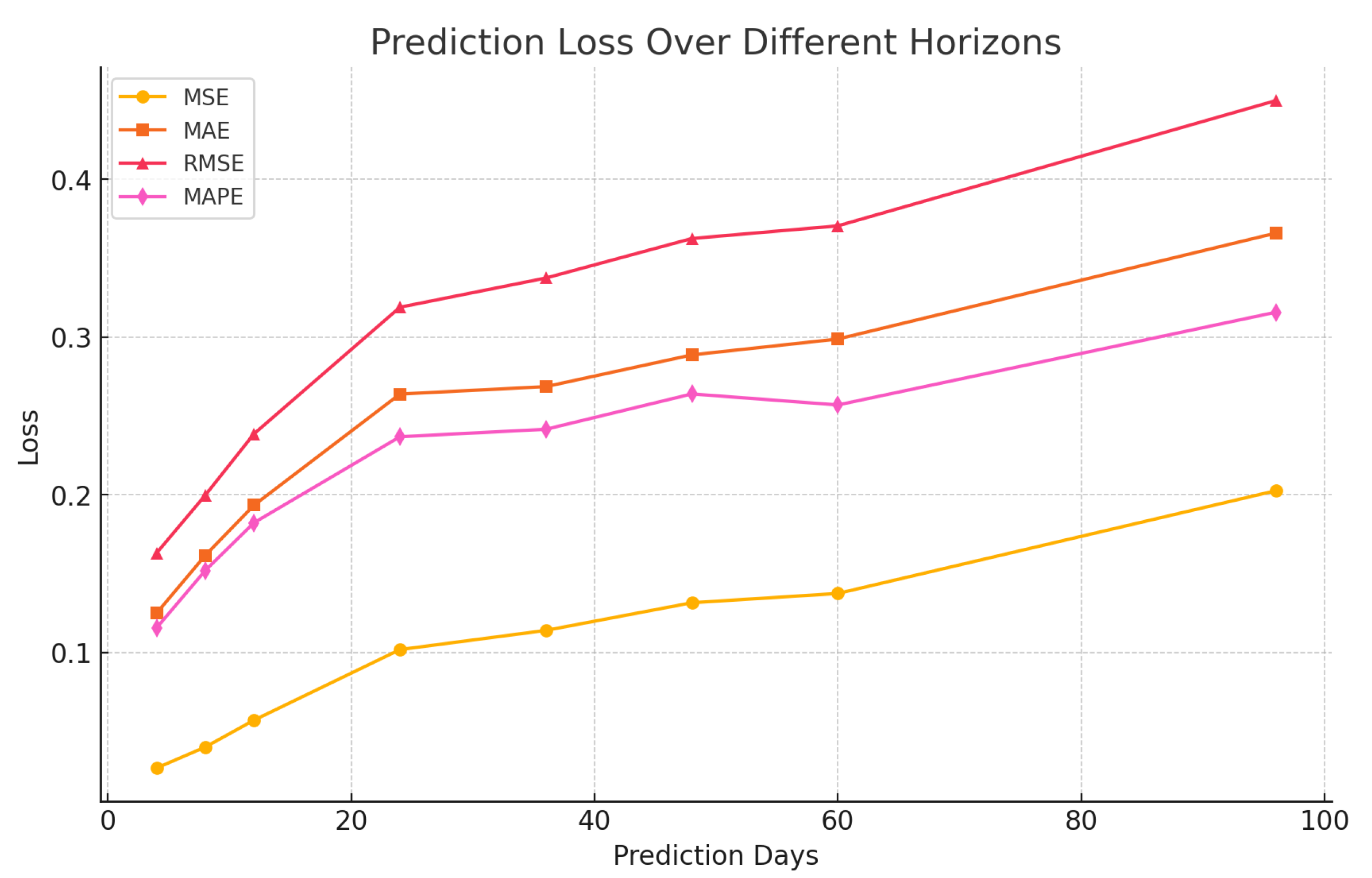

The proposed framework is trained with the MSE loss function and optimized with Adam [39], initialized with a learning rate of . Because our datasets are small compared with mainstream benchmark configurations, we evaluated several prediction horizons (in days) to determine the most practical forecast horizon for economic forecasting. As illustrated in Figure 4, based on the performance metrics and the practical utility of the predictions, we selected a 12-day prediction horizon. The historical window of the time series was set to 96 days, providing sufficient temporal context for generating accurate and actionable forecasts.
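A minimal sketch of how a 96-day lookback and a 12-day horizon turn a daily series into training pairs. It assumes stride-1 windows and direct multi-step targets (all 12 future values predicted at once); `make_windows` is a hypothetical helper, not the authors' data loader.

```python
import numpy as np

def make_windows(series, lookback=96, horizon=12):
    """Slice a (T, N) series into (input, target) pairs for direct multi-step forecasting."""
    series = np.asarray(series, dtype=float)
    if series.ndim == 1:
        series = series[:, None]   # treat a univariate series as N = 1
    T = series.shape[0]
    n = T - lookback - horizon + 1
    if n <= 0:
        raise ValueError("series is too short for the requested lookback and horizon")
    X = np.stack([series[i:i + lookback] for i in range(n)])                       # (n, lookback, N)
    Y = np.stack([series[i + lookback:i + lookback + horizon] for i in range(n)])  # (n, horizon, N)
    return X, Y
```

Each input window ends exactly where its 12-step target begins, so no target value leaks into the corresponding input.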

As described in Section 3.2, we incorporate only the most recent day's news as textual input. Aligning textual inputs with the full historical window would produce text embeddings whose dimensionality far exceeds that of the time series features, creating an imbalance between the two modalities and hindering effective fusion.
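The imbalance can be made concrete with a small sketch. It assumes, hypothetically, one text embedding per trading day stored row-wise and a 768-dimensional embedding (the hidden size of BERT-base encoders such as FinBERT); `select_text_input` is an illustrative name. With the full 96-day window, the text would contribute 96 × 768 = 73,728 features per sample, whereas each price variate contributes only 96 values; using the last day alone reduces the text to 768 features.

```python
import numpy as np

def select_text_input(day_embeddings, window_end, lookback=96, last_day_only=True):
    """Text features for the lookback window covering rows [window_end - lookback, window_end)."""
    if last_day_only:
        return day_embeddings[window_end - 1]              # (E,): most recent day's news only
    # Alternative argued against in the text: every day's embedding in the window, flattened.
    return day_embeddings[window_end - lookback:window_end].reshape(-1)  # (lookback * E,)

emb = np.zeros((200, 768))        # hypothetical: one 768-dim embedding per trading day
print(select_text_input(emb, 96).shape)                       # (768,)
print(select_text_input(emb, 96, last_day_only=False).shape)  # (73728,)
```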

4.4. Baselines

To evaluate the effectiveness of the proposed MM-iTransformer, we compare its performance with the baseline iTransformer model. This analysis highlights the added value of incorporating textual information into economic forecasting through our multimodal approach.

In addition to the iTransformer comparison, we employ two classical baselines. First, we use the ARIMA model [40], a well-established approach to time series forecasting, to assess the advantages of transformer-based architectures over traditional statistical models. Second, we include the random walk model [41], a widely used benchmark in financial forecasting, to establish a reference point and quantify the predictive improvements achieved by the proposed MM-iTransformer framework.
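The random walk benchmark can be sketched in a few lines. The paper does not state whether a drift term is used, so this minimal version assumes a no-drift random walk, whose forecast for every future step is the last observed value; `random_walk_forecast` is an illustrative name.

```python
import numpy as np

def random_walk_forecast(history, horizon=12):
    """No-drift random walk: every future step repeats the last observed value.

    history: (T,) or (T, N) array; returns (horizon,) or (horizon, N).
    """
    history = np.asarray(history, dtype=float)
    return np.repeat(history[-1:], horizon, axis=0)
```

Because this forecast requires no fitting, any model that cannot beat it on a given dataset is adding no usable predictive information there.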

4.5. Experimental Results

This study compares the MM-iTransformer framework with the iTransformer, ARIMA, and the random walk model on the Forex and Gold-price datasets. We focus primarily on MSE, since the transformer models are trained with an MSE loss, and additionally report MAE, RMSE, and MAPE. The results, presented in Table 2, show the following:

Comparison between MM-iTransformer and iTransformer: The MM-iTransformer, which integrates textual data with historical price data, outperformed the iTransformer on all four metrics for both datasets. On the Forex dataset, it reduced the MSE from 0.022 to 0.019, a 13.64% reduction. On the Gold-price dataset, the MSE fell from 0.065 to 0.057, a 12.31% reduction. These results highlight the benefit of incorporating textual data into forecasting models, improving the MM-iTransformer's accuracy and robustness.
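The reported percentages follow from the relative reduction (baseline − proposed) / baseline × 100, computed on the rounded MSE values in Table 2. A minimal check, with `relative_reduction` as an illustrative name:

```python
def relative_reduction(baseline, proposed):
    """Percentage reduction of an error metric relative to the baseline."""
    return (baseline - proposed) / baseline * 100.0

print(f"Forex MSE: {relative_reduction(0.022, 0.019):.2f}%")       # Forex MSE: 13.64%
print(f"Gold-price MSE: {relative_reduction(0.065, 0.057):.2f}%")  # Gold-price MSE: 12.31%
```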

Single-variable and Multi-variable Prediction Performance: MM-iTransformer achieved the lowest MSE in both the single-variable (Gold-price) and multi-variable (Forex) settings, indicating that it uses textual data effectively. The text provides additional predictive signals, enabling the model to capture market dynamics more accurately than with historical price data alone.

Comparison with Classical Models: MM-iTransformer achieved the lowest MSE, RMSE, and MAPE on both datasets, although ARIMA recorded a slightly lower MAE on the Forex dataset (0.092 versus 0.094). On the Gold-price dataset, both transformer models outperformed ARIMA and the random walk model on every metric. On the Forex dataset, however, the iTransformer trailed the random walk model on MSE, MAE, and RMSE, possibly because it struggles to adapt to abrupt market changes, a scenario in which the random walk model, which assumes price continuity, may have an advantage.

In summary, the MM-iTransformer framework advances economic forecasting by leveraging multimodal data, in particular by integrating textual information. These results support the potential of deep learning techniques that combine diverse data types to improve financial market prediction.

Ablation study: To assess the impact of temporally aligned news on prediction accuracy, we conducted an ablation study by randomizing the order of news texts within the time series window. This change disrupts the contextual relevance of the news data, simulating scenarios where financial news is not aligned with historical market prices. By removing this alignment, we could isolate and examine the specific effect of temporal relevance of textual inputs on forecasting performance.
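The paper does not give the exact shuffling procedure, so the following is a minimal sketch of one reading: the daily news column is permuted with a fixed seed before windows are built, while the price series stays in chronological order. The function name and the seed are illustrative assumptions.

```python
import numpy as np

def shuffle_text_alignment(daily_texts, seed=0):
    """Return the daily news texts in a random order so they no longer match their dates."""
    rng = np.random.default_rng(seed)
    perm = rng.permutation(len(daily_texts))
    # Prices are left untouched; only the text assigned to each date changes.
    return [daily_texts[i] for i in perm]
```

A fixed seed keeps the misaligned dataset reproducible across the MM-iTransformer runs being compared.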

As shown in Table 3, randomizing the news texts clearly degrades performance relative to temporally aligned news. On the Gold-price dataset, the MSE rises from 0.057 to 0.069, which is also higher than that of the text-free iTransformer (0.065); on the Forex dataset, it rises from 0.019 to 0.021. This indicates that misaligned, non-contextual news does not contribute meaningfully to prediction accuracy and may even reduce it. The rise in error underscores the critical role of temporal alignment: relevant, timely news is what improves forecasting accuracy.

Statistical Significance Analysis of Textual Data Integration: To assess whether the gains from integrating textual data are statistically significant, we applied the Diebold-Mariano (DM) test to compare the forecasts of the MM-iTransformer with those of the text-free iTransformer. Table 4 summarizes the DM statistics for the Gold-price and Forex datasets.

The DM test results show statistically significant improvements in forecasting accuracy when textual data are integrated. The Gold-price dataset yielded a DM statistic of 2.030 (p = 0.043), significant at the 5% level, and the Forex dataset a DM statistic of 3.010 (p = 0.003), significant at the 1% level. The accuracy gains of the MM-iTransformer, which incorporates textual data, over the iTransformer, which relies solely on historical price data, are therefore unlikely to be due to chance.
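A minimal sketch of the Diebold-Mariano test under squared-error loss, assuming the errors of both models are supplied as aligned one-dimensional arrays. The sign convention (positive when the candidate model has the lower mean squared error) matches the positive statistics in Table 4 but is an assumption, as are the rectangular-window long-run variance for multi-step forecasts and the use of the standard normal for the p-value; small-sample corrections exist and are not applied here. How the authors pooled errors across horizons and variates is not specified.

```python
import math
import numpy as np

def diebold_mariano(e_base, e_model, horizon=1):
    """Diebold-Mariano test with squared-error loss.

    e_base, e_model: forecast errors of the baseline and the candidate model.
    A positive statistic means the candidate has the lower mean squared error.
    """
    d = np.asarray(e_base, dtype=float) ** 2 - np.asarray(e_model, dtype=float) ** 2
    n = d.size
    dc = d - d.mean()
    # Long-run variance of d: autocovariances up to lag horizon - 1 (rectangular window).
    lrv = np.dot(dc, dc) / n
    for k in range(1, horizon):
        lrv += 2.0 * np.dot(dc[k:], dc[:-k]) / n
    if lrv <= 0:
        raise ValueError("non-positive long-run variance estimate")
    dm = d.mean() / math.sqrt(lrv / n)
    p_value = math.erfc(abs(dm) / math.sqrt(2.0))   # two-sided, standard normal
    return dm, p_value
```

Swapping the two error arrays flips the sign of the statistic and leaves the p-value unchanged.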

These findings provide statistical evidence for the hypothesis that textual integration enables forecasting models to capture complex market dynamics more effectively than models relying on time series data alone, reinforcing the value of multimodal approaches for improving prediction accuracy.

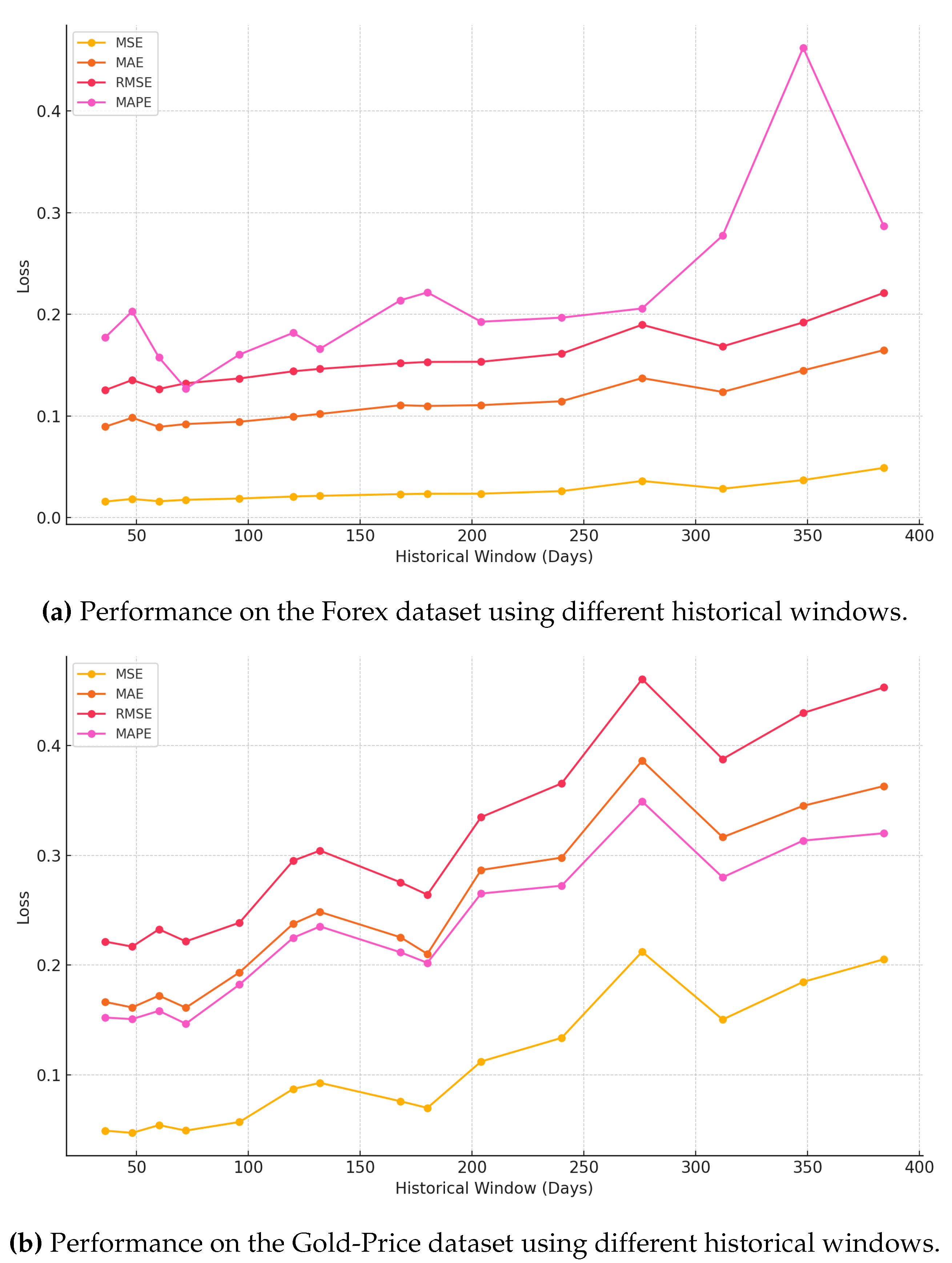

Analysis of Historical Window Lengths: To assess the stability of model performance, we experimented with historical window lengths ranging from 36 to 384 days on the Gold-price and Forex datasets. As shown in Figure 5, shorter windows generally yield lower loss than longer ones, with the 96-day window achieving the best performance. Extending the window beyond 96 days increased errors, as the additional data are older and less relevant, which is detrimental for short-term forecasting.

The sizes of our datasets (1352 samples for gold prices and 2347 for Forex) also guided our choice of window length to avoid overfitting. Larger windows risk models memorizing specific features of the data instead of generalizing from underlying patterns, which reduces their effectiveness on unseen data. Conversely, we did not opt for smaller windows, as they could miss economic cycles and trends that are crucial for robust predictions. The chosen 96-day window balances these concerns, capturing essential market dynamics without the noise and overfitting associated with longer windows, which makes it well suited to our dataset constraints.
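The dataset-size argument can be quantified by counting how many stride-1 training windows each lookback leaves, assuming each of the 1352 and 2347 samples is one daily row and counting before any train/validation/test split. The lookback grid below is illustrative; only its endpoints (36 and 384) come from the text.

```python
def available_windows(n_rows, lookback, horizon=12):
    """Number of (input, target) pairs a stride-1 sliding window can produce."""
    return max(n_rows - lookback - horizon + 1, 0)

# Illustrative grid within the 36-384 day range; 1352 and 2347 are the dataset sizes.
for lookback in (36, 96, 192, 384):
    print(lookback, available_windows(1352, lookback), available_windows(2347, lookback))
```

Under these assumptions, moving from a 96-day to a 384-day window shrinks the gold dataset from 1245 to 957 windows, while the input projection of an inverted embedding (a linear map from the window length to the token dimension) grows fourfold, which is consistent with the overfitting concern above.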

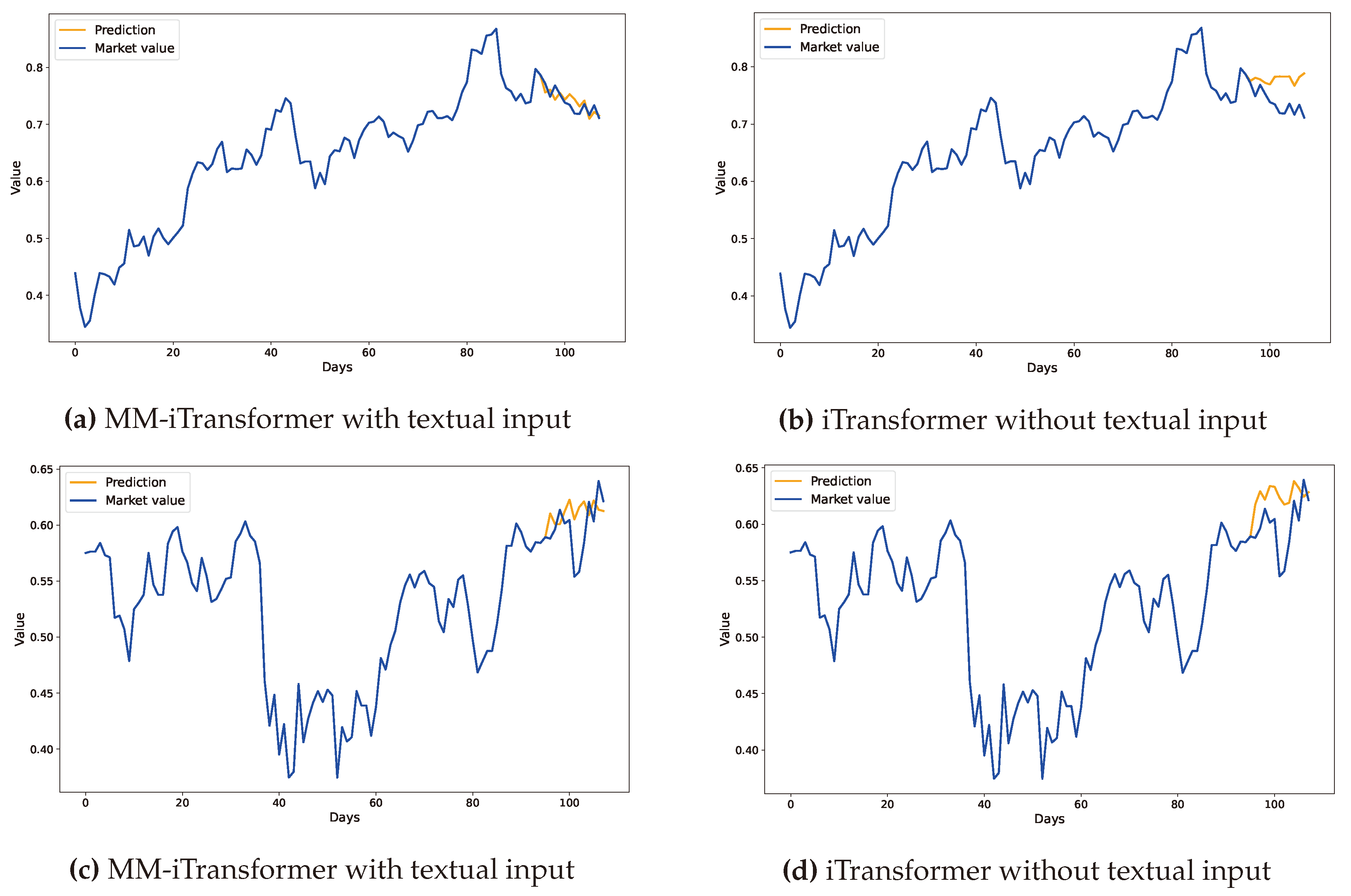

Example Forecasting Results: Figure 6 compares the forecasts of the MM-iTransformer and the iTransformer on the Forex dataset. The MM-iTransformer, which incorporates textual inputs alongside historical price data, tracks actual market trends more closely and captures future price movements more precisely than the iTransformer, which relies solely on historical price data. These examples illustrate how integrating relevant textual information can enhance a model's ability to anticipate market dynamics.

5. Conclusion

This study demonstrates the potential of integrating textual data into economic time series forecasting with the proposed MM-iTransformer. Incorporating news text, which reflects the inherently multimodal nature of economic forecasting, markedly improves predictive accuracy, as evidenced by the reduction in MSE on both the gold price and foreign exchange datasets.

However, we acknowledge certain limitations in this study, particularly concerning the scope and synchronization of the datasets used. While our results validate the utility of textual information for forecasting, the relatively limited scope of our datasets may not fully encompass the diverse and region-specific characteristics of global financial markets. Furthermore, the challenge of aligning textual data with time series data is exacerbated by the scarcity of large, well-structured, and temporally synchronized datasets in the financial sector.

To overcome these limitations, we plan to develop a larger, more comprehensive dataset in future work. This enhanced dataset will aim to better synchronize region-specific and real-time news data with financial time series, providing a more robust basis for evaluating and validating the proposed method. Through these efforts, we hope to further advance the field of multimodal economic forecasting by leveraging a broader spectrum of synchronized data, thereby enriching our understanding and predictive capabilities within this complex domain.

Figure 1.

Time series forecasting framework.


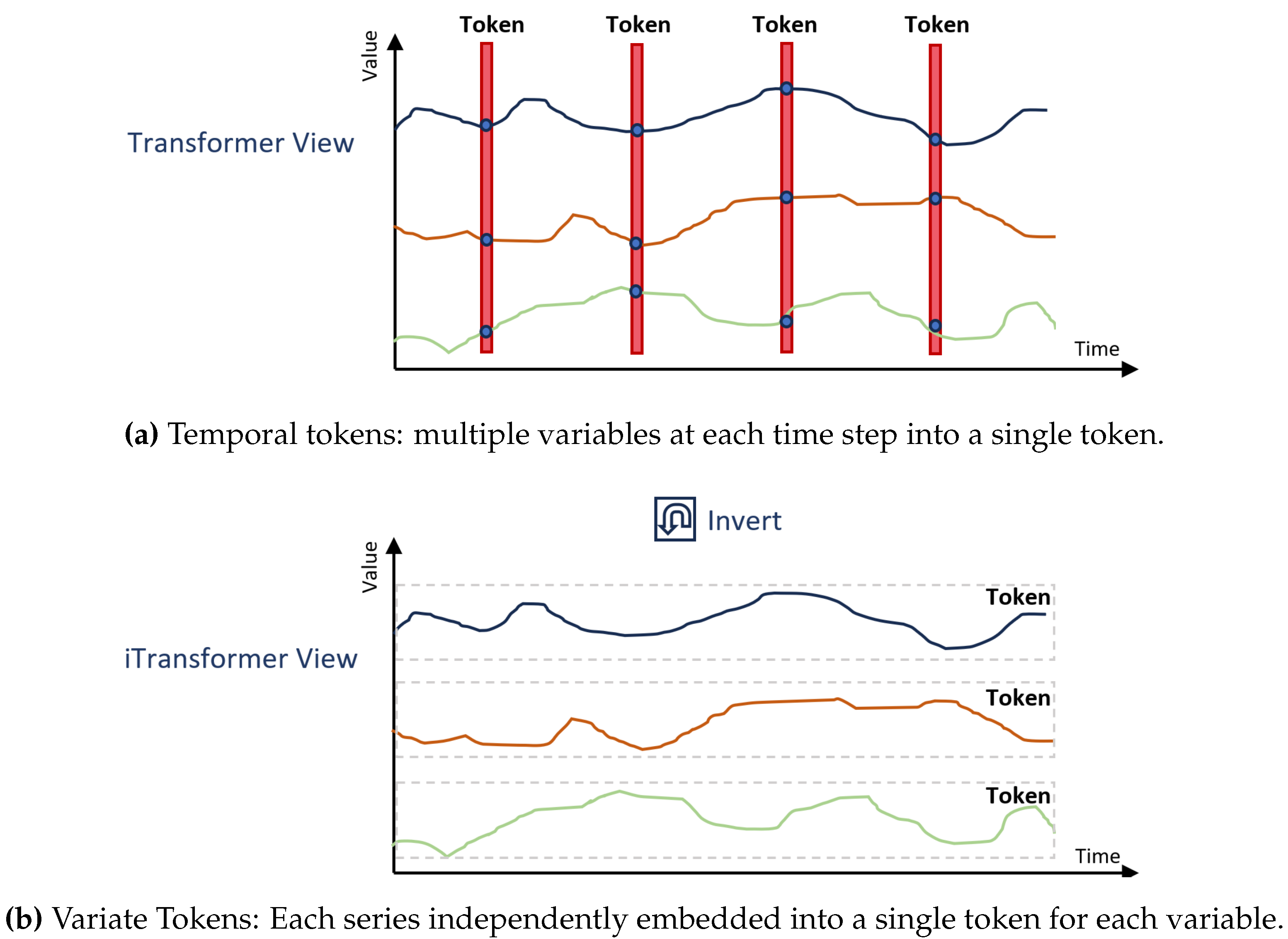

Figure 2.

Comparison of temporal and variate token embedding approaches in vanilla Transformer and iTransformer.


Figure 3.

The Workflow of MM-iTransformer: FinBERT Embed generates textual embeddings, Inverted Embed represents price data as variate tokens, and the Multivariate Correlations Map captures interactions for forecasting.


Figure 4.

Using a historical window of days and forecast horizons days for MM-iTransformer.


Figure 5.

Comparison of model performance with different historical window lengths on the Forex and Gold-Price datasets for a 12-day forecast horizon.


Figure 6.

Comparison of predictions on the Forex dataset with a 96-day historical window and a 12-day forecast horizon; the vertical axis represents series values. Panels (a) and (c) show predictions from the MM-iTransformer, which integrates textual data alongside historical price inputs, while panels (b) and (d) show results from the iTransformer, which uses only historical price data.


Table 1.

Economic Indicators.


| Day | Country | Event | Current | Previous |
|---|---|---|---|---|
| 01/01/19 | CN | Caixin Manufacturing PMI DEC | 49.7 | 50.2 |
| 01/04/19 | US | Non Farm Payrolls DEC | 312K | 176K |
| 01/07/19 | CA | Ivey PMI s.a DEC | 59.7 | 57.2 |

Table 2.

Performance Comparison of Different Models on Forex and Gold-Price Datasets. The underlined values indicate the best performance.


| Models | Forex MSE | Forex MAE | Forex RMSE | Forex MAPE | Gold-price MSE | Gold-price MAE | Gold-price RMSE | Gold-price MAPE |
|---|---|---|---|---|---|---|---|---|
| MM-iTransformer (Ours) | 0.019 | 0.094 | 0.137 | 0.160% | 0.057 | 0.185 | 0.239 | 0.182% |
| iTransformer [32] | 0.022 | 0.102 | 0.147 | 0.189% | 0.065 | 0.207 | 0.254 | 0.196% |
| ARIMA [40] | 0.030 | 0.092 | 0.174 | 0.859% | 0.105 | 0.279 | 0.325 | 2.786% |
| Random Walk Model [41] | 0.020 | 0.094 | 0.142 | 9.495% | 0.110 | 0.288 | 0.331 | 19.727% |

Table 3.

Ablation Study on the Impact of Textual Data Temporal Alignment on Forecasting Accuracy.


| Setting | Forex MSE | Forex MAE | Forex RMSE | Forex MAPE | Gold-price MSE | Gold-price MAE | Gold-price RMSE | Gold-price MAPE |
|---|---|---|---|---|---|---|---|---|
| With random text | 0.021 | 0.099 | 0.146 | 0.201% | 0.069 | 0.215 | 0.263 | 0.205% |

Table 4.

Diebold-Mariano (DM) test results comparing the forecasting performance of MM-iTransformer vs. iTransformer. Significance levels: * p < 0.05, ** p < 0.01.


| Dataset | DM Test Statistic (p-value) |
|---|---|
| Gold-price | 2.030 (0.043*) |
| Forex | 3.010 (0.003**) |