1. Introduction

Artificial Intelligence (AI) is a field of computer science focused on the development of systems and algorithms capable of emulating human intelligence to perform tasks such as problem-solving, decision-making, and pattern recognition [1]. One of the significant applications of AI is in image analysis, also known as image recognition or computer vision. This involves the utilization of machine learning techniques, particularly deep neural networks, to analyze and interpret visual data, enabling computers to comprehend, classify, and make predictions based on images or videos [2,3].

The analysis of images with AI technology employs sophisticated algorithms to extract intricate patterns and features from images, enabling the accurate classification and differentiation of objects, structures, or characteristics within the images [4]. This technology finds applications in areas such as medical diagnosis, quality control, and autonomous systems [5]. An example of a tool that allows users to train AI to recognize patterns in images is the “Teachable Machine,” a tool developed by Google’s Creative Lab and available free of charge. This tool empowers individuals to train their own AI models without extensive coding knowledge, enabling machine learning-based AI to differentiate objects based on user-provided examples. This process contributes to democratizing and popularizing AI resources for a broader range of users [6,7].

The use of AI in image prediction has found widespread applications across various sectors. In the healthcare field, AI algorithms can assist medical professionals in disease diagnosis by analyzing medical images such as X-rays [8], MRI scans [9], and CT scans [10]. Furthermore, AI image prediction has been extensively utilized in industries such as manufacturing for quality control, agriculture for crop monitoring, and e-commerce for product recommendation systems. These applications rely on AI to analyze extensive datasets of images and provide valuable insights or predictions [11]. Overall, AI has revolutionized image prediction, enabling computers to comprehend visual data, and its applications span multiple sectors, enhancing efficiency, accuracy, and decision-making processes [12].

Histological images provide detailed visual representations of tissues at the cellular level, essential for understanding tissue structure and pathological conditions [13]. Similarly, histological images of hydrogels provide valuable insights into the structural characteristics of hydrogel materials, aiding in their analysis and applications in various fields, including biomedicine and materials science [14]. Staining involves the application of specialized dyes, such as hematoxylin and eosin, to the samples, enabling the visualization and differentiation of specific cellular structures and components for pathological analysis. These techniques are essential for enhancing morphological details and facilitating pathological identification in tissue samples [15,16].

Therefore, to explore a new application of AI for image interpretation, the present study aims to use the Google platform ‘Teachable Machine’ for the learning and prediction of histological cross-section images of hydrogel filaments, distinguishing mechanically stretched filaments from unstretched ones and discriminating among hydrogel formulations with distinct morphological characteristics. Hydrogels, like the ones studied here, have significant potential for applications in three-dimensional (3D) cell culture systems. Due to their biocompatibility, tunable mechanical properties, and ability to mimic the extracellular matrix, these materials can support cellular adhesion, proliferation, and differentiation. Such characteristics make them promising candidates for biomedical research, including the development of engineered tissues like nerves, tendons, and muscles.

2. Materials and Methods

2.1. Experimental Design, Filament Production from Hydrogels, and Electromechanical Tension

For the preparation of biopolymeric hydrogels, the concentrations of sodium alginate, gelatin, and calcium chloride were determined using the Chemoface® software with a central composite experimental design. Table 1 shows the seven selected combinations generated by the software. Dulbecco’s Modified Eagle Medium (DMEM) cell culture medium was chosen as the solubilizing agent. Each combination was therefore identified by the word DMEM followed by the generated combination number (e.g., DMEM-6).
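As a rough illustration of how a central composite design enumerates candidate combinations, the following minimal sketch generates coded design points for three factors. It assumes a standard rotatable design with a single center point; the actual settings used in Chemoface are not given in the text, and the function names and the concentration range passed to `decode` are hypothetical.

```python
from itertools import product


def central_composite_design(n_factors, alpha=None, n_center=1):
    """Return coded points (factorial, axial, center) of a central composite design."""
    if alpha is None:
        # Rotatable design: alpha = (number of factorial runs) ** 0.25
        alpha = (2 ** n_factors) ** 0.25
    # Two-level full factorial corners at coded levels -1 and +1
    factorial = [list(p) for p in product((-1.0, 1.0), repeat=n_factors)]
    # Axial (star) points: one factor at -alpha or +alpha, the others at 0
    axial = []
    for i in range(n_factors):
        for sign in (-1.0, 1.0):
            point = [0.0] * n_factors
            point[i] = sign * alpha
            axial.append(point)
    # Center point(s): every factor at its middle level
    center = [[0.0] * n_factors for _ in range(n_center)]
    return factorial + axial + center


def decode(coded, low, high):
    """Map a coded level to a real value, with -1 -> low and +1 -> high."""
    middle = (low + high) / 2.0
    half_range = (high - low) / 2.0
    return middle + coded * half_range


factors = ["sodium alginate", "gelatin", "calcium chloride"]
design = central_composite_design(len(factors))
for run, point in enumerate(design, start=1):
    print(run, [round(level, 3) for level in point])

# Hypothetical range (1 to 3, arbitrary units) used only to show the decoding step
print(decode(0.0, low=1.0, high=3.0))
```

Under these assumptions the design has 15 runs (8 factorial, 6 axial, 1 center), from which a subset of combinations could then be selected for preparation.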

The hydrogels were prepared from combinations of the biopolymers sodium alginate (CRQ, Brazil) and gelatin (Vetec, Brazil) following the protocol defined by the experimental design. At room temperature, a glass beaker (Pyrex®, USA) was placed on a magnetic stirring plate (Lucadema, Brazil), and sodium alginate was added for solubilization in each solvent, followed by heating. Gelatin was added to the same beaker, and the temperature was gradually increased to approximately 40°C for 30 minutes until complete solubilization and homogeneity were achieved. Subsequently, the hydrogels were stored at room temperature in 5 mL disposable luer-lock syringes (Rynco, Brazil) and manually extruded into glass containers containing a calcium chloride solution (Dinâmica, Brazil) as the crosslinking agent, following the wet spinning technique. A 14 gauge needle was used for extrusion. These filaments were immersed for approximately 10-15 minutes, washed with PBS, and then gently dried on paper towels.

Finally, using an electromechanical extensometer attached to a load cell (MTS Insight®, USA), the produced filaments were clamped at their ends and tested in triplicate until rupture. The samples were loaded at a speed of 10 mm/min, with the test controlled by the TestWorks® 4.10 software.

2.2. Light Microscopy and Histology of Hydrogel Filaments

The filaments produced using hydrogels based on the combinations of selected biopolymers were processed for evaluation under light microscopy. For this purpose, extruded filaments were used both before and after being stretched to their rupture point by an electromechanical extensometer. Thus, the analyzed biomaterials were divided into two groups: Unstretched Filaments (F) and Stretched Filaments (FE). Approximately 1 cm fragments of each filament were placed separately in 15 mL Falcon-type tubes and identified.

To begin the processing, the biomaterials were placed in methanol-Carnoy fixative solution, which consists of 60% methanol (J.T. Baker, USA), 30% chloroform (CRQ, Brazil), and 10% acetic acid (Merck, Germany), for 2 hours at room temperature. After removing the fixative, the samples underwent successive baths of decreasing ethanol concentration (100%, 90%, 80%), with a 40-minute interval between baths, and were kept in 70% ethanol overnight at 4°C. After this period, the samples were dehydrated using increasing ethanol concentrations (80%, 90%, and 100% twice), with a 40-minute interval between baths. Subsequently, the samples were cleared in an ethanol/xylene solution in a 1:1 ratio, followed by xylene 1 and then xylene 2, for 40 minutes in each bath. For embedding the filaments, infiltration with Paraplast (Sigma Life Science, Switzerland) was performed through two 1-hour baths (Paraplast 1 and Paraplast 2). After infiltration, the material was embedded in Paraplast blocks, allowed to solidify, and stored at room temperature.

Using the Vibratome 3000 plus equipment (The Vibratome Company, USA), the blocks were sectioned at a thickness of approximately 5 μm. The sections were deposited on glass slides, keeping longitudinal and transverse cuts separate, placed on a heated plate at approximately 40°C to initiate drying, and then kept in an oven at 40°C overnight. The slides were then stained with hematoxylin and eosin (H&E). The micrometric sections of the filaments were analyzed using a light microscope (Nikon, Japan) coupled with a digital camera (Digilab, Brazil) and digitally documented using ImageView software version 3.7.

2.3. Utilization of Artificial Intelligence as a Strategy for the Classification and Validation of Biomaterial Images

The light microscopy images of the sections obtained in the previous assay were subjected to a machine learning test, followed by prediction using the Teachable Machine AI tool.

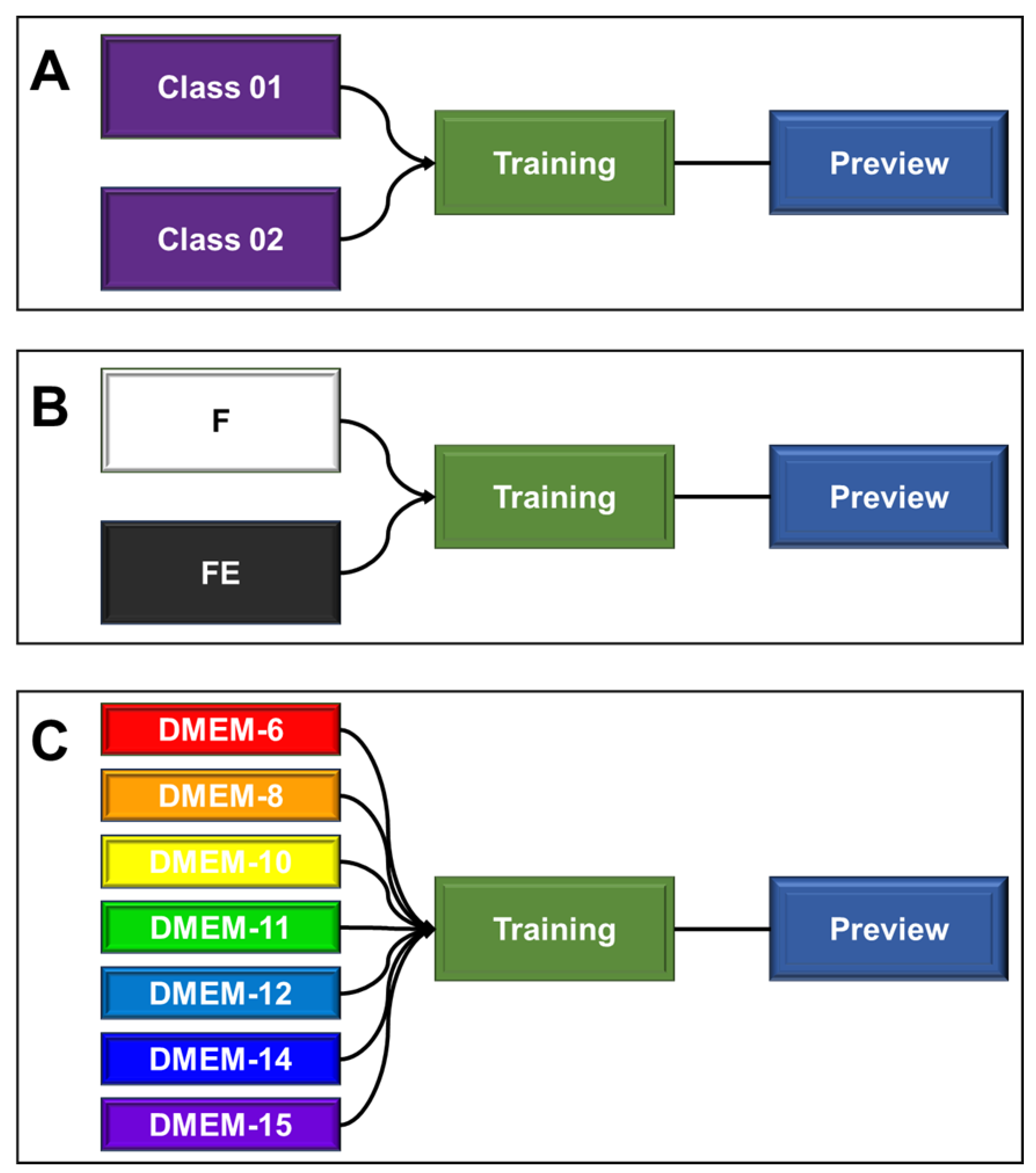

Figure 1 details the components of the tool used. In this case, the machine learning used for classification relies on categorical data (classes): images (“inputs”) must be provided together with their respective identifications. The algorithm is then trained, learning the parameters that relate the images to their corresponding classes, after which the trained model is ready to be validated. For this step, it is important to use a new set of images that were not used in training and that may or may not be associated with any of the trained classes. The tested images are then identified and classified with a confidence percentage (certainty) with respect to the classes on which the AI was trained (Figure 1A).
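The requirement that validation images be kept out of training can be sketched as a simple per-class split. This is a minimal illustration, not the procedure used in the study: the file names, class sizes, and the `split_by_class` helper are hypothetical.

```python
import random


def split_by_class(images_by_class, n_holdout, seed=0):
    """Split each class into training and held-out lists with no overlap."""
    rng = random.Random(seed)
    train, holdout = {}, {}
    for label, files in images_by_class.items():
        shuffled = sorted(files)   # copy in a fixed order before shuffling
        rng.shuffle(shuffled)      # reproducible shuffle for a given seed
        holdout[label] = shuffled[:n_holdout]
        train[label] = shuffled[n_holdout:]
    return train, holdout


# Hypothetical file names, for illustration only
images = {
    "F": [f"F_{i:03d}.png" for i in range(20)],
    "FE": [f"FE_{i:03d}.png" for i in range(20)],
}

train, holdout = split_by_class(images, n_holdout=5)
for label in images:
    # No image may appear in both the training and the held-out set
    assert set(train[label]).isdisjoint(holdout[label])
    print(label, len(train[label]), "training images,", len(holdout[label]), "held out")
```

Only the training lists would be uploaded to the tool as class samples; the held-out lists are reserved for the prediction step.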

For the training shown in Figure 1B, the classes were identified as “F” (unstretched filaments) and “FE” (stretched filaments). Each class was composed of 250 distinct images (captured with the 4x and 10x microscope objectives). After training, forty-seven images, which were not used in the construction of this trained image dataset, were used for prediction. The hypothesis under evaluation was whether the AI could identify and classify the images into two groups based on the morphologies of stretched and unstretched filaments.

For the training depicted in Figure 1C, the classes were identified as DMEM-6, DMEM-8, DMEM-10, DMEM-11, DMEM-12, DMEM-14, and DMEM-15. To challenge and identify the potential ‘sensitivity’ of prediction, the seven classes were provided with approximately seventy images (stretched and unstretched filaments) each. After training, forty-nine images that were not used in the construction of this trained image dataset were tested for prediction. The hypothesis under evaluation was whether the AI could identify and classify the images based on the seven distinct groups, determining which one they most resemble, guided by their morphologies.

During the AI prediction, the threshold for recognizing an image as belonging to a class was set at a confidence above 50%. After all predictions were made, the resulting values were compiled into a confusion matrix, a performance measure that compares the actual class of each image with the class predicted by the machine, displaying the distribution of records in terms of their actual and predicted classes. The matrix was created using the ChatGPT platform, with the programming done in Python. All acquired images used are available at this link.
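The thresholding and tabulation steps can be sketched in plain Python as follows. This is not the script used in the study: the confidence values are hypothetical, and leaving below-threshold predictions out of the matrix is an assumption made here for illustration.

```python
def predicted_label(confidences, threshold=0.5):
    """Return the top-scoring class if its confidence exceeds the threshold, else None."""
    label = max(confidences, key=confidences.get)
    return label if confidences[label] > threshold else None


def confusion_matrix(true_labels, predicted_labels, classes):
    """Count (true, predicted) pairs; rows are true classes, columns are predicted classes."""
    index = {name: i for i, name in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for true, predicted in zip(true_labels, predicted_labels):
        if predicted is None:
            continue  # assumption: unrecognized images are not tabulated
        matrix[index[true]][index[predicted]] += 1
    return matrix


# Hypothetical (true class, confidence per class) pairs
outputs = [
    ("F", {"F": 0.91, "FE": 0.09}),
    ("F", {"F": 0.40, "FE": 0.60}),
    ("FE", {"F": 0.12, "FE": 0.88}),
    ("FE", {"F": 0.50, "FE": 0.50}),  # exactly 50%: not above the threshold
]

true = [label for label, _ in outputs]
pred = [predicted_label(conf) for _, conf in outputs]
matrix = confusion_matrix(true, pred, ["F", "FE"])
print(pred)    # ['F', 'FE', 'FE', None]
print(matrix)  # [[1, 1], [0, 1]]
```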

3. Results and Discussion

3.1. Analysis of the Sections Obtained from Each Filament by Light Microscopy

The biopolymeric hydrogel filaments developed in this study were designed with a focus on achieving structural integrity and versatility for diverse applications. By combining sodium alginate and gelatin, cross-linked with calcium chloride, these filaments exhibit tunable mechanical and morphological properties. Such characteristics are crucial for their evaluation under different conditions, including mechanical stretching, to explore their potential use in biomedical applications, such as scaffolds for tissue engineering and 3D cell culture systems. This approach ensures that the materials can provide a robust platform for further investigation into their functionality and adaptability.

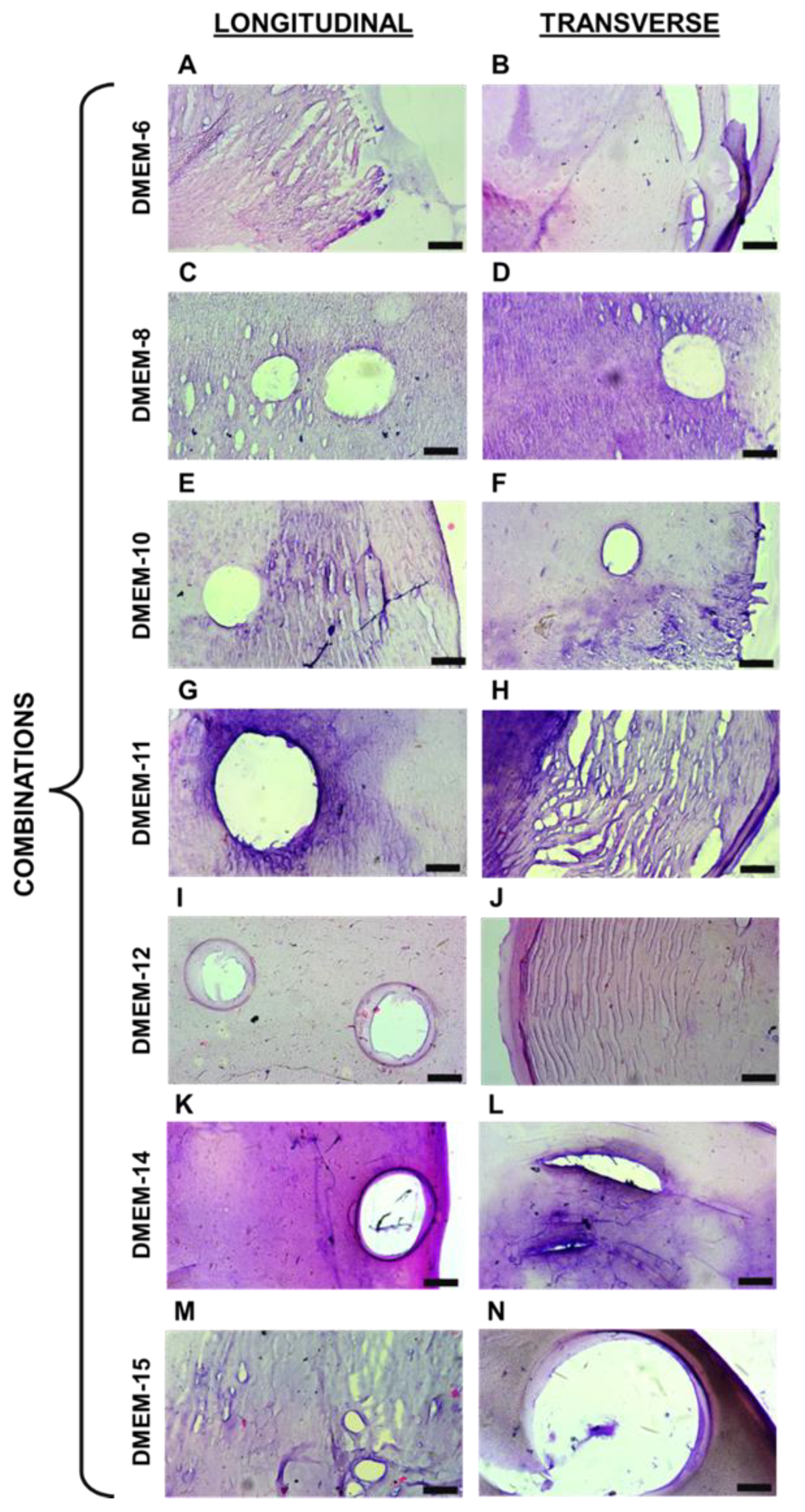

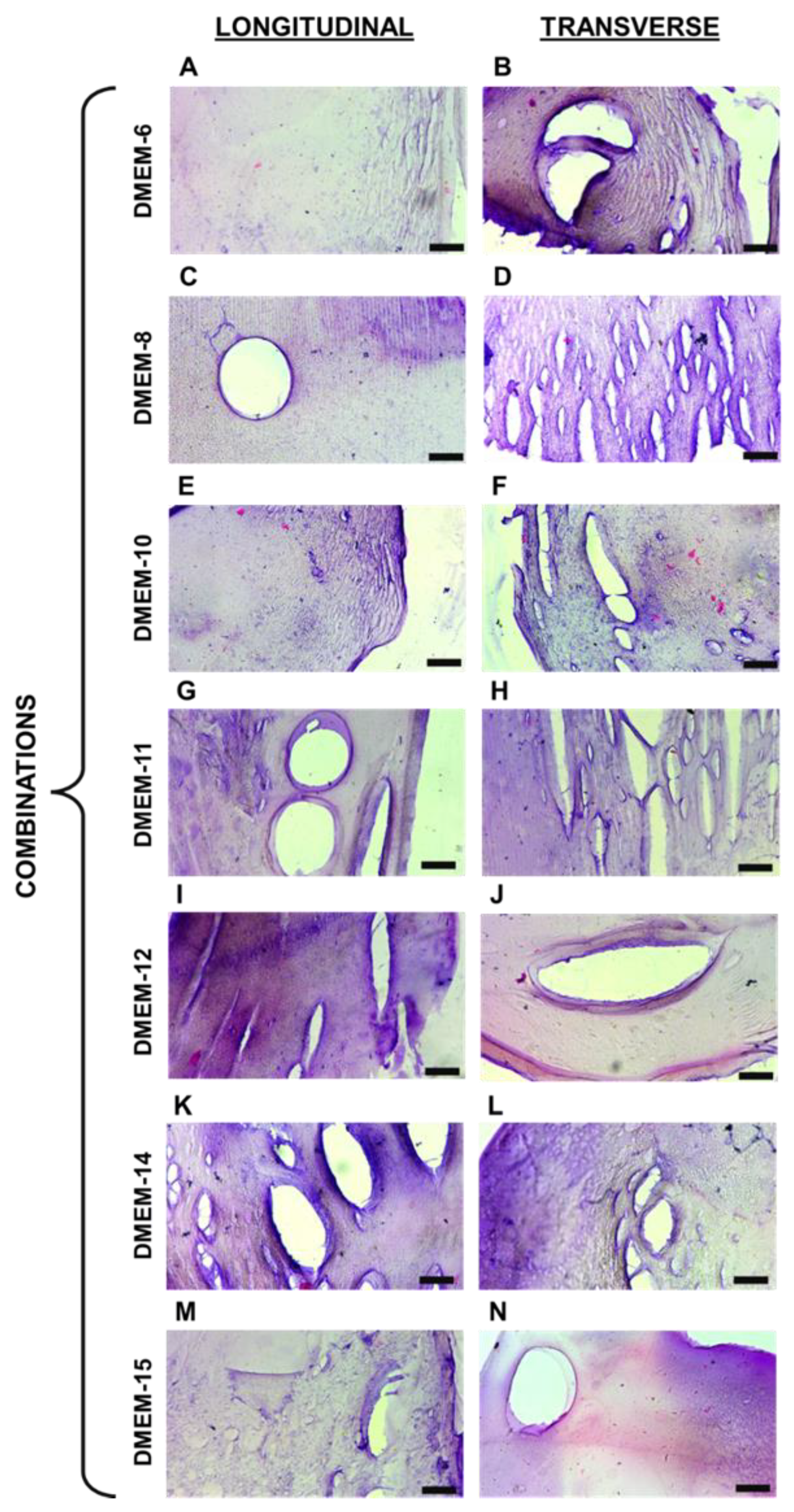

The composition and structural properties of these hydrogels were designed with versatility in mind. Their ability to sustain mechanical stress while maintaining structural integrity could be advantageous for supporting cell growth and tissue formation under dynamic conditions, such as those required for the regeneration of muscular or connective tissues.

Regarding the images captured from histological sections, it was initially noticeable that variations in the proportion of biopolymers composing the different hydrogels influenced the staining of each material. The dyes used have distinct charges: hematoxylin is positively charged and stains alginate [17,18], whereas eosin is negatively charged and primarily stains proteins such as gelatin [19]. It can be inferred that this factor accounts for the variations in staining among the acquired images. Comparing the images in Figure 2 and Figure 3, certain recurring structures can be observed in all the samples. For instance, there are numerous pores of various sizes, primarily visible through the 10× objective lens. In addition, the biopolymeric mesh differs between the stretched and unstretched filaments. For these types of biomaterials, particularly those based on alginate, the presence of pores is quite common, as previously observed [20], including when alginate is associated with other polymers such as gelatin [21,22], polyethylene glycol [23], and chitosan [24]. Another factor that may directly contribute to the behavior of these meshes is the concentration of the crosslinking agent used in each combination. Higher levels of calcium ions can produce distinct effects in the resulting hydrogel network, as previously shown by other authors [25]. Elevated calcium ion concentrations can accelerate crosslinking, affecting handling time and potentially altering mechanical properties [26]. However, the denser network that results can also lead to reduced swelling capacity and altered diffusion characteristics, as observed in other studies [27].

The observed differences in the structural and morphological characteristics of the hydrogel filaments, influenced by variations in biopolymer proportions and cross-linking density, suggest their potential for customizing scaffolds for specific 3D cell culture applications. For instance, hydrogels with higher porosity and tunable stiffness could be tailored for the cultivation of nerve or tendon cells, while more compact and elastic structures might be suitable for muscle tissue engineering.

3.2. AI-Based Class Prediction After Learning from Light Microscopy Filament Section Images

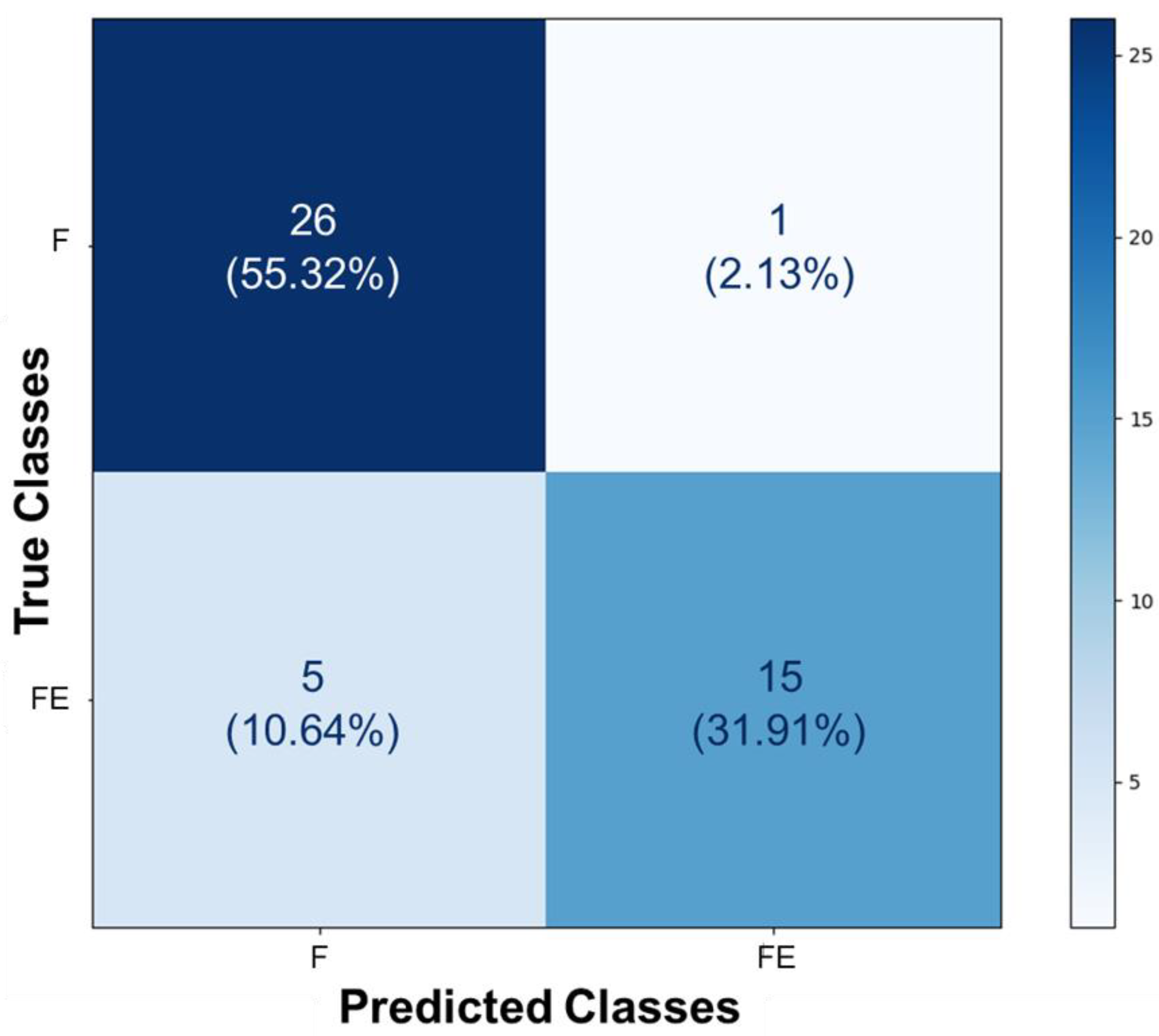

During image classification and prediction using the Teachable Machine tool, the results of the test identifying stretched and unstretched filaments were collected and compiled into the confusion matrix presented in Figure 4. ‘True Classes’ denotes the actual class of each image presented to the AI after its training, whereas ‘Predicted Classes’ denotes the class assigned to each image by the AI. The matrix indicates that twenty-six images belonging to class F (55.32%) were correctly identified as such, and fifteen images from class FE (31.91%) were correctly identified as belonging to that class. Five images from class FE (10.64%) were misclassified as F, and only one image from class F (2.13%) was misclassified as FE; overall, 41 of the 47 test images (87.23%) were classified correctly. This demonstrates that, even though these images exhibit some colorimetric similarities alongside the morphological differences discussed and highlighted in the previous section, the AI displayed sensitivity in distinguishing the classes as learned.
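The counts reported above are enough to derive summary metrics for the F versus FE test. The short sketch below does this arithmetic; the per-class recall and precision are derived here from the reported counts and are not figures stated in the original analysis.

```python
classes = ["F", "FE"]

# Rows: true class; columns: predicted class (counts as reported in the text)
matrix = [
    [26, 1],   # true F:  26 predicted F, 1 predicted FE
    [5, 15],   # true FE: 5 predicted F, 15 predicted FE
]

total = sum(sum(row) for row in matrix)
accuracy = sum(matrix[i][i] for i in range(len(classes))) / total


def recall(i):
    """Fraction of images truly in class i that were predicted as class i."""
    return matrix[i][i] / sum(matrix[i])


def precision(i):
    """Fraction of images predicted as class i that truly belong to class i."""
    return matrix[i][i] / sum(row[i] for row in matrix)


print(f"total images: {total}")       # total images: 47
print(f"accuracy: {accuracy:.4f}")    # accuracy: 0.8723
for i, name in enumerate(classes):
    print(f"{name}: recall={recall(i):.4f}, precision={precision(i):.4f}")
# F: recall=0.9630, precision=0.8387
# FE: recall=0.7500, precision=0.9375
```

Read this way, the errors are asymmetric: stretched filaments (FE) were more often mistaken for unstretched ones than the reverse.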

The ability of AI tools to classify and predict hydrogel characteristics could facilitate the selection of optimal formulations for specific biomedical purposes. In the context of 3D cell culture, this capability could streamline the development of scaffolds for applications ranging from regenerative medicine to the formation of complex tissues such as muscles and tendons.

Teachable Machine appears to be a reliable AI tool, particularly as it was developed by Google, a well-known and respected company in the field of technology and machine learning [28]. Moreover, it has been used by many developers and academics to successfully train and deploy image classification models, as evidenced by numerous tutorials and guides available online [29]. However, as with any tool, the accuracy and reliability of predictions made by a model trained using Teachable Machine depend on the quality of the training data and the model’s suitability for the specific task [30].

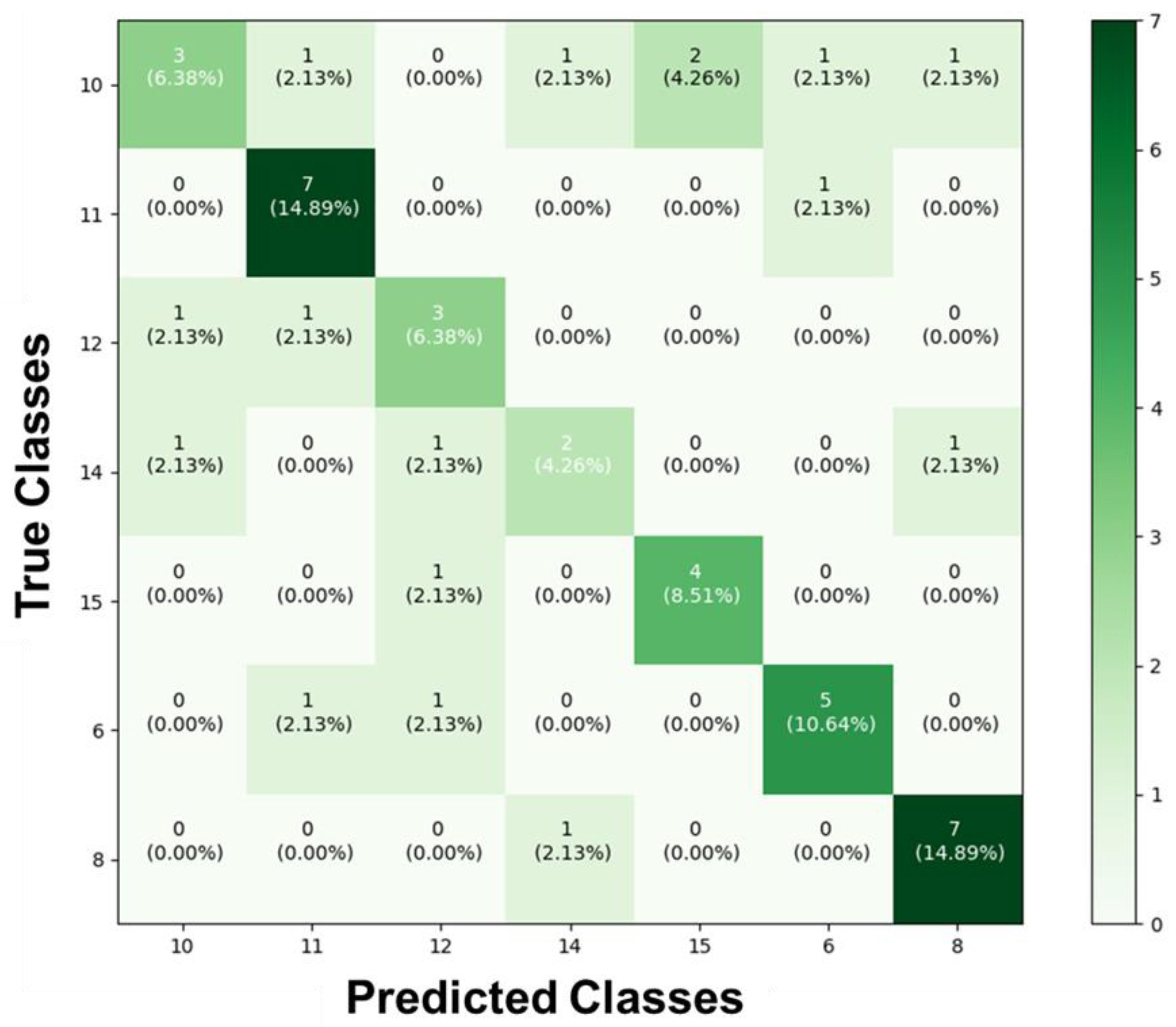

The results of the test to determine to which specific hydrogel the images of stretched and unstretched filaments belonged were likewise collected and compiled into the confusion matrix shown in Figure 5. It reveals a significant disparity in the AI’s predictions. Among all the groups presented and trained, substantial accuracy was achieved only for the stretched and unstretched filaments of DMEM-8 (14.89%) and DMEM-11 (14.89%). For the remaining classes, the predictions were confused, with different types of filaments misclassified in terms of the hydrogel to which they belonged.

In machine learning, overfitting occurs when a statistical model fits its training data too precisely, including the noise and random fluctuations in the data. This results in a model that performs well on the training data but poorly on new and unseen data, undermining its purpose [31,32]. This may be one of the reasons for the lack of consistency between the classes predicted by the AI and the actual classes of the images.
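The gap between training and held-out performance that characterizes overfitting can be demonstrated with a toy example. The sketch below uses a 1-nearest-neighbor classifier on synthetic data whose labels are pure noise; it is a stand-in chosen for illustration and is unrelated to the model inside Teachable Machine or to the filament images.

```python
import random


def nearest_neighbor_predict(train_points, train_labels, query):
    """Return the label of the training point closest to the query (1-NN)."""
    best = min(
        range(len(train_points)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(train_points[i], query)),
    )
    return train_labels[best]


def accuracy(train_points, train_labels, points, labels):
    """Fraction of points whose 1-NN prediction matches the given label."""
    hits = sum(
        nearest_neighbor_predict(train_points, train_labels, p) == y
        for p, y in zip(points, labels)
    )
    return hits / len(points)


rng = random.Random(42)


def make_noise_data(n):
    """Random 2D points with random labels: the features carry no real signal."""
    points = [(rng.random(), rng.random()) for _ in range(n)]
    labels = [rng.choice(["A", "B"]) for _ in range(n)]
    return points, labels


train_x, train_y = make_noise_data(200)
test_x, test_y = make_noise_data(200)

# The model memorizes its training set, so it scores perfectly there
train_acc = accuracy(train_x, train_y, train_x, train_y)
# On unseen data it has nothing real to rely on and falls to about chance level
test_acc = accuracy(train_x, train_y, test_x, test_y)
print(f"training accuracy: {train_acc:.2f}")
print(f"held-out accuracy: {test_acc:.2f}")
```

A large difference between the two numbers is the usual warning sign; comparing them requires a held-out set that the model never saw during training.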

Teachable Machine has been used in various studies involving imaging for different applications, such as tympanic membrane differentiation [29], melanoma tomographies [33], and plant pest-related diseases [34], as well as in studies focused on children’s education and development [7,35]. In the present work, the tool proved effective at distinguishing between the image categories F and FE after training, correctly classifying most of the test images (41 of 47). On the other hand, the AI’s performance was unsatisfactory in predicting the classes of filaments based on the type of hydrogel, encompassing both stretched and unstretched ones. This was partly due to the similarities in composition and, consequently, in the generated images, even though other attributes, such as micro- and nanomechanical properties, were distinct.

To improve Teachable Machine’s image prediction accuracy, the following measures can be considered: (i) an increase in the diversity and quantity of training data, representing an even wider range of hydrogel variations, could enhance the model’s capability to identify specific filament classes [36]; (ii) the incorporation of more advanced image preprocessing and segmentation techniques can assist in highlighting distinctive features in the images, making them more easily recognizable by the algorithm [37]; (iii) optimizing the parameters of the machine learning model, such as the choice of classification algorithm and neural network architecture, is essential to ensure more accurate and consistent performance [38]. Finally, a more detailed analysis of the model’s limitations and the types of errors it makes can guide specific adjustments to enhance the accuracy of image prediction.
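One inexpensive way to approach point (i) is geometric augmentation, in which flipped and rotated copies of each training image are added to the dataset. The sketch below is an assumption about how this could be done and is not a technique described in the study: it operates on a small nested list standing in for pixel values, and whether orientation changes are appropriate for longitudinal versus transverse sections would need to be judged case by case.

```python
def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def rotate_90_clockwise(image):
    """Rotate the image by 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def augment(image):
    """Return the 8 variants given by 4 rotations, each with and without a flip."""
    variants = []
    current = [list(row) for row in image]
    for _ in range(4):
        variants.append(current)
        variants.append(flip_horizontal(current))
        current = rotate_90_clockwise(current)
    return variants


# Tiny stand-in for an image: a 2 x 2 grid of pixel values
image = [
    [1, 2],
    [3, 4],
]

variants = augment(image)
print(len(variants))  # 8
for variant in variants:
    print(variant)
```

With real micrographs the same transformations would typically be applied with an imaging library before the enlarged set is used for training.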

4. Conclusions

The images obtained from histological cross-sections indicated that variations in the polymer proportions used in filament manufacturing were responsible for the differences in coloration observed in each. Furthermore, stretched filaments underwent changes in their conformation due to increased material compaction during the stretching process, which distinguished them from unstretched samples. The use of machine learning for analyzing light microscopy images of filament sections showed promising results, albeit with limitations in predicting specific classes. Teachable Machine’s AI demonstrated high accuracy in distinguishing between categories F and FE but encountered difficulties in accurately predicting the different filament classes based on the type of hydrogel. Therefore, to enhance Teachable Machine’s image analysis capacity, it is crucial to consider diversifying and expanding the training data, implementing advanced image preprocessing and segmentation techniques to highlight distinctive features, and optimizing machine learning model parameters, including the choice of classification algorithm and neural network architecture, to ensure more precise and consistent predictions.

Beyond their immediate use in histological studies, the hydrogels analyzed here represent a promising platform for 3D cell culture systems. By leveraging their customizable mechanical and morphological properties, they could support the formation of functional tissues for biomedical applications, including neural networks, tendons, and muscular systems. These findings underscore the broader potential of such materials in advancing tissue engineering and regenerative medicine.

Author Contributions

L.A. was responsible for conceptualization, literature review, state-of-the-art analysis, methodology design, results analysis, discussion, conclusions, manuscript drafting, and editing. A.M.F. contributed by developing the validation algorithm for AI-generated records and revising the manuscript. L.P.S. oversaw the experimental direction, provided resources, supervised the study, and participated in manuscript revision and editing.

Funding

This research was funded by the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior - Brazil (CAPES) - Finance Code 001 and also 23038.019088/2009-58. In addition, we appreciate the financial support of Conselho Nacional de Desenvolvimento Científico e Tecnológico (CNPq no. 311825/2021-4, 307853/2018-7, 408857/2016-1, 306413/2014-0, and 563802/2010-3), Fundação de Apoio à Pesquisa do Distrito Federal (FAPDF no. 193.001.392/2016), Empresa Brasileira de Pesquisa Agropecuária (Embrapa nos. 10.20.03.009.00.00, 23.17.00.069.00.02, 13.17.00.037.00.00, 21.14.03.001.03.05, 13.14.03.010.00.02, 12.16.04.010.00.06, 22.16.05.016.00.04, and 11.13.06.001.06.03), and Universidade Federal do Paraná (UFPR).

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within this article.

Acknowledgments

We thank all members of the LNANO of Embrapa for their help.

Conflicts of Interest

The authors declare that there is no conflict of interest regarding the publication of this paper. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

AI statement

Artificial intelligence was solely used for image analysis (Teachable Machine), grouping (Teachable Machine), and grammatical and orthographic corrections (ChatGPT), without being employed in any interpretative stage of the study.

References

- Soori, M.; Arezoo, B.; Dastres, R. Artificial Intelligence, Machine Learning and Deep Learning in Advanced Robotics, a Review. Cogn. Robot. 2023, 3, 54–70.

- Taye, M.M. Understanding of Machine Learning with Deep Learning: Architectures, Workflow, Applications and Future Directions. Computers 2023, 12.

- Dargan, S.; Kumar, M.; Ayyagari, M.R.; Kumar, G. A Survey of Deep Learning and Its Applications: A New Paradigm to Machine Learning. Arch. Comput. Methods Eng. 2020, 27, 1071–1092.

- Durkee, M.S.; Abraham, R.; Clark, M.R.; Giger, M.L. Artificial Intelligence and Cellular Segmentation in Tissue Microscopy Images. Am. J. Pathol. 2021, 191, 1693–1701.

- Zhao, Z.; Wu, J.; Li, T.; Sun, C.; Yan, R.; Chen, X. Challenges and Opportunities of AI-Enabled Monitoring, Diagnosis & Prognosis: A Review. Chinese J. Mech. Eng. English Ed. 2021, 34.

- Carney, M.; Webster, B.; Alvarado, I.; Phillips, K.; Howell, N.; Griffith, J.; Jongejan, J.; Pitaru, A.; Chen, A. Teachable Machine: Approachable Web-Based Tool for Exploring Machine Learning Classification. Conf. Hum. Factors Comput. Syst. - Proc. 2020.

- Yogendra Prasad, P.; Prasad, D.; Malleswari, N.; Shetty, M.N.; Gupta, N. Implementation of Machine Learning Based Google Teachable Machine in Early Childhood Education. Artic. Int. J. Early Child. Spec. Educ. 2022, 14, 2022.

- Salehi, A.W.; Baglat, P.; Gupta, G. Review on Machine and Deep Learning Models for the Detection and Prediction of Coronavirus. Mater. Today Proc. 2020, 33, 3896–3901.

- Subudhi, A.; Dash, P.; Mohapatra, M.; Tan, R.S.; Acharya, U.R.; Sabut, S. Application of Machine Learning Techniques for Characterization of Ischemic Stroke with MRI Images: A Review. Diagnostics 2022, 12.

- Al-Antari, M.A. Artificial Intelligence for Medical Diagnostics—Existing and Future AI Technology! Diagnostics 2023, 13, 1–3.

- Javaid, M.; Haleem, A.; Khan, I.H.; Suman, R. Understanding the Potential Applications of Artificial Intelligence in Agriculture Sector. Adv. Agrochem 2023, 2, 15–30.

- Lu, Y. Artificial Intelligence: A Survey on Evolution, Models, Applications and Future Trends. J. Manag. Anal. 2019, 6, 1–29.

- Hosseini, M.S.; Chan, L.; Tse, G.; Tang, M.; Deng, J.; Norouzi, S.; Rowsell, C.; Plataniotis, K.N.; Damaskinos, S. Atlas of Digital Pathology: A Generalized Hierarchical Histological Tissue Type-Annotated Database for Deep Learning. Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. 2019, 2019, 11739–11748.

- Xue, X.; Hu, Y.; Deng, Y.; Su, J. Recent Advances in Design of Functional Biocompatible Hydrogels for Bone Tissue Engineering. Adv. Funct. Mater. 2021, 31, 1–20.

- Salvi, M.; Michielli, N.; Molinari, F. Stain Color Adaptive Normalization (SCAN) Algorithm: Separation and Standardization of Histological Stains in Digital Pathology. Comput. Methods Programs Biomed. 2020, 193.

- Dibal, N.I.; Garba, S.H.; Jacks, T.W. Histological Stains and Their Application in Teaching and Research. Asian J. Heal. Sci. 2022, 8, 43–43.

- Barceló, X.; Eichholz, K.F.; Garcia, O.; Kelly, D.J. Tuning the Degradation Rate of Alginate-Based Bioinks for Bioprinting Functional Cartilage Tissue. Biomedicines 2022, 10, 1–14.

- Lee, J.H.; Kim, W.G.; Kim, S.S.; Lee, J.H.; Lee, H.B. Development and Characterization of an Alginate-Impregnated Polyester Vascular Graft. J. Biomed. Mater. Res. 1997, 36, 200–208.

- Wang, Z.; Kumar, H.; Tian, Z.; Jin, X.; Holzman, J.F.; Menard, F.; Kim, K. Visible Light Photoinitiation of Cell-Adhesive Gelatin Methacryloyl Hydrogels for Stereolithography 3D Bioprinting. ACS Appl. Mater. Interfaces 2018, 10, 26859–26869.

- Carvalho, B.S. Nanobiofabricação de Estruturas 3D Com Nanopartículas de Prata Antimicrobianas Utilizando Técnicas Manuais e Robóticas. Univ. Brasília, Brasília 2019, 1–94.

- Serafin, A.; Culebras, M.; Collins, M.N. Synthesis and Evaluation of Alginate, Gelatin, and Hyaluronic Acid Hybrid Hydrogels for Tissue Engineering Applications. Int. J. Biol. Macromol. 2023, 233, 123438.

- Vincent, A. Study of Porosity of Gelatin-Alginate Hydrogels to Model Brain Matter for Studying Traumatic Brain Injuries. 2022.

- Mary, C.S.; Swamiappan, S. Sodium Alginate with PEG/PEO Blends as a Floating Drug Delivery Carrier - In Vitro Evaluation. Adv. Pharm. Bull. 2016, 6, 435–442.

- Li, Y.; Sun, S.; Gao, P.; Zhang, M.; Fan, C.; Lu, Q.; Li, C.; Chen, C.; Lin, B.; Jiang, Y. A Tough Chitosan-Alginate Porous Hydrogel Prepared by Simple Foaming Method. J. Solid State Chem. 2021, 294, 121797.

- Giz, A.S.; Berberoglu, M.; Bener, S.; Aydelik-Ayazoglu, S.; Bayraktar, H.; Alaca, B.E.; Catalgil-Giz, H. A Detailed Investigation of the Effect of Calcium Crosslinking and Glycerol Plasticizing on the Physical Properties of Alginate Films. Int. J. Biol. Macromol. 2020, 148, 49–55.

- Ebihara, L.; Acharya, P.; Tong, J.J. Mechanical Stress Modulates Calcium-Activated-Chloride Currents in Differentiating Lens Cells. Front. Physiol. 2022, 13, 1–9.

- Norahan, M.H.; Pedroza-González, S.C.; Sánchez-Salazar, M.G.; Álvarez, M.M.; Trujillo de Santiago, G. Structural and Biological Engineering of 3D Hydrogels for Wound Healing. Bioact. Mater. 2023, 24, 197–235.

- Wong, J.J.N.; Fadzly, N. Development of Species Recognition Models Using Google Teachable Machine on Shorebirds and Waterbirds. J. Taibah Univ. Sci. 2022, 16, 1096–1111.

- Jeong, H. Feasibility Study of Google’s Teachable Machine in Diagnosis of Tooth-Marked Tongue. J. Dent. Hyg. Sci. 2020, 20, 206–212.

- Lee, Y.; Cho, J. Development of an Artificial Intelligence Education Model of Classification Techniques for Non-Computer Majors. Int. J. Informatics Vis. 2021, 5, 113–119.

- Ying, X. An Overview of Overfitting and Its Solutions. J. Phys. Conf. Ser. 2019, 1168.

- Zech, J.R.; Badgeley, M.A.; Liu, M.; Costa, A.B.; Titano, J.J.; Oermann, E.K. Variable Generalization Performance of a Deep Learning Model to Detect Pneumonia in Chest Radiographs: A Cross-Sectional Study. PLoS Med. 2018, 15, 1–17.

- Forchhammer, S.; Abu-Ghazaleh, A.; Metzler, G.; Garbe, C.; Eigentler, T. Development of an Image Analysis-Based Prognosis Score Using Google’s Teachable Machine in Melanoma. Cancers (Basel). 2022, 14.

- Gajendiran, M.; Rhee, J.-S.; Kim, K. Recent Developments in Thiolated Polymeric Hydrogels for Tissue Engineering Applications. Tissue Eng. Part B Rev. 2017, 24, 66–74.

- Marco, S. Machine Learning and Artificial Intelligence. Volatile Biomarkers Hum. Heal. From Nat. to Artif. Senses 2022, 1, 454–471.

- L’Heureux, A.; Grolinger, K.; Elyamany, H.F.; Capretz, M.A.M. Machine Learning with Big Data: Challenges and Approaches. IEEE Access 2017, 5, 7776–7797.

- Seo, H.; Badiei Khuzani, M.; Vasudevan, V.; Huang, C.; Ren, H.; Xiao, R.; Jia, X.; Xing, L. Machine Learning Techniques for Biomedical Image Segmentation: An Overview of Technical Aspects and Introduction to State-of-Art Applications. Med. Phys. 2020, 47, e148–e167.

- Du, X.; Xu, H.; Zhu, F. Understanding the Effect of Hyperparameter Optimization on Machine Learning Models for Structure Design Problems. CAD Comput. Aided Des. 2021, 135, 103013.

Figure 1.

Schematic representation of the Teachable Machine tool, used as a machine learning-based artificial intelligence to predict image classifications of filaments. A) Initial model structure, showing the position of each component and its specific function. B) Encoding used for the training and prediction of images of stretched (FE) and unstretched (F) filament sections. C) Encoding used for the training and prediction of images of sections from the seven distinct groups of hydrogel filaments produced.

Figure 2.

Histological sections of unstretched hydrogel filaments. A, C, E, G, I, K, and M are representative images of longitudinal sections. B, D, F, H, J, L, and N are representative images of transverse sections. The black scale mark in the bottom right corner of each image represents 100 μm.

Figure 3.

Histological sections of stretched hydrogel filaments. A, C, E, G, I, K, and M are representative images of longitudinal sections. B, D, F, H, J, L, and N are representative images of transverse sections. The black scale mark in the bottom right corner of each image represents 100 μm.

Figure 4.

Confusion matrix of the prediction for stretched and unstretched filaments. This matrix presents the proportions corresponding to the identifications of the 47 images, based on the class assignments of F and FE. In total, 26 images belonging to class F (55.32%) were correctly identified as such, and 15 images from class FE (31.91%) were correctly identified as belonging to that class. Five images from class FE (10.64%) were misclassified as F, and only one image from class F (2.13%) was misclassified as FE.
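
The proportions quoted in this caption can be re-derived from the four counts. The snippet below is a minimal sketch of that arithmetic; the overall accuracy and per-class recall it computes are not stated in the caption and are shown only as quantities that follow from the same counts. The variable names are illustrative.

```python
# Counts taken from the Figure 4 caption.
# Outer key: true class; inner key: predicted class.
confusion = {
    "F": {"F": 26, "FE": 1},
    "FE": {"F": 5, "FE": 15},
}

# Total number of classified images (47 in the caption).
total = sum(sum(row.values()) for row in confusion.values())


def percent(part, whole):
    """Return part/whole as a percentage rounded to two decimals."""
    return round(100 * part / whole, 2)


# Share of all images falling in each cell, as reported in the caption.
proportions = {
    true: {pred: percent(count, total) for pred, count in row.items()}
    for true, row in confusion.items()
}

# Derived quantities (assumptions of this sketch, not values from the caption).
correct = sum(confusion[c][c] for c in confusion)
accuracy = percent(correct, total)
recall = {c: percent(confusion[c][c], sum(confusion[c].values())) for c in confusion}

print(proportions)
print("accuracy (%):", accuracy)
print("recall (%):", recall)
```

Each cell is expressed as a share of all 47 images rather than of its own class, which is why the four percentages in the caption sum to 100%.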

Figure 5.

Confusion matrix of the prediction for the seven distinct types of hydrogels. This matrix presents the proportions corresponding to the identifications of the forty-seven images, based on the inputs from the classes DMEM-6, DMEM-8, DMEM-10, DMEM-11, DMEM-12, DMEM-14, and DMEM-15. It reveals a significant disparity in the AI’s prediction classifications.

Table 1.

Names for the combinations of independent variables, representing the relative concentrations (% w/v) of sodium alginate and gelatin in the hydrogels, and calcium chloride solution as the crosslinking agent.

| Combination name | Polymer: sodium alginate (%) | Polymer: gelatin (%) | Crosslinker: CaCl2 (%) |
|---|---|---|---|
| DMEM-6 | 7 | 3 | 3 |
| DMEM-8 | 7 | 5 | 3 |
| DMEM-10 | 8.364 | 4 | 2 |
| DMEM-11 | 5 | 2.318 | 2 |
| DMEM-12 | 5 | 5.682 | 2 |
| DMEM-14 | 5 | 4 | 3.682 |
| DMEM-15 | 5 | 4 | 2 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).