Submitted:

06 December 2024

Posted:

06 December 2024

Abstract

Keywords:

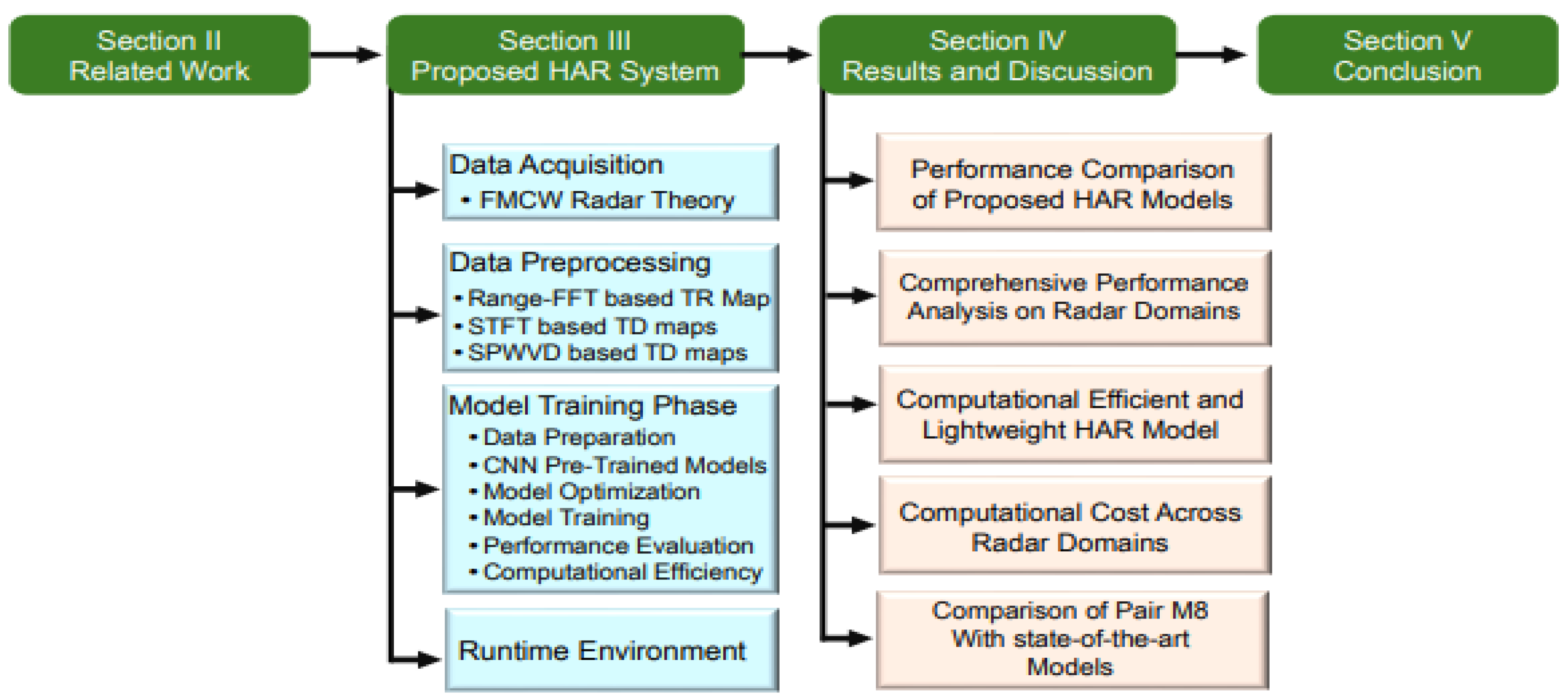

1. Introduction

- Evaluation of Radar 2D Domain Techniques: We empirically evaluated range-FFT based time-range (TR) maps and time-Doppler (TD) maps generated using STFT and SPWVD, quantifying their computational efficiency in real-time HAR systems.

- Optimizing Models with Transfer Learning (TL): We evaluated state-of-the-art CNN architectures, including VGG-16, VGG-19, ResNet-50, and MobileNetV2, to improve the accuracy of the proposed HAR system using TL methods.

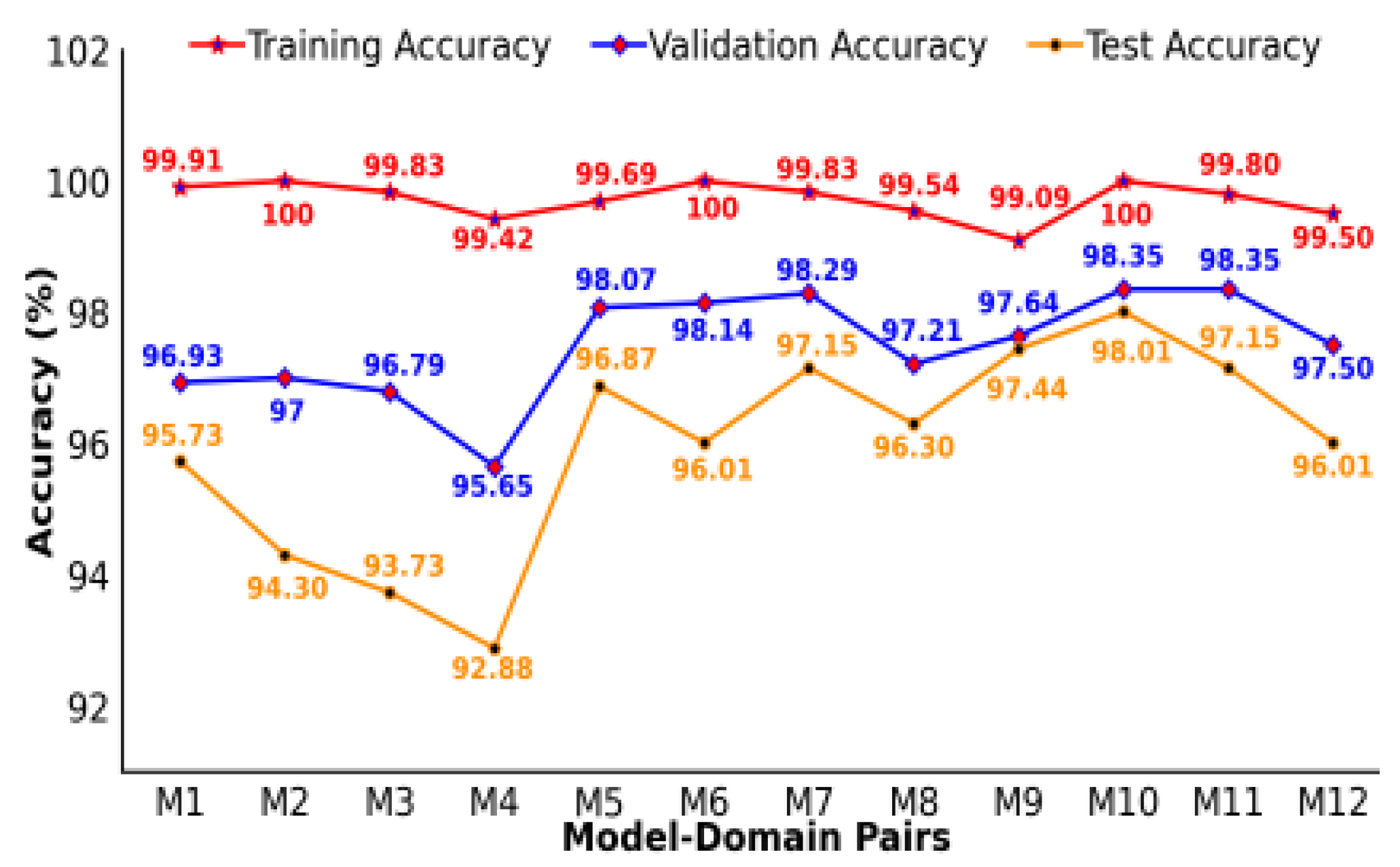

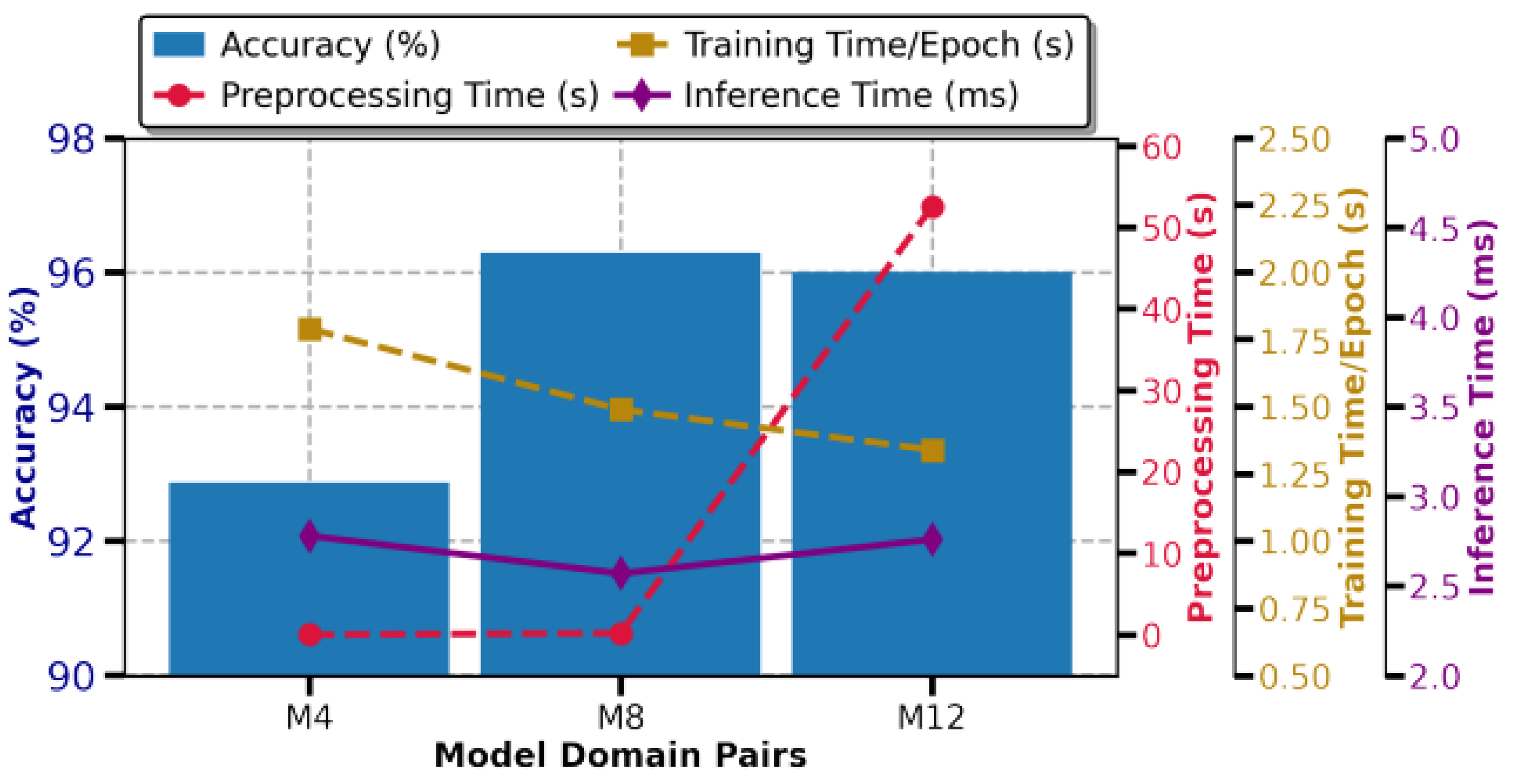

- Performance and Computational Analysis of Model-Domain Pairs: We conducted a comprehensive analysis of 12 model-domain pairs, focusing on real-time performance to optimize the balance between accuracy and computational efficiency (preprocessing, training, and inference times). The analysis also covers performance metrics beyond accuracy, such as recall, precision, and F1 score, which are critical to evaluating effectiveness in real-world applications.
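The TR and STFT-based TD maps named in the contributions above can be illustrated with a minimal NumPy sketch. It is not the authors' pipeline: the function names, the Hann windows, the window length and hop size, and the synthetic single-target beat signal are all assumptions made for illustration. SPWVD is omitted because it needs additional time and frequency smoothing kernels.

```python
import numpy as np


def range_fft(beat):
    """Range-FFT over fast time.

    beat: real array of shape (n_chirps, n_samples_per_chirp).
    Returns complex range profiles of shape (n_chirps, n_samples_per_chirp // 2).
    """
    window = np.hanning(beat.shape[1])
    spectrum = np.fft.fft(beat * window, axis=1)
    # Real-valued input: keep only the positive-frequency half.
    return spectrum[:, : beat.shape[1] // 2]


def time_range_map(profiles):
    """TR map in dB: rows are range bins, columns are chirps (slow time)."""
    return 20 * np.log10(np.abs(profiles).T + 1e-12)


def stft_time_doppler_map(profiles, range_bins, win_len=64, hop=16):
    """TD map in dB from an STFT over slow time.

    The complex signal is summed over the selected range bins, cut into
    overlapping Hann-windowed frames, and transformed frame by frame.
    Rows are Doppler bins (zero Doppler in the middle), columns are frames.
    """
    slow_time = profiles[:, range_bins].sum(axis=1)
    window = np.hanning(win_len)
    starts = range(0, len(slow_time) - win_len + 1, hop)
    frames = np.stack([slow_time[s : s + win_len] * window for s in starts])
    spectrum = np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1)
    return 20 * np.log10(np.abs(spectrum).T + 1e-12)


# Synthetic example (assumed values): one target at range bin 20 with a
# Doppler shift of 0.125 cycles per chirp.
chirp_idx = np.arange(256)[:, None]
sample_idx = np.arange(128)[None, :]
beat = np.cos(2 * np.pi * (20 * sample_idx / 128 + 0.125 * chirp_idx))

profiles = range_fft(beat)
tr_map = time_range_map(profiles)
strongest_bin = int(np.abs(profiles).mean(axis=0).argmax())
td_map = stft_time_doppler_map(profiles, [strongest_bin])
print(tr_map.shape, td_map.shape, strongest_bin)
```

Either map can then be rendered as an image and passed to a CNN. In practice the range bins fed to the STFT would cover the region where the subject moves rather than a single bin.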

2. Related Work

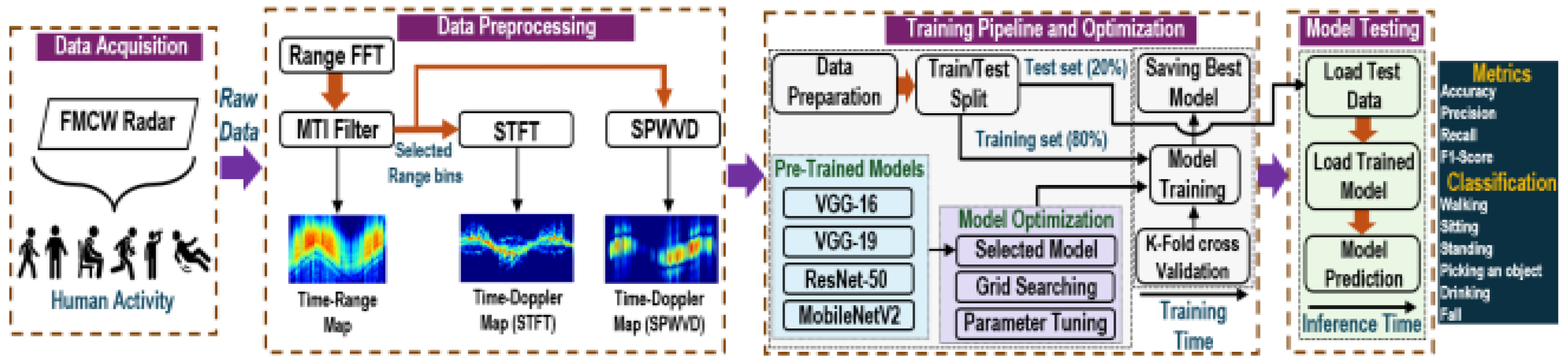

3. Radar-Based HAR System

3.1. Data Acquisition

3.1.1. FMCW Radar Principle

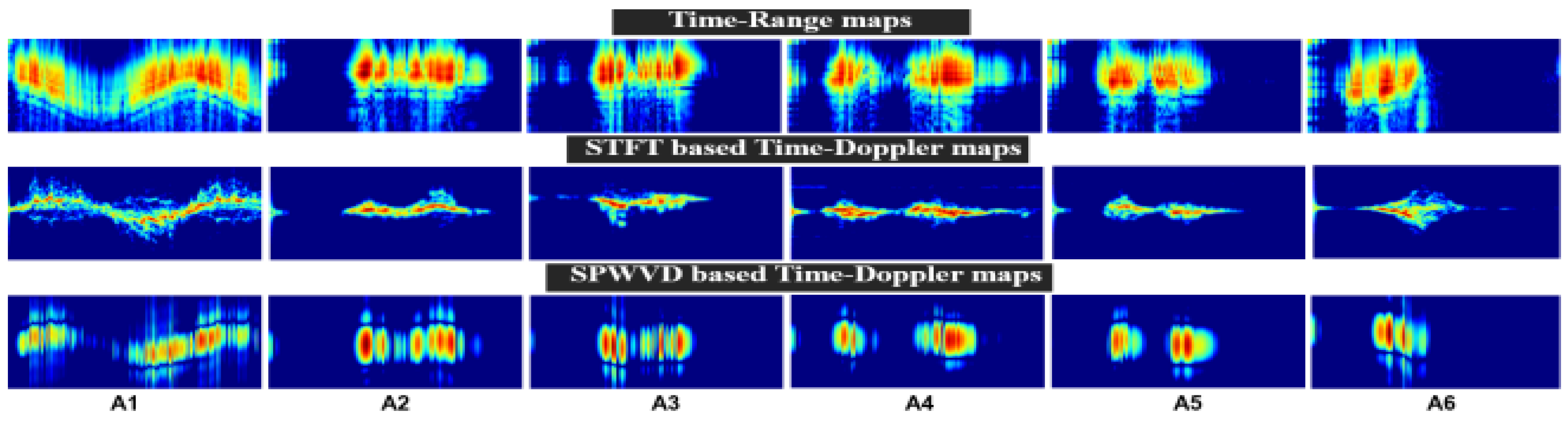

3.2. Data Preprocessing

3.2.1. Range-FFT Based Time-Range (TR) Maps

3.2.2. STFT Based TD Maps

3.2.3. SPWVD Based TD Maps

3.3. Training Pipeline and Optimization

3.3.1. Data Preparation

3.3.2. CNN Pre-Trained Models

3.3.3. Model Optimization

3.3.4. Model Training

3.3.5. Performance Evaluation

3.3.6. Computational Efficiency

- Training time: This is the time required to train the model using a particular radar-based domain. Training time is an important parameter because extended training can be difficult in cases where models need frequent updates or computing resources are limited. Achieving fast training times improves the utility of the model in a range of applications.

- Inference time: To measure the inference time ($T_{\mathrm{inf}}$) of the model, we adopted the simple method from [53], focusing on the time required to perform a single inference cycle on the test set. Specifically, the inference time is calculated as follows:

  $$T_{\mathrm{inf}} = t_{\mathrm{end}} - t_{\mathrm{start}}$$

  where $t_{\mathrm{start}}$ represents the timestamp when the inference request is issued and $t_{\mathrm{end}}$ represents the timestamp when the inference result is obtained.
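The timestamp-difference measurement described above can be sketched in a few lines of Python. The helper `time_inference` and the stand-in `dummy_predict` are hypothetical names introduced here, not part of the original work; a real measurement would pass the trained model's prediction call instead. The per-sample figure is obtained by dividing by the number of test samples, which is an assumption about how a per-sample time would be derived.

```python
import time


def time_inference(predict_fn, samples):
    """Time one inference pass over `samples`.

    Returns (outputs, total_seconds, milliseconds_per_sample).
    """
    t_start = time.perf_counter()   # timestamp when the request is issued
    outputs = predict_fn(samples)
    t_end = time.perf_counter()     # timestamp when the result is obtained
    total_s = t_end - t_start
    per_sample_ms = 1000.0 * total_s / len(samples)
    return outputs, total_s, per_sample_ms


def dummy_predict(batch):
    """Hypothetical stand-in for a model: labels each sample by the sign of its sum."""
    return [sum(x) > 0 for x in batch]


test_set = [[0.1 * i, -0.05 * i, 1.0] for i in range(1000)]
outputs, total_s, per_sample_ms = time_inference(dummy_predict, test_set)
print(f"total: {total_s:.6f} s, per sample: {per_sample_ms:.6f} ms")
```

`time.perf_counter` is used because it is a monotonic, high-resolution clock. For GPU inference, the device usually has to be synchronized before the second timestamp is taken so that asynchronous work is included.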

3.4. Proposed Radar-Based HAR Algorithm

Algorithm 1: Proposed Radar-Based HAR System

3.5. Runtime Environment

4. Results and Discussion

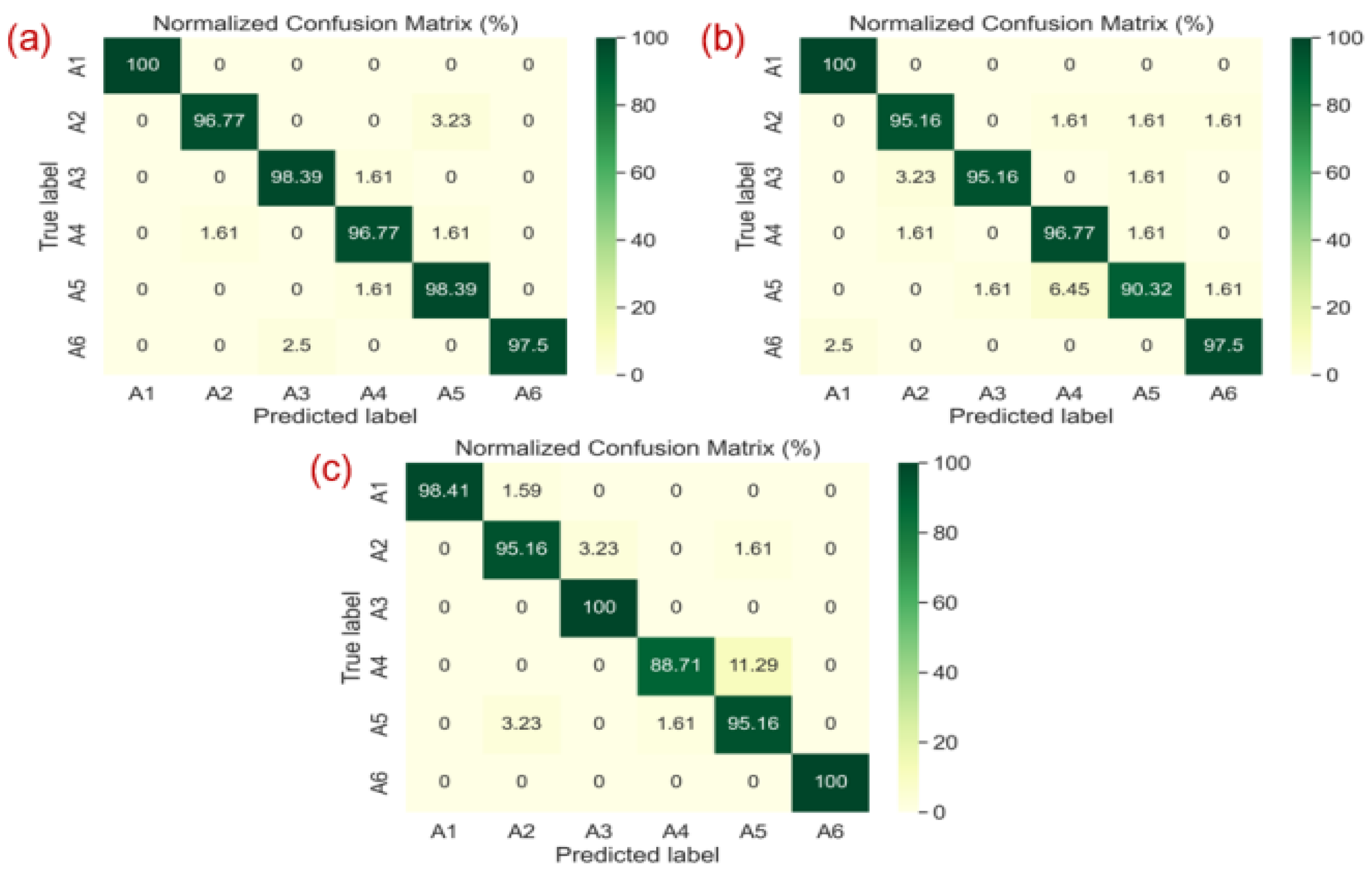

4.1. Performance Comparison of Proposed HAR Models

4.2. Comprehensive Performance Analysis on Radar Domains

4.3. Computationally Efficient and Lightweight HAR Model

4.4. Computational Cost Across Radar Domains

4.5. Comparison of Pair M8 with State-of-the-Art Models

5. Conclusions

Author Contributions

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- W. Jiao, R. Li, J. Wang, D. Wang, and K. Zhang, “Activity recognition in rehabilitation training based on ensemble stochastic configuration networks,” Neural Computing and Applications, vol. 35, no. 28, pp. 21229–21245, 2023.

- D. Deotale, M. Verma, P. Suresh, and N. Kumar, “Physiotherapy-based human activity recognition using deep learning,” Neural Computing and Applications, vol. 35, no. 15, pp. 11431–11444, 2023.

- N. Golestani and M. Moghaddam, “Human activity recognition using magnetic induction-based motion signals and deep recurrent neural networks,” Nature Communications, vol. 11, no. 1, pp. 1551, 2020.

- C.M. Ranieri, S. MacLeod, M. Dragone, P.A. Vargas, and R.A.F. Romero, “Activity recognition for ambient assisted living with videos, inertial units and ambient sensors,” Sensors, vol. 21, no. 3, pp. 768, 2021.

- H. Storf, T. Kleinberger, M. Becker, M. Schmitt, F. Bomarius, and S. Prueckner, “An event-driven approach to activity recognition in ambient assisted living,” in Proc. Ambient Intelligence: European Conference, AmI 2009, Salzburg, Austria, Nov. 18-21, 2009, pp. 123–132.

- A. Zam, A. Bohlooli, and K. Jamshidi, “Unsupervised deep domain adaptation algorithm for video-based human activity recognition via recurrent neural networks,” Engineering Applications of Artificial Intelligence, vol. 136, pp. 108922, 2024.

- A.C. Cob-Parro, C. Losada-Gutiérrez, M. Marrón-Romera, A. Gardel-Vicente, and I. Bravo-Muñoz, “A new framework for deep learning video-based human action recognition on the edge,” Expert Systems with Applications, vol. 238, pp. 122220, 2024.

- Y. Lu, L. Zhou, A. Zhang, S. Zha, X. Zhuo, and S. Ge, “Application of deep learning and intelligent sensing analysis in smart home,” Sensors, vol. 24, no. 3, pp. 953, 2024.

- E.K. Kumar, D.A. Kumar, K. Murali, P. Kiran, and M. Kumar, “Three stream human action recognition using Kinect,” in AIP Conference Proceedings, vol. 2512, no. 1, 2024.

- S. Zhang, Y. Li, S. Zhang, F. Shahabi, S. Xia, Y. Deng, and N. Alshurafa, “Deep learning in human activity recognition with wearable sensors: A review on advances,” Sensors, vol. 22, no. 4, pp. 1476, 2022.

- S. Mekruksavanich and A. Jitpattanakul, “Device position-independent human activity recognition with wearable sensors using deep neural networks,” Applied Sciences, vol. 14, no. 5, pp. 2107, 2024.

- X. Li, Y. He, and X. Jing, “A survey of deep learning-based human activity recognition in radar,” Remote Sensing, vol. 11, no. 9, pp. 1068, 2019.

- Y. Yao, W. Liu, G. Zhang, and W. Hu, “Radar-based human activity recognition using hyperdimensional computing,” IEEE Transactions on Microwave Theory and Techniques, vol. 70, no. 3, pp. 1605–1619, 2021.

- J. Yousaf, S. Yakoub, S. Karkanawi, T. Hassan, E. Almajali, H. Zia, and M. Ghazal, “Through-the-wall human activity recognition using radar technologies: A review,” IEEE Open Journal of Antennas and Propagation, 2024.

- S. Huan, L. Wu, M. Zhang, Z. Wang, and C. Yang, “Radar human activity recognition with an attention-based deep learning network,” Sensors, vol. 23, no. 6, pp. 3185, 2023.

- R. Yu, Y. Du, J. Li, A. Napolitano, and J. Le Kernec, “Radar-based human activity recognition using denoising techniques to enhance classification accuracy,” IET Radar, Sonar & Navigation, vol. 18, no. 2, pp. 277–293, 2024.

- F. Ayaz, B. Alhumaily, S. Hussain, L. Mohjazi, M.A. Imran, and A. Zoha, “Integrating millimeter-wave FMCW radar for investigating multi-height vital sign monitoring,” in Proc. 2024 IEEE Wireless Communications and Networking Conference (WCNC), pp. 1–6, 2024.

- G. Paterniani, D. Sgreccia, A. Davoli, G. Guerzoni, P. Di Viesti, A.C. Valenti, M. Vitolo, G.M. Vitetta, and G. Boriani, “Radar-based monitoring of vital signs: A tutorial overview,” Proceedings of the IEEE, vol. 111, no. 3, pp. 277–317, 2023.

- S. Iyer, L. Zhao, M.P. Mohan, J. Jimeno, M.Y. Siyal, A. Alphones, and M.F. Karim, “mm-Wave radar-based vital signs monitoring and arrhythmia detection using machine learning,” Sensors, vol. 22, no. 9, pp. 3106, 2022.

- L. Qu, Y. Wang, T. Yang, and Y. Sun, “Human activity recognition based on WRGAN-GP-synthesized micro-Doppler spectrograms,” IEEE Sensors Journal, vol. 22, no. 9, pp. 8960–8973, 2022.

- X. Li, Y. He, F. Fioranelli, and X. Jing, “Semisupervised human activity recognition with radar micro-Doppler signatures,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–12, 2022.

- W.-Y. Kim and D.-H. Seo, “Radar-based HAR combining range–time–Doppler maps and range-distributed-convolutional neural networks,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–11, 2022.

- W. Ding, X. Guo, and G. Wang, “Radar-based human activity recognition using hybrid neural network model with multidomain fusion,” IEEE Transactions on Aerospace and Electronic Systems, vol. 57, no. 5, pp. 2889–2898, 2021.

- Z. Liu, L. Xu, Y. Jia, and S. Guo, “Human activity recognition based on deep learning with multi-spectrogram,” in Proc. 2020 IEEE 5th International Conference on Signal and Image Processing (ICSIP), pp. 11–15, 2020.

- Y. Qian, C. Chen, L. Tang, Y. Jia, and G. Cui, “Parallel LSTM-CNN network with radar multispectrogram for human activity recognition,” IEEE Sensors Journal, vol. 23, no. 2, pp. 1308–1317, 2022.

- Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner, “Gradient-based learning applied to document recognition,” Proceedings of the IEEE, vol. 86, no. 11, pp. 2278–2324, 1998.

- A. Krizhevsky, I. Sutskever, and G.E. Hinton, “Imagenet classification with deep convolutional neural networks,” Advances in Neural Information Processing Systems, vol. 25, 2012.

- A. Alkasimi, A.-V. Pham, C. Gardner, and B. Funsten, “Human activity recognition based on 4-domain radar deep transfer learning,” in Proc. 2023 IEEE Radar Conference (RadarConf23), pp. 1–6, 2023.

- A. Dixit, V. Kulkarni, and V.V. Reddy, “Cross frequency adaptation for radar-based human activity recognition using few-shot learning,” IEEE Geoscience and Remote Sensing Letters, 2023.

- O. Pavliuk, M. Mishchuk, and C. Strauss, “Transfer learning approach for human activity recognition based on continuous wavelet transform,” Algorithms, vol. 16, no. 2, pp. 77, 2023.

- D. Theckedath and R.R. Sedamkar, “Detecting affect states using VGG16, ResNet50 and SE-ResNet50 networks,” SN Computer Science, vol. 1, pp. 1–7, 2020. [Online]. Available: https://link.springer.com/article/10.1007/s42979-020-0114-9.

- N. Dey, Y.-D. Zhang, V. Rajinikanth, R. Pugalenthi, and N.S.M. Raja, “Customized VGG19 architecture for pneumonia detection in chest X-rays,” Pattern Recognition Letters, vol. 143, pp. 67–74, 2021.

- M. Sandler, A. Howard, M. Zhu, A. Zhmoginov, and L.-C. Chen, “MobileNetV2: Inverted residuals and linear bottlenecks,” in Proc. IEEE Conference on Computer Vision and Pattern Recognition, pp. 4510–4520, 2018.

- F. Kulsoom, S. Narejo, Z. Mehmood, H. N. Chaudhry, A. Butt, and A. K. Bashir, “A review of machine learning-based human activity recognition for diverse applications,” Neural Computing and Applications, vol. 34, no. 21, pp. 18289–18324, 2022.

- A. Biswal, S. Nanda, C. R. Panigrahi, S. K. Cowlessur, and B. Pati, “Human activity recognition using machine learning: A review,” in Progress in Advanced Computing and Intelligent Engineering: Proceedings of ICACIE 2020, pp. 323–333, 2021.

- M. Zenaldin and R. M. Narayanan, “Radar micro-Doppler based human activity classification for indoor and outdoor environments,” in Radar Sensor Technology XX, vol. 9829, pp. 364–373, 2016.

- L. Tang, Y. Jia, Y. Qian, S. Yi, and P. Yuan, “Human activity recognition based on mixed CNN with radar multi-spectrogram,” IEEE Sensors Journal, vol. 21, no. 22, pp. 25950–25962, 2021.

- F. J. Abdu, Y. Zhang, and Z. Deng, “Activity classification based on feature fusion of FMCW radar human motion micro-Doppler signatures,” IEEE Sensors Journal, vol. 22, no. 9, pp. 8648–8662, 2022.

- A. Shrestha, C. Murphy, I. Johnson, A. Anbulselvam, F. Fioranelli, J. Le Kernec, and S. Z. Gurbuz, “Cross-frequency classification of indoor activities with dnn transfer learning,” in 2019 IEEE Radar Conference (RadarConf), pp. 1–6, 2019.

- Z.A. Sadeghi and F. Ahmad, “Whitening-aided learning from radar micro-Doppler signatures for human activity recognition,” Sensors, vol. 23, no. 17, pp. 7486, 2023.

- X. Zhou, J. Tian, and H. Du, “A lightweight network model for human activity classification based on pre-trained MobileNetV2,” in IET Conference Proceedings CP779, vol. 2020, no. 9, pp. 1483–1487, 2020.

- A. Shrestha, H. Li, J. Le Kernec, and F. Fioranelli, “Continuous human activity classification from FMCW radar with Bi-LSTM networks,” IEEE Sensors Journal, vol. 20, no. 22, pp. 13607–13619, 2020.

- F. Fioranelli et al., “Radar signatures of human activities,” Univ. Glasgow, Glasgow, U.K., 2019. [Online]. Available: https://researchdata.gla.ac.uk/848/.

- S. Yang et al., “The Human Activity Radar Challenge: Benchmarking Based on the ‘Radar Signatures of Human Activities’ Dataset From Glasgow University,” IEEE Journal of Biomedical and Health Informatics, vol. 27, 2023.

- A. Safa, F. Corradi, L. Keuninckx, I. Ocket, A. Bourdoux, F. Catthoor, and G. G. E. Gielen, “Improving the Accuracy of Spiking Neural Networks for Radar Gesture Recognition Through Preprocessing,” IEEE Transactions on Neural Networks and Learning Systems, vol. 34, no. 6, pp. 2869–2881, 2023.

- J. Deng, W. Dong, R. Socher, L. Li, K. Li, and L. Fei-Fei, “Imagenet: A large-scale hierarchical image database,” in 2009 IEEE Conference on Computer Vision and Pattern Recognition, pp. 248–255, 2009.

- K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” arXiv preprint arXiv:1409.1556, 2014.

- A. Faghihi, M. Fathollahi, and R. Rajabi, “Diagnosis of skin cancer using VGG16 and VGG19 based transfer learning models,” Multimedia Tools and Applications, vol. 83, no. 19, pp. 57495–57510, 2024.

- Z. Wu, C. Shen, and A. Van Den Hengel, “Wider or deeper: Revisiting the resnet model for visual recognition,” Pattern Recognition, vol. 90, pp. 119–133, 2019.

- A. G. Howard, “Mobilenets: Efficient convolutional neural networks for mobile vision applications,” arXiv preprint arXiv:1704.04861, 2017.

- M. Sandler, A. Howard, M. Zhu, A. Zhmoginov, and L.-C. Chen, “Mobilenetv2: Inverted residuals and linear bottlenecks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4510–4520, 2018.

- S. Takano, “Chapter 2 - traditional microarchitectures,” in Thinking machines, S. Takano, Ed. Academic Press, 2021, pp. 19–47.

- K. Papadopoulos and M. Jelali, “A Comparative Study on Recent Progress of Machine Learning-Based Human Activity Recognition with Radar,” Applied Sciences, vol. 13, no. 23, pp. 12728, 2023.

| Ref. | Radar Domain: TR | Radar Domain: STFT | Radar Domain: SPWVD | Data Preprocessing Time | CNN/LSTM | TL-Based Methods |

|---|---|---|---|---|---|---|

| [15] | ✗ | ✓ | ✗ | ✗ | ✓ | ✗ |

| [22] | ✓ | ✓ | ✗ | ✗ | ✓ | ✗ |

| [23] | ✓ | ✓ | ✗ | ✗ | ✓ | ✗ |

| [24] | ✗ | ✓ | ✓ | ✗ | ✗ | ✓ |

| [25] | ✗ | ✓ | ✓ | ✗ | ✓ | ✗ |

| [37] | ✗ | ✓ | ✓ | ✗ | ✓ | ✗ |

| [40] | ✗ | ✓ | ✗ | ✗ | ✓ | ✗ |

| [41] | ✗ | ✓ | ✗ | ✗ | ✗ | ✓ |

| [42] | ✗ | ✓ | ✗ | ✗ | ✓ | ✗ |

| Our | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

| Short Name | Activity Description | Samples | Duration |

|---|---|---|---|

| A1 | Walking back and forth | 312 | 10 s |

| A2 | Sitting on a chair | 311 | 5 s |

| A3 | Standing | 311 | 5 s |

| A4 | Bend to pick an object | 309 | 5 s |

| A5 | Drinking water | 311 | 5 s |

| A6 | Fall | 197 | 5 s |

| Parameters | VGG-16 | VGG-19 | ResNet-50 | MobileNetV2 |

|---|---|---|---|---|

| Batch size | 32 | 32 | 32 | 32 |

| Dropout | 0.5 | 0.2 | 0.2 | 0.2 |

| Learning rate | 2e-3 | 2e-3 | 2e-3 | 4e-4 |

| Optimizer | SGD | SGD | SGD | Adam |

| Decay | - | 1e-6 | 1e-6 | - |

| Momentum | 0.9 | 0.9 | 0.9 | - |

| Epochs/Fold | 25 | 25 | 25 | 25 |
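For reproducibility, the hyperparameter table above can be written down as a plain configuration mapping. The sketch below is only one possible encoding: the dictionary layout, the key names, and the helper `optimizer_kwargs` are assumptions, and a "-" in the table is interpreted here as "not set" (`None`), which the source does not state explicitly.

```python
# Values copied from the hyperparameter table; None marks a "-" entry
# (interpreted as "not set", which is an assumption).
HYPERPARAMS = {
    "VGG-16": {
        "batch_size": 32, "dropout": 0.5, "learning_rate": 2e-3,
        "optimizer": "SGD", "decay": None, "momentum": 0.9,
        "epochs_per_fold": 25,
    },
    "VGG-19": {
        "batch_size": 32, "dropout": 0.2, "learning_rate": 2e-3,
        "optimizer": "SGD", "decay": 1e-6, "momentum": 0.9,
        "epochs_per_fold": 25,
    },
    "ResNet-50": {
        "batch_size": 32, "dropout": 0.2, "learning_rate": 2e-3,
        "optimizer": "SGD", "decay": 1e-6, "momentum": 0.9,
        "epochs_per_fold": 25,
    },
    "MobileNetV2": {
        "batch_size": 32, "dropout": 0.2, "learning_rate": 4e-4,
        "optimizer": "Adam", "decay": None, "momentum": None,
        "epochs_per_fold": 25,
    },
}


def optimizer_kwargs(model_name):
    """Return the optimizer settings that are actually defined for a model."""
    cfg = HYPERPARAMS[model_name]
    keys = ("learning_rate", "decay", "momentum")
    return {k: cfg[k] for k in keys if cfg[k] is not None}


for name, cfg in HYPERPARAMS.items():
    print(name, cfg["optimizer"], optimizer_kwargs(name))
```

Keeping the settings in one mapping makes it easy to loop over the four architectures with the same training script and to see at a glance that only MobileNetV2 departs from the SGD setup.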

| MDPs | Radar Domains | Models | Accuracy (%) | Precision | Recall | F1-score |

|---|---|---|---|---|---|---|

| M1 | TR | VGG-16 | 95.73 | 0.9576 | 0.9573 | 0.9572 |

| M2 | TR | VGG-19 | 94.30 | 0.9436 | 0.9430 | 0.9429 |

| M3 | TR | ResNet-50 | 93.73 | 0.9373 | 0.9373 | 0.9368 |

| M4 | TR | MobileNetV2 | 92.88 | 0.9307 | 0.9288 | 0.9284 |

| M5 | STFT | VGG-16 | 96.87 | 0.9697 | 0.9687 | 0.9687 |

| M6 | STFT | VGG-19 | 96.01 | 0.9639 | 0.9624 | 0.9624 |

| M7 | STFT | ResNet-50 | 97.15 | 0.9721 | 0.9731 | 0.9721 |

| M8 | STFT | MobileNetV2 | 96.30 | 0.9635 | 0.9651 | 0.9642 |

| M9 | SPWVD | VGG-16 | 97.44 | 0.9764 | 0.9744 | 0.9745 |

| M10 | SPWVD | VGG-19 | 98.01 | 0.9803 | 0.9801 | 0.9801 |

| M11 | SPWVD | ResNet-50 | 97.15 | 0.9720 | 0.9715 | 0.9715 |

| M12 | SPWVD | MobileNetV2 | 96.01 | 0.9629 | 0.9580 | 0.9600 |
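The accuracy, precision, recall, and F1 columns above are the kind of figures that can be derived from a confusion matrix. The following sketch shows one common way to do so with macro averaging. The averaging scheme used for the table is not stated in this excerpt, so macro averaging is an assumption, and the small two-class matrix is made-up illustration data, not a result from the paper.

```python
import numpy as np


def macro_metrics(confusion):
    """Accuracy and macro-averaged precision, recall, and F1.

    confusion[i, j] counts samples of true class i predicted as class j.
    Assumes every class is predicted at least once and occurs at least once,
    so that no division by zero arises.
    """
    cm = np.asarray(confusion, dtype=float)
    true_pos = np.diag(cm)
    precision = true_pos / cm.sum(axis=0)  # column sums: predicted counts
    recall = true_pos / cm.sum(axis=1)     # row sums: true counts
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = true_pos.sum() / cm.sum()
    return accuracy, precision.mean(), recall.mean(), f1.mean()


# Made-up two-class example: 10 samples per class.
example = [[8, 2],
           [1, 9]]
acc, prec, rec, f1 = macro_metrics(example)
print(f"accuracy={acc:.4f} precision={prec:.4f} recall={rec:.4f} f1={f1:.4f}")
```

With macro averaging every activity class contributes equally, which matters here because the Fall class (A6) has fewer samples than the others. A support-weighted average would instead make recall equal to accuracy.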

| Model-Domain Pairs | Training Time/epoch (s) | Inference Time/sample (ms) |

|---|---|---|

| M1 | 3.40 | 7.16 |

| M2 | 3.77 | 8.11 |

| M3 | 2.77 | 3.80 |

| M4 | 1.79 | 2.78 |

| M5 | 3.38 | 7.10 |

| M6 | 4.38 | 6.90 |

| M7 | 2.74 | 3.54 |

| M8 | 1.49 | 2.57 |

| M9 | 3.50 | 7.02 |

| M10 | 3.76 | 6.88 |

| M11 | 2.73 | 3.99 |

| M12 | 1.34 | 2.76 |
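One way to read the accuracy and inference-time tables together is to list the model-domain pairs that no other pair beats on both criteria at once (a Pareto front). The sketch below uses the accuracy and inference-time values from the two tables. The Pareto criterion itself and the helper name `pareto_front` are assumptions introduced for illustration, not the selection procedure described by the authors.

```python
# (accuracy in %, inference time per sample in ms), copied from the tables.
PAIRS = {
    "M1": (95.73, 7.16), "M2": (94.30, 8.11), "M3": (93.73, 3.80),
    "M4": (92.88, 2.78), "M5": (96.87, 7.10), "M6": (96.01, 6.90),
    "M7": (97.15, 3.54), "M8": (96.30, 2.57), "M9": (97.44, 7.02),
    "M10": (98.01, 6.88), "M11": (97.15, 3.99), "M12": (96.01, 2.76),
}


def pareto_front(pairs):
    """Return the pairs not dominated by any other pair.

    A pair is dominated when another pair is at least as accurate and at
    least as fast, and strictly better on one of the two criteria.
    """
    front = []
    for name, (acc, t_ms) in pairs.items():
        dominated = any(
            other_acc >= acc and other_t <= t_ms
            and (other_acc > acc or other_t < t_ms)
            for other, (other_acc, other_t) in pairs.items()
            if other != name
        )
        if not dominated:
            front.append(name)
    return front


print(pareto_front(PAIRS))
```

Under this criterion the non-dominated pairs are M7, M8, and M10: M10 is the most accurate, M8 has the shortest inference time, and M7 sits between them. Training and preprocessing times are not part of this comparison.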

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).