1. Define the Scope and Requirements

Scope

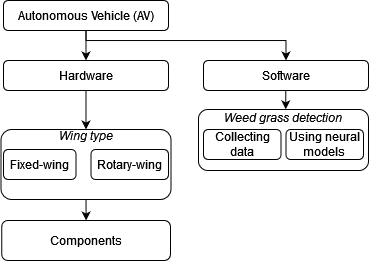

The main task at this stage is weed recognition.

Weed Recognition: The AI model recognizes weeds, and the system then automatically sprays the recognized weeds with herbicide.

Features

Real-time Inference: The model needs to operate in real-time on the Jetson Nano microcomputer, which requires optimizing the model for speed and efficiency.

Edge Deployment: All processing will be done on the Jetson Nano, requiring the model to be lightweight and efficient.

More features: In addition to spraying weeds, the AI-controlled AV should also be capable of capturing video and high-quality photos and of sending an alert to the operator in such situations.

A brief comparison of AV types with flight capability is included; their advantages and disadvantages are shown in Table 1.

Table 1.

Comparison between fixed-wing and rotary-wing flying AVs.


| Flying AV | Fixed wings | Rotary wings |
|---|---|---|
| Flight time | Up to an hour | Up to 20 min |
| Flexibility in altering direction during a flight | Allows redirection to new directions | Allows redirection during flight (wind direction) |
| Runway | Requires a runway to take off from the ground | No runway required |
| Adaptability | Average level of adaptation | High level of adaptation |
| Weed recognition | More efficient | Less efficient |
| Coverage area | Large area | Small area |

2. Collect and Prepare Data

2.1. Weed Data Collection

To train the AI to recognize harmful weeds, we can build our own dataset, which would benefit our research because each area and crop has its own kinds of harmful weeds to be protected from.

Building a well-designed dataset would therefore be the optimal solution, but using a generic dataset, with limited efficiency, is also an option [2].

2.2. Data Augmentation

Augmentation Techniques: Apply random rotations, flips, lighting adjustments, and other augmentations to make the model more robust to variations in real-world conditions.

Frame Extraction: Extract individual frames from the videos if the full video data is not used for training.
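The augmentations listed above can be sketched as follows. This is a minimal, dependency-free illustration that assumes a grayscale image stored as a list of rows with values from 0 to 255; the function name `augment`, the flip probabilities, and the brightness range are assumptions made for the example, and a real pipeline would more likely rely on an image-processing library.

```python
import random

def augment(image, rng):
    """Return a randomly augmented copy of a grayscale image (list of rows, values 0-255)."""
    out = [row[:] for row in image]
    # Random horizontal flip.
    if rng.random() < 0.5:
        out = [row[::-1] for row in out]
    # Random vertical flip.
    if rng.random() < 0.5:
        out = out[::-1]
    # Random rotation by a multiple of 90 degrees (clockwise).
    for _ in range(rng.randrange(4)):
        out = [list(col) for col in zip(*out[::-1])]
    # Random lighting adjustment: scale brightness and clamp to the valid range.
    gain = rng.uniform(0.7, 1.3)  # assumed range, to be tuned on real data
    return [[min(255, max(0, round(p * gain))) for p in row] for row in out]

rng = random.Random(0)  # fixed seed so the example is repeatable
image = [[10, 50, 90], [130, 170, 210]]
augmented = augment(image, rng)
```

Each call produces a different variant of the same source image, so one labeled photo can contribute several training samples.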

3. Design the Model Architecture

Recurrent Neural Network (RNN): The work starts with an RNN for weed recognition. It is possible to use ready-made models such as YOLO, but developing an independent model is required in later stages of this research.

Output Layer: The final layer should have as many nodes as there are weed classes, with a Softmax activation to output probabilities for each class.
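As a small illustration of what a Softmax output layer computes, the sketch below converts one raw score per output node into probabilities that sum to 1. The class names and score values are hypothetical placeholders, not taken from the study.

```python
import math

def softmax(logits):
    """Convert raw output-layer scores into probabilities that sum to 1."""
    peak = max(logits)  # subtracting the maximum keeps exp() numerically stable
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

class_names = ["crop", "weed_a", "weed_b"]  # hypothetical labels, one per output node
logits = [1.0, 3.0, 0.5]                    # hypothetical raw scores for one image
probs = softmax(logits)
predicted = class_names[probs.index(max(probs))]
```

The predicted label is the class with the highest probability; the probabilities themselves can also be thresholded so that low-confidence detections do not trigger spraying.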

Inference: The images from the RGB camera aimed downwards are captured at 1920×1080 and split into k=N×N segments. The number of segments can be adjusted.
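The segmentation step can be sketched as a function that computes the pixel boxes of an N×N grid over the frame. The function name `split_into_tiles` and the choice N = 4 are assumptions for illustration only; the source states only that the frame is 1920×1080 and that the number of segments is adjustable.

```python
def split_into_tiles(width, height, n):
    """Return (left, top, right, bottom) boxes for an n x n grid covering the frame."""
    # Rounded grid lines keep tiles contiguous even when n does not divide the size.
    xs = [round(i * width / n) for i in range(n + 1)]
    ys = [round(j * height / n) for j in range(n + 1)]
    return [(xs[i], ys[j], xs[i + 1], ys[j + 1])
            for j in range(n) for i in range(n)]

tiles = split_into_tiles(1920, 1080, 4)  # k = 4 x 4 = 16 segments
```

Each box can then be cropped from the frame and passed to the classifier separately, which also gives a coarse location of the weed within the image.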

4. Train the Model

Training Setup: Overall, three models must be trained and their features then combined into a single model. Merging these features is a challenging task that will require a high-end machine learning development process.

Preprocessing and cleaning data: After gathering the data, it is important to make sure that the collected data is representative and includes both rare and common types of weeds, to ensure accurate weed detection.
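One simple way to check that rare weed types are not missing or badly underrepresented is to count the labels before training. The sketch below is an assumption-based example: the label names, the sample counts, and the 5% threshold are hypothetical.

```python
from collections import Counter

def find_underrepresented(labels, min_share=0.05):
    """Return the classes whose share of the dataset falls below min_share."""
    counts = Counter(labels)
    total = sum(counts.values())
    return sorted(name for name, c in counts.items() if c / total < min_share)

# Hypothetical label list: one entry per collected image.
labels = ["weed_a"] * 60 + ["weed_b"] * 37 + ["weed_c"] * 3
rare = find_underrepresented(labels)
```

Classes flagged this way are candidates for additional data collection or heavier augmentation.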

Training process: This process will use high computational power, provided by a dedicated server.

Fine-tuning: After training, fine-tuning starts with checking the accuracy and multitasking ability of the model.

Deploying the models and real-life checks: The efficacy of the model can be checked simply by testing its ability to recognize weeds in any flying field, or, even before the AV itself is fully assembled, using only the camera and the Jetson Nano.

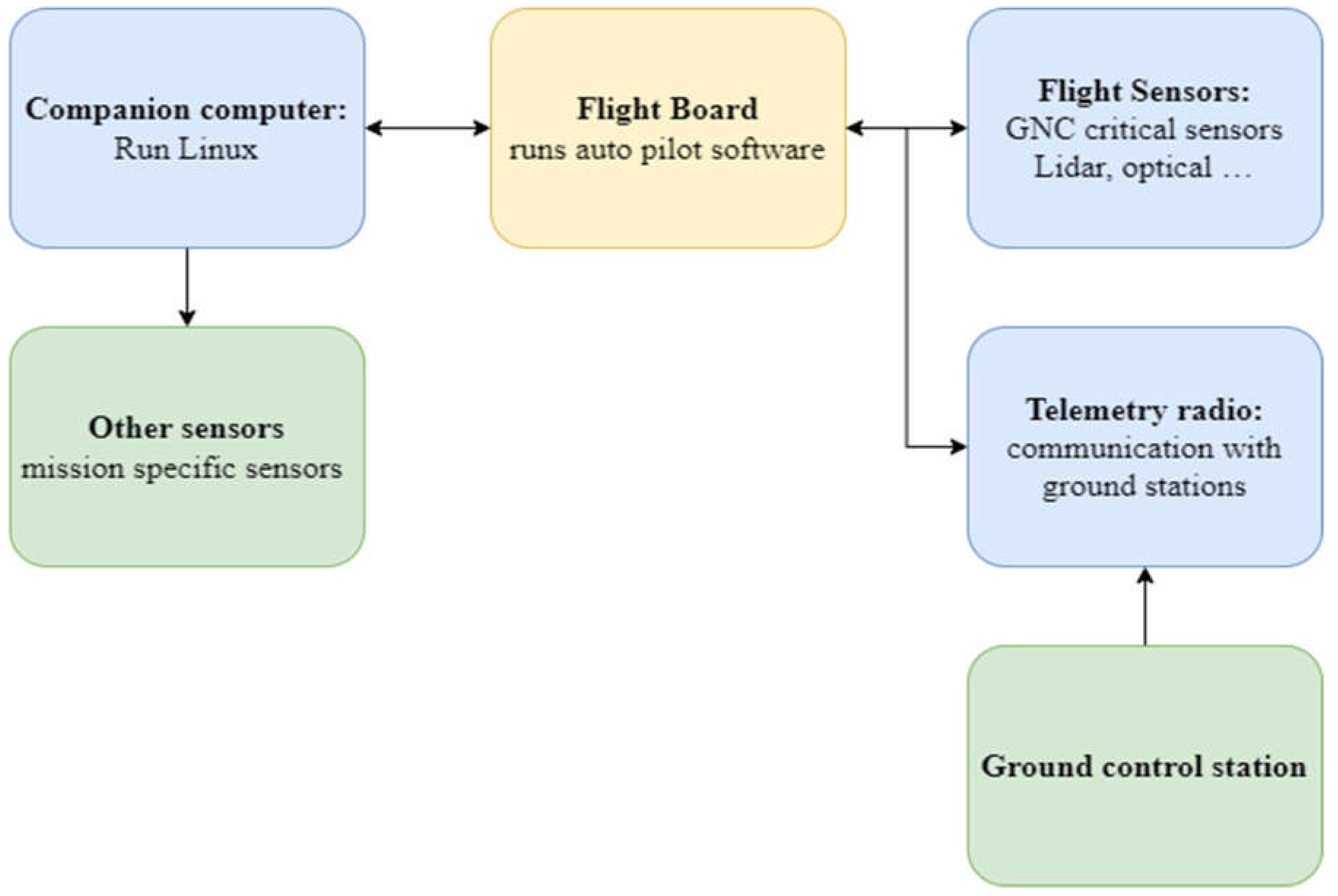

5. Hardware

The main requirements for a flying AV to be compatible with this project are as follows:

5.1. Rotor-Wing Type

1. It must be able to carry a payload of at least 500g, which includes the Jetson Nano, cameras, DC/DC converter, and radios.

2. The flight controller must support MAVLink communication. A CubePilot Orange+ running ArduPilot software is likely the most straightforward option.

3. The system should be configured using Mission Planner software for easy setup and management.

4. The AV should be equipped with GPS for accurate positioning and autonomous flight capabilities.

5. A power system that supports the payload and provides sufficient flight time is essential, typically including a high-capacity LiPo battery.

6. Motors and ESCs (Electronic Speed Controllers) must be selected to handle the required payload while ensuring stable flight performance.

7. The frame should be durable and designed to accommodate all components, including the payload, flight controller, and power system.

8. Radio communication should include long-range telemetry for monitoring and controlling the AV in real-time.
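The payload requirement in item 1 can be checked with a simple mass budget. In the sketch below, the component masses are illustrative placeholders, not measured or published values, and should be replaced with the weights of the actual parts; only the 500 g figure comes from the requirement above.

```python
def payload_margin(component_masses_g, capacity_g):
    """Return (total payload mass, remaining margin) in grams."""
    total = sum(component_masses_g.values())
    return total, capacity_g - total

# Placeholder masses in grams (assumptions for illustration only).
components = {
    "jetson_nano": 250,
    "cameras": 100,
    "dcdc_converter": 50,
    "radios": 60,
}
total, margin = payload_margin(components, capacity_g=500)
```

A negative margin would mean that the chosen frame, motors, and ESCs must be sized for a larger payload.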

Figure 1.

Structure of interaction of AV elements.


5.2. Fixed-Wing Type

The carbon fiber wings will be made manually.

Different types of motors, as powerful as possible and correspondingly heavier, will be used in such an AV.

The AV must be tested at a testing site with a runway.

6. Experiments to Be Conducted

6.1. Weed Detection

First, we have to identify the harmful weed families in the European part of Russia (the application area); several studies address this problem. To build a suitable dataset, it is necessary to consider several harmful weeds, define the range of shapes that could be present in the studied area, gather as many photos as possible, build training and test datasets, and verify the results with real-life examples.

It is essential to take into account variables such as the visibility of the harmful weed from the vehicle's position and its attitude, as well as the small time window, which extends to 2-3 seconds in the best case, depending on the vehicle's velocity.
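The dependence of the time window on velocity can be estimated with a rough geometric sketch. It assumes a camera pointing straight down over flat ground, so that the along-track ground footprint is 2·h·tan(FOV/2), and that a weed stays visible while the vehicle covers that distance. The altitude, field of view, ground speed, and frame rate below are illustrative assumptions, not values from the study.

```python
import math

def observation_window_s(altitude_m, fov_deg, ground_speed_m_s):
    """Seconds a ground point stays in view of a downward-facing camera."""
    # Along-track length of the ground footprint for a nadir camera over flat ground.
    footprint_m = 2 * altitude_m * math.tan(math.radians(fov_deg) / 2)
    return footprint_m / ground_speed_m_s

# Illustrative values: 20 m altitude, 60 degree along-track FOV, 10 m/s ground speed.
window = observation_window_s(altitude_m=20.0, fov_deg=60.0, ground_speed_m_s=10.0)
frames = int(window * 30)  # frames available at an assumed 30 frames per second
```

With these assumed values the window is about 2.3 seconds, which shows how quickly the available inference time shrinks as speed increases or altitude decreases.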

7. Results

Thus, various hardware and software requirements for creating an agricultural AV with artificial intelligence were described. Based on [3], rotary-wing AVs are less efficient in tasks that require a relatively long flight time and significantly higher altitudes.

Initially, it was suggested to build a weed-spraying system integrated with the AV, but because of the high weight and the need for multiple refuelings, a more practical solution is to limit the AV's role to detecting and locating the weeds and to leave the spraying to a more energy-efficient vehicle such as a ground bot.

8. Discussion and Conclusions

Noting the limitations of this study, its best extension could consider a multifunctional robotic environment that includes ground and aerial bots and that can be fully automated with minimal human involvement.

Once the goal of this stage is achieved, further steps will follow, such as building a ground bot and integrating all the working AVs in one virtual environment, which will involve considerable research in IoT technologies.

Acknowledgement

This research was funded by the Ministry of Science and Higher Education of the Russian Science Foundation (Project “Goszadanie”, FSEE-2024-0011).

References

- NVIDIA Jetson Nano. Available online: https://developer.nvidia.com/embedded/jetson-nano.

- Boon, Marinus A., Albert P. Drijfhout, and Solomon Tesfamichael. Comparison of a fixed-wing and multi-rotor UAV for environmental mapping applications: A case study. The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences 2017, 42, 47–54.

- Wang, Pei, et al. Weed25: A deep learning dataset for weed identification. Frontiers in Plant Science 2022, 13, 1053329.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).