Submitted: 25 November 2024

Posted: 27 November 2024


Abstract

Keywords:

1. Introduction

2. Literature Review

3. Methodology

4. Findings

4.1. High- and Low-Value Intellectual Work in SLRs

4.2. Automating the Low-Value Activities by Integrating Python with ChatGPT

4.3. Complete Automation of Systematic Reviews in Scientific Research – Future Reality or a Myth?

5. Concluding Remarks

5.1. Theoretical Contributions

5.2. Managerial Contributions

5.3. Limitations and Future Research Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. National Academies of Sciences, Engineering, and Medicine; Policy and Global Affairs; Committee on Science, Engineering, Medicine, and Public Policy; Committee on Responsible Science. Identifying and Promoting Best Practices for Research Integrity. In Fostering Integrity in Research; National Academies Press (US), 2017.

2. Welsh, E. Dealing with Data: Using NVivo in the Qualitative Data Analysis Process. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research 2002, 3(2): Using Technology in the Qualitative Research Process.

3. Field, A. Discovering Statistics Using IBM SPSS Statistics; SAGE, 2013; ISBN 978-1-4462-7458-3.

4. Dwivedi, Y.K.; Kshetri, N.; Hughes, L.; Slade, E.L.; Jeyaraj, A.; Kar, A.K.; Baabdullah, A.M.; Koohang, A.; Raghavan, V.; Ahuja, M.; et al. Opinion Paper: “So What If ChatGPT Wrote It?” Multidisciplinary Perspectives on Opportunities, Challenges and Implications of Generative Conversational AI for Research, Practice and Policy. International Journal of Information Management 2023, 71, 102642.

5. Wu, T.; He, S.; Liu, J.; Sun, S.; Liu, K.; Han, Q.-L.; Tang, Y. A Brief Overview of ChatGPT: The History, Status Quo and Potential Future Development. IEEE/CAA J. Autom. Sinica 2023, 10, 1122–1136.

6. Syriani, E.; David, I.; Kumar, G. Screening Articles for Systematic Reviews with ChatGPT. Journal of Computer Languages 2024, 80, 101287.

7. Okoli, C.; Schabram, K. A Guide to Conducting a Systematic Literature Review of Information Systems Research. SSRN Journal 2010.

8. Huang, M.-H.; Rust, R.T. Artificial Intelligence in Service. Journal of Service Research 2018, 21, 155–172.

9. Dwivedi, Y.K.; Hughes, L.; Ismagilova, E.; Aarts, G.; Coombs, C.; Crick, T.; Duan, Y.; Dwivedi, R.; Edwards, J.; Eirug, A.; et al. Artificial Intelligence (AI): Multidisciplinary Perspectives on Emerging Challenges, Opportunities, and Agenda for Research, Practice and Policy. International Journal of Information Management 2021, 57, 101994.

10. Hwang, S.I.; Lim, J.S.; Lee, R.W.; Matsui, Y.; Iguchi, T.; Hiraki, T.; Ahn, H. Is ChatGPT a “Fire of Prometheus” for Non-Native English-Speaking Researchers in Academic Writing? Korean J. Radiol. 2023, 24, 952–959.

11. Moradi, P.; Levy, K. The Future of Work in the Age of AI: Displacement or Risk-Shifting? 2020.

12. Roumeliotis, K.I.; Tselikas, N.D. ChatGPT and Open-AI Models: A Preliminary Review. Future Internet 2023, 15, 192.

13. Adesso, G. Towards the Ultimate Brain: Exploring Scientific Discovery with ChatGPT AI. AI Magazine 2023, 44, 328–342.

14. Reis, J. Omni-Channel Services in the Banking Industry: Qualitative Multi-Method Research. Doctoral Thesis, 2018.

15. Reis, J. From Artificial Intelligence Research to E-Democracy: Contributions to Political Governance and State Modernization. Doctoral Thesis, 2023.

16. Breen, R.L. A Practical Guide to Focus-Group Research. Journal of Geography in Higher Education 2006, 30, 463–475.

17. Halcomb, E.J.; Davidson, P.M. Is Verbatim Transcription of Interview Data Always Necessary? Applied Nursing Research 2006, 19, 38–42.

18. Edhlund, B.M.; McDougall, A.G. NVivo 12 Essentials: Your Guide to the World’s Most Powerful Data Analysis Software; 2019; ISBN 978-1-387-74949-2.

19. Drisko, J.W.; Maschi, T. Content Analysis; Pocket Guides to Social Work Research Methods; Oxford University Press: New York, 2016; ISBN 978-0-19-021549-1.

20. Reis, J.; Amorim, M.; Melão, N. Multichannel Service Failure and Recovery in a O2O Era: A Qualitative Multi-Method Research in the Banking Services Industry. International Journal of Production Economics 2019, 215, 24–33.

21. Reis, J. Exploring Applications and Practical Examples by Streamlining Material Requirements Planning (MRP) with Python. Logistics 2023, 7, 91.

22. Python. Welcome to Python.org. Available online: https://www.python.org/ (accessed on 29 October 2023).

23. Mills, A.; Durepos, G.; Wiebe, E. Encyclopedia of Case Study Research; SAGE Publications: Thousand Oaks, CA, 2010; ISBN 978-1-4129-5670-3.

24. Mohamed, K.S. IoT Physical Layer: Sensors, Actuators, Controllers and Programming. In The Era of Internet of Things: Towards a Smart World; Mohamed, K.S., Ed.; Springer International Publishing: Cham, 2019; pp. 21–47; ISBN 978-3-030-18133-8.

25. Ouzzani, M.; Hammady, H.; Fedorowicz, Z.; Elmagarmid, A. Rayyan—a Web and Mobile App for Systematic Reviews. Systematic Reviews 2016, 5, 210.

26. Rayyan. Rayyan - AI Powered Tool for Systematic Literature Reviews. 2021.

27. Koubaa, A.; Boulila, W.; Ghouti, L.; Alzahem, A.; Latif, S. Exploring ChatGPT Capabilities and Limitations: A Survey. IEEE Access 2023, 11, 118698–118721.

28. Xiao, Y.; Watson, M. Guidance on Conducting a Systematic Literature Review. Journal of Planning Education and Research 2019, 39, 93–112.

29. McKinney, W. pandas: A Foundational Python Library for Data Analysis and Statistics. Python for High Performance and Scientific Computing 2011, 14, 1–9.

30. The pandas development team. pandas: Powerful Data Structures for Data Analysis, Time Series, and Statistics. Available online: https://pypi.org/project/pandas/ (accessed on 27 August 2023).

31. Embarak, O. Data Exploring and Analysis. In Data Analysis and Visualization Using Python: Analyze Data to Create Visualizations for BI Systems; Embarak, O., Ed.; Apress: Berkeley, CA, 2018; pp. 243–292; ISBN 978-1-4842-4109-7.

| Approach | Description |

|---|---|

|

Step 1 Case Study |

A qualitative case study was conducted employing diverse data collection sources, including interviews, direct observation, and focus groups. This methodological approach was designed to discern both high- and low-value intellectual contributions within the context of SLRs. The findings derived from the case study substantiate the development of a conceptual model. |

|

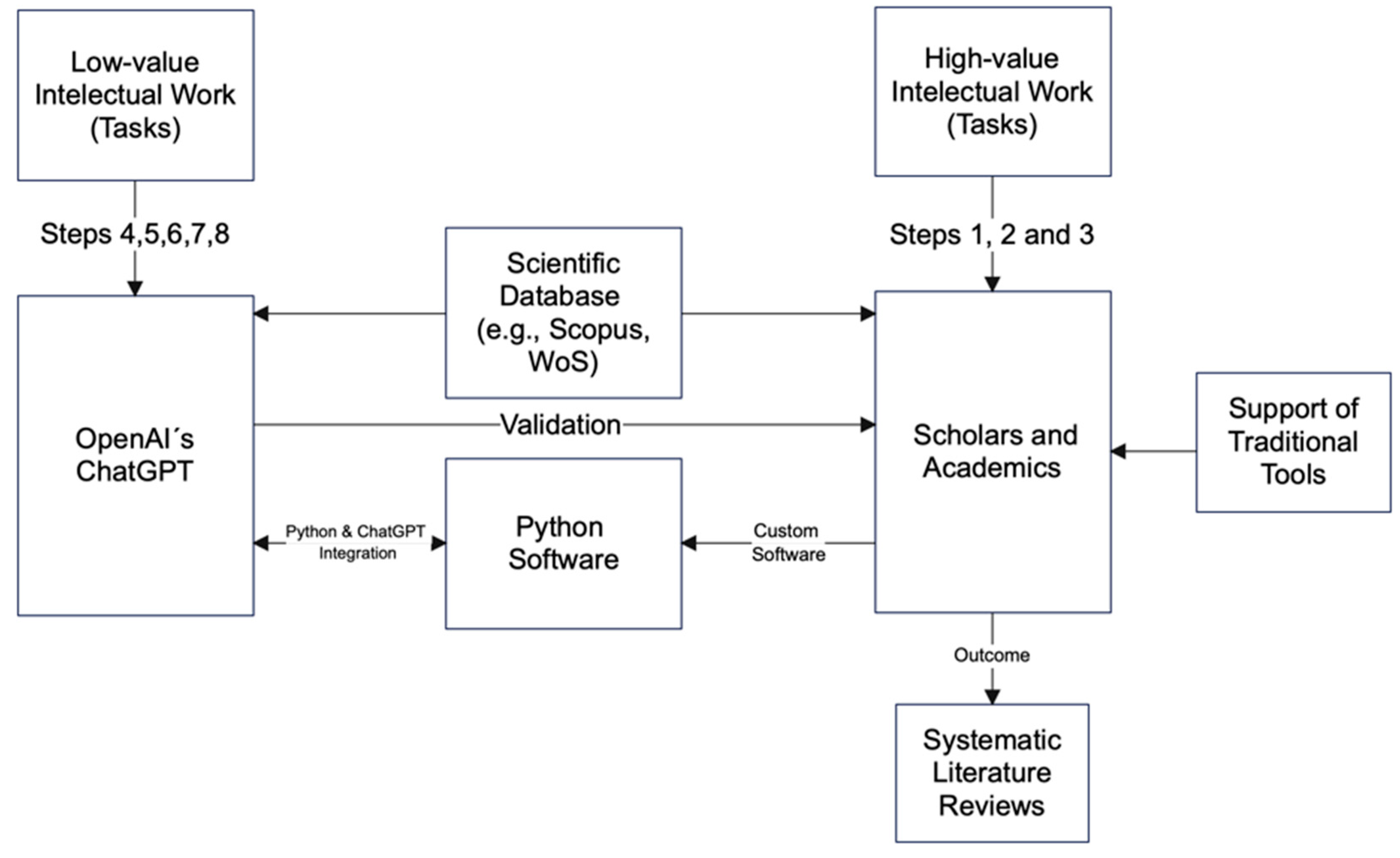

Step 2 Conceptual Framework |

We proposed a conceptual framework delineating the relationship between Python and the execution of low-value tasks, as defined in the case study research, within the contextual domain of ChatGPT. The formulation of this framework involved a rigorous methodology, incorporating insights derived from a comprehensive range of sources. These sources included peer-reviewed scientific articles, conference proceedings, and documentation from the official Python website. |

| Process of Literature Review (PLR) | Short description (adapted from Xiao and Watson [28]) | Low-value intellectual work (data sources: interviews, direct observation, and focus groups) | High-value intellectual work (data sources: interviews, direct observation, and focus groups) |
|---|---|---|---|
| Step 1: Formulate the Problem | Research questions guide the entire literature review process, influencing the selection of studies, the methodology for data extraction and synthesis, and reporting. | – | Research questions guide the literature review process, shaping study selection, methodology, and reporting. Formulating a research problem is a highly intellectual task that requires critical thinking and deep knowledge of the research area. |
| Step 2: Develop and Validate the Review Protocol | A predefined review protocol is crucial for a rigorous SLR. It outlines essential elements such as the study's purpose, research questions, inclusion criteria, search strategies, quality assessment, screening procedures, and strategies for data extraction, synthesis, and reporting. | – | Defining the protocol is a high-value intellectual task that is unlikely to be taken over by machines with current technology; it requires careful reflection to delineate and describe each element. |
| Step 3: Search the Literature | An SLR relies on a thorough literature search covering key elements such as search channels, keywords, sampling strategy, refinement of results, stopping rules, inclusion/exclusion criteria, and screening procedures. | – | Devising a thorough search strategy, with appropriate databases and search terms to identify relevant studies comprehensively, is high-value work for the researcher. |
| Step 4: Screen for Inclusion | Researchers screen references in two stages: a preliminary review of abstracts against the inclusion criteria, followed by a detailed quality assessment of the full texts, as outlined in Step 5. | Removing duplicate records retrieved from scientific databases is typically a low-value task that Python can streamline. ChatGPT can also identify themes and conduct initial analyses, assessing multiple articles for relevance to the research question. | Although ChatGPT can perform low-value piecemeal activities in Step 4, validating the preliminary results, particularly the initial review of abstracts against the inclusion criteria, is a high-value task for researchers. After irrelevant texts are excluded, researchers should examine the full manuscripts in depth. |
| Step 5: Assess Quality | After screening for inclusion, researchers obtain the full texts for quality assessment, the final stage before data extraction and synthesis. | The quality of each study is assessed against predefined criteria. This stage can be automated with ChatGPT, following criteria such as those of the Critical Appraisal Skills Programme (CASP). | ChatGPT can conduct quality assessments efficiently using checklists such as CASP. The researcher's high-value task is to verify that the machine has correctly identified all CASP items in each article. |
| Step 6: Extract Data | Established methods (e.g., meta-synthesis) are used to synthesize research. Data extraction often involves coding, especially in extensive reviews. | In qualitative systematic reviews, two researchers traditionally handle coding and validation. An innovative approach has ChatGPT perform the initial coding, with the researcher validating it in established tools such as NVivo. This combines advanced natural language processing with conventional validation methods, potentially making qualitative data analysis more efficient. | ChatGPT takes on the standardized coding task, while the second (human) researcher performs the critical task of validating and reviewing the entire process with traditional tools, looking for discrepancies and points of agreement. This includes ensuring the accuracy of the results and confirming the fidelity of the coding performed by ChatGPT. |
| Step 7: Analyze and Synthesize Data | After data extraction, the reviewer organizes the data according to the chosen review type, often combining charts, tables, and textual descriptions; reporting standards vary slightly across review methodologies. | Scholarly databases such as Elsevier's Scopus and Web of Science (WoS) offer graphical tools that are crucial for bibliometric analyses in SLRs. These visuals are more effective when paired with concise textual descriptions. ChatGPT can efficiently generate explanations from Scopus or WoS articles, such as the temporal evolution of a topic or the factors influencing research potential in different countries, producing succinct scholarly narratives that enhance the interpretive value of the graphs. | The researcher's high-value task is to verify the accuracy of the machine's output, ensuring that the results are correct and that the narratives generated by ChatGPT faithfully reflect the underlying data. |
| Step 8: Report Findings | For reliable and replicable literature reviews, the systematic review process must be documented in detail, including the inclusion and exclusion criteria with their justifications and the findings of the literature search, screening, and quality assessment. | In this final stage, ChatGPT can assist, specifically with comprehensive English proofreading. | Formulating well-founded conclusions from the synthesized evidence is a high-value undertaking, as is discussing the implications of the findings for theory, practice, and future research. |

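The table above lists duplicate removal (Step 4) as a low-value task that Python can streamline. The following is a minimal sketch using only the standard library, assuming the database export is a CSV file with `Title` and `DOI` columns (the column names are an assumption; adjust them to the actual export). A record counts as a duplicate if its normalized DOI or its normalized title has already been seen, and the first occurrence is kept. Once the matching keys are normalized, pandas' `DataFrame.drop_duplicates` would be an equivalent alternative; records merged on title alone are still worth a quick manual check, since distinct papers can share a title.

```python
import csv
import re
from typing import Iterable


def normalize_doi(doi: str) -> str:
    """Lower-case a DOI and strip a resolver prefix such as https://doi.org/."""
    doi = doi.strip().lower()
    for prefix in ("https://doi.org/", "http://doi.org/", "doi:"):
        if doi.startswith(prefix):
            doi = doi[len(prefix):]
    return doi.strip()


def normalize_title(title: str) -> str:
    """Lower-case a title and collapse punctuation and whitespace, so that
    trivially different spellings of the same title compare equal."""
    return " ".join(re.sub(r"[^a-z0-9]+", " ", title.lower()).split())


def deduplicate(rows: Iterable[dict]) -> list[dict]:
    """Keep the first occurrence of each record; later records whose
    normalized DOI or normalized title was already seen are dropped."""
    seen_dois: set[str] = set()
    seen_titles: set[str] = set()
    unique = []
    for row in rows:
        doi = normalize_doi(row.get("DOI") or "")
        title = normalize_title(row.get("Title") or "")
        if (doi and doi in seen_dois) or (title and title in seen_titles):
            continue  # duplicate of a record that has already been kept
        if doi:
            seen_dois.add(doi)
        if title:
            seen_titles.add(title)
        unique.append(row)
    return unique


def deduplicate_csv(lines: Iterable[str]) -> list[dict]:
    """Deduplicate a CSV export assumed to have 'Title' and 'DOI' columns.

    Typical use with a file ("scopus.csv" is a hypothetical name;
    utf-8-sig drops a byte-order mark, if present):
        with open("scopus.csv", newline="", encoding="utf-8-sig") as f:
            records = deduplicate_csv(f)
    """
    return deduplicate(csv.DictReader(lines))
```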
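For Step 5, the researcher's high-value task is to verify that the machine addressed every checklist item. One way to make that check mechanical, assuming ChatGPT is instructed to reply with a JSON object keyed by item number, is sketched below. The three questions are an illustrative subset paraphrasing the opening items of the CASP qualitative checklist and should be replaced with the official checklist; the prompt wording, the JSON reply format, and the answer options (assumed to mirror CASP's Yes / Can't tell / No) are assumptions, not part of CASP or of the ChatGPT API.

```python
import json

# Illustrative subset paraphrasing the opening items of the CASP qualitative
# checklist (an assumption); replace with the full official checklist.
CHECKLIST = {
    "1": "Was there a clear statement of the aims of the research?",
    "2": "Is a qualitative methodology appropriate?",
    "3": "Was the research design appropriate to address the aims of the research?",
}
# Answer options assumed for the JSON reply.
ALLOWED_ANSWERS = {"yes", "no", "can't tell"}


def build_prompt(article_text: str) -> str:
    """Ask the model to answer every item and to reply with JSON only."""
    items = "\n".join(f"{key}. {question}" for key, question in CHECKLIST.items())
    return (
        "Appraise the article below against each checklist item. Reply only "
        'with a JSON object mapping every item number to "yes", "no" or '
        "\"can't tell\".\n\n"
        f"Checklist:\n{items}\n\nArticle:\n{article_text}"
    )


def check_reply(raw_reply: str) -> dict:
    """List the problems a human reviewer should look at before accepting
    the machine's appraisal."""
    try:
        answers = json.loads(raw_reply)
    except json.JSONDecodeError:
        answers = None
    if not isinstance(answers, dict):
        # Not a JSON object: every item must be appraised manually.
        return {"parsed": False, "missing": sorted(CHECKLIST, key=int), "invalid": []}
    missing = sorted((k for k in CHECKLIST if k not in answers), key=int)
    invalid = sorted(
        (k for k in CHECKLIST
         if k in answers and str(answers[k]).strip().lower() not in ALLOWED_ANSWERS),
        key=int,
    )
    return {"parsed": True, "missing": missing, "invalid": invalid}
```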
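For Step 6, the human validator looks for discrepancies and points of agreement between ChatGPT's initial coding and their own. A minimal sketch, assuming both codings have been exported to a simple mapping from a segment identifier to the set of codes applied (a hypothetical structure, not NVivo's export format), compares them segment by segment and flags segments whose overlap, measured as a Jaccard index, falls below a threshold. When each segment carries exactly one code, a chance-corrected statistic such as Cohen's kappa would be a common alternative.

```python
from dataclasses import dataclass


@dataclass
class SegmentComparison:
    """Codes applied to one text segment, split by who applied them."""
    segment_id: str
    agreed: set[str]
    only_chatgpt: set[str]
    only_researcher: set[str]

    @property
    def jaccard(self) -> float:
        """Share of all codes on the segment that both coders applied
        (1.0 when neither coder applied any code)."""
        total = len(self.agreed) + len(self.only_chatgpt) + len(self.only_researcher)
        return 1.0 if total == 0 else len(self.agreed) / total


def compare_coding(
    chatgpt: dict[str, set[str]], researcher: dict[str, set[str]]
) -> list[SegmentComparison]:
    """Compare two codings segment by segment; a segment coded by only one
    side is compared against an empty set."""
    results = []
    for segment_id in sorted(chatgpt.keys() | researcher.keys()):
        a = chatgpt.get(segment_id, set())
        b = researcher.get(segment_id, set())
        results.append(SegmentComparison(segment_id, a & b, a - b, b - a))
    return results


def needs_review(results: list[SegmentComparison], threshold: float = 1.0) -> list[str]:
    """Segments whose overlap is below the threshold (by default, any
    disagreement at all) go back to the human validator."""
    return [r.segment_id for r in results if r.jaccard < threshold]
```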
| PLR | Potential Automation |

|---|---|

|

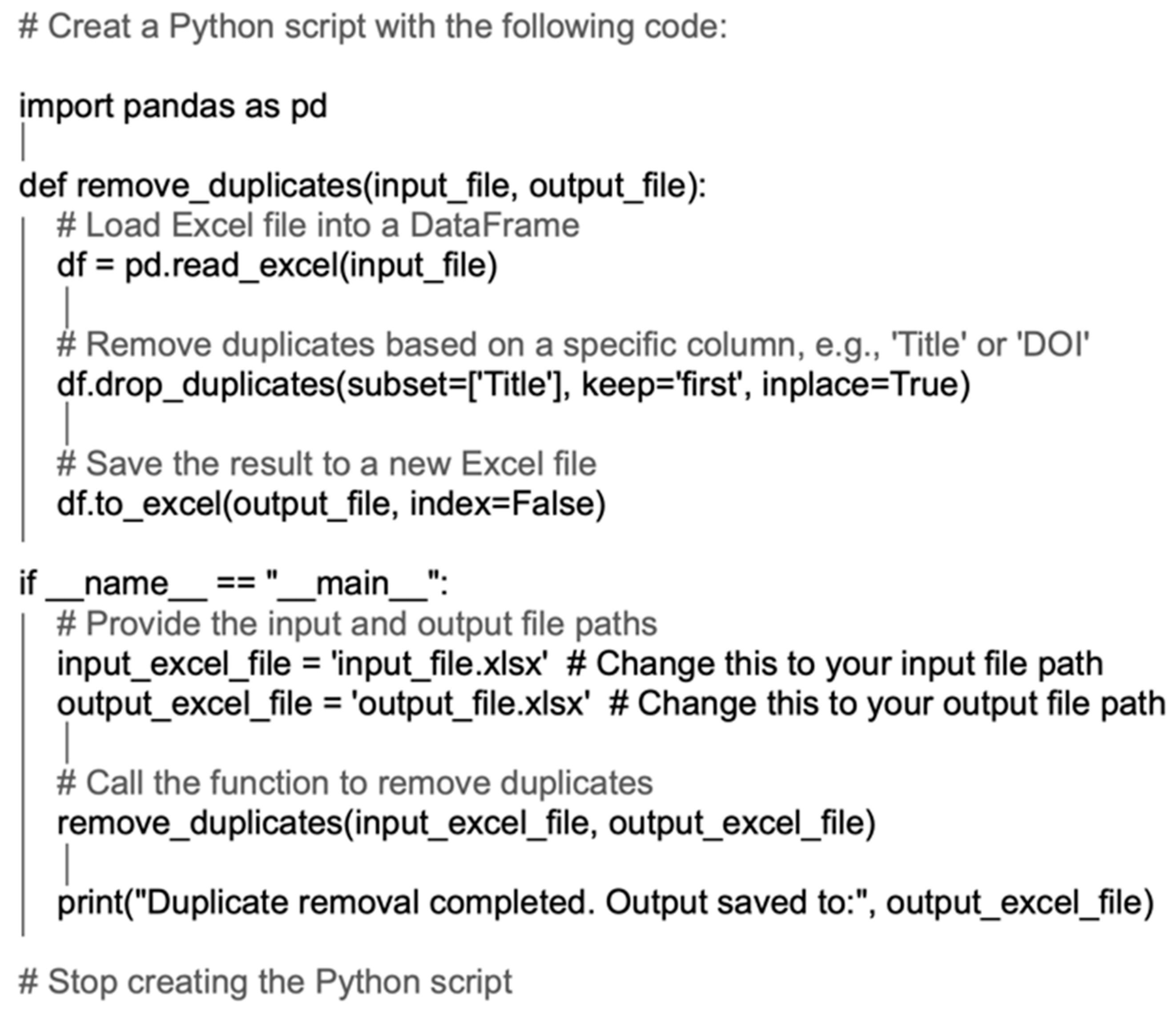

Step 4 Screen for Inclusion |

Customizing software is essential for the automated identification and elimination of duplicate entries within the literature review section of documents sourced from databases (e.g., Scopus) in CSV format. Furthermore, ChatGPT can assist in crafting algorithms for the initial screening and prioritization of pertinent articles. Lastly, ChatGPT can also identify themes and conduct initial analyses, assessing multiple articles for relevance to the research question. |

|

Step 5 Assessment Quality |

Implement algorithms or decision trees to automate the initial quality assessment, flagging studies that may require closer inspection by researchers. |

|

Step 6 Extracting Data |

Close to Step 4, the complete manuscripts may be submitted to ChatGPT, enabling the generation to perform the initial coding and elaboration of concise summaries. Combining traditional methods (NVIVO) with this approach increases the validity and reliability of the research. |

|

Step 7 Analyzing and Synthesizing Data |

Utilize NLP/ChatGPTs to access online content and generate concise text for explaining diverse Scopus/WoS graphs. |

|

Step 8 Report Findings |

The researcher has the option to either directly input the text from the manuscript for a comprehensive review by ChatGPT or configure the OpenAI API key to generate a response from ChatGPT. |

| Summary | Overreliance on automated tools in research reviews poses risks, involving including irrelevant studies and oversight of critical details. Researchers should balance automation with manual review for optimal accuracy and comprehensiveness. |

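Step 4 of the table above also mentions algorithms for the initial screening and prioritization of pertinent articles. The sketch below ranks records by a weighted count of search terms found in the title or abstract; the terms, their weights, and the choice to count title matches double are hypothetical and would in practice come from the review protocol. It only orders the records for human screening and excludes nothing.

```python
import re
from typing import Iterable, NamedTuple


class Record(NamedTuple):
    title: str
    abstract: str


def relevance_score(record: Record, weights: dict[str, float]) -> float:
    """Add each term's weight once: doubled if the term is in the title,
    single if it appears only in the abstract (the doubling is an assumption)."""
    title, abstract = record.title.lower(), record.abstract.lower()
    score = 0.0
    for term, weight in weights.items():
        # Whole-word match, so that e.g. "review" does not match "reviewer".
        pattern = r"\b" + re.escape(term.lower()) + r"\b"
        if re.search(pattern, title):
            score += 2 * weight
        elif re.search(pattern, abstract):
            score += weight
    return score


def prioritize(
    records: Iterable[Record], weights: dict[str, float]
) -> list[tuple[float, Record]]:
    """Order records from most to least promising; ties keep input order."""
    scored = [(relevance_score(r, weights), r) for r in records]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)
```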
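For Step 5, the table suggests algorithms or decision trees that flag studies for closer inspection. The sketch below is a hand-written rule cascade in the spirit of a decision tree, not a trained model. Its criteria (a described method, peer review, a reported sample size with a hypothetical minimum of 10, and reported limitations) are illustrative assumptions rather than CASP items, and a study is only ever marked "pass" or "flag", never excluded automatically.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Study:
    """Minimal, hypothetical metadata captured during screening."""
    peer_reviewed: bool
    methods_described: bool
    sample_size: Optional[int]  # None when the study does not report one
    limitations_reported: bool


def initial_assessment(study: Study, min_sample: int = 10) -> tuple[str, list[str]]:
    """Return ("pass" or "flag", reasons). A flag only means the researcher
    should inspect the study closely; nothing is excluded here."""
    if not study.methods_described:
        # Root split: without a method section the other checks are moot.
        return "flag", ["methods not described"]
    reasons = []
    if not study.peer_reviewed:
        reasons.append("not peer reviewed")
    if study.sample_size is None:
        reasons.append("sample size not reported")
    elif study.sample_size < min_sample:
        reasons.append(f"sample size below {min_sample}")
    if not study.limitations_reported:
        reasons.append("limitations not reported")
    return ("flag" if reasons else "pass"), reasons
```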
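For Step 7, the narrative on a topic's temporal evolution can be grounded in figures computed locally rather than left to the model. A minimal sketch, assuming a CSV export with a `Year` column, counts records per year (filling missing years with zero) and builds a prompt asking ChatGPT to describe the trend using only those figures. The prompt wording is an assumption, and the researcher still checks the returned text against the Scopus or WoS graph.

```python
import csv
from collections import Counter
from typing import Iterable


def publications_per_year(lines: Iterable[str], year_column: str = "Year") -> dict[int, int]:
    """Count records per year in a CSV export, filling gaps with zero.
    Rows whose year field is empty or non-numeric are skipped."""
    counts: Counter = Counter()
    for row in csv.DictReader(lines):
        value = (row.get(year_column) or "").strip()
        if value.isdigit():
            counts[int(value)] += 1
    if not counts:
        return {}
    # Counter returns 0 for years with no records, so gaps appear explicitly.
    return {year: counts[year] for year in range(min(counts), max(counts) + 1)}


def build_trend_prompt(counts: dict[int, int], topic: str) -> str:
    """Ask for a short narrative restricted to the figures supplied."""
    series = ", ".join(f"{year}: {n}" for year, n in counts.items())
    return (
        f"Annual publication counts on '{topic}' from a bibliographic database: "
        f"{series}. Write a concise, scholarly paragraph describing the temporal "
        "evolution of the topic, using only these figures."
    )
```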
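For Step 8, configuring an OpenAI API key allows the proofreading request to be scripted. A minimal sketch, assuming the official `openai` Python package (v1 interface, whose `OpenAI()` client reads the key from the `OPENAI_API_KEY` environment variable), splits the manuscript into paragraph-based chunks and sends each one to the Chat Completions endpoint. The model name, the 8,000-character chunk size, and the system prompt are assumptions to adjust; the client is passed in as a parameter so the function can be tried without network access.

```python
from typing import Any

# Assumed instructions; adapt them to the journal's style requirements.
SYSTEM_PROMPT = (
    "You are an academic copy editor. Correct English grammar, spelling and "
    "style in the text you receive without changing its meaning, citations "
    "or technical terms. Return only the corrected text."
)


def split_into_chunks(text: str, max_chars: int = 8000) -> list[str]:
    """Group paragraphs into chunks of at most max_chars characters so that a
    long manuscript fits into several requests. A single paragraph longer
    than max_chars is kept whole."""
    chunks, current = [], ""
    for paragraph in text.split("\n\n"):
        candidate = f"{current}\n\n{paragraph}" if current else paragraph
        if len(candidate) <= max_chars or not current:
            current = candidate
        else:
            chunks.append(current)
            current = paragraph
    if current:
        chunks.append(current)
    return chunks


def proofread(text: str, client: Any, model: str = "gpt-4o-mini") -> str:
    """Send each chunk to the Chat Completions endpoint and join the replies.
    `client` is expected to behave like openai.OpenAI()."""
    corrected = []
    for chunk in split_into_chunks(text):
        response = client.chat.completions.create(
            model=model,
            messages=[
                {"role": "system", "content": SYSTEM_PROMPT},
                {"role": "user", "content": chunk},
            ],
        )
        corrected.append(response.choices[0].message.content)
    return "\n\n".join(corrected)


# Usage (needs `pip install openai` and OPENAI_API_KEY set in the environment;
# "manuscript.txt" is a hypothetical file name):
#     from openai import OpenAI
#     with open("manuscript.txt", encoding="utf-8") as f:
#         print(proofread(f.read(), OpenAI()))
```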
Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).