Submitted: 16 November 2024

Posted: 20 November 2024


Abstract

Keywords:

1. Introduction

- 1)

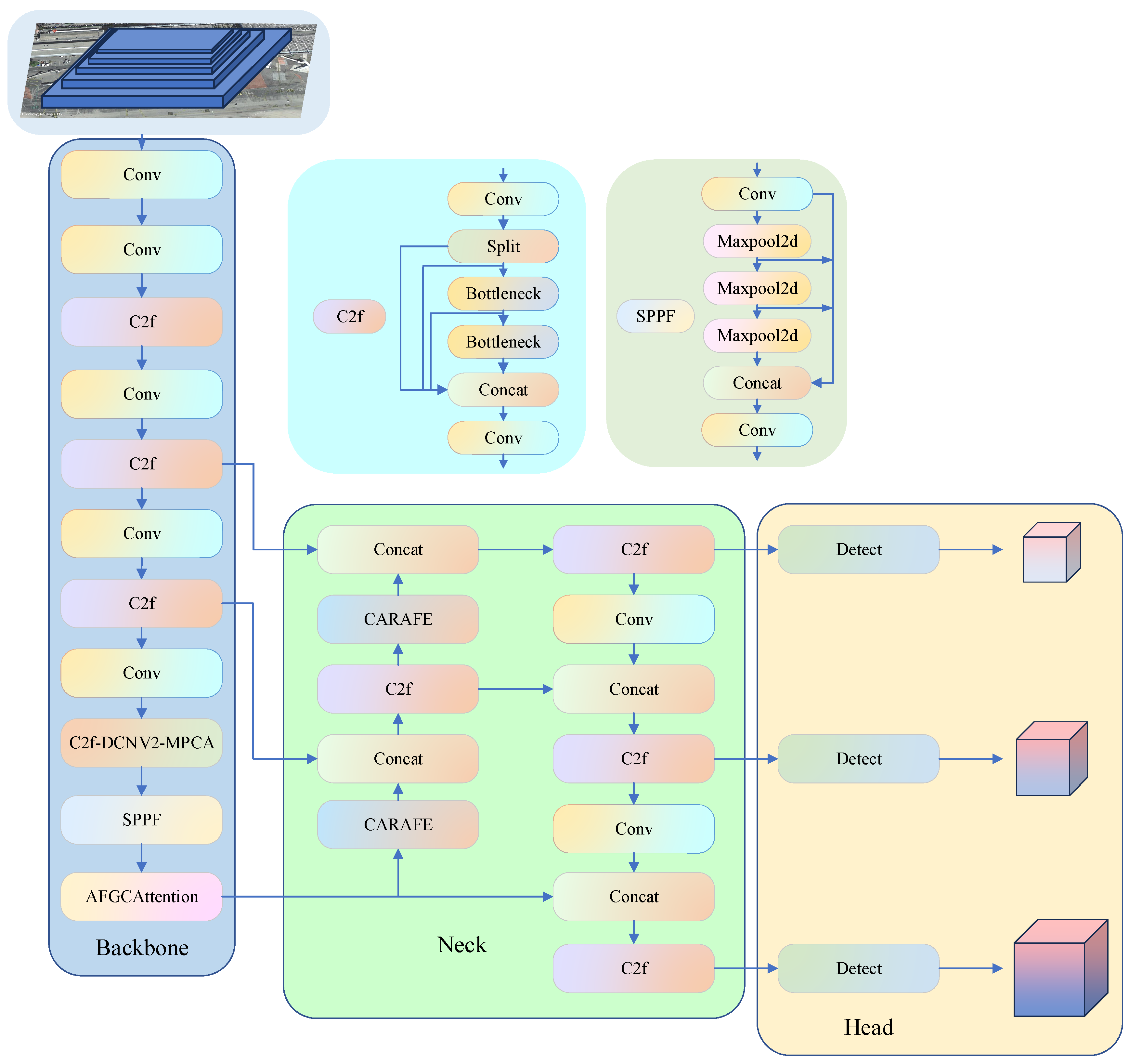

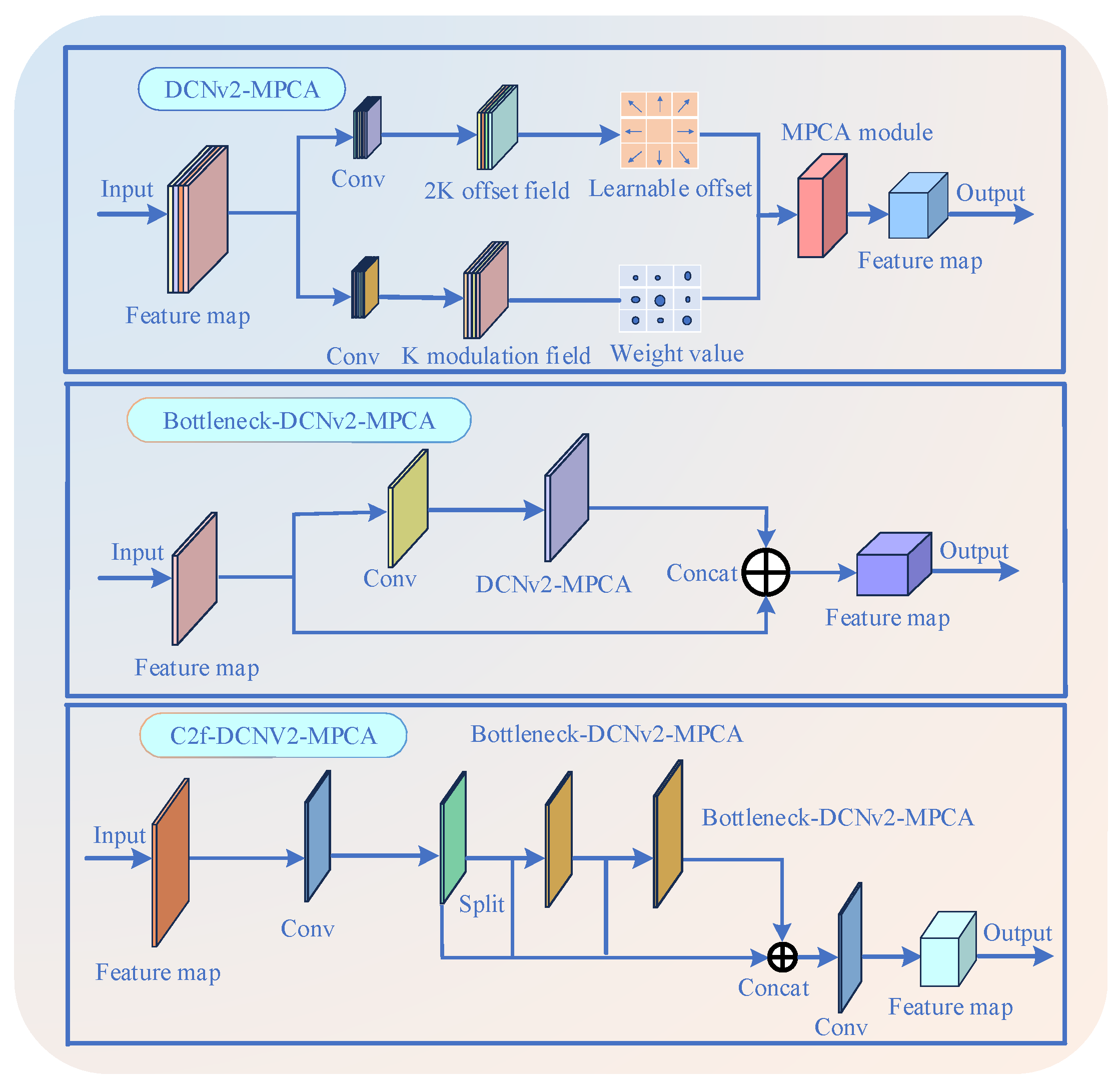

- Introduced the C2f-DCNv2-MPCA optimization module to enhance the model’s multi-scale feature extraction capability, enabling better capture of small object details.

- 2)

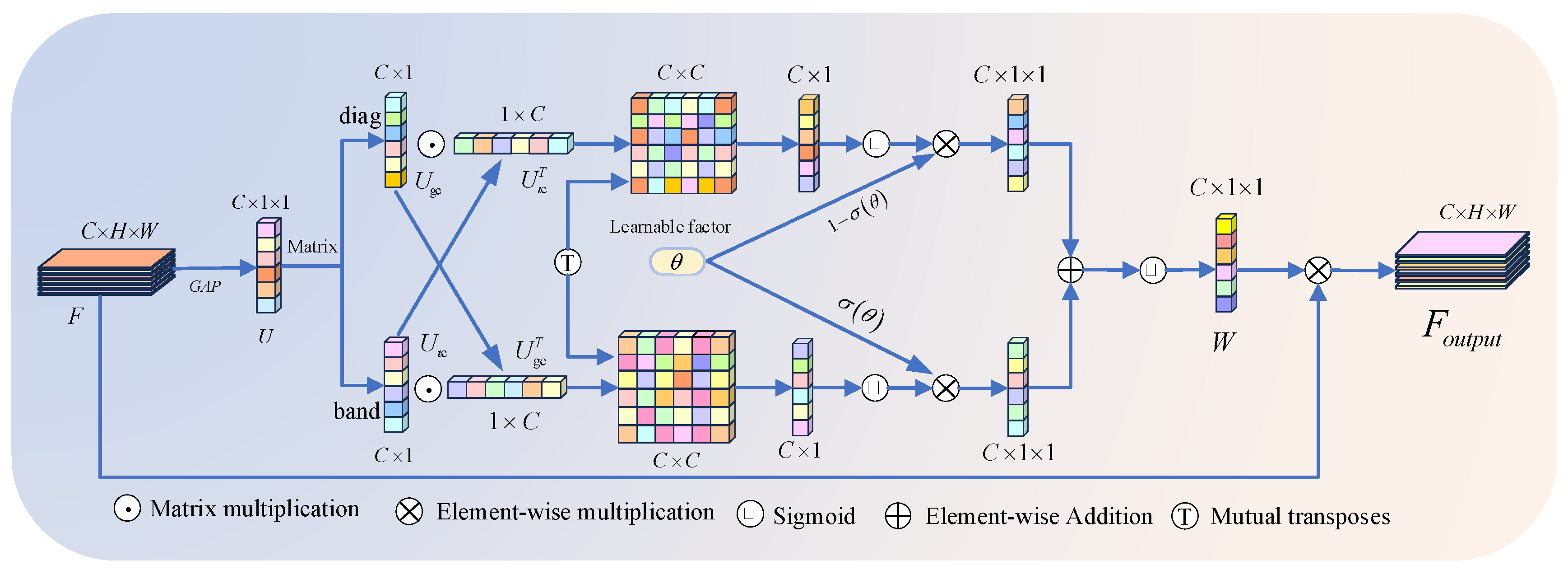

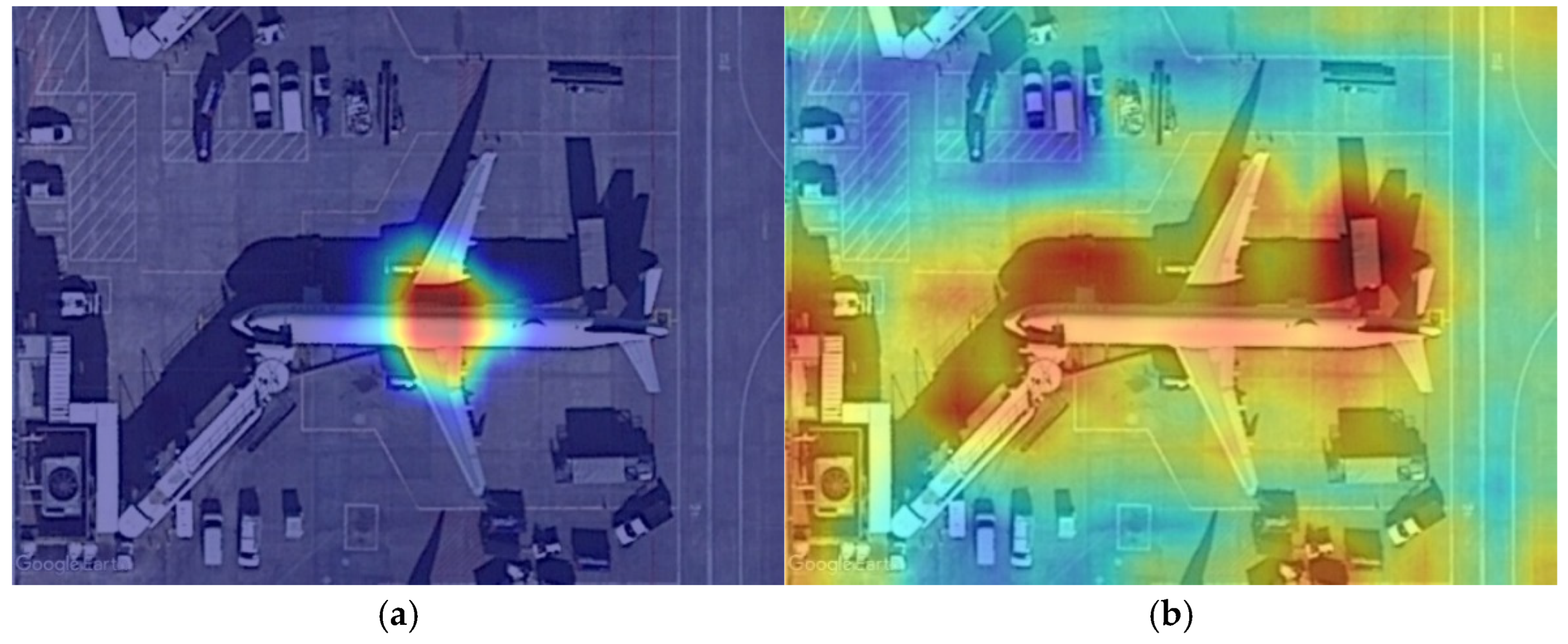

- Added the AFGCAttention mechanism to adaptively focus on key regions of the image, reducing interference from irrelevant information and thereby improving the model’s accuracy.

- 3)

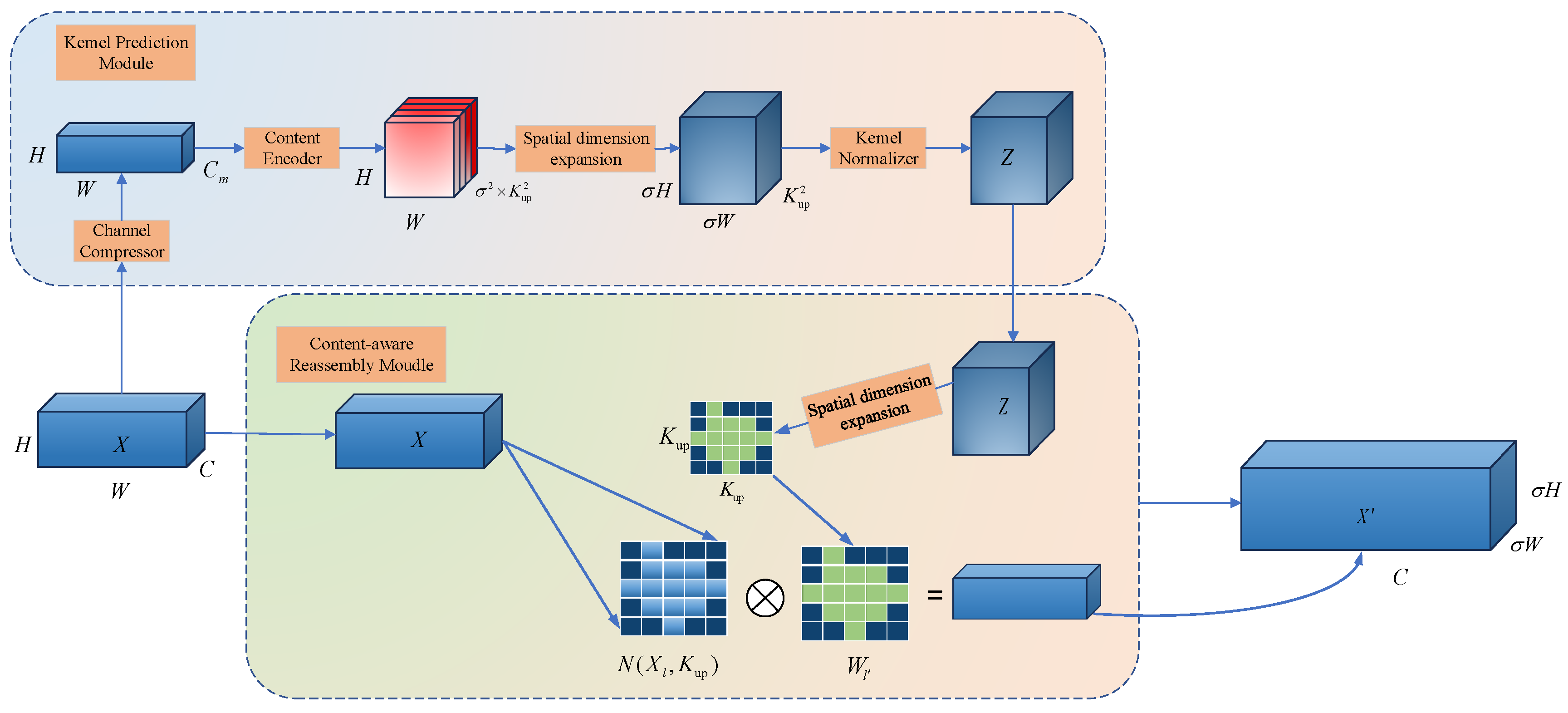

- Introduced the CARAFE (Content-Aware ReAssembly of Features) upsampling operator to adaptively reassemble feature maps with content-aware reconstruction, effectively enlarging the details of small objects, allowing the network to better restore the shape and location of small objects, thus improving small object detection accuracy.

4) Adopted the GIoU loss function to measure the match between predicted and ground-truth boxes more accurately, improving training efficiency, reducing convergence time, and enhancing the accuracy of bounding box regression.
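To make the GIoU contribution concrete, the following is a minimal sketch of the standard GIoU definition for two axis-aligned boxes: the IoU minus the fraction of the smallest enclosing box that is not covered by the union. The function names `giou` and `giou_loss`, the `(x1, y1, x2, y2)` box layout, and the handling of degenerate boxes are assumptions made for illustration; this is not the training code used in this work.

```python
from typing import Tuple

# Assumed box layout for this sketch: (x1, y1, x2, y2) with x1 <= x2, y1 <= y2.
Box = Tuple[float, float, float, float]


def giou(a: Box, b: Box) -> float:
    """Generalized IoU of two axis-aligned boxes; the value lies in [-1, 1]."""
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])

    # Intersection rectangle (zero area when the boxes do not overlap).
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = area_a + area_b - inter

    # Smallest axis-aligned box enclosing both inputs.
    enclose_w = max(a[2], b[2]) - min(a[0], b[0])
    enclose_h = max(a[3], b[3]) - min(a[1], b[1])
    enclose = enclose_w * enclose_h

    if union <= 0.0 or enclose <= 0.0:
        return 0.0  # degenerate boxes: a convention chosen for this sketch

    iou = inter / union
    # Penalize the empty part of the enclosing box.
    return iou - (enclose - union) / enclose


def giou_loss(pred: Box, target: Box) -> float:
    """Loss form: 0 for a perfect match, approaching 2 for distant boxes."""
    return 1.0 - giou(pred, target)


if __name__ == "__main__":
    print(giou_loss((0, 0, 2, 2), (0, 0, 2, 2)))  # identical boxes
    print(giou_loss((0, 0, 1, 1), (2, 0, 3, 1)))  # disjoint boxes still differ from the constant IoU loss
```

Unlike a plain IoU loss, which is constant for all non-overlapping pairs, the enclosing-box term keeps changing as disjoint boxes move closer together, so the loss still carries a training signal in that case.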

2. Related Work

2.1. Vision Transformer

2.2. Attention Mechanism

2.3. YOLOv8 Algorithm

3. Strategies for Improving YOLOv8

3.1. Improvement of the C2f Algorithm

3.2. AFGCAttention Module

3.3. CARAFE Upsampling Operator
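The introduction describes CARAFE as reassembling feature maps in a content-aware way. As a rough illustration of the reassembly step only, the NumPy sketch below upsamples a feature map by taking, for each output location, a softmax-weighted sum over the k × k neighbourhood of its source location. The function name `carafe_reassemble`, the array layouts, and the zero padding are assumptions for this sketch; the learned kernel prediction branch is omitted, so the kernel logits are simply passed in.

```python
import numpy as np


def carafe_reassemble(x: np.ndarray, kernel_logits: np.ndarray, scale: int = 2) -> np.ndarray:
    """Content-aware reassembly (sketch).

    x:             feature map of shape (C, H, W)
    kernel_logits: per-output-location kernels, shape (H*scale, W*scale, k, k), k odd
    returns:       upsampled map of shape (C, H*scale, W*scale)
    """
    c, h, w = x.shape
    k = kernel_logits.shape[-1]
    r = k // 2

    # Softmax over each k x k kernel so that its weights sum to 1.
    flat = kernel_logits.reshape(h * scale, w * scale, k * k)
    flat = np.exp(flat - flat.max(axis=-1, keepdims=True))
    kernels = (flat / flat.sum(axis=-1, keepdims=True)).reshape(h * scale, w * scale, k, k)

    # Zero-pad spatially so every source location has a full neighbourhood.
    padded = np.pad(x, ((0, 0), (r, r), (r, r)))

    out = np.empty((c, h * scale, w * scale), dtype=np.float64)
    for i_up in range(h * scale):
        for j_up in range(w * scale):
            i, j = i_up // scale, j_up // scale   # source location of this output pixel
            patch = padded[:, i:i + k, j:j + k]   # (C, k, k) window centred on (i, j)
            out[:, i_up, j_up] = (patch * kernels[i_up, j_up]).sum(axis=(1, 2))
    return out


if __name__ == "__main__":
    feats = np.arange(16, dtype=float).reshape(1, 4, 4)
    logits = np.random.default_rng(0).normal(size=(8, 8, 3, 3))  # stand-in for predicted kernels
    print(carafe_reassemble(feats, logits, scale=2).shape)
```

When every kernel puts almost all of its weight on the centre tap, this reduces to nearest-neighbour upsampling; the content-aware behaviour comes entirely from how the kernels are predicted, which this sketch does not model.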

3.4. GIoU Bounding Box Regression Loss Function

4. Experimental Setup

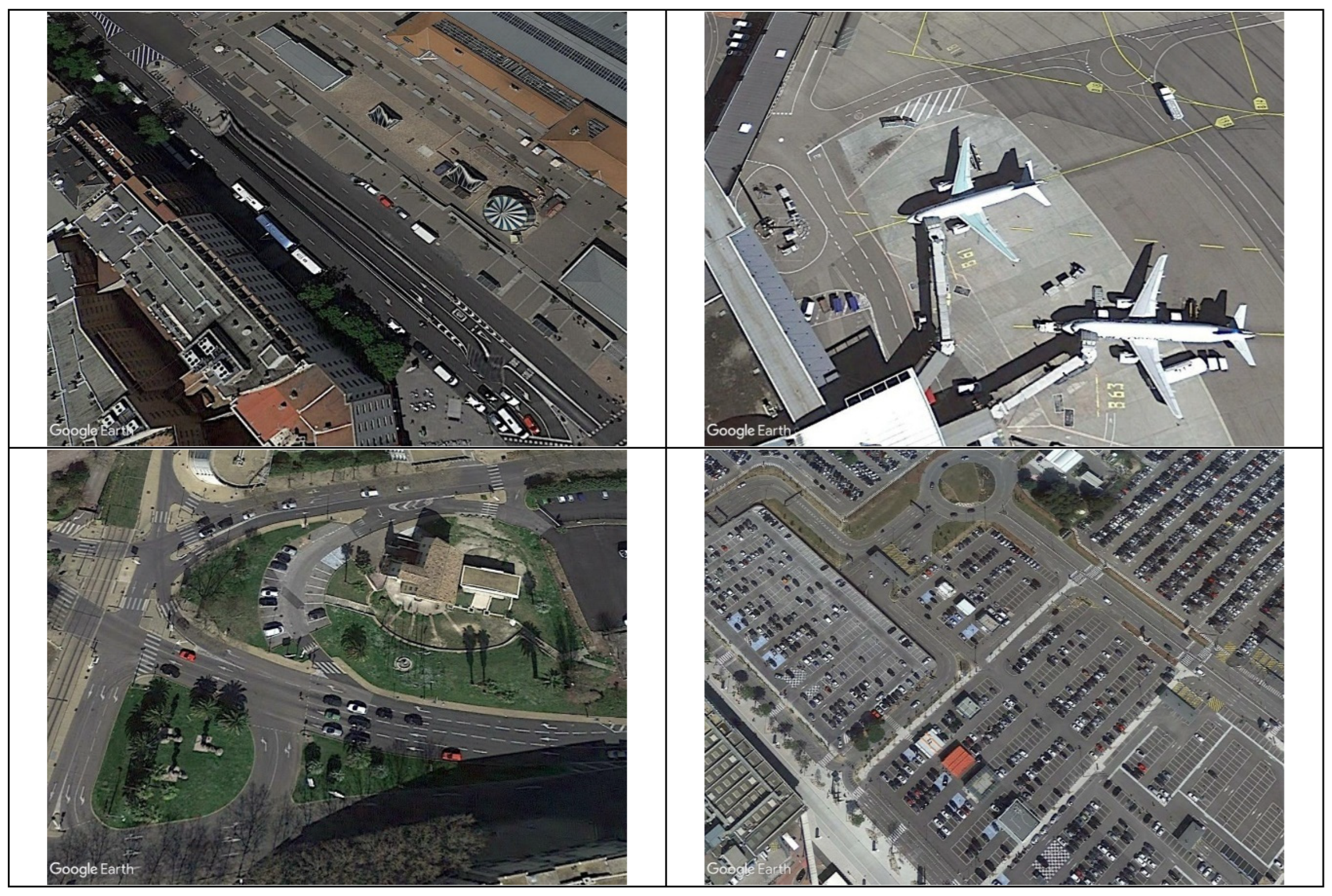

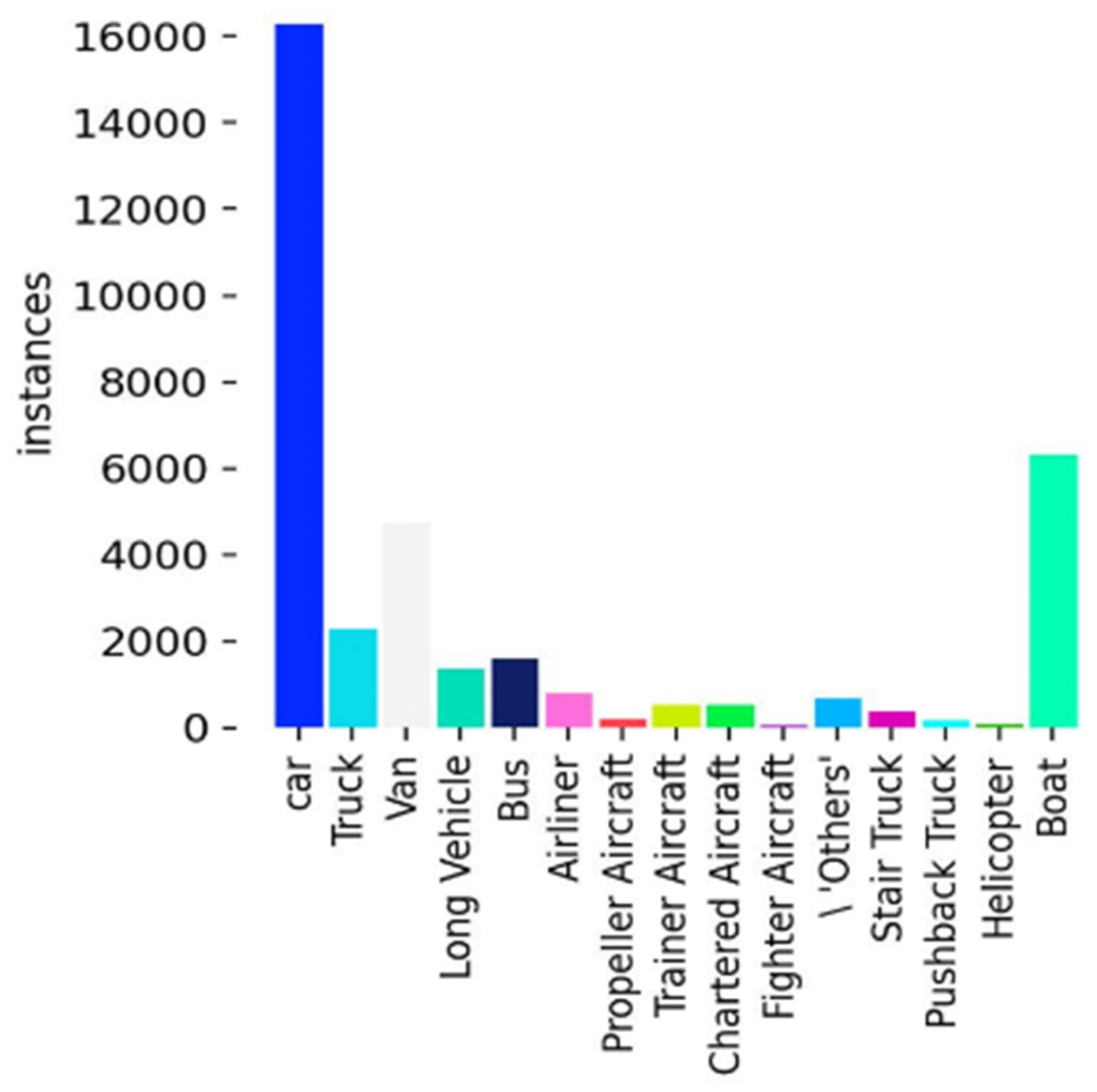

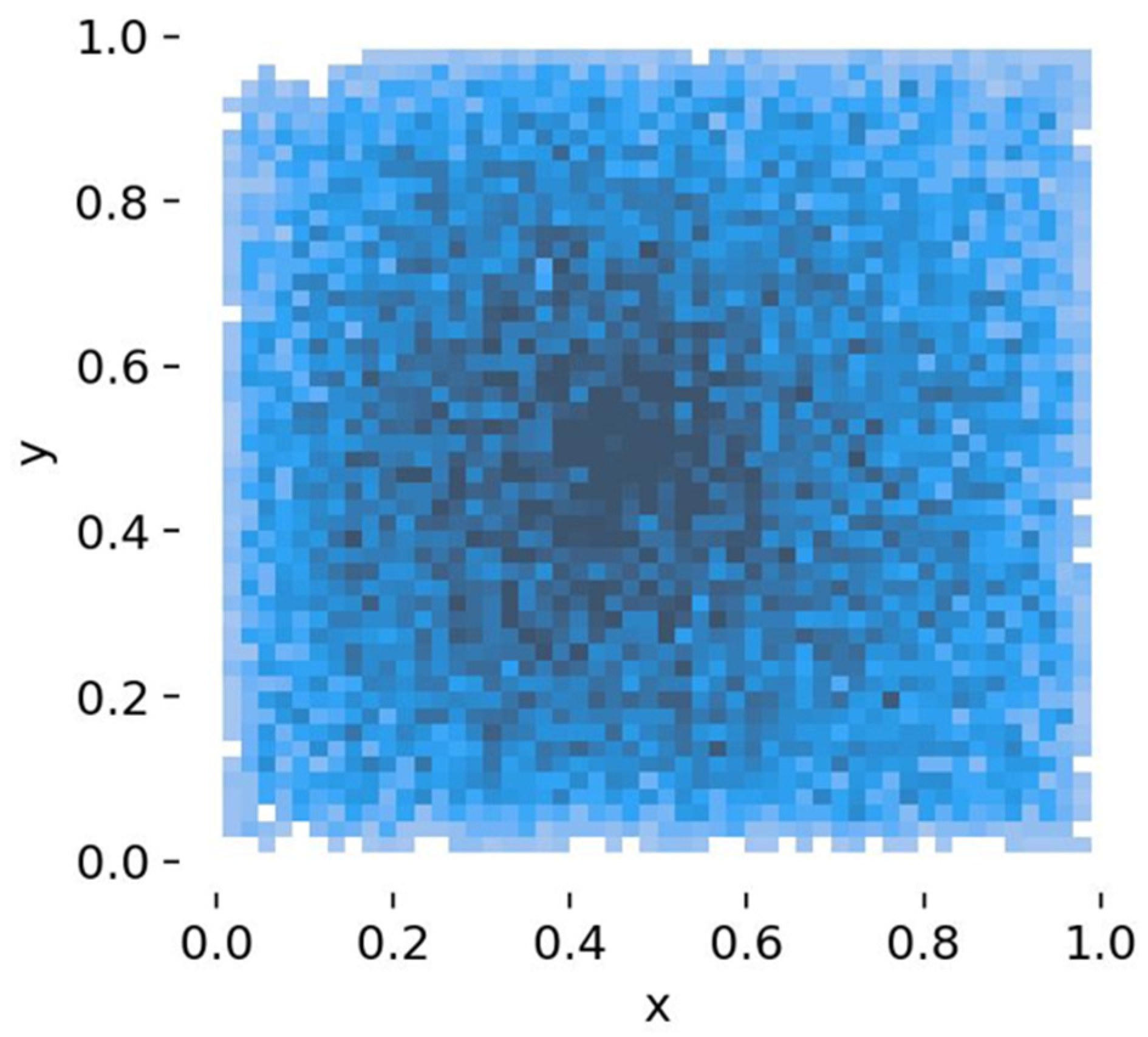

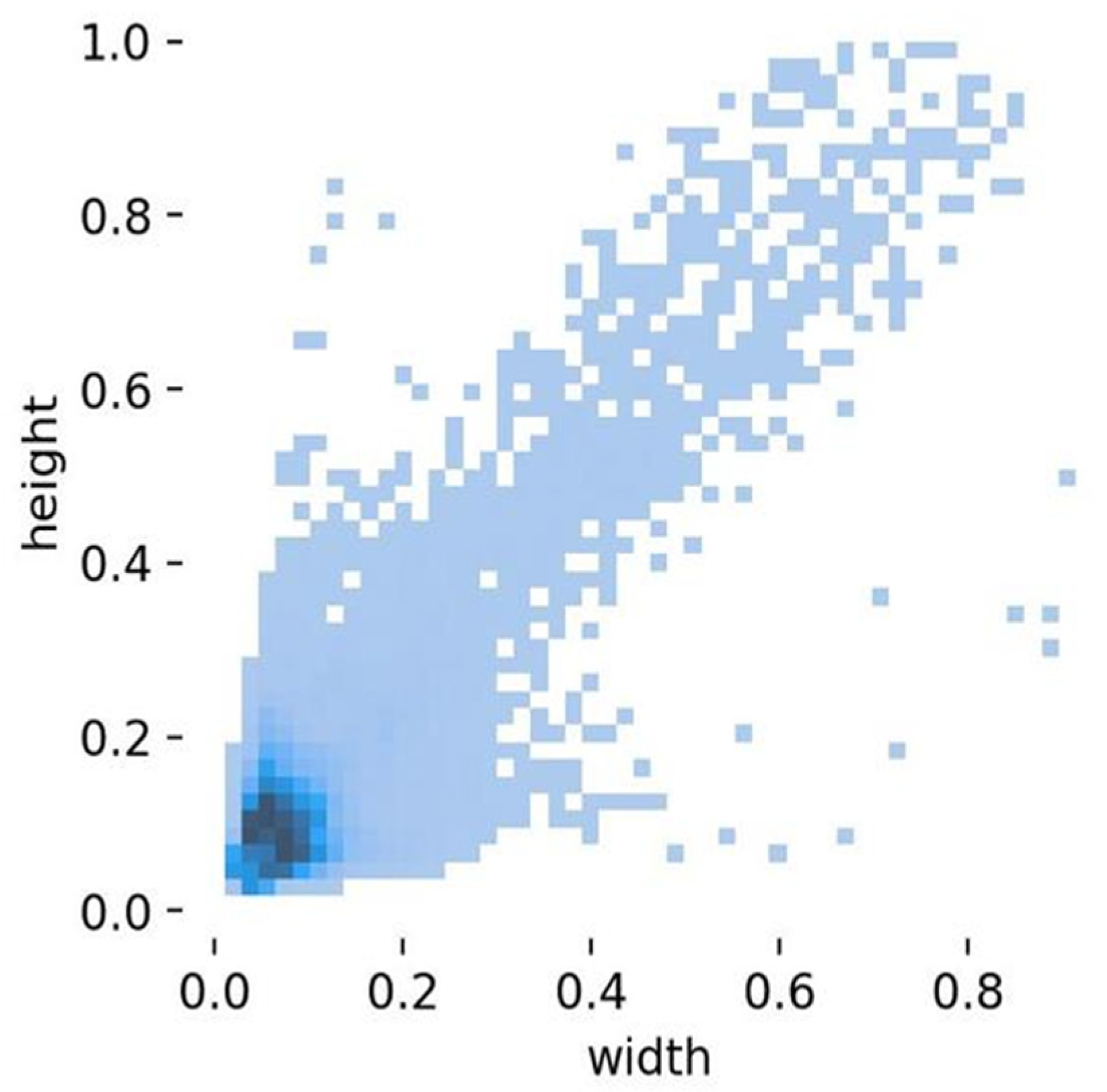

4.1. Dataset

4.2. Experimental Environment and Parameter Settings

4.3. Evaluation Metrics
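The result tables report Precision, Recall, mAP@0.5, and mAP@0.5-0.95. For reference, the standard definitions of these metrics are summarized below, on the assumption that the conventional forms are the ones used here. The symbols TP, FP, FN (true positives, false positives, false negatives), P(R) (precision as a function of recall), and N (number of classes) are notation introduced for this summary.

```latex
\begin{aligned}
\text{Precision} &= \frac{TP}{TP + FP}, \\
\text{Recall}    &= \frac{TP}{TP + FN}, \\
AP               &= \int_{0}^{1} P(R)\,\mathrm{d}R, \\
mAP              &= \frac{1}{N}\sum_{i=1}^{N} AP_i .
\end{aligned}
```

Under the usual convention, mAP@0.5 counts a detection as correct when its IoU with a ground-truth box is at least 0.5, while mAP@0.5-0.95 averages mAP over IoU thresholds from 0.5 to 0.95 in steps of 0.05.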

5. Experiment Analysis

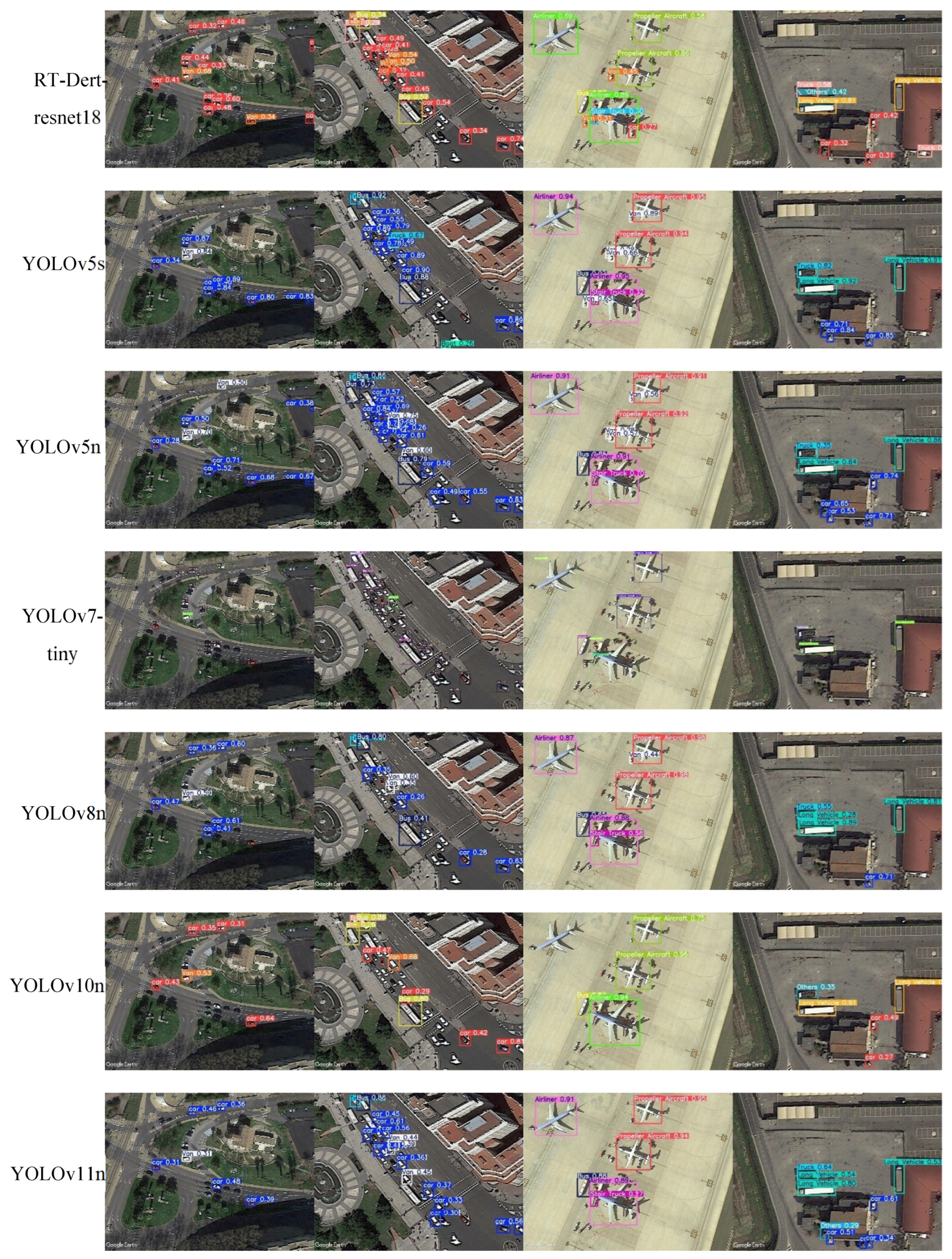

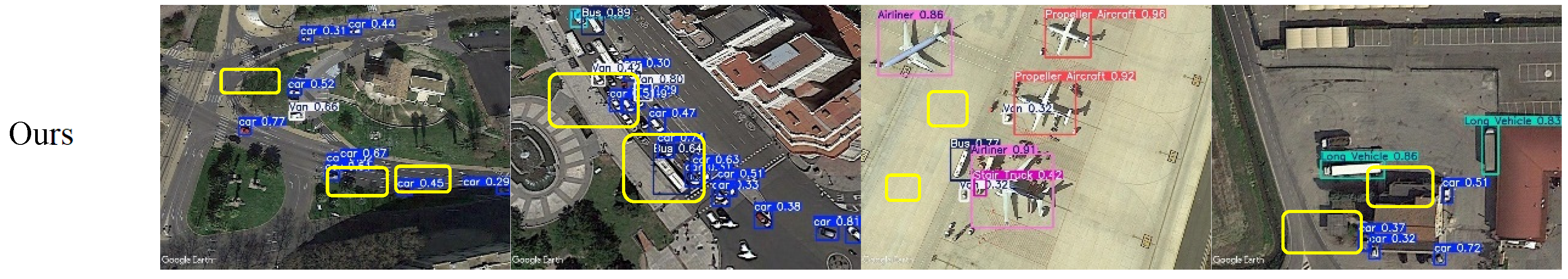

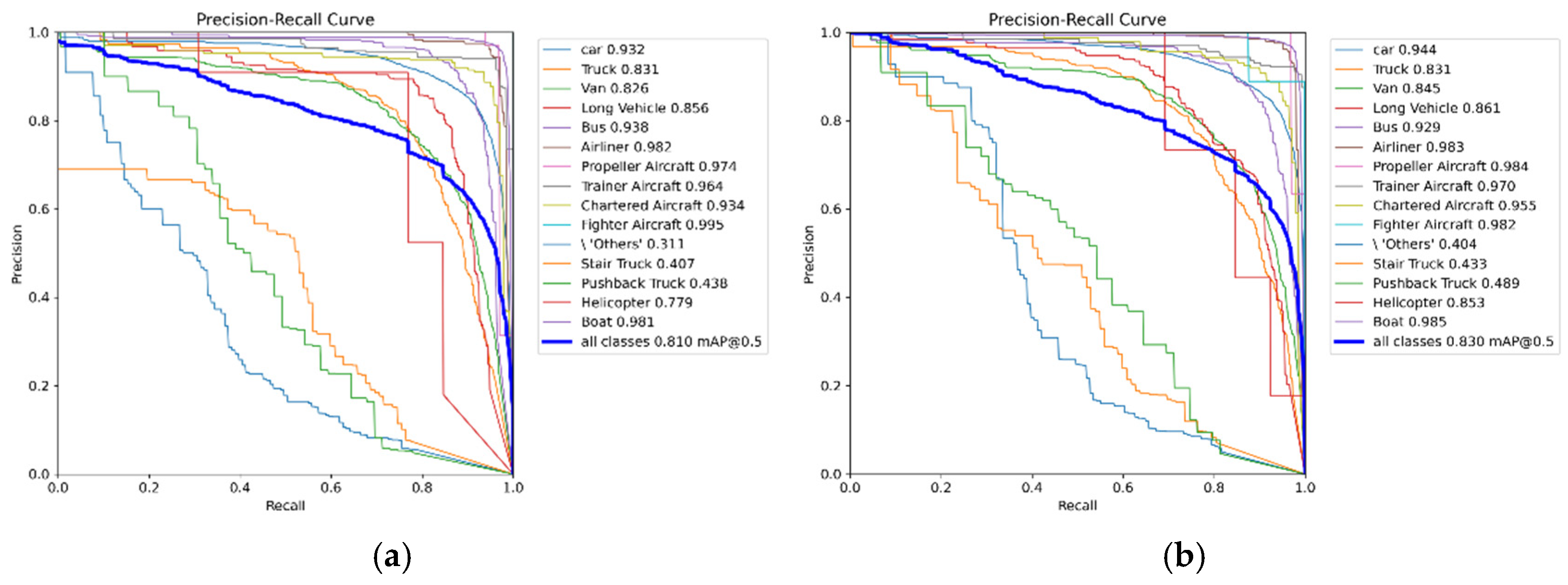

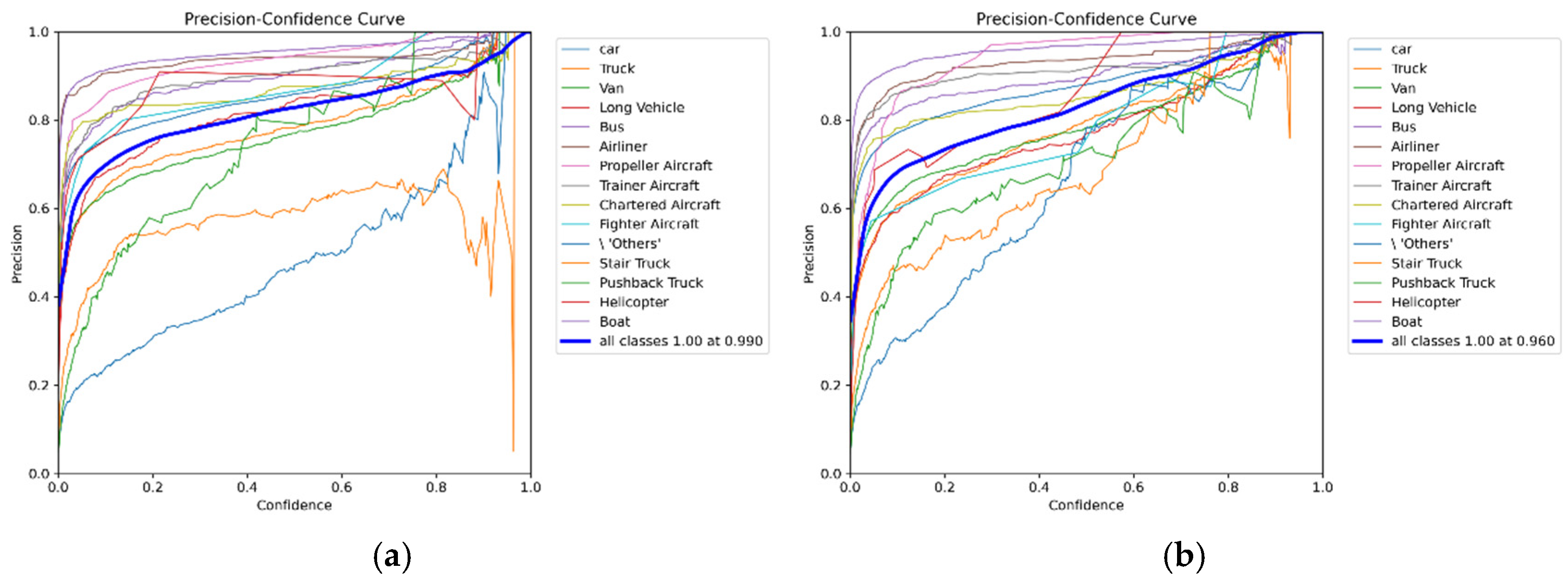

5.1. Algorithm Comparison Experiment

5.2. Ablation Study

5.3. Result Visualization

6. Discussion

6.1. Result Analysis

6.2. Comparison and Improvement

7. Conclusions

Data Availability Statement

References

- Ennouri, Karim, Slim Smaoui, and Mohamed Ali Triki. “Detection of urban and environmental changes via remote sensing.” Circular Economy and Sustainability 1.4 (2021): 1423-1437.

- Koukiou, Georgia. “SAR Features and Techniques for Urban Planning—A Review.” Remote Sensing 16.11 (2024): 1923.

- Mohan, M., et al. “Remote Sensing-Based Ecosystem Monitoring and Disaster Management in Urban Environments Using Machine Learnings.” Remote Sensing in Earth Systems Sciences (2024): 1-9.

- Lin, Chungan, and Ramakant Nevatia. “Building detection and description from a single intensity image.” Computer vision and image understanding 72.2 (1998): 101-121.

- Kim, Taejung, et al. “Tracking road centerlines from high resolution remote sensing images by least squares correlation matching.” Photogrammetric Engineering & Remote Sensing 70.12 (2004): 1417-1422.

- Lowe, David G. “Distinctive image features from scale-invariant keypoints.” International Journal of Computer Vision 60.2 (2004): 91-110.

- Ok, Ali Ozgun. “Automated detection of buildings from single VHR multispectral images using shadow information and graph cuts.” ISPRS journal of photogrammetry and remote sensing 86 (2013): 21-40.

- Girshick, Ross, et al. “Rich feature hierarchies for accurate object detection and semantic segmentation.” Proceedings of the IEEE conference on computer vision and pattern recognition. 2014.

- Girshick, Ross. “Fast R-CNN.” arXiv preprint 2015, arXiv:1504.08083.

- Ren, Shaoqing, et al. “Faster R-CNN: Towards real-time object detection with region proposal networks.” IEEE transactions on pattern analysis and machine intelligence 39.6 (2016): 1137-1149.

- Liu, Wei, et al. “Ssd: Single shot multibox detector.” Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part I 14. Springer International Publishing, 2016.

- Redmon, Joseph, et al. “You only look once: Unified, real-time object detection.” Proceedings of the IEEE conference on computer vision and pattern recognition. 2016.

- Redmon, Joseph, and Ali Farhadi. “YOLO9000: better, faster, stronger.” Proceedings of the IEEE conference on computer vision and pattern recognition. 2017.

- Redmon, Joseph, and Ali Farhadi. “Yolov3: An incremental improvement.” arXiv preprint 2018, arXiv:1804.02767.

- Bochkovskiy, Alexey, Chien-Yao Wang, and Hong-Yuan Mark Liao. “Yolov4: Optimal speed and accuracy of object detection.” arXiv preprint 2020, arXiv:2004.10934.

- Jocher, Glenn, et al. “ultralytics/yolov5: v6.2 - YOLOv5 classification models, Apple M1, reproducibility, ClearML and Deci.ai integrations.” Zenodo (2022).

- Li, Chuyi, et al. “YOLOv6: A single-stage object detection framework for industrial applications.” arXiv preprint 2022, arXiv:2209.02976.

- Wang, Chien-Yao, Alexey Bochkovskiy, and Hong-Yuan Mark Liao. “YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors.” Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 2023.

- Sohan, Mupparaju, et al. “A review on yolov8 and its advancements.” International Conference on Data Intelligence and Cognitive Informatics. Springer, Singapore, 2024.

- Wang, Chien-Yao, I-Hau Yeh, and Hong-Yuan Mark Liao. “Yolov9: Learning what you want to learn using programmable gradient information.” arXiv preprint 2024, arXiv:2402.13616.

- Wang, Ao, et al. “Yolov10: Real-time end-to-end object detection.” arXiv preprint 2024, arXiv:2405.14458.

- Khanam, Rahima, and Muhammad Hussain. “YOLOv11: An Overview of the Key Architectural Enhancements.” arXiv preprint 2024, arXiv:2410.17725.

- Liu, Rui, et al. “An improved faster-RCNN algorithm for object detection in remote sensing images.” 2020 39th Chinese Control Conference (CCC). IEEE, 2020.

- Wang, Anrui, et al. “CDE-DETR: A Real-Time End-To-End High-Resolution Remote Sensing Object Detection Method Based on RT-DETR.” IGARSS 2024-2024 IEEE International Geoscience and Remote Sensing Symposium. IEEE, 2024.

- Bochkovskiy, Alexey, Chien-Yao Wang, and Hong-Yuan Mark Liao. “Yolov4: Optimal speed and accuracy of object detection.” arXiv preprint 2020, arXiv:2004.10934.

- Patil, Shefali, Sangita Chaudhari, and Puja Padiya. “Small Object Detection in Remote Sensing Images using Modified YOLOv5.” 2023 1st DMIHER International Conference on Artificial Intelligence in Education and Industry 4.0 (IDICAIEI). Vol. 1. IEEE, 2023.

- Qi, Guanqiu, et al. “Small object detection method based on adaptive spatial parallel convolution and fast multi-scale fusion.” Remote Sensing 14.2 (2022): 420.

- Zhao, Xiaolei, et al. “Multiscale object detection in high-resolution remote sensing images via rotation invariant deep features driven by channel attention.” International Journal of Remote Sensing 42.15 (2021): 5764-5783.

- Duan, Jian, Xi Zhang, and Tielin Shi. “A hybrid attention-based paralleled deep learning model for tool wear prediction.” Expert Systems with Applications 211 (2023): 118548.

- Zhang, Jiarui, et al. “Faster and Lightweight: An Improved YOLOv5 Object Detector for Remote Sensing Images.” Remote Sensing 15.20 (2023): 4974.

- Ma, Mingyang, and Huanli Pang. “SP-YOLOv8s: An improved YOLOv8s model for remote sensing image tiny object detection.” Applied Sciences 13.14 (2023): 8161.

- Zhang, Cong, et al. “Efficient inductive vision transformer for oriented object detection in remote sensing imagery.” IEEE Transactions on Geoscience and Remote Sensing (2023).

- Wang, Di, et al. “Advancing plain vision transformer toward remote sensing foundation model.” IEEE Transactions on Geoscience and Remote Sensing 61 (2022): 1-15.

- Xu, Kejie, Peifang Deng, and Hong Huang. “Vision transformer: An excellent teacher for guiding small networks in remote sensing image scene classification.” IEEE Transactions on Geoscience and Remote Sensing 60 (2022): 1-15.

- Peng, Lintao, et al. “Image-free single-pixel object detection.” Optics Letters 48.10 (2023): 2527-2530.

- Azad, Reza, et al. “Advances in medical image analysis with vision transformers: a comprehensive review.” Medical Image Analysis 91 (2024): 103000.

- Wang, Qilong, et al. “ECA-Net: Efficient channel attention for deep convolutional neural networks.” Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 2020.

- Dosovitskiy, Alexey, et al. “An image is worth 16x16 words: Transformers for image recognition at scale.” arXiv preprint 2020, arXiv:2010.11929.

- Liu, Ze, et al. “Swin transformer: Hierarchical vision transformer using shifted windows.” Proceedings of the IEEE/CVF international conference on computer vision. 2021.

- Carion, Nicolas, et al. “End-to-end object detection with transformers.” European conference on computer vision. Cham: Springer International Publishing, 2020.

- Woo, Sanghyun, et al. “Cbam: Convolutional block attention module.” Proceedings of the European conference on computer vision (ECCV). 2018.

- Varghese, Rejin, and M. Sambath. “YOLOv8: A Novel Object Detection Algorithm with Enhanced Performance and Robustness.” 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS). IEEE, 2024.

- Zhu, Xizhou, et al. “Deformable convnets v2: More deformable, better results.” Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 2019.

- Sun, Hang, et al. “Unsupervised Bidirectional Contrastive Reconstruction and Adaptive Fine-Grained Channel Attention Networks for image dehazing.” Neural Networks 176 (2024): 106314.

- Wang, Jiaqi, et al. “Carafe: Content-aware reassembly of features.” Proceedings of the IEEE/CVF international conference on computer vision. 2019.

- Rezatofighi, Hamid, et al. “Generalized intersection over union: A metric and a loss for bounding box regression.” Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 2019.

- Haroon, Muhammad, Muhammad Shahzad, and Muhammad Moazam Fraz. “Multisized object detection using spaceborne optical imagery.” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 13 (2020): 3032-3046.

- Kou, Renke, et al. “Infrared small target segmentation networks: A survey.” Pattern Recognition 143 (2023): 109788.

| Algorithm | Precision/% | Recall/% | mAP@0.5/% | mAP@0.5-0.95/% | FPS (f/s) | GFLOPS | Params (M) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| SSD | 81.1 | 77.2 | 81.9 |  |  |  |  |
| Faster R-CNN | 64.5 | 81.3 | 76.7 |  |  |  |  |
| YOLOv5s | 81.4 | 78.0 | 81.4 | 65.7 | 227.2 | 15.9 | 7.05 |
| YOLOv5n | 85.1 | 74.9 | 81.0 | 63.5 | 454.5 | 4.2 | 1.77 |
| YOLOv7-tiny | 74.6 | 78.1 | 79.2 | 61.8 | 344.8 | 13.1 | 6.04 |
| YOLOv8n | 80.0 | 79.4 | 81.0 | 66.5 | 357.1 | 8.1 | 3.00 |
| YOLOv10n | 73.8 | 70.0 | 76.1 | 59.3 | 123.4 | 8.4 | 2.73 |
| YOLOv11n | 72.7 | 78.2 | 78.9 | 62.7 | 94.3 | 6.3 | 2.58 |
| RT-Detr-resnet18 | 72.9 | 73.9 | 74.7 | 59.7 | 59.1 | 78.1 | 25.47 |
| Ours | 85.0 | 75.3 | 83.0 | 66.9 | 111.1 | 8.3 | 3.24 |

| Category \ Algorithm | SSD | Faster R-CNN | YOLOv5s | YOLOv5n | YOLOv7-tiny | YOLOv8n | YOLOv10n | YOLOv11n | RT-Detr-resnet18 | Ours |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Car | 83.2 | 55.6 | 86.1 | 87.4 | 78.0 | 83.6 | 83.9 | 81.4 | 77.9 | 88.7 |
| Truck | 70.5 | 61.7 | 77.2 | 81.3 | 69.1 | 75.2 | 73.3 | 75.6 | 71.8 | 81.0 |
| Van | 77.7 | 57.1 | 78.0 | 79.2 | 70.7 | 73.3 | 77.0 | 69.7 | 76.4 | 79.0 |
| Long Vehicle | 73.4 | 57.2 | 76.3 | 84.0 | 69.2 | 81.5 | 71.7 | 67.3 | 60.3 | 77.7 |
| Bus | 83.7 | 68.7 | 90.8 | 88.6 | 81.3 | 88.8 | 85.9 | 83.9 | 76.1 | 90.3 |
| Airliner | 91.2 | 80.8 | 95.7 | 93.4 | 89.1 | 94.0 | 91.3 | 90.5 | 86.2 | 94.1 |
| Propeller Aircraft | 90.9 | 75.6 | 94.1 | 95.1 | 96.7 | 93.0 | 89.7 | 81.9 | 83.1 | 98.7 |
| Trainer Aircraft | 87.3 | 68.0 | 93.3 | 93.6 | 86.1 | 89.7 | 85.6 | 87.1 | 72.4 | 92.2 |
| Chartered Aircraft | 79.6 | 81.1 | 87.3 | 88.4 | 80.5 | 84.3 | 85.7 | 79.5 | 75.2 | 85.7 |
| Fighter Aircraft | 100.0 | 85.7 | 79.1 | 84.2 | 82.0 | 84.3 | 67.8 | 60.2 | 75.8 | 80.3 |
| Others | 56.8 | 41.8 | 55.3 | 54.9 | 32.3 | 38.2 | 35.2 | 36.2 | 37.2 | 78.5 |
| Stair Truck | 71.1 | 39.1 | 65.2 | 69.9 | 54.1 | 58.2 | 67.2 | 60.1 | 58.2 | 66.1 |
| Pushback Truck | 64.7 | 35.9 | 65.1 | 81.7 | 64.4 | 69.8 | 39.4 | 47.6 | 59.1 | 72.4 |
| Helicopter | 100.0 | 90.0 | 82.6 | 98.8 | 74.3 | 90.5 | 59.0 | 75.8 | 100.0 | 92.7 |
| Boat | 87.1 | 69.5 | 94.4 | 96.4 | 91.7 | 95.0 | 94.4 | 94.3 | 83.4 | 97.1 |

| Category \ Algorithm | SSD | Faster R-CNN | YOLOv5s | YOLOv5n | YOLOv7-tiny | YOLOv8n | YOLOv10n | YOLOv11n | RT-Detr-resnet18 | Ours |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Car | 91.2 | 84.5 | 92.2 | 93.5 | 94.4 | 93.2 | 92.7 | 94.3 | 91.1 | 94.4 |
| Truck | 77.1 | 74.8 | 82.5 | 81.0 | 82.6 | 83.1 | 81.1 | 83.6 | 74.9 | 83.1 |
| Van | 83.7 | 77.8 | 81.5 | 82.1 | 84.6 | 82.6 | 80.9 | 83.9 | 76.5 | 84.5 |
| Long Vehicle | 75.3 | 74.2 | 85.8 | 86.6 | 80.6 | 85.6 | 79.5 | 77.6 | 74.3 | 86.1 |
| Bus | 88.9 | 86.5 | 94.3 | 92.8 | 91.0 | 93.8 | 88.8 | 90.4 | 84.1 | 92.9 |
| Airliner | 97.9 | 98.2 | 97.8 | 97.9 | 97.7 | 98.2 | 96.9 | 98.3 | 95.2 | 98.3 |
| Propeller Aircraft | 94.9 | 94.1 | 97.1 | 99.1 | 96.3 | 97.4 | 90.8 | 94.6 | 93.5 | 98.4 |
| Trainer Aircraft | 95.2 | 91.8 | 98.2 | 98.1 | 96.7 | 96.4 | 95.5 | 96.1 | 92.3 | 97.0 |
| Chartered Aircraft | 96.1 | 96.4 | 93.7 | 94.2 | 94.8 | 93.4 | 93.7 | 95.1 | 89.2 | 95.5 |
| Fighter Aircraft | 100.0 | 97.0 | 95.5 | 98.2 | 99.5 | 99.5 | 87.3 | 89.3 | 96.7 | 98.2 |
| Others | 39.2 | 31.5 | 29.7 | 32.2 | 24.6 | 31.1 | 20.5 | 24.5 | 25.3 | 40.4 |
| Stair Truck | 52.5 | 45.1 | 48.9 | 45.1 | 38.3 | 40.7 | 44.0 | 48.5 | 40.7 | 43.3 |
| Pushback Truck | 42.7 | 26.1 | 34.2 | 32.5 | 26.3 | 43.8 | 23.0 | 39.2 | 30.8 | 48.9 |
| Helicopter | 100.0 | 81.8 | 92.1 | 84.0 | 82.5 | 77.9 | 69.3 | 70.3 | 59.3 | 85.3 |
| Boat | 94.6 | 91.0 | 98.1 | 98.5 | 98.2 | 98.1 | 97.3 | 98.2 | 96.0 | 98.5 |

| No. | Experiment | Precision/% | Recall/% | mAP@0.5/% | FPS (f/s) | GFLOPS | Params (M) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 1 | YOLOv8n | 80.0 | 79.4 | 81.0 | 357.1 | 8.1 | 3.00 |
| 2 | YOLOv8n+AFGCAttention | 76.1 | 79.9 | 82.2 | 123.4 | 8.1 | 3.07 |
| 3 | YOLOv8n+AFGCAttention+CARAFE | 78.3 | 78.9 | 82.2 | 131.5 | 8.4 | 3.21 |
| 4 | YOLOv8n+AFGCAttention+CARAFE+C2f-DCNv2-MPCA | 84.0 | 77.0 | 82.7 | 126.5 | 8.3 | 3.24 |
| 5 | YOLOv8n+AFGCAttention+CARAFE+C2f-DCNv2-MPCA+GIoU | 85.0 | 75.3 | 83.0 | 111.1 | 8.3 | 3.24 |
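To read the ablation table as trade-offs rather than raw numbers, the short sketch below compares the final configuration (row 5) with the YOLOv8n baseline (row 1). The values are copied from the table above; the dictionary keys and the helper name `summarize` are introduced here for illustration only.

```python
# Values copied from rows 1 and 5 of the ablation table.
baseline = {"map50": 81.0, "fps": 357.1, "gflops": 8.1, "params_m": 3.00}
final = {"map50": 83.0, "fps": 111.1, "gflops": 8.3, "params_m": 3.24}


def summarize(base: dict, new: dict) -> dict:
    """Accuracy gain in percentage points and relative cost changes."""
    def pct_increase(key: str) -> float:
        return 100.0 * (new[key] - base[key]) / base[key]

    return {
        "map50_gain_points": round(new["map50"] - base["map50"], 1),
        "params_increase_pct": round(pct_increase("params_m"), 1),
        "gflops_increase_pct": round(pct_increase("gflops"), 1),
        "fps_ratio": round(new["fps"] / base["fps"], 2),
    }


if __name__ == "__main__":
    for name, value in summarize(baseline, final).items():
        print(f"{name}: {value}")
```

On these figures, the full model gains 2.0 points of mAP@0.5 for about 8% more parameters and about 2.5% more GFLOPS, while running at roughly 0.31 times the baseline frame rate.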

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).