The emergence of large language models (LLMs) such as OpenAI’s o1 [

1], GPT-4o [

2], Google’s Gemini [

3], and Meta’s LLaMA [

4] has revolutionized the field of natural language processing (NLP). These advanced models have unlocked unprecedented capabilities in understanding and generating human-like text, enabling applications that range from intelligent conversational agents to sophisticated data analysis tools. However, harnessing the full potential of LLMs in real-world applications presents significant challenges. Developers must navigate complexities related to model integration, state management, scalability, contextual awareness, and security.

LangChain has rapidly gained prominence as a powerful framework designed to address these challenges in developing LLM-powered applications [

5]. By providing a modular and flexible architecture, LangChain simplifies the complexities inherent in working with LLMs, enabling developers to build scalable, stateful, and contextually aware applications with ease. Its suite of components—including LangGraph for stateful process modeling, LangServe for scalable API deployment, and LangSmith for monitoring and evaluation—collectively form a comprehensive toolkit for leveraging LLMs effectively [

6].

LangChain facilitates the integration of LLMs into a wide array of applications, empowering developers to create solutions that are not only functional but also efficient and secure. Its support for features like chat models, retrieval-augmented generation (RAG) [

7], and secure API interactions allows for the rapid deployment of sophisticated language model solutions across diverse domains such as healthcare, customer service, finance, and mental health.

Despite its strengths, LangChain’s emphasis on flexibility through modularity introduces certain complexities. Developers may encounter a steep learning curve when navigating its extensive components and integrations. Moreover, the reliance on external integrations and third-party providers necessitates a careful examination of security practices to mitigate risks associated with data exposure and dependency vulnerabilities.

This paper provides a comprehensive analysis of LangChain, delving into its architecture, core components, and the interplay between its modules. We explore how LangChain facilitates the development of LLM applications by examining each component’s functionality and their synergistic contributions to the framework. Furthermore, we critically evaluate the limitations and criticisms of LangChain, focusing on the complexities introduced by its modular design and the security implications of its extensive integrations.

By offering valuable insights into both the capabilities and challenges of LangChain, this paper aims to serve as a key resource for developers and researchers interested in LLM application development. We seek to illuminate the transformative potential of LangChain in advancing NLP applications while providing a nuanced understanding of its practical boundaries. Ultimately, this analysis guides users in effectively harnessing LangChain to build innovative and secure LLM-powered applications tailored to their specific needs.

The remainder of this paper is organized as follows:

Section 1 delves into the core architecture of LangChain, detailing its primary components and their functionalities.

Section 2 examines LangSmith and its role in monitoring and evaluation of LLM applications. In

Section 3, we explore LangGraph’s capabilities in stateful process modeling.

Section 4 discusses LangServe for scalable API deployment of LangChain applications. Finally,

Section 5 addresses the limitations and criticisms of LangChain, particularly focusing on the complexities and security concerns associated with its modular design and external integrations.

1. Architecture

LangChain is built with a modular architecture, designed to simplify the lifecycle of applications powered by large language models (LLMs), from initial development through to deployment and monitoring [

6]. This modularity allows developers to configure, extend, and deploy applications tailored to specific needs, providing a flexible foundation for building scalable, secure, and multi-functional applications.

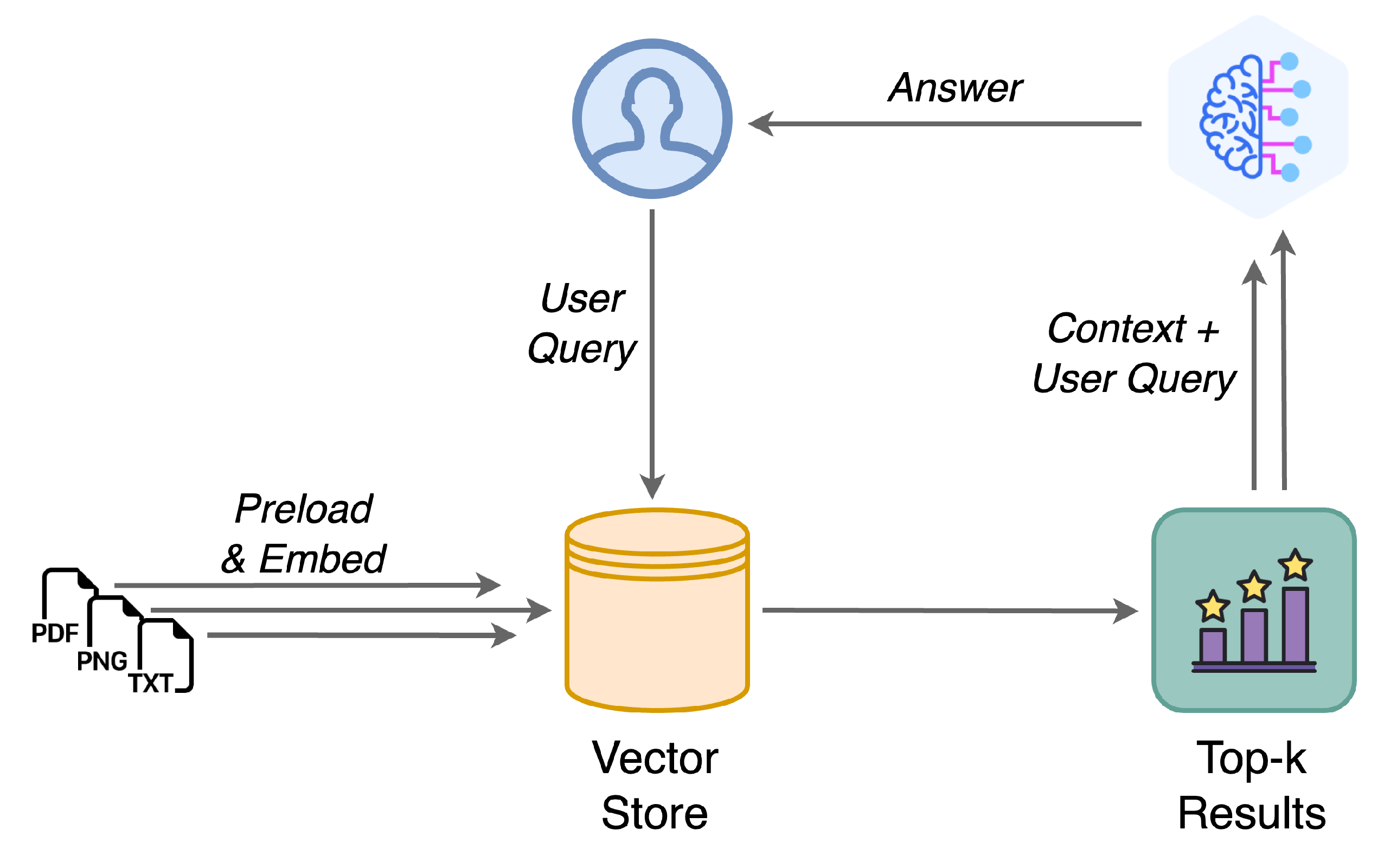

Figure 1 illustrates a fundamental LangChain pipeline. In this architecture, diverse data sources—including documents, text, and images—are embedded and stored within a vector store. Upon receiving a user’s query, the system retrieves the most relevant information from the vector store. This retrieved context is then provided to the large language model (LLM), enhancing its ability to generate accurate and factually grounded responses.

The rest of this section provides an overview of LangChain’s primary components, followed by a brief introduction to its advanced modules–LangSmith, LangGraph and LangServe–which are further discussed in

Section 2,

Section 3, and

Section 4 respectively:

LLM Interface: Provides APIs for connecting and querying various large language models, such as OpenAI’s GPT [

8], Google’s Gemini [

3], and Llama [

4], to facilitate seamless application integration.

Prompt Templates: Structured templates that standardize and format queries, ensuring consistency and precision in interactions with AI models. These templates help guide the model towards producing reliable and relevant outputs.

Memory: Enables applications to retain information from past interactions, supporting both basic and advanced memory structures. This component is critical for maintaining context across sessions and delivering contextually aware responses.

Indexes: Serve as structured databases that organize and store information, allowing for efficient data retrieval when processing language queries.

Retrievers: Designed to work alongside indexes, retrievers fetch relevant data based on query inputs, ensuring that the generated responses are well-informed and accurate.

Vector Store: Manages the embedding of words or phrases as numerical vectors, a core step in capturing semantic meaning and supporting tasks involving language understanding and similarity searches.

Output Parsers: Components that refine and structure the generated language outputs for specific tasks, ensuring usability and relevance for the application’s goals.

Agents: Custom chains that prompt the language model to identify and execute the most effective sequence of actions for a given query, enabling adaptive and dynamic decision-making.

Callbacks: Functions that log, monitor, and stream specific events within LangChain workflows, simplifying tracking and debugging processes.

1.1. Chat Models and Message Handling

LangChain supports chat models that manage complex, multi-turn conversations. These models use structured message sequences, allowing developers to control conversation flow and maintain state over time. The structured message handling system enables robust interactions with users by storing and retrieving conversation history as needed [

9]. Their key features include:

Multi-turn Interactions: LangChain maintains state across conversation turns, making it suitable for prolonged, context-dependent conversations.

Structured Output: Supports structured responses like JSON, allowing easy integration with downstream applications.

Conversation Memory: Maintains continuity by storing conversation history, ideal for applications requiring persistent context, such as customer support [

10].

1.2. Retrieval-Augmented Generation (RAG)

LangChain supports Retrieval-Augmented Generation (RAG), which integrates language models with external knowledge bases to enhance response accuracy and relevance. RAG allows models to access up-to-date information, extending their capabilities beyond their training data. LangChain’s RAG implementation uses:

Document Loaders and Text Splitters: Preprocess documents for indexing and efficient retrieval [

9].

Embedding Models and Vector Stores: Enable similarity-based retrieval by embedding documents into vector spaces. LangChain integrates with vector storage solutions like Chroma and Milvus for optimized searches [

6].

Retrievers and RAG Chains: Retrieve and merge external data with model responses, enhancing applications such as question answering systems and recommendation engines [

10].

1.3. Security and Permissions Management

Security is a critical focus in LangChain’s design, particularly given the potential access to external data sources. LangChain addresses these security challenges through best practices and internal controls [

6]:

1.4. Integrations and Extensibility

LangChain’s architecture supports a wide range of third-party integrations, allowing for custom component development and additional functionality, such as multi-modal data processing and AI tool integration [

6]:

Integration Packages: LangChain provides dedicated packages (e.g., langchain-openai, langchain-aws) that simplify connections to external platforms, tailoring applications to specific needs.

Support for Multi-modal Data: Supports image, text, and audio inputs, allowing for applications like chatbots capable of interpreting diverse data types.

Custom Component Development: Developers can build custom plugins or extend LangChain components, ensuring flexibility and adaptability for a wide range of application requirements.

LangChain’s modular and flexible architecture equips developers with a comprehensive toolkit for building, deploying, and monitoring LLM applications. Its advanced components—LangGraph, LangServe, and LangSmith—enable sophisticated functionality for scalable, interactive, and robust applications, meeting the demands of modern AI use cases.

1.5. Advanced Components

Beyond these core elements, LangChain offers advanced modules that support complex workflows, API deployments, and performance monitoring. These components are elaborated in the following sections:

LangGraph for Stateful Process Modeling: Explored in

Section 3, LangGraph enables developers to structure applications with nodes and edges, allowing for complex branching and multi-agent workflows.

LangServe for API Deployment: Detailed in

Section 4, LangServe facilitates the deployment of LangChain applications as REST APIs, supporting scalability in production [

11].

LangSmith for Monitoring and Evaluation: Discussed in

Section 2, LangSmith offers tools for real-time performance monitoring, error tracking, and version control to optimize applications iteratively [

10].

2. LangSmith

LangSmith is a developer platform tailored to streamline the deployment, monitoring, and evaluation of large language model (LLM) applications, providing essential tools for building production-grade systems. Integrated with the LangChain ecosystem, it enables users to trace, evaluate, and refine applications, enhancing precision in complex environments. The platform addresses key challenges in observability, testing, and optimization, allowing developers to monitor performance, troubleshoot issues, and maintain high standards over time [

12].

2.1. Tracing

Tracing is a central feature of LangSmith, providing detailed visibility into how applications interact with LLMs and external data sources. For developers using LangChain, LangSmith offers tracing without the need for direct SDK integration, simplifying the monitoring process. Tracing involves capturing inputs, outputs, and critical metadata from each interaction, allowing developers to observe and analyze component behavior in real time. This capability is especially useful for debugging and identifying bottlenecks within complex workflows.

LangSmith supports multiple ways to log traces, including languages like Python and TypeScript. Each trace logs every call made to an LLM, along with input parameters, function annotations, and generated outputs, making it easier to identify where adjustments may be necessary. By using traceable wrappers and decorators, developers can annotate functions or data pipelines, enabling automatic trace logging with minimal code alterations [

12].

2.2. Performance Testing

LangSmith’s evaluation tools enable developers to test and validate applications under real-world conditions. Evaluations require a defined dataset of test cases, including inputs and expected outputs. Using these datasets, developers can conduct performance tests and assess how well their models meet expected outcomes—an essential step for applications where accuracy and reliability are crucial. LangSmith supports custom evaluators, allowing developers to specify scoring functions based on specific needs. For instance, an evaluator may measure the exact match between outputs and expected answers, or use metrics like cosine similarity for open-ended tasks. By supporting both built-in and custom evaluators, LangSmith provides flexibility in performance measurement for deterministic outputs or nuanced language generation tasks [

12].

2.3. Dataset Management

Datasets are foundational to LangSmith’s evaluation system. Organizing test cases into structured datasets enables methodical testing and validation. Developers can create datasets manually or import them from existing sources, allowing diverse testing scenarios that reflect real-world use cases. Datasets can contain structured or unstructured data evaluations, accommodating a variety of testing needs. LangSmith’s dataset version control allows developers to maintain multiple dataset versions as applications evolve. This feature is critical for ensuring consistency in evaluation, especially as application logic changes or models are retrained, providing a robust foundation for testing and validation [

12].

2.4. LangSmith Workflow

LangSmith integrates tracing, evaluation, and dataset management into a cohesive framework, enabling developers to progress from debugging to optimization in a structured manner. A typical LangSmith workflow includes the following stages:

Trace Logging: Developers activate tracing on application functions, providing insights into model-component interactions.

Dataset Creation and Evaluation: Developers create datasets representing different scenarios to conduct comprehensive testing.

Evaluation and Iterative Optimization: Evaluation results indicate performance areas for refinement, guiding iterative application improvements.

Version Control and Historical Tracking: LangSmith logs all interactions, dataset versions, and evaluation scores, allowing developers to assess improvements over time.

2.5. Integration with LangChain and LangServe

LangSmith integrates seamlessly with LangChain and LangServe (

Section 4) to enhance the end-to-end LLM application development experience. For LangChain users, LangSmith can automatically log traces and integrate with existing workflows. Combined with LangServe, LangSmith provides robust observability for API deployments, monitoring real-time usage patterns, tracking request latencies, and identifying bottlenecks.

3. LangGraph

LangGraph is a low-level framework for building stateful, multi-actor applications with large language models (LLMs). It provides developers with fine-grained control over application flows, incorporating cycles, branching, and persistence to support complex agent workflows. Inspired by frameworks such as Pregel [

13] and Apache Beam [

14], LangGraph enables advanced human-in-the-loop applications and persistent state management, allowing for more reliable and adaptable LLM-powered systems [

15].

3.1. Core Features of LangGraph

3.1.1. Cycles and Branching

LangGraph distinguishes itself by supporting cycles and branching in application workflows. This feature is particularly beneficial for agentic architectures that require iterative or conditional logic. By enabling cycles within workflows, LangGraph provides a flexible structure that allows nodes to execute repeatedly until a specified condition is met. This contrasts with typical directed acyclic graph (DAG)-based architectures, which are limited to single-pass execution without feedback loops [

15].

3.1.2. Persistence and State Management

One of LangGraph’s key innovations is its built-in support for persistence, which enables state to be saved and accessed throughout the application’s lifecycle. This persistent state management is crucial for applications that require continuity across sessions, such as customer service agents or educational tools that need to recall previous interactions. LangGraph’s persistence feature also facilitates advanced human-in-the-loop workflows, allowing agents to pause, receive human input, and resume operations seamlessly.

LangGraph utilizes a stateful execution model where each node in the graph updates the application state as it processes input. For instance, in a multi-turn conversation, the graph maintains a memory of all previous messages, which can be accessed by subsequent nodes to ensure coherent responses. This persistent state can also be saved externally using the LangGraph Platform [

16], ensuring robust memory management across long-running sessions [

15].

3.1.3. Human-in-the-Loop and Streaming Support

LangGraph offers built-in support for human-in-the-loop interactions, which is essential for applications that require manual intervention or approval at certain stages. For example, a human operator can review an agent’s planned actions and approve, reject, or modify them before the agent proceeds. This level of control makes LangGraph suitable for high-stakes domains like healthcare or legal advice, where accuracy and oversight are critical.

Additionally, LangGraph supports streaming outputs from each node as they are produced. This capability is especially useful for applications like chatbots or real-time monitoring systems, where immediate feedback improves user experience. Streaming can be implemented within any node in the graph, enabling real-time updates as actions are processed [

15].

3.2. LangGraph Platform

The LangGraph Platform [

16] is an infrastructure solution that extends the open-source LangGraph framework for production deployments. It includes components like LangGraph Server (for API access), LangGraph SDKs (client libraries), and LangGraph CLI (a command-line interface for deployment management). The platform is designed to handle complex agent workflows, supporting long-running agents, background processing, and task queuing to ensure reliable performance even under heavy loads. The LangGraph Platform also includes features such as:

Background Execution: Allows agents to run asynchronously, handling user requests in parallel without blocking other tasks.

Support for Long-Running Agents: Provides infrastructure for agents that need to operate over extended periods, managing resource allocation and monitoring agent health.

Burst Handling and Task Queues: Uses queues to manage sudden increases in requests, ensuring that high-priority tasks are processed efficiently.

3.3. LangGraph Workflow

A typical LangGraph workflow begins by defining the state schema and nodes required for the application. Each node represents an independent function, such as calling an LLM, invoking a tool, or accessing external data. The developer sets an entry point for graph execution and defines the transitions (edges) between nodes, which can be conditional or sequential based on application requirements.

Defining Nodes and State: Developers initialize nodes, such as an LLM node for responses or a tool node for external API calls, and specify the state schema to manage conversation context.

Setting Entry Points and Edges: Nodes are connected by edges, with conditions determining the flow based on the application’s state.

Compiling and Executing the Graph: Once nodes and edges are defined, the graph is compiled into a runnable format, enabling calls to functions such as invoke() for execution and stream() for real-time updates.

LangGraph’s workflow design allows applications to cycle between nodes based on input conditions and dynamically update state, enabling applications that require complex interaction patterns.

3.4. Integration with LangChain and LangSmith

LangGraph integrates seamlessly with LangChain and LangSmith, although it operates independently if desired. For users within the LangChain ecosystem, LangGraph can utilize LangSmith’s tracing capabilities to monitor each node’s performance and capture detailed logs of agent interactions. Additionally, LangChain tools and APIs can be incorporated as graph nodes, expanding LangGraph’s functionality with LangChain’s extensive library of tools and connectors [

15].

4. LangServe

LangServe [

11] is an integral component of the LangChain ecosystem, specifically designed to facilitate the deployment of large language model (LLM) applications as scalable REST APIs. With LangServe, developers can create production-grade APIs that allow external systems and users to interact with LangChain applications. It enables LLM-powered applications to be served in real-time with robust support for load balancing, monitoring, and scalability.

4.1. Core Features of LangServe

4.1.1. API Deployment and Management

LangServe simplifies the process of turning LangChain applications into APIs, making LLM models accessible for various services and client applications. With LangServe, any LangChain workflow can be packaged and exposed via RESTful endpoints, enabling interactions with language models, data retrieval, and external tool integration. The API-centric design allows LangServe to support diverse use cases, from chatbots and recommendation systems to complex multi-agent interactions. It provides several tools for managing API endpoints, such as configurable routing, request handling, and response formatting, which make it easy to customize each endpoint based on application requirements. This flexibility allows developers to design APIs with specific functionalities, making LangServe suitable for applications of varying complexity [

11].

4.1.2. Scalability and Load Balancing

LangServe includes built-in support for scalability, making it ideal for applications that experience high traffic volumes. It can handle multiple API requests simultaneously, ensuring consistent performance by balancing the load across instances. This feature is critical for production environments where maintaining low response times under heavy loads is essential. To further enhance scalability, LangServe provides tools for setting up auto-scaling, which dynamically adjusts the number of instances based on demand. This allows applications to handle traffic spikes without degradation in performance, making LangServe suitable for real-world deployments with unpredictable usage patterns [

11].

4.1.3. Latency and Error Management

LangServe is designed to minimize latency, ensuring quick responses for each API request. It employs efficient request queuing and processing mechanisms to reduce waiting times. Additionally, LangServe includes error handling and retry logic, which helps maintain reliability by managing transient failures and minimizing downtime. This focus on latency and error resilience makes LangServe suitable for mission-critical applications that require high availability. For instance, LangServe can automatically retry failed requests and log errors for further investigation, allowing developers to identify issues promptly and maintain a stable API response rate [

11].

4.2. LangServe Workflow

The LangServe deployment workflow is straightforward, enabling developers to go from a LangChain application to a deployed API in a few steps. Here’s an outline of a typical LangServe workflow:

Defining Endpoints: Developers define endpoints based on application requirements, specifying which functions or models are exposed via API routes. Each endpoint can have customized parameters, allowing for flexibility in how the API interacts with different components.

Configuring Request Handling and Routing: LangServe allows for fine-grained control over how requests are processed. Developers can set up routing rules, parameter validation, and request parsing to tailor the API experience.

Setting Up Load Balancing and Scaling: For applications with high traffic, LangServe’s load balancing can be configured to distribute requests across multiple instances, ensuring consistent response times. Auto-scaling can also be set up to dynamically adjust resources based on demand.

Monitoring and Error Tracking: LangServe integrates with monitoring tools, including LangSmith, to provide real-time insights into API performance, usage metrics, and error rates. This monitoring helps developers maintain optimal performance and quickly resolve issues as they arise.

LangServe’s streamlined workflow ensures that developers can deploy robust APIs with minimal overhead, making it a practical choice for scaling LLM applications in production environments.

4.3. Integration with LangSmith and LangChain

LangServe integrates seamlessly with LangSmith, which offers observability features like tracing, logging, and performance monitoring. Through LangSmith, LangServe users can track metrics such as request frequency, latency, and error rates. This integration provides a comprehensive view of API performance, enabling developers to optimize applications based on real-time data.

Additionally, LangServe’s integration with LangChain allows developers to leverage LangChain’s extensive library of tools, models, and connectors as part of the API. LangChain workflows, tools, and chains can be directly exposed via LangServe endpoints, providing flexible API interactions and enhancing the functionality of LLM applications [

11].

5. Limitations and Criticisms

LangChain provides a versatile framework for the development of applications powered by large language models (LLMs). However, several limitations warrant attention, especially in the domains of complexity and security.

5.1. Complexity

LangChain’s modular architecture, while designed to simplify LLM-based application development, can paradoxically increase complexity. Effective use of LangChain often requires a nuanced understanding of its distinct components, such as LangGraph and LangSmith, as well as familiarity with its API ecosystem. Consequently, developers may face a steep learning curve, particularly those aiming for rapid prototyping or deployment. The need for comprehensive knowledge of each module may present a barrier to new users, complicating onboarding and initial implementation phases.

5.2. Security Concerns

Given LangChain’s modular design and extensive reliance on external integrations, security presents a notable challenge. Although LangChain incorporates a range of security measures, including fine-grained permission control and sandboxing, the complexity of securing LLM-based applications remains high, especially for applications managing sensitive data. Below, we outline several critical security risks associated with LangChain and explore strategies for risk mitigation.

5.2.1. Risks Associated with External Providers

To enhance functionality, LangChain integrates with numerous external services, such as vector databases, API providers, and cloud storage platforms. However, these integrations expose applications to security vulnerabilities:

Data Exposure: Accessing external resources can inadvertently expose sensitive data to third-party providers, a risk particularly relevant for applications handling personal or confidential information. Without stringent data encryption and access control mechanisms, the potential for data leaks or unauthorized access increases.

Third-Party Dependency: Reliance on third-party services introduces dependencies on their security protocols. Any compromise within a provider’s infrastructure could affect LangChain applications, resulting in data breaches or service interruptions. This underscores the importance of thoroughly vetting providers and monitoring them for potential security issues.

LangChain’s security model addresses many of these concerns, yet challenges persist, particularly in sectors with rigorous compliance standards, such as finance and healthcare. Key areas for ongoing improvement include:

Dynamic Permission Adjustment: Current permission settings in LangChain are defined at deployment, but in dynamic applications, permissions may need to adapt based on user interactions. Implementing adaptive permissions responsive to application state or user roles could enhance security.

Advanced Encryption Standards: For applications processing highly sensitive data, adopting advanced encryption practices—such as end-to-end or field-level encryption—could bolster data security within even trusted environments.

Proactive Security Analytics: Integrating predictive analytics to preemptively identify risks could further secure applications. Machine learning models analyzing application logs could flag anomalous patterns indicative of potential breaches or misuse.

In summary, LangChain’s security framework includes robust features such as granular permissions, sandboxing, and real-time monitoring. While these measures provide a solid foundation, the ongoing challenge of securing LLM-driven applications—particularly those relying on external providers—demands continued advancements in security practices.

6. Conclusion

LangChain significantly advances the development of applications powered by large language models (LLMs). Its modular framework—including components like LangGraph for stateful process modeling, LangServe for scalable API deployment, and LangSmith for monitoring and evaluation—enables developers to build scalable, context-aware applications tailored to specific needs across diverse domains, including NLP, cybersecurity, healthcare, finance, and customer service.

While its versatility extends beyond NLP, allowing for applications in fields like cybersecurity (e.g., threat detection and automated incident response), the framework’s emphasis on flexibility introduces complexities that may present a learning curve for developers new to LangChain. Additionally, reliance on external integrations raises important security considerations, such as data exposure and dependency vulnerabilities, which are critical in sensitive areas where data integrity and privacy are paramount.

References

- OpenAI. Introducing OpenAI O1-Preview, 2024.

- OpenAI. Hello GPT-4O, 2024.

- Team, G.; Anil, R.; Borgeaud, S.; Alayrac, J.B.; Yu, J.; Soricut, R.; Schalkwyk, J.; Dai, A.M.; Hauth, A.; Millican, K. Gemini: A Family of Highly Capable Multimodal Models. arXiv, 2023; arXiv:2312.11805 2023. [Google Scholar]

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F. LLaMA: Open and Efficient Foundation Language Models. arXiv, 2023; arXiv:2302.13971 2023. [Google Scholar]

- Chase, H. LangChain, 2022. Available at https://github.com/langchain-ai/langchain.

- LangChain, Inc.. LangChain Documentation: Integration Providers. LangChain, Inc., San Francisco, CA, 2024. Available at https://python.langchain.com/docs/integrations/providers/.

- Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.t.; Rocktäschel, T.; others. Retrieval-augmented generation for knowledge-intensive nlp tasks. Advances in Neural Information Processing Systems 2020, 33, 9459–9474. [Google Scholar]

- Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S. GPT-4 Technical Report. arXiv, 2023; arXiv:2303.08774 2023. [Google Scholar]

- LangChain, Inc.. LangChain Documentation: Security Best Practices. LangChain, Inc., San Francisco, CA, 2024. Available at https://python.langchain.com/docs/security/.

- LangChain, Inc.. LangChain Documentation: Key Concepts. LangChain, Inc., San Francisco, CA, 2024. Available at https://python.langchain.com/docs/concepts/.

- LangChain, Inc.. LangChain Documentation: LangServe. LangChain, Inc., San Francisco, CA, 2024. Available at https://python.langchain.com/docs/langserve/.

- LangChain, Inc.. LangSmith: A Developer Platform for LLM Applications, 2024. Accessed: 2024-11-04.

- Malewicz, G.; Austern, M.H.; Bik, A.J.; Dehnert, J.C.; Horn, I.; Leiser, N.; Czajkowski, G. Pregel: A System for Large-Scale Graph Processing. Proceedings of the 2010 ACM SIGMOD International Conference on Management of Data, 2010, pp. 135–146.

- The Apache Software Foundation. Apache Beam: An AdvancedUnified Programming Model, 2024. Accessed: 2024-11-04.

- LangChain, Inc.. LangGraph: Building Language Agents as Graphs, 2024. Accessed:2024-11-04.

- LangChain, Inc.. LangGraph Platform Documentation, 2024. Accessed: 2024-11-04.

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).