Submitted: 22 October 2024

Posted: 22 October 2024


Abstract

Keywords:

I. Introduction

II. Methods and Materials

A. Data Collection and Preprocessing

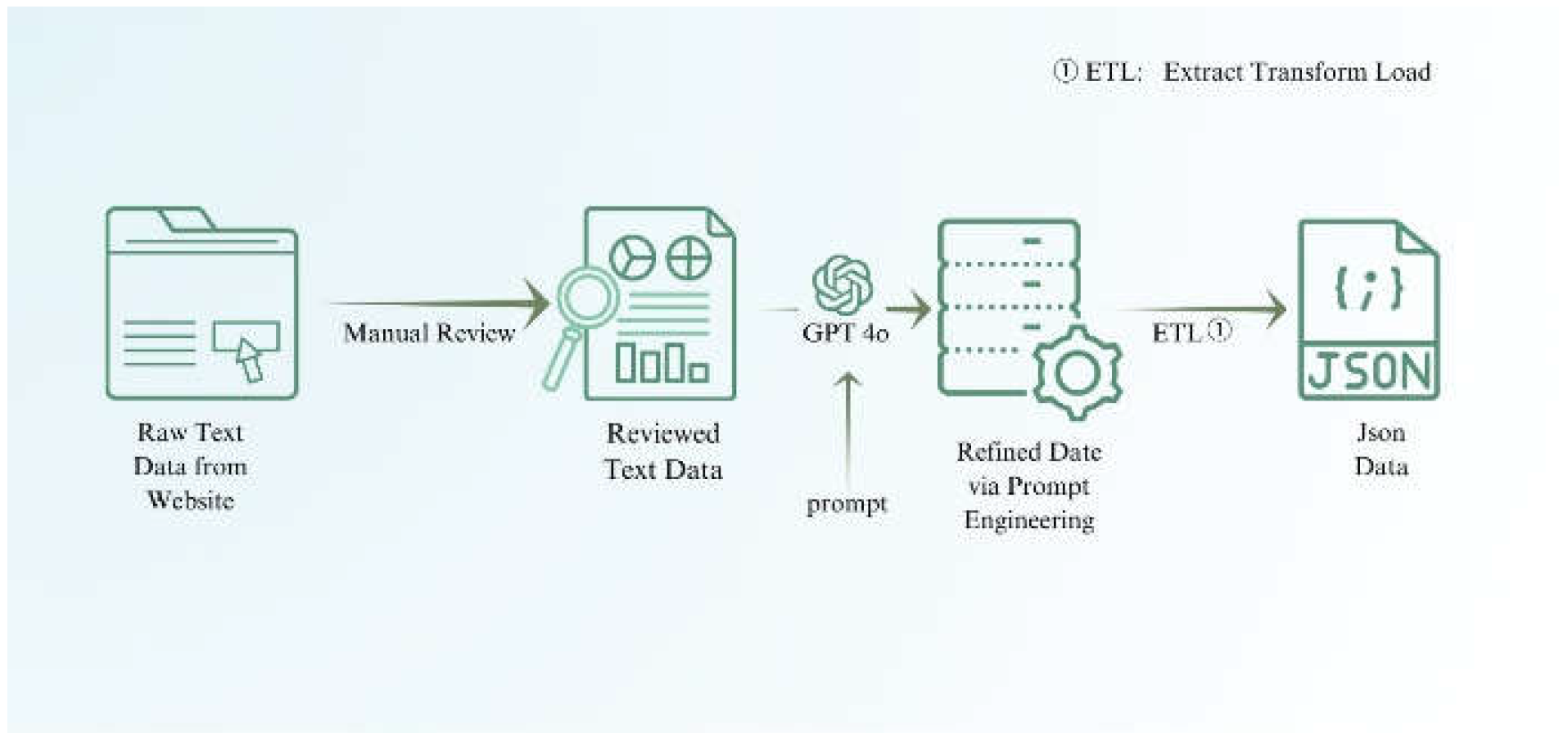

- Online Sources: Publicly available nursing instructions and academic papers were processed into JSON format.

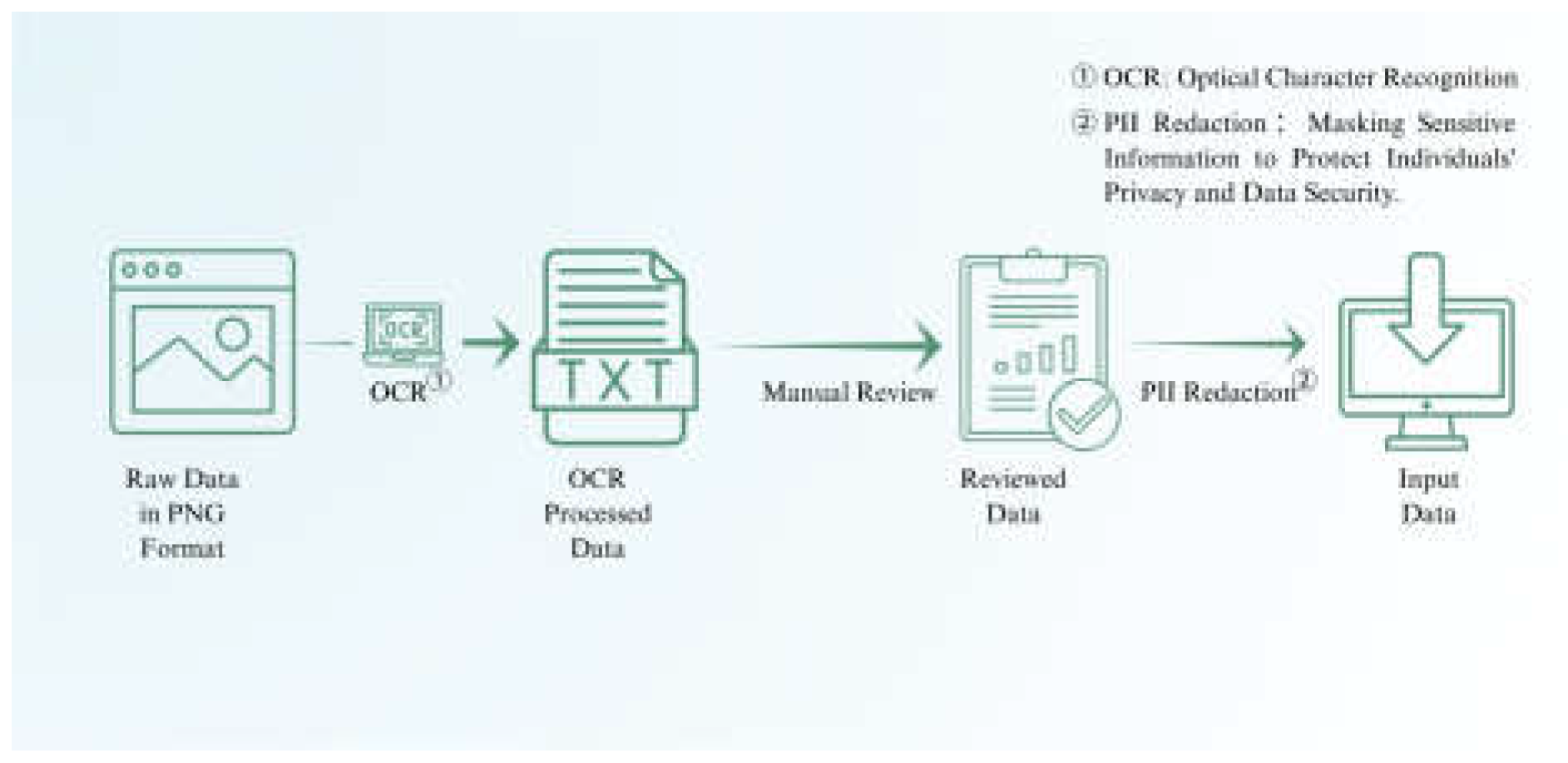

- Hospital Data: We obtained data on 100 patients from the neurology department of a specific hospital. These data are patient discharge summaries, which record symptoms at admission and discharge, tests conducted during admission and discharge, and diagnoses made at admission and discharge. In addition, through doctors' follow-up with patients, we collected common problems that patients face and their daily symptoms after discharge. These data are used to test the performance of the system.
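Both data sources end up as structured records. The sketch below shows one way a discharge summary with the fields listed above, and a public nursing document, could be serialized to JSON. It is a minimal illustration only: the class `DischargeSummary`, the helper functions, and every key name (`patient_id`, `follow_up_issues`, `title`, `url`, `text`) are hypothetical and are not taken from the authors' pipeline.

```python
import json
from dataclasses import asdict, dataclass, field


# Hypothetical schema: field names are illustrative, not the authors' actual format.
@dataclass
class DischargeSummary:
    patient_id: str
    admission_symptoms: list[str] = field(default_factory=list)
    discharge_symptoms: list[str] = field(default_factory=list)
    admission_tests: list[str] = field(default_factory=list)
    discharge_tests: list[str] = field(default_factory=list)
    admission_diagnoses: list[str] = field(default_factory=list)
    discharge_diagnoses: list[str] = field(default_factory=list)
    # Problems and daily symptoms gathered through doctors' follow-up after discharge.
    follow_up_issues: list[str] = field(default_factory=list)


def to_json_record(summary: DischargeSummary) -> str:
    """Serialize one summary to a JSON string with a stable key order."""
    return json.dumps(asdict(summary), ensure_ascii=False, sort_keys=True)


def from_json_record(text: str) -> DischargeSummary:
    """Rebuild a summary from a record produced by to_json_record."""
    return DischargeSummary(**json.loads(text))


def source_document_to_json(title: str, url: str, body: str) -> str:
    """Wrap a public nursing instruction or paper as a JSON record (hypothetical keys)."""
    return json.dumps({"title": title, "url": url, "text": body.strip()}, ensure_ascii=False)


if __name__ == "__main__":
    # Invented example values, for illustration only.
    record = DischargeSummary(
        patient_id="P001",
        admission_diagnoses=["cerebral infarction"],
        follow_up_issues=["dizziness when standing up"],
    )
    text = to_json_record(record)
    print(text)
    # The round trip preserves every field.
    print(from_json_record(text) == record)
```

Sorting keys keeps the serialized output deterministic, which makes records easy to compare when a test set such as the 100 hospital cases is regenerated.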

B. Comparison of Traditional vs RAG-Based Systems

III. Software System Design

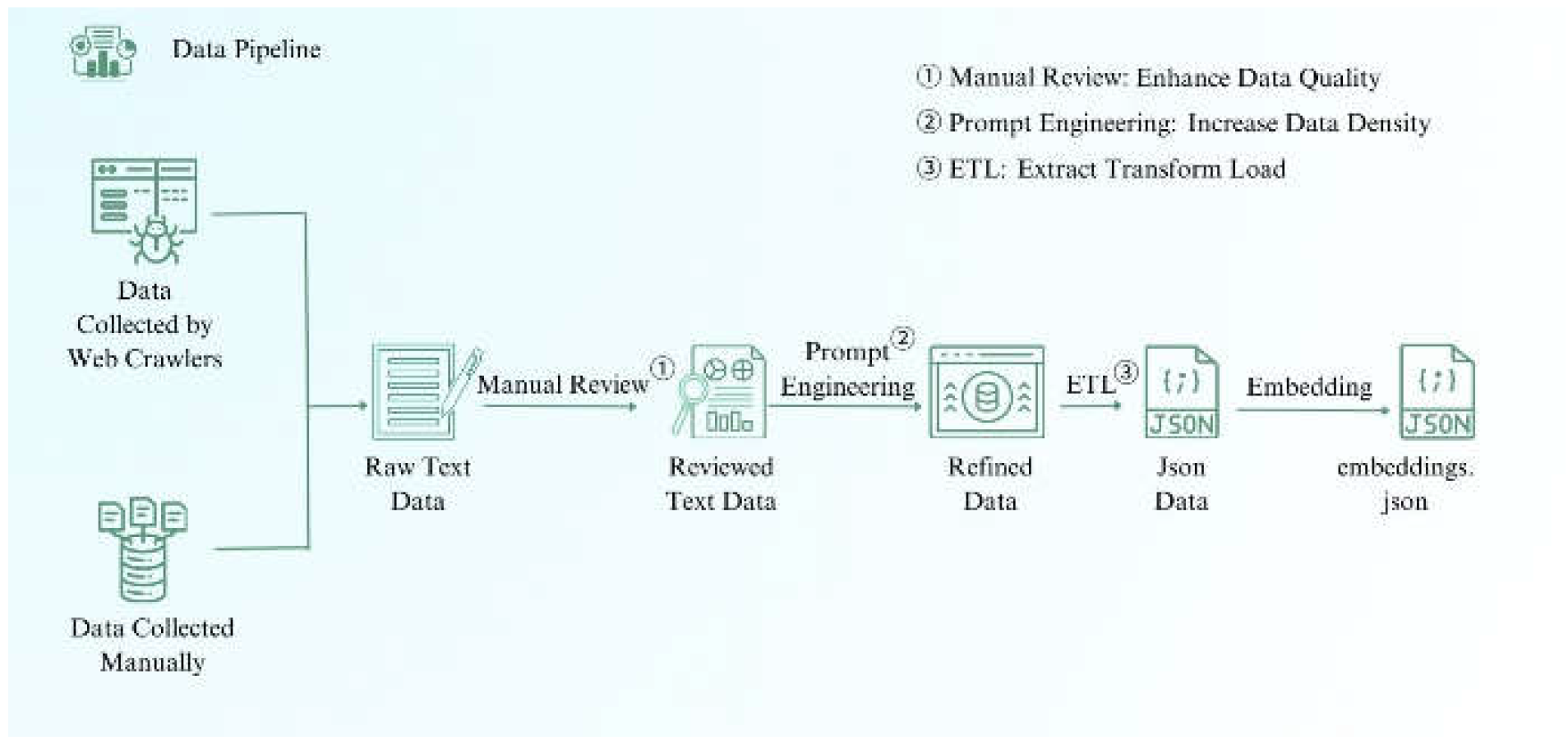

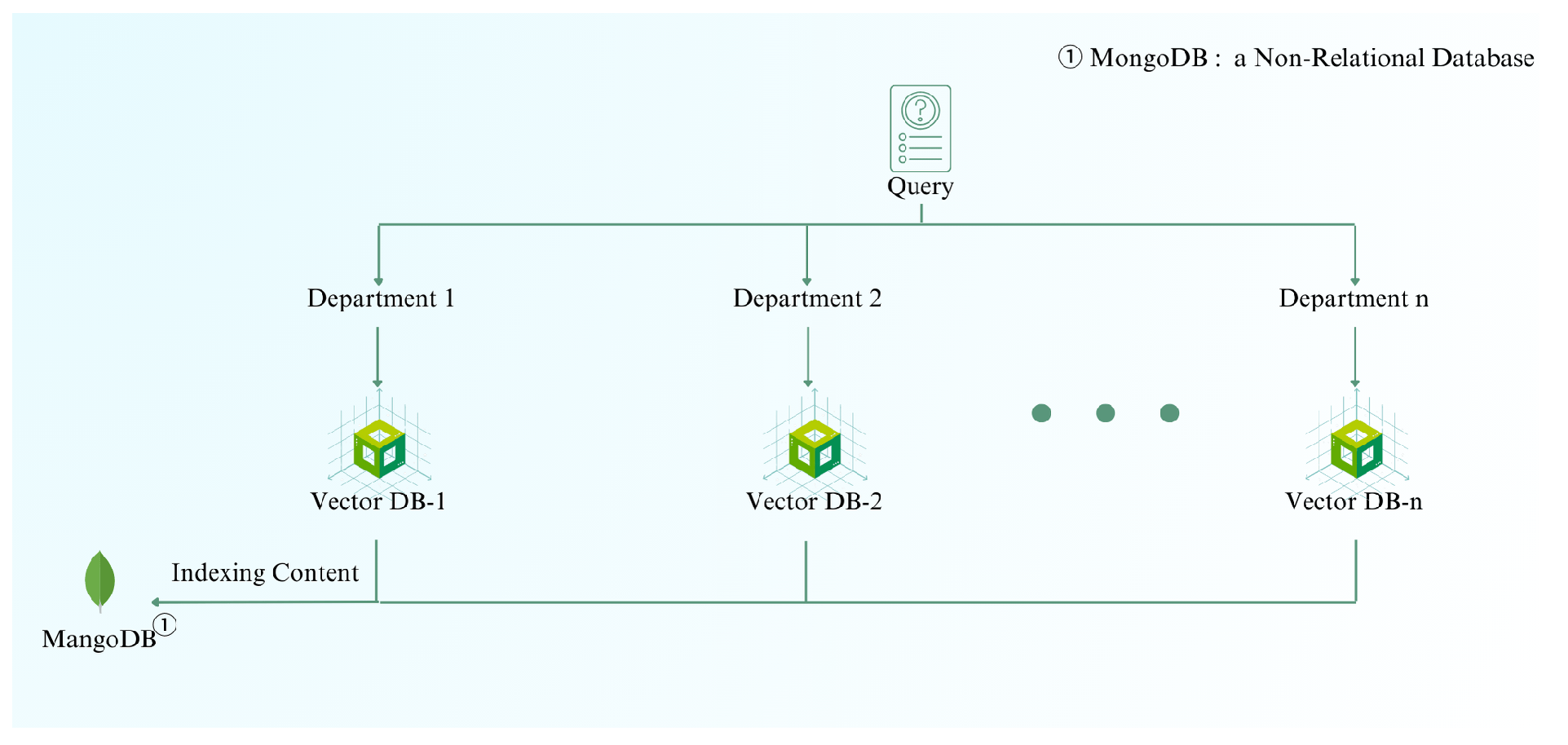

A. Data Pipeline

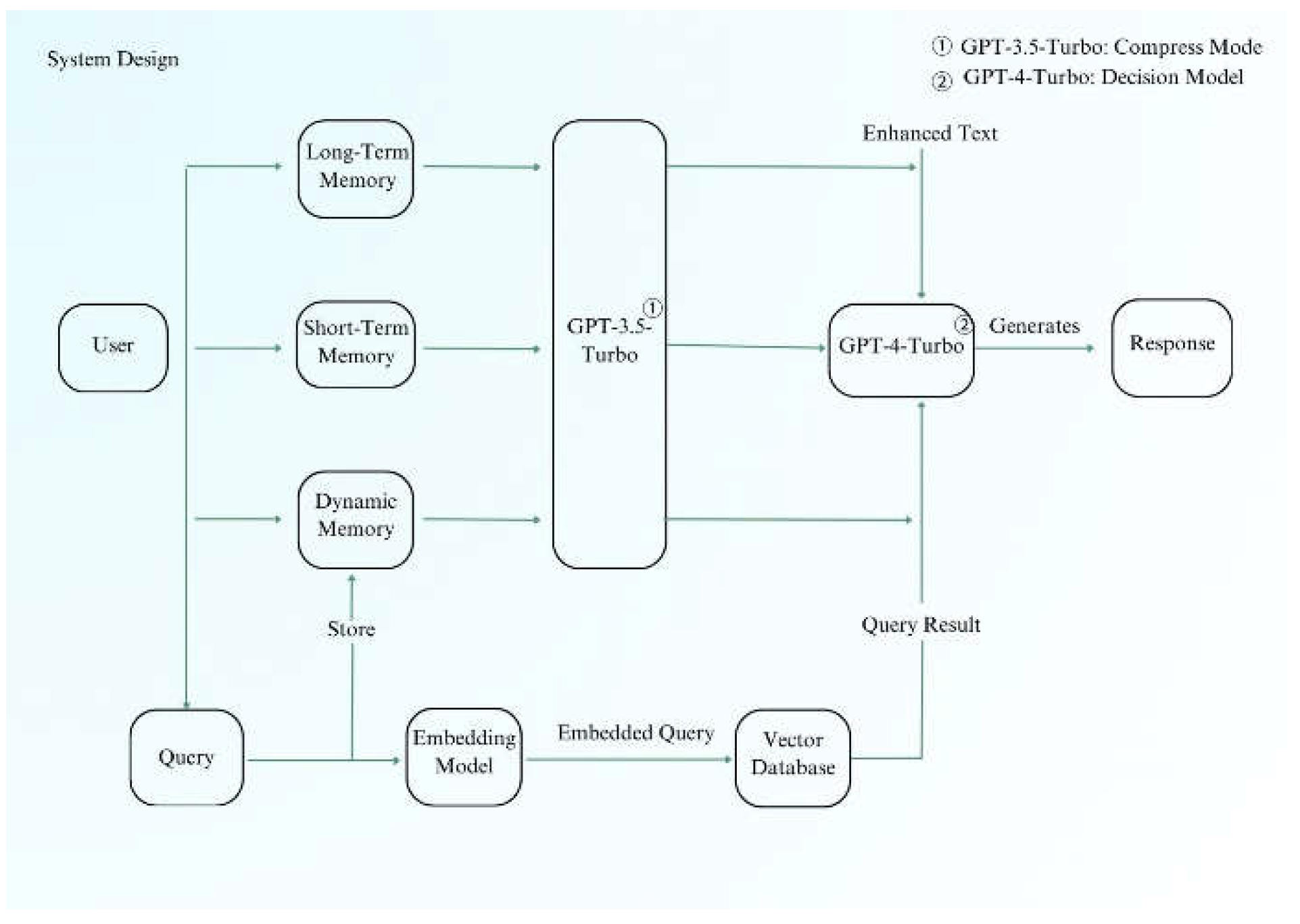

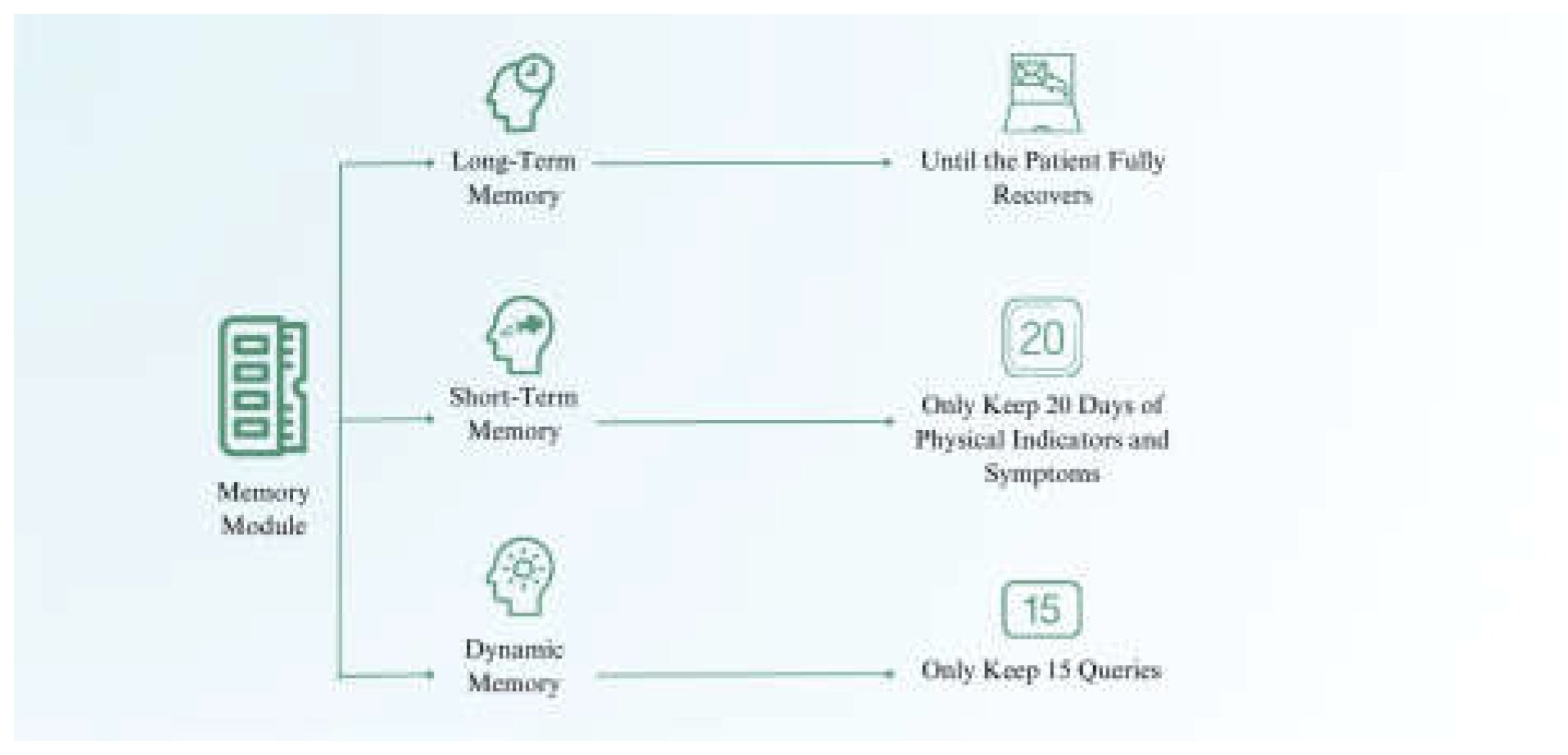

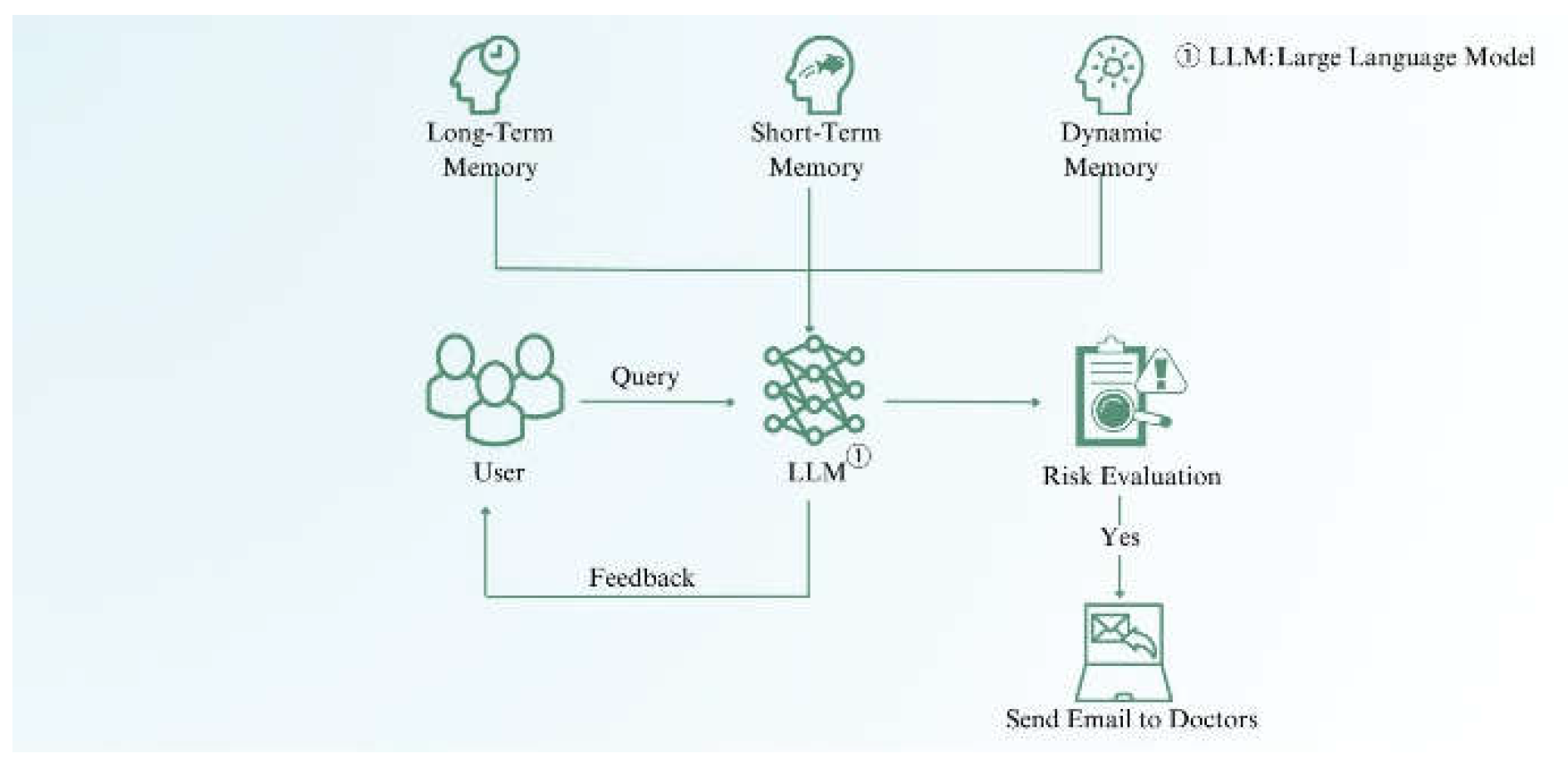

B. System Design

C. Implementation Details

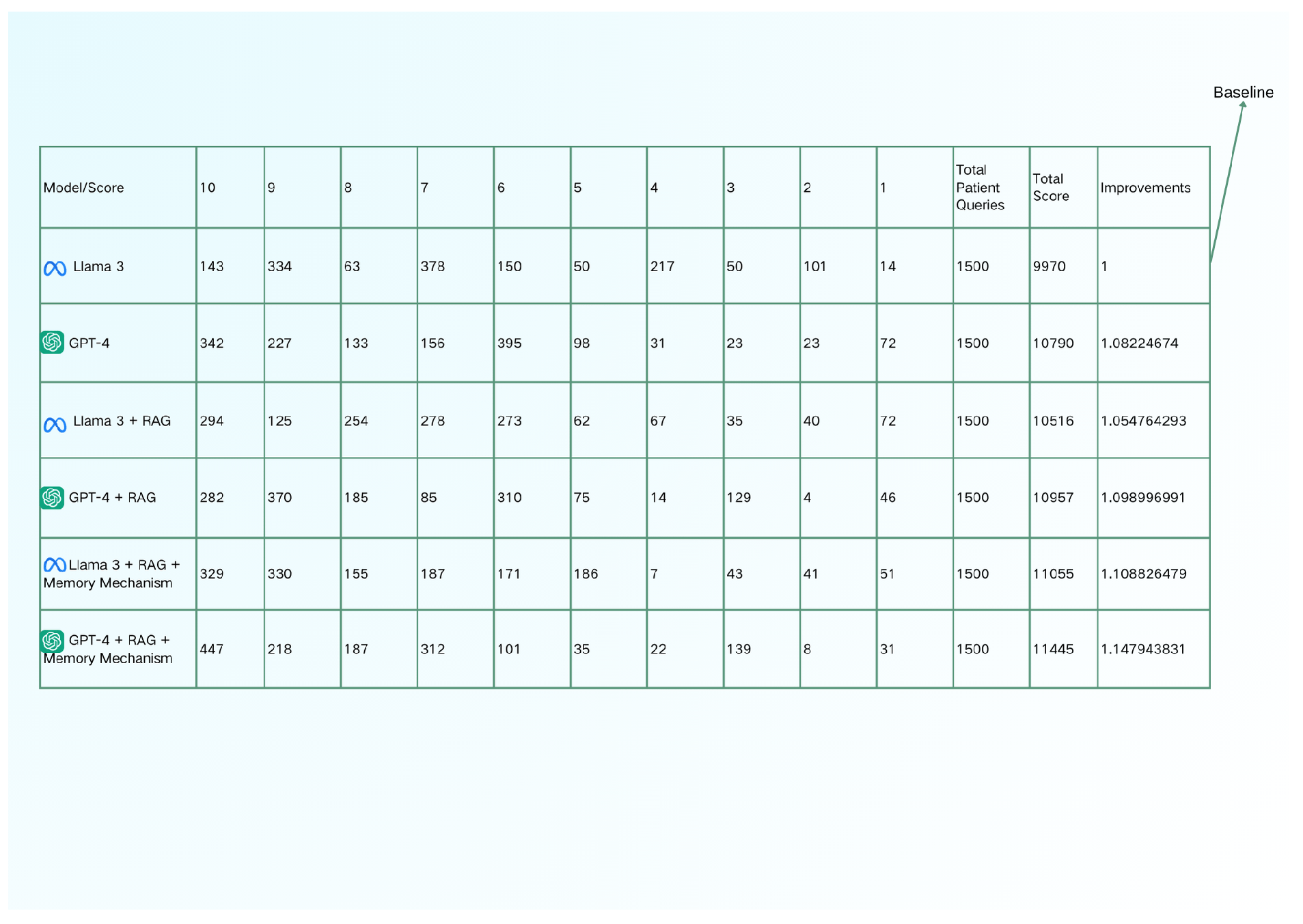

IV. Results and Evaluation

A. Results

B. Possible Drawbacks

V. Application and Future Work

A. System Improvements

B. Application

VI. Conclusion

References

- Lewis, P., Perez, E., Piktus, A., Petroni, F., Karpukhin, V., Goyal, N., ... & Riedel, S. (2020). Retrieval-augmented generation for knowledge-intensive NLP tasks. Advances in Neural Information Processing Systems, 33, 9459-9474.

- Howard, J., & Ruder, S. (2018). Universal Language Model Fine-tuning for Text Classification. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) (pp. 328-339). Melbourne, Australia. Retrieved from ACL Anthology.

- Pan, S. J., & Yang, Q. (2010). A Survey on Transfer Learning. IEEE Transactions on Knowledge and Data Engineering, 22(10), 1345-1359.

- Hoshi, Y., Miyashita, D., Ng, Y., Tatsuno, K., Morioka, Y., Torii, O., & Deguchi, J. (2023). RaLLe: A framework for developing and evaluating retrieval-augmented large language models. arXiv preprint arXiv:2308.10633.

- Huang, J., Ping, W., Xu, P., Shoeybi, M., Chang, K. C., & Catanzaro, B. (2023). RAVEN: In-context learning with retrieval augmented encoder-decoder language models. arXiv preprint arXiv:2308.07922.

- Wang, X., et al. (2024). Searching for Best Practices in Retrieval-Augmented Generation. arXiv preprint arXiv:2407.01219.

- Zhang, Z., et al. (2024). RAGCache: Efficient Knowledge Caching for Retrieval-Augmented Generation. arXiv preprint arXiv:2404.12457.

- Smith, J., Doe, J., & Brown, A. (2023). Scalability analysis comparisons of cloud-based software services. Journal of Cloud Computing, 45(3), 123-134.

- Adams, K. (2021). Modular Data Management: Best Practices. Journal of Information Technology, 34(4), 203-215.

- Izacard, G., Lewis, P., Lomeli, M., Hosseini, L., Petroni, F., Schick, T., Dwivedi-Yu, J., Joulin, A., & Riedel, S. (2022). Few-shot learning with retrieval-augmented language models. arXiv preprint arXiv:2208.03299.

- Papers with Code. (n.d.). RAG Explained. Retrieved from https://paperswithcode.com/method/rag.

- Advanced RAG techniques: an illustrated overview. (2023). Towards AI. Retrieved from https://pub.towardsai.net/advanced-rag-techniques-an-illustrated-overview-04d193d8fec6.

- Chen, J., Zhu, L., Mou, W., Liu, Z., Cheng, Q., Lin, A., Zhang, J., & Luo, P. (2023). STAGER checklist: Standardized Testing and Assessment Guidelines for Evaluating Generative AI Reliability. arXiv preprint arXiv:2312.10074.

- BMC Medical Informatics and Decision Making. (2021). Deep learning with sentence embeddings pre-trained on biomedical corpora improves the performance of finding similar sentences in electronic medical records. Retrieved from https://bmcmedinformdecismak.biomedcentral.com/articles/10.1186/s12911-021-01490-6.

- Han, Y., Liu, C., & Wang, P. (2023). A Comprehensive Survey on Vector Database: Storage and Retrieval Techniques, Challenges, and Future Directions. arXiv preprint arXiv:2310.11703.

- The Epoch Times. (2023). 2 Warning Signs of Cerebral Infarction and 5 Tips to Prevent It. Retrieved from https://www.theepochtimes.com/health/2-warning-signs-of-cerebral-infarction-and-5-tips-to-prevent-it-5017186.

- Drugs.com. (n.d.). Ischemic stroke. Retrieved July 2, 2024, from https://www.drugs.com/cg/ischemic-stroke.html.

- Drugs.com. (n.d.). Brainstem infarction. Retrieved July 2, 2024, from https://www.drugs.com/cg/brainstem-infarction.html.

- Drugs.com. (n.d.). Atherosclerosis. Retrieved July 2, 2024, from https://www.drugs.com/health-guide/atherosclerosis.html#prognosis.

- Drugs.com. (2024, June 5). Hypertension during pregnancy. Retrieved July 2, 2024, from https://www.drugs.com/cg/hypertension-during-pregnancy.html.

- Zamora, L. R., Lui, F., & Budd, L. A. (2021). Acute Stroke. In StatPearls. StatPearls Publishing. Retrieved July 2, 2024, from https://www.ncbi.nlm.nih.gov/books/NBK568693/nurse-article-17174.s3.

- Belleza, M. (2024, May 10). Cerebrovascular accident (stroke) nursing care and management: A study guide. Nurseslabs. Retrieved July 2, 2024, from https://nurseslabs.com/cerebrovascular-accident-stroke/.

- SimpleNursing. (2024, May 10). Nursing care plan for stroke. Retrieved July 2, 2024, from https://simplenursing.com/nursing-care-plan-stroke/.

- Chippewa Valley Technical College. (n.d.). Arteriosclerosis & atherosclerosis. In Health alterations. Retrieved July 2, 2024, from https://wtcs.pressbooks.pub/healthalts/chapter/5-6-arteriosclerosis-atherosclerosis.

- NurseTogether. (2023, March 22). Coronary artery disease: Nursing diagnoses, care plans, assessment & interventions. Retrieved July 2, 2024, from https://www.nursetogether.com/coronary-artery-disease-nursing-diagnosis-care-plan/.

- Made for Medical. (2023, February 14). Nursing care plan for arteriosclerosis. Retrieved July 2, 2024, from https://www.madeformedical.com/nursing-care-plan-for-arteriosclerosis/.

- Kristinsson, O., & Rowe, K. (2020). Diagnosis and treatment of acute aortic dissection in the emergency department. The Nurse Practitioner, 45(1), 34-38. Retrieved July 2, 2024, from https://www.npjournal.org/article/S1555-4155(19)30937-7/fulltext.

- DeSai, C., & Shapshak, A. H. (2023). Cerebral ischemia. In StatPearls. StatPearls Publishing. Retrieved July 2, 2024, from https://www.ncbi.nlm.nih.gov/books/NBK560510/.

- Busl, K. M., & Greer, D. M. (2010). Hypoxic-ischemic Brain Injury: Pathophysiology, Neuropathology and Mechanisms. Journal of Neurocritical Care, 5(1), 5–13.

- Mayo Clinic. (n.d.). High blood pressure (hypertension) - Diagnosis & treatment. Retrieved July 2, 2024, from https://www.mayoclinic.org/diseases-conditions/high-blood-pressure/diagnosis-treatment/drc-20373417.

- National Library of Medicine. (n.d.). Controlling your high blood pressure. MedlinePlus. Retrieved July 2, 2024, from https://medlineplus.gov/ency/patientinstructions/000101.htm.

- Medscape. (n.d.). Ischemic Stroke Overview. Retrieved from https://emedicine.medscape.com/article/1916852-overview.

- Chen, L., Han, Z., & Gu, J. (2019). Early path nursing on neurological function recovery of cerebral infarction. Translational Neuroscience, 10(1), 160-163.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).