Submitted:

05 October 2024

Posted:

08 October 2024


Abstract

Keywords:

1. Introduction

1.1. Related Work

1.2. Paper Highlights

- ➢

- An innovative approach is utilized in this paper to solve the Fractional Fokker–Planck–Levy (FFPL) equation. The equation contains the Levy noise and fractional Laplacian, making the equation computationally complex.

- ➢

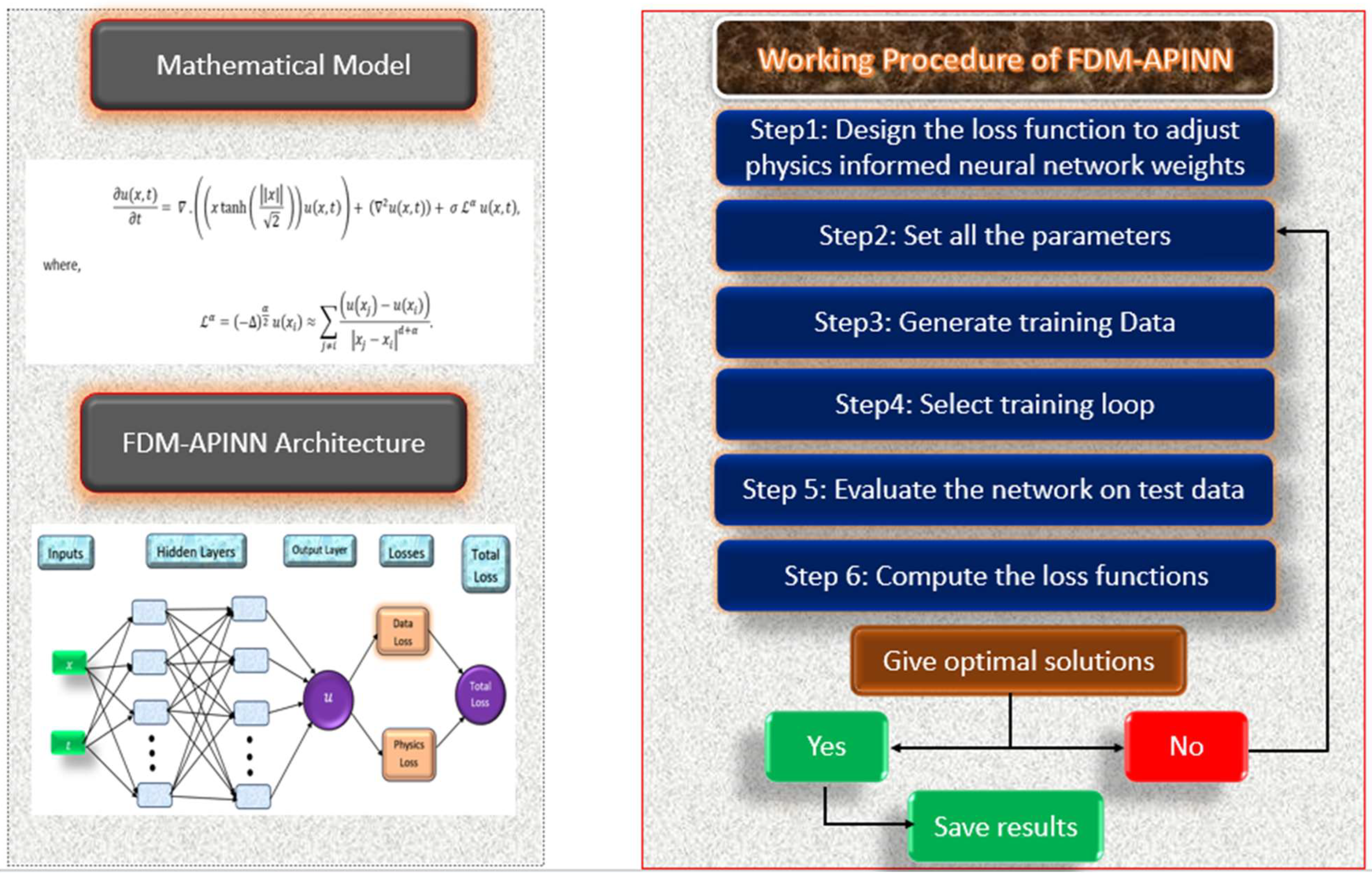

- A hybrid technique is designed by combining the finite difference method (FDM), Adams numerical technique, and physics-informed neural network (PINN) architecture, namely, the FDM-APINN, to solve the fractional Fokker‒Planck‒Levy (FFPL) equation numerically.

- ➢

- Related work on solving partial differential equations (PDEs) and fractional partial differential equations (FPDEs) is discussed.

- ➢

- The fractional Fokker–Planck-Levy (FFPL) equation is solved numerically via the proposed technique.

- ➢

- The manuscript is categorized into two main scenarios by varying the value of the fractional order parameter . The equation is solved for the two values of , i.e., ().

- ➢

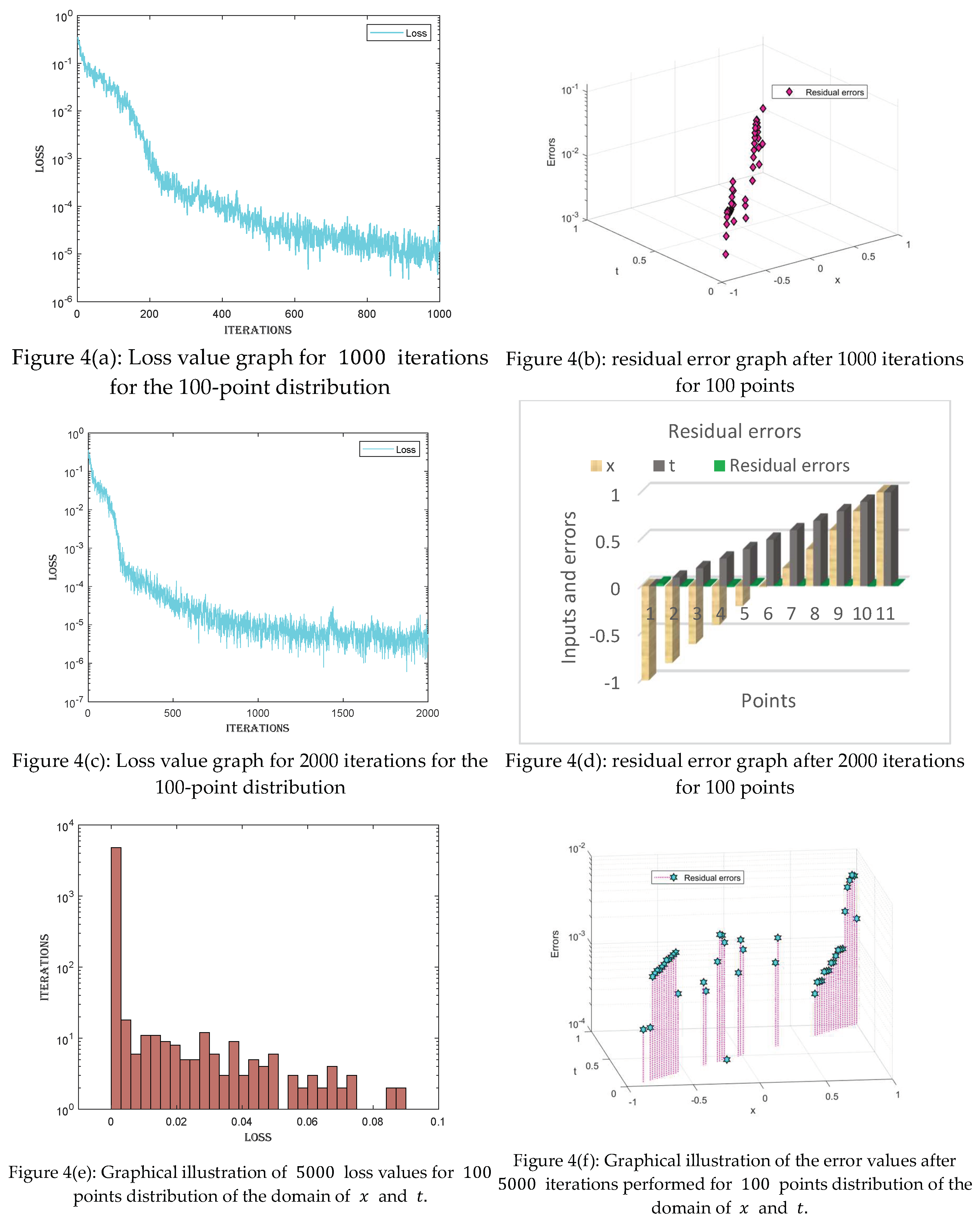

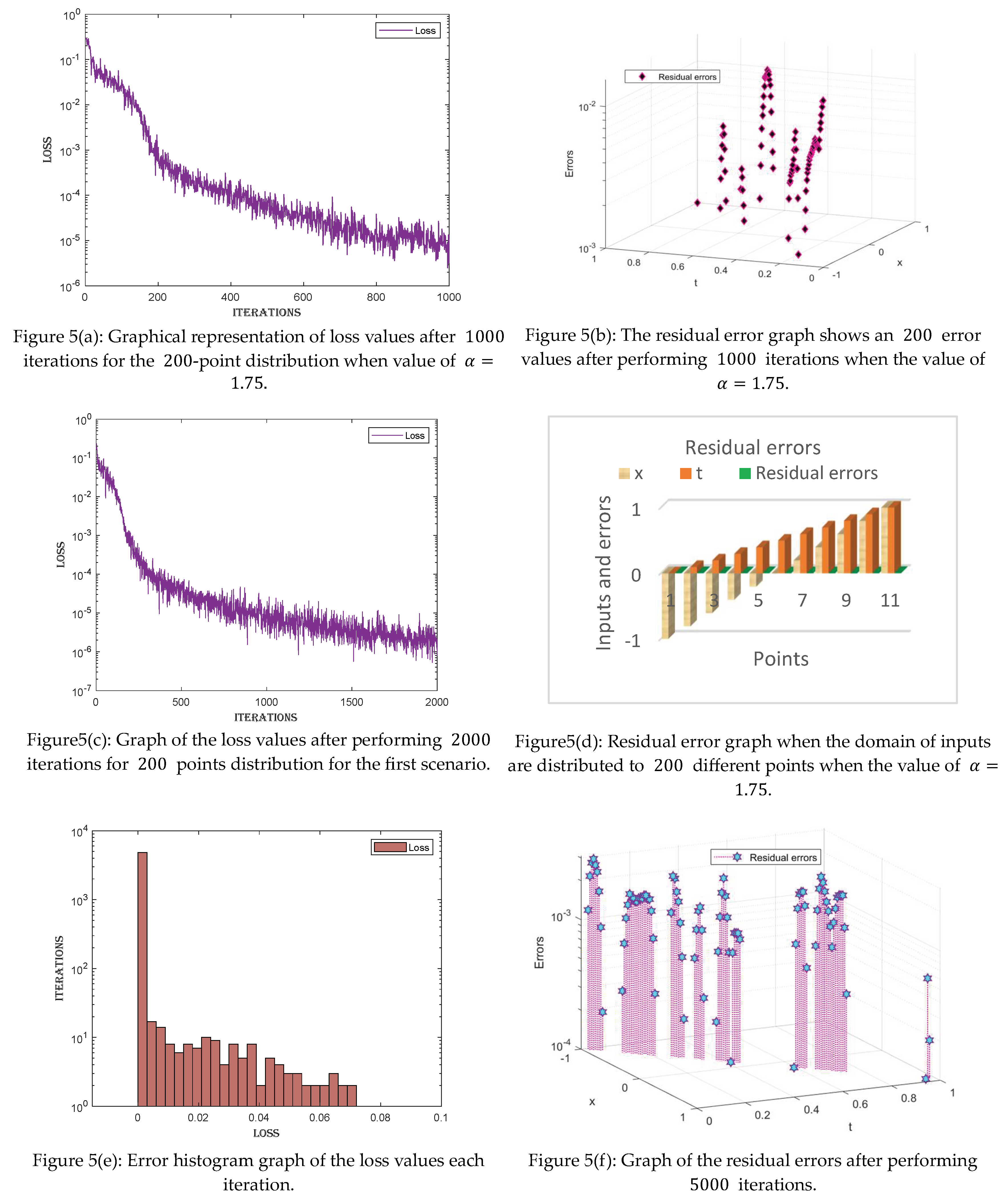

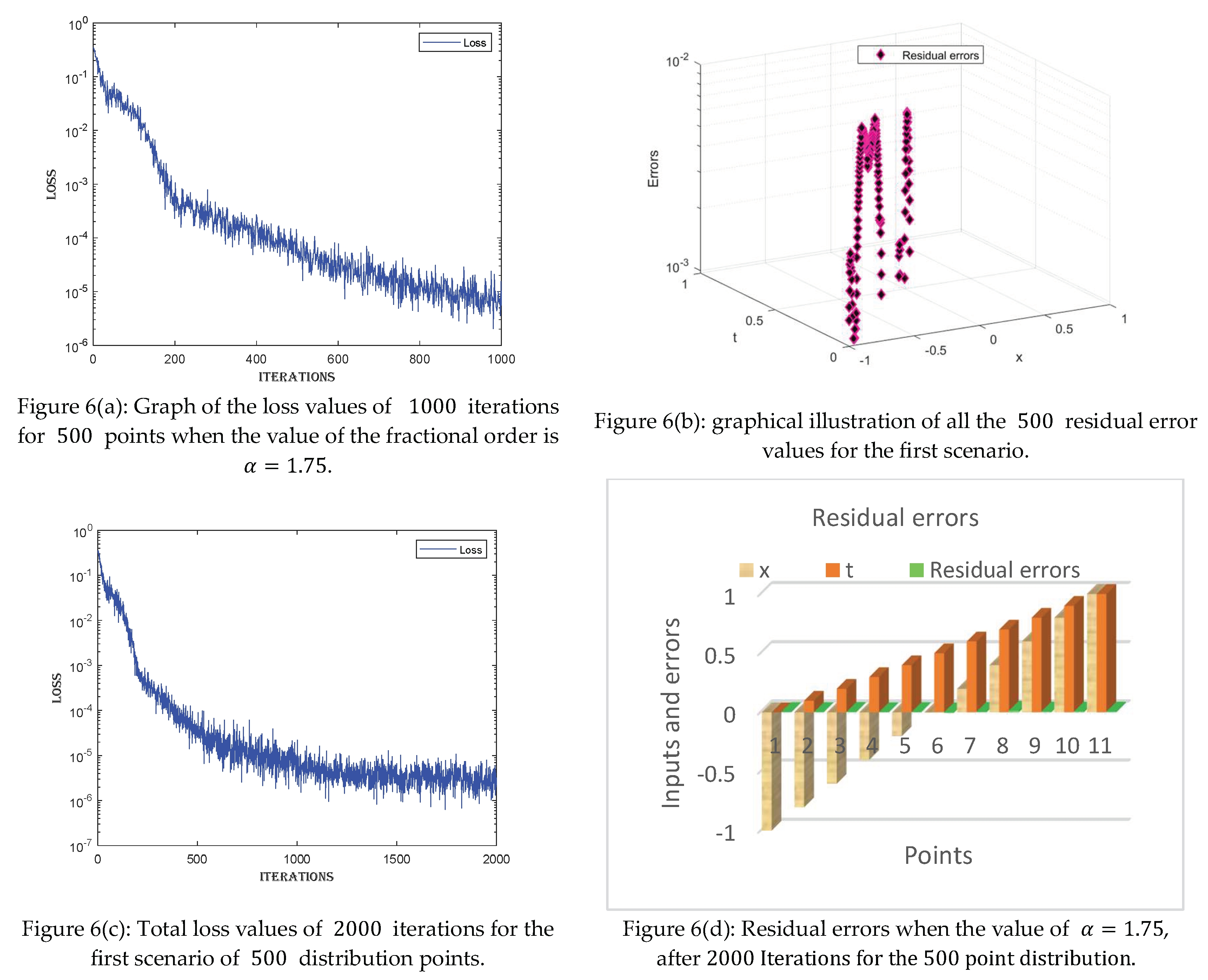

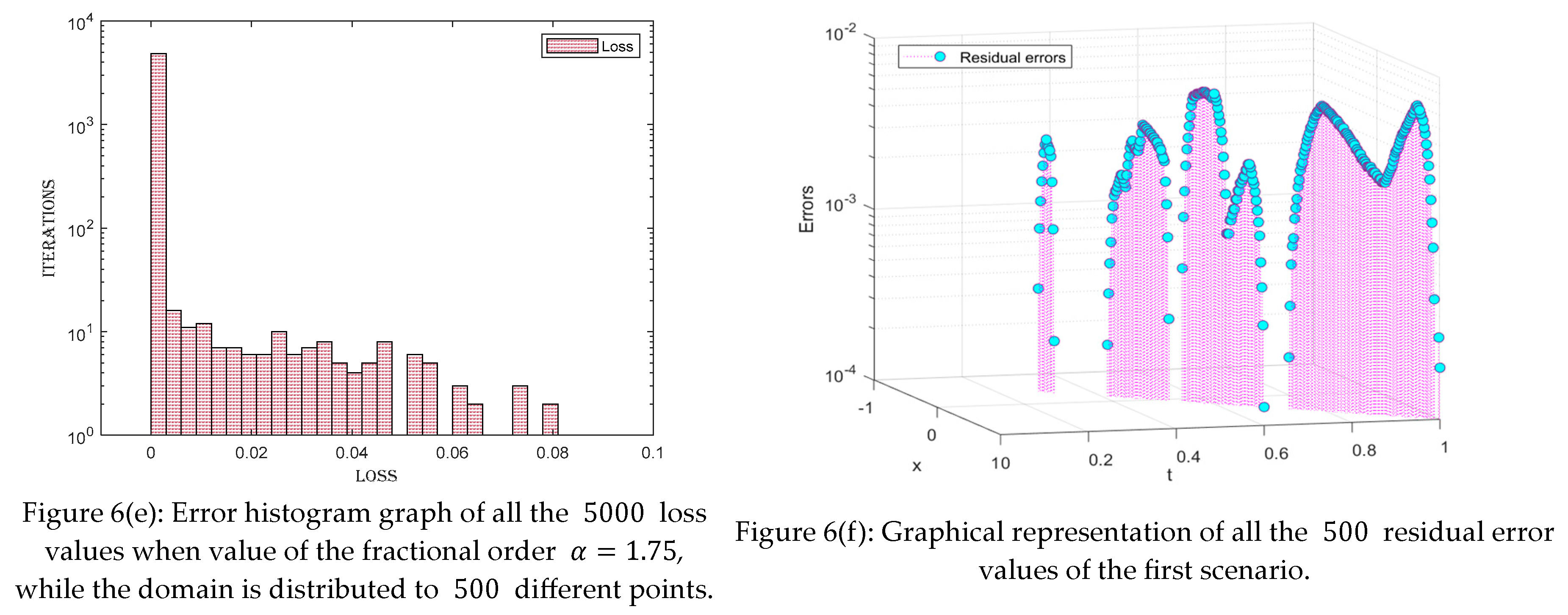

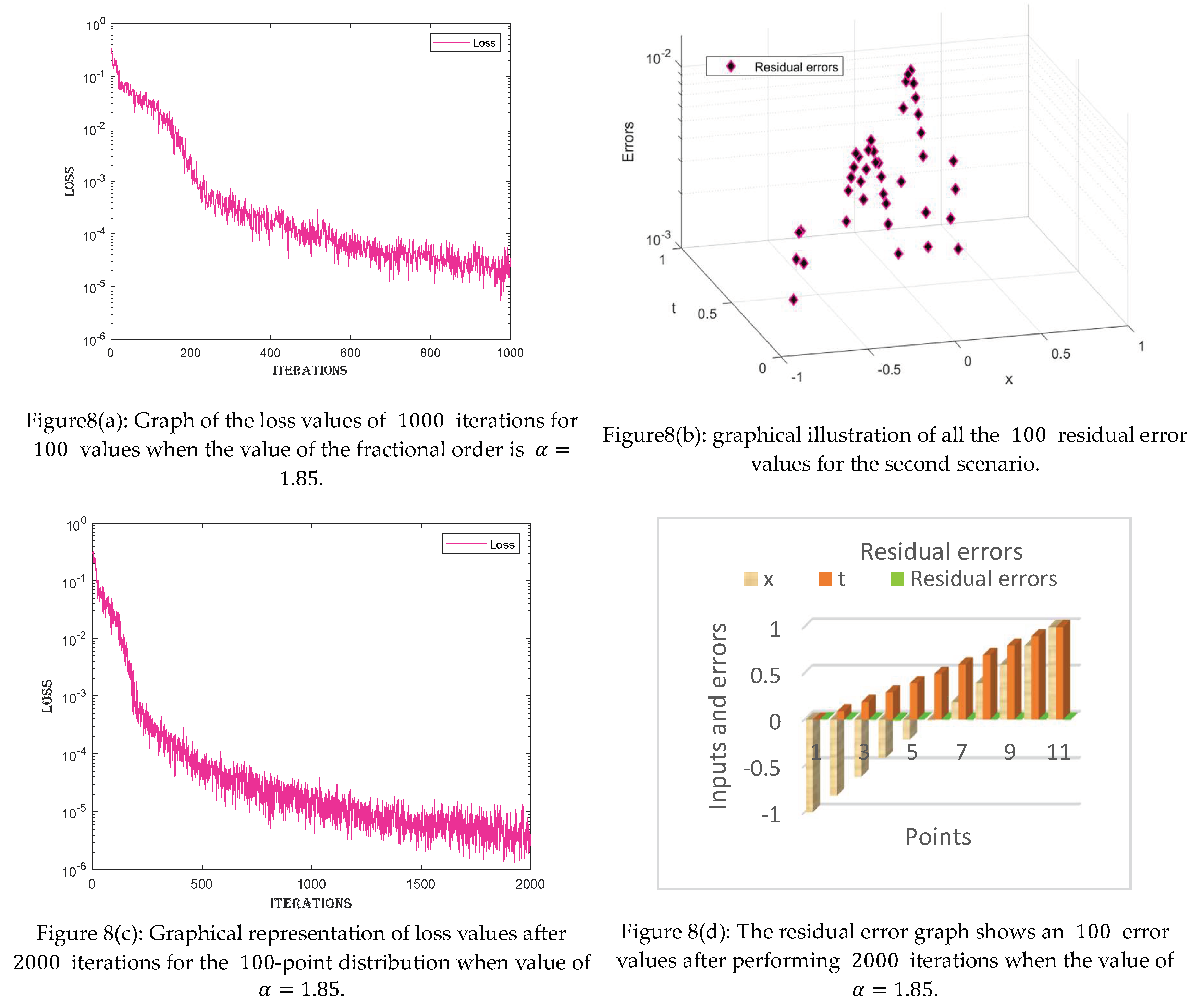

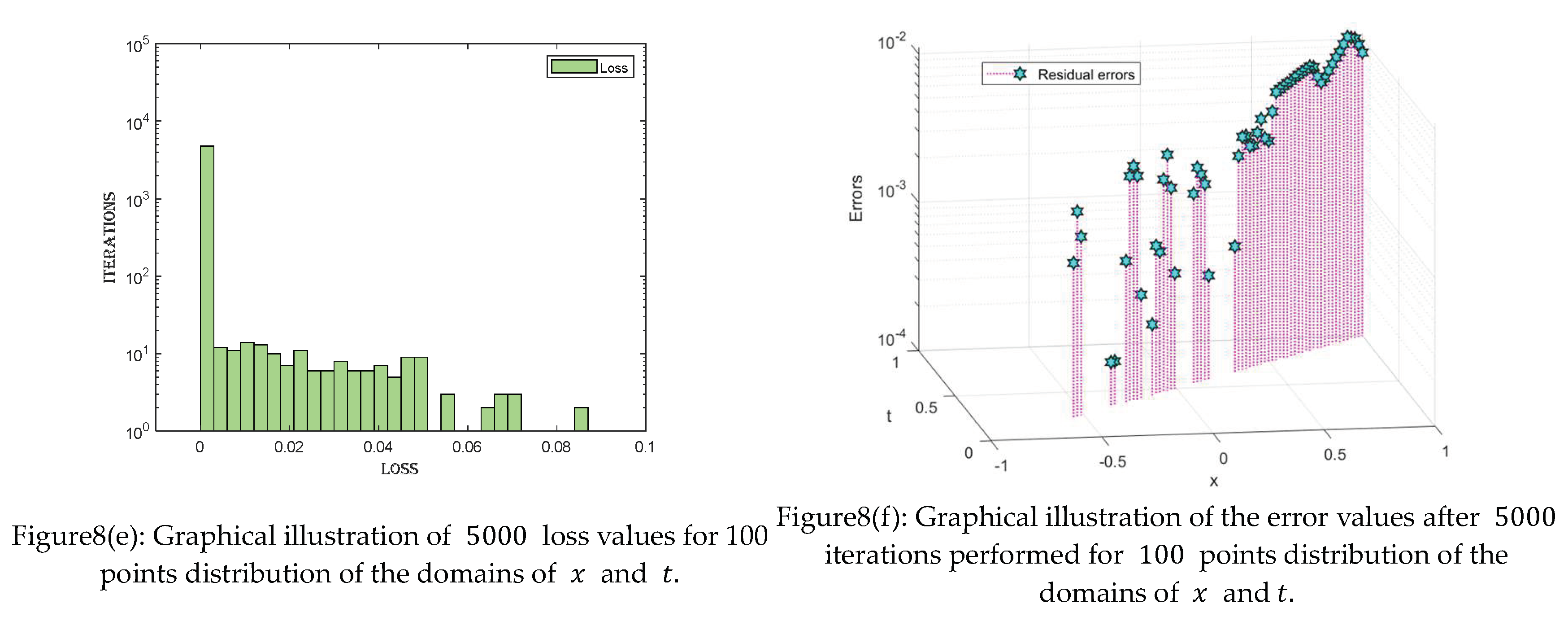

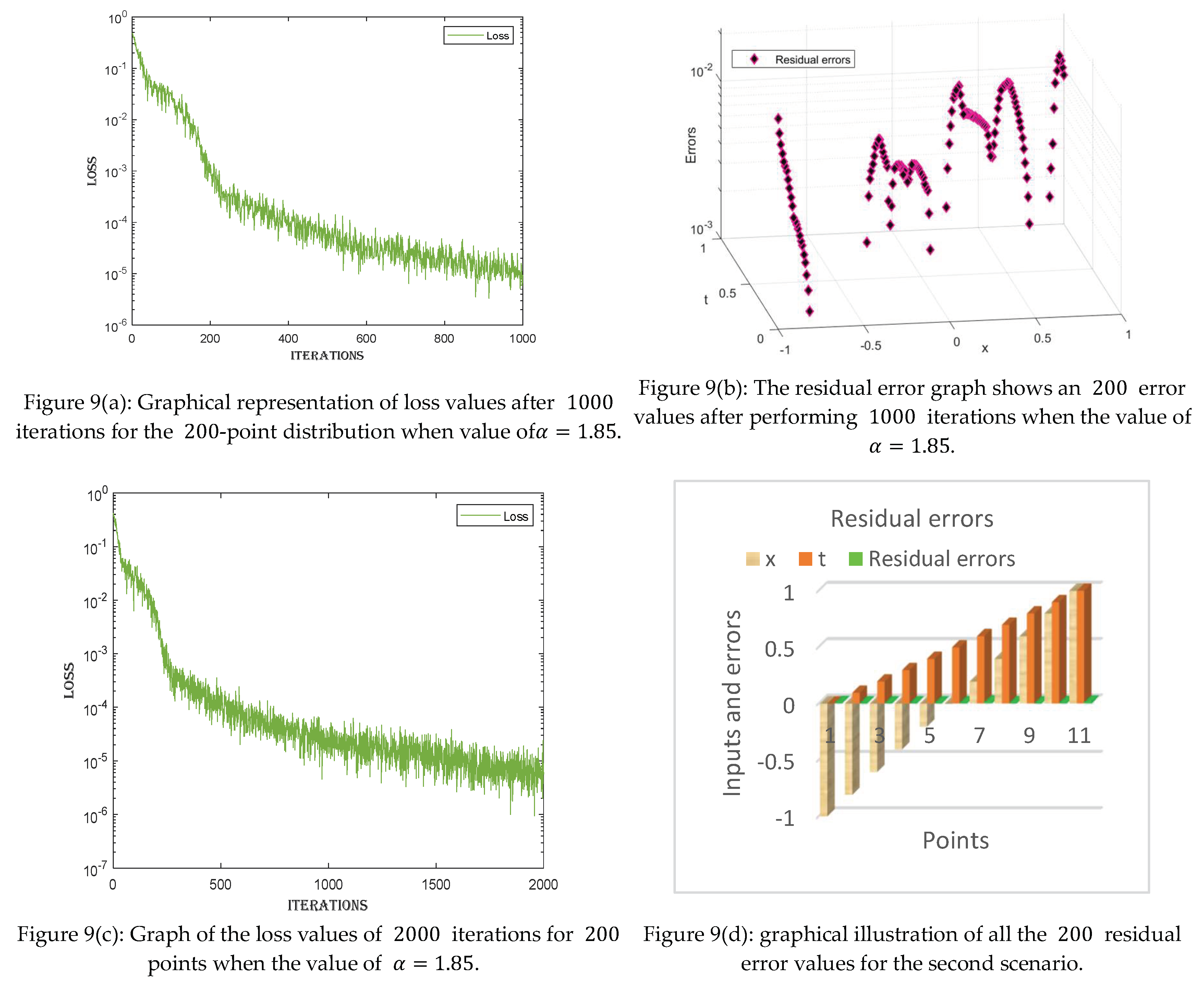

- The loss values given for each case can be visualized in the tables. The loss values are very minimal, ranging between and , indicating the precision of the proposed technique.

- ➢

- All the solutions of the proposed technique are compared with those of the score-fPINN technique, which is a well-known technique for solving fractional differential equations.

- ➢

- The residual error graph and tables show the errors between both techniques. The errors range between and . The small error indicates the validity of our proposed technique.

- ➢

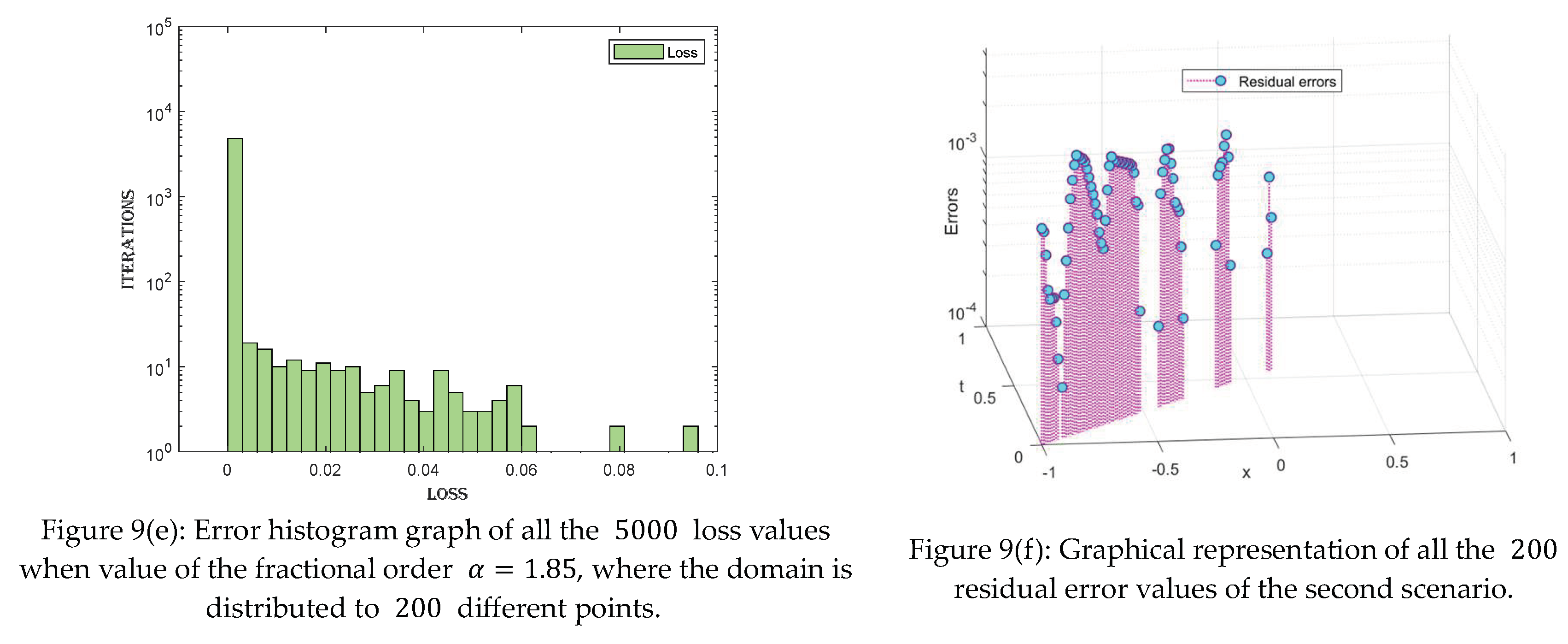

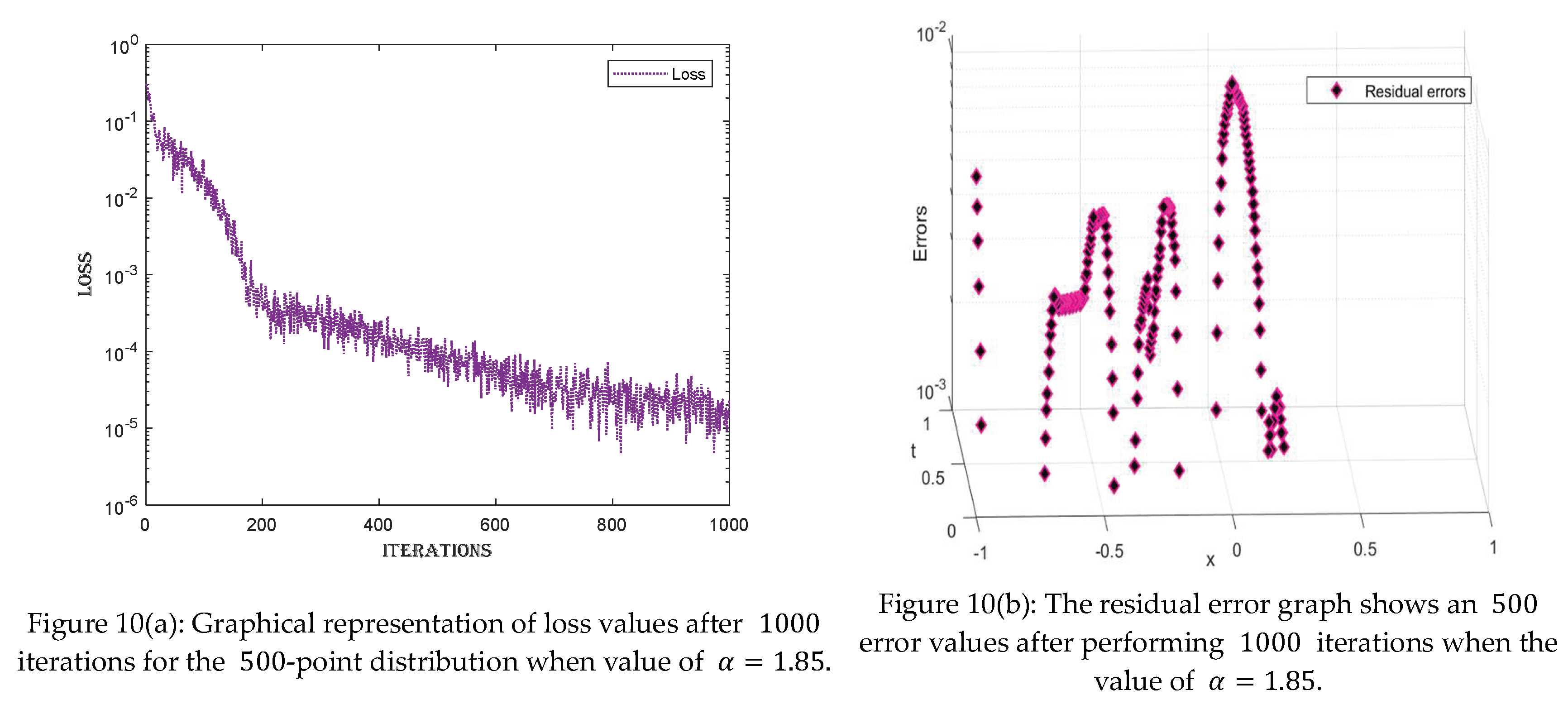

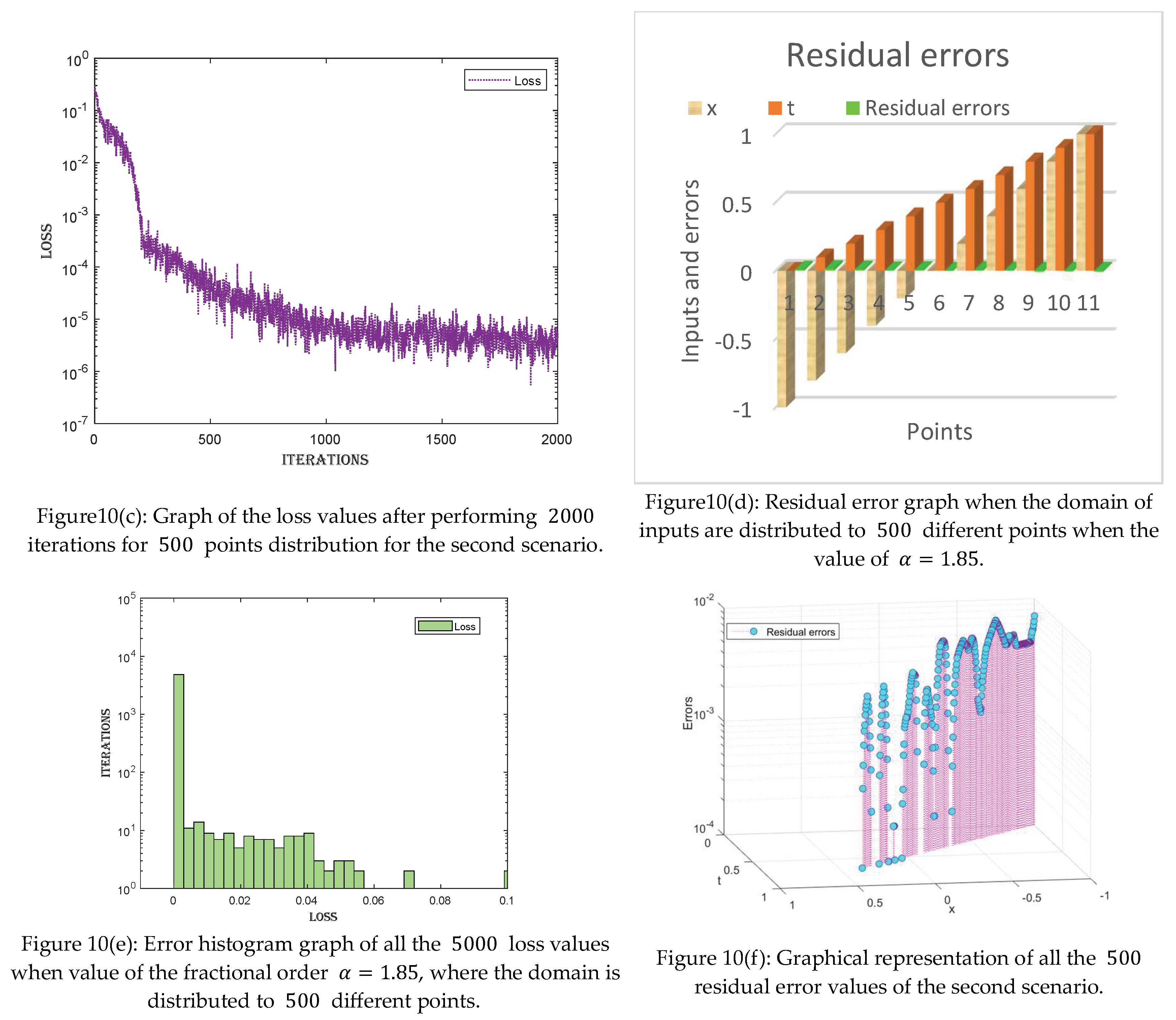

- Furthermore, loss and error graphs have been added to the manuscript to explore the proposed technique further. The histogram graph shows the consistency of the proposed technique.

- ➢

- All the results presented in the tables and graphs indicate that the proposed technique is a state-of-the-art technique that is robust.

2. Defining the Problem

The FFPL equation is defined in terms of the following quantities:

- The diffusion term, which in this problem is taken as the identity matrix.

- The Levy noise.

- The order $\alpha$ of the fractional Laplacian, where $0 < \alpha \le 2$.

- The fractional Laplacian operator $(-\Delta)^{\alpha/2}$ of order $\alpha$.

- The drift term.

The value of $\alpha$ determines the type of the underlying stable distribution:

- If $\alpha = 2$, it is a Gaussian distribution.

- If $\alpha = 1$, it is a Cauchy distribution.

- If $\alpha = 3/2$, it is a Holtsmark distribution, whose PDF can be expressed in terms of hypergeometric functions.

In the definition of the fractional Laplacian, $\alpha$ represents its fractional order and $d$ is the dimension.
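The role of the stability index α in the cases listed above can be illustrated numerically. The sketch below is not part of the FDM-APINN method: it draws samples of a symmetric α-stable random variable with unit scale using the standard Chambers–Mallows–Stuck algorithm, and the helper name `sample_symmetric_stable` is hypothetical. Under this parameterization (characteristic function exp(−|k|^α)), α = 2 yields a Gaussian with variance 2 and α = 1 yields a standard Cauchy variable.

```python
import numpy as np


def sample_symmetric_stable(alpha, size, rng):
    """Draw symmetric alpha-stable samples with unit scale (Chambers-Mallows-Stuck).

    Hypothetical helper for illustration; requires 0 < alpha <= 2.
    The characteristic function of the samples is exp(-|k|**alpha).
    """
    if not 0.0 < alpha <= 2.0:
        raise ValueError("alpha must lie in (0, 2]")
    v = rng.uniform(-np.pi / 2, np.pi / 2, size)  # uniform random angle
    w = rng.exponential(1.0, size)                # unit-mean exponential variable
    # For alpha = 1 this reduces to tan(V), i.e. a standard Cauchy variable;
    # for alpha = 2 it reduces to 2*sin(V)*sqrt(W), a Gaussian with variance 2.
    return (np.sin(alpha * v) / np.cos(v) ** (1.0 / alpha)
            * (np.cos((1.0 - alpha) * v) / w) ** ((1.0 - alpha) / alpha))
```

For example, `sample_symmetric_stable(1.5, 10_000, np.random.default_rng(0))` would give Holtsmark-distributed samples, whose heavy tails contrast with the Gaussian case α = 2.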

3. Proposed Methodology

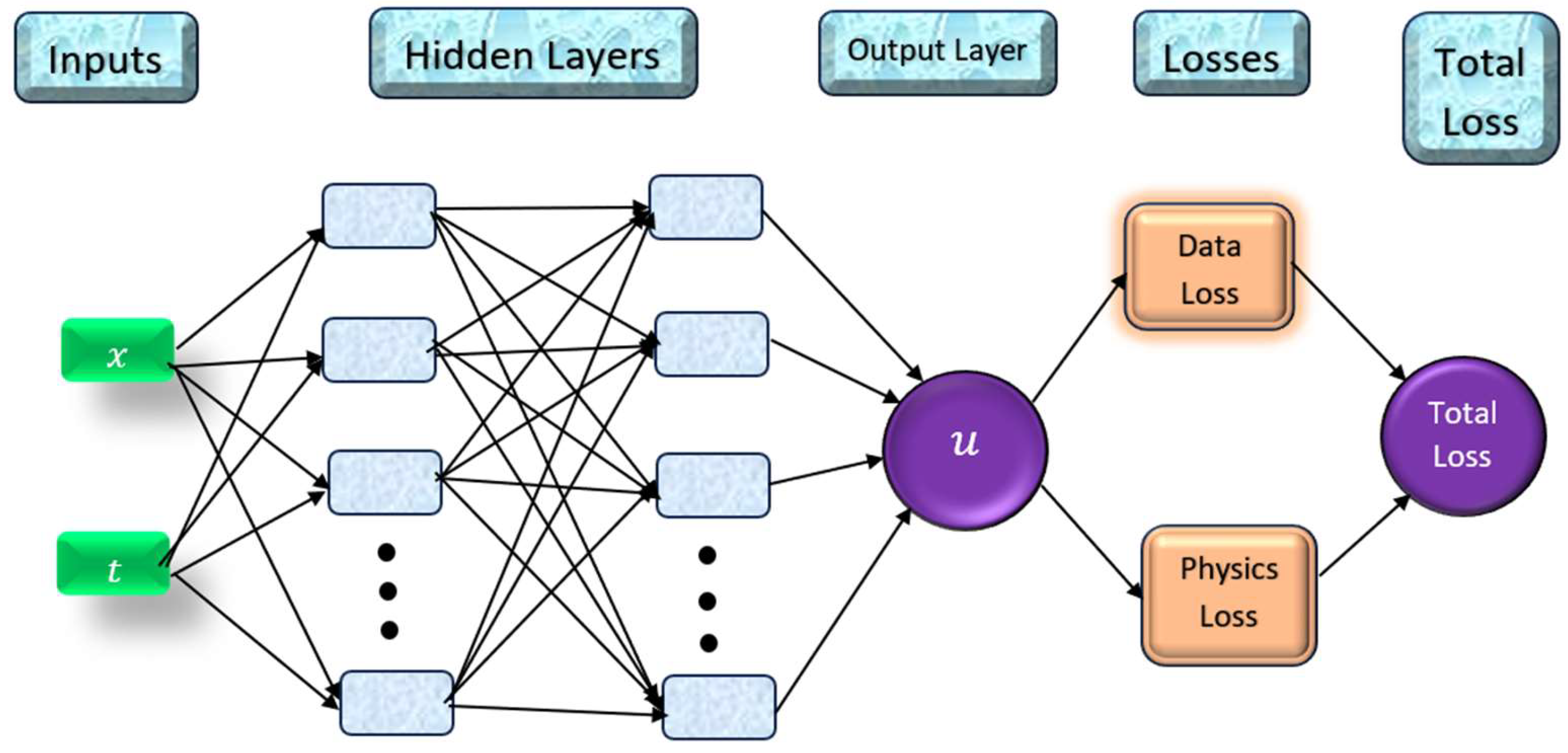

3.1. Loss Function

3.1.1. Data Loss

3.1.2. Errors

3.1.3. Physics Loss

3.1.4. Total Loss
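The loss components named in Section 3.1 can be sketched as follows, assuming mean-squared-error terms combined by a weighted sum. The choice of MSE, the weights, and the helper names `mse` and `total_loss` are illustrative assumptions rather than the authors' exact definitions; the physics residual would come from evaluating the FFPL equation at collocation points.

```python
import numpy as np


def mse(residual):
    """Mean of the squared entries of a residual array."""
    r = np.asarray(residual, dtype=float)
    return float(np.mean(r ** 2))


def total_loss(u_pred, u_target, pde_residual, w_data=1.0, w_phys=1.0):
    """Hypothetical weighted total loss for a PINN.

    u_pred, u_target : network output and known values at data points
                       (for example, the initial condition)
    pde_residual     : FFPL residual evaluated at collocation points
    Returns (total, data_loss, physics_loss).
    """
    data_loss = mse(np.asarray(u_pred, dtype=float) - np.asarray(u_target, dtype=float))
    physics_loss = mse(pde_residual)
    return w_data * data_loss + w_phys * physics_loss, data_loss, physics_loss
```

Returning the individual terms alongside the total makes it easy to log the data and physics losses separately during training.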

3.2. Optimizer

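The Adam optimizer updates the network parameters as follows:

$$
\begin{aligned}
m_t &= \beta_1 m_{t-1} + (1-\beta_1)\, g_t, \\
v_t &= \beta_2 v_{t-1} + (1-\beta_2)\, g_t^{2}, \\
\hat{m}_t &= \frac{m_t}{1-\beta_1^{t}}, \qquad \hat{v}_t = \frac{v_t}{1-\beta_2^{t}}, \\
\theta_t &= \theta_{t-1} - \eta\, \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon},
\end{aligned}
$$

where

- $t$ is the time step.

- $\theta_t$ represents the parameters at time step $t$.

- $g_t$ is the gradient of the objective function with respect to $\theta$ at time step $t$.

- $m_t$ is the first moment vector (mean of the gradients).

- $v_t$ is the second moment vector (uncentered variance of the gradients).

- $\eta$ is the learning rate.

- $\beta_1, \beta_2 \in [0, 1)$ are the exponential decay rates for the moment estimates.

- $\hat{m}_t$ and $\hat{v}_t$ are the bias-corrected moment estimates.

- $\epsilon$ is a small constant for numerical stability.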
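The Adam update defined by the quantities above can be sketched in NumPy as follows, assuming the commonly used default hyperparameters (β₁ = 0.9, β₂ = 0.999, ε = 10⁻⁸). The function name `adam_step` is hypothetical; in practice a deep-learning framework's built-in Adam implementation would normally be used.

```python
import numpy as np


def adam_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """Perform one Adam update with bias correction.

    theta, grad, m, v : arrays of the same shape
    t                 : 1-based time step
    Returns the updated (theta, m, v).
    """
    m = beta1 * m + (1.0 - beta1) * grad          # first moment (mean of gradients)
    v = beta2 * v + (1.0 - beta2) * grad ** 2     # second moment (uncentered variance)
    m_hat = m / (1.0 - beta1 ** t)                # bias-corrected first moment
    v_hat = v / (1.0 - beta2 ** t)                # bias-corrected second moment
    theta = theta - lr * m_hat / (np.sqrt(v_hat) + eps)
    return theta, m, v
```

Called repeatedly with the gradient of the total loss and t = 1, 2, 3, and so on, it moves θ against the bias-corrected, variance-normalized gradient; at t = 1 the step has magnitude close to the learning rate in each coordinate.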

| Step | Algorithm 1: Pseudocode of the complete FDM-APINN procedure |
|---|---|
| | Start of FDM-APINN |
| 1 | Define the neural network |
| 2 | Set the size of the input layer to 2 |
| 3 | Select 50 hidden neurons |
| 4 | Set the parameters |
| 5 | Select the number of iterations and the learning rate |
| 6 | Set the values of the physical parameters |
| 7 | Generate the training data |
| 8 | Create the grid points |
| 9 | Set the initial condition |
| 10 | Training loop |
| 11 | Select a mini-batch sample |
| 12 | Prepare the input data and convert it to a deep-learning array |
| 13 | Compute the gradients and loss functions |
| 14 | Update the network with the Adam optimizer |
| 15 | Display the loss |
| 16 | Evaluate the network |
| 17 | Predict and plot using the test data |
| 18 | Calculate the target functions |
| 19 | Compute the derivatives |
| 20 | Compute the fractional Laplacian using the FDM |
| 21 | Compute the data loss |
| 22 | Compute the physics loss |
| 23 | Compute the gradients and the total loss |
| | End of FDM-APINN |
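Step 20 evaluates the fractional Laplacian by finite differences. As one possible discretization (an assumption, not necessarily the authors' scheme), the sketch below applies the one-dimensional fractional centered difference on a uniform grid with spacing $h$,

$(-\Delta)^{\alpha/2} u(x_j) \approx h^{-\alpha} \sum_k g_k\, u(x_{j-k})$, with $g_k = \dfrac{(-1)^k\,\Gamma(\alpha+1)}{\Gamma(\alpha/2-k+1)\,\Gamma(\alpha/2+k+1)}$ and $g_{-k} = g_k$,

assuming $u$ vanishes outside the grid. The helper names `fcd_weights` and `fractional_laplacian_1d` are hypothetical.

```python
import math

import numpy as np


def fcd_weights(alpha, n):
    """Weights g_0, ..., g_{n-1} of the fractional centered difference.

    Uses the recurrence g_{k+1} = (1 - (alpha + 1) / (alpha/2 + k + 1)) * g_k,
    which avoids evaluating Gamma at non-positive integers.
    """
    g = np.empty(n)
    g[0] = math.gamma(alpha + 1.0) / math.gamma(alpha / 2.0 + 1.0) ** 2
    for k in range(n - 1):
        g[k + 1] = (1.0 - (alpha + 1.0) / (alpha / 2.0 + k + 1.0)) * g[k]
    return g


def fractional_laplacian_1d(u, h, alpha):
    """Approximate (-Delta)^{alpha/2} u on a uniform 1-D grid with spacing h.

    Assumes u is zero outside the grid (homogeneous exterior condition).
    """
    u = np.asarray(u, dtype=float)
    n = u.size
    g = fcd_weights(alpha, n)
    idx = np.arange(n)
    # Symmetric Toeplitz matrix with entries A[j, i] = g_{|j - i|}
    A = g[np.abs(np.subtract.outer(idx, idx))]
    return (A @ u) / h ** alpha
```

For α = 2 the weights reduce to (2, −1, 0, 0, …), the standard three-point stencil for −u″, which gives a convenient check of the implementation.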

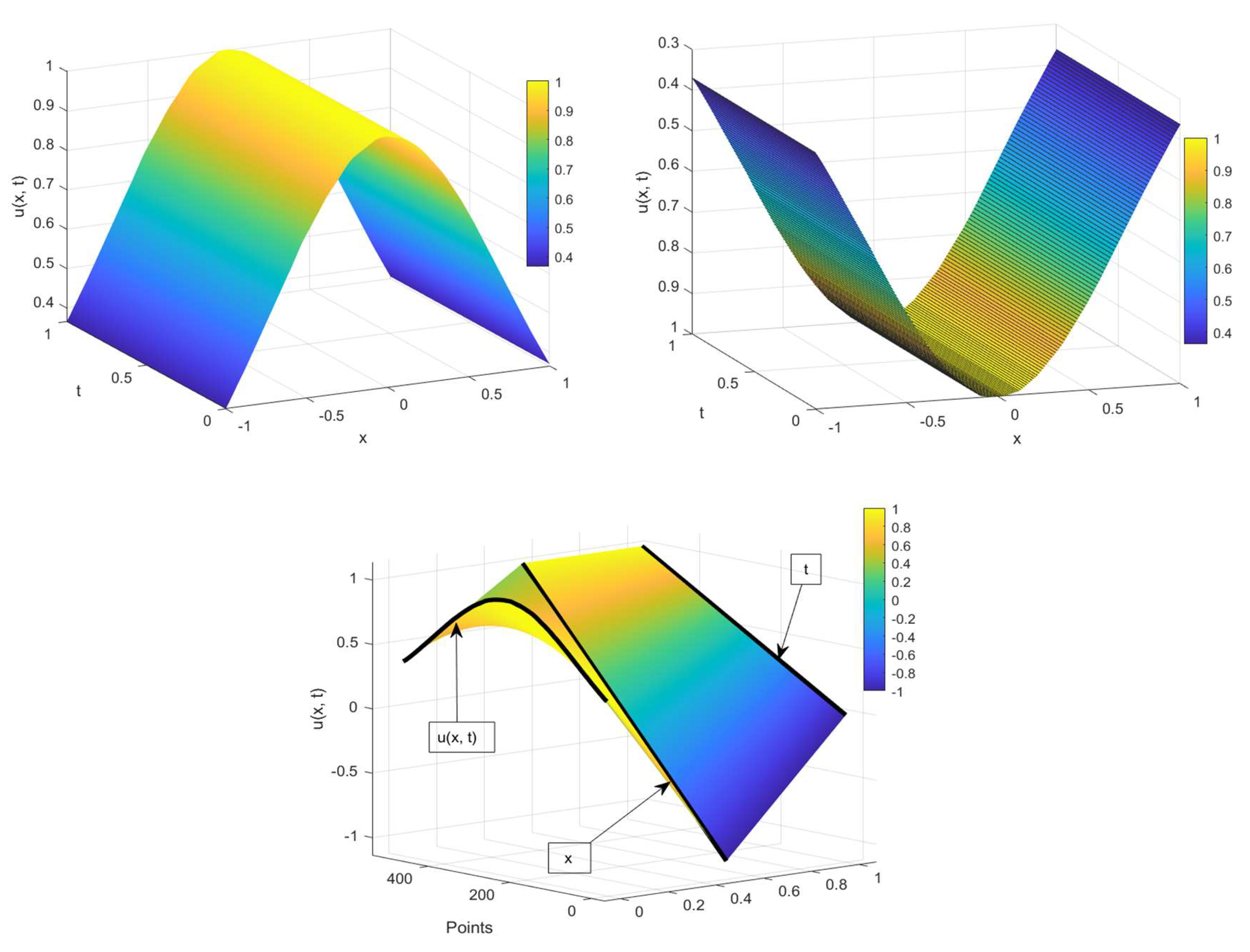

4. Results and Discussion

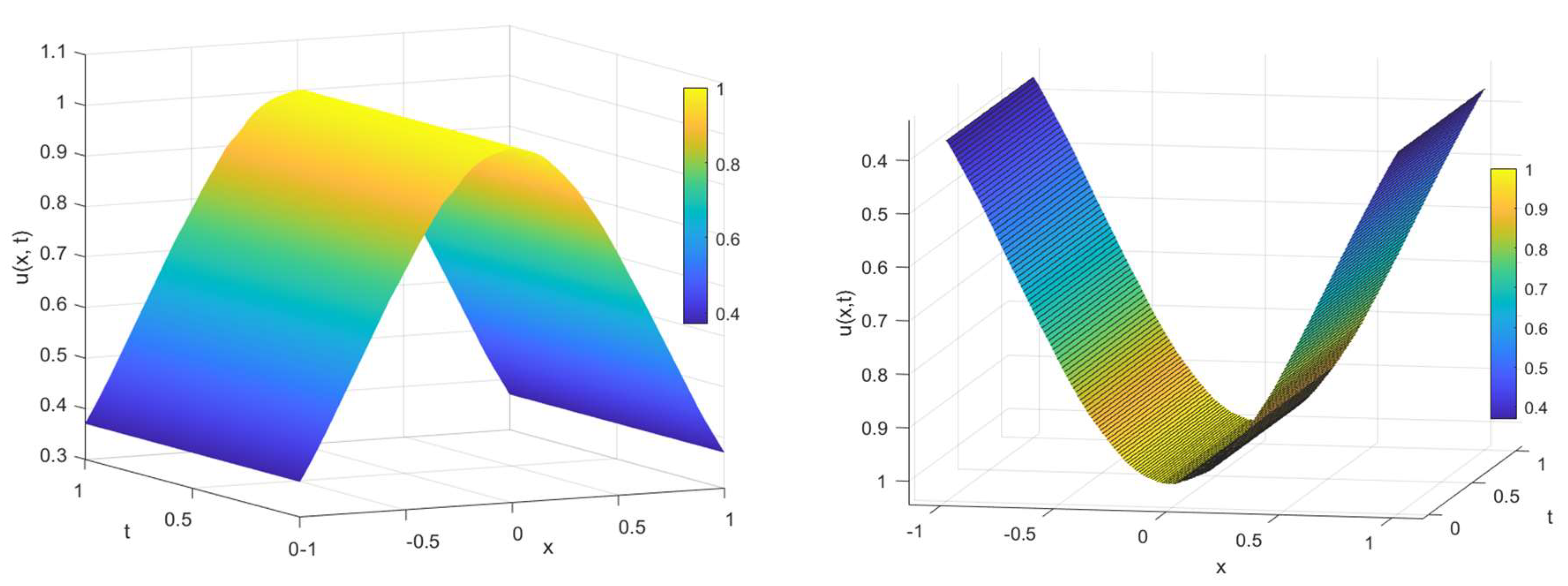

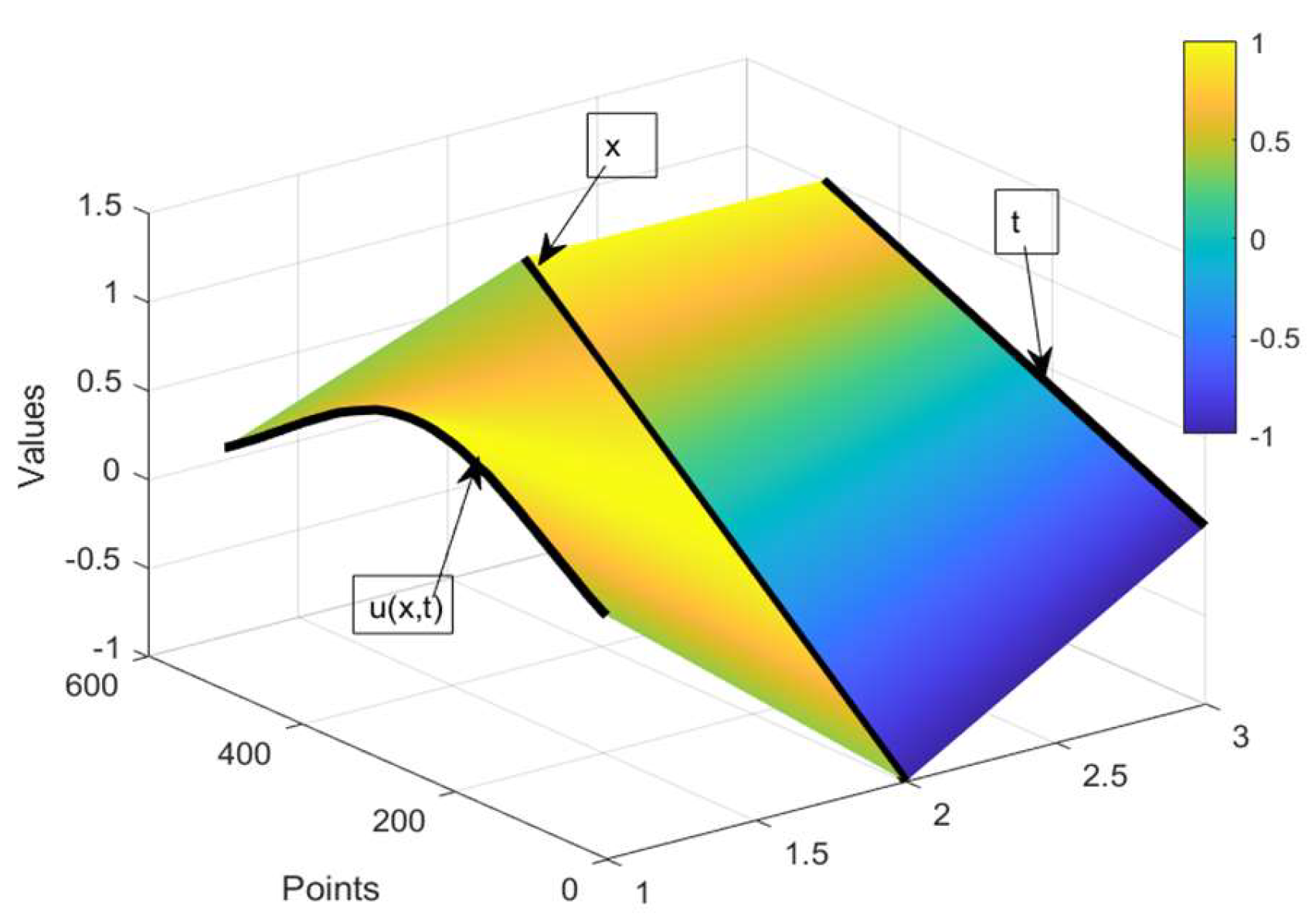

4.1. Scenario 1

4.2. Scenario 2

5. Conclusions

- This manuscript solves the fractional Fokker–Planck–Levy (FFPL) equation. The equation contains Levy noise and a fractional Laplacian, which make it computationally complex.

- An analytical solution is not available, so the FFPL equation is solved with a hybrid technique that combines the finite difference method (FDM), the Adam optimizer, and physics-informed neural networks (PINNs).

- The FDM is used to compute the fractional Laplacian term in the equation.

- The PINN then approximates the solution by minimizing the overall loss function with the Adam optimizer.

- The study is organized into two main scenarios obtained by varying the fractional order parameter, and the equation is solved for two values of this parameter.

- For each value of the fractional order parameter, three cases are considered by discretizing the domain with 100, 200, and 500 points.

- For each case, the equation is solved with the proposed technique using 1000, 2000, and 5000 iterations.

- The loss values for each case are reported in the tables. They are very small, which indicates the precision of the proposed technique.

- All solutions of the proposed technique are compared with those of the score-fPINN technique, a well-known technique for solving fractional differential equations.

- The residual error graphs and tables show the differences between the two techniques. The small errors indicate the validity of the proposed technique.

- In addition, loss and error graphs are included to examine the proposed technique further, and the histograms show its consistency.

- All the results presented in the tables and graphs indicate that the proposed technique is a robust, state-of-the-art technique.

CRediT authorship contribution statement

Declaration of competing interest

Data availability

Acknowledgments


| Scenario | Case | Iterations |
|---|---|---|
| Scenario 1 | 100 points | 1000 |
| | | 2000 |
| | | 5000 |
| | 200 points | 1000 |
| | | 2000 |
| | | 5000 |
| | 500 points | 1000 |
| | | 2000 |
| | | 5000 |
| Scenario 2 | 100 points | 1000 |
| | | 2000 |
| | | 5000 |
| | 200 points | 1000 |
| | | 2000 |
| | | 5000 |
| | 500 points | 1000 |
| | | 2000 |
| | | 5000 |

| Points | Iterations | Average loss |
|---|---|---|
| 100 | 1000 | 1.79×10⁻⁵ |
| | 2000 | 4.69×10⁻⁶ |
| | 5000 | 1.05×10⁻⁶ |
| 200 | 1000 | 3.17×10⁻⁶ |
| | 2000 | 1.27×10⁻⁶ |
| | 5000 | 2.06×10⁻⁶ |
| 500 | 1000 | 5.56×10⁻⁶ |
| | 2000 | 2.21×10⁻⁶ |
| | 5000 | 1.12×10⁻⁶ |

| | | Residual error (1000 iterations) | Residual error (2000 iterations) | Residual error (5000 iterations) |
|---|---|---|---|---|
| -1.00 | 0.00 | -2.29×10⁻² | 2.49×10⁻² | -1.60×10⁻³ |
| -0.80 | 0.10 | 1.90×10⁻³ | -5.20×10⁻³ | -7.00×10⁻⁴ |
| -0.60 | 0.20 | 5.90×10⁻³ | -4.00×10⁻³ | 2.20×10⁻³ |
| -0.40 | 0.30 | 2.80×10⁻³ | -8.80×10⁻³ | -8.00×10⁻⁴ |
| -0.20 | 0.40 | -9.50×10⁻³ | 8.60×10⁻³ | 1.40×10⁻³ |
| 0.00 | 0.50 | -1.00×10⁻³ | 4.00×10⁻³ | 2.10×10⁻³ |
| 0.20 | 0.60 | -1.00×10⁻⁴ | 3.20×10⁻³ | -1.70×10⁻³ |
| 0.40 | 0.70 | -5.20×10⁻³ | 6.00×10⁻³ | -2.20×10⁻³ |
| 0.60 | 0.80 | 7.70×10⁻³ | 1.90×10⁻³ | -1.20×10⁻³ |
| 0.80 | 0.90 | 3.60×10⁻³ | -4.00×10⁻³ | 6.00×10⁻⁴ |
| 1.00 | 1.00 | 9.90×10⁻³ | 5.00×10⁻⁴ | 1.60×10⁻³ |

| | | Residual error (1000 iterations) | Residual error (2000 iterations) | Residual error (5000 iterations) |
|---|---|---|---|---|
| -1.00 | 0.00 | 1.51×10⁻² | 1.70×10⁻³ | -3.40×10⁻³ |
| -0.80 | 0.10 | 5.70×10⁻³ | 3.50×10⁻³ | -1.10×10⁻³ |
| -0.60 | 0.20 | 1.00×10⁻³ | -8.00×10⁻⁴ | 1.30×10⁻³ |
| -0.40 | 0.30 | -4.50×10⁻³ | 4.00×10⁻⁴ | -4.00×10⁻⁴ |
| -0.20 | 0.40 | 3.30×10⁻³ | 2.40×10⁻³ | 2.60×10⁻³ |
| 0.00 | 0.50 | 3.10×10⁻³ | -9.00×10⁻⁴ | -1.90×10⁻³ |
| 0.20 | 0.60 | -1.60×10⁻³ | -1.10×10⁻³ | 9.00×10⁻⁴ |
| 0.40 | 0.70 | -7.00×10⁻³ | -1.50×10⁻³ | 9.00×10⁻⁴ |
| 0.60 | 0.80 | 3.70×10⁻³ | -2.10×10⁻³ | -3.00×10⁻³ |
| 0.80 | 0.90 | -8.30×10⁻³ | 0.00 | -1.80×10⁻³ |
| 1.00 | 1.00 | 1.00×10⁻³ | 4.60×10⁻³ | -2.30×10⁻³ |

| | | Residual error (1000 iterations) | Residual error (2000 iterations) | Residual error (5000 iterations) |
|---|---|---|---|---|
| -1.00 | 0.00 | 1.30×10⁻³ | 2.80×10⁻³ | -7.00×10⁻⁴ |
| -0.80 | 0.10 | 7.10×10⁻³ | 2.40×10⁻³ | -2.20×10⁻³ |
| -0.60 | 0.20 | 7.20×10⁻³ | 3.70×10⁻³ | -3.70×10⁻³ |
| -0.40 | 0.30 | -6.00×10⁻⁴ | 1.20×10⁻³ | 2.10×10⁻³ |
| -0.20 | 0.40 | -6.40×10⁻³ | 1.80×10⁻³ | -1.70×10⁻³ |
| 0.00 | 0.50 | 2.30×10⁻³ | -5.10×10⁻³ | 3.20×10⁻³ |
| 0.20 | 0.60 | -5.10×10⁻³ | 4.10×10⁻³ | 6.20×10⁻³ |
| 0.40 | 0.70 | -5.20×10⁻³ | 1.46×10⁻² | -2.00×10⁻⁴ |
| 0.60 | 0.80 | -1.33×10⁻² | 6.00×10⁻³ | 5.80×10⁻³ |
| 0.80 | 0.90 | -9.40×10⁻³ | 6.50×10⁻³ | 2.30×10⁻³ |
| 1.00 | 1.00 | 1.00×10⁻⁴ | 6.00×10⁻³ | 2.00×10⁻⁴ |

| Points | Iterations | Average loss |
|---|---|---|
| 100 | 1000 | 3.38×10⁻⁵ |
| | 2000 | 2.63×10⁻⁶ |
| | 5000 | 1.90×10⁻⁶ |
| 200 | 1000 | 9.25×10⁻⁶ |
| | 2000 | 5.61×10⁻⁶ |
| | 5000 | 3.30×10⁻⁶ |
| 500 | 1000 | 2.36×10⁻⁵ |
| | 2000 | 4.27×10⁻⁶ |
| | 5000 | 4.33×10⁻⁶ |

| | | Residual error (1000 iterations) | Residual error (2000 iterations) | Residual error (5000 iterations) |
|---|---|---|---|---|
| -1.00 | 0.00 | -5.00×10⁻⁴ | 3.10×10⁻³ | -1.36×10⁻² |
| -0.80 | 0.10 | 2.80×10⁻³ | 1.60×10⁻³ | -6.00×10⁻³ |
| -0.60 | 0.20 | -1.80×10⁻³ | -2.70×10⁻³ | -2.40×10⁻³ |
| -0.40 | 0.30 | 6.60×10⁻³ | -1.18×10⁻² | -3.20×10⁻³ |
| -0.20 | 0.40 | 5.80×10⁻³ | 9.00×10⁻⁴ | 3.20×10⁻³ |
| 0.00 | 0.50 | 9.80×10⁻³ | -1.80×10⁻³ | 6.00×10⁻⁴ |
| 0.20 | 0.60 | 1.40×10⁻³ | -2.30×10⁻³ | -1.90×10⁻³ |
| 0.40 | 0.70 | 3.50×10⁻³ | 1.30×10⁻³ | 3.10×10⁻³ |
| 0.60 | 0.80 | -6.00×10⁻³ | 1.40×10⁻³ | 7.00×10⁻³ |
| 0.80 | 0.90 | -7.60×10⁻³ | -6.00×10⁻⁴ | 6.40×10⁻³ |
| 1.00 | 1.00 | 5.00×10⁻⁴ | -3.60×10⁻³ | 8.10×10⁻³ |

| | | Residual error (1000 iterations) | Residual error (2000 iterations) | Residual error (5000 iterations) |
|---|---|---|---|---|
| -1.00 | 0.00 | 2.17×10⁻² | 3.10×10⁻³ | 1.90×10⁻³ |
| -0.80 | 0.10 | 2.30×10⁻³ | -6.00×10⁻⁴ | 4.10×10⁻³ |
| -0.60 | 0.20 | -3.10×10⁻³ | 3.00×10⁻⁴ | 3.30×10⁻³ |
| -0.40 | 0.30 | -4.00×10⁻³ | 2.00×10⁻⁴ | 2.40×10⁻³ |
| -0.20 | 0.40 | 3.30×10⁻³ | 9.00×10⁻⁴ | -4.10×10⁻³ |
| 0.00 | 0.50 | 4.60×10⁻³ | 1.90×10⁻³ | -2.50×10⁻³ |
| 0.20 | 0.60 | 6.00×10⁻³ | 9.60×10⁻³ | -3.20×10⁻³ |
| 0.40 | 0.70 | 7.30×10⁻³ | 1.60×10⁻³ | -2.30×10⁻³ |
| 0.60 | 0.80 | 1.06×10⁻² | -7.00×10⁻⁴ | -4.20×10⁻³ |
| 0.80 | 0.90 | -4.00×10⁻⁴ | 3.30×10⁻³ | -6.50×10⁻³ |
| 1.00 | 1.00 | 8.80×10⁻³ | 7.90×10⁻³ | -1.21×10⁻² |

| | | Residual error (1000 iterations) | Residual error (2000 iterations) | Residual error (5000 iterations) |
|---|---|---|---|---|
| -1.00 | 0.00 | 9.00×10⁻³ | 8.50×10⁻³ | 7.00×10⁻³ |
| -0.80 | 0.10 | -2.10×10⁻³ | 1.29×10⁻² | 5.30×10⁻³ |
| -0.60 | 0.20 | 3.40×10⁻³ | 9.50×10⁻³ | 3.30×10⁻³ |
| -0.40 | 0.30 | -2.60×10⁻³ | 2.00×10⁻³ | 4.80×10⁻³ |
| -0.20 | 0.40 | 5.30×10⁻³ | 5.00×10⁻³ | 5.00×10⁻⁴ |
| 0.00 | 0.50 | 5.10×10⁻³ | -6.00×10⁻⁴ | 1.80×10⁻³ |
| 0.20 | 0.60 | 1.20×10⁻³ | 4.20×10⁻³ | 2.50×10⁻³ |
| 0.40 | 0.70 | -3.60×10⁻³ | 1.10×10⁻³ | -4.20×10⁻³ |
| 0.60 | 0.80 | -5.70×10⁻³ | -7.70×10⁻³ | -2.50×10⁻³ |
| 0.80 | 0.90 | -2.80×10⁻³ | -4.50×10⁻³ | -5.80×10⁻³ |
| 1.00 | 1.00 | -1.95×10⁻² | -7.90×10⁻³ | -5.30×10⁻³ |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).