Submitted: 25 September 2024

Posted: 26 September 2024


Abstract

Keywords:

1. Introduction

2. Related Work

3. Methodology

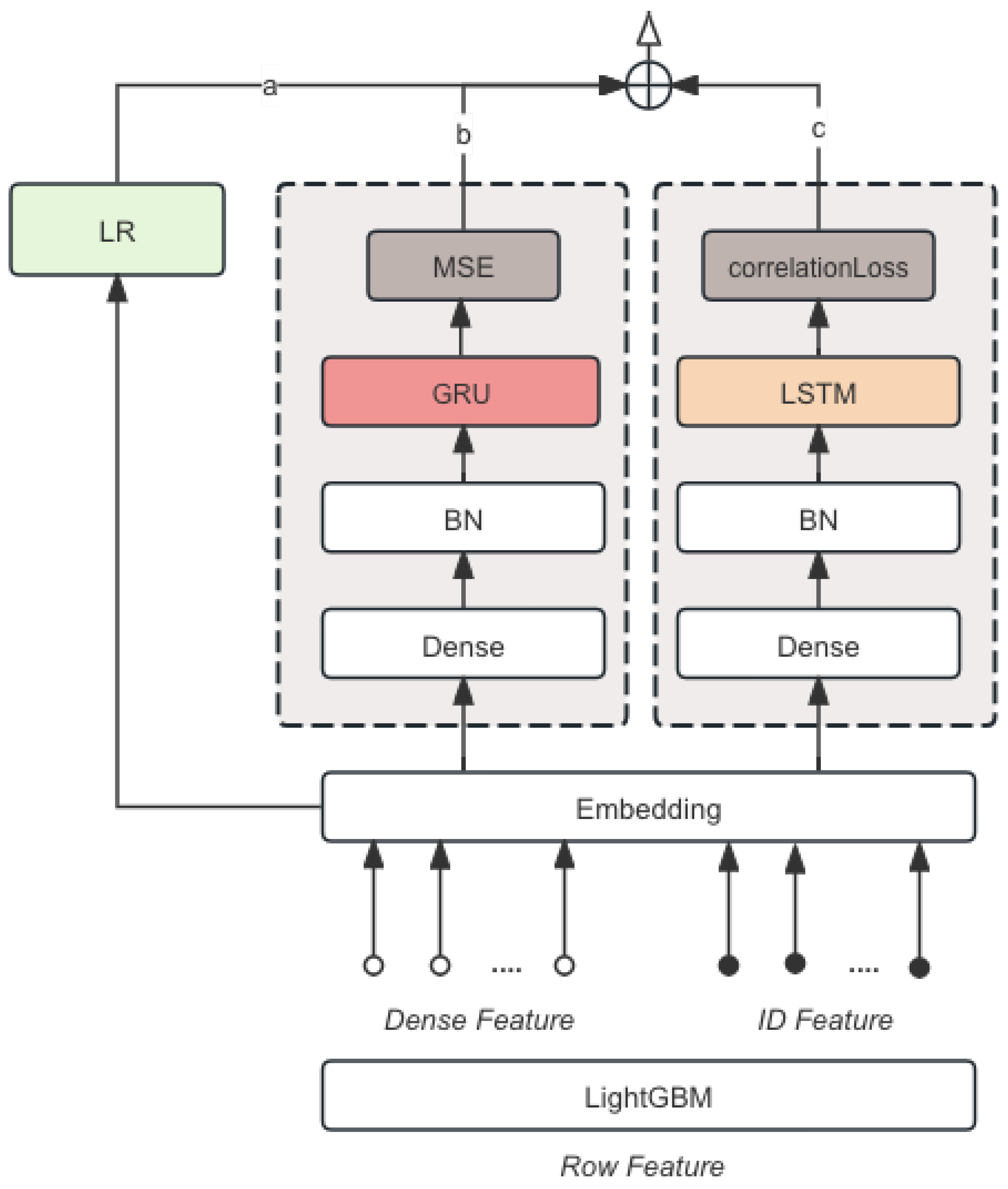

3.1. LSTM

- Batch Normalization: Standardizes the inputs.

- Dense Layers: Introduce non-linearity and reduce dimensionality.

- Dropout Layers: Reduce overfitting by randomly setting a fraction of input units to 0 at each training update.

- Reshape Layers: Prepare the data for the LSTM layers.

- LSTM Layers: Process the sequential data.
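To make the non-recurrent steps in this list concrete, the following is a minimal standard-library sketch of batch normalization, dropout, and reshaping. It is not the authors' implementation: the function names, the sample batch, the dropout rate of 0.5, and the (timesteps, features) layout are all assumptions made for illustration, and the rescaling of surviving units ("inverted dropout") is a common convention that the text above does not specify.

```python
import random
from statistics import fmean, pstdev


def batch_normalize(batch, eps=1e-5):
    """Standardize each feature column of a batch to zero mean, unit variance."""
    columns = list(zip(*batch))
    means = [fmean(col) for col in columns]
    stds = [pstdev(col) for col in columns]
    return [
        [(x - m) / (s * s + eps) ** 0.5 for x, m, s in zip(row, means, stds)]
        for row in batch
    ]


def dropout(row, rate, rng):
    """Zero each unit with probability `rate`; rescale the rest (assumed convention)."""
    keep = 1.0 - rate
    return [x / keep if rng.random() < keep else 0.0 for x in row]


def reshape(row, timesteps, features):
    """Turn a flat vector into a (timesteps, features) sequence for a recurrent layer."""
    if len(row) != timesteps * features:
        raise ValueError("row length must equal timesteps * features")
    return [row[t * features:(t + 1) * features] for t in range(timesteps)]


# Hypothetical batch of two samples with four features each.
batch = [[1.0, 10.0, 3.0, 4.0], [3.0, 30.0, 5.0, 8.0]]
normalized = batch_normalize(batch)
dropped = [dropout(row, rate=0.5, rng=random.Random(0)) for row in normalized]
sequences = [reshape(row, timesteps=2, features=2) for row in normalized]
```

Dropout applies only during training; at inference the normalized values would pass through unchanged.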

3.2. GRU

- Dense Layers: Introduce non-linearity.

- Batch Normalization: Ensures standardized inputs.

- Reshape Layers: Prepare the data for the GRU layers.

- GRU Layers: Process the sequential data.
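As an illustration of how a GRU layer processes sequential data, the sketch below implements one standard GRU update for a scalar input and a scalar hidden state. It is a simplified assumption-laden example rather than the model used in this work: the parameter names and values are hypothetical, and the blending convention (the update gate `z` weights the previous state) is one of two equivalent conventions found in the literature.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gru_step(x, h_prev, p):
    """One GRU update for a scalar input and a scalar hidden state."""
    z = sigmoid(p["wz"] * x + p["uz"] * h_prev + p["bz"])  # update gate
    r = sigmoid(p["wr"] * x + p["ur"] * h_prev + p["br"])  # reset gate
    # Candidate state: the reset gate scales how much of the past is used.
    h_cand = math.tanh(p["wh"] * x + p["uh"] * (r * h_prev) + p["bh"])
    # Blend the old state and the candidate (here z weights the old state).
    return z * h_prev + (1.0 - z) * h_cand


def run_gru(sequence, params, h0=0.0):
    """Fold a sequence into a final hidden state, one time step at a time."""
    h = h0
    for x in sequence:
        h = gru_step(x, h, params)
    return h


# Hypothetical weights chosen only for illustration (not trained values).
params = {"wz": 0.5, "uz": 0.1, "bz": 0.0,
          "wr": 0.4, "ur": 0.2, "br": 0.0,
          "wh": 0.9, "uh": 0.3, "bh": 0.0}
final_state = run_gru([0.01, -0.02, 0.015], params)
```

In a real layer the scalars become weight matrices and the hidden state a vector, but the gating logic is the same.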

3.3. LR

3.4. LightGBM

- Feature Engineering: Creates new features such as returns, moving averages, and volatility.

- Tree-based Learning: Uses gradient-boosted decision trees, with each new tree fitted to reduce the error left by the previous ones.
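The engineered features named above can be sketched with the standard library as follows. The exact definitions are assumptions, since this section does not state them: returns are taken as simple period-over-period returns, the moving average uses a trailing 3-period window, and volatility is the rolling sample standard deviation of returns. The price series is hypothetical.

```python
from statistics import fmean, stdev


def simple_returns(prices):
    """Period-over-period returns: p[t] / p[t-1] - 1."""
    return [cur / prev - 1.0 for prev, cur in zip(prices, prices[1:])]


def rolling(values, window, fn):
    """Apply fn to each trailing window; None until the window is full."""
    return [
        fn(values[i - window + 1:i + 1]) if i >= window - 1 else None
        for i in range(len(values))
    ]


prices = [100.0, 102.0, 101.0, 105.0, 107.0, 106.0]  # hypothetical closing prices
returns = simple_returns(prices)                      # one element shorter than prices
moving_avg = rolling(prices, window=3, fn=fmean)      # 3-period moving average
volatility = rolling(returns, window=3, fn=stdev)     # rolling std of returns
```

Note that `returns[i]` corresponds to `prices[i + 1]`, so the columns need to be aligned before they are passed to a tree-based learner.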

3.5. Loss Function

3.5.1. Mean Squared Error

3.5.2. Correlation Loss

3.5.3. Sharpe Ratio Loss

3.6. Data Preprocessing

3.6.1. Missing Values

3.6.2. Outliers

3.6.3. Feature Scaling and Technical Indicators

4. Experimental Results

4.1. Root Mean Squared Error

4.2. Correlation Coefficient

4.3. Mean Squared Error

4.4. Performance

5. Conclusion


| Model | RMSE | Correlation Coefficient |
|---|---|---|
| LightGBM | 0.172 | 0.182 |
| LSTM | 0.214 | 0.234 |
| Keras DNN + RNN | 0.261 | 0.245 |
| Keras DNN + LightGBM | 0.315 | 0.324 |
| LSTM + GRU + LR + LightGBM | 0.351 | 0.362 |
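For reference, the two metrics reported in the table can be computed as in the sketch below. It assumes the standard definitions (root mean squared error and the Pearson correlation coefficient), which this excerpt does not spell out; the target and prediction values are hypothetical and unrelated to the reported results.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error; squaring the result gives the MSE."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def pearson(y_true, y_pred):
    """Pearson correlation coefficient between targets and predictions."""
    n = len(y_true)
    mean_t = sum(y_true) / n
    mean_p = sum(y_pred) / n
    cov = sum((t - mean_t) * (p - mean_p) for t, p in zip(y_true, y_pred))
    var_t = sum((t - mean_t) ** 2 for t in y_true)
    var_p = sum((p - mean_p) ** 2 for p in y_pred)
    return cov / math.sqrt(var_t * var_p)


y_true = [0.01, -0.02, 0.03, 0.00]  # hypothetical targets
y_pred = [0.02, -0.01, 0.02, 0.01]  # hypothetical predictions
error = rmse(y_true, y_pred)
corr = pearson(y_true, y_pred)
```

A lower RMSE indicates smaller prediction errors, while a correlation closer to 1 indicates that predictions move more closely with the targets.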

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).