Submitted: 22 September 2024

Posted: 24 September 2024


Abstract

Keywords:

1. Introduction

- Creation of a comprehensive and linguistically diverse dataset that accurately represents the phonetic and dialectal variations of the Kashmiri language, serving as a foundational resource for training and evaluating the recognition system.

- Development of a feature extraction technique that captures both the spectral and temporal characteristics of Kashmiri speech, enhancing the system’s ability to recognize and distinguish the language’s distinctive phonetic patterns.

- Development of a robust spoken Kashmiri recognition system that effectively addresses the phonetic diversity and dialectal variations within the Kashmiri language.

2. Literature Survey

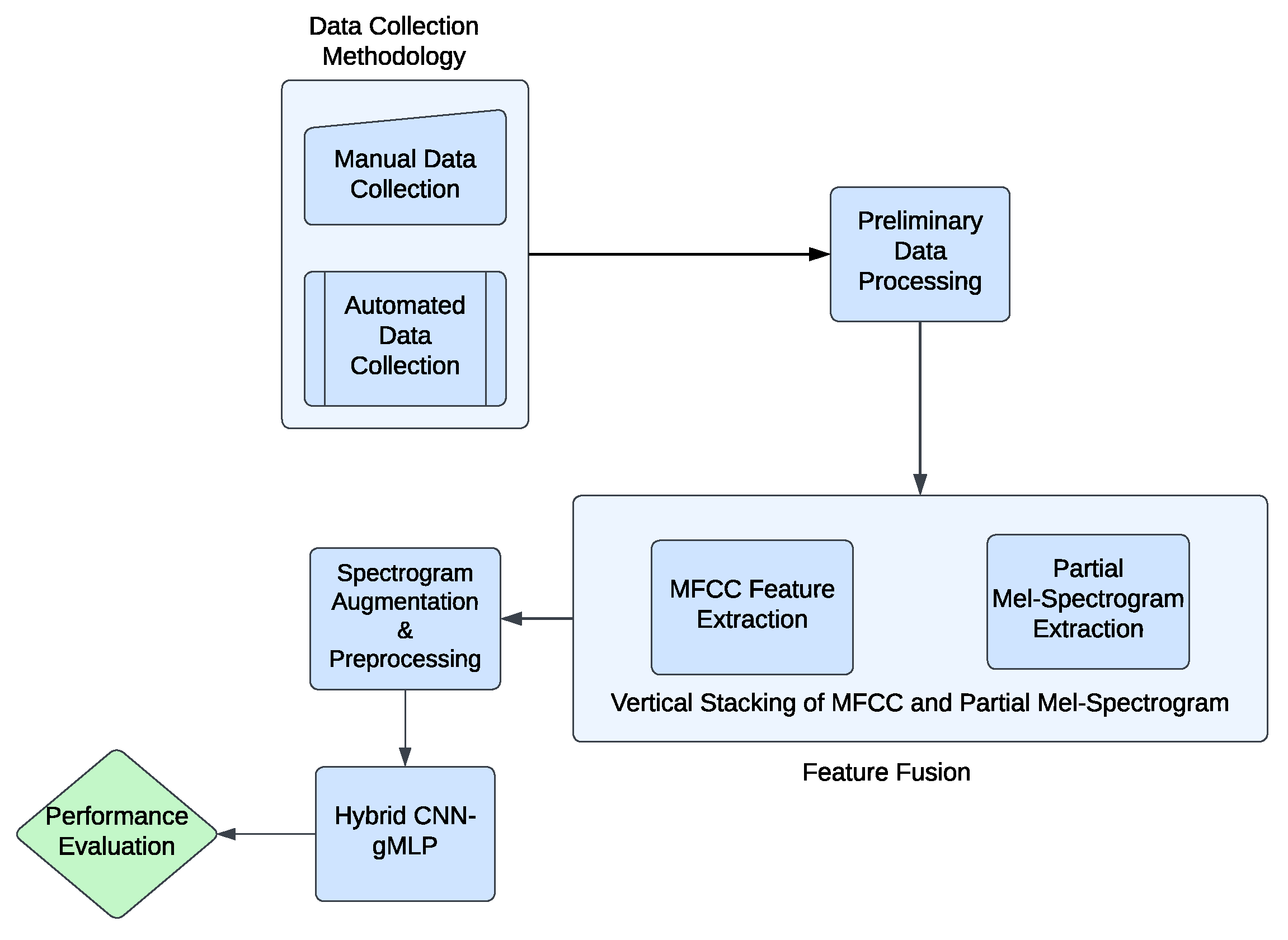

3. Methodology

3.1. Data Collection

3.1.1. Geographical and Demographic Diversity

- North Kashmir (Baramullah, Kupwara): Known for its conservative dialects, this region retains older phonetic features of Kashmiri, thus providing a rich source of traditional linguistic elements [21].

- Central Kashmir (Srinagar, Magam): The variety of Kashmiri spoken in Srinagar is often regarded as the standard dialect, forming the linguistic baseline against which other dialects are compared [22].

- South Kashmir (Pulwama, Shopian): Characterized by softer pronunciations and unique intonations, the dialects here offer a distinct contrast to those of the northern and central regions, contributing to the overall diversity of the dataset [23].

3.1.2. Participant Selection and Data Collection Process

3.1.3. Dataset Overview

3.2. Preliminary Data Analysis and Processing

3.2.1. Zero-Crossing Rate (ZCR)
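Zero-crossing rate is conventionally the fraction of adjacent sample pairs in a frame whose signs differ; noisy or fricative segments score high, voiced segments low. The sketch below is a minimal NumPy illustration of that definition, not the authors' code; the 400-sample frame and 160-sample hop (25 ms and 10 ms at an assumed 16 kHz sampling rate) are illustrative choices.

```python
import numpy as np


def zero_crossing_rate(signal: np.ndarray, frame_length: int = 400, hop_length: int = 160) -> np.ndarray:
    """Per-frame zero-crossing rate: fraction of adjacent sample pairs whose signs differ."""
    # Overlapping frames of shape (n_frames, frame_length); no padding, trailing samples are dropped
    frames = np.lib.stride_tricks.sliding_window_view(signal, frame_length)[::hop_length]
    negative = np.signbit(frames)  # True for negative samples
    # Count sign changes between neighbouring samples, normalised by the number of pairs
    crossings = np.count_nonzero(negative[:, 1:] != negative[:, :-1], axis=1)
    return crossings / (frame_length - 1)
```

Averaging the per-frame values (for example `zero_crossing_rate(audio).mean()`) gives one number per clip, the kind of summary reported in the dataset statistics table; whether the paper framed and averaged its signals exactly this way is not stated.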

3.2.2. Root Mean Square (RMS) Energy
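RMS energy summarises loudness per frame as sqrt(mean(x[n]^2)) over the samples x[n] of that frame. A minimal NumPy sketch follows; as with the zero-crossing example, the frame and hop sizes are assumptions rather than values taken from the paper.

```python
import numpy as np


def rms_energy(signal: np.ndarray, frame_length: int = 400, hop_length: int = 160) -> np.ndarray:
    """Per-frame root-mean-square energy: sqrt(mean(x[n]^2)) over each frame."""
    # Overlapping frames of shape (n_frames, frame_length)
    frames = np.lib.stride_tricks.sliding_window_view(signal, frame_length)[::hop_length]
    # Promote to float64 before squaring to avoid overflow on integer PCM input
    return np.sqrt(np.mean(frames.astype(np.float64) ** 2, axis=1))
```

For a sinusoid of amplitude A the result is A/sqrt(2), which makes the function easy to sanity-check before applying it to recorded clips.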

3.2.3. Spectral Entropy
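Spectral entropy treats each frame's normalised power spectrum as a probability distribution and measures how spread out it is: near 0 for a pure tone, near 1 for white noise. The sketch below uses the Shannon entropy normalised by log2 of the number of frequency bins; the Hann window, frame size, and normalisation are assumptions, since the paper's exact variant is not given here.

```python
import numpy as np


def spectral_entropy(signal: np.ndarray, frame_length: int = 400, hop_length: int = 160,
                     eps: float = 1e-12) -> np.ndarray:
    """Per-frame Shannon entropy of the power spectrum, scaled to [0, 1]."""
    frames = np.lib.stride_tricks.sliding_window_view(signal, frame_length)[::hop_length]
    # Windowed one-sided power spectrum of each frame: (n_frames, frame_length // 2 + 1)
    power = np.abs(np.fft.rfft(frames * np.hanning(frame_length), axis=1)) ** 2
    prob = power / (power.sum(axis=1, keepdims=True) + eps)  # each row sums to ~1
    entropy = -np.sum(prob * np.log2(prob + eps), axis=1)
    return entropy / np.log2(power.shape[1])  # divide by the maximum (uniform) entropy
```

Low-entropy frames tend to be voiced speech and high-entropy frames silence or noise, which is why entropy is also used for endpoint detection [30].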

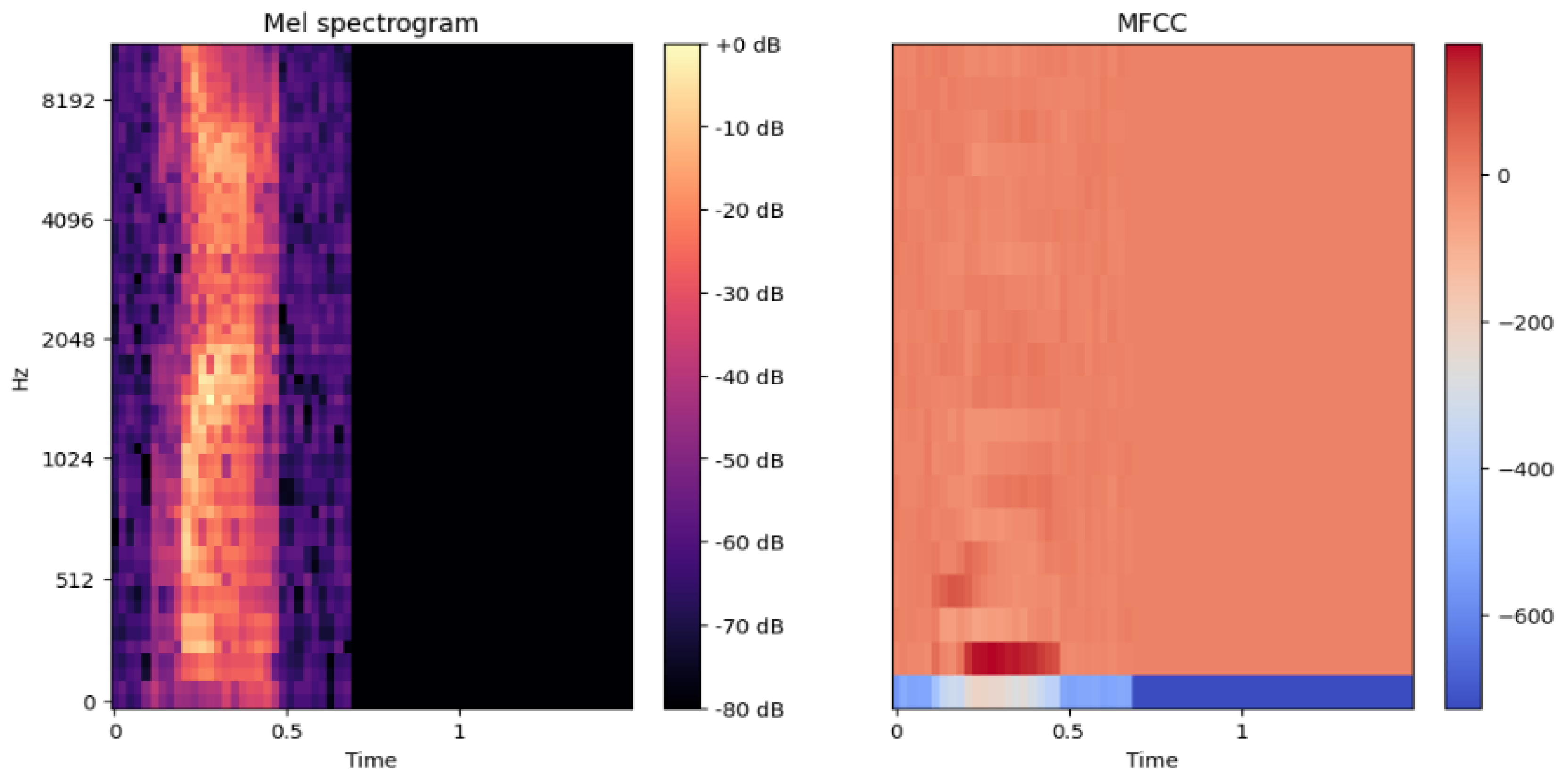

3.3. Feature Extraction

3.3.1. Existing Methods

3.3.2. Challenges and Limitations

3.3.3. Rationale for Our Approach

| Feature Set | Classifier | Accuracy | Precision | Recall |
|---|---|---|---|---|
| MFCC + Partial Mel-Spectrogram | Random Forest | 0.86 | 0.87 | 0.87 |
| MFCC + Partial Mel-Spectrogram | Ridge Classifier | 0.80 | 0.83 | 0.82 |
| MFCC + Partial Mel-Spectrogram | K-Nearest Neighbors | 0.61 | 0.61 | 0.62 |
| MFCC + Partial Mel-Spectrogram | Decision Tree | 0.77 | 0.77 | 0.78 |
| MFCC only | Random Forest | 0.79 | 0.80 | 0.80 |
| Mel-Spectrogram only | Random Forest | 0.75 | 0.77 | 0.77 |
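The table compares the dual feature set against MFCC-only and Mel-spectrogram-only inputs. The sketch below shows one way such a dual feature vector could be assembled in plain NumPy for a classical classifier. Two choices are assumptions, not details from the paper: "partial" Mel-spectrogram is interpreted here as the lowest 20 of 40 log-Mel bands, and frame-level features are mean-pooled over time into one fixed-length vector per clip. The 512-sample frame, 160-sample hop, 13 MFCCs, and 16 kHz sampling rate are likewise illustrative.

```python
import numpy as np


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(sr, n_fft, n_mels):
    """Triangular filters spaced evenly on the (HTK-style) mel scale: (n_mels, n_fft // 2 + 1)."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2), n_mels + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sr).astype(int)
    fb = np.zeros((n_mels, n_fft // 2 + 1))
    for m in range(1, n_mels + 1):
        left, centre, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, centre):  # rising edge
            fb[m - 1, k] = (k - left) / max(centre - left, 1)
        for k in range(centre, right):  # falling edge
            fb[m - 1, k] = (right - k) / max(right - centre, 1)
    return fb


def dual_features(signal, sr=16000, n_fft=512, hop=160, n_mels=40, n_mfcc=13, n_partial=20):
    """Clip-level vector: mean MFCCs concatenated with mean of the lowest n_partial log-Mel bands."""
    frames = np.lib.stride_tricks.sliding_window_view(signal, n_fft)[::hop]
    power = np.abs(np.fft.rfft(frames * np.hanning(n_fft), axis=1)) ** 2 / n_fft
    log_mel = np.log(power @ mel_filterbank(sr, n_fft, n_mels).T + 1e-10)  # (frames, n_mels)
    # Orthonormal DCT-II matrix turns log-Mel energies into cepstral coefficients
    n = np.arange(n_mels)
    dct = np.sqrt(2.0 / n_mels) * np.cos(np.pi / n_mels * (n[None, :] + 0.5) * np.arange(n_mfcc)[:, None])
    dct[0] /= np.sqrt(2.0)
    mfcc = log_mel @ dct.T  # (frames, n_mfcc)
    partial_mel = log_mel[:, :n_partial]  # hypothetical reading of "partial": low bands only
    return np.concatenate([mfcc.mean(axis=0), partial_mel.mean(axis=0)])
```

With the defaults this yields a 33-dimensional vector per clip that could be passed to a classifier such as scikit-learn's `RandomForestClassifier`; in practice, librosa's `librosa.feature.mfcc` and `librosa.feature.melspectrogram` provide tested versions of the two spectral steps.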

3.4. Deep Learning Models

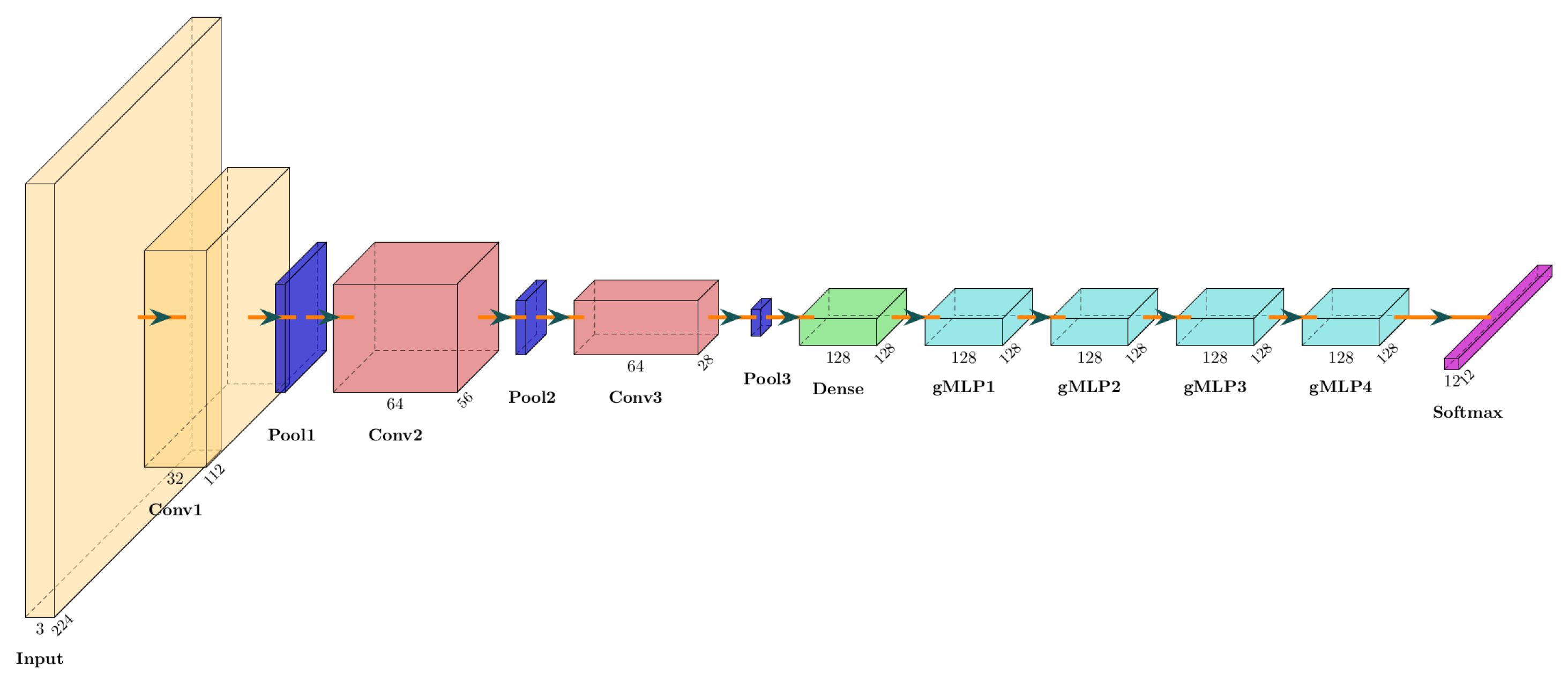

3.4.1. Convolutional Neural Networks (CNNs)

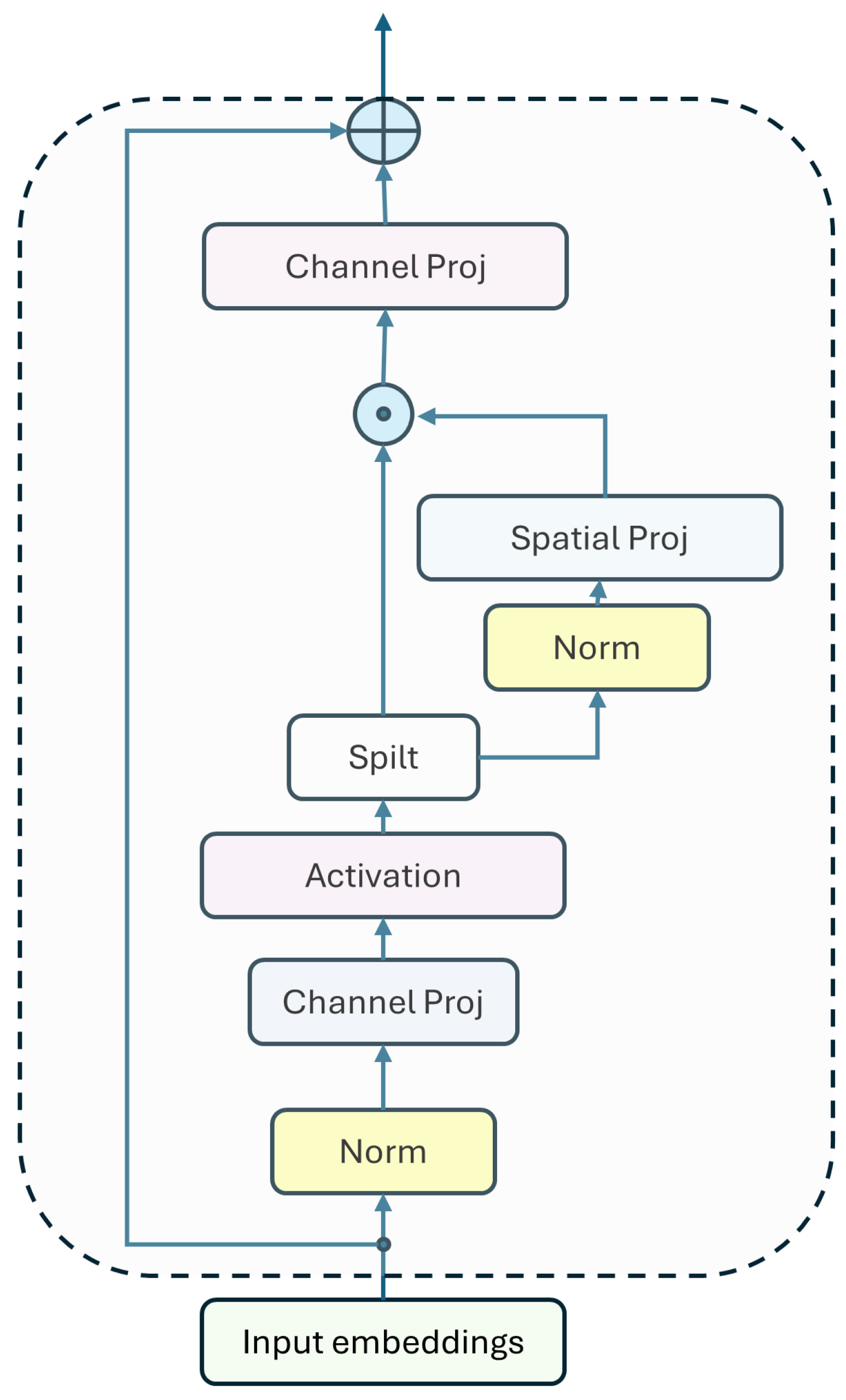

3.4.2. Gated Multi-Layer Perceptrons (gMLPs)
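Following the gMLP block described by Liu et al. [6], each layer projects channels up with a GELU, splits the result in half, and gates one half with a learned linear projection of the other half taken across the sequence (time) axis, the spatial gating unit; a residual connection wraps the block. The NumPy forward pass below is a reading aid rather than the paper's implementation: it omits the learnable LayerNorm scale and shift, and the sizes are illustrative.

```python
import numpy as np


def layer_norm(x, eps=1e-5):
    # Normalise over the channel axis (no learnable scale/shift in this sketch)
    return (x - x.mean(axis=-1, keepdims=True)) / np.sqrt(x.var(axis=-1, keepdims=True) + eps)


def gelu(x):
    # tanh approximation of GELU
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))


def init_gmlp_params(seq_len, d_model, d_ffn, rng):
    return {
        "U": rng.normal(0.0, 0.02, (d_model, d_ffn)),       # channel up-projection
        "V": rng.normal(0.0, 0.02, (d_ffn // 2, d_model)),  # channel down-projection
        # Spatial projection starts near zero with bias one, so the gate is ~identity at init
        "W": rng.normal(0.0, 1e-3, (seq_len, seq_len)),
        "b": np.ones((seq_len, 1)),
    }


def gmlp_block(x, p):
    """x: (seq_len, d_model) -> (seq_len, d_model)."""
    z = gelu(layer_norm(x) @ p["U"])      # (seq_len, d_ffn)
    z1, z2 = np.split(z, 2, axis=-1)      # two halves of the channels
    gate = p["W"] @ layer_norm(z2) + p["b"]  # spatial gating unit: mixes information across time steps
    return x + (z1 * gate) @ p["V"]       # gate, project back, residual connection
```

The only cross-time interaction happens in `W @ ...`, which is what lets a stack of these blocks model global context without self-attention.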

3.4.3. Hybrid CNN-gMLP Model
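Beyond the four gMLP layers listed in Section 4, this section does not spell out the hybrid's layer configuration. The NumPy forward pass below is therefore only a sketch under stated assumptions: one 1-D convolution over time (kernel 3, 64 channels, ReLU) with max-pooling as the local CNN stage, four gMLP blocks as the global stage, mean pooling over time, and a 12-way softmax matching the 12-word vocabulary. All sizes, the random initialisation, and the 100-frame input are hypothetical, and training (Adam, dropout) is omitted.

```python
import numpy as np


def layer_norm(x, eps=1e-5):
    return (x - x.mean(-1, keepdims=True)) / np.sqrt(x.var(-1, keepdims=True) + eps)


def gelu(x):
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))


def conv1d_same(x, kernel, bias):
    """x: (T, C_in), kernel: (K, C_in, C_out) -> (T, C_out), zero 'same' padding over time."""
    k = kernel.shape[0]
    xp = np.pad(x, ((k // 2, k - 1 - k // 2), (0, 0)))
    windows = np.lib.stride_tricks.sliding_window_view(xp, k, axis=0)  # (T, C_in, K)
    return np.einsum("tck,kco->to", windows, kernel) + bias


def max_pool_time(x, size=2):
    t = (x.shape[0] // size) * size
    return x[:t].reshape(-1, size, x.shape[1]).max(axis=1)


def gmlp_block(x, p):
    z = gelu(layer_norm(x) @ p["U"])
    z1, z2 = np.split(z, 2, axis=-1)
    return x + (z1 * (p["W"] @ layer_norm(z2) + p["b"])) @ p["V"]


def init_params(n_feat, d_model=64, d_ffn=128, seq_len=50, n_layers=4, n_classes=12, seed=0):
    # seq_len must equal the time length after pooling (here 100 input frames // 2)
    rng = np.random.default_rng(seed)
    return {
        "conv_k": rng.normal(0.0, 0.1, (3, n_feat, d_model)),
        "conv_b": np.zeros(d_model),
        "gmlp": [{"U": rng.normal(0.0, 0.02, (d_model, d_ffn)),
                  "V": rng.normal(0.0, 0.02, (d_ffn // 2, d_model)),
                  "W": rng.normal(0.0, 1e-3, (seq_len, seq_len)),
                  "b": np.ones((seq_len, 1))} for _ in range(n_layers)],
        "out_w": rng.normal(0.0, 0.02, (d_model, n_classes)),
        "out_b": np.zeros(n_classes),
    }


def forward(features, params):
    """features: (T, n_feat) frame-level features -> (n_classes,) class probabilities."""
    h = np.maximum(conv1d_same(features, params["conv_k"], params["conv_b"]), 0.0)  # CNN: local patterns
    h = max_pool_time(h, 2)  # (T // 2, d_model)
    for p in params["gmlp"]:
        h = gmlp_block(h, p)  # gMLP: global mixing across time
    logits = layer_norm(h).mean(axis=0) @ params["out_w"] + params["out_b"]
    e = np.exp(logits - logits.max())  # numerically stable softmax
    return e / e.sum()
```

Because each spatial projection `W` is a fixed `seq_len × seq_len` matrix, a design like this requires every clip to be padded or cropped to the same number of frames; that fixed-length constraint comes from gMLP itself, not from this sketch.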

4. Results and Discussion

| Parameter | Value |
|---|---|
| Learning rate | 0.000235 |
| Learning rate schedule | Step decay |
| Number of gMLP layers | 4 |
| Optimizer | Adam |
| Dropout rate | 0.378036 |
| Epochs | 50 |
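The table lists step decay but not its drop factor or step size. The sketch below assumes, purely for illustration, that the rate halves every 10 epochs, starting from the reported 0.000235 over the 50 training epochs; `drop` and `epochs_per_drop` are hypothetical values.

```python
def step_decay(epoch: int, initial_lr: float = 0.000235, drop: float = 0.5,
               epochs_per_drop: int = 10) -> float:
    """Learning rate for a 0-indexed epoch: multiply by `drop` every `epochs_per_drop` epochs."""
    return initial_lr * drop ** (epoch // epochs_per_drop)


# Rate used at each of the 50 epochs under these assumed settings
schedule = [step_decay(e) for e in range(50)]
```

If the model is trained in PyTorch, `torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.5)` applies the same rule when stepped once per epoch.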

4.1. Comparative Analysis and Validation

4.2. Limitations

- Dataset Size and Diversity: The dataset used in this study, although representative of the phonetic and dialectal diversity of the Kashmiri language, is limited to 12 commonly used words. This narrow vocabulary may not reflect the full range of complexities in real-world speech patterns and interactions. Moreover, the dataset contains a total of 840 samples, which may limit the generalization of the model to more complex speech recognition tasks involving extended vocabulary and spontaneous speech.

- Dialectal Variations: Although efforts were made to capture dialectal diversity from different regions of Kashmir (North, Central, and South Kashmir), there may still be unaccounted variations in pronunciation, intonation, and accent that could affect the model’s performance. The current model may not generalize well to speakers from less-represented dialects or regions.

- Environmental Conditions: The dataset was collected in controlled environments to ensure high audio quality. However, in practical applications, speech recognition systems are often used in noisy or variable acoustic environments. The model’s robustness in such conditions has not yet been fully evaluated, and additional noise-robust techniques, such as advanced denoising methods, may be needed to maintain its performance in real-world settings.

- Feature Extraction Methodology: The dual feature extraction approach, combining MFCCs and partial Mel-spectrograms, captured the phonetic nuances of Kashmiri speech better than either feature alone (with a Random Forest classifier, accuracy rose from 0.79 with MFCCs only and 0.75 with Mel-spectrograms only to 0.86). However, alternative feature extraction techniques, such as wavelet transforms or representations from self-supervised models like HuBERT, could potentially enhance performance further, especially in noisy or resource-constrained environments.

- Computational Complexity: While the hybrid CNN-gMLP model balances local and global feature extraction effectively, the computational cost associated with this approach could limit its deployment on resource-constrained devices, such as mobile phones or embedded systems. Optimizing the model for efficiency, without compromising accuracy, remains a challenge.

- Bias and Fairness: Although participant selection aimed to capture a diverse range of age, gender, and regional backgrounds, there is potential for demographic bias in the dataset. The performance of the model across different demographic groups (e.g., gender or age) has not been explicitly evaluated and may reveal disparities that need to be addressed.

- Application to Continuous Speech: The model was trained and tested on isolated word recognition, which differs significantly from continuous speech recognition tasks. Future research should explore extending the model’s capabilities to handle continuous speech, where word boundaries are less defined and contextual dependencies play a more critical role.
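Since robustness to noisy conditions is listed above as not yet evaluated, one common way to probe it is to re-score held-out clips after mixing in noise at controlled signal-to-noise ratios (SNRs). The sketch below is a minimal version of that idea, assuming white Gaussian noise; a realistic evaluation would more likely mix in recorded background noise.

```python
import numpy as np


def add_noise_at_snr(clean, snr_db, rng=None):
    """Return clean + noise, with white Gaussian noise scaled to the requested SNR in dB."""
    rng = np.random.default_rng() if rng is None else rng
    noise = rng.standard_normal(clean.shape)
    signal_power = np.mean(clean ** 2)
    noise_power = np.mean(noise ** 2)
    # Choose scale so that 10 * log10(signal_power / scaled_noise_power) == snr_db
    scale = np.sqrt(signal_power / (noise_power * 10 ** (snr_db / 10)))
    return clean + scale * noise
```

Sweeping `snr_db` over, for example, 20, 10, and 0 dB and recording accuracy at each level would give a simple robustness curve for the trained model.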

5. Conclusion

Funding

Data Availability Statement

Conflicts of Interest

References

1. Team, G.R. Google USM: Scaling Automatic Speech Recognition Beyond 100 Languages. arXiv preprint arXiv:2303.01037, 2023.

2. Singh, A.; Kadyan, V.; Kumar, M.; Bassan, N. A comprehensive survey on automatic speech recognition using neural networks. Multimedia Tools and Applications 2020, 79, 3673–3704.

3. Koul, O.N. Kashmiri: A Descriptive Grammar; Routledge, 2009.

4. Wali, K.; Koul, O.N. Kashmiri: A Cognitive-Descriptive Grammar; Routledge, 1997.

5. Besacier, L.; Barnard, E.; Karpov, A.; Schultz, T. Automatic Speech Recognition for Under-Resourced Languages: A Survey. Speech Communication 2014, 56, 85–100.

6. Liu, H.; Dai, Z.; So, D.R.; Le, Q.V. Pay Attention to MLPs. Advances in Neural Information Processing Systems 2021, 34, 9204–9215.

7. O’Neill, J.; Carson-Berndsen, J. Challenges in ASR Systems for Underrepresented Dialects. Proceedings of the Annual Conference on Computational Linguistics, 2023, pp. 233–241.

8. Adams, O.; Neubig, G.; Cohn, T.; Bird, S. Learning a Lexicon and Translation Model from Phoneme Lattices. Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), 2017, pp. 2379–2389.

9. Hannun, A.; Case, C.; Casper, J.; Catanzaro, B.; Diamos, G.; Elsen, E.; Prenger, R. Deep Speech: Scaling up end-to-end speech recognition. arXiv preprint arXiv:1412.5567, 2014.

10. Aggarwal, A.; Srivastava, A.; Agarwal, A.; Chahal, N.; Singh, D.; Alnuaim, A.; Alhadlaq, A.; Lee, H.N. Two-Way Feature Extraction for Speech Emotion Recognition Using Deep Learning. Sensors 2022, 22, 2378.

11. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; Uszkoreit, J.; Houlsby, N. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. International Conference on Learning Representations (ICLR), 2020.

12. Zhao, J.; Shi, G.X.; Wang, G.B.; Zhang, W. Automatic Speech Recognition for Low-Resource Languages: The Thuee Systems for the IARPA Openasr20 Evaluation. Proceedings of the IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), 2021, pp. 113–119.

13. Kipyatkova, I.; Kagirov, I. Analytical Review of Methods for Solving Data Scarcity Issues Regarding Elaboration of Automatic Speech Recognition Systems for Low-Resource Languages. Information Technologies and Computing Systems 2022, 21, 2–15.

14. Zhao, J.; Zhang, W. Improving Automatic Speech Recognition Performance for Low-Resource Languages with Self-Supervised Models. IEEE Journal of Selected Topics in Signal Processing 2022, 16, 1105–1115.

15. Min, Z.; Ge, Q.; Li, Z.; Weinan, E. MAC: A Unified Framework Boosting Low Resource Automatic Speech Recognition. arXiv preprint arXiv:2302.03498, 2023.

16. Bartelds, M.; San, N.; McDonnell, B.; Jurafsky, D.; Wieling, M.B. Making More of Little Data: Improving Low-Resource Automatic Speech Recognition Using Data Augmentation. arXiv preprint arXiv:2305.10951, 2023.

17. Yeo, J.H.; Kim, M.; Watanabe, S.; Ro, Y. Visual Speech Recognition for Languages with Limited Labeled Data Using Automatic Labels from Whisper. Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 2023, pp. 6650–6654.

18. Pratama, R.S.A.; Amrullah, A. Analysis of Whisper Automatic Speech Recognition Performance on Low Resource Language. Pilar Nusa Mandiri Journal of Computer and Information Science 2024, 20, 1–8.

19. Bekarystankyzy, A.; Mamyrbayev, O.; Anarbekova, T. Integrated End-to-End Automatic Speech Recognition for Agglutinative Languages. Proceedings of the ACM on Human-Computer Interaction 2024, 8, 1–19.

20. Sullivan, T.; Harding, E. Domain Shift in Speech Dialect Identification: Challenges and Solutions. Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 2023, pp. 510–517.

21. Bhatt, R.M. The Kashmiri Language; Vol. 46, Studies in Natural Language and Linguistic Theory, Springer, 1999.

22. Koka, N.A. Social Distribution of Linguistic Variants in Kashmiri Speech. Academy Publication 2016.

23. Zampieri, M.; Nakov, P. Phonetic Variation in Dialects; Cambridge University Press, 2021.

24. Rather, S.N.; Singh, N. Phonetic and Phonological Variations in Kashmiri Language Dialects. International Journal of Linguistics 2017, 9, 12–20.

25. Kachru, B.B. Kashmiri. In The Indo-Aryan Languages; Cardona, G.; Jain, D., Eds.; Routledge, 2003; pp. 895–930.

26. Bhat, M.A.; Hassan, S. Prosodic Features of Kashmiri Dialect of Maraaz: A Comparative Study. IRE Journals 2020.

27. Open Speech Corpus Contributors. Open Speech Corpus Tool. https://github.com/open-speech-corpus/open-speech-corpus-tool, 2023. Accessed: 2024-08-12.

28. Rabiner, L.; Juang, B.H. Fundamentals of Speech Recognition; Prentice-Hall, Inc.: Englewood Cliffs, NJ, USA, 1993.

29. Journals, I. Zero Crossing Rate and Energy of the Speech Signal of Devanagari Script. IOSR Journals 2015.

30. Shen, J.L.; Hung, J.W.; Lee, L.S. Robust entropy-based endpoint detection for speech recognition in noisy environments. Proc. 5th International Conference on Spoken Language Processing (ICSLP 1998), 1998, paper 0232.

31. Jurafsky, D.; Martin, J.H. Speech and Language Processing: An Introduction to Natural Language Processing, Computational Linguistics, and Speech Recognition, 2nd ed.; Prentice-Hall, Inc.: Upper Saddle River, NJ, USA, 2009.

32. Wang, D.; Brown, G.J. Computational Auditory Scene Analysis: Principles, Algorithms, and Applications; IEEE Press/Wiley, 2016.

33. Ganie, H.A.; Hasnain, S.K. Computational Linguistics and the Kashmiri Language: Issues and Challenges. International Journal of Computer Applications 2015, 128, 1–6.

34. Winursito, A.; Hidayat, R.; Bejo, A. Improvement of MFCC feature extraction accuracy using PCA in Indonesian speech recognition. 2018 International Conference on Information and Communications Technology (ICOIACT), 2018.

35. Hidayat, R. Frequency Domain Analysis of MFCC Feature Extraction in Children’s Speech Recognition System. Infotel Journal 2022, 14, 28–36.

36. Setianingrum, A.; Hulliyah, K.; Amrilla, M.F. Speech Recognition of Sundanese Dialect Using Convolutional Neural Network Method with Mel-Spectrogram Feature Extraction. 2023 8th International Conference on Information Technology, Information Systems and Mechatronics (CITSM), 2023.

37. Permana, S.D.H.; Rahman, T.K.A. Improved Feature Extraction for Sound Recognition Using Combined Constant-Q Transform (CQT) and Mel Spectrogram for CNN Input. 2023 International Conference on Mechatronics, Robotics, and Automation (ICMERALDA), 2023.

38. Li, Q.; Yang, Y.; Lan, T.; Zhu, H.; Wei, Q.; Qiao, F.; Liu, X.; Yang, H. MSP-MFCC: Energy-Efficient MFCC Feature Extraction Method With Mixed-Signal Processing Architecture for Wearable Speech Recognition Applications. IEEE Access 2020.

39. Amodei, D.; Ananthanarayanan, S.; Anubhai, R.; Bai, J.; Battenberg, E.; Case, C.; Walker, J.; Zhu, Z. Deep Speech 2: End-to-End Speech Recognition in English and Mandarin. Proceedings of The 33rd International Conference on Machine Learning, 2016, pp. 173–182.

40. Joshi, D.; Pareek, J.; Ambatkar, P. Comparative Study of MFCC and Mel Spectrogram for Raga Classification Using CNN. Indian Journal of Science and Technology, 2023.

41. Hafiz, N.F.; Mashohor, S.; Shazril, M.H.S.E.M.A.; Rasid, M.F.A.; Ali, A. Comparison of Mel Frequency Cepstral Coefficient (MFCC) and Mel Spectrogram Techniques to Classify Industrial Machine Sound. Proceedings of the 16th International Conference on Software, Knowledge, Information Management, and Applications (SKIMA).

42. Abayomi-Alli, O.O.; Damaševičius, R.; Qazi, A.; Adedoyin-Olowe, M.; Misra, S. Data Augmentation and Deep Learning Methods in Sound Classification: A Systematic Review. Electronics 2022, 11, 3795.

43. Qazi, A.; Damaševičius, R.; Abayomi-Alli, O.O. Combining Transformer, Convolutional Neural Network, and Long Short-Term Memory Architectures: A Novel Ensemble Learning Technique That Leverages Multi-Acoustic Features for Speech Emotion Recognition in Distance Education Classrooms. Applied Sciences 2022, 12, 1–21.

44. Liu, H.; Dai, Z.; So, D.; Le, Q.V. Pay Attention to MLPs. Advances in Neural Information Processing Systems 2021, 34, 9204–9215.

45. Yu, P.; Artetxe, M.; Ott, M.; Shleifer, S.; Gong, H.; Stoyanov, V.; Li, X. Efficient Language Modeling with Sparse all-MLP. arXiv preprint arXiv:2203.06850, 2022.

46. Passricha, V.; Aggarwal, R. A Hybrid of Deep CNN and Bidirectional LSTM for Automatic Speech Recognition. Journal of Intelligent Systems 2018, 27, 555–563.

47. Wubet, Y.A.; Lian, K.Y. Voice Conversion Based Augmentation and a Hybrid CNN-LSTM Model for Improving Speaker-Independent Keyword Recognition on Limited Datasets. IEEE Access 2022, 10, 114222–114234.

48. Atila, O.; Şengür, A. Attention Guided 3D CNN-LSTM Model for Accurate Speech-Based Emotion Recognition. Applied Acoustics 2021, 175, 108260.

49. John Lorenzo Bautista, Y. Lee, H.S. Speech Emotion Recognition Based on Parallel CNN-Attention Networks with Multi-Fold Data Augmentation. Electronics 2023, 11, 3935.

50. Hu, K.; Sainath, T.N.; Pang, R.; Prabhavalkar, R. Deliberation Model Based Two-Pass End-To-End Speech Recognition. IEEE/ACM Transactions on Audio, Speech, and Language Processing 2020, 28, 123–134.

51. Li, G.; Sun, Z.; Hu, W.; Cheng, G.; Qu, Y. Position-aware relational transformer for knowledge graph embedding. IEEE Transactions on Neural Networks and Learning Systems 2023.

52. Yang, C.H.H.; Gu, Y.; Liu, Y.C.; Ghosh, S.; Bulyko, I.; Stolcke, A. Generative Speech Recognition Error Correction With Large Language Models and Task-Activating Prompting. IEEE/ACM Transactions on Audio, Speech, and Language Processing 2023, 31, 234–245.

53. Bai, Y.; Yi, J.; Tao, J.; Tian, Z.; Wen, Z.; Zhang, S. Fast End-to-End Speech Recognition Via Non-Autoregressive Models and Cross-Modal Knowledge Transferring From BERT. IEEE/ACM Transactions on Audio, Speech, and Language Processing 2021, 29, 456–467.

54. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

55. Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. Journal of Machine Learning Research 2014, 15, 1929–1958.

56. Powers, D.M. Evaluation: from precision, recall and F-measure to ROC, informedness, markedness and correlation. arXiv preprint arXiv:2010.16061, 2020.

57. Goutte, C.; Gaussier, E. A Probabilistic Interpretation of Precision, Recall and F-Score, with Implication for Evaluation. Advances in Information Retrieval. ECIR 2005. Lecture Notes in Computer Science, vol 3408. Springer, Berlin, Heidelberg, 2005, pp. 345–359.

58. Baevski, A.; Schneider, S.; Auli, M. Wav2Vec 2.0: A Framework for Self-Supervised Learning of Speech Representations. arXiv preprint arXiv:2006.11477, 2020.

59. Chan, W.; Jaitly, N.; Le, Q.; Vinyals, O. Listen, Attend and Spell: A Neural Network for Large Vocabulary Conversational Speech Recognition. 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2016, pp. 4960–4964.

| Kashmiri (Koshur / کٲشُر) | Pronunciation | English | Voice Samples |
|---|---|---|---|
| آ | āh | Yes | 70 |
| اَڈسا | aḍsā | OK | 70 |
| بَند | band | Closed | 70 |
| بۄہ | bē | Me | 70 |
| خَبَر | khabar | News | 70 |
| کیاہ | k’ah | What | 70 |
| نَہ | na | No | 70 |
| نٔو | nov (m.) | New | 70 |
| ٹھیک | theek | Well/Okay | 70 |
| وارَے | va:ray | Well | 70 |
| وچھ | vuch | See | 70 |
| یلہٕ | ye:le | Open | 70 |

| Metric | Min Value | Max Value |
|---|---|---|
| Average Duration (s) | 0.574 | 1.536 |
| Zero-Crossing Rate | 0.0515 | 0.3160 |
| RMS Energy | 0.0156 | 0.1411 |

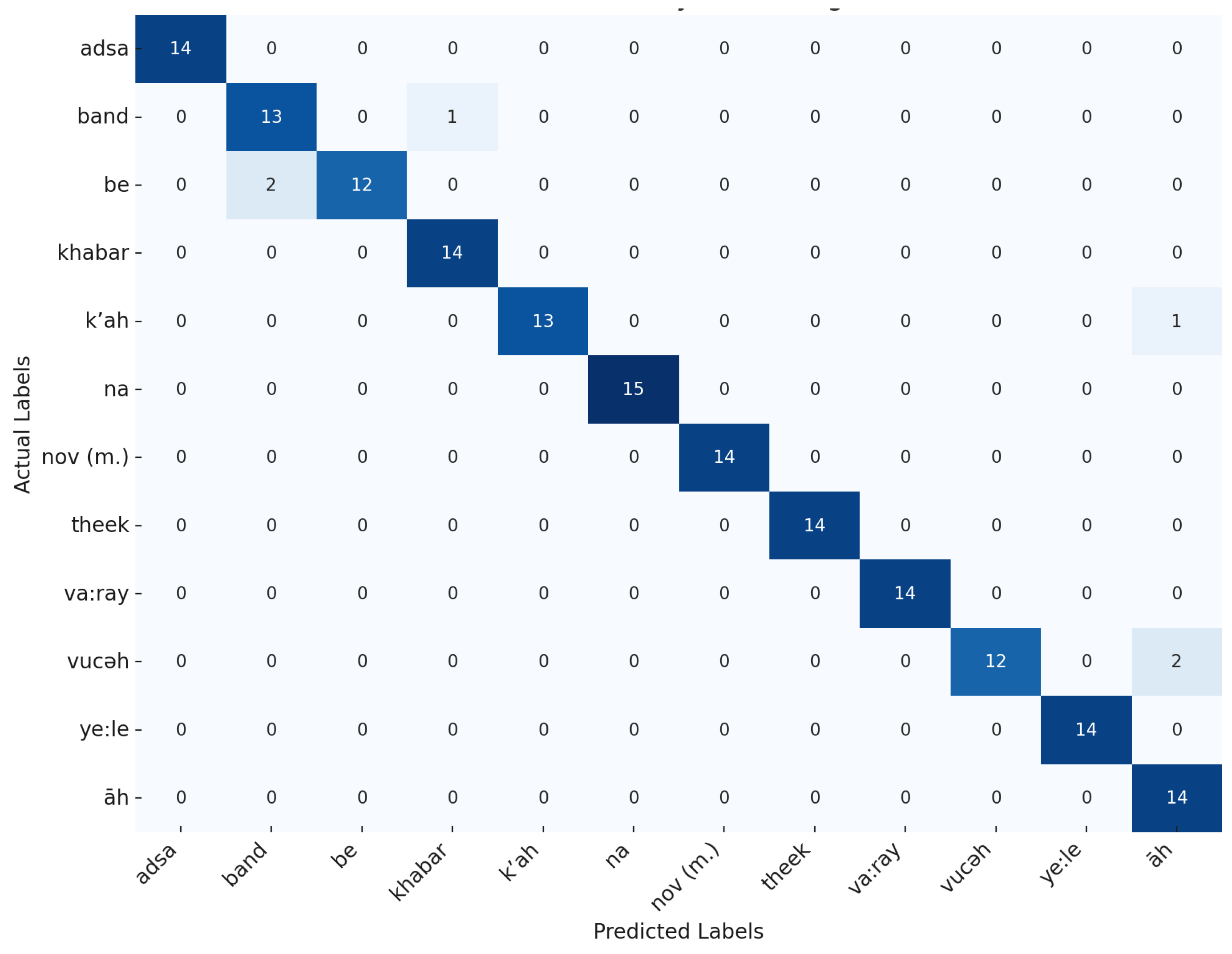

| Word (class) | Precision | Recall | F1-Score |
|---|---|---|---|
| adsa | 1.00 | 1.00 | 1.00 |
| āh | 0.82 | 1.00 | 0.90 |
| nov (m.) | 1.00 | 1.00 | 1.00 |
| khabar | 0.93 | 1.00 | 0.97 |
| be | 1.00 | 0.86 | 0.92 |
| vuch | 1.00 | 0.86 | 0.92 |
| na | 1.00 | 1.00 | 1.00 |
| band | 0.87 | 0.93 | 0.90 |
| va:ray | 1.00 | 1.00 | 1.00 |
| k’ah | 1.00 | 0.93 | 0.96 |
| ye:le | 1.00 | 1.00 | 1.00 |
| theek | 1.00 | 1.00 | 1.00 |

| Metric | Precision | Recall | F1-Score |
|---|---|---|---|
| Macro Avg | 0.97 | 0.96 | 0.96 |
| Weighted Avg | 0.97 | 0.96 | 0.96 |
| Accuracy | | | 0.96 |
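The summary rows can be cross-checked against the per-class table: the macro average is the unweighted mean over the 12 words, while the weighted average weights each word by its number of test samples. The sketch below recomputes both from the reported per-class values; the equal per-class support used for the weighted average is an assumption, as the support counts are not reported in this section.

```python
# Per-class (precision, recall, F1) copied from the per-class results table
per_class = {
    "adsa": (1.00, 1.00, 1.00),
    "āh": (0.82, 1.00, 0.90),
    "nov (m.)": (1.00, 1.00, 1.00),
    "khabar": (0.93, 1.00, 0.97),
    "be": (1.00, 0.86, 0.92),
    "vuch": (1.00, 0.86, 0.92),
    "na": (1.00, 1.00, 1.00),
    "band": (0.87, 0.93, 0.90),
    "va:ray": (1.00, 1.00, 1.00),
    "k’ah": (1.00, 0.93, 0.96),
    "ye:le": (1.00, 1.00, 1.00),
    "theek": (1.00, 1.00, 1.00),
}


def macro_average(scores):
    """Unweighted mean of each metric across classes."""
    n = len(scores)
    return tuple(sum(s[i] for s in scores.values()) / n for i in range(3))


def weighted_average(scores, support):
    """Mean of each metric weighted by each class's (assumed) number of test samples."""
    total = sum(support.values())
    return tuple(sum(scores[c][i] * support[c] for c in scores) / total for i in range(3))


macro_p, macro_r, macro_f1 = macro_average(per_class)
```

With these inputs the macro averages come out to about 0.968, 0.965, and 0.964, consistent with the reported 0.97, 0.96, and 0.96 given that the per-class values are themselves rounded. When every class has the same support, the weighted average equals the macro average, which matches the identical Macro Avg and Weighted Avg rows.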

| Model | Architecture | Dataset Used | Accuracy | Precision | Recall | F1-Score | WER | Unique Features |
|---|---|---|---|---|---|---|---|---|
| Hybrid CNN-gMLP | CNN + gMLP (4 layers) | Kashmiri Speech Dataset | 96% | 0.97 | 0.96 | 0.96 | N/A | Dual feature extraction (MFCC + Mel-Spectrogram), local and global feature capture, robust to the phonetic diversity of the Kashmiri language. |
| CNN + LSTM Hybrid | CNN + LSTM | TIMIT | 91.3% | 0.90 | 0.89 | 0.89 | N/A | Local feature extraction via CNN combined with sequential modeling by LSTM, effective in handling phonetic variations in speech data [46]. |
| Deep Speech (RNN) | RNN-based end-to-end | Various speech datasets | N/A | N/A | N/A | N/A | 10.55% | End-to-end speech recognition, large-scale training with parallelization for scalability, robust handling of continuous speech [9]. |
| Wav2Vec (CNN) | CNN for pre-training | LibriSpeech | 95.5% | N/A | N/A | N/A | 8.5% | Unsupervised pre-training with contrastive loss to improve robustness on noisy and limited data [58]. |
| Listen, Attend, and Spell | Attention-based neural net | Large Vocabulary Dataset | N/A | N/A | N/A | N/A | 13.1% | Attention mechanisms for large vocabulary speech recognition, efficient handling of conversational speech [59]. |
| Hybrid CNN-RNN Emotion Recognition | CNN + RNN (LSTM/GRU) | Speech Emotion Dataset | 89.2% | 0.87 | 0.88 | 0.87 | N/A | Captures local features and temporal dependencies, well-suited for emotional and contextual speech analysis [48]. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).