Submitted: 26 August 2024

Posted: 28 August 2024

Abstract

Keywords:

1. Introduction

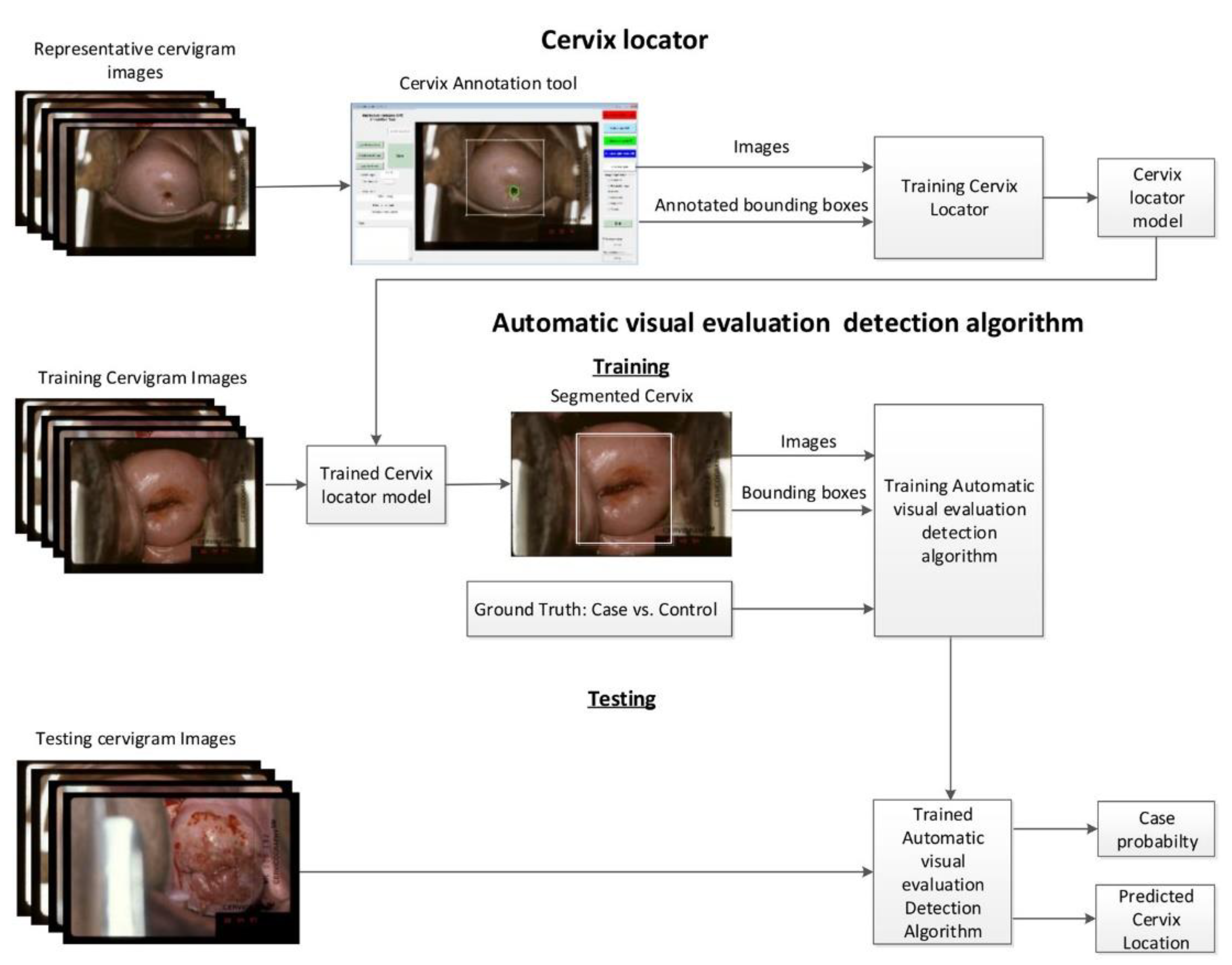

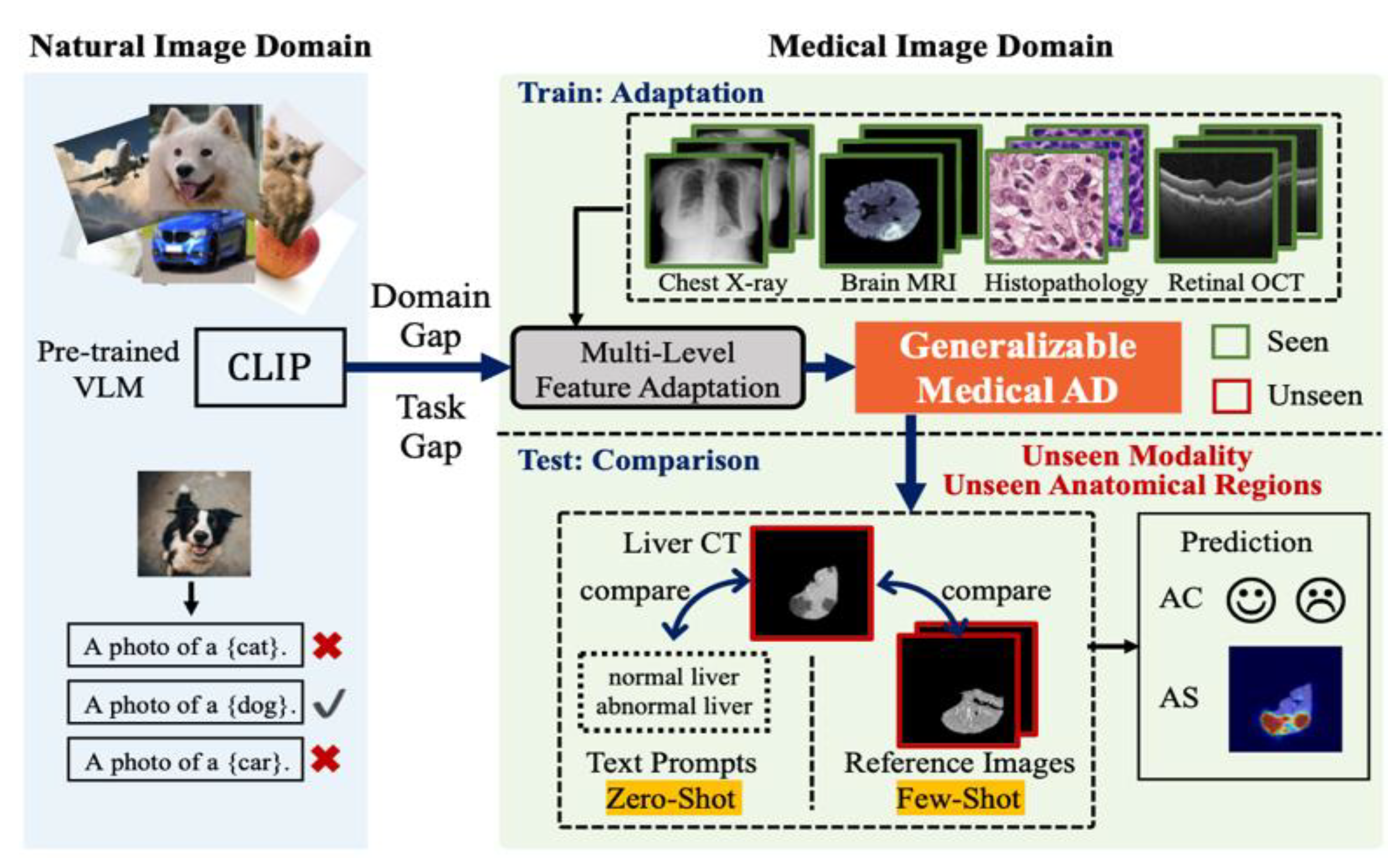

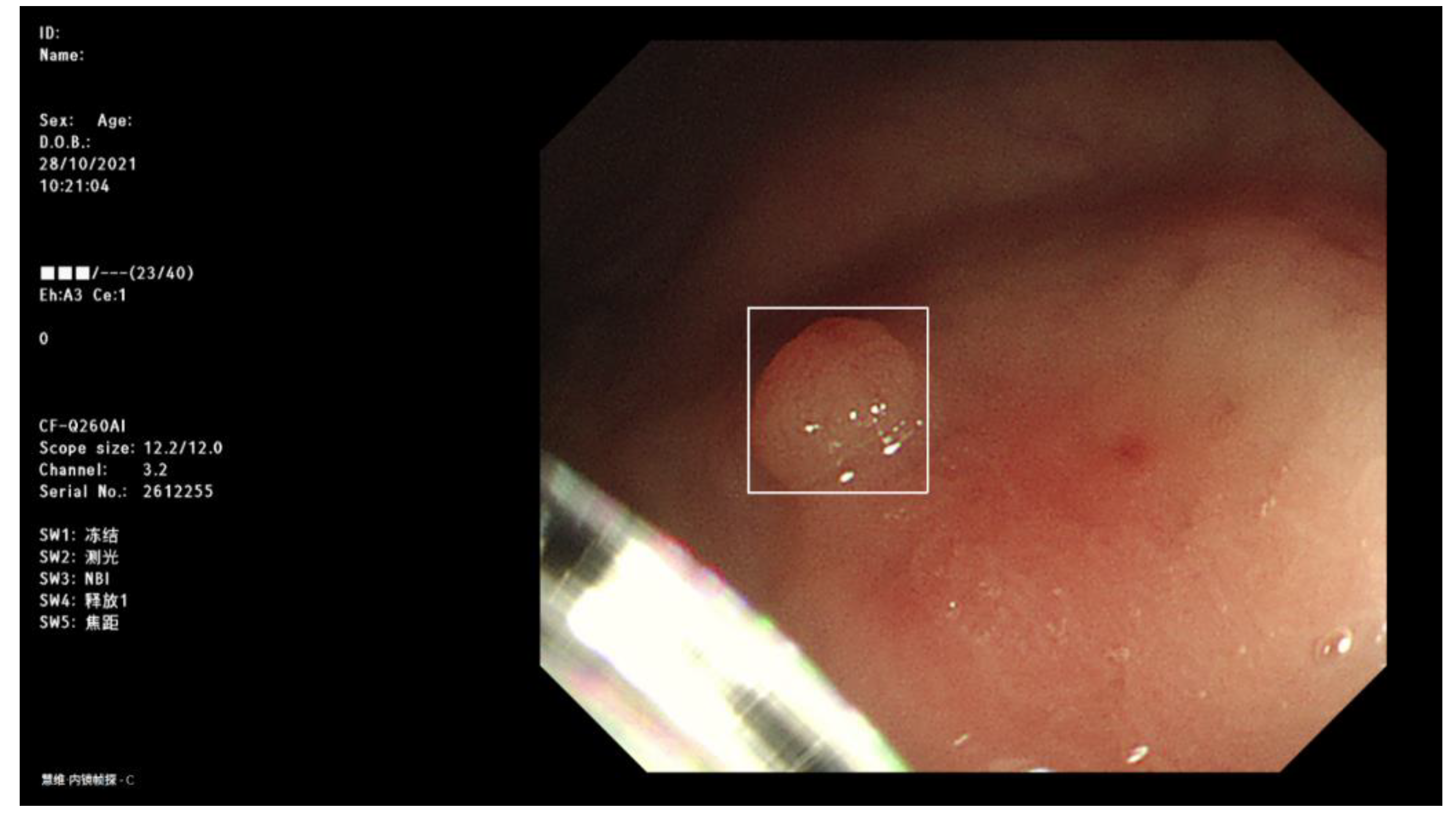

2. Computer Vision Technology and Medical Image Detection

2.1. Current Incidence of Cervical Cancer

2.2. Computer Vision Technology Assists in Medical Imaging Diagnosis

3. Computer Vision Technology in the Medical Field

3.1. Detection of Disease Mutation

3.2. Pathological Image Segmentation

3.3. Pathological Image Registration

3.4. Three-Dimensional Modeling and Simulation Based on Pathological Images

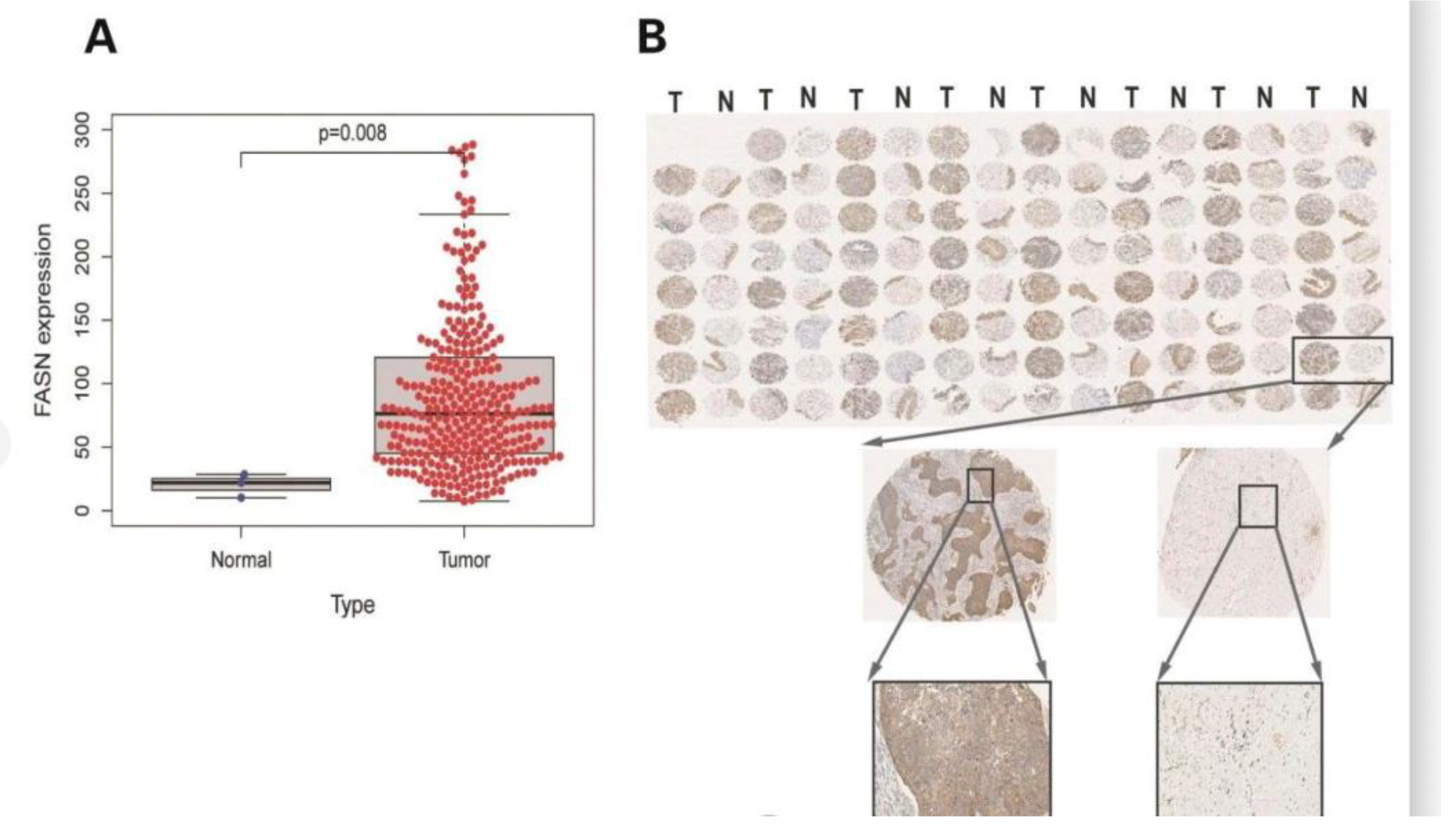

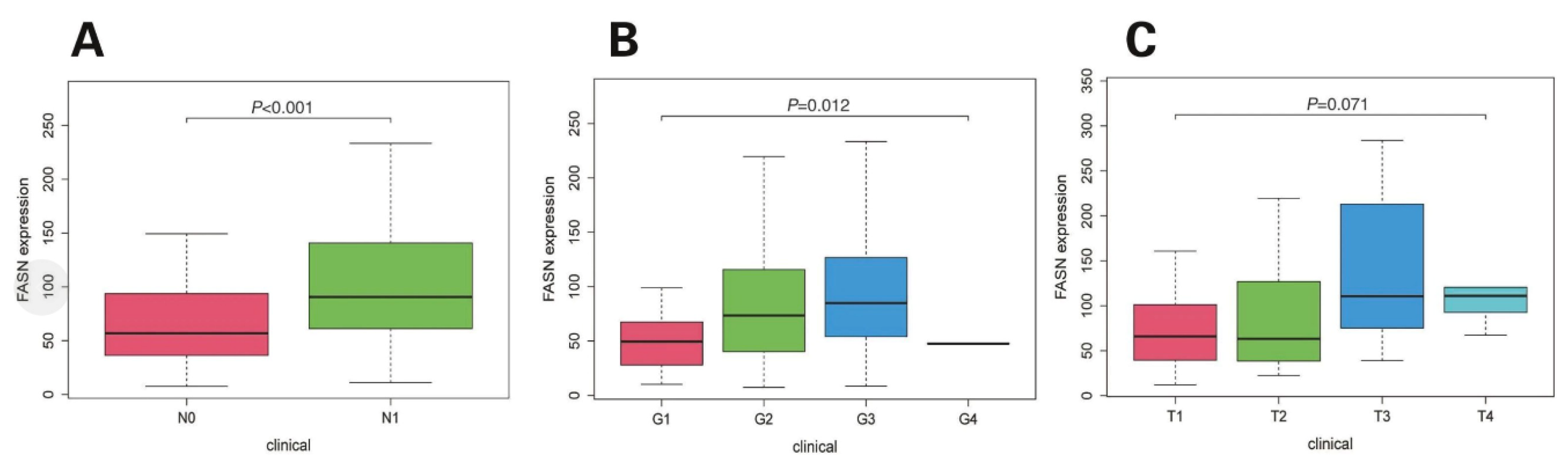

4. Immunohistochemical Analysis of FASN Expression in Cervical Cancer

4.1. Role of FASN in Cervical Cancer

4.2. Immunohistochemical Analysis of FASN in Cervical Cancer

4.3. Experimental Status

4.4. Discussion and Conclusion

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).