Submitted: 19 August 2024

Posted: 27 August 2024


Abstract

Keywords:

1. Introduction

2. Materials and Methods

2.1. Materials

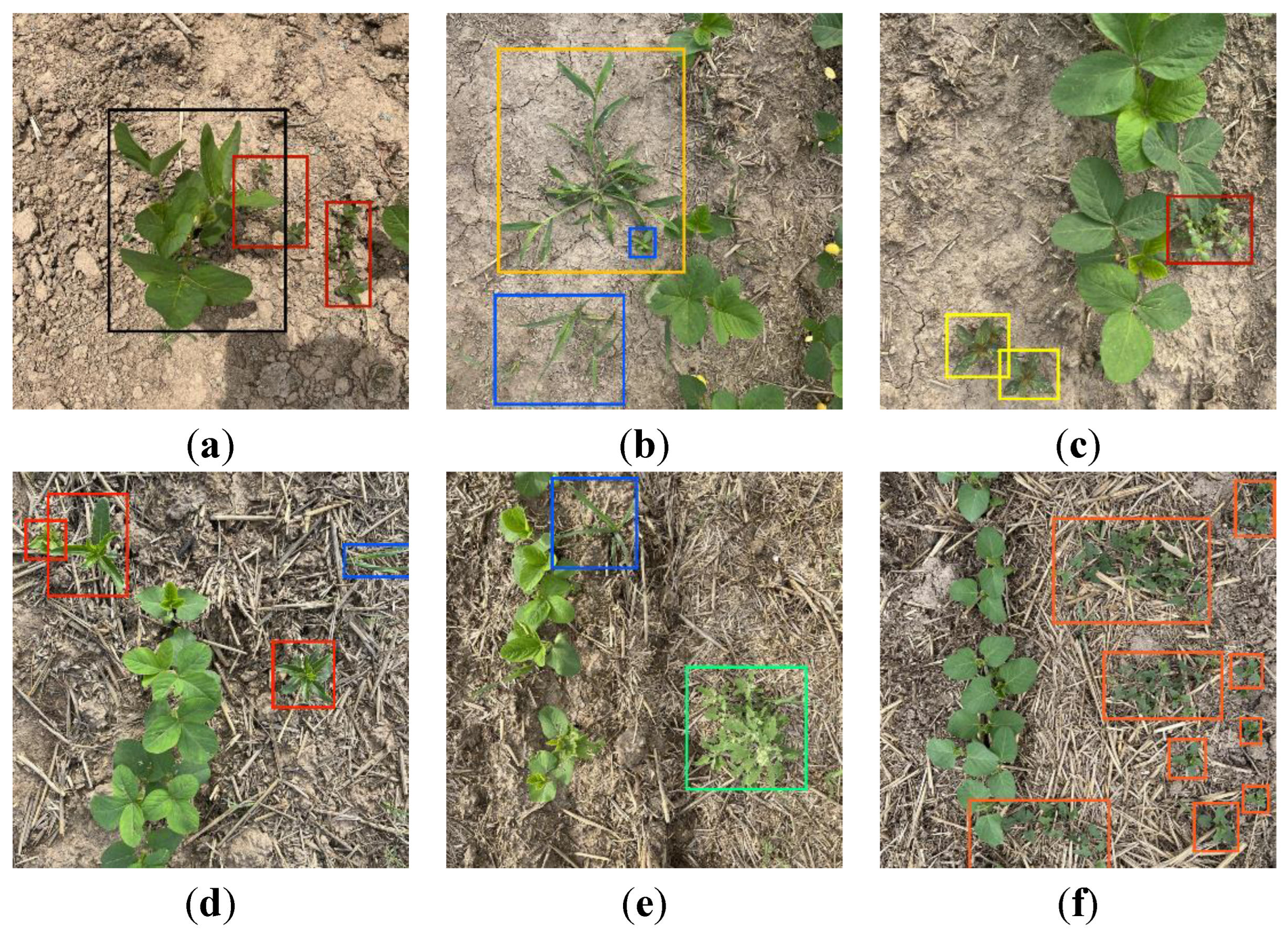

2.1.1. Data Acquisition

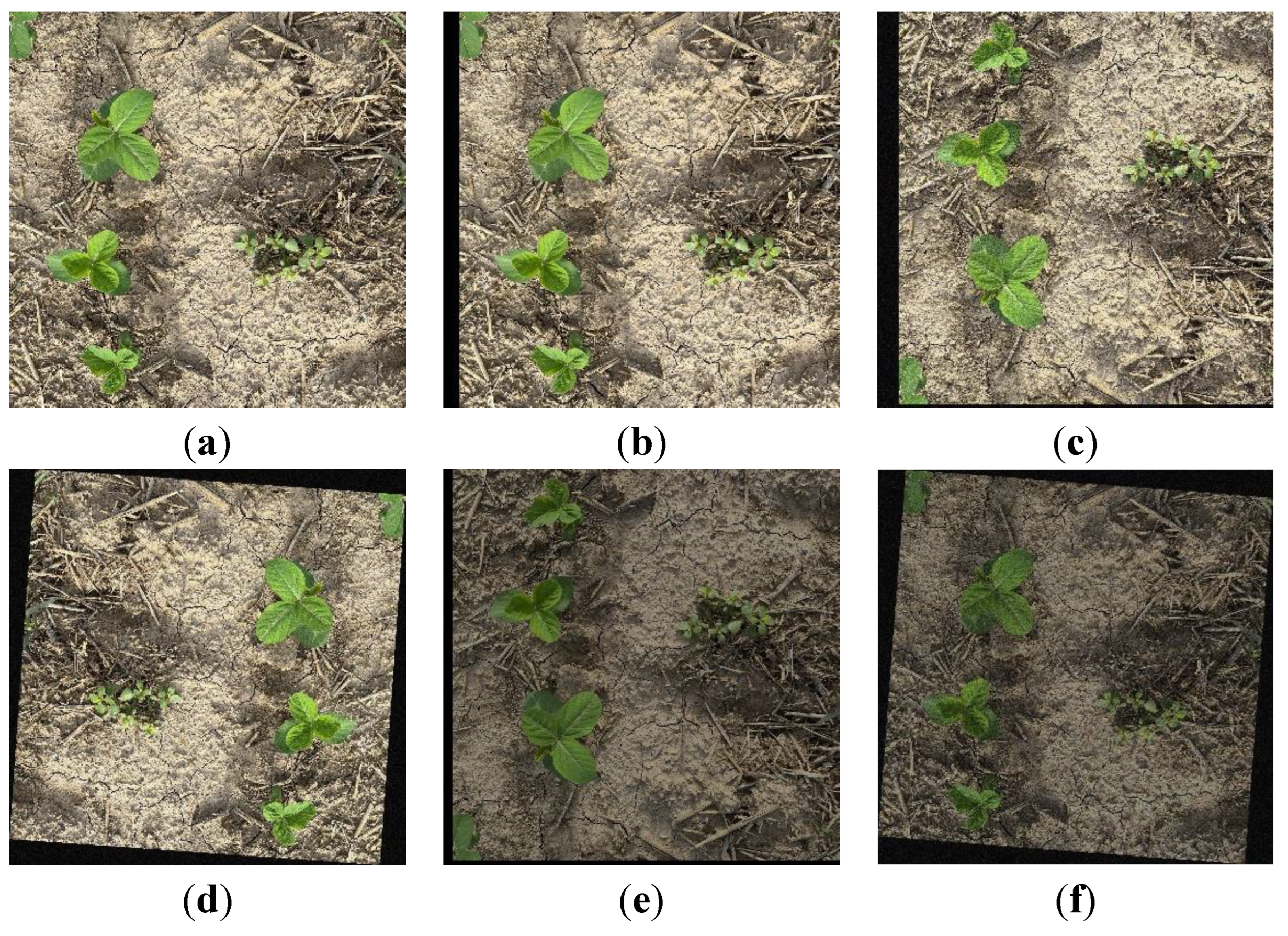

2.1.2. Data Pre-Processing

1. Shift Parameter
2. Rotation Parameter
3. Brightness Coefficient
4. Image Noise Addition
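The four pre-processing operations listed above can be sketched in plain Python as follows. This is a minimal, hypothetical illustration rather than the authors' code. It assumes grayscale images stored as nested lists with values in [0, 255] and boxes given as (x_min, y_min, x_max, y_max) in pixels. The parameter values in the usage lines are placeholders, because the ranges actually used are not stated in this section. For detection data, geometric transforms must move the labelled boxes as well as the pixels; only the box side of shift and rotation is shown, and pixel resampling is omitted.

```python
import math
import random


def clip_box(box, width, height):
    """Clip an (x_min, y_min, x_max, y_max) box to the image bounds."""
    x0, y0, x1, y1 = box
    return (min(max(x0, 0.0), width), min(max(y0, 0.0), height),
            min(max(x1, 0.0), width), min(max(y1, 0.0), height))


def shift_box(box, dx, dy, width, height):
    """Shift: translate a box by (dx, dy) pixels, then clip it to the image."""
    x0, y0, x1, y1 = box
    return clip_box((x0 + dx, y0 + dy, x1 + dx, y1 + dy), width, height)


def rotate_box(box, angle_deg, width, height):
    """Rotation: rotate the four corners about the image centre and return their
    axis-aligned envelope (which can be looser than the object itself)."""
    cx, cy = width / 2.0, height / 2.0
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    x0, y0, x1, y1 = box
    xs, ys = [], []
    for x, y in ((x0, y0), (x1, y0), (x0, y1), (x1, y1)):
        xs.append(cx + (x - cx) * cos_a - (y - cy) * sin_a)
        ys.append(cy + (x - cx) * sin_a + (y - cy) * cos_a)
    return clip_box((min(xs), min(ys), max(xs), max(ys)), width, height)


def adjust_brightness(image, coeff):
    """Brightness: multiply every pixel by coeff and clip to [0, 255]."""
    return [[min(max(p * coeff, 0.0), 255.0) for p in row] for row in image]


def add_gaussian_noise(image, sigma, rng):
    """Noise: add zero-mean Gaussian noise with std sigma, clipped to [0, 255]."""
    return [[min(max(p + rng.gauss(0.0, sigma), 0.0), 255.0) for p in row]
            for row in image]


# Hypothetical example values; the paper's parameter ranges are not given here.
rng = random.Random(0)
image = [[120.0, 200.0], [30.0, 250.0]]
print(shift_box((10, 10, 50, 50), 20, -15, 640, 640))
print(rotate_box((0, 0, 10, 20), 90, 100, 100))
print(adjust_brightness(image, 1.2))
print(add_gaussian_noise(image, 5.0, rng))
```

Taking the axis-aligned envelope of the rotated corners is the simplest way to keep boxes axis-aligned, at the cost of looser boxes for large rotation angles.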

2.2. Methods

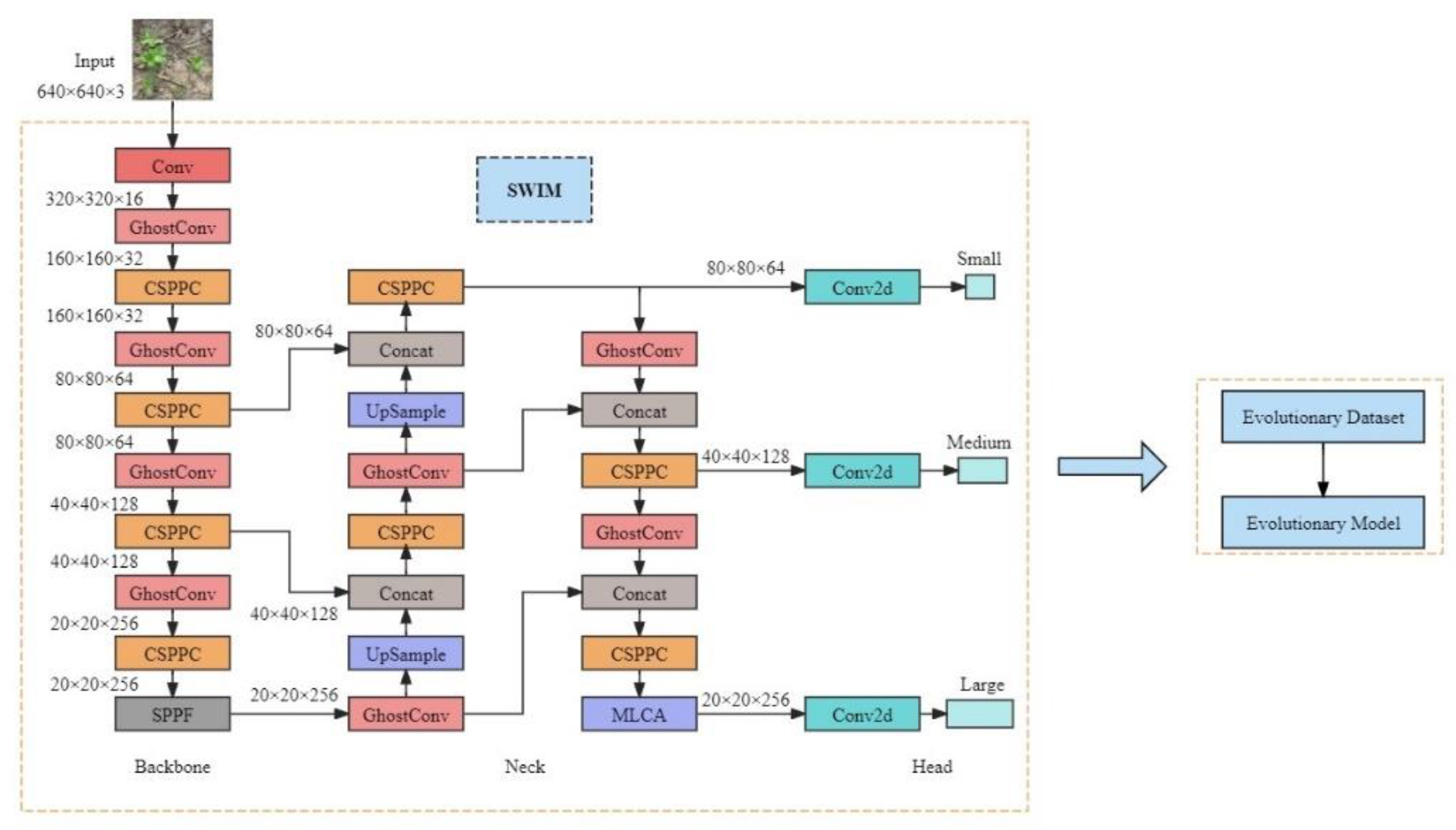

2.2.1. Design of a Computational Complexity-Driven Model for Soybean Seedling and Weed Identification

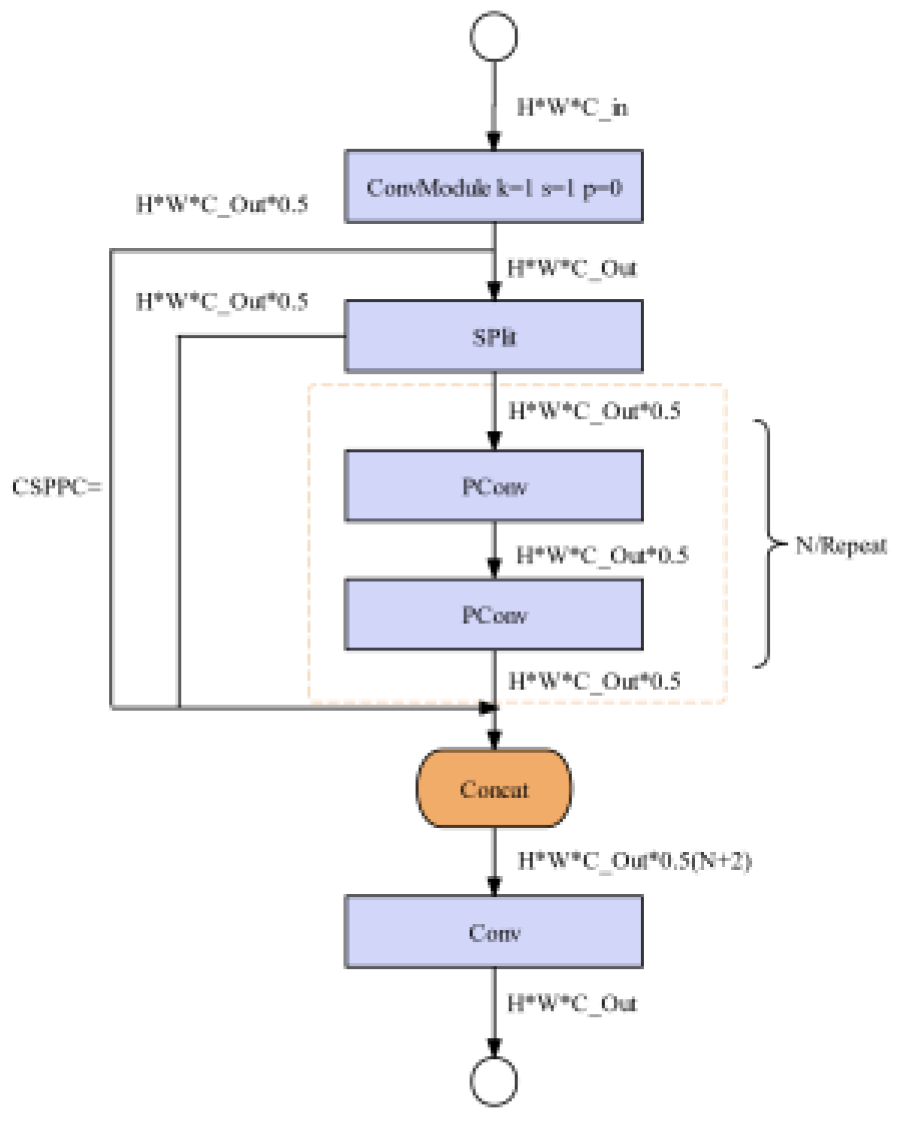
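The cost argument behind the lightweight modules named in this subsection can be made concrete by counting parameters and multiply-accumulate operations (MACs). The sketch below compares a standard convolution with a Ghost module (a primary convolution plus cheap depthwise operations, following GhostNet) and with a partial convolution (PConv, following FasterNet). That CSPPC is built on PConv is an assumption inferred from the module name; the exact configuration is not given here. Stride 1 with same padding, no bias, and the example layer sizes are also assumptions; BN and activation costs are ignored, and some toolkits report FLOPs as twice the MAC count.

```python
import math


def conv_cost(h, w, c_in, c_out, k):
    """Standard k x k convolution (stride 1, same padding, no bias).
    Returns (parameters, multiply-accumulates) for an h x w output map."""
    params = k * k * c_in * c_out
    return params, h * w * params


def ghost_cost(h, w, c_in, c_out, k, s=2, d=3):
    """Ghost module: a primary k x k conv produces m = ceil(c_out / s) intrinsic maps,
    then a d x d depthwise 'cheap' operation produces s - 1 ghost maps per intrinsic map."""
    m = math.ceil(c_out / s)
    primary = k * k * c_in * m
    cheap = d * d * (s - 1) * m
    params = primary + cheap
    return params, h * w * params


def pconv_cost(h, w, c, k, ratio=4):
    """Partial convolution: a regular k x k conv over only c_p = c // ratio channels;
    the remaining channels are passed through unchanged at no cost."""
    c_p = c // ratio
    params = k * k * c_p * c_p
    return params, h * w * params


# Hypothetical layer: 80 x 80 feature map, 64 -> 64 channels, 3 x 3 kernels.
std_params, std_macs = conv_cost(80, 80, 64, 64, 3)
ghost_params, ghost_macs = ghost_cost(80, 80, 64, 64, 3)
pconv_params, pconv_macs = pconv_cost(80, 80, 64, 3)
print(f"standard: {std_params} params, {std_macs / 1e6:.1f} M MACs")
print(f"ghost:    {ghost_params} params, {std_macs / ghost_macs:.2f}x cheaper")
print(f"pconv:    {pconv_params} params, {std_macs / pconv_macs:.1f}x cheaper")
```

With s = 2 the Ghost module roughly halves the cost of the layer, while PConv with a 1/4 channel ratio reduces the convolution itself to 1/16 of a full convolution, which is why such modules suit a design driven by parameter and GFLOP budgets.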

- Computational Complexity-driven CSPPC Module

- 2.

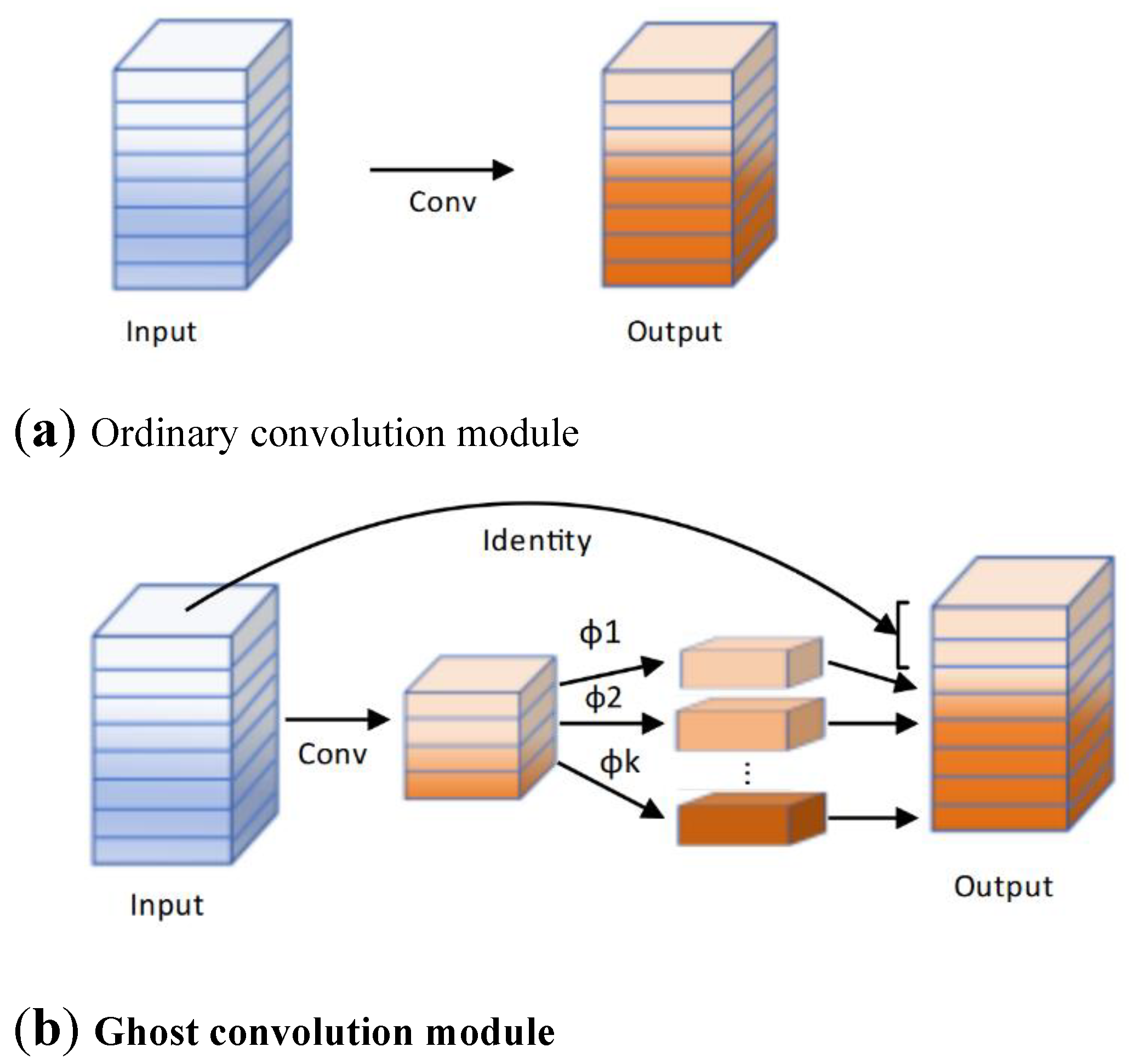

- Computational Complexity-driven Ghost Module

- 3.

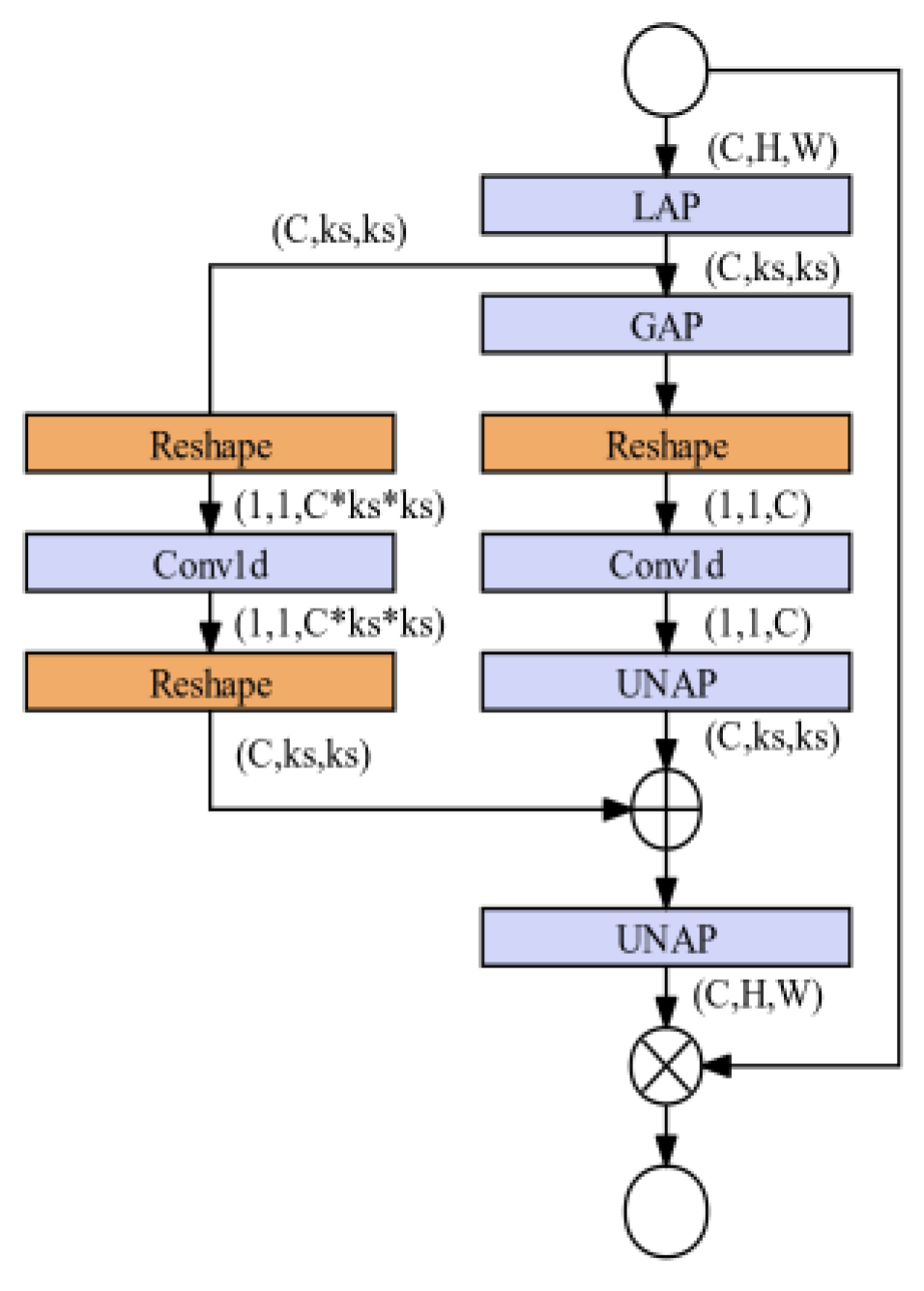

- MLCA Attention Mechanism

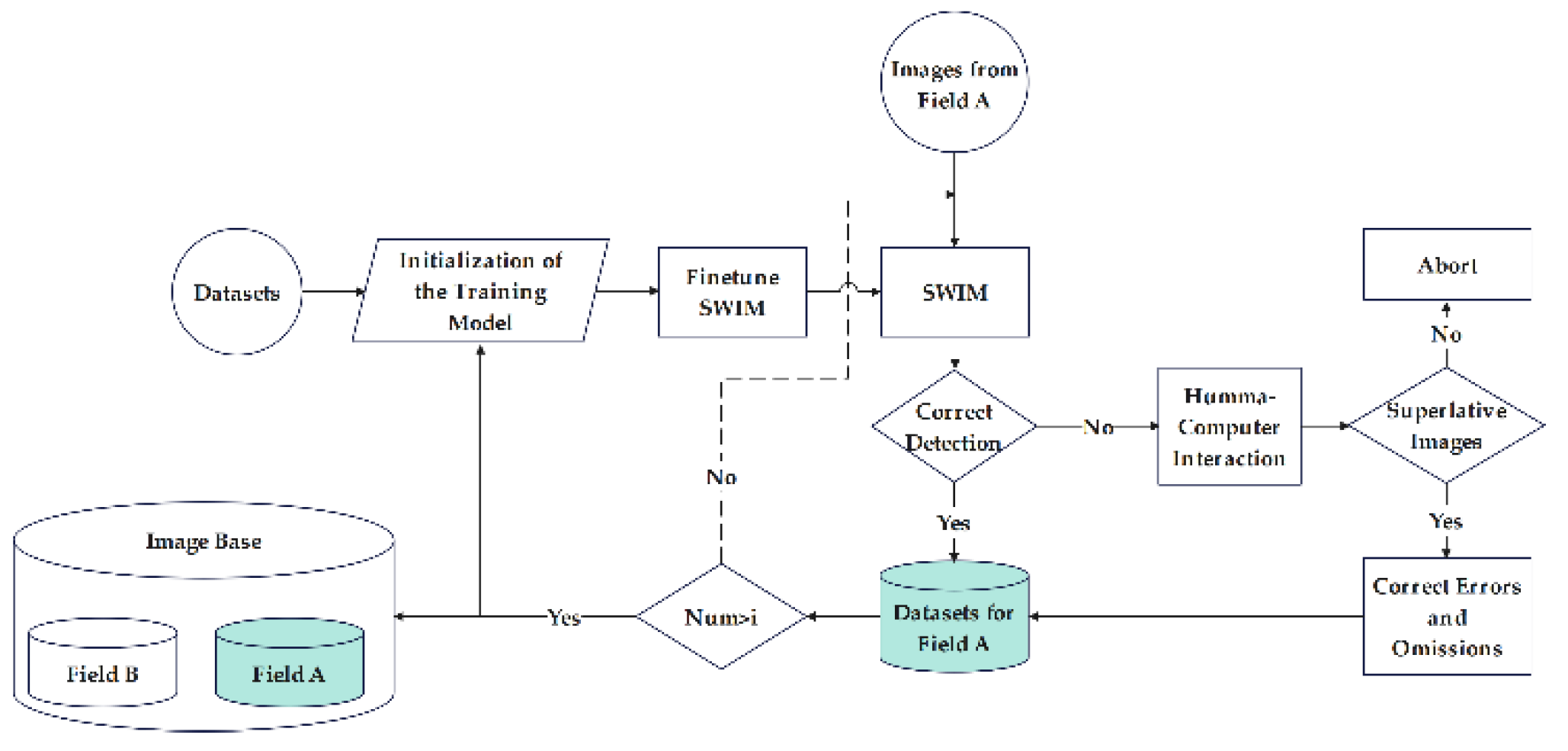

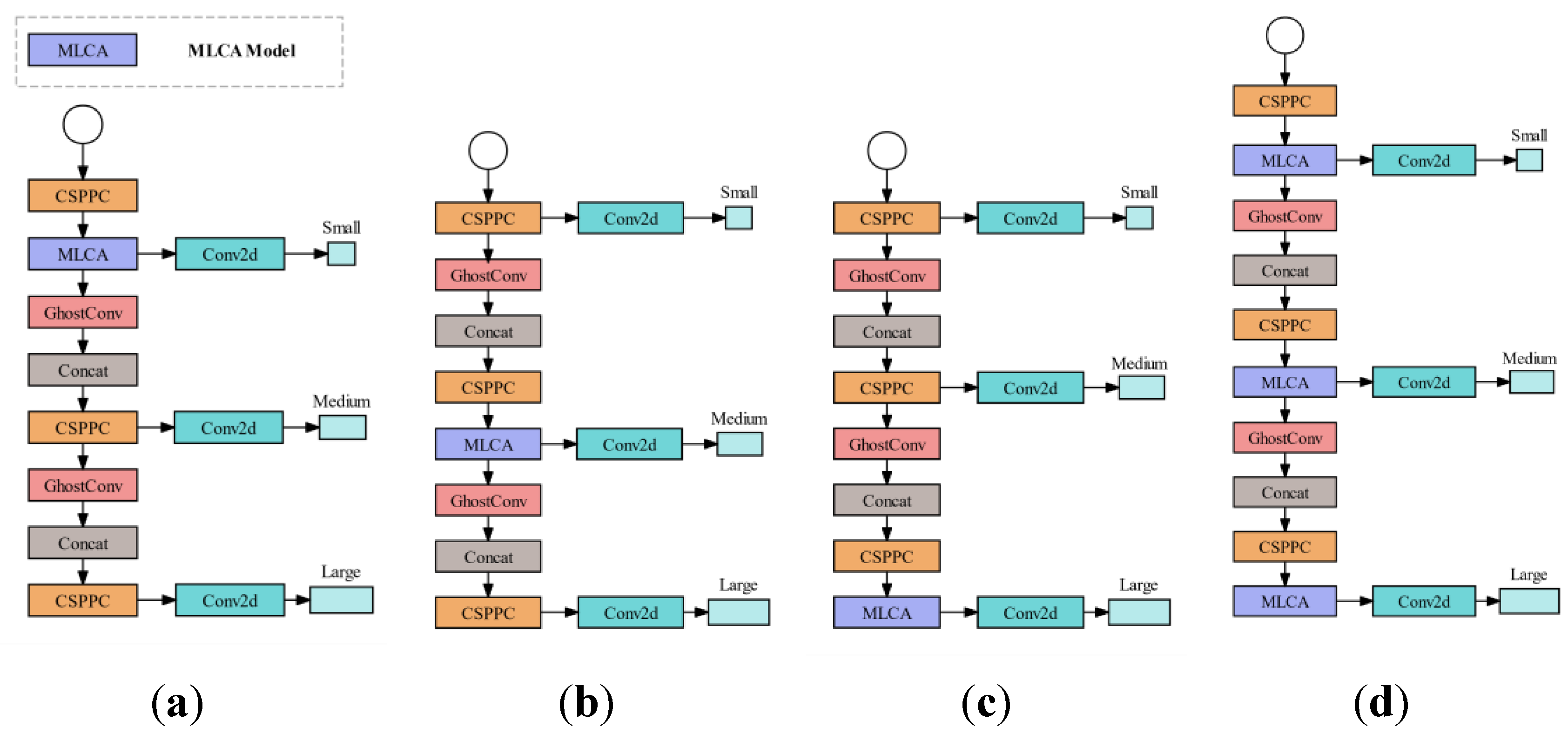
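MLCA (Mixed Local Channel Attention) combines local and global channel statistics. As a much-simplified sketch, the block below implements only a global channel-attention branch in the style of ECA-Net: global average pooling, a 1D convolution across neighbouring channels whose kernel size follows ECA's adaptive rule, and a sigmoid gate. It assumes that MLCA's channel mixing follows this ECA pattern; the local-pooling branch and the fusion step that distinguish MLCA are omitted. The kernel weights are hypothetical (they are learned in practice), and feature maps are plain nested lists.

```python
import math


def eca_kernel_size(channels, gamma=2, b=1):
    """ECA-Net's adaptive rule: kernel size grows with log2(C) and is forced to be odd."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(feature, weights):
    """Global channel-attention branch (ECA style, not full MLCA).

    feature: list of C channels, each an H x W nested list of floats.
    weights: odd-length 1D kernel shared by all channels (learned in practice;
             the values passed here are hypothetical).
    Returns (reweighted feature, per-channel gates)."""
    c, k = len(feature), len(weights)
    pad = k // 2
    # 1) Global average pooling: one descriptor per channel.
    desc = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature]
    # 2) 1D convolution across neighbouring channels; zero padding keeps length C.
    padded = [0.0] * pad + desc + [0.0] * pad
    mixed = [sum(weights[j] * padded[i + j] for j in range(k)) for i in range(c)]
    # 3) Sigmoid gates rescale each channel.
    gates = [sigmoid(v) for v in mixed]
    out = [[[p * g for p in row] for row in ch] for ch, g in zip(feature, gates)]
    return out, gates


feature = [[[2.0, 4.0], [6.0, 8.0]], [[1.0, 1.0], [1.0, 1.0]]]
weights = [0.25, 0.5, 0.25]  # hypothetical kernel of length eca_kernel_size(64) == 3
out, gates = channel_attention(feature, weights)
print(eca_kernel_size(64), [round(g, 3) for g in gates])
```

Because the 1D kernel only spans k neighbouring channels, the attention adds very few parameters, which is consistent with the document's emphasis on computational cost.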

2.2.2. Model Evolution

2.2.3. Network Model Training

2.2.4. Evaluation Metrics

1. Calculation of performance metrics for each target category
2. Calculation of overall performance metrics for the model
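The two kinds of metrics listed above can be sketched as follows, assuming the usual object-detection definitions: per class, precision P = TP/(TP+FP), recall R = TP/(TP+FN), and AP@0.5 as the area under the precision-recall curve when a detection counts as a true positive at IoU ≥ 0.5; the overall mAP@0.5 is the mean of the per-class APs. The all-point interpolation used here is one common choice; evaluation toolkits differ (some use 101-point interpolation), so values may differ slightly from those of the tool used in this work. IoU matching is assumed to have been done already, and the detections in the usage lines are hypothetical.

```python
def precision_recall(tp, fp, fn):
    """Per-class precision and recall from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(scores, is_tp, num_gt):
    """AP@0.5 for one class with all-point interpolation.

    scores: confidence of each detection of this class;
    is_tp:  True if that detection matched an unused ground-truth box at IoU >= 0.5;
    num_gt: number of ground-truth boxes of this class."""
    if num_gt == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:  # walk detections from most to least confident
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Add sentinels, then make precision non-increasing from right to left.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Area under the step curve: recall steps times interpolated precision.
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


def mean_average_precision(ap_per_class):
    """Overall mAP@0.5: unweighted mean of the per-class APs."""
    return sum(ap_per_class.values()) / len(ap_per_class)


# Hypothetical detections for two classes.
ap_a = average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2)
ap_b = average_precision([0.95, 0.6], [True, True], num_gt=2)
print(precision_recall(tp=2, fp=1, fn=0))
print(round(ap_a, 4), ap_b, round(mean_average_precision({"a": ap_a, "b": ap_b}), 4))
```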

3. Results

3.1. Ablation Experiment and Analysis of the SWIM Model

3.1.1. Experiment on Computational Space Complexity and Time Complexity

3.1.2. Accuracy Compensation Experiments

3.1.3. Comparative Experiment on Dataset Augmentation and Partitioning Order
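Why the order of augmentation and partitioning can matter is easiest to see with a small, generic sketch (not the authors' pipeline). If images are augmented before the split, transformed copies of one source image can land in both the training and validation subsets, which can inflate validation scores; splitting first and augmenting only the training subset avoids this. The split fractions, the number of copies and the `augment` helper are all hypothetical.

```python
import random


def make_splits(items, train_frac=0.7, val_frac=0.2, seed=0):
    """Shuffle and cut a list into train / validation / test parts."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = round(len(items) * train_frac)
    n_val = round(len(items) * val_frac)
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


def augment(source_id, copies):
    """Hypothetical augmentation: each sample is (source image id, copy index); 0 is the original."""
    return [(source_id, k) for k in range(copies + 1)]


def augment_then_split(ids, copies, seed=0):
    samples = [s for i in ids for s in augment(i, copies)]
    return make_splits(samples, seed=seed)


def split_then_augment(ids, copies, seed=0):
    train, val, test = make_splits([(i, 0) for i in ids], seed=seed)
    train = [s for i, _ in train for s in augment(i, copies)]  # augment the training part only
    return train, val, test


def shared_sources(a, b):
    """Number of source images that appear, in any form, in both subsets."""
    return len({i for i, _ in a} & {i for i, _ in b})


ids = range(100)  # hypothetical: 100 source images, 3 augmented copies each
tr1, va1, _ = augment_then_split(ids, copies=3)
tr2, va2, _ = split_then_augment(ids, copies=3)
print("augment then split:", shared_sources(tr1, va1), "sources shared by train and val")
print("split then augment:", shared_sources(tr2, va2), "sources shared by train and val")
```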

3.1.4. Comparative Experiment on Datasets with Various Augmentation Ratios

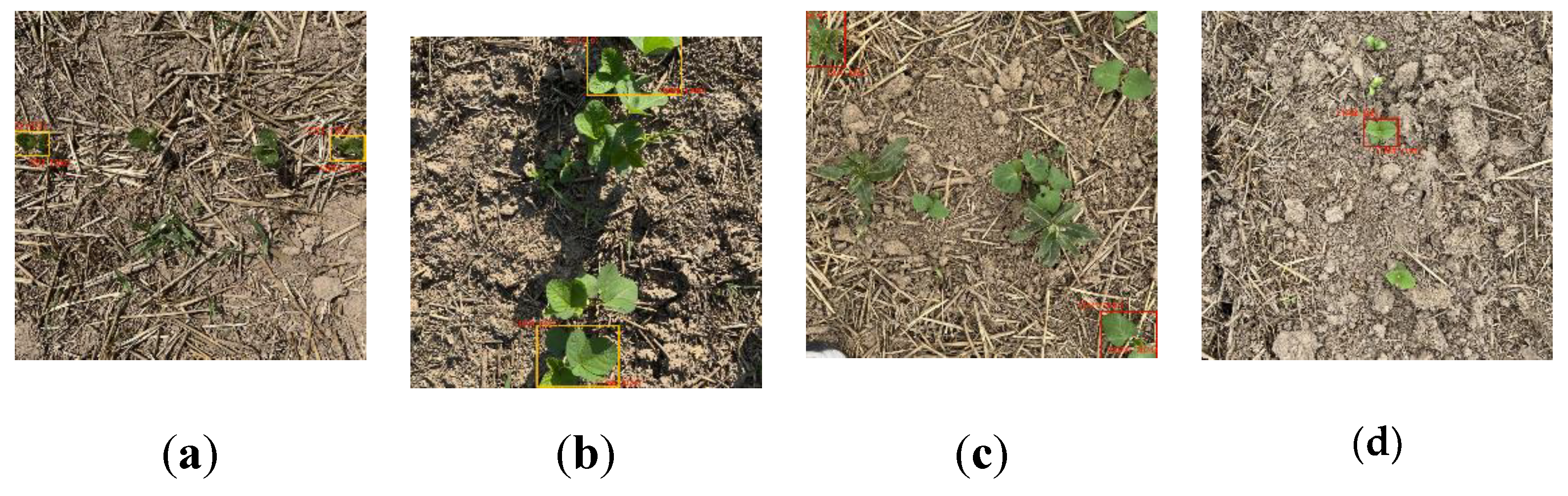

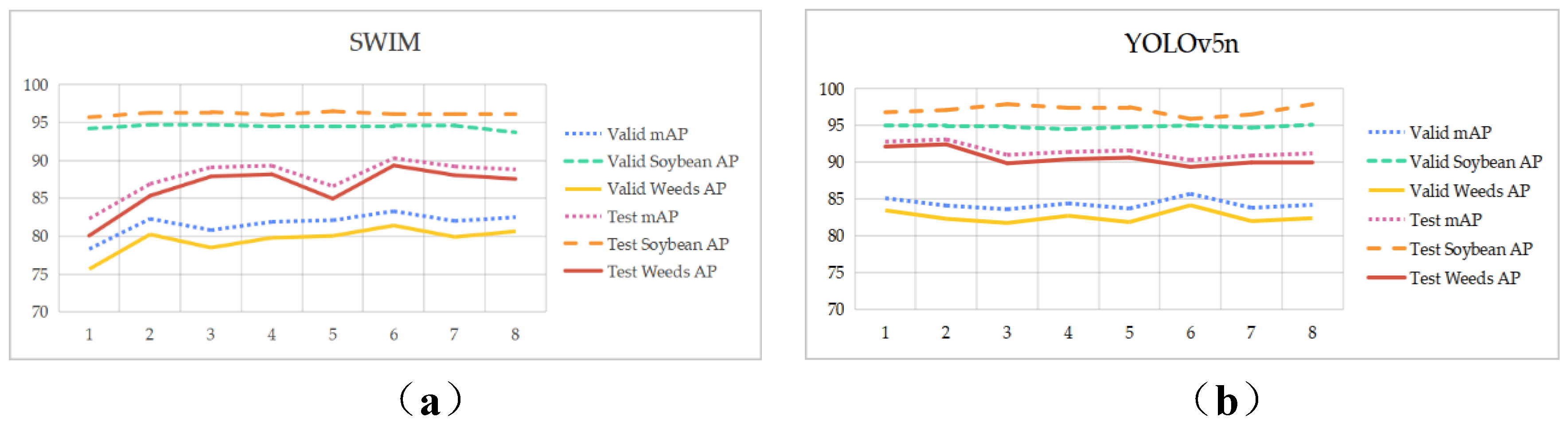

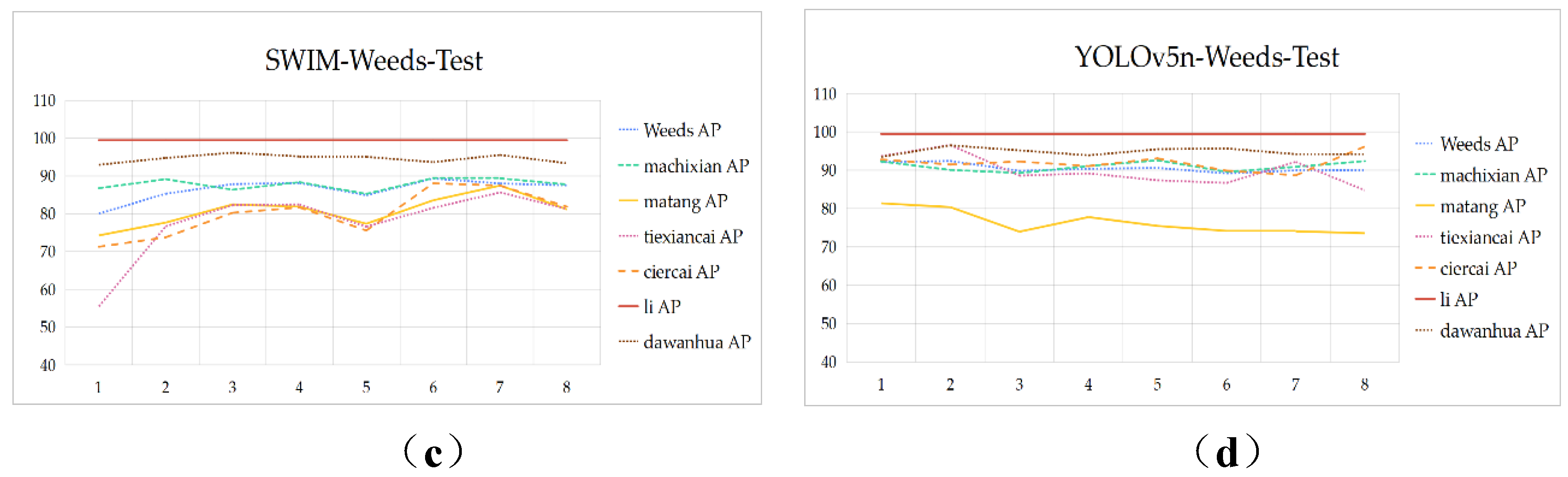

3.2. Evolutionary Experiment

4. Conclusions and Outlook

4.1. Conclusions

4.2. Outlook

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Subset (images) | Overall | Soybean | Weeds | machixian | matang | tiexiancai | ciercai | li | dawanhua |
|---|---|---|---|---|---|---|---|---|---|
| Test set (89) | 529 | 307 | 222 | 115 | 24 | 21 | 13 | 3 | 46 |
| Validation set (312) | 1957 | 1206 | 751 | 367 | 93 | 47 | 75 | 14 | 155 |
| Training set (935) | 6141 | 3476 | 2665 | 1158 | 321 | 146 | 225 | 24 | 470 |
| Full dataset (1336) | 8627 | 4989 | 3638 | 1640 | 438 | 214 | 313 | 41 | 671 |
| Proportion of each target (%) | 100 | 57.83 | 42.17 | 19.01 | 5.08 | 2.48 | 3.64 | 0.48 | 7.78 |

| Parameters | Values |
|---|---|
| Image size | 640×640 |
| Optimizer | SGD |
| Epochs | 200 |
| Batch size | 16 |
| Learning rate | 0.02 |
| Weight decay | 0.0005 |
| Momentum | 0.937 |
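The training configuration in the table above can be expressed as a small sketch. It assumes the 935-image training set from the dataset table and counts a final partial batch as one iteration unless `drop_last` is set. It then shows one parameter update in the PyTorch-style formulation of SGD with classical momentum and L2 weight decay. Warm-up, learning-rate decay and Nesterov momentum, which detection frameworks often add, are not specified in this section and are omitted; the function names are illustrative.

```python
import math

# Values from the training-parameter table.
HYP = {"image_size": 640, "optimizer": "SGD", "epochs": 200, "batch_size": 16,
       "lr": 0.02, "weight_decay": 0.0005, "momentum": 0.937}


def iterations(num_train_images, batch_size, epochs, drop_last=False):
    """Optimizer steps per epoch and in total."""
    if drop_last:
        per_epoch = num_train_images // batch_size
    else:
        per_epoch = math.ceil(num_train_images / batch_size)
    return per_epoch, per_epoch * epochs


def sgd_momentum_step(weights, grads, velocity, lr, momentum, weight_decay):
    """One SGD update with classical momentum and L2 weight decay (PyTorch-style,
    no dampening, no Nesterov): g = grad + wd * w;  v = momentum * v + g;  w = w - lr * v."""
    new_w, new_v = [], []
    for w, g, v in zip(weights, grads, velocity):
        g = g + weight_decay * w
        v = momentum * v + g
        new_v.append(v)
        new_w.append(w - lr * v)
    return new_w, new_v


per_epoch, total = iterations(935, HYP["batch_size"], HYP["epochs"])
print(per_epoch, total)  # 59 11800
w, v = sgd_momentum_step([1.0], [0.5], [0.0], HYP["lr"], HYP["momentum"], HYP["weight_decay"])
print(w, v)
```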

| Methods | Ghost | CSPPC | mAP@0.5 (%) | Parameters/M | GFLOPs | Model Size/MB |
|---|---|---|---|---|---|---|
| YOLOv5n | | | 90.2 | 1.77 | 4.2 | 3.76 |
| C_YOLOv5n | | √ | 91.8 | 1.27 | 3.0 | 2.75 |
| G_YOLOv5n | √ | | 89.1 | 1.47 | 3.6 | 3.21 |
| SWIM_T | √ | √ | 88.4 | 0.97 | 2.3 | 2.20 |
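The relative savings in the ablation table can be computed directly from its values. The short check below (values copied from the table; the helper name is illustrative) gives about 45.2 % fewer parameters, 45.2 % fewer GFLOPs and a 41.5 % smaller model for SWIM_T than for YOLOv5n, at a cost of 1.8 points of mAP@0.5. It also shows that the parameter and model-size savings of the Ghost and CSPPC variants taken separately add up to those of the combined model.

```python
# Values copied from the ablation table (YOLOv5n is the baseline).
TABLE = {
    "YOLOv5n":   {"map": 90.2, "params_m": 1.77, "gflops": 4.2, "size_mb": 3.76},
    "C_YOLOv5n": {"map": 91.8, "params_m": 1.27, "gflops": 3.0, "size_mb": 2.75},
    "G_YOLOv5n": {"map": 89.1, "params_m": 1.47, "gflops": 3.6, "size_mb": 3.21},
    "SWIM_T":    {"map": 88.4, "params_m": 0.97, "gflops": 2.3, "size_mb": 2.20},
}


def relative_reduction(base, new):
    """Percentage decrease from base to new."""
    return 100.0 * (base - new) / base


base = TABLE["YOLOv5n"]
for name, row in TABLE.items():
    if name == "YOLOv5n":
        continue
    cuts = {key: round(relative_reduction(base[key], row[key]), 1)
            for key in ("params_m", "gflops", "size_mb")}
    print(name, cuts, f"mAP change {row['map'] - base['map']:+.1f}")
```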

| Methods | mAP@0.5 (%) | Parameters/M | GFLOPs | Model Size/MB |
|---|---|---|---|---|
| SWIM_S | 86.8 | 0.97 | 2.7 | 2.21 |
| SWIM_M | 88.1 | 0.97 | 2.7 | 2.21 |
| SWIM | 90.3 | 0.97 | 2.7 | 2.21 |
| SWIM_A | 88.4 | 0.97 | 2.7 | 2.21 |

| Model | Dataset | Validation mAP@0.5 (%) | Validation Soybean AP (%) | Validation Weeds AP (%) | Application Scenario Test mAP@0.5 (%) | Application Scenario Test Soybean AP (%) | Application Scenario Test Weeds AP (%) |
|---|---|---|---|---|---|---|---|
| SWIM | Dataset 1 | 99.5 | 99.5 | 99.5 | 79.7 | 94.0 | 77.3 |
| SWIM | Dataset 2 | 68.8 | 92.4 | 64.8 | 78.0 | 94.7 | 75.2 |

| Model | Dataset | mAP@0.5 (%) | Soybean Seedling AP@0.5 (%) | Weeds AP@0.5 (%) |
|---|---|---|---|---|
| SWIM | Original Dataset | 90.3 | 95.9 | 89.4 |
| ESWIM | Evolutionary Dataset | 90.7 | 96.7 | 89.7 |
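The overall figures in the last two tables are consistent, to within rounding, with a simple aggregation: mAP taken as the unweighted mean AP over seven classes (soybean plus the six weed species listed in the dataset table) and Weeds AP taken as the mean over the six weed classes, so that mAP ≈ (AP_soybean + 6 × AP_weeds) / 7. This reading is inferred from the reported numbers rather than stated in the text. The check below only verifies the arithmetic, with a tolerance of 0.1 to allow for the one-decimal rounding of every value; the helper name is illustrative.

```python
# (reported mAP, soybean AP, weeds AP) in %, copied from the two tables above.
ROWS = {
    "SWIM, dataset 1, validation":     (99.5, 99.5, 99.5),
    "SWIM, dataset 1, scenario test":  (79.7, 94.0, 77.3),
    "SWIM, dataset 2, validation":     (68.8, 92.4, 64.8),
    "SWIM, dataset 2, scenario test":  (78.0, 94.7, 75.2),
    "SWIM, original dataset":          (90.3, 95.9, 89.4),
    "ESWIM, evolutionary dataset":     (90.7, 96.7, 89.7),
}


def implied_map(soybean_ap, weeds_ap, n_weed_classes=6):
    """Unweighted mean over one soybean class and n weed classes,
    assuming weeds_ap is already the mean AP of the weed classes."""
    return (soybean_ap + n_weed_classes * weeds_ap) / (1 + n_weed_classes)


for name, (reported, soy, weeds) in ROWS.items():
    implied = implied_map(soy, weeds)
    print(f"{name}: reported {reported:.1f}, implied {implied:.2f}, diff {reported - implied:+.2f}")
```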

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).