Introduction

Social robots are commonly defined as autonomous robots that possess human-like characteristics and engage in social interactions with humans [1]. Over the past few years, there has been a rising need for social robots in sectors such as healthcare, service [2], education [3], and entertainment. This surge in demand has driven significant advances in the technology [4]. The number of new social robots is increasing, accompanied by research initiatives from both academia and industry.

Social robots have found diverse applications across a broad demographic spectrum, from children to older adults. Evidence suggests that social robots have been more favourably received by older adults than in other domains such as children’s education [5].

Recently, there has been a notable focus on the use of social robots for people with dementia (PwD) and mild cognitive impairment (MCI) [6,7]. Social robots offer a ray of hope for PwD by providing companionship and engagement, alleviating feelings of isolation and loneliness [8]. These robots can enhance cognitive functions through interactive games, memory prompts, and personalized activities, contributing to the overall well-being of PwD [9]. By providing reminders for medication, appointments, and daily routines, social robots help people with dementia and MCI maintain a sense of independence and control over their lives. Social robots can also reduce caregiver burden by assisting in routine tasks, allowing caregivers to focus on providing emotional support and strengthening their relationship with the person with dementia [10]. Through stimulating interactions, reminiscence therapy, and emotional support, social robots create an environment conducive to improved emotional states and enhanced communication.

The User Experience (UX) and usability of these robots are rooted in their intuitive interfaces, which enable users to communicate and interact seamlessly. This blurs the boundaries between humans and machines and supports both user satisfaction and the system’s effectiveness. Through advanced sensors and artificial intelligence, social robots can perceive and respond to human gestures, expressions, and speech, creating an immersive and personalized UX. This tailored interaction enhances engagement, making tasks like companionship, education, and assistance more enjoyable and efficient [11]. The evolving design of these robots focuses on user-centred principles, aiming to minimize complexity and optimize ease of use. As a result, social robotics continues to redefine the boundaries of technological interaction by creating intuitive, meaningful, and emotionally resonant experiences for users across diverse contexts.

Even though interacting with social robots can be entertaining, it can pose specific challenges for people with dementia and MCI, stemming from memory and language deficits and other special needs. Careful consideration of design and interaction methods, as well as ongoing customization, is essential to address these challenges and ensure that social robots effectively support and engage this target group in a meaningful and respectful manner [12]. Assessment of the usability and UX of the robot should begin early in the development process, so that its attributes and operations can be adjusted or replaced to ensure a favourable UX before market launch [13].

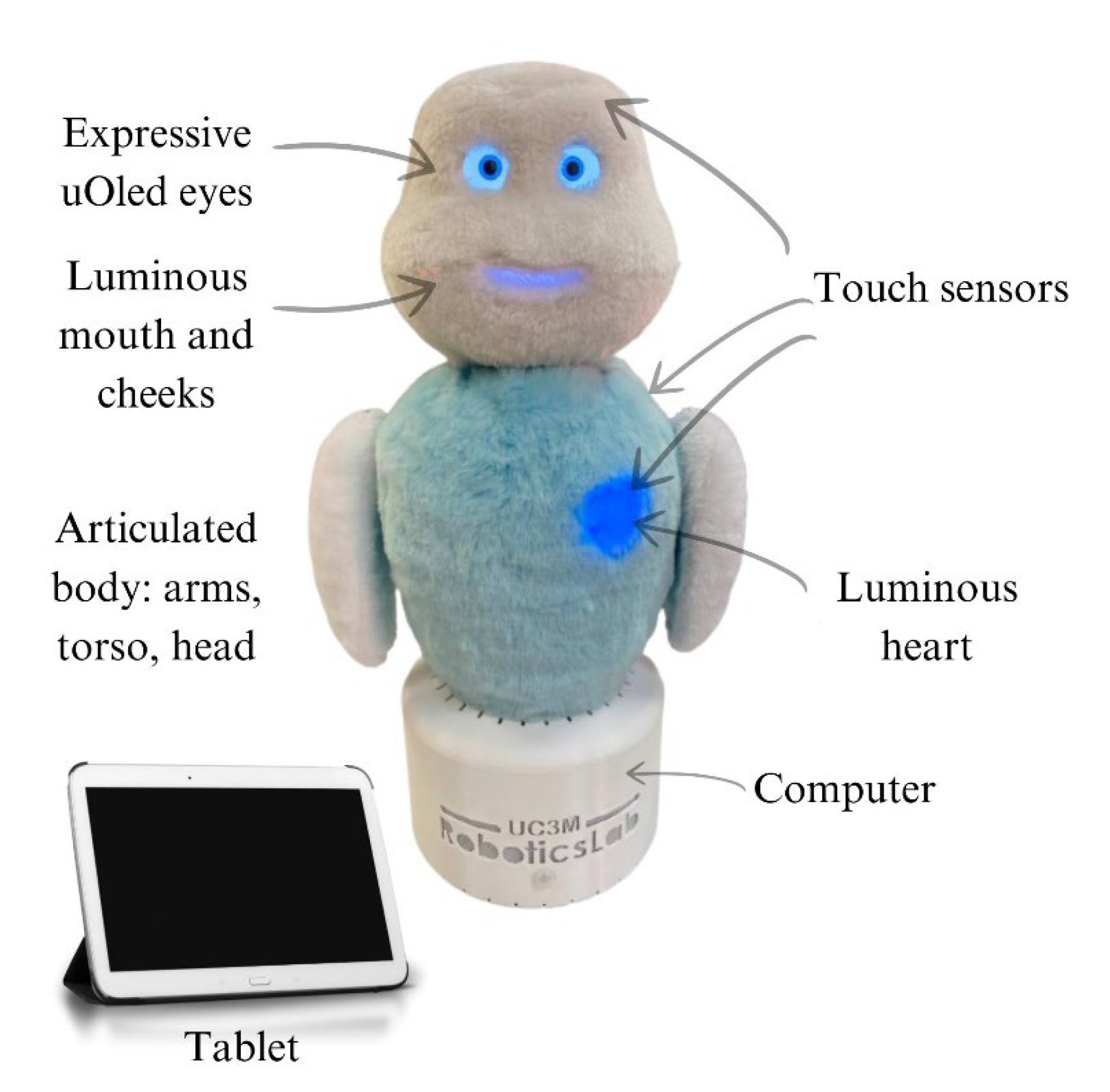

Recently, a robotic system named MINI was designed and developed by the Robotics Lab research group at Carlos III University in Madrid. Its primary objective is to assist seniors by offering cognitive and social engagement to elderly individuals affected by neurodegenerative disorders, particularly those dealing with cognitive decline [14]. The robot can initiate user engagement using both spoken and non-spoken methods. This compact desktop robot is covered in synthetic fur and equipped with a speech recognition mechanism, touch-sensitive areas on its shoulders and heart, an RGB-D camera for assessing depth in the surroundings, expressive uOLED eyes, and a tablet-based interface that serves as an input and output device for displaying software content. The robot’s head and arms have movement restricted to a single dimension [15,16] (see Figure 1).

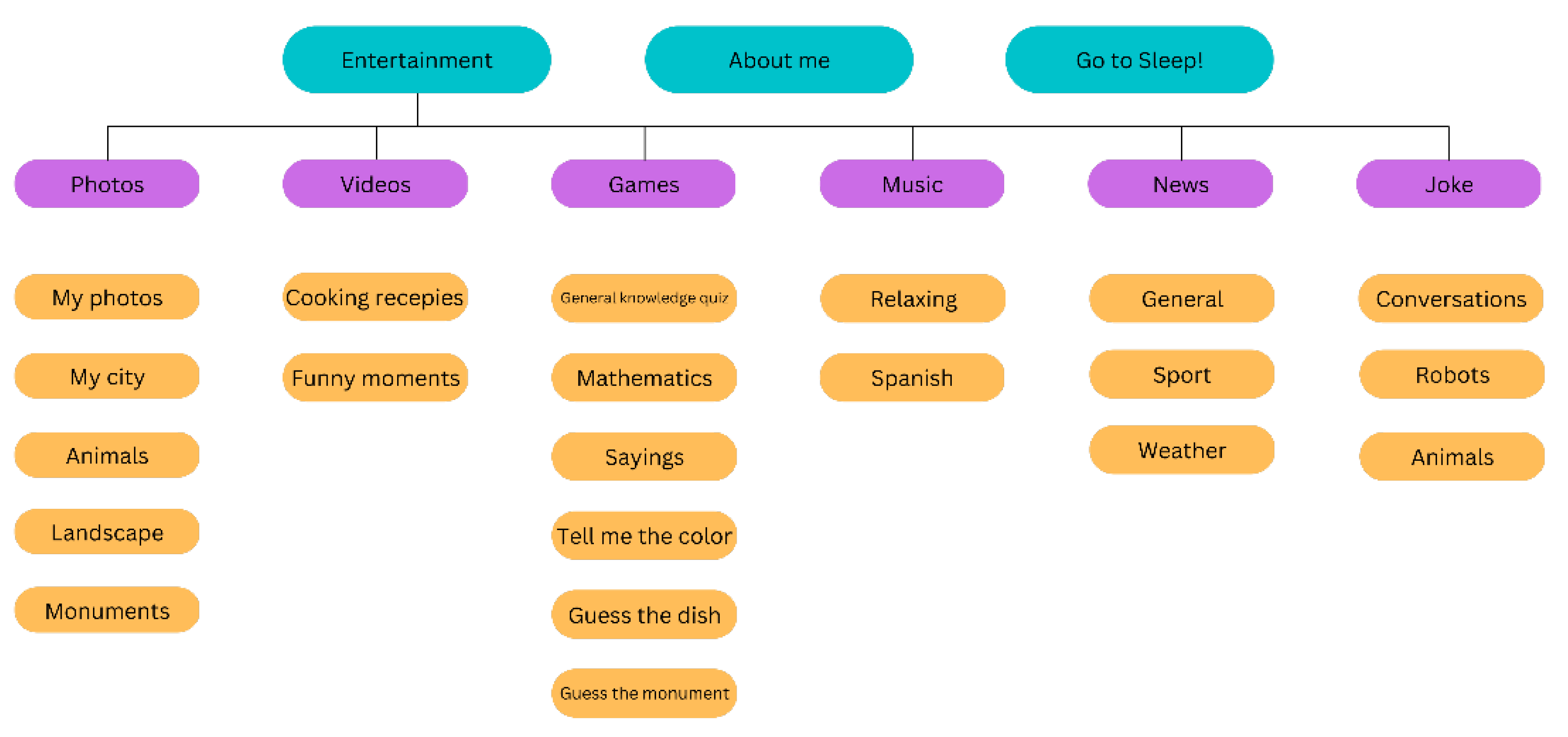

A series of games and activities were designed and integrated into the robotic platform. Figure 2 gives an overview of the apps and games provided by the MINI robot. The service was designed in a co-creation process centred on the needs and preferences of elderly adults, so that the MINI robot can fulfil its role as a social companion.

Methods

Participants and Settings

This mixed-methods study enrolled ten people with mild dementia (MD) and MCI (mean age M = 77.3 years, SD = 4.9). The inclusion criteria were as follows: age 65 or above, being able to make decisions, being able to read and write, and not having any sensory loss that would prevent use of the devices (e.g., blindness or deafness). Participants were recruited from the INTRAS Foundation’s Memory Clinic and memory workshops, and the trial was conducted in the controlled environment of the INTRAS Foundation’s usability laboratory in Zamora, Spain. Ethical approval for this research was obtained beforehand from the Zamora Health Area Medication Research Ethics Committee, under registration number 574.

Procedure of the Field Test

Participants and/or their next of kin provided informed consent and socio-demographic information via a questionnaire before the test. The evaluator then explained the approach and the tasks involved in interacting with the MINI robot. Only one participant was tested at a time, allowing close monitoring of task performance and behaviour. The evaluation consisted of a series of predetermined activities divided into sub-tasks. After completing these tasks, participants were invited to fill in a questionnaire designed to assess the usability of the MINI robot. The field tests were recorded in full on audio and video, allowing for detailed later examination. The following protocol was adhered to throughout the users’ interaction with the robot:

The user began the test by sitting in front of the robot and interacting with it after receiving the task instructions. The test started with the robot in a dormant state.

Interaction with MINI began with the user waking the robot with the phrase “Hello MINI!” Upon activation, the robot introduced itself to familiarise the user and allay any initial fears.

Subsequently, the robot asked what the user wanted to do, with participants adhering closely to the provided instructions (conveyed on A4-sized sheets). The session encompassed nine distinct tasks, each using voice interaction (VI), touch interaction (TI), or both.

The chosen tasks and interaction methods are as follows:

Task 1: Wake up the robot. The participant has to wake up the robot by saying, “Hello, MINI” (VI).

Task 2: See photos of my city. See photos (VI) > From my city (VI). The robot shows through the tablet a series of pictures of the city. Once the test is over, the robot asks, “Did you like the activity?” (TI).

Task 3: Guessing Meals. Entertainment (TI) > Games (TI) > Guessing Meals (VI). Pictures are shown, and the participant is asked about different regional dishes.

Task 4: Stop the test. Before the previous test ended, the participant had to stop the test by tapping the robot on the shoulder (TI). Then the robot would ask, “Do you want to continue with the activity?” (TI) or “Why did you stop the test?” (TI).

Task 5: Weather news. News (VI) > Weather news (TI). The robot gives the weather forecast for the area. Once the test is over, the robot asks, “Did you like the activity?” (TI).

Task 6: Robot suggestion. The robot suggests a task to the participant (TI or VI). Once the test is over, the robot asks, “Did you like the activity?” (TI).

Task 7: Relaxing music. Music > Relaxing music. The robot plays a piece of relaxing music.

Task 8: Stop the test. Before the previous test ended, the participant had to stop the test again (TI) by tapping the robot on the shoulder. Then the robot would ask, “Do you want to continue with the activity?” (TI) or “Why did you stop the test?” (TI).

Task 9: Go to sleep. When the robot asks, “What do you want to do?”, the participant must say “Go to sleep” (VI).

Method of Data Collection

Data collection consisted of three phases: the administration of pre-test questionnaires to gather socio-demographic information, direct observations and video recordings, and post-test evaluation through questionnaires.

Sociodemographic survey: to gather relevant sociodemographic details from participants, including factors such as age, gender, educational background, extent of cognitive impairment, and utilization of information and communication technology (ICT).

The System Usability Scale (SUS): a widely used questionnaire developed by John Brooke in 1986 to evaluate the perceived usability of diverse products, services, and systems, including software, websites, applications, and technological devices. The SUS (Supplementary file 1) is a 10-item Likert-scale questionnaire designed to capture users’ subjective views on usability dimensions such as ease of use, efficiency, learnability, and user-friendliness. Respondents rate their level of agreement with statements about the evaluated system. The item scores are then combined into a single usability score between 0 and 100, with higher scores indicating better perceived usability. The SUS thus serves as a quantitative tool for comparing user satisfaction and usability across systems and for guiding design improvements [17]. A score of 68 points or above indicates good usability.
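To make the aggregation step concrete, the following is a minimal sketch of the conventional SUS scoring rule: odd-numbered items contribute the rating minus 1, even-numbered items contribute 5 minus the rating, and the sum is multiplied by 2.5. The function name `sus_score` and the example ratings are hypothetical and are not taken from the study data.

```python
def sus_score(responses):
    """Return the 0-100 SUS score for one participant's ten ratings (1-5)."""
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 item ratings")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("ratings must be between 1 and 5")
    total = 0
    for index, rating in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 are positively worded.
            total += rating - 1
        else:
            # Items 2, 4, 6, 8, 10 are negatively worded.
            total += 5 - rating
    return total * 2.5


# Hypothetical ratings for illustration only (not study data).
example = [4, 2, 4, 3, 4, 2, 5, 2, 4, 3]
print(sus_score(example))  # 72.5
```

Under this rule, a participant who answers 3 to every item obtains a score of 50, which is why the 68-point threshold sits above the midpoint of the scale.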

Data Analysis

During the field test, observation notes were recorded. Both direct and video observations were conducted to collect data on user performance, time to complete tasks, success rates, failure rates, incidents, user and robot behaviours, and other pertinent information. The preliminary scores for SUS were presented using basic descriptive statistics for each item, including mean scores, standard deviation, range, and mode. Furthermore, a total SUS score was computed for each participant, and the mean of all SUS scores was derived.
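The per-item descriptive statistics mentioned above can be sketched as follows, using only the Python standard library. The helper name `describe` and the ratings are hypothetical; the sketch also assumes the sample standard deviation (n − 1 denominator), which the text does not specify.

```python
import statistics


def describe(ratings):
    """Summarise one SUS item's ratings across participants."""
    return {
        "mean": statistics.mean(ratings),
        "sd": statistics.stdev(ratings),  # sample SD, an assumption
        "range": (min(ratings), max(ratings)),
        "mode": statistics.mode(ratings),
    }


# Hypothetical ratings from ten participants for a single item.
item_ratings = [4, 5, 3, 4, 4, 5, 2, 4, 3, 4]
summary = describe(item_ratings)
print(summary["mean"], summary["range"], summary["mode"])
```

The same helper can be applied to the list of per-participant total SUS scores to obtain the overall mean.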

Results

User Testing: Observations

An assessment of several elements influencing correct test performance was considered as follows:

- Difficulty in Understanding the Exercise Methodology (DEM): User misunderstands the exercise instructions.

- Difficulty in Recognizing the Response Method (DRM): User is unsure which device to use for responses (e.g., the user tries to answer verbally when the response method is through the buttons on the tablet screen).

- Difficulty in Using the Device Corresponding to the Response Method (DUR): User struggles with using the response device or the robot fails to understand the user.

- Difficulty with the Content of the Response (DCR): User does not know the correct answer or what to respond.

These difficulties may arise at the beginning of each task and, depending on the case, persist during the test. Therefore, it is important to take into consideration the help that users may have received in the different tasks.

Table 2 indicates the percentage of participants that needed assistance from the instructor at the start of each task with “comprehending the written instructions of each task” and “response method (verbally or touch)”. As can be observed, the percentages of required help from the instructor in people with MD are often higher than in MCI. Furthermore, both people with MD and MCI needed more help with the response method than with the instructions. Tasks that required reacting via the robot’s touch sensors (4a and 8a) needed more help in both groups.

The majority of the tasks required both verbal and physical interactions. Table 1 shows that when there is a shift in response method between subtasks, individuals with MD have greater issues and require more assistance.

Regarding the response method, it is necessary to distinguish between problems caused by users not knowing which device they have to use to answer (DRM), for example waiting for buttons to appear on the screen when the answer has to be given by voice, and problems caused by the use of the response device itself (DUR). For the latter, the following issues were noticed:

Microphone (voice): Because MINI’s speech recogniser is not always turned on, users must wait until the robot beeps before responding. This caused confusion, since many users responded too early. There were also instances where users did not vocalise clearly (e.g., speaking too softly or spelling out the answer), so the robot could not understand them even though they had responded appropriately to what was asked of them.

Screen-Tablet (touch): Although this device generally did not cause problems, there were some instances where the tablet did not register a tap because users tapped the on-screen button with their fingernails rather than their fingertips, or pressed it too quickly and lightly.

Touch sensors: The main issue with this kind of response was that users had difficulty determining where they needed to touch the robot in order to activate the sensors.

These issues also manifested themselves during task completion. The level of success achieved by each group in each task, along with the challenges that emerged, is detailed in Table 2. The MD group encountered challenges mainly linked to response uncertainty (DCR) and difficulty grasping the task methodology (DEM), rather than to recognizing the response method (DRM). The MCI group encountered similar difficulties to the MD group, albeit with more pronounced struggles in using the response device (DUR). Importantly, a noteworthy portion of these challenges stemmed from the robot’s difficulty in understanding the answers provided.

Furthermore, specific technical deficiencies were identified as a result of our analysis (Table 3). We report only the game-specific issues detected during the field test; additional imperfections may exist in other games that were not selected and played by users during this testing phase.

System Usability Scale

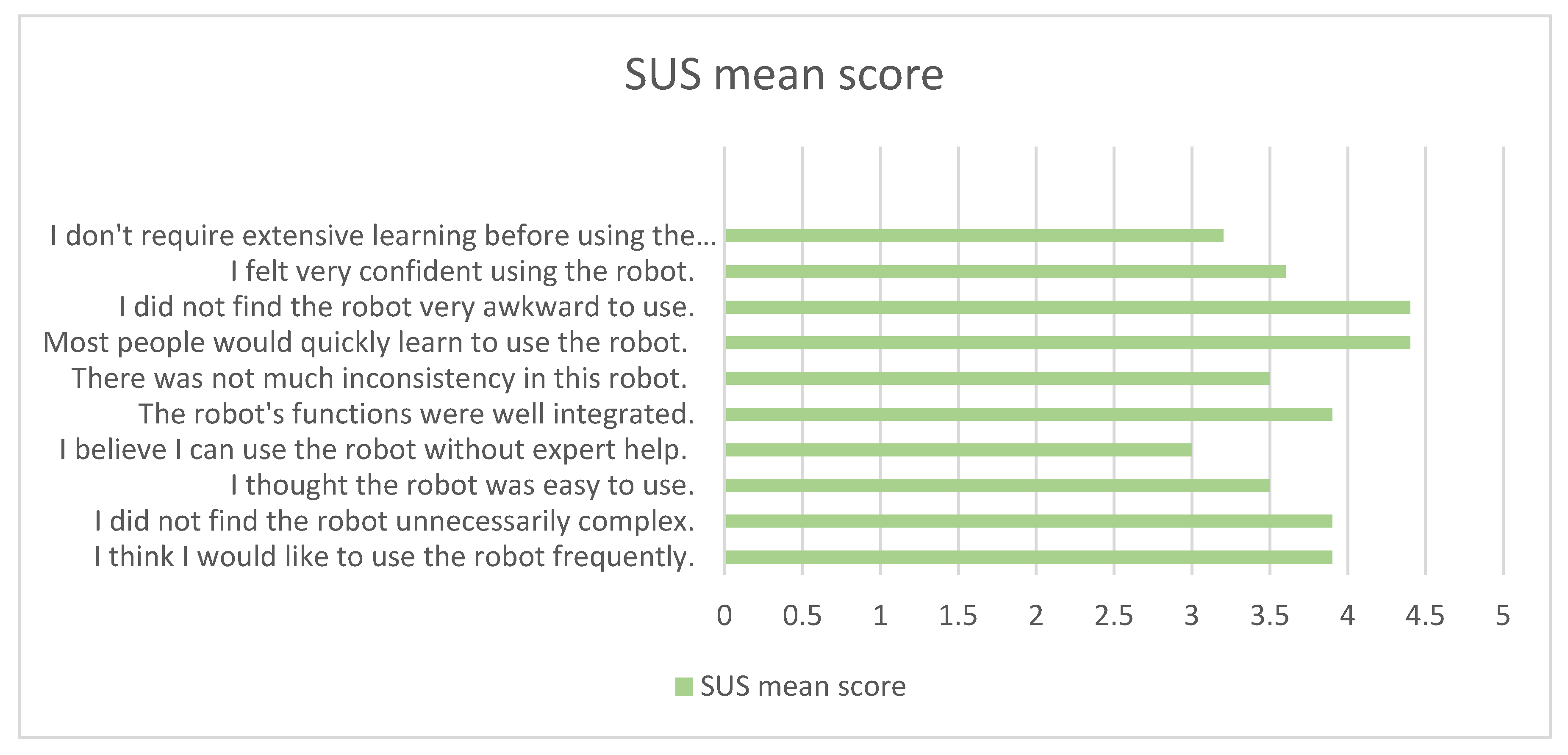

To provide a more comprehensive examination of the SUS score, an item-by-item analysis was conducted to determine the direction of participants’ responses. The descriptive data for the items can be found in Supplementary Material 1. The items were both positively and negatively oriented. Negative items were numerically inverted so that the mean, on a scale of 1-5, represents the lowest to highest level of agreement for all items.
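The inversion of negatively worded items can be illustrated with a short sketch. It assumes the standard SUS layout in which even-numbered items are the negative ones, and it inverts a 1-5 rating as 6 minus the rating. The names `NEGATIVE_ITEMS`, `orient`, and `item_means`, as well as the two response sets, are hypothetical.

```python
# Assumption: even-numbered SUS items are negatively worded (standard layout).
NEGATIVE_ITEMS = {2, 4, 6, 8, 10}


def orient(item_number, rating):
    """Invert a 1-5 rating for negative items so that higher is more favourable."""
    return 6 - rating if item_number in NEGATIVE_ITEMS else rating


def item_means(all_responses):
    """Return the mean oriented rating for each of the ten items."""
    n = len(all_responses)
    return [
        sum(orient(i + 1, participant[i]) for participant in all_responses) / n
        for i in range(10)
    ]


# Two hypothetical participants, for illustration only.
responses = [
    [4, 2, 4, 3, 4, 2, 5, 2, 4, 3],
    [5, 1, 3, 2, 4, 3, 4, 1, 5, 2],
]
print(item_means(responses))
```

After this transformation, a mean of 3 marks the neutral midpoint for every item, regardless of its original wording.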

Figure 3 shows the SUS questionnaire mean scores for each item. It can be seen that the majority of the ratings supported the usability of the MINI robot. Of particular note are the items that received the highest levels of agreement, including the users’ belief that the majority of people would quickly learn how to use it (4.40), that the system was not cumbersome to use (4.40), that they will use it frequently (3.90), that the system’s various features were well integrated (3.90), and that there was no perception of excessive complexity in the system (3.90).

The lowest ratings were assigned to the need for instructor assistance in operating the robot (3.00) and the need to learn many things before using the system (3.20). There were no negative ratings in the mean scores (all means were 3 or above).

The SUS yields a total score between 0 and 100, indicating the perceived usability of a device. A score of 68 or above indicates favourable usability. With an average SUS score of 68.3 out of 100, the device falls into the “good” usability category (68–80.3).

Discussion

In this study, we examined the UX and usability of the MINI robot and the challenges that might arise during the human-robot interactions with individuals with MD and MCI in laboratory settings. The qualitative and quantitative data gathered from the field test observations and video analysis revealed several facets of the robot interface that should be considered when employed to serve these target groups.

Based on our observational data, we identified several challenges users face when interacting with social robots. These include difficulties in understanding exercise instructions (DEM), recognizing the appropriate response method (DRM), using the correct device for responses (DUR), and knowing the content of the response (DCR). Consequently, participants relied on the instructor’s assistance in each task, both for comprehending the written instructions and for responding appropriately, whether verbally or by touch.

Tasks requiring interaction via the robot’s touch sensors (tasks 4a and 8a) showed an increased need for assistance in both groups, underscoring the inherent complexity of touch-based interactions. Users’ difficulties in completing these tasks could be attributed to unfamiliarity with the robot, uncertainty about how and where to touch, and confusion when shifting between verbal and touch interaction methods. This highlights the heightened challenge posed by having two interaction channels (touch and speech). Similar studies have documented these challenges in human-robot interactions. For instance, Leite et al. (2013) [18] found that users often struggle with touch-based tasks due to the lack of clear tactile feedback and the ambiguity of touch-sensitive areas on the robot. Additionally, Dragan and Srinivasa (2013) [19] highlighted that users frequently experience difficulty when required to switch interaction modalities, such as from speech to touch. This transition can be cognitively demanding, particularly for individuals with motor or cognitive impairments. Addressing these challenges requires a multifaceted approach, including better design of touch interfaces, clearer instructions for users, and adaptive support systems that help users navigate multiple interaction modalities seamlessly.

Turning to the response method itself, it is important to differentiate between challenges arising from user confusion in recognizing the response method (DRM) and challenges stemming from the use of the response device (DUR). Most of the issues we observed with the response method were caused either by users’ confusion about how to use the response device or by the robot failing to recognise the answer because of its limited speech comprehension. Issues with the microphone, touch sensors, and tablet interface have emerged in similar studies with social robots [9,20]. Poor speech recognition has consistently been listed as the main usability issue in research on social robots with speech capabilities [8,9,20,21]. So far, social robots lack the ability to engage in seamless conversations with users. At the same time, our target population is not used to such conversational agents. Consequently, there is an urgent need for significant improvements in the speech recognition system. It is also advisable to incorporate an initialisation or trial phase to ensure optimal and efficient use of the robot.

Concerning task performance, particularly in the MD group, difficulties were more often caused by not knowing the correct answer (DCR) and by difficulty understanding the task methodology (DEM) than by recognizing the response method (DRM). Hence, we suggest providing explicit usage instructions in simple language, ensuring readability and accessibility for individuals with MD and MCI. Another study of the MINI robot [22] found that stakeholders preferred activities that did not resemble quizzes, to avoid potential conflicts with cognitive challenges. However, some participants in this study expressed their readiness for moderately challenging tasks. Consequently, it is essential for developers to adjust the difficulty of task content to accommodate the cognitive status and preferences of users. This was previously suggested by the developers of the MINI robot [23,24] regarding personalisation of human-robot interaction.

The challenges faced by the MD and MCI groups highlight the intricate relationship between cognitive abilities, device operability, and the overall success of human-robot interactions. These findings emphasize the crucial importance of designing user-friendly interfaces, clear user instructions, and interpretive capabilities within robotic systems to improve overall usability and facilitate seamless user engagement.

The overall SUS score indicates a good usability level, supporting the system’s effectiveness and alignment with user expectations. The majority of participants perceived minimal difficulty in learning to use the robot, in its operational complexity, and in interacting with it, and would like to use the robot frequently. Additionally, they acknowledged the smooth integration of system functionalities and perceived the system’s complexity as manageable. Less favourable ratings were assigned to the perceived need for instructor assistance in operating the robot and the need for pre-use learning. This aligns with their performance and with the obstacles they encountered in achieving tasks during the field test. Relatively lower scores for confidence in operating the robot, the robustness of the robotic system, and perceived ease of use may be mainly due to technical issues and design problems inherent in the robot. In another study [25] investigating the acceptance of the MINI robot, the perceived ease of use ratings after one month of interaction were similar to or slightly higher than those in our current study. This observation suggests that prolonged interaction may increase perceived ease of use.

Based on our results and observations, we propose a list of modifications for future versions of the robot (Table 4). As MINI is a research platform under continuous development, these suggestions aim to enhance the user experience when interacting with the robot. Given that MINI’s verbal communication relies on state-of-the-art software for recognizing user utterances and synthesizing responses, our recommendations for the speech interface can be applied to other smart devices, such as smart speakers and smartphones, to improve their verbal interaction capabilities.

Limitations of the Study

Despite these promising findings, several limitations of this study warrant attention. First, our study involved a sample of 10 participants. While this number is sufficient for a usability test from a scientific perspective [26], it limits the broader generalisability of our findings. Second, this study was conducted using specific, predefined apps and games. Therefore, the results should be interpreted with caution when projecting to different versions of the robot with alternative apps and games, whose features could influence the usability of the robot.

Conclusion

In conclusion, our study examined the MINI robot’s UX and usability challenges. The robot has strengths in easy learning, simple operation, and seamless feature integration, but the study also highlights areas for improvement, including the general system, the games and apps, and speech- and voice-related features. We assume that using the MINI robot effectively requires clear and detailed instructions and instructor assistance. By making the suggested changes and listening to users, developers can improve the robot’s usability and UX and thereby its overall effectiveness. This process can turn the MINI robot into a more user-centred system. To overcome the challenges identified, careful design and interaction methods, along with ongoing adjustments, are essential. This comprehensive approach helps ensure that social robots can engage individuals with dementia in a meaningful and respectful manner.

Authors’ Contributions

Conceptualization and methodology: AMA, JMT; Robot interface and task design: ACG, MM; Field test and data collection: AMA, JMT; Data analysis: AMA, JMT; Writing, review and editing: All; Supervision: MFM, MAS, HVR.

Acknowledgement and Funding

This research was carried out as part of the Marie Curie Innovative Training Network (ITN) action, H2020-MSCA-ITN-2018, under grant agreement number 813196.

Ethics Approval: Approval was obtained from the ethics committee of Zamora Health Area Medication Research Ethics Committee, under registration number 574. The procedures used in this study adhere to the tenets of the Declaration of Helsinki.

Consent to Participate: Written informed consent was obtained from the participants and / or their next of kin.

Data Availability

Some data are not publicly available because the data contains information that could compromise research participant privacy.

Supplementary Materials

The following supporting information can be downloaded at the website of this paper posted on Preprints.org.

Conflicts of Interest

No conflict of interest was declared.

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| MD | Mild dementia |
| MCI | Mild cognitive impairment |
| PwD | People with dementia |
| SUS | System Usability Scale |

References

- Henschel, A., G. Laban, and E.S. Cross, What Makes a Robot Social? A Review of Social Robots from Science Fiction to a Home or Hospital Near You. Current Robotics Reports, 2021. 2(1): p. 9-19. [CrossRef]

- Gerłowska, J., et al., Assessment of Perceived Attractiveness, Usability, and Societal Impact of a Multimodal Robotic Assistant for Aging Patients With Memory Impairments. 2018. 9. [CrossRef]

- Amanatiadis, A., et al. Interactive social robots in special education. in 2017 IEEE 7th international conference on consumer electronics-Berlin (ICCE-Berlin). 2017. IEEE.

- Shourmasti, E.S., et al., User Experience in Social Robots. 2021. 21(15): p. 5052. [CrossRef]

- Reich-Stiebert, N. and F. Eyssel, Learning with Educational Companion Robots? Toward Attitudes on Education Robots, Predictors of Attitudes, and Application Potentials for Education Robots. International Journal of Social Robotics, 2015. 7(5): p. 875-888. [CrossRef]

- Góngora Alonso, S., et al., Social Robots for People with Aging and Dementia: A Systematic Review of Literature. Telemed J E Health, 2019. 25(7): p. 533-540.

- Mahmoudi Asl, A., et al., Methodologies Used to Study the Feasibility, Usability, Efficacy, and Effectiveness of Social Robots For Elderly Adults: Scoping Review. J Med Internet Res, 2022. 24(8): p. e37434.

- Mahmoudi Asl, A., et al., Methodologies Used to Study the Feasibility, Usability, Efficacy, and Effectiveness of Social Robots For Elderly Adults: Scoping Review. 2022. 24(8): p. e37434.

- Yu, C., et al., Socially assistive robots for people with dementia: Systematic review and meta-analysis of feasibility, acceptability and the effect on cognition, neuropsychiatric symptoms and quality of life. Ageing Research Reviews, 2022. 78: p. 101633.

- Busse, T.S., et al., Views on Using Social Robots in Professional Caregiving: Content Analysis of a Scenario Method Workshop. 2021. 23(11): p. e20046.

- van Greunen, D. User experience for social human-robot interactions. in 2019 Amity International Conference on Artificial Intelligence (AICAI). 2019. IEEE.

- Asl, A.M., et al., The usability and feasibility validation of the social robot MINI in people with dementia and mild cognitive impairment; a study protocol. BMC Psychiatry, 2022. 22(1): p. 760.

- Lindblom, J., B. Alenljung, and E. Billing, Evaluating the User Experience of Human–Robot Interaction, in Human-Robot Interaction: Evaluation Methods and Their Standardization, C. Jost, et al., Editors. 2020, Springer International Publishing: Cham. p. 231-256.

- Salichs, M.A., et al., Mini: A New Social Robot for the Elderly. International Journal of Social Robotics, 2020. 12(6): p. 1231-1249.

- Salichs, E., et al. A social robot assisting in cognitive stimulation therapy. in International Conference on Practical Applications of Agents and Multi-Agent Systems. 2018. Springer.

- Velázquez-Navarroa, E., et al., El robot social Mini como plataforma para el desarrollo de juegos de interacción multimodales.

- Brooke, J., SUS: A “quick and dirty” usability scale. Usability Evaluation in Industry, 1996. 189(3): p. 189-194.

- Leite, I., C. Martinho, and A. Paiva, Social robots for long-term interaction: a survey. International Journal of Social Robotics, 2013. 5: p. 291-308.

- Dragan, A.D. and S.S. Srinivasa, A policy-blending formalism for shared control. 2013. 32(7): p. 790-805.

- Olde Keizer, R.A.C.M., et al., Using socially assistive robots for monitoring and preventing frailty among older adults: a study on usability and user experience challenges. Health and Technology, 2019. 9(4): p. 595-605.

- Cavallo, F., et al., Development of a Socially Believable Multi-Robot Solution from Town to Home. Cognitive Computation, 2014. 6(4): p. 954-967.

- Mahmoudi Asl, A., et al., Potential Facilitators of and Barriers to Implementing the MINI Robot in Community-Based Meeting Centers for People With Dementia and Their Carers in the Netherlands and Spain: Explorative Qualitative Study. J Med Internet Res, 2023. 25: p. e44125.

- Maroto-Gómez, M., et al., An adaptive decision-making system supported on user preference predictions for human–robot interactive communication. User Modeling and User-Adapted Interaction, 2023. 33(2): p. 359-403.

- Maroto-Gómez, M., et al., A biologically inspired decision-making system for the autonomous adaptive behavior of social robots. Complex & Intelligent Systems, 2023.

- Aysan Mahmoudi Asl, J.M.T.-G., Álvaro Castro-González et al., Acceptability of the Social Robot Mini and Attitudes of People with Dementia and Mild Cognitive Impairment: A Mixed Method Study.

- Lewis, J.R., Sample sizes for usability studies: additional considerations. Hum Factors, 1994. 36(2): p. 368-78.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).