1. Introduction

Kubernetes is increasingly emerging as a primary technology for high-performance computing (HPC) and artificial intelligence (AI) applications because of its strong capabilities for automating the deployment, scaling, and administration of containerized applications. Originally created by Google, it quickly became the widely accepted standard for container orchestration thanks to its robust architecture, adaptability, and scalability. By effectively managing large clusters of hosts, both on-premises and in the cloud, it has dramatically changed how organizations deploy and manage their software, making it an essential tool for modern IT infrastructure.

Scalable and dependable computing environments are of utmost importance in HPC. HPC and AI workloads frequently involve complex simulations, data analysis, and computational tasks that require significant computational capacity and efficient resource utilization. Kubernetes meets these requirements by offering a scalable infrastructure that adapts to changing workloads, helping to ensure efficient resource usage and high availability.

Consider, for instance, a situation in which you need to oversee several Kubernetes clusters, each designed for a different workload, such as one cluster for high-bandwidth video streaming and another for traditional web server applications. Video streaming applications require resilient, low-latency network setups to handle large volumes of incoming data, whereas web servers prioritize fast response times and consistent availability under fluctuating load. Kubernetes allows each cluster to be customized to these different requirements through its extensible architecture and its support for custom network configurations via Container Network Interface (CNI) plugins. The type of workload directly determines the kind of traffic it generates, and we expect TCP and UDP performance to differ considerably between these scenarios. We therefore analyze both protocols to obtain a complete picture of performance.
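Because the comparison hinges on TCP versus UDP behavior, it helps to reduce every benchmark run to the same few numbers. The sketch below assumes the measurements come from iperf3 run with `--json`, a tool the text does not name; the key paths it reads (`end.sum_received` for TCP, `end.sum` for UDP) reflect our understanding of iperf3's report layout and should be checked against the iperf3 version in use. UDP runs additionally yield jitter and loss, which matter for streaming-style traffic.

```python
import json
from typing import Dict


def summarize_iperf3(report_text: str, protocol: str) -> Dict[str, float]:
    """Reduce one iperf3 --json report to headline metrics.

    Assumption: TCP totals live under end.sum_received and UDP totals under
    end.sum, as in the iperf3 versions we are familiar with.
    """
    end = json.loads(report_text)["end"]
    proto = protocol.upper()
    if proto == "TCP":
        # Receiver-side totals count only data that actually arrived.
        return {"throughput_mbps": end["sum_received"]["bits_per_second"] / 1e6}
    if proto == "UDP":
        total = end["sum"]
        return {
            "throughput_mbps": total["bits_per_second"] / 1e6,
            "jitter_ms": float(total["jitter_ms"]),
            "lost_percent": float(total["lost_percent"]),
        }
    raise ValueError(f"unsupported protocol: {protocol!r}")
```

Keeping the parser separate from whatever launches the runs means the same summary rows can be produced for every CNI and both protocols.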

Nevertheless, the wide range of CNI plugins available for Kubernetes makes it challenging to assess them and select the most suitable one for a given workload and environment. An automated, orchestrated testing methodology built on tools such as Ansible is therefore highly valuable. Ansible, known for its simplicity and robust automation capabilities, can automate the deployment and testing of various CNIs across multiple Kubernetes clusters. Automating these processes makes it feasible to run thorough, replicable tests that assess performance metrics such as throughput, latency, and resource consumption under various load scenarios. This matters because efficient communication between nodes is vital for high performance, especially in massively parallel systems [1].
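At the heart of an automated campaign like the one described is a test matrix: every CNI is exercised with every protocol and load scenario, several times. The Python sketch below builds that matrix; the plugin names and scenario labels are placeholders, and handing each entry to an Ansible playbook is left out because no playbook interface is specified here. Shuffling with a fixed seed is our own design choice: it interleaves plugins so that slow drift in the environment does not systematically favor whichever CNI runs first, while keeping the execution order reproducible.

```python
import random
from dataclasses import dataclass
from itertools import product
from typing import List, Sequence


@dataclass(frozen=True)
class BenchmarkRun:
    cni: str
    protocol: str
    scenario: str
    repetition: int


def build_matrix(
    cnis: Sequence[str],
    protocols: Sequence[str],
    scenarios: Sequence[str],
    repetitions: int,
    seed: int = 42,
) -> List[BenchmarkRun]:
    """Enumerate every (CNI, protocol, scenario, repetition) combination."""
    if repetitions < 1:
        raise ValueError("repetitions must be >= 1")
    runs = [
        BenchmarkRun(c, p, s, r)
        for c, p, s, r in product(cnis, protocols, scenarios, range(1, repetitions + 1))
    ]
    # Fixed-seed shuffle: interleaved execution order, identical on every rerun.
    random.Random(seed).shuffle(runs)
    return runs


# Placeholder inputs; substitute the plugins and scenarios actually under test.
matrix = build_matrix(["flannel", "calico", "cilium"], ["tcp", "udp"], ["idle", "loaded"], repetitions=5)
```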

Implementing a standardized testing methodology provides numerous advantages. First, it guarantees uniform testing: each CNI is assessed under the same conditions, eliminating variations and potential biases in the outcomes. The ability to repeat experiments is essential for making well-informed decisions, as it yields dependable data for comparing the performance and suitability of various CNIs for specific applications. Furthermore, automation dramatically reduces the time and effort needed to carry out these tests, enabling faster iteration and more frequent testing cycles. This flexibility is especially advantageous in dynamic environments where new CNI plugins and updates are released regularly.
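Repeatability can also be checked mechanically. The sketch below assumes each CNI has a list of throughput samples from repeated, identical runs; it reports the mean and sample standard deviation and marks a plugin as stable when the coefficient of variation stays under a threshold. The 5% default is an arbitrary illustrative choice, not a value taken from the text.

```python
from statistics import mean, stdev
from typing import Dict, List, NamedTuple


class RunSummary(NamedTuple):
    cni: str
    mean_value: float
    std_dev: float
    stable: bool


def summarize_runs(samples: Dict[str, List[float]], max_cv: float = 0.05) -> List[RunSummary]:
    """Summarize repeated measurements per CNI, highest mean first."""
    rows = []
    for cni, values in samples.items():
        if len(values) < 2:
            raise ValueError(f"{cni}: need at least two runs to judge repeatability")
        m = mean(values)
        s = stdev(values)  # sample standard deviation (n - 1 denominator)
        cv = s / m if m else float("inf")
        rows.append(RunSummary(cni, m, s, cv <= max_cv))
    # Higher is better for throughput; reverse the ordering for latency-type metrics.
    return sorted(rows, key=lambda row: row.mean_value, reverse=True)
```

A plugin with a high mean but a failing stability flag is a signal to rerun or investigate before drawing conclusions from the ranking.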

Moreover, a standardized and automated testing framework democratizes the evaluation process: a broader range of individuals and organizations, including smaller entities and individual developers with limited resources, can take part in testing without extensive manual effort. A clear, user-friendly protocol for CNI assessment improves transparency and encourages the adoption of optimal network configuration and optimization techniques.

In 2022, Shengyan Zhu, Caiqiu Zhou, and Yongjian Wang introduced a novel hybrid wireless-optical on-chip network to address the growing data interaction challenges in IoT HPC systems. The design optimizes multicast message transmission by using wireless single-hop transmission to shorten communication distances and an on-chip surface waveguide to make wireless transmission reliable. An adaptive routing technique coordinates the optical and wireless layers, relieving the transmission load on each layer. Compared with a traditional optical network-on-chip (NoC) with a mesh topology, their simulations show significant improvements in average delay, energy consumption, and network performance [2].

In 2022, Y. Shang, Xingwen Guo, B. Guo, Haixi Wang, Jie Xiao, and Shanguo Huang proposed using Deep Reinforcement Learning (DRL) to create a flexible optical network for HPC systems that adapts efficiently to changes in traffic by switching seamlessly between several topologies. The network prototype was evaluated with the Advantage Actor-Critic (A2C) and Deep Deterministic Policy Gradient (DDPG) algorithms, yielding a performance improvement of up to 1.7 times. This approach offers a promising alternative for efficiently controlling traffic in HPC systems. However, further investigation is needed to improve the DRL models and make them easier to integrate into existing HPC infrastructures [3].

In their 2023 paper, Harsha Veerla, Vinay Chindukuri, Yaseen Junaid Mohammed, Vinay Palakonda, and Radhika Rani Chintala analyze the rapid advancement of HPC systems and their use in several domains, including machine learning and climate modeling. They emphasize the need for advanced hardware and software technologies to improve performance. The study highlights the role of HPC in shaping the future of networking, as it addresses complex computing problems through parallel processing and specialized hardware. The authors recommend that future research focus on developing optimization algorithms to improve performance management in HPC networks [4].

The 2022 study by Min Yee Teh, Zhenguo Wu, M. Glick, S. Rumley, M. Ghobadi, and Keren Bergman examines the performance constraints of reconfigurable network topologies built specifically for HPC systems. The authors compare two types of networks: ToR-reconfigurable networks (TRNs) and pod-reconfigurable networks (PRNs). They found that PRNs offer better overall trade-offs and considerably outperform TRNs in high fan-out communication patterns. The study highlights the importance of tailoring the network architecture to traffic patterns to improve performance and implies that further investigation is needed to optimize these architectures for broader uses [5].

In their 2022 paper, Po Yuan Su, D. Ho, Jacy Pu, and Yu Po Wang investigate the Fan-out Embedded Bridge (FO-EB) package, which integrates chiplets to meet the demands of HPC applications, particularly the need for high data rates and high-speed transmission. The FO-EB package offers greater flexibility and better electrical performance than traditional 2.5D packages. The paper highlights the difficulty of managing heat efficiently and proposes techniques to improve the ability of FO-EB packages to handle thermal problems. Additional research is needed to refine the integration process, improving performance and reducing costs [6].

In 2021, Pouya Haghi, Anqi Guo, Tong Geng, A. Skjellum, and Martin C. Herbordt studied the impact of workload imbalance on the performance of in-network processing in HPC systems. After examining five proxy applications, they concluded that 45% of the total execution time on the Stampede2 compute cluster is wasted because of uneven work distribution. This inefficiency highlights the need for better workload distribution strategies to optimize network performance. The authors recommend that future research focus on effective methods for distributing workloads across network resources [7].
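To make "time wasted due to uneven work distribution" concrete: in a bulk-synchronous step every process waits for the slowest one, so with N processes and busy times t_i the idle share is (N · t_max − Σ t_i) / (N · t_max). This is a common way to quantify load imbalance; we do not claim it is the exact metric Haghi et al. used. A minimal Python sketch:

```python
from typing import Sequence


def idle_fraction(busy_times: Sequence[float]) -> float:
    """Share of allocated process-time spent waiting for the slowest process."""
    if not busy_times:
        raise ValueError("need at least one process")
    slowest = max(busy_times)
    if slowest <= 0:
        return 0.0
    allocated = len(busy_times) * slowest  # every process is held until the step ends
    return (allocated - sum(busy_times)) / allocated
```

For example, three processes that finish in 1 s and one that needs 3 s give an idle fraction of 0.5: half of the reserved compute time is spent waiting.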

In 2023, Steffen Christgau, Dylan Everingham, Florian Mikolajczak, Niklas Schelten, Bettina Schnor, Max Schroetter, Benno Stabernack, and Fritjof Steinert introduced a communication stack designed specifically to integrate FPGA-based Network-attached Accelerators (NAA) into HPC data centers. The proposed stack uses high-speed network connections to improve energy efficiency and adaptability. Experiments over 100 Gbps RoCEv2 achieved performance close to the theoretical upper limits. This approach offers a promising way to improve the efficiency of HPC applications, although further research is needed to explore potential enhancements and integrations [8].

In their 2022 publication, S. Varrette, Hyacinthe Cartiaux, Teddy Valette, and Abatcha Olloh present a detailed report on the University of Luxembourg's efforts to upgrade and strengthen its HPC networks. Merging InfiniBand and Ethernet-based networks significantly improved capacity and performance, and the resulting design consistently maintained high bisection bandwidth with only minor performance limitations. These solutions could serve as a model for other HPC centers seeking to improve their network infrastructures. Further research should prioritize additional scalability and optimization strategies [9].

The 2021 study by Yijia Zhang, Burak Aksar, O. Aaziz, B. Schwaller, J. Brandt, V. Leung, Manuel Egele, and A. Coskun introduces a Network-Data-Driven (NeDD) job allocation framework that seeks to improve HPC performance by taking real-time network performance data into account. NeDD monitors network traffic and assigns tasks to nodes according to their sensitivity to network conditions. Experiments showed an average reduction in execution time of 11% and a maximum reduction of 34% for parallel applications. Additional research should extend NeDD to complex network scenarios and integrate it into broader HPC environments [10].
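The core idea of network-aware allocation can be illustrated with a toy policy; this is not the NeDD algorithm, whose details are not given here. Assume each node carries a congestion score derived from monitoring data (a hypothetical metric, lower is quieter) and each job is tagged as network-sensitive or not. Sensitive jobs claim the least-congested nodes first; insensitive jobs take the most congested ones, leaving the middle of the range for later sensitive arrivals.

```python
from typing import Dict, List, Tuple

Job = Tuple[str, int, bool]  # (name, nodes needed, network-sensitive?)


def allocate(node_congestion: Dict[str, float], jobs: List[Job]) -> Dict[str, List[str]]:
    """Toy network-aware placement; congestion scores are hypothetical inputs."""
    free = sorted(node_congestion, key=lambda n: node_congestion[n])  # quietest first
    placement: Dict[str, List[str]] = {}
    # Stable sort: sensitive jobs first, otherwise preserving submission order.
    for name, count, sensitive in sorted(jobs, key=lambda job: not job[2]):
        if count < 1 or count > len(free):
            raise ValueError(f"cannot place {name!r} on {count} node(s)")
        if sensitive:
            placement[name], free = free[:count], free[count:]
        else:
            placement[name], free = free[-count:], free[:-count]
    return placement
```

A real scheduler would refresh the scores continuously and weigh them against queue wait time; the sketch only shows the ordering principle.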

In their 2021 study, T. Islam and Chase Phelps describe a course they created to teach students how to build scalable HPC systems, with an emphasis on parallel input/output (I/O). The curriculum addresses the challenges posed by extensive parallelism and heterogeneous architectures. Through hands-on training and real-world scenarios, the course gives students the skills and expertise needed to work with today's complex HPC systems. Additional research could assess how effectively these instructional techniques produce skilled HPC specialists [11].

The 2021 paper by Fulong Yan, Changchun Yuan, Chao Li, and X. Deng presents FOSquare, a novel interconnect topology for HPC systems. FOSquare uses Fast Optical Switches (FOS) and distributed fast flow control to improve performance, with the aim of optimizing bandwidth, latency, and power efficiency. Simulation results indicate that FOSquare reduces latency by over 10% compared with traditional Leaf-Spine topologies under realistic traffic. Future work should prioritize deploying FOSquare in real-world HPC environments and further improving its efficiency [12].

The 2021 editorial by Jifeng He, Chenggang Wu, Huawei Li, Yang Guo, and Tao Li introduces a special issue on energy-efficient design and dependability in HPC systems. The selected papers cover many subjects, including FPGA-based neural network accelerators and resource allocation frameworks. The issue highlights the importance of developing HPC systems that are both energy-efficient and reliable to meet the demands of growing applications. Further research should examine innovative approaches for improving the efficiency and dependability of HPC networks [13].

In 2021, Saptarshi Bhowmik, Nikhil Jain, Xin Yuan, and A. Bhatele used extensive system simulations to analyze how hardware design parameters affect the efficiency of GPU-based HPC platforms. The study examines the number of GPUs per node, the capacity of network links, and NIC scheduling policies. The findings suggest that these parameters significantly influence the efficiency of real-world HPC workloads. Additional research is required to fine-tune these parameters for better performance and efficiency in future HPC systems [14].

In a 2023 article, Mohak Chadha, Nils Krueger, Jophin John, Anshul Jindal, M. Gerndt, and S. Benedict explore the potential of WebAssembly (Wasm) for distributing HPC applications that use the Message Passing Interface (MPI). Their MPIWasm embedder executes Wasm code efficiently and supports high-performance network interconnects. The experimental results indicate that MPIWasm delivers performance similar to native execution while significantly reducing binary sizes. This approach offers a promising way to overcome the challenges of building container-based HPC applications. Future investigations should concentrate on improving Wasm for HPC environments [15].

In their 2021 study, Yunlan Wang, Hui Liu, and Tianhai Zhao present Hybrid-TF, a hybrid interconnection network topology that combines the advantages of direct and indirect networks. The topology is designed to match the communication patterns of HPC applications, reducing communication time and improving overall performance. Simulations with the NS-3 network simulator indicate that Hybrid-TF outperforms traditional topologies under various traffic patterns. Additional research is required to apply Hybrid-TF in real-world HPC systems and further improve its performance [16].

In a 2022 paper, E. Suvorova proposes using a unified, flexible router core to build the various networks within HPC systems. The strategy aims to eliminate variability across network segments and guarantee dependable Quality of Service (QoS). Because the design can be reconfigured dynamically, it adapts to the attributes of different data streams, reducing transmission overhead and network congestion. Future investigations should prioritize implementing this methodology in practical HPC systems and evaluating its effectiveness [17].

In 2022, P. Maniotis, L. Schares, D. Kuchta, and B. Karaçali analyzed the benefits of integrating co-packaged optics into high-radix switches built specifically for HPC and data center networks. The increased escape bandwidth enables richer network topologies with shorter paths between endpoints and fewer switches. Simulations show significant performance improvements and reduced execution times for large-scale applications. This strategy offers a promising way to increase future network capability, although further work is needed to mature the technology and integrate it into existing infrastructures [18].

In a 2022 paper, H. Lin, Xi-Zhang Hsu, Long-Yuan Wang, Yuan-Hung Hsu, and Y. Wang examine the thermal challenges of integrating large chiplets using Fan-out Embedded Bridge (FOEB) packages for HPC applications. They propose two solutions, thick Through Mold Via (TMV) structures and metal thermal interface material (TIM) treatments, both intended to improve thermal performance. The experimental results show that the FOEB structure with these enhancements passes reliability tests and meets its thermal performance targets. Additional research should focus on further improving heat management methods to achieve greater efficiency [19].

In a 2023 article, Nicholas Contini, B. Ramesh, Kaushik Kandadi Suresh, Tu Tran, Benjamin Michalowicz, M. Abduljabbar, H. Subramoni, and D. Panda introduce an MPI-based communication framework that improves the adaptability of HPC systems. The solution uses PCIe interconnects to enable message passing without requiring specialized system setups. Its designs reduce the need for explicit data movement, cutting point-to-point transfer latency by up to 50%. The technique is a promising method for integrating FPGAs into HPC systems, although further research is needed to improve the communication framework [20].

In 2022, Jinoh Kim, M. Cafaro, J. Chou, and A. Sim organized a workshop focused on scalable telemetry and analysis approaches for HPC and distributed systems. The program addresses the challenges of monitoring and analyzing diverse input streams from end systems, switches, and emerging network elements. The goal is to develop novel approaches that combine HPC and data science to improve performance, availability, reliability, and security. Further research should prioritize innovative methods for efficient data transmission and analysis in HPC environments [21].

In the paper "Overview of Kubernetes CNI Plugins Performance," Narūnas Kapočius analyzes the performance of nine commonly used CNI plugins, including Flannel, Calico, and Weave, in virtualized and physical data center environments. The study measures TCP and HTTP performance for each plugin on VMware ESXi and in a physical data center. Kapočius highlights significant performance differences that depend on the deployment environment, which helps in choosing suitable plugins for specific infrastructures: some plugins perform exceptionally well in virtualized systems, while others are better suited to physical setups. The author stresses that the particular demands of the deployment environment must be understood to make an informed selection. The study identifies scalability and performance constraints as the primary challenges and calls for further research on optimizing CNI plugins for different network conditions. Further investigations could explore integrating these plugins with new network technologies to improve overall performance and dependability, as well as how network policies and configurations affect the effectiveness of CNI plugins in diverse deployment scenarios [22].

"Performance Studies of Kubernetes Network Solutions" by Narūnas Kapočius assesses the efficiency of four CNCF-recommended CNI plugins (Flannel, Calico, Weave, and Cilium) in a real-world data center environment. The study measures TCP's delay and average transmission capacity for various Maximum Transmission Unit (MTU) sizes, combined network interfaces, and segmentation offloading settings. The results reveal significant discrepancies in performance among the plugins, with specific ones exhibiting higher flexibility in different network settings. A comprehensive analysis indicates that specific plugins may perform better depending on circumstances, such as different MTU sizes or network offloading strategies. Kapočius emphasizes the need for more investigation into network interface aggregation and MTU size optimization to enhance plugin performance. Further research should investigate the impact of evolving network standards and hardware developments on the functionality of CNI plugins. Moreover, the study suggests exploring the potential benefits of integrating these plugins with software-defined networking (SDN) technologies to improve network performance and scalability [

23].
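Part of why MTU matters for these plugins is encapsulation overhead: overlay modes wrap each pod packet in an extra header, shrinking the MTU left for pods. The sketch below assumes an IPv4 underlay, TCP without options (40 bytes of IP and TCP headers), and the commonly cited per-packet overheads of 50 bytes for VXLAN and 20 bytes for IP-in-IP; it ignores Ethernet framing, and real plugins may derive their MTU differently.

```python
# Assumed per-packet encapsulation overhead in bytes over an IPv4 underlay.
OVERHEAD = {"none": 0, "ipip": 20, "vxlan": 50}
IPV4_TCP_HEADERS = 40  # 20-byte IPv4 header + 20-byte TCP header, no options


def pod_mtu(underlay_mtu: int, encap: str) -> int:
    """MTU left for pod traffic after the encapsulation header."""
    return underlay_mtu - OVERHEAD[encap]


def tcp_mss(underlay_mtu: int, encap: str) -> int:
    """Largest TCP payload per pod packet."""
    return pod_mtu(underlay_mtu, encap) - IPV4_TCP_HEADERS


def payload_efficiency(underlay_mtu: int, encap: str) -> float:
    """TCP payload bytes per full-size underlay IP packet."""
    return tcp_mss(underlay_mtu, encap) / underlay_mtu


for mtu in (1500, 9000):
    for encap in OVERHEAD:
        print(f"MTU {mtu:>5} {encap:>5}: pod MTU {pod_mtu(mtu, encap)}, "
              f"MSS {tcp_mss(mtu, encap)}, efficiency {payload_efficiency(mtu, encap):.3f}")
```

Under these assumptions, a 1500-byte underlay with VXLAN leaves a 1450-byte pod MTU and a 1410-byte MSS (94% payload efficiency), whereas 9000-byte jumbo frames raise the same ratio to 99%, which is one reason larger MTUs can narrow the gap between overlay and non-overlay configurations.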

In their study "The Performance Analysis of Container Networking Interface Plugins in Kubernetes," Siska Novianti and Achmad Basuki evaluate several CNI plugins, including Flannel, Calico, Cilium, Antrea, and Kube-router. In their benchmarks, Kube-router achieves the highest throughput, exceeding 90% of the expected link bandwidth for TCP transfers. The analysis also compares the plugins' CPU and memory usage. The authors examine plugin performance across multiple network contexts and emphasize that plugins should be selected carefully for specific usage scenarios. Novianti and Basuki suggest that future research focus on improving inter-host communication and reducing the overhead of network virtualization, and that future studies explore new network technologies and protocols to improve the efficiency and scalability of plugins. The paper also proposes investigating the long-term impact of different CNI plugins on the stability and performance of large Kubernetes deployments [24].

Shixiong Qi, Sameer G. Kulkarni, and K. Ramakrishnan evaluate various open-source CNI plugins in the paper "Assessing Container Network Interface Plugins: Functionality, Performance, and Scalability," analyzing the overhead and constraints associated with them. The study emphasizes that overlay tunnel offload support is essential for plugins that use overlay tunnels to achieve optimal performance. It also examines how well the plugins scale with growing workloads and how efficiently they handle HTTP requests. The results imply that further research should improve data processing efficiency and reduce pod startup time. The authors stress the need to understand the trade-offs of CNI plugins to make informed decisions for specific network environments. Future research should prioritize new techniques that reduce overhead and improve the handling of larger workloads across deployment scenarios. The study also suggests integrating advanced network monitoring technologies to better understand and mitigate the performance constraints of different CNI plugins [25].

"Seamless Hardware-Accelerated Kubernetes Networking" is a scholarly article written by Mircea M. Iordache-Sica, Tula Kraiser, and O. Komolafe, introduces the HAcK architecture, which integrates hardware networking appliances to enhance the efficiency of Kubernetes in data centers. The article presents the HAcK LoadBalancer, a system that utilizes top-notch network appliances to accomplish the integrated administration of applications and infrastructure. The performance study in real-world data center scenarios shows significant improvements while recognizing challenges in smoothly integrating hardware acceleration. Additional research is required to broaden the range of network functions that can be supported by hardware acceleration. The authors suggest investigating the benefits of combining software-based solutions with hardware acceleration to achieve optimal efficiency. Furthermore, the study proposes to research the impact of different hardware configurations on the performance of CNI plugins. This will help identify the optimal setups for application requirements [

26].

T.P. Nagendra and R. Hemavathy's paper "Unlocking Kubernetes Networking Efficiency: Exploring Data Processing Units for Offloading and Enhancing Container Network Interfaces" examines the use of DPUs to offload networking tasks and improve the efficiency of Kubernetes. The paper analyzes prominent CNI plugins and demonstrates the benefits of DPUs through case studies. The authors call for additional research on DPU integration and its impact under different Kubernetes cluster requirements, and they highlight the potential of DPUs to improve network performance and dependability in various deployment settings. Future research should prioritize efficient ways to use DPUs and explore their interaction with emerging network technologies. The paper also proposes a cost-benefit analysis of deploying DPUs in large-scale Kubernetes environments to determine their feasibility and efficacy [27].

In "Understanding Container Network Interface Plugins: Design Considerations and Performance," Shixiong Qi, Sameer G. Kulkarni, and K. Ramakrishnan comprehensively assess various CNI plugins, analyzing the performance effects and constraints arising from network models, host protocol stacks, and iptables rules. The study suggests that, when choosing a CNI plugin, it is essential to consider the dominant communication type: within a single host or between multiple hosts. The paper also proposes further research to reduce overhead and improve the enforcement of network policies. The authors offer useful perspectives on the trade-offs of CNI plugins and their impact on overall network performance. Future studies could explore new methods for improving network policies and maximizing plugin performance in various environments, as well as the impact of different network security configurations on CNI plugins, so that strong security can be provided without compromising performance [28].
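The influence of iptables rules follows partly from how iptables evaluates a chain: rules are checked in order until one matches, so lookup cost grows with the number of rules, whereas hash-based structures (such as ipsets, or the eBPF maps some plugins use) look up a key in roughly constant time. The deliberately simplified model below counts comparisons under that assumption; it ignores real match semantics such as ports, protocols, and jumps between chains, and the Service addresses are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Rule = Tuple[str, str]  # (destination address, target): a heavily simplified rule


def linear_lookup(chain: List[Rule], dst: str) -> Tuple[Optional[str], int]:
    """First-match scan, as in an iptables chain; returns (target, comparisons)."""
    comparisons = 0
    for match_dst, target in chain:
        comparisons += 1
        if match_dst == dst:
            return target, comparisons
    return None, comparisons


def hashed_lookup(table: Dict[str, str], dst: str) -> Tuple[Optional[str], int]:
    """Single keyed lookup, standing in for an ipset- or eBPF-map-style table."""
    return table.get(dst), 1


# Hypothetical chain with one rule per Service IP.
chain = [(f"10.96.{i // 256}.{i % 256}", f"SVC-{i}") for i in range(5000)]
table = dict(chain)
```

With 5000 rules, a packet for the last Service needs 5000 comparisons in the linear model but one in the hashed model, which illustrates why rule count can dominate in large clusters.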

The study "A Comprehensive Performance Evaluation of Different Kubernetes CNI Plugins for Edge-based and Containerized Publish/Subscribe Applications" by Zhuangwei Kang, Kyoungho An, A. Gokhale, and Paul N Pazandak investigates the impact of Kubernetes network virtualization on Data Distribution Service (DDS) applications. The study assesses various CNI plugins on hybrid edge/cloud Kubernetes clusters, examining throughput, latency, and resource utilization. The findings provide valuable guidance for choosing a suitable network plugin for latency-sensitive applications. The authors also point to the need for further work on reducing virtualization overhead and emphasize investigating the unique requirements of edge computing environments and developing tailored solutions for them. Furthermore, the paper proposes exploring more sophisticated network optimization strategies to improve the efficiency and reliability of DDS applications on Kubernetes [29].

Ritik Kumar and M. Trivedi provide a comprehensive analysis of Kubernetes networking and CNI plugins in "Networking Analysis and Performance Comparison of Kubernetes CNI Plugins." The study benchmarks various plugins and compares them in terms of performance, cost, maintenance, and fault tolerance. The authors propose further investigation into multi-host communication and ingress management to improve network performance holistically, along with new approaches to improve the scalability and reliability of Kubernetes networking solutions. The research also highlights the importance of continuously monitoring and tuning CNI plugins to maintain the best possible network performance in large, complex setups [30].

A paper by Ayesha Ayub, Mubeena Ishaq, and Muhammad Munir presents a new CNI plugin for Multus that improves network performance by removing the need for additional plugin configurations. The study demonstrates significant efficiency gains for DPDK (Data Plane Development Kit) applications in cloud-native environments and highlights the need for further investigation into seamlessly integrating sophisticated networking functionality into CNI plugins. The authors also note the possible benefits of combining DPDK with other high-performance networking technologies. Additional research is required to assess how readily the proposed solution can be extended and adapted to various scenarios and to validate its practicality in real-world contexts [31].

Andrea Garbugli, Lorenzo Rosa, A. Bujari, and L. Foschini introduce KuberneTSN, a Kubernetes network plugin that enables time-sensitive communication. The study shows that KuberneTSN meets predictable deadlines and outperforms alternative overlay networks. Future research should focus on supporting more diverse application requirements and improving the scalability of time-sensitive networks. The authors suggest integrating KuberneTSN with additional real-time communication protocols to improve performance further, and combining it with sophisticated scheduling and resource management approaches to improve overall system performance [32].

The article by Youngki Park, Hyunsik Yang, and Younghan Kim investigates and compares the performance of various CNI plugins in OpenStack and Kubernetes environments. The study highlights key performance metrics and offers guidance for deploying containerized cloud applications. Further study is recommended to optimize CNI configuration and improve network performance in various contexts. The authors stress the importance of regularly monitoring and fine-tuning CNI parameters to ensure optimal performance. The study also suggests researching how different hardware configurations and virtualization technologies affect CNI plugin performance, with the aim of identifying the most efficient setups for given application requirements [33].

A paper by Francesco Lombardo, S. Salsano, A. Abdelsalam, Daniel Bernier, and C. Filsfils examines the integration of Segment Routing over IPv6 (SRv6) with the Calico CNI plugin. The study demonstrates the benefits of SRv6 in large-scale, distributed scenarios spanning many data centers and highlights its performance and features. Future research should focus on improving control plane architectures and expanding SRv6 support in Kubernetes environments. The authors suggest exploring new uses for SRv6 in other networking situations to fully exploit its strengths, and they propose investigating how alternative SRv6 configurations affect the efficiency and scalability of Kubernetes installations in diverse settings [34].

In the study "Impact of etcd deployment on Kubernetes, Istio, and application performance," Larsson, William Tarneberg, C. Klein, E. Elmroth, and M. Kihl explore the vital role of the etcd database in Kubernetes deployments. The study examines how etcd affects the performance of Kubernetes and Istio, emphasizing the need for a high-performance storage system. Further work is required on optimizing etcd setups and resolving unanticipated behaviors in cloud environments. The authors propose investigating new methods to improve the reliability and effectiveness of etcd in large-scale deployments, including integrating state-of-the-art storage technologies with etcd to improve performance and reliability in dynamic and demanding environments [35].

The paper by Yining Ou presents a new approach to improving CNI metrics for better network performance. By optimizing CNI settings, the study achieves significant improvements in network latency, bandwidth, and packet loss. The author suggests further work on calibrating CNI measurements to improve service levels in different cloud settings. Future research may focus on automated ways to optimize CNIs and simplify the management of container networks. The study also proposes investigating how varied workloads and network conditions affect the improved CNI metrics, to ensure they remain effective in diverse deployment scenarios [36].

The article by Nupur Jain, Vinoth Mohan, Anjali Singhai, Debashis Chatterjee, and Dan Daly showcases the application of P4 in developing a high-performance load balancer for Kubernetes. The study examines the challenges associated with scale, security, and network performance, highlighting the benefits of using P4 data planes. Future investigations should focus on extending P4-based solutions to more network functions and improving their integration with Kubernetes. The authors suggest exploring the benefits of combining P4 with other advanced networking technologies to maximize performance and scalability in various deployment scenarios. Moreover, the study proposes investigating the impact of different P4 configurations on the effectiveness of Kubernetes load balancers, to identify the optimal setups for particular application requirements [37].

The paper by D. Milroy et al. investigates integrating HPC workloads into Kubernetes. The study demonstrates advances in scaling MPI-based workloads and improving scheduling capabilities using the Fluence plugin. The authors propose further investigation into the efficiency of Kubernetes in high-performance computing (HPC) settings and into optimizing the scheduler's performance. The research also highlights the importance of understanding the specific requirements of HPC workloads in order to develop tailored solutions for orchestrating them efficiently in Kubernetes. Additional research is required on incorporating sophisticated resource management methods to improve the efficiency and scalability of HPC workloads in cloud-native systems [38].

In their article "Open-Cloud Computing Platform Design based on Virtually Dedicated Network and Container Interface," Ki-Hyeon Kim, Dongkyun Kim, and Yong-hwan Kim present a new Container Network Interface (CNI) that combines Software-Defined Networking (SDN) technology with Kubernetes, a significant advance in cloud computing. The study demonstrates substantial improvements in network performance and flexibility. The authors stress the need for further work on extending this approach to more cloud environments and improving network efficiency. They also suggest investigating the benefits of integrating advanced SDN capabilities with the new CNI to achieve the best possible performance and scalability. Furthermore, the study proposes examining the impact of different network configurations and virtualization technologies on the performance of the new CNI, to identify the optimal setups for particular application requirements [39].

The paper "Characterising resource management performance in Kubernetes," by Víctor Medel Gracia et al., investigates the performance of Kubernetes using a Petri net-based model. The study highlights the importance of efficient resource allocation and scheduling in adaptable cloud systems. Future research should focus on improving performance models and optimizing resource allocation in Kubernetes. The authors emphasize the significance of continuously monitoring and adjusting resource management systems to guarantee optimal performance. Additionally, the study proposes investigating the impact of different workload types and network conditions on the efficacy of Kubernetes resource management, to ensure its success in diverse deployment scenarios [40].

The research by Dinh Tam Nguyen et al. investigates integrating SR-IOV with cloud-native network functions (CNFs) in Kubernetes to improve the performance of the 5G core network. The study shows a 30% improvement in network speed for 5G applications using SR-IOV and CPU pinning. The authors propose further investigation of SR-IOV and other technologies that improve performance in cloud-native 5G deployments, and highlight the potential benefits of combining SR-IOV with other cutting-edge networking technologies to optimize performance and scalability across deployment scenarios. Further exploration of best practices for integrating SR-IOV and other performance-enhancing technologies with CNFs is needed to ensure that these technologies are feasible, usable, and effective [41].

This paper is organized as follows. The next sections provide relevant information about all background technologies covered in this paper—Kubernetes, CNIs, and related technologies. Then, we’ll describe the problem, our experimental setup, and study methodology, followed by results and the related discussion. In the last sections of the paper, we’ll go over some future research directions and conclusions we reached while preparing this paper.

4. Experimental Setup and Study Methodology

Our setup consisted of multiple x86 servers with network interfaces ranging from 1 Gbit/s to 10 Gbit/s in different configurations. We also used a combination of copper and optical connections, various tuned optimization profiles, and different simulated packet sizes to check whether a given tuned profile influences real-life performance. From a performance standpoint, we measured bandwidth and latency, the two metrics that matter most across workload types; HPC workloads are known to be sensitive to one or both of them. We also measured the performance of the physical network, without any CNI in the path, so that we could quantify the actual overhead CNIs add to these metrics. At this point, we realized that manual testing would pose many challenges, so we looked for ways to make testing more efficient and reproducible. Manual measurement is inefficient and requires a substantial time commitment. Establishing the testing infrastructure, adjusting the CNIs, executing the tests, and documenting the outcomes manually is time-consuming and labor-intensive, particularly in large or complex systems. Not only does this slow down the evaluation process, but it also increases the probability of errors and inconsistencies.
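Comparing each CNI against the physical baseline amounts to computing a relative overhead. A minimal Python sketch, assuming the overhead is expressed as a percentage of the physical value (the function names are hypothetical): for bandwidth a lower CNI value is worse, whereas for latency a higher value is worse, so the sign of the difference is flipped between the two.

```python
def bandwidth_overhead_pct(physical: float, cni: float) -> float:
    """Share of the physical bandwidth lost when traffic goes through a CNI (%)."""
    if physical <= 0:
        raise ValueError("physical bandwidth must be positive")
    # Positive result: the CNI delivers less bandwidth than the physical path.
    return 100.0 * (physical - cni) / physical


def latency_overhead_pct(physical: float, cni: float) -> float:
    """Extra latency added by a CNI relative to the physical path (%)."""
    if physical <= 0:
        raise ValueError("physical latency must be positive")
    # Positive result: the CNI is slower than the physical path.
    return 100.0 * (cni - physical) / physical
```

Expressing both metrics as "percent worse than physical" keeps the comparison on one scale regardless of link speed.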

Our automated methodology [54] ensures that every test is conducted under identical conditions, resulting in a degree of consistency and reproducibility that is difficult to attain manually. This increases the reliability and comparability of outcomes, which is crucial for making well-informed decisions about the most suitable CNI for a given workload. Moreover, automation significantly reduces the time and effort needed to carry out these tests. Tools like Ansible can streamline the deployment, configuration, and testing procedures, enabling quicker iterations and more frequent testing cycles. This efficiency makes the evaluation procedure more flexible and makes it easier to include new CNIs and updates.

Furthermore, manual testing can be resource-intensive, frequently requiring specialized expertise and substantial human effort to carry out efficiently. An automated, orchestrated testing framework, on the other hand, allows for a more inclusive evaluation process, making it easier for a broader range of people to participate, including smaller enterprises and individual engineers. Offering a concise and user-friendly method for assessing CNIs enhances transparency and promotes good network configuration and optimization practices.

In our pursuit of better computing platforms, we often neglect to step back and check whether our current environments could be optimized first. Part of this paper therefore tests some basic optimization options and examines how they affect the network performance of typical computing platforms.

Before we dig into the tests and their results, let us establish some basic terminology. In this paper, we use three basic terms: test optimization, test configuration, and test run. A test optimization is a set of optimization changes applied to both the server and the client side of a test. A test configuration is the networking setup being tested. A test run combines a test optimization, a test configuration, and test settings such as MTU, packet size, and test duration. First, let us define our test optimizations.

The first test optimization is not really an optimization; it gives us a baseline starting point. This level is called “default_settings,” and, as the name suggests, we make no optimization changes for it. It establishes the baseline performance used as a reference for the other optimization levels.

The second optimization level is called “kernel_optimizations.” At this level, we introduce various kernel-level optimizations to the system. The Linux kernel can be a significant network performance bottleneck if not configured properly, starting with the send and receive buffers. The default maximum receive and send buffer sizes for all protocols, “net.core.rmem_max” and “net.core.wmem_max,” are 212992 bytes (208 KiB), which can significantly limit performance, especially in high-latency or high-bandwidth environments. We increased these values to 16777216 bytes (16 MiB). The second kernel-level optimization is TCP window scaling (“net.ipv4.tcp_window_scaling”), which applies a scaling factor to the TCP receive window so that it can grow beyond 65,535 bytes. Window scaling is already enabled by default on most modern Linux kernels; this level enables it explicitly. Next, we disable TCP Selective Acknowledgement (TCP SACK). Disabling TCP SACK can slightly reduce CPU overhead, but it should be used carefully, as it can cause a significant loss of network performance on lossy networks. The last kernel-level optimization we introduce is increasing the SYN queue size from the default of 128 to 4096 entries. This setting is unlikely to affect our test environment, but it can significantly improve performance in environments where a single server handles many connections.
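The kernel-level changes above map onto files under /proc/sys. The following Python sketch collects them in one place and can print or apply them; it is a hypothetical helper, not the authors' Ansible automation. Two points are assumptions: the SYN-queue key is taken to be net.ipv4.tcp_max_syn_backlog, since the text does not name the sysctl, and the bandwidth-delay-product check only illustrates why larger buffers matter. Note that net.core.rmem_max and net.core.wmem_max cap buffer sizes that applications request explicitly (for example via SO_RCVBUF), while TCP autotuning is bounded separately by net.ipv4.tcp_rmem and net.ipv4.tcp_wmem, which this sketch leaves untouched.

```python
from pathlib import Path

# Values for the "kernel_optimizations" level as described in the text.
KERNEL_OPTIMIZATIONS = {
    "net.core.rmem_max": 16 * 1024 * 1024,  # max receive buffer: 16 MiB
    "net.core.wmem_max": 16 * 1024 * 1024,  # max send buffer: 16 MiB
    "net.ipv4.tcp_window_scaling": 1,       # allow windows > 65,535 bytes
    "net.ipv4.tcp_sack": 0,                 # disable selective ACKs
    "net.ipv4.tcp_max_syn_backlog": 4096,   # SYN queue (assumed key name)
}

DEFAULT_CORE_MAX = 212992  # default rmem_max/wmem_max in bytes (208 KiB)


def sysctl_path(key: str) -> Path:
    """Map a dotted sysctl key to its file under /proc/sys."""
    return Path("/proc/sys") / key.replace(".", "/")


def bdp_bytes(bandwidth_bps: float, rtt_s: float) -> int:
    """Bandwidth-delay product: bytes in flight needed to keep the link full."""
    return round(bandwidth_bps * rtt_s / 8)


def apply_settings(settings: dict, dry_run: bool = True) -> list:
    """Describe (and, unless dry_run, write) each setting; writing needs root."""
    actions = []
    for key, value in settings.items():
        path = sysctl_path(key)
        actions.append(f"{path} = {value}")
        if not dry_run:
            path.write_text(f"{value}\n")
    return actions


if __name__ == "__main__":
    for line in apply_settings(KERNEL_OPTIMIZATIONS):
        print(line)
    # 10 Gbit/s at a 1 ms RTT needs about 1.25 MB in flight, well above the
    # 208 KiB default that caps explicitly requested socket buffers.
    need = bdp_bytes(10e9, 0.001)
    print(need, need > DEFAULT_CORE_MAX)
```

Keeping the dry run as the default makes it safe to inspect the planned changes before applying them on a test host.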

The third optimization level is called “nic_optimizations.” At this level, we introduce several network interface-level optimizations. The first important setting is enabling Generic Receive Offload (GRO). GRO coalesces multiple incoming packets into a larger one before passing them to the networking stack, improving performance by reducing the number of packets the CPU needs to process. The second important interface-level optimization is enabling TCP Segmentation Offload (TSO). Without TSO, the CPU handles the segmentation of outgoing data into packets; enabling it offloads that work to the network interface card and reduces the load on the CPU. This setting could significantly improve performance in our tests, since some test runs deliberately force segmentation. The last interface-level change we make is to increase the receive and transmit ring buffers from the default of 256 to 4096 descriptors. We don't expect this setting to have a significant impact on the test results, as it will probably only affect performance during bursts of network traffic.
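The interface-level changes correspond to standard ethtool operations: -K toggles offload features such as gro and tso, and -G sets ring sizes (ethtool -g shows the hardware maximum). A minimal Python sketch that builds these commands, assuming a hypothetical interface name eth0; by default it only prints them, since applying them requires root, and some drivers reject or cap the requested ring size.

```python
import shlex
import subprocess

IFACE = "eth0"  # hypothetical interface name; replace with the NIC under test


def nic_optimization_commands(iface: str, ring_size: int = 4096) -> list:
    """Build ethtool invocations for the "nic_optimizations" level."""
    return [
        # Enable Generic Receive Offload and TCP Segmentation Offload.
        ["ethtool", "-K", iface, "gro", "on", "tso", "on"],
        # Grow the receive and transmit rings (driver limits still apply).
        ["ethtool", "-G", iface, "rx", str(ring_size), "tx", str(ring_size)],
    ]


def run(commands: list, dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # needs root and ethtool installed


if __name__ == "__main__":
    run(nic_optimization_commands(IFACE))
```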

These are the only custom optimization levels used in our tests. The remaining levels are profiles built into the tuned service available on Linux distributions: accelerator-performance, hpc-compute, latency-performance, network-latency, and network-throughput.
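Taken together, this gives eight optimization levels. A short Python sketch of how the tuned-based levels could be selected with tuned-adm, the command-line front end of the tuned service; the constant and function names are hypothetical, and applying the three custom levels is left to the automation described earlier.

```python
import shlex

# Custom levels described in the text.
CUSTOM_LEVELS = ("default_settings", "kernel_optimizations", "nic_optimizations")

# Profiles shipped with the tuned service.
TUNED_PROFILES = (
    "accelerator-performance",
    "hpc-compute",
    "latency-performance",
    "network-latency",
    "network-throughput",
)

OPTIMIZATION_LEVELS = CUSTOM_LEVELS + TUNED_PROFILES


def tuned_commands(profile: str) -> list:
    """Switch to a tuned profile, then report which profile is active."""
    if profile not in TUNED_PROFILES:
        raise ValueError(f"{profile!r} is not one of the tuned profiles used here")
    return [["tuned-adm", "profile", profile], ["tuned-adm", "active"]]


if __name__ == "__main__":
    for cmd in tuned_commands("network-throughput"):
        print(shlex.join(cmd))
```

Checking the active profile after switching guards against a run silently executing under the previous profile.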

Let us now look at the test configurations used during our testing. We tested five network setups: 1-gigabit, 10-gigabit, 10-gigabit-lag, fiber-to-fiber, and local. As the names suggest, 1-gigabit is a test conducted over a 1 Gbit/s link between the server and the client, and 10-gigabit is the same test over a 10 Gbit/s link. 10-gigabit-lag is a test conducted over 10 Gbit/s links aggregated into a link aggregation group (LAG). Fiber-to-fiber is a test conducted over a fiber-optic connection between the server and the client. Finally, local is a test in which the server and the client run on the same physical machine.

Alongside these optimization levels and configurations, each test run included a few test settings: two MTU values, 1500 and 9000 bytes, and five packet sizes, 64, 512, 1472, 9000, and 15000 bytes. Finally, all tests were conducted separately with the TCP and UDP protocols.
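The MTU and packet-size settings interact with protocol headers in a way that helps when reading the results. The sketch below assumes IPv4 without options, TCP with the 12-byte timestamp option, and standard Ethernet framing overhead (14-byte header, 4-byte FCS, 8-byte preamble and start delimiter, 12-byte inter-frame gap); it ignores any extra encapsulation added by overlay CNIs, which would lower these ceilings further. Under these assumptions, 1472 bytes is exactly the largest UDP payload that fits in a 1500-byte MTU, while the 9000- and 15000-byte sizes must be fragmented (UDP) or segmented (TCP) even at MTU 9000.

```python
import math

IPV4_HEADER = 20                # bytes, no IP options (assumption)
UDP_HEADER = 8
TCP_HEADER = 20 + 12            # base header + timestamp option (assumption)
ETH_OVERHEAD = 14 + 4 + 8 + 12  # header + FCS + preamble/SFD + inter-frame gap


def max_udp_payload(mtu: int) -> int:
    """Largest UDP payload that fits in one IPv4 packet of the given MTU."""
    return mtu - IPV4_HEADER - UDP_HEADER


def udp_fragments(payload: int, mtu: int) -> int:
    """Number of IPv4 fragments needed to carry one UDP datagram."""
    ip_payload = payload + UDP_HEADER
    max_data = mtu - IPV4_HEADER
    if ip_payload <= max_data:
        return 1
    # Every fragment except the last must carry a multiple of 8 bytes.
    step = max_data // 8 * 8
    return 1 + math.ceil((ip_payload - max_data) / step)


def tcp_goodput_ceiling_gbps(link_gbps: float, mtu: int) -> float:
    """Upper bound on TCP payload rate after per-frame header overhead."""
    mss = mtu - IPV4_HEADER - TCP_HEADER
    return link_gbps * mss / (mtu + ETH_OVERHEAD)


if __name__ == "__main__":
    for mtu in (1500, 9000):
        frags = {size: udp_fragments(size, mtu) for size in (64, 512, 1472, 9000, 15000)}
        print(mtu, max_udp_payload(mtu), frags)
        print(f"  10 Gbit/s TCP ceiling: {tcp_goodput_ceiling_gbps(10, mtu):.2f} Gbit/s")
```

With these assumptions, the TCP payload ceiling on a 10 Gbit/s link is about 9.41 Gbit/s at MTU 1500 and about 9.90 Gbit/s at MTU 9000, which gives a reference point for the physical baselines.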

This paper focuses on the results of using our methodology. Specifically, bandwidth and latency were analyzed across multiple Kubernetes CNIs, tuned performance profiles, MTU sizes, and different Ethernet standards/interfaces. Let's now examine the results to reach some conclusions.
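The dimensions listed in this section multiply quickly. A minimal Python sketch that enumerates a full test matrix, assuming every combination is run for each CNI (the text does not state whether any combinations were skipped) and leaving out test duration, whose value is not given here; the class and function names are hypothetical. A physical baseline can be modeled by passing a pseudo-CNI name such as "physical".

```python
from dataclasses import dataclass
from itertools import product
from typing import Iterable, Iterator

OPTIMIZATIONS = (
    "default_settings", "kernel_optimizations", "nic_optimizations",
    "accelerator-performance", "hpc-compute", "latency-performance",
    "network-latency", "network-throughput",
)
CONFIGURATIONS = ("1-gigabit", "10-gigabit", "10-gigabit-lag", "fiber-to-fiber", "local")
MTUS = (1500, 9000)
PACKET_SIZES = (64, 512, 1472, 9000, 15000)
PROTOCOLS = ("tcp", "udp")


@dataclass(frozen=True)
class BenchmarkRun:
    """One test run: optimization level, configuration, and test settings."""
    cni: str
    optimization: str
    configuration: str
    mtu: int
    packet_size: int
    protocol: str


def run_matrix(cnis: Iterable[str]) -> Iterator[BenchmarkRun]:
    """Yield every combination of CNI and the dimensions listed above."""
    for cni, opt, cfg, mtu, size, proto in product(
        cnis, OPTIMIZATIONS, CONFIGURATIONS, MTUS, PACKET_SIZES, PROTOCOLS
    ):
        yield BenchmarkRun(cni, opt, cfg, mtu, size, proto)
```

If every combination is run, a single CNI already requires 8 × 5 × 2 × 5 × 2 = 800 test runs, which illustrates why automating the process pays off.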

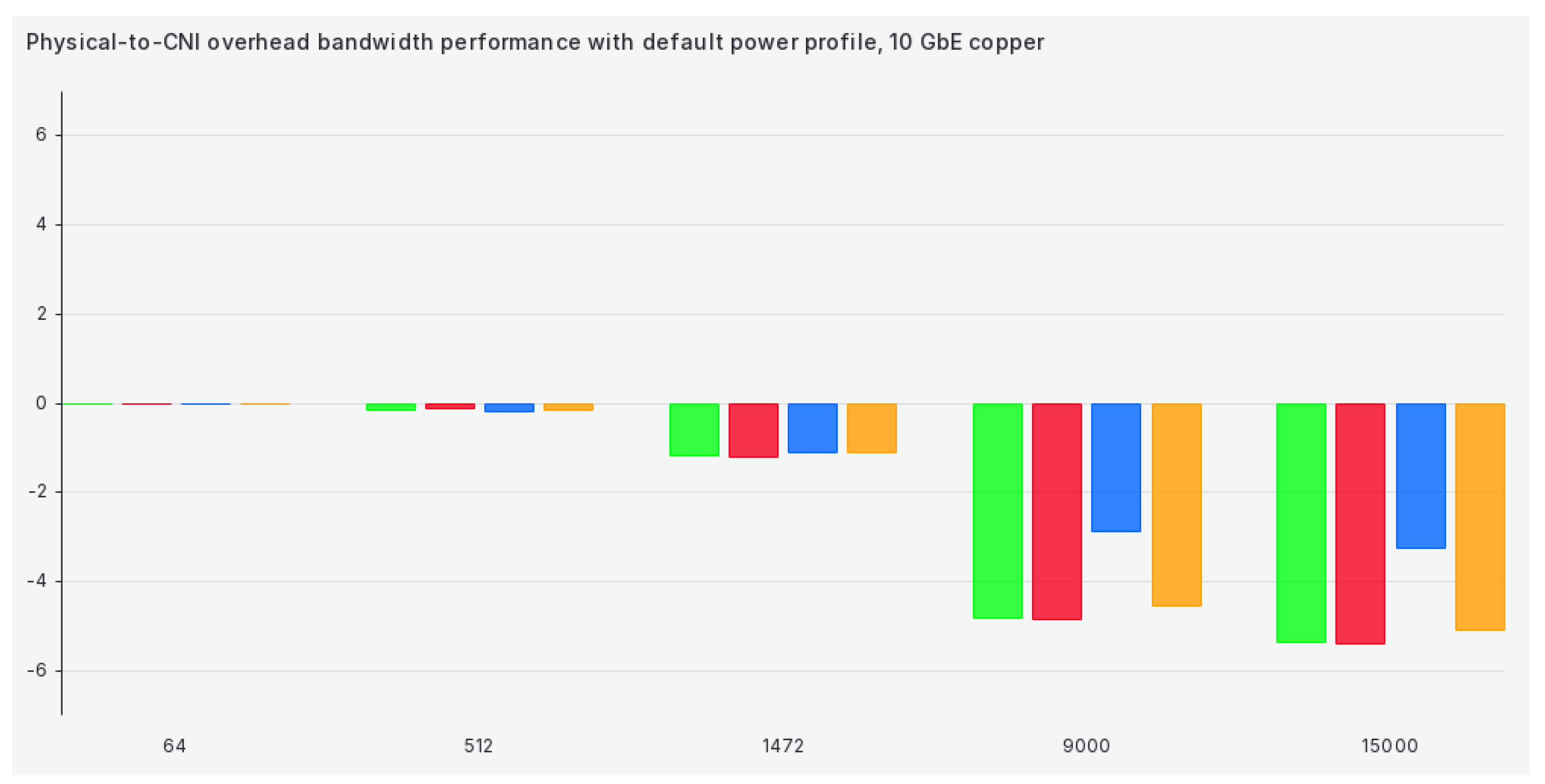

Figure 2.

Physical to CNI comparison for TCP bandwidth in default tuned performance profile.


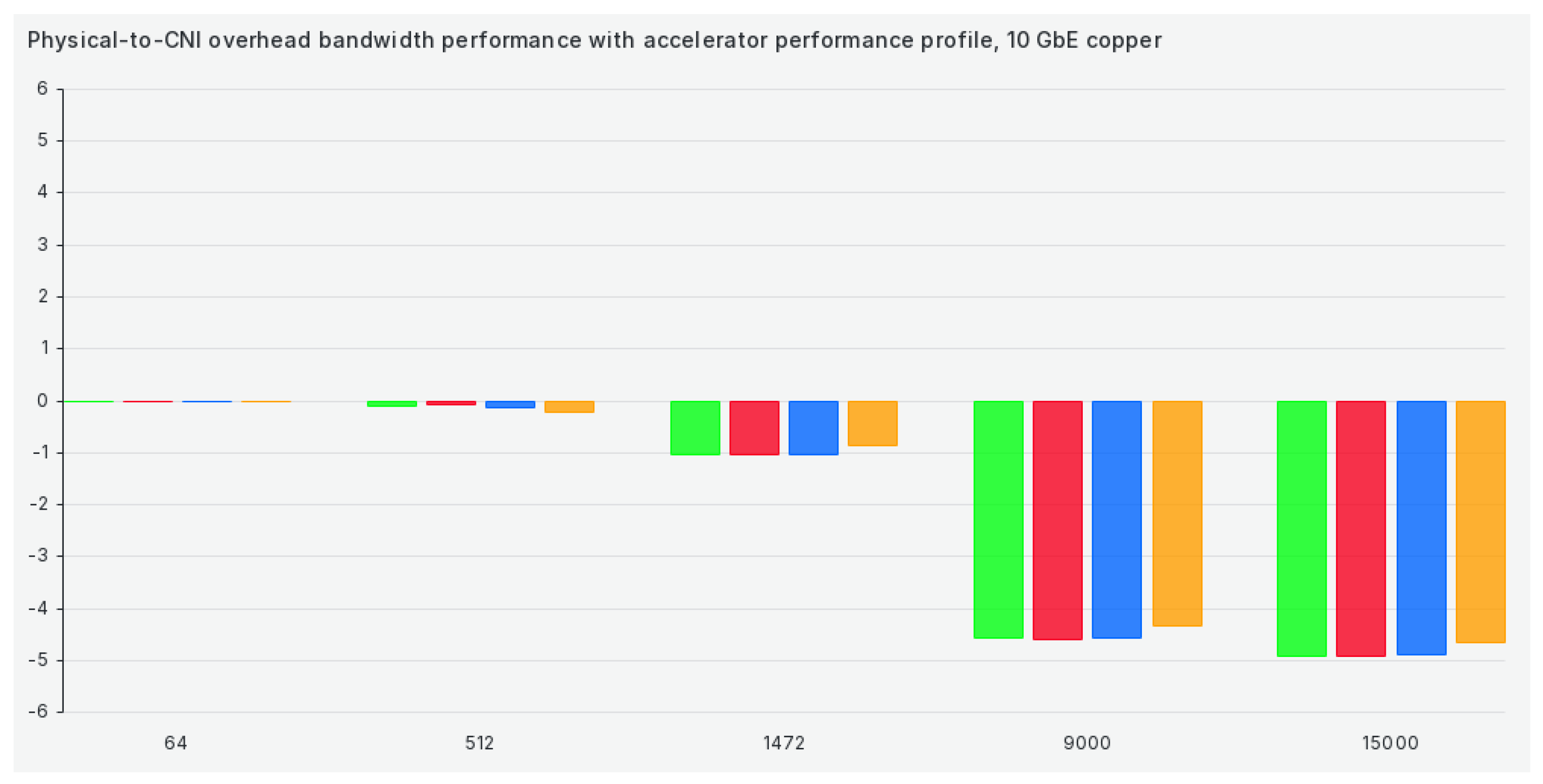

Figure 3.

Physical to CNI comparison for TCP bandwidth in the accelerator-performance profile.

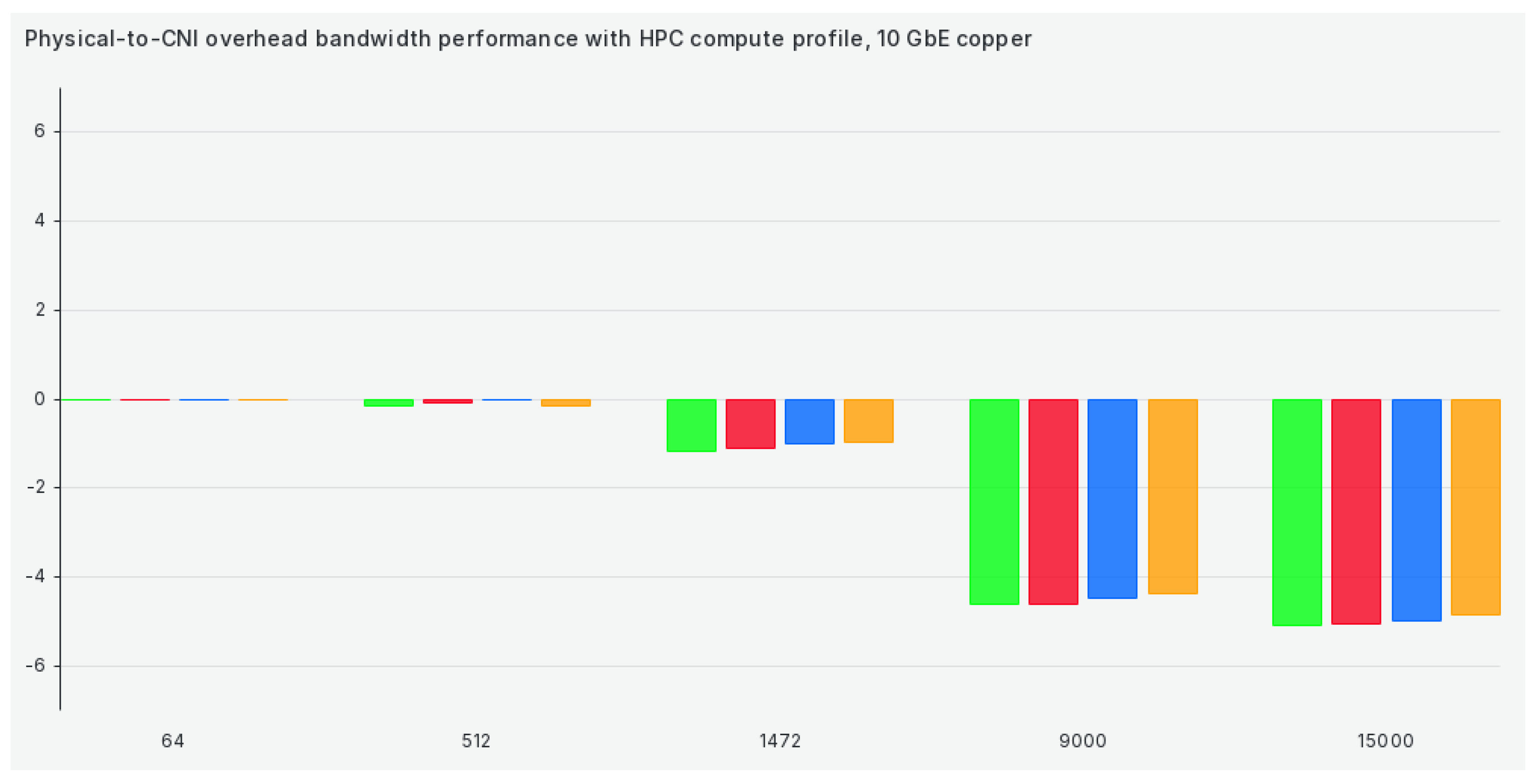

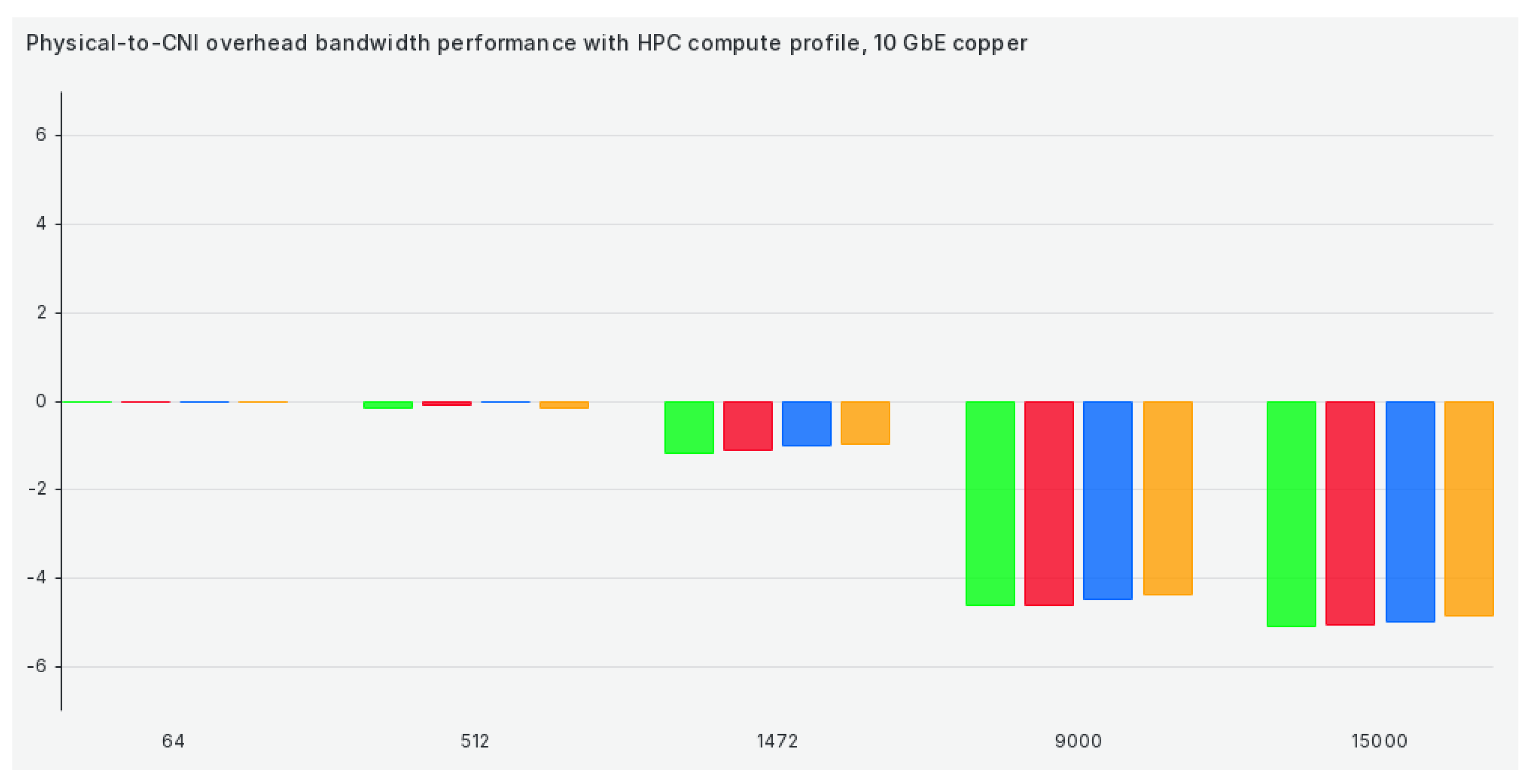

Figure 4.

Physical to CNI comparison for TCP bandwidth in the hpc-compute performance profile.


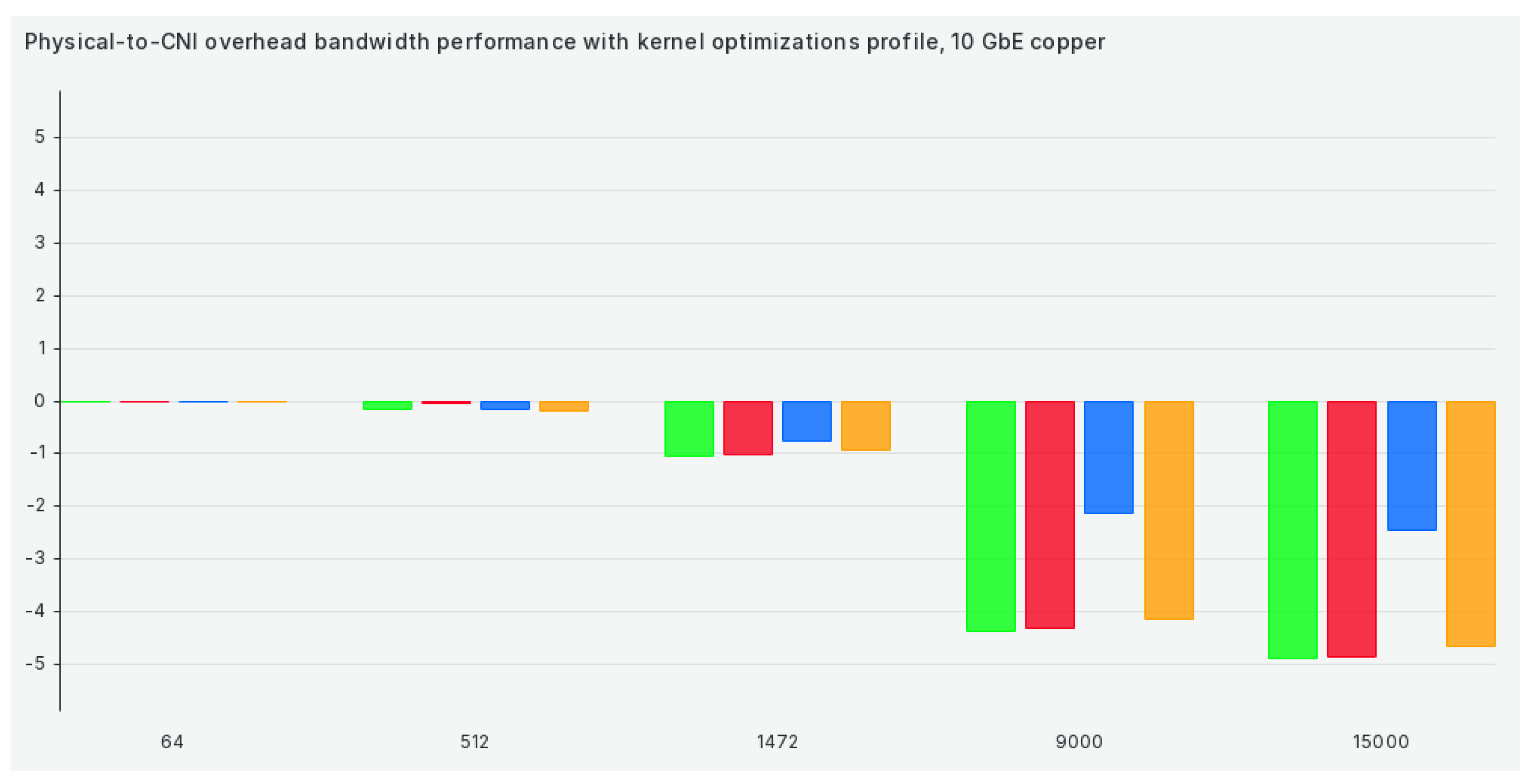

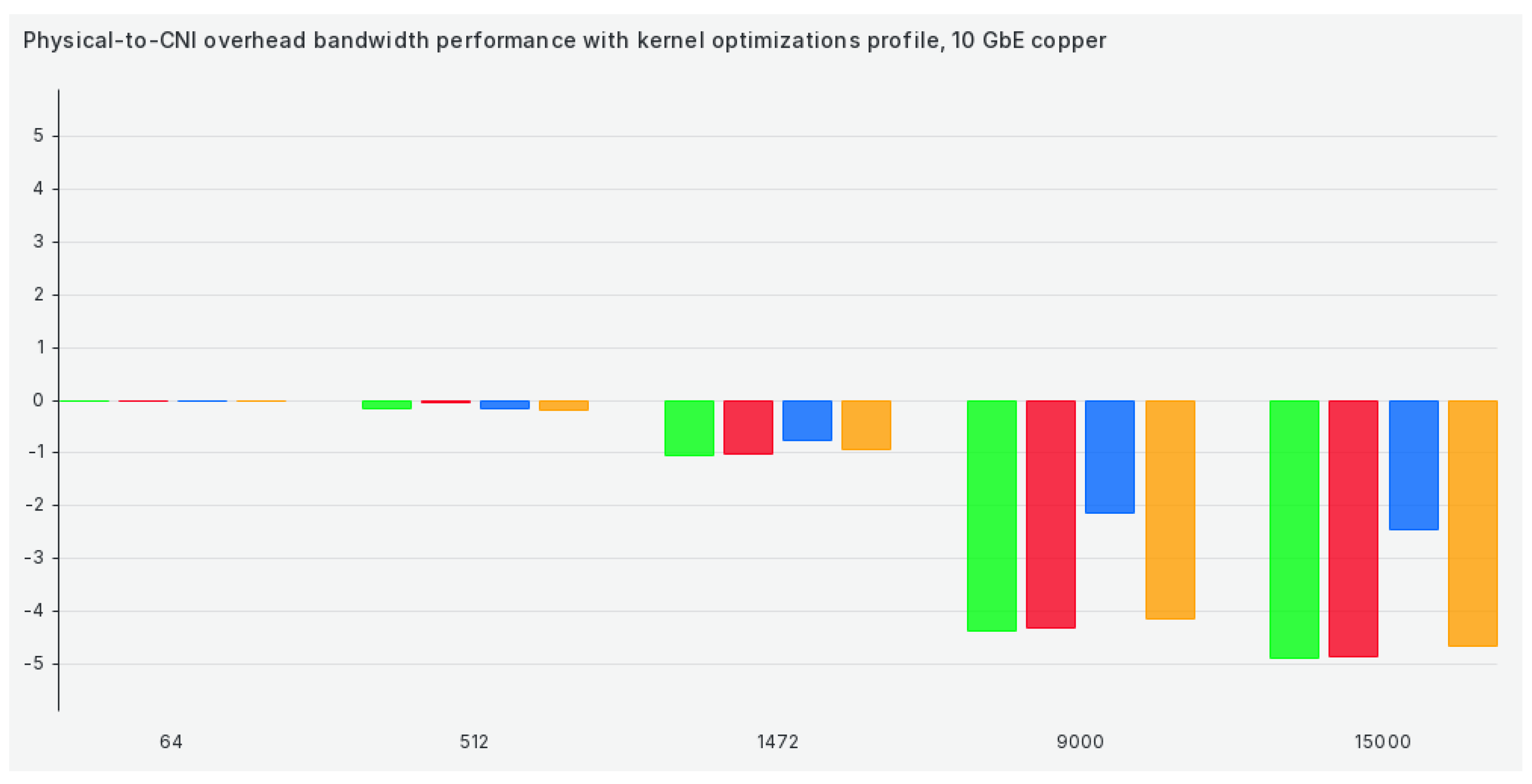

Figure 5.

Physical to CNI comparison for TCP bandwidth in kernel optimization performance profile.


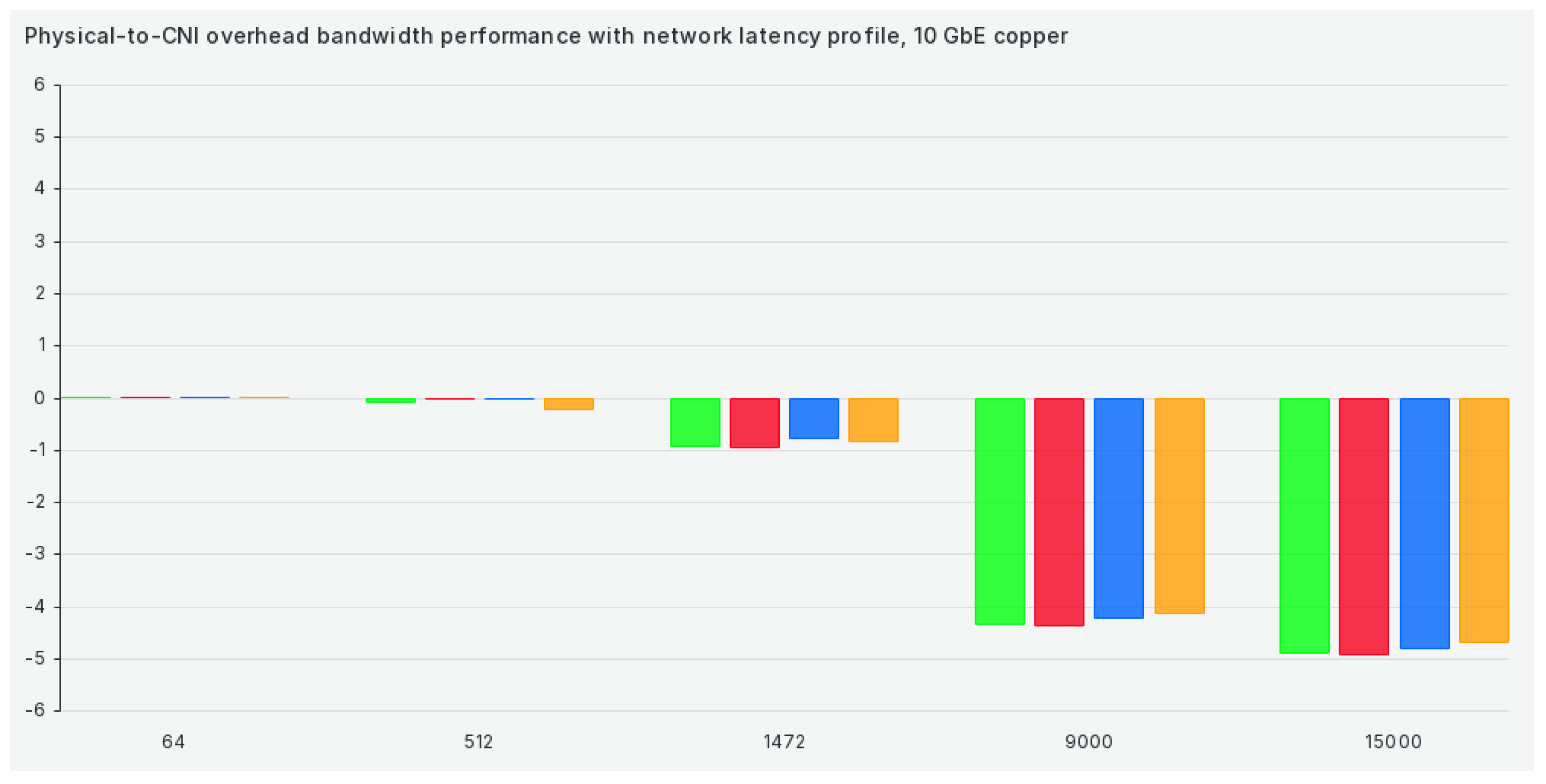

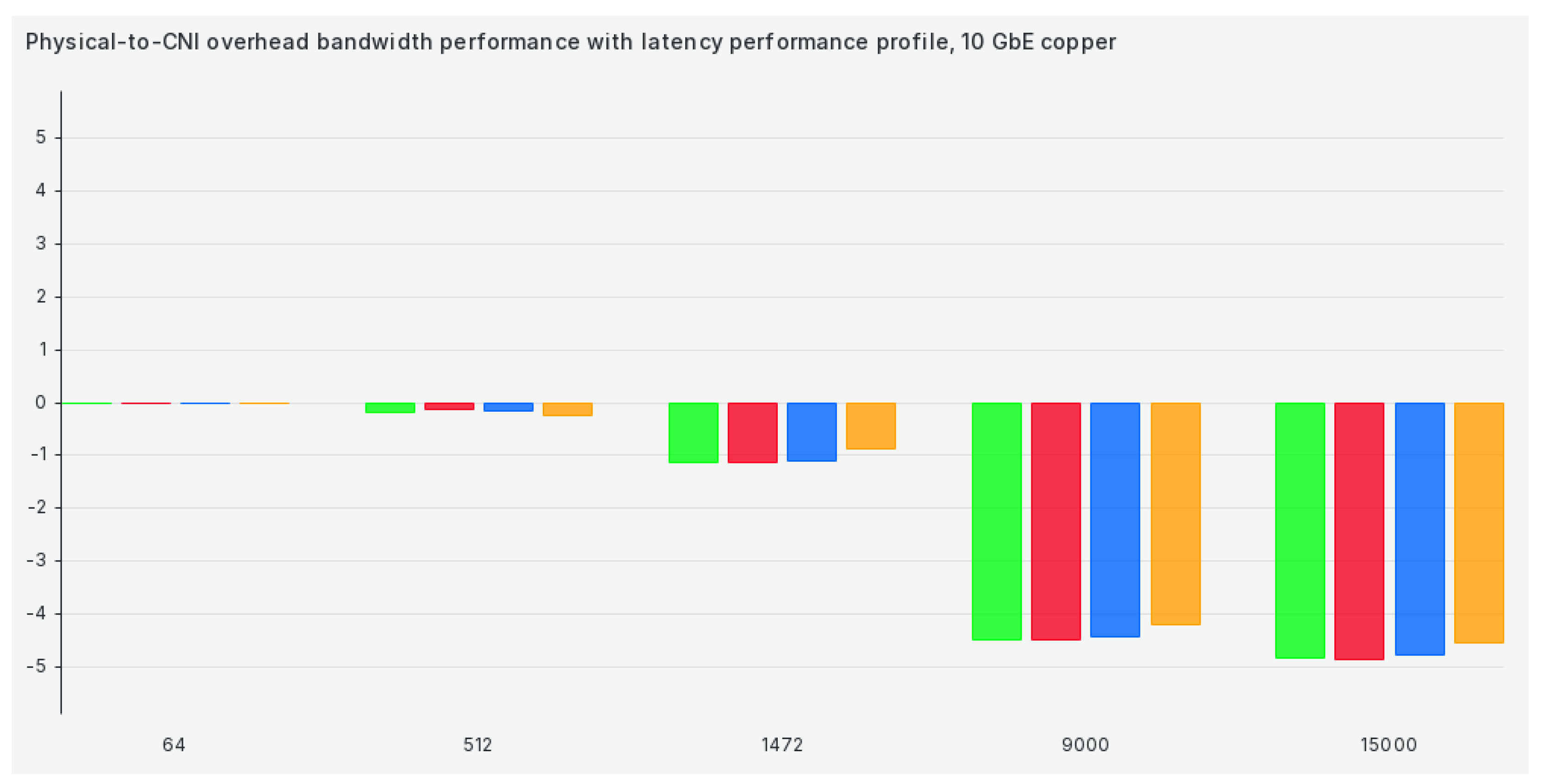

Figure 6.

Physical to CNI comparison for TCP bandwidth in the latency performance profile.


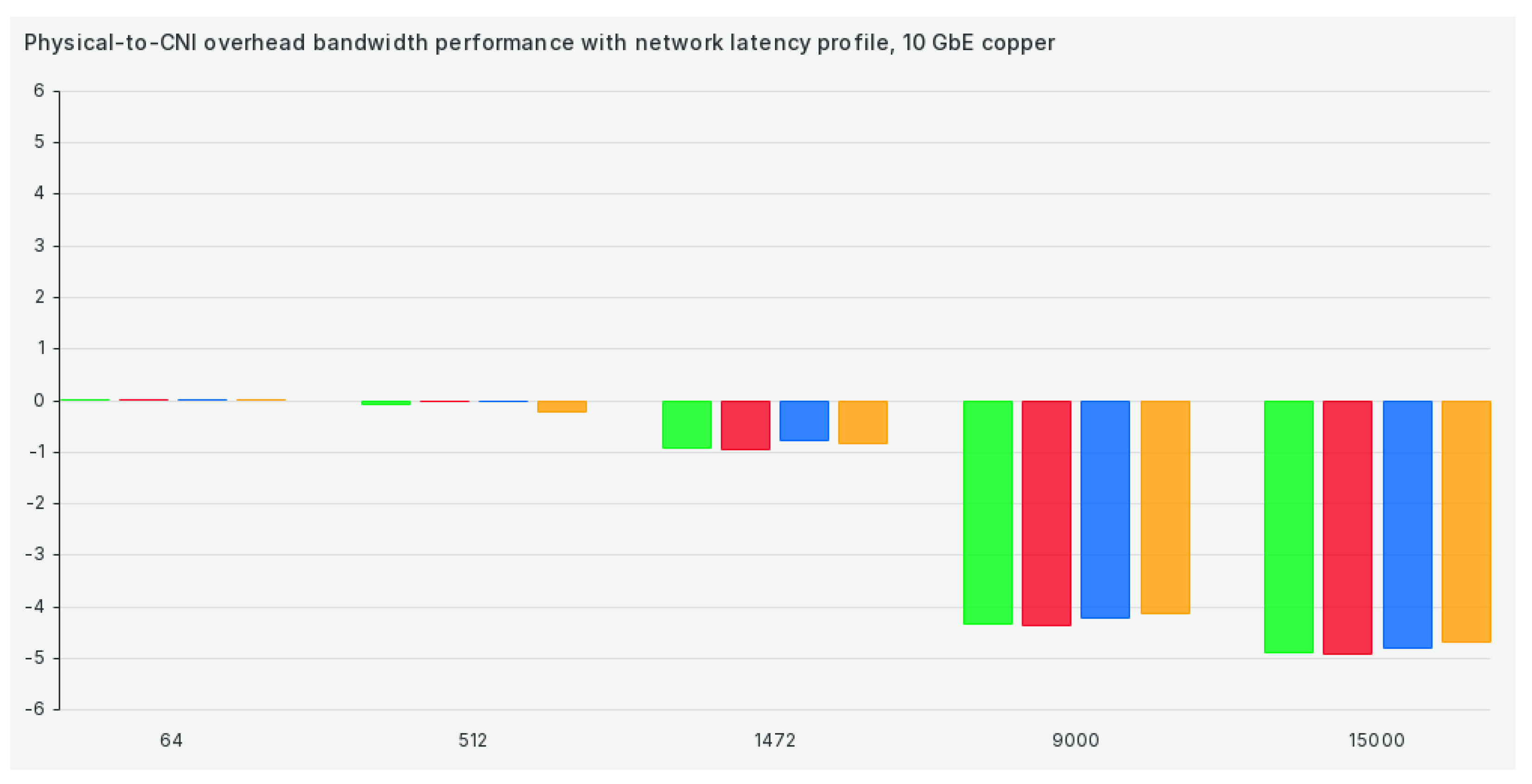

Figure 7.

Physical to CNI comparison for TCP bandwidth in network latency performance profile.


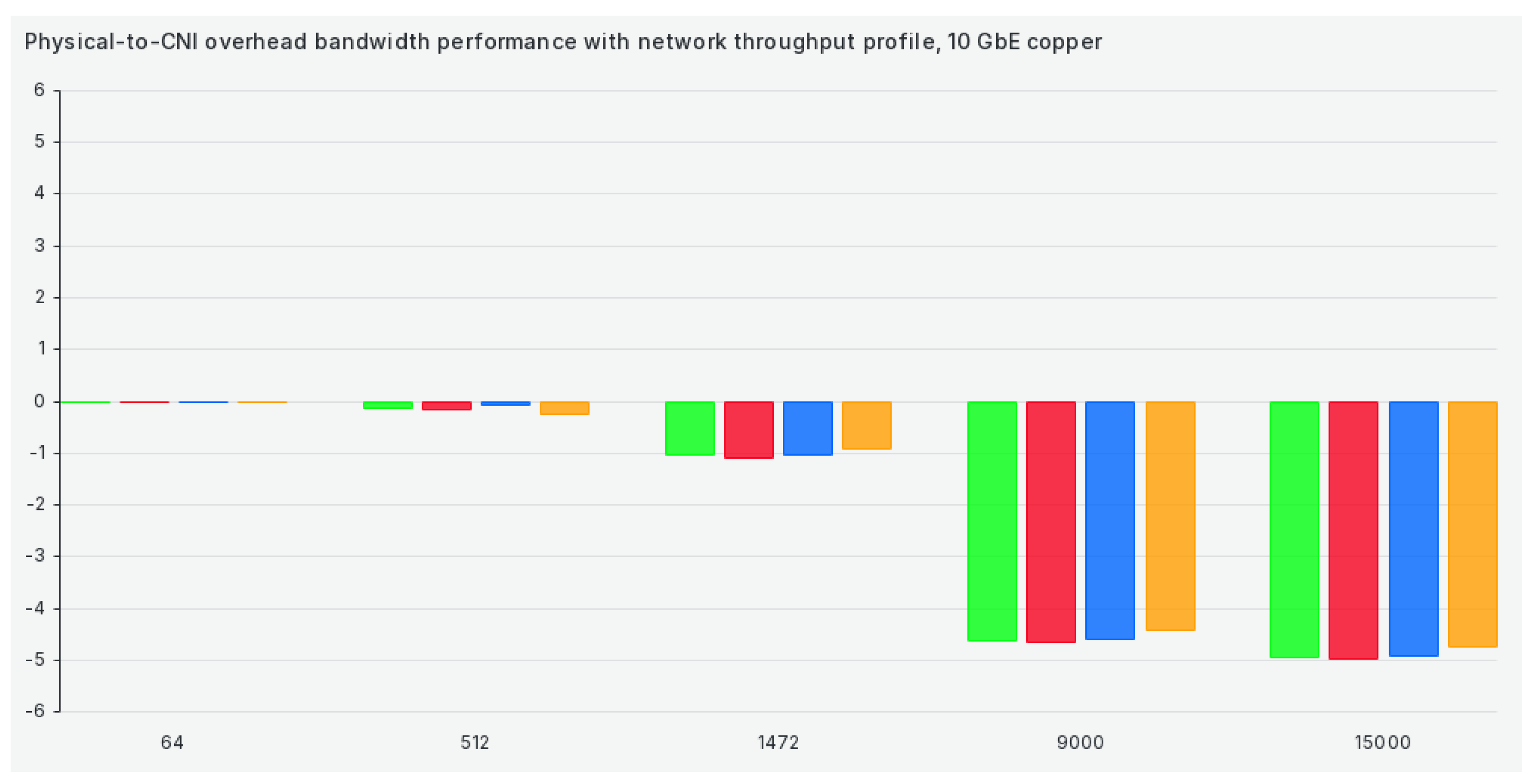

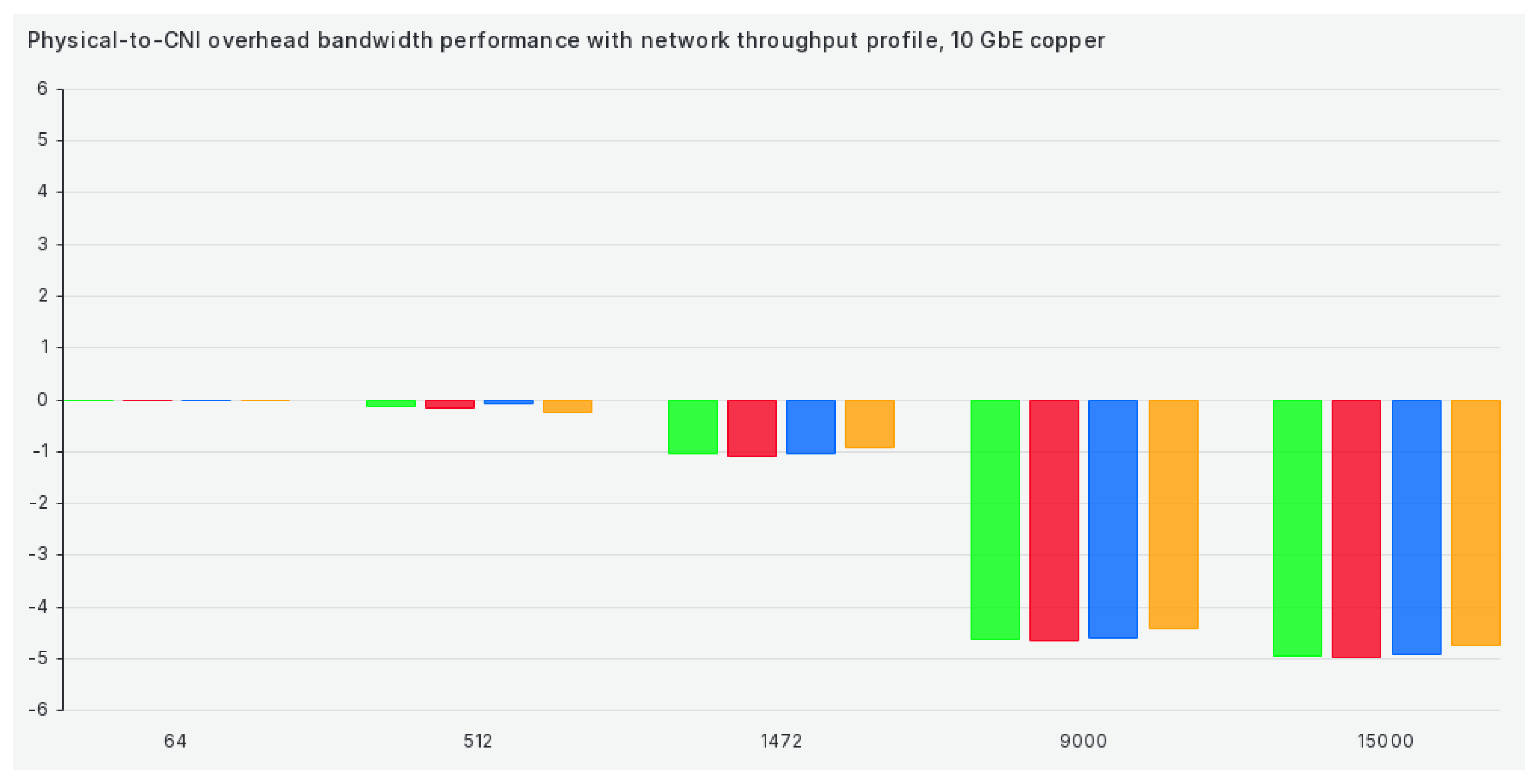

Figure 8.

Physical to CNI comparison for TCP bandwidth in network throughput performance profile.


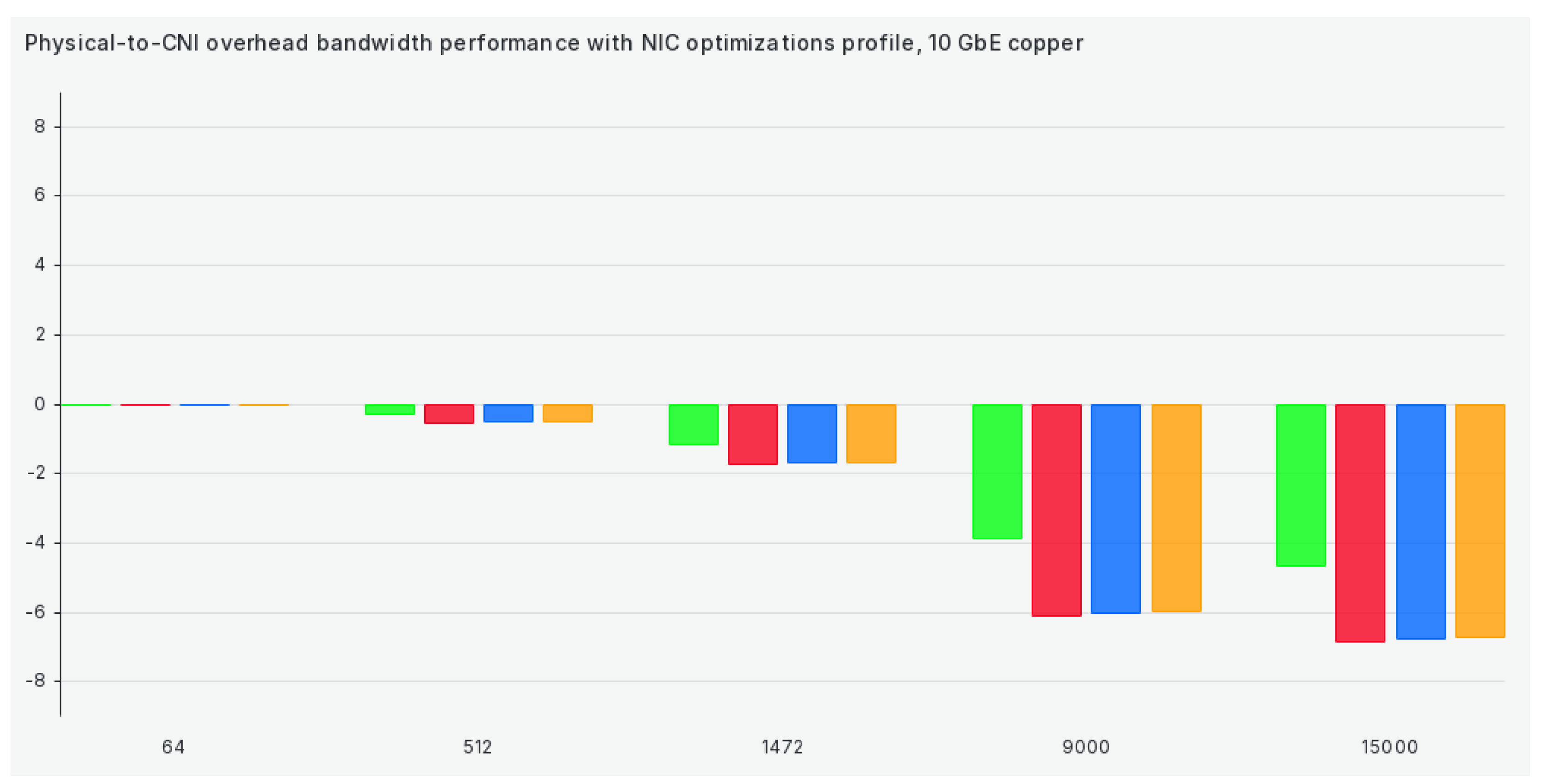

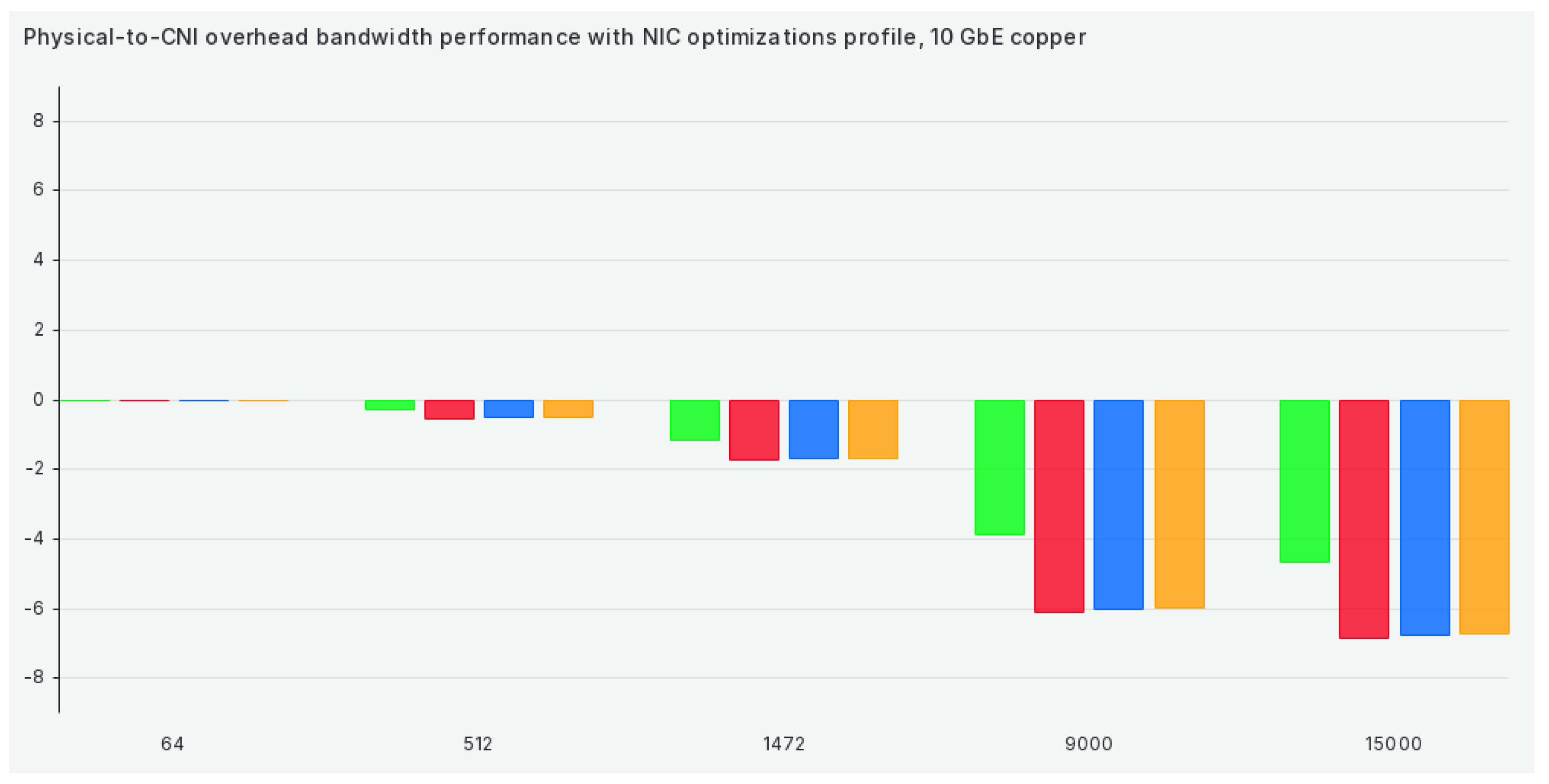

Figure 9.

Physical to CNI comparison for TCP bandwidth in the nic-optimization performance profile.


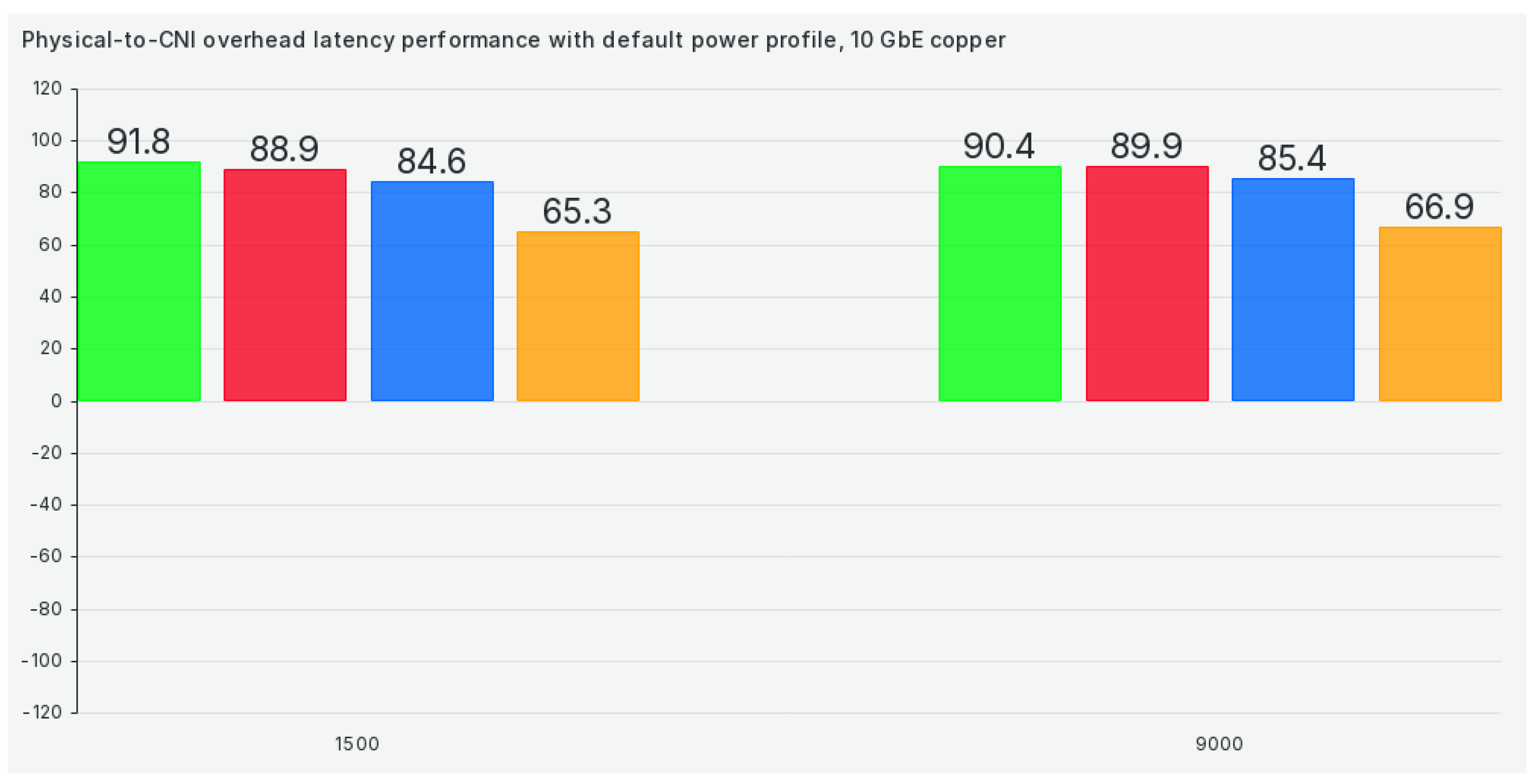

Figure 10.

Physical to CNI comparison for TCP latency in default tuned performance profile.


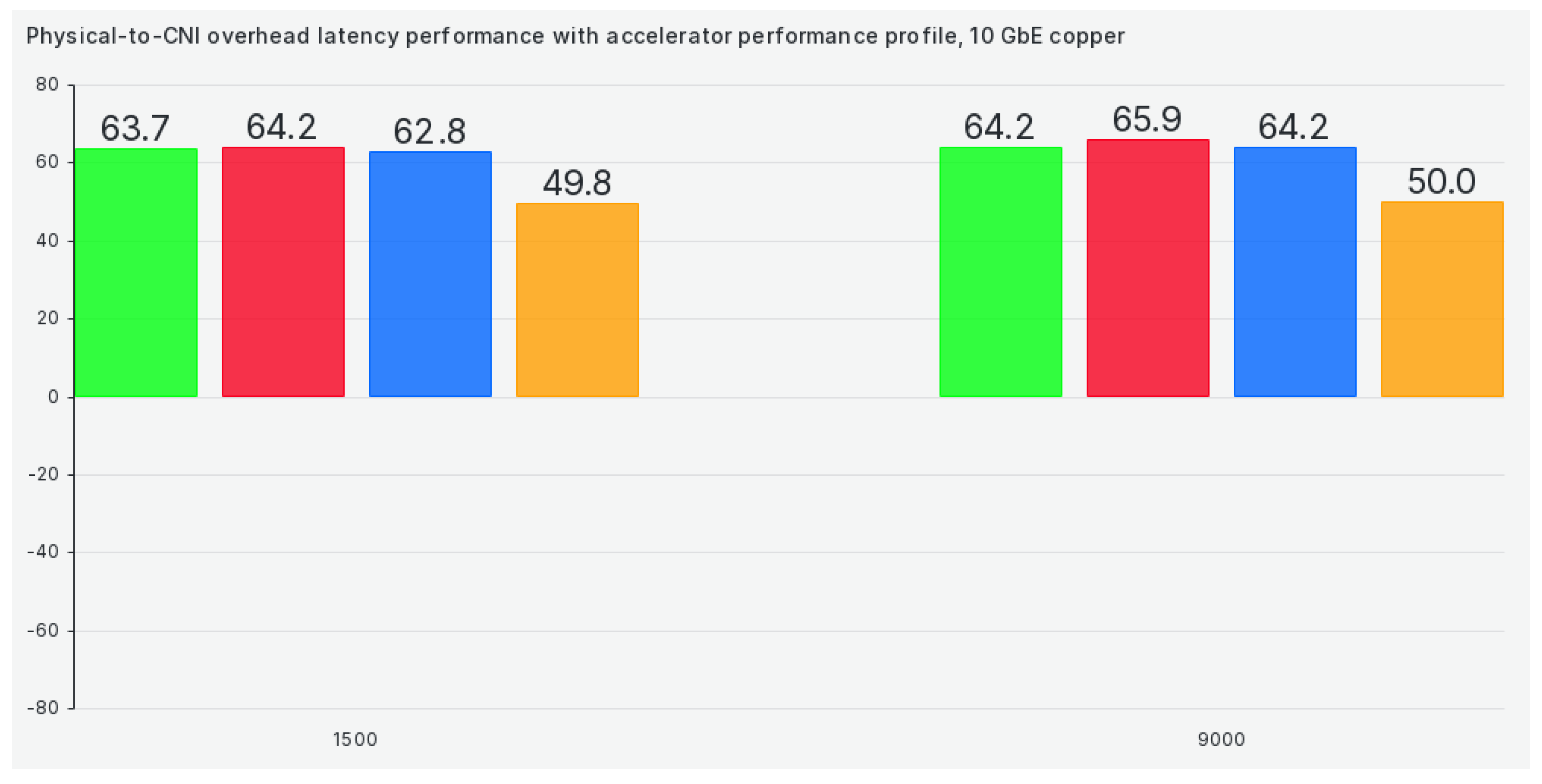

Figure 11.

Physical to CNI comparison for TCP latency in the accelerator-performance profile.

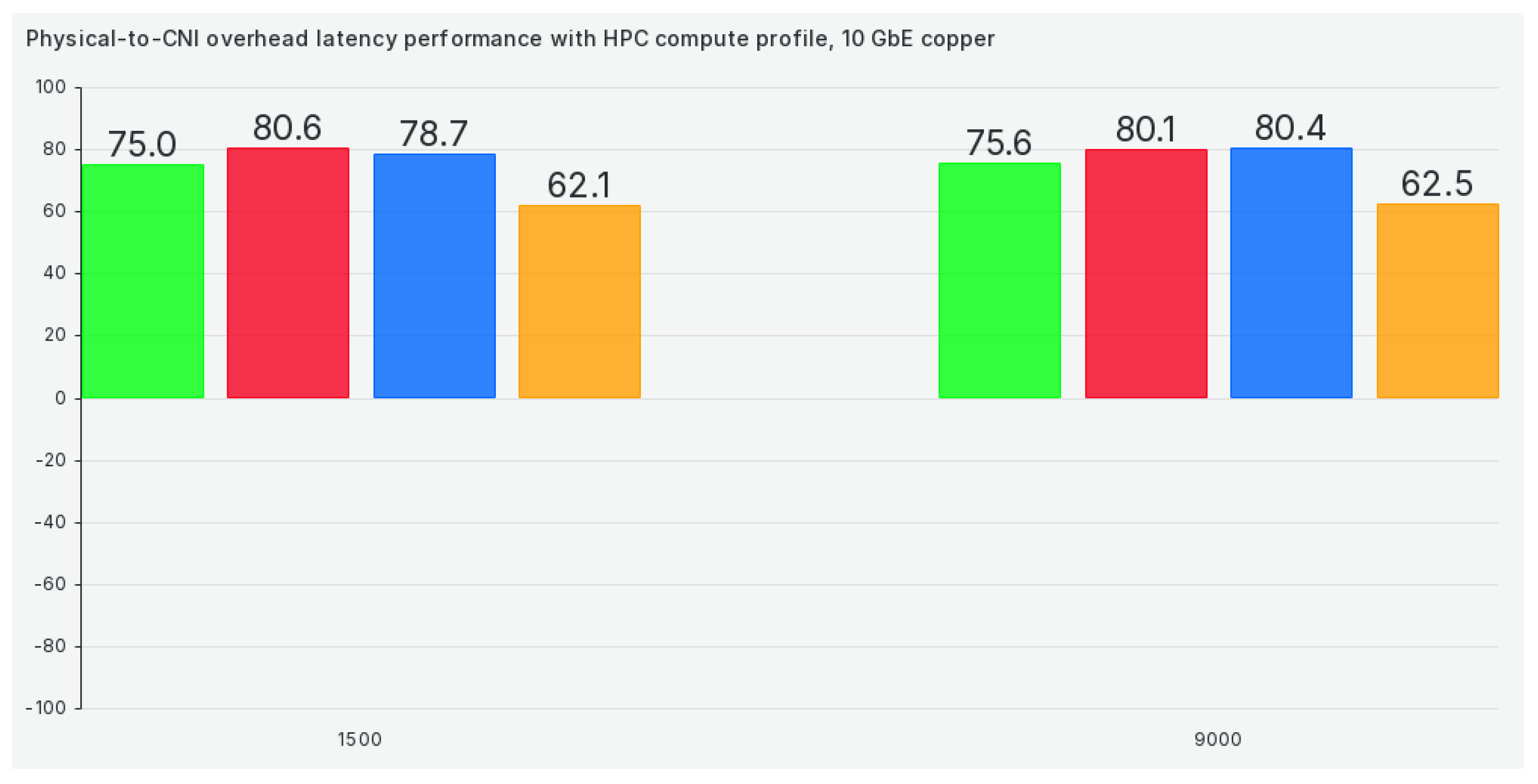

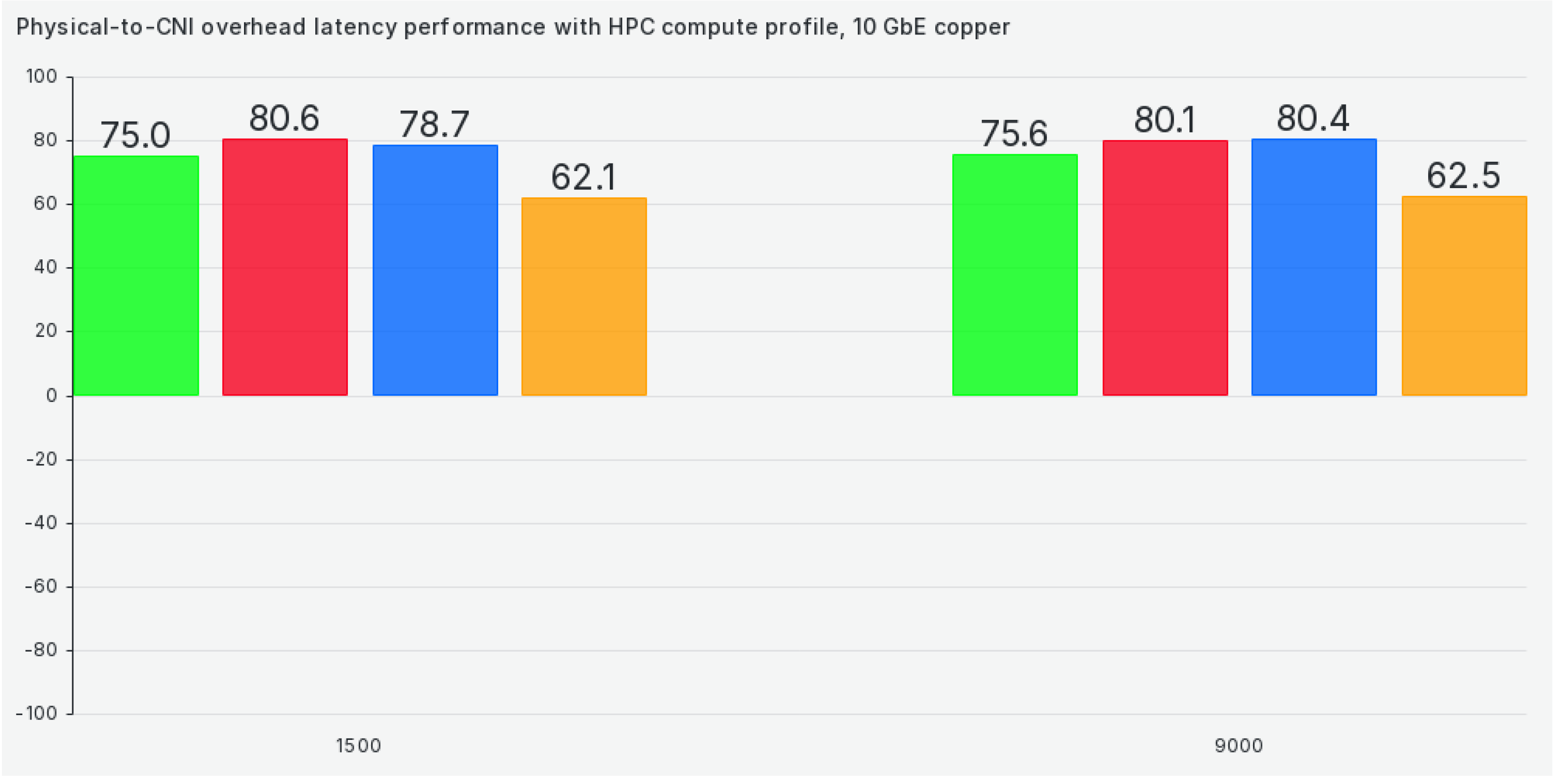

Figure 12.

Physical to CNI comparison for TCP latency in the hpc-compute performance profile.


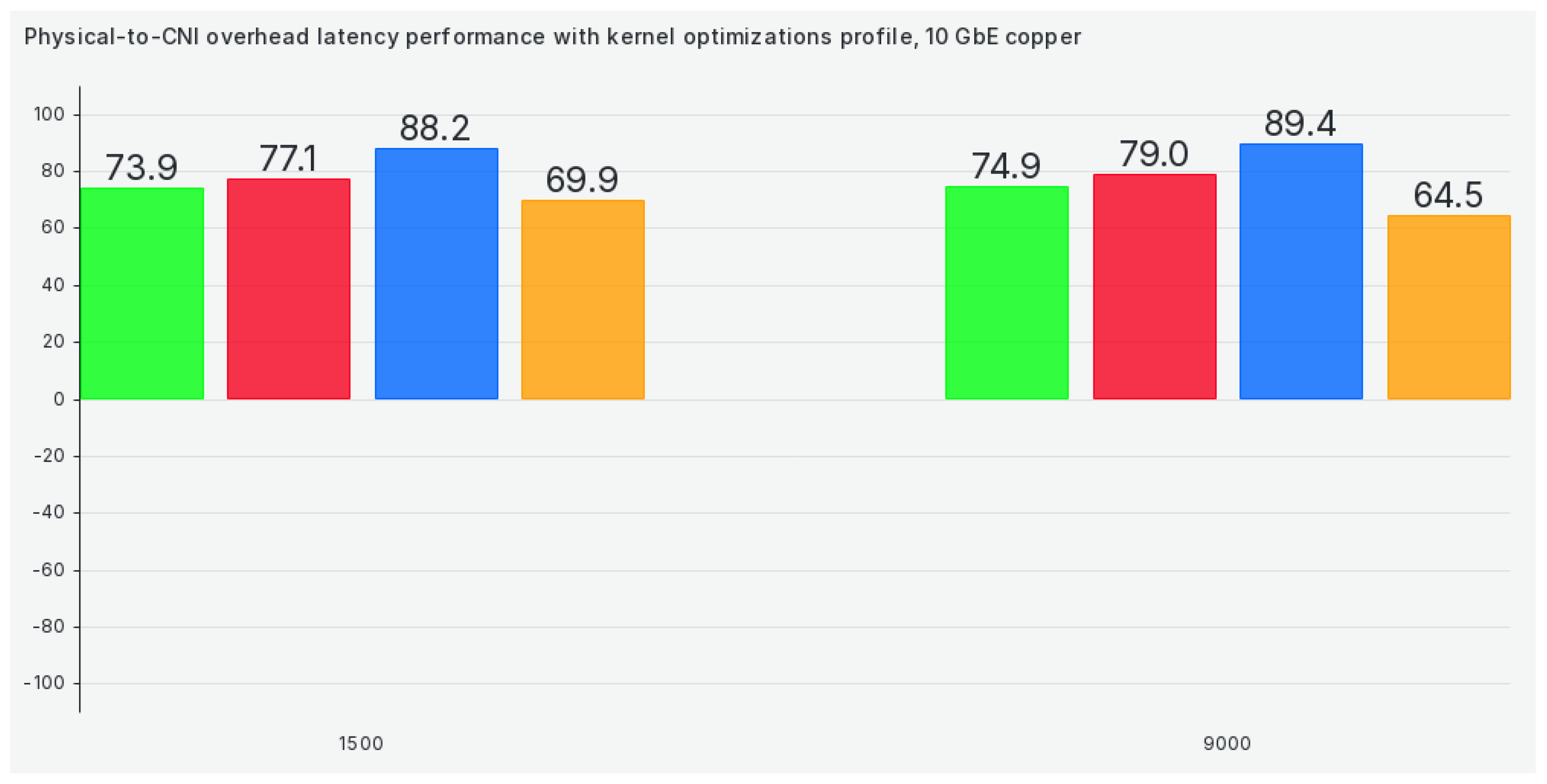

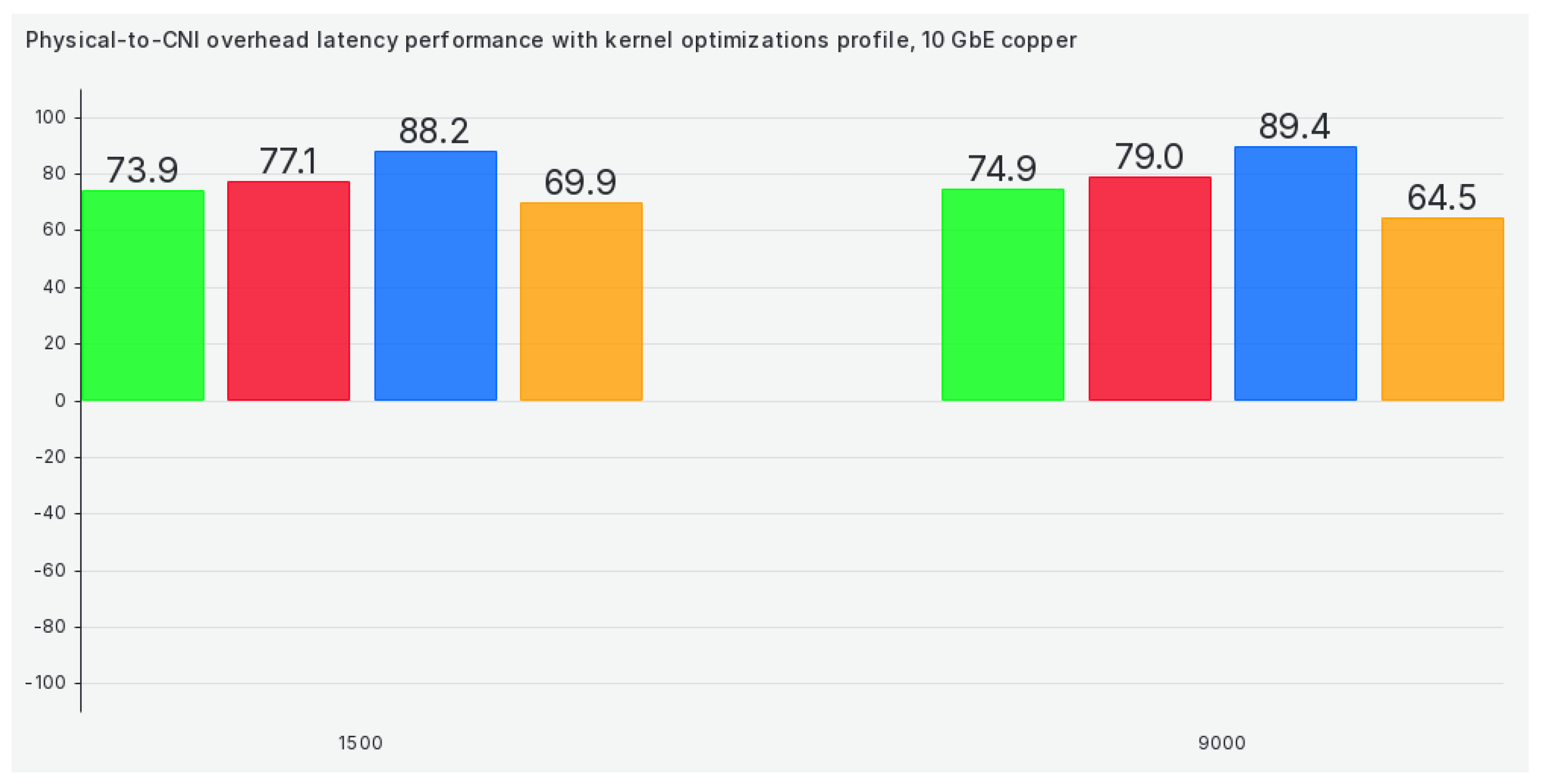

Figure 13.

Physical to CNI comparison for TCP latency in kernel optimization performance profile.


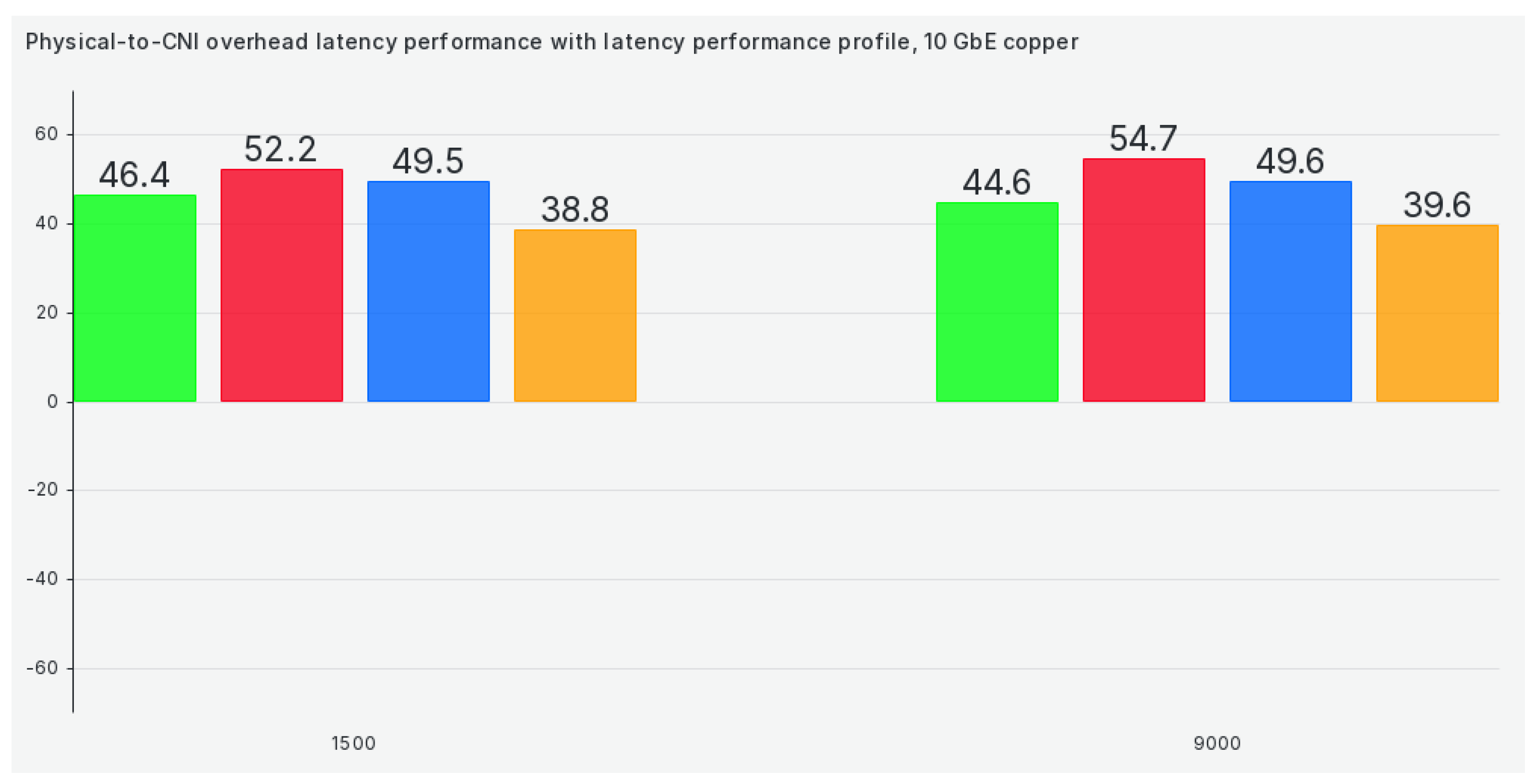

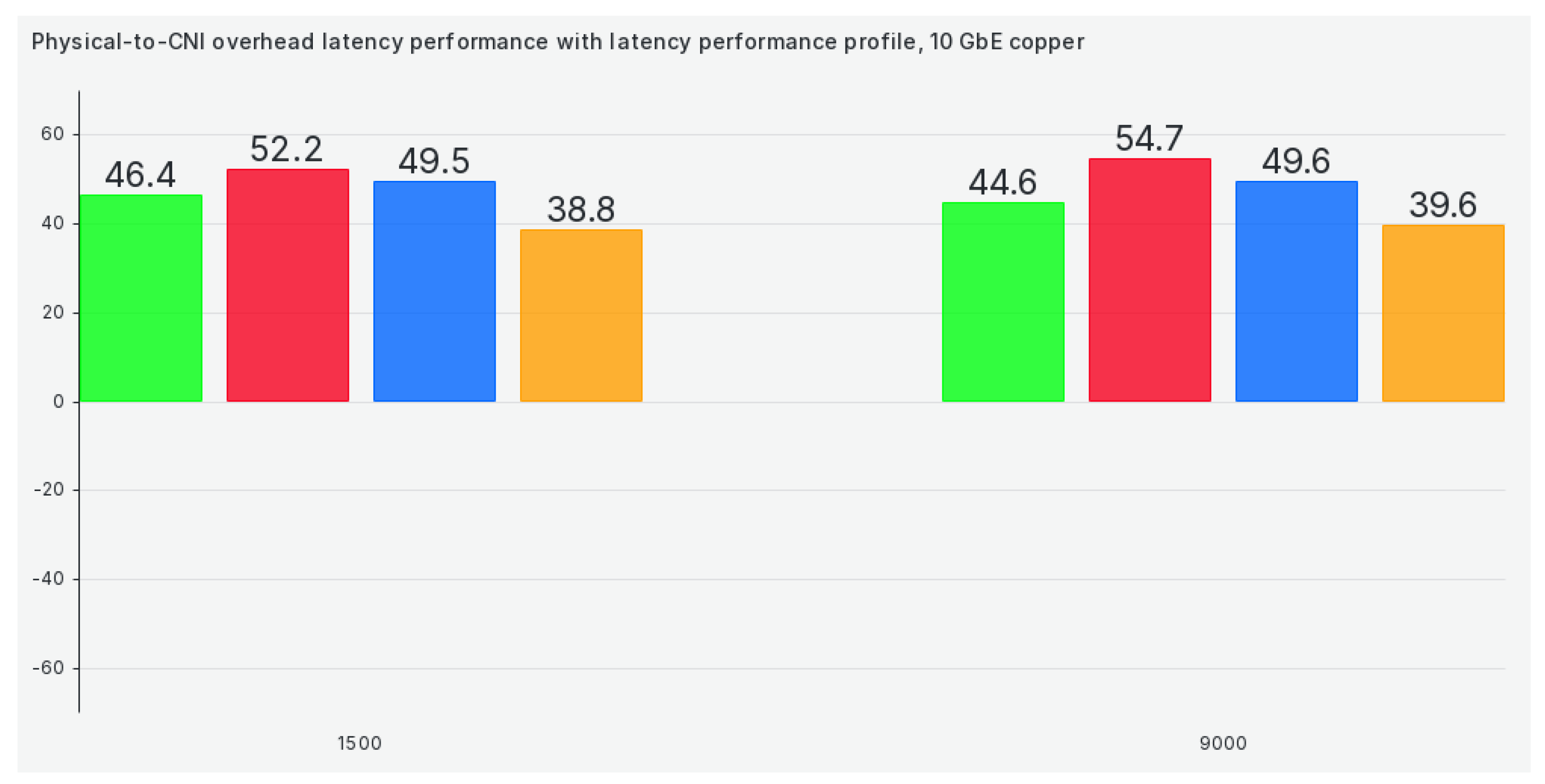

Figure 14.

Physical to CNI comparison for TCP latency in the latency performance profile.


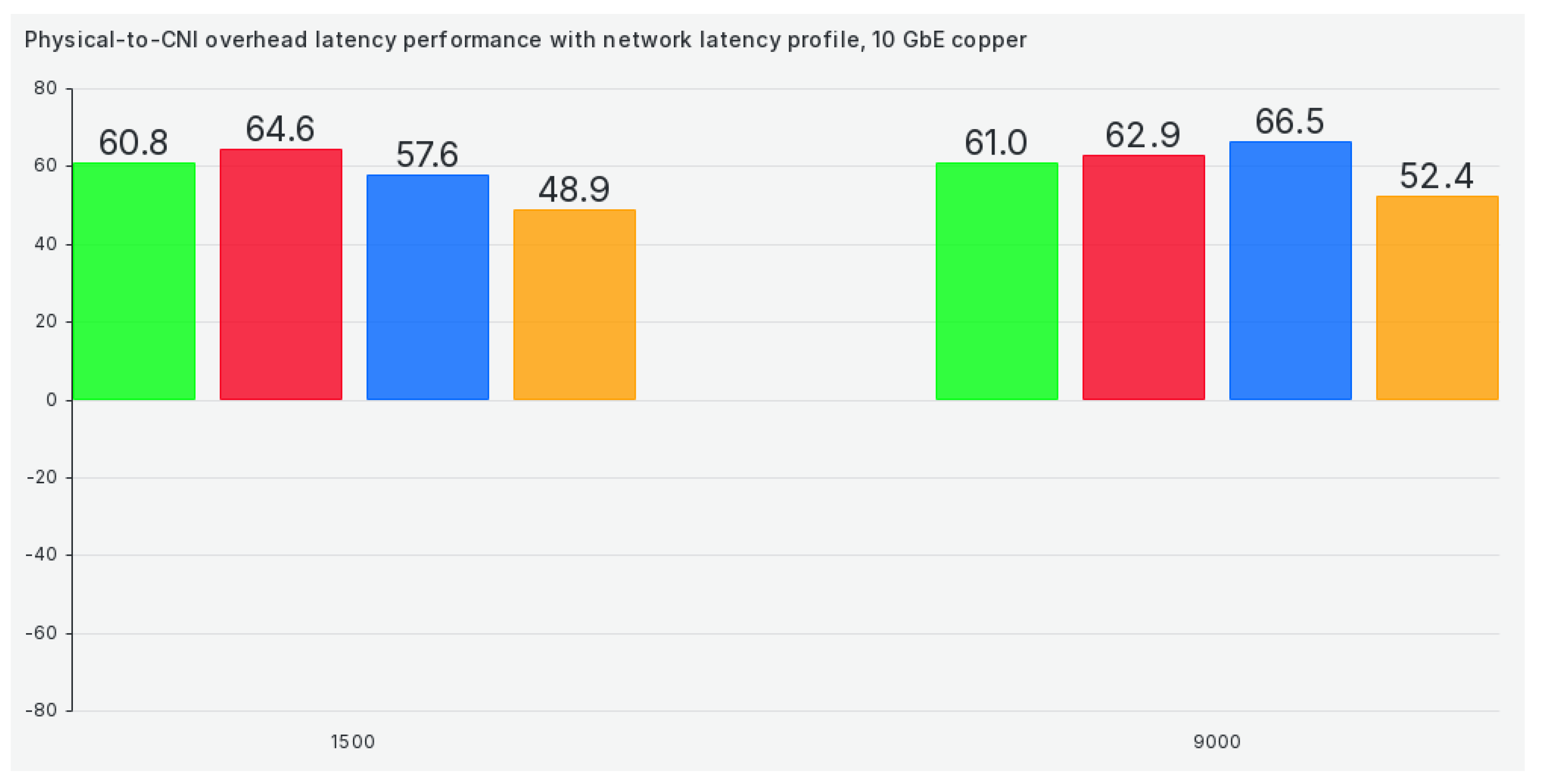

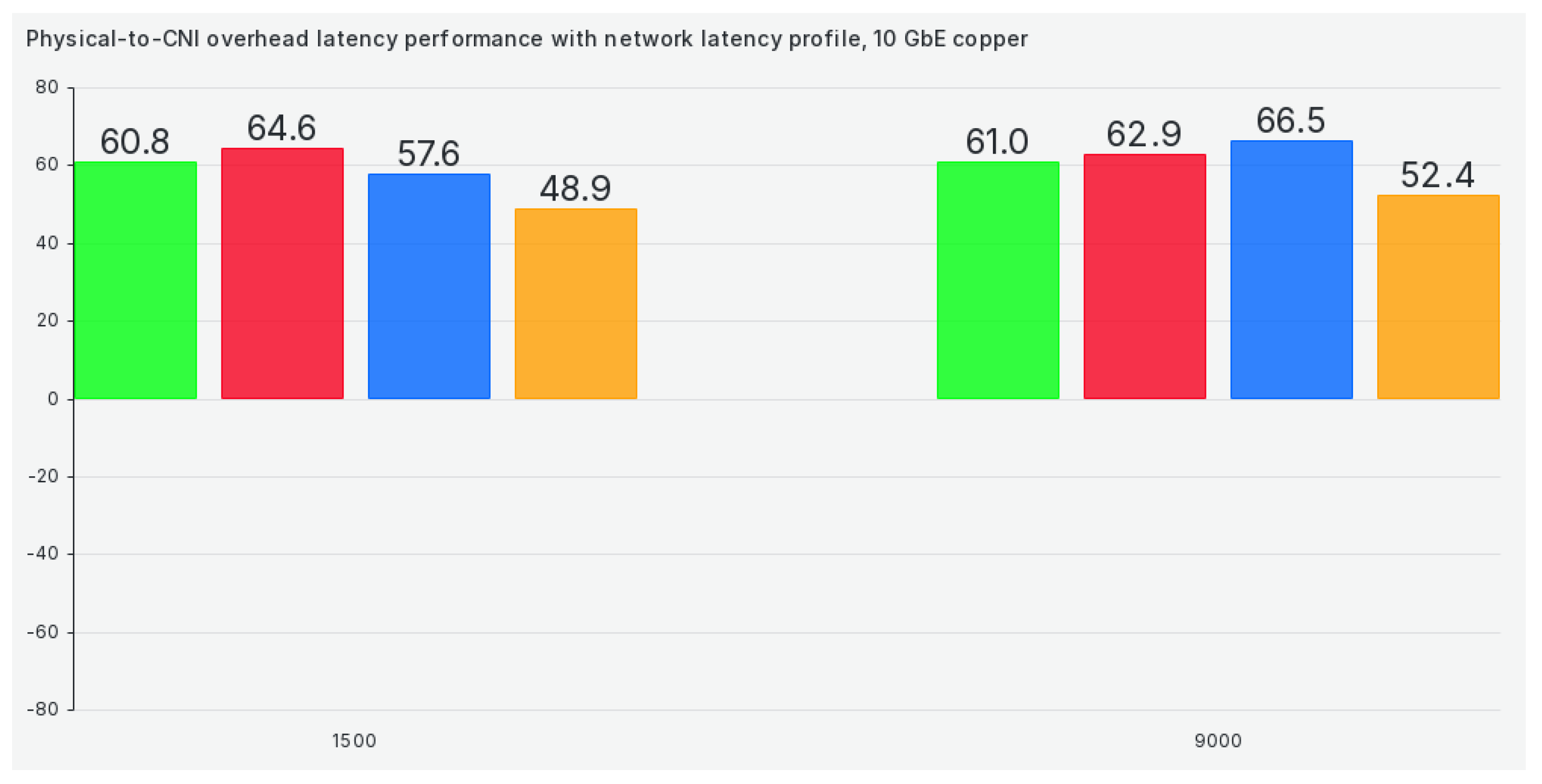

Figure 15.

Physical to CNI comparison for TCP latency in network latency performance profile.


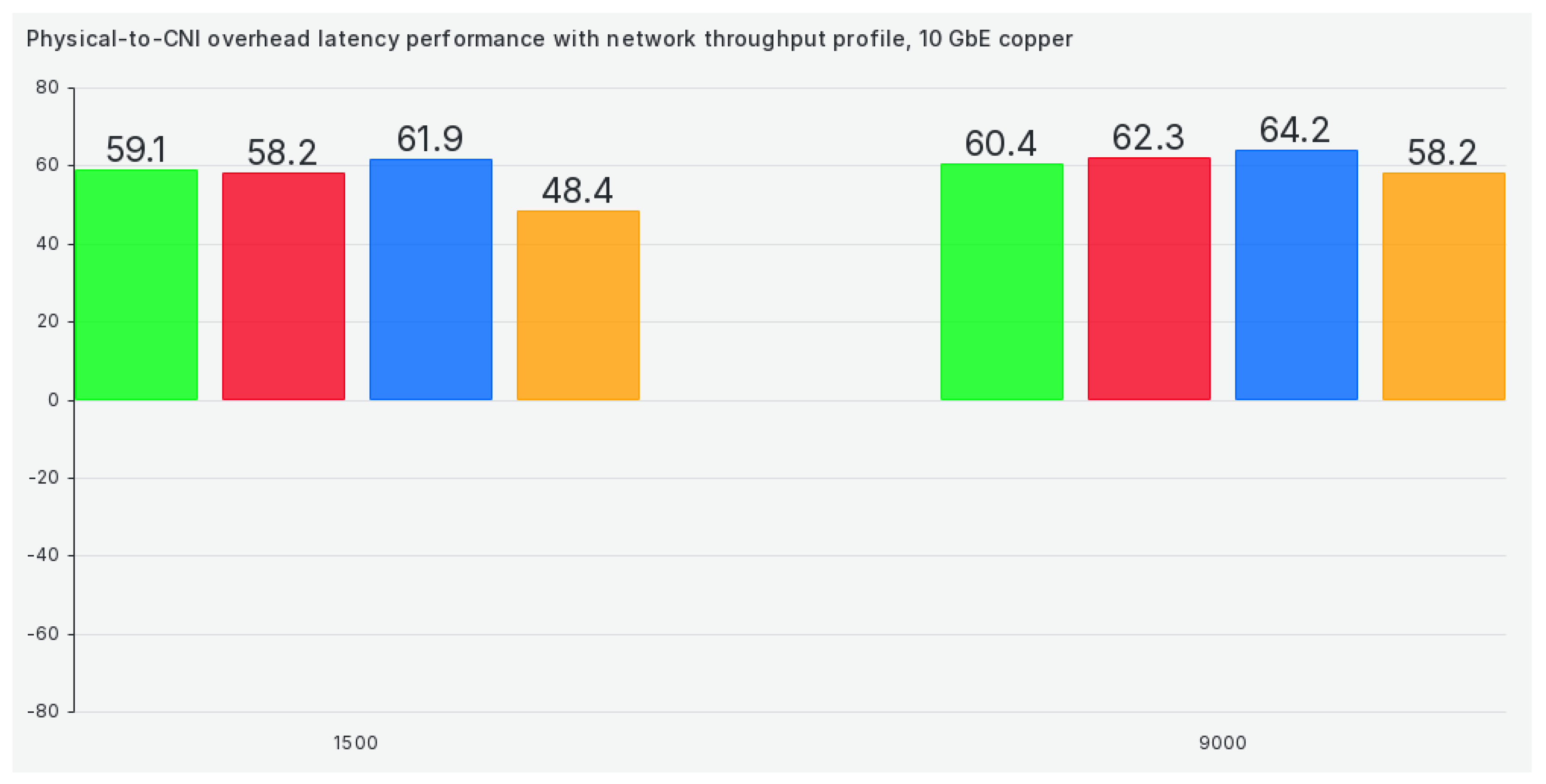

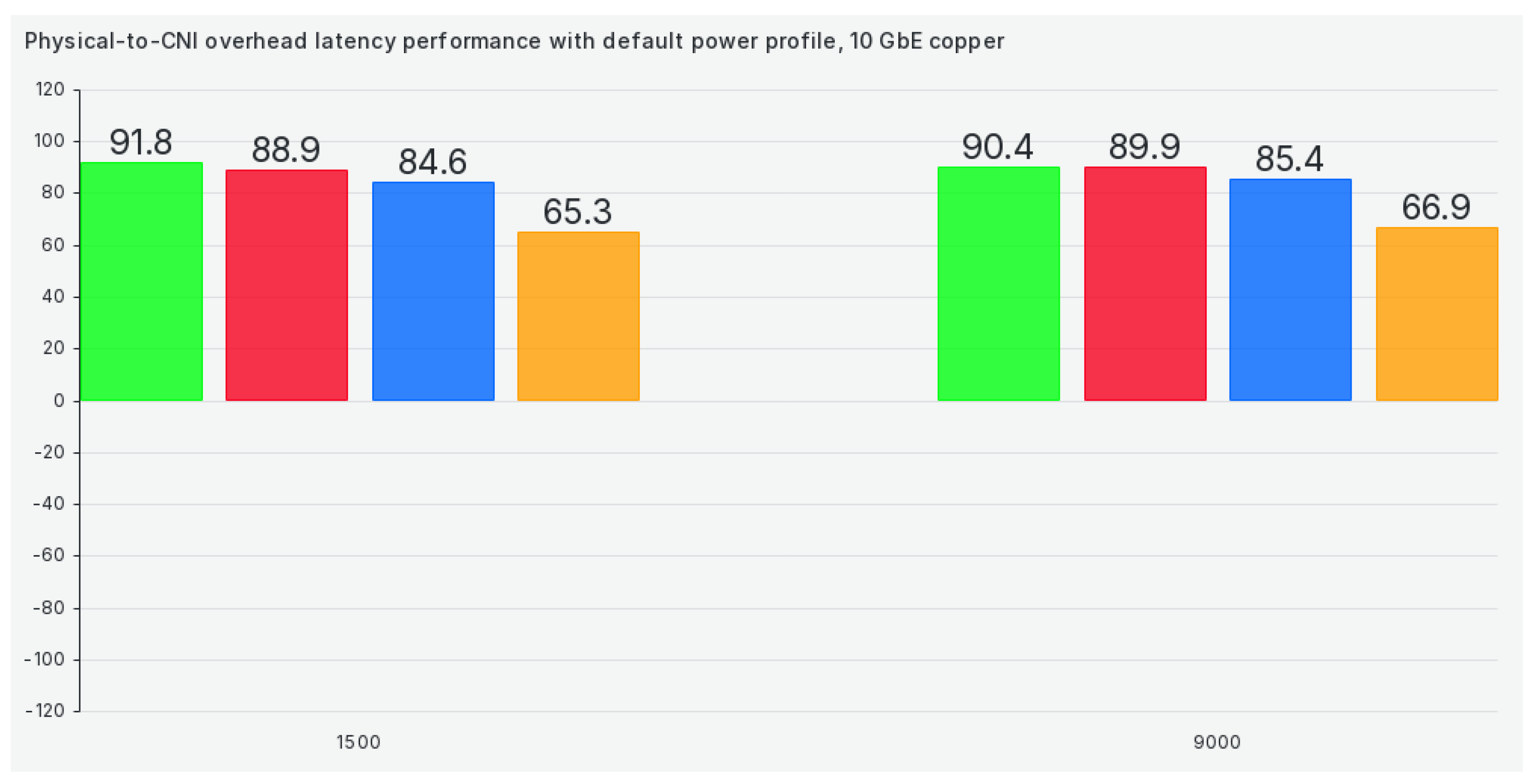

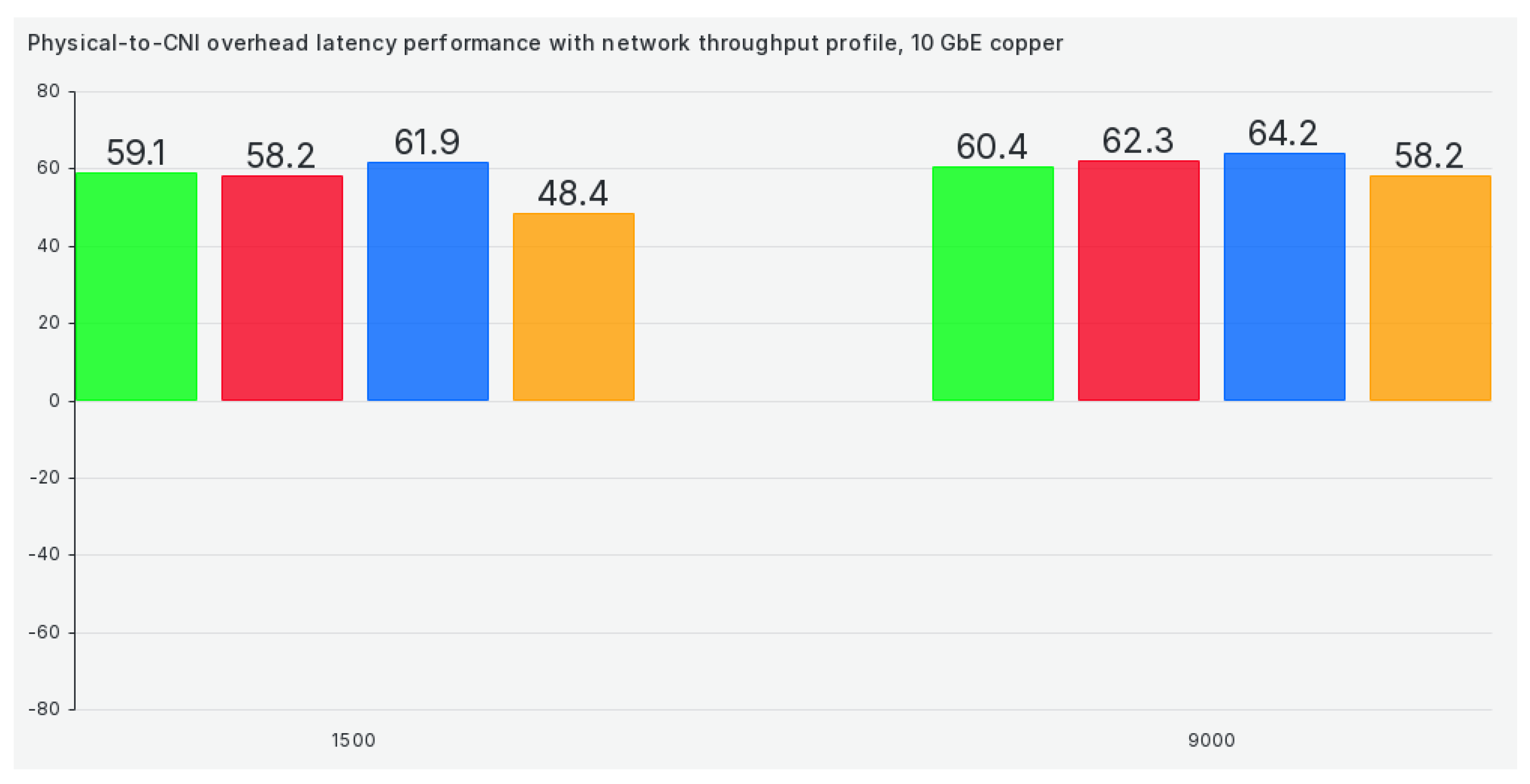

Figure 16.

Physical to CNI comparison for TCP latency in network throughput performance profile.


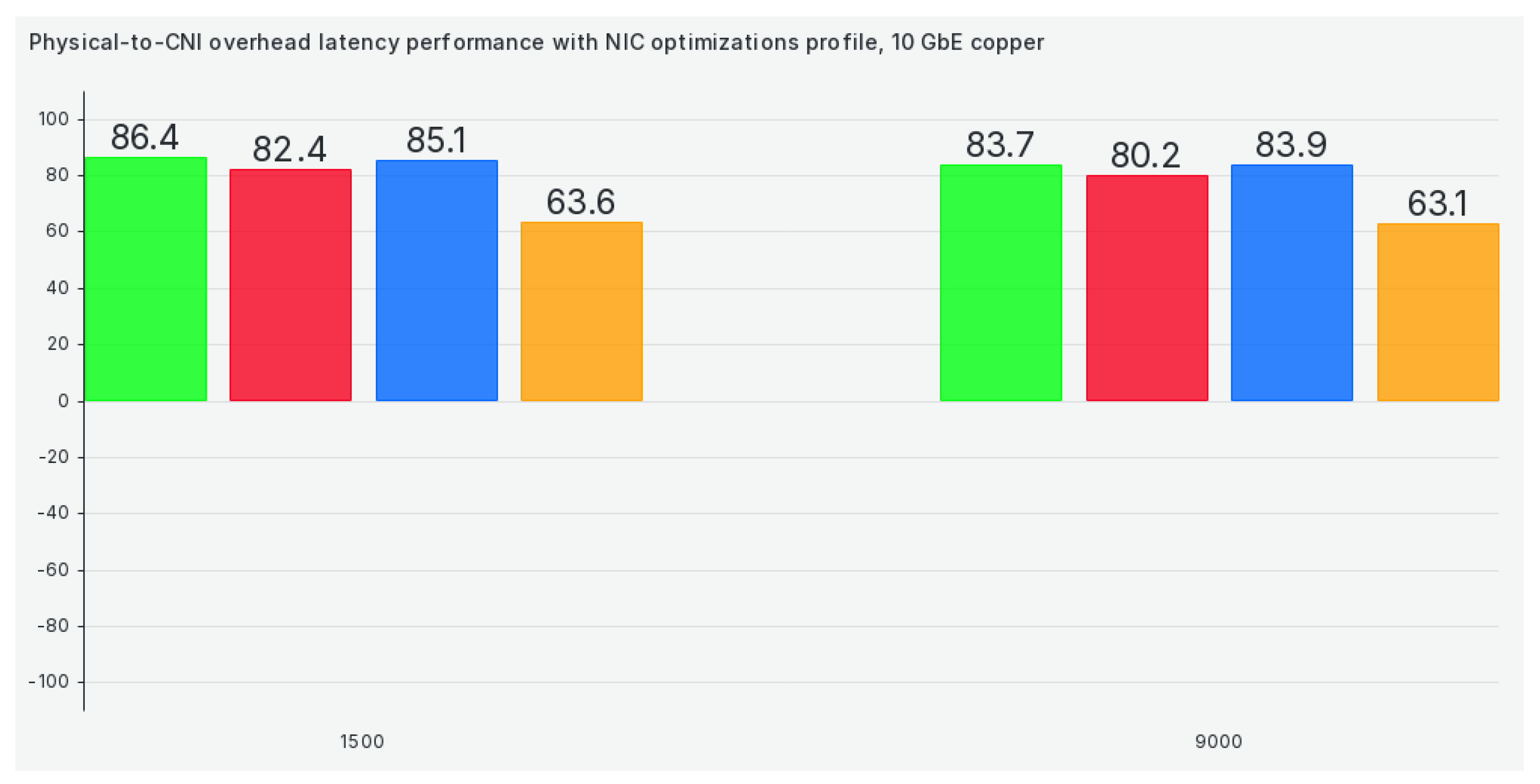

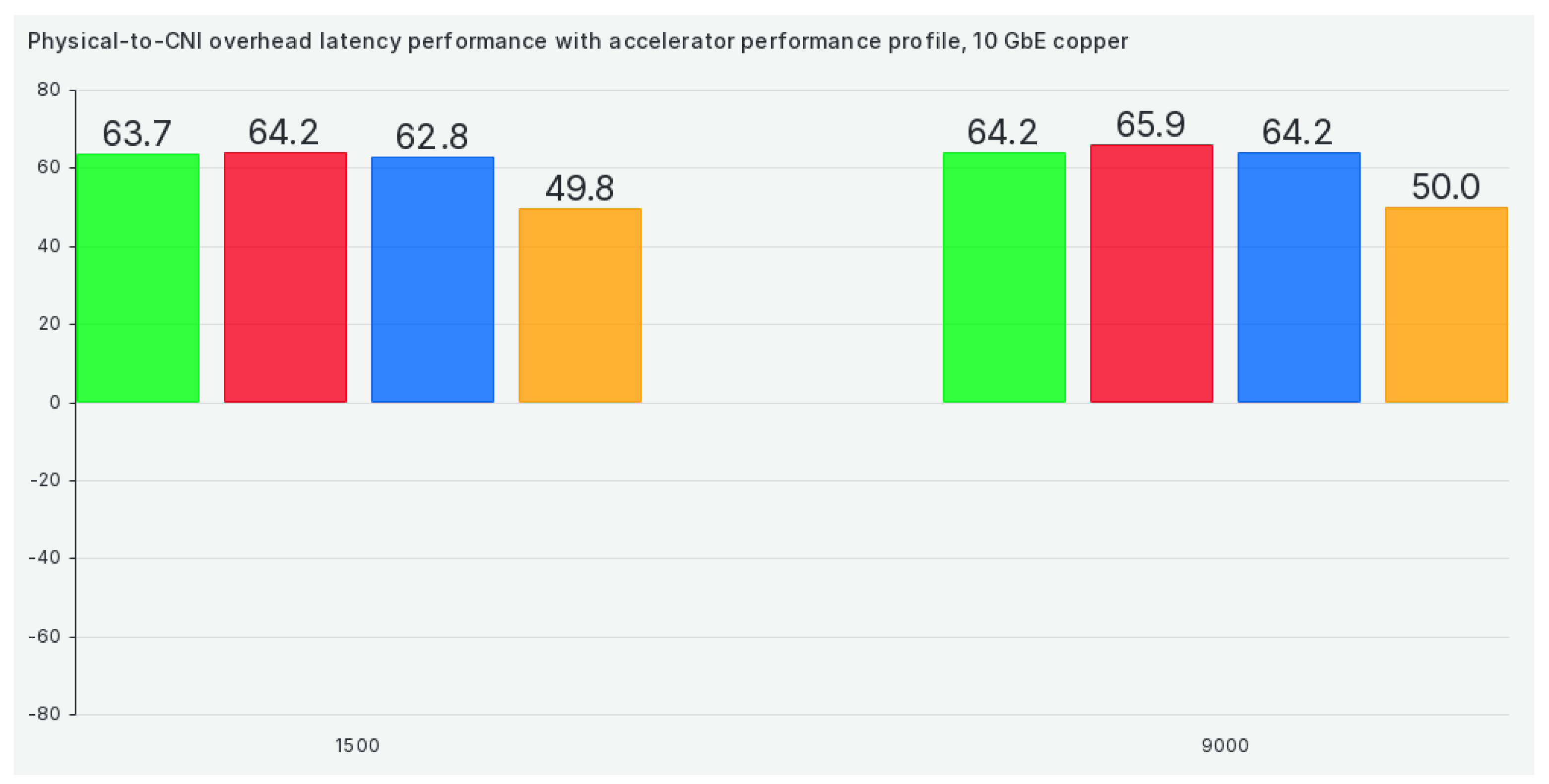

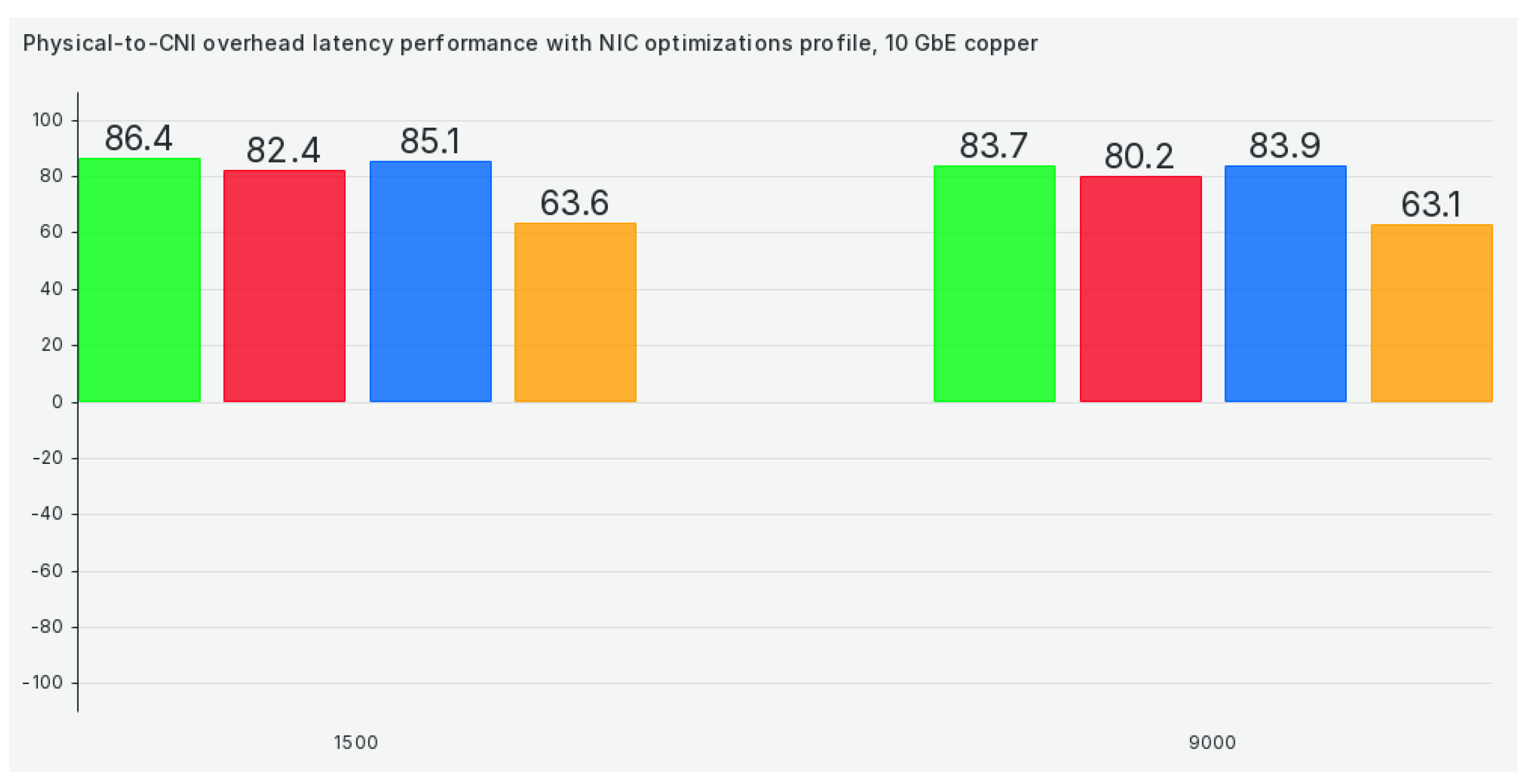

Figure 17.

Physical to CNI comparison for TCP latency in the nic-optimization performance profile.


Figure 18.

Physical to CNI comparison for UDP bandwidth in default tuned performance profile.


Figure 19.

Physical to CNI comparison for UDP bandwidth in the accelerator-performance profile.

Figure 20.

Physical to CNI comparison for UDP bandwidth in the hpc-compute performance profile.


Figure 21.

Physical to CNI comparison for UDP bandwidth in kernel optimization performance profile.


Figure 22.

Physical to CNI comparison for UDP bandwidth in the latency performance profile.


Figure 23.

Physical to CNI comparison for UDP bandwidth in network latency performance profile.


Figure 24.

Physical to CNI comparison for UDP bandwidth in network throughput performance profile.


Figure 25.

Physical to CNI comparison for UDP bandwidth in the nic-optimization performance profile.


Figure 26.

Physical to CNI comparison for UDP latency in default tuned performance profile.


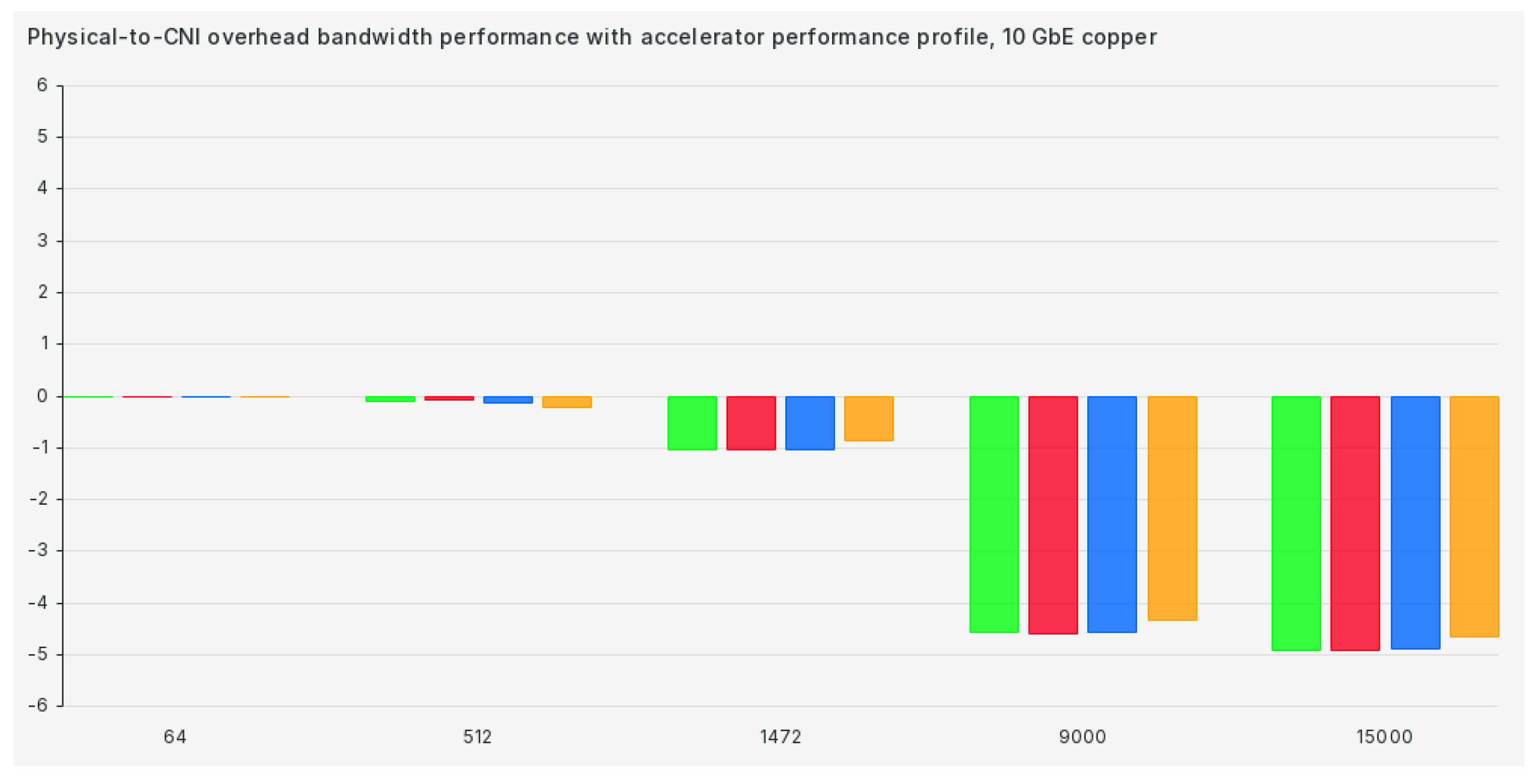

Figure 27.

Physical to CNI comparison for UDP latency in the accelerator-performance profile.

Figure 28.

Physical to CNI comparison for UDP latency in the hpc-compute performance profile.


Figure 29.

Physical to CNI comparison for UDP latency in kernel optimization performance profile.


Figure 30.

Physical to CNI comparison for UDP latency in the latency performance profile.


Figure 31.

Physical to CNI comparison for UDP latency in network latency performance profile.


Figure 32.

Physical to CNI comparison for UDP latency in network throughput performance profile.


Figure 33.

Physical to CNI comparison for UDP latency in the nic-optimization performance profile.
