I. Introduction

High renewable energy penetration and transportation electrification are key to building a secure and sustainable energy infrastructure because of their potential to reduce carbon emissions and dependency on fossil fuels [1]. Many countries are pursuing both as initiatives to advance their fuel efficiency and emission standards. For instance, the United States has set a target of increasing the national sales share of Electric Vehicles (EVs) to 50% by 2030 [2]. This is also reflected in the rising global sales of EVs in recent years. The International Energy Agency (IEA) reported a 41% growth in new EV registrations in 2020 alone, undeterred by the pandemic-related worldwide downturn in car sales [3]. Although EVs offer numerous environmental benefits, their large-scale adoption may severely impact power grids by creating large and undesirable peaks in the load [4]. Enhancing the generation capacity and restructuring the network can be a proactive measure. However, this solution requires substantial infrastructure investments and is too time-consuming to keep pace with the rapid growth of EV adoption in the transportation industry. Another feasible and cost-effective solution is to adopt Demand Response (DR) programs that enable EV users to coordinate their charging schedules in response to time-varying electricity prices [5]. With readily available Vehicle-to-Grid (V2G) technology [6], EV users can also benefit by supplying energy to the grid and can thus contribute to its supply/demand balance.

Time-varying electricity pricing is a widely adopted DR strategy that helps enhance grid reliability and reduce the high generation cost caused by peak demand. In contrast to traditional flat-rate pricing, where customers are charged the same rate throughout the day, time-varying pricing (TVP) allows utilities to offer their customers different rates at different times of the day depending on total demand, supply, and other critical factors. TVP incentivizes users to shift their demand from peak hours to off-peak hours, which reduces their energy costs as well as the unwanted spikes in the load curve. Utilities offer a variety of TVP programs, among which Time-of-use (TOU) [7] is the most common: the day is divided into two or three consecutive blocks of hours based on peak, off-peak, and mid-peak periods. In TOU, prices vary only from one block to another, so it is less reflective of the volatile nature of wholesale electricity prices. In critical-peak-pricing (CPP), utilities use their load forecasting capability to identify a ‘critical event’ when the price increases dramatically due to adverse system conditions or weather. Usually, these events are identified and communicated to the users at least a day in advance and are applied only up to 18 times per calendar year [8]. Both TOU and CPP prices are known in advance, and hence customers can plan their energy usage accordingly. Another pricing program, which closely follows the wholesale energy market, is Real-time-pricing (RTP) [9]. In RTP, prices vary hourly (sometimes sub-hourly) to reflect real-time fluctuations in the wholesale market due to variations in demand, supply, generator failures, etc. Although RTP is yet to be adopted by many utility companies, pilot projects run by several utilities, such as Commonwealth Edison Company (ComEd) and Georgia Power, have proven its economic and practical viability for efficient energy management [10, 11]. RTP can be cleared day-ahead or every hour. EV users can respond to the hourly variable pricing signal by adjusting their charging demand. Therefore, this paper assumes that an hourly day-ahead pricing signal is available to the EV user. Additionally, we consider the grid to be bi-directional with net metering arrangements [12] to facilitate V2G technology. V2G allows users to inject the surplus energy of their EV batteries into the grid. Net metering is required to realize V2G, as it enables bidirectional trading of energy between EV users and the grid [13].
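
To make the difference between block-based and hourly tariffs concrete, the following minimal sketch bills one evening charging session under a TOU tariff and under an hourly day-ahead price signal. It is an illustration only: the prices, block boundaries, schedule, and the names `tou_price` and `session_cost` are assumptions that do not come from this paper, and net metering is simplified to identical buying and selling prices.

```python
from typing import Sequence


def tou_price(hour: int) -> float:
    """Hypothetical three-block TOU tariff in $/kWh (all values are made up)."""
    if 16 <= hour < 21:   # peak block
        return 0.30
    if 7 <= hour < 16:    # mid-peak block
        return 0.18
    return 0.10           # off-peak block (night and late evening)


def session_cost(energy_kwh: Sequence[float], prices: Sequence[float]) -> float:
    """Net cost of a session.

    Positive energy is charging, negative energy is V2G discharge.
    Assumes net metering with the same price for buying and selling.
    """
    if len(energy_kwh) != len(prices):
        raise ValueError("energy and price sequences must have equal length")
    return sum(e * p for e, p in zip(energy_kwh, prices))


hours = [18, 19, 20, 21, 22, 23]
schedule = [-2.0, -2.0, 0.0, 6.0, 6.0, 6.0]            # sell at peak, buy off-peak
tou_prices = [tou_price(h) for h in hours]
hourly_prices = [0.32, 0.41, 0.27, 0.12, 0.09, 0.08]   # hypothetical day-ahead signal

tou_cost = session_cost(schedule, tou_prices)
rtp_cost = session_cost(schedule, hourly_prices)
print(f"TOU cost: {tou_cost:.2f} $, hourly-price cost: {rtp_cost:.2f} $")
```

Under the hourly signal, the same schedule earns more from discharging in the most expensive hour and pays less in the cheapest hours, which is the kind of price variation a block tariff cannot expose.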

An efficient charge scheduling strategy should meet the requirements of both EV users and utility companies. From the EV users’ perspective, the requirements are to meet their charging demand while minimizing charging costs. From the utility’s perspective, the requirements include ‘peak shaving’ during peak hours and ‘valley filling’ during off-peak hours to facilitate the operation and increase the reliability of the grid. Therefore, an appropriate DR program that can meet these requirements is necessary to accommodate the large-scale adoption of EVs. By implementing an effective charging control strategy, EV users can benefit themselves and help the grid by responding to the pricing signal. On the other hand, the uncertainties in the user’s driving behavior, i.e., the random arrival and departure times of EVs at a charging station, need to be addressed rigorously when developing a charging control scheme. Moreover, an effective solution also needs to account for users’ sporadic charging preferences and the time-varying energy prices of the utilities.

Reinforcement Learning (RL) has been used in many studies as the solution method for optimal charge scheduling of EVs. In general, RL learns a policy for selecting the best action in a given environment. An on-policy RL algorithm such as state-action-reward-state-action (SARSA) [14] is used in [15], where discrete state and action spaces are considered. However, a discrete state space limits how faithfully a real-world charging environment can be represented. Reference [16] uses Hyperopia SARSA (HSA), where a linear function approximator is used to evaluate the charging actions. Another deficiency of on-policy RL is that the same policy is used for decision-making and evaluation, which potentially limits the exploration of the RL agent. To overcome this, off-policy RL leverages experiences generated by random policies, which facilitates better simulation of real-world scenarios. Off-policy RL, such as Q-learning [17], is used to derive optimal charging strategies for EVs, focusing on various objectives, such as minimizing the charging cost, maximizing the EV user’s revenue, satisfying the charging demand, and balancing the grid load [18, 19, 20, 21]. However, Q-learning inherently suffers in real-world scenarios where the state and action spaces are large and continuous. Moreover, both SARSA and Q-learning suffer from limited approximation capability in policy evaluation, such as action-value estimation.
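
The on-policy versus off-policy distinction above comes down to which next action is used in the update target. The sketch below shows the standard tabular SARSA and Q-learning updates side by side on a toy table; the state and action encoding, the reward, and the hyperparameters are hypothetical and are not taken from the cited works.

```python
from typing import List

QTable = List[List[float]]  # q[state][action]


def sarsa_update(q: QTable, s: int, a: int, r: float, s_next: int, a_next: int,
                 alpha: float = 0.1, gamma: float = 0.99) -> None:
    """On-policy: bootstrap from the action the agent actually takes next."""
    target = r + gamma * q[s_next][a_next]
    q[s][a] += alpha * (target - q[s][a])


def q_learning_update(q: QTable, s: int, a: int, r: float, s_next: int,
                      alpha: float = 0.1, gamma: float = 0.99) -> None:
    """Off-policy: bootstrap from the greedy action, whatever the agent does next."""
    target = r + gamma * max(q[s_next])
    q[s][a] += alpha * (target - q[s][a])


# Toy table: 3 discretized SOC levels x 2 actions (0 = idle, 1 = charge).
q_sarsa: QTable = [[0.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
q_qlearn: QTable = [row[:] for row in q_sarsa]

# Same transition for both; the agent's next action is exploratory (idle).
sarsa_update(q_sarsa, s=0, a=1, r=-0.5, s_next=1, a_next=0)
q_learning_update(q_qlearn, s=0, a=1, r=-0.5, s_next=1)
print(q_sarsa[0][1], q_qlearn[0][1])
```

Both methods keep one table entry per state-action pair, so a finer discretization of SOC, time, and price multiplies the table size; this is one way to see why tabular methods struggle with large or continuous spaces.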

Recent successes of Deep Reinforcement Learning (DRL) [22, 23] have inspired many researchers to address the problem of EV charge scheduling using DRL. Reference [24] applies a Deep Q-Network (DQN) to minimize the electricity bill and the user’s range anxiety. User’s range anxiety, defined as the difference between the current and the desired battery energy, is applied at the departure time. Reference [25] uses Kernel Density Estimation (KDE) to approximate variables related to charger usage patterns, such as arrival time, charging duration, and charging amount, from real-world data. However, in both [24] and [25], the EV charge scheduling problem is solved using DQN with discrete charging rates as the action space. DQN can only handle a discrete action space and is therefore not suitable for problems that require a continuous action space. The authors of [26] address this issue by formulating the problem as a Constrained Markov Decision Process (CMDP) and deriving a continuous charge scheduling strategy using Constrained Policy Optimization (CPO) [27]. User’s range anxiety is handled using the constraints and a cost function. However, this work disregards the user’s anxiety during the charging periods, which is an essential factor when determining an optimal charging strategy. Reference [28] uses the continuous soft actor-critic (SAC) algorithm to learn an optimal charging control strategy. The reward function includes three types of sensitivity coefficients based on the user’s preference, which require manual tuning. Reference [29] also considers continuous charging actions and proposes a Control Deep Deterministic Policy Gradient (CDDPG) algorithm to learn the optimal charging policy. This algorithm uses two replay memories: one stores all the experiences generated before the end of an episode, and the other stores only the last experience of an episode. Experiences are then sampled from both memories to train the model. While the performance is favorable, sampling from two different replay memories introduces biased updates that consequently affect the learning performance. To ensure that the unbiased sampling property of RL is maintained, the importance-sampling technique can be implemented [30].

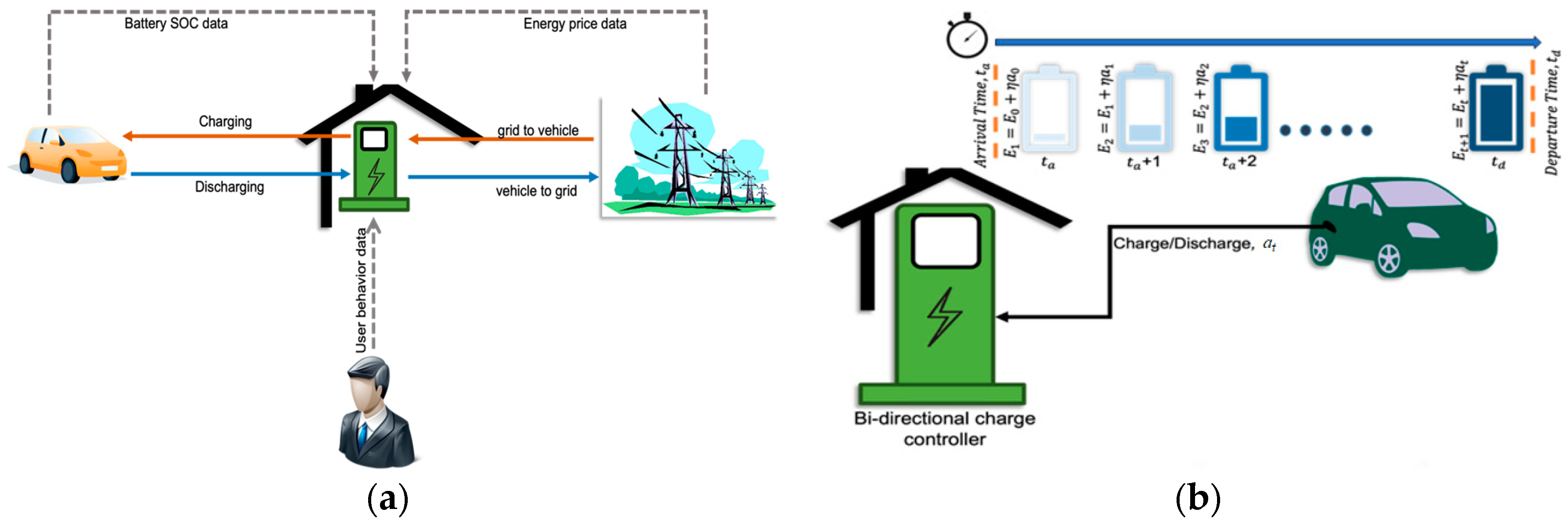

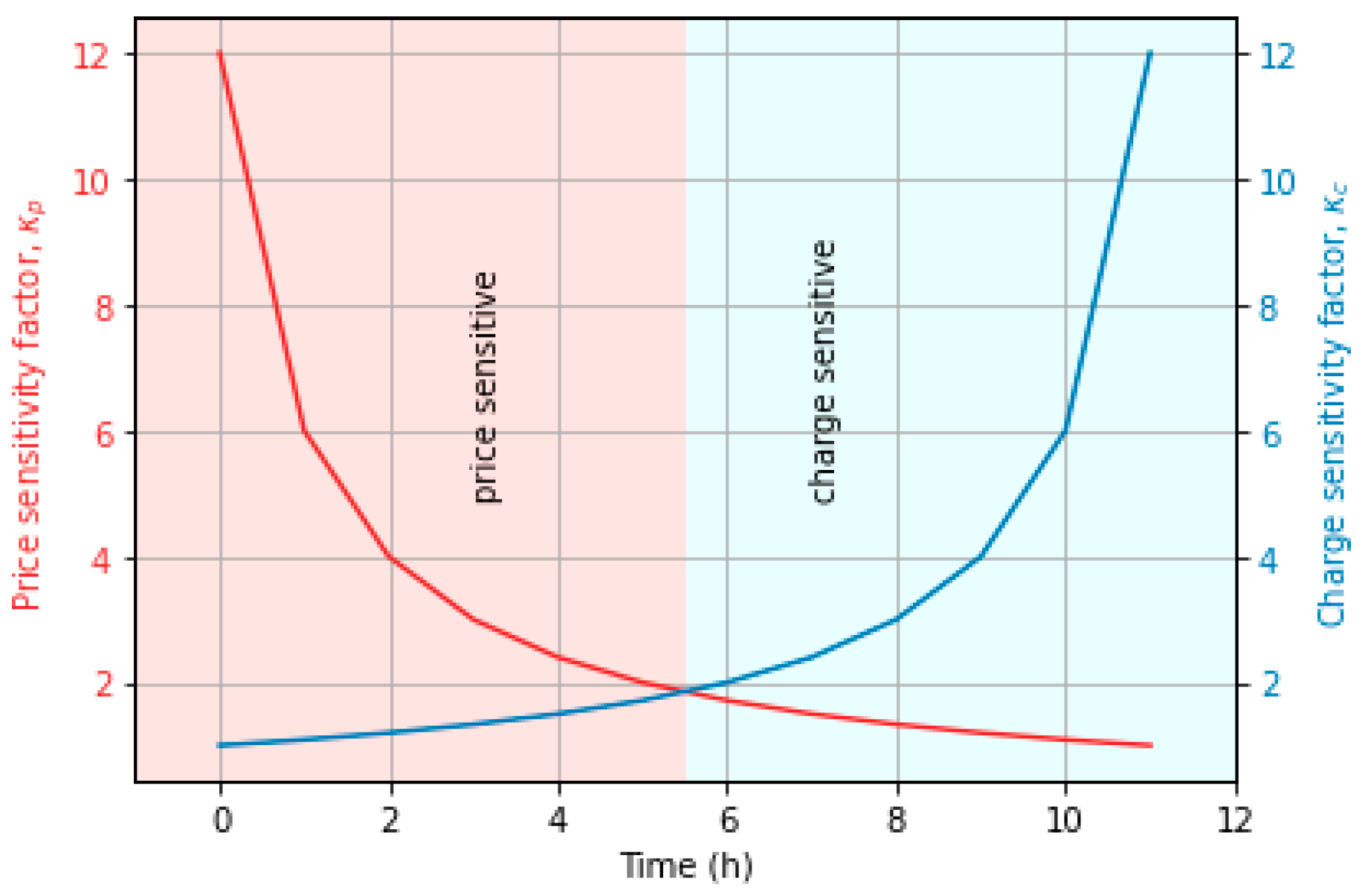

Despite the promising outcomes, the preceding methods overlook a few critical aspects when determining the charge scheduling strategy. Firstly, the influence of the remaining charging duration is ignored in the literature when defining user’s anxiety. More specifically, the variation of user’s anxiety with time is not addressed. In our work, we address this variation by defining an anxiety function not only in terms of the uncharged battery energy but also in terms of the remaining charging duration. Secondly, previous works use manually tuned constant coefficients to represent the user’s preference for meeting energy demand over minimizing energy cost. In a practical scenario, a user is naturally more inclined to minimize energy cost during the first few hours of the charging duration. As the departure time approaches, charging the battery to satisfy the energy requirement becomes more important than minimizing cost. Constant coefficients cannot account for this scenario effectively. Thus, we introduce a dynamic weight allocation technique to address this issue and avoid the need for manual tuning of coefficients. Thirdly, the randomness of the user’s driving behavior, such as arrival time, departure time, etc., is modeled with a normal distribution in most studies. However, the distribution is defined based on assumptions that may fail to reflect the unpredictability of the real world. In this paper, a real-world dataset is utilized to extract key information, such as arrival time, departure time, charging duration, and battery state of charge (SOC).

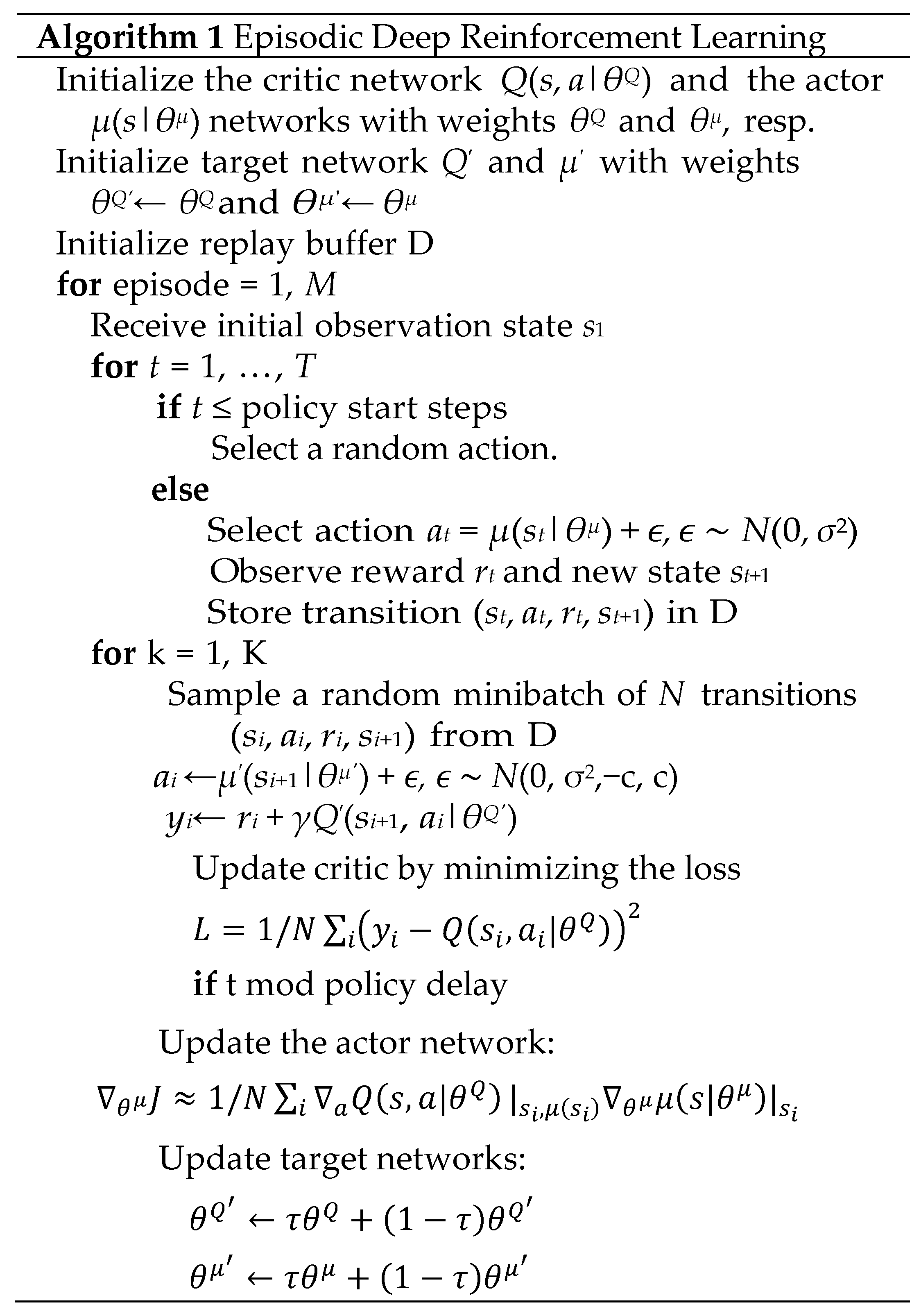

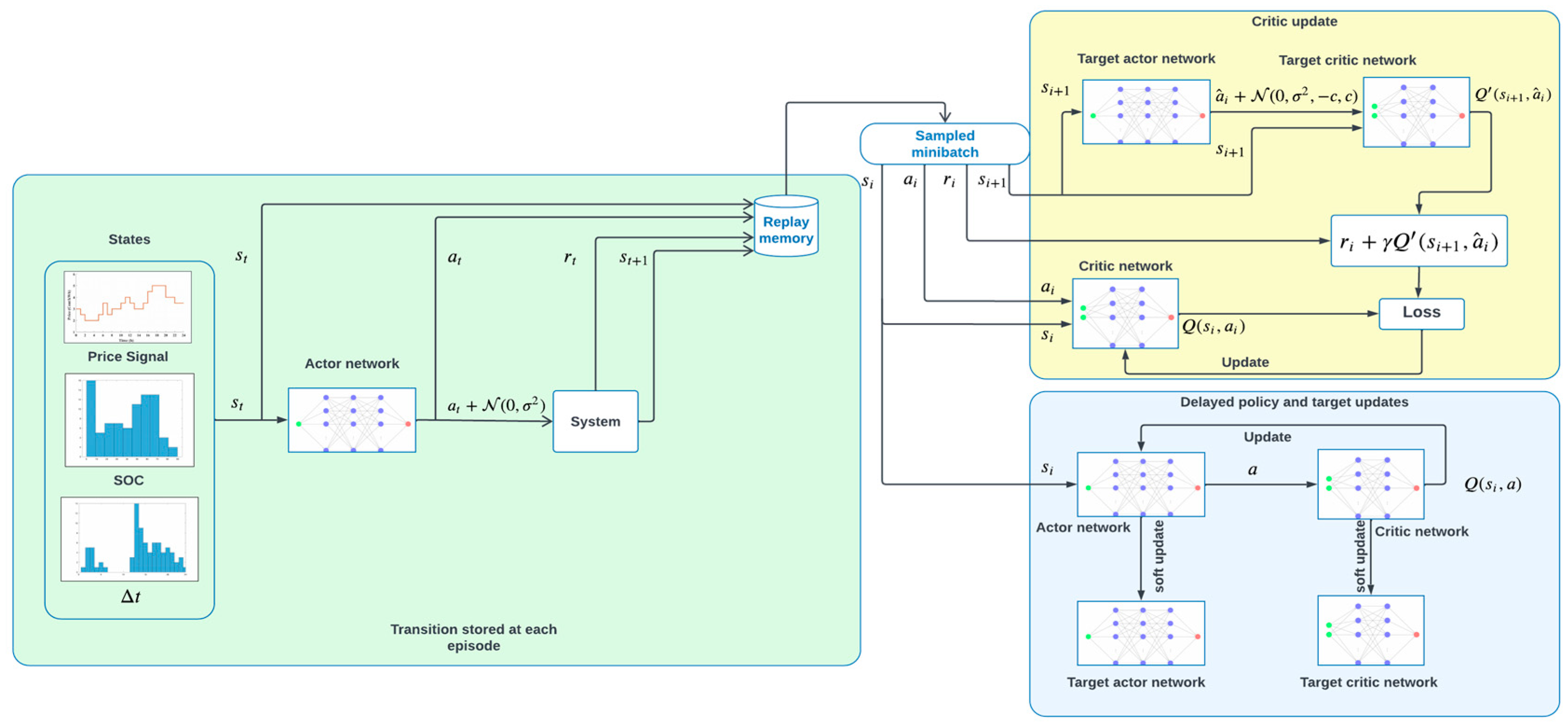

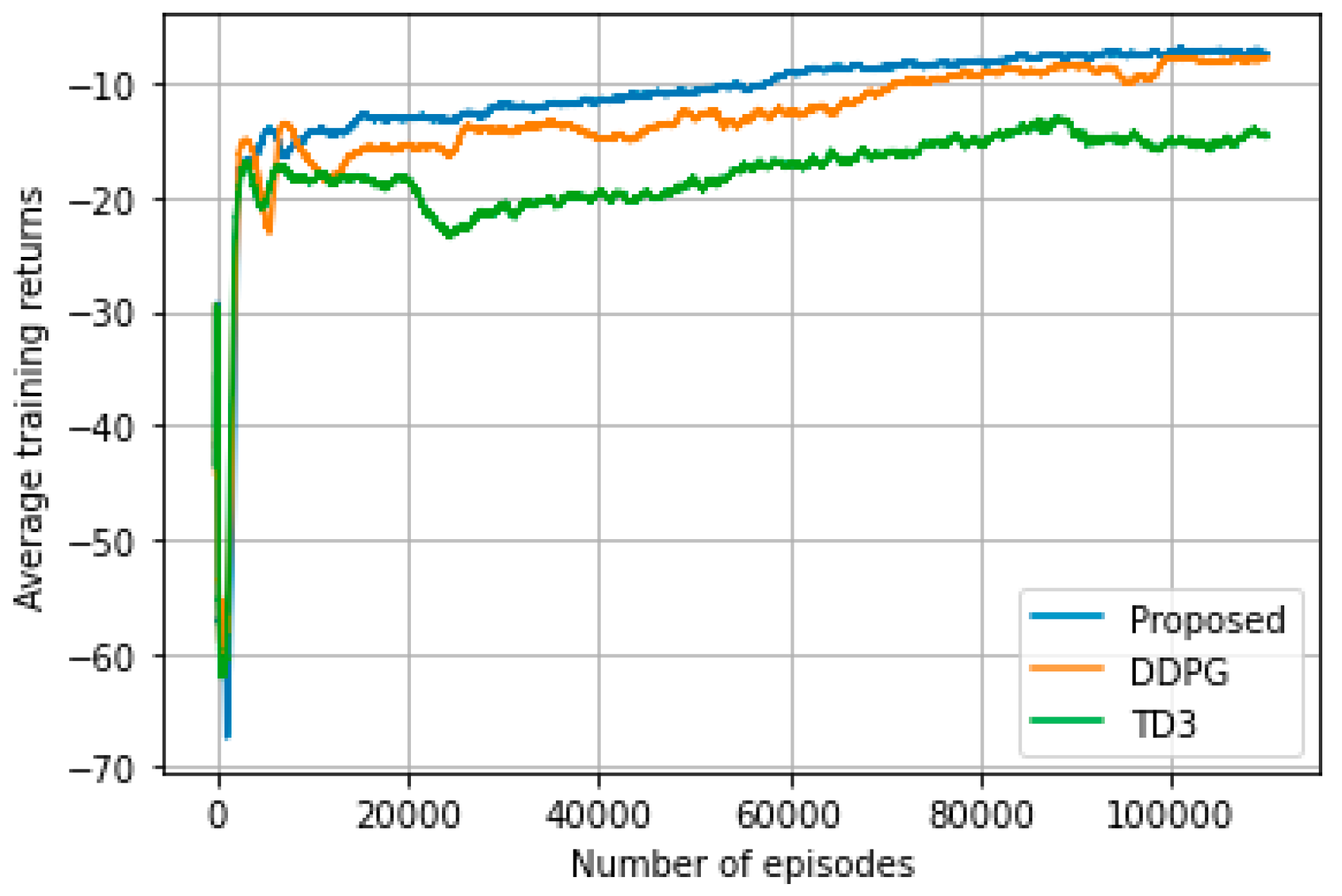

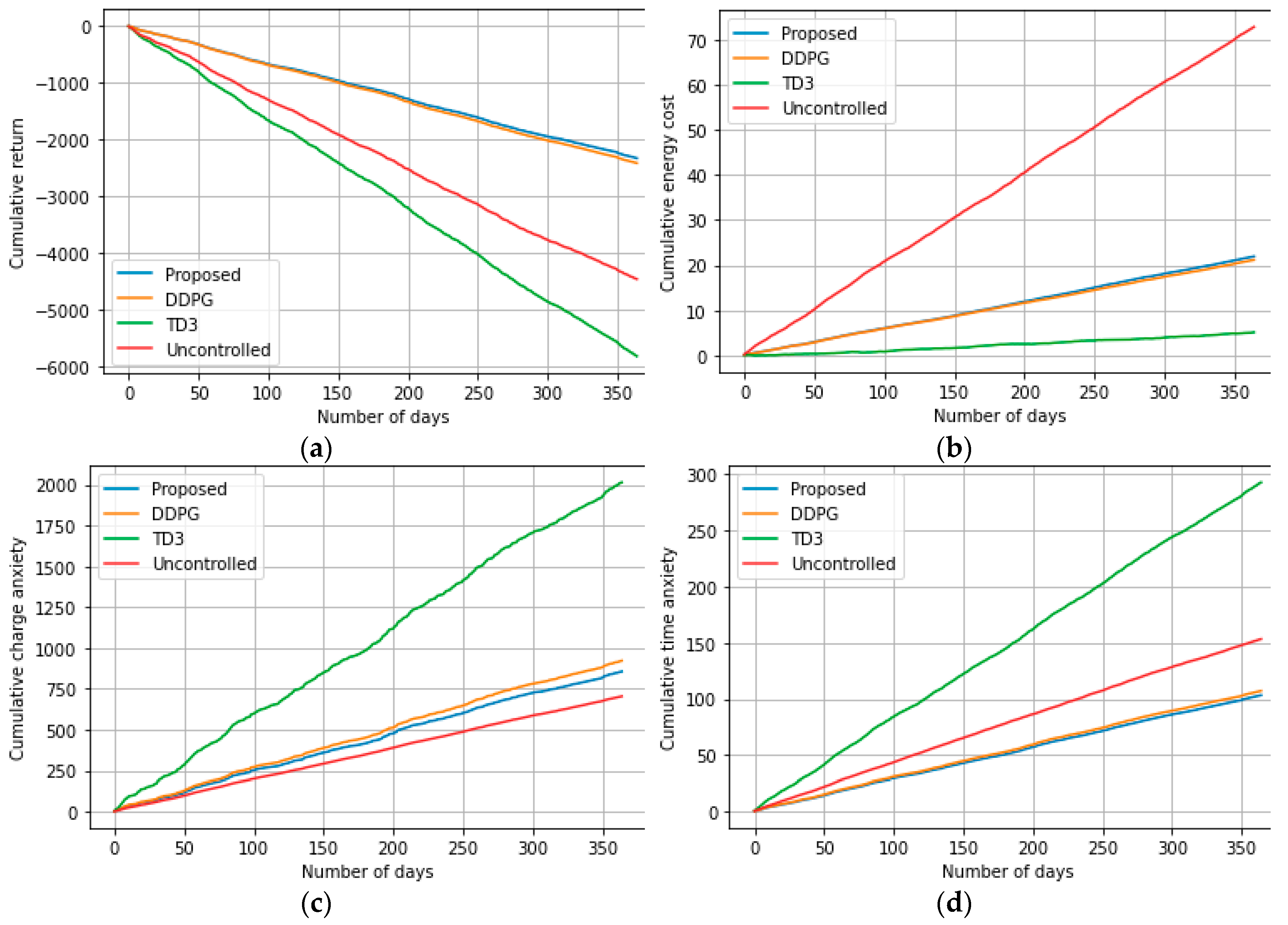

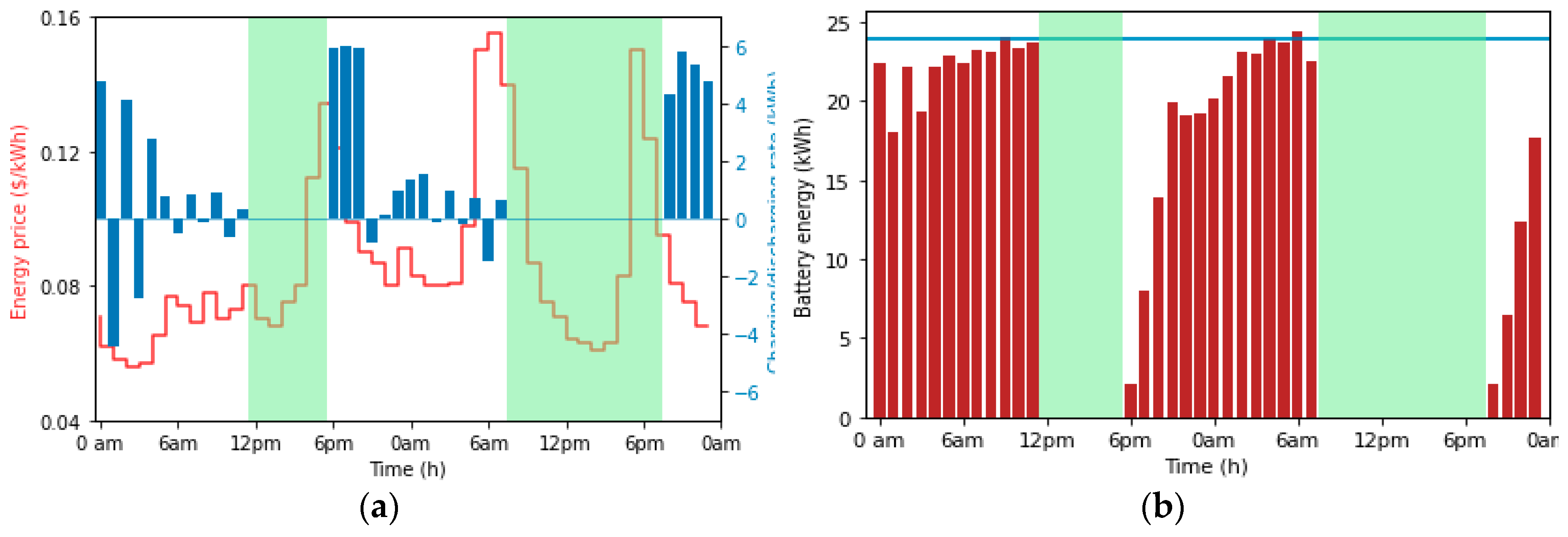

The objective of this paper is to develop a charge scheduling strategy that ensures the maximum battery SOC by the time of departure while minimizing the energy costs through a controlled and dynamic decision-making technique. To obtain the optimal strategy for EV charging control, we propose a model-free DRL-based algorithm. The main contributions of this article are as follows.

1) User’s anxiety is redefined in terms of the remaining energy and the remaining charging duration. More specifically, user’s anxiety consists of two components: a) the difference between the present battery SOC and the target SOC, and b) the ratio of this difference to the remaining charging duration. Unlike the previously mentioned studies, in which the anxiety was applied only at the departure time, this work applies the anxiety over the entire time horizon of the charging duration to address the influence of time on user’s anxiety.

2) A dynamic weight allocation technique is used to implement the time-varying importance of the components of the reward function. Upon arrival, the weight of the energy cost is the highest, and the weight of the anxiety components is the lowest. In sharp contrast, at departure, the weight of the energy cost is the lowest, and the weight of the anxiety components is the highest.

3) This paper proposes an episodic approach to the widely used Deep Deterministic Policy Gradient (DDPG) algorithm [31], in which each episode corresponds to the complete charging duration between the arrival and departure times. Moreover, this paper incorporates the target policy smoothing and delayed policy update techniques from the Twin Delayed DDPG (TD3) algorithm [32] to address the inherent instability and overfitting problems of the DDPG algorithm.

4) Finally, a real-world dataset is used to derive a realistic model of the user’s random driving behavior, such as arrival time, departure time, and battery SOC.

The performance is compared with two state-of-the-art DRL algorithms: DDPG and TD3. The simulation results demonstrate the effectiveness of the proposed algorithm in terms of energy cost minimization and energy demand satisfaction.
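
As a rough illustration of how the two anxiety components and the time-varying weights could enter a per-step reward, consider the sketch below. It is not the formulation used in this paper: the linear weight schedule, the clipping of the SOC shortfall at zero, the one-hour floor on the remaining duration, and all function names are assumptions made only for this example.

```python
from typing import Tuple


def anxiety_components(soc: float, target_soc: float, hours_left: float) -> Tuple[float, float]:
    """Return (a) the SOC shortfall and (b) the shortfall per remaining hour."""
    shortfall = max(target_soc - soc, 0.0)        # assumption: no anxiety once the target is met
    per_hour = shortfall / max(hours_left, 1.0)   # assumption: floor avoids division by zero at departure
    return shortfall, per_hour


def dynamic_weights(t: int, duration: int) -> Tuple[float, float]:
    """Assumed linear schedule: cost weight falls from 1 to 0, anxiety weight rises from 0 to 1."""
    progress = t / duration if duration > 0 else 1.0
    return 1.0 - progress, progress


def step_reward(t: int, duration: int, price: float, energy_kwh: float,
                soc: float, target_soc: float) -> float:
    """Negative weighted sum of the energy cost and the two anxiety components."""
    w_cost, w_anxiety = dynamic_weights(t, duration)
    shortfall, per_hour = anxiety_components(soc, target_soc, duration - t)
    return -(w_cost * price * energy_kwh + w_anxiety * (shortfall + per_hour))


# At arrival only the energy cost matters; at departure only the anxiety does.
r_arrival = step_reward(t=0, duration=10, price=0.2, energy_kwh=5.0, soc=0.5, target_soc=1.0)
r_departure = step_reward(t=10, duration=10, price=0.2, energy_kwh=5.0, soc=0.5, target_soc=1.0)
print(r_arrival, r_departure)
```

With a schedule of this kind, the agent is free to wait for cheap hours early in the session, while an unmet SOC target is penalized increasingly as departure approaches, without any manually tuned constant coefficient.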

The rest of the paper is structured as follows: Section II discusses the system model and a detailed MDP formulation. In Section III, a brief background of DRL followed by the proposed algorithm is presented. In Section IV, performance evaluation of the proposed algorithm along with detailed analysis of the results is provided. Finally, Section V concludes this paper.

Short Biography of Authors

Ishtiaque Zaman received the B.Sc. degree in electrical and telecommunications engineering from North South University, Dhaka, Bangladesh, in 2011, the M.S. degree in electrical engineering from Lamar University, USA, in 2017, and the Ph.D. degree in electrical engineering from Texas Tech University, USA, in 2022. He worked as an Engineering Development Group Intern at MathWorks, Inc., USA, during the fall and summer of 2018 and 2020, respectively, where he developed and automated a hardware-in-the-loop simulation workflow of electrical components for efficient deployment. His research interests include machine learning and deep learning technologies and their applications in energy management, smart grid, renewable energy, and load forecasting.

Shamsul Arefeen received the B.Sc. degree in electrical and electronics engineering from Islamic University of Technology, Dhaka, Bangladesh, in 2003, the M.S. degree in electrical engineering from Texas Tech University, USA, in 2018, and the Ph.D. degree in electrical engineering from Texas Tech University, USA, in 2022. He is currently an Assistant Professor at SUNY Morrisville. He was a part-time instructor of freshman engineering courses on computational thinking and data science at Texas Tech University. He worked as a graduate intern at the National Renewable Energy Laboratory (NREL), USA, during the summer of 2021, where he contributed to the development of bifacial radiance software for bifacial photovoltaic performance modeling. His research interests include renewable energy systems, electric vehicles, smart grid, machine learning, and deep learning.

S. L. S. Chetty Vasanth received the B.Tech. degree in electrical and electronics engineering from SRM Institute of Science and Technology, Chennai, India, in 2021. He is currently pursuing an M.S. degree in electrical engineering at Texas Tech University, USA, in 2023. Additionally, he is working as a graduate student assistant for engineering courses on control systems analysis and electrical circuits. His research interests include renewable energy systems, smart grid, power electronics, machine learning, and power systems.

Tim Dallas is a senior member of IEEE. He received the B.A. degree in physics from the University of Chicago, USA, in 1991 and the M.S. degree in physics and the Ph.D. degree in applied physics from Texas Tech University, USA, in 1993 and 1996, respectively. He worked as a Senior Technology and Applications Engineer for ISI Lithography, TX, USA, from 1997 to 1998 and was a Post-doctoral Research Fellow in Chemical Engineering at the University of Texas, Austin, USA, from 1998 to 1999, prior to his faculty appointment at Texas Tech University in 1999. He is currently a Professor with the Department of Electrical and Computer Engineering at Texas Tech University. His research interests include microelectromechanical systems, nanotechnology, solar energy, and engineering education. He has developed educational technologies for deployment to under-served regions of the world. His research group has developed MEMS-based educational technologies that have been commercialized, expanding dissemination. Dr. Dallas served as an Associate Editor of IEEE Transactions on Education.

Miao He (S’08, M’13, SM’18) received the B.S. degree from Nanjing Univ. of Posts and Telecom. in 2005, the M.S. degree from the Tsinghua University in 2008, and the Ph.D. degree from Arizona State University in 2013, all in Electrical Engineering. He joined the faculty of Texas Tech University in 2013 and is now an associate professor at the Department of Electrical and Computer Engineering. His research interests include deep learning applied to modeling, control, and optimization of smart grids, power networks, and renewable energy.