1. Introduction

Discrete-time models are used in biology to capture the dynamic nature of biological systems. In particular,

Boolean networks are models that also assume discrete values for their variables, and allow us to have a qualitative view of the dynamics of a system. The origin of Boolean networks can be traced to Kauffman’s work [

1], where he introduces

random genetic nets to test the hypothesis that modern organisms are randomly assembled molecular automata. Later works, such as that of Kauffman [

2] where maps continuous biochemical control networks to their discrete analogue, and that of Thomas [

3] who attempts to formalize genetic situations through models of complex control circuits, established the usefulness of Boolean network models to study biological regulatory systems.

Many studies highlight the usefulness of models of Boolean networks to analyze regulatory networks. Some examples are: a) the dynamic properties of the core network that controls the mammalian cell cycle [

4], b) simulation of the stomatal response in plants with a signal transduction network model [

5], c) virulence and response to pathogens resulting from the interactions between bacteria and the immune components of an infected host [

6], d) the modeling of the

lac operon in

Escherichia coli [

7], and e) the modeling of the network of regulatory interactions that occur through various stages of embrionic development of the

Drosophila [

8]. Most importantly, such type of models of regulation and signalling serves to study a multitude of factors in an integrated way, rather than studying them in isolation.

In this paper, we present and compare various methodologies for analyzing the dynamics of some Boolean networks under the simplest of assumptions. First, we analyze the regimes of certain Boolean networks; then, we study the transitions between states in some simple Boolean networks applying perturbations. This work intends to introduce some useful tools for potential applications in the modeling and analysis of genetic regulatory networks, as dynamical systems with a causal regulatory network that can be found via gene perturbations.

2. Background

Boolean networks, also known as

N-

k networks, are characterized by two parameters: the number of nodes

N and the in-degree

k. The state that each node can acquire is only one of two possible states -0 and 1, and is determined by a set of rules called

boolean functions,

where the states

of the nodes at time

are determined by the Boolean functions

that depend on certain nodes at time

t (in this work, we make the assumption of

synchronous update; but there exist more flexible modeling frameworks that incorporate randomness in the duration of processes, see [

9]). The arguments of Boolean functions, as well as their order, are specified by a

connectivity graph and a

connectivity matrix. Boolean functions are represented by a

truth table. A Boolean network has a

transition diagram between all

possible states (see

Figure 1).

2.1. Random Boolean Networks

When Boolean functions are extracted from a random sample of 0s and 1s they become

random boolean functions, and the network becomes a

random boolean network (RBN) [

10]. Boolean functions can be constructed with a uniform probability distribution. Or they can be constructed from a binomial probability distribution with parameter

p, this parameter being therefore the probability that each of the Boolean functions returns a “1” as output. This parameter will also determine the type of system:

Chaotic, if

Ordered, if

At the edge of chaos, if

In the last case,

is known as the

critical in-degree. Since these are probabilities, this is only true when the number of nodes

N is very large.

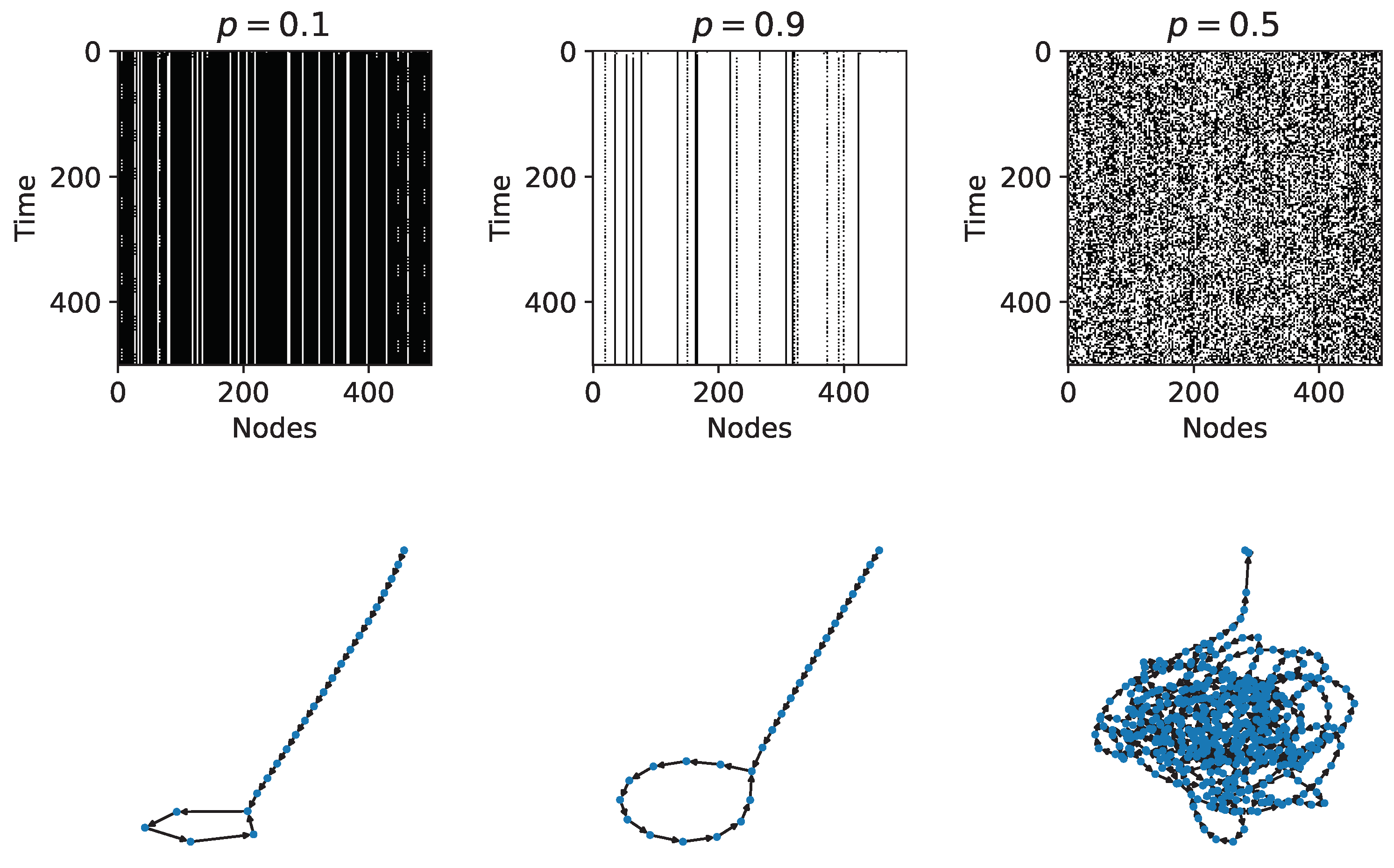

Figure 2 shows time evolution diagrams for three RBNs with 500 nodes and in-degree

; two of them are in the ordered regime (

and

) and the other one (

) is in the chaotic regime. For the RBN with

there is a 90 percent probability that the Boolean functions deactivate their respective node; this can be confirmed in its time evolution diagram where there are many more deactivated nodes and in the rapid fall in a limit cycle attractor. Similarly, for the RBN with

there is a 90 percent probability that the Boolean functions activate their respective node, and a rapid fall in a limit cycle attractor is observed. On the other hand, it can be seen that the RBN with

is a chaotic system.

The time evolution and transition diagrams such as in

Figure 2 allow us to analyze qualitatively the regimes in RBNs; for a more quantitative approach, we need a measure that is capable of capturing such regimes and the transitions between them. In the next section, we introduce some methods of measure on space-time patterns as candidates for the task at hand (for an alternative classification of chaotic/ordered RBNs, which is made from measurements on the basins of attraction of the state transition graphs, see [

11]).

3. Methodology

The act of explaining what our eyes see has always been a human need. The act of trying to understand reality, which some have called science, common sense, etc., is nothing more than classifying randomness. When we discover the mechanism behind a phenomenon, we determine that it is not random and has a specific cause. To do this, a phenomenon can be abstracted to a chain of symbols and we call that “the object”. However, measuring randomness is a challenge, and there are several measures at our disposal. In the next subsections, we review some of these measures.

3.1. Information Theory and Entropy

Information Theory [

12] is a branch of mathematics that deals with the quantification and communication of information. One of the fundamental concepts in information theory is the concept of entropy. In particular, the Shannon entropy is a measure of the amount of uncertainty or information content in a random variable.

The Shannon entropy

of a discrete random variable

X with probability mass function

is defined as

where

is the set of all possible values of

X. The logarithm is usually taken to base 2, so the entropy is measured in bits.

The Shannon entropy has a number of important properties, including:

It is always non-negative, .

It is maximized when all the possible values of X are equally likely, i.e., when for all . In this case, the entropy is .

It is minimized when X is a deterministic function of another random variable Y, i.e., when there exists a function f such that with probability 1.

In the last case, , since there is no uncertainty or information content in X beyond what is already contained in Y.

Shannon entropy is a useful tool in a variety of applications, including coding theory, data compression, and cryptography [

13]. The Shannon entropy indicates how much “surprise” or “unexpectedness” there is in the outcomes of a random variable. The higher the entropy, the more uncertain or unpredictable the random variable is, and the more information is needed to describe or communicate its outcomes. Conversely, the lower the entropy, the more certain or predictable the random variable is, and the less information is needed to describe or communicate its outcomes.

3.2. Compression Algorithms and Compressibility

A

lossless compression algorithm is any encoding procedure that aims to represent a certain amount of information using or occupying a smaller space, making an exact reconstruction of the original data possible. An example is the Lempel-Ziv-Welch (LZW) algorithm [

14], a dictionary-based lossless compressor. The algorithm uses a dictionary to map the sequence of symbols to codes that take up less space. The LZW algorithm performs well, especially with files that have repetitive sequences. Another example of a lossless compression algorithm is the free and open-source file compression program called BZip2, which uses the Burrows-Wheeler algorithm [

15], which only compresses individual files; It relies on standalone external utilities for tasks such as handling multiple files, encryption, and file splitting.

From the lossless compression of a piece of data we can define its compressibility rate as the length of the codified data divided by the length of the original data. This is an intuitive measure of randomness because we expect that the more random a piece of data or string is, the more difficult to compress it.

3.3. Algorithmic Complexity and BDM

Program size complexity, also known as

algorithmic complexity, is a measure that quantifies algorithmic randomness. The algorithmic complexity (AC) or

algorithmic information content of a string

s is defined as the length of the shortest computer program

p that is executed in a prefix-free Universal Turing Machine and generates

s as an output [

16,

17]:

The difference between the original length and the minimum program size determines the complexity of data

s. A string

s is said to be random if

(in bits)

(for a more intuitive measure of complexity that combines the notions of algorithmic information content and time, see the concept of Charles Bennett’s logical depth [

18]).

A technical drawback is that

K is not computable due to the Halting problem [

19], since we can’t always find the shortest programs in a finite time without having to run all the computer programs, which means having to wait forever in case they never stop. Nevertheless, it is possible to randomly produce programs that produce a specific result to estimate its AC from above; for this purpose, the

algorithmic probability of a binary string

s is defined as the sum over all (prefix-free) programs with which a (prefix-free) Universal Turing Machine generates

s and stops:

The

Coding Theorem Method (CTM) [

20,

21] further establishes the connection between

and

,

where

c is a fixed constant, independent from

s. This theorem implies [

22] that the output frequency distribution of random computer programs to approximate

can be converted to estimates of

using

Finally, the

Block Decomposition Method (BDM) [

23] is a powerful tool to estimate algorithmic complexity. This method allows the decomposition of a large system in smaller and more manageable pieces, facilitating the estimation of its total complexity. By dividing the data into fragments and precomputing CTM calculations on these fragments [

24,

25], BDM provides a measure that lies between entropy and algorithmic complexity, allowing a better understanding of the structure and behavior of the system under study. In general, for

w-dimensional objects, BDM is defined as

where the set

is composed of the pairs

,

is an element from the decomposition of

X (specified by a partition

, where

is a submatrix of

X) and

is the multiplicity of each

.

3.4. Perturbation Analysis

It is worth asking whether the randomness measures outlined above can serve us for more than simply determining how random an object is or how chaotic its temporal evolution is. Specifically, we can ask if a robust measure of randomness can help us characterize or even control a dynamic system.

The

perturbation analysis or

reprogrammability analysis is based on the change in the randomness of a dynamic system subjected to perturbations. Its purpose is to track in detail the changes in algorithmic information content (estimated by local observations) produced by natural or induced perturbations in evolving complex open systems. Algorithmic models can be derived from partial observations, and the algorithmic probability that these models produce the behavior of the system under analysis can be estimated. Perturbation analysis can also help us reconstruct space-time diagrams of dynamic systems [

26,

27].

A simple example will allow us to illustrate the basic concepts of perturbation analysis. Let G be a graph with a set of nodes and a set of vertices . The dynamics of G can be defined by transitions between different states. Let denote the operation of removing a vertex e from G. The difference would be the shared mutual algorithmic information, or algorithmic information dynamics, of G and , where it is considered that G is a time-dependent system and that can be the result of the temporal evolution of G. We have the following cases:

. The vertex e is contained in the algorithmic description of G, and can be recovered from the latter.

. The vertex e does not contribute to the description of G. The relationship between G and is causal.

. G and do not share causal information. Removing e results in a loss.

. There are two possibilities: a) cannot be explained by G alone, that is, is not algorithmically contained in G, and therefore e is a fundamental part or generative causal mechanism of G; and b) e is not part of G but it is noise.

In the first two cases, it is said that element e is not essential in the explanation of G but it is an element that is produced by the natural course of evolution of G. In the last two cases, if , element e is said to make a high causal contribution since its removal moves G more toward randomness. And in the last case, if , then the removal of element e moves G away from randomness (or towards simplicity), so it is unlikely that element e is part of the generating mechanism of G, i.e., it is more likely that element e is noise or part of another system.

In this work, a basic perturbation analysis will be carried out on the state transition graph of some RBNs using the randomness measures already outlined in the previous subsections.

4. Results and Discussion

In this section we present and discuss some results about the time evolution of RBNs whose connectivity graphs were generated like this: for each of the

N nodes, select one node

k times as an adjacent node following a uniform probability distribution over all

N nodes. We used the randomness measures of

Section 3 over boolean functions (truth tables) and time evolution diagrams. Entropy was calculated from the relative frequencies of 0s and 1s. For the calculation of compressibility, an array was first converted to a one-dimensional string concatenating the rows from top to bottom, and then such string was compressed using the LZW algorithm. Finally, Algorithmic Complexity for an array was estimated through the Block Decomposition Method (for the estimation of AC by BDM we used the

pybdm library [

28])

4.1. RBNs with Nodes

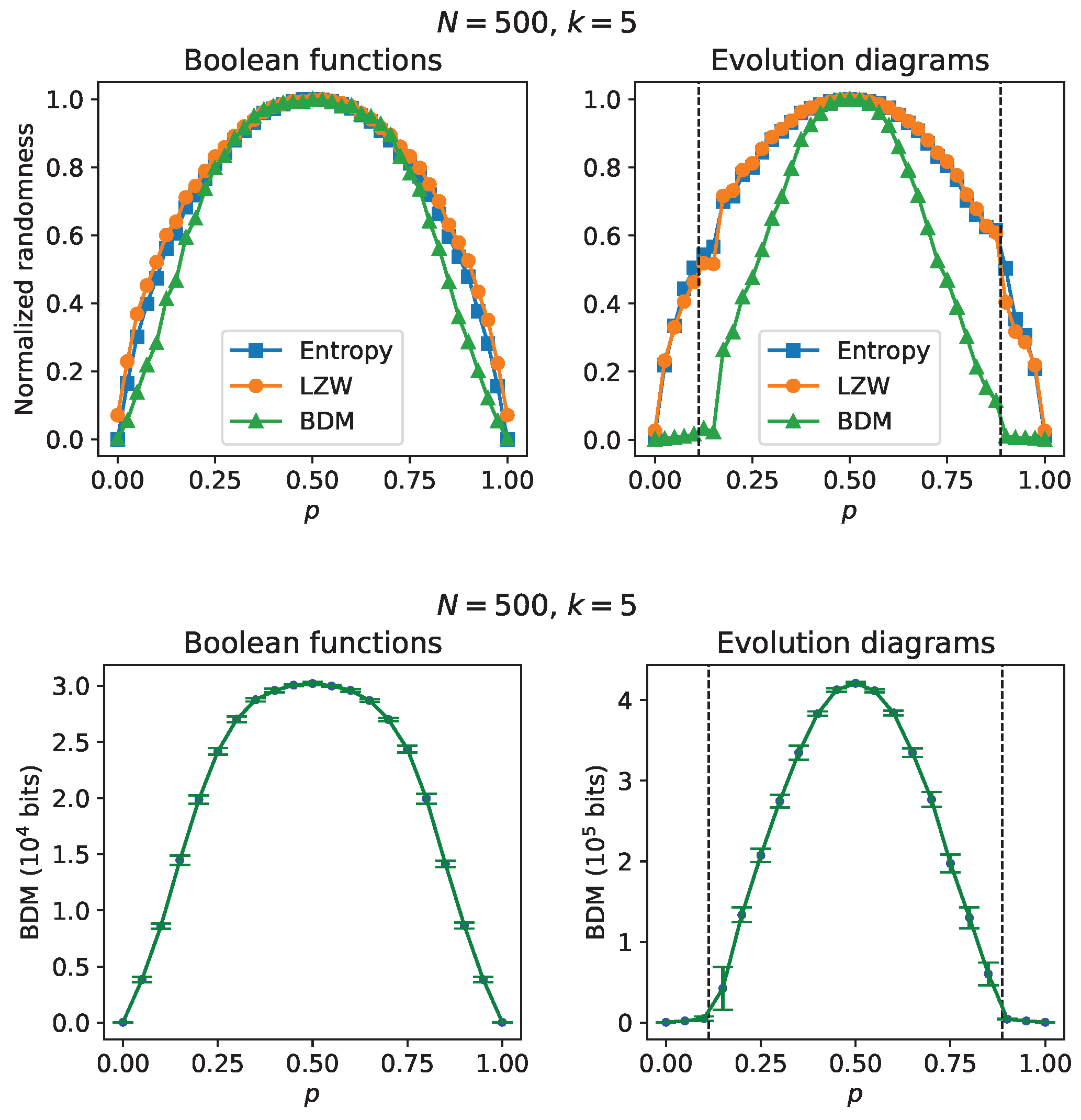

Figure 3 presents results on simulations of RBNs with

and

that share the same initial state and the same connectivity matrix, both generated from uniform probability distributions. At the top of

Figure 3 there are randomness estimates, for both the Boolean functions and the time evolution diagrams, as a function of parameter

p. The results concerning the Boolean functions show “causality gaps” between the entropy/LZW estimates and the AC estimates using the BDM, and could be anticipated since the Boolean functions were constructed from a binomial probability distribution with parameter

p (see [

23]). The causality gaps are more noticeable in the randomness estimates of the time evolution diagrams; it can even be seen that the AC estimation is capable of revealing the transition to the chaotic regime on both sides of the ordered regime (

and

), while both entropy and LZW are insensitive to such transition (beyond some small fluctuations) and give results from which it can only be suggested that the evolution diagrams arise from a binomial probability distribution. For greater statistical rigor, estimates of average algorithmic complexity from samples of size 10 of RBNs generated with a fixed value of p are shown at the bottom of

Figure 3; as in the upper part, we can see the transitions to the chaotic regime.

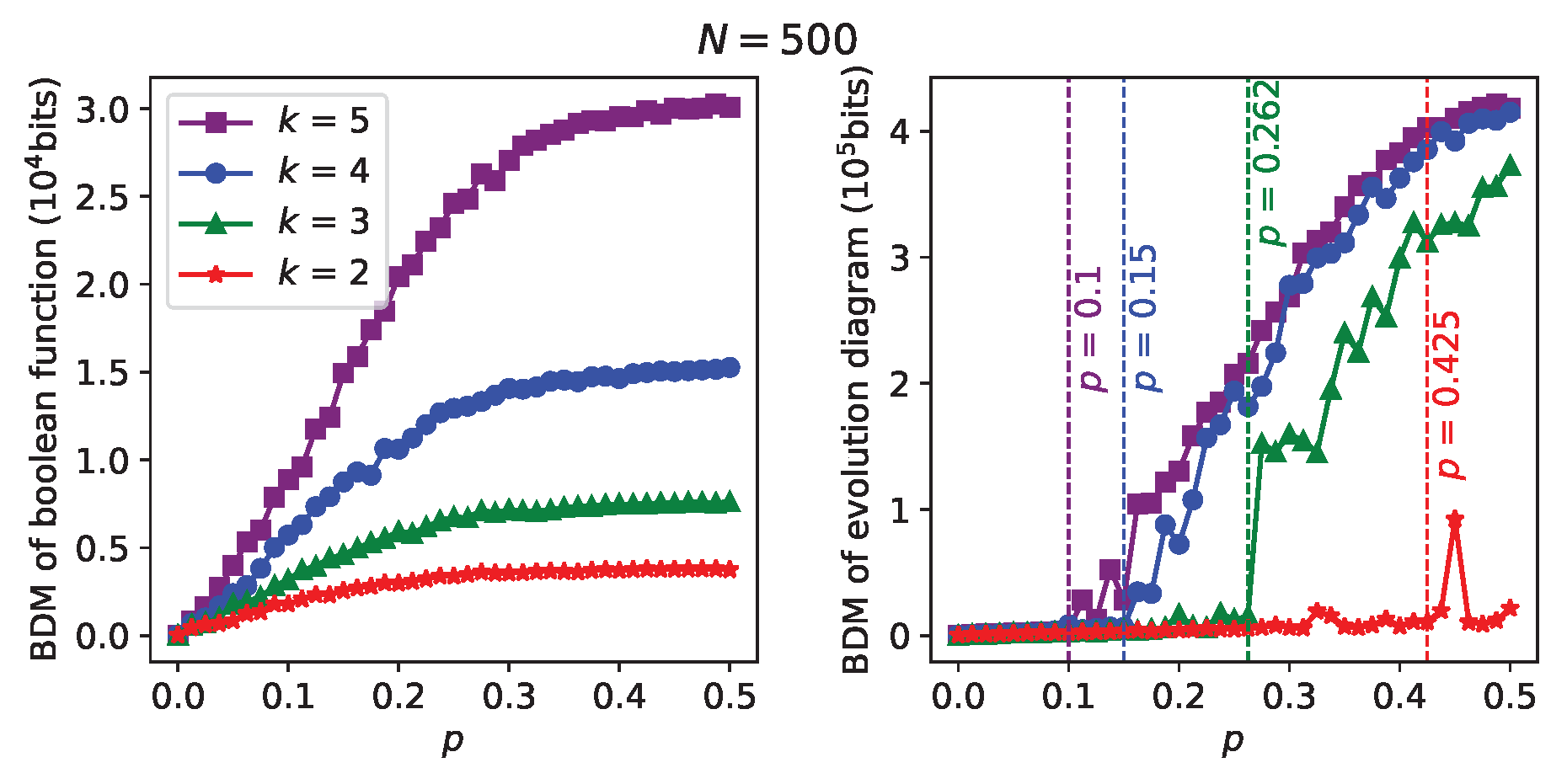

Next,

Figure 4 shows some series of BDM (Boolean function) vs p, and BDM (evolution diagram) vs

p, obtained for each specific value of in-degree

k. In this case,

p takes on 41 values evenly spaced in the interval

. Simulations were carried out with connectivity matrices generated randomly from uniform probability distributions, with the RBNs for a specific

k value sharing the same connectivity matrix. In each of the series, we can notice a sudden growth of the time evolution diagram’s BDM starting from a certain

p value (marked with vertical lines). Such

p values are the critical

p values that point out the transition to the critical regime in each series, as can be confirmed by comparison with the theoretical

p values obtained from the chaos condition in RBNs (see

Table 1). We can also notice that the critical

p value increases when going from one series to another with lower

k, following the chaos condition in RBNs. Furthermore, as expected, in each series it is observed that an increase in BDM of the evolution diagrams goes hand in hand with an increase in BDM of the Boolean functions; it can also be observed that the series with greater

k have greater BDM values in the time evolution diagrams.

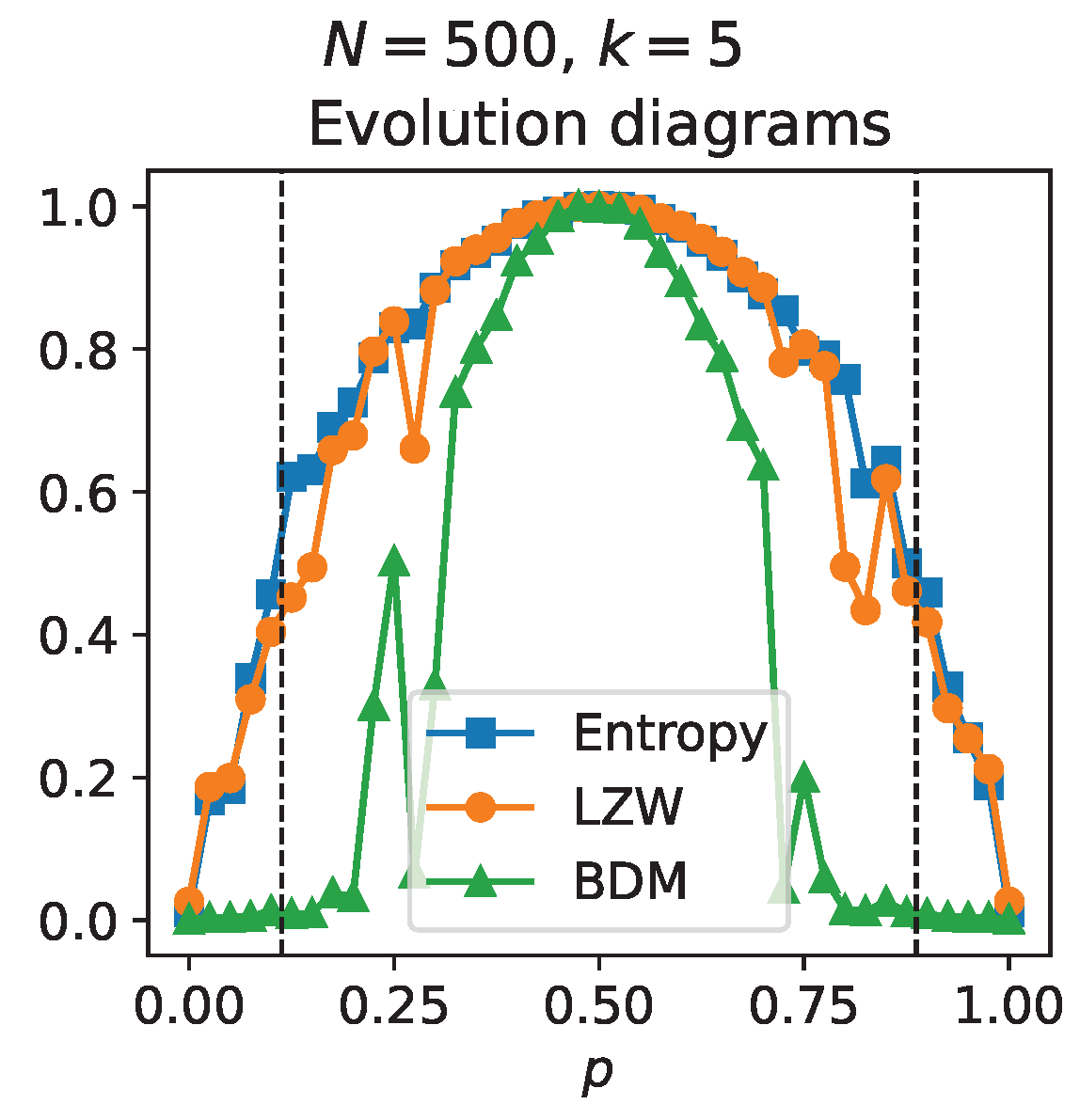

The results presented up to this point were obtained from simulations that consider connectivity matrices generated from uniform probability distributions. However, it is possible that non-uniform probability distributions introduce a change in the dynamics and therefore also introduce changes in the critical points. Although considerations about non-uniform distributions are beyond the objectives of this work,

Figure 5 shows the results obtained by repeating the simulations carried out for

Figure 3, but this time the connectivity matrix was generated from a binomial probability distribution with

and

(that is, the nodes of the Boolean network are labeled from 0 to 499 and the quantity that follows the binomial distribution is the probability that one of these nodes is chosen to determine each of the entries in the connectivity matrix; the condition

implies that the nodes with the highest probability of being chosen are nodes 249 and 250). In this case, transitions towards the chaotic regime continue to be observed and these can be revealed more noticeably by AC estimates; although, unlike the results presented in

Figure 3, the critical values of

p do not coincide with the theoretical ones (indicated with dotted lines in

Figure 5) expected from a connectivity matrix constructed from a uniform probability distribution. The latter shows a change in the dynamics and the critical points when taking into account non-uniform probability distributions.

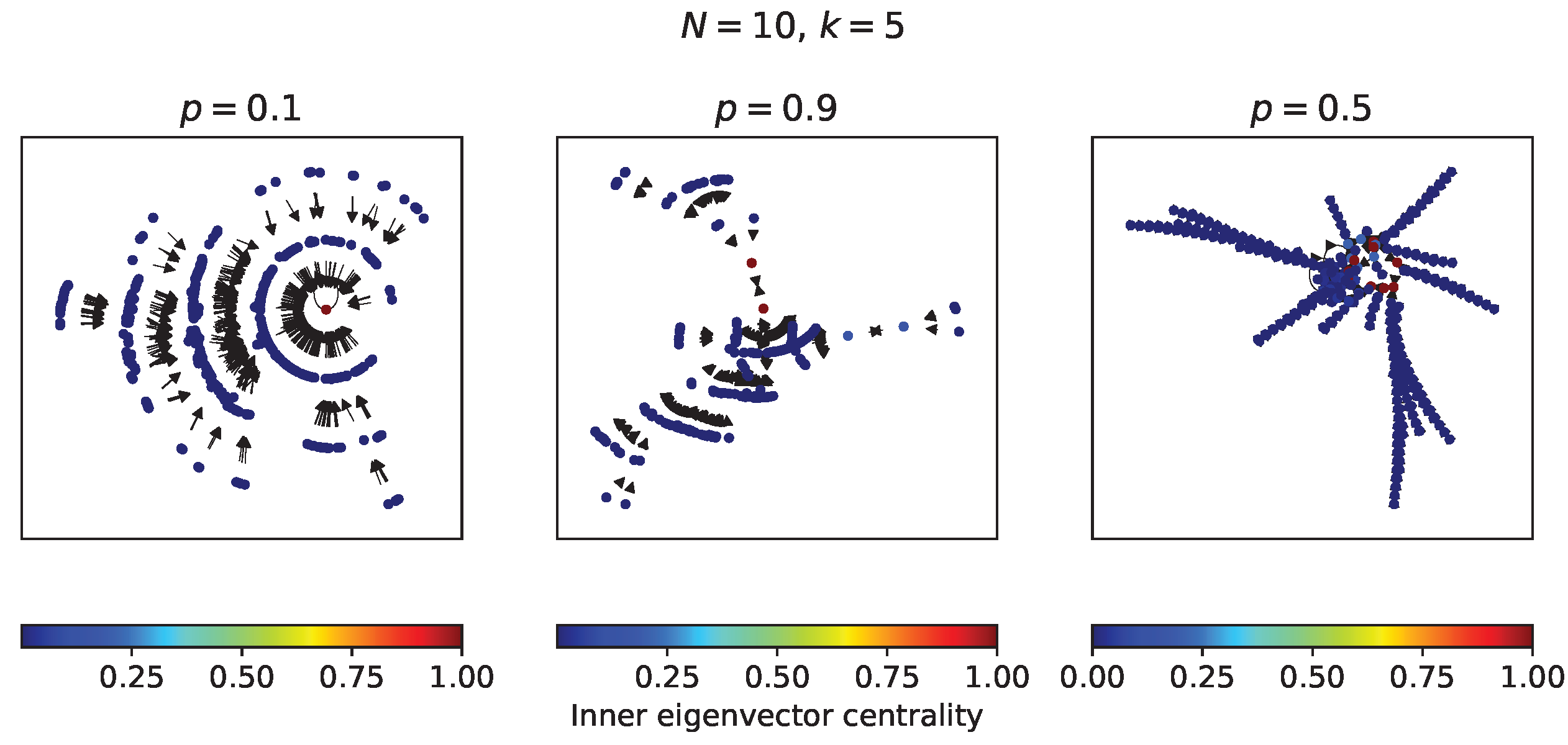

4.2. RBNs with and

In this subsection, some RBNs with 10 nodes and

in-degree of 5 are analyzed from their complete transition diagram - which have

possible states. In

Figure 6 there are three transition diagrams, two for RBNs corresponding to the opposite values

and

, and one for an RBN with a value of

. The

internal eigenvector centrality measure or

prestige of the nodes [

29,

30] in each diagram was calculated. In the transition diagram of the RBN with

a single fixed point attractor is observed, in which all states fall after a few time steps. In the transition diagram of the RBN with

a similar behavior is observed with two limit cycle attractors. On the other hand, in the RBN with

several attractors with more time steps are observed. It can be noted that the attractors have the greatest prestige in each transition diagram.

The transition diagrams obtained are conducive to performing perturbation analysis. In this work, perturbations were carried out on our RBNs according to the following procedure:

- (1)

Perform randomness measurements of the unperturbed transition diagram.

- (2)

Create a list of nodes with the highest prestige.

- (3)

Perturbation: locate the node with the highest prestige in the list, disconnect it from the transition diagram, and perform randomness measurements.

- (4)

Remove the most prestigious node from the list.

- (5)

Restore disconnected node to transition diagram.

- (6)

Repeat steps 3, 4, and 5 until the list of most prestigious nodes is exhausted.

Let’s call this perturbation method perturbation of most relevant nodes. Similarly, let’s call perturbation of less relevant nodes the perturbation method where nodes with lower prestige are disconnected (modifying step 2).

Figure 7 shows the results of performing the aforementioned perturbations. The 20 most relevant-prestigious nodes and the 20 least relevant-prestigious nodes in each transition diagram in

Figure 6 were perturbed. In each perturbation, the

relative randomness change (defined here as

) of the respective transition diagram was calculated from its adjacency matrix. The perturbations series obtained show that the compressibility by LZW is blind to the perturbations, as is the entropy (except for a few perturbations), while the algorithmic complexity - estimated by BDM - is sensitive in the face of these perturbations.

In the graphs at the right of

Figure 7, values of relative shared mutual algorithmic information (AID) between the perturbed and unperturbed transition diagrams of

Figure 6 are shown. Here, we can observe that for the transition diagram with

the first least relevant node makes a relatively high causal contribution to the transition diagram (because

for that perturbation). This is to be expected since the least relevant nodes are found on the periphery of the transition diagram to which they belong, which makes them more preceding nodes in the temporal evolution of the respective RBN. Regarding the perturbation of more relevant nodes, we see a few AID inverted peaks under a constant low absolute value; this tells us that the majority of less relevant nodes are not essential in the explanation of the system, so it is likely that they are elements produced by the natural course of evolution of the system. The latter is to be expected since the most relevant-prestigious nodes are usually close to the attractors. A similar analysis follows from the results for the transition diagram with

, although in this case the first least relevant node does not take part in the algorithmic content of the transition diagram (because

). Finally, for the transition diagram with

, there are more nodes with low causal contribution (

) with respect to the other two transition diagrams; the existence of such nodes with low causal contribution is perhaps due to the fact that the relevant nodes were selected from among the several basins of attraction in the transition diagram, which are separated from each other.

5. Conclusions

We used three measures of randomness to analyze the dynamics of RBNs with synchronous updates. Two main results were shown in this work: a) the randomness estimation by BDM is capable of showing jumps from null values of randomness and “causality gaps”, which makes BDM the randomness measure capable of detecting regime changes in a more noticeable way with respect to the other measures of randomness; b) BDM is the only randomness measure that is capable of revealing the causal contribution of certain states according to the order they occupy in the temporal evolution of an RBN. The theoretical results presented in sub

Section 4.2 introduce the application of reprogrammability analysis in Boolean networks, potentially leading to practical applications. Specifically, we believe that a future work may be the extension of the analysis to RBN models with asynchronous updates [

9], or the application of perturbation analysis on connectivity graphs in regulatory network models to help simplify such graphs and their Boolean rules/functions —i.e., to perform gene knock-outs in order to find the causal regulatory network.

Finally, a brief explanation is in order for the accuracy of the Block Decomposition Method. For larger networks, some effects may be lost, but precisely the sensitivity of the method holds for small perturbations which popular lossless compression would fail [

23].

Funding

This research received no external funding.

Acknowledgments

(Remains pending).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| RBN |

Random Boolean Networks |

| AC |

Algorithmic Complexity |

| CTM |

Coding Theorem Method |

| BDM |

Block Decomposition Method |

References

- Kauffman, S. Metabolic stability and epigenesis in randomly constructed genetic nets. Journal of Theoretical Biology 1969, 22, 437–467. [Google Scholar] [CrossRef] [PubMed]

- Glass, L.; Kauffman, S.A. The logical analysis of continuous, non-linear biochemical control networks. Journal of Theoretical Biology 1973, 39, 103–129. [Google Scholar] [CrossRef] [PubMed]

- Thomas, R. Boolean formalization of genetic control circuits. Journal of Theoretical Biology 1973, 42, 563–585. [Google Scholar] [CrossRef] [PubMed]

- Fauré, A.; Naldi, A.; Chaouiya, C.; Thieffry, D. Dynamical analysis of a generic Boolean model for the control of the mammalian cell cycle. Bioinformatics 2006, 22, e124–e131. [Google Scholar] [CrossRef] [PubMed]

- Li, S.; Assmann, S.; Albert, R. Predicting essential components of signal transduction networks: A dynamic model of guard cell abscisic acid signaling. PLoS biology 2006, 4, 1732–1748. [Google Scholar] [CrossRef] [PubMed]

- Thakar, J.; Parette, M.; Kirimanjeswara, G.; Harvill, E.; Albert, R. Modeling Systems-Level Regulation of Host Immune Responses. PLoS computational biology 2007, 3, e109. [Google Scholar] [CrossRef] [PubMed]

- Veliz-Cuba, A.; Stigler, B. Boolean models can explain bistability in the lac operon. Journal of computational biology : a journal of computational molecular cell biology 2011, 18, 783–794. [Google Scholar] [CrossRef] [PubMed]

- Albert, R.; Othmer, H.G. The topology of the regulatory interactions predicts the expression pattern of the segment polarity genes in Drosophila melanogaster. Journal of Theoretical Biology 2003, 223, 1–18. [Google Scholar] [CrossRef] [PubMed]

- Chaves, M.; Albert, R.; Sontag, E.D. Robustness and fragility of Boolean models for genetic regulatory networks. Journal of Theoretical Biology 2005, 235, 431–449. [Google Scholar] [CrossRef]

- Shmulevich, I.; Dougherty, E.R. Probabilistic Boolean Networks; Society for Industrial and Applied Mathematics, 2010; [https://epubs.siam.org/doi/pdf/10.1137/1.9780898717631]. [CrossRef]

- Wuensche, A. Classifying cellular automata automatically: Finding gliders, filtering, and relating space-time patterns, attractor basins, and theZ parameter. Complexity 1999, 4, 47–66. [Google Scholar] [CrossRef]

- Shannon, C.E. A Mathematical Theory of Communication. The Bell System Technical Journal 1948, 27, 379–423. [Google Scholar] [CrossRef]

- Stone, J.V. Information Theory: A Tutorial Introduction, 1st ed.; Sebtel Press, 2015.

- Ziv, J.; Lempel, A. Compression of individual sequences via variable-rate coding. IEEE Transactions on Information Theory 1978, 24, 530–536. [Google Scholar] [CrossRef]

- Snyder, D. bzip2. https://gitlab.com/bzip2/bzip2/, 2023. Accessed: (, 2024). 15 January.

- Kolmogorov, A.N. Three approaches to the quantitative definition of information *. International Journal of Computer Mathematics 1968, 2, 157–168. [Google Scholar] [CrossRef]

- Chaitin, G.J. On the Length of Programs for Computing Finite Binary Sequences. J. ACM 1966, 13, 547–569. [Google Scholar] [CrossRef]

- Bennett, C.H. Logical Depth and Physical Complexity. In The Universal Turing Machine. A Half-Century Survey; Herken, R., Ed.; Presses Universitaires de France, 1992; pp. 227–257.

- Copeland, B. The Essential Turing; Clarendon Press, 2004.

- Solomonoff, R. A formal theory of inductive inference. Part I. Information and Control 1964, 7, 1–22. [Google Scholar] [CrossRef]

- Levin, L.A. Laws of information conservation and problems of foundation of probability theory. Probl. Peredachi Inf. 1974, 10, 30–35. [Google Scholar]

- Calude, C.; Chaitin, G.; Salomaa, A. Information and Randomness: An Algorithmic Perspective; Monographs in Theoretical Computer Science. An EATCS Series, Springer Berlin Heidelberg, 2013.

- Zenil, H.; Hernández-Orozco, S.; Kiani, N.A.; Soler-Toscano, F.; Rueda-Toicen, A.; Tegnér, J. A Decomposition Method for Global Evaluation of Shannon Entropy and Local Estimations of Algorithmic Complexity. Entropy 2018, 20. [Google Scholar] [CrossRef] [PubMed]

- Delahaye, J.P.; Zenil, H. Numerical evaluation of algorithmic complexity for short strings: A glance into the innermost structure of randomness. Applied Mathematics and Computation 2012, 219, 63–77. [Google Scholar] [CrossRef]

- Soler-Toscano, F.; Zenil, H.; Delahaye, J.P.; Gauvrit, N. Calculating Kolmogorov complexity from the output frequency distributions of small Turing machines. PloS one 2014, 9, e96223. [Google Scholar] [CrossRef]

- Zenil, H.; Kiani, N.A.; Marabita, F.; Deng, Y.; Elias, S.; Schmidt, A.; Ball, G.; Tegnér, J. An Algorithmic Information Calculus for Causal Discovery and Reprogramming Systems. iScience 2019, 19, 1160–1172. [Google Scholar] [CrossRef]

- Zenil, H.; Kiani, N.A.; Tegnér, J. , Algorithmic Information Dynamics (AID). In Algorithmic Information Dynamics: A Computational Approach to Causality with Applications to Living Systems; Cambridge University Press, 2023; p. 228–240.

- Talaga, S. PyBDM: Python interface to the Block Decomposition Method. https://pybdm-docs.readthedocs.io/en/latest/, 2019. Accessed: (, 2024). 04 February.

- Bonacich, P. Power and Centrality: A Family of Measures. American Journal of Sociology 1987, 92, 1170–1182. [Google Scholar] [CrossRef]

- Newman, M. Networks; OUP Oxford, 2018; p. 159.

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).