Submitted: 19 November 2023

Posted: 22 November 2023


Abstract

Keywords:

1. Introduction

2. Materials and Methods

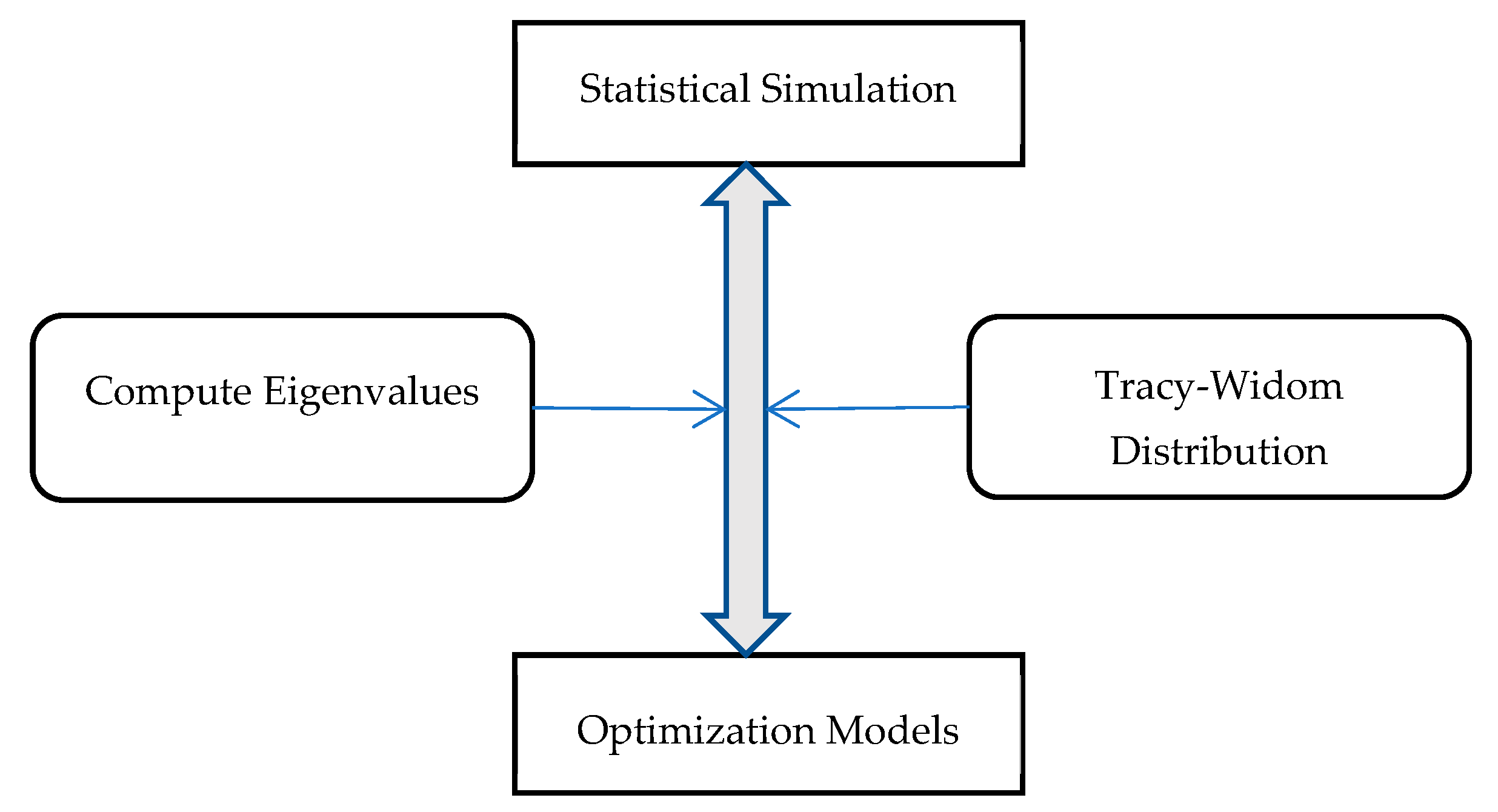

2.1. Statistical simulation and optimization framework

2.2. Optimization model for redundancy allocation

3. Results

4. Discussion

- Redundancy Planning: Identify the critical components of the Smart Grid infrastructure and allocate redundancy by duplicating them, so that backup systems are in place to take over seamlessly when a component fails.

- Risk Assessment: Conduct a thorough risk analysis to identify potential failure points, and allocate redundancy to the most vulnerable areas that this assessment reveals.

- Advanced Monitoring: Implement real-time monitoring systems to detect anomalies and incipient failures, and use data analytics to predict failure patterns and adjust the redundancy allocation accordingly.
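
The redundancy-planning and risk-assessment steps above amount to a budget-constrained redundancy allocation problem. The following is a minimal sketch, not the optimization model of this study: it assumes independent component failures, critical components arranged in series with each one backed by identical parallel units, and a simple greedy rule that repeatedly buys the extra unit with the largest reliability gain per dollar. The function names, reliabilities, costs and budget are hypothetical.

```python
from math import prod


def system_reliability(r, n):
    """Series system of parallel groups: R = prod_i (1 - (1 - r_i) ** n_i).

    r[i] is the (assumed independent) reliability of one unit of component i;
    n[i] is the number of identical parallel units installed for it.
    """
    return prod(1 - (1 - ri) ** ni for ri, ni in zip(r, n))


def greedy_allocation(r, cost, budget):
    """Hypothetical greedy heuristic: add redundant units one at a time.

    Every component starts with one unit; the budget covers only the extra
    (redundant) units. Returns (units per component, reliability, spent).
    """
    if any(c <= 0 for c in cost):
        raise ValueError("unit costs must be positive")
    n = [1] * len(r)
    spent = 0.0
    while True:
        base = system_reliability(r, n)
        best, best_ratio = None, 0.0
        for i, c in enumerate(cost):
            if spent + c > budget:
                continue  # this unit no longer fits in the remaining budget
            n[i] += 1  # tentatively add one unit to component i
            ratio = (system_reliability(r, n) - base) / c
            n[i] -= 1  # undo the tentative addition
            if ratio > best_ratio:
                best, best_ratio = i, ratio
        if best is None:  # nothing affordable improves reliability
            return n, base, spent
        n[best] += 1
        spent += cost[best]


if __name__ == "__main__":
    units, rel, spent = greedy_allocation([0.9, 0.8], [1.0, 1.0], 2.0)
    print(units, round(rel, 4), spent)
```

With two components of unit reliability 0.9 and 0.8 and budget for two extra units, the heuristic duplicates both and raises system reliability from 0.72 to 0.9504. Greedy choices are not guaranteed to be optimal; exact integer programming formulations or metaheuristics such as tabu search [30] are the usual alternatives.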

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. https://www.energy.gov/ne/articles/department-energy-report-explores-us-advanced-small-modular-reactors-boost-grid.

2. https://www.smartgrid.gov/the_smart_grid/smart_grid.html.

3. Saleem, M.U., Shakir, M., Usman, M.R., Bajwa, M.H.T., Shabbir, N., Shams Ghahfarokhi, P., and Daniel, K. Integrating Smart Energy Management System with Internet of Things and Cloud Computing for Efficient Demand Side Management in Smart Grids, Energies, 2023, 16, 4835.

4. Hannan, M.A., Ker, P.J., Mansor, M., Lipu, M.S.H., Al-Shetwi, A.Q., Alghamdi, S.M., Begum, R.A., and Tiong, S.K. Recent advancement of energy internet for emerging energy management technologies: Key features, potential applications, methods and open issues, Energy Reports, 2023, 10, pp. 3970-3992.

5. Galindo, G., and Batta, R. Review of recent developments in OR/MS research in disaster operations management, European Journal of Operational Research, 2013, 230, pp. 201-211.

6. Wang, J., Sharman, R., and Zionts, S. Functionality defense through diversity: a design framework to multitier systems, Annals of Operations Research, 2012, 197, (1), pp. 25-45.

7. Priya, R.K., and Josephkutty, J. An IoT based efficient energy management in smart grid using DHOCSA technique, Sustainable Cities and Society, 2022, 79, 103727.

8. Mishra, P., and Singh, G. Energy Management Systems in Sustainable Smart Cities Based on the Internet of Energy: A Technical Review, Energies, 2023, 16, (19), 6903.

9. Khan, W.Z., Ahmed, E., Hakak, S., Yaqoob, I., and Ahmed, A. Edge computing: A survey, Future Generation Computer Systems, 2019, 97, pp. 219-235.

10. Mavromoustakis, C.X., Batalla, J.M., Mastorakis, G., Markakis, E., and Pallis, E. Socially Oriented Edge Computing for Energy Awareness in IoT Architectures, IEEE Communications Magazine, 2018, 56, (7), pp. 139-145.

11. Perveen, A., Abozariba, R., Patwary, M., Aneiba, A., and Jindal, A. Clustering-based Redundancy Minimization for Edge Computing in Future Core Networks, 2021, pp. 453-458.

12. Shakarami, A., Ghobaei-Arani, M., and Shahidinejad, A. A survey on the computation offloading approaches in mobile edge computing: A machine learning-based perspective, Computer Networks, 2020, 182, 107496.

13. Huang, Y., Ma, X., Fan, X., Liu, J., and Gong, W. When deep learning meets edge computing, 2017, pp. 1-2.

14. Sohn, I. Small-World and Scale-Free Network Models for IoT Systems, Mobile Information Systems, 2017, 2017, 6752048.

15. Faloutsos, M., Faloutsos, P., and Faloutsos, C. On power-law relationships of the Internet topology, in Proceedings of the Conference on Applications, Technologies, Architectures, and Protocols for Computer Communication, Cambridge, Massachusetts, USA, 1999.

16. Bebortta, S., Senapati, D., Rajput, N.K., Singh, A.K., Rathi, V.K., Pandey, H.M., Jaiswal, A.K., Qian, J., and Tiwari, P. Evidence of power-law behavior in cognitive IoT applications, Neural Computing and Applications, 2020, 32, (20), pp. 16043-16055.

17. Zhang, D.-g., Zhu, Y.-n., Zhao, C.-p., and Dai, W.-b. A new constructing approach for a weighted topology of wireless sensor networks based on local-world theory for the Internet of Things (IoT), Computers & Mathematics with Applications, 2012, 64, (5), pp. 1044-1055.

18. Patsidis, A., Dyśko, A., Booth, C., Rousis, A.O., Kalliga, P., and Tzelepis, D. Digital Architecture for Monitoring and Operational Analytics of Multi-Vector Microgrids Utilizing Cloud Computing, Advanced Virtualization Techniques, and Data Analytics Methods, Energies, 2023, 16, (16), 5908.

19. Gordon, L.A., and Loeb, M.P. The Economics of Information Security Investment, ACM Transactions on Information and System Security, 2002, 5, (4), pp. 438-457.

20. Hsu, C., Lee, J.-N., and Straub, D.W. Institutional influences on information systems security innovations, Information Systems Research, 2012, 23, (3-part-2), pp. 918-939.

21. Hua, J., and Bapna, S. The economic impact of cyber terrorism, The Journal of Strategic Information Systems, 2013, 22, (2), pp. 175-186.

22. Wang, J., Chaudhury, A., and Rao, H.R. Research Note: A Value-at-Risk Approach to Information Security Investment, Information Systems Research, 2008, 19, (1), pp. 106-120.

23. Kumar, S., and Singh, B.K. Entropy based spatial domain image watermarking and its performance analysis, Multimedia Tools and Applications, 2021, 80, (6), pp. 9315-9331.

24. Veremyev, A., Prokopyev, O.A., and Pasiliao, E.L. An integer programming framework for critical elements detection in graphs, Journal of Combinatorial Optimization, 2014, 28, (1), pp. 233-273.

25. Pavlikov, K. Improved formulations for minimum connectivity network interdiction problems, Computers & Operations Research, 2018, 97, pp. 48-57.

26. Ventresca, M. Global search algorithms using a combinatorial unranking-based problem representation for the critical node detection problem, Computers & Operations Research, 2012, 39, (11), pp. 2763-2775.

27. Zhang, M., Wang, X., Jin, L., Song, M., and Li, Z. A new approach for evaluating node importance in complex networks via deep learning methods, Neurocomputing, 2022, 497, pp. 13-27.

28. Jin, X., Chow, T.W.S., Sun, Y., Shan, J., and Lau, B.C.P. Kuiper test and autoregressive model-based approach for wireless sensor network fault diagnosis, Wireless Networks, 2015, 21, (3), pp. 829-839.

29. Tracy, C.A., and Widom, H. On orthogonal and symplectic matrix ensembles, Communications in Mathematical Physics, 1996, 177, (3), pp. 727-754.

30. Kulturel-Konak, S., Smith, A.E., and Coit, D.W. Efficiently Solving the Redundancy Allocation Problem Using Tabu Search, IIE Transactions, 2003, 35, (6), pp. 515-526.

31. Shao, B.B.M. Optimal redundancy allocation for information technology disaster recovery in the network economy, IEEE Transactions on Dependable and Secure Computing, 2005, 2, (3), pp. 262-267.

32. Cattrysse, D.G., and Van Wassenhove, L.N. A survey of algorithms for the generalized assignment problem, European Journal of Operational Research, 1992, 60, pp. 260-272.

33. Öncan, T. A survey of the generalized assignment problem and its applications, INFOR: Information Systems and Operational Research, 2007, 45, (3), pp. 123-141.

34. Devi, S., Garg, H., and Garg, D. A review of redundancy allocation problem for two decades: bibliometrics and future directions, Artificial Intelligence Review, 2023, 56, pp. 7457-7548.

35. Wang, H., Alidaee, B., Ortiz, J., and Wang, W. The multi-skilled multi-period workforce assignment problem, International Journal of Production Research, 2020, in press, pp. 1-18.

36. Wang, H., Huo, D., and Alidaee, B. Position Unmanned Aerial Vehicles in the Mobile Ad Hoc Network, Journal of Intelligent & Robotic Systems, 2014, 74, (1), pp. 455-464.

37. Alidaee, B., Gao, H., and Wang, H. A note on task assignment of several problems, Computers & Industrial Engineering, 2010, 59, (4), pp. 1015-1018.

38. Campbell, G. Cross-Utilization of Workers Whose Capabilities Differ, Management Science, 1999, 45, (5), pp. 722-732.

39. Campbell, G.M., and Diaby, M. Development and evaluation of an assignment heuristic for allocating cross-trained workers, European Journal of Operational Research, 2002, 138, pp. 9-20.

40. Sadatdiynov, K., Cui, L., Zhang, L., Huang, J.Z., Salloum, S., and Mahmud, M.S. A review of optimization methods for computation offloading in edge computing networks, Digital Communications and Networks, 2022.

41. Lin, H., Zeadally, S., Chen, Z., Labiod, H., and Wang, L. A survey on computation offloading modeling for edge computing, Journal of Network and Computer Applications, 2020, 169, 102781.

42. Clauset, A., Shalizi, C.R., and Newman, M.E.J. Power-Law Distributions in Empirical Data, SIAM Review, 2009, 51, (4), pp. 661-703.

43. Alidaee, B., Kochenberger, G., and Wang, H. Theorems Supporting r-flip Search for Pseudo-Boolean Optimization, International Journal of Applied Metaheuristic Computing, 2010, 1, (1), pp. 93-109.

44. Wang, H., and Alidaee, B. Unrelated Parallel Machine Selection and Job Scheduling with the Objective of Minimizing Total Workload and Machine Fixed Costs, IEEE Transactions on Automation Science and Engineering, 2018, 15, (4), pp. 1955-1963.

45. Volodarsky, M. The Smart Grid, A Smart Investment, ESG Initiative News, 11 February 2021.

| Class | Methods | Reference |
|---|---|---|
| Entropy-based | Graph neural network | [23] |
| Node deletion | Mixed integer programming | [24] |
| Network interdiction | Mixed integer linear programming | [25] |
| Maximum k-cut problem | Simulated annealing | [26] |
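
The last row of the table pairs the maximum k-cut problem with simulated annealing [26]. As a generic illustration only, not the method of [26], the sketch below applies simulated annealing to maximum k-cut on an unweighted graph given as an edge list: a move reassigns one vertex to a random part, non-worsening moves are always accepted, worsening moves are accepted with probability exp(Δ/T), and T decays geometrically. The function names, iteration count, starting temperature and cooling factor are hypothetical choices.

```python
import math
import random


def cut_value(edges, labels):
    """Number of edges whose two endpoints lie in different parts."""
    return sum(1 for u, v in edges if labels[u] != labels[v])


def max_k_cut_sa(n, edges, k, iters=5000, t0=1.0, alpha=0.999, seed=0):
    """Simulated annealing for unweighted maximum k-cut (illustrative sketch).

    n: number of vertices (0..n-1); edges: list of (u, v) pairs; k: parts.
    Returns (best cut value found, corresponding labelling).
    """
    if n < 1 or k < 2:
        raise ValueError("need n >= 1 and k >= 2")
    rng = random.Random(seed)
    labels = [rng.randrange(k) for _ in range(n)]  # random initial partition
    current = cut_value(edges, labels)
    best, best_labels = current, labels[:]
    t = t0
    for _ in range(iters):
        v = rng.randrange(n)
        old = labels[v]
        labels[v] = rng.randrange(k)  # move: reassign one vertex
        new = cut_value(edges, labels)  # full recount, O(|E|); fine for a sketch
        delta = new - current
        # Metropolis rule: accept non-worsening moves, and worsening ones
        # with probability exp(delta / t)
        if delta >= 0 or rng.random() < math.exp(delta / t):
            current = new
            if current > best:
                best, best_labels = current, labels[:]
        else:
            labels[v] = old  # reject: undo the move
        t = max(t * alpha, 1e-9)  # geometric cooling with a floor
    return best, best_labels


if __name__ == "__main__":
    square = [(0, 1), (1, 2), (2, 3), (3, 0)]  # 4-cycle; its maximum 2-cut is 4
    print(max_k_cut_sa(4, square, k=2))
```

Recomputing the whole cut after each move keeps the code short; a practical implementation would update the cut value incrementally from the moved vertex's neighbours.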

| Class | Methods | Reference |
|---|---|---|
| Model-based | Structure-mechanics | [28] |
| Distribution-based | Tracy-Widom distribution | [29] |
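
For the model-based class above (for example, the autoregressive-model approach of [28]), a minimal illustration is residual-based detection: fit a model of normal behaviour to a sensor series and flag samples the model predicts poorly. The sketch assumes a zero-intercept AR(1) model on the mean-centred series, fitted by least squares, and a 3-sigma residual threshold; the function name and threshold are hypothetical, and it implements neither the Kuiper test of [28] nor the Tracy-Widom approach of [29].

```python
from statistics import mean, pstdev


def ar1_residual_anomalies(x, k=3.0):
    """Flag samples with unusually large AR(1) one-step prediction residuals.

    Fits d[t] = phi * d[t-1] + e[t] on the mean-centred series d by least
    squares and returns (phi, indices t where |e[t]| > k * std(e)).
    """
    if len(x) < 3:
        raise ValueError("need at least three samples")
    mu = mean(x)
    d = [xi - mu for xi in x]
    num = sum(d[t] * d[t - 1] for t in range(1, len(d)))
    den = sum(d[t - 1] ** 2 for t in range(1, len(d)))
    phi = num / den if den else 0.0  # constant series: nothing to fit
    resid = [d[t] - phi * d[t - 1] for t in range(1, len(d))]
    sigma = pstdev(resid)
    if sigma == 0:
        return phi, []
    # resid[j] is the residual for sample t = j + 1
    return phi, [j + 1 for j, e in enumerate(resid) if abs(e) > k * sigma]


if __name__ == "__main__":
    readings = [10.0] * 20
    readings[10] = 20.0  # injected spike
    print(ar1_residual_anomalies(readings))
```

Because a spike also inflates the fitted residual spread, robust estimates (for example, median-based) would be preferable on real grid telemetry.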

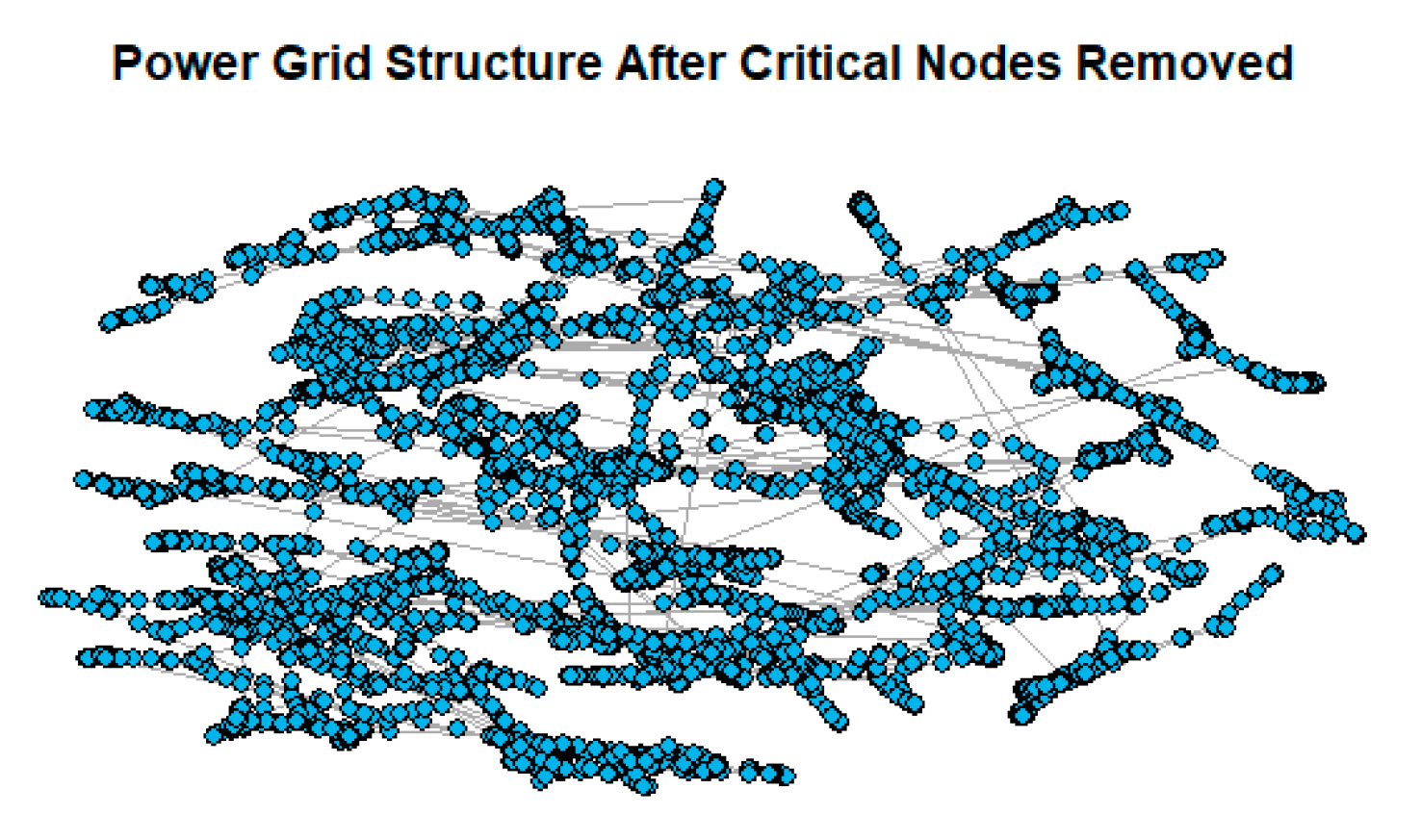

| Number of removed critical nodes | Connectivity |
|---|---|
| 5 | 0.0615851 |
| 10 | 0.0605954 |
| 15 | 0.0592443 |
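
The table above reports network connectivity after removing 5, 10 and 15 critical nodes. The connectivity measure is not defined in this excerpt, so the following sketch assumes one common choice, normalized pairwise connectivity (the fraction of all node pairs still joined by a path), together with a simple greedy rule that removes, at each step, the node whose deletion lowers that measure the most. The function names are hypothetical, and the sketch's output is not comparable to the values in the table.

```python
from collections import deque


def pairwise_connectivity(adj, removed):
    """Fraction of all n(n-1)/2 node pairs still joined by a path.

    adj: adjacency lists for nodes 0..n-1; removed: nodes deleted from the
    graph. Pairs involving a removed node count as disconnected.
    """
    n = len(adj)
    if n < 2:
        return 0.0
    seen = set(removed)
    connected_pairs = 0
    for s in range(n):
        if s in seen:
            continue
        # breadth-first search to measure the component containing s
        seen.add(s)
        size, queue = 0, deque([s])
        while queue:
            u = queue.popleft()
            size += 1
            for v in adj[u]:
                if v not in seen:
                    seen.add(v)
                    queue.append(v)
        connected_pairs += size * (size - 1) // 2
    return connected_pairs / (n * (n - 1) / 2)


def greedy_critical_nodes(adj, k):
    """Remove k nodes one at a time, each time picking the node whose
    deletion gives the lowest pairwise connectivity (ties: lowest index)."""
    if k > len(adj):
        raise ValueError("cannot remove more nodes than the graph has")
    removed = []
    for _ in range(k):
        candidates = [v for v in range(len(adj)) if v not in removed]
        best = min(candidates,
                   key=lambda v: pairwise_connectivity(adj, removed + [v]))
        removed.append(best)
    return removed, pairwise_connectivity(adj, removed)


if __name__ == "__main__":
    path = [[1], [0, 2], [1, 3], [2, 4], [3]]  # path graph 0-1-2-3-4
    print(greedy_critical_nodes(path, 1))
```

On the five-node path, removing the middle node leaves two pairs connected out of ten, so the greedy rule selects node 2. Greedy removal is a heuristic; the exact formulations listed earlier (for example, [24] and [25]) solve the selection as an optimization problem.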

| Power grid | Size (nodes) | Critical nodes | Cost of redundancy | Reliability |
|---|---|---|---|---|
| South Carolina cities | 500 | 13 | $13,744,377 | 99.74% |
| Texas cities | 2,000 | 17 | $19,378,002 | 99.66% |
| Texas state | 6,717 | 31 | $47,454,580 | 99.63% |
| Midwest | 24,000 | 59 | $104,646,071 | 99.61% |
| West-East US | 80,000 | 156 | $312,855,059 | 98.79% |
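
A simple derived quantity from the cost table is the redundancy cost per critical node. The ratios below are computed here from the reported figures rather than reported by the study, and they assume that the cost column is the total for all critical nodes of each grid; the names `ROWS` and `cost_per_critical_node` are hypothetical.

```python
# Figures transcribed from the table above:
# (grid, size, critical nodes, cost of redundancy in USD, reliability in %)
ROWS = [
    ("South Carolina cities", 500, 13, 13_744_377, 99.74),
    ("Texas cities", 2_000, 17, 19_378_002, 99.66),
    ("Texas state", 6_717, 31, 47_454_580, 99.63),
    ("Midwest", 24_000, 59, 104_646_071, 99.61),
    ("West-East US", 80_000, 156, 312_855_059, 98.79),
]


def cost_per_critical_node(rows):
    """Average redundancy cost (USD) per critical node for each grid."""
    return {name: cost / critical for name, _size, critical, cost, _rel in rows}


if __name__ == "__main__":
    for name, per_node in cost_per_critical_node(ROWS).items():
        print(f"{name}: ${per_node:,.0f} per critical node")
```

On this reading, the average cost per critical node rises with grid size, from about $1.06 million for the South Carolina cities to about $2.01 million for the West-East US grid.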

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).