Submitted: 23 May 2023

Posted: 24 May 2023


Abstract

Keywords:

1. Introduction

2. Theoretical Methods

2.1. Transforming a Discrete-State Stochastic Process into a DPD

2.2. Integer-Order Rényi α-Entropies as Synthetic Indices for the Characterization of DPDs

1. they are the result of a min-max normalization, obtained using the minimum and maximum possible values of plain entropies (respectively $0$ and $\log n$);

2. they are formally independent of the number q of ordered symbols chosen to quantize the range of the output values of the process, and of the cardinality n of the sample space; for this reason, they yield comparable values even for different distributions on different sample spaces;

3. they remove any doubt about the choice of the base of the logarithm in the entropy formula ($\log_2$, $\ln$, or $\log_{10}$), because they use a variable base that depends on the cardinality of the considered sample space ($\log_n$);
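The three properties above can be checked numerically. The following is a minimal sketch, assuming the standard Rényi entropy of order $\alpha$, $H_\alpha = \frac{1}{1-\alpha}\log\sum_i p_i^\alpha$, and assuming that the specific entropy is $H_\alpha / \log n$; the function names are hypothetical and are not taken from the paper.

```python
import math

def renyi_entropy(p, alpha, base=math.e):
    """Rényi entropy of order alpha (alpha > 0, alpha != 1) of the distribution p."""
    if alpha <= 0 or alpha == 1:
        raise ValueError("alpha must be positive and different from 1")
    s = sum(pi ** alpha for pi in p if pi > 0)  # zero-probability cells contribute nothing
    return math.log(s, base) / (1 - alpha)

def specific_renyi_entropy(p, alpha, n=None):
    """Min-max normalized Rényi entropy H_alpha / log(n), n = cardinality of the sample space."""
    n = len(p) if n is None else n  # pass n explicitly if p omits empty cells
    return renyi_entropy(p, alpha) / math.log(n)

if __name__ == "__main__":
    p = [0.5, 0.3, 0.2]
    print(specific_renyi_entropy(p, 2))                 # nats, normalized by ln(3)
    print(renyi_entropy(p, 2, base=2) / math.log2(3))   # bits, normalized: same value up to rounding
    print(renyi_entropy(p, 2, base=3))                  # logarithm in base n directly: same value
```

Dividing by $\log n$ is equivalent to taking the logarithm in base $n$, which is why the result does not depend on whether the underlying entropy was computed in bits, nats, or hartleys.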

2.3. Rényi Entropy Rates

2.4. Specific Rényi Entropy Rate

2.5. Relationship between Specific Rényi Entropy Rate and Specific Rényi Entropy

3. Empirical Methods

3.1. Transforming a Realization into a Distribution of Relative Frequencies

3.2. Estimating the Second Raw Moment of a

3.3. Estimating the Specific Collision Entropy of a

3.4. Estimating the Specific Collision Entropy Rate of a DSPq

3.5. Method of Validation of Entropy Estimators

1. choice of a convenient process;

2. choice of the number of realizations R;

3. choice of the length N of each realization;

4. transformation of the samples of each realization into a distribution of relative frequencies, according to § 3.1;

5.

6. production of the diagrams;

7. evaluation of the performance of the estimator.
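The validation procedure can be sketched as a small harness. This is a minimal sketch under explicit assumptions: realizations are turned into relative frequencies of overlapping d-grams (one possible reading of § 3.1), and the estimator plugged in is a naive plug-in of the specific collision entropy, $-\log_n \sum_i f_i^2$ with $n = q^d$, used only as a placeholder for the paper's estimator. All names are hypothetical.

```python
import math
import random
from collections import Counter

def dgram_relative_frequencies(x, d):
    """Relative frequencies of the overlapping d-grams of realization x (assumed windowing)."""
    counts = Counter(tuple(x[i:i + d]) for i in range(len(x) - d + 1))
    total = sum(counts.values())
    return {g: c / total for g, c in counts.items()}

def plugin_specific_collision_entropy(x, d, q):
    """Naive plug-in -log_n(sum f^2), n = q**d; a placeholder, NOT the paper's estimator."""
    f = dgram_relative_frequencies(x, d)
    m2 = sum(v * v for v in f.values())       # sum of squared relative frequencies
    return -math.log(m2) / (d * math.log(q))  # log n = d * log q

def validate(generate, estimator, q, R, N, dims, seed=0):
    """The listed steps in miniature: R realizations of length N, mean estimate for each d."""
    rng = random.Random(seed)
    realizations = [generate(N, rng) for _ in range(R)]
    return {d: sum(estimator(x, d, q) for x in realizations) / R for d in dims}

def fair_die(N, rng):
    """Hypothetical test process: N rolls of a fair six-sided die."""
    return [rng.randrange(1, 7) for _ in range(N)]

if __name__ == "__main__":
    curves = validate(fair_die, plugin_specific_collision_entropy, q=6, R=20, N=2000, dims=range(1, 6))
    for d, h in curves.items():
        print(d, round(h, 3))  # the theoretical value is 1 for every d
```

Comparing each averaged value with the theoretical one corresponds to the last step. For a fair die, the plug-in curve can be expected to drop below 1 as d grows and the sample space becomes sparsely populated, which is the kind of bias a corrected estimator is meant to counter.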

4. Materials: Choice of Convenient Processes Suitable for the Experiments

1. Regular Processes. The first important sanity check for entropy estimators involves the use of a completely regular process, which consists of an infinitely repeating short symbolic sequence. Once the initial sequence is known, the following samples bring no additional information, and the evolution of the process is completely determined. Hence, for these processes the specific Rényi entropy rate is zero. Consequently, even for short realizations of such processes, any good estimator of the specific Rényi entropy rate has to fall rapidly to zero as the dimension of the sample space is progressively increased.

2. Markov Processes. When the process is a stationary, irreducible, and aperiodic Markov process, the theoretical value of its specific Rényi entropy rate can be calculated. It is expressed in terms of the transition matrix $P$ and of the unique stationary distribution $\pi$, obtained as the right eigenvector of $P\pi = \pi$ associated with eigenvalue $1$ and scaled so that $\sum_i \pi_i = 1$.

3. Maximum Entropy IID Processes. A third sanity check for entropy estimators involves the use of memoryless IID processes with maximum entropy, because:

   - with these processes, the distance between the entropy of the relative frequencies and the actual theoretical entropy of the process is the largest possible (i.e., the estimator is tested under the most severe conditions, which force it to apply the largest possible correction);

   - the theoretical value of the specific entropy of the generated processes is known a priori and is constant, regardless of the dimension of the considered sample space, because the outcome of each throw is independent of past history;

   - since they have an L-shaped one-dimensional distribution, with one probability larger than the others, which are all equal, their theoretical entropy is trivial to calculate;

   - they are easy to reproduce, for example by simulating the rolls of a loaded die on which one side is initially made more likely than the others; the general formula is:
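Reference values for the Markov and loaded-die processes can be computed directly. The sketch below rests on three assumptions: (i) the transition matrix is column-stochastic, so that the stationary distribution is the right eigenvector for eigenvalue 1, as described in item 2; (ii) the Rényi entropy rate of an irreducible, aperiodic finite-state Markov source is taken from the known closed form $\frac{1}{1-\alpha}\log\lambda_{\max}$, where $\lambda_{\max}$ is the largest eigenvalue of the element-wise power $[P_{ij}^{\alpha}]$ (Rached, Alajaji and Campbell, 2001), which is not necessarily the expression used in the paper; (iii) the rate is made "specific" by dividing by $\log q$. An IID process such as the loaded die is treated as a Markov chain whose columns all equal the one-step distribution. Function names are hypothetical.

```python
import math

def mat_vec(M, v):
    """Matrix-vector product for a list-of-rows matrix."""
    return [sum(M[i][j] * v[j] for j in range(len(v))) for i in range(len(M))]

def perron_vector(M, iters=100_000, tol=1e-13):
    """Power iteration for the dominant eigenpair of a nonnegative primitive matrix.

    Returns (eigenvalue, eigenvector scaled so that its entries sum to 1).
    """
    n = len(M)
    v = [1.0 / n] * n
    lam = 0.0
    for _ in range(iters):
        w = mat_vec(M, v)
        lam = sum(w)                    # sum(v) == 1, so sum(M v) tends to the eigenvalue
        w = [x / lam for x in w]
        if max(abs(a - b) for a, b in zip(w, v)) < tol:
            return lam, w
        v = w
    return lam, v

def stationary_distribution(P):
    """Right eigenvector of the column-stochastic P for eigenvalue 1 (P pi = pi), summing to 1."""
    _, pi = perron_vector(P)
    return pi

def specific_renyi_entropy_rate_markov(P, alpha):
    """log(lambda_max([P_ij ** alpha])) / ((1 - alpha) * log q); normalization by log q assumed."""
    q = len(P)
    lam, _ = perron_vector([[p ** alpha for p in row] for row in P])
    return math.log(lam) / ((1 - alpha) * math.log(q))

def loaded_die(q, p_max):
    """L-shaped distribution: face 1 has probability p_max, the other q - 1 faces share the rest."""
    return [p_max] + [(1 - p_max) / (q - 1)] * (q - 1)

def iid_as_markov(p):
    """Column-stochastic matrix of an IID process: every column equals p."""
    return [[pi] * len(p) for pi in p]

if __name__ == "__main__":
    P = [[0.9, 0.2], [0.1, 0.8]]  # hypothetical two-state chain (columns sum to 1)
    print(stationary_distribution(P))
    print(specific_renyi_entropy_rate_markov(P, 2))
    print(specific_renyi_entropy_rate_markov(iid_as_markov(loaded_die(6, 0.5)), 2))
```

For the loaded die with 50% of the outcomes equal to “1” and $q = 6$ (the case used in § 5.3), the sum of squared probabilities is $0.5^2 + 5 \cdot 0.1^2 = 0.3$, so the specific collision entropy is $\log_6(1/0.3) \approx 0.672$, the same for every dimension d.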

5. Results and Discussion

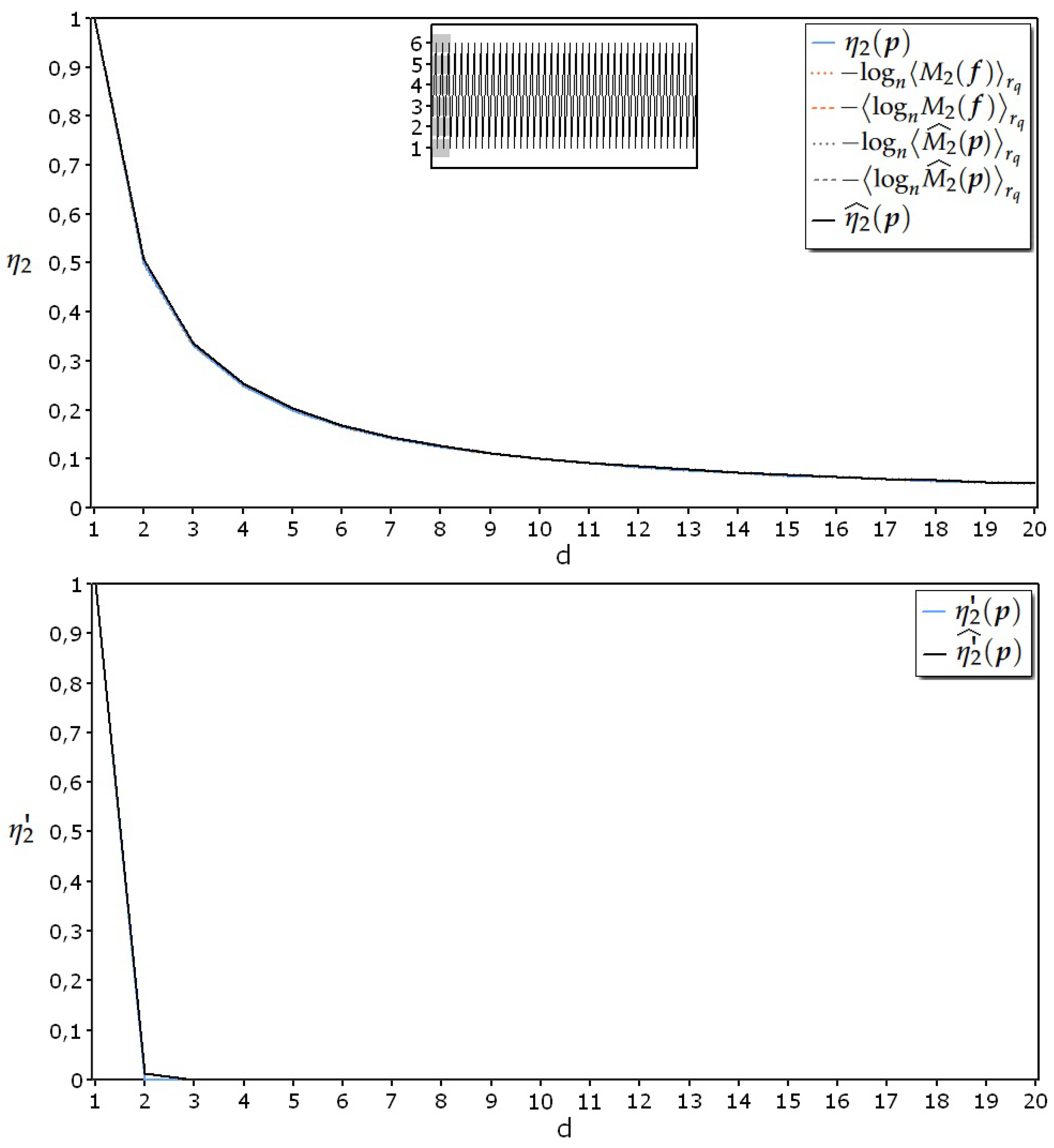

5.1. Experiments with Realizations Coming from Completely Regular Processes

- Process: a regular process obtained by repeating the ordered numerical sequence of the values associated with the six faces of a die.

- and , because every realization is identical.
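The behaviour expected for the regular die sequence can be seen with a plug-in computation: whatever the d-gram length, only 6 distinct d-grams ever occur, so the collision entropy of the d-gram frequencies stays near $\log 6$ while $\log n = d \log 6$ grows. The sketch assumes overlapping d-grams and a hypothetical length N = 6000, and uses the normalized increment $(H_2(d+1) - H_2(d)) / \log q$ as one common block-entropy definition of a rate; this is an illustrative assumption, not the paper's estimator.

```python
import math
from collections import Counter

def dgram_counts(x, d):
    """Counts of the overlapping d-grams of the sequence x."""
    return Counter(tuple(x[i:i + d]) for i in range(len(x) - d + 1))

def collision_entropy(counts):
    """Plug-in collision entropy -ln(sum f^2) of a table of counts."""
    total = sum(counts.values())
    return -math.log(sum((c / total) ** 2 for c in counts.values()))

q = 6
x = [1 + (i % q) for i in range(6000)]  # 1, 2, ..., 6, 1, 2, ... (hypothetical N = 6000)

for d in range(1, 6):
    h_d = collision_entropy(dgram_counts(x, d))
    h_next = collision_entropy(dgram_counts(x, d + 1))
    specific = h_d / (d * math.log(q))        # decays like 1/d
    increment = (h_next - h_d) / math.log(q)  # essentially zero for every d
    print(f"d={d} distinct={len(dgram_counts(x, d))} specific={specific:.4f} increment={increment:.1e}")
```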

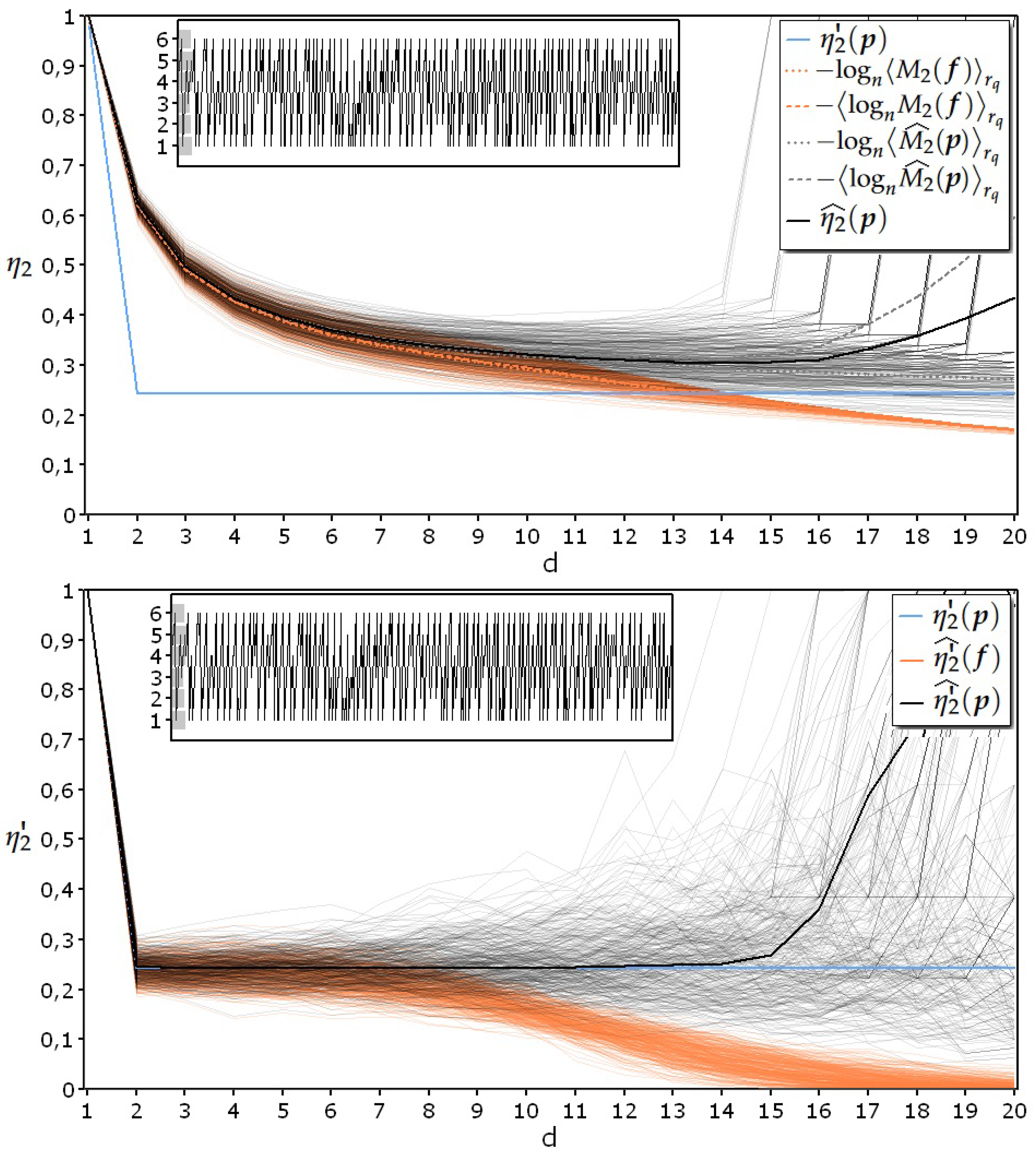

5.2. Experiments with Realizations Coming from Processes Presenting Some Sort of Regularity

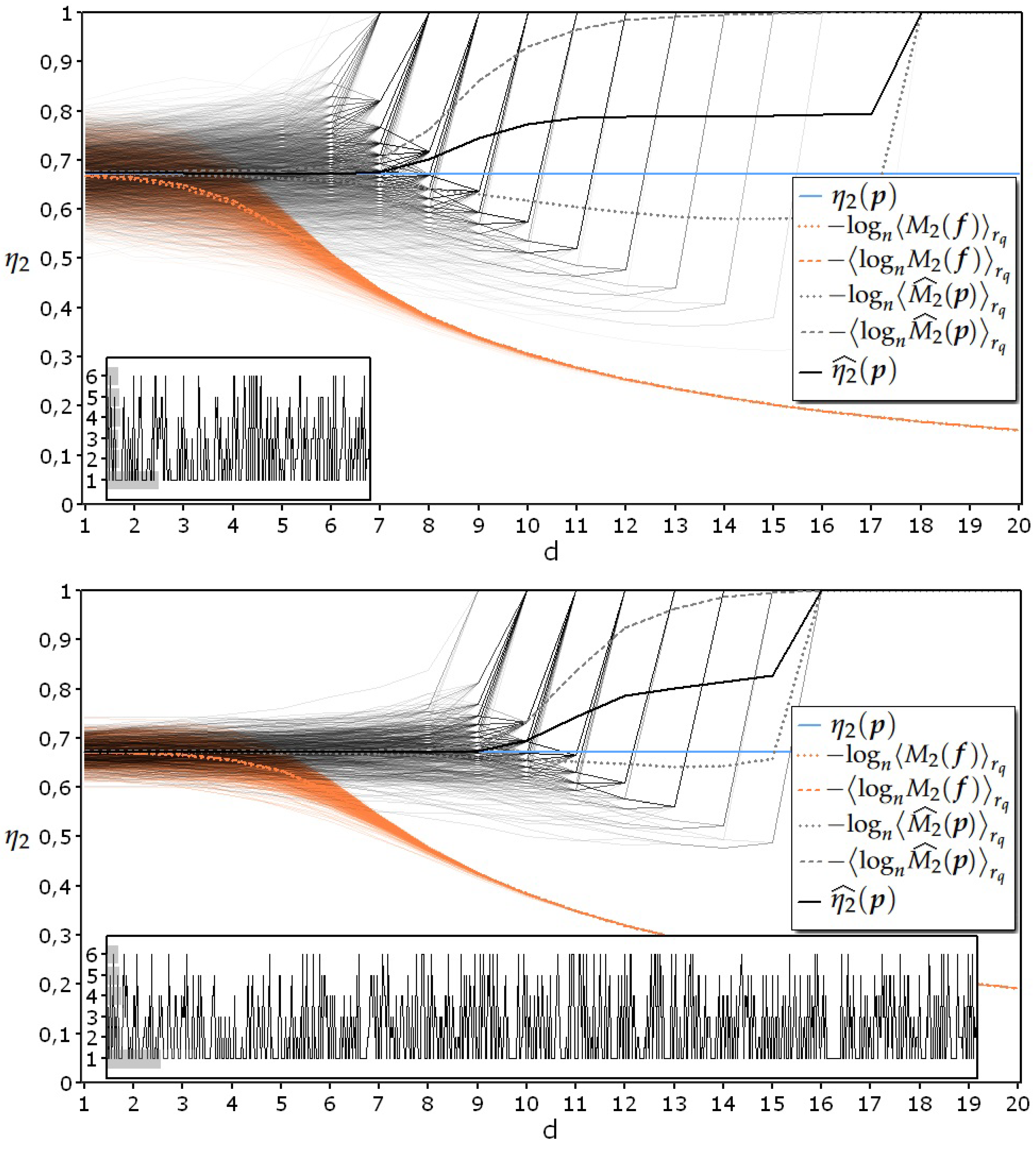

5.3. Experiments with Realizations Coming from Maximum Entropy Memoryless IID Processes

- Process: generated by tossing a loaded die with 50% of the outcomes equal to “1”;

- Upper diagram: and ;

- Lower diagram: and .

- the proposed estimator satisfies the aforementioned third prerequisite of never falling below the theoretical line, even under the most demanding test conditions, namely the processing of data coming from a maximum entropy IID process;

- when relative frequency distributions are used to estimate the specific collision entropy, there is only a slight difference between the two possible ways of averaging the logarithm of the second raw moment (dotted and dashed lines in orange);

- by contrast, there is a marked difference between the two possible ways of averaging the estimates of the logarithm of the second raw moment (dotted and dashed lines in grey), as indicated in Formula (18);

- when the data density in the sample space becomes insufficient for a reliable estimate of the entropy, its value rises toward the value corresponding to the uniform distribution.
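The two ways of averaging the logarithm of the second raw moment over the R realizations can be illustrated on the loaded die of § 5.3. One plausible reading, assumed here, is that one strategy averages the second raw moments and then takes the logarithm, while the other takes the logarithm of each realization's moment and then averages; by Jensen's inequality the second never yields a lower entropy than the first. The sketch uses the naive plug-in moment $\sum_i f_i^2$ of overlapping d-grams rather than the paper's estimator, and all names are hypothetical.

```python
import math
import random
from collections import Counter

def second_raw_moment(x, d):
    """Plug-in sum of squared relative frequencies of the overlapping d-grams of x."""
    counts = Counter(tuple(x[i:i + d]) for i in range(len(x) - d + 1))
    total = sum(counts.values())
    return sum((c / total) ** 2 for c in counts.values())

def loaded_die_rolls(N, rng, q=6, p_max=0.5):
    """N IID rolls: face 1 with probability p_max, faces 2..q equiprobable."""
    weights = [p_max] + [(1 - p_max) / (q - 1)] * (q - 1)
    return rng.choices(range(1, q + 1), weights=weights, k=N)

def two_averages(R, N, d, q=6, seed=1):
    """Specific collision entropy from log-of-mean and from mean-of-log over R realizations."""
    rng = random.Random(seed)
    m2 = [second_raw_moment(loaded_die_rolls(N, rng, q), d) for _ in range(R)]
    norm = d * math.log(q)                                         # log n, with n = q**d
    from_log_of_mean = -math.log(sum(m2) / R) / norm               # average moments, then log
    from_mean_of_log = -sum(math.log(m) for m in m2) / (R * norm)  # log, then average
    return from_log_of_mean, from_mean_of_log

if __name__ == "__main__":
    for d in (1, 2, 3, 4):
        a, b = two_averages(R=50, N=500, d=d)
        print(d, round(a, 4), round(b, 4))
```

For d = 1 and long realizations, both values should lie close to the theoretical $\log_6(1/0.3) \approx 0.672$.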

6. Conclusions

- the evaluation of the admissibility of this estimator by comparing it to other similar estimators and by using the same kind of processes for the tests;

- the characterization of the variability of the values returned by the estimator as the number of aggregated samples and the irregularity of the processes vary;

- further studies on estimation methods in the presence of the logarithm operator.

Funding

Conflicts of Interest

Abbreviations

| Symbol | Description |
|---|---|
| A | Alphabet composed of q ordered symbols |
| | Sample space resulting from the Cartesian product d times of the alphabet A |
| | Cardinality of the sample space |
| | Discrete-state stochastic process using an alphabet A |
| | Generic discrete probability distribution |
| | obtained from a whose d-grams are inserted in |
| | Realization of a |
| | Relative frequency distribution |
| | obtained from a realization of a whose d-grams are inserted in |
| | Second raw moment of an |
| | Second raw moment of a |
| | Estimate of the second raw moment of a |
| | Collision entropy of an |
| | Collision entropy of a |
| | Estimated collision entropy of a |
| | Specific collision entropy of an |
| | Specific collision entropy of a |
| | Estimated specific collision entropy of a |
| | Specific collision entropy rate of an |
| | Specific collision entropy rate of a |
| | Estimated specific collision entropy rate of a |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).