1. Introduction

Most higher education institutions worldwide prioritize research and development in their academic development strategies, pursuing success in competitive national and international funding programs and the publication of studies in high-impact venues [1]. Within this teaching-research nexus, university and college faculty members often see student teaching as a necessity, sometimes even a distraction from truly meaningful work [2]. Career advancement of tenured academic faculty is determined by research visibility, publication output and impact rather than by teaching quality [1]. However, teaching and learning is a critical process that directly influences the prospects of economic growth and societal well-being through the knowledge, competencies and values that students and graduates bring to academic and professional communities. The quality of teaching and learning depends on several factors, one of them being teaching staff’s perceptions of what constitutes effective teaching and learning [3].

Tokuhama-Espinosa provides a holistic vision of the contemporary educator as a learning professional and scientist [4]. She advocates that teachers at all levels should be literate in neuroeducation. This notion is reflected in the enriched TPACK model she proposes, which adds a new area on educational neuroscience (mind, brain and education science) to technological, pedagogical and content knowledge [4].

Are educators and higher education teachers informed about brain-based learning practices? There appears to be an acute upskilling need for professionals at all levels of education, especially higher education lecturers and professors, who shape the next generations of teachers and scientists. This need is further amplified by the alarmingly high number of persistent misconceptions about the brain and its role in learning [5]. Teacher professional development can be essential for grasping the affordances of new media and for formulating pedagogy-informed teaching and learning practices.

Neuropedagogy, also called educational neuroscience or neuroeducation, is the application of cognitive neuroscience to teaching and learning [6]. It is the field where science and education combine, with the scientific goal of learning how to stimulate new areas of the brain and create neural connections. Research findings from educational neuroscience provide opportunities for transformative learning in distance education settings [7].

Design thinking is a creative, flexible methodology for tackling complex problems. It is based on a human-centered mindset for problem solving through iterative experimentation [8]. Design thinking features a series of steps that are revisited and repeated as often as necessary. In the context of education, these steps are: Discovery, Interpretation, Ideation, Experimentation and Evolution [9]. In the first phase, the target audience is specified and data about a challenge are identified, collected and analyzed. Next, the data are interpreted to make sense of them and derive meaning around a set of common themes. In the ideation step, ideas are generated, prioritized, assessed and refined. During experimentation, these ideas are implemented as prototypes. Finally, in the evolution step, evaluation feedback on the prototypes is collected to record lessons learned and to address remaining shortcomings and open improvement issues. Design thinking techniques can be integrated into the user-centered design of electronic systems and artifacts to increase users’ creativity and innovation capacity [10].

2. Materials

2.1. Platform Design Process

This study took place within the framework of the Neuropedagogy Erasmus+ project. The project’s prime aim was to train higher education teaching staff in innovative, neuroscience-based methods through an online platform hosting a community of higher education lecturers, created to facilitate the development of transversal communication competences [11]. The platform was conceived to apply neuroscience to teaching and to offer interested educators professional development opportunities to expand their knowledge and skills in this scientific domain. Experiences and lessons learned from previous professional development initiatives were incorporated to optimize the learning design with regard to pedagogy, technology and aesthetics [12].

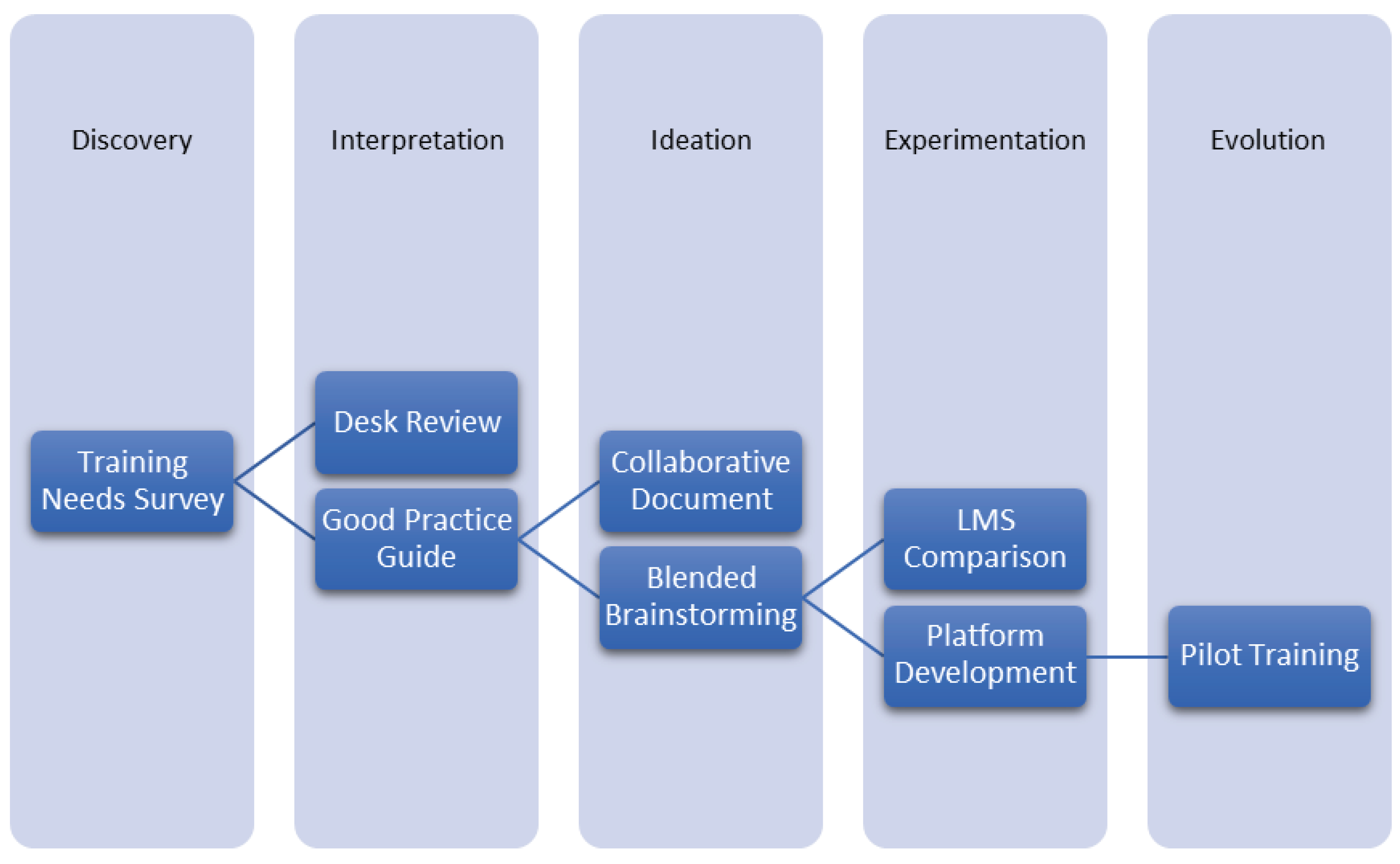

The design thinking methodology was applied throughout all phases to ensure an empathetic implementation approach, as illustrated in Figure 1. First, extensive data were collected from targeted users. Quantitative and qualitative data from academic faculty members revealed strong interest in the subject matter as well as knowledge gaps [13]. Then, a literature review described previous work and principles of neuroeducation [14]. Selected case studies, narratives, projects, toolkits and resources were compiled in a good practice guide. Next, an elaborate ideation phase took place with the objective of outlining the platform’s structure. First, a desk study was conducted to identify the most suitable software systems. A blended online brainstorming/ideation session followed to define the desired features and structure of the platform. The ideation was based on objective data from industry and the literature, subjective data from the project partners’ experiences and, finally, the partners’ preferences and needs regarding the project’s educational platform.

The designed educational online platform consisted of two hybrid subsystems. The first, the LMS, was aimed at training higher education lecturers in the neuroscience-based didactic method and hosted all developed training content. The second was a collaborative environment for an online community of practice that enables communication, sharing and mutual peer learning among academic faculty members. Both parts can be used to facilitate formal and informal learning experiences.

In the first phase of the ideation, desk research was conducted to compare software systems suitable for implementing the project’s platform. Two main candidate systems emerged: WordPress and Moodle. Both are open source, can be installed for free and are world-leading in their categories. WordPress is a popular, versatile open-source content management system with a wide user base, many installations and a large variety of plugins and extensions. Moreover, WordPress has greater potential for social e-learning [15]. WordPress could provide the basis for the community part, while Moodle could host the online content and courses. Moodle is a solid open-source e-learning solution with millions of installations in educational institutions worldwide [16]. However, the interplay between the two was not seamless. Ensuring single sign-on, so that users would sign up once and gain access to both subsystems (e-learning courses and the community of practice platform), emerged as a top priority. This problem would be solved later on.

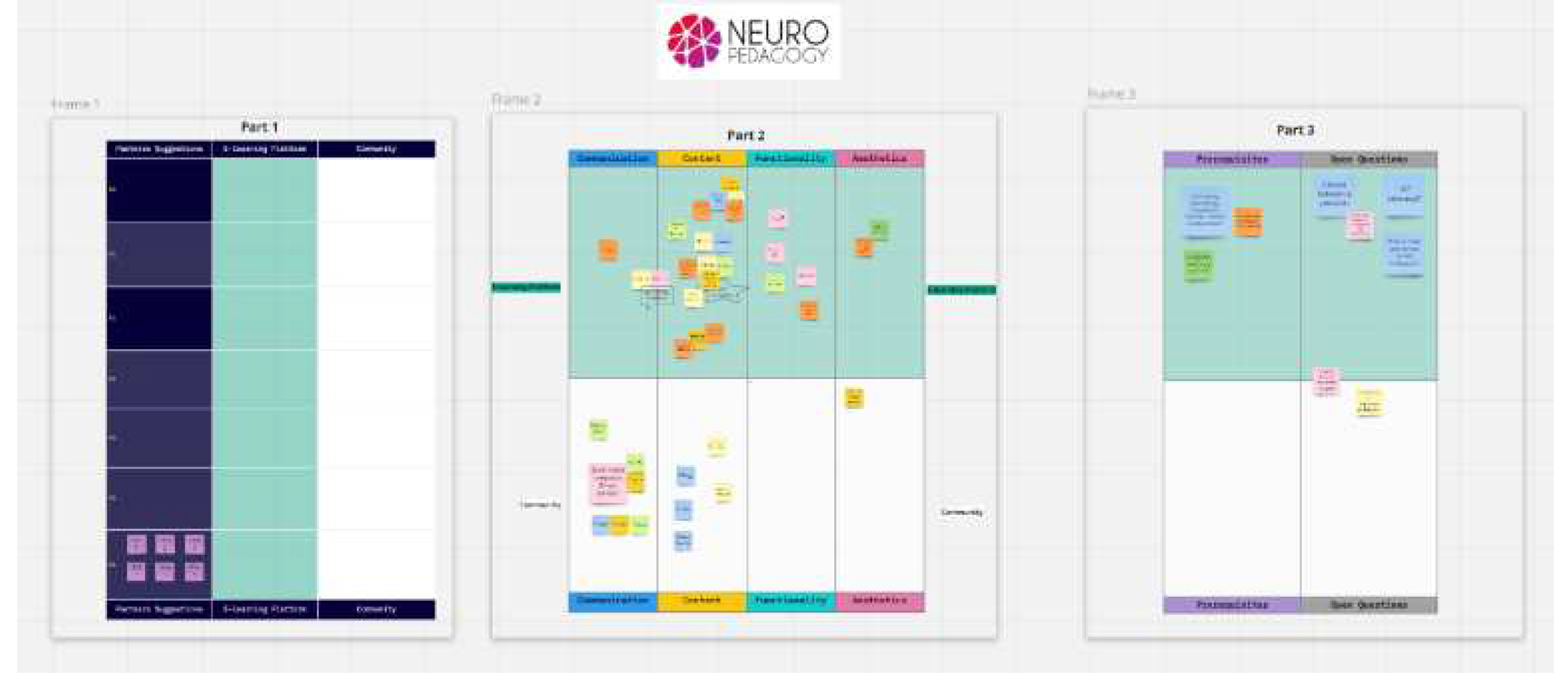

In the second phase, the most important and desired features for the educational platform and community were discussed. Requirements were collected in a blended format through structured brainstorming in two stages. In the first stage, a collaborative sheet was provided online in Google Drive to record the most important or desired features for the educational platform and community. The sheet had the following fields/columns: Importance (Critical, Essential, Good to have, Avoid), Component (E-learning platform or Community - web 2.0), Category (Aesthetics, Communication, Content, Functionality), Title – Description, Usefulness for NP project, Source, Screenshot or link, Connection to Neuroscience (theory / method / technique) and Reference/s. Each partner had its own separate sheet but had open access to read the other partners’ entries. Suggestions could be supported by evidence from an existing platform, the instructional design of the e-learning module under development or the application of specific Neuropedagogy principles.

The second stage took place during an online workshop using a collaborative tool (Miro) to discuss and synthesize everyone’s suggestions. First, partners briefly presented their proposals to ensure that each suggestion or concept was clear to all and free of misconceptions. Second, all suggestions were inserted as virtual sticky notes into the two-dimensional workspace and organized for (i) the e-learning (LMS) and (ii) the community subsystems across four main categories: Communication, Content, Functionality and Aesthetics (Figure 2). Next, all items in each category for both systems were summarized and discussed one by one to identify any disagreements or diverging opinions. Through this process, a consensus was reached, which is presented in the next section. Finally, additional notes were created whenever prerequisite issues and open questions were identified. These open questions allowed the discussion to reach deeper levels and to connect with the project’s rationale in more fundamental ways. However, the problem of seamless interplay between WordPress and Moodle had not yet been solved.
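To make the structure of the requirements sheet and the board layout more concrete, the following Python sketch models one sheet row and groups suggestions by subsystem and category, mirroring the cells of the Miro board. It is illustrative only: it keeps just a subset of the sheet’s columns, the `partner` field, the `group_for_board` helper and the sample entries are hypothetical, and ordering items by importance within each cell is an assumption rather than a documented step of the workshop.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Importance(Enum):
    # Declared in priority order; the sort below relies on this order.
    CRITICAL = "Critical"
    ESSENTIAL = "Essential"
    GOOD_TO_HAVE = "Good to have"
    AVOID = "Avoid"


class Component(Enum):
    E_LEARNING = "E-learning platform"
    COMMUNITY = "Community - web 2.0"


class Category(Enum):
    AESTHETICS = "Aesthetics"
    COMMUNICATION = "Communication"
    CONTENT = "Content"
    FUNCTIONALITY = "Functionality"


@dataclass(frozen=True)
class Requirement:
    """One sheet row, reduced to the columns needed for grouping."""
    partner: str  # hypothetical field: whose sheet the row came from
    title: str
    importance: Importance
    component: Component
    category: Category


PRIORITY = list(Importance)  # definition order: Critical first, Avoid last


def group_for_board(requirements):
    """Group rows by (component, category), like the cells of the board.

    Within each cell, items are ordered by importance (an illustrative
    assumption; the workshop discussed items one by one).
    """
    board = defaultdict(list)
    for req in requirements:
        board[(req.component, req.category)].append(req)
    for cell in board.values():
        cell.sort(key=lambda req: PRIORITY.index(req.importance))
    return dict(board)


# Hypothetical example entries.
rows = [
    Requirement("Partner A", "Discussion forum", Importance.ESSENTIAL,
                Component.COMMUNITY, Category.COMMUNICATION),
    Requirement("Partner B", "Private messaging", Importance.CRITICAL,
                Component.COMMUNITY, Category.COMMUNICATION),
    Requirement("Partner A", "Self-assessment quiz", Importance.CRITICAL,
                Component.E_LEARNING, Category.CONTENT),
]
for (component, category), items in group_for_board(rows).items():
    print(component.value, "/", category.value, [r.title for r in items])
```

Using enums for the sheet’s closed vocabularies (Importance, Component, Category) keeps entries from different partners comparable, which is what made the cell-by-cell discussion possible.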

An open issue that helped us find a solution was the possibility of having systems informed by Neuropedagogy act as good practice for practitioners and differ from contemporary practices. This aspect led us to widen the search and consider other systems. This process, together with the results of the desk research on LMSs, the project requirements and the partners’ experiences, led to LearnDash [17]. Finally, after a thorough comparison of the two LMSs, Moodle and LearnDash, based on the suggested features and essential LMS functions, LearnDash was adopted over Moodle as more appropriate for this particular use case. Its main advantage was its seamless integration into WordPress as a plugin, ensuring full interoperability as well as improved organization and aesthetics through smaller “chunks” of content.

2.2. Neuropedagogy Course Outline

Administered via an online platform, the course was structured into six modules, each delving into distinct aspects of the subject under investigation as follows:

Introduction to Neuropedagogy: this inaugural module explores the definition of Neuropedagogy and its value for education. It concludes with foundational information about the brain’s anatomy and its function during learning.

Neuromyths in Education: this module explores the problematic proliferation of inaccurate beliefs about the brain and its role in learning. It presents the five most common neuromyths along with the scientific evidence debunking them. It also contains a practical tool that lets everyone self-assess potential biases towards neuromyths.

Engagement: Next, the neural mechanisms underlying engagement are explored along with recommended strategies including cooperation and gamification.

Concentration & Attention: The same structure is repeated here, presenting the definition of attention, the neural processes behind it and indicative methods for applying it in classroom instruction.

Emotions: This is the most extensive module starting with a definition and classification of emotions. It explores the emotional and social aspects of the brain and contains several ideas to improve emotional responses in education.

Associative Memory: The final module explores the function of memory in learning and the different types of associative memory, such as semantic and episodic memory.

These modules (as well as the platform interface) were fully translated into five languages and encompassed a diverse range of learning and evaluation activities, including diagnostic questionnaires, associative exercises, self-assessment quizzes, explanatory schemata and reflective questions.

User navigation within each module is free and unrestricted; hence, there are no forced linear learning paths. Following the principles of adult learning, learners are trusted to exercise their agency, navigating back and forth and engaging with the activities of their choice to consolidate their learning progress and deepen their understanding [18]. This accommodates advanced users who want quick access to the learning content and may skip self-evaluative and reflective activities. Screenshots from various facets of the Neuropedagogy platform and course are available in Appendix A.

3. Method

In this study, we investigated academics’ attitudes towards a specialized course on Neuropedagogy using a mixed-methods approach. The course’s primary objective was to offer a comprehensive understanding of the discipline, while its secondary goal was to equip participants with the skills needed to integrate this knowledge into their instructional practice. Upon completing the course, participants were asked to complete a psychometric survey (Table 1) comprising 18 items that evaluated their perceptions of the learning experience. These items covered various dimensions, including: (i) overall assessment of the course, (ii) relevance, currency and efficiency of the educational materials, (iii) suitability of the learning activities, (iv) perceived value of individual modules and identification of modules requiring revision, (v) relevance of the assessment tasks to the course objectives, and finally (vi) perceived usefulness of the platform in view of technical challenges encountered during course delivery.

For the analysis of the primary data, we followed the guidelines provided by Pallant [19]. To establish the reliability (internal consistency) of the survey items, Cronbach’s alpha was calculated. Subsequently, the Shapiro-Wilk test was used to examine normality. Because the normality assumption was violated and the sample was small, non-parametric tests were selected [20].
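For reference, Cronbach’s alpha for k items is alpha = k/(k - 1) * (1 - sum of item variances / variance of respondents’ total scores). The sketch below computes it with Python’s standard library; the function name and the list-of-rows input layout are assumptions for illustration and not the authors’ analysis script, which the text describes only as following Pallant [19].

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents-by-items table of numeric scores.

    responses: one list per respondent, each holding that respondent's k item
    scores. Sample variances (n - 1 denominator) are used throughout.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha requires at least two items")
    items = list(zip(*responses))                 # one tuple per item (column)
    sum_item_var = sum(variance(col) for col in items)
    totals = [sum(row) for row in responses]      # each respondent's scale score
    return k / (k - 1) * (1 - sum_item_var / variance(totals))


# Hypothetical toy data: 3 respondents x 2 items.
print(round(cronbach_alpha([[1, 2], [2, 1], [3, 3]]), 3))
```

Applied to the full respondents-by-items matrix of Likert scores, this is the statistic reported in the Results section; whether sample or population variances are used does not change alpha as long as the choice is applied consistently to items and totals.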

Regarding the statistical analysis, descriptive statistics offered an initial overview of the dataset, facilitating the identification of patterns and trends and providing insights into central tendency, dispersion and distribution. Response frequencies were calculated for the multiple-selection items. Subsequently, multiple Spearman’s rank correlation analyses were conducted to determine the strength and direction of the relationships between specific variables [21]. To further validate these findings, a Generalized Additive Model was fitted with the overall evaluation of the course as the dependent variable. This allowed us to identify the factors that potentially predicted participants’ ratings [22].
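Spearman’s rho is the Pearson correlation computed on ranks, with tied scores (very common on 5-point Likert items) assigned the average of the ranks they span. A minimal standard-library sketch follows; the function names are hypothetical. It returns only the coefficient, so a p-value would still have to come from a statistical package (for example `scipy.stats.spearmanr`, which reports both).

```python
from math import sqrt


def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of positions i..j, shifted to 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of average ranks.

    Raises ZeroDivisionError if either variable is constant.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sqrt(sum((a - mx) ** 2 for a in rx))
    sy = sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


# Hypothetical Likert scores with a tie in the first variable.
print(round(spearman_rho([1, 2, 2, 3], [1, 2, 3, 4]), 4))
```

Computing Pearson on average ranks, rather than the shortcut formula 1 - 6*sum(d^2)/(n(n^2 - 1)), keeps the coefficient correct when many responses share the same Likert value.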

Finally, to process the feedback received in the open-ended questions, we adopted the principles of Grounded Theory, which involve constant comparison, coding and categorization of the data to identify emerging themes and patterns [23].

4. Results

In total, thirty-two higher education faculty members (N=32) took part in the study and evaluated the platform and its contents anonymously between February and April 2023. The survey was kept anonymous to facilitate objective and impartial validation of the course contents and the platform’s functionality. The participants were primarily university teaching staff and researchers who had expressed interest in the project’s topic and had provided information about their prior knowledge and training needs [13].

The Cronbach’s alpha coefficient obtained for the Likert scale items is .86, a value that indicates good internal consistency [24]. In the remainder of this section, we examine the key findings that emerged from the statistical analyses and discuss their implications for instructional design and Neuropedagogy.

4.1. Descriptive Statistics

The descriptive statistics of the Likert scale items are presented in Table 2, while the frequencies of the multiple-choice responses are shown in Table 3. The positive ratings across various aspects of the course, such as its overall quality (M=4.56, SD=.6), the relevance of the learning resources (M=4.53, SD=.55), the efficiency of the educational materials (M=4.5, SD=.7) and the perceived usefulness of the platform (M=4.46, SD=.74), indicate that participants found the course effective in achieving its intended learning objectives. Participants also agreed unanimously on the appropriateness of the learning activities for each module (Q8.1-Q8.6) and the relevance of the assessment questions (Q9), with mean, median and mode values consistently at 1. This consensus highlights the importance of aligning the learning activities with the assessment tasks and the course objectives. On the negative side, a small proportion of participants encountered technical difficulties during the training program (M=.25, SD=.43). Although this proportion is relatively low, addressing this issue is crucial to further improve the user experience and ensure that stakeholders can access and benefit from the course without hindrance.
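The indicators above can be reproduced for any single item with Python’s `statistics` module, as sketched below; the `describe` helper is hypothetical. As a worked check, assuming the technical-difficulties item was coded 1 = yes and 0 = no, M = .25 with N = 32 corresponds to 8 respondents reporting difficulties. The population SD of such an item is sqrt(.25 × .75) ≈ .433, while the sample (n - 1) SD is about .440, so the reported SD = .43 is consistent with the population formula; the text does not state which formula was used.

```python
from statistics import mean, median, multimode, pstdev, stdev


def describe(scores):
    """Mean, SD (sample and population), median and mode(s) of one item."""
    return {
        "M": mean(scores),
        "SD_sample": stdev(scores),       # n - 1 denominator
        "SD_population": pstdev(scores),  # n denominator
        "median": median(scores),
        "mode": multimode(scores),        # list, in case of several modes
    }


# Assumed 0/1 coding, back-calculated from M = .25 and N = 32.
tech_difficulties = [1] * 8 + [0] * 24
stats = describe(tech_difficulties)
print(stats["M"], round(stats["SD_population"], 2), round(stats["SD_sample"], 2))
```

Reporting which SD formula was used matters mainly for small samples such as this one; with N = 32 the two differ only in the second decimal place.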

Concerning the multiple-selection items, the analysis revealed that participants found the modules “Emotions” (56.25%), “Concentration & Attention” (46.88%) and “Associative Memory” (40.6%) to be the most useful, possibly owing to their direct relevance to teaching practice. The popularity of these modules highlights the importance of addressing real-world classroom challenges and providing educators with tools and techniques to increase learner engagement and improve learning outcomes. In contrast, participants perceived the “Introduction to Neuropedagogy” (31.25%) and “Engagement in the learning process” (31.25%) modules as less appealing, suggesting that they may have found the content too abstract or were looking for more concrete, practical examples, strategies and actionable insights that can be easily applied in diverse teaching contexts.

Regarding suggestions for improvement, the majority of participants (81.25%) believed that none of the modules required any revisions or improvements. This further confirms the general satisfaction with the course content and structure, reflecting the effectiveness of the modules in addressing participants’ interests. However, some participants identified modules as requiring revision, with “Introduction to Neuropedagogy” (9.4%), “Emotions” (12.5%) and “Neuromyths” (9.4%) at the top of the list. Interestingly, although “Emotions” was the most highly valued module, it still received feedback for improvement. This may suggest that participants are highly interested in the topic and are seeking further development or expansion in this area to better support their teaching.
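Because participants could tick several modules, the percentages above are shares of the 32 respondents and need not sum to 100%; with N = 32, each additional selection shifts a percentage by 100/32 = 3.125 points. A minimal sketch of this tally follows, with a hypothetical function name and an assumed input format (one set of ticked options per respondent).

```python
from collections import Counter


def selection_percentages(responses, n_respondents):
    """Percentage of respondents who ticked each option of a multi-select item.

    responses: one iterable of selected options per respondent (may be empty).
    Percentages are relative to respondents, so they can sum to more than 100%.
    """
    counts = Counter(opt for chosen in responses for opt in set(chosen))
    return {opt: 100 * c / n_respondents for opt, c in counts.most_common()}


# Hypothetical tally: 18 of 32 respondents tick one option.
example = [{"Emotions"}] * 18 + [set()] * 14
print(selection_percentages(example, 32))
```

Counting each respondent at most once per option (via `set(chosen)`) keeps duplicate ticks from inflating the percentages.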

4.2. Correlations

Spearman’s rank correlations revealed a strong positive correlation between participants’ general evaluation of the course and their perception of the relevance and recency of the learning materials (ρ=.73, p<.01). This finding aligns with previous research emphasizing the significance of offering current and pertinent content for successful online learning experiences (e.g., [25,26]). Additionally, we observed a strong positive correlation between the overall course assessment and the platform’s usefulness (ρ=.71, p<.01). Earlier studies have also shown that factors such as platform usability, accessibility and overall design significantly affect learners’ satisfaction and engagement in online courses (e.g., [27,28]). Moreover, we found a strong positive correlation between the general evaluation of the course and the effectiveness of the educational materials (ρ=.73, p<.01). Several studies have also highlighted the critical role of effective instructional design in fostering successful online learning outcomes (e.g., [12,29]).

4.3. Generalized Additive Model

The Generalized Additive Model analysis confirmed the aforementioned findings, indicating that the evaluation of the course is significantly predicted by several factors, with the relevance (smooth function=1.45, p<.01) and efficacy (smooth function=1.12, p<.01) of the learning materials being among the strongest predictors. These findings agree with the relevant literature, further confirming the importance of keeping course content up to date [30,31]. Although with a smaller effect, the efficiency of the learning activities (smooth function=.89, p<.01) and the relevance of the assessment tasks (smooth function=.67, p<.05) also influenced participants’ attitudes toward the course. This outcome is also in line with recent research suggesting that learning activities should be designed to enhance learners’ understanding of the course material and that assessment tasks should be closely aligned with the educational content [32,33]. Finally, the usability of the platform used to deliver the educational activities and content does not seem to have influenced participants’ ratings as much (smooth function=.28, p<.01), possibly because the sample consisted of academics, who may be more adept at navigating various platforms. However, providing learners with a user-friendly platform remains an important aspect that should not be overlooked, as it can contribute to a positive learning experience [34].
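For readers less familiar with GAMs, a model of the kind described above has the general additive form below, where Y is the overall course evaluation and each predictor enters through its own smooth function f_j estimated from the data. The subscripts are shorthand introduced here (rel: relevance of the learning materials; eff: efficacy of the learning materials; act: efficiency of the learning activities; assess: relevance of the assessment tasks; plat: platform usability). The link function g (the identity for a response treated as continuous) and the type of smoother are assumptions, since the text does not report them.

```latex
g\big(\mathbb{E}[Y]\big) = \beta_0
  + f_{1}(x_{\mathrm{rel}})
  + f_{2}(x_{\mathrm{eff}})
  + f_{3}(x_{\mathrm{act}})
  + f_{4}(x_{\mathrm{assess}})
  + f_{5}(x_{\mathrm{plat}})
```

Because each f_j is estimated separately, the model can capture non-linear relationships between a predictor and the overall rating while still reporting one summary value per term.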

4.4. Open-Ended Questions

Participants’ responses to the open-ended questions complement the quantitative data and offer additional insights. Academics found each module useful (S5.1) in its own way, with many of them naming multiple modules. The reasons cited for usefulness varied but were mostly related to the content, with comments such as “the content is informative”, “the material is packed with useful and essential guidelines”, and “the course is well-structured and comprehensive”. Some participants highlighted the practical and applied elements of the materials, stating that “the material was well organized” and that the contents are “very timely and closely linked to real problems”. They also appreciated the research-based way in which neuromyths were deconstructed, with one participant commenting on the “great job debunking myths with solid evidence”. The “Emotions” module seemed to be of particular interest to some participants, who noted the under-researched and underestimated role of emotions in the learning process. This was further supported by feedback emphasizing the importance of engagement and a positive attitude in the learning process, as well as the need to pay attention to students’ emotions and their connection to the acquisition of knowledge and skills. Other participants praised the course for its clear concepts that can be applied in everyday situations, for broadening their knowledge of associative memory, and for its interesting content containing very useful resources and literature. Finally, one participant described a module as “the most interesting” they had encountered. Overall, this feedback underscores the value of the course in addressing prevalent misconceptions and providing a comprehensive understanding of crucial aspects of the learning process.

While most participants did not offer suggestions for enhancing or modifying the modules (S6.1), a few provided valuable feedback on areas that could be improved. Specifically, one participant suggested that the “Emotions” and “Neuromyths” modules might benefit from more detailed explanations and greater clarity, while another mentioned that certain topics “could be covered at greater depth.” Participants also raised concerns about the language of the modules as well as their presentation and formatting style. Such remarks underline the need to balance content richness with accessibility to satisfy individual preferences. Lastly, a few participants underlined the importance of ongoing development and adaptation.

The feedback received on the training material (S7) was diverse. Some participants felt that they lacked the experience needed to identify areas for improvement, while others had no comments or suggestions. Nevertheless, a few participants proposed incorporating more illustrations, images and videos to enrich the learning experience and make the content more dynamic. Additionally, one participant pointed out that certain visuals needed higher resolution, as they were either small or blurry. In terms of textual content, one respondent recommended reducing the amount of text. Other participants observed that the assessment questions were more challenging than the training material itself, requiring a deeper understanding of the content to answer them. Relatedly, one participant noted that the platform rejected some correct answers in assessments because of language mixing or slight differences in phrasing, and suggested that open-ended tests should not be graded automatically to avoid such issues. In the same vein, one participant advised awarding partial points in quizzes with multiple correct answers, arguing that marking the entire answer as incorrect when only one part was wrong was neither helpful nor fair. To further support learning, one participant suggested that qualitative feedback be provided alongside the correct answer. Finally, some respondents recommended including more examples of good practice, especially for dealing with problematic or difficult students.
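The partial-credit suggestion can be made concrete with a simple rule: each correct option earns an equal share of the points, each wrongly ticked option deducts one share, and the total is floored at zero. The Python sketch below is a hypothetical scheme for illustration only; it is not the scoring behavior of the Neuropedagogy platform or of LearnDash, and the function name and parameters are assumptions.

```python
def partial_credit(selected, correct, max_points=1.0):
    """Hypothetical partial-credit rule for a multiple-answer question.

    Each correct option is worth max_points / len(correct); each wrongly
    selected option deducts the same share. The score never drops below 0.
    """
    selected, correct = set(selected), set(correct)
    if not correct:
        raise ValueError("question must have at least one correct option")
    share = max_points / len(correct)
    hits = len(selected & correct)          # correct options the learner ticked
    false_picks = len(selected - correct)   # options ticked that are wrong
    return max(0.0, (hits - false_picks) * share)


# Two of three correct options ticked, no wrong ones: two thirds of the points.
print(round(partial_credit({"a", "b"}, {"a", "b", "c"}), 3))
```

Deducting a share for each wrong selection prevents learners from earning full credit simply by ticking every option, which a purely additive rule would allow.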

The last open-ended question (S11) sought supplementary feedback from participants, including general remarks and suggestions. Most responses painted a favorable picture of the educational program, with accolades such as “Congratulations on the developed course!” and expressions of keen interest. Notably, one participant regarded the Neuropedagogy training program as both captivating and beneficial and disclosed a plan to adapt their teaching approach in accordance with the program’s principles. On the other hand, some feedback identified areas in need of enhancement. For instance, one participant experienced technical difficulties during the course (e.g., difficulty navigating back to the homepage and slow page loading), while other respondents encountered issues with the registration process, noting that the registration button did not work properly and that the platform was not very intuitive. Another concern was the quality of the translation of the learning material from English into participants’ native languages. These observations underscore the importance of addressing the technical components and translation quality of educational programs to ensure their smooth operation, efficacy and overall user experience.

5. Discussion

In this study, we sought to investigate Higher Education instructors’ perspectives on a Neuropedagogy course, aiming to identify its strengths and potential areas for enhancement. Our research not only contributes to the broader literature by showcasing methods to enrich teaching and learning practices [35] but also supports the growing evidence highlighting the significance of educators’ professional development [36,37]. The insights obtained from this study can help course and instructional designers design and develop engaging and effective online learning experiences.

Our findings revealed a predominantly favorable attitude towards the course, characterized by high ratings for the relevance and efficacy of the educational resources. These insights can be harnessed to fine-tune the design and delivery of future online courses by: (a) prioritizing the provision of timely and pertinent materials [38], (b) ensuring that assessment tasks align well with the content of the course modules [39], and (c) placing a strong focus on developing a user-friendly and technically sound platform [40,41]. Participants also unanimously commended the design of the learning activities and the congruence of the assessment tasks with the course’s intended learning outcomes [42,43]. All these factors are crucial for delivering effective and engaging learning experiences [44,45].

Concerning the evaluation of specific topics, we identified modules that call for further enhancement and consideration. Continuous evaluation and revision of courses are crucial to maintaining their relevance and efficacy [46]. Moreover, the technical obstacles reported by participants resonate with prevalent issues in online education and thus merit careful attention [47].

In addition, participants’ comments highlight the potential advantages of integrating diverse instructional strategies and multimedia resources into the course design [48]. Such an approach can accommodate varied learning preferences and foster cognitive flexibility, thereby creating a more engaging and inclusive learning environment that caters to learners’ diverse needs [33]. Lastly, our research underscores the significance of providing personalized feedback and guidance to help learners better understand their strengths and weaknesses [49].

6. Conclusions

In light of the study’s findings, we present the following implications for stakeholders involved in designing and delivering online professional development courses.

Instructional designers and course content creators specializing in professional development initiatives should pay special attention to the structure of the learning activities, ensuring that the learning material is relevant and current and that the assessments are authentic [50,51]. In addition, the diverse learning preferences of the audience should be considered to increase incentives for engagement [52].

Software developers need to invest in robust technical infrastructure and support systems to ensure that the final product delivers a smooth and seamless learning experience [32,53].

Higher Education institutions and policymakers need to recognize the potential of online courses to foster teachers’ professional growth [54] as well as their role in the overall process of optimizing teaching. As academics are experts in their fields, recommendations to change their established teaching practices may be met with skepticism, even resistance. The sustainable transformation of teaching practices requires repeated reinforcement of new knowledge, the freedom to make responsible decisions and the verification of valid interpretations through mentoring and trusted peer interactions.

In this spirit, senior management is urged to cultivate a culture of ongoing, quality-focused professional development by providing access to online courses and encouraging faculty to engage in lifelong learning [55]. Embracing this approach will not only enhance the quality of teaching but also contribute to the overall success of the educational institution [56].

The overall evaluation demonstrates a notable level of satisfaction with the quality of the course. Nonetheless, the following limitations should be considered when interpreting these findings.

One limitation is the exclusive reliance on self-reported data from a psychometric instrument, which may be subject to social desirability bias and may not fully capture participants’ actual experiences [57]. A more comprehensive assessment of the course’s impact on educators’ professional development could be obtained through alternative methods, such as longitudinal studies on the application of Neuropedagogy principles in participants’ teaching practice. To gain a more in-depth understanding of participants’ experiences and identify potential areas for improvement, future research could incorporate additional qualitative data sources such as interviews or focus groups.

Furthermore, we did not collect background information on participants. Future work should investigate the influence of participant characteristics such as gender, age, prior experience with online courses, academic discipline, and teaching experience. Understanding these relationships could help instructional designers and course developers tailor both the course content and the delivery methods.

Another significant limitation is the sample size, which restricts the generalizability of the findings to the broader population of academics. Small samples may lead to biased estimates and reduced statistical power, making it harder to detect true effects or relationships. To address this limitation, future research should recruit a larger and more diverse pool of participants from various academic disciplines, institutions, and backgrounds. This would enhance the generalizability of the findings and provide more accurate insights into participants' experiences and perceptions.
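To illustrate why sample size constrains the ability to detect a relationship, the sketch below uses the standard Fisher z approximation for a two-sided test of a correlation coefficient. It is a hypothetical illustration only. The effect sizes (r = 0.1, 0.3, 0.5), the significance level of 0.05, and the 80% power target are assumptions chosen for the example. The study's actual analyses are not reproduced here, and the approximation is strictly derived for Pearson correlations.

```python
import math
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal distribution (mean 0, sd 1)


def required_n(r: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate sample size needed to detect a correlation r (two-sided test),
    via the Fisher z approximation: n = ((z_{1-alpha/2} + z_{power}) / atanh(r))^2 + 3."""
    if not 0 < abs(r) < 1:
        raise ValueError("r must be non-zero and strictly between -1 and 1")
    z_alpha = _STD_NORMAL.inv_cdf(1 - alpha / 2)  # critical value of the test
    z_beta = _STD_NORMAL.inv_cdf(power)           # quantile for the desired power
    c = math.atanh(abs(r))                        # Fisher z-transformed effect size
    return math.ceil(((z_alpha + z_beta) / c) ** 2 + 3)


def approx_power(n: int, r: float, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided test of H0: rho = 0 with n participants,
    ignoring the negligible chance of rejecting in the opposite tail."""
    if n <= 3:
        raise ValueError("n must exceed 3 for the Fisher z approximation")
    z_alpha = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    return _STD_NORMAL.cdf(math.atanh(abs(r)) * math.sqrt(n - 3) - z_alpha)


if __name__ == "__main__":
    for r in (0.1, 0.3, 0.5):  # hypothetical small, medium and large effects
        print(f"r = {r}: about {required_n(r)} participants for 80% power")
    print(f"power with n = 20 and r = 0.3: {approx_power(20, 0.3):.2f}")
```

Under these assumptions, roughly 85 participants would be needed to detect a medium correlation (r = 0.3) with 80% power, whereas a sample of 20 would detect it only about a quarter of the time.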

Supplementary Materials

Supporting information can be downloaded from the website of this paper posted on Preprints.org.

Author Contributions

Conceptualization, S.M.; methodology, S.M. and M.F.; software, K.D.; validation, S.M. and A.C.; formal analysis, A.C.; investigation, A.C.; resources, K.D.; data curation, S.M.; writing—original draft preparation, S.M. and A.C.; writing—review and editing, A.C. and S.M.; visualization, S.M. and K.D.; supervision, M.F.; project administration, S.M.; funding acquisition, M.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Erasmus+ program of the European Union, grant number 2020-1-PL01-KA203-081740.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Ethics Committee of the University of Patras (protocol code 6823 / 9 October 2020).

Data Availability Statement

The data that support the findings of this study are available on request from the corresponding author, S.M.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Snapshots from the Neuropedagogy e-learning platform and course.

References

1. Youn, T.I.K.; Price, T.M. Learning from the Experience of Others: The Evolution of Faculty Tenure and Promotion Rules in Comprehensive Institutions. J. High. Educ. 2009, 80, 204–237.

2. Malcolm, M. A Critical Evaluation of Recent Progress in Understanding the Role of the Research-Teaching Link in Higher Education. High. Educ. 2014, 67, 289–301.

3. Entwistle, N.J.; Peterson, E.R. Conceptions of Learning and Knowledge in Higher Education: Relationships with Study Behaviour and Influences of Learning Environments. Int. J. Educ. Res. 2004, 41, 407–428.

4. Tokuhama-Espinosa, T. Bringing the Neuroscience of Learning to Online Teaching: An Educator’s Handbook; Teachers College Press, 2021; ISBN 9780807765531.

5. Grospietsch, F.; Lins, I. Review on the Prevalence and Persistence of Neuromyths in Education – Where We Stand and What Is Still Needed. Front. Educ. 2021, 6.

6. Ansari, D.; de Smedt, B.; Grabner, R.H. Neuroeducation – A Critical Overview of An Emerging Field. Neuroethics 2012, 5, 105–117.

7. Doukakis, S.; Alexopoulos, E.C. Online Learning, Educational Neuroscience and Knowledge Transformation Opportunities for Secondary Education Students. J. High. Educ. Theory Pract. 2021, 21.

8. Patrício, R.; Moreira, A.C.; Zurlo, F. Enhancing Design Thinking Approaches to Innovation through Gamification. Eur. J. Innov. Manag. 2021, 24, 1569–1594.

9. Gachago, D.; Morkel, J.; Hitge, L.; van Zyl, I.; Ivala, E. Developing eLearning Champions: A Design Thinking Approach. Int. J. Educ. Technol. High. Educ. 2017, 14, 30.

10. Gonzalez, C.S.G.; Gonzalez, E.G.; Cruz, V.M.; Saavedra, J.S. Integrating the Design Thinking into the UCD’s Methodology. In Proceedings of the IEEE EDUCON 2010 Conference; IEEE, April 2010; pp. 1477–1480.

11. Dimitropoulos, K.; Mystakidis, S.; Fragkaki, M. Bringing Educational Neuroscience to Distance Learning with Design Thinking: The Design and Development of a Hybrid E-Learning Platform for Skillful Training. In Proceedings of the 2022 7th South-East Europe Design Automation, Computer Engineering, Computer Networks and Social Media Conference (SEEDA-CECNSM), Ioannina, Greece, 23–25 September 2022; pp. 1–6.

12. Mystakidis, S.; Fragkaki, M.; Filippousis, G. Ready Teacher One: Virtual and Augmented Reality Online Professional Development for K-12 School Teachers. Computers 2021, 10, 134.

13. Fragkaki, M.; Mystakidis, S.; Dimitropoulos, K. Higher Education Faculty Perceptions and Needs on Neuroeducation in Teaching and Learning. Educ. Sci. 2022, 12, 707.

14. Fragkaki, M.; Mystakidis, S.; Dimitropoulos, K. Higher Education Teaching Transformation with Educational Neuroscience Practices. In Proceedings of the 15th annual International Conference of Education, Research and Innovation; IATED, Seville, Spain, 8 November 2022; pp. 579–584.

15. Krouska, A.; Troussas, C.; Virvou, M. Comparing LMS and CMS Platforms Supporting Social E-Learning in Higher Education. In Proceedings of the 2017 8th International Conference on Information, Intelligence, Systems & Applications (IISA); IEEE, August 2017; pp. 1–6.

16. Al-Ajlan, A.; Zedan, H. Why Moodle. Future Trends Distrib. Comput. Syst. IEEE Int. Workshop 2008, 58–64.

17. Natriello, G.; Chae, H.S. Taking Project-Based Learning Online. In Innovations in Learning and Technology for the Workplace and Higher Education; Guralnick, D., Auer, M.E., Poce, A., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 224–236.

18. Mystakidis, S.; Lympouridis, V. Immersive Learning. Encyclopedia 2023, 3, 396–405.

19. Pallant, J. SPSS Survival Manual: A Step by Step Guide to Data Analysis Using IBM SPSS; Allen & Unwin, 2020; ISBN 9781000252521.

20. Field, A. Discovering Statistics Using IBM SPSS Statistics; SAGE Publications, 2018; ISBN 9781526419521.

21. Hauke, J.; Kossowski, T. Comparison of Values of Pearson’s and Spearman’s Correlation Coefficients on the Same Sets of Data. QUAGEO 2011, 30, 87–93.

22. Hastie, T.; Tibshirani, R.; Friedman, J.H. The Elements of Statistical Learning: Data Mining, Inference, and Prediction; Springer Series in Statistics; Springer: Berlin/Heidelberg, Germany, 2009; ISBN 9780387848846.

23. Walker, D.; Myrick, F. Grounded Theory: An Exploration of Process and Procedure. Qual. Health Res. 2006, 16, 547–559.

24. Tavakol, M.; Dennick, R. Making Sense of Cronbach’s Alpha. Int. J. Med. Educ. 2011, 2, 53–55.

25. Moore, M.G.; Kearsley, G. Distance Education: A Systems View of Online Learning; Cengage Learning, 2011; ISBN 9781133715450.

26. Keshavarz, M.; Ghoneim, A. Preparing Educators to Teach in a Digital Age. Int. Rev. Res. Open Distrib. Learn. 2021, 22, 221–242.

27. Al-Azawei, A.; Parslow, P.; Lundqvist, K. Investigating the Effect of Learning Styles in a Blended E-Learning System: An Extension of the Technology Acceptance Model (TAM). Australas. J. Educ. Technol. 2016.

28. Sun, P.-C.; Tsai, R.J.; Finger, G.; Chen, Y.-Y.; Yeh, D. What Drives a Successful E-Learning? An Empirical Investigation of the Critical Factors Influencing Learner Satisfaction. Comput. Educ. 2008, 50, 1183–1202.

29. Rienties, B.; Brouwer, N.; Lygo-Baker, S. The Effects of Online Professional Development on Higher Education Teachers’ Beliefs and Intentions towards Learning Facilitation and Technology. Teach. Teach. Educ. 2013, 29, 122–131.

30. Khalil, R.; Mansour, A.E.; Fadda, W.A.; Almisnid, K.; Aldamegh, M.; Al-Nafeesah, A.; Alkhalifah, A.; Al-Wutayd, O. The Sudden Transition to Synchronized Online Learning during the COVID-19 Pandemic in Saudi Arabia: A Qualitative Study Exploring Medical Students’ Perspectives. BMC Med. Educ. 2020, 20, 285.

31. Zhou, T.; Huang, S.; Cheng, J.; Xiao, Y. The Distance Teaching Practice of Combined Mode of Massive Open Online Course Micro-Video for Interns in Emergency Department During the COVID-19 Epidemic Period. Telemed. E-Health 2020, 26, 584–588.

32. Preece, J.; Sharp, H.; Rogers, Y. Interaction Design: Beyond Human-Computer Interaction; Wiley, 2015; ISBN 9781119020752.

33. Yu, Z. A Meta-Analysis and Bibliographic Review of the Effect of Nine Factors on Online Learning Outcomes across the World. Educ. Inf. Technol. 2022, 27, 2457–2482.

34. Rodrigues, H.; Almeida, F.; Figueiredo, V.; Lopes, S.L. Tracking E-Learning through Published Papers: A Systematic Review. Comput. Educ. 2019, 136, 87–98.

35. Darby, F.; Lang, J.M. Small Teaching Online: Applying Learning Science in Online Classes; Wiley: Hoboken, NJ, USA, 2019; ISBN 9781119619093.

36. Christopoulos, A.; Sprangers, P. Integration of Educational Technology during the Covid-19 Pandemic: An Analysis of Teacher and Student Receptions. Cogent Educ. 2021, 8.

37. Fernández-Batanero, J.M.; Montenegro-Rueda, M.; Fernández-Cerero, J.; García-Martínez, I. Digital Competences for Teacher Professional Development. Systematic Review. Eur. J. Teach. Educ. 2020, 1–19.

38. Bozkurt, A.; Sharma, R.C. Emergency Remote Teaching in a Time of Global Crisis Due to CoronaVirus Pandemic. Asian J. Distance Educ. 2020, 15, 1–6.

39. Zlatkin-Troitschanskaia, O.; Pant, H.A.; Coates, H. Assessing Student Learning Outcomes in Higher Education: Challenges and International Perspectives. Assess. Eval. High. Educ. 2016, 41, 655–661.

40. Christopoulos, A.; Conrad, M.; Shukla, M. Increasing Student Engagement through Virtual Interactions: How? Virtual Real. 2018, 22, 353–369.

41. König, J.; Jäger-Biela, D.J.; Glutsch, N. Adapting to Online Teaching during COVID-19 School Closure: Teacher Education and Teacher Competence Effects among Early Career Teachers in Germany. Eur. J. Teach. Educ. 2020, 43, 608–622.

42. Vanslambrouck, S.; Zhu, C.; Lombaerts, K.; Philipsen, B.; Tondeur, J. Students’ Motivation and Subjective Task Value of Participating in Online and Blended Learning Environments. Internet High. Educ. 2018, 36, 33–40.

43. Nancekivell, S.E.; Shah, P.; Gelman, S.A. Maybe They’re Born with It, or Maybe It’s Experience: Toward a Deeper Understanding of the Learning Style Myth. J. Educ. Psychol. 2020, 112, 221–235.

44. Darling-Hammond, L.; Flook, L.; Cook-Harvey, C.; Barron, B.; Osher, D. Implications for Educational Practice of the Science of Learning and Development. Appl. Dev. Sci. 2020, 24, 97–140.

45. Doo, M.Y.; Bonk, C.; Heo, H. A Meta-Analysis of Scaffolding Effects in Online Learning in Higher Education. Int. Rev. Res. Open Distrib. Learn. 2020, 21.

46. Sadaf, A.; Wu, T.; Martin, F. Cognitive Presence in Online Learning: A Systematic Review of Empirical Research from 2000 to 2019. Comput. Educ. Open 2021, 100050.

47. Li, X.; Odhiambo, F.A.; Ocansey, D.K.W. The Effect of Students’ Online Learning Experience on Their Satisfaction during the COVID-19 Pandemic: The Mediating Role of Preference. Front. Psychol. 2023, 14.

48. Clark, R.C.; Mayer, R.E. E-Learning and the Science of Instruction: Proven Guidelines for Consumers and Designers of Multimedia Learning; Wiley Desktop Editions; Wiley, 2011; ISBN 9781118047262.

49. Van der Kleij, F.M.; Feskens, R.C.W.; Eggen, T.J.H.M. Effects of Feedback in a Computer-Based Learning Environment on Students’ Learning Outcomes. Rev. Educ. Res. 2015, 85, 475–511.

50. Rao, K. Inclusive Instructional Design: Applying UDL to Online Learning. J. Appl. Instr. Des. 2021, 10.

51. Al-Samarraie, H.; Saeed, N. A Systematic Review of Cloud Computing Tools for Collaborative Learning: Opportunities and Challenges to the Blended-Learning Environment. Comput. Educ. 2018, 124, 77–91.

52. Laurillard, D. The Educational Problem That MOOCs Could Solve: Professional Development for Teachers of Disadvantaged Students. Res. Learn. Technol. 2016, 24, 29369.

53. Lidolf, S.; Pasco, D. Educational Technology Professional Development in Higher Education: A Systematic Literature Review of Empirical Research. Front. Educ. 2020, 5.

54. Carrillo, C.; Flores, M.A. COVID-19 and Teacher Education: A Literature Review of Online Teaching and Learning Practices. Eur. J. Teach. Educ. 2020, 43, 466–487.

55. Mystakidis, S.; Berki, E.; Valtanen, J.-P. The Patras Blended Strategy Model for Deep and Meaningful Learning in Quality Life-Long Distance Education. Electron. J. E-Learn. 2019, 17, 66–78.

56. Rahrouh, M.; Taleb, N.; Mohamed, E.A. Evaluating the Usefulness of E-Learning Management System Delivery in Higher Education. Int. J. Econ. Bus. Res. 2018, 16, 162.

57. Podsakoff, P.M.; MacKenzie, S.B.; Lee, J.-Y.; Podsakoff, N.P. Common Method Biases in Behavioral Research: A Critical Review of the Literature and Recommended Remedies. J. Appl. Psychol. 2003, 88, 879–903.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).