Submitted:

16 September 2024

Posted:

17 September 2024


Abstract

Keywords:

1. Introduction

- (1) Increasing connectivity, data and computational power (cloud technology, smart sensors and actuators, even wearables, blockchain…)

- (2) Boosting analytics and system intelligence (advanced analytics, machine learning, neural networks, artificial intelligence (AI)…)

- (3) Promoting machine-machine and human-machine interaction (extended reality (XR), including virtual, augmented and mixed reality (VR, AR and MR, respectively), digital twins, robotics, automation, autonomous guided vehicles, Internet of Things, Internet of Systems…)

- (4) Enhancing advanced engineering (additive manufacturing such as 3D printing, ICTs, nanotechnology, renewable energies, biotechnology…)

- RQ1: Does ChatGPT provide a trustworthy time-independent learning experience to K-12 students, when teachers are unavailable?

- RQ2: Can ChatGPT create meaningful interactions with K-12 students?

- RQ3: What is the real impact of using ChatGPT as a virtual mentor on K-12 students learning science when teachers are unavailable?

Following previous works on the use of AI within an educational context [74,75,76], this exploratory study addresses these RQs by evaluating ChatGPT’s competence as an educational tool that provides K-12 students with personalized, meaningful, and location- and time-independent learning, in a safe environment and in real time, assisting teachers in mentoring students through specific duties such as correcting homework and resolving doubts at home. Special focus is placed on assessing: (a) students’ proficiency before and after the intervention, and (b) students’ perception of the AI as a useful educational tool, once duly evaluated. To the best of our knowledge, this is the first empirical assessment of the real impact of using ChatGPT as a virtual mentor on K-12 students learning chemistry and physics, within the frame of a blended-learning pedagogical approach combining constructivist/connectivist in-person learning (Education 2.0 and 3.0) with student-centered self-regulated cybergogy (Education 4.0) [16].

2. Materials and Methods

- Desired outcomes: This empirical study aims to systematically assess the real effect, possibilities and challenges of a complementary and well-defined use of ChatGPT outside the traditional school environment (mainly focused on correcting specific homework assignments designed by the teacher and resolving students’ particular doubts and needs) on K-12 (15-16-year-old) students learning chemistry and physics. This will help answer the previous RQs and provide more insight into the use of advanced AI tools such as ChatGPT as teaching assistants in the field of science education. The outcomes monitored to assess the impact of the AI on students are their proficiency (through the evolution of their grades) and their perception of the AI as an educational tool, before and after the intervention.

- Appropriate level of automation: The study was designed within a blended-learning pedagogical approach, where the teacher’s role is essential not only as mentor but also as facilitator [77]. Thus, K-12 students kept the constructivist/connectivist in-person learning at school in combination with online learning experiences designed by the teacher (flipped learning [78]). The only difference applied to students in the experimental group, who could complement their homework tasks with ChatGPT, used as an educational tool able to correct assignments, resolve doubts and guide the students towards a better understanding of the lesson and a stronger, longer-term retention of knowledge. Therefore, only partial automation is considered.

- Ethical considerations: All procedures performed in this study involving human participants were in accordance with national and European ethical standards (European Network of Research Ethics Committees), the 1964 Helsinki Declaration and its later amendments, the 1978 Belmont Report, the EU Charter of Fundamental Rights (26/10/2012), and the EU General Data Protection Regulation (2016/679). As the study involved 15-16-year-old students, parental informed consent was obtained for all individual participants included in the study. The main ethical concerns discussed in the literature relate to intellectual property, privacy, biases, fairness, accuracy, transparency, lack of robustness against “jailbreaking prompts”, and the electricity and water consumption needed to sustain the AI servers [79,80,81,82]. In this study, the planned use of ChatGPT leaves little room for intellectual property, privacy or transparency issues. Besides, jailbreaking prompts do not seem useful for students in this case. However, students might misuse ChatGPT to do their homework for them instead of using the AI to correct their homework and resolve their doubts [56]. This is a potential problem, although the technology is so new and attractive that students are expected to engage readily in testing ChatGPT and its potential benefits. In any case, such misuse might be detected by comparing students’ grades before and after the intervention, as the grades of students misusing the AI would not be expected to show any improvement. Another consideration is the generation of incorrect or biased information, as the AI’s answers are limited by its previous training and some mathematical hallucinations have already been detected [83]. Thus, ChatGPT’s performance in the specific field of K-12 chemistry and physics will first be validated.
In the case of large language models, bias can be defined as the appearance of systematic misrepresentations, attribution errors or factual distortions based on learned patterns that might lead to favoring certain groups or ideas over others, preserving stereotypes or even making incorrect assumptions [84]. Training data, algorithms and other factors might contribute to the rise of demographic, cultural, linguistic, temporal, confirmation, and ideological/political biases [85]. However, these potential preexisting biases within the model should not affect the utility of the AI within the field of interest (K-12 science education), even though users should and will be made aware of this possibility. Besides those considerations, the foreseen impact of this study on learners focuses on achieving a better understanding of the lesson and a stronger, longer-term retention of knowledge. Teachers, in turn, would be assisted by the AI in a time- and location-independent manner in their task of mentoring students, leaving them more time to personally address particular students’ needs.

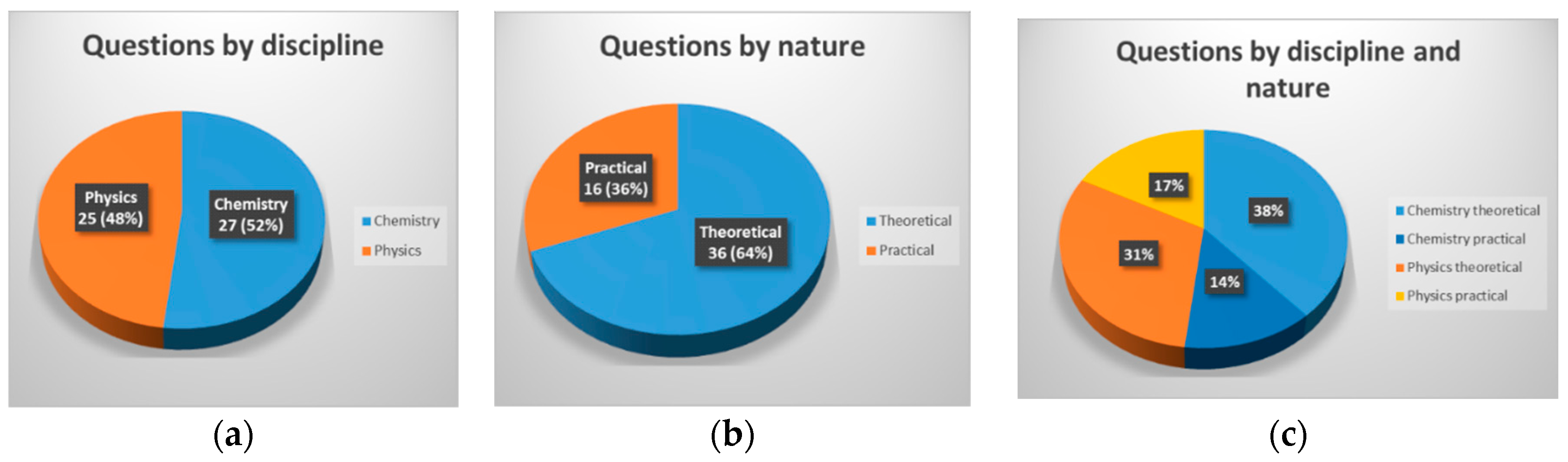

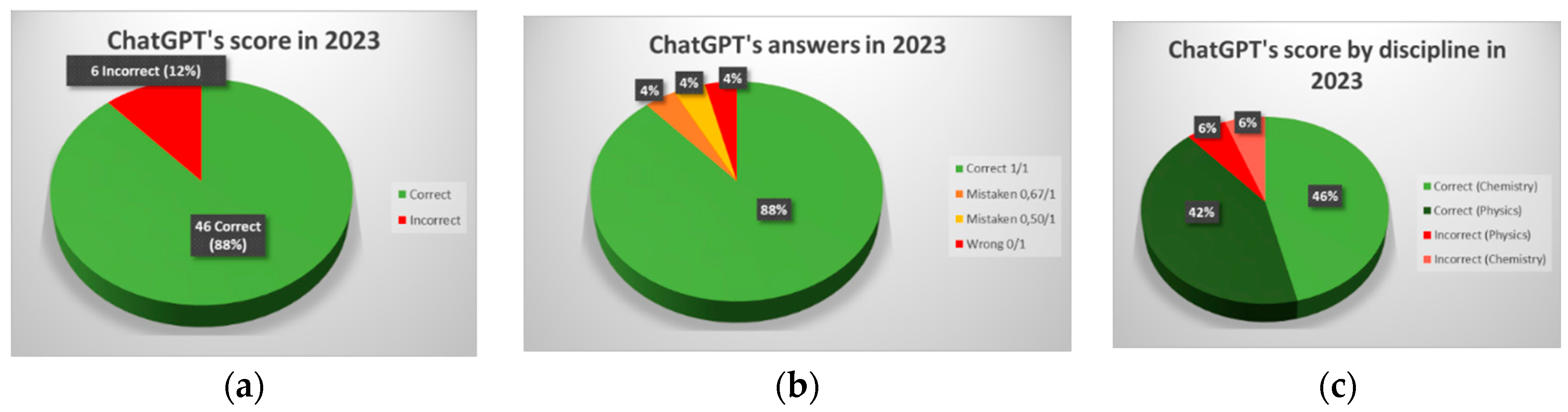

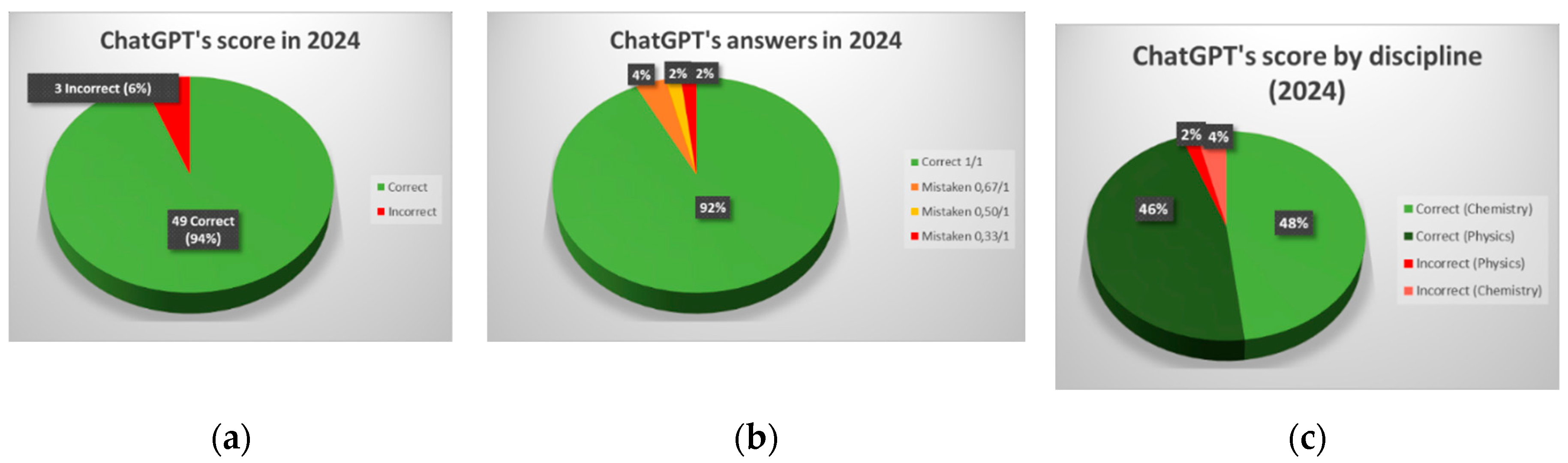

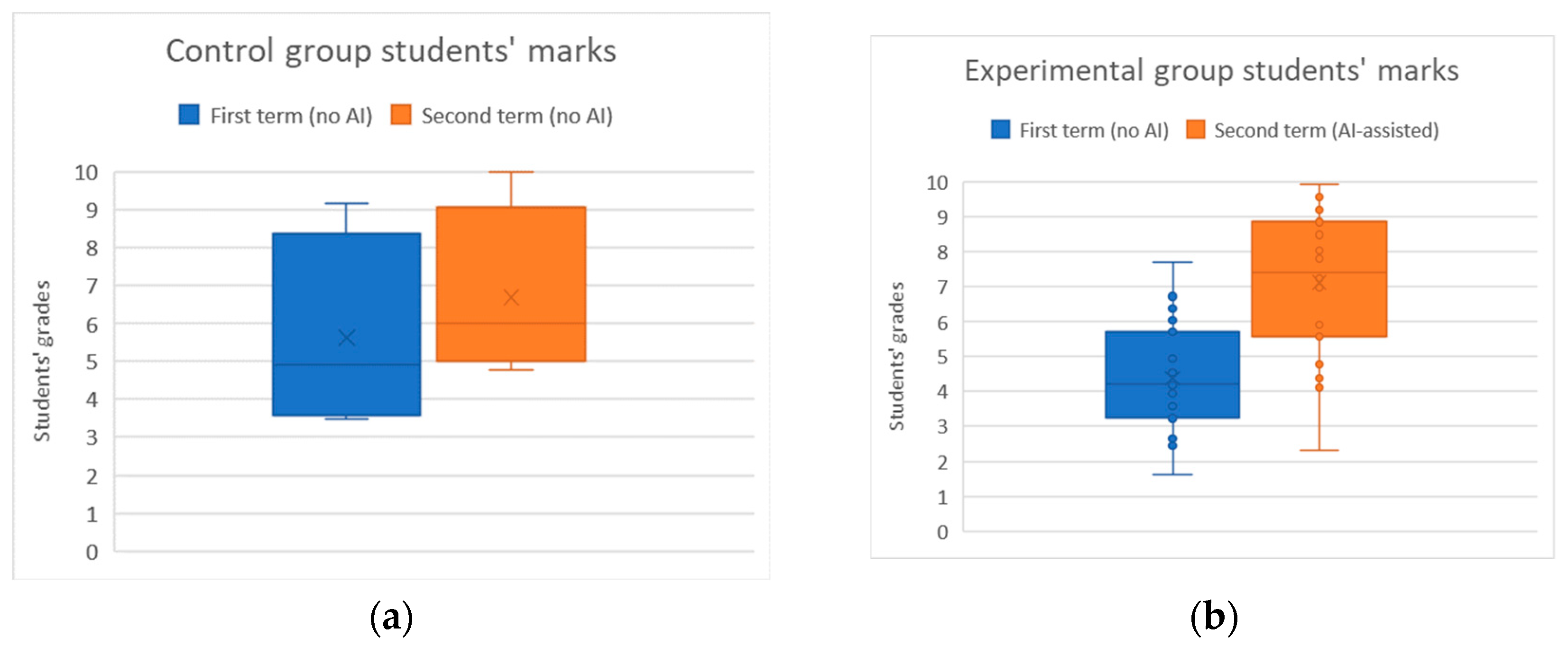

- Evaluation of the effectiveness: According to the literature, the gold standard for measuring change after an intervention (e.g. within educational research) is the experimental design model [85]. In this case, the study assessed the effectiveness of the proposed educational approach through a quasi-experimental analysis, that is, an empirical interventional study without randomization, used to estimate the causal effects of an intervention (the impact of an AI-powered chatbot used as a virtual mentor on K-12 students learning chemistry and physics when their teachers are unavailable) on the target population. Randomization was not an option for the present study, as the aim was to form two groups of students (the experimental group, which interacted with the chatbot, and the control group, which had no interaction with the AI) balanced in students’ level of proficiency (low, medium and high), thus avoiding potential biases arising from unbalanced groups. First, the real performance of ChatGPT in the field of chemistry and physics for K-12 students (specifically 15-16-year-old students) was systematically evaluated by the authors. The AI-powered chatbot answered a test specifically designed for real K-12 students, comprising a set of 52 selected theoretical questions and problems summarizing the knowledge and problem-solving skills to be acquired during a complete academic course, in a similar way to previous studies [48,59,60], always keeping in mind that this technology is not purposely designed for education, despite its great potential. Unlike other studies [86], no difficult or impossible questions (e.g. questions demanding drawings as outputs, or analyzing images as inputs) were removed from the set, in order to obtain a fair and accurate picture of the performance of ChatGPT within this particular field, including all types of knowledge and skills required of 15-16-year-old students learning chemistry and physics. Eleven teachers, including chemists, physicists, and engineers, evaluated the answers. The AI’s replies to theoretical questions were assessed for clarity, accuracy and soundness, while more applied questions such as problems were evaluated not only on the accuracy of the final result, but also on the validity and clarity of the procedure used to reach that result, paying special attention to those resources that support a stronger, longer-term retention of knowledge in a pedagogical manner. Once the theoretical performance of the chatbot in the field of interest had been assessed, the authors evaluated the practical capacity of this tool to assist teachers in mentoring real 15-16-year-old students learning chemistry and physics when educators were unavailable, specifically in duties such as resolving theoretical doubts and correcting homework assignments (including problem-solving questions) in real time and without time restrictions. Therefore, this study empirically assessed the impact of providing students with a meaningful interaction with the chatbot through which they could experience fully personalized learning, improving their knowledge and skills while boosting their engagement. This was monitored through two indicators chosen to measure the impact of ChatGPT on K-12 students learning chemistry and physics, before and after the intervention: students’ grades (taking into account both proficiency and problem-solving skills) and their perception of the AI as a useful educational tool.
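The balancing of the two groups by proficiency level and the before/after comparison of grades described above can be illustrated with a minimal Python sketch. The allocation rule (ranking students within each proficiency tier by a prior grade and alternating between groups), the function names `balanced_split` and `mean_gain`, and the sample data are all assumptions made for illustration; the text does not specify how students were actually allocated or how grade changes were computed.

```python
from collections import defaultdict

# Hypothetical illustration: the study states only that the groups were
# balanced by proficiency level (low, medium, high), not how.

def balanced_split(students):
    """Split students into two groups with similar proficiency profiles.

    students: list of (student_id, level, prior_grade) tuples, where
    level is "low", "medium" or "high".
    Returns (experimental_ids, control_ids).
    """
    tiers = defaultdict(list)
    for student_id, level, prior_grade in students:
        tiers[level].append((prior_grade, student_id))

    experimental, control = [], []
    for level in ("low", "medium", "high"):
        # Rank within the tier, then alternate between the two groups
        # so each group receives about half of every tier.
        ranked = sorted(tiers[level], reverse=True)
        for position, (_, student_id) in enumerate(ranked):
            target = experimental if position % 2 == 0 else control
            target.append(student_id)
    return experimental, control


def mean_gain(pre_grades, post_grades):
    """Average change in grade (post minus pre) for one group."""
    gains = [post - pre for pre, post in zip(pre_grades, post_grades)]
    return sum(gains) / len(gains)


if __name__ == "__main__":
    # Made-up sample data, not taken from the study.
    sample = [
        ("s1", "low", 3.0), ("s2", "low", 4.0),
        ("s3", "medium", 5.5), ("s4", "medium", 6.0),
        ("s5", "high", 8.0), ("s6", "high", 9.0),
    ]
    experimental_ids, control_ids = balanced_split(sample)
    print(experimental_ids, control_ids)
    print(mean_gain([5.0, 6.0], [6.0, 8.0]))
```

Comparing `mean_gain` for the experimental group against the control group gives a first descriptive indication of the intervention's effect; any inferential test would be a further step not shown here.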

2.1. Assessment of ChatGPT’s Performance in the Field of Chemistry and Physics for K-12 Students

- A set of 52 theoretical questions and problems was carefully selected to systematically ascertain the real competence of ChatGPT in the field of interest, covering the main knowledge and problem-solving skills to be acquired by 15-16-year-old students during a complete academic course. Combining theoretical questions and problems made it possible to analyze not only ChatGPT’s current strengths (textual output) but also its potential weaknesses, exploring its capacity for problem-solving (combining text recognition with mathematical calculation) and its capacity to handle inputs and outputs other than text (e.g. requests to draw the Lewis structures of some molecules, a fundamental part of the knowledge expected of chemistry students). The whole set of questions is available within the Supporting Information. The aim of this part of the study is not to verify whether ChatGPT fails, as it is already known that it does, but to systematically assess the number of mistakes made in a real physics and chemistry test summarizing the knowledge and problem-solving skills required for a whole course, and to grade it on a human scale, verifying whether ChatGPT might be a trustworthy tool in K-12 science education. Finally, other parameters concerning the quality of the answers are also taken into consideration (clarity, insight, systematicity, simplicity, etc.).
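Grading the chatbot's test on a human scale from several evaluators' marks can be sketched as follows. This is only an illustration under stated assumptions: the per-answer marking range (0 to 1), the 0-10 final scale, the function name `grade_on_scale` and the sample marks are hypothetical, since the text does not state the rubric or the scale that was used.

```python
from statistics import mean

# Hypothetical rubric: each evaluator gives each answer a mark between
# 0 and max_points; the final grade is rescaled to 0..scale.

def grade_on_scale(ratings, max_points=1.0, scale=10.0):
    """Aggregate evaluator marks into per-question means and one grade.

    ratings: dict mapping question id -> list of evaluator marks.
    Returns (per_question_means, overall_grade).
    """
    per_question = {
        question: mean(marks) for question, marks in ratings.items()
    }
    overall = scale * mean(list(per_question.values())) / max_points
    return per_question, overall


if __name__ == "__main__":
    # Made-up marks from two evaluators on two questions.
    sample_ratings = {"q1": [1.0, 1.0], "q2": [0.0, 1.0]}
    per_question, overall = grade_on_scale(sample_ratings)
    print(per_question)
    print(overall)
```

Averaging within each question first keeps every question equally weighted even if some answers were marked by fewer evaluators.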

2.2. Assessment of ChatGPT’s Impact on Real K-12 (15-16-Year-Old) Students Learning Chemistry

- Strongly disagree.

- Disagree.

- Neither agree nor disagree.

- Agree.

- Strongly agree.
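The five response options listed above correspond to the 1-5 agreement codes used in the survey items. A minimal sketch of how such responses might be coded and summarized is given below; the choice of summary statistics (median, frequency counts and share of agreement), the function name `summarize_likert` and the sample responses are assumptions for illustration, not the analysis reported by the authors.

```python
from collections import Counter
from statistics import median

# Codes follow the 1-5 agreement scale stated in the survey items.
LIKERT_CODES = {
    "Strongly disagree": 1,
    "Disagree": 2,
    "Neither agree nor disagree": 3,
    "Agree": 4,
    "Strongly agree": 5,
}


def summarize_likert(responses):
    """Summarize the responses to one Likert-type item.

    responses: list of option labels (keys of LIKERT_CODES).
    Returns the sample size, median code, count per code and the
    share of respondents who chose "Agree" or "Strongly agree".
    """
    codes = [LIKERT_CODES[label] for label in responses]
    counts = Counter(codes)
    return {
        "n": len(codes),
        "median": median(codes),
        "counts": {code: counts.get(code, 0) for code in range(1, 6)},
        "share_agree": sum(1 for code in codes if code >= 4) / len(codes),
    }


if __name__ == "__main__":
    # Made-up responses, not taken from the study.
    sample = ["Agree", "Strongly agree", "Disagree", "Agree"]
    print(summarize_likert(sample))
```

The median and frequency counts are used here because single Likert-type items are ordinal; a mean would only be appropriate under additional assumptions.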

3. Results

3.1. Assessment of ChatGPT’s Performance in the Field of Chemistry and Physics for K-12 Students

3.2. Assessment of ChatGPT’s Impact on Real K-12 (15-16-Year-Old) Students Learning Chemistry

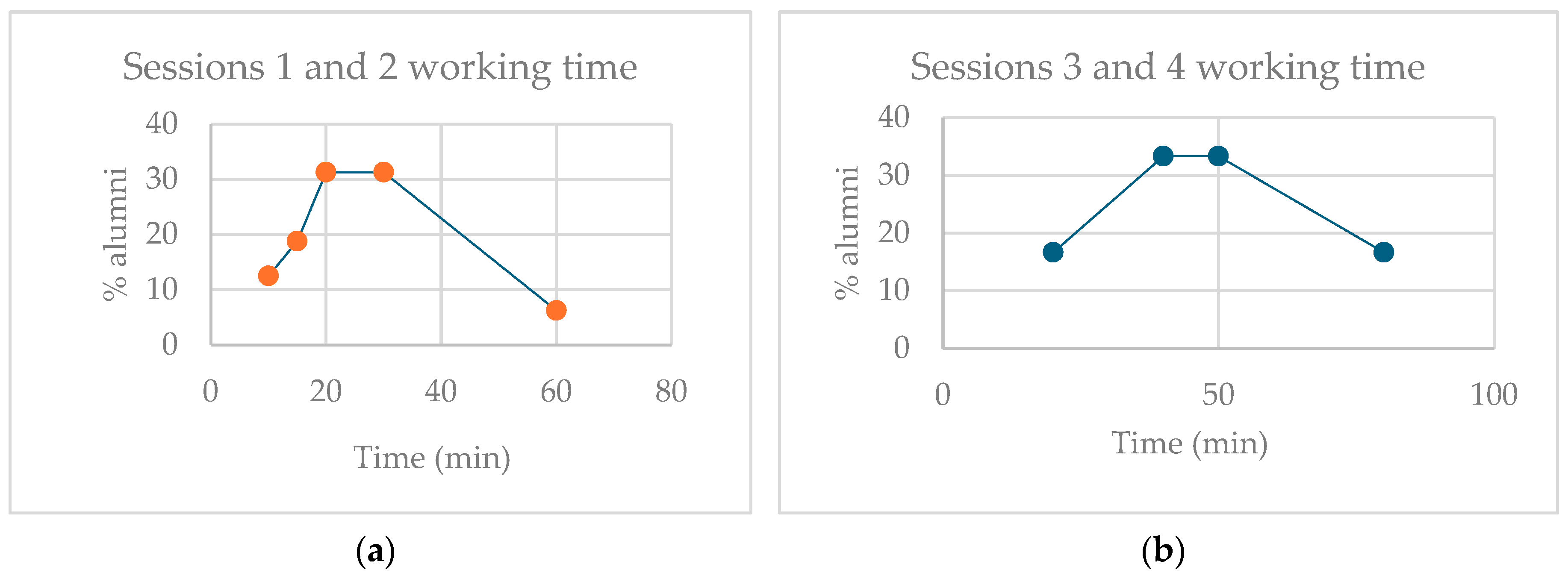

3.2.1. How Long Did It Take You to Complete the Session?

3.2.2. In Which Aspects of the Session Did You Find the Most Difficulty?

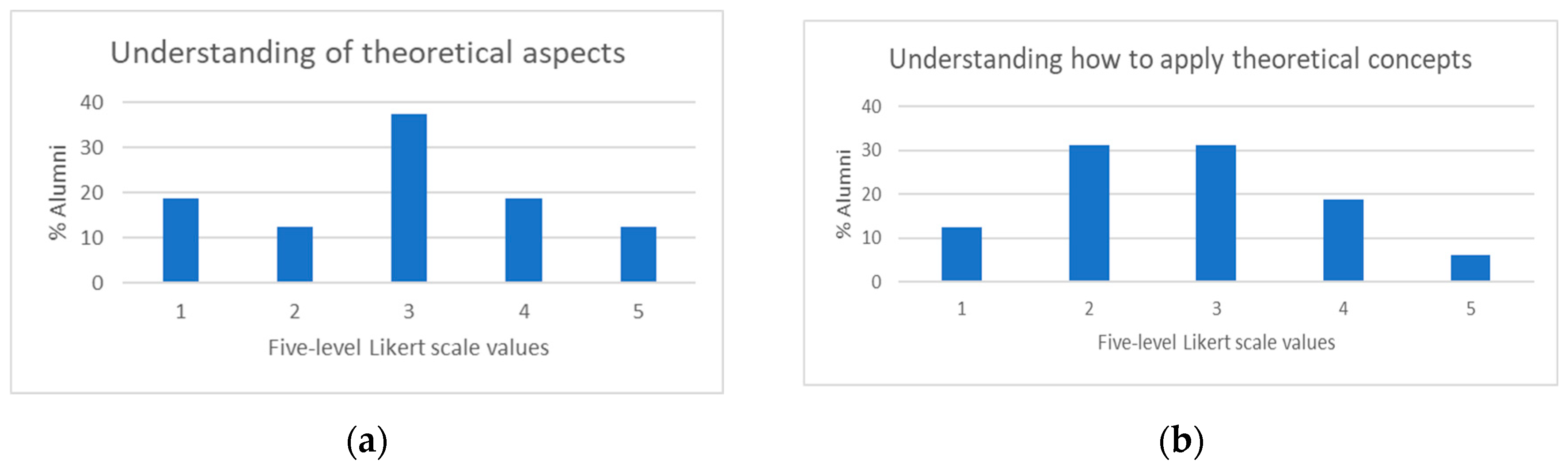

3.2.3. Rate Your Level of Agreement (1: Strongly Disagree, 2: Disagree, 3: Neither Agree Nor Disagree, 4: Agree, 5: Strongly Agree) with the Following Statements:

3.2.3.1. You Have Understood the Theoretical Concepts

3.2.3.2. You Know How to Apply the Theoretical Concepts

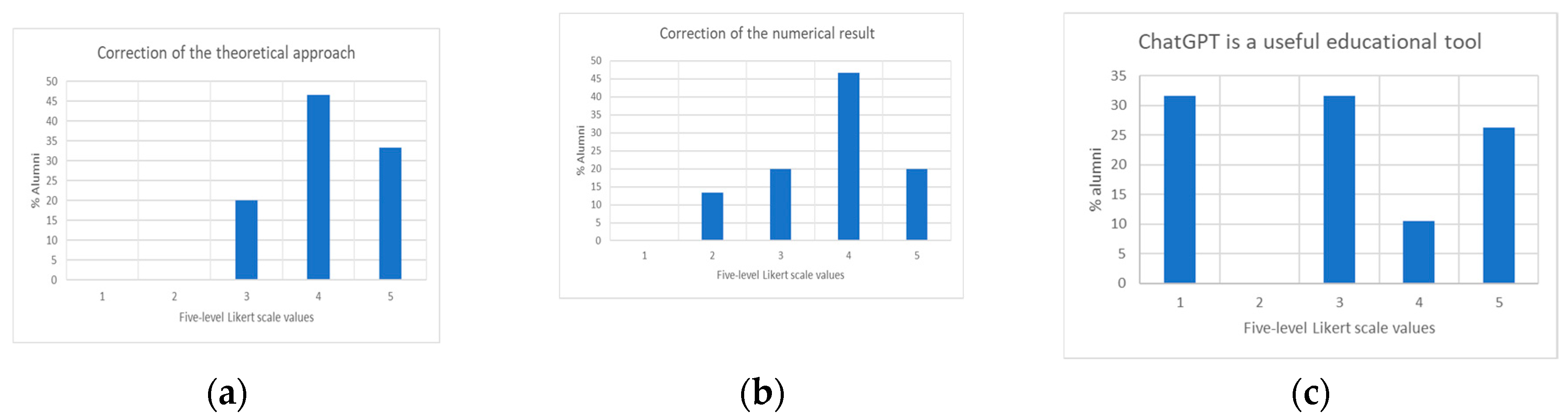

3.2.4. Rate Your Level of Agreement (1-5) with the Following Sentences:

3.2.4.1. The Approach Offered by ChatGPT to Solve the Exercise Is Correct

3.2.4.2. The Numerical Result of the Exercise Provided by ChatGPT Is Correct

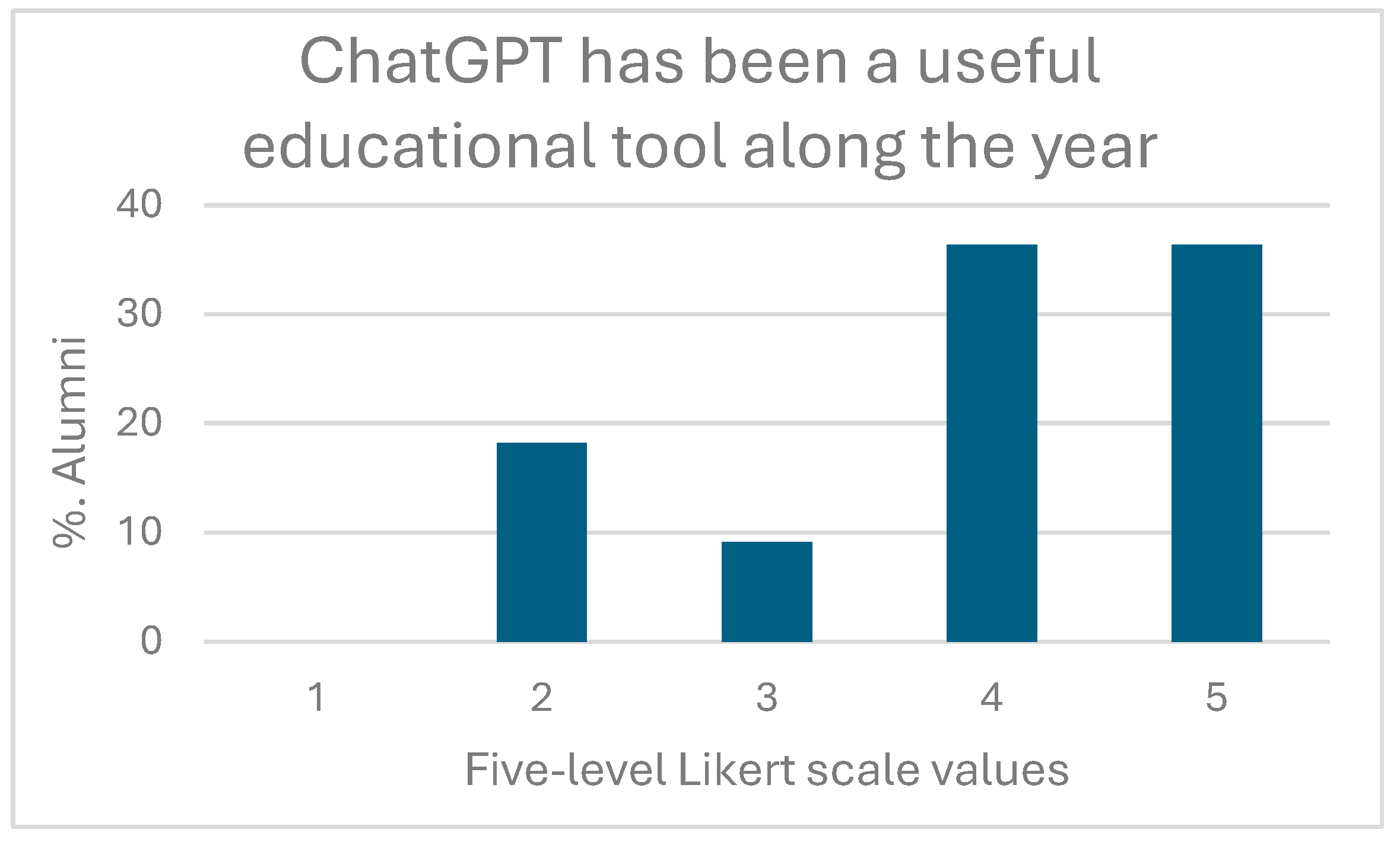

3.2.4.3. ChatGPT Is Useful as a Complementary Educational Tool (For Solving Theoretical Doubts or Correcting Problems) in the Absence of a Teacher

4. Discussion

- RQ1: Does ChatGPT provide a trustworthy time-independent learning experience to K-12 students, when teachers are unavailable?

- RQ2: Can ChatGPT create meaningful interactions with K-12 students?

- RQ3: What is the real impact of using ChatGPT as a virtual mentor on K-12 students learning science when teachers are unavailable?

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Schwab, K. The Fourth Industrial Revolution. Foreign Affairs, 12 December 2015.

- Wang, Y., Ma, HS., Yang, JH., Wang, KS. Industry 4.0: a way from mass customization to mass personalization production. Adv. Manuf. 2017, 5, 311–320. [CrossRef]

- Schwab, K. The Fourth Industrial Revolution: what it means, how to respond. World Economic Forum 2016. Available online: https://www.weforum.org/agenda/2016/01/the-fourth-industrial-revolution-what-it-means-and-how-to-respond/ (accessed on 19 February 2023).

- Hilbert, M. and López, P. The World’s Technological Capacity to Store, Communicate, and Compute Information. Science 2011, 332(6025), 60-65. [CrossRef]

- Esposito, M. World Economic Forum White Paper: Driving the Sustainability of Production Systems with Fourth Industrial Revolution Innovation. World Economic Forum, 2018. Available online: https://www.researchgate.net/publication/322071988_World_Economic_Forum_White_Paper_Driving_the_Sustainability_of_Production_Systems_with_Fourth_Industrial_Revolution_Innovation (accessed on 20 February 2023).

- Bondyopadhyay, P.K. In the beginning [junction transistor]. Proceedings of the IEEE 1998, 86, 63–77. [Google Scholar] [CrossRef]

- What are Industry 4.0, the Fourth Industrial Revolution, and 4IR? McKinsey, 17 August 2022. Available online: https://www.mckinsey.com/featured-insights/mckinsey-explainers/what-are-industry-4-0-the-fourth-industrial-revolution-and-4ir (accessed on 20 February 2023).

- Bai, C., Dallasega, P., Orzes, G., Sarkis, J. Industry 4.0 technologies assessment: A sustainability perspective. International Journal of Production Economics 2020, 229, 107776. [CrossRef]

- Marr, B. Why Everyone Must Get Ready For The 4th Industrial Revolution. Forbes 2016. Available online: https://www.forbes.com/sites/bernardmarr/2016/04/05/why-everyone-must-get-ready-for-4th-industrial-revolution/?sh=366e89503f90 (accessed on 22 February 2023).

- Mudzar, N.M.B.M., Chew, K.W. Change in Labour Force Skillset for the Fourth Industrial Revolution: A Literature Review. International Journal of Technology 2022, 13(5), 969-978. [CrossRef]

- Goldin, T.; Rauch, E.; Pacher, C.; Woschank, M. Reference Architecture for an Integrated and Synergetic Use of Digital Tools in Education 4.0. Procedia Computer Science 2022, 200, 407–417. [Google Scholar] [CrossRef]

- Cónego, L., Pinto, R., Gonçalves, G. Education 4.0 and the Smart Manufacturing Paradigm: A Conceptual Gateway for Learning Factories. In Smart and Sustainable Collaborative Networks 4.0, Camarinha-Matos, L.M.; Boucher, X.; Afsarmanesh, H., Eds. PRO-VE 2021. IFIP Advances in Information and Communication Technology, vol 629. Springer, Cham.

- Costan, E.; Gonzales, G.; Gonzales, R.; Enriquez, L.; Costan, F.; Suladay, D.; Atibing, N.M.; Aro, J.L.; Evangelista, S.S.; Maturan, F.; Selerio, E., Jr.; Ocampo, L. Education 4.0 in Developing Economies: A Systematic Literature Review of Implementation Barriers and Future Research Agenda. Sustainability 2021, 13, 12763. [Google Scholar] [CrossRef]

- González-Pérez, L.I.; Ramírez-Montoya, M.S. Components of Education 4.0 in 21st Century Skills Frameworks: Systematic Review. Sustainability 2022, 14, 1493. [Google Scholar] [CrossRef]

- Bonfield, C.A.; Salter, M.; Longmuir, A.; Benson, M.; Adachi, C. Transformation or evolution?: Education 4.0, teaching and learning in the digital age. Higher Education Pedagogies for the 4th Industrial Revolution 2020, 5:1, 223-246. [CrossRef]

- Miranda, J.; Navarrete, C.; Noguez, J.; Molina-Espinosa, J.M.; Ramírez-Montoya, M.S.; Navarro-Tuch, S.A.; Bustamante-Bello, M.R.; Rosas-Fernández, J.B.; Molina, A. The core components of education 4.0 in higher education: Three case studies in engineering education. Computers & Electrical Engineering 2021, 93, 107278. [Google Scholar] [CrossRef]

- Chiu, W.-K. Pedagogy of Emerging Technologies in Chemical Education during the Era of Digitalization and Artificial Intelligence: A Systematic Review. Educ. Sci. 2021, 11, 709. [Google Scholar] [CrossRef]

- Mhlanga, D.; Moloi, T. COVID-19 and the digital transformation of education: what are we learning on 4IR in South Africa? Educ. Sci. 2020, 10, 1–11. [Google Scholar] [CrossRef]

- Peterson, L.; Scharber, C.; Thuesen, A.; Baskin, K. A rapid response to COVID-19: one district’s pivot from technology integration to distance learning. Information and learning science 2020, 121(5-6), 461-469. [CrossRef]

- Guo, YJ; Chen, L; Guo, Yujuan; Chen, Li. Ninth International Conference of Educational Innovation through Technology (EITT) 2020, 10-18.

- Mogos, R., Bodea, C.N., Dascalu, M., & Lazarou, E., Trifan, L., Safonkina, O., Nemoianu, I. Technology enhanced learning for industry 4.0 engineering education. Revue Roumaine des Sciences Techniques - Serie Électrotechnique et Énergétique 2018, 63, 429-435.

- Moraes, E.B., Kipper, L.M., Hackenhaar Kellermann, A.C., Austria, L., Leivas, P., Moraes, J.A.R. and Witczak, M. Integration of Industry 4.0 technologies with Education 4.0: advantages for improvements in learning. Interactive Technology and Smart Education 2022, Vol. ahead-of-print, No. ahead-of-print.

- Ciolacu, M.I., Tehrani, A.F., Binder, L., & Svasta, P. Education 4.0 - Artificial Intelligence Assisted Higher Education: Early recognition System with Machine Learning to support Students’ Success. IEEE 24th International Symposium for Design and Technology in Electronic Packaging (SIITME) 2018, 23-30. [CrossRef]

- Chen, Z., Zhang, J., Jiang, X., Hu, Z., Han, X., Xu, M., Savitha, & Vivekananda, G.N. Education 4.0 using artificial intelligence for students performance analysis. Inteligencia Artificial 2020, 23 (66), 124-137. [CrossRef]

- Tahiru, F. AI in Education: A Systematic Literature Review. Journal of Cases on Information Technology 2021, 23(1), 1-20. [CrossRef]

- Miao, Fengchun & Holmes, Wayne & Huang, Ronghuai & Zhang, Hui. AI and education Guidance for policy-makers. UNESCO Publishing: Paris, France, 2021. Available online: https://unesdoc.unesco.org/ark:/48223/pf0000376709 (accessed on 8 March 2023).

- Carbonell, J.R. AI in CAI: An artificial-intelligence approach to computer-assisted instruction. IEEE transactions on man-machine systems 1970, 11(4), 190-202. [CrossRef]

- Psotka, J., Massey, L. D., & Mutter, S. A. (Eds.). (1988). Intelligent tutoring systems: Lessons learned. Lawrence Erlbaum Associates, Inc., New Jersey, United States.

- S. Piramuthu. Knowledge-based web-enabled agents and intelligent tutoring systems. IEEE Transactions on Education 2005, 48, no. 4, 750-756. [CrossRef]

- Mousavinasab, E.; Zarifsanaiey, N.; Kalhori, S.R.N.; Rakhshan, M.; Keikha, L.; Saeedi, M.G. Intelligent tutoring systems: a systematic review of characteristics, applications, and evaluation methods. Interactive Learning Environments 2021, 29:1, 142-163. [CrossRef]

- Alrakhawi, H.; Jamiat, N.; Abu-Naser, S. Intelligent tutoring systems in education: a systematic review of usage, tools, effects and evaluation. Journal of Theoretical and Applied Information Technology 2023, 101, 1205–1226. [Google Scholar]

- Song, D.; Oh, E.Y.; Rice, M. Interacting with a conversational agent system for educational purposes in online courses. 10th International Conference on Human System Interactions (HSI) 2017, 78-82, Ulsan, Korea (South).

- Shute, V. J., & Psotka, J. (1994). Intelligent Tutoring Systems: Past, Present, and Future. Human resources directorate manpower and personnel research division, 2-52.

- VanLehn, K. “The relative effectiveness of human tutoring, intelligent tutoring systems, and other tutoring systems”. Educational Psychologist 2011, 46 (4), 197–221. [CrossRef]

- Fernoaga, P.V.; Sandu, F.; Stelea, G.A.; Gavrila, C. Intelligent Education Assistant Powered by Chatbots. Conference proceedings of The 14th International Scientific Conference of eLearning and Software for Education (eLSE) 2018, 376-383. [CrossRef]

- Hamam, D. (2021). The New Teacher Assistant: A Review of Chatbots’ Use in Higher Education. In: Stephanidis, C., Antona, M., Ntoa, S. (eds) HCI International 2021 - Posters. HCII 2021. Communications in Computer and Information Science 2021, vol 1421. Springer, Cham.

- Satu, M.S.; Parvez, M.H. ; Shamim-Al-Mamun. Review of integrated applications with AIML based chatbot. International Conference on Computer and Information Engineering (ICCIE) 2015, Rajshahi, Bangladesh, 87-90. [CrossRef]

- Schwab, K. The Fourth Industrial Revolution: what it means, how to respond. World Economic Forum 2016. Available online: https://www.weforum.org/agenda/2016/01/the-fourth-industrial-revolution-what-it-means-and-how-to-respond/ (accessed on 19 February 2023).

- “The state of AI in 2023: Generative AI’s breakout year” McKinsey AI global survey 2023. Available online: https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai-in-2023-generative-AIs-breakout-year#/ (accessed on 18 April 2024).

- N. Maslej, L. Fattorini, R. Perrault, V. Parli, A. Reuel, E. Brynjolfsson, J. Etchemendy, K. Ligett, T. Lyons, J. Manyika, J.C. Niebles, Y. Shoham, R. Wald, and J. Clark. “The AI Index 2024 Annual Report,” AI Index Steering Committee, Institute for Human-Centered AI, Stanford University, Stanford, CA, April 2024.

- R. Lam et al. “Learning skillful medium-range global weather forecasting”. Science 2023, 382, 1416-1421. [CrossRef]

- Merchant, A., Batzner, S., Schoenholz, S.S. et al. “Scaling deep learning for materials discovery”. Nature 2023, 624, 80–85. [CrossRef]

- Boiko, D.A., MacKnight, R., Kline, B. et al. “Autonomous chemical research with large language models”. Nature 2023, 624, 570–578. [CrossRef]

- Rudolph, J.; Tan, S.; Tan, S. ChatGPT: Bullshit spewer or the end of traditional assessments in higher education? Journal of Applied Learning & Teaching 2023, 6, 1. [Google Scholar] [CrossRef]

- Castelvecchi, D. Are ChatGPT and AlphaCode going to replace programmers? Nature 2022. Published online December 8, 2022. [CrossRef]

- Tung, L. ChatGPT can write code. Now researchers say it’s good at fixing bugs, too. ZDNET 2023. Archived from the original on February 3, 2023. Available online: https://www.zdnet.com/article/chatgpt-can-write-code-now-researchers-say-its-good-at-fixing-bugs-too/ (accessed on 13 March 2023).

- Stokel-Walker, C. AI bot ChatGPT writes smart essays — should professors worry? Nature 2022. Published online December 9, 2022. Available online: https://www.nature.com/articles/d41586-022-04397-7 (accessed on 13 March 2023).

- Kung, TH.; Cheatham, M.; Medenilla, A.; Sillos, C.; De Leon, L.; Elepaño, C.; Madriaga, M.; Aggabao, R.; Diaz-Candido, G., Maningo, J.; Tseng, V. Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. PLOS Digit Health 2023, 2(2): e0000198. [CrossRef]

- Koe, C. ChatGPT shows us how to make music with ChatGPT. Published online January 27, 2023. Available online: https://musictech.com/news/gear/ways-to-use-chatgpt-for-music-making/ (accessed on 13 March 2023).

- Zheng, Z.; Zhang, O.; Borgs, C.; Chayes, J. T.; Yaghi, O. M. “ChatGPT Chemistry Assistant for Text Mining and the Prediction of MOF Synthesis”. J. Am. Chem. Soc. 2023, 145 (32), 18048– 18062. [CrossRef]

- Pradhan, T. et al. “The Future of ChatGPT in Medicinal Chemistry: Harnessing AI for Accelerated Drug Discovery.” Chemistry Select 2024, 9, 13, e202304359. [CrossRef]

- Zhang W, Wang Q, Kong X, Xiong J, Ni S, Cao D, et al. “Fine-tuning Large Language Models for Chemical Text Mining”. ChemRxiv. 2024. [CrossRef]

- OpenAI (2023). Available online: https://arxiv.org/pdf/2303.08774.pdf (accessed on 21 March 2023).

- Roose, K. The Brilliance and Weirdness of ChatGPT. The New York Times. Published online December 5, 2022. Available online: https://www.nytimes.com/2022/12/05/technology/chatgpt-ai-twitter.html (accessed on 14 March 2023).

- Sanders, N.E.; Schneier, B. Opinion | How ChatGPT Hijacks Democracy. The New York Times. Published online on January 15, 2023. Available online: https://archive.is/Cyaac (accessed on 14 March 2023).

- García-Peñalvo, F. J. La percepción de la Inteligencia Artificial en contextos educativos tras el lanzamiento de ChatGPT: disrupción o pánico. Education in the Knowledge Society (EKS) 2023, 24, e31279. [Google Scholar] [CrossRef]

- Chomsky, N.; Roberts, I.; Watumull, J. Opinion | Noam Chomsky: The False Promise of ChatGPT. The New York Times. Published online on March 12, 2023. Available online: https://archive.is/SM77M (accessed on 14 March 2023).

- Lo, C.K. What Is the Impact of ChatGPT on Education? A Rapid Review of the Literature. Educ. Sci. 2023, 13, 410. [Google Scholar] [CrossRef]

- Terwiesch, C. Would ChatGPT get a Wharton MBA? A prediction based on its performance in the operations management course. Mack Institute for Innovation Management at the Wharton School, University of Pennsylvania; 2023. Available online: https://mackinstitute.wharton.upenn.edu/wp-content/uploads/2023/01/Christian-Terwiesch-Chat-GTP.pdf (accessed on 13 March 2023).

- Mogali, S.R. Initial impressions of ChatGPT for anatomy education. Anat Sci Educ. 2023 (07 February). [CrossRef]

- Wu, R., Yu, Z. Do AI chatbots improve students learning outcomes? Evidence from a meta-analysis. Br J Educ Technol. 2023, 00, 1–24. [CrossRef]

- Rospigliosi, P. Artificial intelligence in teaching and learning: what questions should we ask of ChatGPT? Interactive Learning Environments 2023, 31:1, 1-3.

- Pavlik, J. V. Collaborating With ChatGPT: Considering the Implications of Generative Artificial Intelligence for Journalism and Media Education. Journalism & Mass Communication Educator 2023, 78(1), 84–93. [CrossRef]

- Jeon, J.; Lee, S. Large language models in education: A focus on the complementary relationship between human teachers and ChatGPT. Educ Inf Technol 2023. [CrossRef]

- Luan, L.; Lin, X.; Li, W. Exploring the Cognitive Dynamics of Artificial Intelligence in the Post-COVID-19 and Learning 3.0 Era: A Case Study of ChatGPT. arXiv:2302.04818 2023.

- Rahman, M.M.; Watanobe, Y. ChatGPT for Education and Research: Opportunities, Threats, and Strategies. Appl. Sci. 2023, 13, 5783. [CrossRef]

- Kamil Malinka, Martin Peresíni, Anton Firc, Ondrej Hujnák, Filip Janus. On the Educational Impact of ChatGPT: Is Artificial Intelligence Ready to Obtain a University Degree? ITiCSE 2023: Proceedings of the 2023 Conference on Innovation and Technology in Computer Science Education V. 1, 2023, 47–53. [CrossRef]

- Zhai, X. ChatGPT for Next Generation Science Learning (January 20, 2023). Available at SSRN. Available online: https://ssrn.com/abstract=4331313. [CrossRef]

- Wollny Sebastian, Schneider Jan, Di Mitri Daniele, Weidlich Joshua, Rittberger Marc, Drachsler Hendrik. Are We There Yet? - A Systematic Literature Review on Chatbots in Education. Frontiers in Artificial Intelligence 2021, 4. [CrossRef]

- Cooper, G. Examining Science Education in ChatGPT: An Exploratory Study of Generative Artificial Intelligence. J Sci Educ Technol 2023, 32, 444–452. [CrossRef]

- Dos Santos, R.P. Enhancing Chemistry Learning with ChatGPT, Bing Chat, Bard, and Claude as Agents-to-Think-With: A Comparative Case Study. arXiv:2311.00709 2023.

- Schulze Balhorn, L., Weber, J.M., Buijsman, S. et al. Empirical assessment of ChatGPT’s answering capabilities in natural science and engineering. Sci Rep 2024, 14, 4998. [CrossRef]

- Su (苏嘉红), J.; Yang (杨伟鹏), W. Unlocking the Power of ChatGPT: A Framework for Applying Generative AI in Education. ECNU Review of Education 2023, 0(0). [CrossRef]

- L. Mercadé, F.J. Díaz-Fernández, M. Sinusia Lozano, J. Navarro-Arenas, M. Gómez, E. Pinilla-Cienfuegos, D. Ortiz de Zárate, V.J. Gómez Hernández, A. Díaz-Rubio. Research mapping in the teaching environment: tools based on network visualizations for a dynamic literature review. INTED2023 Proceedings 2023, 3916-3922. [CrossRef]

- L. Mercadé, D. Ortiz de Zárate, A. Barreda, E. Pinilla-Cienfuegos. INTED2023 Proceedings 2023, 6175-6179.

- A. Barreda, B. García-Cámara, D. Ortiz de Zárate Díaz, E. Pinilla-Cienfuegos, L. Mercadé. INTED2023 Proceedings 2023, 2547-2554.

- Bizami, N.A.; Tasir, Z.; Kew, S.N. Innovative pedagogical principles and technological tools capabilities for immersive blended learning: a systematic literature review. Educ. Inf. Technol. 2023, 28, 1373–1425. [Google Scholar] [CrossRef]

- Chen, C.K.; Huang, N.T.N.; Hwang, G.J. Findings and implications of flipped science learning research: A review of journal publications. Interactive Learning Environments 2022, 30:5, 949-966. [CrossRef]

- Stahl, B.C.; Eke, D. The ethics of ChatGPT – Exploring the ethical issues of an emerging technology. International Journal of Information Management 2024, 74, 102700. [Google Scholar] [CrossRef]

- Wu, X.; Duan, R.; Ni, J. Unveiling security, privacy, and ethical concerns of ChatGPT. Journal of Information and Intelligence 2024, 2, 102–115. [Google Scholar] [CrossRef]

- Peng, L., & Zhao, B. Navigating the ethical landscape behind ChatGPT. Big Data & Society 2024, 11(1). [CrossRef]

- Zhou, J.; Müller, H.; Holzinger, A.; Chen, F. Ethical ChatGPT: Concerns, Challenges, and Commandments. arXiv 2023. Available online: https://arxiv.org/pdf/2305.10646.

- Frieder, S.; Pinchetti, L.; Griffiths, R.R.; Salvatori, T.; Lukasiewicz, T.; Petersen, P.C.; Chevalier, A.; Berner, J. Mathematical Capabilities of ChatGPT. arXiv 2023, arXiv:2301.13867.

- Ferrara, E. Should ChatGPT be Biased? Challenges and Risks of Bias in Large Language Models. arXiv 2023. Available online: https://arxiv.org/pdf/2304.03738.pdf.

- Jenkinson, J. Measuring the Effectiveness of Educational Technology: What are we Attempting to Measure? Electronic Journal of e-Learning 2009, 7, 3, 273 – 280.

- Gilson A, Safranek CW, Huang T, Socrates V, Chi L, Taylor RA, Chartash D. How Does ChatGPT Perform on the United States Medical Licensing Examination? The Implications of Large Language Models for Medical Education and Knowledge Assessment. JMIR Med Educ. 2023, Feb 8, 9, e45312.

- Turing, Alan. Computing Machinery and Intelligence. Mind, LIX 1950, (236), 433–460.

- Boone, H. N., & Boone, D. A. Analysing Likert data. Journal of extension 2012, 50(2), 1–5. [CrossRef]

- Wu, S.; Wang, F. Artificial intelligence-based simulation research on the flipped classroom mode of listening and speaking teaching for English majors. Mobile Information Systems 2021, Article ID 4344244.

- Klos, M.C.; Escoredo, M.; Joerin, A.; Lemos, V.N.; Rauws, M.; Bunge, E.L. Artificial Intelligence-Based Chatbot for Anxiety and Depression in University Students: Pilot Randomized Controlled Trial. JMIR Form Res. 2021, 5(8), e20678.

- Mishra, P.; Singh, U.; Pandey, C.M.; Mishra, P.; Pandey, G. Application of Student’s t-test, analysis of variance, and covariance. Ann Card Anaesth. 2019, 22(4), 407–411.

- Hsu, M.H.; Chen, P.S.; Yu, C.S. Proposing a task-oriented chatbot system for EFL learners speaking practice. Interactive Learning Environments 2021, 1–12.

- Ilgaz, H.B.; Çelik, Z. The Significance of Artificial Intelligence Platforms in Anatomy Education: An Experience With ChatGPT and Google Bard. Cureus 2023, 15(9), e45301.

- Litt, E.; Zhao, S.; Kraut, R.; Burke, M. What Are Meaningful Social Interactions in Today’s Media Landscape? A Cross-Cultural Survey. Social Media + Society 2020, 6(3).

- Cooper, H.; Okamura, L.; Gurka, V. Social activity and subjective well-being. Personality and Individual Differences 1992, 13(5), 573–583.

- Hilvert-Bruce, Z.; Neill, J.T.; Sjöblom, M.; Hamari, J. Social motivations of live-streaming viewer engagement on Twitch. Computers in Human Behavior 2018, 84, 58–67.

- Offer, S. Family time activities and adolescents’ emotional well-being. Journal of Marriage and Family 2013, 75(1), 26–41.

- Gonzales, A.L. Text-based communication influences self-esteem more than face-to-face or cellphone communication. Computers in Human Behavior 2014, 39, 197–203.

- Brennan, S.E. The grounding problem in conversations with and through computers. In Social and Cognitive Approaches to Interpersonal Communication; Fussell, S.R., Kreuz, R.J., Eds.; Lawrence Erlbaum, 1998; pp. 201–225.

- Boothby, E.J.; Clark, M.S.; Bargh, J.A. Shared experiences are amplified. Psychological Science 2014, 25(12), 2209–2216.

- Maslow, A.H. Preface to motivation theory. Psychosomatic Medicine 1943, 5(1), 85–92.

- Deci, E.L.; Ryan, R.M. Autonomy and need satisfaction in close relationships: Relationships motivation theory. In Human Motivation and Interpersonal Relationships: Theory, Research, and Applications; 2014; pp. 53–73.

- Burleson, B.R. The experience and effects of emotional support: What the study of cultural and gender differences can tell us about close relationships, emotion and interpersonal communication. Personal Relationships 2003, 10(1), 1–23.

- Cook, T.D.; Campbell, D.T. Quasi-Experimentation: Design & Analysis Issues for Field Settings, 1st ed.; Rand McNally: Chicago, 1979.

- Caspersen, J.; Smeby, J.C.; Aamodt, P.O. Measuring learning outcomes. Eur J Educ. 2017, 52, 20–30.

- Urban Education Institute, University of Chicago. The Importance of Grades. 2017. Available online: https://uei.uchicago.edu/sites/default/files/documents/UEI%202017%20New%20Knowledge%20-%20The%20Importance%20of%20Grades.pdf (accessed on 13 July 2024).

- Moon, J.; Yang, R.; Cha, S.; Kim, S.B. ChatGPT vs Mentor: Programming Language Learning Assistance System for Beginners. In Proceedings of the 2023 IEEE 8th International Conference On Software Engineering and Computer Systems (ICSECS), Penang, Malaysia, 2023; pp. 106–110.

- Kim, N.Y. A study on the use of artificial intelligence chatbots for improving English grammar skills. Journal of Digital Convergence 2019, 17(8), 37–46.

- Mageira, K.; Pittou, D.; Papasalouros, A.; Kotis, K.; Zangogianni, P.; Daradoumis, A. Educational AI chatbots for content and language integrated learning. Applied Sciences 2022, 12(7).

- Hwang, W.Y.; Guo, B.C.; Hoang, A.; Chang, C.C.; Wu, N.T. Facilitating authentic contextual EFL speaking and conversation with smart mechanisms and investigating its influence on learning achievements. Computer Assisted Language Learning 2022, 1–27.

- Garzón, J.; Acevedo, J. Meta-analysis of the impact of augmented reality on students’ learning gains. Educational Research Review 2019, 27, 244–260.

- Jeon, J. Exploring AI chatbot affordances in the EFL classroom: Young learners’ experiences and perspectives. Computer Assisted Language Learning 2022, 1–26.

- Watson, S.; Romic, J. ChatGPT and the entangled evolution of society, education, and technology: A systems theory perspective. European Educational Research Journal 2024, 0(0).

- Anderson, P.W. More is different. Science 1972, 177, 393–396.

| Question | Score | Question | Score | Question | Score |
|---|---|---|---|---|---|
| 1 | 1 | 19 | 1 | 37 | 1 |
| 2 | 1 | 20 | 1 | 38 | 0 |
| 3 | 1 | 21 | 0.67 | 39 | 1 |
| 4 | 1 | 22 | 1 | 40 | 1 |
| 5 | 0 | 23 | 1 | 41 | 1 |
| 6 | 1 | 24 | 1 | 42 | 1 |
| 7 | 1 | 25 | 1 | 43 | 1 |
| 8 | 1 | 26 | 1 | 44 | 1 |
| 9 | 0.50 | 27 | 1 | 45 | 1 |
| 10 | 1 | 28 | 1 | 46 | 1 |
| 11 | 1 | 29 | 1 | 47 | 1 |
| 12 | 1 | 30 | 1 | 48 | 0.67 |
| 13 | 1 | 31 | 1 | 49 | 1 |
| 14 | 1 | 32 | 1 | 50 | 0.50 |
| 15 | 1 | 33 | 1 | 51 | 1 |
| 16 | 1 | 34 | 1 | 52 | 1 |
| 17 | 1 | 35 | 1 | | |
| 18 | 1 | 36 | 1 | Final Score | 9.3/10 |
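The final score in the table above appears to be the mean per-question score rescaled to a 10-point scale. This is an assumption, since the aggregation rule is not stated next to the table; the variable names below are illustrative only. A minimal sketch of that arithmetic:

```python
# Assumption: final score = 10 * (sum of per-question scores) / (number of questions).
n_questions = 52

# Questions that did not receive full marks in the table; all others scored 1.
exceptions = {5: 0.0, 9: 0.50, 21: 0.67, 38: 0.0, 48: 0.67, 50: 0.50}

scores = [exceptions.get(q, 1.0) for q in range(1, n_questions + 1)]
final_score = 10 * sum(scores) / n_questions

print(f"{final_score:.1f}/10")
```

With the values listed in the table, this evaluates to 9.3/10, which agrees with the reported final score.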

| Question | Score | Question | Score | Question | Score |
|---|---|---|---|---|---|
| 1 | 1 | 19 | 1 | 37 | 1 |
| 2 | 1 | 20 | 1 | 38 | 1 |
| 3 | 1 | 21 | 0.67 | 39 | 1 |
| 4 | 1 | 22 | 1 | 40 | 1 |
| 5 | 0 | 23 | 1 | 41 | 1 |
| 6 | 1 | 24 | 1 | 42 | 1 |
| 7 | 1 | 25 | 1 | 43 | 1 |
| 8 | 1 | 26 | 1 | 44 | 1 |
| 9 | 0.50 | 27 | 1 | 45 | 1 |
| 10 | 1 | 28 | 1 | 46 | 1 |
| 11 | 1 | 29 | 1 | 47 | 1 |
| 12 | 1 | 30 | 1 | 48 | 1 |
| 13 | 1 | 31 | 1 | 49 | 1 |
| 14 | 1 | 32 | 1 | 50 | 0.50 |
| 15 | 1 | 33 | 1 | 51 | 1 |
| 16 | 1 | 34 | 1 | 52 | 1 |
| 17 | 1 | 35 | 1 | | |
| 18 | 1 | 36 | 1 | Final Score | 9.7/10 |

| Control Group | Before | After | Experimental Group | Before | After |
|---|---|---|---|---|---|
| Mean | 5.62 | 6.69 | Mean | 4.37 | 7.11 |
| Variance | 6.8225 | 5.2588 | Variance | 2.5190 | 4.3867 |
| Observations | 4 | 4 | Observations | 19 | 19 |
| Pearson correlation coefficient | 0.7697 | | Pearson correlation coefficient | 0.5951 | |
| Hypothesized mean difference | 0 | | Hypothesized mean difference | 0 | |
| Degrees of freedom | 3 | | Degrees of freedom | 18 | |
| t statistic | -1.2654 | | t statistic | -6.9602 | |
| P(T<=t), one-tailed test | 0.1475 | | P(T<=t), one-tailed test | 8.3829E-07 | |
| t critical value (one-tailed test) | 2.3533 | | t critical value (one-tailed test) | 1.7341 | |
| P(T<=t), two-tailed test | 0.2951 | | P(T<=t), two-tailed test | 1.6766E-06 | |
| t critical value (two-tailed test) | 3.1824 | | t critical value (two-tailed test) | 2.1009 | |
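The paired t statistics in the table above can be cross-checked from its summary rows alone. The sketch below is a minimal illustration, not the authors' procedure. It assumes that the dispersion row holds sample variances, which is the reading under which the reported t values are reproduced. It uses the standard identity for the variance of paired differences, Var(B − A) = Var(B) + Var(A) − 2·r·sd(B)·sd(A). The function name `paired_t_from_summary` is hypothetical.

```python
import math


def paired_t_from_summary(mean_before, mean_after, var_before, var_after, r, n):
    """Recompute a paired-samples t statistic from summary statistics.

    Assumes var_before and var_after are sample variances and r is the
    Pearson correlation between the paired before/after scores.
    """
    # Variance of the paired differences, from the two variances and r.
    var_diff = var_before + var_after - 2.0 * r * math.sqrt(var_before * var_after)
    # Standard error of the mean difference over n pairs.
    std_err = math.sqrt(var_diff / n)
    # Sign convention follows the table: before minus after.
    return (mean_before - mean_after) / std_err


# Values copied from the table (decimal points instead of decimal commas).
t_control = paired_t_from_summary(5.62, 6.69, 6.8225, 5.2588, 0.7697, 4)
t_experimental = paired_t_from_summary(4.37, 7.11, 2.5190, 4.3867, 0.5951, 19)

print(f"control: t = {t_control:.3f} (df = 3)")
print(f"experimental: t = {t_experimental:.3f} (df = 18)")
```

Both recomputed values fall close to the reported −1.2654 and −6.9602. The small residual differences are expected because the means in the table are rounded to two decimals. Comparing |t| with the two-tailed critical values in the table (3.1824 and 2.1009) gives the same reading as the reported p-values: the change is not significant for the control group and is significant for the experimental group.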

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).