Submitted: 16 December 2022

Posted: 03 January 2023

Abstract

Keywords:

1. Introduction

2. Past Studies on Emotional Speech Synthesis

2.1. Emotional Speech Corpus

3. Emotional Speech Corpus Analysis

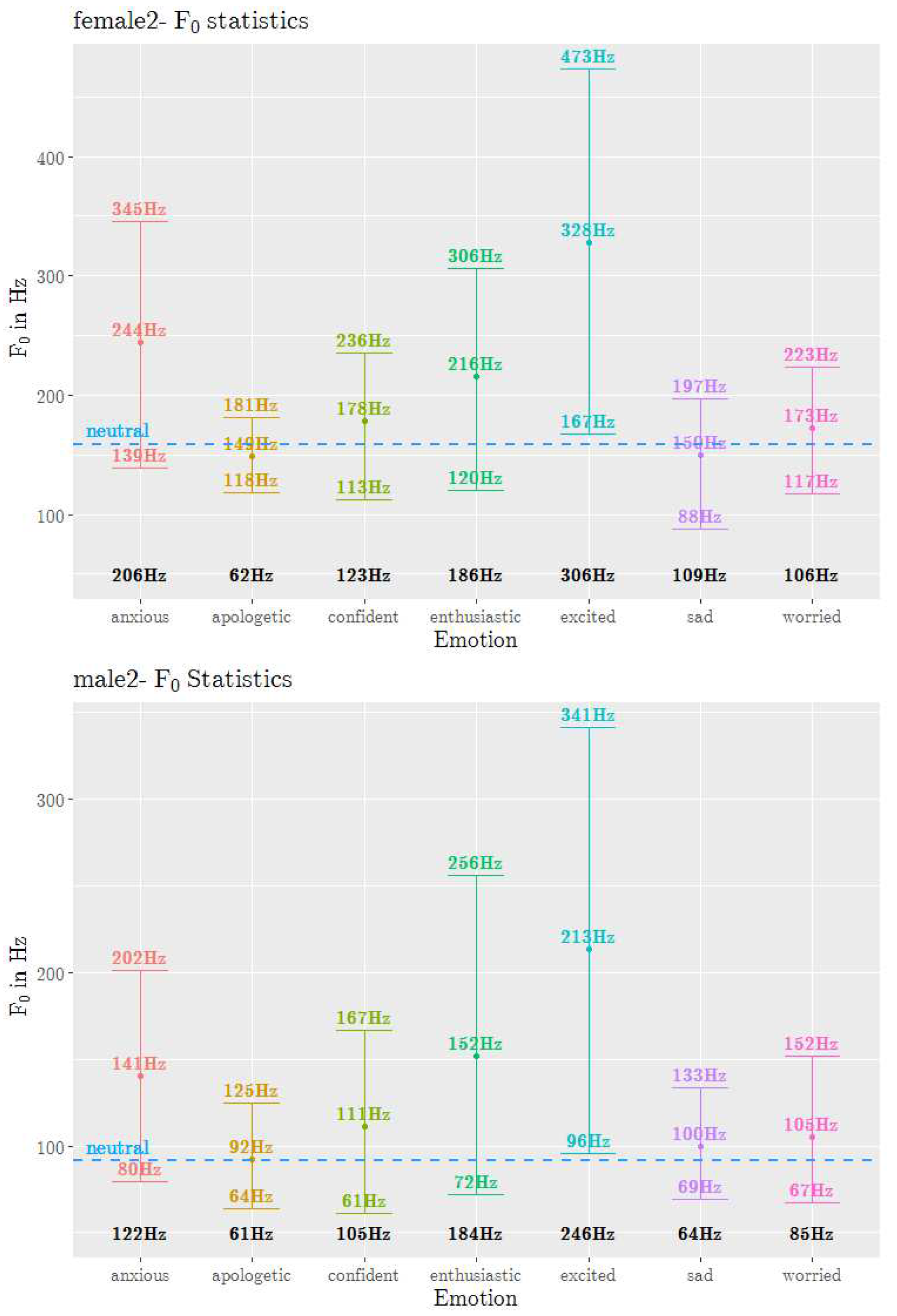

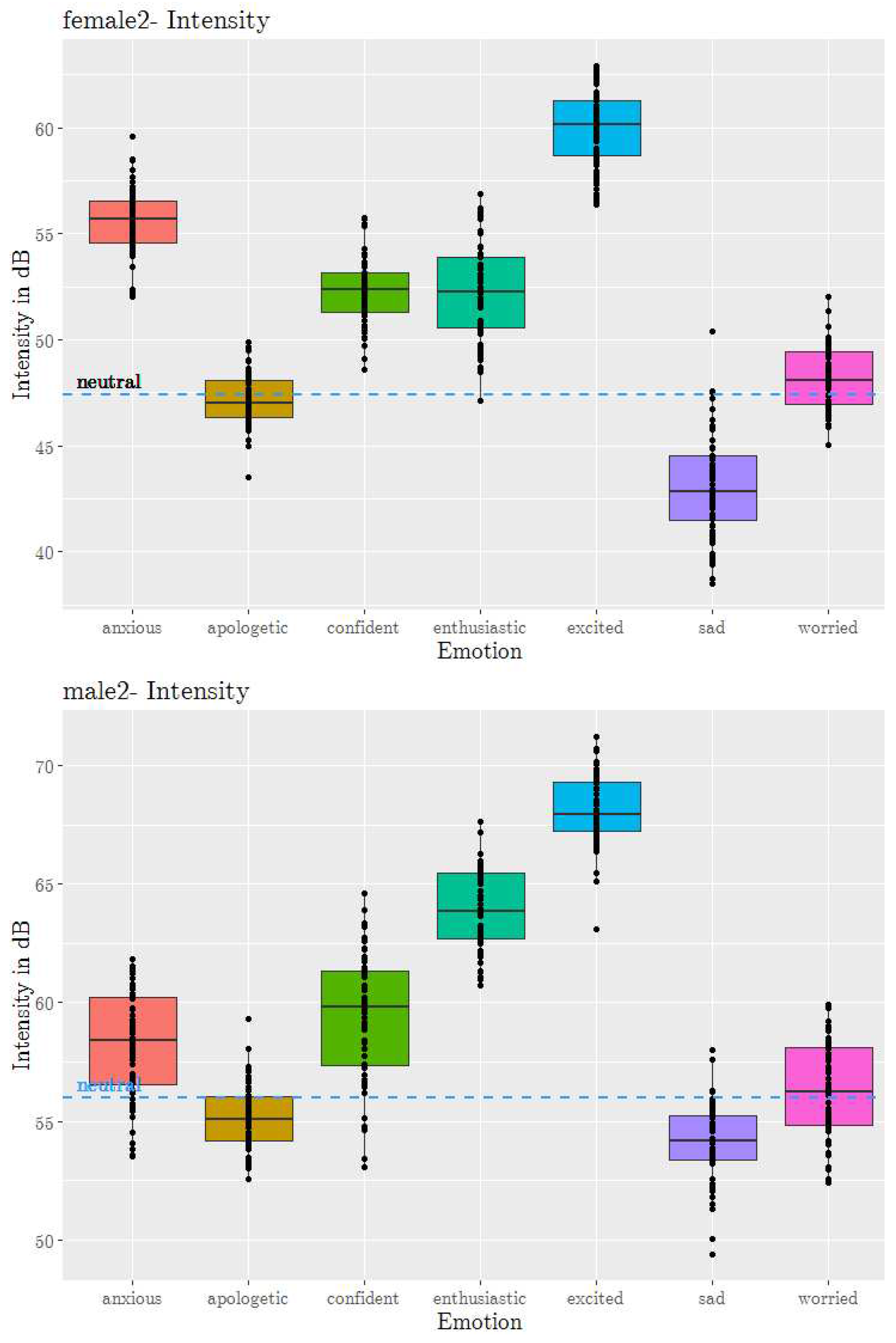

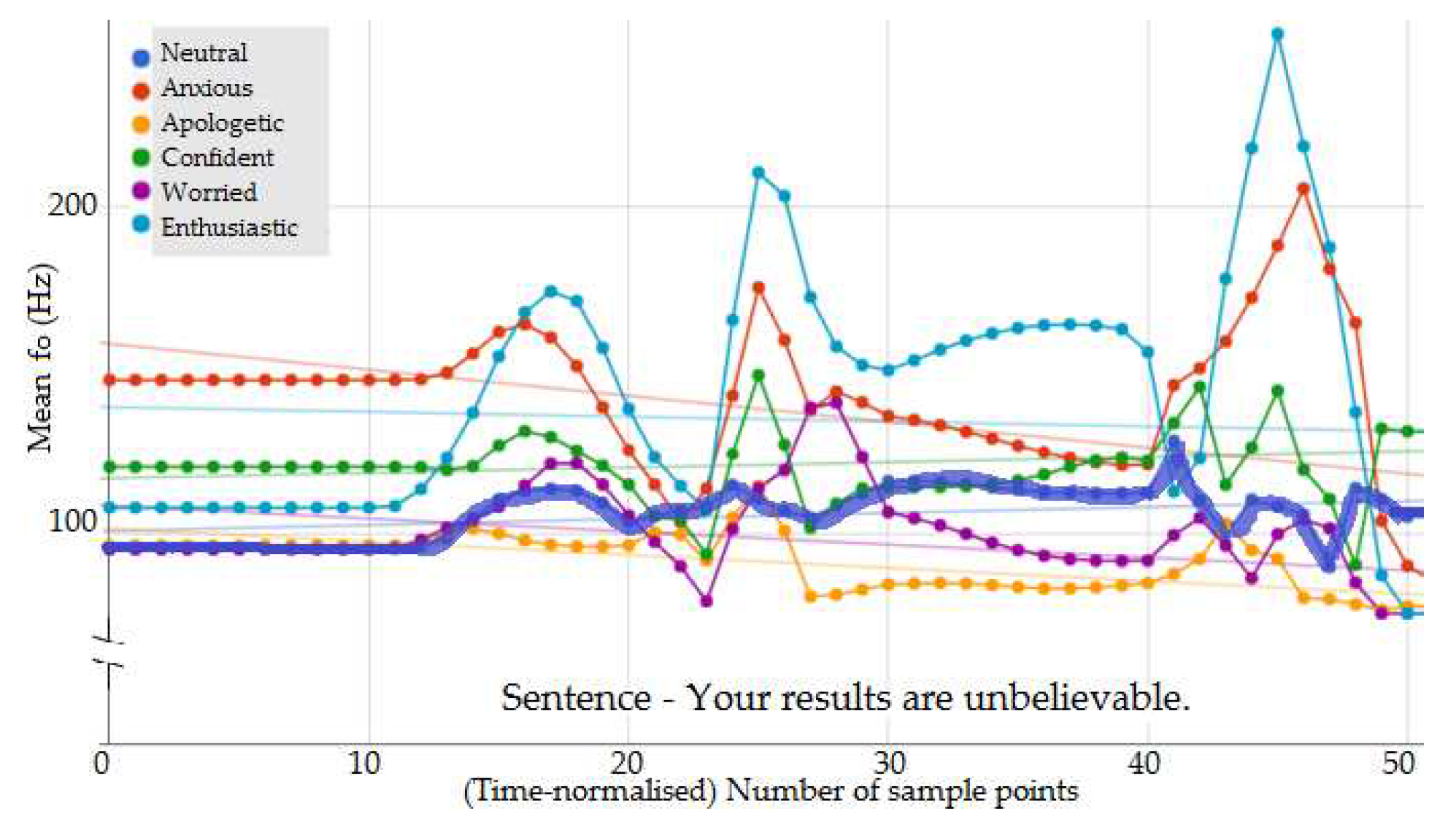

3.1. Prosody Feature Analysis

3.2. Contour Analysis

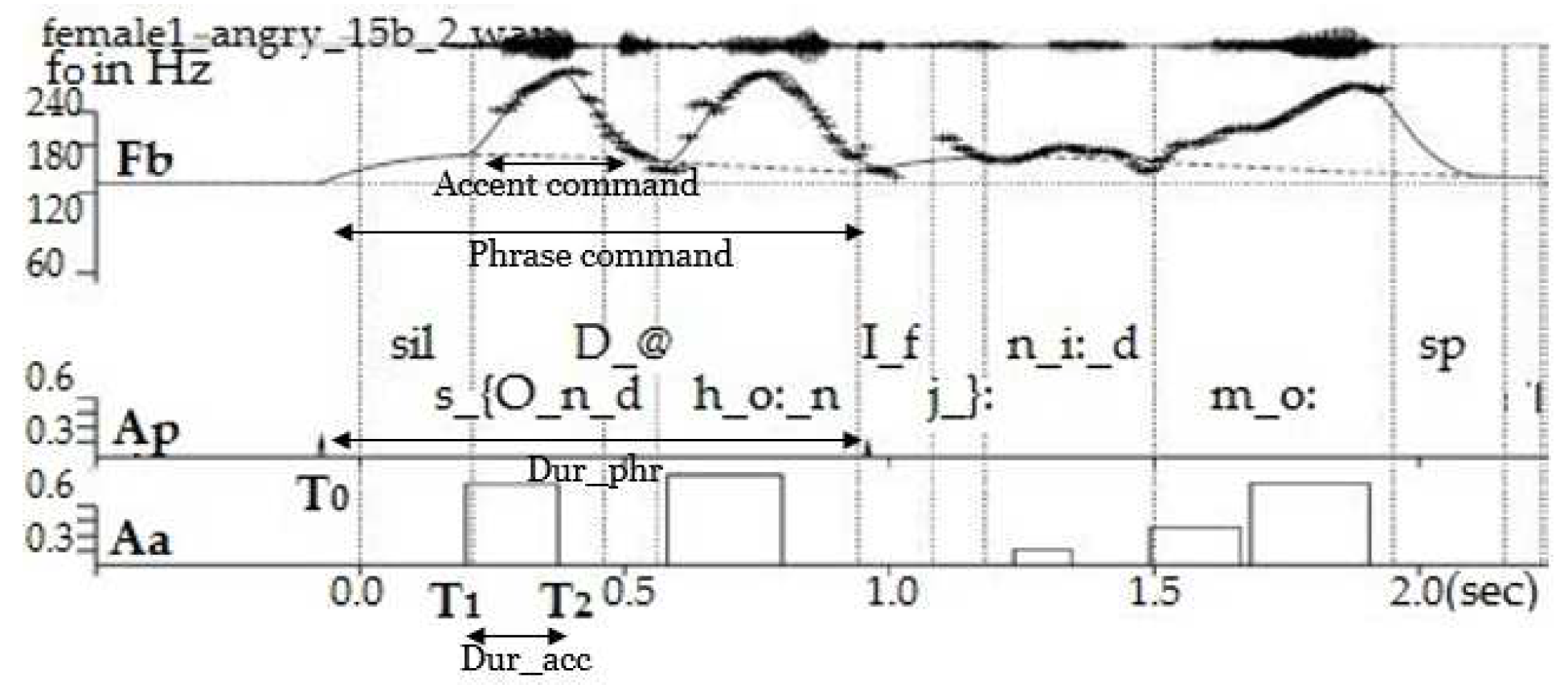

- Phrase command onset time ($T_0$): Onset time of the phrasal contour, typically before the segmental onset of the ensuing prosodic phrase. (Phrase command duration Dur_phr = End of phrase time - $T_0$)

- Phrase command amplitude ($A_p$): Magnitude of the phrase command that precedes each new prosodic phrase, quantifying the reset of the declining phrase component.

- Accent command amplitude ($A_a$): Amplitude of the accent command associated with every pitch accent.

- Accent command onset time ($T_1$) and offset time ($T_2$): The timing of the accent command can be related to the timing of the underlying segments. (Accent command duration Dur_acc = $T_2$ - $T_1$)
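To make the role of these parameters concrete, the following minimal Python sketch generates an F0 contour from phrase and accent commands using the standard textbook formulation of the Fujisaki model (a base frequency plus phrase and accent components summed in the log-F0 domain). The function names, the tuple layout of the commands, the base frequency `fb`, and the constants `alpha`, `beta` and `gamma` are illustrative assumptions set to commonly quoted defaults; they are not values taken from this study, and the command values in the usage lines are made up.

```python
import math

def phrase_response(t, alpha=2.0):
    """Phrase control impulse response: alpha^2 * t * exp(-alpha * t) for t >= 0, else 0."""
    return alpha ** 2 * t * math.exp(-alpha * t) if t >= 0.0 else 0.0

def accent_response(t, beta=20.0, gamma=0.9):
    """Accent control step response: min(1 - (1 + beta*t) * exp(-beta*t), gamma) for t >= 0, else 0."""
    if t < 0.0:
        return 0.0
    return min(1.0 - (1.0 + beta * t) * math.exp(-beta * t), gamma)

def fujisaki_f0(times, fb, phrase_cmds, accent_cmds, alpha=2.0, beta=20.0, gamma=0.9):
    """Return F0 in Hz at each time in seconds.

    phrase_cmds: list of (onset_time, amplitude) tuples
    accent_cmds: list of (onset_time, offset_time, amplitude) tuples
    """
    contour = []
    for t in times:
        log_f0 = math.log(fb)  # constant base-frequency component
        for t0, ap in phrase_cmds:
            log_f0 += ap * phrase_response(t - t0, alpha)
        for t1, t2, aa in accent_cmds:
            # An accent command is a box: step up at onset, step down at offset.
            log_f0 += aa * (accent_response(t - t1, beta, gamma)
                            - accent_response(t - t2, beta, gamma))
        contour.append(math.exp(log_f0))
    return contour

# Illustrative (made-up) commands: one phrase command and one accent command.
times = [i * 0.01 for i in range(201)]  # 0 to 2 s in 10 ms steps
f0 = fujisaki_f0(times, fb=100.0,
                 phrase_cmds=[(0.5, 0.4)],
                 accent_cmds=[(0.8, 1.1, 0.3)])
```

Before the first command onset the contour stays at the base frequency; each phrase command adds a slowly decaying rise, and each accent command adds a local bump whose width is the accent command duration.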

3.2.1. Fujisaki Parameterisation of Contour

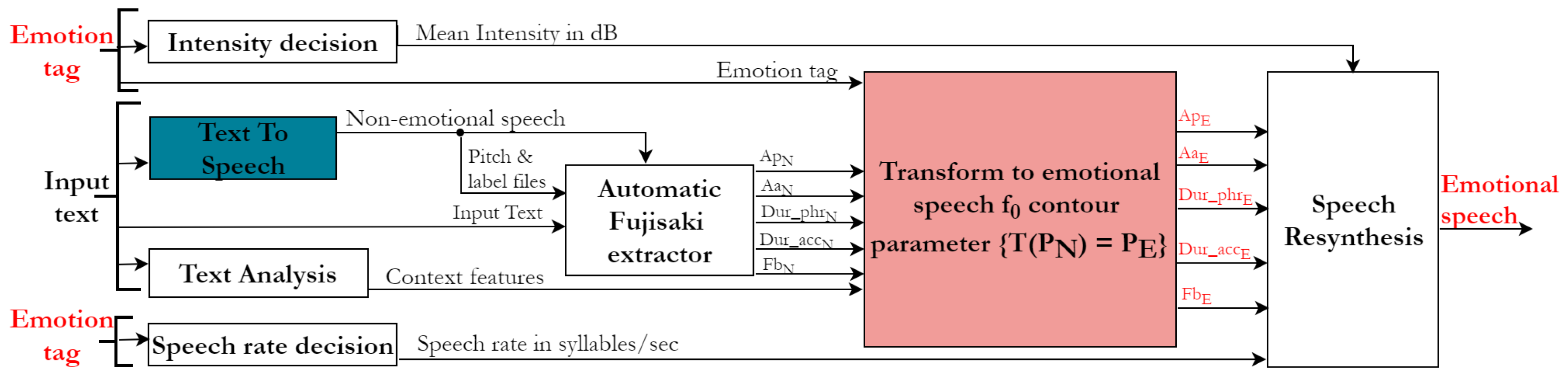

4. Emotional TTS Synthesis System Development

4.1. Text To Speech Module

4.2. Automatic Fujisaki Extractor

4.3. Transformation to Emotional Speech Parameters

4.3.1. Features for the Regression Model

4.3.2. Contour Transformation Model

4.3.3. Using the Transformation Model

4.4. Speech Rate and Mean Intensity Modelling

4.5. Resynthesis

5. Performance Analysis and Results

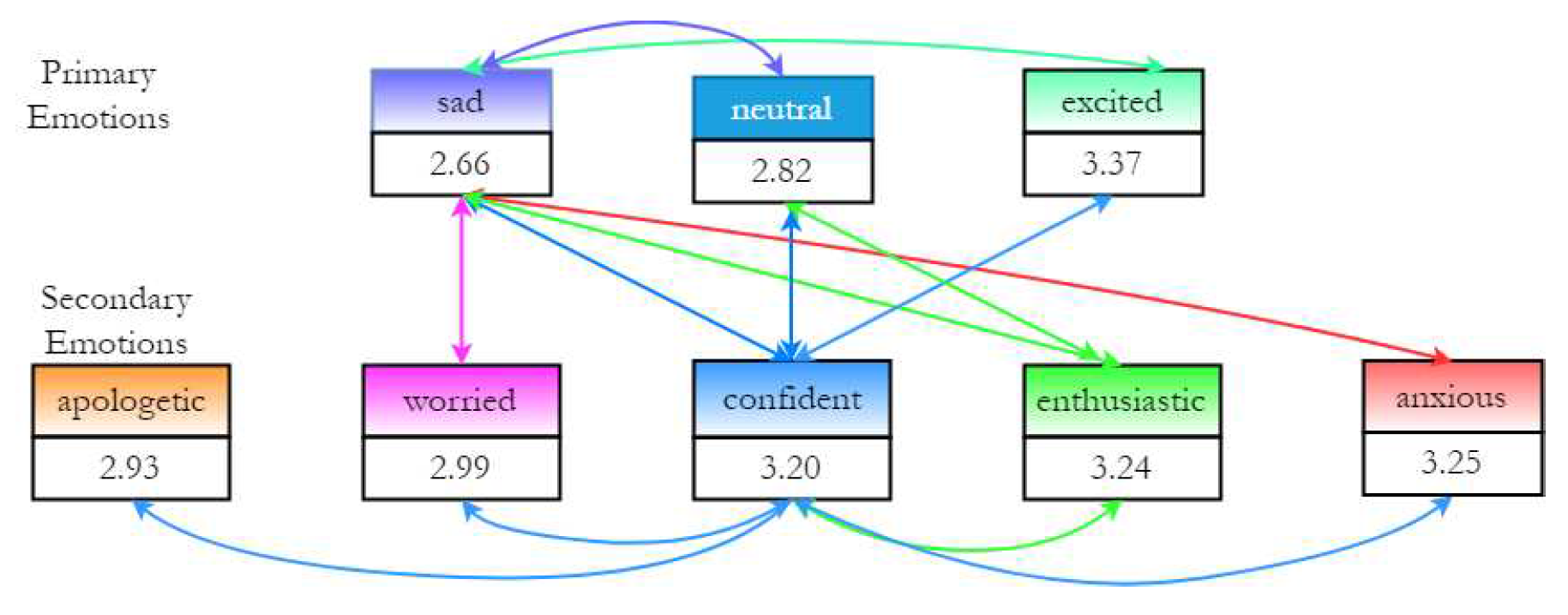

5.1. Task I - Pairwise Forced Response Test for Five Secondary Emotions

5.2. Task II - Free Response Test for Five Secondary Emotions

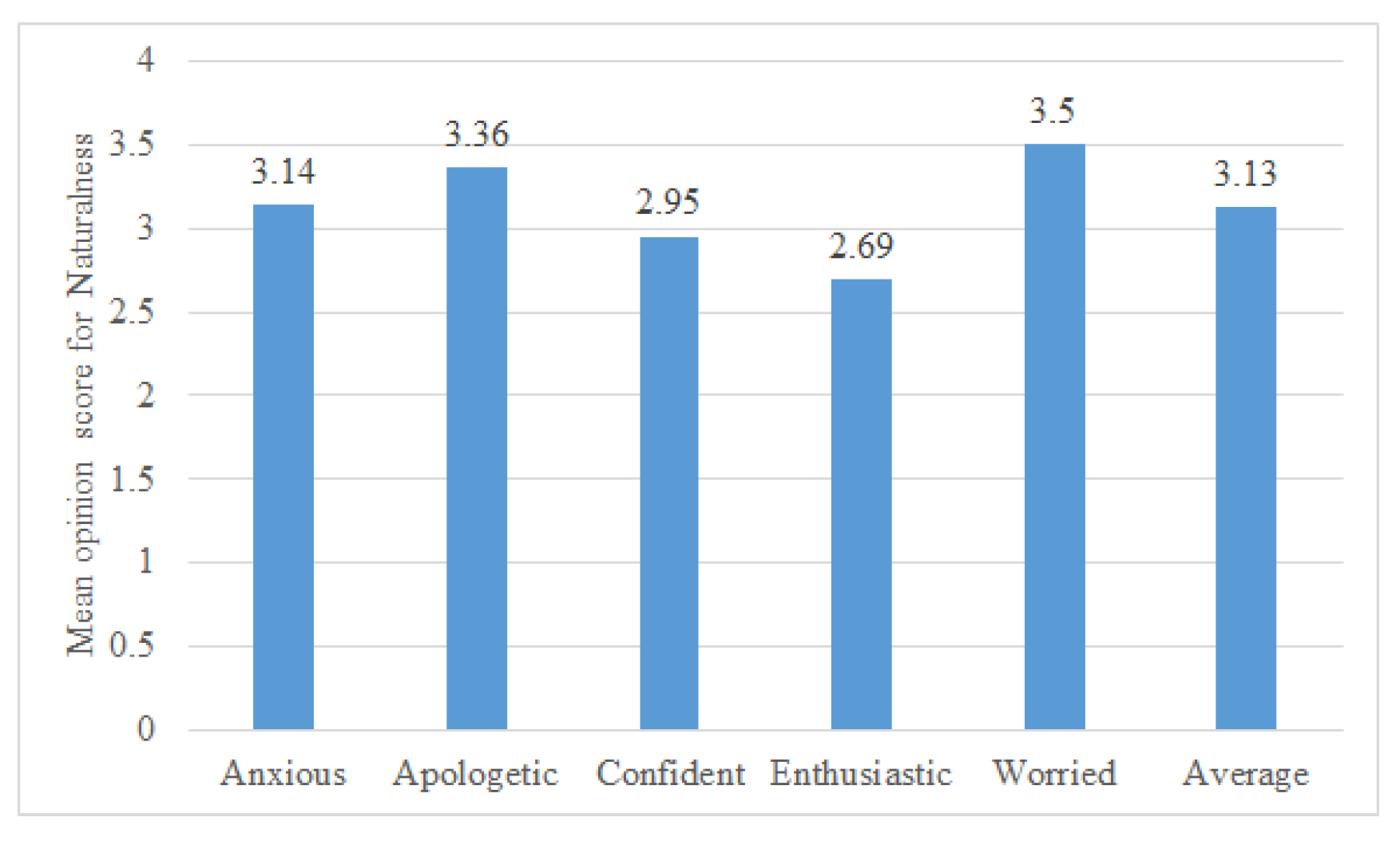

5.3. Task III - Naturalness Rating on Five-point Scale

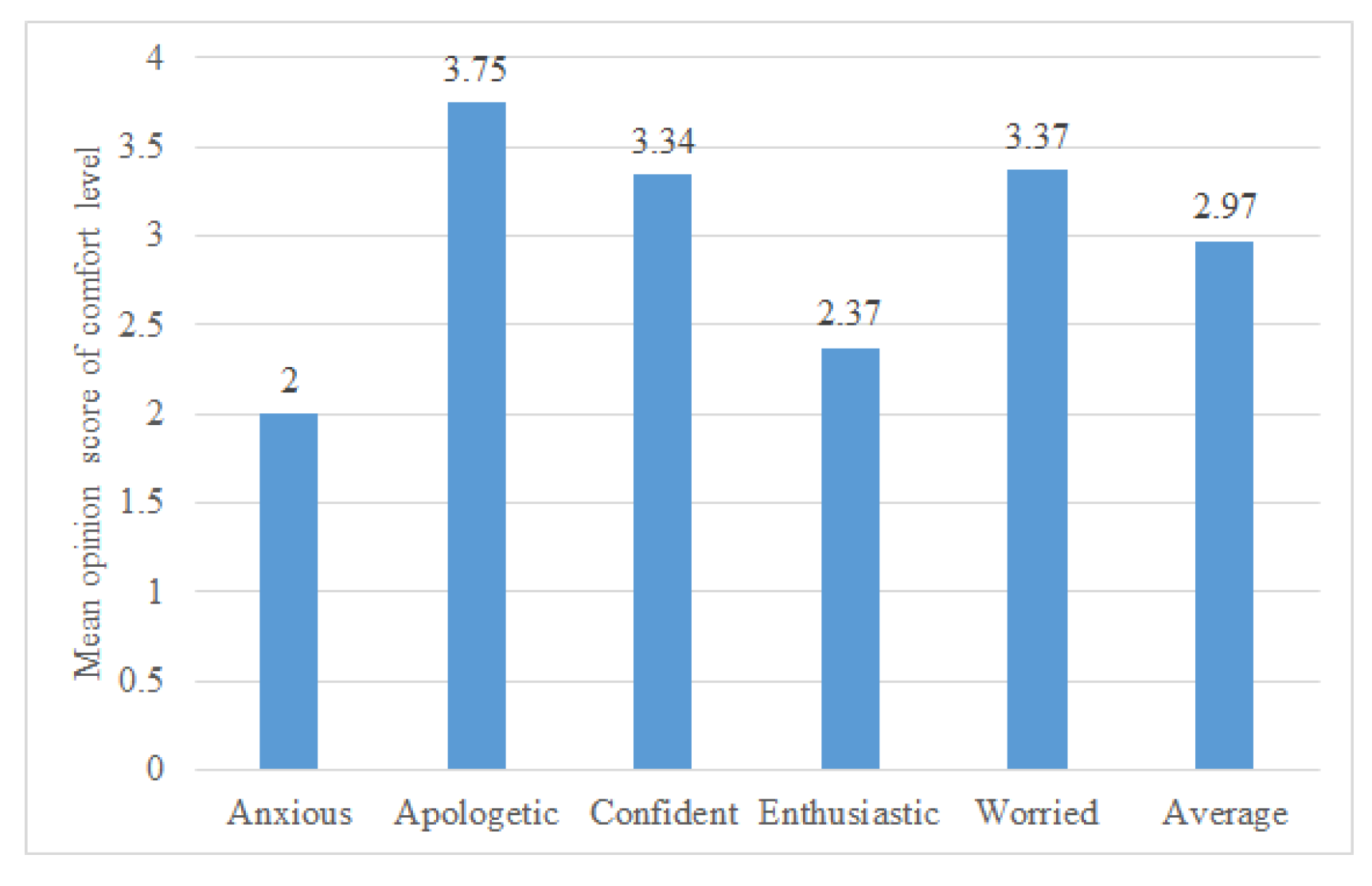

5.4. Task IV - Comfort Level Rating on Five-point Scale

6. Discussion

7. Conclusion

Author Contributions

Acknowledgments

References

- Eyssel, F.; Ruiter, L.D.; Kuchenbrandt, D.; Bobinger, S.; Hegel, F. ‘If you sound like me, you must be more human’: On the interplay of robot and user features on human-robot acceptance and anthropomorphism. Int. Conf. on Human-Robot Interaction, USA, 2012, pp. 125–126.

- James, J.; Watson, C.I.; MacDonald, B. Artificial empathy in social robots: An analysis of emotions in speech. IEEE Int. Symposium on Robot & Human Interactive Communication, China, 2018, pp. 632–637.

- James, J.; Balamurali, B.; Watson, C.I.; MacDonald, B. Empathetic Speech Synthesis and Testing for Healthcare Robots. International Journal of Social Robotics 2020, 1–19.

- Ekman, P. An argument for basic emotions. Cognition & Emotion 1992, 6, 169–200.

- Schröder, M. Emotional Speech Synthesis: A Review. Eurospeech, Scandinavia, 2001, pp. 561–564.

- Becker-Asano, C.; Wachsmuth, I. Affect Simulation with Primary and Secondary Emotions. Intelligent Virtual Agents. IVA 2008. LNCS. Springer, 2008, Vol. 5208, pp. 15–28.

- Damasio, A. Descartes’ Error: Emotion, Reason and the Human Brain; Avon Books, New York, 1994.

- James, J.; Watson, C.; Stoakes, H. Influence of Prosodic features and semantics on secondary emotion production and perception. Int. Congress of Phonetic Sciences, Australia, 2019, pp. 1779–1782.

- Murray, I.R.; Arnott, J.L. Implementation and testing of a system for producing emotion-by-rule in synthetic speech. Speech Communication 1995, 16, 369–390.

- Tao, J.; Kang, Y.; Li, A. Prosody conversion from neutral speech to emotional speech. IEEE Transactions on Audio, Speech, and Language Processing 2006, 14, 1145–1154.

- Skerry-Ryan, R.; Battenberg, E.; Xiao, Y.; Wang, Y.; Stanton, D.; Shor, J.; Weiss, R.J.; Clark, R.; Saurous, R.A. Towards end-to-end prosody transfer for expressive speech synthesis with Tacotron. Int. Conf. on Machine Learning, Sweden, 2018, pp. 4693–4702.

- An, S.; Ling, Z.; Dai, L. Emotional statistical parametric speech synthesis using LSTM-RNNs. APSIPA Conference, 2017, pp. 1613–1616.

- Vroomen, J.; Collier, R.; Mozziconacci, S. Duration and intonation in emotional speech. Third European Conference on Speech Communication and Technology, Germany, 1993.

- Masuko, T.; Kobayashi, T.; Miyanaga, K. A style control technique for HMM-based speech synthesis. International Conference on Spoken Language Processing, Korea, 2004.

- Pitrelli, J.F.; Bakis, R.; Eide, E.M.; Fernandez, R.; Hamza, W.; Picheny, M.A. The IBM expressive text-to-speech synthesis system for American English. IEEE Transactions on Audio, Speech, and Language Processing 2006, 14, 1099–1108.

- Yamagishi, J.; Kobayashi, T.; Tachibana, M.; Ogata, K.; Nakano, Y. Model adaptation approach to speech synthesis with diverse voices and styles. IEEE International Conference on Acoustics, Speech and Signal Processing, USA, 2007, pp. IV–1233.

- Barra-Chicote, R.; Yamagishi, J.; King, S.; Montero, J.M.; Macias-Guarasa, J. Analysis of statistical parametric and unit selection speech synthesis systems applied to emotional speech. Speech Communication 2010, 52, 394–404.

- Tits, N. A Methodology for Controlling the Emotional Expressiveness in Synthetic Speech - a Deep Learning approach. International Conference on Affective Computing and Intelligent Interaction, UK, 2019, pp. 1–5.

- Zhang, M.; Tao, J.; Jia, H.; Wang, X. Improving HMM based speech synthesis by reducing over-smoothing problems. Int. Symposium on Chinese Spoken Language Processing, 2008, pp. 1–4.

- Murray, I.; Arnott, J.L. Toward the simulation of emotion in synthetic speech: A review of the literature on human vocal emotion. Journal of the Acoustical Society of America 1993, 93(2), 1097–1108.

- Masuko, T.; Kobayashi, T.; Miyanaga, K. A style control technique for HMM-based speech synthesis. International Conference on Spoken Language Processing, Korea, 2004, pp. 1437–1440.

- Yamagishi, J.; Kobayashi, T.; Tachibana, M.; Ogata, K.; Nakano, Y. Model adaptation approach to speech synthesis with diverse voices and styles. International Conference on Acoustics, Speech and Signal Processing, USA, 2007, p. 1236.

- James, J.; Tian, L.; Watson, C. An open source emotional speech corpus for human robot interaction applications. Interspeech, India, 2018, pp. 2768–2772.

- Scherer, K. What are emotions? And how can they be measured? Social Science Information 2005, 44, 695–729.

- Paltoglou, G.; Thelwall, M. Seeing Stars of Valence and Arousal in Blog Posts. IEEE Trans. on Affective Computing 2013, 4(1), 116–123.

- Winkelmann, R.; Harrington, J.; Jänsch, K. EMU-SDMS: Advanced speech database management and analysis in R. Computer Speech & Language 2017, 45, 392–410.

- R Core Team. R: A Language and Environment for Statistical Computing, R Foundation for Statistical Computing, Vienna, Austria, 2017.

- Wickham, H. ggplot2: Elegant Graphics for Data Analysis; Springer New York, 2016.

- James, J.; Mixdorff, H.; Watson, C. Quantitative model-based analysis of F0 contours of emotional speech. International Congress of Phonetic Sciences, Australia, 2019, pp. 72–76.

- Hui, C.T.J.; Chin, T.J.; Watson, C. Automatic detection of speech truncation and speech rate. SST, New Zealand, 2014, pp. 150–153.

- Hirose, K.; Fujisaki, H.; Yamaguchi, M. Synthesis by rule of voice fundamental frequency contours of spoken Japanese from linguistic information. IEEE International Conference on Acoustics, Speech, and Signal Processing, 1984, Vol. 9, pp. 597–600.

- Nguyen, D.T.; Luong, M.C.; Vu, B.K.; Mixdorff, H.; Ngo, H.H. Fujisaki Model based f0 contours in Vietnamese TTS. Int. Conf. on Spoken Language Processing, Korea, 2004, pp. 1429–1432.

- Mixdorff, H.; Cossio-Mercado, C.; Hönemann, A.; Gurlekian, J.; Evin, D.; Torres, H. Acoustic correlates of perceived syllable prominence in German. Annual Conference of the International Speech Communication Association, Germany, 2015, pp. 51–55.

- Gu, W.; Lee, T. Quantitative analysis of f0 contours of emotional speech of Mandarin. ISCA Speech Synth. Wksp., 2007, pp. 228–233.

- Amir, N.; Mixdorff, H.; Amir, O.; Rochman, D.; Diamond, G.M.; Pfitzinger, H.R.; Levi-Isserlish, T.; Abramson, S. Unresolved anger: Prosodic analysis and classification of speech from a therapeutic setting. Speech Prosody, USA, 2010, p. 824.

- Mixdorff, H.; Cossio-Mercado, C.; Hönemann, A.; Gurlekian, J.; Evin, D.; Torres, H. Acoustic correlates of perceived syllable prominence in German. Annual Conference of the International Speech Communication Association, Germany, 2015, pp. 51–55.

- Boersma, P.; Weenink, D. Praat: Doing phonetics by computer [Computer program]. Version 6.0.46, 2019.

- Mixdorff, H. A novel approach to the fully automatic extraction of Fujisaki model parameters. IEEE Int. Conf. on Acoustics, Speech, and Signal Processing, Turkey, 2000, pp. 1281–1284.

- Mixdorff, H.; Fujisaki, H. A quantitative description of German prosody offering symbolic labels as a by-product. International Conference on Spoken Language Processing, China, 2000, pp. 98–101.

- Watson, C.I.; Marchi, A. Resources created for building New Zealand English voices. Australasian International Conference of Speech Science and Technology, New Zealand, 2014, pp. 92–95.

- Jain, S. Towards the Creation of Customised Synthetic Voices using Hidden Markov Models on a Healthcare Robot. Master’s thesis, The University of Auckland, New Zealand, 2015.

- Schröder, M.; Trouvain, J. The German text-to-speech synthesis system MARY: A tool for research, development and teaching. International Journal of Speech Technology 2003, 6, 365–377.

- Boersma, P. Accurate short-term analysis of the fundamental frequency and the harmonics-to-noise ratio of a sampled sound. Institute of Phonetic Sciences 1993, 17, 97–110.

- Kisler, T.; Schiel, F.; Sloetjes, H. Signal processing via web services: the use case WebMAUS. Digital Humanities Conf., 2012, pp. 30–34.

- Liaw, A.; Wiener, M. Classification and Regression by Random Forest. R News 2002, 2(3), 18–22.

- Freund, Y.; Schapire, R.E. Experiments with a new boosting algorithm. Int. Conf. on Machine Learning, Italy, 1996, pp. 148–156.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; Vanderplas, J.; Passos, A.; Cournapeau, D.; Brucher, M.; Perrot, M.; Duchesnay, E. Scikit-learn: Machine Learning in Python. Journal of Machine Learning Research 2011, 12, 2825–2830.

- Mendes-Moreira, J.; Soares, C.; Jorge, A.M.; Sousa, J.F.D. Ensemble approaches for regression: A survey. ACM Computing Surveys (CSUR) 2012, 45, 1–40.

- Eide, E.; Aaron, A.; Bakis, R.; Hamza, W.; Picheny, M.; Pitrelli, J. A corpus-based approach to expressive speech synthesis. ISCA ITRW on speech synthesis, USA, 2004, pp. 79–84.

- Ming, H.; Huang, D.Y.; Dong, M.; Li, H.; Xie, L.; Zhang, S. Fundamental Frequency Modeling Using Wavelets for Emotional Voice Conversion. International Conference on Affective Computing and Intelligent Interaction, China, 2015, pp. 804–809.

- Lu, X.; Pan, T. Research On Prosody Conversion of Affective Speech Based on LIBSVM and PAD Three Dimensional Emotion Model. Wksp. on Advanced Research & Tech. in Industry Applications, 2016, pp. 1–7.

- Robinson, C.; Obin, N.; Roebel, A. Sequence-To-Sequence Modelling of F0 for Speech Emotion Conversion. International Conference on Acoustics, Speech, and Signal Processing, UK, 2019, pp. 6830–6834.

- Viswanathan, M.; Viswanathan, M. Measuring speech quality for text-to-speech systems: development and assessment of a modified mean opinion score (MOS) scale. Computer Speech & Language 2005, 19, 55–83.

| 1 | Primary emotions are innate and support fast, reactive responses, e.g., anger, happiness, sadness. Six basic emotions were defined by Ekman [4] based on cross-cultural studies, and these basic emotions were found to be expressed similarly across cultures. The terms ‘primary’ and ‘basic’ emotions are used in the literature with no clear distinction between them. In this study, primary emotions are defined as above, in alignment with studies in emotional speech synthesis [5]. Secondary emotions are assumed to arise from higher cognitive processes based on evaluating preferences over outcomes and expectations, e.g., relief, hope [6]. This distinction between the two emotion classes is based on neurobiological research by Damasio [7]. |

| 2 | The ‘base’ speech synthesis system is the synthesis system that is built initially and has no emotional modelling. The emotion-based modelling is built on top of this ‘base’ speech synthesis system. |

| 3 | Deep Neural Network |

| 4 | The term ‘good quality’ here refers to recordings made in recording studio environments with controlled noise levels. |

| 5 | Neutral in this context refers to speech without any emotions. |

| 6 | Medium in comparison with the databases needed for the deep-learning approaches explained in the next paragraph. |

| 7 | |

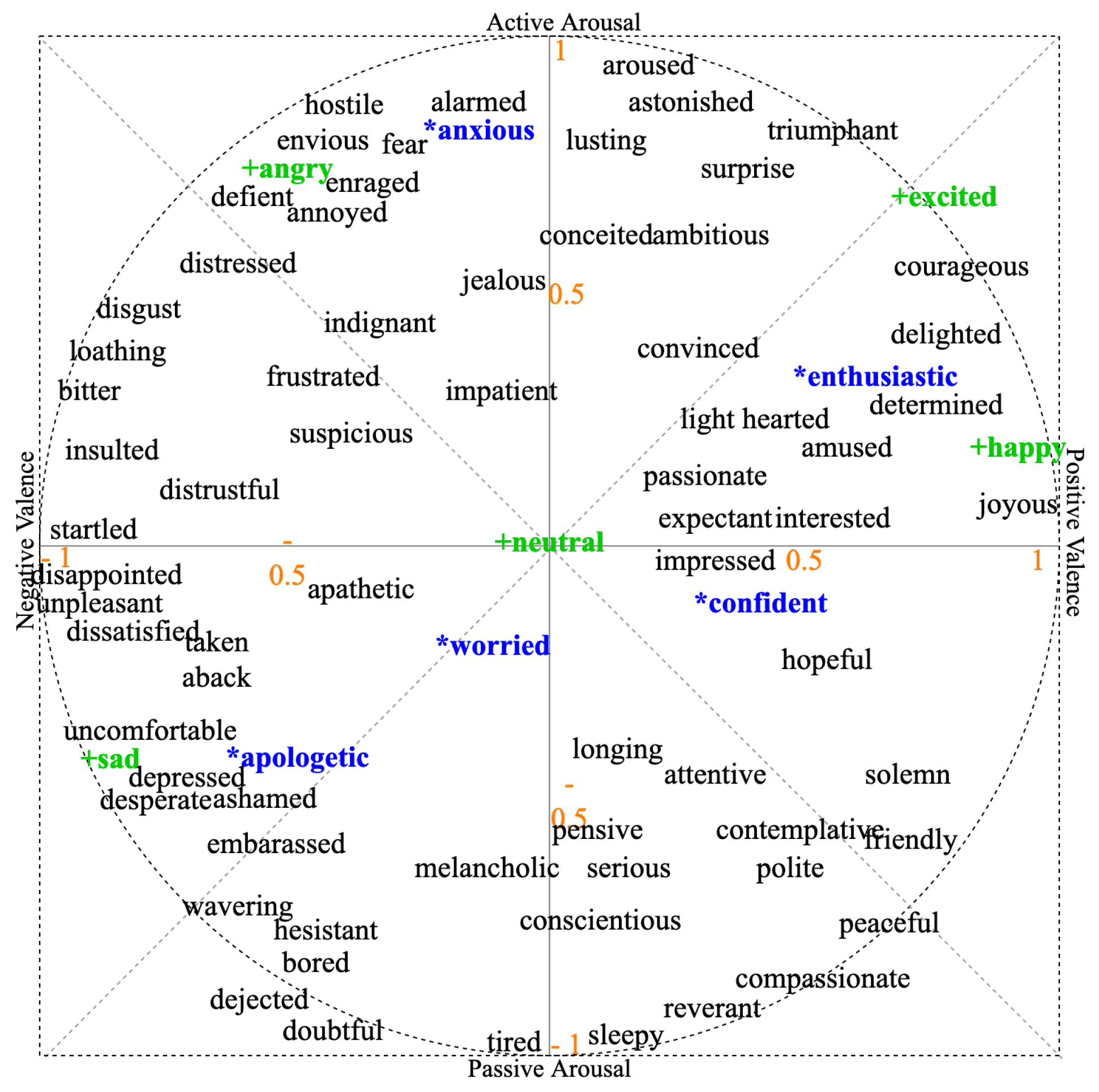

| 8 | Valence indicates the pleasantness of the voice, ranging from unpleasant (e.g., sad, fear) to pleasant (e.g., happy, hopeful). Arousal specifies the reaction level to stimuli, ranging from inactive (e.g., sleepy, sad) to active (e.g., anger, surprise). Russell developed this model in a psychology study in which Canadian participants categorised English stimulus words portraying moods, feelings, affect or emotions. Later, 80 more emotion words were superimposed on Russell’s model based on studies in German [24]. The circumplex model diagram used in this study (Figure 1) is adapted from [25], which was in turn adapted from Russell’s and Scherer’s work; here, positive valence is depicted on the right side of the x-axis (in Scherer’s study it was on the left side). A two-dimensional model is used, rather than a higher-dimensional one, because representing the emotions on a plane facilitates their visualisation. |

| 9 | Both male and female speakers’ sentences were used for the initial analysis. However, only the male speaker’s sentences were used to develop the emotion-based contour model, because it is this male speaker’s voice that will be synthesised. |

| 10 | This study was approved by the University of Auckland Human Participants Ethics Committee on 24/07/2019 for three years. Ref. No. 023353. |

| Speech synthesis method | Emotional speech synthesis method | Approach | Resources needed | Naturalness | Emotions modelled |

|---|---|---|---|---|---|

| 1993 [13] Diphone synthesis | Rule-based | Emotion rules applied on speech synthesis systems | All possible diphones in a language have to be recorded for neutral TTS 1. E.g. 2431 diphones in British English. An emotional speech database (312 sentences) to frame rules is needed | Average 2 | neutral, joy, boredom, anger, sadness, fear, indignation |

| 1995 [9] Formant synthesis | Rule-based | Rules framed for prosody features such as pitch, duration, voice quality features | DECtalk synthesiser used containing approximately 160000 lines of C code. Emotion rules framed from past research | Average | anger, happiness, sadness, fear, disgust and grief |

| 2004 [14] Parametric speech synthesis | Style control vector | Style control vector associated with the target style transforms the mean vectors of the neutral HMM models | 504 phonetically balanced sentences for average voice, and at least 10 sentences of each of the styles | Good | Three styles: Rough, Joyful, sad |

| 2006 [10] Recorded neutral speech used as it is | Rule-based | GMM 3 and CART 4 based models for $F_0$ and duration | Corpus with 1500 sentences | Average | neutral, happiness, sadness, fear, anger |

| 2006 [15] Parametric speech synthesis | Corpus-based | Decision trees determine contours & timing trained from the database | 11 hours (excluding silence) of neutral sentences + 1 hour emotional speech | Good 5 | Conveying bad news, yes-no questions |

| 2006 [15] Parametric speech synthesis | Prosodic phonology approach | ToBI 6 based modelling | 11 hours (excluding silence) of neutral sentences + 1 hour emotional speech | Good | Conveying bad news, yes-no questions |

| 2007 [16] Parametric speech synthesis | Model adaptation on average voice | Acoustic features Mel-cepstrum & log $F_0$ were adapted | 503 phonetically balanced sentences for average voice, and at least 10 sentences of a particular style | Good | Speaking styles of speakers in the database |

| 2010 [17] Neutral voice not created | HMM-based parametric speech synthesis | Each emotion’s database was used to train emotional voice. | Spanish expressive voices corpus - 100 mins per emotion | Good | happiness, sadness, anger, surprise, fear, disgust |

| 2017 [12] Parametric speech synthesis using recurrent neural networks with long short-term memory units | Emotion-dependent modelling and unified modelling with emotion codes | Emotion code vector is input to all model layers to indicate the emotion characteristics | 5.5 hours emotional speech data + speaker independent model from 100 hours speech data | Reported to be better than HMM-based synthesis | neutral, happiness, anger, and sadness |

| 2018 [11] Tacotron-based end-to-end synthesis using DNN 3 | Prosody transfer | Tacotron model learning a latent embedding space of prosody derived from a reference acoustic representation containing the desired prosody | English dataset of audiobook recordings - 147 hours | Reported to be better than HMM-based synthesis | Speaking styles of speakers in the database |

| 2019 [18] Deep Convolutional TTS | Emotion adaptation | Transfer learning from neutral TTS to emotional TTS | Large dataset (24 hours) neutral speech + 7000 emotional speech sentences (5 emotions) | Reported to be better than HMM-based synthesis | anger, happiness, sadness, neutral |

| Feature | Description | Extraction method |

|---|---|---|

| Linguistic context features | Count = 102, e.g., accented/unaccented, vowel/consonant | Text analysis at the phonetic level using MaryTTS. |

| Non-emotional contour Fujisaki model parameters | Five Fujisaki parameters - $A_p$, $T_0$, $A_a$, $T_1$, $T_2$ | Passing non-emotional speech to AutoFuji extractor. |

| Emotion tag | Five primary & five secondary emotions | Each emotion tag is assigned to the sentence |

| Speaker tag | Two male speakers | Speaker tag is assigned |

| Secondary emotion | Mean speech rate (syllables/sec) | Mean intensity (dB) |

|---|---|---|

| anxious | 3.25 | 58.24 |

| apologetic | 2.93 | 55.14 |

| confident | 3.20 | 59.50 |

| enthusiastic | 3.24 | 63.91 |

| worried | 2.99 | 56.34 |
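As a rough illustration of how such per-emotion means could be turned into resynthesis settings, the sketch below derives a duration scaling factor and an intensity gain for a target emotion relative to a baseline utterance. This is one plausible mapping and not necessarily the procedure used in this study: the function name `prosody_scaling` and the baseline values in the usage line are hypothetical, while the per-emotion means are copied from the table above.

```python
# Mean speech rate (syllables/sec) and mean intensity (dB) per secondary emotion,
# copied from the table above.
TARGETS = {
    "anxious": (3.25, 58.24),
    "apologetic": (2.93, 55.14),
    "confident": (3.20, 59.50),
    "enthusiastic": (3.24, 63.91),
    "worried": (2.99, 56.34),
}

def prosody_scaling(emotion, base_rate, base_intensity_db):
    """Return (duration_factor, gain_db, amplitude_factor) for a target emotion.

    base_rate and base_intensity_db describe the utterance to be modified;
    they are inputs assumed to be measured elsewhere (hypothetical here).
    """
    target_rate, target_db = TARGETS[emotion]
    # A faster target rate means shorter durations, hence the inverse ratio.
    duration_factor = base_rate / target_rate
    gain_db = target_db - base_intensity_db
    # A level change in dB maps to a linear amplitude factor of 10^(dB/20).
    amplitude_factor = 10.0 ** (gain_db / 20.0)
    return duration_factor, gain_db, amplitude_factor

# Hypothetical baseline utterance: 3.0 syllables/sec at 57.91 dB.
duration_factor, gain_db, amplitude_factor = prosody_scaling("enthusiastic", 3.0, 57.91)
```

With this made-up baseline, the enthusiastic target calls for slightly shorter durations and a gain of about 6 dB, which roughly doubles the waveform amplitude.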

| | APO | ANX | | APO | ENTH |

|---|---|---|---|---|---|

| APO | 97.9% | 2.1% | APO | 100% | 0% |

| ANX | 0% | 100% | ENTH | 1.4% | 98.6% |

| | CONF | ANX | | APO | WOR |

|---|---|---|---|---|---|

| CONF | 88.3% | 11.7% | APO | 64.3% | 35.2% |

| ANX | 12.4% | 87.6% | WOR | 32.4% | 67.6% |

| | ENTH | ANX | | CONF | ENTH |

|---|---|---|---|---|---|

| ENTH | 78.6% | 21.4% | CONF | 69% | 31% |

| ANX | 24.8% | 75.2% | ENTH | 30.3% | 69.7% |

| | WOR | ANX | | CONF | WOR |

|---|---|---|---|---|---|

| WOR | 97.9% | 2.1% | CONF | 95.2% | 4.8% |

| ANX | 4.19% | 95.9% | WOR | 22.8% | 77.2% |

| | APO | CONF | | WOR | ENTH |

|---|---|---|---|---|---|

| APO | 94.5% | 5.5% | WOR | 97.9% | 2.1% |

| CONF | 9.7% | 90.3% | ENTH | 0.7% | 99.3% |
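The pairwise matrices above can be condensed into one figure per emotion pair. The sketch below averages the two diagonal entries of each matrix and ranks the pairs by that mean. It assumes that the rows give the presented emotion and the columns the response, so that the diagonal entries are correct identifications; that reading of the layout is an assumption, and the names `PAIRWISE_CORRECT` and `mean_accuracy` are illustrative. The percentages are copied from the tables.

```python
# Diagonal (assumed correct-identification) percentages for each emotion pair,
# copied from the pairwise matrices above.
PAIRWISE_CORRECT = {
    ("APO", "ANX"): (97.9, 100.0),
    ("APO", "ENTH"): (100.0, 98.6),
    ("CONF", "ANX"): (88.3, 87.6),
    ("APO", "WOR"): (64.3, 67.6),
    ("ENTH", "ANX"): (78.6, 75.2),
    ("CONF", "ENTH"): (69.0, 69.7),
    ("WOR", "ANX"): (97.9, 95.9),
    ("CONF", "WOR"): (95.2, 77.2),
    ("APO", "CONF"): (94.5, 90.3),
    ("WOR", "ENTH"): (97.9, 99.3),
}

# Mean of the two diagonal entries for each pair.
mean_accuracy = {
    pair: sum(correct) / len(correct)
    for pair, correct in PAIRWISE_CORRECT.items()
}

# Pairs ordered from most to least confusable.
ranked = sorted(mean_accuracy, key=mean_accuracy.get)
hardest_pair = ranked[0]
easiest_pair = ranked[-1]

for pair in ranked:
    print(f"{pair[0]} vs {pair[1]}: {mean_accuracy[pair]:.2f}%")
```

Under this reading, the apologetic/worried and confident/enthusiastic pairs have the lowest mean accuracy, which is consistent with the confusions listed in the free response table.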

| Actual emotions | Emotion words by participants (count of times used) |

|---|---|

| Anxious | Anxious (41), Enthusiastic (9), Neutral (4), Confident (3), Energetic (1) |

| Apologetic | Apologetic (35), Worried (22), Worried/Sad (1) |

| Confident | Confident (34), Enthusiastic (9), Worried (8), Neutral (5), Authoritative (1), Demanding (1) |

| Enthusiastic | Confident (24), Enthusiastic (21), Neutral (4), Apologetic (3), Worried (5), Encouraging (1) |

| Worried | Worried (38), Apologetic (12), Anxious (5), Condescending (1), Confident (1), Neutral (1) |
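To read the free response counts as recognition rates, the sketch below divides the number of responses that match the actual emotion by the total number of responses for that emotion, and also reports the most frequent label. The counts are copied from the table above; treating only an exact match of the emotion word as correct (so that, for example, "Worried/Sad" counts as a different label) is an assumption of this sketch, and the names `FREE_RESPONSES` and `recognition_rate` are illustrative.

```python
# Response counts per actual emotion, copied from the table above.
FREE_RESPONSES = {
    "Anxious": {"Anxious": 41, "Enthusiastic": 9, "Neutral": 4,
                "Confident": 3, "Energetic": 1},
    "Apologetic": {"Apologetic": 35, "Worried": 22, "Worried/Sad": 1},
    "Confident": {"Confident": 34, "Enthusiastic": 9, "Worried": 8,
                  "Neutral": 5, "Authoritative": 1, "Demanding": 1},
    "Enthusiastic": {"Confident": 24, "Enthusiastic": 21, "Neutral": 4,
                     "Apologetic": 3, "Worried": 5, "Encouraging": 1},
    "Worried": {"Worried": 38, "Apologetic": 12, "Anxious": 5,
                "Condescending": 1, "Confident": 1, "Neutral": 1},
}

def recognition_rate(actual):
    """Fraction of responses whose label exactly matches the actual emotion."""
    counts = FREE_RESPONSES[actual]
    return counts.get(actual, 0) / sum(counts.values())

def most_frequent_label(actual):
    """Label that participants used most often for the given actual emotion."""
    counts = FREE_RESPONSES[actual]
    return max(counts, key=counts.get)

for emotion in FREE_RESPONSES:
    print(f"{emotion}: {recognition_rate(emotion):.1%} correct, "
          f"most frequent label: {most_frequent_label(emotion)}")
```

Every row sums to the same number of responses, so the rates are directly comparable across emotions; enthusiastic is the only emotion whose most frequent label is not the intended one.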

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).