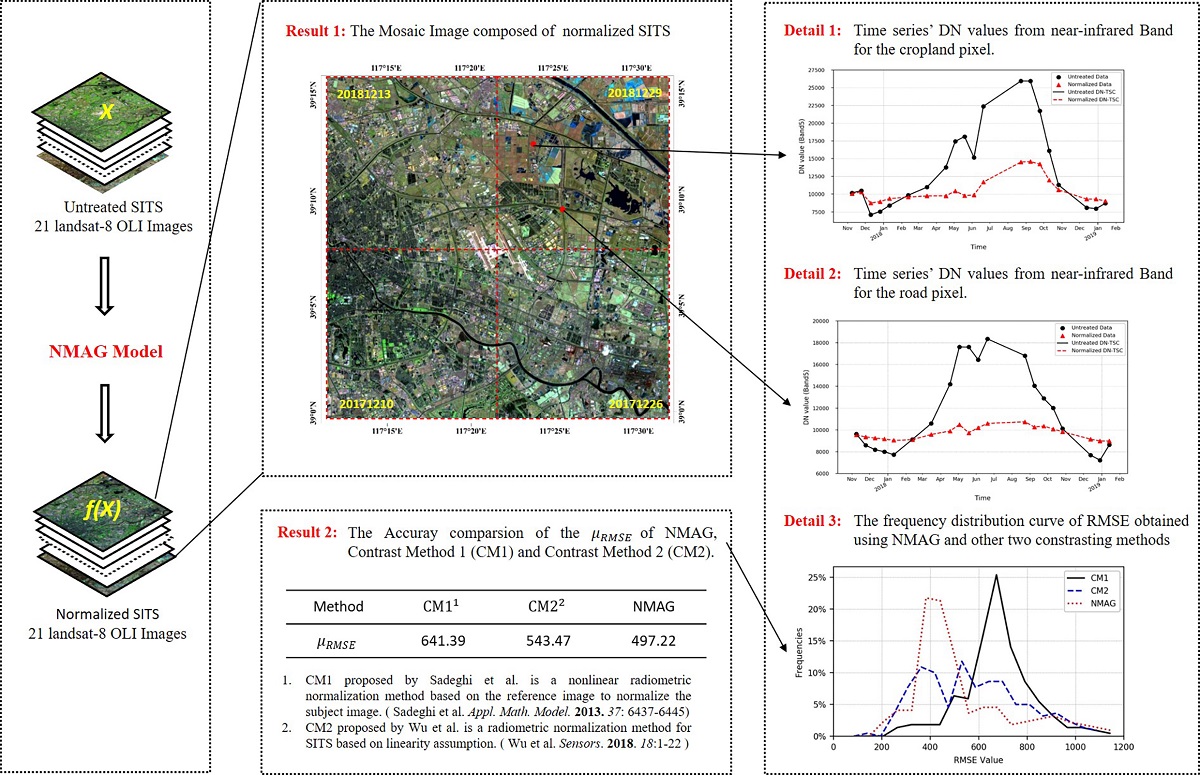

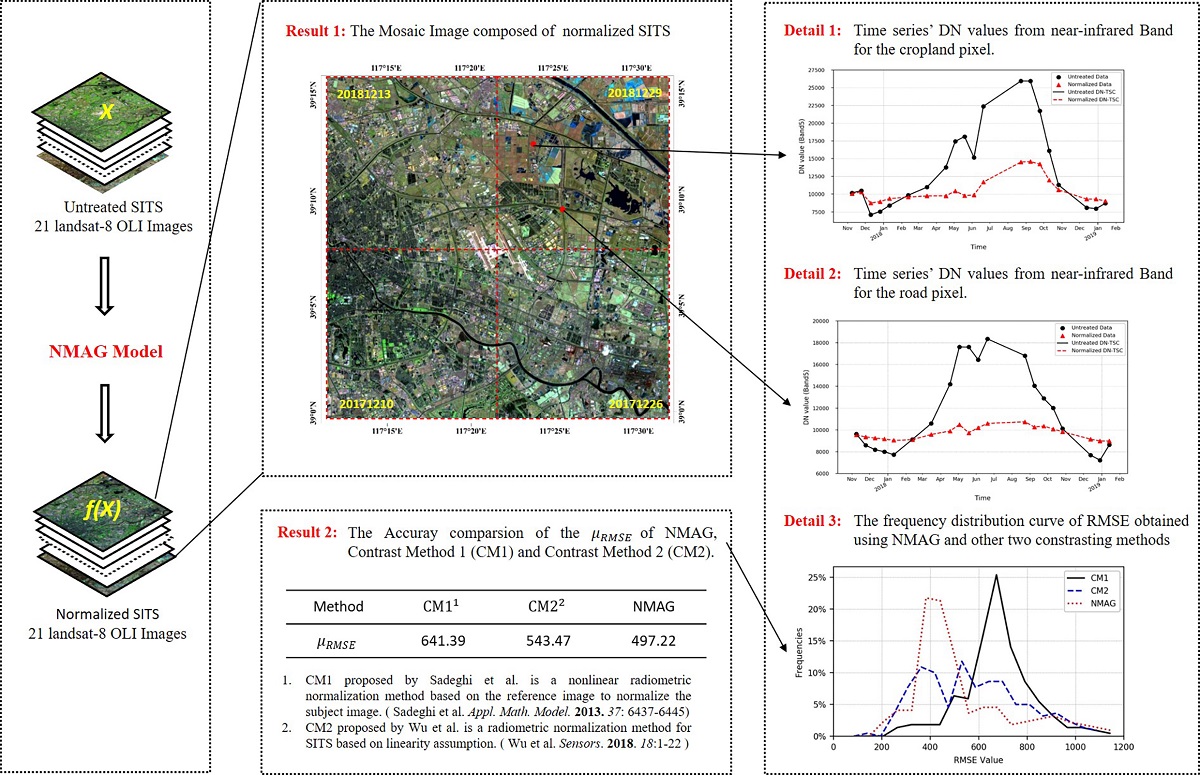

A Satellite Image Time Series (SITS) is a data set of satellite images acquired at a high rate over several years. Radiometric normalization is a fundamental preprocessing step for remote sensing applications that use SITS, because noise introduces radiometric distortion between images. Traditional radiometric normalization methods for multi-temporal imagery (usually two or three scenes from different time phases) normalize a subject image against a reference image. However, these methods are not suitable for calibrating SITS because they cannot minimize the radiometric distortion between every pair of images in the series. The existing relative radiometric normalization methods for SITS rely on linear assumptions and therefore cannot effectively reduce the nonlinear radiometric distortion caused by continuously changing noise in SITS. To overcome this problem and obtain a more accurate SITS, this study proposed a Nonlinear Radiometric Normalization Model (named NMAG) for SITS based on Artificial Neural Networks (ANN) and a Greedy Algorithm (GA). In this method, the GA was used to determine the correction order of the SITS and to calculate the error between the image to be corrected and the already normalized images, which avoided the selection of a single reference image. The ANN was used to obtain the optimal solution of the error function, which minimized the radiometric distortion between different images in the SITS. A SITS composed of 21 Landsat-8 images of Tianjin City, acquired from October 2017 to January 2019, was selected to test the method. We compared NMAG with two other methods (referred to as CM1 and CM2) and found that the average root mean square error $(\mu_{RMSE})$ of NMAG $(497.22)$ was significantly smaller than those of CM1 $(641.39)$ and CM2 $(543.47)$; the accuracy of the normalized SITS obtained with NMAG increased by 22.4\% and 8.5\% relative to CM1 and CM2, respectively.
These experimental results confirmed the effectiveness of NMAG in the reduction of radiometric distortion caused by continuously changing noise between images in SITS.
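
The reported accuracy gains can be checked against the $\mu_{RMSE}$ values above. The following minimal sketch assumes that "accuracy increase" means the relative reduction in $\mu_{RMSE}$ with respect to each comparison method; the abstract does not state this definition explicitly, and the function name `relative_reduction` is hypothetical.

```python
def relative_reduction(baseline: float, proposed: float) -> float:
    """Percentage by which `proposed` is lower than `baseline`.

    Assumed definition of the accuracy gain; not stated in the abstract.
    """
    return (baseline - proposed) / baseline * 100.0


# Mean RMSE values as reported in the abstract.
mu_rmse = {"NMAG": 497.22, "CM1": 641.39, "CM2": 543.47}

# Relative reduction of NMAG against each comparison method.
gains = {
    name: relative_reduction(value, mu_rmse["NMAG"])
    for name, value in mu_rmse.items()
    if name != "NMAG"
}

for name, gain in gains.items():
    print(f"NMAG vs {name}: {gain:.2f}% lower mean RMSE")
```

Under this assumption the computed reductions agree with the reported 22.4\% and 8.5\% to within about 0.1 percentage points.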