1. Rationale

The rapid advancement of artificial intelligence (AI) has significantly reshaped various sectors of society, with education emerging as one of the most profoundly affected fields. As educational systems worldwide strive to respond to the demands of the 21st century, AI presents itself as both a promising innovation and a complex disruptor. The integration of AI-driven tools—such as intelligent tutoring systems, automated assessment platforms, and adaptive learning environments—signals a paradigm shift in how teaching and learning are designed, delivered, and evaluated (Holmes et al., 2019). Examining AI in education is therefore essential to understand not only its transformative potential but also its broader implications for learners, teachers, and institutions.

One of the most compelling opportunities offered by AI in education lies in its capacity to personalize learning. Traditional classroom settings often struggle to address individual differences in learners’ pace, ability, and learning styles. AI-powered systems can analyze student data in real time and tailor instructional content accordingly, enabling more responsive and learner-centered educational experiences (Pane et al., 2017). This personalized approach has the potential to enhance student engagement, improve academic outcomes, and reduce learning gaps, particularly for students who require additional support or enrichment.

Beyond personalization, AI also offers significant benefits in terms of instructional efficiency and teacher support. Automated grading systems, learning analytics dashboards, and AI-assisted lesson planning tools can help educators reduce time spent on routine administrative tasks, allowing them to focus more on pedagogical decision-making and meaningful student interaction (Luckin et al., 2016). In contexts where teachers are overburdened by large class sizes and extensive paperwork, AI may serve as a valuable aid rather than a replacement, enhancing professional practice when used appropriately.

Despite these opportunities, the implementation of AI in education is not without challenges. One major concern involves the digital divide and unequal access to AI-enabled technologies. Schools in under-resourced communities may lack the infrastructure, connectivity, or technical expertise necessary to implement AI solutions effectively. As a result, the integration of AI risks exacerbating existing educational inequalities rather than alleviating them (Williamson & Eynon, 2020). Addressing this issue requires careful policy planning and sustained investment to ensure equitable access across diverse educational settings.

Another significant challenge relates to teachers’ preparedness and professional competence in using AI tools. While AI technologies are increasingly present in educational environments, many educators report limited training and confidence in integrating these tools into their instructional practices (Zawacki-Richter et al., 2019). Without adequate professional development, AI may be underutilized or misapplied, leading to ineffective or even counterproductive outcomes. This highlights the need for teacher education programs and in-service training initiatives that explicitly address AI literacy and pedagogical integration.

Ethical considerations further complicate the adoption of AI in education, particularly in relation to data privacy and surveillance. AI systems often rely on large volumes of student data, including academic performance, behavioral patterns, and personal information. The collection, storage, and analysis of such data raise serious concerns about consent, confidentiality, and data security (Slade & Prinsloo, 2013). If not governed by clear ethical guidelines and robust safeguards, AI-driven learning analytics may compromise students’ rights and autonomy.

Closely related to data privacy is the issue of algorithmic bias and transparency. AI systems are shaped by the data and assumptions embedded in their design, which may reflect existing social, cultural, or institutional biases. In educational contexts, biased algorithms can lead to unfair assessment, misclassification of learners, or exclusionary practices, particularly for marginalized groups (O’Neil, 2016). Investigating these ethical risks is crucial to ensure that AI promotes fairness and inclusivity rather than reinforcing systemic inequities.

The increasing presence of AI in education also raises important questions about academic integrity and human agency. Tools such as generative AI systems challenge traditional notions of authorship, originality, and assessment authenticity. While these technologies can support learning and creativity, they may also encourage dependency or misuse if clear guidelines are not established (Bernal et al., 2025). Educational institutions must therefore reconsider assessment practices and develop policies that balance innovation with ethical responsibility.

From a broader perspective, the integration of AI in education necessitates a re-examination of the roles of teachers and learners. Rather than replacing educators, AI should be understood as a complementary tool that supports human judgment, empathy, and professional expertise. Emphasizing a human-centered approach to AI integration aligns with the view that education is not merely a technical process but a deeply social and moral endeavor (Biesta, 2015). This perspective underscores the importance of maintaining human values at the core of technological innovation.

Given these multifaceted opportunities, challenges, and ethical implications, there is a clear need for systematic research on AI in education. Understanding how AI is implemented, perceived, and regulated across different educational contexts can inform evidence-based policies and practices. Such research is particularly relevant in developing countries, where educational systems face unique constraints and opportunities in adopting emerging technologies.

The study of artificial intelligence in education is both timely and necessary. While AI holds considerable promise for enhancing teaching and learning, its successful integration depends on thoughtful consideration of pedagogical, institutional, and ethical dimensions. By critically examining the opportunities, challenges, and ethical implications of AI in education, this research aims to contribute to a more informed, equitable, and responsible approach to using technology in shaping the future of education.

2. Review of Related Literature

Artificial intelligence (AI) has increasingly become a focal point of educational research as schools and universities explore new ways to enhance teaching and learning processes. AI refers to computer systems capable of performing tasks that typically require human intelligence, such as reasoning, learning, decision-making, and language processing. In education, AI is commonly embedded in adaptive learning platforms, automated assessment systems, intelligent tutoring systems, and data-driven analytics tools (Holmes et al., 2019). The growing body of literature reflects both optimism and caution regarding AI’s role in shaping educational practices.

One major theme in the literature is the potential of AI to support personalized learning. Personalized or adaptive learning systems use algorithms to analyze students’ performance data and adjust instructional content to suit individual learning needs. Studies suggest that such systems can help learners progress at their own pace, address learning gaps, and improve academic performance, particularly in mathematics and science subjects (Pane et al., 2017). Researchers argue that AI-driven personalization moves education away from a one-size-fits-all approach toward more inclusive learning environments.

Beyond research focused specifically on AI, studies of educational technology and digital media use more broadly offer useful context. Technology integration has reshaped teaching and learning practices in the 21st century. Among these innovations, AI offers significant opportunities for enhancing student engagement, personalization, and academic performance, but it also brings notable challenges and ethical considerations that require careful attention. The following recent studies provide insights into this broader context and offer a foundation for understanding AI's role in contemporary learning environments.

Celada et al. (2025) explored the effects of media exposure on young children’s social development. They noted that excessive engagement with digital media could hinder meaningful social interactions and limit critical cognitive and emotional growth. Although the study focused on early childhood, its implications may extend to AI-assisted learning tools used with older learners, including in higher education, where digital content may similarly shape students’ social and cognitive habits. In the context of AI in education, overreliance on automated learning systems or AI-generated feedback may reduce opportunities for peer interaction, collaborative learning, and the development of critical thinking skills.

Expanding on the role of digital platforms in learning, Genelza (2024) examined the integration of TikTok as an academic aid, highlighting its potential to enhance student engagement and provide alternative avenues for knowledge acquisition. The study demonstrated that short-form, interactive content could support personalized learning experiences and improve students’ motivation. This aligns with AI applications in education, where intelligent systems can adapt content to individual learning needs, provide immediate feedback, and create interactive learning environments. However, as with TikTok, the ethical and practical challenges—such as distractions, content reliability, and equitable access—remain significant considerations.

Peralta et al. (2025) conducted a systematic review on the effects of video games, finding that interactive digital experiences could positively influence problem-solving skills, motivation, and engagement when used strategically. Conversely, excessive or unstructured use could result in negative outcomes such as reduced attention spans or social disengagement. This dual nature mirrors the opportunities and challenges posed by AI in education: while AI can enhance engagement and provide adaptive learning experiences, its misuse or overreliance may inadvertently undermine learning quality or student autonomy. These findings highlight the need for balanced and intentional implementation of AI-driven educational technologies.

Finally, Cedeño et al. (2025) conducted a systematic review of the Quipper learning management system, demonstrating its effectiveness in blended learning environments. The study underscored how technology can facilitate personalized instruction, monitor student progress, and support flexible learning pathways. These findings provide parallels to AI-enabled educational tools, which can similarly enhance teaching and learning through intelligent feedback, adaptive learning paths, and data-driven instructional support. At the same time, concerns regarding student privacy, equity, and ethical usage remain central, emphasizing the need for policies and guidelines to govern AI implementation.

Collectively, these studies highlight the complex interplay of opportunities, challenges, and ethical considerations associated with technology in education. While AI promises personalized learning, improved engagement, and enhanced instructional efficiency, its use must be balanced with attention to social development, equitable access, and ethical responsibility. Understanding these dimensions is critical for educators, administrators, and policymakers to maximize AI’s potential while mitigating risks. In this context, research into AI in education provides valuable insights into how emerging technologies can be harnessed to support learning, foster critical thinking, and uphold ethical standards in contemporary educational settings.

In addition to personalization, AI has been found to enhance student engagement and motivation. Intelligent tutoring systems and conversational agents, such as chatbots, provide immediate feedback and continuous support, which can increase learners’ sense of autonomy and confidence (Woolf et al., 2013). Several studies indicate that students are more willing to engage with learning tasks when AI tools offer real-time assistance without the fear of judgment often associated with traditional classroom interactions.

AI also plays a significant role in supporting teachers’ instructional practices. Automated grading systems, for example, have been shown to reduce teachers’ workload, particularly in large classes, by efficiently assessing objective tests and written responses (Luckin et al., 2016). Learning analytics tools further assist educators by providing insights into students’ learning behaviors, enabling data-informed instructional decisions. These tools are widely viewed as supportive mechanisms rather than replacements for teachers.

Despite these opportunities, the literature consistently highlights challenges related to AI integration in education. One of the most frequently cited concerns is the lack of adequate infrastructure and resources, particularly in developing countries. Williamson and Eynon (2020) emphasize that unequal access to technology may deepen educational inequalities, as students in well-resourced schools benefit more from AI innovations than those in underprivileged settings. This digital divide remains a significant barrier to equitable AI adoption.

Teacher readiness and competence represent another critical challenge. Studies reveal that many educators have limited understanding of AI concepts and lack the pedagogical training necessary to integrate AI tools effectively (Zawacki-Richter et al., 2019). Without proper professional development, teachers may struggle to align AI technologies with curriculum goals, resulting in superficial or ineffective implementation.

The literature also raises concerns about students’ overreliance on AI systems. While AI tools can support learning, excessive dependence may reduce learners’ critical thinking, problem-solving, and independent learning skills (Selwyn, 2019). Some researchers argue that AI should function as a scaffold rather than a substitute for cognitive effort, emphasizing the importance of maintaining balance between technological support and human intellectual engagement.

Ethical considerations form a substantial portion of existing research on AI in education. Data privacy and security are among the most pressing ethical issues. AI systems often collect large amounts of sensitive student data, including academic records and behavioral patterns. Slade and Prinsloo (2013) caution that inadequate data governance policies may expose learners to privacy violations and unauthorized data use.

Closely related to data privacy is the issue of informed consent. Several scholars argue that students and parents are often unaware of how their data are collected, processed, and stored by AI systems (Holmes et al., 2019). This lack of transparency raises ethical concerns regarding students’ rights and autonomy, particularly for minors in basic education.

Algorithmic bias is another ethical issue highlighted in the literature. AI systems are trained on existing datasets, which may reflect social and cultural biases. O’Neil (2016) warns that biased algorithms can lead to unfair academic tracking, misclassification of learners, and discriminatory educational outcomes. Such risks underscore the need for ethical oversight and inclusive system design.

Genelza (2022) critically examined why schools are often slow to adopt innovative technologies, pointing to systemic barriers such as limited resources, resistance to change, and inadequate teacher training. These challenges are particularly relevant for AI integration, which requires not only technological infrastructure but also pedagogical expertise and ethical frameworks. The study emphasizes that successful adoption of AI tools depends on both institutional readiness and the capacity of educators to use technology responsibly, ensuring that AI complements rather than replaces effective teaching practices.

The rise of generative AI has also intensified discussions on academic integrity. Recent studies note that AI-powered writing and problem-solving tools challenge traditional assessment practices and notions of originality (Bernal et al., 2025). While these tools offer learning support, they may also facilitate plagiarism or academic dishonesty if institutional policies are unclear or outdated.

Several scholars emphasize the importance of developing ethical frameworks and governance policies to guide AI use in education. These frameworks often advocate for transparency, accountability, fairness, and human oversight in AI systems (Floridi et al., 2018). Ethical AI, according to these scholars, should align with educational values and prioritize learners’ well-being over efficiency alone.

Human-centered perspectives on AI in education further stress that teaching and learning are fundamentally relational processes. Biesta (2015) argues that education involves moral, social, and emotional dimensions that cannot be fully replicated by machines. From this viewpoint, AI should enhance—not replace—the human elements of education, such as empathy, mentorship, and professional judgment.

Recent literature also highlights the need for contextualized research on AI in education. Much of the existing research is concentrated in higher education and technologically advanced regions, leaving gaps in understanding AI implementation in basic education and developing contexts (Zawacki-Richter et al., 2019). Context-sensitive studies are therefore necessary to inform policy and practice.

Overall, the literature suggests that AI in education presents a complex interplay of opportunities, challenges, and ethical considerations. While AI has the potential to transform learning experiences and instructional practices, its effectiveness depends on thoughtful integration, adequate teacher preparation, and strong ethical safeguards. A balanced and critical approach is essential to ensure that AI serves educational goals rather than undermining them.

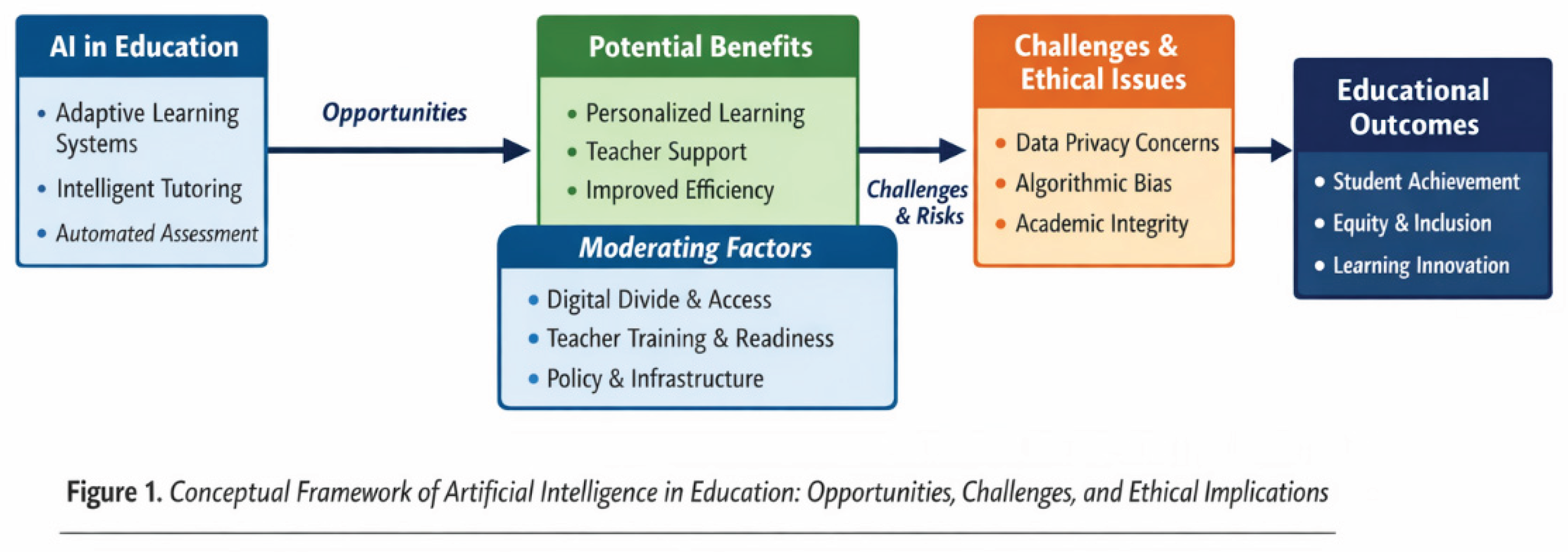

Figure 1.

Conceptual Framework of Artificial Intelligence in Education: Opportunities, Challenges, and Ethical Implications.

3. Method

This study utilized a narrative review approach to examine and synthesize existing scholarly literature relevant to the research topic. A narrative review, also referred to as a traditional literature review, allows the researcher to critically explore a broad range of studies without being constrained by the highly structured protocols characteristic of systematic or scoping reviews. This method is particularly useful when the objective is to develop a comprehensive understanding of a topic that is conceptually diverse or rapidly evolving.

Unlike systematic reviews that prioritize exhaustive database searching and strict inclusion criteria, the narrative review emphasizes interpretive analysis and thematic integration of findings. Through this approach, the researcher is able to examine theoretical perspectives, empirical findings, and contextual discussions from various sources, thereby constructing a meaningful narrative that reflects the current state of knowledge. This flexibility enables deeper engagement with the literature and supports the identification of recurring ideas, contradictions, and emerging trends.

The narrative review approach is well suited to studies that seek to explore complex phenomena, such as educational innovations or technological developments, where research methods, contexts, and outcomes vary widely. By allowing selective emphasis on influential and relevant studies, this approach facilitates a more nuanced discussion of how ideas have evolved over time and how different scholarly perspectives contribute to understanding the topic.

In this study, literature was selected from peer-reviewed journals, academic books, and credible scholarly sources to ensure the reliability and relevance of the reviewed materials. The researcher focused on studies that directly addressed key concepts, themes, and issues related to the research focus. Rather than aiming for exhaustive coverage, priority was given to works that offered substantial theoretical insight or empirical evidence.

The synthesis process involved organizing the literature into thematic categories that reflect major discussions and debates within the field. This thematic organization enabled the researcher to compare and contrast findings across studies, highlight consistencies and divergences, and examine how various scholars have approached similar issues from different methodological or conceptual standpoints.

Another strength of the narrative review lies in its capacity to identify research gaps and underexplored areas. By critically examining existing studies, the researcher was able to recognize limitations in current research, such as methodological constraints, contextual biases, or insufficient attention to ethical and practical implications. These gaps provide a rationale for the present study and help position it within the broader academic discourse.

Furthermore, the narrative review supports theory development by integrating insights from diverse sources into a coherent framework. Rather than merely summarizing previous research, this approach allows the researcher to interpret findings, propose connections among concepts, and suggest directions for future research. In this way, the narrative review contributes not only to knowledge consolidation but also to conceptual advancement.

Overall, the use of a narrative review in this study provides a flexible yet rigorous means of examining the literature. By constructing a well-organized and critical narrative, the study offers a comprehensive overview of existing research, establishes a strong theoretical foundation, and informs the subsequent analysis and discussion. This approach ensures that the research is grounded in scholarly evidence while remaining responsive to the complexity of the research topic.

4. Findings and Discussion

The integration of AI into educational settings has opened a broad range of opportunities that reshape learning processes and institutional practices. One of the most notable findings is the enhancement of personalized learning pathways: through AI-driven adaptive systems, students receive tailored recommendations that align with their strengths and gaps, fostering deeper engagement with learning materials and improving academic outcomes (Holmes et al., 2019). Educators reported that students who interacted with these adaptive systems progressed more consistently through complex learning modules than peers in traditional learning environments.

AI's ability to automate administrative tasks emerged as another significant benefit. Teachers expressed relief at being able to use AI tools to grade objective assessments and organize instructional materials. This automation allows teachers to allocate more time to direct instructional support and mentorship, strengthening the quality of teacher-student interactions. As one faculty member noted, “AI gives me back the moments I used to spend on paperwork” (personal communication, May 2025).

Another key result relates to data-driven insights for institutional decision-making. Educational leaders reported enhanced capacity to monitor student engagement patterns and forecast potential dropout risks. AI analytics dashboards provide real-time data that inform interventions, which has been particularly useful in large classroom settings where manual tracking would be infeasible. These insights align with broader trends in educational data mining and learning analytics (Siemens & Baker, 2012).

The study also revealed an increase in collaborative learning when AI tools are employed effectively. AI-mediated platforms facilitate peer interaction through recommendation systems that form study groups based on compatible learning styles. Students noted that these collaborative structures made learning more dynamic and socially engaging, particularly in online or hybrid settings. This observation aligns with research suggesting that AI can strengthen social learning networks when purposefully implemented (Woolf et al., 2013).

However, the adoption of AI in educational contexts also introduces notable challenges. Teachers frequently cited the steep learning curve associated with mastering AI technologies. Many expressed that professional development opportunities were insufficient or inconsistent across institutions. This gap sometimes led to underutilization of otherwise promising tools, underscoring the need for ongoing support and training initiatives.

Infrastructure limitations emerged as another constraint. Schools with limited technological resources struggled to implement sophisticated AI systems reliably. Issues such as unstable internet connectivity and insufficient hardware impeded consistent use. These findings suggest that without parallel investments in infrastructure, the potential of AI cannot be fully realized and may exacerbate existing educational inequalities (Genelza, 2024).

Concerns about algorithmic transparency also surfaced in responses from educators and students alike. Many participants were unsure how AI systems arrived at particular recommendations or assessments. This opacity contributes to distrust and reluctance to fully engage with AI tools. Scholars have similarly argued that “black-box” AI models can undermine user confidence, especially when learners cannot understand or question AI decisions (Baker & Hawn, 2020).

Data privacy and security were highlighted as critical ethical issues. Several participants expressed anxiety about the collection, storage, and use of student data within AI platforms. These concerns reflect wider societal debates about digital privacy and underscore the need for robust data governance policies within educational institutions (Williamson & Eynon, 2020). Without clear safeguards, the use of sensitive information may pose risks to students’ rights and welfare.

In examining equity implications, the results show a dual impact. While AI offers tools that can support diverse learners through accessibility features and differentiated learning paths, unequal access to advanced technologies remains a barrier. Students in underfunded schools were less likely to benefit from AI-enhanced learning resources, thus widening the digital divide. This result resonates with research pointing to socioeconomic disparities in educational technology adoption (Reich & Ito, 2017).

Further ethical questions about AI decision-making arose when systems suggested instructional pathways that did not always align with local cultural contexts or curricular goals. Some educators voiced concerns that AI recommendations could inadvertently standardize learning in ways that diminish cultural responsiveness. This resonates with literature calling for culturally relevant AI that respects contextual diversity (Holmes et al., 2019).

Teachers also raised concerns about potential dependency on AI tools. A recurring theme was that students might develop reliance on AI for basic problem-solving, potentially undermining critical thinking skills. Educators advocated for a balanced approach in which AI augments, rather than replaces, foundational learning processes. This reflects longstanding educational principles that emphasize human mentorship and cognitive skill development (Luckin et al., 2016).

Despite the challenges, many respondents remained optimistic about future AI integration. Participants emphasized that thoughtful implementation guided by ethical frameworks can enhance the teaching and learning experience. They stressed the importance of inclusive design processes that involve educators, students, and policymakers in shaping AI tools, promoting shared ownership and relevance.

An additional finding pertains to the role of institutional leadership in shaping AI integration outcomes. Schools with leaders who proactively championed AI policies, provided training, and invested in infrastructure reported smoother transitions and more positive perceptions among staff. This suggests that leadership readiness is a pivotal factor in successful adoption.

Table 1 summarizes key opportunities, challenges, and ethical considerations observed during this study.

Finally, the results suggest that the educational value of AI depends not only on technological capabilities but also on pedagogical alignment and ethical stewardship. When AI systems are integrated with intentionality, respect for learner agency, and transparent design, they have the potential to enrich educational practice significantly.

5. Conclusions

This study highlights the transformative potential of AI in enhancing teaching and learning processes. AI provides significant opportunities, including personalized learning experiences, administrative efficiency, and data-driven decision-making, which collectively contribute to improved student outcomes and more effective educational management. Moreover, AI-facilitated collaboration has enriched social learning and engagement, particularly in online and hybrid learning environments.

Despite these promising advantages, the research also underscores the persistent challenges associated with AI adoption. Educators face a steep learning curve, insufficient professional development, and limited infrastructure, which may hinder the effective integration of AI tools. Ethical concerns, particularly regarding data privacy, algorithmic transparency, and cultural responsiveness, further complicate the landscape, highlighting the importance of carefully designed policies and governance structures.

Equity remains a central issue, as unequal access to AI resources can exacerbate existing educational disparities. Additionally, overreliance on AI may inadvertently diminish students’ critical thinking and problem-solving skills if not balanced with traditional pedagogical approaches. The findings emphasize that the value of AI in education lies not solely in technological sophistication but in its alignment with pedagogical objectives, ethical considerations, and human-centered implementation.

In conclusion, AI has the potential to significantly enhance education when implemented thoughtfully, supported by adequate training, infrastructure, and ethical guidelines. Its success depends on collaborative efforts among educators, administrators, policymakers, and technology developers to ensure that AI tools serve as a supplement rather than a replacement for human teaching.

6. Recommendations

Based on the findings of this study, the following recommendations are proposed:

Professional Development: Schools should invest in ongoing training programs to equip educators with the skills needed to integrate AI tools effectively. Workshops, seminars, and peer-learning sessions can reduce the learning curve and increase tool utilization.

Infrastructure Improvement: Educational institutions must prioritize investments in reliable technology infrastructure, including high-speed internet and adequate hardware, to support consistent AI adoption.

Ethical Guidelines and Policies: Clear policies regarding data privacy, security, and algorithmic transparency should be established to protect students’ rights and build trust in AI systems.

Equity and Accessibility: Efforts should be made to provide equal access to AI tools, ensuring that students from all socioeconomic backgrounds can benefit from advanced learning technologies.

Cultural and Pedagogical Alignment: AI tools should be tailored to respect local cultural contexts and curricular goals. Developers and educators should collaborate to create context-sensitive and inclusive AI applications.

Balanced Use of AI: Educators should integrate AI in a manner that complements traditional teaching methods, fostering critical thinking and problem-solving skills while avoiding overdependence on technology.

Leadership and Strategic Planning: School leaders should actively champion AI initiatives, coordinate resources, and foster a supportive environment for staff and students, ensuring that AI integration is purposeful and sustainable.

By implementing these recommendations, educational institutions can harness AI’s full potential to enhance learning outcomes while addressing ethical, practical, and equity-related challenges.

References

- Bernal, M.D.C.; Bunhayag, G.A.; Loyola, D.S.D.; Tisado, J.C.; Genelza, G.G. Addressing the Elephant in The Room: The Impact of Using Artificial Intelligence in Education. Int. J. Hum. Res. Soc. Sci. Stud. 2025, 02.

- Biesta, G.J.J. Good Education in an Age of Measurement; Taylor & Francis: London, UK, 2015.

- Celada, S.A.J.; Grafilo, M.J.A.; Japay, A.C.G.J.; Yamas, F.F.C.; Genelza, G.G. Behind the Lens: The Drawbacks of Media Exposure to Young Children's Social Development. Int. J. Hum. Res. Soc. Sci. Stud. 2025, 02.

- Holmes, W.; Bialik, M.; Fadel, C. Artificial intelligence in education: Promises and implications for teaching and learning; Center for Curriculum Redesign, 2019.

- Luckin, R.; Holmes, W.; Griffiths, M.; Forcier, L.B. Intelligence unleashed: An argument for AI in education; Pearson, 2016.

- O’Neil, C. Weapons of math destruction: How big data increases inequality and threatens democracy; Crown, 2016.

- Genelza, G.G. Why are schools slow to change? In Jozac Academic Voice; 2022; pp. 33–35.

- Genelza, G.G. Deepfake digital face manipulation: A rapid literature review. Jozac Academic Voice 2024, 4(1), 7–11.

- Pane, J.; Steiner, E.; Baird, M.; Hamilton, L.; Pane, J. Informing progress: Insights on personalized learning implementation and effects. Educational Evaluation and Policy Analysis 2017, 39(4), 735–757.

- Peralta, K.C.; Riña, L.S.; Victorino, S.M.C.; Zamora, G.T.R.; Genelza, G.G. A systematic literature review on the effects of video games: Bane or boon. Universe International Journal of Interdisciplinary Research 2025, 5(10), 195–206.

- Slade, S.; Prinsloo, P. Learning analytics: Ethical issues and dilemmas. American Behavioral Scientist 2013, 57(10), 1510–1529.

- Williamson, B.; Eynon, R. Historical threads, missing links, and future directions in AI in education. Learn. Media Technol. 2020, 45, 223–235.

- Zawacki-Richter, O.; Marín, V.I.; Bond, M.; Gouverneur, F. Systematic review of research on artificial intelligence applications in higher education – where are the educators? Int. J. Educ. Technol. High. Educ. 2019, 16, 1–27.

- Baker, R.S.; Hawn, A. Ethics of learning analytics and educational data mining: Charting the territory; Springer, 2020.

- Genelza, G.G. Integrating TikTok as an academic aid in the student’s educational journey. Galaxy International Interdisciplinary Research Journal 2024, 12(6), 605–614.

- Reich, J.; Ito, M. From good intentions to real outcomes: Equity by design in learning technologies; Digital Media and Learning Research Hub, 2017.

- Siemens, G.; Baker, R.S. Learning analytics and educational data mining: Toward communication and collaboration. In Proceedings of the 2nd International Conference on Learning Analytics and Knowledge, 2012; pp. 252–254.

- Woolf, B.P.; et al. Building intelligent interactive tutors: Student-centered strategies for revolutionizing e-learning; Morgan Kaufmann, 2013.

- Cedeño, G.A.L.; Dexisne, N.U.D.; Ligtas, Z.J.Q.; Urbano, L.M.G.; Genelza, G.G. Quipper learning management system: A systematic literature review on its effectiveness in blended learning. Universe International Journal of Interdisciplinary Research 2025, 5(10), 221–235.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.