Submitted: 05 January 2026

Posted: 06 January 2026


Abstract

Keywords:

1. Introduction

2. Dung Beetle Optimization Algorithm

2.1. Ball-Rolling Dung Beetle

2.2. Breeding Dung Beetle

2.3. Small Dung Beetle

2.4. Stealing Dung Beetle

3. A New Multi-Threshold Image Segmentation Algorithm

3.1. Improved Dung Beetle Algorithm Based on Multiple Strategies

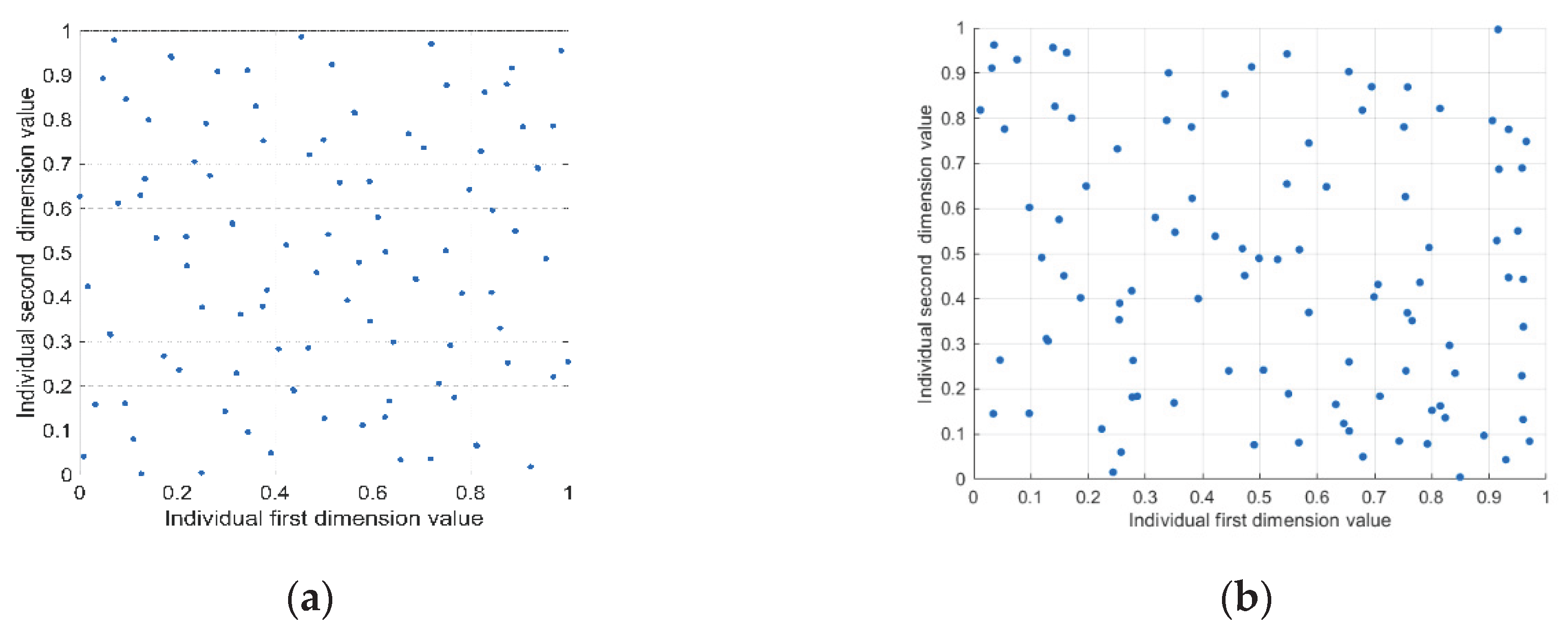

3.1.1. Sobol Sequence Population Initialization

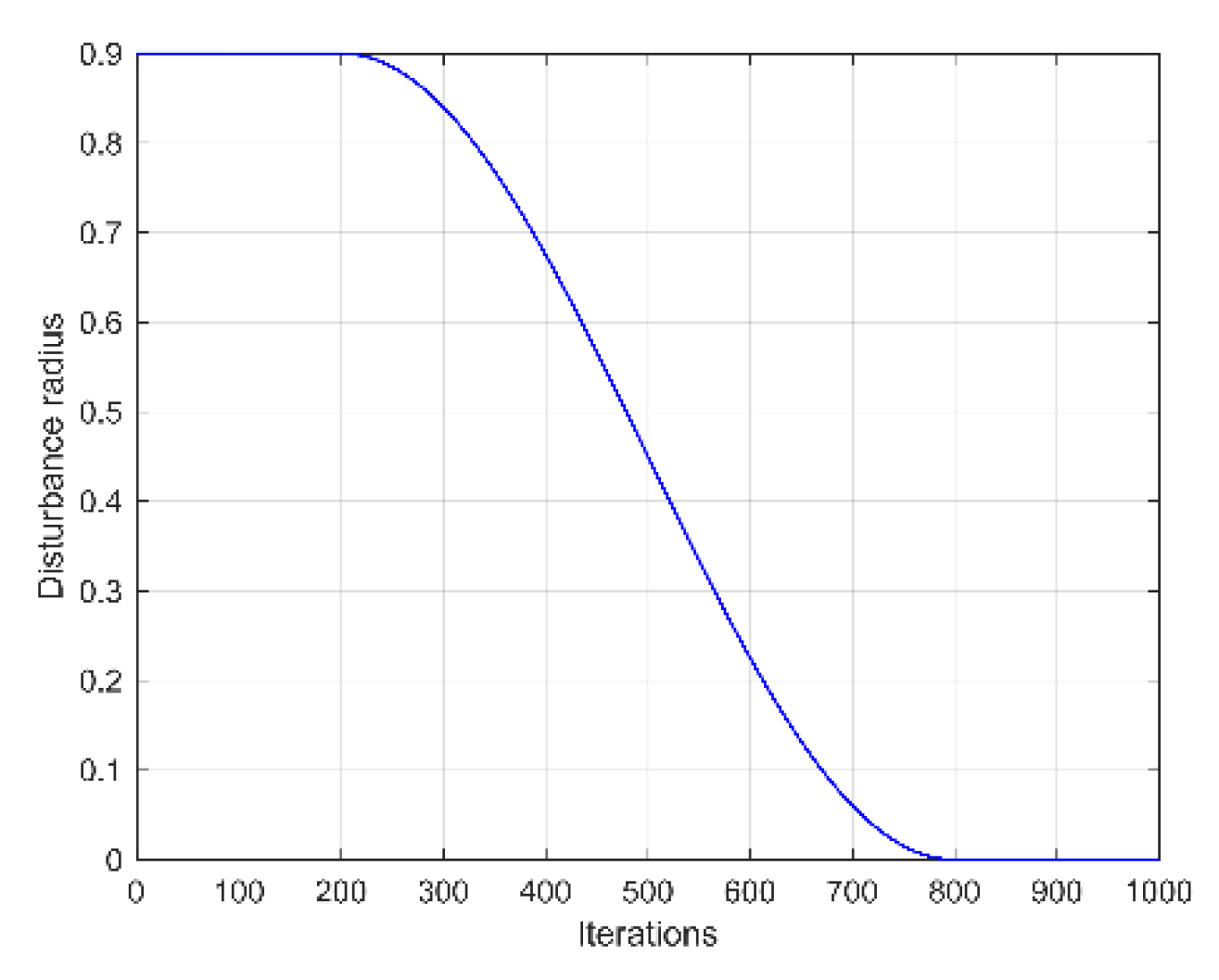

3.1.2. Multi-Stage Disturbance Update

3.1.3. Hybrid Dynamic Switching Mechanism

1. Distance-based Individual Selection and Updating Strategy
2. Mutation update towards global optimum
3. Dynamic switching mechanism

3.1.4. MIDBO Algorithm

3.2. MIDBO-KMIA

4. Verification Experiments of Algorithm Performance

4.1. Verification Experiments of Multi-Strategy Improved Dung Beetle Optimization Algorithm Performance

4.1.1. Experimental Environment

4.1.2. Experimental Setup and Test Function

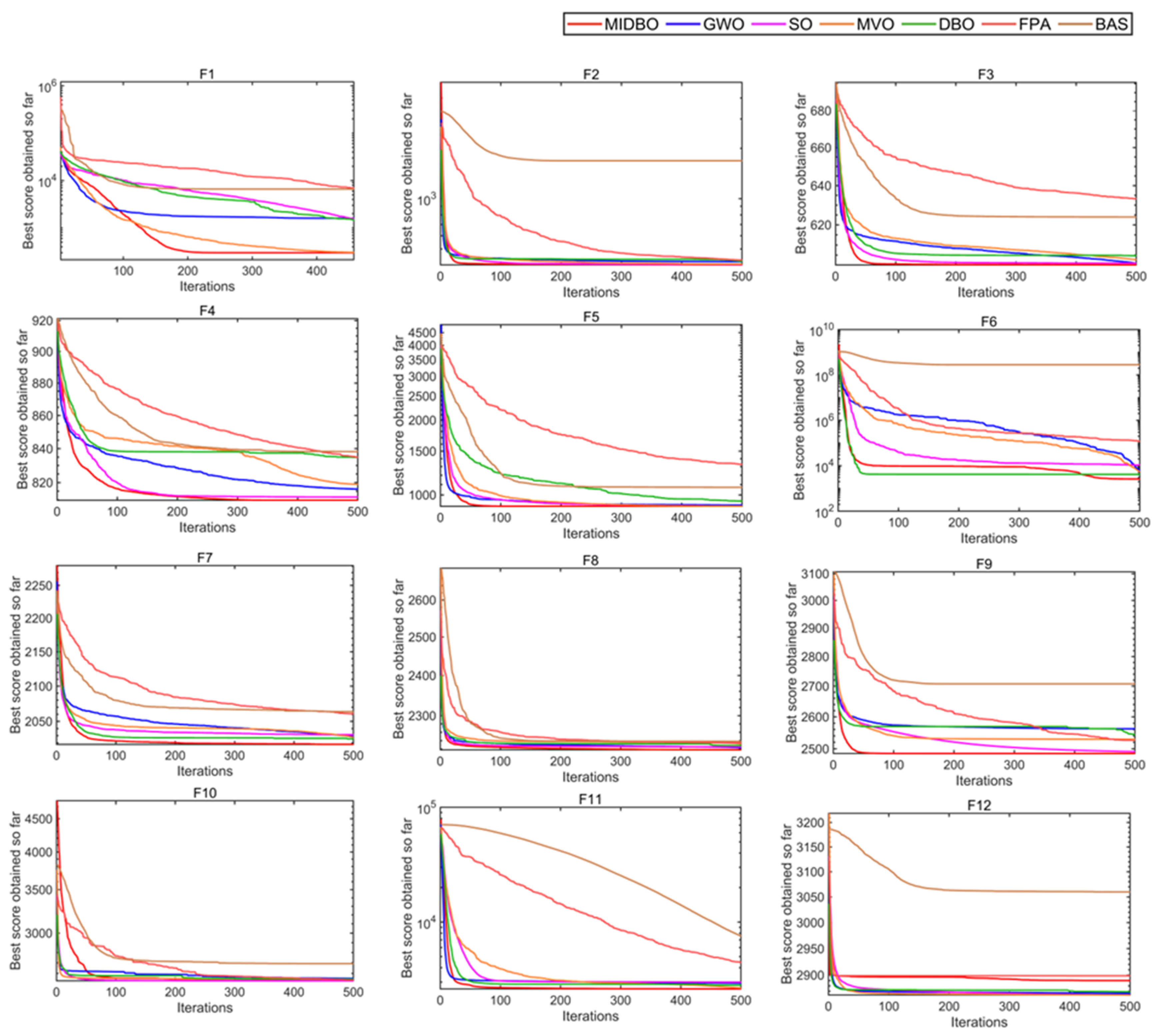

4.1.3. Analysis of Results on the CEC2022 Test Functions

4.1.4. Wilcoxon Signed-Rank Test

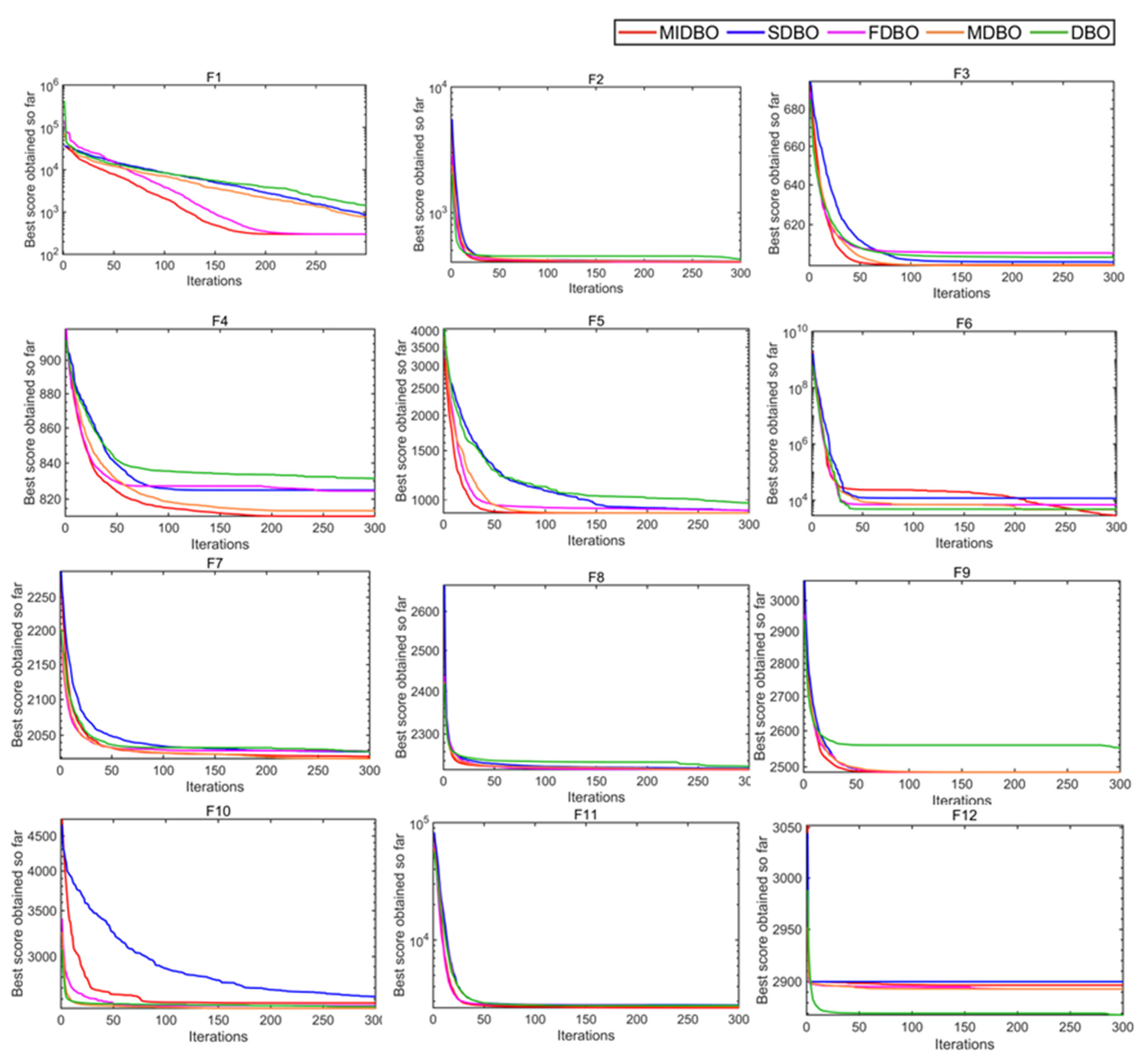

4.1.5. Verification of the Effectiveness of Improved Strategies

4.2. Performance Verification of MIDBO-KMIA

4.2.1. Experimental Design

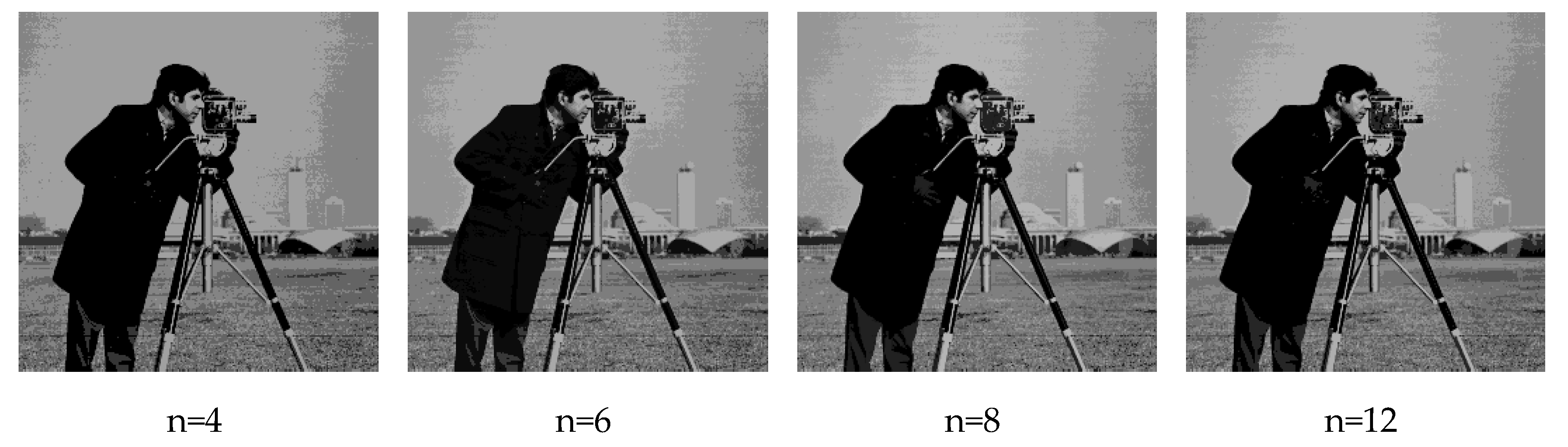

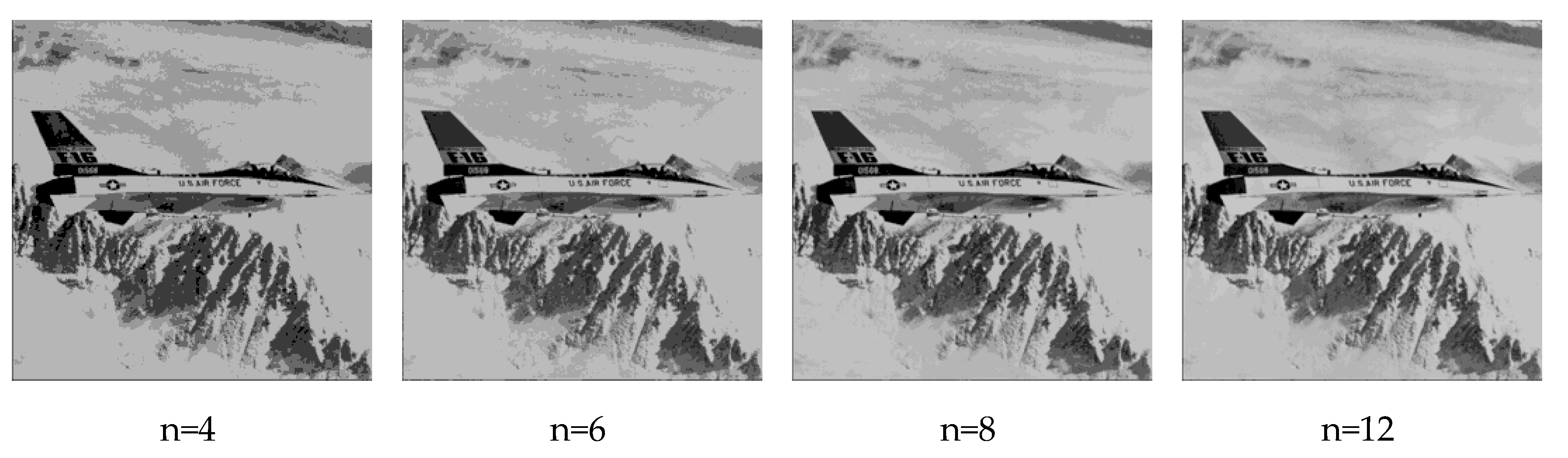

4.2.2. Analyses of Threshold Segmentation Results

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Function type | No. | Function | Optimal value |
|---|---|---|---|
| Unimodal Function | 1 | Shifted and full Rotated Zakharov Function | 300 |
| Basic Functions | 2 | Shifted and full Rotated Rosenbrock’s Function | 400 |
| | 3 | Shifted and full Rotated Expanded Schaffer’s f6 Function | 600 |
| | 4 | Shifted and full Rotated Non-Continuous Rastrigin’s Function | 800 |
| | 5 | Shifted and full Rotated Levy Function | 900 |
| Hybrid Functions | 6 | Hybrid Function 1 (N = 3) | 1800 |
| | 7 | Hybrid Function 2 (N = 6) | 2000 |
| | 8 | Hybrid Function 3 (N = 5) | 2200 |
| Composition Functions | 9 | Composition Function 1 (N = 5) | 2300 |
| | 10 | Composition Function 2 (N = 4) | 2400 |
| | 11 | Composition Function 3 (N = 5) | 2600 |
| | 12 | Composition Function 4 (N = 6) | 2700 |

| Func. | Dim. | Indicator | MIDBO | GWO | SO | MVO | DBO | FPA | BAS |
|---|---|---|---|---|---|---|---|---|---|
| F1 | 10 | Best | 300 | 345.3092 | 318.3107 | 300.0108 | 300 | 1001.255 | 1988.901 |
| | | Std | 1.98E-13 | 1607.695 | 1087.288 | 0.03542 | 668.6178 | 2912.941 | 2071.044 |
| | | Mean | 300 | 1737.622 | 1262.631 | 300.0489 | 576.2715 | 5345.38 | 5218.876 |
| F2 | 10 | Best | 400.1135 | 402.0848 | 400.0849 | 400.0387 | 400.0513 | 412.3448 | 629.5263 |
| | | Std | 1.9476 | 23.1172 | 2.7056 | 12.5278 | 27.8919 | 9.1908 | 700.6967 |
| | | Mean | 401.6285 | 422.3099 | 404.0562 | 408.4185 | 419.7833 | 427.7779 | 1640.674 |
| F3 | 10 | Best | 600.0023 | 600.0828 | 600.6971 | 600.1822 | 600.1631 | 622.5199 | 611.012 |
| | | Std | 0.5624 | 0.5627 | 1.1817 | 3.1383 | 5.0678 | 6.0866 | 8.3312 |
| | | Mean | 600.2731 | 600.5877 | 601.4654 | 602.0208 | 605.0228 | 633.1458 | 625.2483 |
| F4 | 10 | Best | 803.9798 | 804.1978 | 804.5676 | 808.956 | 810.7761 | 825.7786 | 819.7244 |
| | | Std | 3.2685 | 0.5627 | 3.8431 | 11.2878 | 11.9834 | 4.9787 | 10.0399 |
| | | Mean | 808.5898 | 814.9306 | 810.357 | 823.2847 | 830.4716 | 835.568 | 839.1543 |
| F5 | 10 | Best | 900 | 900.1186 | 900.2933 | 900.0014 | 900.4638 | 1031.73 | 917.2049 |
| | | Std | 0.0693 | 11.5821 | 8.3399 | 0.5909 | 26.5231 | 190.3919 | 87.0764 |
| | | Mean | 900.0507 | 905.1358 | 902.081 | 900.2423 | 926.039 | 1309.526 | 1065.214 |
| F6 | 10 | Best | 1803.5 | 1913.462 | 2065.397 | 1967.53 | 2138.233 | 9387.876 | 1848.408 |
| | | Std | 239.1878 | 2319.833 | 3568.985 | 2393.873 | 1934.538 | 77376.31 | 2374.0863 |
| | | Mean | 1959.214 | 6110.882 | 7523.001 | 4186.314 | 5370.294 | 93423.94 | 4431.624 |
| F7 | 10 | Best | 2000.624 | 2005.941 | 2006.657 | 2017.7 | 2001.62 | 2044.477 | 2033.249 |
| | | Std | 6.9295 | 10.0903 | 5.6428 | 28.1291 | 12.4153 | 8.8352 | 20.824 |
| | | Mean | 2020.596 | 2009.362 | 2028.812 | 2031.539 | 2027.971 | 2058.375 | 2060.787 |
| F8 | 10 | Best | 2200.159 | 2210.208 | 2205.768 | 2221.976 | 2220.567 | 2228.081 | 2217.587 |
| | | Std | 7.5046 | 4.4646 | 5.6428 | 42.2656 | 4.1016 | 3.6022 | 16.1669 |
| | | Mean | 2218.1 | 2223.985 | 2223.56 | 2243.028 | 2225.904 | 2234.494 | 2232.519 |
| F9 | 10 | Best | 2485.502 | 2529.293 | 2488.065 | 2529.289 | 2529.284 | 2494.609 | 2656.135 |
| | | Std | 1.53E-12 | 41.98763 | 2.2671 | 26.8173 | 34.1851 | 29.5603 | 37.0427 |
| | | Mean | 2485.502 | 2562.242 | 2491.389 | 2534.23 | 2549.373 | 2524.606 | 2722.926 |
| F10 | 10 | Best | 2403.54 | 2500.22 | 2422.562 | 2500.203 | 2500.381 | 2508.011 | 2502.788 |
| | | Std | 63.4296 | 83.6739 | 59.7726 | 135.8494 | 62.1079 | 124.0359 | 301.3793 |
| | | Mean | 2560.141 | 2561.251 | 2527.178 | 2590.259 | 2539.257 | 2576.715 | 2694.086 |
| F11 | 10 | Best | 2600 | 2731.359 | 2993.586 | 2603.593 | 2600 | 3175.546 | 4039.877 |
| | | Std | 54.0871 | 93.1558 | 64.0764 | 203.5316 | 243.9764 | 553.838 | 1687.189 |
| | | Mean | 2623.544 | 2959.11 | 3016.3085 | 2751.437 | 2853.169 | 4349.104 | 7289.763 |
| F12 | 10 | Best | 2846.017 | 2862.752 | 2855.21 | 2858.638 | 2863.159 | 2864.106 | 2946.976 |
| | | Std | 13.5219 | 4.8807 | 4.7232 | 1.4237 | 16.3449 | 7.6466 | 64.0214 |
| | | Mean | 2896.449 | 2866.59 | 2864.581 | 2863.478 | 2872.096 | 2897.395 | 3069.284 |

| Function | GWO | SO | MVO | DBO | FPA | BAS |
|---|---|---|---|---|---|---|
| F1 | 5.5321E-74 | 5.5321E-74 | 5.5321E-74 | 5.5321E-74 | 5.5321E-74 | 5.5321E-74 |
| F2 | 7.3994E-62 | 7.3994E-62 | 7.3994E-62 | 7.3994E-62 | 7.3994E-62 | 7.3994E-62 |
| F3 | 1.8973E-55 | 1.8974E-55 | 1.8974E-55 | 1.8974E-55 | 1.8974E-55 | 1.8974E-55 |
| F4 | 1.3598E-83 | 1.3598E-83 | 1.3598E-83 | 1.3598E-83 | 1.3598E-83 | 1.3598E-83 |
| F5 | 5.4567E-59 | 5.4567E-59 | 5.4567E-59 | 5.4567E-59 | 5.4567E-59 | 5.4567E-59 |
| F6 | 4.8442E-69 | 4.8442E-69 | 4.8442E-69 | 4.8442E-69 | 4.8442E-69 | 4.8442E-69 |
| F7 | 1.0967E-81 | 1.0967E-81 | 1.0967E-81 | 1.0967E-81 | 1.0967E-81 | 1.0967E-81 |
| F8 | 1.4881E-45 | 1.4881E-45 | 1.4881E-45 | 1.4881E-45 | 1.4881E-45 | 1.4881E-45 |
| F9 | 9.7451E-79 | 9.7451E-79 | 9.7451E-79 | 9.7451E-79 | 9.7451E-79 | 9.7451E-79 |
| F10 | 7.2771E-47 | 7.2771E-47 | 7.2771E-47 | 7.2771E-47 | 7.2771E-47 | 7.2771E-47 |
| F11 | 8.9699E-68 | 8.9699E-68 | 8.9699E-68 | 8.9699E-68 | 8.9699E-68 | 8.9699E-68 |
| F12 | 4.9385E-76 | 4.9385E-76 | 4.9385E-76 | 4.9385E-76 | 4.9385E-76 | 4.9385E-76 |

| Function | Optimal value | Indicator | DBO | SDBO | FDBO | MDBO | MIDBO |
|---|---|---|---|---|---|---|---|
| F1 | 300 | Best | 322.2929 | 321.6293 | 300.3925 | 301.7422 | 300 |
| | | Mean | 483.0199 | 320.859 | 300.0004 | 308.6264 | 300 |
| | | Std | 246.5947 | 86.5498 | 0.0014611 | 31.9939 | 2.9363E-13 |
| F2 | 400 | Best | 400.5527 | 400.049 | 400.3191 | 400.0393 | 400.0249 |
| | | Mean | 427.8402 | 404.5403 | 409.3614 | 407.4164 | 404.0657 |
| | | Std | 32.4372 | 2.572 | 16.8402 | 15.4863 | 2.5672 |
| F3 | 600 | Best | 600.0179 | 600.0032 | 600.2285 | 600.0002 | 600.0001 |
| | | Mean | 607.6264 | 601.6133 | 604.9408 | 600.0765 | 600.0241 |
| | | Std | 8.116 | 2.2539 | 6.1834 | 0.2824 | 0.53487 |
| F4 | 800 | Best | 810.9445 | 804.9748 | 808.9546 | 804.2699 | 804.1748 |
| | | Mean | 832.6764 | 825.6367 | 826.1342 | 813.5914 | 810.2481 |
| | | Std | 11.3855 | 10.6511 | 10.4774 | 5.8411 | 3.2546 |
| F5 | 900 | Best | 900.0919 | 900.0079 | 900.0895 | 900 | 900 |
| | | Mean | 940.6964 | 924.9668 | 940.7126 | 900.0776 | 900.1898 |
| | | Std | 74.988 | 37.9901 | 68.5948 | 0.07706 | 0.2028 |
| F6 | 1800 | Best | 1883.3084 | 1881.6132 | 1819.4202 | 1884.9456 | 1814.0878 |
| | | Mean | 5009.0288 | 14100.7001 | 7991.9951 | 5909.5978 | 2325.9438 |
| | | Std | 2269.6112 | 12613.1149 | 11537.774 | 4289.004 | 809.6063 |
| F7 | 2000 | Best | 2019.6384 | 2003.5749 | 2001.3025 | 2000.9953 | 2004.9748 |
| | | Mean | 2029.484 | 2028.1521 | 2029.8622 | 2021.676 | 2021.2029 |
| | | Std | 15.6998 | 12.9299 | 13.6341 | 6.6804 | 5.3636 |
| F8 | 2200 | Best | 2220.1304 | 2220.2234 | 2203.6886 | 2203.9429 | 2200.5355 |
| | | Mean | 226.1435 | 2222.3121 | 2220.1613 | 2222.014 | 2219.2819 |
| | | Std | 4.9128 | 6.3306 | 3.7943 | 5.173 | 2.0135 |
| F9 | 2300 | Best | 2529.2844 | 2485.5017 | 2485.5017 | 2485.5017 | 2485.5017 |
| | | Mean | 2542.2794 | 2492.6967 | 2485.5018 | 2485.502 | 2488.4953 |
| | | Std | 24.9954 | 16.3847 | 39.4088 | 0.0005607 | 0.000216 |
| F10 | 2400 | Best | 2500.3708 | 2500.4565 | 2455.0457 | 2450.2654 | 2400.2008 |
| | | Mean | 2556.2397 | 2626.9604 | 2573.4804 | 2520.514 | 2558.5539 |
| | | Std | 64.9599 | 125.0734 | 64.4409 | 52.7332 | 57.5874 |
| F11 | 2600 | Best | 2600 | 2600 | 2600 | 2600 | 2600 |
| | | Mean | 2845.5632 | 2791.4051 | 2744.7952 | 2703.8944 | 2620.3293 |
| | | Std | 230.5968 | 154.3065 | 159.0115 | 158.1639 | 51.9195 |
| F12 | 2700 | Best | 2861.1537 | 2846.7353 | 2847.6924 | 2846.6503 | 2900.0014 |
| | | Mean | 2869.9378 | 2898.2265 | 2893.4513 | 2887.7465 | 2900.002 |
| | | Std | 15.2099 | 9.7251 | 17.0214 | 22.5967 | 0.0001819 |

| Image | Number of thresholds | MIDBO | DBO | FPA | SO | GWO | WOA |
|---|---|---|---|---|---|---|---|
| Camera | 4 | 22.30 | 22.27 | 21.96 | 21.99 | 19.96 | 22.14 |
| | 6 | 24.80 | 24.34 | 23.76 | 24.09 | 21.05 | 24.29 |
| | 8 | 26.49 | 24.49 | 25.74 | 26.19 | 23.60 | 26.40 |
| | 12 | 28.57 | 28.30 | 26.97 | 28.86 | 25.03 | 28.85 |
| Pepper | 4 | 21.01 | 20.82 | 20.01 | 20.93 | 16.90 | 20.81 |
| | 6 | 24.03 | 22.62 | 22.52 | 23.16 | 21.37 | 23.10 |
| | 8 | 25.58 | 24.76 | 23.58 | 24.86 | 20.32 | 24.41 |
| | 12 | 28.91 | 26.25 | 27.29 | 27.33 | 25.40 | 26.17 |
| Plane | 4 | 22.00 | 21.94 | 21.19 | 21.99 | 18.52 | 21.86 |
| | 6 | 25.15 | 25.01 | 23.53 | 25.08 | 21.50 | 21.88 |
| | 8 | 26.95 | 26.80 | 26.49 | 26.65 | 25.72 | 26.75 |
| | 12 | 30.20 | 27.60 | 27.88 | 28.64 | 25.45 | 29.06 |
| House2 | 4 | 22.91 | 21.10 | 21.96 | 21.29 | 20.10 | 22.53 |
| | 6 | 24.72 | 24.55 | 24.32 | 23.46 | 23.15 | 23.50 |
| | 8 | 27.66 | 25.01 | 25.34 | 26.58 | 25.12 | 26.24 |
| | 12 | 29.62 | 29.09 | 28.32 | 29.54 | 25.73 | 27.46 |

| Image | Number of thresholds | MIDBO | DBO | FPA | SO | GWO | WOA |
|---|---|---|---|---|---|---|---|
| Camera | 4 | 0.69 | 0.70 | 0.68 | 0.68 | 0.69 | 0.69 |
| | 6 | 0.73 | 0.73 | 0.72 | 0.71 | 0.64 | 0.72 |
| | 8 | 0.87 | 0.82 | 0.76 | 0.76 | 0.87 | 0.76 |
| | 12 | 0.89 | 0.83 | 0.76 | 0.92 | 0.73 | 0.89 |
| Pepper | 4 | 0.73 | 0.73 | 0.71 | 0.73 | 0.67 | 0.71 |
| | 6 | 0.80 | 0.77 | 0.78 | 0.78 | 0.75 | 0.78 |
| | 8 | 0.85 | 0.83 | 0.80 | 0.84 | 0.76 | 0.82 |
| | 12 | 0.91 | 0.88 | 0.89 | 0.90 | 0.86 | 0.86 |
| Plane | 4 | 0.83 | 0.82 | 0.82 | 0.83 | 0.71 | 0.83 |
| | 6 | 0.88 | 0.88 | 0.85 | 0.87 | 0.78 | 0.83 |
| | 8 | 0.90 | 0.90 | 0.89 | 0.90 | 0.88 | 0.89 |
| | 12 | 0.94 | 0.91 | 0.92 | 0.93 | 0.87 | 0.92 |
| House2 | 4 | 0.80 | 0.77 | 0.80 | 0.86 | 0.61 | 0.78 |
| | 6 | 0.82 | 0.85 | 0.83 | 0.81 | 0.79 | 0.84 |
| | 8 | 0.89 | 0.84 | 0.85 | 0.85 | 0.84 | 0.86 |
| | 12 | 0.89 | 0.89 | 0.90 | 0.89 | 0.85 | 0.86 |

| Image | Number of thresholds | MIDBO | DBO | FPA | SO | GWO | WOA |
|---|---|---|---|---|---|---|---|
| Camera | 4 | 0.86 | 0.86 | 0.85 | 0.84 | 0.85 | 0.85 |
| | 6 | 0.90 | 0.90 | 0.89 | 0.89 | 0.81 | 0.89 |
| | 8 | 0.91 | 0.87 | 0.93 | 0.93 | 0.83 | 0.93 |
| | 12 | 0.94 | 0.94 | 0.93 | 0.94 | 0.90 | 0.94 |
| Pepper | 4 | 0.77 | 0.77 | 0.76 | 0.76 | 0.72 | 0.77 |
| | 6 | 0.83 | 0.82 | 0.81 | 0.83 | 0.79 | 0.82 |
| | 8 | 0.87 | 0.85 | 0.83 | 0.86 | 0.79 | 0.85 |
| | 12 | 0.92 | 0.89 | 0.90 | 0.91 | 0.87 | 0.88 |
| Plane | 4 | 0.84 | 0.85 | 0.83 | 0.84 | 0.74 | 0.84 |
| | 6 | 0.90 | 0.90 | 0.86 | 0.90 | 0.82 | 0.84 |
| | 8 | 0.92 | 0.92 | 0.92 | 0.92 | 0.91 | 0.92 |
| | 12 | 0.96 | 0.93 | 0.94 | 0.94 | 0.89 | 0.95 |
| House2 | 4 | 0.82 | 0.81 | 0.82 | 0.81 | 0.76 | 0.80 |
| | 6 | 0.87 | 0.86 | 0.83 | 0.83 | 0.83 | 0.84 |
| | 8 | 0.91 | 0.87 | 0.88 | 0.89 | 0.87 | 0.88 |
| | 12 | 0.94 | 0.94 | 0.91 | 0.94 | 0.89 | 0.89 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).