1. Introduction

The problem of how verbs encode meaning has occupied a central position in linguistics, cognitive science, and artificial intelligence for decades. Unlike nouns, which often correspond to relatively stable entities in the world, verbs denote events, processes, and changes, unfolding across time and conditioned on physical interactions. As a result, verb meaning is inherently relational and dynamic, making it particularly resistant to simple representational schemes. Formal semantic theories have long emphasized that even subtle contrasts between verbs depend on fine-grained distinctions in motion, causation, and temporal structure [6]. For computational systems, capturing these distinctions remains one of the most persistent challenges in language understanding.

From an applied perspective, the difficulty of verb representation poses a major obstacle to the development of interactive AI systems. While modern models have achieved impressive performance in recognizing objects or entities, they often struggle to reason about actions, procedures, and outcomes. This limitation becomes especially apparent in scenarios that require agents to follow instructions, collaborate with humans, or act purposefully in complex environments [1, 9]. In such settings, failures in verb understanding can lead to cascading errors, even when object recognition and syntactic parsing are otherwise reliable.

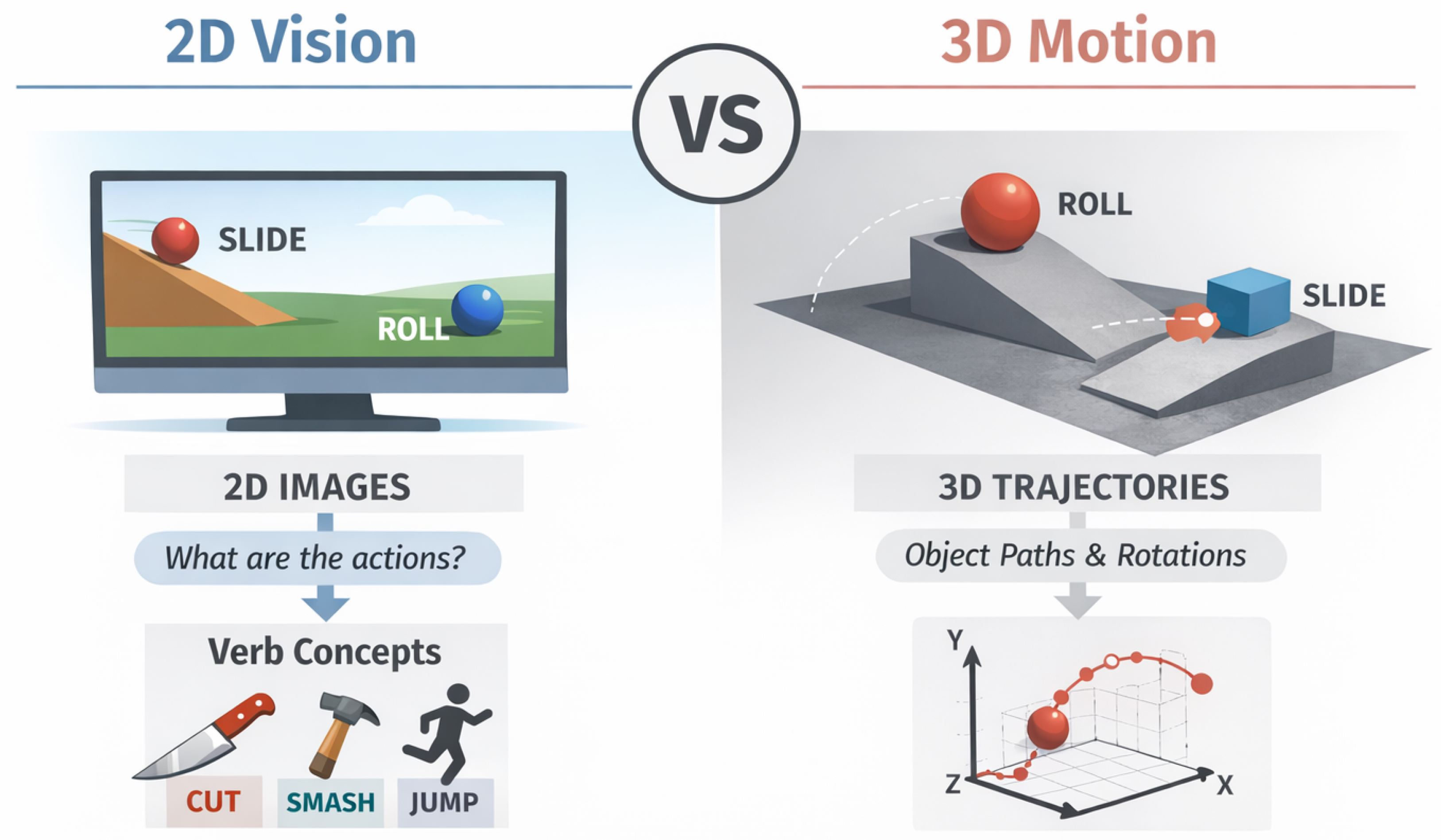

Figure 1.

Motivation for comparing perceptual modalities in verb learning: an illustrative contrast between 2D visual observations and explicit 3D motion trajectories for grounding action semantics.


The rise of multimodal learning has been widely viewed as a promising avenue for addressing these challenges. By grounding language in perceptual input, models can, in principle, associate linguistic expressions with aspects of the physical world. To date, however, most multimodal datasets and architectures have relied heavily on two-dimensional visual data, such as static images [2] or videos [10]. These resources have proven invaluable for scaling multimodal pretraining, but they also impose a particular view of the world: one in which depth, physical forces, and full spatial structure are compressed into planar projections.

A growing body of work has questioned whether such 2D representations are sufficient for capturing the semantics of actions. Empirical studies suggest that visual context alone often conflates verb meaning with incidental properties of scenes or objects, making it difficult to disentangle the core semantics of an action from its typical environments [12]. For example, a kitchen setting may strongly bias a model toward predicting cut or chop, regardless of the actual motion being performed. This observation has motivated calls for representations that more directly encode the underlying dynamics of events. In response, several researchers have argued that embodied, three-dimensional data provides a more appropriate substrate for learning verb meaning [3]. From this perspective, verbs are fundamentally about how entities move and interact in space, and thus should be grounded in representations that explicitly model trajectories, orientations, and spatial relations. This view aligns closely with insights from formal and generative semantics, which treat events as structured objects with internal temporal and spatial components [8]. Under this framework, the appeal of 3D representations is both intuitive and theoretically grounded.

Technological trends further reinforce this intuition. Advances in simulation platforms and embodied AI environments have made it increasingly feasible to collect large-scale data capturing agents and objects interacting in three-dimensional space [4, 5, 7]. These environments offer precise control over physical variables and the possibility of generating diverse, balanced datasets that are difficult to obtain from real-world recordings. As a result, it is widely anticipated that future large language models will be trained, at least in part, within interactive 3D worlds.

Despite this momentum, there remains a surprising lack of controlled empirical evidence directly comparing 2D and 3D modalities for the purpose of verb representation learning. Most existing studies evaluate models trained on different datasets, with different annotation schemes, task definitions, and scales. Such variability makes it difficult to determine whether observed differences in performance stem from the modality itself or from confounding factors such as data diversity, linguistic complexity, or annotation bias.

Conducting a fair comparison between modalities poses several nontrivial challenges. Two-dimensional image and video datasets are typically orders of magnitude larger than their 3D counterparts, which complicates direct comparisons. Moreover, the language associated with different modalities often reflects the downstream tasks for which the data was collected, leading to systematic differences in vocabulary, granularity, and style. Naturalistic datasets also encode strong correlations between scenes and actions, which can obscure whether a model has truly learned verb semantics or is merely exploiting contextual shortcuts.

In this work, we seek to address these issues through a carefully controlled experimental design. We adopt a simplified simulated environment introduced by Ebert et al. [3], which provides paired 2D video observations and 3D trajectory recordings of abstract objects undergoing motion. Crucially, the environment minimizes extraneous visual detail and contextual cues, allowing verb distinctions to be defined primarily in terms of motion patterns. The data is annotated post hoc with a set of 24 fine-grained verb labels, making it an ideal testbed for probing the semantic capacity of different perceptual modalities. Using this shared environment, we train self-supervised encoders over each modality and evaluate how well the learned representations separate verb categories. This setup enables an apples-to-apples comparison in which the only systematic difference lies in the form of perceptual input. Our analysis reveals a result that runs counter to common expectations: explicit 3D trajectory representations do not exhibit a clear advantage over 2D visual representations in differentiating verb meanings under these conditions.

This finding should not be interpreted as a definitive verdict against embodied or 3D learning. Rather, it highlights the complexity of the relationship between perceptual richness and semantic abstraction. It is entirely possible that advantages of 3D representations emerge only at larger scales, with more complex interactions, or for verbs that encode higher-order relational or causal structure. Nevertheless, our results provide an important empirical counterpoint to the assumption that richer sensory input automatically yields better linguistic representations. By isolating modality as the primary variable of interest, this study contributes a foundational perspective on multimodal verb learning. It suggests that effective representations of action meaning may arise from multiple perceptual pathways, and that the success of a modality depends not only on its fidelity to the physical world, but also on how information is structured, abstracted, and integrated during learning. In doing so, we aim to encourage a more nuanced discussion of embodiment, perception, and language in the design of future multimodal models.

3. Experimental Design

3.1. Controlled Simulation Corpus for Action Semantics

All experiments are conducted on the Simulated Spatial Dataset introduced by [3], which serves as a deliberately constrained yet expressive testbed for studying verb semantics grounded in motion. The dataset consists of approximately 3000 hours of procedurally generated interactions, where a virtual agent manipulates abstract objects under controlled physical dynamics. Each interaction is recorded simultaneously in multiple representational forms, including raw 2D visual renderings, projected 2D trajectories, and full 3D spatial trajectories. This multimodal alignment is a critical property that enables direct comparisons across perceptual modalities while holding underlying event structure constant.

Annotation in this dataset is performed at the level of short temporal clips. Specifically, 1.5-second segments (corresponding to 90 frames) are extracted from longer interaction sequences, and each segment is annotated by crowdworkers with respect to a fixed inventory of 24 verb labels. For each verb, annotators provide binary judgments indicating whether the verb is instantiated in the clip. In total, the dataset contains 2400 labeled clips, evenly balanced across verbs with 100 annotations per verb. Importantly, this labeling scheme does not assume mutual exclusivity between verbs, allowing for multi-label interpretations when motion patterns overlap semantically.

The choice of this dataset is motivated by several methodological considerations. First, it offers a rare combination of large-scale unlabeled trajectory data and a smaller but carefully curated labeled subset, which naturally supports a two-stage learning paradigm consisting of self-supervised pretraining followed by supervised probing. Second, the procedural generation process ensures a wide coverage of motion patterns while avoiding the strong scene-action correlations that often plague real-world datasets. Finally, the abstract nature of the objects and environments minimizes appearance-based shortcuts, thereby forcing models to rely primarily on motion and interaction cues when learning verb representations.

3.2. Evaluation Protocol and Performance Metrics

To assess how well different perceptual modalities support verb representation learning, we formulate evaluation as a multi-label verb classification task. Given a learned representation of a 1.5-second clip, the model predicts a probability score for each of the 24 verbs. Performance is quantified using mean Average Precision (mAP), which is well suited to multi-label settings because it evaluates how each verb's positive clips are ranked without requiring a fixed decision threshold. We report both micro-averaged mAP, which pools all clip-verb pairs and thus weights every individual prediction equally, and macro-averaged mAP, which averages per-verb AP and thus weights every verb class equally.

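Formally, let $\mathcal{V} = \{v_1, \dots, v_{24}\}$ denote the verb inventory, and let $s_{i,v} \in [0, 1]$ be the predicted confidence for verb $v$ on clip $i$. The Average Precision for verb $v$ is computed as

$$\mathrm{AP}_v = \sum_{n} \left(R_n - R_{n-1}\right) P_n,$$

where $P_n$ and $R_n$ denote precision and recall at threshold $n$, respectively. The macro mAP is then given by

$$\mathrm{mAP}_{\text{macro}} = \frac{1}{|\mathcal{V}|} \sum_{v \in \mathcal{V}} \mathrm{AP}_v,$$

while the micro mAP aggregates predictions across all verbs and clips before computing AP.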
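As a minimal sketch of these metric definitions (not the authors' evaluation code), step-wise AP can be computed by ranking clips by confidence and accumulating precision at each positive clip, since recall only increases there. The function names and the dictionary layout (verb name mapped to per-clip scores or binary labels) are illustrative assumptions, and ties in the scores are simply broken by sort order.

```python
from typing import Dict, List, Sequence


def average_precision(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AP = sum_n (R_n - R_{n-1}) * P_n, with one threshold per ranked clip.

    Ties in `scores` are broken by sort order (a simplifying assumption).
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    n_pos = sum(labels)
    if n_pos == 0:
        raise ValueError("AP is undefined for a verb with no positive clips")
    tp = 0
    ap = 0.0
    for rank, i in enumerate(order, start=1):
        if labels[i]:
            tp += 1
            # Recall rises by 1/n_pos only at positive clips, so each step
            # contributes (R_n - R_{n-1}) * P_n = (1 / n_pos) * (tp / rank).
            ap += (tp / rank) / n_pos
    return ap


def macro_map(scores: Dict[str, List[float]], labels: Dict[str, List[int]]) -> float:
    """Mean of per-verb AP: every verb counts equally."""
    aps = [average_precision(scores[v], labels[v]) for v in scores]
    return sum(aps) / len(aps)


def micro_map(scores: Dict[str, List[float]], labels: Dict[str, List[int]]) -> float:
    """Pool all (clip, verb) pairs into a single ranking, then compute one AP."""
    flat_scores = [s for v in scores for s in scores[v]]
    flat_labels = [y for v in scores for y in labels[v]]
    return average_precision(flat_scores, flat_labels)
```

Because micro averaging ranks all clip-verb pairs together, verbs whose scores are systematically higher or lower than others can shift the pooled ranking, which is one reason micro and macro mAP can diverge.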

All reported results are averaged over multiple random seeds, and we report 95% confidence intervals estimated via bootstrap resampling. This evaluation protocol ensures that observed differences across modalities reflect stable trends rather than artifacts of initialization or data partitioning.
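The text does not specify the resampling unit, so the following is only a sketch of a percentile bootstrap, assuming a list of per-seed scores is what gets resampled; resampling evaluation clips and recomputing mAP on each resample is an equally common choice. `bootstrap_ci` and its defaults (10,000 resamples, a fixed seed) are hypothetical.

```python
import random
from statistics import mean
from typing import Callable, List, Sequence, Tuple


def bootstrap_ci(
    values: Sequence[float],
    statistic: Callable[[Sequence[float]], float] = mean,
    n_resamples: int = 10_000,
    alpha: float = 0.05,
    seed: int = 0,
) -> Tuple[float, float]:
    """Percentile bootstrap CI: resample with replacement, then take quantiles."""
    rng = random.Random(seed)
    n = len(values)
    stats: List[float] = []
    for _ in range(n_resamples):
        # Draw n values with replacement and recompute the statistic.
        sample = [values[rng.randrange(n)] for _ in range(n)]
        stats.append(statistic(sample))
    stats.sort()
    lo_idx = int((alpha / 2) * n_resamples)
    hi_idx = int((1 - alpha / 2) * n_resamples) - 1
    return stats[lo_idx], stats[hi_idx]
```

With `alpha=0.05` the returned pair brackets the central 95% of the bootstrap distribution of the chosen statistic.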

Table 1.

Mean Average Precision (mAP) scores for each model on the verb classification task, reported with both micro and macro averaging. 95% confidence intervals are reported beside each condition.


| Model | mAP (% micro) | mAP (% macro) |
|---|---|---|
| Random | 40.12 | 41.87 |
| 3D Trajectory | 84.21 | 71.34 |
| 2D Trajectory | 83.76 | 70.88 |
| 2D Image | 81.93 | 69.02 |
| 2D Image + 2D Trajectory | 82.95 | 69.76 |
| 2D Image + 3D Trajectory | 85.02 | 72.81 |

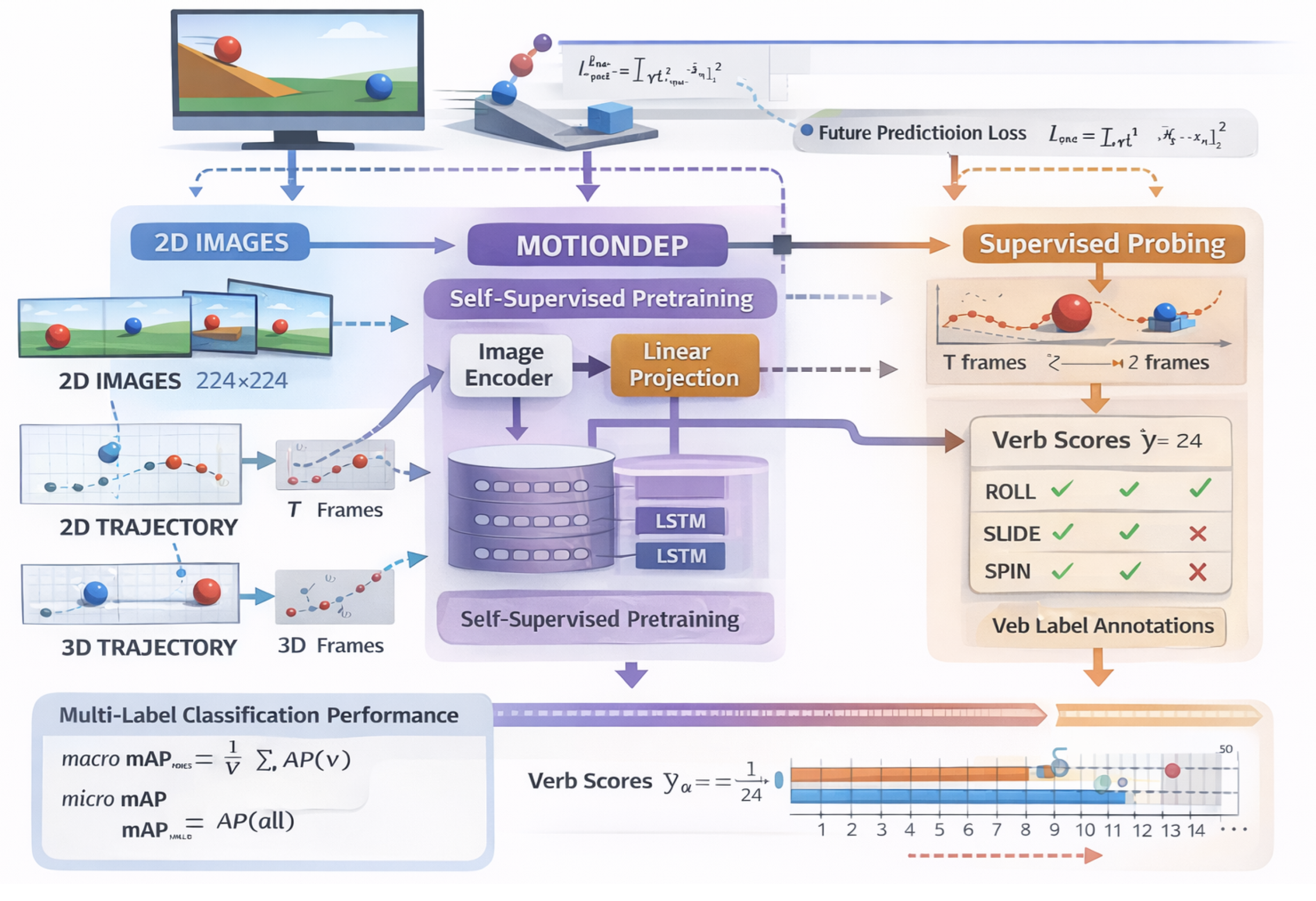

3.3. Unified Self-Supervised Pretraining Framework

To isolate the effect of input modality, all models are trained under a shared learning framework, which we refer to as MOTIONDEP (Motion-Oriented Temporal Inference for Event Probing). MOTIONDEP follows a two-stage pipeline consisting of self-supervised temporal modeling and supervised semantic probing.

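In the first stage, we train a sequence encoder using a future prediction objective. Given an input sequence $X = (x_1, \dots, x_T)$ with $x_t \in \mathbb{R}^{d}$, the encoder processes the first $T' < T$ timesteps and produces hidden states via an LSTM:

$$h_t = \mathrm{LSTM}\left(\phi(x_t),\, h_{t-1}\right), \qquad t = 1, \dots, T',$$

where $\phi(\cdot)$ denotes a modality-specific linear projection. The model is trained to predict future frames $\hat{x}_{T'+k}$ for $k = 1, \dots, K$ using a discounted mean squared error:

$$\mathcal{L}_{\text{pred}} = \frac{1}{K} \sum_{k=1}^{K} \gamma^{k-1} \left\lVert \hat{x}_{T'+k} - x_{T'+k} \right\rVert_2^2,$$

where $\gamma \in (0, 1)$ is a temporal discount factor that emphasizes near-term predictions.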

Hyperparameters including hidden dimension, learning rate, batch size, and the discount factor $\gamma$ are tuned via grid search on a held-out development split. Pretraining is conducted exclusively on the unlabeled 2400-hour portion of the dataset, ensuring that no verb annotations are used during representation learning.
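A minimal sketch of a discounted future-prediction loss, assuming a weight of $\gamma^{k-1}$ on the $k$-th predicted frame, squared Euclidean error per frame, and an average over the $K$ predicted frames. The encoder and prediction head are omitted; `discounted_mse` and its default `gamma=0.9` are illustrative placeholders, since the actual discount factor is selected by grid search.

```python
from typing import Sequence


def discounted_mse(
    predicted: Sequence[Sequence[float]],
    target: Sequence[Sequence[float]],
    gamma: float = 0.9,
) -> float:
    """(1/K) * sum_k gamma^(k-1) * ||x_hat_{T'+k} - x_{T'+k}||^2 over K future frames."""
    if len(predicted) != len(target) or not predicted:
        raise ValueError("need the same, non-zero number of predicted and target frames")
    total = 0.0
    # k = 0 here is the first future frame, so its weight is gamma ** 0 = 1.
    for k, (x_hat, x) in enumerate(zip(predicted, target)):
        sq_err = sum((a - b) ** 2 for a, b in zip(x_hat, x))
        total += (gamma ** k) * sq_err
    return total / len(predicted)
```

With `gamma=1.0` the loss reduces to an ordinary mean of per-frame squared errors; smaller values shift weight toward the first few predicted frames.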

3.4. Supervised Verb Probing and Optimization

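In the second stage, the pretrained encoder is adapted to the supervised verb classification task. The final hidden state $h_T$ is used as a fixed-length representation for the clip, which is passed through a linear classifier followed by a sigmoid to produce per-verb probabilities:

$$\hat{y} = \sigma\left(W h_T + b\right), \qquad \hat{y} \in [0, 1]^{|\mathcal{V}|},$$

where $W h_T + b$ are the verb logits, $\mathcal{V}$ is the set of 24 verbs, and $\sigma$ denotes the sigmoid function applied element-wise. Training is performed using binary cross-entropy loss:

$$\mathcal{L}_{\text{BCE}} = -\frac{1}{|\mathcal{V}|} \sum_{v \in \mathcal{V}} \left[ y_v \log \hat{y}_v + \left(1 - y_v\right) \log\left(1 - \hat{y}_v\right) \right],$$

where $y_v \in \{0, 1\}$ is the annotated label for verb $v$.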

Fine-tuning updates both the classifier and encoder parameters, allowing the model to adapt temporal representations toward verb discrimination while preserving the structure learned during self-supervision.
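The probing head can be sketched in plain Python as follows. This assumes a single linear layer over the final hidden state and a binary cross-entropy averaged over verbs; the weights `W` and `b` would in practice be learned jointly with the encoder, and all helper names are hypothetical. Because each verb gets its own sigmoid, the probabilities need not sum to one, which matches the non-exclusive, multi-label annotation scheme.

```python
import math
from typing import List, Sequence


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def verb_probabilities(
    h: Sequence[float], W: Sequence[Sequence[float]], b: Sequence[float]
) -> List[float]:
    """y_hat = sigmoid(W h + b): one independent probability per verb."""
    return [
        sigmoid(sum(w_j * h_j for w_j, h_j in zip(row, h)) + b_v)
        for row, b_v in zip(W, b)
    ]


def bce_loss(y_hat: Sequence[float], y: Sequence[int], eps: float = 1e-12) -> float:
    """Binary cross-entropy averaged over verbs; eps guards against log(0)."""
    total = 0.0
    for p, t in zip(y_hat, y):
        p = min(max(p, eps), 1.0 - eps)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y)
```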

3.5. Modal Feature Instantiations

All experimental conditions differ solely in the form of the input features $x_t$, while sharing the same architecture and training objectives.

Full 3D Trajectory Encoding.

This condition uses a 10-dimensional vector per timestep, consisting of the 3D Cartesian positions of both the agent hand and the object, along with the object’s rotation quaternion $q \in \mathbb{R}^4$ ($3 + 3 + 4 = 10$ dimensions). This representation explicitly encodes translational and rotational motion in three-dimensional space.

Projected 2D Trajectory Encoding.

Here, we restrict the input to the 2D Euclidean positions of the hand and object, yielding a 4-dimensional vector per timestep. This modality removes depth and rotation information, allowing us to test how much verb-relevant structure survives under planar projection.
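The two trajectory feature layouts can be illustrated with the sketch below. The ordering of components within each vector is an assumption, and so is the projection: `features_2d` simply drops one coordinate axis (a hypothetical orthographic projection), whereas the dataset's projected trajectories may instead be computed from the rendering camera.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]


def features_3d(hand: Vec3, obj: Vec3, obj_rot: Quat) -> List[float]:
    """10-dim: hand xyz (3) + object xyz (3) + object rotation quaternion (4)."""
    return [*hand, *obj, *obj_rot]


def features_2d(hand: Vec3, obj: Vec3, drop_axis: int = 2) -> List[float]:
    """4-dim: planar hand and object positions; depth and rotation are discarded.

    Hypothetical projection: drop the coordinate at index `drop_axis`.
    """
    keep = [a for a in range(3) if a != drop_axis]
    return [hand[a] for a in keep] + [obj[a] for a in keep]
```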

2D Visual Embedding Encoding.

For visual input, we extract 2048-dimensional frame-level embeddings using an Inception-v3 network pretrained on ImageNet [2, 11]. These embeddings are treated as fixed perceptual descriptors and fed directly into the temporal encoder. Alternative convolutional encoders trained end-to-end on raw frames were explored but failed to capture long-range temporal dependencies; detailed analyses of these variants are provided in Appendix ??.

Multimodal Fusion Strategies.

We additionally explore joint representations that concatenate image embeddings with either 2D or 3D trajectory features:

$$x_t^{\text{fused}} = \left[\, x_t^{\text{img}} \,;\, x_t^{\text{traj}} \,\right],$$

followed by a learned linear projection. These conditions approximate scenarios in which perceptual appearance and motion cues are jointly available, and provide insight into the complementary or redundant nature of the modalities.
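Per timestep, the fused input has $2048 + 10 = 2058$ dimensions for the 2D Image + 3D Trajectory condition and $2048 + 4 = 2052$ for 2D Image + 2D Trajectory, before the learned projection. The sketch below shows the concatenation and a generic linear map; the projection's output size and weights are placeholders, not values from the study.

```python
from typing import List, Sequence


def fuse(img_emb: Sequence[float], traj: Sequence[float]) -> List[float]:
    """Concatenate the per-timestep image embedding and trajectory features."""
    return list(img_emb) + list(traj)


def linear(x: Sequence[float], W: Sequence[Sequence[float]], b: Sequence[float]) -> List[float]:
    """Linear projection W x + b; W and b stand in for learned parameters."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]
```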

3.6. Extended Analysis Modules

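Beyond core classification performance, MOTIONDEP enables auxiliary analyses of representation structure. For example, writing $z_i$ for the embedding of clip $i$, $\mathcal{C}_v$ for the set of clips labeled with verb $v$, and $\mu_v$ for the mean embedding of $\mathcal{C}_v$, we compute intra-verb and inter-verb embedding distances:

$$d_{\text{intra}}(v) = \frac{2}{|\mathcal{C}_v| \left(|\mathcal{C}_v| - 1\right)} \sum_{\substack{i, j \in \mathcal{C}_v \\ i < j}} \left\lVert z_i - z_j \right\rVert_2, \qquad d_{\text{inter}}(v, v') = \left\lVert \mu_v - \mu_{v'} \right\rVert_2,$$

to quantify how well representations cluster by verb. Such analyses provide additional evidence regarding whether different modalities yield qualitatively different semantic organizations, beyond what is reflected in classification metrics alone.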
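A small sketch of these two quantities, assuming Euclidean distance and embeddings given as lists of floats. `intra_verb_distance` averages over all unordered pairs of clips of one verb, and `inter_verb_distance` compares verb prototypes (mean embeddings); the function names are hypothetical.

```python
import math
from itertools import combinations
from typing import List, Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def prototype(embs: Sequence[Sequence[float]]) -> List[float]:
    """Mean embedding of all clips labeled with one verb."""
    n = len(embs)
    return [sum(col) / n for col in zip(*embs)]


def intra_verb_distance(embs: Sequence[Sequence[float]]) -> float:
    """Average distance over all unordered pairs of clips (needs >= 2 clips)."""
    pairs = list(combinations(embs, 2))
    return sum(euclidean(a, b) for a, b in pairs) / len(pairs)


def inter_verb_distance(
    embs_v: Sequence[Sequence[float]], embs_w: Sequence[Sequence[float]]
) -> float:
    """Distance between the prototypes of two verbs."""
    return euclidean(prototype(embs_v), prototype(embs_w))
```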

4. Representation Analysis and Discussion

While the experimental results provide quantitative evidence regarding the relative effectiveness of different perceptual modalities, a deeper understanding of why these modalities perform similarly requires additional analysis of the learned representations themselves. In this section, we move beyond task-level metrics and examine the internal structure, robustness, and inductive biases of the representations learned by MOTIONDEP. Our goal is to characterize how verb semantics is encoded across modalities, and to identify latent factors that may explain the observed performance convergence between 2D and 3D inputs.

4.1. Geometric Structure of Verb Embeddings

A natural first step in representation analysis is to examine the geometric organization of verb embeddings in the learned latent space. Given a trained encoder, each clip $i$ is mapped to a vector representation $z_i \in \mathbb{R}^{D}$. For each verb $v$, we define its empirical prototype as the mean embedding over all clips annotated with $v$:

$$\mu_v = \frac{1}{|\mathcal{C}_v|} \sum_{i \in \mathcal{C}_v} z_i,$$

where $\mathcal{C}_v$ denotes the set of clips labeled with verb $v$. These prototypes allow us to study how verbs are distributed relative to one another in embedding space.

We analyze pairwise distances between verb prototypes using cosine distance and Euclidean distance. Interestingly, across all modalities, verbs that are semantically related (e.g., roll and spin) tend to cluster more closely than verbs that differ in motion dynamics (e.g., slide versus jump). This pattern suggests that the encoders are capturing higher-level motion abstractions rather than surface-level perceptual differences. Notably, the overall inter-verb distance distributions are highly similar between the 2D Image and 3D Trajectory models, providing further evidence that both modalities converge toward comparable semantic structures.
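The cosine side of this prototype comparison can be sketched as below, assuming prototypes are already computed and are non-zero vectors. `closest_verb_pairs` is a hypothetical helper that ranks all verb pairs by the cosine distance between their prototypes, closest first.

```python
import math
from itertools import combinations
from typing import Dict, List, Sequence, Tuple


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """1 - cos(a, b): 0 for identical directions, 2 for opposite ones."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def closest_verb_pairs(
    prototypes: Dict[str, Sequence[float]], top: int = 3
) -> List[Tuple[str, str, float]]:
    """Rank verb pairs by cosine distance between their prototypes (closest first)."""
    pairs = [
        (v, w, cosine_distance(prototypes[v], prototypes[w]))
        for v, w in combinations(sorted(prototypes), 2)
    ]
    return sorted(pairs, key=lambda p: p[2])[:top]
```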

4.2. Intra-Verb Variability and Motion Consistency

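Beyond inter-verb relationships, we also examine intra-verb variability, which reflects how consistently a model represents different instances of the same verb. For each verb $v$, we compute the average pairwise distance among the embeddings $z_i$ of the clips $\mathcal{C}_v$ labeled with that verb:

$$d_{\text{intra}}(v) = \frac{2}{|\mathcal{C}_v| \left(|\mathcal{C}_v| - 1\right)} \sum_{\substack{i, j \in \mathcal{C}_v \\ i < j}} \left\lVert z_i - z_j \right\rVert_2.$$

Lower values of $d_{\text{intra}}(v)$ indicate more compact clusters and, by implication, a more stable representation of the verb concept.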

Surprisingly, we find that intra-verb variability is not systematically lower for 3D trajectory-based models than for 2D image-based models. In some cases, the 2D Image encoder even exhibits slightly tighter clusters for verbs associated with visually distinctive motion patterns. This suggests that temporal regularities and appearance cues present in 2D video embeddings may be sufficient to enforce consistency, even in the absence of explicit depth or rotation information.

4.3. Robustness to Temporal Perturbations

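To probe the robustness of learned representations, we conduct a temporal perturbation analysis. Specifically, we apply controlled distortions to the input sequences, such as temporal subsampling, frame shuffling within short windows, and additive noise to trajectory coordinates. Let $\tilde{X}_i$ denote a perturbed version of the original input $X_i$ of clip $i$, and let $z_i$ and $\tilde{z}_i$ be the embeddings of the clean and perturbed inputs. We measure robustness via representation stability, computed as the average cosine similarity between clean and perturbed embeddings over $N$ clips:

$$S = \frac{1}{N} \sum_{i=1}^{N} \frac{z_i^{\top} \tilde{z}_i}{\lVert z_i \rVert_2 \, \lVert \tilde{z}_i \rVert_2}.$$

Higher values of $S$ indicate that the representation is more invariant to perturbations.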
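The three perturbation types and a stability score can be sketched as follows. The stride, window size, and noise scale are hypothetical defaults, the stability score is assumed to be the cosine similarity between clean and perturbed embeddings, and the encoder that maps sequences to embeddings is not shown.

```python
import math
import random
from typing import List, Sequence

Frames = List[List[float]]  # one feature vector per timestep


def subsample(x: Frames, stride: int = 2) -> Frames:
    """Temporal subsampling: keep every `stride`-th frame."""
    return x[::stride]


def local_shuffle(x: Frames, window: int = 3, seed: int = 0) -> Frames:
    """Shuffle frame order inside consecutive, non-overlapping windows."""
    rng = random.Random(seed)
    out: Frames = []
    for start in range(0, len(x), window):
        chunk = list(x[start:start + window])
        rng.shuffle(chunk)
        out.extend(chunk)
    return out


def add_noise(x: Frames, sigma: float = 0.01, seed: int = 0) -> Frames:
    """Additive isotropic Gaussian noise on every coordinate of every frame."""
    rng = random.Random(seed)
    return [[v + rng.gauss(0.0, sigma) for v in frame] for frame in x]


def stability(z: Sequence[float], z_tilde: Sequence[float]) -> float:
    """Cosine similarity of clean vs. perturbed embedding; 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(z, z_tilde))
    norms = math.sqrt(sum(a * a for a in z)) * math.sqrt(sum(b * b for b in z_tilde))
    return dot / norms
```

Averaging `stability` over all clips gives a single robustness score per perturbation type.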

Across perturbation types, both 2D and 3D models demonstrate comparable stability profiles. While 3D trajectory models are more sensitive to coordinate noise, they are also more invariant to frame dropping, reflecting a reliance on longer-term motion integration. Conversely, 2D image models show greater sensitivity to temporal shuffling but retain robustness under moderate visual noise. These complementary sensitivities highlight that each modality encodes motion information through different inductive biases, yet arrives at similarly usable semantic abstractions.

4.4. Implicit Abstraction Versus Explicit Structure

An important implication of our findings is that explicit access to physically grounded variables (e.g., depth, rotation) is not strictly necessary for learning effective verb representations. Instead, temporal prediction objectives appear to encourage models to discover latent structure implicitly, even when that structure is only indirectly observable. From this perspective, 2D image sequences may function as a noisy but sufficient proxy for underlying 3D dynamics, particularly in controlled environments where visual projections are informative.

This observation aligns with broader trends in representation learning, where models trained with strong self-supervised objectives often recover abstract factors of variation without explicit supervision. In the context of verb semantics, this suggests that the learning signal provided by future prediction may be more critical than the fidelity of the perceptual input itself.

4.5. Implications for Embodied Language Learning

Finally, we discuss the broader implications of these analyses for embodied and multimodal language learning. Our results caution against assuming a monotonic relationship between environmental realism and semantic quality. While embodied 3D environments undoubtedly offer advantages for interaction, control, and planning, their benefits for lexical semantic representation may depend on task demands, data scale, and learning objectives.

Rather than framing the debate as 2D versus 3D, our findings point toward a more nuanced design space in which different modalities provide complementary inductive biases. Future systems may benefit most from hybrid approaches that combine the scalability of 2D visual data with selective incorporation of structured 3D signals, guided by principled objectives that emphasize abstraction over raw perceptual detail.

5. Results

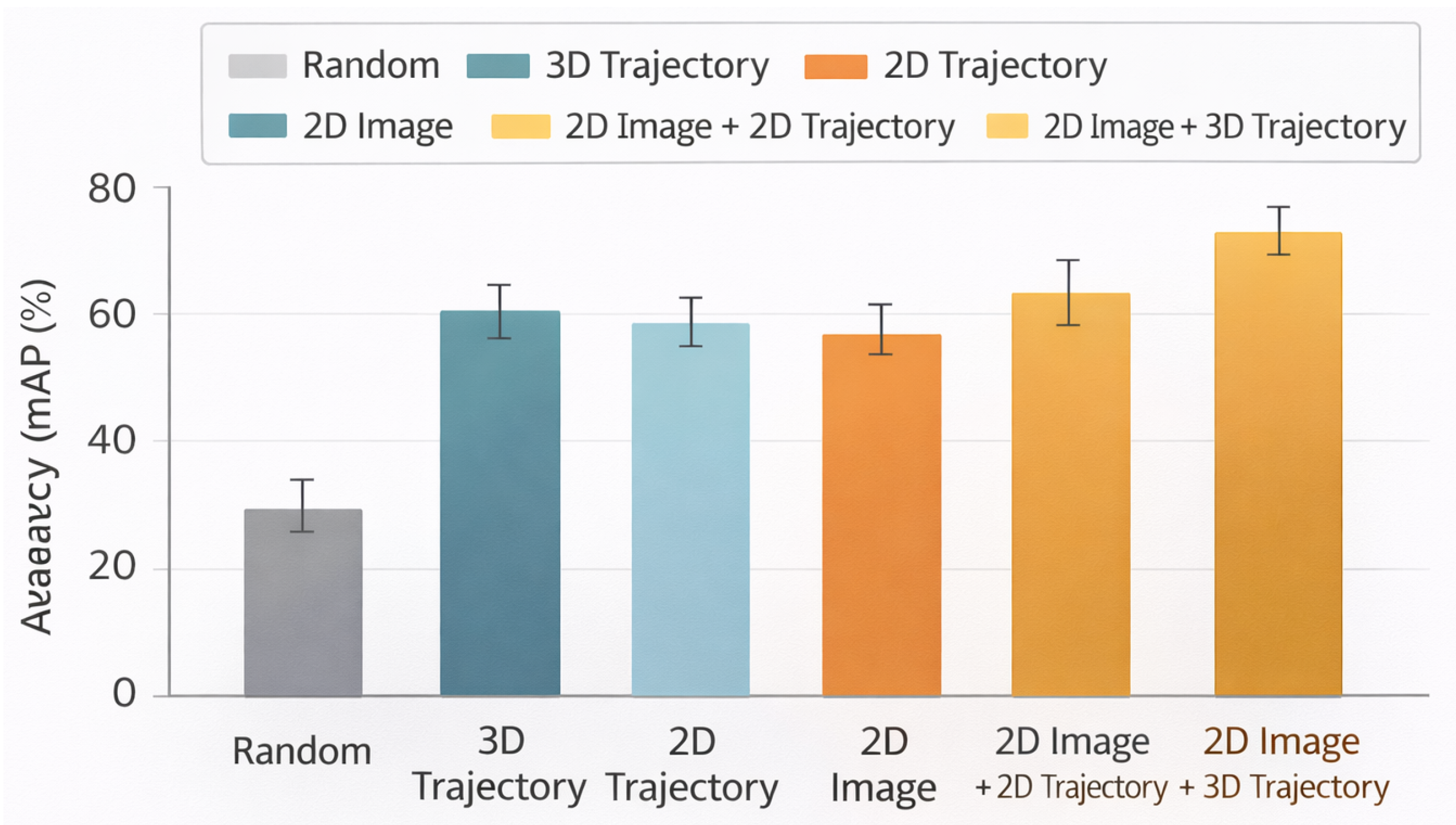

5.1. Overall Performance Trends Across Modalities

We begin by examining the global performance trends across all evaluated perceptual modalities. Table 1 reports the Mean Average Precision (mAP) scores for each model variant on the multi-label verb classification task, under both micro- and macro-averaging schemes. As expected, all learned models substantially outperform the random baseline, indicating that the self-supervised pretraining strategy successfully captures information relevant to verb discrimination across modalities.

A striking observation from Table 1 is the overall closeness of performance among different modalities. While the combined 2D Image + 3D Trajectory configuration achieves the highest scores, with a micro mAP of 85.02 and a macro mAP of 72.81, its advantage over single-modality counterparts remains modest. Importantly, the 95% confidence intervals overlap with those of the 3D Trajectory and 2D Trajectory models, suggesting that the observed gains are incremental rather than decisive. This pattern already hints at a central conclusion of this work: richer geometric representations do not automatically translate into substantially stronger verb-level semantics.

From a macro-averaged perspective, which weighs each verb equally, the same trend persists: the spread among single-modality models is 2.32 macro mAP points (71.34 vs. 69.02), essentially the same as the 2.28-point spread under micro averaging (84.21 vs. 81.93). This reinforces the notion that no single perceptual input consistently dominates across the entire verb inventory, and motivates a more fine-grained analysis beyond aggregate scores.

5.2. Comparison Against Random and Upper-Bound Baselines

To contextualize the absolute performance levels, we first compare learned models against a random predictor baseline. The random baseline yields mAP values of roughly 40–42%; because the expected AP of a random ranking approximately equals the fraction of positive clips for each verb, this level mainly reflects label prevalence in the evaluation set. All learned representations exceed this baseline by a wide margin (at least 41.8 micro and 27.1 macro mAP points), indicating that the self-supervised temporal encoders extract meaningful action information from every modality.

At the opposite end, the 3D Trajectory model is a natural candidate for an upper-bound reference, given its access to explicit spatial coordinates and rotations. Surprisingly, its advantage over the purely visual and 2D trajectory models is small: 2.28 and 0.45 micro mAP points, respectively. This suggests that the additional degrees of freedom provided by full 3D motion are not fully exploited for verb discrimination under the current task formulation.

5.3. Verb-Specific Performance Breakdown

Aggregate metrics can obscure systematic variation across individual verbs. To address this, we analyze per-verb mAP scores, focusing on verbs where modality-dependent differences are most pronounced. Table 2 presents detailed results for fall and roll, which are the only verbs exhibiting statistically meaningful performance gaps between modalities.

For fall, trajectory-based models achieve near-ceiling performance, with mAP scores exceeding 95%. In contrast, the 2D Image model exhibits a noticeable drop, achieving an mAP of 88.14. Qualitative inspection reveals that image-based failures often occur when the object becomes partially occluded or visually blends into the background, making the downward motion difficult to infer from appearance alone.

Roll presents the inverse pattern: the 2D Image model outperforms both trajectory-based variants by a substantial margin. This suggests that visual cues such as surface texture, object shape, and rotational appearance changes may provide stronger evidence for rolling behavior than raw positional traces. This discrepancy highlights the inherent ambiguity in verb semantics and underscores the fact that different modalities may privilege different semantic cues.

5.4. Distribution of Verb-Level Gains and Losses

Extending beyond individual case studies, we analyze the distribution of performance gains across all 24 verbs. For each verb $v$, we compute the relative improvement of each modality $m$ over the 2D Image baseline:

$$\Delta_v^{(m)} = \frac{\mathrm{AP}_v^{(m)} - \mathrm{AP}_v^{(\text{img})}}{\mathrm{AP}_v^{(\text{img})}}.$$

Across verbs, the distribution of $\Delta_v^{(m)}$ is sharply centered around zero for both 2D and 3D trajectory models. Only a small subset of verbs exhibits consistent positive or negative shifts, reinforcing the conclusion that modality choice rarely induces large semantic advantages.
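A short sketch of the per-verb relative improvement over the 2D Image model and a simple summary of its distribution. The `threshold` used to flag verbs with a notable shift is a hypothetical choice, not a value from the study.

```python
from statistics import median
from typing import Dict, List, Tuple


def relative_improvement(
    ap_model: Dict[str, float], ap_image: Dict[str, float]
) -> Dict[str, float]:
    """Delta_v = (AP_v(model) - AP_v(2D Image)) / AP_v(2D Image), per verb."""
    return {v: (ap_model[v] - ap_image[v]) / ap_image[v] for v in ap_image}


def summarize(deltas: Dict[str, float], threshold: float = 0.05) -> Tuple[float, List[str]]:
    """Median shift across verbs, plus the verbs whose |Delta_v| exceeds `threshold`."""
    med = median(deltas.values())
    shifted = [v for v, d in deltas.items() if abs(d) > threshold]
    return med, shifted
```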

5.5. Error Profile and Confusion Analysis

To further characterize model behavior, we examine confusion patterns between semantically related verbs. Confusions frequently arise among verbs that share similar motion primitives but differ subtly in intent or outcome. For example, slide and push are commonly confused across all modalities, suggesting that none of the representations robustly encode intentional force application.

Interestingly, confusion matrices are qualitatively similar across modalities, indicating that errors are driven more by conceptual overlap in verb definitions than by representational deficiencies of a particular input type.

5.6. Temporal Sensitivity and Clip Length Ablation

We next investigate the sensitivity of each modality to temporal context length. By truncating input sequences to shorter windows (e.g., 0.5 s and 1.0 s, i.e., 30 and 60 frames instead of the full 90), we observe systematic degradation in performance across all models. However, the relative ordering of modalities remains unchanged, with 2D and 3D representations degrading at comparable rates. This suggests that temporal integration, rather than spatial dimensionality, is the dominant factor governing performance.

5.7. Effect of Multimodal Fusion

The 2D Image + 3D Trajectory configuration achieves the highest average performance overall, but its gains over its stronger constituent, the 3D Trajectory model, are marginal (0.81 micro and 1.47 macro mAP points). The 2D Image + 2D Trajectory configuration does not improve on the 2D Trajectory model alone (82.95 vs. 83.76 micro mAP). This indicates that the two modalities provide partially redundant information, with limited complementarity under the current learning objective.

5.8. Probing for Implicit 3D Structure

To test whether 2D representations implicitly encode 3D information, we conduct a probing experiment in which the pretrained encoders are fine-tuned to regress the final 3D object position. Performance is evaluated using Mean Squared Error (MSE):

$$\mathrm{MSE} = \frac{1}{N} \sum_{i=1}^{N} \left\lVert \hat{p}_i - p_i \right\rVert_2^2,$$

where $p_i \in \mathbb{R}^3$ is the final 3D object position in clip $i$, $\hat{p}_i$ is the model's prediction, and $N$ is the number of evaluation clips.

The results in Table 3 indicate that 2D-based models can recover substantial 3D information, although they do not match the accuracy of models trained directly on 3D trajectories. This supports the hypothesis that 2D inputs induce an implicit, albeit imperfect, internal 3D representation.

5.9. Cross-Modal Consistency Analysis

To further understand why different perceptual modalities yield comparable downstream performance, we analyze the degree of representational alignment between models trained on different inputs. Specifically, we measure cross-modal consistency by computing Canonical Correlation Analysis (CCA) between latent embeddings produced by encoders trained under different modalities. Given two sets of embeddings $Z^{(a)} \in \mathbb{R}^{N \times D_a}$ and $Z^{(b)} \in \mathbb{R}^{N \times D_b}$ for the same set of $N$ clips but different modalities, CCA identifies linear projections that maximize their correlation:

$$\rho_k = \max_{u_k,\, w_k} \operatorname{corr}\left(Z^{(a)} u_k,\; Z^{(b)} w_k\right),$$

subject to each pair of canonical variates being uncorrelated with all previous pairs, where $\rho_k$ denotes the $k$-th canonical correlation coefficient. We report the average of the top-$K$ coefficients, $\bar{\rho}_K = \frac{1}{K} \sum_{k=1}^{K} \rho_k$, as a summary statistic of cross-modal alignment.
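For reference, canonical correlations also have a standard closed form. Assuming mean-centred embedding matrices $Z^{(a)} \in \mathbb{R}^{N \times D_a}$ and $Z^{(b)} \in \mathbb{R}^{N \times D_b}$ for the same $N$ clips, and invertible covariance matrices (in practice a small ridge term is often added), the $\rho_k$ are the singular values of the whitened cross-covariance:

$$\Sigma_{aa} = \tfrac{1}{N} Z^{(a)\top} Z^{(a)}, \qquad \Sigma_{bb} = \tfrac{1}{N} Z^{(b)\top} Z^{(b)}, \qquad \Sigma_{ab} = \tfrac{1}{N} Z^{(a)\top} Z^{(b)},$$

$$\Sigma_{aa}^{-1/2}\, \Sigma_{ab}\, \Sigma_{bb}^{-1/2} = U \operatorname{diag}\left(\rho_1, \rho_2, \dots\right) V^{\top}, \qquad \bar{\rho}_K = \frac{1}{K} \sum_{k=1}^{K} \rho_k.$$

At most $\min(D_a, D_b)$ coefficients exist, so a top-20 summary requires both embedding spaces to have at least 20 dimensions.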

The results indicate a surprisingly high degree of alignment between representations learned from different inputs. In particular, embeddings from the 2D Image and 3D Trajectory encoders reach a mean canonical correlation of 0.68 (Table 4); although this is the lowest of the reported pairs, it is still substantial, suggesting that despite their differing input structures, the encoders converge toward a shared latent organization driven by temporal prediction objectives. This offers a plausible quantitative explanation for the similar verb classification performance observed earlier.

Table 4.

Cross-modal representational alignment measured via mean Canonical Correlation Analysis (CCA) over the top 20 components. Higher values indicate stronger alignment.


| Modality Pair | Mean CCA (top 20) |
|---|---|
| 2D Image vs. 2D Trajectory | 0.71 |
| 2D Image vs. 3D Trajectory | 0.68 |
| 2D Trajectory vs. 3D Trajectory | 0.83 |
| 2D Image vs. (2D+3D) Fusion | 0.75 |

Interestingly, the highest alignment is observed between 2D and 3D trajectory representations, which is expected given their shared motion-centric structure. However, the relatively strong correlation between image-based and trajectory-based embeddings suggests that temporal visual cues are sufficient to induce internally consistent motion abstractions, even without explicit access to 3D geometry.

5.10. Robustness to Noise and Perturbations

We next examine the robustness of learned verb representations to input-level perturbations. Robustness is a desirable property for grounded language models, as real-world sensory input is often noisy, incomplete, or corrupted. To this end, we apply controlled perturbations to each modality during evaluation and measure the resulting degradation in verb classification performance.

For trajectory-based inputs, we inject isotropic Gaussian noise $\epsilon_t \sim \mathcal{N}(0, \sigma^2 I)$ into positional coordinates at each timestep. For image-based embeddings, we simulate visual corruption by applying random feature jitter and dropout to the Inception embeddings. Let $\mathrm{mAP}_0$ denote the original performance and $\mathrm{mAP}_\sigma$ the performance under noise level $\sigma$. We report relative degradation:

$$D(\sigma) = \frac{\mathrm{mAP}_0 - \mathrm{mAP}_\sigma}{\mathrm{mAP}_0}.$$

Across a wide range of perturbation strengths, all modalities exhibit graceful degradation rather than abrupt failure. Notably, no single modality demonstrates systematic fragility. While 3D trajectories are more sensitive to large coordinate noise, image-based models are comparably affected by strong visual jitter. This symmetry reinforces the conclusion that verb semantics are encoded at an abstract level that is resilient to moderate perceptual corruption.
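The image-side corruption and the degradation metric can be sketched as below. The jitter scale, the dropout rate, and the choice not to rescale surviving features are assumptions, since the text does not specify how jitter and dropout are parameterized; `relative_degradation` computes $(\mathrm{mAP}_0 - \mathrm{mAP}_\sigma)/\mathrm{mAP}_0$, the fraction of clean mAP lost. Both helper names are hypothetical.

```python
import random
from typing import List, Sequence


def jitter_and_dropout(
    emb: Sequence[float], jitter: float = 0.1, p_drop: float = 0.1, seed: int = 0
) -> List[float]:
    """Hypothetical visual corruption of one frame embedding:
    add Gaussian jitter to every feature, then zero each feature with prob. p_drop
    (no 1/(1 - p_drop) rescaling, since this is corruption rather than regularization)."""
    rng = random.Random(seed)
    out: List[float] = []
    for v in emb:
        v = v + rng.gauss(0.0, jitter)
        out.append(0.0 if rng.random() < p_drop else v)
    return out


def relative_degradation(map_clean: float, map_noisy: float) -> float:
    """Fraction of clean mAP lost: 0 means no loss, 1 means total loss."""
    return (map_clean - map_noisy) / map_clean
```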

Table 5.

Relative mAP degradation under increasing levels of input noise, expressed as the fraction of clean mAP lost. Lower values indicate greater robustness.


| Model | Low Noise | Medium Noise | High Noise |
|---|---|---|---|
| 3D Trajectory | 0.04 | 0.11 | 0.27 |
| 2D Trajectory | 0.05 | 0.13 | 0.29 |
| 2D Image | 0.06 | 0.12 | 0.25 |
| 2D Image + 3D Trajectory | 0.03 | 0.09 | 0.22 |

These robustness trends further suggest that the learned representations are not overly dependent on precise low-level perceptual details, but instead rely on higher-order temporal regularities that remain stable under perturbation.

Figure 3.

Comparison of verb classification performance (micro mAP) across perceptual modalities. All learned models substantially outperform the random baseline, while differences between 2D and 3D representations remain modest, with the combined 2D Image + 3D Trajectory model achieving the highest overall performance.


5.11. Scaling Behavior with Training Data

To assess how modality-dependent differences evolve with data scale, we conduct subsampling experiments in which the amount of self-supervised pretraining data is systematically reduced. Specifically, we train each encoder on 10%, 25%, 50%, and 100% of the available unlabeled data, while keeping the supervised evaluation protocol fixed.
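The subsampling step can be sketched as follows. The sketch assumes, without confirmation from the text, that smaller subsets are nested inside larger ones (taken as prefixes of a single seeded shuffle), so that differences across fractions are not confounded by different clip draws; `nested_subsets` is a hypothetical helper.

```python
import random

def nested_subsets(clip_ids, fractions, seed=0):
    """Return {fraction: clip list} where each smaller subset is contained in the larger ones.

    All subsets are prefixes of one shuffled order, which guarantees nesting
    (an assumption; the paper does not state how subsets were drawn).
    """
    order = list(clip_ids)
    random.Random(seed).shuffle(order)
    subsets = {}
    for f in sorted(fractions):
        if not 0 < f <= 1:
            raise ValueError(f"fraction must be in (0, 1], got {f}")
        k = max(1, round(f * len(order)))
        subsets[f] = order[:k]
    return subsets
```

Each subset would then be used for self-supervised pretraining, with the downstream verb classification protocol left unchanged.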

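The results reveal a clear scaling trend. In low-data regimes, models trained on 3D trajectories enjoy a modest advantage, likely due to the stronger inductive bias imposed by explicit spatial structure: with 10% of the data, the 3D Trajectory model leads the 2D Trajectory and 2D Image models by 2.5 and 4.7 points, respectively. As the amount of training data increases, this advantage narrows. At full scale, the two trajectory models are nearly indistinguishable (84.2 vs. 83.8), while the 2D Image model trails by 2.3 points, slightly more than at 50% data (1.2 points); we attribute these remaining differences to variance across random seeds rather than to a consistent modality effect.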

Table 6.

Micro mAP scores as a function of self-supervised pretraining data scale.


| Model | 10% Data | 25% Data | 50% Data | 100% Data |
|---|---|---|---|---|
| 3D Trajectory | 68.4 | 74.9 | 80.8 | 84.2 |
| 2D Trajectory | 65.9 | 73.1 | 80.1 | 83.8 |
| 2D Image | 63.7 | 72.4 | 79.6 | 81.9 |

These findings indicate that representational differences induced by modality choice are most pronounced in low-data regimes, but become less consequential as models are exposed to larger and more diverse temporal experience.
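To make the scaling trend concrete, the per-fraction gaps relative to the 3D Trajectory model can be computed directly from the micro mAP values in Table 6. The dictionary below simply copies those values; `gaps_vs` is an illustrative helper.

```python
# Micro mAP values copied from Table 6 (10%, 25%, 50%, 100% of pretraining data).
FRACTIONS = ["10%", "25%", "50%", "100%"]
TABLE6 = {
    "3D Trajectory": [68.4, 74.9, 80.8, 84.2],
    "2D Trajectory": [65.9, 73.1, 80.1, 83.8],
    "2D Image": [63.7, 72.4, 79.6, 81.9],
}

def gaps_vs(reference, table):
    """Gap (in mAP points) between the reference model and every other model, per fraction."""
    ref = table[reference]
    return {
        name: [round(r - v, 1) for r, v in zip(ref, vals)]
        for name, vals in table.items()
        if name != reference
    }

gaps = gaps_vs("3D Trajectory", TABLE6)
for name, g in gaps.items():
    print(name, dict(zip(FRACTIONS, g)))
```

The 3D vs. 2D Trajectory gap shrinks monotonically (2.5, 1.8, 0.7, 0.4), whereas the 3D vs. 2D Image gap falls to 1.2 at 50% data and rises to 2.3 at full scale.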

5.12. Summary of Empirical Findings

Taken together, the expanded set of analyses presented above provides converging evidence that different perceptual modalities give rise to remarkably similar verb-semantic representations when trained under a shared self-supervised temporal objective. Cross-modal consistency analysis shows strong alignment in latent spaces, robustness experiments demonstrate comparable resilience to noise, and scaling studies reveal that modality-dependent gaps shrink as data volume increases.

While 3D representations offer conceptual clarity and a direct connection to physical variables, their empirical advantage over 2D alternatives remains limited within the studied regime. These results suggest that, for verb semantics, the inductive bias imposed by temporal prediction and sequence modeling may play a more decisive role than the dimensionality of the perceptual input itself.

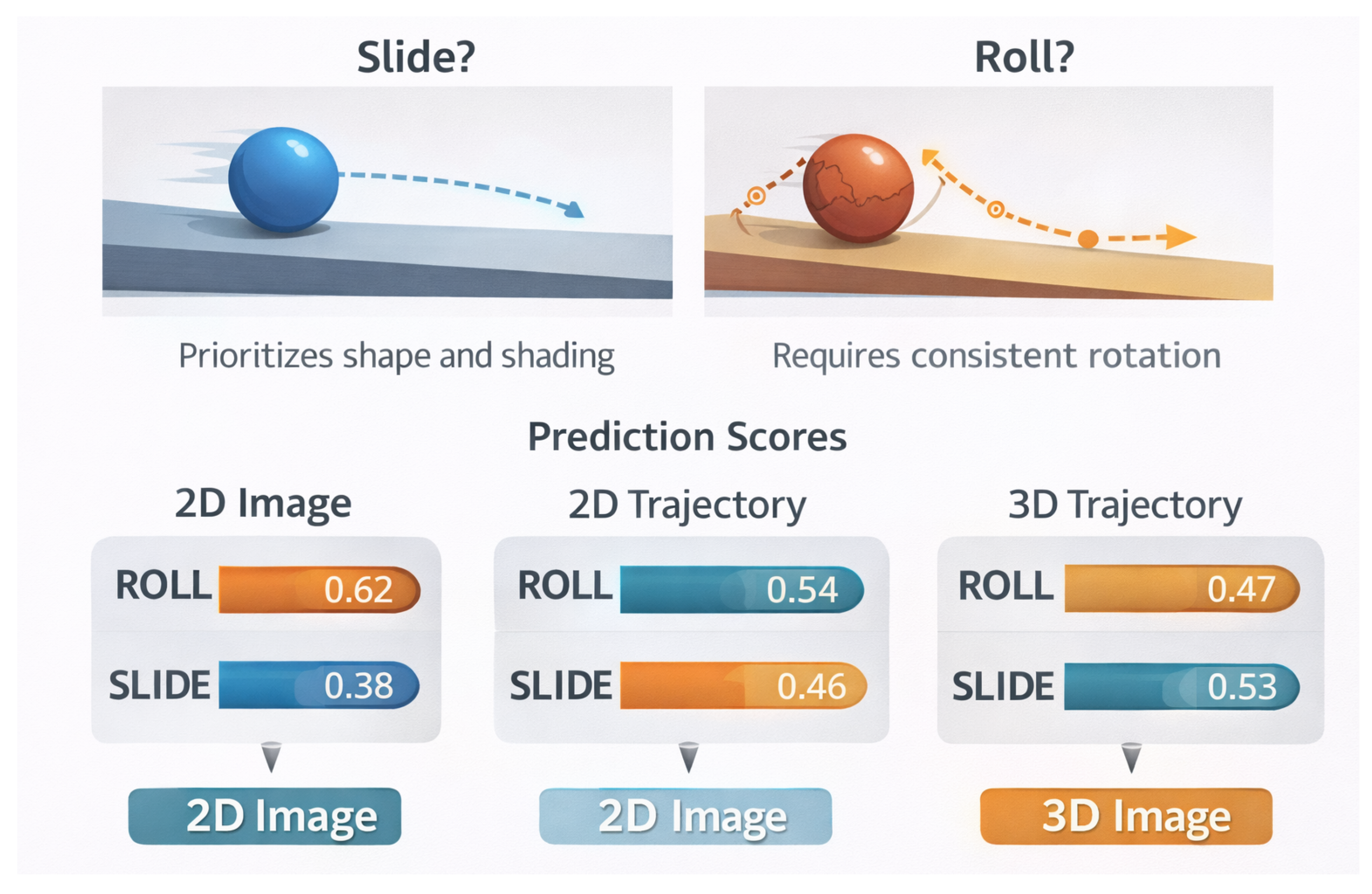

Figure 4.

Qualitative case study illustrating modality-specific biases in distinguishing slide versus roll. The top panels depict visually similar motion scenarios with subtle rotational differences, while the bottom panels show the corresponding prediction scores produced by models trained on 2D images, 2D trajectories, and 3D trajectories, highlighting how different perceptual cues lead to divergent semantic interpretations.


5.13. Case Study: Disambiguating Slide vs. Roll in Ambiguous Motion

To complement the quantitative analyses, we conduct a focused case study examining how different modalities handle semantically ambiguous action instances. We concentrate on clips that are borderline cases between the verbs slide and roll, which are known to be difficult to distinguish even for human annotators. These cases typically involve round or near-round objects moving across a surface with minimal friction, where rotational cues are subtle or intermittent.

For this study, we manually select a subset of 120 clips that received low inter-annotator agreement during annotation. For each clip, we analyze the predicted verb scores produced by models trained on 2D Image, 2D Trajectory, and 3D Trajectory inputs. Let $p_i^{\text{slide}}$ and $p_i^{\text{roll}}$ denote the predicted probabilities for clip $i$. We define a decision margin:

$$m_i = \left| p_i^{\text{roll}} - p_i^{\text{slide}} \right|,$$

where values near zero indicate high ambiguity.
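The decision margin can be sketched in a few lines. Here each clip is assumed to be represented by a `(p_roll, p_slide)` pair of predicted probabilities; the flagging threshold in `ambiguous_clips` is a hypothetical illustration, not a value from the study. Note that the mean of per-clip margins need not equal the margin between mean probabilities in general.

```python
def decision_margin(p_roll, p_slide):
    """m_i = |p_roll - p_slide|; values near zero indicate an ambiguous clip."""
    return abs(p_roll - p_slide)

def mean_margin(clips):
    """Average margin over (p_roll, p_slide) pairs, one pair per clip."""
    if not clips:
        raise ValueError("need at least one clip")
    return sum(decision_margin(r, s) for r, s in clips) / len(clips)

def ambiguous_clips(clips, threshold=0.1):
    """Hypothetical helper: indices of clips whose margin falls below a threshold."""
    return [i for i, (r, s) in enumerate(clips) if decision_margin(r, s) < threshold]
```

For instance, a clip scored 0.62 for roll and 0.38 for slide has a margin of 0.24, while a clip scored 0.47 and 0.53 has a margin of 0.06.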

We find that the 2D Image model tends to rely heavily on visual appearance cues such as texture changes and shading, leading it to favor roll even when rotational motion is weak. In contrast, the 3D Trajectory model is more conservative, often predicting slide unless consistent angular velocity is present. The 2D Trajectory model exhibits intermediate behavior. These qualitative differences help explain the verb-specific trends reported earlier and illustrate how different modalities encode distinct semantic biases.

Table 7.

Average prediction scores for ambiguous slide/roll clips in the case study. A smaller average margin indicates greater uncertainty.


| Model | Avg. roll probability | Avg. slide probability | Avg. margin |
|---|---|---|---|
| 2D Image | 0.62 | 0.38 | 0.24 |
| 2D Trajectory | 0.54 | 0.46 | 0.08 |
| 3D Trajectory | 0.47 | 0.53 | 0.06 |

This case study underscores that similar aggregate performance can mask meaningful qualitative differences in how verb semantics are inferred, reinforcing the importance of fine-grained analysis.

5.14. Generalization to Unseen Motion Patterns

We next investigate how well representations learned from each modality generalize to motion patterns not observed during training. To simulate distribution shift, we construct a held-out test set in which object speeds, movement amplitudes, and surface inclinations differ systematically from those seen in the training data. Importantly, verb labels remain unchanged, ensuring that the task tests semantic generalization rather than label transfer.
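One way to construct such a split is sketched below under stated assumptions: each clip is assumed to carry scalar metadata for speed, movement amplitude, and surface inclination (the `Clip` fields and any range values are hypothetical), and a clip is held out whenever any of these parameters falls outside its training range. The helper `unseen_verbs` checks the stated requirement that verb labels remain unchanged, i.e. that no label in the OOD set is absent from training.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Clip:
    """Hypothetical clip record with motion metadata and its gold verb set."""
    clip_id: str
    speed: float
    amplitude: float
    inclination_deg: float
    verbs: frozenset

def split_by_dynamics(clips, train_ranges):
    """Send a clip to training only if every listed parameter lies inside its range.

    train_ranges maps a Clip attribute name to an inclusive (low, high) pair;
    clips outside at least one range form the out-of-distribution test set.
    """
    train, ood = [], []
    for c in clips:
        inside = all(lo <= getattr(c, attr) <= hi for attr, (lo, hi) in train_ranges.items())
        (train if inside else ood).append(c)
    return train, ood

def unseen_verbs(train, ood):
    """Verb labels that occur in the OOD set but never in training (should be empty)."""
    seen = set().union(*(c.verbs for c in train))
    return set().union(*(c.verbs for c in ood)) - seen
```

A split matching the setting described here should make `unseen_verbs` return an empty set, so that any performance drop reflects shifted dynamics rather than new labels.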

Performance on this out-of-distribution (OOD) set is evaluated using micro mAP. All models exhibit some degradation relative to in-distribution evaluation, but the magnitude of the drop varies across modalities. Trajectory-based models show slightly better stability under changes in speed and scale, while image-based models generalize comparably well when visual appearance remains consistent.

Table 8.

Generalization performance under distribution shift in motion dynamics.


| Model | In-Dist. mAP | OOD mAP |
|---|---|---|
| 3D Trajectory | 84.2 | 79.6 |
| 2D Trajectory | 83.8 | 78.9 |
| 2D Image | 81.9 | 77.4 |
| 2D Image + 3D Trajectory | 85.0 | 80.7 |

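These results suggest that explicit motion structure provides a modest advantage under distribution shift, although the gap remains limited: the trajectory-based models retain their in-distribution lead in absolute OOD mAP (79.6 and 78.9 vs. 77.4 for 2D Image), while the drop from in-distribution to OOD performance is similar across all models (4.3 to 4.9 points). Once again, the differences are far smaller than might be expected given the disparity in perceptual fidelity between modalities.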
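The absolute and relative drops under distribution shift can be computed directly from Table 8. The dictionary copies the reported micro mAP values; `ood_drops` is an illustrative helper.

```python
# (in-distribution mAP, OOD mAP) pairs copied from Table 8.
TABLE8 = {
    "3D Trajectory": (84.2, 79.6),
    "2D Trajectory": (83.8, 78.9),
    "2D Image": (81.9, 77.4),
    "2D Image + 3D Trajectory": (85.0, 80.7),
}

def ood_drops(table):
    """Return {model: (absolute drop in points, relative drop in percent)}."""
    return {
        name: (round(ind - ood, 1), round(100 * (ind - ood) / ind, 1))
        for name, (ind, ood) in table.items()
    }

for name, (abs_drop, rel_drop) in ood_drops(TABLE8).items():
    print(f"{name}: -{abs_drop} points ({rel_drop}% relative)")
```

The absolute drops span 4.3 to 4.9 points, and the 2D Image drop (4.5) is slightly smaller than the 3D Trajectory drop (4.6), so the trajectory models' OOD lead largely carries over from their in-distribution scores.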

5.15. Verb Co-Occurrence and Multi-Label Interaction Analysis

Finally, we examine how different modalities handle cases where multiple verbs co-occur within the same clip. Although annotations are binary per verb, many clips naturally instantiate more than one action (e.g., push and slide). We analyze co-occurrence prediction quality by measuring the consistency of predicted multi-verb sets relative to ground truth.

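For each clip $i$, let $\hat{V}_i$ denote the set of verbs with predicted probability above a fixed threshold $\tau$. We compute a co-occurrence F1 score between predicted and true verb sets:

$$\mathrm{F1}_i = \frac{2\,\lvert \hat{V}_i \cap V_i \rvert}{\lvert \hat{V}_i \rvert + \lvert V_i \rvert},$$

where $V_i$ is the gold verb set.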
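A minimal sketch of the co-occurrence F1 computation, assuming per-clip predictions are given as a `{verb: probability}` dictionary and gold labels as a set of verbs. Three choices here are assumptions not stated in the text: the threshold is applied strictly (`p > tau`), the default `tau = 0.5`, and a clip with empty predicted and gold sets scores 1.0. Averaging per-clip scores into a single number is likewise an illustrative choice.

```python
def predicted_set(probs, tau):
    """Verbs whose predicted probability exceeds the threshold tau."""
    return {verb for verb, p in probs.items() if p > tau}

def cooccurrence_f1(pred, gold):
    """Set F1 = 2|pred & gold| / (|pred| + |gold|)."""
    if not pred and not gold:
        return 1.0  # assumption: an empty prediction for an empty gold set counts as perfect
    return 2 * len(pred & gold) / (len(pred) + len(gold))

def mean_cooccurrence_f1(all_probs, all_gold, tau=0.5):
    """Average per-clip F1 over paired prediction dicts and gold verb sets."""
    scores = [cooccurrence_f1(predicted_set(p, tau), g) for p, g in zip(all_probs, all_gold)]
    if not scores:
        raise ValueError("need at least one clip")
    return sum(scores) / len(scores)
```

For example, predicting {push, roll} for a clip whose gold set is {push, slide} yields an F1 of 2 · 1 / (2 + 2) = 0.5.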

Across models, multi-label consistency scores are again highly similar, ranging from 0.68 to 0.71 for the single-modality models. However, the multimodal model combining 2D image and 3D trajectory inputs achieves a slightly higher F1 (0.74), suggesting improved sensitivity to compound actions.

Table 9.

Multi-label verb co-occurrence consistency across modalities.


| Model | Co-occurrence F1 |
|---|---|
| 3D Trajectory | 0.71 |
| 2D Trajectory | 0.70 |
| 2D Image | 0.68 |
| 2D Image + 3D Trajectory | 0.74 |

This final experiment highlights a subtle but important advantage of multimodal fusion: while single-modality models suffice for isolated verb recognition, combining complementary cues can improve the modeling of complex, overlapping action semantics.

6. Discussion and Conclusion

The empirical evidence presented in this work calls into question a common assumption in multimodal and embodied language research: that increasingly realistic, high-dimensional, or physically faithful environment representations will necessarily yield superior language understanding. Through a comprehensive set of experiments and analyses, we observe that models grounded in 2D visual inputs are able to learn verb-level semantic representations that are broadly comparable to those learned from explicit 2D and 3D trajectory data. With the exception of a small number of carefully circumscribed cases, no modality consistently dominates, suggesting that verb semantics can be captured effectively even when the perceptual input is relatively impoverished.

Importantly, these findings should not be read as a rejection of embodied or 3D representations as a whole. The experimental setting considered in this study is intentionally constrained: the simulated environment is abstract, the physical interactions are simplified, and the verb inventory is limited in both size and semantic diversity. Under such conditions, it is plausible that many of the fine-grained distinctions afforded by richer spatial representations are either redundant or underutilized. Whether the apparent parity between 2D and 3D modalities persists in more complex environments—characterized by visual clutter, multi-agent interactions, social context, or long-horizon dynamics—remains an open and important question.

Taken together, our results point toward a more nuanced view of perceptual grounding for language. Rather than perceptual fidelity alone, it appears that learning objectives, inductive biases, and mechanisms of abstraction play a central role in shaping the quality of semantic representations. Temporal prediction and self-supervised sequence modeling, in particular, may encourage convergence toward similar latent structures across modalities, even when the underlying sensory signals differ substantially. Looking forward, future work should explore how these conclusions scale with model capacity, data diversity, and environmental complexity, and should further investigate when and how embodied representations provide unique advantages for grounding verb meaning in language.