Submitted: 04 December 2025

Posted: 10 December 2025

Abstract

Keywords:

1. Introduction

1.1. Motivations

1.1.1. Transformation of Large Sets of Signals

1.1.2. Filtering Based on Idea of Piecewise Function Interpolation

1.1.3. Exploiting Pseudo-Inverse Matrices in the Filter Model

1.1.4. Computational Work

1.2. Relevant Works

1.2.1. Generic Optimal Linear (GOL) Filter [5]

1.2.2. Simplicial Canonical Piecewise Linear Filter [23]

1.2.3. Adaptive Piecewise Linear Filter [22]

1.2.4. Averaging Polynomial Filter [10,12]

1.2.5. Other Relevant Filters

1.3. Difficulties Associated with the Known Filtering Techniques

1.4. Differences from the Known Filtering Techniques

1.5. Contribution

2. Some Preliminaries

2.1. Notation

2.2. Brief Description of the Problem

2.3. Brief Description of the Method

3. Description of the Problem

3.1. Piecewise Linear Filter Model

3.2. Assumptions

3.3. The Problem

3.4. Interpolation Conditions

4. Main Results

4.1. General Device

4.2. Determination of Piecewise Linear Interpolation Filter

4.3. Numerical Realization of Filter and Associated Algorithm

4.3.1. Numerical Realization

4.3.2. Algorithm

4.4. Error Analysis

4.5. Some Remarks Related to the Assumptions of the Method

5. Simulations

5.1. General Consideration

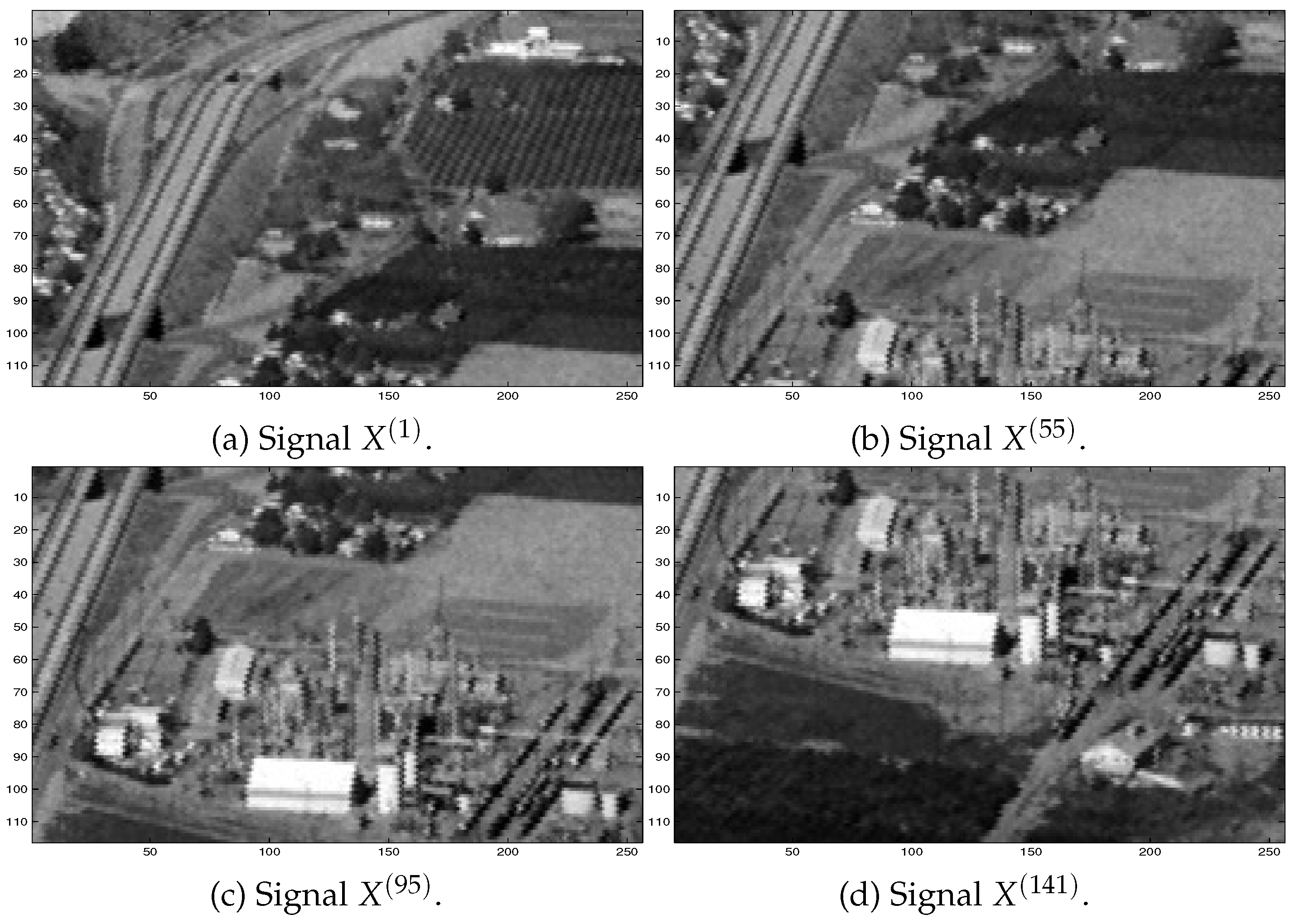

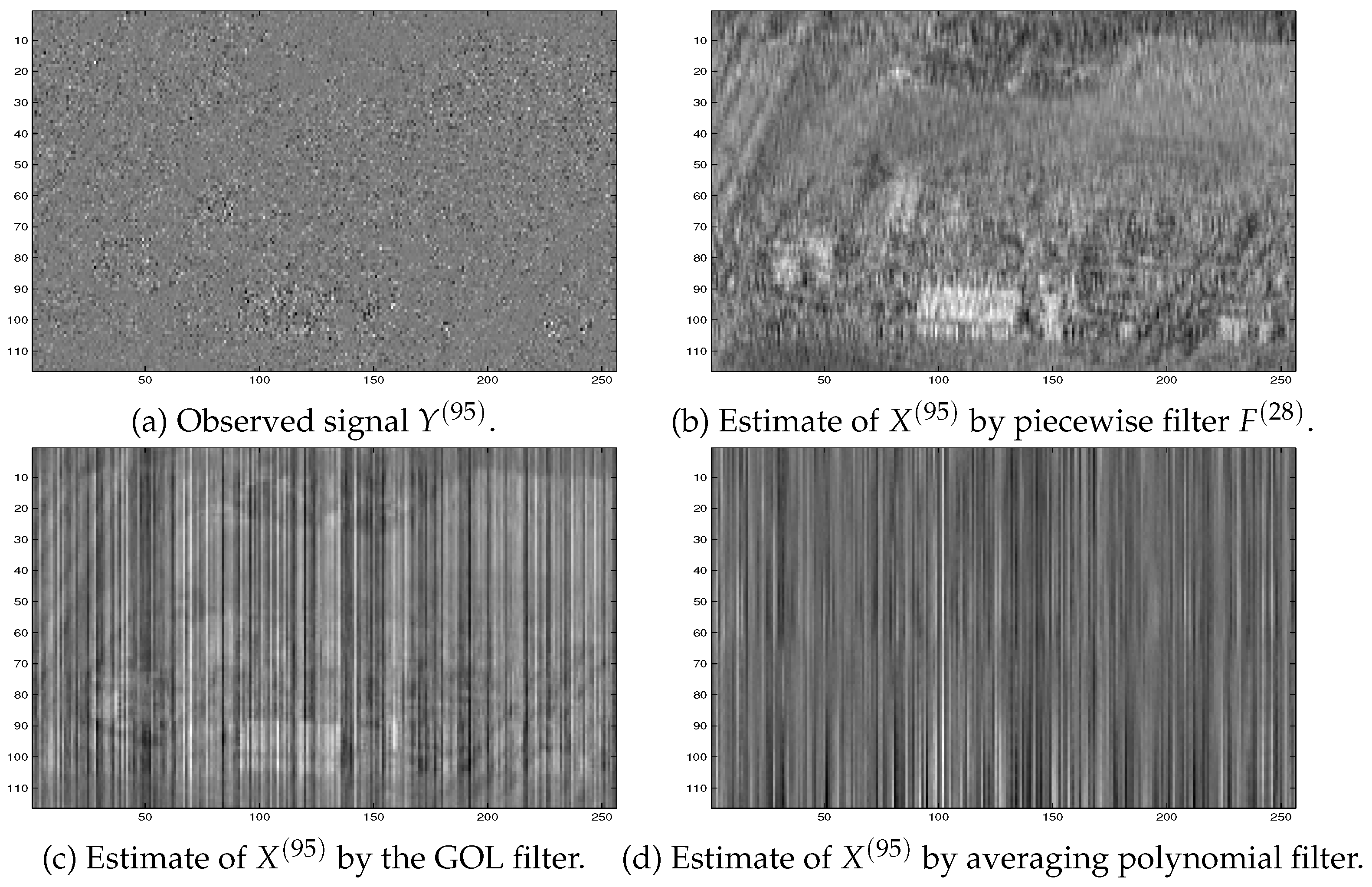

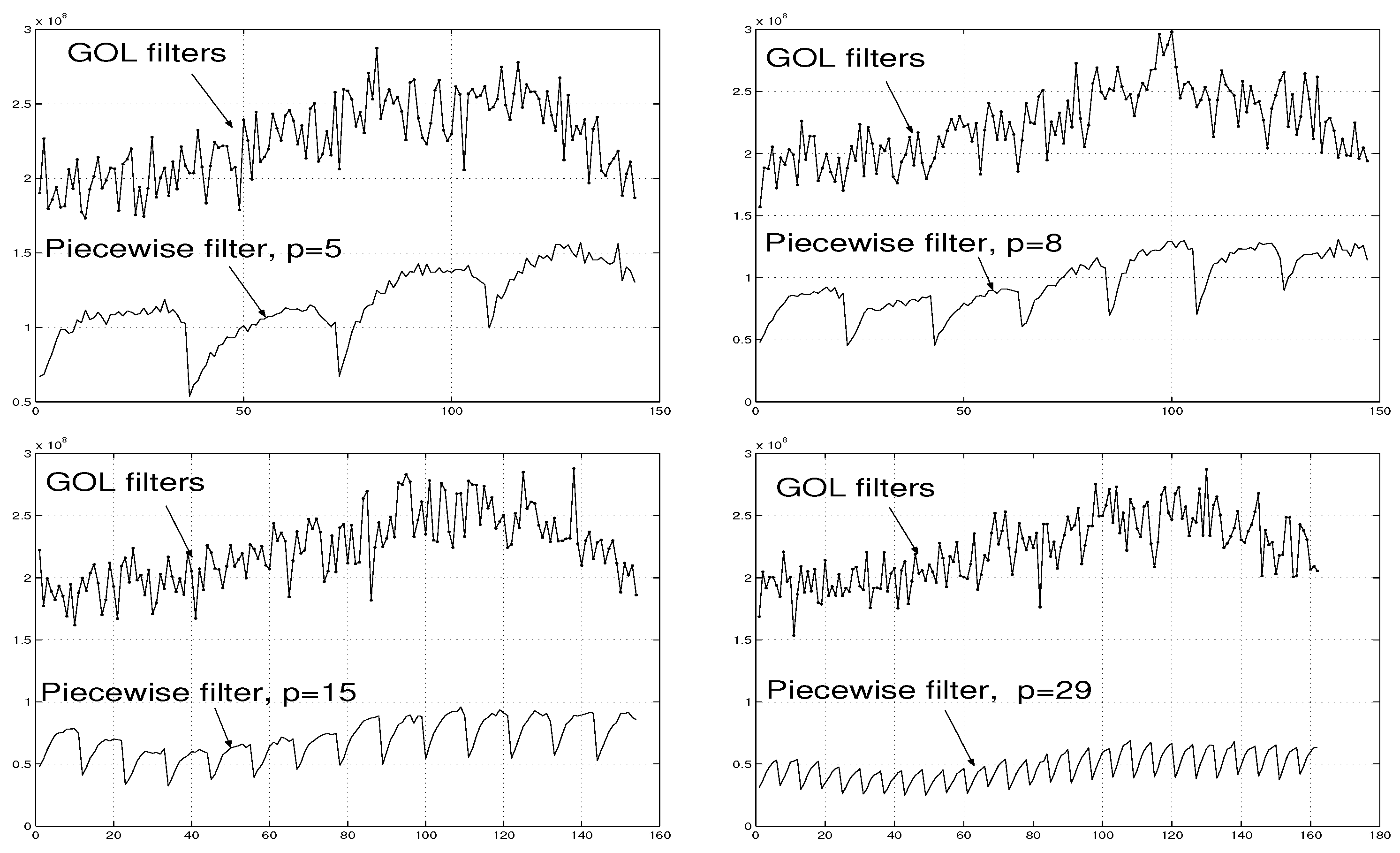

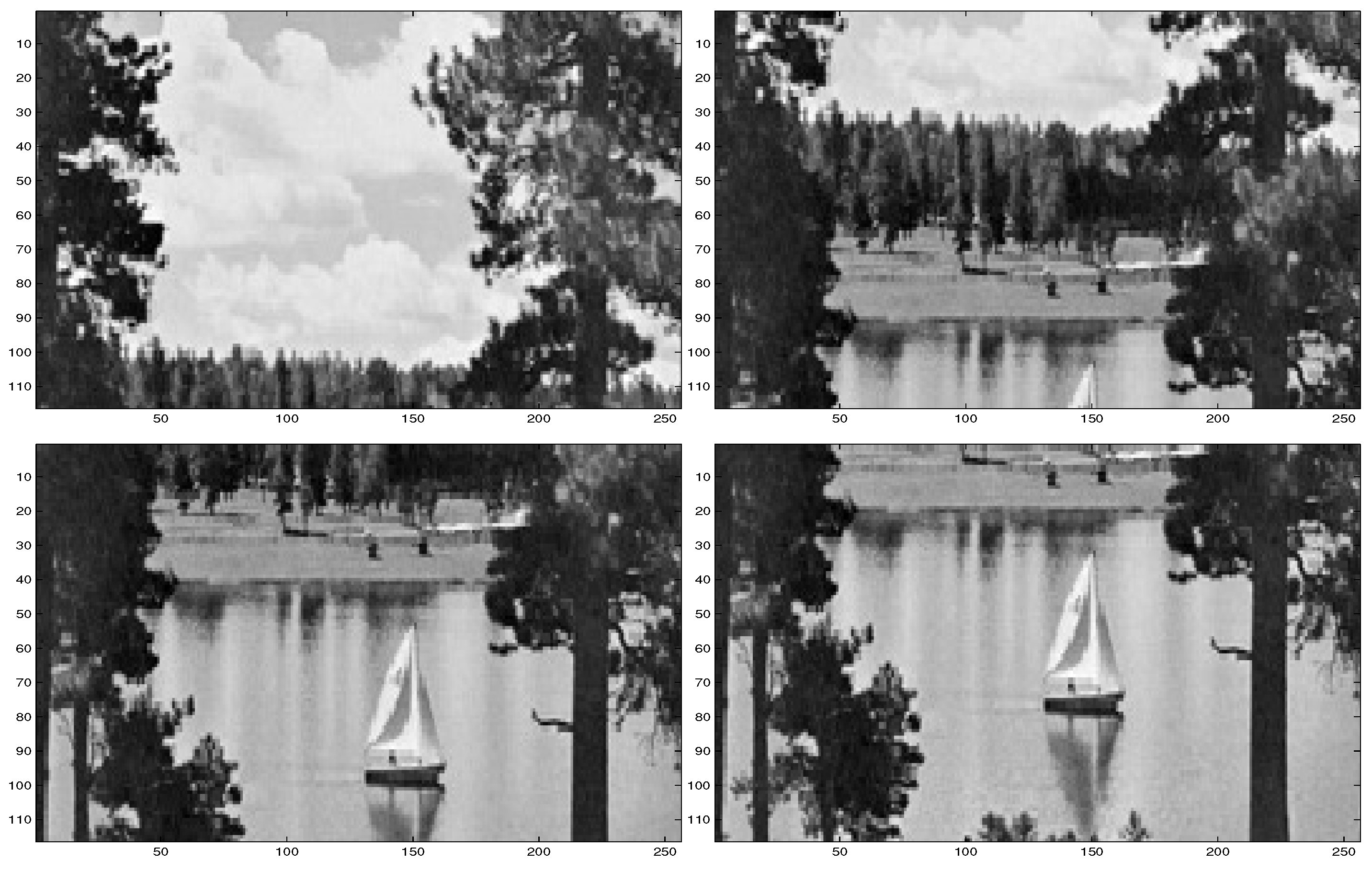

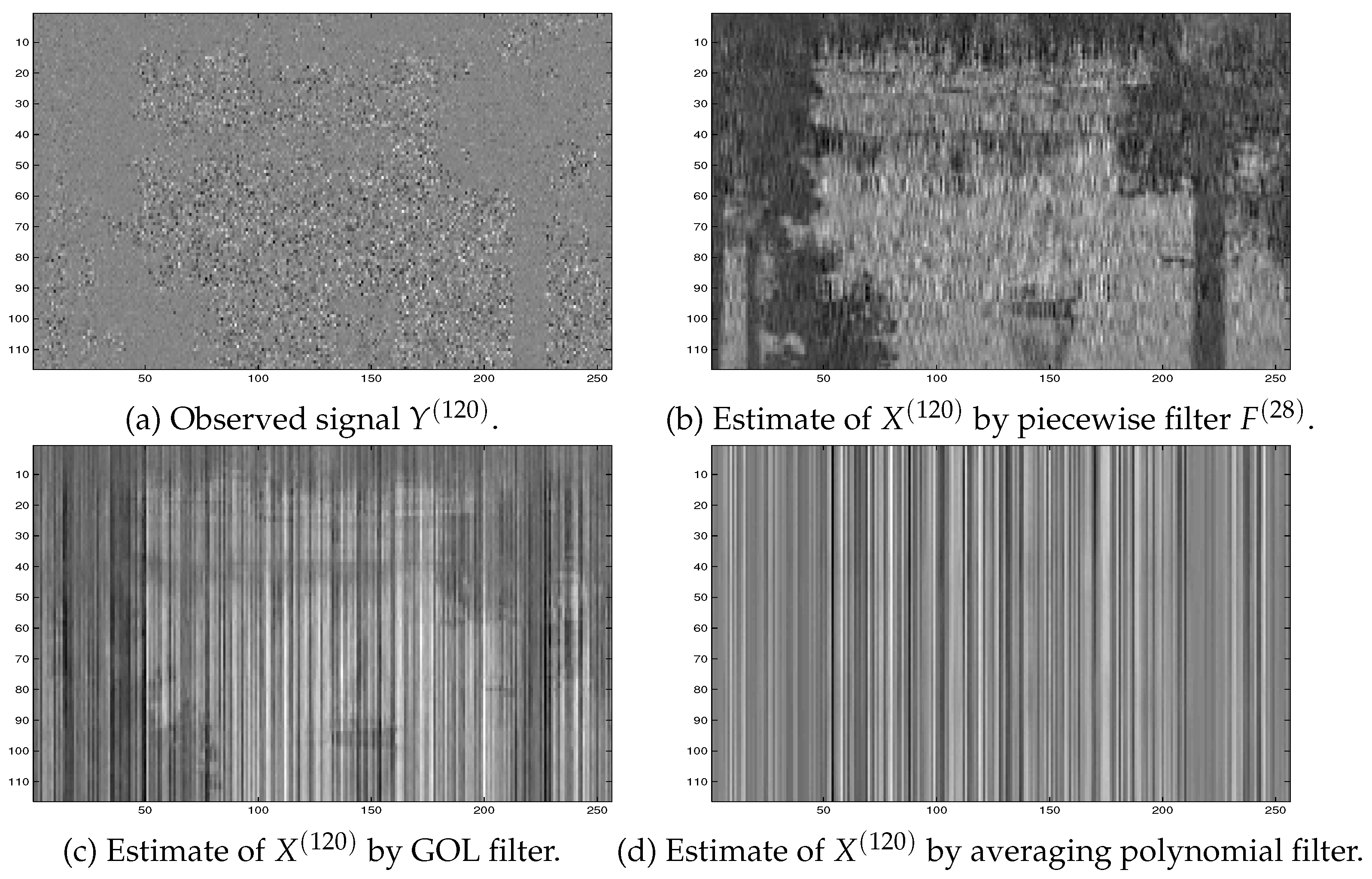

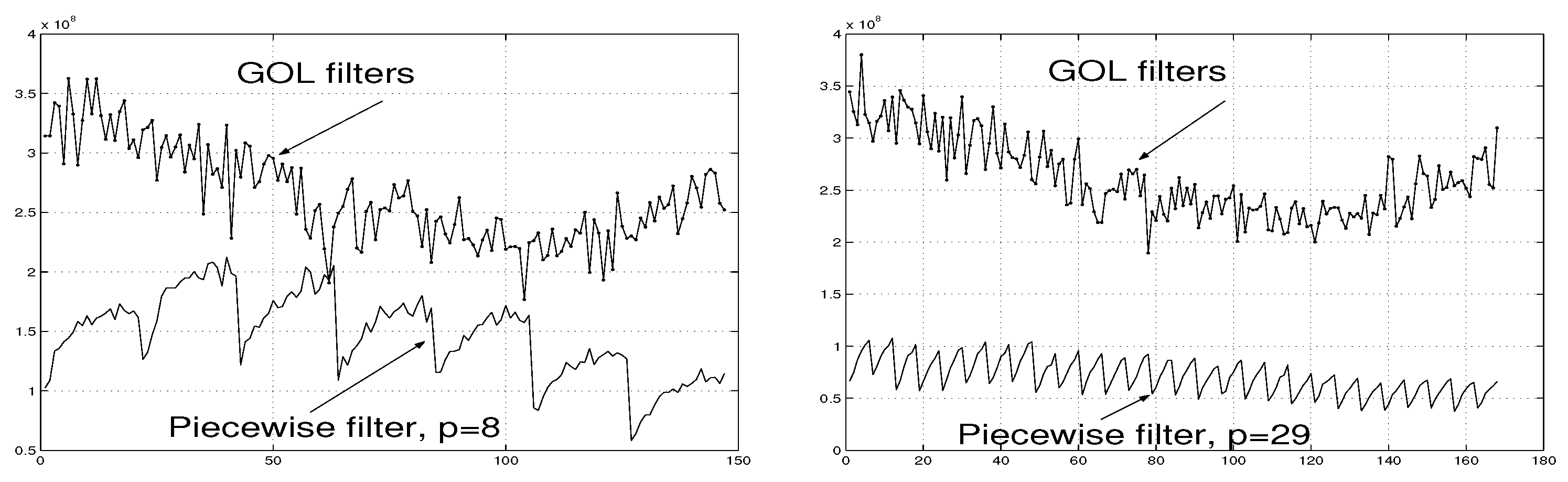

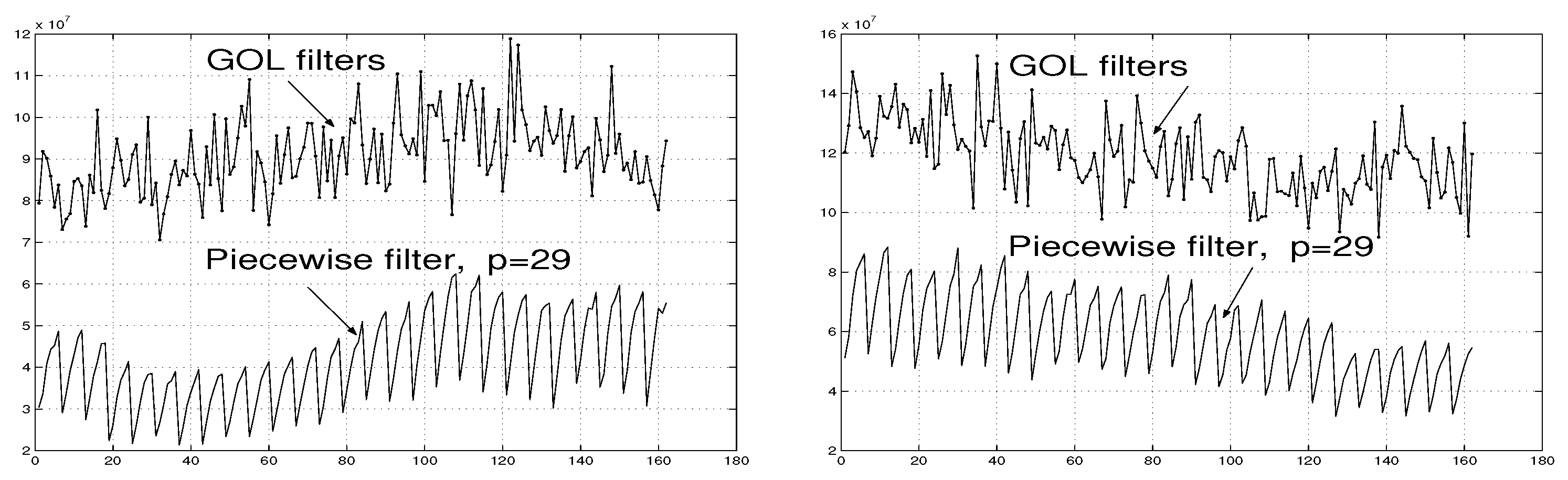

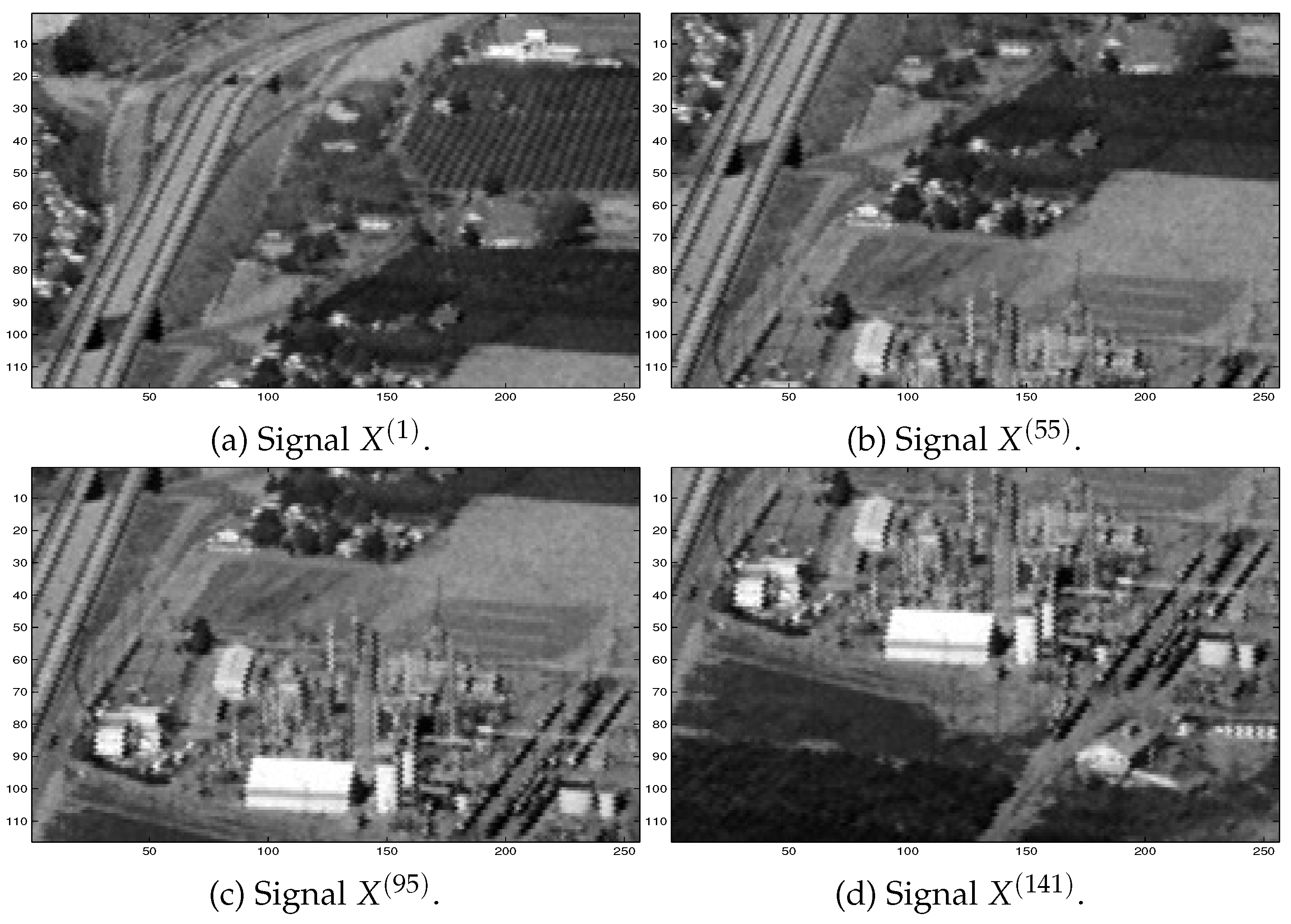

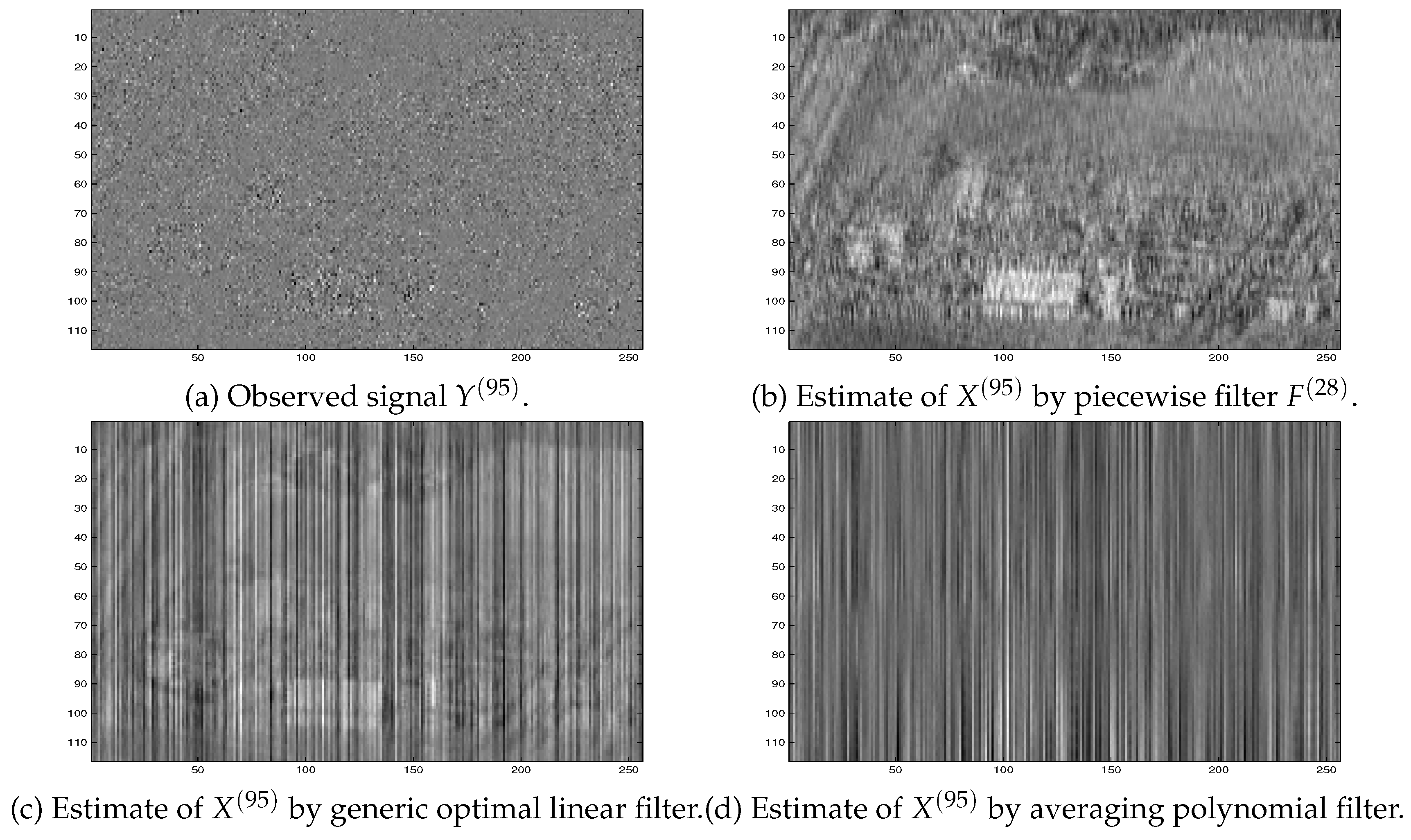

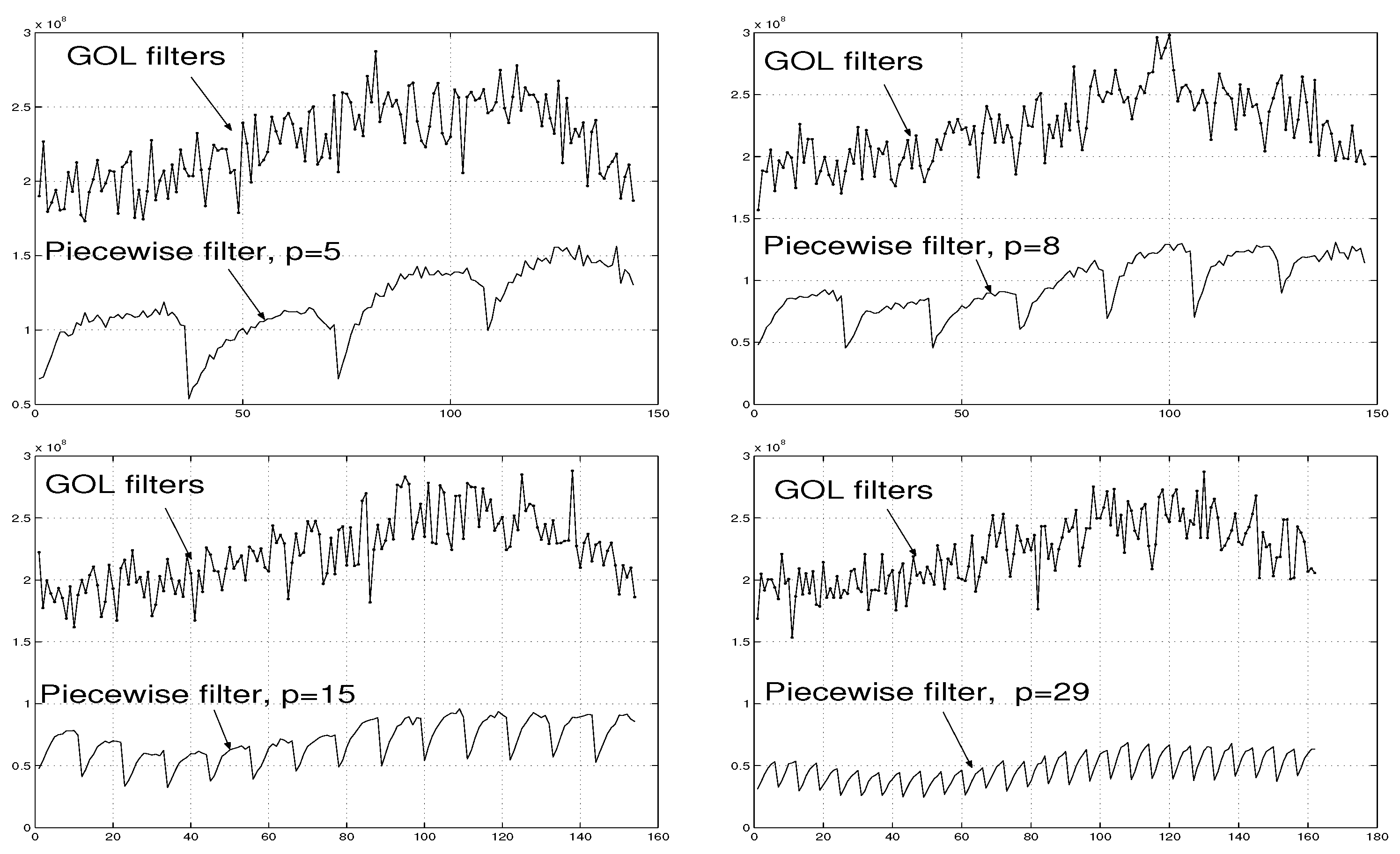

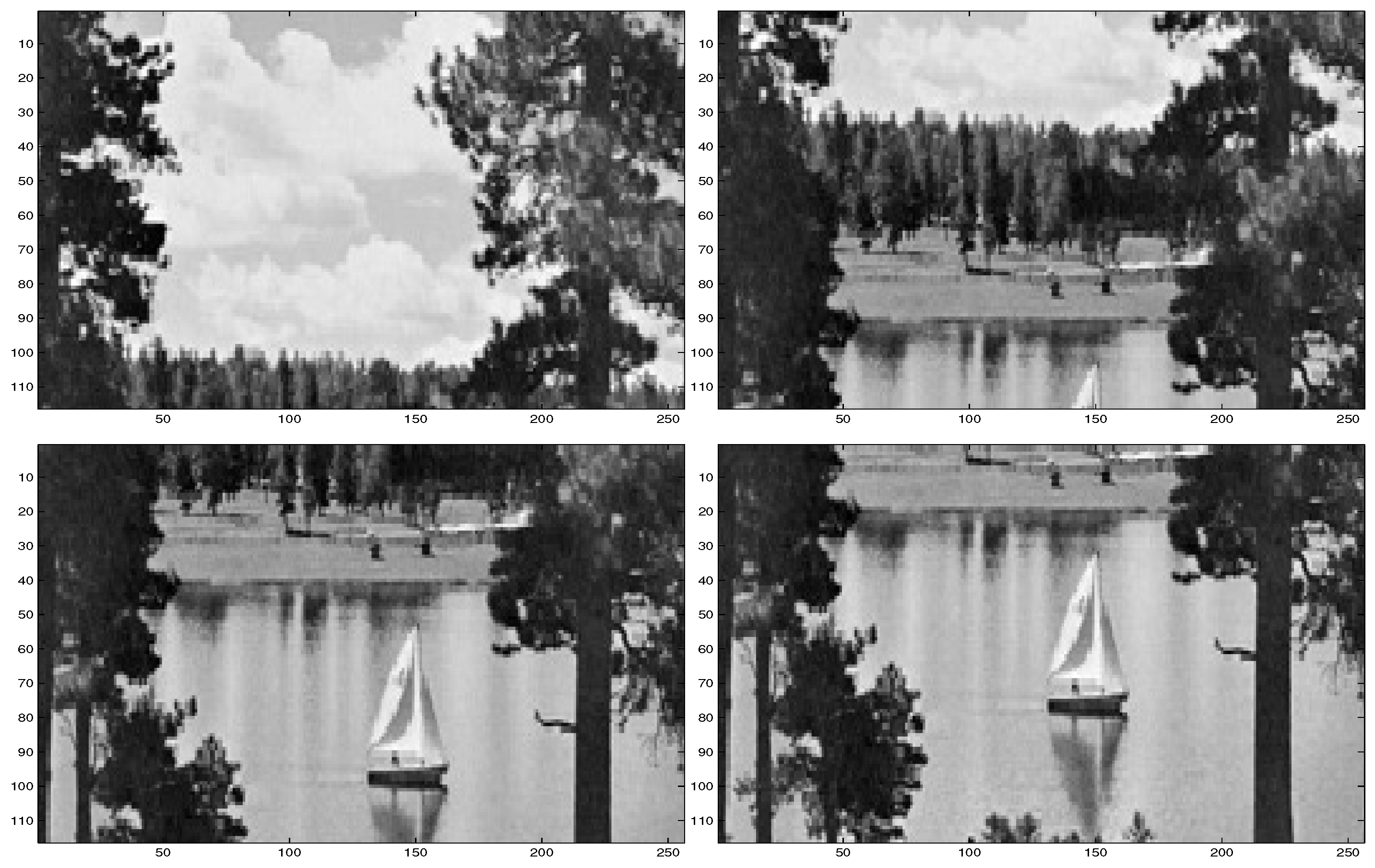

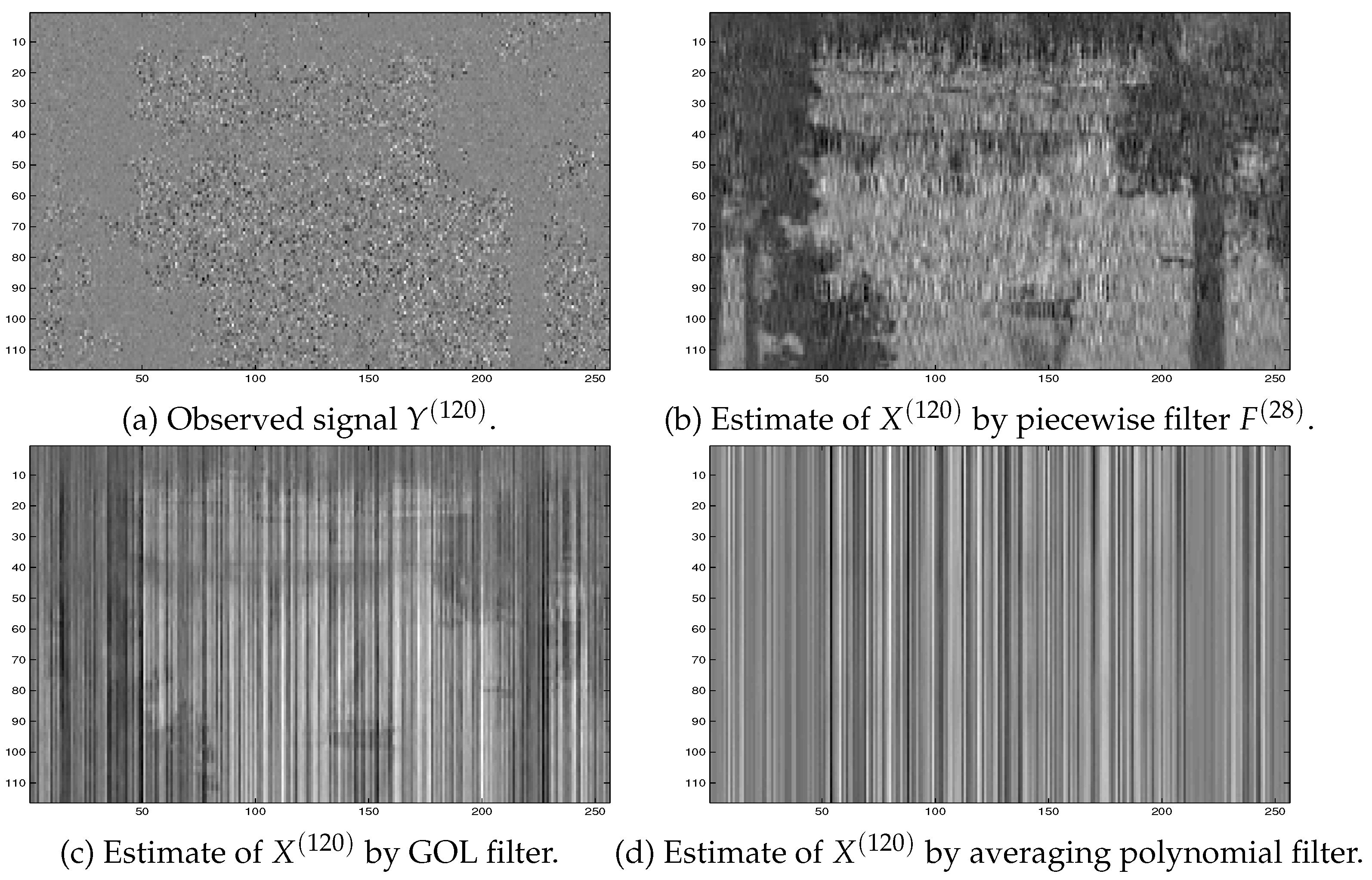

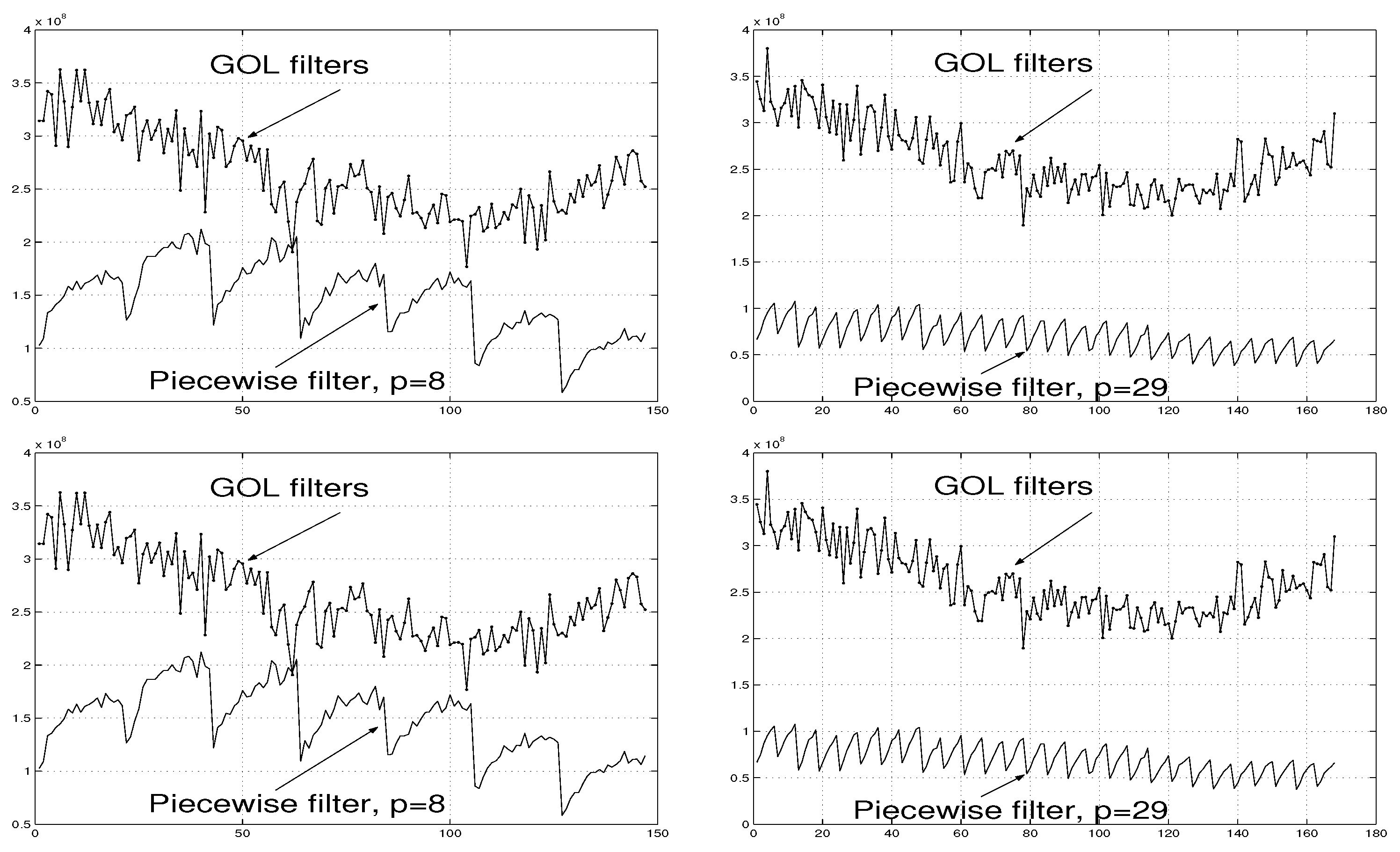

5.2. Simulations with Signals Modelled from Images ‘Plant’: Application of Piecewise Interpolation Filter and GOL Filters

5.3. Simulations with Signals Modelled from Images ‘Boat’: Application of Piecewise Interpolation Filter and GOL Filters

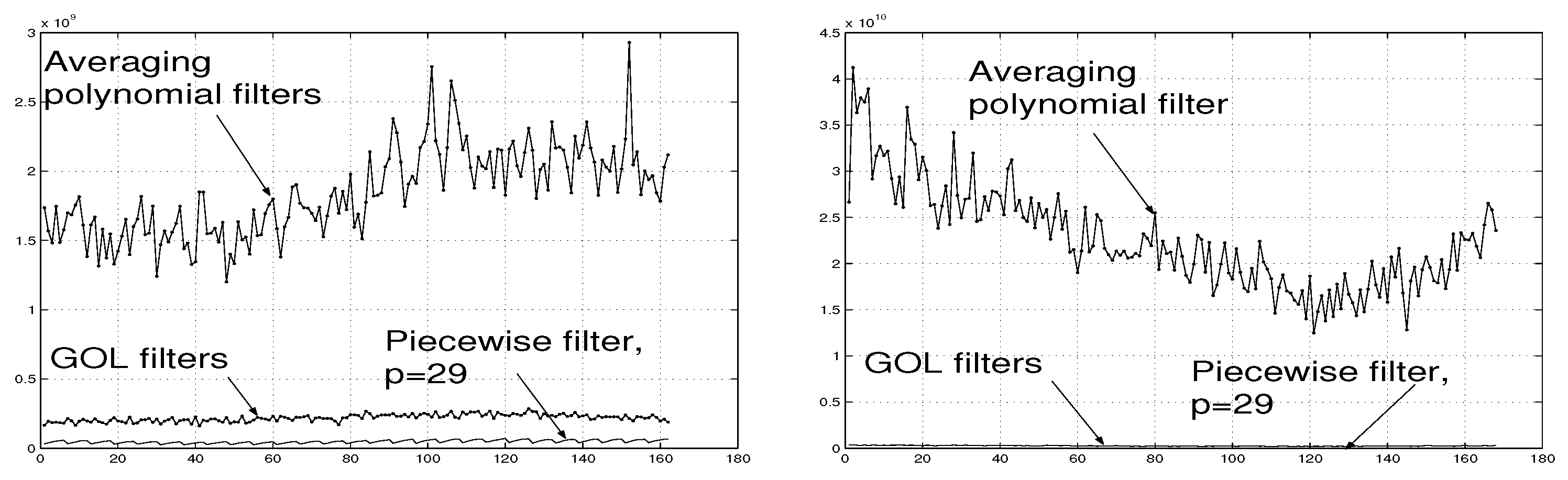

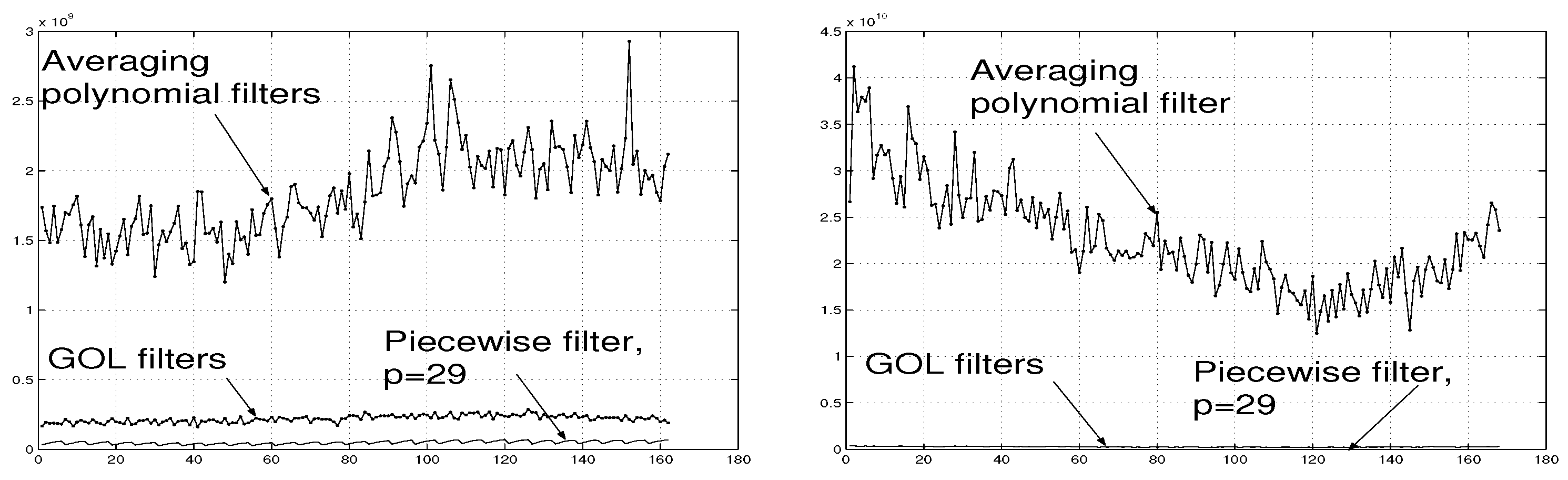

5.4. Results of Simulations for Averaging Polynomial Filter [10,12]

5.5. Further Simulations with Different Types of Noise

5.6. Summary of Simulations

6. Conclusions

Authors’ Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

References

- Chen, J.; Benesty, J.; Huang, Y.; Doclo, S. New Insights Into the Noise Reduction Wiener Filter. IEEE Trans. on Audio, Speech, and Language Processing 2006, 14(4), 1218–1234.

- Spurbeck, M.; Schreier, P. Causal Wiener filter banks for periodically correlated time series. Signal Processing 2007, 87(6), 1179–1187.

- Goldstein, J.S.; Reed, I.; Scharf, L.L. A Multistage Representation of the Wiener Filter Based on Orthogonal Projections. IEEE Trans. on Information Theory 1998, 44, 2943–2959.

- Hua, Y.; Nikpour, M.; Stoica, P. Optimal Reduced-Rank estimation and filtering. IEEE Trans. on Signal Processing 2001, 49, 457–469.

- Torokhti, A.; Howlett, P. Computational Methods for Modelling of Nonlinear Systems; Elsevier, 2007.

- Sontag, E.D. Polynomial Response Maps; Lecture Notes in Control and Information Sciences, 13; 1979.

- Chen, S.; Billings, S.A. Representation of non-linear systems: NARMAX model. Int. J. Control 1989, 49(3), 1013–1032.

- Mathews, V.J.; Sicuranza, G.L. Polynomial Signal Processing; J. Wiley & Sons, 2001.

- Torokhti, A.; Howlett, P. Optimal Transform Formed by a Combination of Nonlinear Operators: The Case of Data Dimensionality Reduction. IEEE Trans. on Signal Processing 2006, 54(4), 1431–1444.

- Torokhti, A.; Howlett, P. Filtering and Compression for Infinite Sets of Stochastic Signals. Signal Processing 2009, 89, 291–304.

- Vesma, J.; Saramaki, T. Polynomial-Based Interpolation Filters - Part I: Filter Synthesis. Circuits, Systems, and Signal Processing 2007, 26(2), 115–146.

- Torokhti, A.; Manton, J. Generic Weighted Filtering of Stochastic Signals. IEEE Trans. on Signal Processing 2009, 57(12), 4675–4685.

- Torokhti, A.; Miklavcic, S. Data Compression under Constraints of Causality and Variable Finite Memory. Signal Processing 2010, 90(10), 2822–2834.

- Babuska, I.; Banerjee, U.; Osborn, J.E. Generalized finite element methods: main ideas, results, and perspective. International Journal of Computational Methods 2004, 1(1), 67–103.

- Kang, S.; Chua, L. A global representation of multidimensional piecewise-linear functions with linear partitions. IEEE Trans. on Circuits and Systems 1978, 25(11), 938–940.

- Chua, L.O.; Deng, A.-C. Canonical piecewise-linear representation. IEEE Trans. on Circuits and Systems 1988, 35(1), 101–111.

- Lin, J.-N.; Unbehauen, R. Adaptive nonlinear digital filter with canonical piecewise-linear structure. IEEE Trans. on Circuits and Systems 1990, 37(3), 347–353.

- Lin, J.-N.; Unbehauen, R. Canonical piecewise-linear approximations. IEEE Trans. on Circuits and Systems I: Fundamental Theory and Applications 1992, 39(8), 697–699.

- Gelfand, S.B.; Ravishankar, C.S. A tree-structured piecewise linear adaptive filter. IEEE Trans. on Inf. Theory 1993, 39(6), 1907–1922.

- Heredia, E.A.; Arce, G.R. Piecewise linear system modeling based on a continuous threshold decomposition. IEEE Trans. on Signal Processing 1996, 44(6), 1440–1453.

- Feng, G. Robust filtering design of piecewise discrete time linear systems. IEEE Trans. on Signal Processing 2005, 53(2), 599–605.

- Russo, F. Technique for image denoising based on adaptive piecewise linear filters and automatic parameter tuning. IEEE Trans. on Instrumentation and Measurement 2006, 55(4), 1362–1367.

- Cousseau, J.E.; Figueroa, J.L.; Werner, S.; Laakso, T.I. Efficient Nonlinear Wiener Model Identification Using a Complex-Valued Simplicial Canonical Piecewise Linear Filter. IEEE Trans. on Signal Processing 2007, 55(5), 1780–1792.

- Julian, P.; Desages, A.; D’Amico, B. Orthonormal high-level canonical PWL functions with applications to model reduction. IEEE Trans. on Circuits and Systems I: Fundamental Theory and Applications 2000, 47(5), 702–712.

- Wigren, T. Recursive Prediction Error Identification Using the Nonlinear Wiener Model. Automatica 1993, 29(4), 1011–1025.

- Golub, G.H.; van Loan, C.F. Matrix Computations; Johns Hopkins University Press: Baltimore, 1996.

- Anderson, T. An Introduction to Multivariate Statistical Analysis; Wiley: New York, 1984.

- Perlovsky, L.I.; Marzetta, T.L. Estimating a Covariance Matrix from Incomplete Realizations of a Random Vector. IEEE Trans. on Signal Processing 1992, 40, 2097–2100.

- Ledoit, O.; Wolf, M. A well-conditioned estimator for large-dimensional covariance matrices. J. Multivariate Analysis 2004, 88, 365–411.

| 1 | We say a stochastic vector is finite if its realization has a finite number of scalar components. |

| 2 | |

| 3 | This means that any desired accuracy is achieved theoretically, as is shown in Section 4.4 below. In practice, of course, the accuracy is increased to a prescribed reasonable level. |

| 4 | As usual, is the set of outcomes, a σ-field of measurable subsets in and an associated probability measure on . In particular, |

| 5 | Hereinafter, we will use a non-curly symbol to denote an operator and associated matrix (e.g., the operator and the associated matrix are denoted by ). |

| 6 | Note that the covariance matrices are not assumed to be known for each signal pair from , with . |

| 7 | As mentioned in Section 3.4, can be determined by known methods. |

| 8 | The database is available at http://sipi.usc.edu/services/database.html. |

| 9 | The database is available at http://sipi.usc.edu/services/database.html. |

| 10 | The database is available at http://sipi.usc.edu/services/database.html. |

| 11 | The database is available at http://sipi.usc.edu/services/database.html. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).