1. Introduction

Function allocation (FA) is the process by which the functions necessary to accomplish an overall set of goals are allocated to human and machine resources to maximize the advantage of their unique strengths while minimizing the impact of their limitations. The philosophical and practical basis of any FA is to systematically consider two questions: “Is complete system automation mandatory (e.g., for ensuring safety)?” and “Is complete system automation achievable?” In practice, there are very few situations where both questions are affirmed. Thus, most tasks must be divided, to some degree, between humans and automated systems. For this reason, substantial attention in the systems engineering literature has been devoted to studying the optimal division of labor between humans and machines.

Classically, the Fitts List [2] distinguishes which tasks can be optimally performed by humans and by machines, respectively. The list, seen in Table 1, provides a framework for FA based on “Humans Are Better At vs. Machines Are Better At” (HABA-MABA) guidelines. However, since the time of Fitts, automation has overcome many of its past limitations. Breakthroughs in artificial intelligence (AI) and machine learning (ML) are challenging the paradigm through new developments in adaptive automation (AA), large-scale data analysis for trend projection, and data-informed decision-making capability.

While heuristics like the Fitts List [2] provided a useful baseline for distinguishing between human and machine capabilities, they did not offer a systematic means of determining how functions should be divided in complex systems. As nuclear power plants and other high-risk domains matured through the 1960s and 1970s, system designers required more structured approaches that went beyond the HABA-MABA heuristic. This period saw the development of a variety of methodological frameworks for FA, many of which relied heavily on expert judgment, qualitative assessment, and context-specific criteria. These approaches reflected both the technological limitations of automation at the time and the recognition that optimal allocation was not fixed but depended on system goals, safety requirements, and operational context.

1.1. Regulatory Perspectives on Function Allocation (FA)

The culmination of this early work on FA in the nuclear industry was captured in NUREG/CR-2623 [3] and NUREG/CR-3331 [1], which document the methodological diversity of this era and highlight the growing recognition that FA must be approached as a dynamic and iterative design problem rather than a one-time assignment of tasks. In the nuclear power plant (NPP) domain, the U.S. Nuclear Regulatory Commission (NRC) provides guidance for reviewing an NPP applicant’s FA in the context of its human factors engineering (HFE) program in Section 4, Functional Requirements Analysis (FRA) and Function Allocation (FA), of NUREG-0711, Revision 3 [4]. This section of NUREG-0711 [4] operationally defines FRA and FA and provides examples of how NPP applicants use these methods to determine how to divide tasks between a human operator and non-human agents or systems. Although NUREG-0711, Revision 3 [4], was published in 2012, its primary technical basis for FA, NUREG/CR-2623 [3], was published in 1983. Conceptually, FA and FRA have not changed: tasks must be allocated based on the resources (humans, non-human agents, and automated systems) available to reliably complete those tasks. However, as automation technologies have advanced, it is increasingly unclear whether the legacy approaches described in NUREG/CR-2623 [3] adequately reflect the evolving nature of task demands and system capabilities in modern digital control room environments. This raises important questions about whether the assumptions underlying earlier FA models, such as those informed by the Fitts List, remain valid in today’s automation landscape.

The term “non-human agents” is used here to refer broadly to modern automation technologies, such as those involving AI, ML, and adaptive systems, that offer levels of flexibility, adaptability, and responsiveness that were not envisioned by early system designers. In some cases, these technologies now rival or exceed human performance in tasks once considered uniquely human. As a result, the traditional HABA-MABA heuristic may no longer have a role in future task allocation decisions: if tasks are increasingly suitable for either humans or machines, allocations may need to be more context-dependent and determined along a dynamic continuum rather than as discrete, binary choices. Digitalization and advanced automation are not the only developments that could change the landscape for function allocation; many advanced reactor designs may be capable of achieving adequate safety margins without reliance on human actions through simplified designs with fewer moving parts, inherent safety characteristics, and passive safety features.

Inherent safety characteristics are physical or chemical properties of the reactor or its materials that naturally ensure safety by eliminating a hazard. Inherent safety characteristics are already found in many operating reactors. For example, large light water reactors have a negative temperature coefficient of reactivity: as the reactor gets hotter, the nuclear reaction slows down significantly. This inherent safety characteristic prevents a runaway reaction and gives operators time to address the underlying issue that led to the increase in temperature. An inherent safety characteristic does not require active systems or even a passive mechanism to function (e.g., negative temperature coefficient of reactivity, certain types of coolants, thermal expansion of fuel or moderator).
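The feedback described above can be summarized with the standard first-order relation between temperature and reactivity. This is a textbook approximation added for illustration, not a formula taken from the cited sources; here ρ is reactivity, T is temperature, and α_T is the temperature coefficient of reactivity.

```latex
\alpha_T \equiv \frac{\partial \rho}{\partial T}, \qquad
\Delta\rho \approx \alpha_T \, \Delta T, \qquad
\alpha_T < 0 \;\text{and}\; \Delta T > 0 \;\Rightarrow\; \Delta\rho < 0
```

Because a temperature rise inserts negative reactivity whenever α_T is negative, the excursion tends to limit itself without an active system or an operator action, which is what makes this an inherent rather than an engineered safety characteristic.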

When a hazard has not been eliminated, engineered safety systems, structures, or components are incorporated into a design to reduce the risk it poses. Active systems rely on external mechanical and/or electrical power, signals, or forces. Passive safety features are engineered systems, structures, or components that use natural physical properties, like gravity, natural circulation, or pressure differentials, to maintain the reactor in a safe state during an emergency [5]. While passive safety features are designed to reduce reliance on human intervention, they are still engineered systems, and human errors in design, construction, or maintenance can compromise their effectiveness. For example, the effectiveness of natural circulation cooling could be impaired by latent errors introduced during maintenance or testing activities, such as a bypass valve left closed or a component reinstalled incorrectly. This means that the “fail-safe” nature of passive cooling is not absolute.

As with automated safety systems, incorporating inherent safety characteristics and passive safety features into advanced reactor designs can change which functions are required to ensure safety and how those functions are accomplished. Key safety functions or tasks previously allocated to operator control may now be accomplished by some combination of inherent safety characteristics, passive safety features, and active automated systems. When designs incorporate these elements along with more advanced and capable forms of autonomy (i.e., non-human agents), traditional approaches to FA may fall short. These paradigm-shifting developments highlight the need to revisit and potentially revise existing FRA and FA guidance to ensure it remains aligned with current and emerging capabilities. This review, therefore, seeks to determine whether developments in the literature since the early 1980s provide a basis for updating FRA and FA methodologies to better align with the capabilities and challenges of modern autonomy, passive safety features, and inherent safety characteristics.

Recently, Green et al. [6] published a paper highlighting the evolving role of HFE tools, like FRA and FA, in the regulatory and design processes for advanced nuclear reactors. They discussed the application of FRA within the proposed regulations for advanced reactors in Title 10 of the Code of Federal Regulations Part 53 (10 CFR Part 53) to identify where human involvement is essential to fulfilling safety functions across a range of reactor technologies. They suggested that traditional FA approaches may need to be expanded to assess designs that achieve safety without relying on human action, particularly by evaluating system resilience to human performance failures. Further documenting the complex and nuanced operational changes that may be integrated into new and advanced reactors, Morrow, Xing, and Hughes Green [7] discuss how FRA and FA must evolve to support automation allocation decisions in advanced reactor systems. Their work highlights the importance of addressing the complexities of human-automation interaction, including accounting for passive safety features and inherent safety characteristics, and the growing integration of human and automated systems. Together, these perspectives underscore the potential need to adapt HFE methodologies for FA so that they align with both regulatory expectations and the technological innovations shaping the future of nuclear energy.

1.3. Review Objectives

NUREG/CR-2623 [3] states that “technological advances have greatly altered the role of man in relation to systems, changing his function from that of continuous control element to that of supervisor” (p. 113). The driver for the 1983 NUREG/CR-2623 [3] literature review is the same as the driver for this review: technological changes have the potential to affect how FA is performed and the types of tasks allocated to humans, non-human agents, inherent safety characteristics, and passive safety features. It is possible that, between the publication of NUREG/CR-2623 [3] and the initiation of this research in 2025, new or better approaches have emerged that are more suitable for application to novel technologies; however, it may also be the case that some or all of the underlying literature documenting approaches to FA is technology-neutral and evergreen.

Moreover, a changing regulatory landscape reinforces the need for updated guidance in this domain. In October 2024, the NRC introduced a draft rule aimed at establishing a “risk-informed, technology-inclusive regulatory framework for advanced reactors,” to be codified in 10 CFR Part 53. Relevant to the current paper is that the proposed licensing requirements in 10 CFR 53.730(d) would require applicants to submit FRA and FA documentation identifying the safety functions of the facility. Under this framework, FA is not limited to assigning tasks between humans and automation. It also involves allocating safety functions across active safety features, passive safety features, and/or inherent safety characteristics.

Further motivating this need, [8] highlighted that traditional function allocation methods have not kept pace with the increasing complexity of automation in modern nuclear systems. The report emphasized that AA, in which the degree of automation changes dynamically in response to contextual changes (e.g., operator workload or performance), offers potential benefits such as improved task performance, situation awareness (SA), and workload management. However, [8] also identified significant challenges, including the lack of detailed HFE guidance for designing and evaluating such systems. A related report [9] began to address this limitation by evaluating the adequacy of existing NRC HFE guidance, specifically NUREG-0711 [4] and NUREG-0700 [10], for reviewing AA in NRC safety evaluations. While the guidance was found sufficient for reviewing some aspects (e.g., monitoring and failure detection), it was deemed insufficient for addressing key design characteristics of AA systems, such as configuration design, triggering conditions, and dynamic operator roles. The report concluded that additional guidance is needed to support comprehensive safety reviews of AA systems, reinforcing the need for updated FRA and FA approaches that reflect the operational realities of advanced technologies.

Most recently, [6] emphasized the key role of FRA and FA in NRC’s evolving regulatory approach. They explained that, as operations come to involve a greater diversity of agents and as passive safety features and inherent safety characteristics become more widely available, it is critical to identify where human support is essential for maintaining safety across diverse reactor technologies. They suggested that traditional FA methods (like those documented in [3]) may not be adequate for fully evaluating designs that purport to achieve safety without human intervention. They went on to advocate for the use and/or development of approaches that assess a system’s resilience to human performance variability. Their work illustrates how safety functions can be fulfilled and how human roles would be shaped by the design’s safety features (i.e., passive features and inherent safety characteristics).

Morrow, Xing, and Hughes Green [7] further explored how FRA and FA need to evolve to support allocation decisions involving advanced forms of autonomy (e.g., automation and AI, broadly referred to as “non-human agents” in this review) in advanced reactor designs. They highlight that traditional binary allocation models are inadequate for capturing the dynamic, shared responsibilities between humans and automation. For instance, computerized procedure systems may automate information acquisition and evaluation yet still require human oversight. Similarly, passive safety systems may reduce the need for human intervention but still depend on human roles for monitoring and maintenance. The authors emphasize the need for updated FRA/FA methods that reflect these complexities and support performance-based regulatory reviews of next-generation nuclear technologies under 10 CFR Part 53.

The purpose of this literature review is to explore the perspectives published by [6] and [7] by examining the scientific literature on FA and the associated concept of levels of automation (LOA) to determine whether there have been new developments in FA methodology since the publication of the NRC’s technical basis for its guidance on the topic. To this end, specific research questions were established to guide the literature search. These questions, along with their general research areas, can be found in Table 2.

2. Methodology

2.1. Literature Review Definition

This literature review uses a systematic methodology for collecting, critically evaluating, integrating, and presenting information from many sources. Because FA is a core aspect of the systems engineering process, it was expected that thousands of sources might surface once the literature search criteria were applied, so a process was developed to rapidly identify and review a large number of sources. This process enabled a staged review in which literature was filtered, reviewed, critiqued, and synthesized. Such a method allows for a comprehensive review while minimizing the potential for any one author to skew interpretation of the literature. The process was also designed to surface similarities and differences across technical domains. For example, a comparison of the nuclear and aviation domains might show that both contain FA methods research, but that qualitative approaches are more common in nuclear than in aviation. The comprehensiveness of this approach is particularly suited to supporting future guidance for safety-significant domains because it helps ensure that all relevant information is considered before a technical basis is established.

2.2. Review Software

This review was supported by Rayyan [11], a web-based software-as-a-service collaboration tool used to expedite the screening of scientific materials. Rayyan contains tools for filtering material, screening based on objective criteria, and analyzing document metadata. While originally developed for biomedical systematic literature reviews, the platform can be used by any discipline, for both systematic and conventional reviews. Rayyan is offered in two tiers, a free version and a professional version. This review used Rayyan Professional, which enabled full-text screening of articles, collaboration, and storage of multiple reviews.

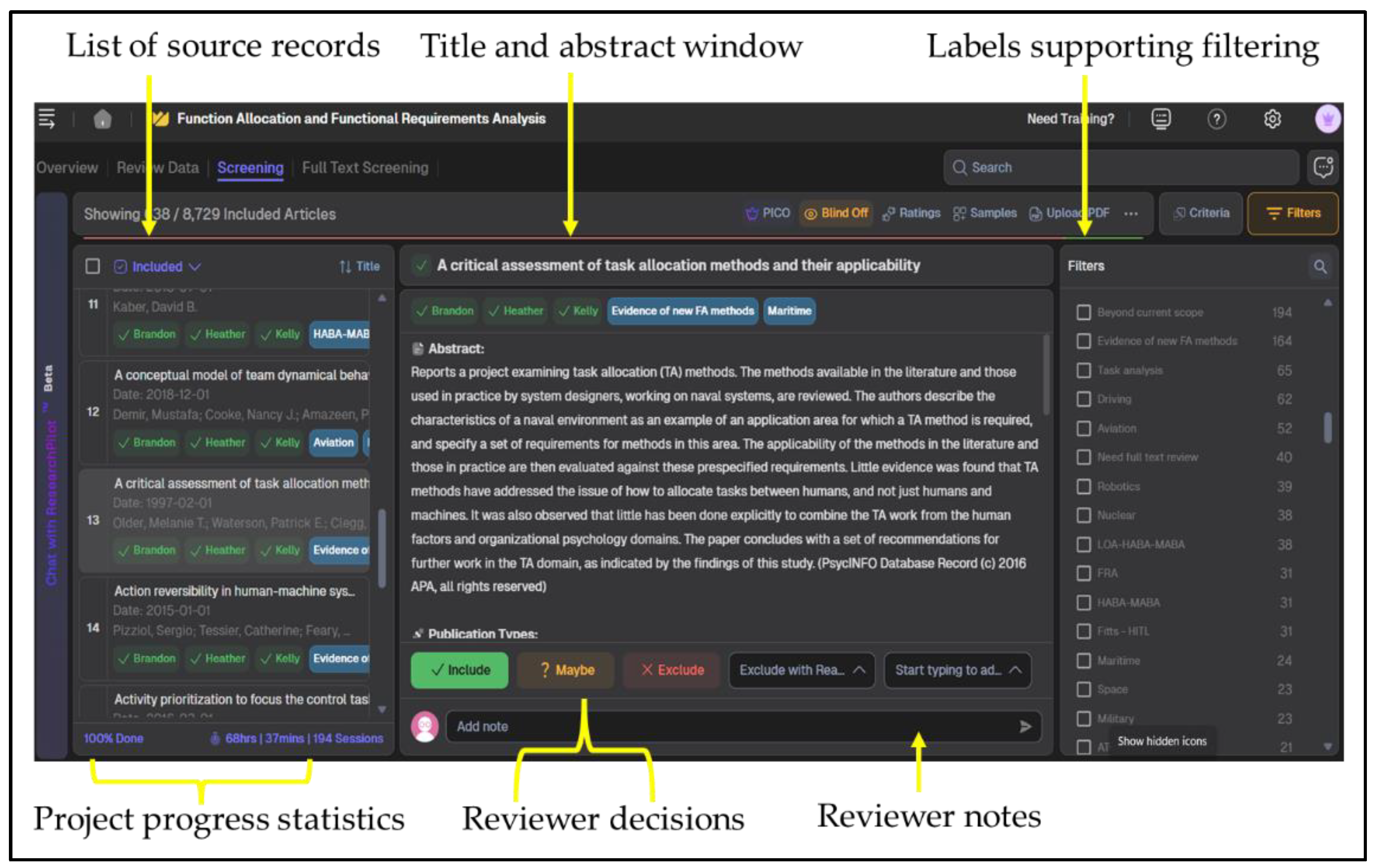

Figure 1.

Example of the user interface during screening. The article screening list and each entry’s status are located on the left. The abstract for a selected entry is in the center and includes buttons for the reviewer’s decision and classification (reason, label). The rightmost panel includes keywords that can be used to establish inclusion criteria and (not pictured) a list of selectable labels.


2.3. Literature Search Procedures

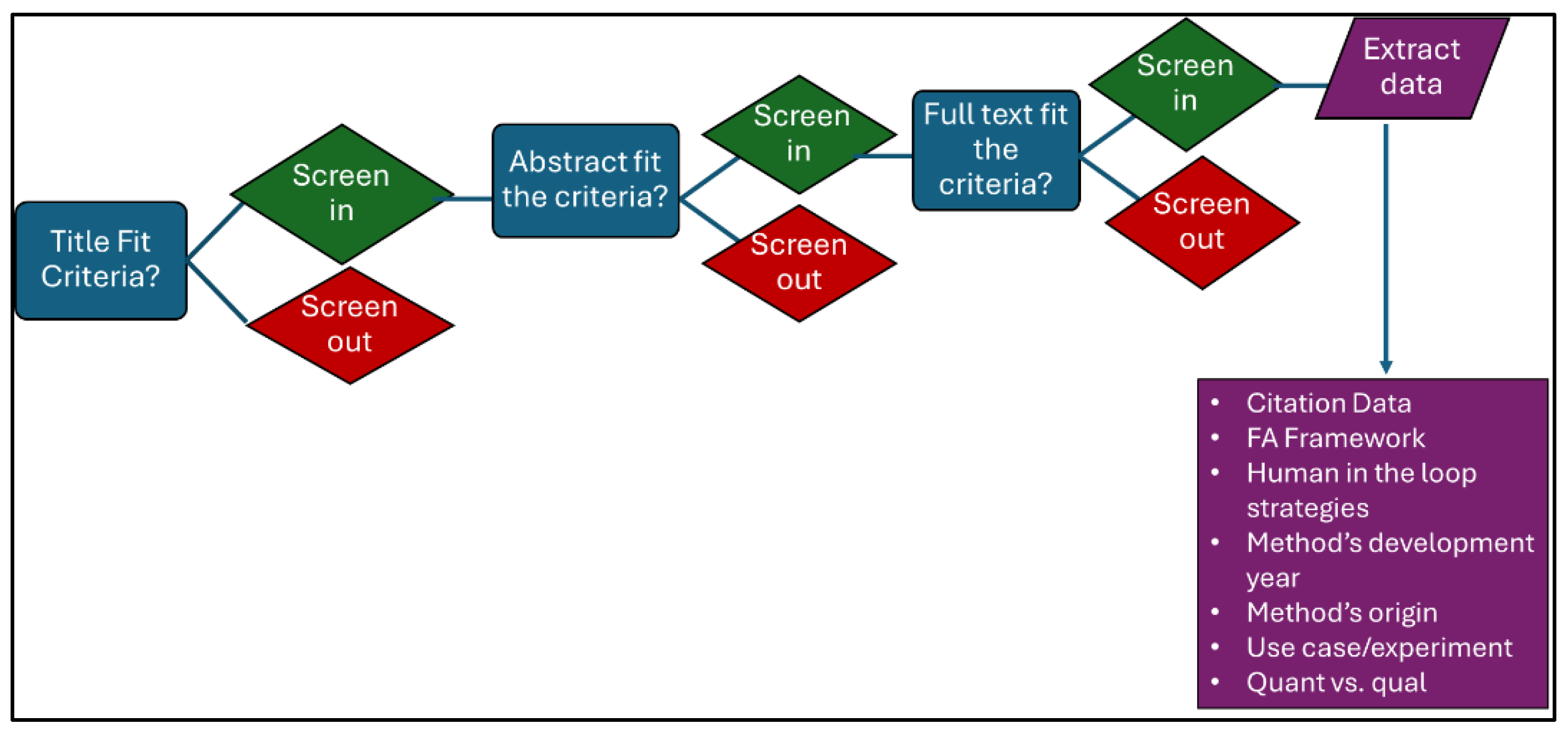

The literature search and screening process contained several stages: selection of databases (Table 3), definition of search criteria (Figure 2) and keywords (Table 4), extraction and storage of citation data, review and classification of the extracted citation information, a “backwards forwards” search, and, finally, analysis and synthesis of the papers that met the inclusion criteria.

2.3.1. Database Selection

Databases were selected based on their relevance to the topic, specificity of search results, availability of digital content, and ability to export citation data.

Table 3 lists the selected databases.

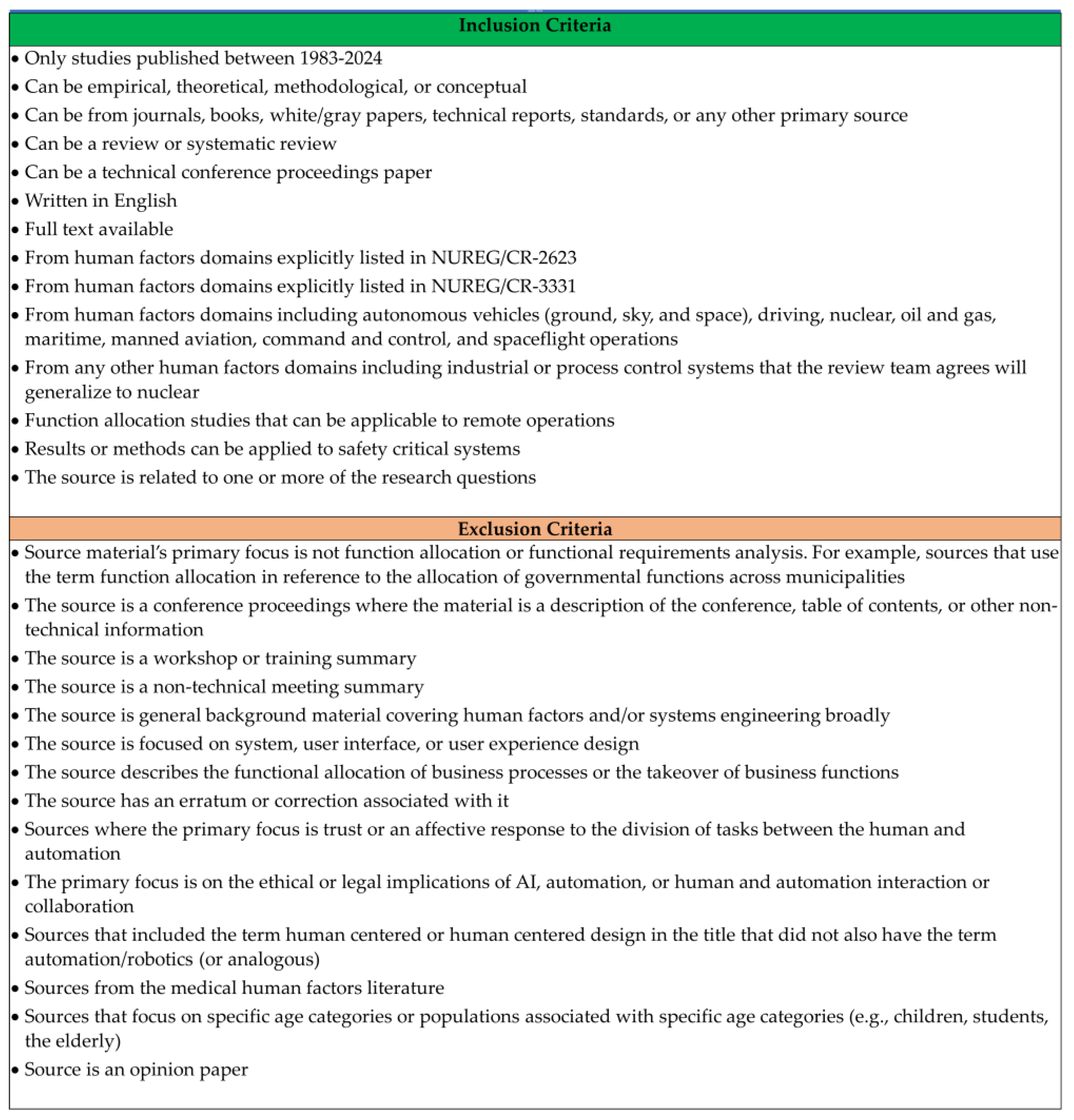

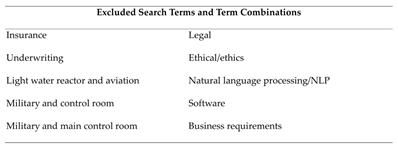

2.3.2. Definition of Search Criteria

Sixty-seven candidate keywords were tested. The keywords were selected based on a review of 29 papers on function allocation methods, a review of NRC’s 1983 publications on FA, and a discussion with NRC’s experts on FA. Each of the 67 candidate keywords was evaluated for efficacy by testing it in one of the three databases used for the review. Three of the search terms did not yield any results that would have met the review’s inclusion criteria and were removed from further use. The final set of 64 keywords is listed in Table 4. Additionally, through discussion with NRC experts, the review team identified a set of terms that often co-occurred with the target keywords but were irrelevant to the review. The words in Table 5 were specifically excluded from use in the search process. The same initial keyword testing also helped define a few of these excluded terms, that is, irrelevant terms that appeared to co-occur frequently with relevant terms.

Many published systematic reviews use Boolean logic as part of their search process. Because the initial single-term searches yielded such a high volume of resources, the team opted to use only these results in the analysis of the literature. An additional search method (the “backwards search”; see Section 2.4.4, Backwards Forwards Search) was expected to be sufficient to identify any sources potentially missed by not combining search terms with Boolean logic. This approach also removed a potential source of bias, because the review team did not have to decide which terms to use in combined form and which to use only as single search terms.

2.3.3. Keyword Mapping

A keyword-to-question mapping was developed to verify that the planned search terms targeted the relevant literature. Each member of the review team sorted the keywords under each research question so that topic coverage could be visualized and the team’s conceptual understanding of each topic could be evaluated before beginning the search. Once the review team agreed on which keywords would best support each research question, the team began running queries in each of the three identified databases.
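The mapping step can be pictured as a small coverage check: count how many keywords each research question receives, flag questions with none, and list keywords that reviewers sorted differently. This is a minimal sketch, assuming hypothetical question labels ("RQ1" to "RQ3") and illustrative keyword assignments; the actual questions are in Table 2 and the keywords in Table 4, and the review team did not necessarily automate this step.

```python
# Minimal sketch of a keyword-to-question coverage check.
# Assumptions: the research-question labels and keyword assignments below are
# hypothetical; the real questions are in Table 2 and the keywords in Table 4.


def coverage(mapping: dict[str, list[str]], questions: list[str]) -> dict[str, int]:
    """Count how many keywords are mapped to each research question."""
    counts = {q: 0 for q in questions}
    for assigned in mapping.values():
        for q in assigned:
            if q in counts:
                counts[q] += 1
    return counts


def uncovered(mapping: dict[str, list[str]], questions: list[str]) -> list[str]:
    """Research questions that no keyword targets (gaps to fix before searching)."""
    return [q for q, n in coverage(mapping, questions).items() if n == 0]


def disagreements(reviewer_maps: list[dict[str, list[str]]]) -> list[str]:
    """Keywords that reviewers sorted under different sets of questions."""
    keywords = set().union(*(m.keys() for m in reviewer_maps))
    return sorted(
        kw for kw in keywords
        if len({frozenset(m.get(kw, [])) for m in reviewer_maps}) > 1
    )


questions = ["RQ1", "RQ2", "RQ3"]
reviewer_a = {
    "function allocation": ["RQ1"],
    "levels of automation": ["RQ2"],
    "adaptive automation": ["RQ2"],
}
reviewer_b = {
    "function allocation": ["RQ1"],
    "levels of automation": ["RQ2"],
    "adaptive automation": ["RQ2", "RQ3"],
}

print(coverage(reviewer_a, questions))
print(uncovered(reviewer_a, questions))
print(disagreements([reviewer_a, reviewer_b]))
```

Disagreements are surfaced rather than resolved automatically, since in the review agreement was reached through team discussion.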

Search returns were documented in a database-specific tracking sheet to ensure that each keyword was used in the search. Each tab included the full keyword list and the number of results returned for each keyword. To maintain feasibility, the search results for any keyword that yielded more than 1,000 results were excluded from screening. This threshold did not reflect a limitation of the inclusion criteria or the review process; rather, it reflected the expansive nature of certain topics (e.g., function allocation, levels of automation) and the broad time span covered by the review. Because FA is a crucial step in systems engineering, it is a well-documented topic. This approach constrained the search space enough to keep the screening process manageable while still capturing relevant, high-quality sources. The second phase of the literature search (the “backwards search”; see Section 2.4.4, Backwards Forwards Search) helped ensure that relevant items potentially missed because of the practical constraints of the review were ultimately captured and subsequently reviewed.
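The 1,000-result rule amounts to partitioning each database tab of the tracking sheet by result count. This is a minimal sketch, assuming made-up keyword counts (the review's actual tracking-sheet values are not reported here); only the threshold itself comes from the text.

```python
# Minimal sketch of the 1,000-result screening threshold described above.
# Assumption: the keyword result counts below are made-up placeholders, not the
# review's actual tracking-sheet values.

MAX_RESULTS = 1_000  # keywords returning more than this were not screened


def split_by_threshold(
    result_counts: dict[str, int], max_results: int = MAX_RESULTS
) -> tuple[dict[str, int], dict[str, int]]:
    """Partition one database's keyword counts into screened and set-aside groups."""
    screened = {kw: n for kw, n in result_counts.items() if n <= max_results}
    set_aside = {kw: n for kw, n in result_counts.items() if n > max_results}
    return screened, set_aside


# Hypothetical counts for a single database tab of the tracking sheet.
counts = {
    "function allocation": 12_500,
    "levels of automation": 4_300,
    "allocation of functions": 640,
    "adaptive automation": 980,
    "human-automation allocation": 1_000,
}
screened, set_aside = split_by_threshold(counts)
print(sorted(screened))   # keywords whose results would be exported for screening
print(sorted(set_aside))  # keywords whose results were set aside
```

A count of exactly 1,000 stays in the screened group, matching the “more than 1,000” wording in the text.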

2.3.4. Extraction and Storage of Citation Data

Each keyword was entered into each database individually. The results returned for that keyword were then exported to Rayyan as BibTeX (.bib) files. Rayyan also accepts EndNote Export (.enw), RIS (.ris), CSV (.csv), PubMed XML (.xml), New PubMed Format (.nbib), and Web of Science (.ciw) files.

Rayyan supports full-text review for .pdf documents; however, the review team did not use this feature and instead reviewed full-text documents in a PDF reader. Following the citation data import, the Rayyan deduplication tool was used to remove repeat entries. After deduplication, a total of 8,729 sources were subject to the first level of screening.
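Deduplication matters here because each keyword was exported separately, so the same paper can arrive many times with slightly different formatting. This is a minimal sketch of one common approach, matching on a shared DOI or a normalized title; it is not Rayyan's own algorithm, which is not described in the text, and the records are made up.

```python
# Minimal sketch of citation deduplication. Assumption: this is NOT Rayyan's
# algorithm; it treats two records as duplicates when they share a DOI or a
# normalized title. The records below are made up.
import re


def normalize_title(title: str) -> str:
    """Lowercase and collapse punctuation/whitespace so formatting differences don't matter."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def record_keys(record: dict) -> set[tuple[str, str]]:
    """Identity keys for a record: its normalized title, plus its DOI if present."""
    keys = {("title", normalize_title(record["title"]))}
    doi = (record.get("doi") or "").strip().lower()
    if doi:
        keys.add(("doi", doi))
    return keys


def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first occurrence of each source, in import order."""
    seen: set[tuple[str, str]] = set()
    unique = []
    for record in records:
        keys = record_keys(record)
        if keys & seen:
            continue  # shares a DOI or title with an earlier record
        seen |= keys
        unique.append(record)
    return unique


# Made-up records, as if the same papers were exported by two different keyword searches.
records = [
    {"title": "Function Allocation in Nuclear Control Rooms", "doi": "10.1000/example.1"},
    {"title": "Function allocation in nuclear control rooms.", "doi": ""},
    {"title": "Adaptive Automation Triggers", "doi": "10.1000/EXAMPLE.2"},
    {"title": "Adaptive automation triggers: a review", "doi": "10.1000/example.2"},
]
print(len(deduplicate(records)))
```

Title-only matching can merge distinct papers that share a generic title, so a real pipeline would typically also compare authors and year before discarding a record.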

2.4. Screening Process

The information imported from the databases included title, abstract, and some metadata (authors, publication name, publication type, year, topic/keywords, URL, and DOI). The criteria (Figure 2) were applied at the title, abstract, and full-text levels of analysis. However, it is often not possible to confidently determine whether a source meets all the inclusion criteria from the title alone, so the review team agreed to screen liberally at the title and abstract levels, erring on the side of inclusion rather than exclusion. All 8,729 sources were screened at the title level. Following title-level screening, 2,088 sources remained and were screened at the abstract level. Abstract review further reduced the set to 638 sources. An initial screening of the full text reduced the data set for detailed full-text review to 164 sources. The final set (including those identified in the backwards search) included 123 sources.
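The screening counts above form a funnel, and the stage-to-stage retention rates make the selectivity of each level explicit. The counts below come from the text; the database-search figure of 79 is derived arithmetic that assumes the 44 backwards-search inclusions (reported in Section 2.4.4) are counted within the final 123 sources.

```python
# Worked arithmetic for the screening funnel reported above. Counts are from
# the text; the database-search figure assumes the 44 backwards-search
# inclusions are part of the final 123 sources.

FINAL_SET = 123
FROM_BACKWARDS_SEARCH = 44
from_database_search = FINAL_SET - FROM_BACKWARDS_SEARCH  # 79

# Number of sources entering each stage.
stages = [
    ("title screening", 8_729),
    ("abstract screening", 2_088),
    ("initial full-text screening", 638),
    ("detailed full-text review", 164),
    ("included from database search", from_database_search),
]


def retention(stages: list[tuple[str, int]]) -> list[tuple[str, str, float]]:
    """Fraction of sources carried from each stage to the next."""
    return [
        (prev_name, next_name, next_n / prev_n)
        for (prev_name, prev_n), (next_name, next_n) in zip(stages, stages[1:])
    ]


for prev_name, next_name, frac in retention(stages):
    print(f"{prev_name} -> {next_name}: {frac:.1%}")
print(f"overall database-search yield: {from_database_search / stages[0][1]:.2%}")
```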

Reviewers made their decisions independently and did not discuss their screening decisions while reviewing sources. They were also instructed to ignore any source status information logged by another reviewer in the Rayyan portal. Although decisions were made independently, the procedure was unblinded: each reviewer could see the decisions of the other reviewers. Using unblinded data for the title and abstract screening enabled the review team to resolve disagreements during the review process, at designated screening alignment meetings where the inclusion and exclusion criteria were reviewed and discussed in the context of each conflicted source. After title- and abstract-level screening, the 638 remaining papers were moved off platform and reviewed. At this stage, each reviewer was fully blind to the classifications made by the other reviewers. The Analysis and Synthesis section provides additional details about the review process at each level of review.

2.4.1. Title-Level Screening

The initial screening was performed using the Rayyan platform screening tools. The screening began by removing sources that could be excluded with complete confidence. For example, because the topic of functional requirements analysis and function allocation between humans and automation shared terminology with software engineering requirements analysis, many sources were retrieved from the software engineering literature. These sources often addressed engineering requirements but did not discuss function allocation or functional requirements analysis. This left a substantial number of sources that superficially and liberally met the inclusion criteria. One challenge specific to this review is the overlap in terminology and scope between FA and task analysis. Many papers that discuss FA also briefly discuss the task analysis performed to determine the required set of functions. It was often not clear from the source title what the focus of the paper would be. This ambiguity was one reason why so many sources required screening at the abstract level.

2.4.2. Abstract-Level Screening

After assessing the title for relevance, 2,088 sources remained. Each of the 2,088 sources was reviewed by two reviewers, working independently in Rayyan. Reviewers worked without discussion and relied solely on the information available in Rayyan and the review protocol. When conflicts occurred, Rayyan flagged the conflict, and it was discussed between the reviewers at a specific conflict resolution meeting. If they could not agree based solely on the information available in the abstract, the paper’s full text was reviewed and then the paper was classified as included/excluded.
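The abstract-level workflow (two independent decisions per source, automatic conflict flagging, then a recorded resolution) can be sketched as follows. This is a minimal sketch, assuming hypothetical source IDs and decisions; `resolutions` is an illustrative stand-in for the outcome of the conflict resolution meeting or the follow-up full-text check, and it does not reproduce how Rayyan stores decisions.

```python
# Minimal sketch of dual independent screening with conflict flagging.
# Assumptions: source IDs and decisions are hypothetical; `resolutions` stands
# in for the outcome of the conflict resolution meeting (or full-text check).


def find_conflicts(a: dict[str, str], b: dict[str, str]) -> list[str]:
    """IDs of sources that both reviewers screened but classified differently."""
    return sorted(sid for sid in a.keys() & b.keys() if a[sid] != b[sid])


def percent_agreement(a: dict[str, str], b: dict[str, str]) -> float:
    """Share of jointly screened sources on which the reviewers agreed."""
    shared = a.keys() & b.keys()
    if not shared:
        raise ValueError("reviewers have no sources in common")
    return sum(a[sid] == b[sid] for sid in shared) / len(shared)


def resolve(a: dict[str, str], b: dict[str, str], resolutions: dict[str, str]) -> dict[str, str]:
    """Final decisions: agreements stand; conflicts need a recorded resolution."""
    final = {}
    for sid in sorted(a.keys() & b.keys()):
        if a[sid] == b[sid]:
            final[sid] = a[sid]
        elif sid in resolutions:
            final[sid] = resolutions[sid]
        else:
            raise ValueError(f"unresolved conflict for source {sid}")
    return final


reviewer_1 = {"S1": "include", "S2": "exclude", "S3": "include", "S4": "exclude"}
reviewer_2 = {"S1": "include", "S2": "include", "S3": "include", "S4": "exclude"}

print(find_conflicts(reviewer_1, reviewer_2))  # sources to discuss at the meeting
print(percent_agreement(reviewer_1, reviewer_2))
print(resolve(reviewer_1, reviewer_2, {"S2": "include"}))
```

Raw percent agreement is used only for simplicity; a chance-corrected statistic such as Cohen's kappa would be a more conservative summary if inter-rater reliability were reported.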

2.4.3. Full-Text Screening

The third level of screening was based on a quick review of the full text, meaning that reviewers read the source for understanding in the context of the evaluation criteria. Following this initial reading, the text was reviewed comprehensively with the goal of extracting the data necessary to answer the research questions (see Figure 3). For white papers, reviews, and similar documents, the introductory material was reviewed. For experimental studies, both the introduction and methods sections were examined to determine whether the source met the inclusion criteria.

2.4.4. Backwards Forwards Search

The backwards forwards method is a technique used to reduce the chance that resources are missed because of limitations in the selection of keywords or databases. The premise is that each paper screened into the review contains a list of references relevant to its topic. The reference list for each paper screened into the review was examined, and these references were then compared to the full set of sources listed in Rayyan. Sources that had already been evaluated were not evaluated a second time. New sources were evaluated against the review criteria described in Figure 2. These reference lists can fill gaps left by the forward search process [12]. This technique is also well suited to surfacing older and foundational studies. Resources classified as new were then screened for potential inclusion in the screened-in literature set (see Review and Classification of Citation Data for screening methods). The backwards search uncovered an additional 102 items, of which 44 met the inclusion criteria and were screened into the review.
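The comparison step of the backwards search is essentially a set difference: everything cited by an included paper, minus everything already evaluated. This is a minimal sketch, assuming references have already been normalized to comparable identifiers (e.g., DOIs); the IDs are hypothetical.

```python
# Minimal sketch of the backwards (reference-list) search step.
# Assumptions: references are already normalized to comparable identifiers
# (e.g., DOIs); the IDs below are hypothetical.


def backwards_candidates(
    included_refs: dict[str, list[str]], already_screened: set[str]
) -> set[str]:
    """References cited by included papers that were not screened before."""
    cited: set[str] = set()
    for refs in included_refs.values():
        cited.update(refs)
    return cited - already_screened  # previously evaluated sources are skipped


included_refs = {
    "P1": ["R1", "R2", "R3"],
    "P2": ["R2", "R4"],
}
already_screened = {"P1", "P2", "R1", "R2"}

new_items = backwards_candidates(included_refs, already_screened)
print(sorted(new_items))  # these still go through the Figure 2 criteria
```

The sketch covers only candidate identification; in the review, this step yielded 102 new items, each of which was then screened against the inclusion criteria, with 44 retained.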

3. Results

3.1. Literature Characteristics

This review provided an opportunity to characterize the development and popularity of function allocation as a focus of research. Because the technical basis for NRC’s guidance was published in 1983, a primary motivation for this research was to understand what new research is available and how the research has changed.

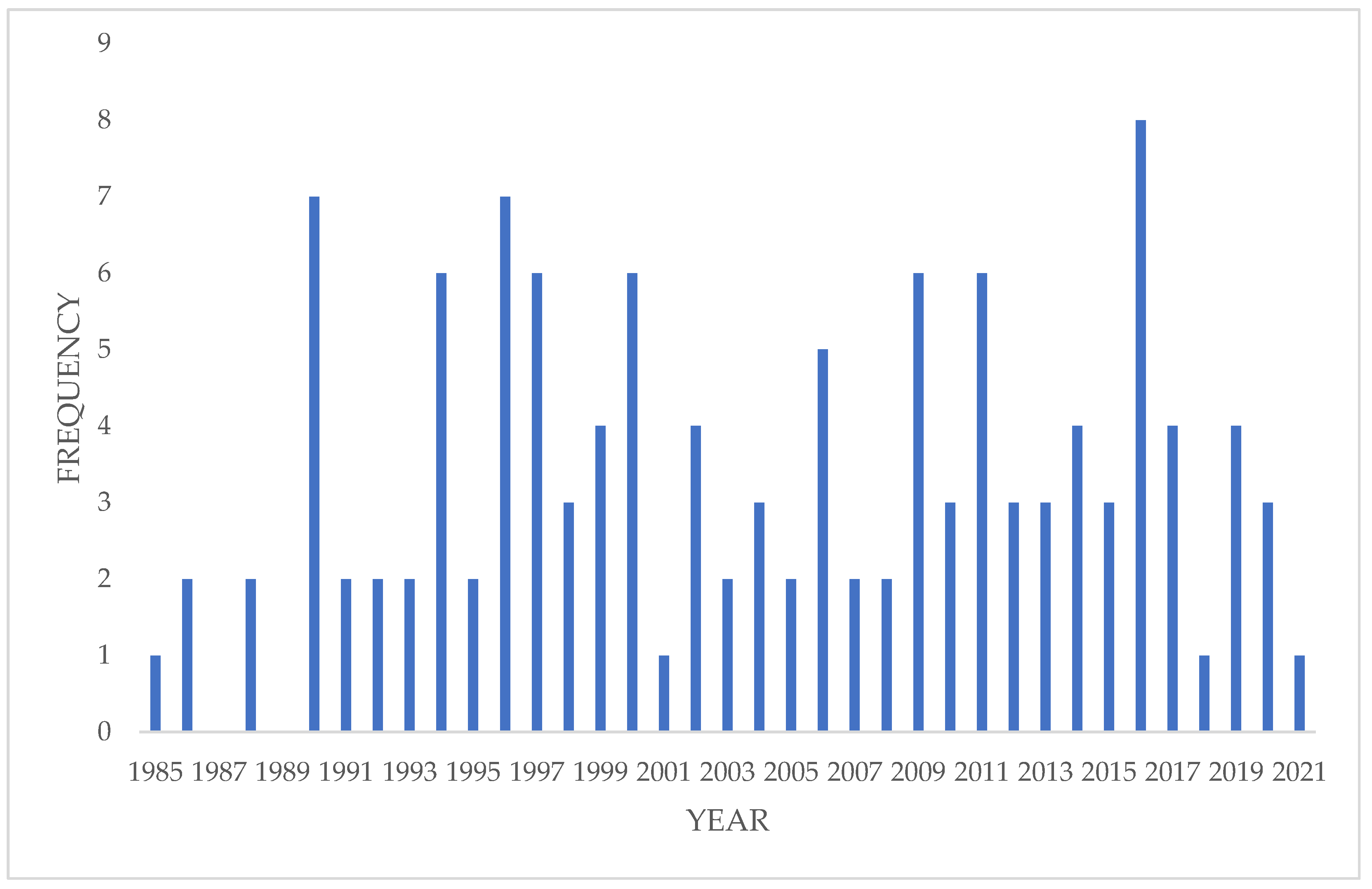

3.2. Publications by Year

Overall, the annual frequency of publications showed an upward, bimodal trend (Figure 4). This suggests that interest in function allocation methods has increased as technology has advanced.

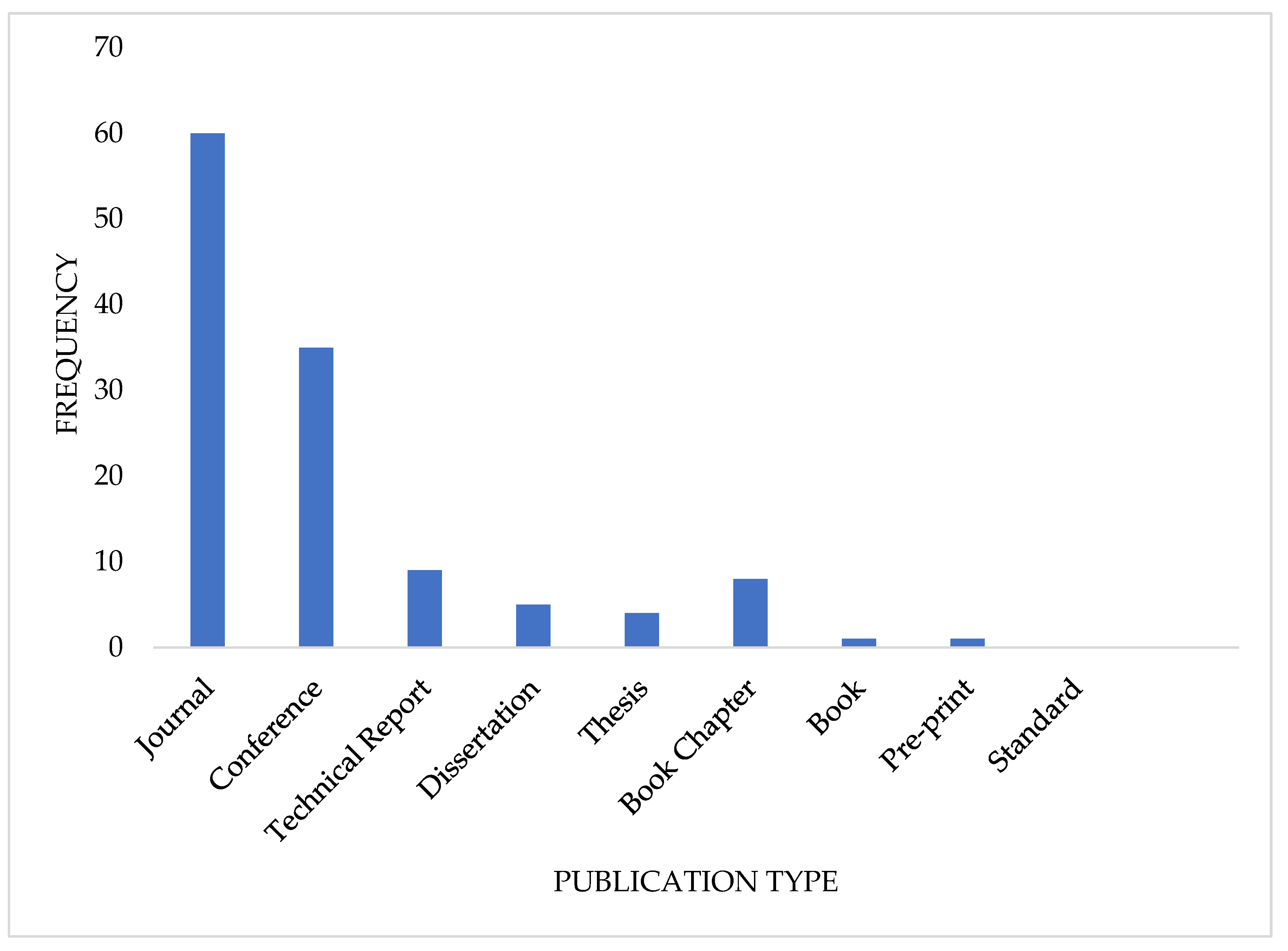

3.3. Publication Types

The distribution of sources was analyzed by publication type (Figure 5). Most sources were published in peer-reviewed journals, followed by conference proceedings. There were far fewer books and book chapters, and limited student work (e.g., dissertations or theses), among the sources that met the review inclusion criteria. One limitation of the current study is that technical reports from manufacturers, government agencies, and private companies are often difficult to access, so the low number of technical reports may be due to the limited availability of this type of source.

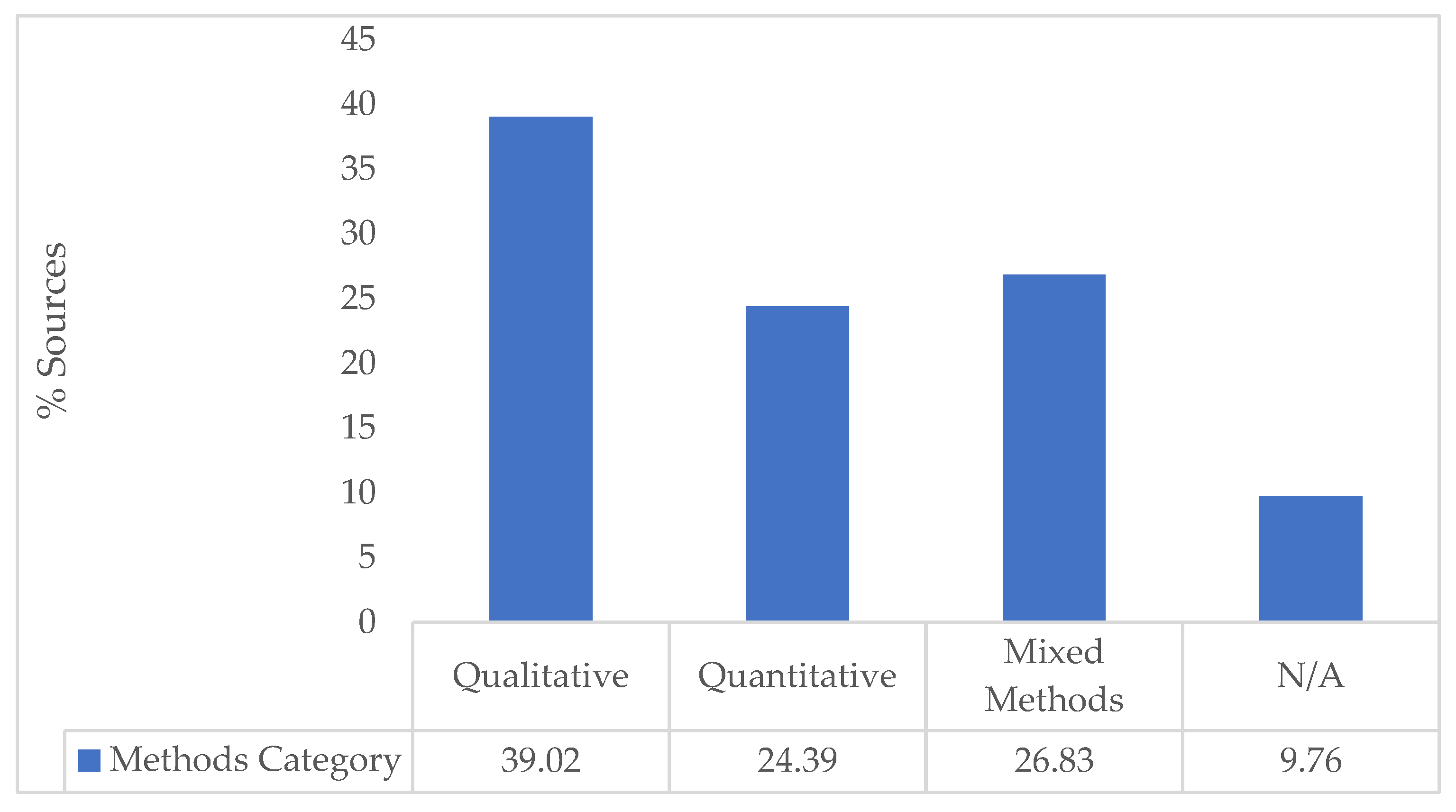

3.4. Representation of Methodological Approaches

Figure 6 provides a breakdown of the frameworks described in the literature by general methodological approach, specifically, whether the framework is based on qualitative or quantitative data or represents a mixed methods approach. More than 39% of the 123 sources screened into the review were clearly qualitative. Qualitative frameworks provide a set of descriptive criteria for different allocation configurations without applying any quantifiable metrics as the basis for allocation descriptions. Quantitative frameworks represented nearly 25% of the screened-in papers. These frameworks typically relied on a continuum approach to automation “level” and often included modeling and/or simulation of the operational environment. They also included quantitative metrics for human performance as part of an evaluation strategy to assess the efficacy of the allocations, which can support more realistic allocation decisions. Model- and simulator-based function allocation methods also tended to use real-time allocation triggers. Mixed methods approaches were the second most common approach. The mixed methods frameworks often included one of the more popular LOA frameworks (e.g., [13, 14]), in which the configuration of human and automation was categorical and used discrete levels, but relied on quantitative metrics from the human and/or system as the basis for the initial allocations and similarly used quantitative metrics to validate the allocations.

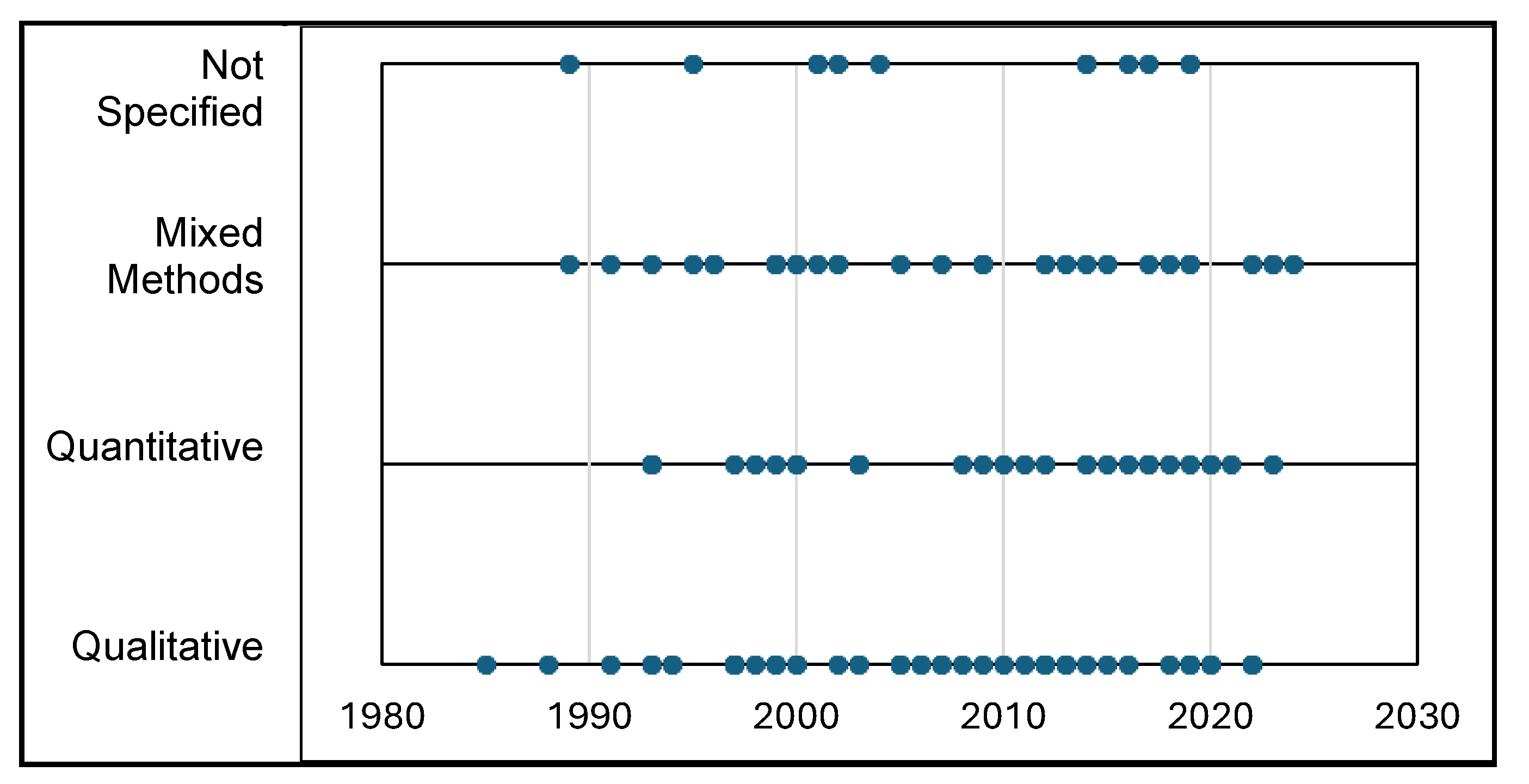

3.5. Are Quantitative Approaches Newer?

At the outset, a goal of this systematic literature review (SLR) was to identify changes in the literature since the publication of the technical basis for NRC’s guidance on FA and FRA. The distribution of qualitative relative to quantitative approaches over time was of particular interest because improvements in technology since 1983 have influenced not only the types of tasks that can be allocated to non-human agents but also the availability of tools and techniques for performing FA. To assess methodological changes over time, each approach described in the screened-in literature was classified at a high level as qualitative, quantitative, or mixed methods.

Figure 7 visualizes the methodological categories as a function of publication year. Visual inspection of the graph suggests that quantitative frameworks emerged approximately five years later than qualitative frameworks; however, this difference was not statistically significant (r = .04, p = .61).

4. Discussion

4.1. The Benefits and Limitations of Different FA Methods Categories

Determining which functions to allocate to which agent can be influenced by numerous human, system, and non-human agent factors. There is no inherently “good” or “bad” framework-based approach to FA; qualitative, quantitative, and mixed methods frameworks can all be effective FA strategies. The specific allocation approach selected by system designers and researchers will be highly dependent on the operational context, the type and sophistication of the non-human agent(s), domain-specific risk considerations, and the maturity of the design. One hundred twelve of the sources included in the review discussed LOA frameworks, often comparing static to dynamic approaches and the limitations of humans and non-human agents as part of an overall assessment of a potential allocation approach. The remaining papers discussed function allocation from a collaborative or joint control perspective. These perspectives tended to be used in the context of human-robot collaboration but also appeared in the aviation literature.

Figure 5 and Figure 6 provide a breakdown of the general methodological approach for LOA and other kinds of FA frameworks. Importantly, even when the general structure of an FA approach was consistent with the most common qualitative LOA framework developed by [13] and later [14], that structure did not preclude the use of quantitative methods to measure human or agent performance and to use those data to trigger shifts between LOAs dynamically.

When a qualitative framework was discussed as a standalone framework, it was generally used as a means to make sense of a complex joint human-autonomous agent system that is controlled by both humans and non-human agents engaged in different tasks or in different aspects of the same task. Qualitative frameworks were often applied when allocations were static or adaptable (human initiated). The basis for allocation decisions varied but generally included discussions with experts, division of tasks based on level of cognitive processing, or operating experience (e.g., [15]). These kinds of allocation strategies do not explicitly require direct measurement of the human and non-human agent within the operational context. The review also found that most of the qualitative allocation frameworks were based on LOA and were descendants of [13] and/or [14]. Those that were not explicitly tied to [13] or [14] were joint or supervisory control frameworks based on complementary principles. This difference in representation between LOA frameworks and supervisory and joint control frameworks should be interpreted with care: supervisory and joint control frameworks are most commonly found in the driving literature, which this review excluded because the human tasks and use cases generally are too different from nuclear process control, calling into question the applicability of frameworks from that domain. Although the domains included in this review did occasionally use supervisory or joint control frameworks, these were less common than the hierarchical LOA frameworks (e.g., [13,14]).

The origins of the quantitative methods appeared to be more varied than those of the qualitative frameworks. A limitation of the qualitative frameworks, from the perspective of a potential update to FA guidance, is that they offer no clear fit for systems that would assign critical safety functions to a potential mixture of humans, non-human agents, inherent safety characteristics, and passive safety features. Quantitative approaches, while not significantly newer, tend to give the impression of newness because they are typically used in the context of dynamic and adaptive automation and with more advanced forms of autonomy, including robotic applications [16,17,18,19]. For example, [17] used a two-stage method to allocate functions between a human and a robotic agent on a device assembly task. In stage 1, initial task allocations were based on task demand weights estimated algorithmically. In stage 2, completion time and errors were monitored in real time. If the human assembler slowed down or made too many errors, the robotic agent was assigned more tasks until the human’s performance improved. Once performance improved, the task load was shifted back to the baseline allocation.
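The two-stage method in [17] can be sketched roughly as follows. This is not the authors' algorithm: the demand weights, the limits on completion time and errors, the rule of giving each task to the agent with the lower demand weight, and the shift of one task per monitoring step are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    human_demand: float  # hypothetical demand weight if the human performs the task
    robot_demand: float  # hypothetical demand weight if the robot performs the task


def baseline_allocation(tasks):
    """Stage 1 (sketch): give each task to the agent with the lower demand weight."""
    return {
        t.name: "human" if t.human_demand <= t.robot_demand else "robot"
        for t in tasks
    }


def adjust_allocation(baseline, completion_time_s, errors,
                      max_time_s=60.0, max_errors=2, shift_count=1):
    """Stage 2 (sketch): react to monitored human completion time and errors.

    If either measure exceeds its (hypothetical) limit, the first `shift_count`
    human tasks move to the robot; otherwise the baseline allocation is returned.
    """
    if completion_time_s <= max_time_s and errors <= max_errors:
        return dict(baseline)  # performance acceptable: revert to baseline
    adjusted = dict(baseline)
    human_tasks = [name for name, agent in baseline.items() if agent == "human"]
    for name in human_tasks[:shift_count]:
        adjusted[name] = "robot"
    return adjusted


tasks = [Task("insert pin", 0.3, 0.6), Task("fasten screw", 0.7, 0.4),
         Task("inspect part", 0.2, 0.9)]
base = baseline_allocation(tasks)
print(adjust_allocation(base, completion_time_s=75.0, errors=1))
```

Because each monitoring step starts from the baseline, the allocation returns to the baseline as soon as the monitored measures fall back within limits, which mirrors the behavior described for [17].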

Dynamic and adaptive allocation methods are also commonly referenced among strategies to keep the human in the loop (see Section 4.4 for details on various HITL strategies). When working under dynamic FA, the human remains in control of critical tasks, and the autonomous agent performs routine or repetitive work. If the human is underloaded, tasks can be automatically reallocated to the human, which helps the human retain the skills needed for manual control. When the human is overloaded, the system can automatically reallocate the human’s tasks to balance workload. To reallocate tasks dynamically and effectively, a quantitative approach is required. In the literature reviewed, dynamic allocation was based on measures related to performance (of the human or the autonomous agent), physiological measures, or estimates of the consequences of a particular allocation derived from similar operational contexts or experimental data.
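A minimal sketch of one workload-driven reallocation step is shown below, assuming a normalized workload estimate in [0, 1] (which might be derived from performance or physiological measures) and hypothetical underload and overload thresholds. Critical tasks never leave the human, consistent with the description above; the function and task names are illustrative only.

```python
def reallocate(allocation, critical, workload, low=0.3, high=0.8):
    """Return a new allocation after one dynamic-reallocation step.

    allocation: task name -> "human" or "agent"
    critical:   task names that always stay with the human
    workload:   normalized operator workload estimate in [0, 1]
    low, high:  hypothetical underload / overload thresholds
    """
    new = dict(allocation)
    if workload > high:
        # Overload: hand one non-critical human task to the agent.
        for task, who in allocation.items():
            if who == "human" and task not in critical:
                new[task] = "agent"
                break
    elif workload < low:
        # Underload: give one agent task back to the human to keep skills in use.
        for task, who in allocation.items():
            if who == "agent":
                new[task] = "human"
                break
    return new


alloc = {"monitor core": "human", "log readings": "human", "valve lineup": "agent"}
print(reallocate(alloc, critical={"monitor core"}, workload=0.9))
```

Moving one task per step is a deliberate simplification; a real scheme would also need rules to prevent rapid back-and-forth switching.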

Additionally, quantitative approaches to allocation may be better positioned to integrate the status of passive safety features and inherent safety characteristics and to capture the effectiveness, efficiency, and system load associated with the metrics that indicate the status of those systems. For example, the “actions” of inherent safety characteristics and passive safety features can be modeled using digital twin technology, and the resulting parameters under simulated normal, emergency, and accident conditions can be incorporated into quantitative FA models. Such models could provide better estimates of the human’s task load under a given allocation when the human is supported by both the autonomous agent and the inherent safety characteristics and passive safety features. A quantitative allocation framework developed by Hilburn, Molloy, Wong, and Parasuraman [20] provides a dimensional structure that is flexible enough to take into consideration actions beyond those typically attributed to human and non-human agents. Their framework includes an authority dimension, which is equivalent to the level of automation in [13]; an automation invocation strategy (the specific automation triggers); and a task strategy. The task strategy includes three types of allocation: (1) giving an entire task to one agent; (2) partitioning, separating tasks based on expertise (e.g., a human retains decision making while the non-human agent performs target identification to support decision making); and (3) transformation, where a task is changed by the allocation. The framework in [20] also includes an automation cycle dimension, which captures the frequency of automation status changes, and a decision stability dimension, which can be another factor in determining which agent or system should be assigned a specific task or portion of a task. All these dimensions present tradeoffs that will affect the success of both the human and non-human aspects of a system. Through the lens of the Hilburn framework, FA is agnostic to “who” performs a task. It emphasizes effectiveness for the system holistically, which could include passive safety features and inherent safety characteristics in addition to the human and non-human agent.
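One way to make the dimensions of [20] concrete is to record each candidate allocation as a structured design point. The field names, the 0-to-1 decision-stability scale, and the churn limit below are hypothetical choices, not part of the published framework. The `performer` field is deliberately free text so that a passive safety feature can be recorded in the same way as a human or an AI agent.

```python
from dataclasses import dataclass
from enum import Enum


class TaskStrategy(Enum):
    WHOLE_TASK = "entire task given to one agent"
    PARTITIONING = "task split by expertise"
    TRANSFORMATION = "task changed by the allocation"


@dataclass(frozen=True)
class AllocationDesign:
    """One point in a Hilburn-style design space (field names are hypothetical)."""
    task: str
    performer: str                  # human, AI agent, active or passive safety feature
    authority_level: int            # level of automation, e.g., on the scale in [13]
    invocation_trigger: str         # what invokes the automation
    task_strategy: TaskStrategy
    cycle_changes_per_hour: float   # automation cycle: how often status changes
    decision_stability: float       # hypothetical scale: 0 (volatile) to 1 (stable)

    def is_churning(self, max_changes_per_hour: float = 6.0) -> bool:
        """Flag designs whose automation status changes more often than a chosen limit."""
        return self.cycle_changes_per_hour > max_changes_per_hour


design = AllocationDesign("target identification", "AI agent", 5, "operator request",
                          TaskStrategy.PARTITIONING, 2.0, 0.8)
print(design.is_churning())
```

Recording alternatives this way lets a designer compare tradeoffs across dimensions side by side, for example preferring a stable, slowly cycling design when two options perform similarly.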

An example of how task allocation works when distributing between humans, non-human agents, and active and passive safety features is managing a steam generator tube rupture (SGTR) event. When the rupture occurs, the reactor will likely trip automatically. If the automatic trip fails, the human operator trips the reactor, in which case the human is the backup for an autonomous function. The operator follows the procedures to perform numerous tasks in quick succession to isolate the steam generator with the ruptured tube (e.g., trip main feedwater to the faulted steam generator, close the main steam isolation valve, close steam line drains and blowdown valves, and redirect auxiliary feedwater to the other steam generators). The operator’s actions to isolate the faulted generator are dictated by procedures, but these procedural actions could be allocated dynamically to automation while the operator focuses on tasks requiring higher-level decision making. While these actions and decisions are occurring, the task of cooling the reactor is “allocated” to an inherent safety characteristic of the system: natural circulation moves cooling water over the fuel to maintain core temperature in a safe range. The “actions” of the inherent safety characteristic of natural circulation would be represented in the control room human system interface (HSI), and the parameters associated with those actions could be monitored by either the operator or the non-human agent. The operator does not need to actively control cooling flow because it happens naturally.
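The SGTR narrative can be sketched as a table of functions, each with a primary and a backup performer. This is a hypothetical, heavily simplified encoding for illustration: the function names, the pairing of performers, and the `responsible_agent` helper are assumptions, not plant procedures.

```python
# Hypothetical, simplified encoding of the SGTR example: function -> (primary, backup).
SGTR_FUNCTIONS = {
    "trip reactor": ("reactor protection system", "operator"),
    # Procedural isolation steps; could be reallocated dynamically to automation.
    "isolate faulted steam generator": ("operator", "automation"),
    "remove core heat": ("natural circulation", None),  # inherent safety characteristic
    "monitor natural circulation": ("operator", "AI agent"),
}


def responsible_agent(function, available):
    """Return the performer for a function given the set of available performers."""
    primary, backup = SGTR_FUNCTIONS[function]
    if primary in available:
        return primary
    if backup is not None and backup in available:
        return backup
    return None  # no performer available: the gap should be surfaced on the HSI


# Automatic trip unavailable, so the operator acts as the backup.
print(responsible_agent("trip reactor", {"operator", "natural circulation"}))  # operator
```

Treating natural circulation as an entry in the same table reflects the point above that an inherent safety characteristic can carry a function that would otherwise need active control.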

4.2. Was Fitts List Represented in the Post-1983 Literature?

Few papers explicitly referenced Fitts List [2] and the HABA-MABA terminology; however, the influence of this early work was evident in the literature reviewed (see [21,22] for recent uses and modifications of Fitts List [2]; see also [23]). The stages and levels perspective [14] is a more contemporary approach to partitioning tasks based on the skills commonly associated with each role (i.e., human vs. automation). [14] argued that automation is best used for lower-level information processing tasks like perception and identification, and that humans should have authority over higher-level tasks like decision making. Many frameworks align on humans retaining decisional control because the current capabilities of non-human agents are not sufficient for those agents to develop and exercise judgment and expertise. This alignment represents a consensus that humans are better at (HABA) decision making and that non-human agents are better at (MABA) lower-level cognitive tasks. Additionally, nearly all sources that discussed strategies for keeping the human in the loop drew a categorical distinction between human and non-human agent tasks. For example, nearly all papers that discussed FA and human-in-the-loop issues explicitly stated that the human should retain authority over decision making and should be the only decider in high-risk operational contexts. A similar sentiment was directed at autonomous agents: they should perform routine or monotonous tasks unless the human is underloaded or is engaging in manual control to prevent deskilling.

The influence of Fitts List was evident in the supervisory and joint control frameworks; both are based on understanding the strengths and limitations of human and non-human agents and allocating tasks based on that understanding. These frameworks take a complementary perspective on function allocation, in which human and non-human agents are assigned tasks consistent with their individual strengths. The complementary perspective was also evident in frameworks that discussed stages of information processing, as those frameworks advocated that tasks requiring low levels of information processing (e.g., detection) be allocated to non-human agents, while tasks requiring higher levels of information processing, such as decision making, be retained by humans.

4.3. How Does FA Need to Change with Increased Use of AI?

In industrial process control, aviation, transportation, and military contexts, the FA literature tends to describe systems that meet the criteria for agentic AI as dynamic or adaptive automation. The shift from static to dynamic allocation and the development of algorithmic approaches to allocation enable these more advanced digital intelligence systems. Agentic AI is defined as an intelligence system that acts autonomously, pursues goals, takes action, and makes decisions with a degree of independence from a human partner or teammate. The only distinction between the advanced forms of autonomy reviewed in this paper and agentic AI is that, in the former, the non-human agent lacks decision-making authority. In dynamic and adaptive allocation, the non-human agent could be allocated all the tasks required to support decision making while still deferring to the human, who makes the decision based on their understanding of the non-human agent’s prior actions. One could argue that the quantitative FA frameworks that emerged in the 1990s [24,25,26,27] contributed to the foundational allocation schemes incorporated into agentic AI systems used in non-commercial driving, AI personal assistants, and game agents.

4.4. Common and Less Common Human in the Loop Strategies

One hundred and eleven out of 123 papers discussed strategies for keeping humans in the loop when working with some type of autonomous agent. The remaining 12 papers either did not include manipulations and/or suggestions about factors influencing humans’ ability to maintain SA (i.e., stay in the loop) or provided high-level information that was narrowly focused on a specific operational context and system.

All the papers that discussed keeping the human in the loop advocated for, and presented evidence that, a dynamic allocation strategy was superior to a static allocation strategy for maintaining operator situation awareness (SA), managing workload, preventing deskilling, and avoiding delays and errors when transitioning control between the human and the autonomous agent. The majority of papers advocated for a human-controlled automation invocation strategy, in part to avoid loss of SA and automation surprises. The literature was mixed about the cues to be used as the basis for shifting allocations. Some papers advocated for or proposed human-centered dynamic allocation triggers, such as physiological or performance metrics. Others focused on task-based metrics, such as changes in the number of tasks or increases in the task rate. Either human- or task-based strategies could be used for real-time dynamic allocation, or they could be established as “preset” dynamic allocation thresholds. A few papers discussed task allocation on the basis of estimated uncertainty or complexity. These papers suggested that tasks with high uncertainty or complexity would need to remain with the human to ensure the human remained in the loop, positing that humans are better than non-human agents at using contextual information and thus at navigating uncertainty. Additionally, when non-human agents perform routine or monotonous tasks, their behavior is predictable and stable, which also supports the maintenance of SA.
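The uncertainty and complexity recommendations above, together with the stages-of-processing view discussed in Section 4.2, can be combined into a simple allocation rule. This is a sketch under stated assumptions: the stage labels, the normalized uncertainty and complexity estimates, and the 0.7 threshold are hypothetical, and any stage other than perception or identification defaults to the human.

```python
LOWER_STAGES = {"perception", "identification"}  # hypothetical stage labels


def allocate_by_stage(stage, uncertainty, complexity, threshold=0.7):
    """Return "human" or "agent" for a task.

    uncertainty, complexity: normalized estimates in [0, 1].
    threshold: hypothetical cut-off above which a task stays with the human.
    """
    if uncertainty >= threshold or complexity >= threshold:
        return "human"  # high uncertainty or complexity: context-dependent judgment
    if stage in LOWER_STAGES:
        return "agent"  # lower-level information processing goes to the agent
    return "human"      # decision making and any other stage stay with the human


print(allocate_by_stage("identification", uncertainty=0.2, complexity=0.4))
```

Checking uncertainty and complexity first means a normally automatable perception task is still kept with the human when conditions are unusual.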

The literature was also consistent in its recommendations for levels of automation. Overwhelmingly, the papers reviewed advocated for a moderate level of automation. On the LOA scale in [13], this corresponds to levels 5 and 6, where the automation executes actions after human approval (management by consent) or executes an action and informs the operator, who can intervene within a specific timeframe (management by exception). Along with this advocacy for moderate levels of automation, the literature consistently cautioned against over-automation. Many papers argued that it was not beneficial to automate every task that could be automated. The literature suggested that system designers should evaluate the full scope of operator tasks (often through the lens of stages of information processing; see [28,29,30] for examples) in the context of human strengths and weaknesses and use a partial automation strategy. Partial automation means that the automation assists with lower-level cognitive tasks like perception and identification, while the human retains higher-level tasks like decision making. A partial automation strategy and/or a moderate level of automation is argued to prevent human out-of-the-loop problems by avoiding deskilling, maintaining engagement, and sustaining situation awareness, while managing attention and workload. An alternative approach to preventing operator deskilling is an intermittent manual control (reversion) strategy, in which the operator temporarily takes control of automated tasks [20]. The few papers that did not provide evidence for or against any automation strategy aimed at keeping humans in the loop were framework papers representing a first step in proposing an approach to FA.
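The difference between levels 5 and 6, as described above, can be sketched as a rule for whether an automated action stands. The 30-second intervention window and the function name are assumptions for illustration; an actual window would be set by the design and its supporting analyses.

```python
def action_stands(level, approved=False, intervention_time_s=None, window_s=30.0):
    """Return True if an automated action proceeds and is left in place.

    level 5 (management by consent): the action executes only after approval.
    level 6 (management by exception): the action executes, the operator is
    informed, and an intervention within `window_s` seconds overrides it.
    """
    if level == 5:
        return approved
    if level == 6:
        if intervention_time_s is None:
            return True  # no intervention: the action stands
        return intervention_time_s > window_s  # an intervention after the window does not override
    raise ValueError("this sketch covers only levels 5 and 6")


print(action_stands(5, approved=True))
print(action_stands(6, intervention_time_s=12.0))
```

The key design difference is the default: at level 5 inaction by the operator blocks the action, whereas at level 6 inaction lets it proceed.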

Many of the papers reviewed discussed the advantages and disadvantages of using real-time versus static automation triggers. Real-time triggers could include human performance or physiological triggers, or system-based triggers such as a change in the event rate. Static automation triggers can also be human- or system-centric. When static triggers are used, designers often establish workload thresholds for different system conditions through task analysis, simulation, and modeling. Those thresholds then consistently and predictably trigger a change in the level of automation. Related to triggering changes in the level of automation, and thus in task allocations, many papers identified HSI design as a key factor in keeping humans in the loop. To ensure that humans and autonomous agents can act as an integrated team, the information display needs to include information about the status, availability, and actions of the agent. The control system would also need to include ways to redirect, correct, engage, or disengage the autonomous agent. This feedback and bidirectional communication supports the maintenance of SA and efficient human-automation collaboration, and it helps prevent deskilling and automation surprises.
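The contrast between static and real-time triggers can be sketched with two small functions. Everything here is hypothetical: the condition names, the preset thresholds on a normalized workload scale (assumed to have been set offline through task analysis, simulation, or modeling), and the event-rate window and limit.

```python
# Hypothetical preset thresholds on a normalized workload scale, assumed to have
# been set offline (e.g., through task analysis, simulation, or modeling).
PRESET_THRESHOLDS = {"normal": 0.8, "abnormal": 0.7, "emergency": 0.6}


def static_trigger(condition, predicted_workload):
    """Preset trigger: shift LOA when predicted workload exceeds the condition's threshold."""
    return predicted_workload > PRESET_THRESHOLDS[condition]


def realtime_trigger(event_times_s, now_s, window_s=60.0, max_events=5):
    """System-based real-time trigger: shift LOA when the recent event rate is too high."""
    recent = [t for t in event_times_s if now_s - window_s <= t <= now_s]
    return len(recent) > max_events


print(static_trigger("emergency", 0.65))
print(realtime_trigger([1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0], now_s=10.0))
```

The static version is predictable because its thresholds are fixed in advance; the real-time version responds to what is actually happening but may change the LOA at less predictable moments.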

[31] suggested that, when working with autonomous agents, the human needs to maintain awareness of what other agents are doing, understand why they are doing it (rationale awareness), and have some ability to predict what the agent will do next (intention awareness). The framework proposed in [31] views the relationship between human and automation as collaborative and suggests that the allocation of functions should be guided by whether tasks require expertise or are common sense/routine.
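The three kinds of awareness described in [31] suggest what an HSI status record for each agent might carry. The sketch below is a hypothetical record format, not one proposed in [31]; the field names and the example feedwater agent are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class AgentStatus:
    """Hypothetical HSI status record covering the awareness levels in [31]."""
    agent: str
    available: bool
    current_action: str  # what the agent is doing
    rationale: str       # why it is doing it (rationale awareness)
    next_action: str     # what it plans next (intention awareness)

    def display_line(self) -> str:
        state = "available" if self.available else "unavailable"
        return (f"{self.agent} [{state}]: {self.current_action} "
                f"because {self.rationale}; next: {self.next_action}")


status = AgentStatus("feedwater agent", True, "throttling AFW to SG-B",
                     "SG-B level below band", "hold level in band")
print(status.display_line())
```

Keeping the rationale and the next action alongside the current action is what distinguishes this from a plain status indicator.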

5. Conclusions

As in other complex industrial systems, the functions required to support safe nuclear power plant operations can be conceptualized using a levels of automation framework, and the human performance consequences of how functions are allocated under that framework can be characterized using quantitative, qualitative, or mixed method approaches. One of the major questions facing regulators, system designers, and operators is how to share functions among the onsite human(s), offsite human(s), intelligent agent(s), active safety systems, inherent safety characteristics, and passive safety features of the plant.

The inherent safety characteristics of a reactor are fundamental properties of the reactor design that are considered “fail-safe”. Hazards that cannot be eliminated are managed through the design of active or passive engineered systems, structures, or components. Active safety features may involve different levels of automation and expectations for human intervention depending on the context under which they are activated and their availability to be activated through external force (e.g., mechanical or electrical). As in the SGTR example in Section 4.1, the reactor protection system autonomously trips the reactor based on sensor data, but redirecting auxiliary feedwater away from the faulted generator to the remaining steam generators is performed by the human actor; the operator follows the procedure to manage the auxiliary feedwater system and relies on the motor-driven or turbine-driven pumps to complete the actions that redirect the flow of cooling water (an inherent safety characteristic). The human and various non-human “agents” mount a coordinated response to the SGTR event.

The literature review uncovered many system design considerations for keeping the human in the loop with both partially and fully autonomous systems. The most common recommendation for avoiding human out-of-the-loop issues with fully autonomous systems is to include information displays in the HSI that are dedicated to maintaining situation awareness of the active and passive features of the plant that are self-initiating and act on the system independently of human control. The HSI should also include a mechanism for communicating feedback and directions bidirectionally (human to non-human agent and non-human agent to human). Deskilling was flagged as a common challenge for operators of highly and fully automated systems. Maintaining manual skills in the context of highly autonomous systems is an important safety consideration and will require training and potentially changes to conduct of operations to ensure these skills are retained by operators. For example, Transportation Security Administration (TSA) baggage screeners receive training trials periodically intermixed with real screening tasks to ensure that their ability to detect extremely low-probability targets is retained [32]. The Department of Defense’s (DOD) Missile Defense Agency has control room operators train on a system that is identical to the operational system because they may never operate the real controls yet are chronically underloaded and need to maintain vigilance and readiness to take control [33].

Nuclear reactors have redundant safety systems, and some of these systems may be able to operate autonomously. It will be critical for designers of new and advanced reactors to determine what skills related to the active and passive safety features need to be maintained through either periodic control or training. Control rooms with highly autonomous systems (including passive safety features) will need HSIs that support effective monitoring so that, in the event of an emergency or accident, the operator is aware of all the resources available to bring the plant back to a safe state as quickly as possible. FA methods applied to these types of plants must account for both the availability of active and passive safety features and the cognitive demands associated with monitoring and maintaining a mental model of their behavior alongside the rest of the plant conditions.

These considerations point to a fundamental gap in how FA is currently conceptualized. Advanced reactor technologies increasingly blur the boundaries between humans, intelligent non-human agents, active safety features, and passive safety features, yet the traditional FA methods that are available have not evolved to address this integration. Traditional methods often treat these as distinct categories and are therefore insufficient for capturing the integrated nature of modern plant operations. A new approach may consider not only how functions are allocated across these ‘agents’ but also how human errors introduced during design, maintenance, monitoring, or operational decision-making may propagate through these tightly coupled systems. By accounting for these error pathways, function allocation methods may better support design and system decisions that enhance safety outcomes.

This perspective supports a more global dynamic or adaptive approach to function allocation. In such an approach, the full “universe of actions” would be evaluated without presupposing who or what should be performing each action. Instead, tasks may be allocated based on the capabilities, limitations, and available capacity of the different types of agents: the human operator, intelligent agents (i.e., AI), active safety features, and passive safety features of the plant. In this way, framing non-human agents, active safety features, and passive safety features as non-volitional forms of autonomy ensures that they are treated not as exceptional or separate categories outside of allocation but as part of the same decision space. This approach allows function allocation methods to capture both the availability of safety features and the cognitive demands associated with monitoring and maintaining a mental model of their behavior. Such an approach also supports a more balanced task load for human operators, which may lead to benefits such as reduced attention demand and improved awareness of overall plant status.
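The “universe of actions” idea can be sketched as allocation over a single pool of performers that includes passive features alongside humans and AI. This is a minimal sketch, assuming a greedy rule (each action goes to the capable performer with the highest capability score that still has capacity); the greedy rule is a swapped-in simplification, an optimization formulation could equally be used, and every score, load, and capacity below is hypothetical.

```python
def allocate_universe(actions, agents):
    """Greedy sketch: assign each action to the capable agent with the best fit.

    actions: list of (name, load) pairs, processed in order
    agents:  name -> {"capabilities": {action: score in [0, 1]}, "capacity": float}
    Ties in score are broken by agent name (tuple comparison in max()).
    """
    remaining = {name: spec["capacity"] for name, spec in agents.items()}
    plan = {}
    for action, load in actions:
        candidates = [
            (spec["capabilities"][action], name)
            for name, spec in agents.items()
            if action in spec["capabilities"] and remaining[name] >= load
        ]
        if not candidates:
            plan[action] = None  # no performer can take it: a design gap to resolve
            continue
        _, best = max(candidates)
        plan[action] = best
        remaining[best] -= load
    return plan


agents = {
    "operator": {"capabilities": {"diagnose": 0.9, "monitor": 0.6}, "capacity": 1.0},
    "AI agent": {"capabilities": {"monitor": 0.8, "isolate SG": 0.7}, "capacity": 2.0},
    "passive cooling": {"capabilities": {"remove decay heat": 1.0}, "capacity": 1.0},
}
actions = [("remove decay heat", 1.0), ("diagnose", 0.5), ("monitor", 0.5), ("isolate SG", 0.5)]
print(allocate_universe(actions, agents))
```

Because no performer type is special-cased, a passive feature that can carry an action simply wins it, and any action no one can take shows up explicitly as unallocated.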

5.1. Data-Driven and Simulator-Based Approaches

In recent years, there has been a concerted effort to develop nuclear control room simulators that are easier to use and develop and that cost less. [34] developed Rancor, a simplified digital control room simulator that can simulate different levels of autonomous control (see also [35,36]). This simulator technology was developed alongside a companion model, HUNTER, which can rapidly simulate large volumes of human performance data [37,38]. Like recent experiments from the NRC, this simplified control room generates data representative of real-world performance. Rather than transforming tasks to suit non-expert participants, Rancor and the NRC’s simplified simulator narrowed the scope of the simulated tasks to skill- and rule-based tasks [39] that would be represented the same way in the full-scope and simplified simulators and would be performed the same way by both operators and non-experts [40,41,42]. In both cases, by focusing primarily on rule- and skill-based tasks, large numbers of non-experts can contribute data that test different allocation strategies and reveal the benefits and pitfalls of different task allocation schemes. Simulator-based approaches will also be critical for assessing different task allocations for plants with inherent safety characteristics and passive safety features, because the simulator can be integrated with full-fidelity plant models or digital twins so that the impact of monitoring the fully autonomous, passive, and inherent aspects of the plant’s safety profile can be modeled in the context of realistic operational scenarios and task loads [43,44].

As illustrated by several papers proposing new FA methods or new ways to measure allocations, low- or medium-fidelity desktop simulations of operational environments and tasks are valuable as efficient, low-cost, and low-risk ways to iteratively assess different allocation configurations [45,46,47,48]. The research in [37,40,41,42] demonstrates that this approach is also potentially suitable for nuclear control room function allocation, as low- and medium-fidelity simulators can represent core task attributes with sufficient fidelity to be used in the system design process to estimate how human operators would perform under different allocation schemes. The need for human performance data to determine initial allocations, and to develop the models that control dynamic allocations, creates an opportunity for researchers to work within a more realistic context and for designers and licensees to approach their FA strategy iteratively and quantitatively, inspired by the research-based approach.
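The iterative comparison of allocation schemes described above can be illustrated with a toy Monte Carlo simulation. This is not Rancor or HUNTER and does not use their interfaces; the task names, mean durations, the ±20% multiplicative variability in human task times, and the assumption that the human and agent work in parallel are all hypothetical.

```python
import random
from statistics import mean

# Hypothetical mean task durations in seconds; None means the performer cannot do the task.
TASK_TIMES = {
    "check alarms": {"human": 20.0, "agent": 5.0},
    "verify trip": {"human": 15.0, "agent": 4.0},
    "isolate steam generator": {"human": 90.0, "agent": 30.0},
    "diagnose event": {"human": 60.0, "agent": None},
}


def simulate_trial(scheme, rng, human_sd=0.2):
    """One simulated trial of a scheme (task -> "human" or "agent").

    Each performer works through its own tasks sequentially, the two performers
    work in parallel, and human task times vary multiplicatively around the mean.
    """
    busy = {"human": 0.0, "agent": 0.0}
    for task, who in scheme.items():
        duration = TASK_TIMES[task][who]
        if duration is None:
            raise ValueError(f"{who} cannot perform {task!r}")
        if who == "human":
            duration *= max(0.1, rng.gauss(1.0, human_sd))  # floor avoids negative times
        busy[who] += duration
    return max(busy.values())  # scenario ends when the slower performer finishes


def compare_schemes(schemes, trials=1000, seed=0):
    """Mean simulated completion time for each named allocation scheme."""
    rng = random.Random(seed)
    return {name: mean(simulate_trial(scheme, rng) for _ in range(trials))
            for name, scheme in schemes.items()}


schemes = {
    "manual": {task: "human" for task in TASK_TIMES},
    "partial automation": {"check alarms": "agent", "verify trip": "agent",
                           "isolate steam generator": "agent", "diagnose event": "human"},
}
print(compare_schemes(schemes))
```

In practice the hypothetical duration table would be replaced by human performance data collected in simulator studies, which is the role the paragraph above assigns to low- and medium-fidelity simulators.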

5.2. Function Allocation Pre- and Post-1983: New Challenges for Long Standing Frameworks

Prior to the publication of the NRC’s guidance on function allocation, the predominant view was in line with Fitts List [2] and the HABA-MABA worldview. While technology has advanced considerably since that guidance was released, function allocation can still be guided by evaluating the benefits and limitations that the human agent brings to the table and the benefits and limitations that non-human agents bring to the table. [49] found that over-automation can cause the human agent to lose awareness of the state of the system and the actions of the automation. Newer FA methods provide many options for assessing the complexity of human-autonomous agent interactions and a variety of ways to trigger, manage, and measure autonomous support systems. The NRC’s decision to revisit existing guidance introduced a novel challenge to human-autonomous agent teaming: when critical safety functions are allocated to parts of the system that act autonomously but non-volitionally, what is the best framework to systematically trigger, manage, monitor, and reallocate the functions allocated to human operators, intelligent agents, active safety features, and passive safety features?

Appendix A

This citation list includes the entire set of 123 papers screened into the literature review on function allocation.

References

- Abbass, H. A. Social integration of artificial intelligence: functions, automation allocation logic and human-autonomy trust. Cognitive Computation 2019, 11(2), 159–171. [Google Scholar] [CrossRef]

- Andersson, J.; Osvalder, A. L. Automation inflicted differences on operator performance in nuclear power plant control rooms. In Nordisk Kernesikkerhedsforskning; Roskilde (Denmark), 2007; p. No. NKS--152. [Google Scholar]

- Baird, I. C. The development of the human-automation behavioral interaction task (HABIT) analysis framework. Master’s thesis, Wright State University, 2019. [Google Scholar]

-

Analysis techniques for human-machine systems design: A report produced under the auspices of NATO defence research group panel 8; Beevis, D., Ed.; Crew Systems Ergonomics/Human Systems Technology Information Analysis Center, 1999. [Google Scholar]

- Beevis, D.; Essens, P.; Schuffel, H. No. CSERIACSOAR9601; Improving function allocation for integrated systems design. Crew System Ergonomics Information Analysis Center, 1996.

- Behymer, K. J.; Flach, J. M. From autonomous systems to sociotechnical systems: Designing effective collaborations. She Ji: The Journal of Design, Economics, and Innovation 2016, 2(2), 105–114. [Google Scholar] [CrossRef]

- Bevacqua, G.; Cacace, J.; Finzi, A.; Lippiello, V. Mixed-initiative planning and execution for multiple drones in search and rescue missions. Proceedings of the International Conference on Automated Planning and Scheduling 2015, Vol. 25, 315–323. [Google Scholar] [CrossRef]

- Bilimoria, K.D.; Johnson, W.W.; Schutte, P.C\. Conceptual framework for single pilot operations. In Proceedings of the International Conference on Human-Computer Interaction in Aerospace, 2014. [Google Scholar]

- Bindewald, J. M.; Miller, M. E.; Peterson, G. L. A function-to-task process model for adaptive automation system design. International Journal of Human-Computer Studies 2014, 72(12), 822–834. [Google Scholar] [CrossRef]

- Bloch, M.; Eitrheim, M.; Mackay, A.; Sousa, E. Connected, Cooperative and Automated Driving: Stepping away from dynamic function allocation towards human-machine collaboration. Transportation research procedia 2023, 72, 431–438. [Google Scholar] [CrossRef]

- Bost, J.R.; Malone, T.B.; Baker, C.C.; Williams, C.D. Human systems integration (HSI) in navy ship manpower reduction. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 1996. [Google Scholar]

- Boy, G. A. FlexTech: From Rigid to Flexible Human–Systems Integration. In Systems Engineering in the Fourth Industrial Revolution; 2019; pp. 465–481. [Google Scholar]

- Boy, G.A. Cognitive function analysis for human-centered automation of safety-critical systems. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 1998. [Google Scholar]

- Bye, A., Hollnagel, E., Hoffmann, M., & Miberg, A.B. (1999). Analysing automation degree and information exchange in joint cognitive systems: FAME, an analytical approach. IEEE International Conference on Systems, Man, and Cybernetics.

- Byeon, S.; Choi, J.; Hwang, I. A computational framework for optimal adaptive function allocation in a human-autonomy teaming scenario. IEEE Open Journal of Control Systems 2023, 3, 32–44. [Google Scholar] [CrossRef]

- Caldwell, B. S.; Nyre-Yu, M.; Hill, J. R. Advances in human-automation collaboration, coordination and dynamic function allocation. In Transdisciplinary Engineering for Complex Socio-technical Systems; IOS Press, 2019; pp. 348–359. [Google Scholar]

- Clamann, M. P.; Kaber, D. B. Authority in adaptive automation applied to various stages of human-machine system information processing. Proceedings of the Human Factors and Ergonomics Society Annual Meeting 2003, Vol. 47(No. 3), 543–547. [Google Scholar] [CrossRef]

- Clegg, C.; Ravden, S.; Corbett, M.; Johnson, G. Allocating functions in computer integrated manufacturing: a review and a new method. Behaviour & Information Technology 1989, 8(3), 175–190. [Google Scholar] [CrossRef]

- Cook, C.; Corbridge, C.; Morgan, C.; Turpin, E. Investigating methods of dynamic function allocation for Naval command and control. In Proceedings of the International Conference on People in Control (Human Interfaces in Control Rooms, Cockpits and Command Centres), 1999.

- Crouser, R. J.; Ottley, A.; Chang, R. Balancing human and machine contributions in human computation systems. In Handbook of Human Computation; Michelucci, P., Ed.; Springer, 2013; pp. 615–623.

- Cummings, M. L.; How, J. P.; Whitten, A.; Toupet, O. The Impact of Human–Automation Collaboration in Decentralized Multiple Unmanned Vehicle Control. Proceedings of the IEEE 2012.

- Cummings, M. M. Man versus machine or man + machine? IEEE Intelligent Systems 2014, 29(5), 62–69.

- De Greef, T.; Arciszewski, H. Triggering adaptive automation in naval command and control. In Frontiers in Adaptive Control; Cong, S., Ed.; INTECH Open Access Publisher, 2009; pp. 165–188.

- De Greef, T.; van Dongen, K.; Grootjen, M.; Lindenberg, J. Augmenting cognition: reviewing the symbiotic relation between man and machine. In Proceedings of the International Conference on Foundations of Augmented Cognition; Springer, 2007; pp. 439–448.

- Dearden, A.; Harrison, M.; Wright, P. Allocation of function: scenarios, context and the economics of effort. International Journal of Human-Computer Studies 2000, 52(2), 289–318.

- Dekker, S. W.; Woods, D. D. MABA-MABA or abracadabra? Progress on human–automation co-ordination. Cognition, Technology & Work 2002, 4(4), 240–244.

- Di Nocera, F.; Lorenz, B.; Parasuraman, R. Consequences of shifting from one level of automation to another: main effects and their stability. In Human Factors in Design, Safety, and Management; 2005; pp. 363–376.

- Eraslan, E.; Yildiz, Y.; Annaswamy, A. M. Shared control between pilots and autopilots: An illustration of a cyberphysical human system. IEEE Control Systems Magazine 2020, 40(6), 77–97.

- Feigh, K. M.; Dorneich, M. C.; Hayes, C. C. Toward a characterization of adaptive systems: A framework for researchers and system designers. Human Factors 2012, 54(6), 1008–1024.

- Fereidunian, A.; Lucas, C.; Lesani, H.; Lehtonen, M.; Nordman, M. Challenges in implementation of human-automation interaction models. In Proceedings of the 2007 Mediterranean Conference on Control & Automation, 2007.

- Frohm, J.; Lindström, V.; Stahre, J.; Winroth, M. Levels of automation in manufacturing. Ergonomia: An International Journal of Ergonomics and Human Factors 2008, 30(3).

- Gregoriades, A.; Sutcliffe, A. G. Automated assistance for human factors analysis in complex systems. Ergonomics 2006, 49(12-13), 1265–1287.

- Hancock, P. A.; Chignell, M. H. Adaptive Function Allocation by Intelligent Interfaces. In Proceedings of the 1st International Conference on Intelligent User Interfaces, 1993.

- Hardman, N. S. An empirical methodology for engineering human systems integration; Air Force Institute of Technology, 2009.

- Hardman, N.; Colombi, J. An empirical methodology for human integration in the SE technical processes. Systems Engineering 2012, 15(2), 172–190.

- Harris, W. C.; Hancock, P. A.; Arthur, E. J. The effect of taskload projection on automation use, performance, and workload. In Adaptive Function Allocation for Intelligent Cockpits; 1993.

- Hilburn, B.; Molloy, R.; Wong, D.; Parasuraman, R. Operator versus computer control of adaptive automation. In Proceedings of the 7th International Symposium on Aviation Psychology, 1993.

- Hoc, J. M.; Chauvin, C. Cooperative implications of the allocation of functions to humans and machines. Manuscript submitted for publication, 2011.

- Hollnagel, E. Control versus dependence: Striking the balance in function allocation. HCI (2) 1997, 243–246.

- IJtsma, M.; Ma, L. M.; Pritchett, A. R.; Feigh, K. M. Computational methodology for the allocation of work and interaction in human-robot teams. Journal of Cognitive Engineering and Decision Making 2019, 13(4), 221–241.

- IJtsma, M.; Ma, L. M.; Pritchett, A. R.; Feigh, K. M. Work dynamics of task work and teamwork in function allocation for manned spaceflight operations. In Proceedings of the 19th International Symposium on Aviation Psychology, 2017.

- Inagaki, T. Situation-Adaptive Autonomy: Dynamic Trading of Authority between Human and Automation. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 2000.

- Inagaki, T. Adaptive automation: design of authority for system safety. IFAC Proceedings Volumes 2003, 36(14), 13–22.

- Inagaki, T. Adaptive automation: Sharing and trading of control. In Handbook of Cognitive Task Design; Hollnagel, E., Ed.; CRC Press, 2003; pp. 147–169.

- Janssen, C. P.; Donker, S. F.; Brumby, D. P.; Kun, A. L. History and future of human-automation interaction. International Journal of Human-Computer Studies 2019, 131, 99–107.

- Jiang, X.; Kaewkuekool, S.; Khasawneh, M. T.; Bowling, S. R.; Gramopadhye, A. K.; Melloy, B. J. Communication between Humans and Machines in a Hybrid Inspection System. IIE Annual Conference. Proceedings, 2003.

- Johnson, M.; Bradshaw, J. M.; Feltovich, P. J. Tomorrow’s human–machine design tools: From levels of automation to interdependencies. Journal of Cognitive Engineering and Decision Making 2018, 12(1), 77–82.

- Johnson, P.; Harrison, M.; Wright, P. An evaluation of two function allocation methods. In Proceedings of the Second International Conference on Human Interfaces in Control Rooms, Cockpits and Command Centres, 2001.

- Jung, C. H.; Kim, J. T.; Kwon, K. C. No. IAEA-TECDOC-952; An integrated approach for integrated intelligent instrumentation and control system. International Atomic Energy Agency, 1997.

- Kaber, D. B.; Endsley, M. R. The Combined Effect of Level of Automation and Adaptive Automation on Human Performance with Complex, Dynamic Control Systems. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 1997.

- Kaber, D. B.; Riley, J. M. Adaptive automation of a dynamic control task based on secondary task workload measurement. International Journal of Cognitive Ergonomics 1999, 3(3), 169–187.

- Kaber, D. B.; Onal, E.; Endsley, M. R. Design of automation for telerobots and the effect on performance, operator situation awareness, and subjective workload. Human Factors and Ergonomics in Manufacturing & Service Industries 2000, 10(4), 409–430.

- Kaber, D. B.; Riley, J. M.; Tan, K. W.; Endsley, M. R. On the design of adaptive automation for complex systems. International Journal of Cognitive Ergonomics 2001, 5(1), 37–57.

- Kaber, D. B.; Segall, N.; Green, R. S.; Entzian, K.; Junginger, S. Using multiple cognitive task analysis methods for supervisory control interface design in high-throughput biological screening processes. Cognition, Technology & Work 2006, 8(4), 237–252.

- Kaber, D. B. Adaptive automation. In The Oxford Handbook of Cognitive Engineering; Lee, J. D., Kirlik, A., Eds.; Oxford University Press, 2013; pp. 865–872.

- Kidwell, B.; Calhoun, G. L.; Ruff, H. A.; Parasuraman, R. Adaptable and Adaptive Automation for Supervisory Control of Multiple Autonomous Vehicles. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 2012.

- Kim, S. Y.; Feigh, K.; Lee, S. M.; Johnson, E. A Task Decomposition Method for Function Allocation. In Proceedings of the AIAA Infotech@Aerospace Conference and AIAA Unmanned...Unlimited Conference, 2009.

- Klein, G.; Feltovich, P. J.; Bradshaw, J. M.; Woods, D. D. Common ground and coordination in joint activity. Organizational Simulation 2005, 53, 139–184.

- Knee, H. E.; Schryver, J. C. No. CONF-890555-8; Operator role definition and human system integration. Oak Ridge National Lab. (ORNL): Oak Ridge, TN (United States), 1989.

- Knisely, B. M.; Vaughn-Cooke, M. Accessibility Versus Feasibility: Optimizing Function Allocation for Accommodation of Heterogeneous Populations. Journal of Mechanical Design 2022, 144(3).

- Kovesdi, C. R. Addressing function allocation for the digital transformation of existing nuclear power plants. In Oil, Gas, Nuclear and Electric Power: Human Factors in Energy; 2022; Volume 54.

- Kovesdi, C. R.; Spangler, R. M.; Mohon, J. D.; Murray, P. No. INL/RPT-24-77684-Rev000; Development of human and technology integration guidance for work optimization and effective use of information. Idaho National Laboratory (INL): Idaho Falls, ID (United States), 2024.

- Lee, J. D.; Seppelt, B. D. Human factors in automation design. In Springer Handbook of Automation; Nof, S. Y., Ed.; Springer, 2009; pp. 417–436.

- Li, H.; Wickens, C. D.; Sarter, N.; Sebok, A. Stages and levels of automation in support of space teleoperations. Human Factors 2014, 56(6), 1050–1061.

- Liu, J.; Gardi, A.; Ramasamy, S.; Lim, Y.; Sabatini, R. Cognitive pilot-aircraft interface for single-pilot operations. Knowledge-Based Systems 2016, 112, 37–53.

- Lorenz, B.; Di Nocera, F.; Rottger, S.; Parasuraman, R. The Effects of Level of Automation on the Out-of-the-Loop Unfamiliarity in a Complex Dynamic Fault-Management Task during Simulated Spaceflight Operations. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 2001.

- Malasky, J. S. Human machine collaborative decision making in a complex optimization system. Doctoral dissertation, Massachusetts Institute of Technology, 2005.

- McGuire, J. C.; Zich, J. A.; Goins, R. T.; Erickson, J. B.; Dwyer, J. P.; Cody, W. J.; Rouse, W. B. No. NAS 1.26: 4374; An exploration of function analysis and function allocation in the commercial flight domain. NASA, 1991.

- Merat, N.; Louw, T. Allocation of Function to Humans and Automation and the Transfer of Control. In Handbook of Human Factors for Automated, Connected, and Intelligent Vehicles; Fisher, D. L., Horrey, W. J., Lee, J. D., Regan, M. A., Eds.; CRC Press, 2020; pp. 153–171.

- Milewski, A. E.; Lewis, S. H. Delegating to software agents. International Journal of Human-Computer Studies 1997, 46(4), 485–500.

- Mital, A.; Motorwala, A.; Kulkarni, M.; Sinclair, M.; Siemieniuch, C. Allocation of functions to humans and machines in a manufacturing environment: Part I—Guidelines for the practitioner. International Journal of Industrial Ergonomics 1994, 14(1-2), 3–29.

- Mital, A.; Motorwala, A.; Kulkarni, M.; Sinclair, M.; Siemieniuch, C. Allocation of functions to humans and machines in a manufacturing environment: Part II—The scientific basis (knowledge base) for the guide. International Journal of Industrial Ergonomics 1994, 14(1-2), 33–49.

- Morrison, J. G.; Gluckman, J. P.; Deaton, J. E. Report No. NADC-91028-60; Adaptive Function Allocation for Intelligent Cockpits. Office of Naval Technology, 1991.

- Mouloua, M.; Parasuraman, R.; Molloy, R. Monitoring Automation Failures: Effects of Single and Multi-Adaptive Function Allocation. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 1993.

- Naghiyev, A.; Mount, J.; Rice, A.; Sayce, C. Allocation of Function Method to support future nuclear reactor plant design. In Contemporary Ergonomics and Human Factors 2020.

- Neerincx, M. A. Harmonizing tasks to human knowledge and capacities. Doctoral dissertation, University of Groningen, 1995.

- Older, M. T.; Waterson, P. E.; Clegg, C. W. A critical assessment of task allocation methods and their applicability. Ergonomics 1997, 40(2), 151–171.

- Papantonopoulos, S.; Salvendy, G. Analytic Cognitive Task Allocation: a decision model for cognitive task allocation. Theoretical Issues in Ergonomics Science 2008, 9(2), 155–185.

- Parasuraman, R.; Mouloua, M.; Molloy, R.; Hilburn, B. Adaptive Function Allocation Reduces Performance Cost of Static Automation. In Proceedings of the 7th International Symposium on Aviation Psychology, 1993.

- Parasuraman, R.; Sheridan, T. B.; Wickens, C. D. A model for types and levels of human interaction with automation. IEEE Transactions on Systems, Man, and Cybernetics, Part A: Systems and Humans 2000, 30(3), 286–297.

- Pattipati, K. R.; Kleinman, D. L.; Ephrath, A. R. A dynamic decision model of human task selection performance. IEEE Transactions on Systems, Man, and Cybernetics 1983, 13(2), 145–166.

- Prevot, T.; Homola, J. R.; Martin, L. H.; Mercer, J. S.; Cabrall, C. D. Toward automated air traffic control: investigating a fundamental paradigm shift in human/systems interaction. International Journal of Human-Computer Interaction 2012, 28(2), 77–98.

- Prinzel, L. J.; Freeman, F. G.; Scerbo, M. W.; Mikulka, P. J.; Pope, A. T. A closed-loop system for examining psychophysiological measures for adaptive task allocation. The International Journal of Aviation Psychology 2000, 10(4), 393–410.

- Pritchett, A. R.; Kim, S. Y.; Feigh, K. M. Measuring human-automation function allocation. Journal of Cognitive Engineering and Decision Making 2014, 8(1), 52–77.

- Pritchett, A. R.; Kim, S. Y.; Feigh, K. M. Modeling human–automation function allocation. Journal of Cognitive Engineering and Decision Making 2014, 8(1), 33–51.

- Pritchett, A. R.; Bhattacharyya, R. P. Modeling the monitoring inherent within aviation function allocations. In Proceedings of the International Conference on Human-Computer Interaction in Aerospace, 2016.