Submitted: 01 December 2025

Posted: 04 December 2025

Abstract

Keywords:

1. Introduction

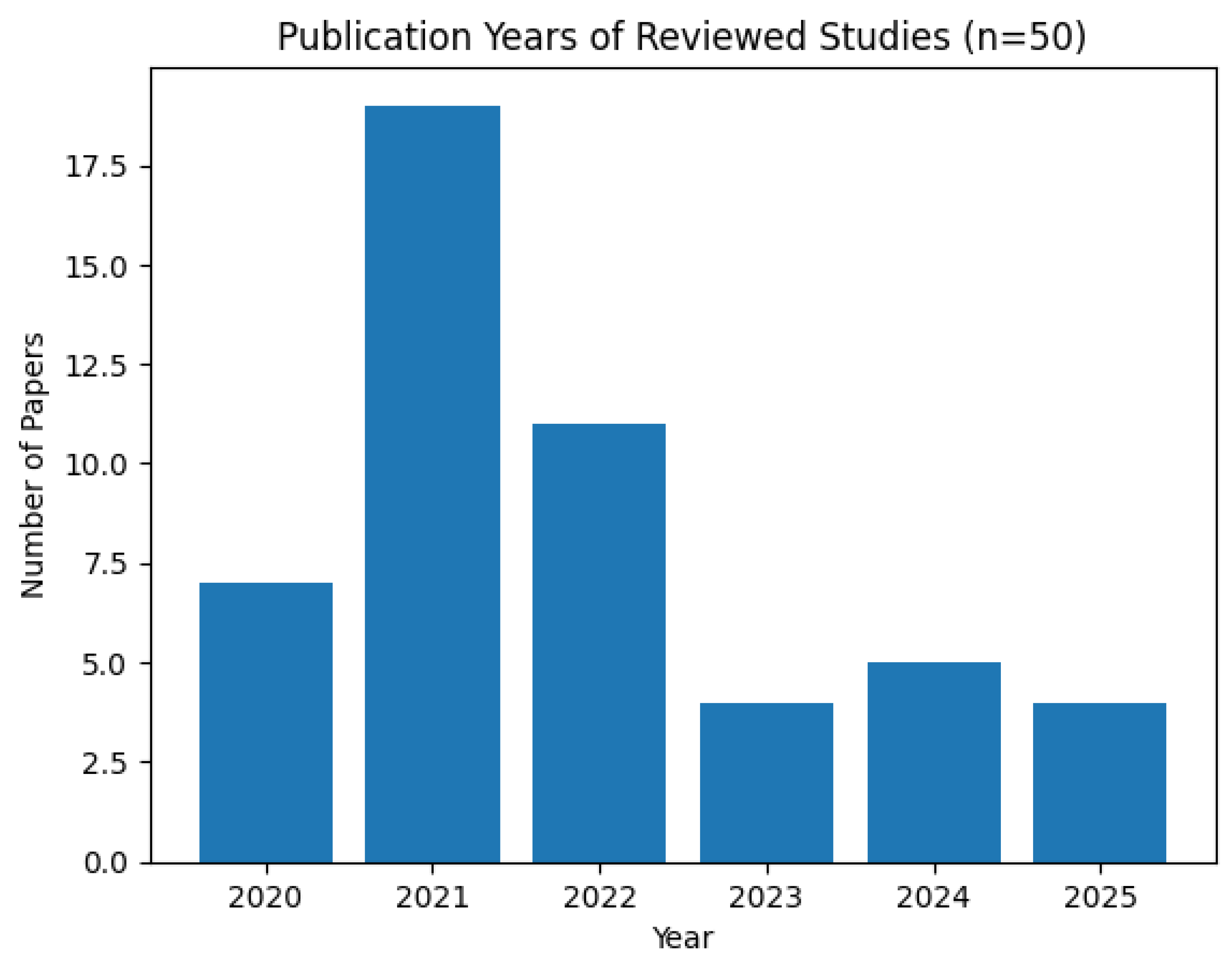

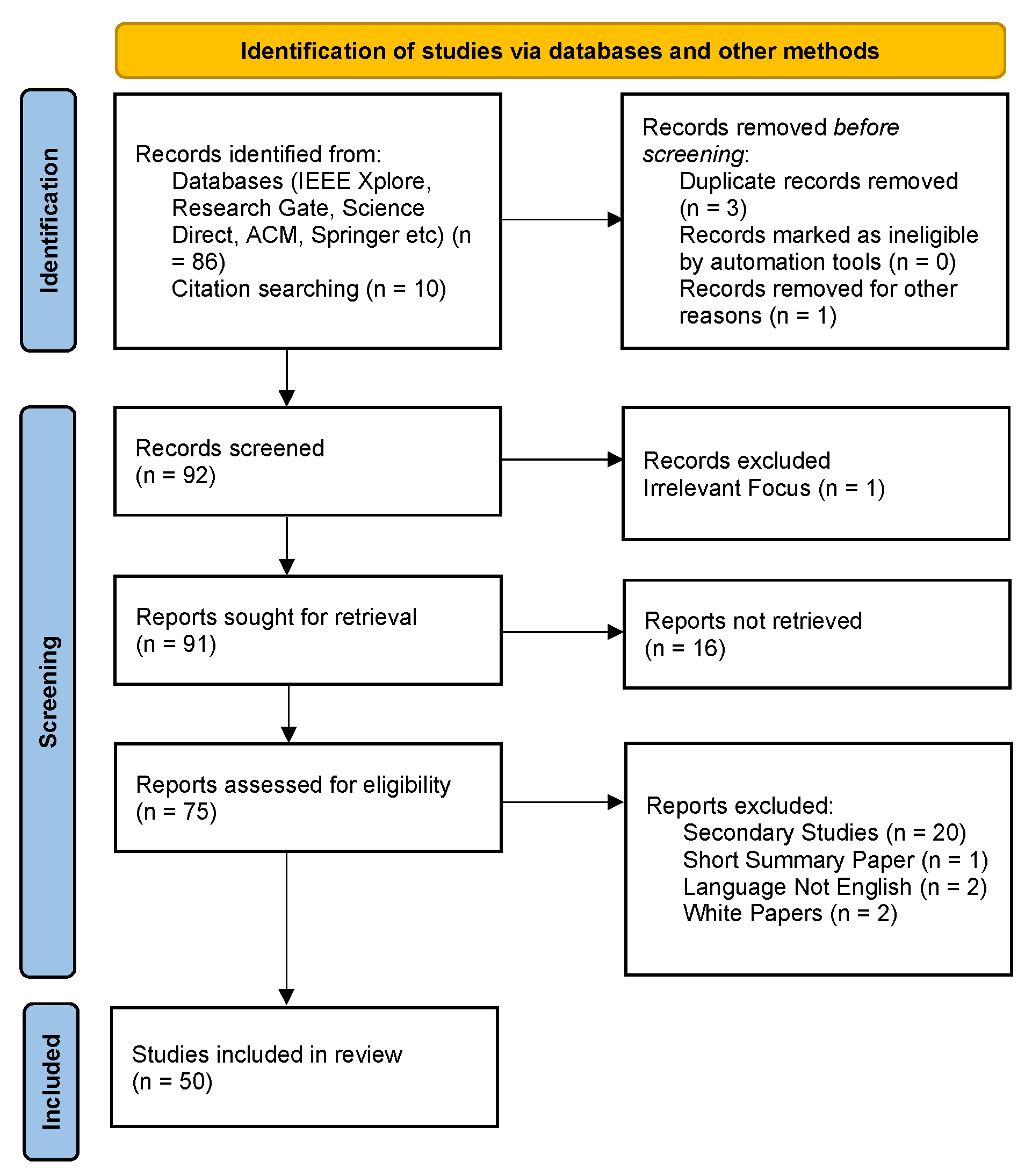

2. Materials and Methods

2.0.1. Inclusion Criteria

2.0.2. Exclusion Criteria

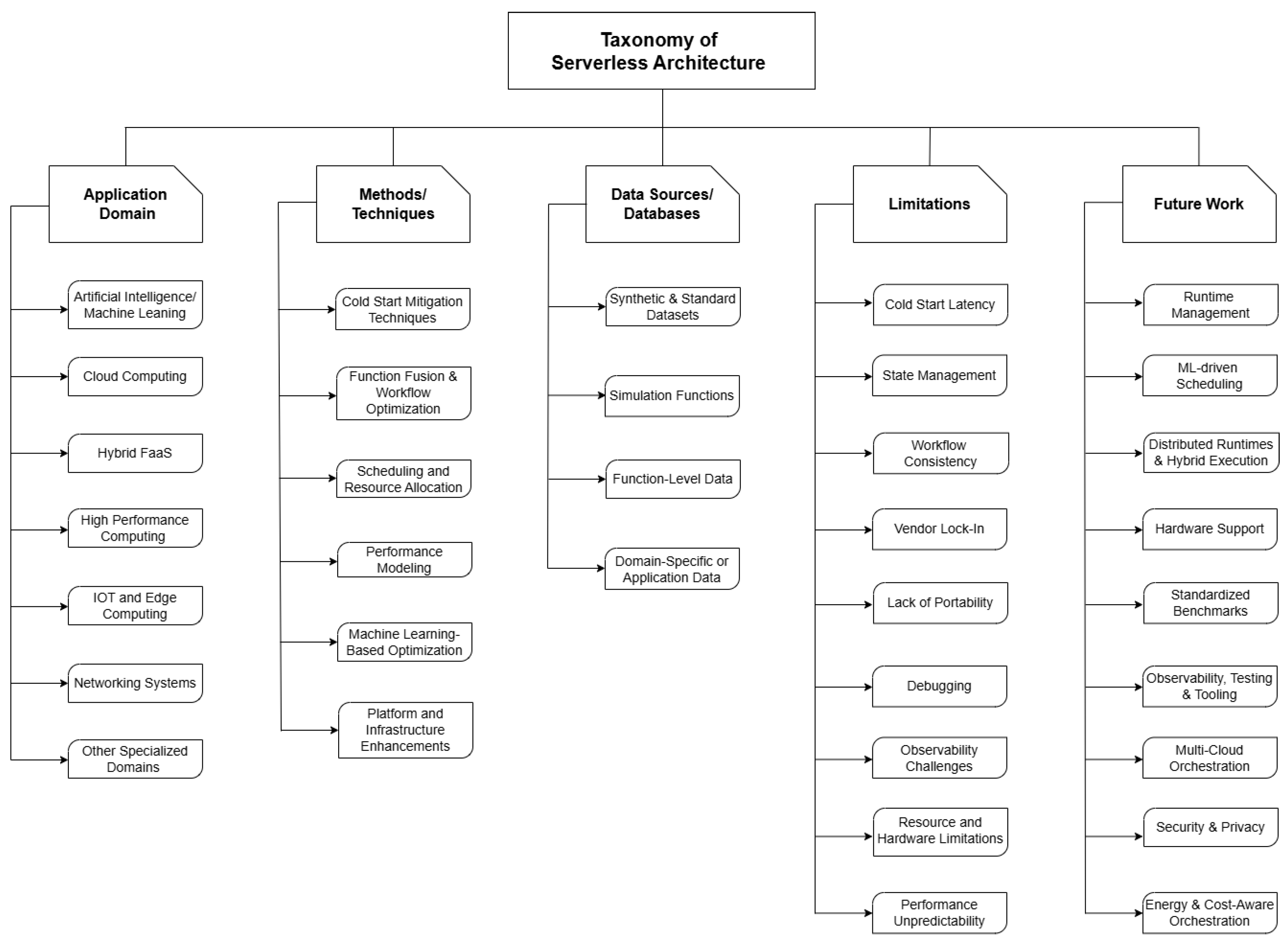

3. Taxonomy of Serverless Architecture and Its Current State of the Art

4. Application Domains

4.1. Artificial Intelligence and Machine Learning

4.2. Cloud-Native and Hybrid Serverless Systems

4.3. High Performance Computing (HPC)

4.4. IoT and Edge Computing

4.5. Networking, SDN, and 5G

4.6. Other Specialized Domains

5. Methods and Techniques

5.1. Cold Start Mitigation Techniques

5.2. Function Fusion and Workflow Optimization

5.3. Scheduling and Resource Allocation

| Category | Examples | Purpose / Usage | Advantages | Limitations |
|---|---|---|---|---|
| Synthetic, Simulated Workloads and Benchmarks | COCOA [5], SCOPE [44], FaaSdom [6], JMeter [4,13,62], custom load generators [14,22] | Stress testing scaling, concurrency, cold starts | Highly configurable | May not reflect realistic user behavior |
| Function-Level Execution Traces | AWS Lambda logs [3,4,11,56], Azure invocation traces [3,6,66] | Modeling runtime behavior, workload prediction, scheduling | Realistic and platform-specific | Limited access due to privacy/availability constraints |
| Domain-Specific Data | IoT sensor streams [7,12,67], network traffic logs [58,60], medical imaging data [7], video frames [43,59], NLP corpora [44,68], MNIST [13,15,56,63], EMNIST, LIBSVM, EPSILON [56] | Evaluating serverless applicability in real applications | Practical value and deployment feasibility | Harder to reproduce and standardize for comparison |

5.4. Performance Modeling and Analytical Frameworks

5.5. Machine Learning-Based Optimization

5.6. Platform and Infrastructure Enhancements

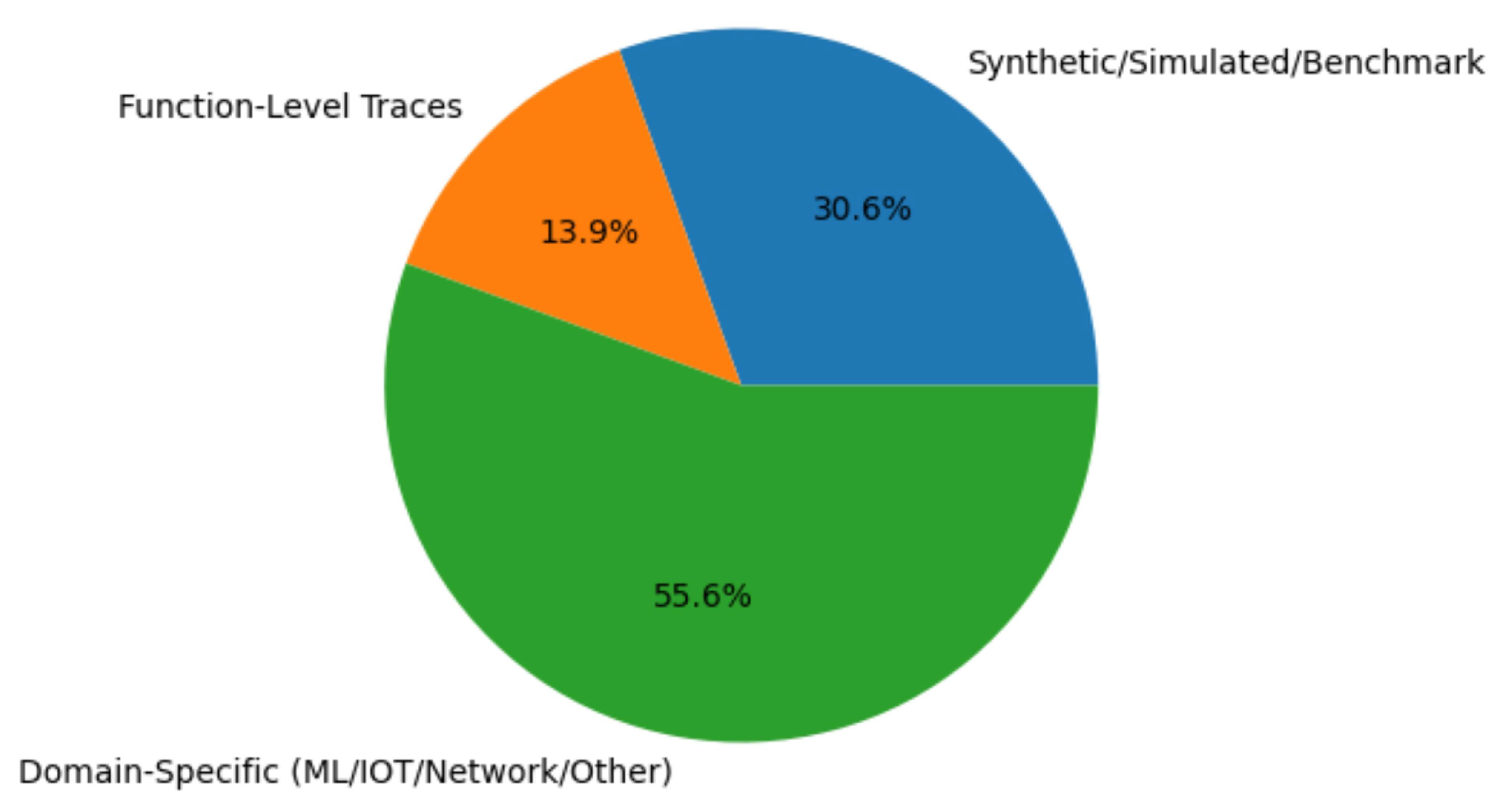

6. Data Sources/Datasets/Databases

6.1. Synthetic, Simulated Workloads and Benchmarks

6.2. Function-Level Execution Traces

6.3. Domain-Specific Datasets

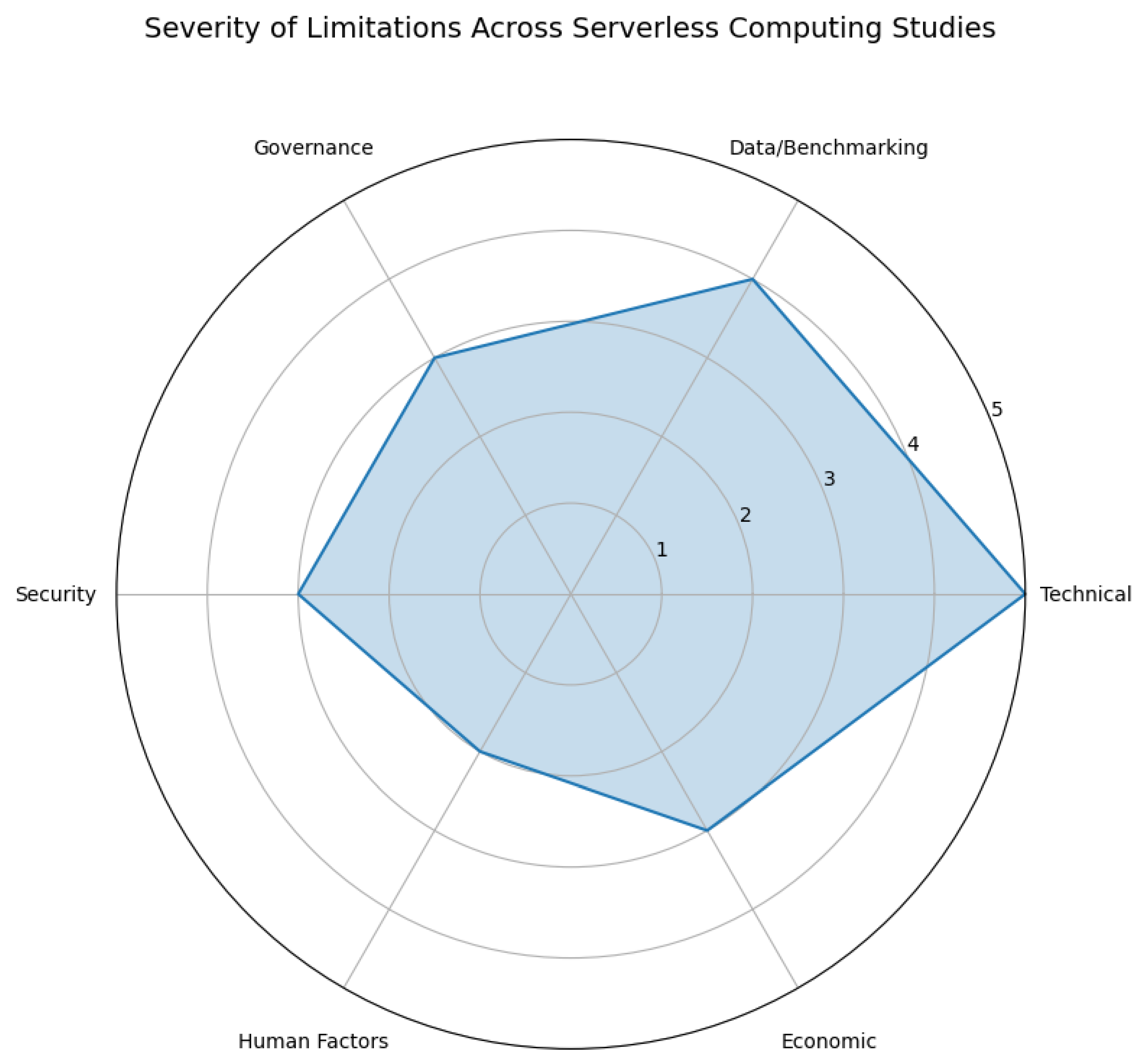

7. Limitations

7.1. Cold Start Latency

7.2. State Management and Workflow Consistency

7.3. Vendor Lock-In and Lack of Portability

7.4. Debugging and Observability Challenges

7.5. Resource Constraints and Hardware Limitations

7.6. Performance Unpredictability

8. Future Work and Research Directions

8.1. Adaptive and Predictive Runtime Management

8.2. ML-driven Scheduling and Autoscaling at Scale

8.3. Distributed Serverless Runtimes and Hybrid Execution

8.4. Heterogeneous Hardware Support (GPU/TPU/Edge Accelerators)

8.5. Standardized Benchmarks, Shared Traces, and Reproducibility

8.6. Observability, Testing, and Development Tooling

8.7. Portability, Multi-Cloud Orchestration and Interoperability

8.8. Security, Privacy and Federated Serverless Patterns

8.9. Energy-Aware and Green Serverless Scheduling

8.10. Economics and Cost-Aware Orchestration

9. Conclusions

References

- De Silva, D.; Hewawasam, L. The Impact of Software Testing on Serverless Applications. IEEE Access 2024, 12, 51086–51099. [Google Scholar] [CrossRef]

- Nellore, N.S. Optimizing Cost and Performance in Serverless Batch Processing: A Comparative Analysis of AWS Step Functions vs. Journal of Mathematical & Computer Applications 2022, 1, 1–5. [CrossRef]

- Kodakandla, N. Serverless Architectures: A Comparative Study of Performance, Scalability, and Cost in Cloud-native Applications. 2021, 5. [Google Scholar]

- Nadeem, M.U.; Raazi, S.M.K.u.R.; Mehboob, B.; Ali, S.M.; Raza, S. Cost Analysis of Running Web Application in Cloud Monolith, Microservice and Serverless Architecture. Journal of Independent Studies and Research Computing 2024, 22. [CrossRef]

- Gias, A.U.; Casale, G. COCOA: Cold Start Aware Capacity Planning for Function-as-a-Service Platforms. arXiv 2020, arXiv:2007.01222 [cs]. [Google Scholar] [CrossRef]

- Maissen, P.; Felber, P.; Kropf, P.; Schiavoni, V. FaaSdom: A Benchmark Suite for Serverless Computing. In Proceedings of the 14th ACM International Conference on Distributed and Event-based Systems; 2020; pp. 73–84, arXiv:2006.03271 [cs]. [Google Scholar] [CrossRef]

- Golec, M.; Ozturac, R.; Pooranian, Z.; Singh Gill, S.; Buyya, R. IFaaSBus: A Security- and Privacy-Based Lightweight Framework for Serverless Computing Using IoT and Machine Learning. ResearchGate 2021. [Google Scholar] [CrossRef]

- Lin, C.; Khazaei, H. Modeling and Optimization of Performance and Cost of Serverless Applications. IEEE Transactions on Parallel and Distributed Systems 2021, 32, 615–632. [Google Scholar] [CrossRef]

- Lee, S.; Yoon, D.; Yeo, S.; Oh, S. Mitigating Cold Start Problem in Serverless Computing with Function Fusion. Sensors 2021, 21, 8416. [Google Scholar] [CrossRef]

- Majewski, M.; Pawlik, M.; Malawski, M. Algorithms for scheduling scientific workflows on serverless architecture. In Proceedings of the 2021 IEEE/ACM 21st International Symposium on Cluster, Cloud and Internet Computing (CCGrid); 2021; pp. 782–789. [Google Scholar] [CrossRef]

- Mahmoudi, N.; Khazaei, H. Performance Modeling of Serverless Computing Platforms. IEEE Transactions on Cloud Computing 2022, 10, 2834–2847. [Google Scholar] [CrossRef]

- Ouyang, R.; Wang, J.; Xu, H.; Chen, S.; Xiong, X.; Tolba, A.; Zhang, X. A Microservice and Serverless Architecture for Secure IoT System. Sensors 2023, 23, 4868. [Google Scholar] [CrossRef] [PubMed]

- Bac, T.P.; Tran, M.N.; Kim, Y. Serverless Computing Approach for Deploying Machine Learning Applications in Edge Layer. In Proceedings of the 2022 International Conference on Information Networking (ICOIN); 2022; pp. 396–401, ISSN: 1976-7684. [Google Scholar] [CrossRef]

- Chahal, D.; Ramesh, M.; Ojha, R.; Singhal, R. High Performance Serverless Architecture for Deep Learning Workflows. In Proceedings of the 2021 IEEE/ACM 21st International Symposium on Cluster, Cloud and Internet Computing (CCGrid); 2021; pp. 790–796. [Google Scholar] [CrossRef]

- Assogba, K.; Arif, M.; Rafique, M.M.; Nikolopoulos, D.S. On Realizing Efficient Deep Learning Using Serverless Computing. In Proceedings of the 2022 22nd IEEE International Symposium on Cluster, Cloud and Internet Computing (CCGrid); 2022; pp. 220–229. [Google Scholar] [CrossRef]

- Castagna, F.; Trombetta, A.; Landoni, M.; Andreon, S. A Serverless Architecture for Efficient and Scalable Monte Carlo Markov Chain Computation. In Proceedings of the 2023 7th International Conference on Cloud and Big Data Computing, Manchester, United Kingdom, 2023. [CrossRef]

- Bermbach, D.; Karakaya, A.S.; Buchholz, S. Using application knowledge to reduce cold starts in FaaS services. In Proceedings of the 35th Annual ACM Symposium on Applied Computing, Brno, Czech Republic; 2020; pp. 134–143. [Google Scholar] [CrossRef]

- Khan, D.; Subba, B.; Sharma, S. Minimizing Cold Start Times in Serverless Deployments. In Proceedings of the 2022 Fourteenth International Conference on Contemporary Computing, New York, NY, USA, 2022; IC3-2022, pp. 156–161. [CrossRef]

- Nguyen, C.; Bhuyan, M.; Elmroth, E. Taming Cold Starts: Proactive Serverless Scheduling with Model Predictive Control. arXiv 2025, arXiv:2508.07640. [Google Scholar] [CrossRef]

- Lakhai, V.; Kuzmych, O.; Seniv, M. An improved method for increasing maintainability in terms of serverless architecture application. In Proceedings of the 2023 IEEE 18th International Conference on Computer Science and Information Technologies (CSIT), Lviv, Ukraine; 2023; pp. 1–4. [Google Scholar] [CrossRef]

- Martins, H.; Araujo, F.; Da Cunha, P.R. Benchmarking Serverless Computing Platforms. Journal of Grid Computing 2020, 18, 691–709. [Google Scholar] [CrossRef]

- Mahmoudi, N.; Khazaei, H. Performance Modeling of Metric-Based Serverless Computing Platforms. IEEE Transactions on Cloud Computing 2023, 11, 1899–1910. [Google Scholar] [CrossRef]

- Pubglob. SERVERLESS ARCHITECTURES: A COMPARATIVE STUDY ON ENVIRONMENTAL IMPACT AND SUSTAINABILITY IN GREEN COMPUTING 2024. Publisher: OSF. [CrossRef]

- Oliver, E.; Thomas, E.; Price, L.; Musa, Z.; Esther, D. Serverless Architecture Patterns for Scalable APIs. 2024. [Google Scholar]

- Kumar, G. The Evolution and Impact of Serverless Architectures in Modern Fintech Platforms. Technical report. 2024. [Google Scholar] [CrossRef]

- Padyana, U.K.; Rai, H.P.; Ogeti, P.; Fadnavis, N.S.; Patil, G.B. Server less Architectures in Cloud Computing: Evaluating Benefits and Drawbacks. Innovative Research Thoughts 2020, 6, 1–12. [Google Scholar] [CrossRef]

- Ekwe-Ekwe, N.; Amos, L. The State of FaaS: An Analysis of Public Functions-as-a-Service Providers. In Proceedings of the 2024 IEEE 17th International Conference on Cloud Computing (CLOUD); 2024; pp. 430–438, ISSN: 2159-6190. [Google Scholar] [CrossRef]

- Kanamugire, J.; Alhajri, F.; Swen, J. Serverless Security and Privacy; 2024. [Google Scholar] [CrossRef]

- Abu, M.; Mallick, M.; Nath, R. Securing the Server-less Frontier: Challenges and Innovative Solutions in Network Security for Server-less Computing. 2024, 193, 1–45. [Google Scholar]

- Tummalachervu, C.K. EXPLORING SERVER-LESS COMPUTING FOR EFFICIENT RESOURCE MANAGEMENT IN CLOUD ARCHITECTURES. 2024; p. 77. [Google Scholar]

- Cinar, B. The Rise of Serverless Architectures: Security Challenges and Best Practices. Asian Journal of Research in Computer Science 2023, 16, 194–210. [Google Scholar] [CrossRef]

- Kathiriya, S.; Challa, N.; Devineni, S.K. Serverless Architecture in LLMs: Transforming the Financial Industry’s AI Landscape. International Journal of Science and Research (IJSR) 2023, 12, 2131–2136. [Google Scholar] [CrossRef]

- Ahmed, N.; Hossain, M.; Shadul, S.; Rimi, N.; Sarkar, I. Server less Architecture: Optimizing Application Scalability and Cost Efficiency in Cloud Computing. 2022, 01, 1366–1380. [Google Scholar]

- Daraojimba, A.I.; Ogeawuchi, J.C.; Abayomi, A.A.; Agboola, O.A.; Ogbuefi, E. Systematic Review of Serverless Architectures and Business Process Optimization. 2021, 5. [Google Scholar]

- Hassan, H.B.; Barakat, S.A.; Sarhan, Q.I. Survey on serverless computing. Journal of Cloud Computing 2021, 10, 39. [Google Scholar] [CrossRef]

- Kuhlenkamp, J.; Werner, S.; Tai, S. The Ifs and Buts of Less is More: A Serverless Computing Reality Check. In Proceedings of the 2020 IEEE International Conference on Cloud Engineering (IC2E); 2020; pp. 154–161. [Google Scholar] [CrossRef]

- Przybylski, B.; Żuk, P.; Rzadca, K. Data-driven scheduling in serverless computing to reduce response time. In Proceedings of the 2021 IEEE/ACM 21st International Symposium on Cluster, Cloud and Internet Computing (CCGrid); 2021; pp. 206–216. [Google Scholar] [CrossRef]

- Sharaf, S. Security Issues in Serverless Computing Architecture. International Journal of Emerging Trends in Engineering Research 2020, 8, 539–544. [Google Scholar] [CrossRef]

- Seth, D.; Chintale, P. Performance Benchmarking of Serverless Computing Platforms. International Journal of Computer Trends and Technology 2024, 72, 160–167. [Google Scholar] [CrossRef]

- Fakinos, I.; Tzenetopoulos, A.; Masouros, D.; Xydis, S.; Soudris, D. Sequence Clock: A Dynamic Resource Orchestrator for Serverless Architectures. In Proceedings of the 2022 IEEE 15th International Conference on Cloud Computing (CLOUD); 2022; pp. 81–90, ISSN: 2159-6190. [Google Scholar] [CrossRef]

- Mampage, A.; Karunasekera, S.; Buyya, R. Deadline-aware Dynamic Resource Management in Serverless Computing Environments. In Proceedings of the 2021 IEEE/ACM 21st International Symposium on Cluster, Cloud and Internet Computing (CCGrid); 2021; pp. 483–492. [Google Scholar] [CrossRef]

- Carpio, F.; Michalke, M.; Jukan, A. Engineering and Experimentally Benchmarking a Serverless Edge Computing System. In Proceedings of the 2021 IEEE Global Communications Conference (GLOBECOM); 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Sheshadri, K.R.; Lakshmi, J. QoS aware FaaS for Heterogeneous Edge-Cloud continuum. In Proceedings of the 2022 IEEE 15th International Conference on Cloud Computing (CLOUD); 2022; pp. 70–80, ISSN: 2159-6190. [Google Scholar] [CrossRef]

- Wen, J.; Chen, Z.; Zhao, J.; Sarro, F.; Ping, H.; Zhang, Y.; Wang, S.; Liu, X. SCOPE: Performance Testing for Serverless Computing. ACM Trans. Softw. Eng. Methodol. 2025, 34, 227–1. [Google Scholar] [CrossRef]

- Golec, M.; Walia, G.K.; Kumar, M.; Cuadrado, F.; Gill, S.S.; Uhlig, S. Cold Start Latency in Serverless Computing: A Systematic Review, Taxonomy, and Future Directions. ACM Computing Surveys 2025, 57, 1–36. [Google Scholar] [CrossRef]

- Yussupov, V.; Soldani, J.; Breitenbücher, U.; Brogi, A.; Leymann, F. FaaSten your decisions: A classification framework and technology review of function-as-a-Service platforms. Journal of Systems and Software 2021, 175, 110906. [Google Scholar] [CrossRef]

- Scheuner, J.; Leitner, P. Function-as-a-Service performance evaluation: A multivocal literature review. Journal of Systems and Software 2020, 170, 110708. [Google Scholar] [CrossRef]

- Shojaee Rad, Z.; Ghobaei-Arani, M. Data pipeline approaches in serverless computing: a taxonomy, review, and research trends. Journal of Big Data 2024, 11, 82. [Google Scholar] [CrossRef]

- Lannurien, V.; d’Orazio, L.; Barais, O.; Boukhobza, J. Serverless Cloud Computing: State of the Art and Challenges. 2023; pp. 275–316. [Google Scholar] [CrossRef]

- Vahidinia, P.; Farahani, B.; Aliee, F.S. Cold Start in Serverless Computing: Current Trends and Mitigation Strategies. In Proceedings of the 2020 International Conference on Omni-layer Intelligent Systems (COINS); 2020; pp. 1–7. [Google Scholar] [CrossRef]

- Taibi, D.; Ioini, N.E.; Pahl, C.; Niederkofler, J.R.S. Patterns for Serverless Functions (Function-as-a-Service): A Multivocal Literature Review. 2025; pp. 181–192. [Google Scholar]

- Barnabas, A.; Johnson, J.; Mabel, E. The Future of Cloud: Exploring Cost-Effective Serverless Architecture. 2025. [Google Scholar]

- Samdani, G.; Paul, K.; Saldanha, F. Serverless architectures for agentic AI deployment. World Journal of Advanced Engineering Technology and Sciences 2022, 7, 320–333. [CrossRef]

- Decker, J.; Kasprzak, P.; Kunkel, J.M. Performance Evaluation of Open-Source Serverless Platforms for Kubernetes. Algorithms 2022, 15, 234. [Google Scholar] [CrossRef]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ 2021, 372, n71. [Google Scholar] [CrossRef] [PubMed]

- Gupta, V.; Kadhe, S.; Courtade, T.; Mahoney, M.W.; Ramchandran, K. OverSketched Newton: Fast Convex Optimization for Serverless Systems. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data); 2020; pp. 288–297. [Google Scholar] [CrossRef]

- Banaie, F.; Djemame, K.; Alhindi, A.; Kelefouras, V. Energy efficiency support for software defined networks: a serverless computing approach. Future Generation Computer Systems 2026, 176, 108121. [Google Scholar] [CrossRef]

- Tran, M.N.; Kim, Y. Design of 5G Architecture Enhancements for Supporting Serverless Computing. ResearchGate 2025. [Google Scholar] [CrossRef]

- Pakdil, M.E.; Çelik, R.N. Serverless Geospatial Data Processing Workflow System Design. ISPRS International Journal of Geo-Information 2022, 11, 20. [Google Scholar] [CrossRef]

- Suo, K.; Son, J.; Cheng, D.; Chen, W.; Baidya, S. Tackling Cold Start of Serverless Applications by Efficient and Adaptive Container Runtime Reusing. In Proceedings of the 2021 IEEE International Conference on Cluster Computing (CLUSTER), Portland, OR, USA; 2021; pp. 433–443. [Google Scholar] [CrossRef]

- Kousiouris, G. A self-adaptive batch request aggregation pattern for improving resource management, response time and costs in microservice and serverless environments. In Proceedings of the 2021 IEEE International Performance, Computing, and Communications Conference (IPCCC); 2021; pp. 1–10, ISSN: 2374-9628. [Google Scholar] [CrossRef]

- Agarwal, S.; Rodriguez, M.A.; Buyya, R. A Reinforcement Learning Approach to Reduce Serverless Function Cold Start Frequency. In Proceedings of the 2021 IEEE/ACM 21st International Symposium on Cluster, Cloud and Internet Computing (CCGrid), Melbourne, Australia; 2021; pp. 797–803. [Google Scholar] [CrossRef]

- Gharibi, M.; Bhagavan, S.; Rao, P. FederatedTree: A Secure Serverless Algorithm for Federated Learning to Reduce Data Leakage. In Proceedings of the 2021 IEEE International Conference on Big Data (Big Data); 2021; pp. 4078–4083. [Google Scholar] [CrossRef]

- Jia, X.; Zhao, L. RAEF: Energy-efficient Resource Allocation through Energy Fungibility in Serverless. In Proceedings of the 2021 IEEE 27th International Conference on Parallel and Distributed Systems (ICPADS); 2021; pp. 434–441, ISSN: 2690-5965. [Google Scholar] [CrossRef]

- Zhao, Y.; Uta, A. Tiny Autoscalers for Tiny Workloads: Dynamic CPU Allocation for Serverless Functions. In Proceedings of the 2022 22nd IEEE International Symposium on Cluster, Cloud and Internet Computing (CCGrid); 2022; pp. 170–179. [Google Scholar] [CrossRef]

- Govind, H.; González-Vélez, H. Benchmarking Serverless Workloads on Kubernetes. In Proceedings of the 2021 IEEE/ACM 21st International Symposium on Cluster, Cloud and Internet Computing (CCGrid); 2021; pp. 704–712. [Google Scholar] [CrossRef]

- Shah, N.P. Design of a Reference Architecture for Serverless IoT Systems. In Proceedings of the 2021 IEEE International Conference on Omni-Layer Intelligent Systems (COINS); 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Jayaraman, S.; Reddy, C.; Khabiri, E.; Patel, D.; Bhamidipaty, A.; Kalagnanam, J. Asset Modeling using Serverless Computing. In Proceedings of the 2021 IEEE International Conference on Big Data (Big Data); 2021; pp. 4084–4090. [Google Scholar] [CrossRef]

- Byrne, A.; Nadgowda, S.; Coskun, A.K. ACE: Just-in-time Serverless Software Component Discovery Through Approximate Concrete Execution. In Proceedings of the 2020 Sixth International Workshop on Serverless Computing, Delft, Netherlands, 2020. [CrossRef]

- Safaryan, G.; Jindal, A.; Chadha, M.; Gerndt, M. SLAM: SLO-Aware Memory Optimization for Serverless Applications. In Proceedings of the 2022 IEEE 15th International Conference on Cloud Computing (CLOUD); 2022; pp. 30–6190. [Google Scholar] [CrossRef]

- Nishimiya, K.; Imai, Y. Serverless Architecture for Service Robot Management System. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA); 2021; pp. 11379–11385, ISSN: 2577-087X. [Google Scholar] [CrossRef]

- Hanaforoosh, M.; Azgomi, M.A.; Ashtiani, M. Reducing the cost of cold start time in serverless function executions using granularity trees. Future Generation Computer Systems 2025, 164, 107604. [Google Scholar] [CrossRef]

- Joosen, A.; Hassan, A.; Asenov, M.; Singh, R.; Darlow, L.; Wang, J.; Deng, Q.; Barker, A. Serverless Cold Starts and Where to Find Them, 2024. arXiv:2410.06145 [cs]. [Google Scholar] [CrossRef]

- Tang, Y.; Yang, J. Lambdata: Optimizing Serverless Computing by Making Data Intents Explicit. In Proceedings of the 2020 IEEE 13th International Conference on Cloud Computing (CLOUD); 2020; pp. 294–303, ISSN: 2159-6190. [Google Scholar] [CrossRef]

- Yu, H.; Irissappane, A.A.; Wang, H.; Lloyd, W.J. FaaSRank: Learning to Schedule Functions in Serverless Platforms. In Proceedings of the 2021 IEEE International Conference on Autonomic Computing and Self-Organizing Systems (ACSOS); 2021; pp. 31–40. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).