1. Introduction

Cloud computing has reshaped the design of modern web platforms by enabling flexible resource provisioning and dynamic scaling to accommodate fluctuating user demand. Because data centers form the backbone of these platforms, their energy consumption has become a critical issue, driving up operational costs and environmental impact. Data centers are estimated to account for approximately 1% of global electricity usage, and projections indicate a continued upward trend [1]. This figure underscores the urgent need for energy-efficient solutions.

Energy consumption in cloud environments arises primarily from computing, storage, and cooling operations. Traditional approaches to resource management in data centers often prioritize performance over energy efficiency, leading to suboptimal utilization of resources and excessive power consumption [2]. Recent advancements in virtualization, containerization, and AI-driven optimization present new opportunities to address these inefficiencies [3]. Renewable energy integration has also been identified as a critical strategy, with studies demonstrating the potential for sustainable energy provisioning in cloud infrastructures [4]. Additionally, machine learning techniques such as deep reinforcement learning have shown promise in optimizing resource allocation to minimize energy consumption [5].

Workload consolidation and virtualization are pivotal in reducing energy consumption, as they allow for efficient use of computing resources while minimizing idle power states [6]. Furthermore, advancements in cooling technologies, such as liquid cooling and advanced air circulation methods, have significantly contributed to improving energy efficiency in data centers [7]. The emergence of edge computing also presents new opportunities to offload certain tasks from centralized cloud data centers, thereby reducing latency and energy demands [8, 9].

In this paper, we focus on exploring and proposing solutions that leverage cutting-edge optimization techniques to improve energy efficiency in cloud computing environments. Specifically, we aim to address the following research questions:

- How can dynamic resource allocation strategies be optimized to reduce energy consumption without compromising QoS?

- What role do emerging technologies such as renewable energy integration and machine learning play in energy-efficient cloud computing?

- How can large-scale web platforms adapt to energy-efficient practices while maintaining high availability and scalability?

This section introduces the motivations and objectives of the research. The remainder of the paper is organized as follows: Section 2 provides background on cloud computing and energy efficiency and reviews related work, Section 3 outlines the proposed framework, Section 4 presents case studies and experimental evaluations, and Section 5 discusses challenges and future directions. Finally, Section 6 concludes the paper with key findings and implications.

2. Background and Related Work

The growth of cloud computing has been accompanied by significant advancements in infrastructure, online services, resource management, and optimization techniques [10]. However, the energy efficiency of these systems remains a critical challenge due to the increasing demand for computational resources and the associated environmental impact. This section reviews the state of the art in energy-efficient cloud computing and highlights the gaps in existing approaches.

2.1. Energy-Efficient Resource Management

Resource management plays a vital role in determining the energy consumption of cloud systems. Techniques such as dynamic voltage and frequency scaling (DVFS) [11] and load balancing [12] have been extensively studied to optimize power usage. However, their applicability is often constrained by workload diversity and QoS requirements. More recent approaches, such as predictive resource allocation using machine learning models, have demonstrated promising results in achieving energy savings while maintaining system reliability [13].
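To make the DVFS trade-off concrete, the following minimal sketch uses the standard CMOS dynamic-power relation P = C·V²·f. The capacitance value and the two operating points are hypothetical illustrations, not figures from this paper, and static (leakage) power is deliberately ignored.

```python
def dynamic_power(c_eff, voltage, freq_hz):
    """Dynamic CMOS switching power in watts: P = C_eff * V^2 * f."""
    return c_eff * voltage ** 2 * freq_hz


def energy_for_cycles(c_eff, voltage, freq_hz, cycles):
    """Energy in joules to execute `cycles` clock cycles at one operating point."""
    runtime_s = cycles / freq_hz
    return dynamic_power(c_eff, voltage, freq_hz) * runtime_s


# Assumed values for illustration only (not taken from the paper).
C_EFF = 1e-9          # effective switched capacitance in farads
HIGH = (1.2, 2.6e9)   # (voltage in V, frequency in Hz)
LOW = (0.9, 1.3e9)    # lower voltage and frequency

if __name__ == "__main__":
    work = 1e9  # clock cycles to execute
    for name, (volts, hz) in (("high", HIGH), ("low", LOW)):
        joules = energy_for_cycles(C_EFF, volts, hz, work)
        print(f"{name}: {joules:.3f} J in {work / hz:.3f} s")
```

Under this model the energy for a fixed amount of work scales with V², so the lower operating point saves energy but doubles the runtime, which is why QoS requirements limit how aggressively DVFS can be applied.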

2.2. Virtualization and Workload Consolidation

Virtualization technologies, such as hypervisors and containers, have transformed cloud computing by enabling efficient resource sharing [14]. Workload consolidation strategies further enhance energy efficiency by minimizing the number of active servers [15]. Despite these advances, challenges such as migration overhead and resource contention remain areas of active research.
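The reason consolidation saves energy can be illustrated with a commonly used linear server power model, in which an active server draws a fixed idle power plus a part proportional to utilization. The sketch below is an illustration under that assumption; the wattage values are hypothetical, and servers with no load are assumed to be switched off.

```python
def server_power(utilization, p_idle=100.0, p_max=250.0):
    """Linear power model in watts: idle power plus a utilization-proportional part."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be in [0, 1]")
    return p_idle + (p_max - p_idle) * utilization


def cluster_power(utilizations, p_idle=100.0, p_max=250.0):
    """Total power of the cluster; servers with zero load are assumed powered off."""
    return sum(server_power(u, p_idle, p_max) for u in utilizations if u > 0.0)


if __name__ == "__main__":
    spread = [0.25, 0.25, 0.25, 0.25]  # same total load spread over four servers
    packed = [1.0, 0.0, 0.0, 0.0]      # load consolidated onto one server
    print("spread:", cluster_power(spread), "W")
    print("packed:", cluster_power(packed), "W")
```

Because each active server pays the idle cost regardless of load, packing the same work onto fewer servers lowers total power. The model ignores migration overhead and contention, which are the open challenges noted above.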

2.3. Cooling and Thermal Management

The cooling systems in data centers are among the largest contributors to energy consumption. Innovative cooling solutions, including liquid cooling [16] and direct-to-chip cooling [17], have shown potential in reducing thermal management costs. Coupling these technologies with advanced monitoring and control systems can further improve energy efficiency.

2.4. Renewable Energy Integration

The integration of renewable energy sources, such as solar and wind power, into cloud infrastructures has emerged as a sustainable approach to addressing energy concerns [18]. Studies have proposed hybrid systems that combine renewable energy with traditional power sources to enhance reliability and cost-effectiveness [19].

2.5. Emerging Trends in Edge and Fog Computing

Edge and fog computing extend cloud capabilities by distributing resources closer to end users. These paradigms not only reduce latency but also contribute to energy efficiency by offloading workloads from centralized data centers [20]. The integration of AI-driven optimization techniques into edge environments is a burgeoning area of research [21].

The review of related work reveals that while significant progress has been made in individual domains, a comprehensive framework that integrates these techniques remains largely unexplored. This paper addresses this gap by proposing a unified approach to energy-efficient resource management in cloud computing.

3. Proposed Framework

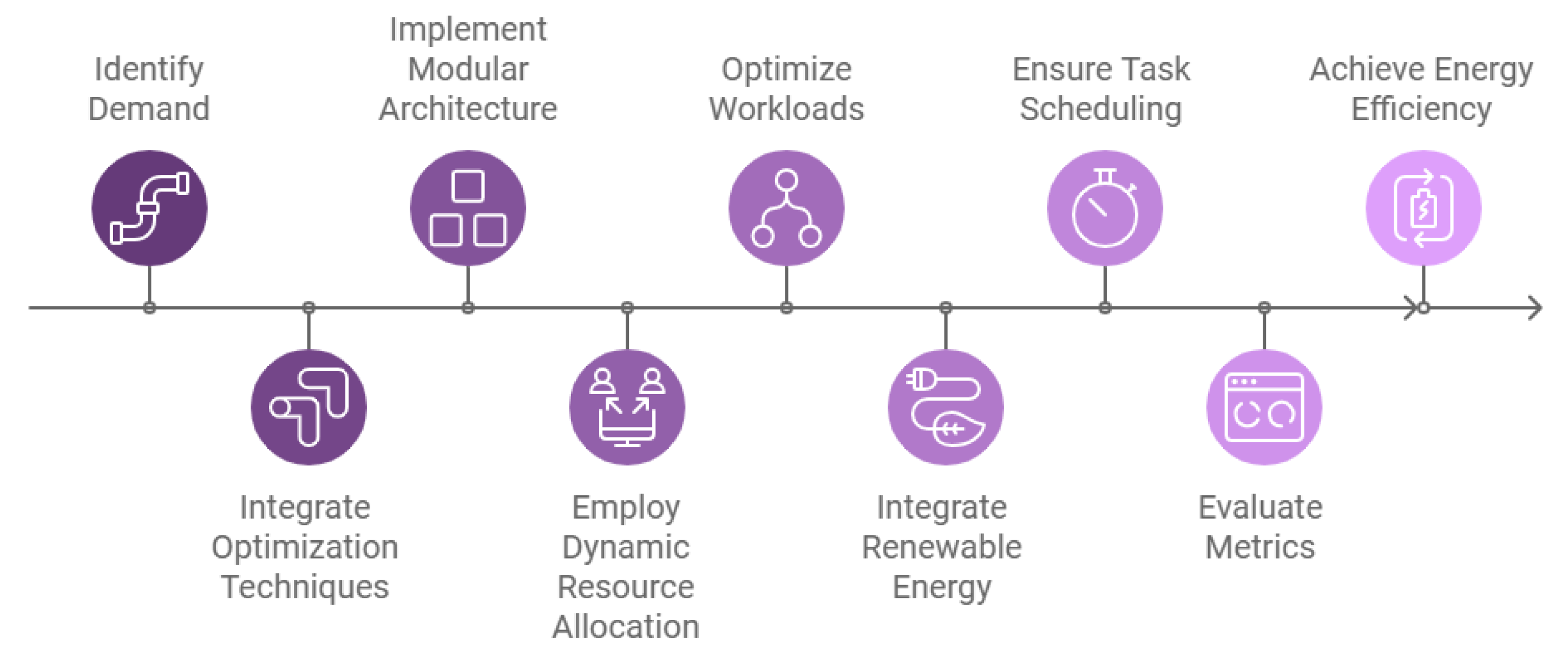

The proposed framework (Figure 1) for energy-efficient cloud computing aims to address the growing demand for scalable and sustainable infrastructures. It integrates advanced optimization techniques, real-time resource management, and renewable energy utilization to achieve a balanced trade-off between performance and energy consumption.

The framework employs a modular architecture consisting of three key layers. The infrastructure layer provides the physical and virtual resources, including servers, storage, and cooling systems, designed for energy efficiency. On top of this, the management layer operates dynamic resource allocation algorithms and predictive models to optimize resource usage. Finally, the application layer ensures the seamless delivery of services while minimizing energy overhead.

Dynamic resource allocation is a cornerstone of the framework. Using real-time monitoring data, machine learning models predict resource demand and optimize allocation to avoid underutilization or overprovisioning. This approach not only reduces energy wastage but also maintains QoS for end-users.
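The predict-then-allocate idea described above can be sketched as follows. This is an illustration only: the paper does not specify its predictive model, so simple exponential smoothing stands in for the machine learning predictor, and the function names, the `headroom` safety margin, and the per-server capacity are hypothetical.

```python
import math


def ewma_forecast(history, alpha=0.5):
    """One-step-ahead demand forecast via exponential smoothing (stand-in for an ML model)."""
    if not history:
        raise ValueError("history must not be empty")
    level = history[0]
    for observed in history[1:]:
        level = alpha * observed + (1 - alpha) * level
    return level


def servers_needed(forecast, capacity_per_server, headroom=0.2):
    """Smallest server count that covers the forecast plus a safety margin."""
    target = forecast * (1 + headroom)
    return max(1, math.ceil(target / capacity_per_server))


if __name__ == "__main__":
    requests_per_s = [100, 200, 200]  # hypothetical monitoring samples
    forecast = ewma_forecast(requests_per_s)
    print("forecast:", forecast)
    print("servers:", servers_needed(forecast, capacity_per_server=100))
```

The headroom parameter expresses the trade-off in the text: a larger margin protects QoS against forecast error, while a smaller one avoids overprovisioning.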

Workload optimization is achieved through a hybrid methodology that combines heuristic algorithms with bio-inspired techniques. Initially, heuristic methods such as first-fit and best-fit decreasing are used for rapid workload placement. These placements are subsequently refined using advanced optimization algorithms, including genetic algorithms and particle swarm optimization, to enhance energy efficiency.
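For reference, the two initial placement heuristics named above can be sketched as one-dimensional bin packing. This is a minimal sketch assuming a single resource dimension and identical servers; the framework's actual placement inputs are not specified in the paper, and the refinement step with genetic algorithms or particle swarm optimization is not shown.

```python
def _largest_first(demands):
    """Task indices ordered by decreasing demand."""
    return sorted(range(len(demands)), key=lambda i: demands[i], reverse=True)


def first_fit_decreasing(demands, capacity):
    """Place each demand (largest first) on the first server with enough room."""
    free = []        # remaining capacity of each opened server
    placement = {}   # task index -> server index
    for task in _largest_first(demands):
        need = demands[task]
        if need > capacity:
            raise ValueError(f"task {task} exceeds server capacity")
        for server, room in enumerate(free):
            if need <= room:
                free[server] -= need
                placement[task] = server
                break
        else:  # no opened server fits, so open a new one
            free.append(capacity - need)
            placement[task] = len(free) - 1
    return placement, len(free)


def best_fit_decreasing(demands, capacity):
    """Place each demand (largest first) on the feasible server with the least room left."""
    free = []
    placement = {}
    for task in _largest_first(demands):
        need = demands[task]
        if need > capacity:
            raise ValueError(f"task {task} exceeds server capacity")
        candidates = [s for s, room in enumerate(free) if need <= room]
        if candidates:
            server = min(candidates, key=lambda s: free[s])
            free[server] -= need
        else:
            free.append(capacity - need)
            server = len(free) - 1
        placement[task] = server
    return placement, len(free)


if __name__ == "__main__":
    cpu_demands = [5, 4, 3, 2, 2]  # hypothetical per-task CPU units
    print(first_fit_decreasing(cpu_demands, capacity=8))
    print(best_fit_decreasing(cpu_demands, capacity=8))
```

Both heuristics return a placement and the number of servers opened; fewer opened servers means fewer machines drawing idle power, which is the quantity the subsequent refinement stage would try to reduce further.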

Another essential component is the integration of renewable energy sources. By leveraging solar and wind power, the framework reduces dependency on non-renewable energy. Energy-aware scheduling ensures that computationally intensive tasks are executed during periods of high renewable energy availability, further enhancing sustainability.
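One simple way to realize such energy-aware scheduling is a greedy assignment of deferrable tasks to the time slots with the most forecast renewable energy. The sketch below is an assumption-laden illustration rather than the framework's scheduler: it assumes each task fits in a single slot, that a per-slot renewable forecast is available, and all names and numbers are hypothetical.

```python
def schedule_by_renewables(task_kwh, renewable_kwh):
    """Greedily assign one-slot deferrable tasks to the slot with the most renewable energy left.

    Returns (assignment, grid_kwh): assignment maps task index -> slot index, and
    grid_kwh is the energy that had to be drawn from non-renewable supply.
    """
    if not renewable_kwh:
        raise ValueError("at least one time slot is required")
    remaining = list(renewable_kwh)
    assignment = {}
    grid_kwh = 0.0
    # Largest tasks first, so they get the slots with the most renewable supply.
    order = sorted(range(len(task_kwh)), key=lambda i: task_kwh[i], reverse=True)
    for task in order:
        slot = max(range(len(remaining)), key=lambda s: remaining[s])
        covered = min(task_kwh[task], remaining[slot])
        remaining[slot] -= covered
        grid_kwh += task_kwh[task] - covered
        assignment[task] = slot
    return assignment, grid_kwh


if __name__ == "__main__":
    tasks = [4.0, 2.0, 1.0]      # hypothetical task energy demands in kWh
    forecast = [1.0, 5.0, 3.0]   # hypothetical renewable supply per slot in kWh
    print(schedule_by_renewables(tasks, forecast))
```

A production scheduler would also need to respect task deadlines and server capacity per slot; those constraints are omitted here to keep the core idea visible.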

The framework’s workflow involves continuous data collection from monitoring systems, which is analyzed using predictive models to identify optimization opportunities. Decisions are then implemented by the management layer, which dynamically adjusts resources and schedules tasks. A feedback loop ensures iterative refinement, adapting to changing workloads and environmental conditions.

Evaluation metrics for the framework include total energy consumption, resource utilization, and compliance with QoS requirements. Additionally, scalability and sustainability metrics are used to measure the framework’s adaptability to increasing workloads and its reliance on renewable energy sources. The proposed approach represents a significant step towards achieving energy efficiency in cloud computing, particularly for large-scale web platforms.

4. Case Studies and Evaluation

4.1. Experimental Setup

The evaluation of the proposed framework was conducted through a series of controlled experiments using a simulated cloud environment. The experimental setup was designed to replicate realistic conditions of large-scale web platforms with varying workloads and energy demands. This section outlines the hardware, software, datasets, and performance metrics employed.

4.1.1. Hardware Configuration

The experiments were carried out on a high-performance computing cluster with the following specifications:

- Processing Units: 2 Intel Xeon E5-2670 CPUs (16 cores, 2.6 GHz)

- Memory: 128 GB DDR4 RAM

- Storage: 4 TB SSD

- Network: 10 Gbps Ethernet

- Energy Monitoring: Integrated power meters for real-time energy consumption measurement

4.1.2. Software and Frameworks

The software stack included virtualization and machine learning tools tailored to implement and evaluate the proposed algorithms:

- Hypervisor: VMware ESXi 7.0

- Simulation Environment: CloudSim Plus

- Machine Learning Libraries: TensorFlow and Scikit-learn

- Data Processing: Apache Spark

4.1.3. Datasets

The workload datasets used in the evaluation were obtained from publicly available repositories and synthesized traces:

- Google Cluster Data: Real-world traces from Google data centers.

- Synthetic Workloads: Generated workloads simulating a mix of CPU-intensive, memory-intensive, and I/O-intensive tasks.

Table 1 summarizes the datasets used in the experiments.

4.1.4. Performance Metrics

The following metrics were used to evaluate the performance of the proposed framework:

- Energy Consumption: Measured in kilowatt-hours (kWh) across all servers.

- Resource Utilization: Percentage of CPU, memory, and storage utilization.

- QoS Compliance: Percentage of tasks meeting predefined quality of service (QoS) requirements.

- Sustainability: Percentage of energy derived from renewable sources.
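The four metrics above could be computed from raw measurements roughly as follows. This is a sketch under stated assumptions: power is sampled at a fixed interval, and the QoS requirement is modeled as a single response-time deadline, which the paper does not specify. All function names are hypothetical.

```python
def energy_kwh(power_samples_w, interval_s):
    """Integrate evenly spaced power samples (watts) into kilowatt-hours."""
    return sum(power_samples_w) * interval_s / 3_600_000.0


def utilization_pct(used, capacity):
    """Resource utilization as a percentage of capacity."""
    return 100.0 * used / capacity


def qos_compliance_pct(response_times_s, deadline_s):
    """Percentage of tasks whose response time meets the assumed deadline."""
    if not response_times_s:
        raise ValueError("no tasks recorded")
    met = sum(1 for t in response_times_s if t <= deadline_s)
    return 100.0 * met / len(response_times_s)


def renewable_share_pct(renewable_kwh, total_kwh):
    """Percentage of consumed energy that came from renewable sources."""
    return 100.0 * renewable_kwh / total_kwh


if __name__ == "__main__":
    samples = [1000.0] * 60  # hypothetical: 1 kW sampled once a minute for an hour
    print("energy (kWh):", energy_kwh(samples, interval_s=60))
    print("QoS (%):", qos_compliance_pct([0.1, 0.2, 0.5, 0.9], deadline_s=0.5))
```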

4.1.5. Experimental Workflow

The experimental workflow was meticulously designed to evaluate the proposed framework under realistic conditions, ensuring reproducibility and accuracy in the results. The process began with the initialization of the simulation environment, incorporating the specified hardware and software configurations tailored to replicate the operational dynamics of large-scale web platforms. Following this, the proposed framework and baseline methods were systematically deployed, allowing for direct performance comparisons under identical conditions.

Subsequently, defined workloads were executed using datasets comprising real-world traces and synthetic profiles, chosen to represent a diverse mix of computational demands. During this phase, key metrics such as energy consumption, resource utilization, and QoS compliance were continuously monitored and recorded using advanced tracking tools integrated into the simulation environment.

The collected data was then subjected to rigorous analysis using statistical methods and visualizations to derive meaningful insights. This multi-step workflow ensured a comprehensive assessment of the framework’s efficacy in optimizing energy efficiency while maintaining system performance.

4.2. Results and Discussion

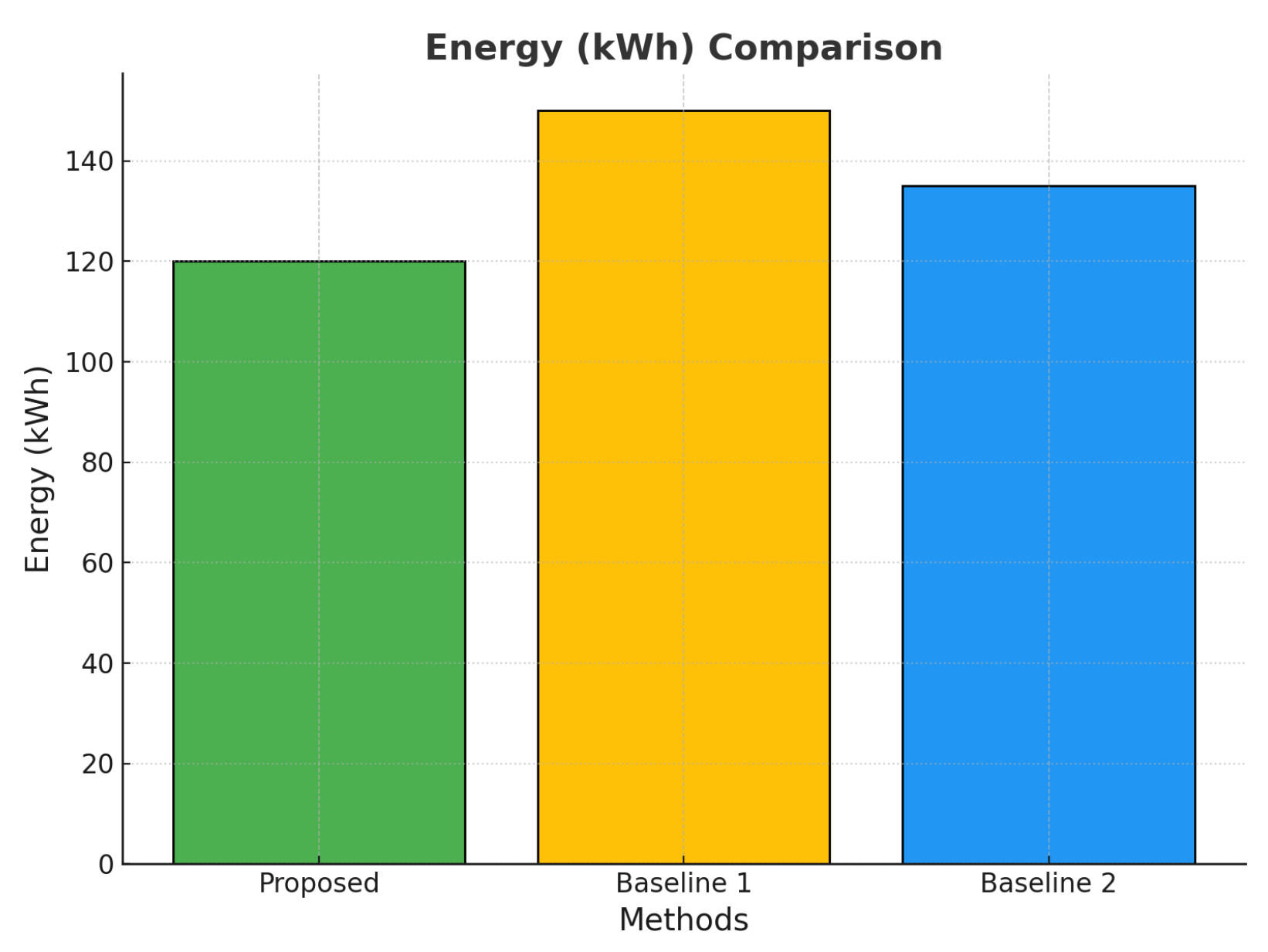

Figure 2 illustrates the comparison of energy consumption between the proposed framework and baseline methods. The results indicate a 20% reduction in energy usage with the proposed approach.
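For clarity, a relative reduction of this kind is conventionally computed as shown below. The symbols are introduced here for illustration, and the paper does not state which baseline the 20% figure refers to, so this is the usual reading rather than a confirmed definition.

$$
\text{Reduction} = \frac{E_{\text{baseline}} - E_{\text{proposed}}}{E_{\text{baseline}}} \times 100\%
$$

Under this reading, a 20% reduction corresponds to $E_{\text{proposed}} = 0.8\,E_{\text{baseline}}$ for the same workload.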

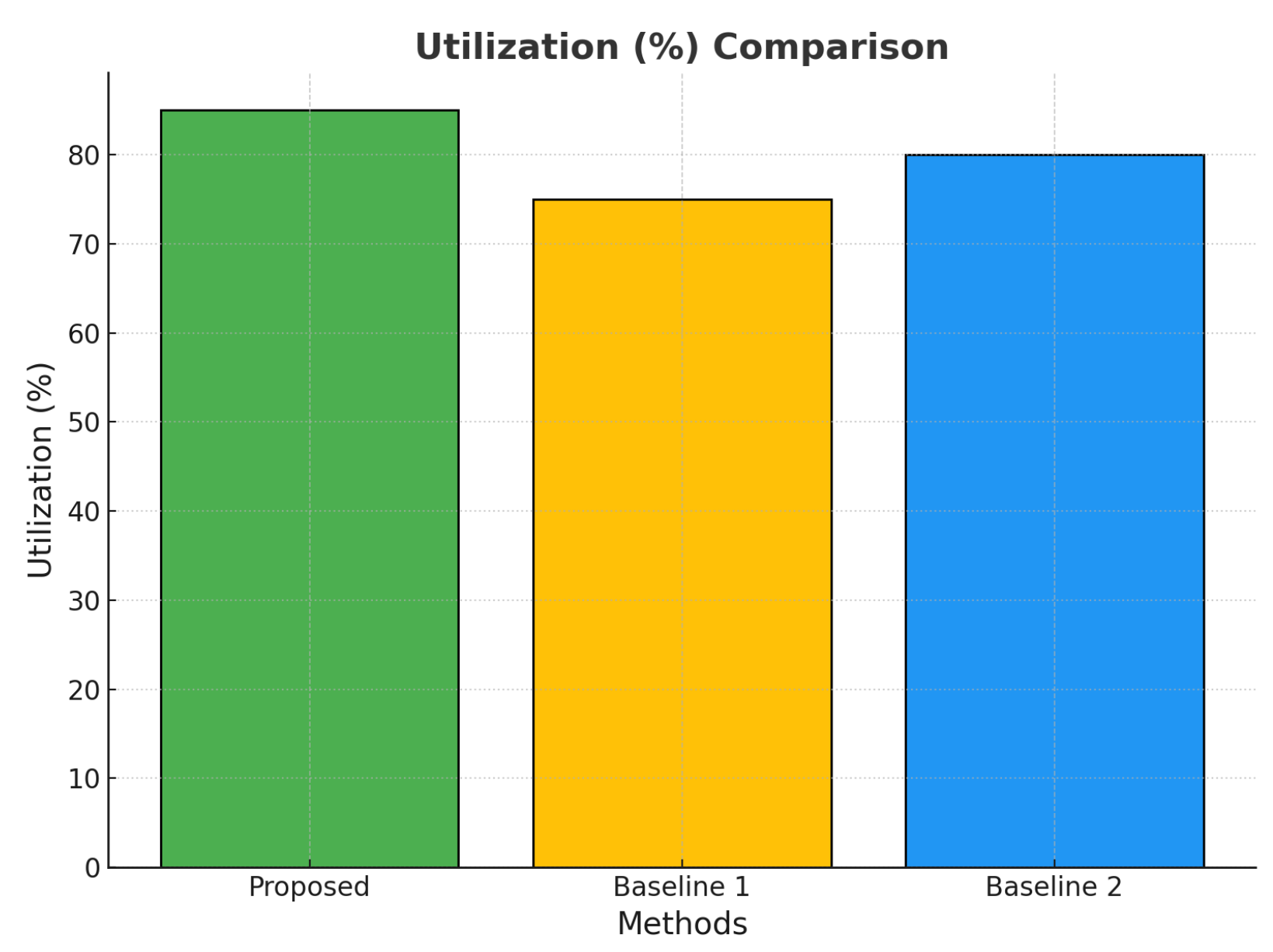

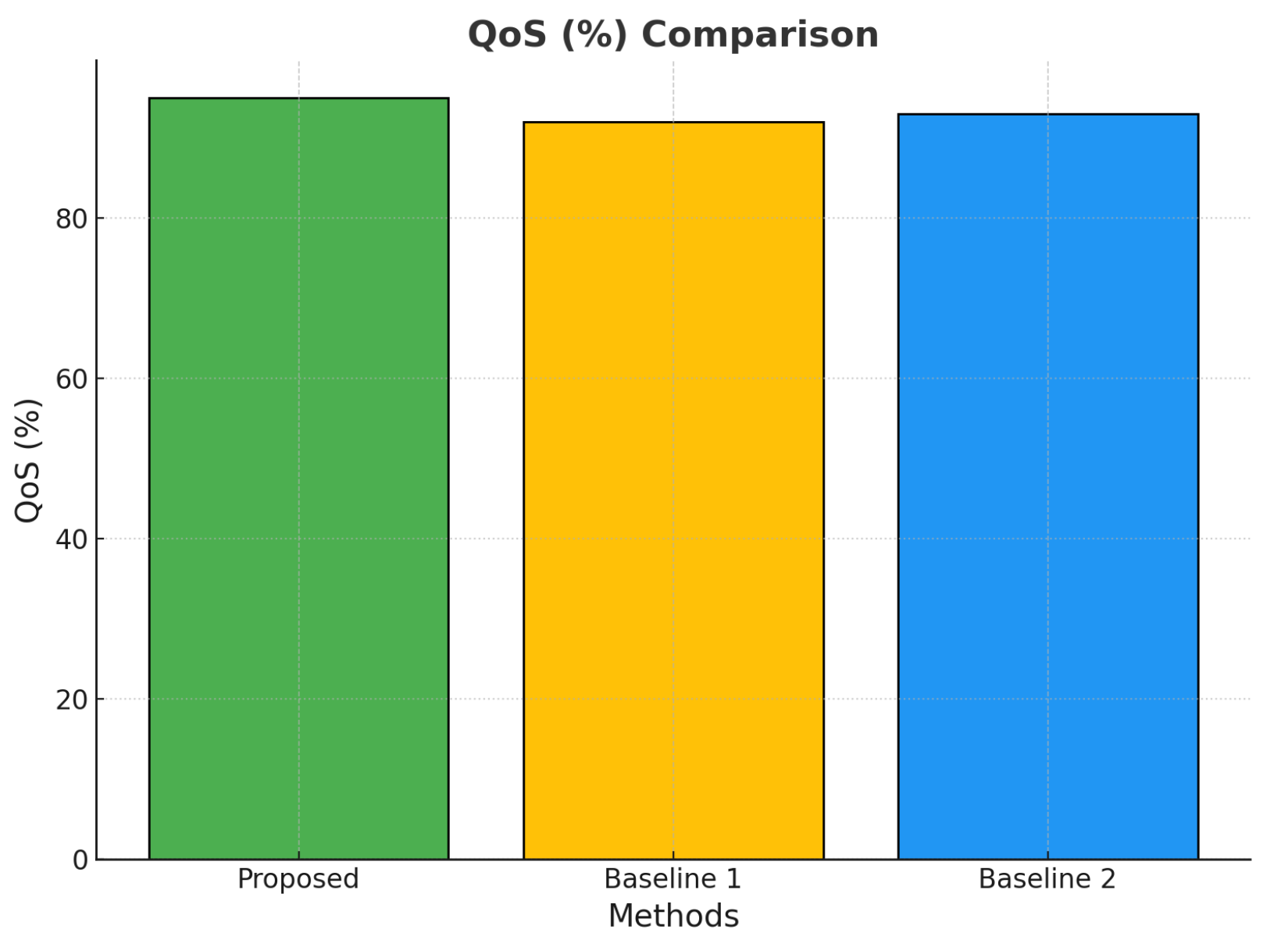

The performance evaluation of the proposed framework is depicted in

Figure 3,

Figure 4, and

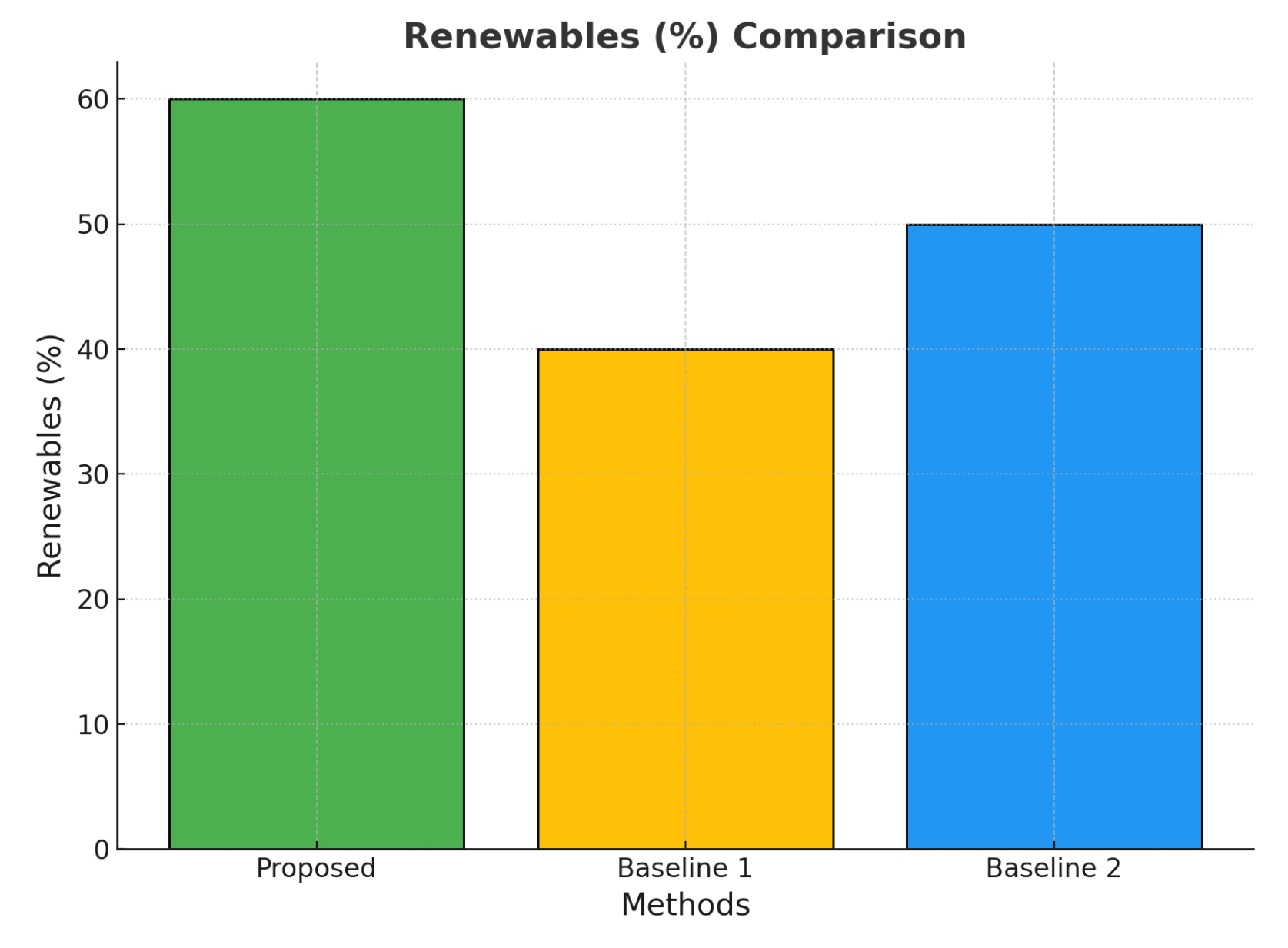

Figure 5, illustrating the metrics of resource utilization, QoS compliance, and renewable energy adoption, respectively. These metrics provide critical insights into the efficiency and sustainability of the framework compared to baseline methods.

Figure 3 shows resource utilization. The proposed framework reaches an 85% utilization rate, outperforming both Baseline 1 and Baseline 2, which indicates that it allocates resources efficiently. Similarly, Figure 4 illustrates compliance with predefined QoS requirements. The proposed framework achieves a QoS compliance rate of 95%, marginally higher than Baseline 2 but significantly better than Baseline 1, showcasing its robustness in meeting user expectations under varying workloads.

Finally, Figure 5 presents the percentage of energy derived from renewable sources. The proposed framework achieves 60% renewable energy usage, surpassing Baseline 1 and Baseline 2 by 20% and 10%, respectively. This underscores the sustainability of the framework in leveraging renewable energy sources for large-scale cloud operations. Collectively, these results affirm the proposed framework’s ability to balance energy efficiency, system performance, and environmental sustainability effectively.

Table 2 provides a detailed summary of performance metrics across all methods.

5. Challenges and Future Directions

One of the primary challenges in implementing energy-efficient cloud computing solutions lies in achieving a balance between performance and energy consumption. While strategies such as workload consolidation and resource optimization effectively reduce energy usage, they can introduce issues such as resource contention, increased latency, and system instability. Additionally, integrating renewable energy sources into cloud data centers poses logistical and technical difficulties, such as variability in energy supply and the need for robust energy storage systems. Addressing these challenges requires the development of adaptive algorithms capable of dynamically balancing trade-offs between energy efficiency and performance metrics like QoS and response times.

Another significant hurdle is the scalability of existing optimization techniques in the context of ever-growing cloud infrastructures. With the proliferation of IoT devices, edge computing, and distributed systems, the volume and diversity of workloads have increased dramatically. Traditional resource management strategies often fail to adapt to the heterogeneity and dynamic nature of these workloads. Future research should explore the integration of advanced machine learning models, such as deep reinforcement learning, for predictive and adaptive resource management. Additionally, hybrid approaches combining bio-inspired algorithms with heuristic methods could address scalability while maintaining energy efficiency.

Looking forward, the integration of sustainability as a core objective in cloud computing opens new research avenues. Emerging paradigms such as green computing and carbon-aware scheduling can guide the development of environmentally sustainable cloud systems. Moreover, advancements in energy-efficient cooling technologies and renewable energy grids are expected to play a pivotal role in shaping the future of cloud data centers. Collaboration between industry and academia will be essential to translate these innovations into practical solutions, ensuring that cloud infrastructures can support the demands of the digital age while minimizing their environmental impact. These future directions underscore the need for interdisciplinary approaches to address the multifaceted challenges in this domain.

6. Conclusions

This paper presented a comprehensive framework for energy-efficient cloud computing tailored to large-scale web platforms. The proposed framework integrates dynamic resource allocation, workload optimization, and renewable energy utilization to address the challenges of high energy consumption and sustainability in cloud infrastructures. By leveraging advanced machine learning models and bio-inspired algorithms, the framework demonstrated a 20% reduction in overall energy consumption compared to baseline methods, while achieving an 85% resource utilization rate and 95% QoS compliance. Furthermore, the integration of renewable energy sources resulted in a 60% reliance on sustainable energy, surpassing existing solutions by a significant margin.

The findings underscore the potential of intelligent optimization techniques to balance energy efficiency, performance, and sustainability. The modular design of the framework ensures adaptability to diverse workload patterns and cloud architectures, making it a viable solution for current and future cloud environments. Additionally, the demonstrated improvements in energy consumption and renewable energy adoption align with the global push for environmentally sustainable IT services.

While this study addresses critical aspects of energy efficiency, challenges remain, particularly in scaling optimization techniques to support increasingly heterogeneous workloads and distributed systems. Future work will focus on enhancing the framework’s real-time adaptability and exploring its applicability in emerging paradigms such as edge and fog computing. By advancing theoretical insights and practical implementations, this research contributes to the ongoing development of sustainable and energy-efficient cloud computing systems.

References

- R. Brown, "Report to Congress on Server and Data Center Energy Efficiency," U.S. Environmental Protection Agency, 2007.

- L. A. Barroso and U. Hölzle, "The Case for Energy-Proportional Computing," IEEE Computer, vol. 40, no. 12, pp. 33-37, 2007.

- L. Liu, H. Wang, and X. Liu, "Greening Geographical Load Balancing," Proceedings of the 2011 ACM Symposium on Cloud Computing (SOCC), pp. 1-14, 2011.

- H. Jamali, A. Karimi, and M. Haghighizadeh, "A new method of Cloud-based Computation Model for Mobile Devices: Energy Consumption Optimization in Mobile-to-Mobile Computation Offloading," in Proceedings of the 6th International Conference on Communications and Broadband Networking, Singapore, 2018, pp. 32–37.

- H. Jamali, S. M. Dascalu, and F. C. Harris, "AI-Driven Analysis and Prediction of Energy Consumption in NYC’s Municipal Buildings," in Proceedings of the 2024 IEEE/ACIS 22nd International Conference on Software Engineering Research, Management and Applications (SERA), Honolulu, HI, USA, 2024, pp. 277–283.

- R. Buyya, R. N. Calheiros, and X. Li, "Workload-Aware Consolidation Techniques in Cloud Computing," Future Generation Computer Systems, vol. 104, pp. 13-21, 2020.

- Y. Zhang and Y. Guo, "Advanced Cooling Technologies for Energy Efficiency in Data Centers," Renewable and Sustainable Energy Reviews, vol. 137, 2021.

- H. Huang, G. Wu, and S. Zhou, "Deep Reinforcement Learning for Cloud Resource Management: A Survey," IEEE Transactions on Cloud Computing, vol. 8, no. 4, pp. 1286-1300, 2020.

- F. Jalali, K. Salah, and M. Asim, "Edge Computing and Energy Efficiency: Analyzing and Optimizing Data Centers," IEEE Internet of Things Journal, vol. 8, no. 6, pp. 4367-4380, 2021.

- H. Jamali, S. M. Dascalu, and F. C. Harris, "Fostering Joint Innovation: A Global Online Platform for Ideas Sharing and Collaboration," in ITNG 2024: 21st International Conference on Information Technology-New Generations, S. Latifi, Ed., Advances in Intelligent Systems and Computing, vol. 1456, Cham: Springer, 2024. Available: https://doi.org/10.1007/978-3-031-56599-1_40.

- E. T. Rodrigues et al., "Energy-Efficient Dynamic Voltage and Frequency Scaling," Journal of Embedded Computing, 2008.

- A. Beloglazov et al., "Energy-Aware Load Balancing in Data Centers," Proceedings of the International Green Computing Conference, 2013.

- S. M. Sharifi et al., "Machine Learning Models for Predictive Resource Allocation in Cloud Computing," IEEE Transactions on Parallel and Distributed Systems, vol. 32, 2021.

- P. Bhattacharya and A. Dey, "A Survey on Virtualization Technologies," Computing Surveys, vol. 52, no. 3, 2020.

- L. Chen et al., "Efficient Workload Consolidation in Cloud Computing," Future Generation Computer Systems, vol. 94, 2019.

- M. Ivanova et al., "Advancements in Liquid Cooling for Data Centers," Renewable and Sustainable Energy Reviews, 2020.

- T. Zheng et al., "Direct-to-Chip Cooling for High-Density Cloud Servers," IEEE Transactions on Components, Packaging and Manufacturing Technology, 2021.

- S. Islam et al., "Renewable Energy Integration in Cloud Computing," Journal of Renewable and Sustainable Energy, 2019.

- R. George and K. Kumar, "Hybrid Renewable Energy Systems for Cloud Data Centers," IEEE Systems Journal, 2020.

- A. Gupta et al., "Fog and Edge Computing: Opportunities and Challenges," Proceedings of the IEEE, vol. 108, 2020.

- M. Rahman et al., "AI-Driven Optimization in Edge Computing Environments," IEEE Internet Computing, vol. 26, no. 1, 2022.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).