Ref. [

2] explores the integration of LEO satellite constellations into 5G and beyond-5G communication systems. The authors employ mathematical modeling techniques, including network capacity models, signal propagation models, and orbital mechanics to simulate the behavior of LEO satellites in providing mobile broadband, massive machine-type communications (mMTC), and ultra-reliable low-latency communications (URLLC). The models account for satellite orbit parameters, satellite handovers, and coverage areas, while the formulae used include signal-to-noise ratio (SNR) calculations based on the Friis transmission equation and link budget analysis for satellite communication. Additionally, the paper uses models of interference from both satellite and terrestrial networks. The results of the mathematical simulations suggest that LEO satellites are crucial for expanding global network coverage, especially in underserved regions. Ref. [

3] focuses on optimizing the key parameters of LoRa communication for satellite-based IoT applications in the LEO environment. This paper develops a mathematical model to simulate LoRa’s communication behavior under different environmental and system conditions, such as Doppler shifts, signal degradation, and channel noise. The optimization approach is based on the analysis of key performance indicators such as energy efficiency and communication reliability, with the authors employing a multi-objective optimization framework. The formulae used include the computation of the signal-to-noise ratio (SNR) for each parameter configuration, the Shannon capacity formula for determining channel capacity, and energy consumption models derived from the transmission power and duty cycle. Simulation results demonstrate that optimized LoRa parameters can enhance communication reliability and energy efficiency, making it a viable solution for satellite IoT applications. In [

4], the authors introduce a novel mathematical model for ultra-dense LEO satellite constellations. The model is based on the queuing theory to represent satellite network traffic, the interference models to capture signal collisions, and the orbital mechanics models to simulate the deployment and movement of satellites in low Earth orbit. They apply models of Poisson processes to describe the traffic arrival rates and employ the Erlang-B formula to calculate the blocking probability for satellite channels. Additionally, interference management techniques are modeled using game theory, where the authors use Nash equilibrium solutions to model satellite resource sharing and interference mitigation. The results indicate that ultra-dense constellations significantly enhance system capacity, but advanced interference management is crucial to optimize network performance. Ref. [

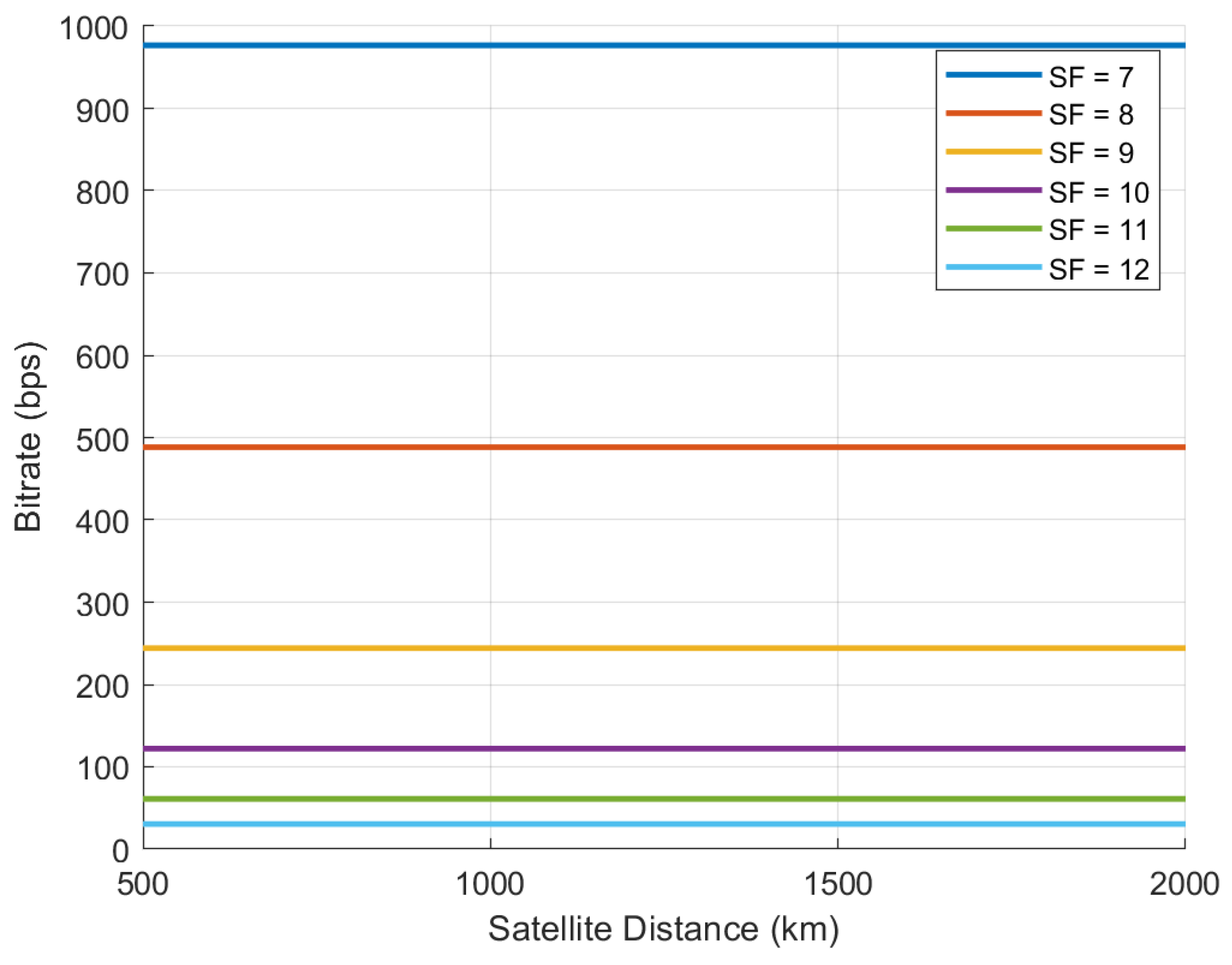

5] investigates the performance of LoRa (Long Range) technology for Internet of Things (IoT) networks using LEO satellites. The authors conduct an in-depth analysis of LoRa’s feasibility in satellite IoT applications, focusing on the challenges posed by the satellite environment, including high mobility, Doppler shifts, and propagation conditions. The study uses both analytical models and simulation results to assess key performance metrics, such as packet delivery success, energy efficiency, and link reliability, under various channel conditions typical of LEO satellite communications. The authors examine how LoRa’s physical-layer characteristics, such as Chirp Spread Spectrum (CSS) modulation and long-range communication capabilities, enable reliable data transmission in satellite IoT networks, especially in remote or underserved areas. The paper also explores the trade-offs between spreading factor, transmission power, and network coverage in the context of LEO satellite IoT deployments. Key findings reveal that while LoRa performs well in terms of range and power efficiency, it requires optimization in terms of synchronization and Doppler shift compensation to effectively support large-scale satellite IoT networks. Ref. [

6] provides a comprehensive overview of the Long Range Frequency-Hopping Spread Spectrum (LR-FHSS) modulation scheme, specifically designed to enhance the scalability and robustness of LoRa-based networks. LR-FHSS introduces frequency hopping to the conventional LoRa modulation, allowing for more efficient spectrum usage and reducing interference in densely deployed environments. The authors offer a detailed performance analysis of LR-FHSS, comparing it to traditional LoRa in terms of capacity, energy efficiency, and interference resilience. Through both theoretical modeling and simulation, the paper demonstrates that LR-FHSS can significantly increase network capacity (up to 40 times) without compromising the low power consumption that is characteristic of LoRa technology. Additionally, the study highlights that LR-FHSS can maintain reliable communication in the presence of narrowband interference and congestion, which are common challenges in large-scale IoT deployments. Key results show that LR-FHSS’s frequency diversity allows for reduced packet collisions and enhanced link reliability, making it an attractive solution for large-scale IoT networks, particularly in applications such as smart cities and industrial IoT. Ref. [

7] introduces a unified architecture that bridges terrestrial and space-based IoT infrastructures, aiming to enable global and ubiquitous connectivity. The authors classify IoT-satellite interactions into direct and indirect satellite access models and systematically assess their performance in terms of energy efficiency, coverage, and delay. Particular attention is given to Low Power Wide Area Network (LPWAN) technologies, specifically NB-IoT and LoRa/LoRaWAN, evaluating their physical and protocol-layer feasibility for Low Earth Orbit (LEO) satellite scenarios. Key challenges such as Doppler shift, timing synchronization, link intermittency, and contention in the access medium are analyzed in detail. The study highlights that although LoRa and NB-IoT offer strong potential for low-energy satellite IoT, protocol adaptations and cross-layer design improvements are critical to ensure robust operation in high-mobility satellite environments. This foundational work sets the stage for further development of hybrid terrestrial-satellite IoT systems. In [

8], the authors explore the application of differential modulation techniques within LoRa communication frameworks targeted at LEO satellite-based IoT networks. The primary objective is to mitigate synchronization and frequency offset challenges, which are prominent in satellite channels characterized by high Doppler shifts and rapid mobility. Differential modulation, by allowing symbol decoding without requiring explicit phase recovery, enhances the robustness of LoRa links in dynamic propagation environments. The study provides both theoretical analysis and simulation-based validation, demonstrating that differential LoRa modulation can maintain reliable connectivity at low SNRs and under varying Doppler conditions. Additionally, it retains the core advantages of LoRa, such as long-range communication and low power consumption, making it a viable candidate for energy-efficient, space-based IoT deployments. This research contributes to improving the link reliability and physical-layer adaptability of LoRa in non-terrestrial environments. The paper [

9] provides a review of dynamic communication techniques for LEO satellite networks. Although this paper focuses more on reviewing existing literature, it references several key mathematical models, including beamforming models that use array gain calculations to optimize the directional communication of satellites. The authors also discuss frequency reuse models, interference mitigation using power control algorithms, and dynamic scheduling methods, with mathematical formulations for optimal frequency allocation and channel assignment. Furthermore, they refer to dynamic beamforming models that employ antenna gain and signal attenuation equations, and discuss adaptive algorithms to handle satellite mobility, including Markov chain models to represent handover and routing protocols in dynamic LEO networks. Ref. [

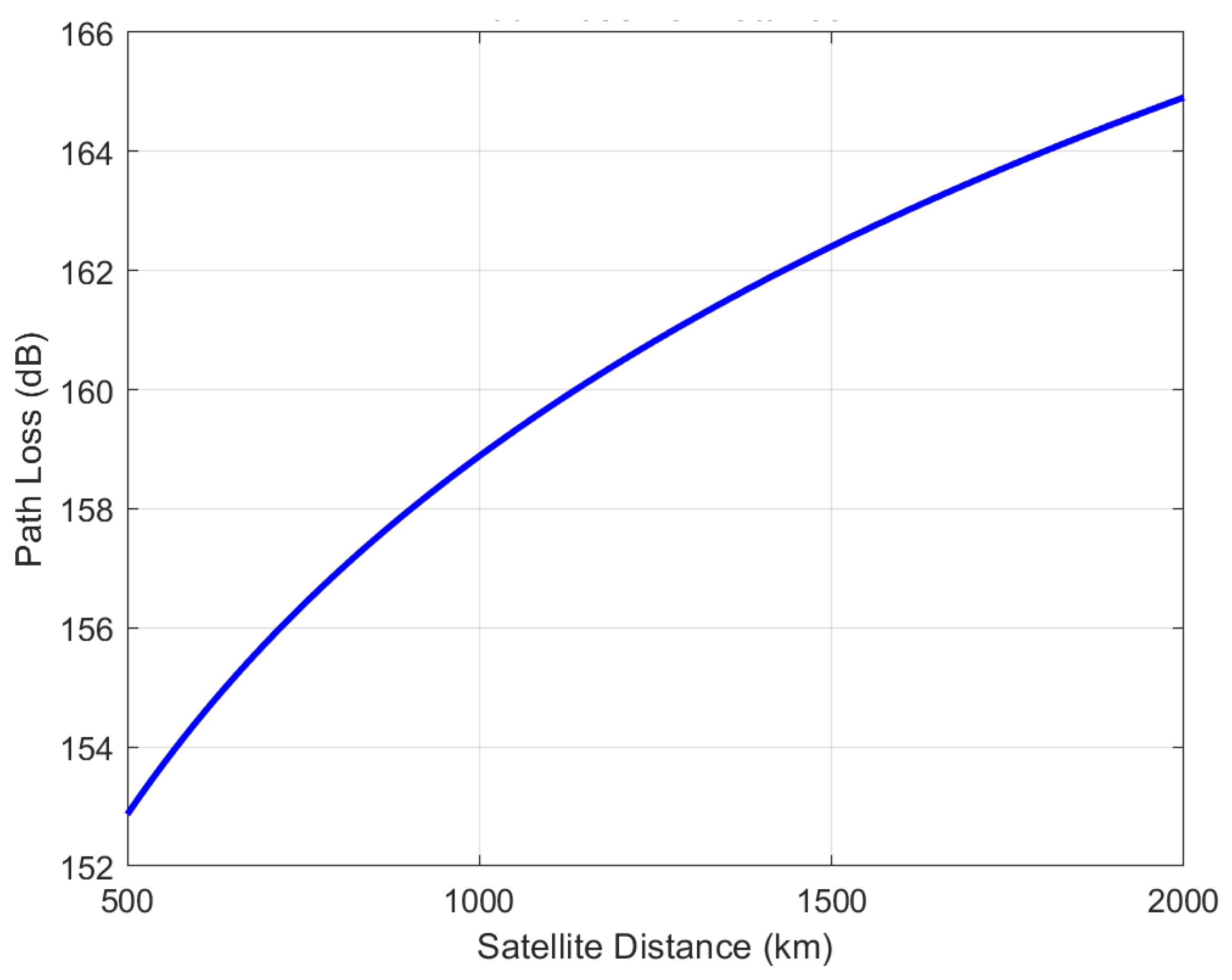

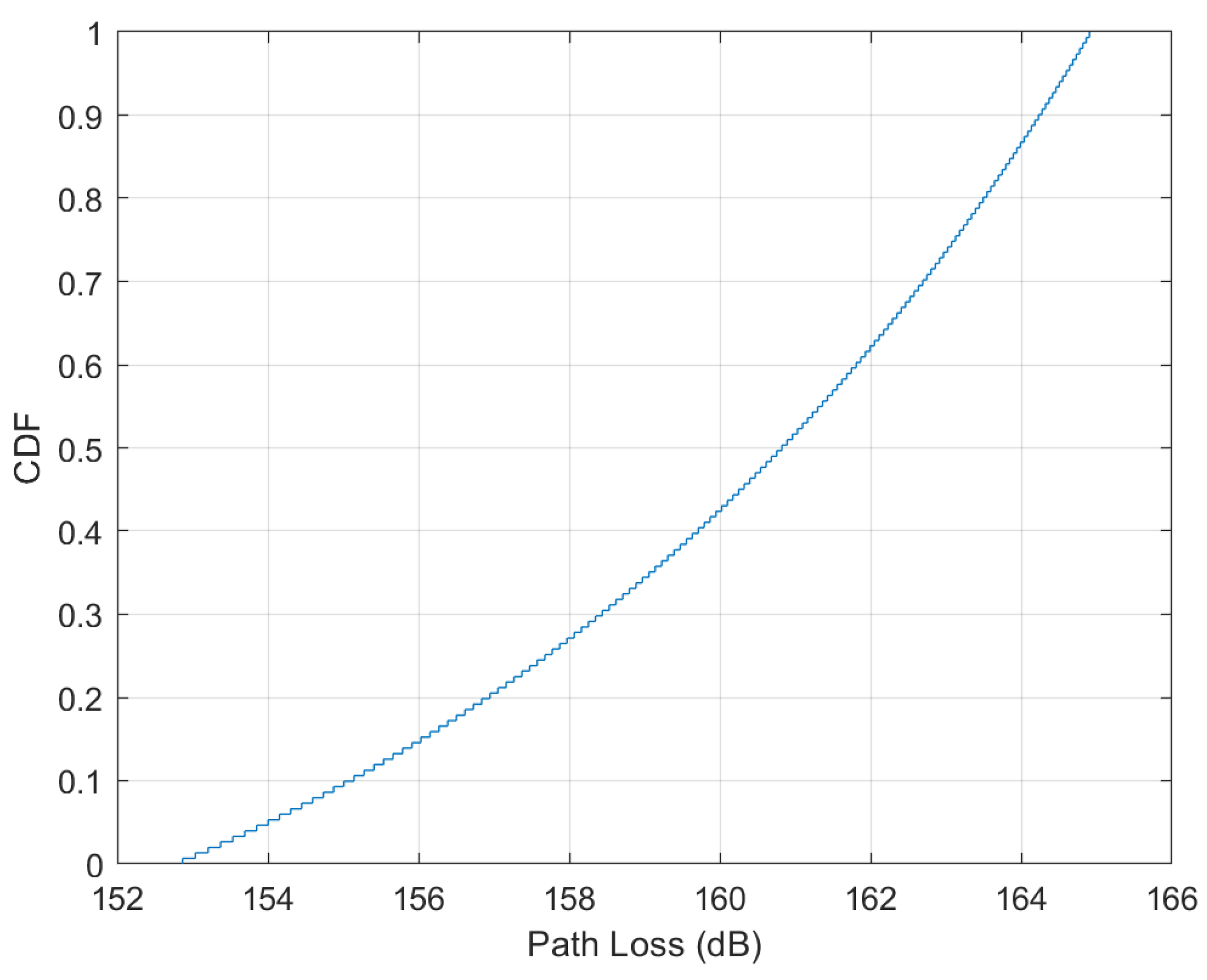

10] presents the design of a LoRa-like transceiver optimized for satellite IoT applications. The mathematical models used in this paper include link budget calculations, considering factors such as satellite altitude, path loss, and free-space propagation models. The paper also uses signal degradation models to predict the attenuation over long distances. The formulae applied include the path loss model derived from the Friis transmission equation, and power consumption models based on signal strength and modulation schemes. Additionally, the authors utilize the Shannon-Hartley theorem to assess the theoretical maximum data rates achievable under varying signal conditions. The optimization process focuses on minimizing energy consumption while maintaining acceptable communication reliability, and the results demonstrate that the LoRa-like transceiver provides an energy-efficient solution with good performance over long distances in satellite networks. Ref. [

11] examines how LoRaWAN combined with LEO satellites can facilitate mMTC in remote regions. The paper uses mathematical models for network coverage and reliability, incorporating satellite link budget equations and path loss models specific to LEO satellites. The authors also apply models of interference and fading, including the Ricean fading model to simulate the impact of environmental conditions on signal strength. Additionally, the paper employs stochastic geometry models to evaluate the spatial distribution of devices and network performance in terms of coverage, throughput, and scalability. Simulations show that integrating LoRaWAN with LEO satellites offers a cost-effective and reliable solution for mMTC in rural and isolated areas. Ref. [

12] explores the performance of the LoRaWAN protocol integrated with LR-FHSS modulation for direct communication to LEO satellites. The authors used a combination of analytical and simulation models to evaluate the feasibility and performance of this system in satellite communication scenarios. To assess packet delivery from ground nodes to LEO satellites, the authors developed mathematical models that consider various factors such as satellite mobility, channel characteristics, and potential signal collisions. These models focus on the integration of LR-FHSS within the LoRaWAN framework to improve network capacity and resilience, specifically addressing the challenges posed by the dynamic nature of satellite communication. The analytical model also takes into account the average inter-arrival times for different packet elements, including headers and payload fragments, and calculates the overall success probability for packet transmission. This success probability is influenced by the first (Ftx-1) payload fragments and the last fragment of a data packet. Additionally, the study incorporates the effects of Rician fading in the communication channel, considering the elevation angle of the satellite as a function of time, which reflects the rapid variations in the channel caused by the movement of the satellite. This dynamic modeling is crucial for accurately simulating the real-world conditions of satellite communication. The results indicate that the use of LR-FHSS significantly improves the potential for large-scale LoRaWAN networks in direct-to-satellite scenarios. The study also identifies important trade-offs between different LR-FHSS-based data rates, particularly for the European region, and highlights the causes of packet losses, such as insufficient header replicas or inadequate payload fragment reception. 
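The two loss causes named above (too few header replicas, too few payload fragments) can be illustrated with a deliberately simplified sketch. It is not the analytical model of [12]: it assumes that every header replica and every payload fragment is received independently with a fixed probability, and it ignores fading, elevation angle, and inter-arrival times. The function names, the replica count, and the decoding threshold are hypothetical placeholders.

```python
from math import comb


def header_ok(p_header: float, replicas: int) -> float:
    """Probability that at least one of the header replicas is received."""
    return 1.0 - (1.0 - p_header) ** replicas


def payload_ok(p_fragment: float, fragments: int, fragments_needed: int) -> float:
    """Probability that at least `fragments_needed` of `fragments` arrive.

    Assumes independent, identically distributed fragment losses, so the
    number of received fragments follows a binomial distribution.
    """
    return sum(
        comb(fragments, k) * p_fragment**k * (1.0 - p_fragment) ** (fragments - k)
        for k in range(fragments_needed, fragments + 1)
    )


def packet_success(p_header: float, p_fragment: float, replicas: int,
                   fragments: int, fragments_needed: int) -> float:
    """A packet succeeds only if both the header and the payload are decodable."""
    return header_ok(p_header, replicas) * payload_ok(
        p_fragment, fragments, fragments_needed
    )


if __name__ == "__main__":
    # Illustrative values only: 3 header replicas, 10 fragments, 4 needed.
    print(packet_success(0.7, 0.6, replicas=3, fragments=10, fragments_needed=4))
```

Under these assumptions, adding header replicas or lowering the number of fragments required for decoding both raise the success probability, which is the kind of data-rate trade-off the study discusses.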
These findings emphasize the potential of LR-FHSS to enhance direct connectivity between IoT devices and LEO satellites, providing an effective solution for enabling IoT applications in remote and underserved areas. Ref. [

13] investigates the performance of LoRaWAN with Long Range Frequency Hopping Spread Spectrum (LR-FHSS) in direct-to-satellite communication scenarios. The paper uses mathematical models to simulate the impact of Doppler shifts and interference on LoRaWAN’s performance. The authors use Doppler shift models based on satellite velocity and orbital parameters to analyze the variation in signal frequency, and interference models that consider multiple access interference and the impacts of frequency hopping. Additionally, the authors use SNR-based formulas to evaluate the system’s performance under varying link conditions, such as changes in the relative positions of satellites and ground stations. Simulation results indicate that LR-FHSS in LoRaWAN enhances the system’s robustness against Doppler shifts and interference, ensuring reliable communication in direct-to-satellite scenarios. Ref. [

14] explores the scalability of LoRa for IoT satellite communication systems and evaluates its performance in multiple-access communication scenarios. The authors use analytical models to calculate the channel capacity and throughput of LoRa-based networks, applying queuing theory and slotted ALOHA for multiple-access schemes. The paper incorporates interference models based on the random arrival of packets and the effects of channel collisions. Additionally, the authors use the Shannon capacity formula to analyze the maximum achievable data rates in multiple-access satellite IoT networks. Their findings show that LoRa can be scaled for large IoT deployments, offering a low-power solution that ensures efficient multiple-access operation in satellite environments. In [

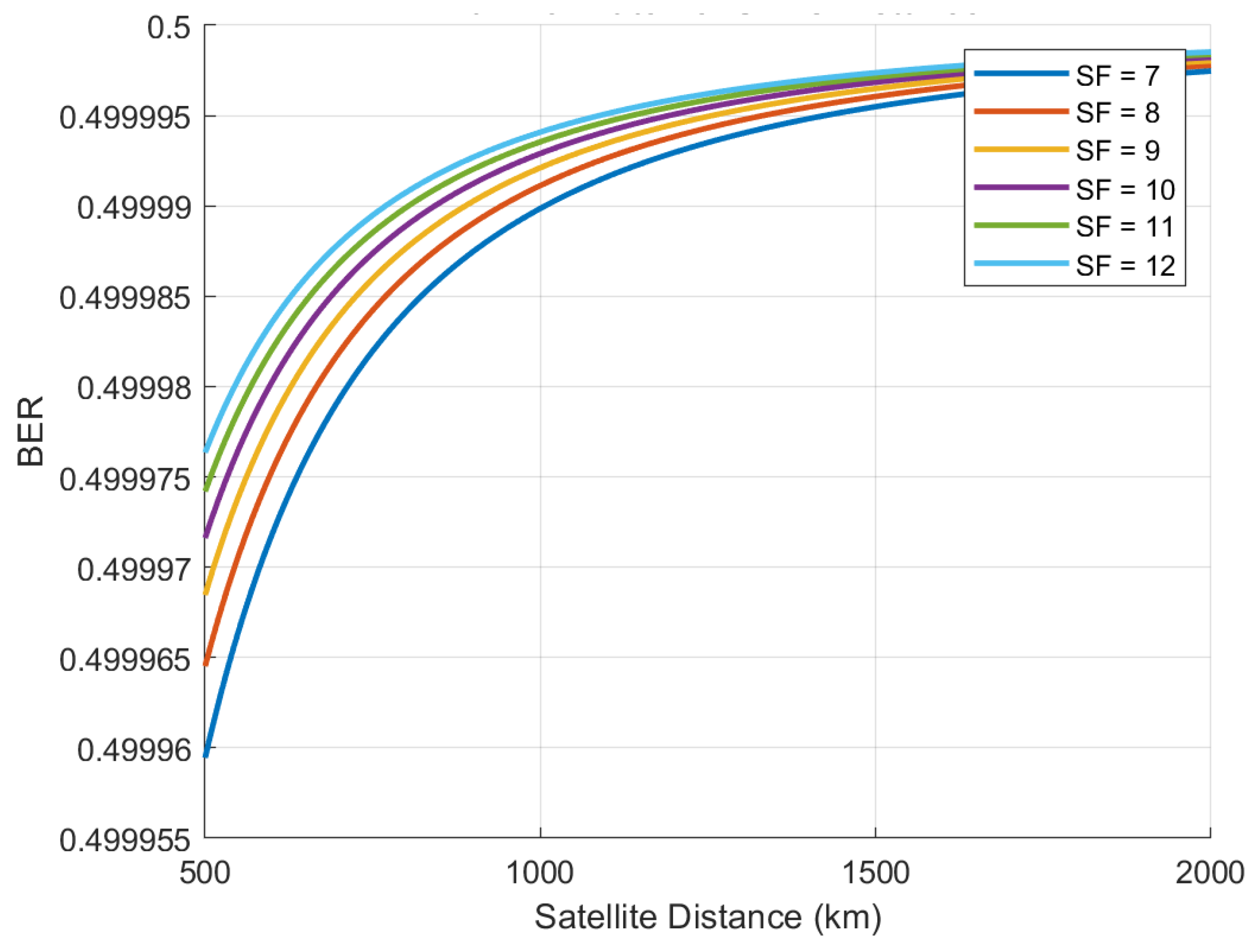

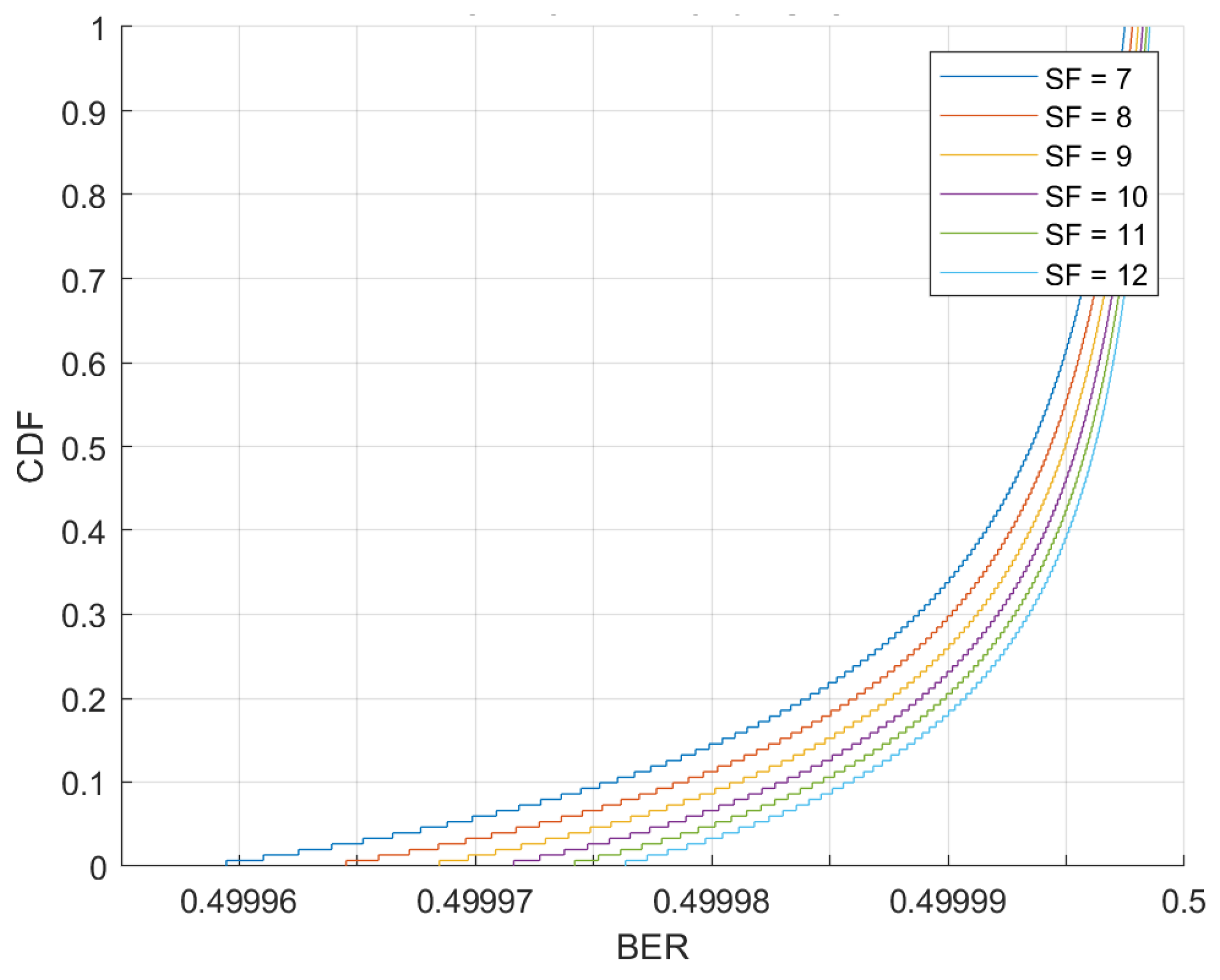

15] the authors model the uplink channel for LoRa in satellite-based IoT systems. The paper uses models based on link budget analysis to calculate the effective SNR and the associated bit error rate (BER) for uplink transmissions. The authors apply the Shannon-Hartley theorem to evaluate the capacity of the uplink channel and use fading models, such as Rayleigh and Ricean fading, to simulate realistic satellite communication conditions. Additionally, the authors consider the impact of transmission power on energy consumption, employing optimization techniques to determine the optimal transmission power for minimizing energy consumption while maintaining sufficient communication reliability. The findings suggest that LoRa provides a viable solution for uplink transmission in satellite IoT networks, particularly when optimized for power and signal conditions.
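Several of the works above combine a Friis-based link budget with the Shannon-Hartley theorem. The sketch below shows that chain of calculations in its simplest form: free-space path loss, received power, thermal noise, SNR, and the resulting capacity bound. It is an illustration under stated assumptions, not the model of any cited paper: it ignores fading, atmospheric and polarization losses, and Doppler, and all numeric inputs in the usage example (14 dBm transmit power, 600 km range, 868 MHz, 125 kHz bandwidth, 6 dB noise figure) are hypothetical.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0   # m/s
THERMAL_NOISE_DBM_HZ = -174.0    # thermal noise density at about 290 K


def fspl_db(distance_m: float, freq_hz: float) -> float:
    """Free-space path loss in dB (Friis equation with isotropic antennas)."""
    return 20.0 * math.log10(4.0 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT)


def snr_db(tx_power_dbm: float, tx_gain_dbi: float, rx_gain_dbi: float,
           distance_m: float, freq_hz: float, bandwidth_hz: float,
           noise_figure_db: float) -> float:
    """SNR in dB from a free-space link budget with thermal noise only."""
    rx_power_dbm = (tx_power_dbm + tx_gain_dbi + rx_gain_dbi
                    - fspl_db(distance_m, freq_hz))
    noise_dbm = (THERMAL_NOISE_DBM_HZ + 10.0 * math.log10(bandwidth_hz)
                 + noise_figure_db)
    return rx_power_dbm - noise_dbm


def shannon_capacity_bps(bandwidth_hz: float, snr_db_value: float) -> float:
    """Shannon-Hartley capacity bound C = B * log2(1 + SNR)."""
    return bandwidth_hz * math.log2(1.0 + 10.0 ** (snr_db_value / 10.0))


if __name__ == "__main__":
    snr = snr_db(14.0, 0.0, 0.0, 600e3, 868e6, 125e3, 6.0)
    print(f"SNR: {snr:.2f} dB")
    print(f"Capacity bound: {shannon_capacity_bps(125e3, snr):.0f} bit/s")
```

With these example inputs the SNR is negative, which is the regime in which spread-spectrum schemes such as LoRa are intended to operate; the capacity figure is a theoretical upper bound, not an achievable LoRa data rate.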

Ref. [16] presents a novel scheduling algorithm, SALSA, designed to manage data transmission from IoT devices to LEO satellites. The paper introduces an optimization model for dynamic traffic scheduling, in which queuing models prioritize data packets based on urgency and delay tolerance. The scheduling algorithm minimizes communication delays and optimizes throughput by dynamically adjusting the transmission window, considering factors such as satellite position and network load. The mathematical formulations include the calculation of optimal scheduling intervals using linear programming techniques and the analysis of queueing delay based on Poisson processes. The paper concludes that SALSA significantly enhances the efficiency of LoRa-based satellite communications by reducing latency and increasing throughput, improving the overall performance of IoT networks.

Ref. [17] provides an in-depth review of scalable LoRaWAN solutions for satellite IoT systems. The authors discuss scalability models that address network densification, including interference mitigation and synchronization techniques. They present mathematical models of LoRaWAN network traffic, using stochastic models and queuing theory to evaluate network scalability under different operational conditions. The paper also references interference models based on Gaussian noise and path loss equations specific to satellite communication, and applies game-theoretic models to resource allocation and power control in large-scale IoT systems. The review concludes that LoRaWAN can effectively scale for massive IoT systems in satellite networks, but that further improvements in network management and interference mitigation are necessary to handle the increasing demands of such systems.

Ref. [18] investigates the applicability of quasisynchronous LoRa communication in LEO nanosatellites. The primary objective is to address the Doppler shift challenges typically encountered in such environments and to enhance the reliability of long-range, low-power communication. The authors conduct a series of simulations to test the robustness and efficiency of quasisynchronous transmission techniques, which are intended to reduce Doppler-induced signal degradation while preserving energy efficiency. The mathematical modeling focuses on the analysis of symbol error rate (SER) under additive white Gaussian noise (AWGN) conditions, considering various spreading factors and chip waveform structures. The simulation outcomes demonstrate that quasisynchronous LoRa can significantly improve communication reliability for nanosatellites engaged in IoT operations, particularly where energy constraints are paramount.

Ref. [19] presents a system architecture for a cost-effective nanosatellite communication platform based on LoRa technology, targeting emerging IoT deployments. The study involves the design and prototyping of both hardware and software elements of the communication system. Performance evaluation includes link budget calculations and assessments of signal range and energy efficiency. The analysis employs received signal strength indicator (RSSI) metrics and estimates the maximum achievable communication distance, which exceeds 14 kilometers. The findings suggest that LoRa-based nanosatellites offer a viable solution for long-range, low-power communication systems that are both scalable and economically sustainable for large-scale IoT applications.

Ref. [20] presents a novel transmission protocol that integrates Device-to-Device (D2D) communication with LoRaWAN LR-FHSS to enhance the performance of direct-to-satellite (DtS) IoT networks. The authors propose a D2D-assisted scheme in which IoT devices first exchange data using terrestrial LoRa links and subsequently transmit both the original packets and parity packets, generated through network coding, to a LEO satellite gateway using LR-FHSS modulation. This approach is designed to mitigate the limitations of conventional LoRaWAN deployments in DtS scenarios, particularly limited capacity and high outage rates in dense environments. A comprehensive system model is developed, incorporating a realistic ground-to-satellite fading channel characterized by a shadowed-Rician distribution. The authors derive closed-form expressions for the outage probability of both the baseline and D2D-aided LR-FHSS systems, taking into account key system parameters including noise, channel fading, unslotted ALOHA scheduling, capture effect, IoT device spatial distribution, and the geometry of the satellite link. These analytical results are validated through computer simulations under various deployment scenarios. The study demonstrates that the proposed D2D-aided LR-FHSS scheme significantly enhances network capacity compared to the traditional LR-FHSS approach. Specifically, the network capacity improves by up to 249.9% for Data Rate 6 (DR6) and 150.1% for Data Rate 5 (DR5) while maintaining a typical outage probability of

. This performance gain is achieved at the cost of one to two additional transmissions per device per time slot due to the D2D cooperation. The D2D approach also exhibits strong performance in dense IoT environments, where packet collisions and interference are prominent.

In [21], the authors provide a comparative review of LPWAN technologies, with a particular emphasis on LoRaWAN, and evaluate their potential for direct-to-satellite IoT communication. The methodology involves a systematic comparison of LPWAN protocols based on their scalability, power consumption, and data throughput. The analytical framework includes interference modeling, latency evaluation, and throughput estimation under variable environmental and operational conditions. The survey concludes that LPWAN technologies, especially LoRaWAN, are well-positioned to support satellite IoT networks; however, persistent challenges such as high-latency communication and susceptibility to interference warrant further investigation.

Ref. [22] surveys recent technological trends in the integration of LPWAN, specifically LoRaWAN, within LEO satellite constellations. The authors review advancements in synchronization protocols, interference mitigation strategies, and system scalability. Analytical modeling is used to characterize interference dynamics, while synchronization models assess the temporal alignment of satellite communications. Scalability is evaluated through network topology simulations and capacity forecasting. The paper concludes that although LPWAN presents numerous advantages for LEO constellations, including low power usage and wide coverage, the technology requires enhanced coordination mechanisms and interference control techniques to achieve full operational efficacy.

In [23], the focus is on quantifying and mitigating the impact of Doppler shifts in LoRa-based satellite communications. The authors develop a mathematical model to calculate Doppler shifts resulting from the relative velocity of LEO satellites and ground terminals. The study introduces frequency compensation algorithms aimed at counteracting the signal distortion caused by rapid satellite motion. Performance is assessed through simulations that incorporate SNR analyses and compare communication quality with and without Doppler compensation. The results confirm that Doppler effects significantly impair LoRa signal integrity in direct-to-satellite communication, but also show that well-designed compensation techniques can restore acceptable levels of performance.

Ref. [24] investigates strategies for minimizing energy consumption in LoRaWAN-based communication systems deployed via LEO satellites. The paper centers on adaptive transmission techniques that dynamically adjust power levels and duty cycles based on real-time communication requirements and environmental conditions. The authors use simulation-based methods to compare multiple transmission schemes in terms of energy efficiency and network reliability. The mathematical framework incorporates models for energy consumption as a function of packet size, transmission frequency, and satellite-ground link distance. Markov models are also used to simulate duty cycle transitions and assess network availability. The findings demonstrate that optimizing these parameters can significantly prolong the operational lifetime of IoT devices in satellite networks and make LoRaWAN a highly viable protocol for energy-constrained space-based IoT deployments.

Ref. [25] proposes a novel uplink scheduling strategy for LoRaWAN-based DtS IoT systems, termed Beacon-based Uplink LoRaWAN (BU-LoRaWAN). The method leverages the Class B synchronization framework of LoRaWAN, using periodic beacons transmitted by satellites to synchronize ground devices. By aligning transmissions within beacon-defined time slots, the scheme significantly reduces packet collisions and improves spectral efficiency, particularly in contention-heavy, low-duty-cycle uplink scenarios. Simulation results demonstrate that BU-LoRaWAN outperforms standard ALOHA-based LoRaWAN protocols in terms of delivery probability and latency under typical LEO orbital conditions. The study also discusses practical implementation challenges such as beacon visibility duration, orbital scheduling, and ground terminal hardware constraints. The proposed protocol is shown to be especially advantageous for delay-tolerant IoT applications in remote and infrastructure-less regions, supporting scalability and efficient utilization of limited satellite resources.
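The benefit of aligning transmissions to time slots can be illustrated with the classical ALOHA throughput formulas. This is a textbook comparison, not the BU-LoRaWAN simulation of [25]: it assumes Poisson arrivals, equal-length packets, and no capture effect, and it ignores beacon overhead, Doppler, and satellite visibility. The function names are hypothetical.

```python
import math


def pure_aloha_throughput(offered_load: float) -> float:
    """Throughput S = G * exp(-2G) for unslotted (pure) ALOHA.

    A packet survives only if no other packet starts within a
    vulnerable window of two packet durations.
    """
    return offered_load * math.exp(-2.0 * offered_load)


def slotted_aloha_throughput(offered_load: float) -> float:
    """Throughput S = G * exp(-G) for slotted ALOHA.

    Slot alignment halves the vulnerable window to one packet duration.
    """
    return offered_load * math.exp(-offered_load)


if __name__ == "__main__":
    # Offered load G is in packets per packet duration.
    for g in (0.1, 0.5, 1.0, 2.0):
        print(f"G={g:.1f}  pure={pure_aloha_throughput(g):.3f}  "
              f"slotted={slotted_aloha_throughput(g):.3f}")
```

In this idealized model the peak throughput of the slotted scheme is twice that of the unslotted one, which gives some intuition for why beacon-synchronized slots reduce collisions in contention-heavy uplinks.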