Submitted: 24 October 2025

Posted: 27 October 2025


Abstract

Keywords:

1. Introduction

- Uphold the rights of individuals with disabilities and empower them to participate actively in contemporary society. We are committed to enhancing user independence so that users can manage everyday transportation tasks on their own.

- Improve access to a range of public transportation options, particularly for individuals with visual impairments.

- Enable our users to fully leverage the affordability of public transportation, thereby reducing the additional expenses associated with seeking assistance or specialized services for their mobility within the city.

- Reduce greenhouse gas emissions, on the principle that fewer private car trips mean a smaller environmental footprint. If individuals with visual impairments switch to public transportation, the emissions from trips made with private chauffeurs would fall.

- Provide a wide range of training materials and user assistance so that individuals with visual impairments can make full use of the app's functionalities.

2. Literature Review

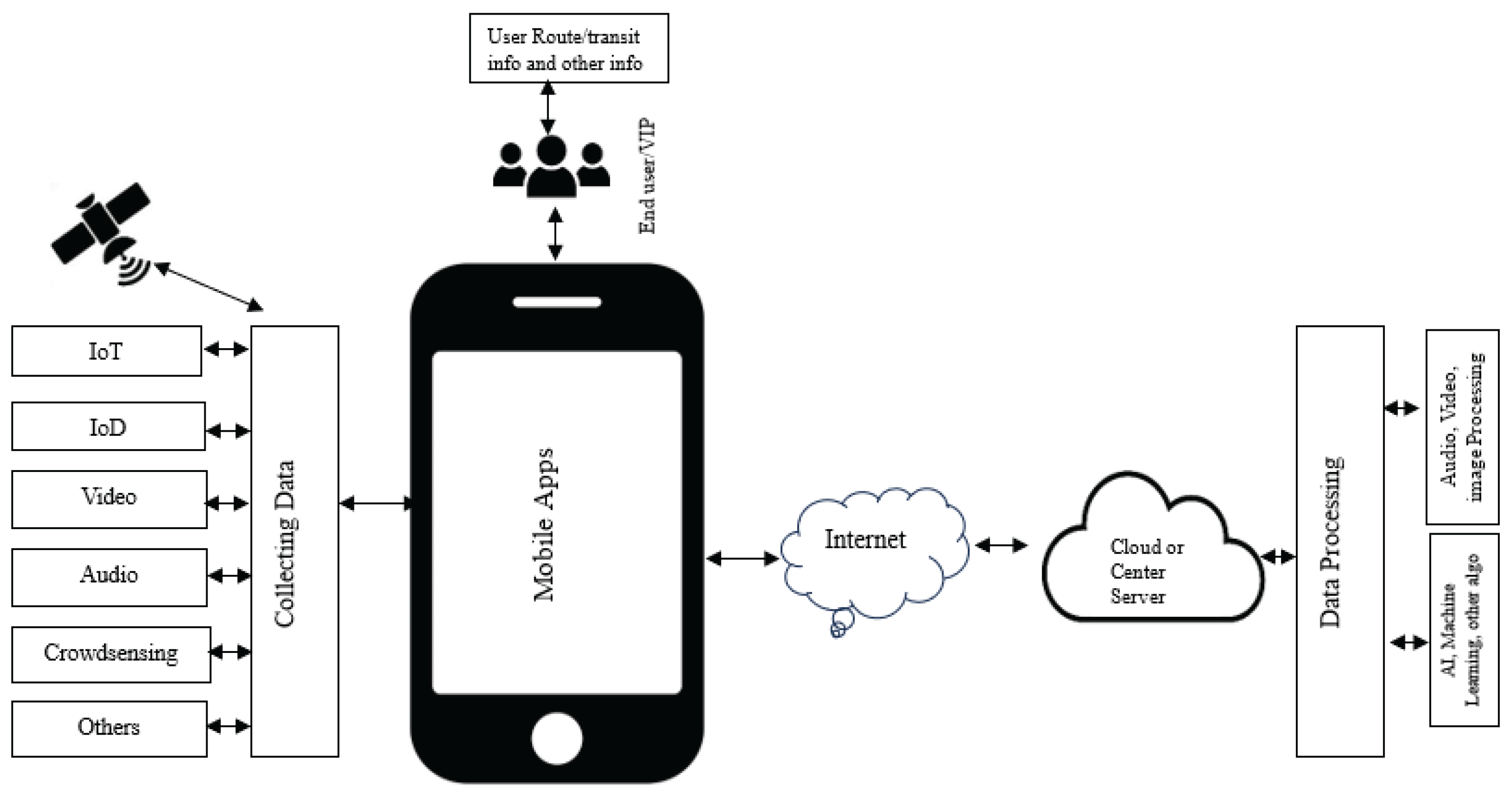

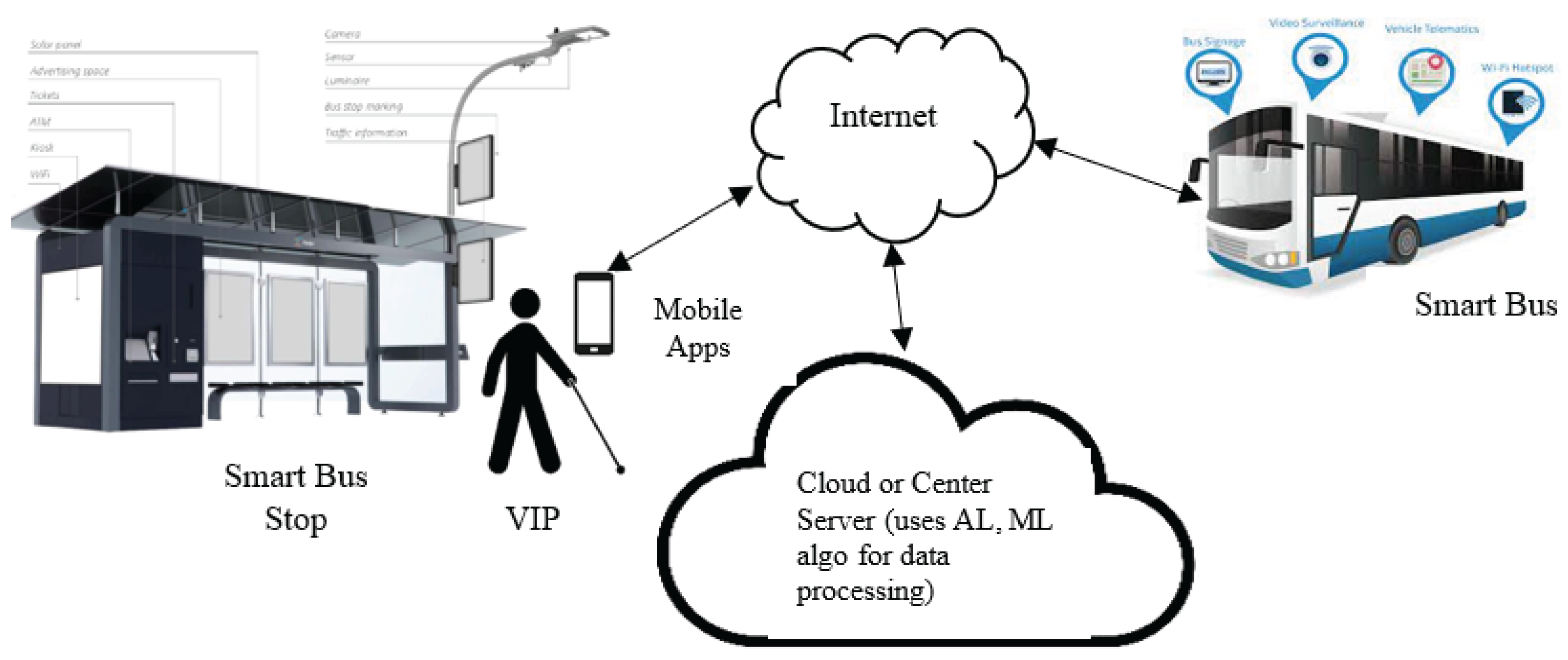

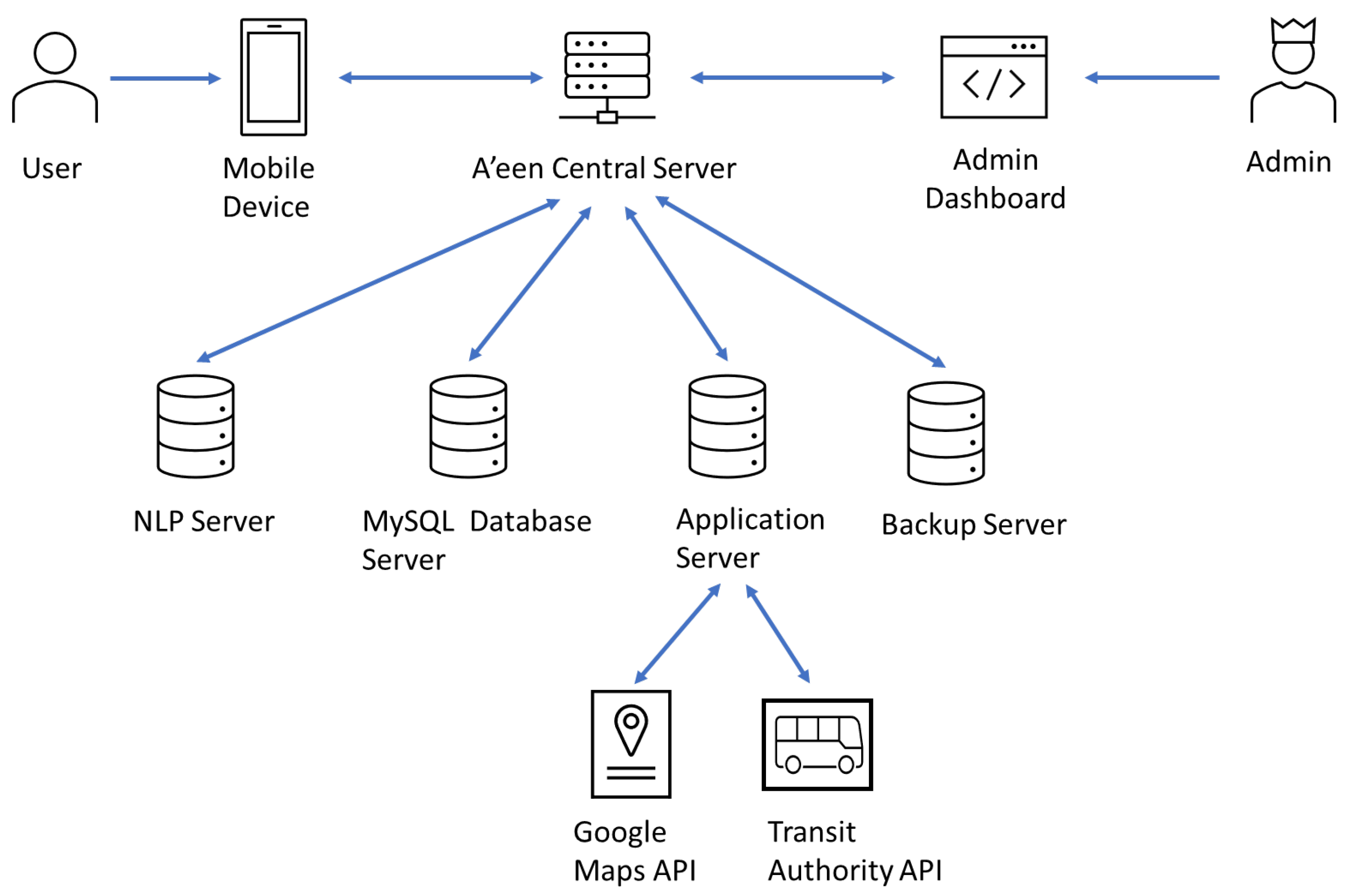

3. Proposed Framework

3.1. Cloud Server

3.2. IoT, IoD, and Other Technologies

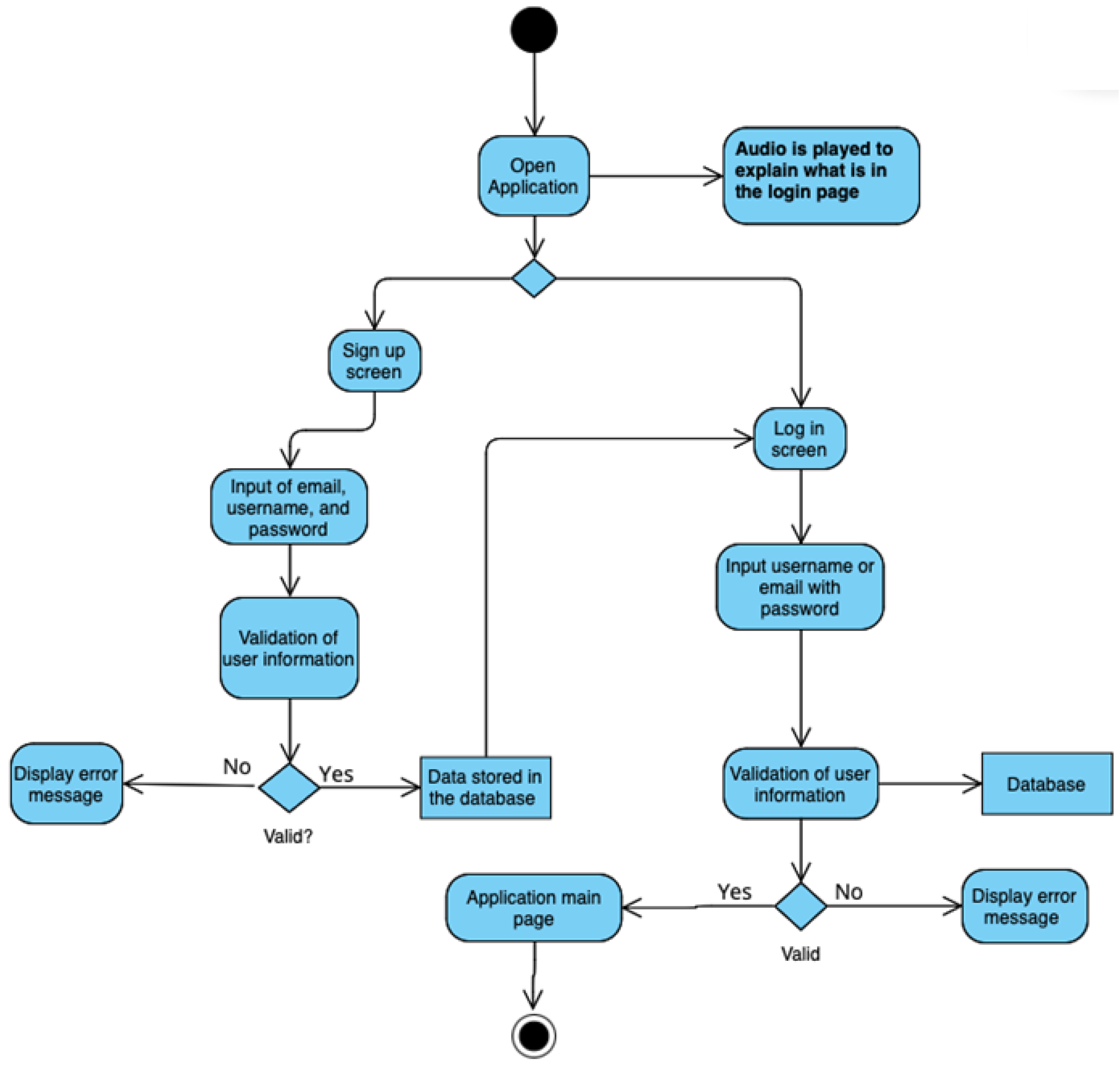

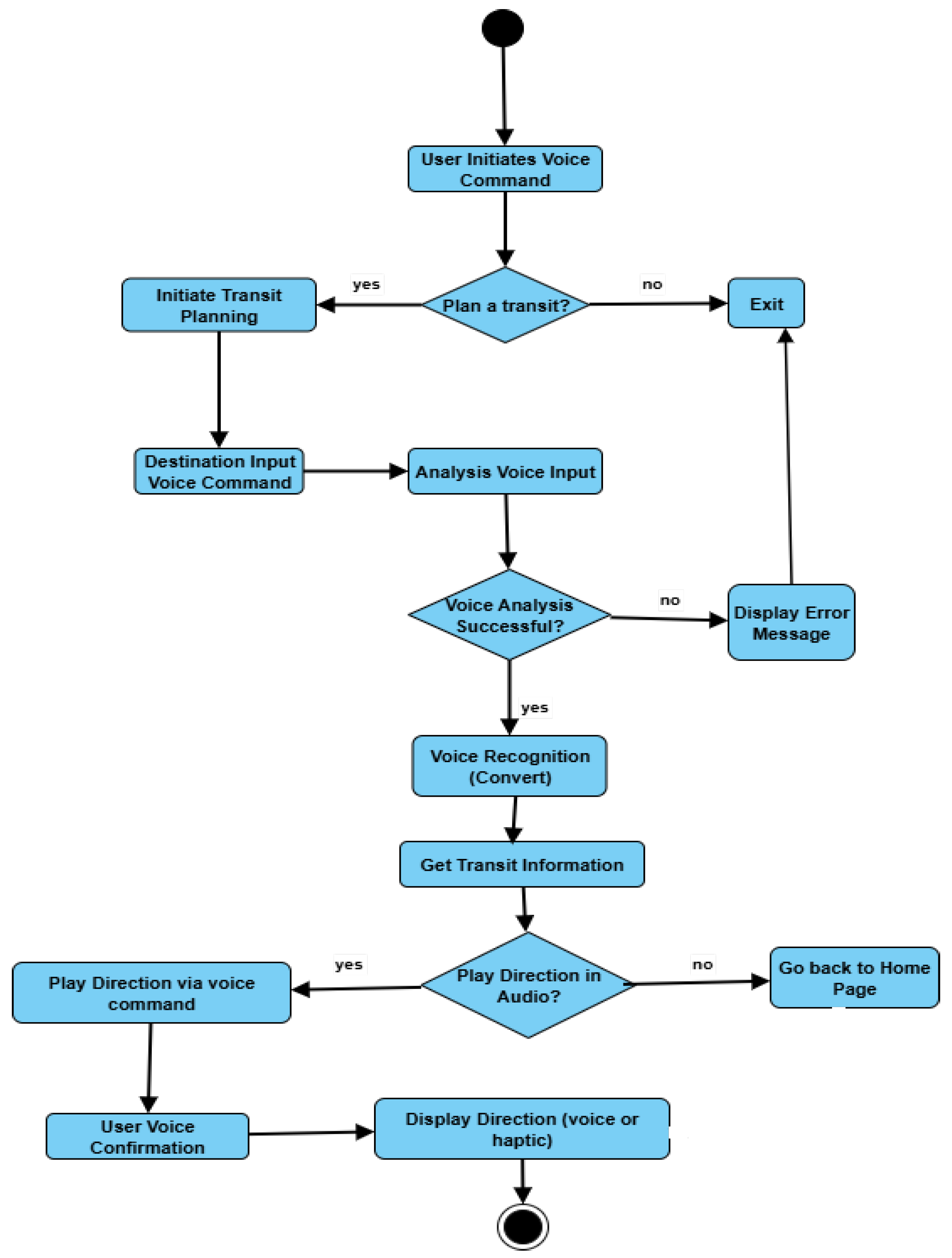

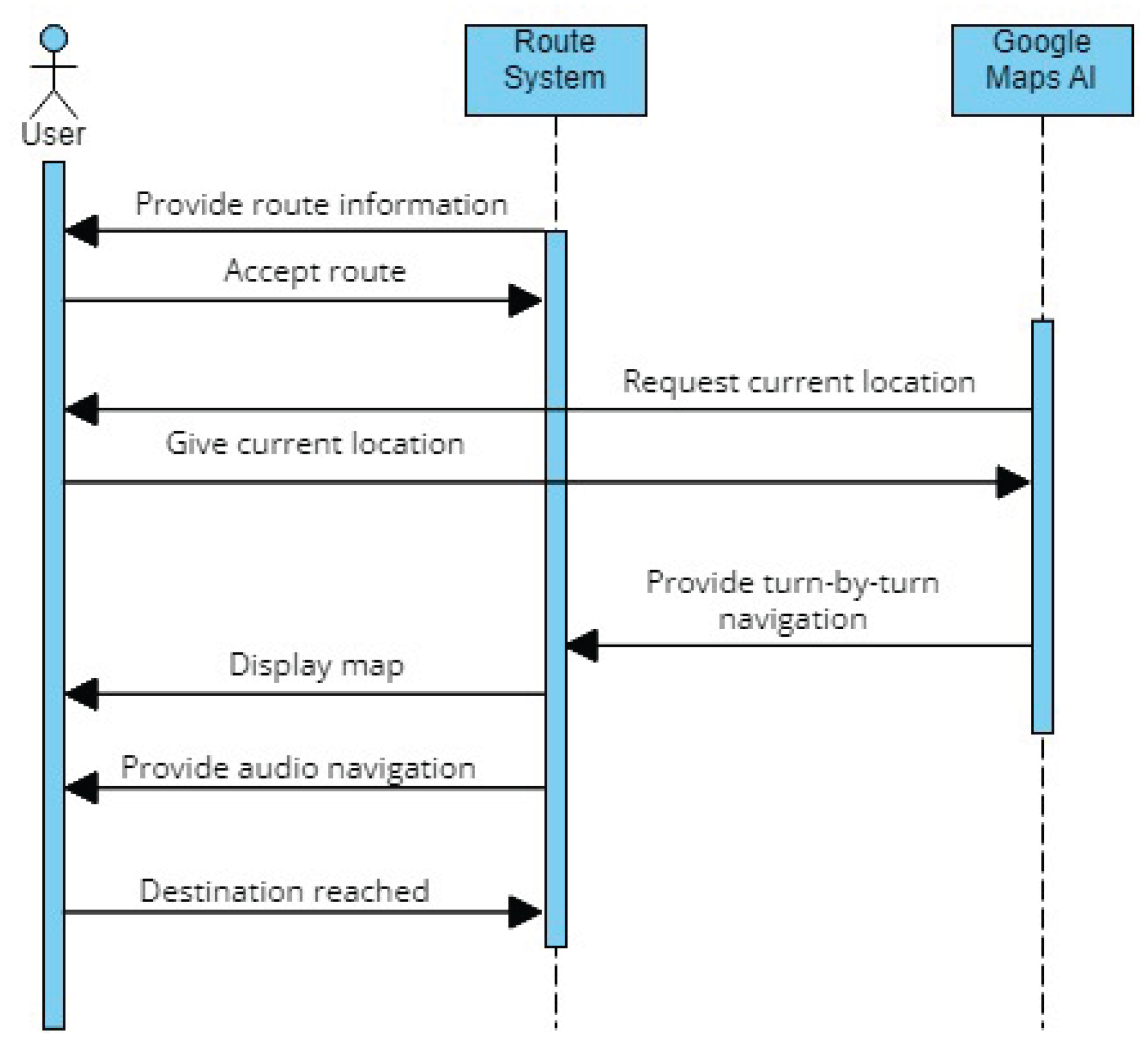

3.3. Mobile Application

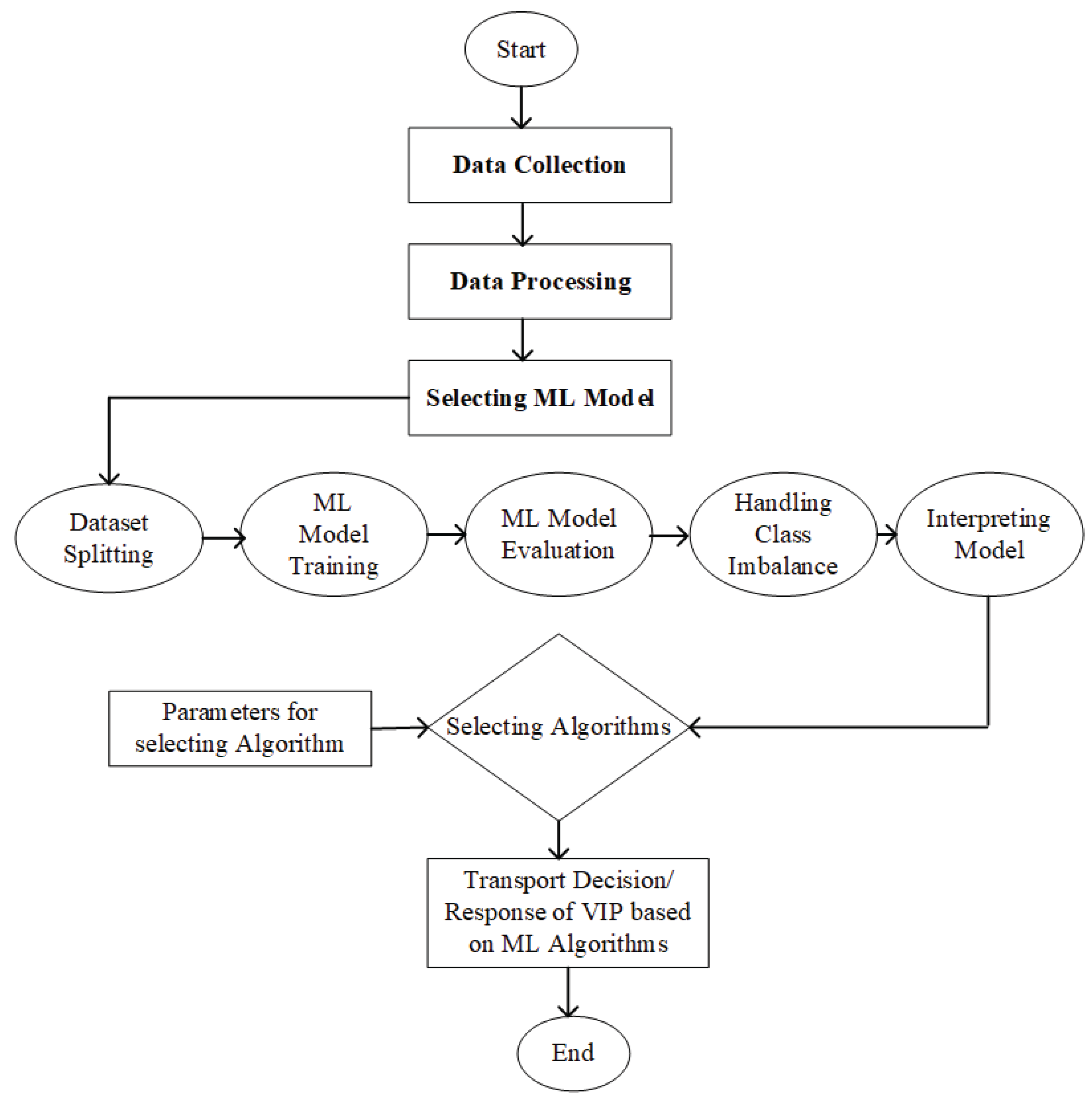

4. AI and ML Algorithms

4.1. AI and ML Component

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Algorithm Type | Applications in Transportation | Strengths | Weaknesses |
|---|---|---|---|
| Supervised Learning | Traffic flow prediction; demand forecasting; anomaly detection | Effective for prediction tasks; generalization to unseen data | Reliance on labeled training data; may struggle with complex patterns |
| Unsupervised Learning | Clustering of traffic patterns; anomaly detection | Discovers hidden patterns; no need for labeled data | Lack of labeled data; interpretation challenges |
| Reinforcement Learning | Traffic signal control; autonomous vehicle navigation | Learning from interactions; decision-making in dynamic environments | High computational requirements; sensitivity to hyperparameters |
| Deep Learning | Image recognition for traffic sign detection; natural language processing for route optimization | Hierarchical feature learning; state-of-the-art performance in some tasks | Requires large, labeled datasets; computationally intensive |
| Decision Trees | Route optimization | Intuitive and easy to interpret | Prone to overfitting; sensitivity to noise |
| Ensemble Methods | Predictive maintenance for vehicles; traffic prediction | Improved predictive performance | Complexity and increased computation |
| Nearest Neighbors | Traffic flow prediction; anomaly detection | Simple and intuitive; no training phase | Sensitive to irrelevant features; computationally expensive |
| Natural Language Processing | Intelligent transportation systems; voice-activated control systems | Semantic understanding | Challenges in understanding context |
| Optimization Algorithms | Route optimization | Efficient solution search | May not scale well for large datasets |
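To make one row of the table above concrete, the following is a minimal sketch of nearest-neighbors regression applied to traffic flow prediction. It is an illustration only, not the method used in this work: the function name `knn_predict`, the two features (hour of day and a weekend flag), and all observation values are hypothetical and chosen for readability.

```python
import math


def knn_predict(history, query, k=3):
    """Average the targets of the k stored observations closest to `query`.

    `history` is a list of (features, target) pairs; `query` is a feature
    tuple of the same length as each stored feature tuple.
    """
    if not 1 <= k <= len(history):
        raise ValueError("k must be between 1 and len(history)")
    # There is no training phase: every prediction scans all stored
    # observations, which is why the method gets expensive on large datasets.
    by_distance = sorted(history, key=lambda pair: math.dist(pair[0], query))
    nearest = by_distance[:k]
    return sum(target for _, target in nearest) / k


# Hypothetical data: (hour of day, is_weekend) -> vehicles per minute.
observations = [
    ((7.0, 0.0), 42.0),
    ((8.0, 0.0), 55.0),
    ((9.0, 0.0), 47.0),
    ((13.0, 0.0), 30.0),
    ((8.0, 1.0), 12.0),
    ((14.0, 1.0), 20.0),
]

# Estimate the flow at 08:30 on a weekday from the two closest observations.
estimate = knn_predict(observations, (8.5, 0.0), k=2)
print(estimate)
```

The sketch also shows the weaknesses listed in the table. Each query sorts the whole history, and the unscaled Euclidean distance lets any feature with a large numeric range dominate the result, so an irrelevant or poorly scaled feature can distort the prediction.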

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).