1. Introduction

In recent decades, society has witnessed the rapid advancement of information and communication technologies, which has significantly transformed safety [1,2], health [3,4], and environmental monitoring [5]. Innovations in the Internet of Things (IoT), artificial intelligence (AI)/machine learning (ML), and data analytics have enabled more sophisticated and real-time monitoring capabilities [6,7]. Wearable devices, environmental sensors, and intelligent infrastructure are now commonplace, collecting vast amounts of data that can be analyzed to improve public safety and individual well-being [8].

Concurrently, the emergence of the Metaverse represents a paradigm shift in how humans interact with digital and physical environments [9]. Extending traditional IoT to the Internet of Physical-Virtual Things (IoPVT), the Metaverse creates a seamless integration between physical objects and their virtual counterparts. The integration allows real-time synchronization and interaction within the Metaverse, a collective virtual shared space that merges augmented reality (AR), virtual reality (VR), and the Internet [10,11]. Using the capabilities of IoPVT, it becomes possible to create dynamic digital twins (DT) of physical environments [12]. These digital representations can be used for advanced analytics, predictive modeling, and immersive simulations, opening new horizons in proactive safety and health monitoring [13].

Despite technological advancements, monitoring the safety, health, and environmental conditions in outdoor environments remains challenging [14,15]. Traditional systems often focus on indoor settings or controlled environments, leaving a gap in monitoring capabilities in outdoor, dynamic, and complex settings. On the one hand, outdoor environments are subject to changing weather conditions, natural disasters, and varying terrain, all of which affect the reliability of sensors and monitoring equipment. On the other hand, rural and coastal areas may have limited infrastructure for data transmission and sensor deployment. In addition, the unpredictability of human behavior in public spaces adds complexity to monitoring efforts. Furthermore, collecting and integrating data from various sources, such as environmental sensors, biometric devices, and infrastructure monitoring systems, requires sophisticated data fusion techniques. These challenges are particularly pronounced in urban, rural, and coastal settings, each presenting unique obstacles and requirements for effective safety monitoring.

Comprehensive solutions are needed to address the complexities of outdoor safety monitoring in different environments. New applications and tools enable interdisciplinary approaches while addressing ethical and social implications. Integrating humans, technology, and the environment is crucial to measuring and improving safety, biohealth, and climate resilience. By focusing on these three dimensions, it is possible to develop systems that are not only technologically advanced but also socially responsible and environmentally sustainable. The successful development of a comprehensive solution will bring many benefits to society. Real-time monitoring and predictive analytics will reduce accidents and improve emergency response, and the ability to monitor biohealth indicators will help prevent health crises and promote healthy behaviors.

Human Activity Recognition (HAR) focuses on identifying and analyzing human actions through sensor data [16]. Integrating HAR with IoPVT in the Metaverse allows systems to collect data in real-time, create virtual replicas of physical environments, and allow timely interventions and informed decision-making to prevent accidents and emergencies [17]. This integration promises to revolutionize safety monitoring by providing a comprehensive, interactive, and predictive approach that adapts to the specific needs of different environments.

This paper aims to explore and articulate a future in which outdoor safety monitoring is significantly enhanced through the integration of HAR with IoPVT in the Metaverse. The proposed system addresses various challenges and illustrates the versatility of the physical-virtual intertwined approach. We focus on the interaction between humans, technology, and the environment to create a holistic monitoring system that leverages edge-based sensor data fusion, fog computing, and cloud analytics to enable real-time data processing and decision-making [18]. By presenting a cohesive vision, the paper aims to contribute to the advancement of outdoor safety monitoring technologies and establish a roadmap for future research and development efforts.

Specifically, this paper will make the following contributions:

Present a Conceptual HARISM Framework: This vision paper outlines a framework for HAR with IoPVT for Safety Monitoring (HARISM), which focuses on outdoor safety applications, integrating HAR with IoPVT and the Metaverse to proactively safeguard public spaces across different environments.

Explore Technological Innovations: A thorough discussion is presented on advancements in sensor technologies, AI-driven HAR techniques, and computing architectures like edge, fog, and cloud computing.

Examine Societal Benefits: The paper highlights the potential for proactive health monitoring, enhanced emergency response, and contributions to smart city and community initiatives.

Address Challenges and Research Directions: This paper identifies technical, ethical, and policy-related challenges, emphasizing the need for interdisciplinary collaboration.

The remainder of this paper is structured as follows. Section 2 gives an overview of the conceptual framework and the enabling technologies for outdoor monitoring systems enabled with IoPVT. Section 3 thoroughly discusses HAR, the core technology of the envisioned paradigm. Section 4 emphasizes the social benefits and impacts. Section 5 illustrates the applications in urban, rural, and coastal cities as case studies. Section 6 indicates the challenges and opportunities and highlights critical research directions in the near future. Finally, Section 7 concludes the paper with some final thoughts.

2. The Vision of IoPVT-Enabled Outdoor Health Monitoring

In this paper, we envision a framework for HAR within IoPVT for Safety Monitoring (HARISM), an integration of immersive virtual environments, sensor technology, and human-centered design to realize a vision of IoPVT-enabled outdoor safety monitoring. Our vision is that by integrating the HAR with IoPVT systems within the Metaverse, data-driven insights can actively drive immediate or preventive actions in the larger community to strengthen public health, safety, and climate resilience. With the envisioned physical and digital ecosystem, where sensors in physical environments interact with their virtual twins, systems will enable rich and continuous monitoring of urban, rural, and coastal areas in context. Instead of reacting to accidents or dangerous situations, the system would preemptively identify and minimize hazards before they become real tragedies, leading to safer neighborhoods, more agile responses by first responders, and data-driven development in urban space. The HARISM vision goes beyond traditional monitoring approaches, seeking to establish an integrated ecosystem of technologies and actors that collaboratively contribute to creating more flexible, equitable, and sustainable environments.

2.1. Conceptual Framework

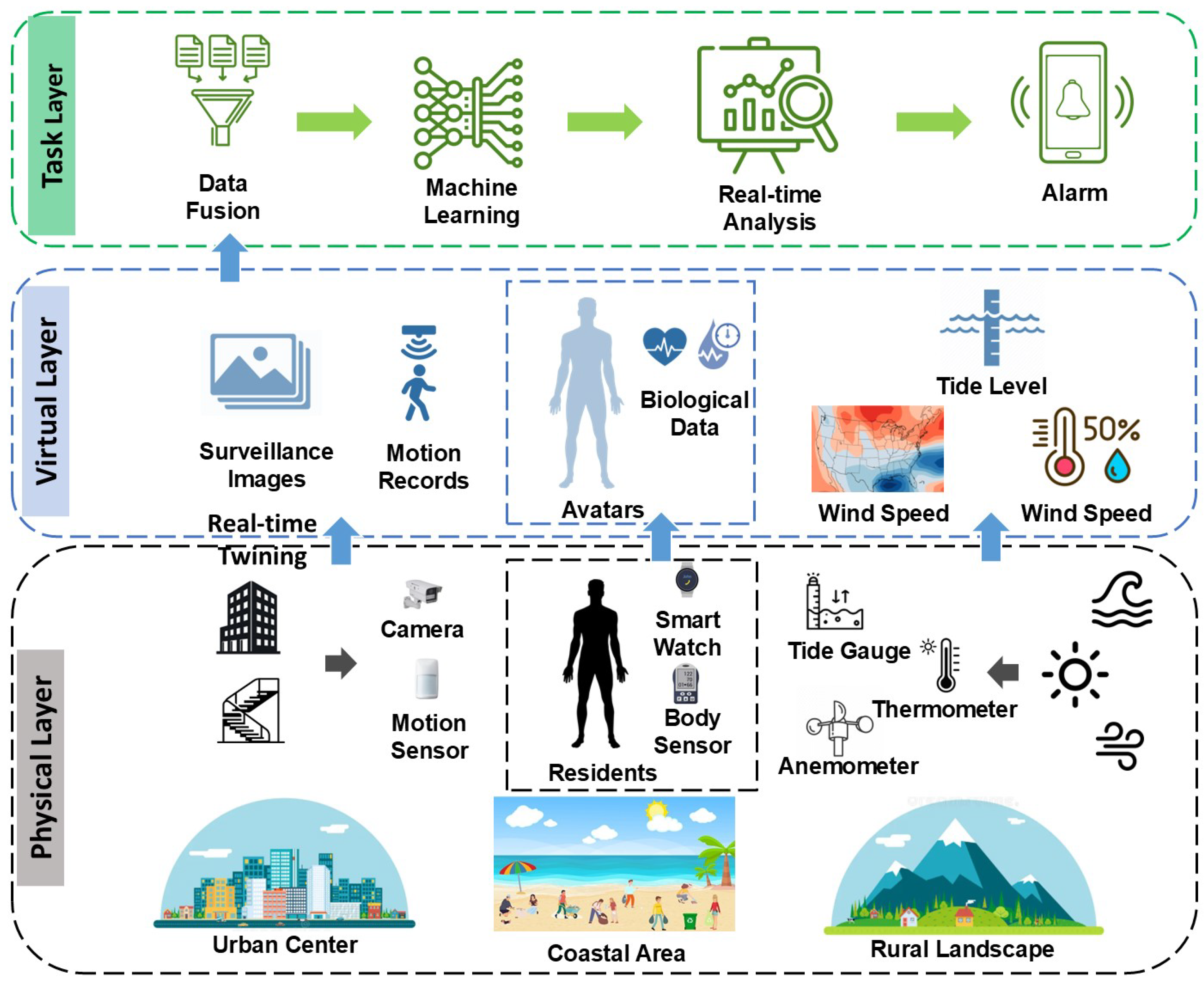

Figure 1 conceptually presents the HARISM framework for outdoor safety monitoring enabled by IoPVT. The HARISM framework centers on creating a dynamic interplay between the physical environment, its virtual representations, and advanced analytics tailored to human activities and environmental conditions. At its core, the HARISM framework connects three key dimensions, humans, technology, and environment, through continuous data exchange and interactive feedback loops.

The Physical Layer is the foundation of the entire framework. It is a distributed network of heterogeneous sensors and devices embedded across various outdoor settings, including urban centers, rural landscapes, and coastal areas. These sensors capture a wide spectrum of data: from human motion and physiological signals (e.g., wearable bio-sensors, camera-based activity recognition) to environmental conditions (e.g., air quality, temperature, humidity), infrastructure integrity (e.g., structural sensors on staircases or buildings), and climate indicators (e.g., tide levels, wind speeds, precipitation). Edge computing nodes process this incoming data locally, enabling immediate responses and reducing latency.

The Virtual Layer is where the Metaverse integration is built. The virtual counterparts of these physical objects, Digital Twins (DT), reside in the Metaverse. In this immersive and interactive environment, sensor data is continuously synchronized with its virtual representation. The Metaverse visualizes real-time conditions and simulates potential scenarios, allowing stakeholders to experiment with interventions, preventive measures, or changes in infrastructure design [11]. As the DT evolves, it incorporates predictive models that project future risks, population flows, and environmental changes, offering a forward-looking perspective on safety and health [19].

The HARISM framework concentrates on a robust data fusion and analytics engine that synthesizes data streams from diverse sources. Advanced ML and AI-driven HAR algorithms interpret human activities, detect anomalies (such as sudden falls or suspicious behaviors), and classify complex patterns in real-time [20]. Additional predictive analytics models integrate environmental and infrastructure data, identify emerging hazards (e.g., ice formation on stairs, degraded air quality near industrial zones), and recommend proactive interventions. With the capacity to learn from historical trends and adapt to changing conditions, the analytics engine ensures the system becomes increasingly accurate and context-aware over time [21].

A critical aspect of the conceptual HARISM framework is the integration of feedback loops. The alerts, insights, and recommendations generated by the analytics engine are sent to multiple stakeholders: emergency responders, city planners, public health authorities, environmental agencies, and community members [22,23]. This bidirectional communication enables swift action, such as dispatching emergency services, issuing public safety advisories, updating evacuation routes, or adjusting resource allocation [24]. Concurrently, stakeholders can contribute their expertise and context-specific knowledge to the system, refining predictive models, optimizing sensor placement, and informing policy decisions.

To fully realize the HARISM vision, scalability and interoperability are among the essential concerns [13,25]. Employing standardized communication protocols, open data formats, and modular architectures ensures that new sensor types, analytics tools, and visualization techniques can be integrated seamlessly as they emerge. Equally important is the ethical dimension, where privacy, data governance, and equitable access must be considered [26,27]. Mechanisms for anonymizing sensitive data, secure encryption, and transparent user consent processes help maintain public trust. At the same time, equitable infrastructural investments ensure that well-resourced urban centers and underrepresented rural or coastal communities benefit equally.

In summary, the conceptual HARISM framework envisions an ecosystem where physical sensors, virtual representations, and advanced analytics converge to produce actionable intelligence. HARISM lays the foundation for a transformative approach to outdoor safety monitoring, enabling continuous adaptation, risk mitigation, and collaborative decision-making that ultimately improves the well-being and resilience of human communities.

2.2. Three-Dimensional Integration: Humans, Technology, and Environment

A fundamental principle underlying this vision for IoPVT-enabled outdoor safety monitoring is the understanding that humans, technology, and the environment constitute an interdependent triad. To effectively monitor health and safety outside the four walls of buildings, we need to consider how these three pillars are connected, how they each shape the other, and ultimately, how they form the environment in which residents thrive or not.

Human individuals and communities, whose well-being and safety the system aims to protect and enhance, are the core of this three-dimensional integration. HAR serves as a critical element, focusing on identifying and interpreting movement patterns, behavior, and physiological signals [28]. By continuously monitoring individuals’ activities, whether walking up an icy staircase in Montreal, commuting to work along rural roads, or navigating a coastal city’s rising sea levels, the system gains insights into people’s needs, vulnerabilities, and responses to their surroundings. In the long run, human-focused data leads to faster, context-dependent action, greater personalization, and fairer safety policies that honor individual privacy and cultural environments.

The technological layer refers to the tools, platforms, and analytic capabilities that enable proactive outdoor safety monitoring. It includes not only the physical sensors and devices deployed on the ground, such as environmental monitors, wearable health sensors, and structural integrity gauges, but also the underlying computing backbone [29,30]. Edge nodes, fog computing platforms, and cloud-based analytics engines work together to aggregate data, process it efficiently, and transform raw inputs into actionable intelligence. AI-driven algorithms mine this integrated data set for patterns and anomalies, and the results are rendered in the Metaverse environment to visualize and simulate potential interventions [31]. This technology enablement layer should be designed to evolve with advances in hardware, newer AI techniques, and better communication networks, making it more scalable, reliable, and resilient.

The environment, which includes both built and natural surroundings, is complex due to the many factors that influence human health and safety [32,33]. Urban landscapes might include complex infrastructure and seasonal risks, rural communities can grapple with localized industrial or agricultural issues, and coastal areas have to deal with the impacts of climate change, such as flooding and heavy storms. The system embeds sensors in different terrains to track environmental conditions such as air quality, temperature, wind, precipitation, and structural integrity, and maps them in the Metaverse by modeling dynamic factors, providing a holistic awareness of environmental stressors [34]. This perspective guides risk evaluations and strengthens the formulation of context-sensitive risk reduction measures, ensuring that interventions are appropriately tailored to regional ecological and infrastructural contexts.

Adaptability and improvement rely on continuous feedback loops across these three dimensions of integrated solutions [35]. Understanding human behavior helps decide where and how sensors might be placed or which predictive models to fine-tune. Environmental changes that stress technological infrastructure require adjustments to sensor placement, data fusion techniques, and analytical approaches. Technology, in turn, improves the quality, timeliness, and usefulness of data, allowing systems to meet human needs more effectively and adapt to changing environmental conditions. Adaptability through cyclical reinforcement means that the three elements remain in equilibrium, each one informing and refining the others.

Framing outdoor safety monitoring as a synergy of humans, technology, and the environment lays the groundwork for a responsive, context-aware ecosystem. Rather than treating data in isolation, the system acknowledges the multifaceted interplay among these dimensions, leading to more robust, inclusive, and future-proof solutions to improve public safety and health in the outdoor world.

2.3. Technological Innovations Enabling the Vision

The realization of IoPVT-based outdoor safety monitoring is driven by multiple technological advances and their convergence, which together improve data collection, processing, modeling, visualization, and decision-making. These innovations span a broad continuum of technologies, from state-of-the-art sensors and next-generation networking infrastructures to sophisticated analytics, immersive virtual platforms, and intelligent automation. By strategically combining these elements, society moves closer to an environment where proactive, data-driven approaches to health and safety become the norm rather than the exception.

Advanced Sensor Technologies: The first pillar of the technological foundation lies in the continuous evolution of sensor hardware. Miniaturized, energy-efficient, and increasingly affordable sensors are now able to capture a wide range of signals, from human biometrics and structural integrity indicators to environmental parameters like temperature, humidity, particulate matter, and noise levels [36,37]. Thanks to innovations in materials science and nanotechnology, there are now sensors that can withstand the rigors of outdoor environments (freezing winters, salt-laden air on the coast, or dusty countryside roads), allowing for reliable and longer-lasting deployments [38,39]. Improved sensor precision and new sensing modalities ultimately deepen the system's overall situational awareness, enabling the detection of subtle signs that may precede safety hazards.

Ubiquitous Connectivity and Networking: The second critical layer is a robust communication network that efficiently transports the collected sensing data. The proliferation of 5G/6G networks, low-power wide-area networks (LPWANs), and new IoT protocols provides scalable and low-latency connectivity even in challenging terrain [40,41]. Such ubiquitous connectivity ensures data flows quickly from edge devices to processing nodes, allowing real-time responses. In rural and coastal contexts, satellite communications, mesh networks, and specialized relay nodes can extend coverage and maintain constant monitoring despite geographical and infrastructural challenges.

Edge, Fog, and Cloud Computing Architectures: Orchestrating a balance of edge, fog, and cloud computing resources is at the heart of effective data processing [42,43]. Some sensor data filtering and aggregation are done locally on the edge devices, allowing immediate response to urgent local events [44]. Fog nodes near the network edge, which serve as intermediaries between sensors and the cloud, can perform complex analytics, cache essential data, and lower backhaul costs [45,46]. Cloud platforms offer immense computational power for historical trend analysis, long-term storage, and global optimization. With a multi-tiered architecture, the data flow can be optimized, allowing for faster decisions and providing a scalable and resilient system that accommodates changing requirements.
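The division of labor across the three tiers can be illustrated with a small sketch. Everything here is a hypothetical illustration rather than part of the HARISM design: the `Reading` record, the `route` rule, the set of sensor kinds handled at the fog tier, and the window size are all assumed names and values chosen only to show urgent events staying local while bulk data is aggregated before moving upstream.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """Hypothetical sensor reading; field names are illustrative assumptions."""
    sensor_id: str
    kind: str            # e.g. "accel", "air_quality", "structural"
    value: float
    urgent: bool = False  # set by an on-device rule, e.g. a fall detector


def route(reading, fog_kinds=frozenset({"air_quality", "structural"})):
    """Pick the tier that should handle a reading first.

    Urgent events stay at the edge for an immediate local response,
    selected kinds go to a nearby fog node for heavier analytics,
    and everything else is forwarded to the cloud for long-term analysis.
    """
    if reading.urgent:
        return "edge"
    if reading.kind in fog_kinds:
        return "fog"
    return "cloud"


def edge_aggregate(values, window=5):
    """Average consecutive, non-overlapping windows to cut uplink traffic."""
    return [
        sum(values[i:i + window]) / len(values[i:i + window])
        for i in range(0, len(values), window)
    ]


if __name__ == "__main__":
    print(route(Reading("stair-07", "accel", 31.4, urgent=True)))
    print(route(Reading("aq-12", "air_quality", 42.0)))
    print(edge_aggregate([1, 2, 3, 4, 5, 6], window=3))
```

In a real deployment the routing rule would depend on latency budgets, bandwidth, and node capacity rather than a fixed set of sensor kinds.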

AI-Driven Analytics and Predictive Modeling: AI lies at the core of the decision-making engine. ML algorithms analyze multimodal data, recognize intricate activity patterns through human activity recognition, and discover anomalies that signal safety threats, whether slippery surfaces on city staircases or degrading air quality in rural industrial areas [47]. These algorithms learn from historical and user feedback data, so their predictions become increasingly accurate and enable proactive interventions. Predictive modeling tools like digital twins and scenario simulations in the Metaverse enable stakeholders to visualize potential future states, test response strategies, and fine-tune policies well before any emergency arises [48,49].

Metaverse Integration and Immersive Visualization: The fusion of physical and virtual worlds through the Metaverse fundamentally changes how data is understood and acted upon [50,51]. Rather than combing through static graphs or disparate data streams, stakeholders build interactive, three-dimensional environments that mirror real-time conditions. Weather patterns, human movement, infrastructure stressors, and environmental quality come together in a shared virtual space. Decision-makers can “walk” through digital twins of neighborhoods, identify high-risk zones, and simulate interventions, such as rerouting pedestrian traffic, adjusting energy usage, or optimizing evacuation plans, gaining insights that would be hard to discern from traditional analytics alone [52].

Automation, Control, and Feedback Mechanisms: As the system matures, some time-sensitive tasks will be carried out by automated mechanisms. Intelligent signage could change to route pedestrians around threatened areas, autonomous drones could inspect infrastructure before it fails, and remotely operated environmental controls could help mitigate hazards before they start. Significantly, an increasing degree of automation does not marginalize human judgment. On the contrary, it augments and enhances human decision-making capabilities [53,54]. Continuous feedback loops supported by bidirectional communication channels provide ongoing responsiveness to community input, expert advice, and governance principles, and ensure that accountability and transparency are upheld [55].

In essence, the technology that makes this vision possible is a layered, synergistic ecosystem. The sensor and network layer, the compute and AI-driven analytics layer, and the immersive interface layer provide distinct capabilities. Together, these tools offer an opportunity to move beyond reactive, fragmented approaches toward proactive, integrated strategies that protect human health, build ecological resiliency, and reimagine the very nature of how we live, work, and move in the outdoors.

3. Human Activity Recognition Techniques

Human activity recognition (HAR) techniques have evolved significantly over the past decades, starting with manual observation and advancing to sophisticated vision-based and sensor-based systems. Early efforts relied on vision-based systems, and later on wearable sensors to capture motion data, which were analyzed using basic machine learning algorithms [56]. With advances in sensing technologies, computational power, and the emergence of deep learning, HAR now incorporates data from multimodal sources, enabling highly accurate and real-time activity recognition [57]. These techniques have had transformative impacts in various domains: in defense, HAR is vital for surveillance, threat detection, and situational awareness [58]; in industry, it enhances workplace safety, productivity monitoring, and automation [59]; and in healthcare, it improves patient monitoring and facilitates smart home technologies [60]. The continuous advancement of HAR is driven by the growing need for systems that can operate robustly in complex, dynamic, and real-world environments, addressing challenges like missing data, unreliable inputs, and diverse human behaviors. This drive is further fueled by the potential for HAR to revolutionize emerging areas such as human-robot interaction, augmented reality, and personal well-being.

3.1. Sensing Techniques

Accurate and reliable HAR is based on high-quality data, which forms the foundation for feature extraction and the development of machine learning models. This Section provides an overview of the sensing techniques commonly used for HAR.

3.1.1. Data Sources and Sensing Modalities

HAR data can be collected from various sources, including wearable sensors, environmental monitoring stations, mobile devices, smart appliances, and IoT devices. Common wearable sensors include accelerometers, gyroscopes, magnetometers, and physiological sensors. These sensors are compact, affordable, and suitable for real-time HAR, though they may cause discomfort if worn for extended periods. Environmental sensors, or ambient sensors, are placed in the surroundings to capture activity indirectly. These sensors include cameras, infrared sensors, pressure sensors, passive radio frequency sensors, and microphones. While environmental sensors are non-intrusive, they may raise privacy concerns, especially with visual and audio data. Smartphones and other mobile devices are rich sources of data for HAR. For example, inertial measurement unit (IMU) sensors, such as accelerometers, gyroscopes, and magnetometers, are widely integrated into smartphones. GPS data can contextualize activities like walking, running, or commuting. Microphone data can capture audio cues such as footsteps, voices, or environmental sounds. Touchscreen data can reveal interaction patterns, such as swiping or typing behavior, from which activities can be inferred. Barometer data measures changes in altitude for activities like climbing a mountain.

Devices designed for gaming or virtual reality provide detailed motion data. Motion controllers can track hand gestures and physical interactions, while head-mounted displays (HMDs) provide head movement and gaze-tracking data. Treadmills and simulators can capture data on walking, running, or driving movements. User-generated data from social media or apps can complement HAR. Check-ins and location tags indicate activities like visiting a gym or restaurant. Activity logs from apps such as fitness trackers record specific actions like jogging or cycling. Data from connected devices in a smart ecosystem also contribute to HAR. Fitness equipment tracks activities on treadmills, cycles, or rowing machines. Smart TVs detect viewing patterns and associated user behaviors. Kitchen appliances’ usage patterns can indicate activities like cooking or eating. These diverse data sources can be used individually or in combination, depending on the requirements of the HAR application. Multimodal approaches are particularly powerful in improving accuracy and robustness.

3.1.2. Data Preprocessing and Feature Extraction

The raw data collected from various sensor modalities are often noisy and high dimensional, necessitating robust preprocessing and feature extraction techniques before being applied for training machine learning algorithms and inference. This section discusses the critical steps and techniques in data preprocessing and feature extraction for HAR, considering the diverse sensor modalities involved.

Data preprocessing is the first and essential step in HAR pipelines, aimed at preparing raw data for feature extraction and classification. Because the data are heterogeneous, the major challenge is handling the varying sampling rates, formats, and noise characteristics of different sensors. The main preprocessing tasks include data cleaning, synchronization, segmentation, and transformation. Data cleaning addresses noise, missing values, and outliers that often exist in sensor data. Techniques vary depending on the sensor modality. For wearable sensors, signal noise caused by movement artifacts can be reduced by filters such as Butterworth or Savitzky-Golay. Missing data can be imputed using interpolation or predictive models. For environmental sensors, redundant or conflicting signals caused by overlapping sensor ranges can be removed using statistical methods or clustering. Applying Kalman filters to GPS signals collected by mobile devices is common practice to account for location inaccuracies. Low-pass filters are often applied to address jitter in motion capture data from gaming devices. For user-generated data such as social media posts, incomplete posts or erroneous timestamps can be handled by natural language processing (NLP) techniques.
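As a minimal sketch of the data cleaning step, the following standard-library Python fills missing samples by linear interpolation and then smooths the result. Note the substitution: a centered moving average stands in here as the simplest possible low-pass filter, not the Butterworth or Savitzky-Golay filters named above, and the function names are illustrative assumptions.

```python
def interpolate_missing(samples):
    """Fill None gaps by linear interpolation between the nearest known samples.

    Gaps at the start or end are filled with the nearest known value.
    """
    known = [i for i, v in enumerate(samples) if v is not None]
    if not known:
        raise ValueError("no known samples to interpolate from")
    out = list(samples)
    for i in range(len(out)):
        if out[i] is not None:
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:
            out[i] = samples[right]
        elif right is None:
            out[i] = samples[left]
        else:
            t = (i - left) / (right - left)
            out[i] = samples[left] + t * (samples[right] - samples[left])
    return out


def moving_average(samples, width=3):
    """Centered moving average; the window shrinks at the signal edges."""
    half = width // 2
    smoothed = []
    for i in range(len(samples)):
        lo, hi = max(0, i - half), min(len(samples), i + half + 1)
        smoothed.append(sum(samples[lo:hi]) / (hi - lo))
    return smoothed


if __name__ == "__main__":
    raw = [9.7, None, 9.9, 14.2, None, None, 9.8]  # accelerometer magnitudes with dropouts
    cleaned = moving_average(interpolate_missing(raw), width=3)
    print(cleaned)
```

A production pipeline would more likely use a dedicated signal processing library and choose the filter order and cutoff from the sensor's sampling rate.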

Data synchronization and segmentation can ensure that data from different sensors align temporally and divide continuous data streams into smaller, meaningful segments. For example, timestamps can be used for synchronization of wearable and environmental sensors. Cross-correlation can identify and correct time lags. For data segmentation, sliding window is a common approach suitable for continuous and repetitive activities like walking or running. Event-based segmentation, which detects specific events, such as phone calls in mobile data or hand movements in VR systems, is particularly helpful in scenarios where human activities are triggered by discrete events or involve task-specific actions. Finally, data transformation, such as normalization and standardization, prepares the data for feature extraction.
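The synchronization, segmentation, and transformation steps can be sketched in a few lines of standard-library Python. This is an illustrative sketch under simplifying assumptions: both streams share one sampling rate, the lag is a non-negative whole number of samples, and the function names are hypothetical.

```python
from statistics import mean, pstdev


def best_lag(reference, delayed, max_lag):
    """Estimate how many samples `delayed` trails `reference`.

    Tries each lag from 0 to max_lag and keeps the one with the largest
    raw cross-correlation (sum of element-wise products).
    """
    def score(lag):
        return sum(r * d for r, d in zip(reference, delayed[lag:]))
    return max(range(max_lag + 1), key=score)


def sliding_windows(samples, size, step):
    """Split a stream into fixed-size windows; step < size gives overlap."""
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, step)]


def z_score(window):
    """Standardize a window to zero mean and unit variance."""
    mu, sigma = mean(window), pstdev(window)
    return [(x - mu) / sigma if sigma else 0.0 for x in window]


if __name__ == "__main__":
    wearable = [0, 1, 0, 0, 0]
    ambient = [0, 0, 0, 1, 0]          # same event, seen two samples later
    lag = best_lag(wearable, ambient, max_lag=3)
    aligned = ambient[lag:]            # drop the leading samples to align streams
    print(lag, aligned)
    for window in sliding_windows([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], size=4, step=2):
        print(z_score(window))
```

A 50% overlap (step equal to half the window size) is a common starting point for repetitive activities, but the right window length depends on the activity and the sampling rate.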

Feature extraction transforms preprocessed data into representative attributes that capture the essence of human activities. Features can be broadly categorized into time-domain, frequency-domain, and domain-specific features. Time-domain features are computed directly from raw data and are straightforward to interpret. Common time-domain features include statistical measures, such as mean, median, variance, and standard deviation; signal magnitude area (SMA), which captures the overall activity level; zero-crossing rate, which is useful for cyclic activities; peak intensity, such as steps in walking data; and autocorrelation, which measures similarity between signal segments at different time lags to detect periodic patterns.
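A few of these time-domain features can be computed directly, as in the sketch below. The definitions used are common textbook forms and are stated as assumptions: SMA is taken as the per-sample mean of the summed absolute axis values, the zero-crossing rate counts sign changes between adjacent samples, and the autocorrelation is normalized by the zero-lag energy of the mean-centered signal.

```python
from statistics import mean


def signal_magnitude_area(x, y, z):
    """SMA for one tri-axial window: mean of |x| + |y| + |z| per sample."""
    return sum(abs(a) + abs(b) + abs(c) for a, b, c in zip(x, y, z)) / len(x)


def zero_crossing_rate(signal):
    """Fraction of adjacent sample pairs whose signs differ."""
    crossings = sum(1 for a, b in zip(signal, signal[1:]) if a * b < 0)
    return crossings / (len(signal) - 1)


def autocorrelation(signal, lag):
    """Autocorrelation at `lag`, normalized so that lag 0 gives 1.0."""
    mu = mean(signal)
    centered = [s - mu for s in signal]
    energy = sum(c * c for c in centered)
    if energy == 0:
        return 0.0
    return sum(a * b for a, b in zip(centered, centered[lag:])) / energy


if __name__ == "__main__":
    step_like = [1, -1, 1, -1]  # toy periodic signal with period 2
    print(zero_crossing_rate(step_like))
    print(autocorrelation(step_like, lag=2))
    print(signal_magnitude_area([1, -1], [2, -2], [3, -3]))
```

For a periodic signal, the autocorrelation peaks at lags that are multiples of the period, which is what makes it useful for detecting gait cycles.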

Frequency-domain features are often derived using fast Fourier transform (FFT) or wavelet transform. These features are crucial for recognizing periodic and oscillatory activities. Power spectral density (PSD), dominant frequency, and spectral entropy are often calculated for repetitive activities, while wavelet coefficients are useful for transient activities.
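The following sketch shows how the dominant frequency and spectral entropy of a window might be computed. To stay dependency-free it uses a naive O(n²) discrete Fourier transform rather than an FFT, which is adequate only for short windows; the normalization of the power values and the exclusion of the DC bin are assumptions made for this illustration.

```python
import cmath
import math


def power_spectrum(signal):
    """One-sided power per DFT bin (0 .. n//2), computed with a naive DFT."""
    n = len(signal)
    powers = []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(signal))
        powers.append(abs(coeff) ** 2 / n)
    return powers


def dominant_frequency(signal, sample_rate_hz):
    """Frequency (Hz) of the strongest non-DC bin."""
    powers = power_spectrum(signal)
    k = max(range(1, len(powers)), key=powers.__getitem__)
    return k * sample_rate_hz / len(signal)


def spectral_entropy(signal):
    """Shannon entropy (bits) of the normalized non-DC power spectrum."""
    powers = power_spectrum(signal)[1:]
    total = sum(powers)
    if total == 0:
        return 0.0
    probs = [p / total for p in powers]
    return -sum(q * math.log2(q) for q in probs if q > 0)


if __name__ == "__main__":
    rate = 16  # Hz, assumed sampling rate for this toy window
    walking = [math.sin(2 * math.pi * 2 * t / rate) for t in range(16)]  # 2 Hz cadence
    print(dominant_frequency(walking, rate))
    print(spectral_entropy(walking))
```

A low spectral entropy indicates that power is concentrated in a few bins, as expected for a steady, repetitive activity; irregular movement spreads power across bins and raises the entropy.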

Machine learning models benefit from feature-rich datasets, which motivates domain-specific feature extraction. Domain-specific features leverage knowledge of the application or sensor modality to extract meaningful attributes. For wearable sensors, gait parameters derived from inertial data, such as stride length, cadence, or joint angles, are often extracted. The occupancy of a room or the activity context can be inferred from environmental sensor features, such as temperature, light, and humidity patterns. Combining GPS data with accelerometer readings on mobile devices can help to identify walking, driving, or stationary states. For gaming and VR devices, pose and motion trajectories can be extracted from 3D position data to recognize gestures or interactions. Analyzing text features, such as word frequency and sentiment, or posting patterns on social media can correlate online behavior with physical activities.
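As a toy illustration of combining GPS and accelerometer data, the rule below separates driving, walking, and stationary states. The thresholds (`drive_speed` and `walk_var`) are hypothetical values picked for the example, not calibrated figures from the literature, and a practical system would learn such boundaries from labeled data.

```python
from statistics import pvariance


def motion_state(gps_speed_mps, accel_magnitudes, walk_var=0.5, drive_speed=4.0):
    """Classify one window as 'driving', 'walking', or 'stationary'.

    gps_speed_mps:     ground speed from GPS, in meters per second
    accel_magnitudes:  accelerometer magnitudes (m/s^2) within the window
    walk_var:          assumed variance threshold indicating step impacts
    drive_speed:       assumed speed threshold above which travel is vehicular
    """
    accel_var = pvariance(accel_magnitudes)
    if gps_speed_mps >= drive_speed:
        return "driving"
    if accel_var >= walk_var:
        return "walking"
    return "stationary"


if __name__ == "__main__":
    print(motion_state(15.0, [9.8, 9.9, 9.7]))
    print(motion_state(1.2, [8.0, 12.0, 8.5, 11.5]))
    print(motion_state(0.0, [9.8, 9.8, 9.8]))
```

Neither signal alone is sufficient here: GPS speed cannot distinguish standing from slow indoor walking, and accelerometer variance cannot distinguish a smooth car ride from sitting still.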

3.2. AI-Driven HAR Algorithms

Advancements in Artificial Intelligence (AI) have revolutionized HAR systems, enhancing their accuracy and adaptability. The ability to operate in real-time and adapt to diverse environments makes AI-driven HAR algorithms indispensable for a wide range of user-centric technologies. One of their primary strengths is the ability to learn complex patterns from high-dimensional data. Deep learning techniques, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), have significantly advanced HAR by automating the feature extraction process [61]. CNNs excel in capturing spatial features from data, such as video frames or sensor signals, while RNNs are well-suited for sequential data, such as time series signals from wearable devices. These models not only improve recognition accuracy but also adapt to a diverse set of activities without extensive manual intervention.

In addition to supervised learning, unsupervised and semi-supervised learning methods are gaining traction in HAR. These approaches are particularly valuable for scenarios where labeled data are limited or difficult to obtain. Techniques like clustering and generative models can uncover hidden patterns in unlabeled data, enabling robust activity classification with minimal human input. Furthermore, advancements in transfer learning allow HAR systems to leverage pre-trained models, reducing the computational cost and time required for deployment.
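To make the clustering idea concrete, here is a minimal k-means sketch that groups unlabeled feature vectors (for instance, per-window mean and variance) into activity clusters. It is a simplified illustration: centroids are initialized from the first k points for determinism, which is an assumption of this example and not how robust implementations choose starting points.

```python
def _nearest(point, centroids):
    """Index of the centroid closest to `point` (squared Euclidean distance)."""
    return min(
        range(len(centroids)),
        key=lambda c: sum((a - b) ** 2 for a, b in zip(point, centroids[c])),
    )


def kmeans(points, k, iterations=20):
    """Cluster feature vectors; returns (centroids, labels)."""
    centroids = [tuple(p) for p in points[:k]]  # deterministic init (assumption)
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[_nearest(p, centroids)].append(p)
        updated = []
        for c, members in enumerate(clusters):
            if members:
                # New centroid is the per-dimension mean of the cluster members.
                updated.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                updated.append(centroids[c])  # keep an empty cluster's centroid
        if updated == centroids:
            break  # assignments have stabilized
        centroids = updated
    labels = [_nearest(p, centroids) for p in points]
    return centroids, labels


if __name__ == "__main__":
    # Toy (mean, variance) features: two low-motion and two high-motion windows.
    features = [(0.0, 0.0), (10.0, 10.0), (0.0, 1.0), (10.0, 11.0)]
    centroids, labels = kmeans(features, k=2)
    print(centroids, labels)
```

The resulting clusters carry no activity names; a small amount of labeled data, or a human inspecting representative windows, is still needed to map clusters to activities.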

3.2.1. Traditional Machine Learning Models for HAR

Traditional machine learning models were among the first AI-driven approaches to be applied to HAR. These models typically require hand-crafted features extracted from raw sensor data. Sensors like accelerometers and gyroscopes are typically used to capture motion and orientation data, which is then preprocessed and transformed into features such as mean, variance, and frequency domain components. Decision trees, support vector machines, and random forests have been widely used for HAR.
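To make the hand-crafted feature step concrete, the sketch below segments a one-dimensional accelerometer signal into sliding windows and computes the mean, variance, and dominant frequency of each window. The helper names `sliding_windows` and `window_features` are hypothetical, and the naive discrete Fourier transform is used only to keep the example dependency-free; practical pipelines would typically use an FFT routine.

```python
import cmath
import math
from statistics import mean, pvariance


def sliding_windows(signal, size, step):
    """Yield fixed-size windows over the signal, advancing by `step` samples."""
    for start in range(0, len(signal) - size + 1, step):
        yield signal[start:start + size]


def window_features(window, sample_rate_hz):
    """Return mean, variance, and dominant frequency (Hz) of one window."""
    n = len(window)
    mu = mean(window)
    centered = [x - mu for x in window]  # remove the DC offset (e.g., gravity)

    # Naive DFT over the positive-frequency bins; keep the strongest one.
    best_bin, best_power = 0, 0.0
    for k in range(1, n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(centered))
        power = abs(coeff) ** 2
        if power > best_power:
            best_bin, best_power = k, power

    return {
        "mean": mu,
        "variance": pvariance(window),
        "dominant_freq_hz": best_bin * sample_rate_hz / n,
    }


# Synthetic 2 Hz oscillation sampled at 50 Hz, standing in for a walking signal.
signal = [1.0 + math.sin(2 * math.pi * 2 * i / 50) for i in range(50)]
print(window_features(signal, sample_rate_hz=50))
```

The resulting feature dictionaries are the kind of input that classifiers such as decision trees, SVMs, and random forests consume.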

Decision trees are interpretable models that partition feature space into regions based on a series of hierarchical splits. Studies such as [

62] demonstrated their effectiveness in recognizing basic activities such as sitting, standing, and walking. Support vector machines (SVMs) have been widely used for HAR due to their ability to handle high-dimensional data and nonlinear relationships. Kernel functions play a critical role in enhancing their performance on complex activity datasets. For example, Laxmi et al. proposed a fuzzy proximal kernel SVM as a robust classifier, which transforms samples into a higher dimensional space and assigns their membership degree to reduce the effect of noise and outliers [

63]. The study focuses on recognizing walking, sitting, standing, lying down, and transitioning between these states. Random Forest is a popular ensemble learning method used for HAR due to its robustness and ability to handle large datasets with high dimensionality. It works by constructing multiple decision trees during training and outputting the mode of the classes or mean prediction of the individual trees. In [

64], Wang et al. investigated the effectiveness of a random forest machine learning algorithm to identify complex human activities using data collected from wearable sensors, demonstrating its potential for applications such as health care monitoring and activity tracking.
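The voting step of a random forest classifier can be sketched in a few lines. In the example below, the three "trees" are hand-written stand-ins (hypothetical decision stumps over assumed feature names); in an actual random forest, each tree is learned from a bootstrap sample of the training data with random feature subsets.

```python
from collections import Counter


def forest_predict(trees, features):
    """Return the class predicted by the majority of trees (the mode)."""
    votes = [tree(features) for tree in trees]
    return Counter(votes).most_common(1)[0][0]


# Hypothetical stumps; feature names and split values are assumptions.
trees = [
    lambda f: "walking" if f["variance"] > 0.5 else "sitting",
    lambda f: "walking" if f["dominant_freq_hz"] > 1.0 else "sitting",
    lambda f: "sitting" if f["tilt_deg"] > 60 else "walking",
]

sample = {"variance": 0.8, "dominant_freq_hz": 1.8, "tilt_deg": 70}
print(forest_predict(trees, sample))
```

Because individual trees err in different ways, the majority vote tends to be more robust than any single tree.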

3.2.2. Deep Learning Approaches

While traditional models have proven effective for simple tasks, their reliance on feature engineering limits scalability and generalizability to diverse activities and sensor modalities. Deep learning techniques are known for their ability to extract high-level features directly from raw data. Neural networks, particularly CNNs and RNNs, excel in capturing spatial and temporal patterns in activity data and have become the backbone of modern HAR systems. In [

65], Islam et al. discussed the performances, strengths, and hyperparameters of CNN architectures for HAR along with data sources and challenges. The study in [

66] evaluates a pre-trained CNN feature extractor for HAR using an inertial measurement unit (IMU) and audio data. While CNNs are particularly effective in capturing spatial dependencies in inertial data, RNNs and long short-term memory networks (LSTMs) are widely used for modeling temporal sequences in human motion signals [

67]. Pienaar et al. [

68] demonstrated using an LSTM-RNN model for HAR using the WISDM dataset [

69]. The dataset includes activities such as jogging, walking, sitting, standing, ascending stairs, and descending stairs, captured using accelerometer data on smartphones. The advent of multimodal sensor fusion has further amplified the potential of these techniques by integrating data from diverse sources, such as accelerometers, gyroscopes, and cameras, to improve recognition accuracy.

Multimodal sensor fusion, enabled by deep learning, plays a pivotal role in addressing the limitations of single-modality systems, such as sensor noise and modality-specific failures. Techniques like attention mechanisms and graph neural networks (GNNs) are increasingly employed to model the relationships and complementarity between modalities. For example, attention-based architectures selectively emphasize informative signals across modalities, thus mitigating the impact of unreliable or missing data. Ma et al. proposed AttnSense [

70], a neural network model incorporating a multi-level attention mechanism. The study utilizes data from accelerometers and gyroscopes to identify activities, including running, walking, and sitting. The model’s combination of a CNN and a gated recurrent unit (GRU) network captures dependencies in the spatial and temporal domains, leading to improved performance. GNNs, in turn, provide a framework for representing multimodal data as a graph structure, capturing complex interdependencies between sensors. Yan et al. proposed a graph-inspired deep learning approach to HAR tasks [

71]. The GNN framework shows good meta-learning ability and transferability for activities like walking, running, and transitional movements.
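The core idea of attention-based fusion, weighting each modality by its estimated usefulness before combining, can be sketched as follows. This is a simplified illustration and not the AttnSense architecture: the relevance scores are supplied by hand here, whereas an attention network learns them from data, and the names `softmax` and `attention_fuse` are assumptions made for this example.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to one."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fuse(modality_features, relevance_scores):
    """Fuse per-modality feature vectors as an attention-weighted sum."""
    weights = softmax(relevance_scores)
    dim = len(modality_features[0])
    fused = [sum(w * feat[d] for w, feat in zip(weights, modality_features))
             for d in range(dim)]
    return fused, weights


# Two modalities (e.g., accelerometer and gyroscope embeddings); the low
# score for the second one mimics a noisy or partially missing signal.
accel = [0.9, 0.1, 0.4]
gyro = [0.2, 0.8, 0.5]
fused, weights = attention_fuse([accel, gyro], relevance_scores=[2.0, -1.0])
print(weights, fused)
```

A modality with a low score contributes little to the fused representation, which is how attention mitigates unreliable inputs.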

3.2.3. Emerging Techniques

Emerging techniques in human activity recognition (HAR) are increasingly leveraging the Internet of Physical-Virtual Things (IoPVT) to enhance the accuracy and efficiency of activity detection [

72,

73]. In IoPVT-based HAR systems, the data collected by physical sensors are transmitted to virtual platforms, where advanced algorithms and machine learning models process and analyze the information to recognize and classify different activities. In addition to the aforementioned deep learning models, IoPVT-based HAR systems rely on data synthesis and transfer learning. Data synthesis combines data from physical sensors with virtual data sources, such as simulated sensor outputs or data extracted from videos, to create comprehensive datasets for training HAR models. Transfer learning applies knowledge gained in virtual environments to real-world scenarios, enabling HAR systems to generalize better across different contexts and reducing the need for extensive real-world data collection.

Studies have demonstrated that fusion of wearable sensors with environmental sensors and virtual sensor data, e.g., from videos, simulations, or digital twins, enhances the robustness of HAR systems, particularly in real-world settings with diverse activities and environmental conditions [

74,

75,

76]. Cross-domain learning will allow models to generalize better across different environments and individuals. Digital twins of human activities can be created using IoPVT to simulate and predict behaviors in different environments, useful for applications in rehabilitation, ergonomics, and personalized training. We believe IoPVT-enabled HAR is a game-changer, pushing the boundaries of context-aware AI and ubiquitous computing. Using advances in AI, digital twins, and next-generation IoT networks, HAR systems will become more intelligent, efficient, and privacy-aware, making them indispensable in healthcare, smart environments, and industrial applications.

3.3. Predictive Analytics and Hazard Identification

Using historical and real-time data, predictive analytics aims to forecast future events or conditions. Human activity recognition contributes to this field in multiple ways. In healthcare monitoring and disease prediction, HAR can track movement patterns and detect anomalies to provide early warnings of diseases like Parkinson’s, Alzheimer’s, or cardiovascular conditions. Gait analysis can predict fall risks in elderly patients, enabling preventive interventions. For individuals with disabilities, HAR can predict assistance needs, optimizing caregiver responses. In industrial and office environments, HAR can analyze worker postures and movements to predict fatigue, musculoskeletal disorders, and potential productivity declines [

77,

78]. Activity data can be studied to suggest ergonomic adjustments or break schedules to prevent injuries. Furthermore, daily activity patterns extracted through HAR can be integrated into smart home and building management, automating lighting, heating, and security systems based on user behavior [

79]. Finally, in sports and performance analytics, HAR tracks athlete movements to identify inefficiencies, predict performance trends, and suggest training modifications for injury prevention [

80].
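One simple way to flag a deviation from a person's usual movement pattern is a z-score test against their own baseline. The sketch below is a minimal illustration of that idea; the function name `is_gait_anomaly`, the choice of gait speed as the monitored quantity, and the threshold of three standard deviations are all assumptions, and clinical use would require validated models.

```python
from statistics import mean, stdev


def is_gait_anomaly(baseline, latest, z_threshold=3.0):
    """Flag `latest` if it lies far from the person's own baseline values."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly constant baseline: any change counts as a deviation.
        return latest != mu
    return abs(latest - mu) / sigma > z_threshold


# Hypothetical daily gait speeds in m/s, followed by a sudden slowdown.
history = [1.20, 1.22, 1.18, 1.21, 1.19]
print(is_gait_anomaly(history, 0.90))
```

Using each individual's own history as the reference avoids comparing people with naturally different gaits against a single population norm.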

HAR is widely used in safety-critical environments to detect hazardous conditions and prevent accidents. In hazardous environments such as construction sites, HAR systems can analyze deviations from standard workflows, detect risky behaviors like improper lifting, missing safety gear, or proximity to heavy machinery, and trigger real-time alerts [

81]. In chemical plants and warehouses, HAR monitors worker interactions with hazardous substances, ensuring compliance with safety protocols. It can predict exposure risks and suggest precautionary measures based on movement patterns. In transportation, HAR is used in driver monitoring systems to detect fatigue and analyze aggressive driving behaviors to prevent traffic violations [

82]. In public spaces, HAR helps detect suspicious activity, helping security personnel identify potential threats or criminal behavior. In emergency response scenarios, HAR can detect panic behaviors or sudden movements that indicate distress.

By combining HAR with predictive analytics, we gain the ability to identify and mitigate risks before they escalate. From healthcare and workplace safety to transportation and security, HAR-driven insights enable proactive interventions, ultimately improving efficiency, safety, and overall quality of life.

4. Societal Benefits and Impact

Integrating human activity recognition with IoPVT-enabled outdoor monitoring has profound implications for societies worldwide. At its core, the HARISM concept extends beyond technical innovation, seeking to empower communities with safer public spaces, healthier living conditions, and more informed responses to environmental challenges. By capturing and analyzing data on human behavior, environmental changes, and infrastructural integrity, systems like HARISM can prevent risks, ensure timely interventions, and facilitate better resource allocation. In doing so, they not only enhance immediate safety outcomes, such as fewer accidents, faster emergency response times, and more resilient infrastructure, but also contribute to broader, long-term benefits. These include reinforcing public trust in civic technologies, informing equitable policy development, fostering social cohesion around shared challenges, and guiding responsible urban planning in the face of evolving climate and demographic pressures. Ultimately, the societal benefits and impact of this integrated approach reach well beyond technology, shaping inclusive, adaptive, and thriving communities for generations to come.

4.1. Proactive Health and Safety Monitoring

The IoPVT-enabled HARISM framework aims to be a proactive health and safety monitoring paradigm, shifting from a crisis-response to a prevention-based model, potentially stopping disasters before they happen. Traditionally, outdoor hazards, such as slippery walkways, compromised stairs, deteriorating air quality, and extreme weather events, have been reactively addressed, with solutions deployed only after accidents or noticeable degradation. With continuous sensing, HAR, and predictive analytics integrated, community leaders and individuals can anticipate challenges in advance and make timely, targeted decisions to deal with them.

A critical part of this proactive approach is the ability to spot early indicators of risks and defuse them before any real damage is done. For instance, environmental sensors placed along outdoor staircases in cities like Montreal can detect subtle changes in temperature and moisture that indicate ice formation, prompting maintenance crews or automated de-icing systems to intervene well before pedestrians face slipping hazards. In the same way, wearable biosensors and camera-based HAR solutions can recognize distress patterns, such as an elderly person falling while climbing steps or a sudden change in gait that suggests a health emergency, and trigger a cascade of alerts and responses from city officials, medical services, or neighbors [

83,

84].
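The ice-formation example can be expressed as a simple rule over two sensor readings. The sketch below is illustrative only: the function name `ice_risk`, the moisture scale (a fraction between 0 and 1), and every threshold are assumptions, and a real deployment would calibrate them against local conditions and sensor characteristics.

```python
def ice_risk(surface_temp_c, moisture_fraction,
             freeze_point_c=0.0, margin_c=2.0, wet_threshold=0.3):
    """Return 'low', 'elevated', or 'high' ice risk for one stair sensor.

    Thresholds are hypothetical placeholders, not calibrated values.
    """
    # Ice needs both near-freezing temperatures and surface moisture.
    if surface_temp_c > freeze_point_c + margin_c:
        return "low"
    if moisture_fraction < wet_threshold:
        return "low"
    # Wet surface at or below freezing: ice is likely already forming.
    if surface_temp_c <= freeze_point_c:
        return "high"
    # Wet surface just above freezing: ice may form if it gets colder.
    return "elevated"


print(ice_risk(surface_temp_c=1.0, moisture_fraction=0.6))
```

An "elevated" result is the early indicator of interest, since it leaves time to dispatch a crew or trigger de-icing before the surface actually freezes.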

Proactive monitoring helps with long-term resilience and planning beyond short-term intervention. Historical data and predictive models can reveal patterns in infrastructure wear and tear, population movements, environmental factors, etc. These can influence the placement of sensors, the design of public spaces, the allocation of resources, and other aspects of urban planning [

85,

86]. By integrating these insights into policy-making and urban planning, cities can adapt more effectively to emerging challenges, such as shifting demographics, increased tourism, or the increasing impacts of climate change.

This approach facilitates a culture of preparedness and shared responsibility for health and safety. As communities increasingly trust that their surroundings are being actively observed and cared for, public confidence in civic technology grows. This trust, in turn, fosters greater collaboration between stakeholders, from city planners and public health experts to residents and entrepreneurs, who can use shared data and insights to drive innovative safety interventions, build health infrastructure, and optimize quality of life. Rather than settling for a reactive status quo, proactive monitoring embodies a forward-thinking mindset that supports a sustainable culture of safety for current and future generations.

4.2. Enhanced Emergency Response

In addition to mitigating accidents and health-related incidents, IoPVT-based outdoor safety monitoring has the potential to redefine the emergency response landscape. Traditionally, emergency services depend on delayed or incomplete information, such as calls from people who witnessed accidents, on-site assessments, and best guesses about conditions, before they can dispatch resources. This lag in situational awareness frequently results in slower response times, suboptimal allocation of emergency personnel, and problems with coordination across agencies and geographies.

To address this challenge, emergency responders can obtain near-instantaneous access to critical information by linking next-generation sensors, HAR algorithms, and immersive virtual environments in the Metaverse [

11,

87]. The continuous flow of real-time data ranges from environmental sensors that detect structural failures or rapidly rising floodwater to HAR systems that identify individuals who have fallen or are showing signs of physical distress. Using this continuous data stream, IoPVT-based outdoor safety monitoring systems allow emergency operations centers to locate and categorize incidents in seconds. This accelerated detection process shortens the interval between onset and intervention, potentially saving lives and reducing the severity of injuries or property damage.

In urban environments like Montreal, for example, a hazardous icy staircase incident would no longer rely solely on bystanders reporting the event. Instead, the system’s advanced analytics would recognize a high-risk condition or an actual fall, automatically alerting local emergency services and dispatching rescue teams to the exact location. Meanwhile, the Metaverse-based visualization tools allow dispatchers and responders to navigate a real-time digital twin of the scene, assessing hazards, planning safe routes for paramedics, and anticipating what equipment might be necessary [

88]. In rural settings, where distances between facilities and incidents can be long, this improved situational awareness translates into more efficient resource deployment and coordinated efforts across fire departments, ambulance services, and community-based first aid responders.

Furthermore, in coastal areas vulnerable to climate impacts, such as storm surges or flash flooding, linking IoPVT data with meteorological and oceanographic modeling provides emergency managers with actionable information. By simulating different response strategies in the Metaverse, decision-makers can strategically pre-position medical units, rescue boats, and shelters so that assistance reaches vulnerable populations quickly and safely. Over time, historical data and lessons learned can be used to fine-tune evacuation protocols, predict impact zones, and recommend resilience-building measures to local authorities.

Ultimately, systems like HARISM have two-fold impacts on emergency response. They will improve immediate reaction time and strategic decision-making during crises and lay a foundation for continuous improvement in preparedness and resilience. As this cycle of informed action and subsequent learning continues, communities become better equipped to handle emergencies, strengthening public trust in the systems designed to protect them, and reinforcing the collective safety net for current and future generations.

4.3. Contributions to Smart City and Community Initiatives

Integrating outdoor safety monitoring enabled by IoPVT into the larger landscape of urban governance and community development can be a pivotal step toward realizing the vision of truly “smart” cities [

89]. Smart city initiatives frequently emphasize the importance of connectivity, data-driven decision-making, and citizen engagement [

90]. By weaving together human activity recognition, environmental sensing, and predictive analytics within immersive virtual platforms, the approach directly aligns with the core principles of sustainable urban planning and collective well-being.

At the policy-making level, IoPVT data can inform long-term strategies by giving city officials and planners access to abundant valuable insights. For example, analyzing where pedestrians gather, identifying accident hotspots, and monitoring localized health risks among communities would all inform and improve the design of infrastructure. Planners could prioritize upgrading dangerous staircases, using more durable construction materials in areas vulnerable to climate impacts, and adding adaptive lighting or signage in areas with poor visibility. As time goes on, with these data-driven interventions layering on top of each other, cities become safer, healthier, and more responsive to the changing needs of their people.

Furthermore, a live monitoring system that guarantees privacy promotes community engagement through transparency and communication [

91]. Public dashboards, data-driven storytelling, and educational outreach programs can help inform citizens about how their city’s infrastructure and services are prepared to respond to emerging risks. Local residents, businesses, and other advocacy groups could use these insights to call for investments in public health resources or to suggest community-led programs that address local challenges, from forming neighborhood watch groups to creating networks of volunteers trained to help vulnerable populations in times of crisis.

As the IoPVT ecosystem matures, there are opportunities for its capabilities, beyond safety and health, to be leveraged in areas such as energy management, environmental sustainability, cultural heritage, etc. For example, data collected for safety applications can help inform energy usage patterns to better balance consumption and production or reduce the carbon footprint of critical infrastructure [

92,

93]. In coastal communities most affected by climate change, these data can guide adaptation steps, from strengthening sea walls to redesigning green spaces and galvanizing joint climate action within the community. By connecting these dots, aggregated data analysis not only helps mitigate risks but also provides a foundation for holistic urban resilience and socio-economic vitality.

At its core, participation in smart community initiatives goes beyond immediate improvements in health and safety; it opens the doors to inclusivity, participatory governance, and long-term sustainability. These integrated monitoring systems, built on the foundation of aligning high-tech with community values, help create a fabric of citizens, policies, and the environment we inhabit together. They ensure, at every point we collect data, that the nature of our “smartness” remains about human interaction rather than just hardware and software.

5. Scenarios and Illustrative Applications

Settings in which ubiquitous edge-based IoVT sensing can increase safety and security include urban dwellings, rural industrial complexes, and coastal infrastructures, among others. Smart edge technology [

94] can mitigate risk by monitoring pedestrians, understanding environmental conditions, and analyzing infrastructure in extreme weather conditions such as ice, fire, and hurricanes. As shown in

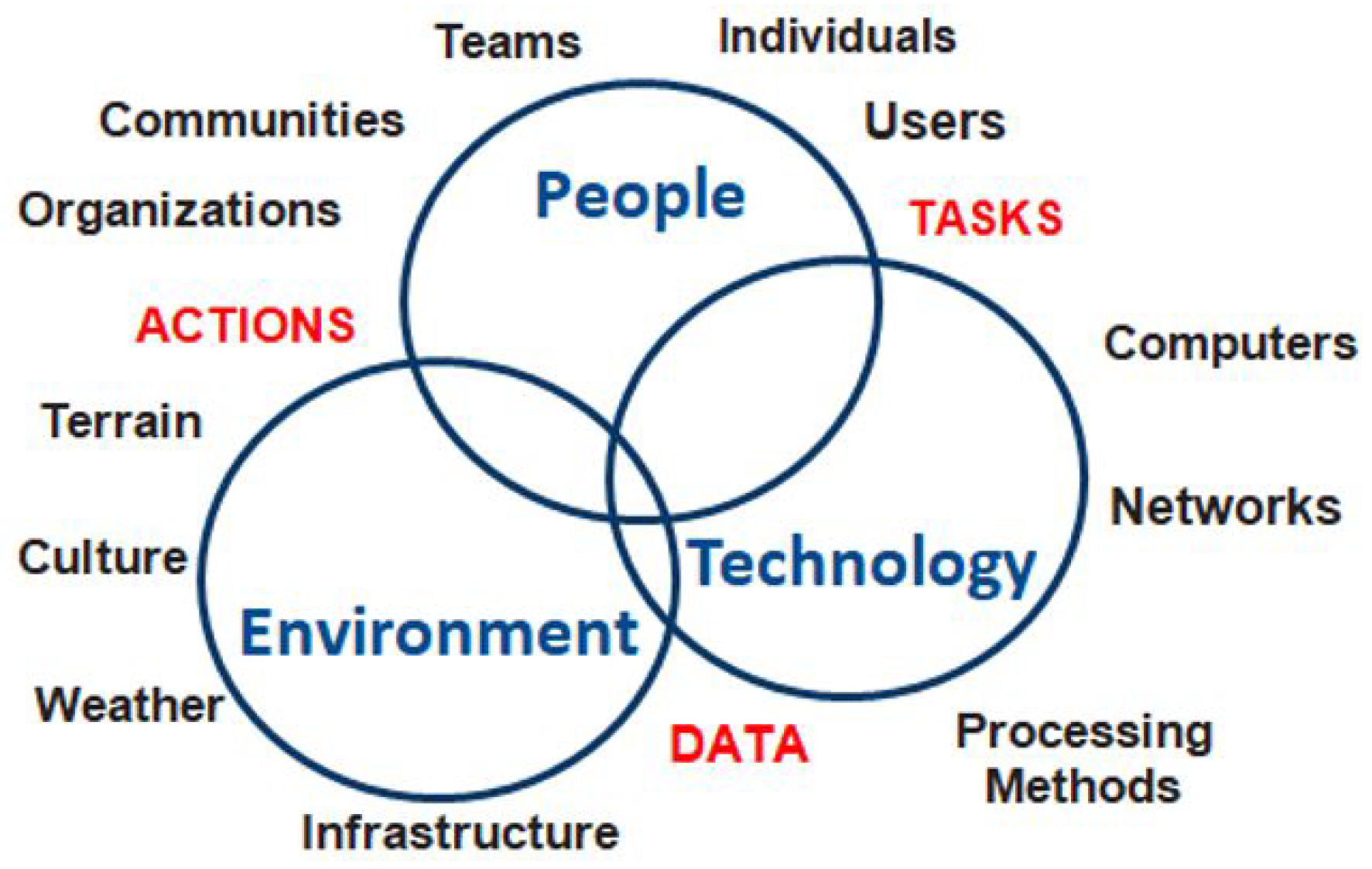

Figure 2, the intersection of HAR (people), IoVT (technology), and scenarios (weather) can facilitate future smart planning for responsive action.

To ground our vision in practical applications, we explore three distinct settings: urban, rural, and coastal, where IoPVT-enabled outdoor safety monitoring can be implemented. These scenarios were selected to reflect a variety of challenges and opportunities arising from different environmental, infrastructural, and societal contexts. Urban settings, such as Montreal, exemplify a high-density environment with complex infrastructure and new risks (e.g., icy staircases, crowded public spaces, etc.). The case of Harrisburg illustrates how rural areas face unique challenges due to dispersed infrastructure and industrial activities impacting public health, necessitating specialized environmental and bio-health monitoring methods. Coastal communities, in contrast, must face the harsh realities of climate change, with rising sea levels and extreme weather events making it essential to develop adaptive strategies for everything from evacuation to the resilience of roads and infrastructure. These scenarios demonstrate how integrated sensor networks, advanced HAR, and immersive virtual analytics can be evaluated for their potential to be customized for specific local challenges within broader outdoor safety monitoring frameworks.

5.1. Urban Setting: Montreal, Canada

Urban environments inherently present a unique set of challenges for outdoor safety monitoring [

95]. High population density, complex infrastructure, and dynamic public spaces all contribute to an environment where the potential for accidents increases. Variable microclimates, widespread air pollution, and elevated ambient noise levels complicate the calibration of the sensors and the quality of data [

96]. Furthermore, high accuracy requirements demand robust, scalable monitoring systems that are fully integrated into the existing urban framework. These complexities must be taken into account when designing IoPVT systems that need to be dynamic and adaptable to the multifaceted nature of urban life, which will help improve both short-term urban safety processes and long-term urban planning initiatives.

One of the emerging developments in HAR video surveillance is in outdoor settings near buildings. Outdoor monitoring systems have become popular at front doors to alert residents to package deliveries, provide surveillance when someone approaches the door, and monitor activity in case of an intrusion. Additionally, outdoor IoVT is utilized at convenience stores, along pedestrian pathways (especially at busy intersections), and in public places such as subways to enhance public safety and security. Another scenario worth future analysis is external stairways, which can harness existing doorway IoVT developments for urban safety and health monitoring. For instance, Montreal, Canada, has a history of external staircases intended to provide safety through community surveillance; however, they can be hazardous in winter conditions, as shown in Figure 3. Ice and snow can make the staircases slippery, creating challenges for elderly individuals who climb the stairs during winter. Therefore, external video surveillance can be employed not only to alert about package deliveries but also to ensure quick responses in case someone slips and falls on the stairs. While this example is a unique case, dense urban centers can benefit from IoVT in public spaces with distinctive entrance access.

IoPVT systems can use smart sensors installed along stairs and pedestrian areas to monitor environmental conditions in real-time, including temperature drops and ice formation. HAR algorithms can detect abnormal movements, such as slips or hesitations, which can lead to hazardous situations. From a design perspective, precise sensor placement is crucial: devices must be integrated into the cityscape unobtrusively, and their installation must consider factors such as exposure to pollution, potential vandalism, and access to power sources. In addition to sensor considerations, network connectivity is a challenge in densely populated urban areas, where sensors must rely on robust, low-latency communication channels that can withstand interference from numerous devices and urban infrastructure.

By feeding these data into a dynamic digital twin of Montreal’s urban environment, emergency services and municipal maintenance teams can receive timely alerts, allowing them to deploy de-icing or repair crews before accidents happen. Moreover, urban planners can utilize historical aggregated data to pinpoint chronic issues and design lasting interventions, such as more durable stairs or enhanced pedestrian pathways. Additionally, the system should be developed with a robust user interface that offers decision-makers visual and analytical insights in real-time, along with integration into existing urban management systems and smart city solutions. Other desirable features include energy efficiency, modularity, and ease of maintenance, allowing sensors and related infrastructure to be easily scaled or upgraded as technologies advance. This comprehensive approach not only improves public safety in the short term but also promotes the long-term development of the urban landscape in line with Montreal’s specific climate and infrastructure needs.

5.2. Rural Setting: Harrisburg, Pennsylvania, USA

In rural areas like Harrisburg, PA, safety monitoring takes on a unique focus compared to urban settings. Harrisburg has historically faced environmental challenges related to industrial activity, which have led to higher rates of cancer and other health problems, primarily due to pollution from nearby power plants. In this context, IoPVT systems can be utilized to continuously monitor air quality, radiation levels, and other important environmental factors using advanced chemical and biosensors. Alongside these devices, wearable sensors and localized monitors keep track of ambient conditions in conjunction with residents’ health metrics, collectively offering a comprehensive view of community well-being.

HAR algorithms used in these contexts can analyze data from stationary and mobile sensors to identify anomalies that may indicate exposure to hazardous pollutants or other environmental risks. When integrated into a virtual representation of Harrisburg, these data help in planning energy consumption, ensuring that industrial facilities operate within safe limits, informing public health initiatives aimed at reducing exposure, and guiding remediation efforts. This proactive monitoring framework seeks to mitigate health risks, drive targeted industrial regulation, and strengthen community resilience against ongoing industrial challenges.

In addition to environmental monitoring, rural IoPVT applications extend to various industrial contexts, including warehouses, farms, loading docks, oil pipelines, electric power plants, and train stations. As rural industrial complexes grow and residential developments encroach, overlapping zones require robust safety monitoring. For example, in the extreme case of a nuclear power plant (NPP), as illustrated in Figure 4, IoVT systems can provide integrated surveillance of both the facility and its surrounding area. In the event of industrial accidents or severe weather, such as power plant or power line failures that trigger fires, the system would enable rapid responses not only within the industrial complex but also for nearby rural communities. This comprehensive approach to IoVT in rural settings highlights its crucial role in safeguarding both industrial operations and the populations that depend on them, ensuring resilience and safety amid diverse environmental challenges.

A vital point worthy of further discussion is that deploying IoPVT safety monitoring systems in rural areas goes beyond being a technical task; it is a community initiative. When residents are involved as partners, technology becomes more intelligent, more trusted, and far more effective. Policymakers in cities and counties, such as those on the rural outskirts of Harrisburg, have the responsibility to integrate these systems with larger development plans, aligning them with environmental monitoring programs, industrial safety regulations, and resilience-building efforts. With transparent operations, strong privacy protections, and a commitment to equity, IoPVT innovations can gain community support and provide widespread benefits. Ultimately, an approach that centers on people in the IoPVT realm through participation, co-ownership, and equitable access will improve both the sustainability and safety of rural communities in the face of future challenges. Engaged deployments of this nature can serve as models for how technology and local knowledge work together to foster rural resilience in an increasingly connected world.

5.3. Coastal Setting

Coastal environments are particularly vulnerable to the effects of climate change, such as rising sea levels, extreme weather events, and increased stress on infrastructure. Like the winter hazards and fire-causing industrial accidents discussed above, hurricanes and flooding in coastal areas pose significant health and safety risks. With weather services offering forecasting and nowcasting, IoPVT can be utilized not only locally for housing but also globally for community needs and socially for response planning. Two relevant examples are hurricanes and flooding. The initial destruction caused by a hurricane involves high winds, and IoVT can aid in safety analysis by assessing potential structural damage to bridges, power line towers, and critical facilities like water towers, as illustrated in Figure 5.

Using digital twin technology and understanding the safety limits of certain structures such as water towers and bridges [

97], we can monitor these structures through IoVT when forecasted winds pose a risk of destruction. This approach allows for real-time updates on the potential for structural collapse. With insights from IoVT regarding system failures, alerts can be dispatched to the public to ensure safety. Furthermore, in the aftermath of such disasters, the same IoVT systems on bridges and highways can provide critical information about water flow, road blockages, and rising water levels. If first responders cannot reach these areas, the city’s IoVT can supply rescue teams with vital information on safe routes to improve residents’ chances of survival. While UAVs could assist in these efforts, they would only be operational after the storm has passed. Therefore, IoVT near bridges is essential not only for managing traffic flow under normal conditions and monitoring structural integrity but also for facilitating community-wide disaster response during events like hurricanes, particularly those leading to flooding.

Beyond immediate emergencies, IoPVT generates valuable data that contribute to long-term climate adaptation and resilience planning for coastal cities. Planners and policymakers can analyze trends captured by sensors and connected devices to make informed decisions about adapting the built environment and communities to a changing climate. Key areas of integration include resilient urban development, infrastructure reinforcement, and managed retreat decisions. To fully realize IoPVT’s benefits, policymakers must ensure its equitable deployment in high-risk and underserved coastal communities. Often, the communities most vulnerable to climate disasters, such as low-income neighborhoods or remote coastal villages, have the least access to advanced technologies and infrastructure. This digital divide can compound disaster inequities if not addressed. In fact, smart city research warns that if new innovations like the IoT and 5G are implemented without inclusivity, tech-disadvantaged groups could struggle to utilize or benefit from these services, thus widening inequalities and undermining sustainable resilience efforts. A truly climate-resilient coastal city must, therefore, plan for digital inclusion from the outset.

These three cases, each representing an extreme weather scenario, highlight the potential for IoVT to support HAR in outdoor settings. As large infrastructure projects increasingly account for both the people who use them and extreme events, leveraging IoVT could save lives at minimal cost. Furthermore, the proposed outdoor IoVT technology would also serve normal operations concerning safety, security, and inspection, in which HAR is presumed given the public spaces in which the infrastructure is located.

6. Challenges and Opportunities

Although the promise of IoPVT-enabled outdoor safety monitoring has been widely recognized, hurdles remain before its transformative potential can be fully realized. These range from purely technical challenges, such as ensuring that sensor networks remain robust and scalable under diverse conditions, to broader issues related to policy design, interdisciplinary collaboration, and public trust [

11,

98]. At the same time, these very obstacles present unique opportunities to refine technology, develop more inclusive governance models, and push the boundaries of interdisciplinary research. By carefully balancing innovation with ethical stewardship and engaging stakeholders across sectors and disciplines, the path to a safer and more resilient future is feasible and ripe with potential for scientific breakthroughs and social benefit. The following subsections explore critical areas of technical scalability and reliability, policy and design collaboration, ethical and privacy considerations, and future research directions that promise to shape the field.

6.1. Technical Scalability and Reliability

Building a robust and expansive IoPVT infrastructure for outdoor safety monitoring requires addressing multiple interrelated challenges of scalability and reliability. As the number of deployed sensors, connected devices, and participating stakeholders grows, so does the complexity of ensuring consistent data collection and analysis across diverse environments: urban high-rises, rural farmlands, and coastal ecosystems each present distinct conditions that strain network connectivity and sensor performance. In addition, the sheer volume of data generated by real-time monitoring can quickly overwhelm centralized processing infrastructures, calling for distributed computing strategies at the edge and fog layers to handle localized analytics and reduce bottlenecks.
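As a minimal sketch of the localized analytics mentioned above, an edge node could reduce each window of raw readings to a compact summary and forward only that summary, plus any readings above an alert threshold, to the central infrastructure. The function name, the fields of the summary, and the threshold value are illustrative assumptions, not part of any specific IoPVT platform.

```python
from statistics import mean


def summarize_window(readings, alert_threshold):
    """Reduce one window of raw sensor readings to a compact edge summary.

    Hypothetical helper: the summary fields and the threshold are assumptions
    chosen for illustration only.
    """
    if not readings:
        return None
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        # Only readings above the threshold are forwarded individually.
        "alerts": [r for r in readings if r > alert_threshold],
    }


# Five raw temperature readings collapse into a single upstream message.
window = [21.0, 21.5, 22.0, 48.0, 21.5]
summary = summarize_window(window, alert_threshold=40.0)
print(summary)
```

Under these assumptions, the upstream link carries one message per window rather than one per reading, which is the kind of bottleneck reduction that edge and fog processing is meant to provide.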

On the hardware side, sensors must be engineered to withstand harsh environmental conditions, including extreme temperatures, high humidity, and corrosive elements such as salt in coastal regions [

99]. Cities like Montreal, for example, have harsh winters that require devices able to operate through ice, snow, and subzero temperatures. Coastal areas exposed to salty air, storms, and fluctuating tide heights present a different but equally demanding durability challenge. To limit deterioration, materials and enclosures must be weatherproofed and must perform reliably over the full service life of the deployment. Another key consideration is power efficiency [

100]. Remote or autonomous sensor deployments often rely on batteries or energy-harvesting solutions to maintain continuous operation, and their performance depends on factors such as temperature and the seasonal availability of sunlight. The strategic placement of sensors, which must balance coverage against access for maintenance, repair, and relocation, further complicates the large-scale deployment of IoPVT systems.
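A rough, back-of-the-envelope way to reason about power efficiency is to estimate battery life from a duty cycle: the average current is the duty-weighted mix of active and sleep currents, and the lifetime is the usable capacity divided by that average. The sketch below assumes this simplified model; the capacity, current draws, duty cycle, and the cold-weather derating factor are all hypothetical values chosen for illustration, not measurements from any deployment.

```python
def battery_life_days(capacity_mah, active_ma, sleep_ma, duty_cycle, derating=1.0):
    """Estimate battery life in days for a duty-cycled sensor node.

    Simplified model (an assumption): average current is a duty-weighted mix
    of active and sleep current; `derating` scales usable capacity, e.g. to
    approximate capacity loss at low temperature.
    """
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    avg_ma = duty_cycle * active_ma + (1.0 - duty_cycle) * sleep_ma
    hours = capacity_mah * derating / avg_ma
    return hours / 24.0


# Hypothetical node: 2000 mAh cell, 50 mA active, 0.05 mA asleep, awake 1% of the time.
mild = battery_life_days(2000, 50.0, 0.05, 0.01)
# Same node, assuming only half the capacity is usable in deep cold.
cold = battery_life_days(2000, 50.0, 0.05, 0.01, derating=0.5)
print(round(mild, 1), round(cold, 1))
```

Even this crude model shows why seasonal conditions matter for maintenance planning: under the assumed derating, the same node would need servicing roughly twice as often in winter.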

On the software side, scalability lies in the capacity to process enormous data streams without sacrificing responsiveness or security [

101]. Heterogeneous sensor feeds must be ingested, stored, and indexed efficiently in data management systems to allow near-real-time analysis and retrieval of relevant information. Fault tolerance is also critical: a single point of failure at the network layer or within the cloud infrastructure can create surveillance gaps, delay notifications, or even cause complete system outages. To distribute computational loads and decrease latency, which is critical for fog networks in remote regions or in bandwidth-limited scenarios, developers can introduce redundant network architectures, add load-balancing mechanisms, or use edge/fog analytics. In addition, end-to-end encryption, secure communication protocols, and other security measures are needed to protect sensitive information, from health data to location data. Finally, integrating a wide range of sensors, third-party APIs, and different IoPVT layers requires open standards and adaptable middleware solutions to promote interoperability. This level of interoperability is crucial for future-proofing the system so that new technologies can be integrated and incremental upgrades made over time.
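The redundancy idea in this paragraph can be illustrated with a small failover routine: a sensor node tries each of several redundant gateways in order and stops at the first that accepts the message, so that a single failed link does not create a monitoring gap. This is a sketch under stated assumptions; the gateway names, the callables standing in for network links, and the payload fields are hypothetical.

```python
def send_with_failover(payload, gateways):
    """Try redundant gateways in order; return the name of the first that accepts.

    `gateways` is a list of (name, send_function) pairs. Both the names and the
    send functions are placeholders for real network links.
    """
    errors = []
    for name, send in gateways:
        try:
            send(payload)
            return name
        except ConnectionError as exc:
            # Record the failure and fall through to the next gateway.
            errors.append(f"{name}: {exc}")
    raise ConnectionError("all gateways failed: " + "; ".join(errors))


delivered = []


def primary_down(payload):
    # Simulates a failed primary link.
    raise ConnectionError("link unavailable")


def backup_up(payload):
    # Simulates a working backup link.
    delivered.append(payload)


used = send_with_failover(
    {"sensor": "bridge-01", "level_m": 2.4},
    [("primary", primary_down), ("backup", backup_up)],
)
print(used)
```

A production system would add retries, timeouts, and load balancing across healthy gateways; the sketch only shows how redundancy removes the single point of failure.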

Ultimately, ensuring technical scalability and reliability is more about strategic planning and design than technological upgrades. System designers must therefore consider optimal sensor placement, communication protocols suited to local conditions, and a flexible architecture that can be easily changed. With these aspects in mind, IoPVT-enabled outdoor safety monitoring can serve more users and more environments without degrading data quality or system responsiveness.

6.2. Interdisciplinary Collaboration for Design and Policy

An analytical, system-wide perspective is essential to implementing IoPVT-enabled outdoor safety monitoring at a much larger scale, which requires not only technical expertise but also seamless interdisciplinary cooperation among a diverse set of stakeholders. Urban planners, public health experts, software and hardware engineers, policymakers, emergency responders, and community members each bring essential perspectives and priorities. Their involvement helps ensure that the resulting solutions are not only technologically sound but also socially responsible, ethically appropriate, and sustainable in the long term.

At the heart of this vision is a user-centric design philosophy. Systems architects and engineers must collaborate closely with urban planners to ensure that sensor deployments and data pipelines integrate cleanly into the existing and planned infrastructure of the city. This not only saves resources but also yields systems that are scalable and adaptable. Data offer one way to understand what communities need; public health professionals and social scientists deepen that understanding by providing insight into community behavior patterns, so that responses match local needs and values.

For example, sensors must be placed carefully in sensitive areas such as public schools and hospitals [

102]. Balancing technological efficacy with privacy and ethical concerns requires an inclusive dialogue among all parties. In this context, user-centric design is not just a buzzword. It is a foundational principle that ensures that IoPVT solutions remain context-aware and sensitive to cultural and social nuances [

103]. Whether deployed in a densely populated urban center or a quiet rural community, these solutions must be versatile enough to meet varied local demands.

On the policy side, legislators and regulatory authorities are vital. They create transparent, well-defined processes that define what data is collected, who owns it, and how it is used. Regulations should define the roles and responsibilities of all stakeholders, from local government agencies to private sector service providers. Strong regulation not only protects the rights of citizens, especially around data ownership, data use, and data privacy, but also creates a fundamental framework for innovation to grow and thrive without the fear of running into a legal gray area.

Public-private partnerships (PPPs) are among strategies with distinct potential to accelerate the development of IoPVT platforms [

104]. However, there must be transparent oversight mechanisms behind these collaborations to ensure accountability, trust, and cross-jurisdictional collaboration [

105]. Since the responsibility to manage systems is often distributed across local governments, regional bodies, and other entities, collaboration among neighboring municipalities or utilities in a region can enhance system interoperability. Such partnerships can generate vast data ecosystems that inform the public about safety and health trends, ultimately benefiting all communities.

Interdisciplinary cooperation is the foundation for IoPVT systems that can benefit their communities, combining technical insight with forward-thinking urban forms, ethical constraints, and robust policy structures [

106]. This holistic approach is about more than optimizing hardware and software. It draws on various perspectives to address the complexities of the real world. In doing so, it fosters stakeholder confidence, accelerates the adoption of emerging technologies, and significantly improves safety outcomes in diverse outdoor environments. The result is a powerful and flexible system capable of meeting the technical requirements of contemporary city life while remaining attuned to wider social, ethical, and regulatory considerations.

6.3. Ethical and Privacy Considerations

Although IoPVT-enabled safety monitoring systems address various use cases for detecting unsafe outdoor conditions at both individual and community levels, they are increasingly integrated with advanced personal and environmental data acquisition devices, many of which are now commonplace in daily life. Sensors in public and semi-public spaces can collect detailed data on individual location, movement, and behavior; if misused, such data can threaten personal freedoms or perpetuate harmful discrimination. Therefore, balancing proactive security measures against the right to privacy regarding personal data is a critical challenge that requires sound policy-making, robust technical safeguards, and thoughtful public consultation [

107].

Among the biggest questions for these systems are what data should be collected, who owns those data, and how they can be used. Although fine-grained data yield the most precise information, they also create greater privacy threats [

108]. Clear lines should define the permissible uses of data, such as emergency services, health interventions, or infrastructure improvements, and should leave no room for unauthorized or unethical use, such as intrusive surveillance or commercial profit without public benefit. Communities can adopt transparent data governance models that specify retention periods, anonymization standards, and data-sharing protocols, which can create a sense of security for local organizations and help maintain trust in the technology.
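To make the governance elements above concrete, the following sketch applies a retention period, replaces device identifiers with keyed hashes, and coarsens coordinates before records are shared. It is an illustration under stated assumptions rather than a recommended standard: the 30-day retention period, the two-decimal coordinate rounding, the record fields, and the key handling are all hypothetical. Note also that keyed hashing is pseudonymization, which is weaker than full anonymization.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=30)  # assumed retention period, for illustration only


def pseudonymize(device_id, secret_key):
    """Replace a device identifier with a keyed SHA-256 hash (a pseudonym)."""
    return hmac.new(secret_key, device_id.encode("utf-8"), hashlib.sha256).hexdigest()


def coarsen(lat, lon, decimals=2):
    """Reduce location precision; two decimals is an assumed setting."""
    return round(lat, decimals), round(lon, decimals)


def apply_policy(records, secret_key, now):
    """Drop records past the retention period and strip direct identifiers."""
    kept = []
    for rec in records:
        if now - rec["timestamp"] > RETENTION:
            continue  # expired under the retention rule
        lat, lon = coarsen(rec["lat"], rec["lon"])
        kept.append({
            "subject": pseudonymize(rec["device_id"], secret_key),
            "lat": lat,
            "lon": lon,
            "timestamp": rec["timestamp"],
        })
    return kept


now = datetime(2025, 1, 31, tzinfo=timezone.utc)
records = [
    {"device_id": "wearable-17", "lat": 42.08912, "lon": -75.96934,
     "timestamp": now - timedelta(days=1)},
    {"device_id": "wearable-17", "lat": 42.08950, "lon": -75.96900,
     "timestamp": now - timedelta(days=45)},
]
shared = apply_policy(records, secret_key=b"demo-key-not-for-production", now=now)
print(shared)
```

Writing the policy as code in this way makes the retention and anonymization rules auditable, which supports the transparency that the governance models call for.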

Outdoor monitoring covers broad geographic spaces, complicating the mechanisms of individual consent, compared to more controlled spaces like hospitals or research laboratories [

109]. Local authorities and system developers can improve public trust in a system by making it more transparent. Clear signage indicating areas under sensor surveillance, publicly available dashboards featuring aggregated data, and outreach efforts to inform citizens of what data is being collected and how it is used can alleviate these concerns. Bringing local communities into the conversation, for example by holding intensive town hall meetings or setting up online forums, also offers citizens an opportunity to raise concerns, suggest improvements, and feel a sense of co-ownership in how the system is deployed and evolves.

AI-powered human activity recognition and predictive analytics can unintentionally systematize bias and exclusion if training data or algorithmic designs do not represent diverse populations and contexts [

110,

111]. This can result in an uneven distribution of safety resources, the misidentification of innocuous behaviors as suspicious, or the systematic exclusion of specific demographics from early warning systems. To combat these risks, system developers need to use inclusive data sets, regularly audit their algorithms for disparate impacts, and include a diverse group of stakeholders in the model development and vetting process. Responsible AI best practices, such as explainable models and continuous monitoring for potential bias, can be incorporated into IoPVT platforms to ensure that the safety benefits are delivered fairly and equitably.
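One simple form of the algorithmic audit described above is to compare false positive rates across groups, that is, how often innocuous behavior is flagged as suspicious for each group. The sketch below assumes labeled evaluation records of the form (group, predicted, actual); the group labels, the sample data, and the choice of false positive rate as the audited metric are illustrative assumptions, and a real audit would examine several metrics.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Compute the per-group false positive rate.

    `records` is an iterable of (group, predicted_suspicious, actually_suspicious).
    Only truly innocuous cases (actual is False) enter the denominator.
    """
    false_pos = defaultdict(int)
    negatives = defaultdict(int)
    for group, predicted, actual in records:
        if not actual:
            negatives[group] += 1
            if predicted:
                false_pos[group] += 1
    return {g: false_pos[g] / n for g, n in negatives.items() if n > 0}


def max_disparity(rates):
    """Largest gap in false positive rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical evaluation set: every case is innocuous; some are wrongly flagged.
evaluation = [
    ("A", True, False), ("A", False, False), ("A", False, False), ("A", False, False),
    ("B", True, False), ("B", True, False), ("B", False, False), ("B", False, False),
]
rates = false_positive_rates(evaluation)
gap = max_disparity(rates)
print(rates, gap)
```

A gap that persists across evaluation rounds would be a signal to revisit the training data or the model before the system informs the allocation of safety resources.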

Ultimately, ethical and privacy considerations are not simply additional constraints, but rather foundational pillars of a sustainable IoPVT ecosystem. When handled transparently and collaboratively, these measures protect citizens’ rights, increase public trust, and facilitate the long-term viability of outdoor safety monitoring as a force for communal well-being.

6.4. Future Research Directions