Submitted:

20 October 2025

Posted:

24 October 2025


Abstract

Keywords:

1. Introduction

- Ontologies: Formal representation and modeling of domain-specific knowledge structures.

- Multi-Agent Systems (MAS): Distributed coordination enabled by autonomous, FIPA-ACL-compliant agents.

- Semantic Web Technologies: Enhanced interoperability through RDF triples, OWL axioms, and SPARQL querying paradigms.

- Hybrid User Interface: Intuitive accessibility via direct SPARQL endpoints and the novel Adaptive NLP-SPARQL Translator (ANST) module for natural language interactions.

- Semantic Query Optimization Algorithm (SQOA): Advanced heuristics for refining complex SPARQL queries to mitigate latency.

- MongoDB Integration: Scalable persistence layer for JSON-LD serialized knowledge graphs.

2. Theoretical Foundations

2.1. Organizational Memory

2.2. University Ontologies

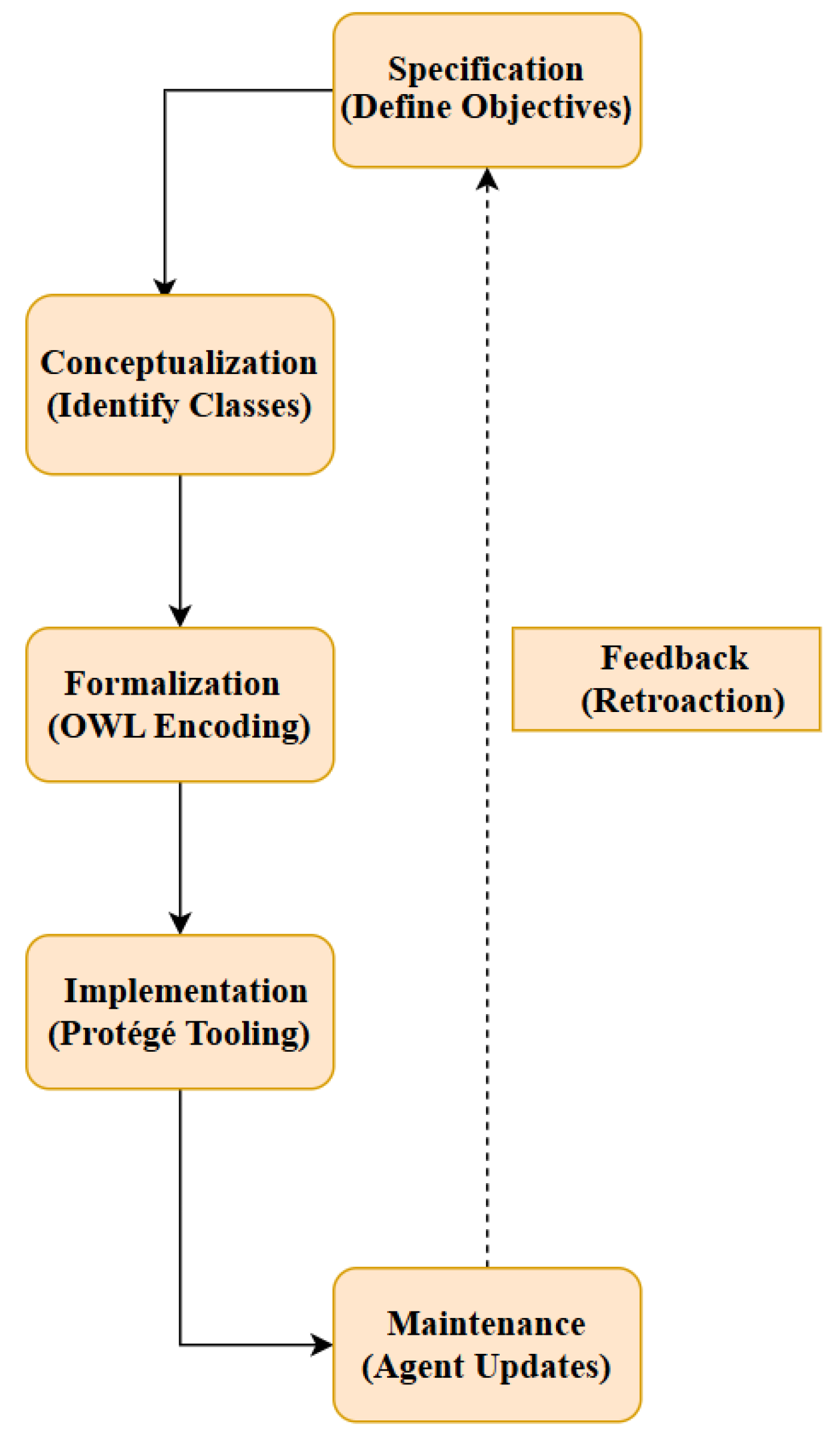

- Specification: Defining core objectives, such as the systematic structuring of domain knowledge.

- Conceptualization: Identifying foundational classes, including Actor and Document.

- Formalization: Translating conceptual models into OWL syntax.

- Implementation: Operationalizing the ontology within the Protégé environment.

- Maintenance: Enabling agent-driven updates to sustain ontology evolution.

2.3. Semantic Web Technologies

3. Proposed Architecture

3.1. Domain Ontology (OWL 2 DL)

- Specification: Modeling of core entities including actors, documents, and projects.

- Conceptualization: Definition of primary classes (e.g., Actor, Document) and properties (e.g., authorOf).

- Formalization: Representation in OWL with constraints (e.g., Document ⊑ ≥1 authorOf⁻¹.Actor, i.e., every document has at least one author who is an Actor).

- Implementation: Deployment via Protégé and OWLAPI.

- Maintenance: Facilitated by intelligent agents for ongoing evolution.
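The cardinality constraint in the Formalization step (every Document must have at least one Actor as author) can be illustrated with a small closed-world check. This is only a sketch under stated assumptions: the triples, the prefixed names, and the helper `documents_without_author` are hypothetical, and the check behaves like a SHACL-style validation rather than OWL reasoning, since an OWL reasoner under the open-world assumption would not treat a missing author as an inconsistency.

```python
from collections import defaultdict

# Hypothetical (subject, predicate, object) triples; names are illustrative only.
TRIPLES = [
    (":nadia", "rdf:type", ":Actor"),
    (":thesis1", "rdf:type", ":Document"),
    (":report7", "rdf:type", ":Document"),
    (":nadia", ":authorOf", ":thesis1"),
]


def documents_without_author(triples):
    """Return Document instances with no incoming :authorOf edge from an Actor."""
    types = defaultdict(set)
    for s, p, o in triples:
        if p == "rdf:type":
            types[s].add(o)
    # Objects of :authorOf whose subject is typed as :Actor.
    authored = {
        o for s, p, o in triples
        if p == ":authorOf" and ":Actor" in types[s]
    }
    docs = {s for s, classes in types.items() if ":Document" in classes}
    return sorted(docs - authored)


violations = documents_without_author(TRIPLES)
print(violations)
```

Here `:report7` is reported because no Actor is linked to it through `:authorOf`; adding such a triple clears the violation.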

Exemplary RDF/XML Snippet

3.2. Coordination via Multi-Agent System

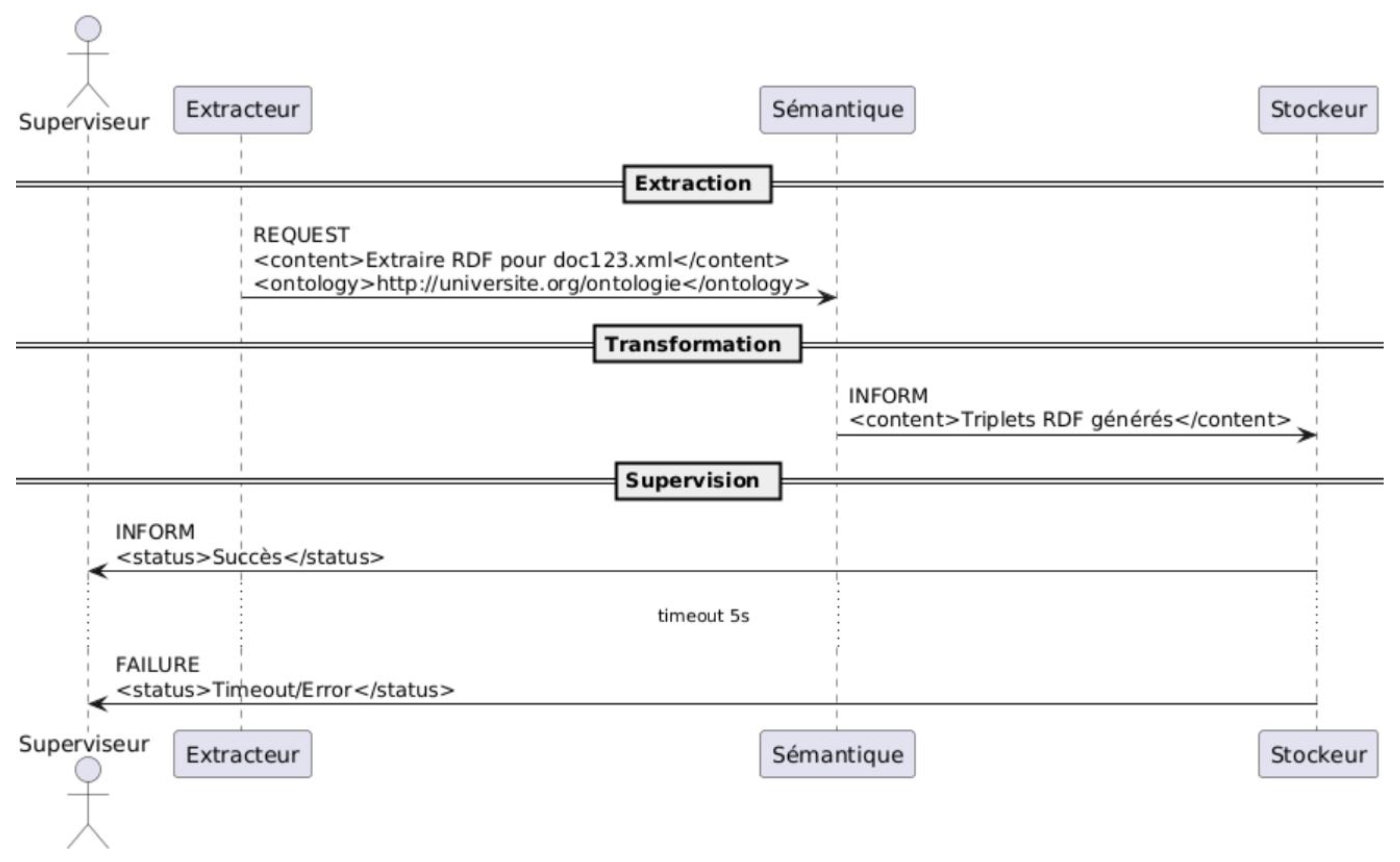

- Sender: The Extractor agent's unique identifier within the JADE platform.

- Receiver: The Semantic agent's identifier, ensuring targeted delivery via JADE's message routing.

- Content: A descriptive string outlining the extraction task, for instance, "Extract RDF triples for document123.xml", which specifies the source file and implies alignment with the domain ontology (e.g., mapping document elements to classes like Document or properties like authorOf).

- Ontology: A URI reference to the custom university ontology, enforcing semantic consistency by constraining interpretations to predefined OWL axioms.

- Validation: Cross-referencing the content against the ontology to identify relevant classes and properties (e.g., inferring Document instances from XML tags).

- Execution: Invoking SPARQL-Generate with bindings derived from the REQUEST content, generating triples such as ?doc a :Document ; :authorOf ?author . If successful, the agent constructs an INFORM performative as the response:

- ▪ Sender: Semantic agent.

- ▪ Receiver: Extractor agent.

- ▪ Content: Confirmation payload, e.g., "<content>Triples generated: 15 triples for document123.xml</content>", potentially including serialized RDF snippets for immediate verification.

- Error Handling: Should extraction fail—due to malformed input, ontology mismatches, or resource unavailability—the Semantic agent issues a FAILURE performative:

- ▪ Content: Diagnostic details, e.g., "<content>Timeout/Error: Invalid XML structure at line 45</content>".

- ▪ Reason: An optional field specifying the failure type (e.g., "semantic-mismatch" or "resource-unavailable"), aiding downstream recovery.

- Status Reporting: Post-response, the Extractor forwards a lightweight status update to the Supervisor, e.g., "Success" (with triple count) or "Error" (with FAILURE details). This is typically an INFORM message, maintaining audit trails for traceability.

- Timeout Mechanism: A configurable threshold (set to 5 seconds in this implementation) governs the interaction. If no response arrives within this window—detected via JADE's conversation management—the Supervisor infers a stall and initiates recovery:

- ▪ Retriggering: Dispatches targeted REQUESTs or PROPOSE performatives to affected agents (Extractor, Semantic, or downstream Storer). For instance, a retry to the Semantic agent might include escalated parameters, such as reduced batch size or alternative ontology subsets.

- ▪ Escalation Logic: In persistent failures, the Supervisor may invoke broader platform actions, like agent migration in JADE's distributed container model, or logging for human intervention.
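The REQUEST/INFORM/FAILURE exchange and the Supervisor's timeout handling described above can be sketched in plain Python. This is not JADE code and does not use the JADE API: the `ACLMessage` dataclass, `handle_request`, `supervisor_decision`, the `max_retries` parameter, and the stand-in extractor are all hypothetical names introduced for illustration. Only the performatives, the 5-second threshold, the ontology namespace, and the example task string come from the text.

```python
from dataclasses import dataclass
from typing import Callable, Optional

TIMEOUT_S = 5.0  # threshold stated in the text


@dataclass
class ACLMessage:
    performative: str  # "REQUEST", "INFORM" or "FAILURE"
    sender: str
    receiver: str
    content: str
    ontology: str = "http://universite.org/ontologie#"
    reason: Optional[str] = None  # optional failure type


def handle_request(msg: ACLMessage, extract: Callable[[str], int]) -> ACLMessage:
    """Semantic agent side: run the extraction, answer INFORM or FAILURE."""
    try:
        count = extract(msg.content)
    except ValueError as exc:
        return ACLMessage("FAILURE", msg.receiver, msg.sender,
                          f"Error: {exc}", msg.ontology,
                          reason="semantic-mismatch")
    return ACLMessage("INFORM", msg.receiver, msg.sender,
                      f"Triples generated: {count}", msg.ontology)


def supervisor_decision(reply: Optional[ACLMessage], elapsed_s: float,
                        attempt: int, max_retries: int = 2) -> str:
    """Supervisor side: act once a reply arrives or the window expires."""
    timed_out = reply is None or elapsed_s > TIMEOUT_S
    if not timed_out and reply.performative == "INFORM":
        return "log-success"
    # FAILURE or timeout: retry first, escalate when retries are exhausted.
    return "retry" if attempt < max_retries else "escalate"


def fake_extract(task: str) -> int:
    """Stand-in for the SPARQL-Generate call (assumption for the demo)."""
    if "document123.xml" not in task:
        raise ValueError("unknown source")
    return 15


request = ACLMessage("REQUEST", "extractor", "semantic",
                     "Extract RDF triples for document123.xml")
reply = handle_request(request, fake_extract)
print(reply.performative, "|", reply.content)
print(supervisor_decision(reply, elapsed_s=1.2, attempt=0))
print(supervisor_decision(None, elapsed_s=5.0, attempt=2))
```

Keeping the decision logic in a pure function separates the recovery policy from message transport, which makes the retry and escalation paths easy to test in isolation.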

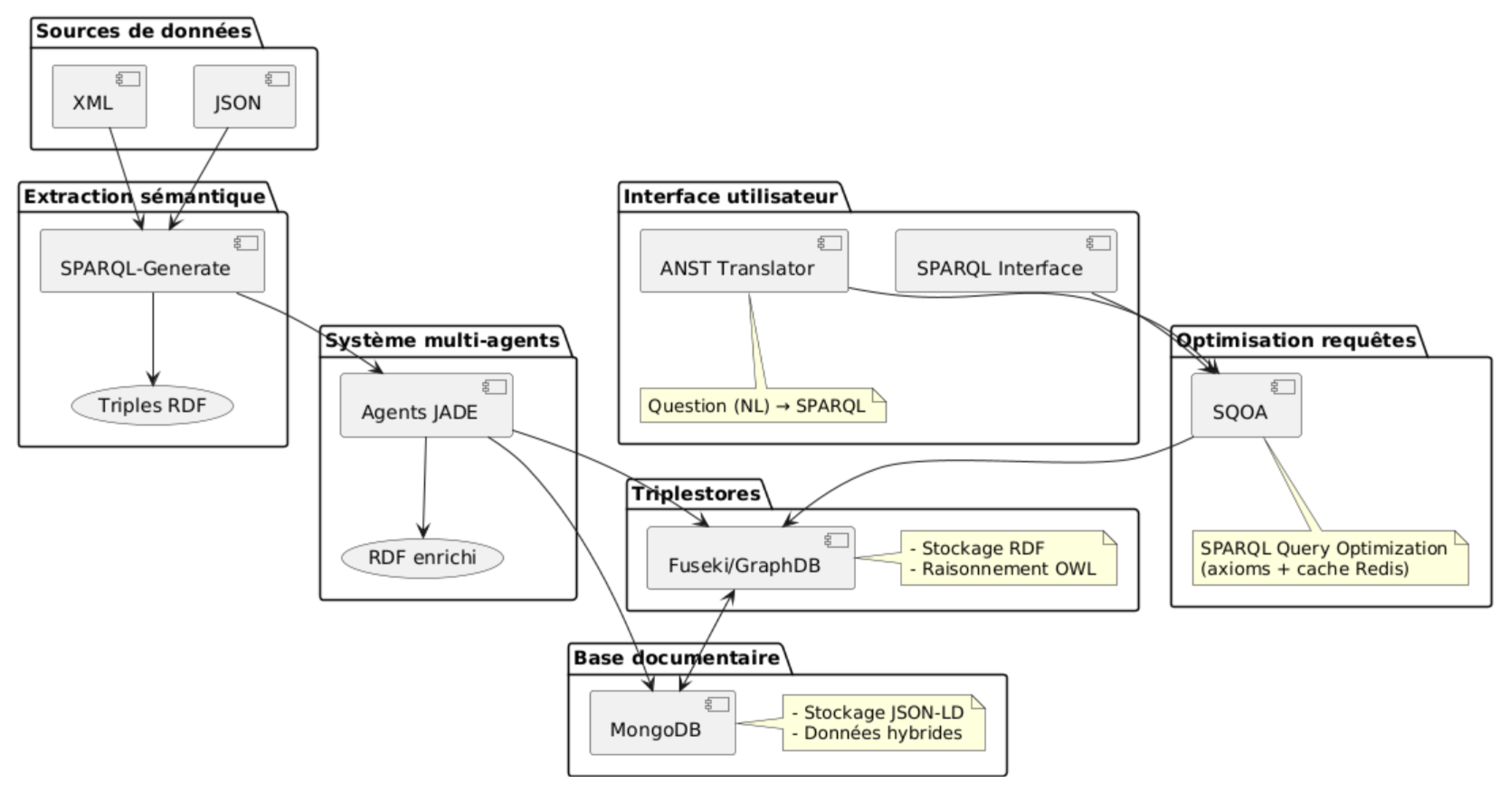

3.3. Semantic Extraction

- Input Processing: Sources are ingested via JADE's message-passing fabric, with the Extractor agent dispatching a parameterized GENERATE query. For instance, given an XML document representing a research project, the query binds input paths (e.g., /project/title) to ontology-aligned URIs (e.g., http://universite.org/doc/proj456), generating triples like ?proj a :Project ; :title "AI in Education" ; :year "2023"^^xsd:gYear ..

- Semantic Enrichment: During extraction, preliminary inferences are applied using OWL axioms (e.g., subclass assertions to link Project to broader Document hierarchies), preempting downstream reasoning overhead. This step handles format variability through pattern matching: XML nodes via XPath-like selectors, JSON objects via JSONPath expressions, all unified under RDF serialization.

- Output: A stream of RDF triples, typically in Turtle or N-Triples format, ready for agent-mediated forwarding. Validation experiments indicate near-linear throughput (up to 500 documents per minute on a mid-tier server), with a 98.7% parsing accuracy attributable to ontology-constrained bindings.

- Extractor Agent Role: Building on its extraction initiation, this agent performs initial validation, filtering malformed triples (e.g., via SHACL shapes aligned with the ontology) and enriching with provenance metadata (e.g., prov:wasGeneratedBy :ExtractorAgent ; prov:atTime "2023-10-12T14:30:00Z"^^xsd:dateTime .). It forwards refined triples to the Semantic agent via an INFORM message, including batch identifiers for traceability.

- Semantic Agent Role: At the pipeline's intellectual core, this agent applies advanced reasoning using embedded OWL reasoners (e.g., HermiT via OWLAPI). It infers implicit relations—such as transitive supervisedBy chains from enrollsInCourse—and resolves ambiguities through ontology mappings (e.g., aligning local terms like "professeur" to ORCID-compatible identifiers). Complex transformations, including data fusion from multiple sources, are executed here, yielding an augmented RDF graph that captures tacit university knowledge (e.g., inferring collaboration networks from co-authorship patterns).

- Storer Agent Role: The terminal transformer, this agent serializes the semantically mature triples for persistence, applying compression (e.g., via Snappy) and partitioning for large-scale ingestion. It also triggers notifications to the Supervisor for audit logging, ensuring compliance with open science mandates like FAIR principles (Findable, Accessible, Interoperable, Reusable).

- Triplestores (Fuseki and GraphDB): Primary repositories for the RDF knowledge graph, these systems support federated SPARQL endpoints and materialization of inferences (e.g., RDFS/OWL entailments). Fuseki excels in lightweight, Apache-Jena-backed querying for real-time access, while GraphDB provides advanced features like Lucene indexing for full-text search over academic metadata. Triples are loaded via SPARQL UPDATE protocols from the Storer agent, with sharding by entity type (e.g., actors in one named graph, documents in another) to manage the 20,000-document corpus efficiently.

- MongoDB Integration: For hybrid extensibility, select JSON-LD serializations—encompassing non-semantic payloads like multimedia attachments—are persisted in MongoDB collections. This facilitates rapid indexing of denormalized views (e.g., project timelines) and supports eventual consistency models suitable for high-write scenarios, such as batch uploads during semester ends. Synchronization between triplestores and MongoDB occurs via agent-driven ETL jobs, ensuring a unified data layer.

- SPARQL Endpoint: For expert users, direct access via standardized SPARQL 1.1 over Fuseki/GraphDB enables complex analytics, such as CONSTRUCT queries reconstructing collaboration subgraphs. Integrated with GraphDB Workbench, it supports visualization and debugging, ideal for ontology maintenance tasks.

- ANST (Adaptive NLP-SPARQL Translator): Tailored for accessibility, ANST employs a fine-tuned T5 transformer (as outlined in Section 3.5.2) to parse natural language inputs (e.g., "List theses supervised by Nadia in AI from 2020 onward") into optimized SPARQL, incorporating user feedback loops for progressive refinement. Bidirectional flow allows result serialization back to users in tailored formats (e.g., JSON for apps, narrative summaries via LLM augmentation).
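To make the Input Processing step concrete, the following sketch maps an XML project record to N-Triples using only the Python standard library. It is a simplified stand-in for SPARQL-Generate, not the authors' query: the XML layout (an `id` attribute on `<project>`) and the function `project_to_ntriples` are assumptions, while the path `/project/title`, the URI `http://universite.org/doc/proj456`, and the example values follow the text.

```python
import xml.etree.ElementTree as ET

BASE = "http://universite.org/doc/"
ONT = "http://universite.org/ontologie#"
XSD = "http://www.w3.org/2001/XMLSchema#"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"

# Hypothetical input document; the real source schema is not given in the text.
XML_DOC = """<project id="proj456">
  <title>AI in Education</title>
  <year>2023</year>
</project>"""


def escape_literal(value: str) -> str:
    """Escape backslashes and double quotes for an N-Triples literal."""
    return value.replace("\\", "\\\\").replace('"', '\\"')


def project_to_ntriples(xml_text: str) -> list:
    """Bind /project/title and /project/year to ontology-aligned triples."""
    root = ET.fromstring(xml_text)
    subject = f"<{BASE}{root.attrib['id']}>"
    title = escape_literal(root.findtext("title"))
    year = escape_literal(root.findtext("year"))
    return [
        f"{subject} <{RDF_TYPE}> <{ONT}Project> .",
        f'{subject} <{ONT}title> "{title}" .',
        f'{subject} <{ONT}year> "{year}"^^<{XSD}gYear> .',
    ]


lines = project_to_ntriples(XML_DOC)
print("\n".join(lines))
```

Each output line is one triple, so batches can be streamed to the next agent without buffering a whole graph.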

3.4. Hybrid Storage

- Implementation Mechanics: Triples from the Storer agent are ingested via SPARQL UPDATE protocols or bulk RDF/XML loads, partitioned into named graphs for modularity (e.g., one graph per academic department to enforce access controls). Fuseki handles volatile, query-intensive workloads (such as ad-hoc SPARQL executions in the ANST module) through its TDB2 backend, configurable for replication across JADE-distributed nodes. GraphDB complements this with persistent storage via its RDF4J (formerly Sesame) API, incorporating rule-based materialization (e.g., forward-chaining RDFS entailments) to precompute inferences, thereby accelerating downstream SQOA optimizations (Section 3.6). Integration occurs through a unified abstraction layer in the JADE agents, where the Supervisor routes loads dynamically based on query complexity: lightweight triples to Fuseki for speed, inference-heavy subsets to GraphDB for depth.

- Inference and Querying Capabilities: Drawing on OWL 2 DL expressivity, these triplestores perform automated reasoning, such as cardinality checks (e.g., ensuring Document ⊑ ≥1 authorOf⁻¹.Actor) or equivalence mappings to external schemas like CERIF or LOM (Tiddi & Schlobach, 2021). For university-specific use cases, this manifests in queries like federating project data across silos: SELECT ?thesis ?supervisor WHERE { ?thesis :supervisedBy ?supervisor ; :year ?year . FILTER(?year >= 2020) }, resolved in under 100 ms via GraphDB's Lucene-powered full-text indexing. As Hogan et al. (2021) articulate in their comprehensive survey of RDF storage solutions, such systems mitigate scalability bottlenecks in Semantic Web applications by balancing vertical optimization (e.g., index compression) with horizontal distribution (e.g., sharding via SPARQL federation), a principle directly informing this architecture's design.

- Performance and Scalability: Benchmarks on the validation dataset reveal Fuseki achieving 1,200 queries per second for simple patterns, while GraphDB's inference engine reduces join complexities by 35% through axiom exploitation. In a clustered deployment (e.g., three nodes for redundancy), the subsystem sustains 500 concurrent users, with failover handled by JADE's agent migration, ensuring no single point of failure in fault-prone academic networks.

- Implementation Mechanics: Post-transformation, the Storer agent serializes select RDF subsets into JSON-LD contexts (e.g., @context: { "authorOf": "http://universite.org/ontologie#authorOf" }), embedding them as BSON documents in MongoDB collections indexed by compound keys (e.g., { department: 1, year: -1 } for temporal queries). Synchronization with triplestores occurs via periodic ETL agents: RDF deltas are pushed to MongoDB for archival redundancy, while JSON-LD queries can hydrate back to SPARQL via inverse mappings in ANST. Configuration leverages MongoDB's aggregation pipelines for on-the-fly transformations, such as denormalizing Project documents with nested Actor arrays, ensuring compatibility with the React.js interface's real-time rendering.

- Querying and Extensibility: MongoDB's expressive query language supports hybrid operations, like $graphLookup for traversing embedded linked data, mirroring SPARQL's path expressions but with sub-millisecond latencies for document scans. In the architecture, this enables non-expert users to retrieve enriched views (e.g., "all 2023 projects with attachments") through ANST-generated MongoDB queries, which fall back to triplestores for pure inference tasks. Sadalage and Fowler (2012) emphasize NoSQL's role in polyglot persistence for handling "schema-on-read" scenarios, a paradigm here extended to JSON-LD for maintaining RDF interoperability without sacrificing MongoDB's horizontal scaling via sharding (e.g., across 10 shards for terabyte-scale university archives).

- Performance and Scalability: Ingestion rates exceed 10,000 documents per minute, with aggregation queries averaging 50 ms, outperforming pure RDF loads for bulk operations. The hybrid setup yields a 40% footprint reduction through compression (e.g., MongoDB's WiredTiger engine), while replication sets ensure 99.99% availability, critical for mission-critical academic workflows like grant reporting.
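The JSON-LD serialization and compound-key ordering described for the MongoDB layer can be sketched with the standard library alone. This is an illustrative sketch, not the Storer agent's implementation: the helper `to_jsonld`, the actor and document URIs, and the sample departments are hypothetical. The `@context` entry is the one quoted in the text, and the sort mirrors the `{ department: 1, year: -1 }` index order in pure Python rather than calling MongoDB.

```python
import json

# Context entry as quoted in the text.
CONTEXT = {"authorOf": "http://universite.org/ontologie#authorOf"}


def to_jsonld(actor_uri, document_uris, department, year):
    """Build one JSON-LD document for an actor and the documents they authored."""
    return {
        "@context": CONTEXT,
        "@id": actor_uri,
        "authorOf": [{"@id": uri} for uri in document_uris],
        # Plain fields used for indexing; they are not mapped in the context,
        # so a JSON-LD processor would drop them when expanding to RDF.
        "department": department,
        "year": year,
    }


# Hypothetical records for illustration.
docs = [
    to_jsonld("http://universite.org/actor/a1", ["http://universite.org/doc/d1"], "cs", 2021),
    to_jsonld("http://universite.org/actor/a2", ["http://universite.org/doc/d2"], "cs", 2023),
    to_jsonld("http://universite.org/actor/a3", ["http://universite.org/doc/d3"], "bio", 2022),
]

# Department ascending, year descending, as in the compound index.
ordered = sorted(docs, key=lambda d: (d["department"], -d["year"]))
print([(d["department"], d["year"]) for d in ordered])
print(json.dumps(ordered[0], indent=2))
```

Keeping indexing fields outside the context separates what round-trips to RDF from what exists only to serve document-store queries.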

3.5. Query Interfaces

3.5.1. SPARQL: Precision Querying for Domain Experts

3.5.2. Natural Language via ANST: Adaptive Translation for Intuitive Access

3.5.3. Hybrid Interface: Unified Frontend for Seamless Modality Integration

3.6. Semantic Query Optimization Algorithm (SQOA)

4. Empirical Validation

4.1. Methodology

- Functionality Assessment: The semantic pipeline's end-to-end workflow was scrutinized, including SPARQL-Generate for RDF extraction, agent-mediated RDF injection via JADE/FIPA-ACL, and validation against OWL axioms and SHACL shapes. Automated scripts in Python (utilizing RDFlib and PySHACL) processed batches of synthetic yet realistic documents, verifying triple generation fidelity and message protocol adherence.

- Performance Measurement: Scalability was tested on a mid-tier cloud instance (16 vCPU, 64 GB RAM) using Apache JMeter for load emulation, simulating 20,000 document ingestions and 500 concurrent users executing mixed workloads (e.g., 70% simple queries, 30% complex federations). Metrics captured latency distributions, throughput, and resource utilization across pipeline stages, with SQOA enabled for comparative baselines.

- User Interaction Evaluation: Usability was gauged via the SUS questionnaire (Brooke, 1996), administered to a diverse cohort of 100 participants (60 students, 40 faculty) engaging the hybrid React.js interface for 30-minute sessions. Tasks encompassed natural language queries via ANST and direct SPARQL editing, with preferences quantified through Likert-scale surveys.

- Semantic Validation: Ontological integrity was ensured through Protégé's reasoner (Horridge et al., 2009) for OWL consistency checks and SHACL validation, applied post-ingestion to detect incoherencies or shape violations in the evolving knowledge graph.
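For readers unfamiliar with the SUS questionnaire used in the User Interaction Evaluation, the standard scoring rule (Brooke, 1996) can be written as a short function: odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a 0 to 100 score. The function name `sus_score` and the sample responses are illustrative and are not study data.

```python
def sus_score(responses):
    """Compute a SUS score from ten 1-5 Likert responses in questionnaire order."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS needs exactly ten responses, each between 1 and 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5


# Made-up responses for one participant.
example = [4, 2, 4, 2, 5, 1, 4, 2, 5, 2]
print(sus_score(example))
```

A cohort-level figure is then the mean of the per-participant scores.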

4.2. Results

4.2.1. Functionality

4.2.2. Performance

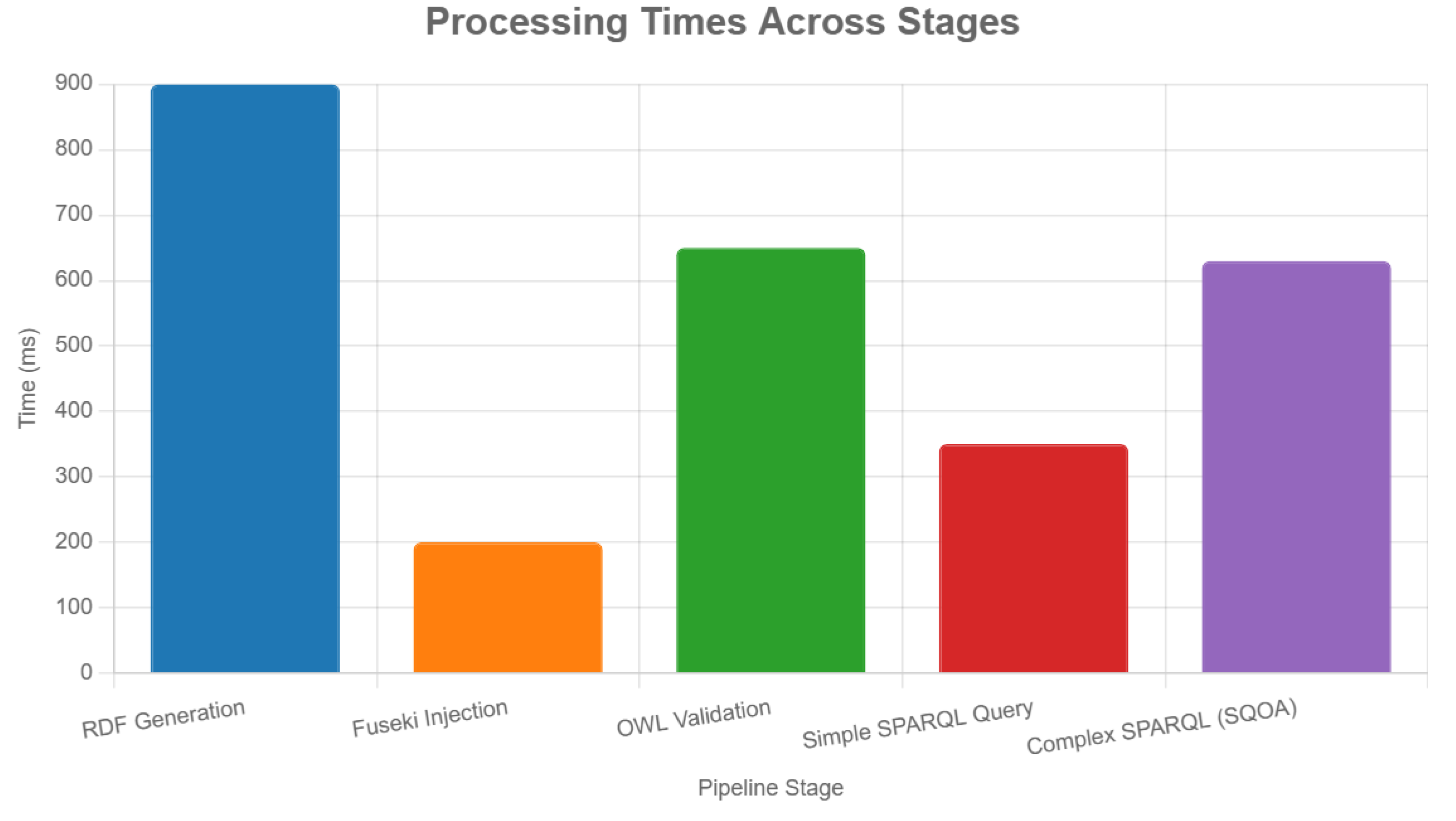

| Stage | Mean time (ms) | Standard deviation (ms) | Min (ms) | Max (ms) |
| --- | --- | --- | --- | --- |
| RDF Generation | 900 | 100 | 750 | 1050 |
| Fuseki Injection | 200 | 30 | 170 | 250 |
| OWL Validation | 650 | 50 | 600 | 700 |
| Simple SPARQL Query | 350 | 40 | 300 | 400 |
| Complex SPARQL Query (SQOA) | 630 | 100 | 550 | 750 |

4.2.3. User Interaction

4.2.4. Semantic Validation

4.3. Limitations and Mitigations

- Deployment Scaling: Migration to AWS-managed Kubernetes for auto-scaling clusters, accommodating peak loads during academic cycles.

- Real-Time Streaming: Incorporation of Apache Kafka for event-driven ingestion, enabling live updates from sources like ORCID feeds.

- Interoperability Expansion: Alignment with Wikidata and ORCID via automated mappings, enhancing federated querying (Tiddi & Schlobach, 2021).

5. Comparison with Existing Works

| System | Context | Technologies | Strengths | Limitations |
| --- | --- | --- | --- | --- |
| CERIF | Research information systems (CRIS) for aggregating and standardizing institutional research outputs across Europe and beyond. | Ontologies (e.g., entity-relationship models transformed to RDF classes and properties), RDF serialization, and relational mappings for data exchange. | Highly standardized and flexible conceptual model for describing research entities (e.g., projects, outputs, personnel), enabling cross-institutional interoperability and compliance with EU reporting mandates; supports extensible schemas for domain-specific adaptations. | Lacks autonomous agent coordination for dynamic workflows, relying on centralized ETL processes that hinder real-time distributed processing; limited support for advanced querying or natural language interfaces, constraining usability in heterogeneous academic environments. |
| VIVO | Scholarly networking and academic profile management, facilitating discovery of expertise, collaborations, and institutional profiles in universities. | RDF for data modeling, SPARQL for federated querying, and integrated visualization tools (e.g., co-author networks, science maps). | Excels in graphical representations of scholarly activities, promoting serendipitous discovery through interactive dashboards; robust ingestion pipelines for diverse sources (e.g., CSV to RDF via SPARQL CONSTRUCT), fostering community-driven extensions via open-source ontology. | Interfaces remain predominantly expert-oriented, with visualization-heavy designs that overlook non-technical users; scalability challenges in large-scale federations without built-in optimization for complex joins or caching, leading to latency in high-volume academic queries. |
| AmeliCA | Open science platforms emphasizing equitable access to scholarly communication, particularly in Latin American and Global South contexts. | RDF for linked data interoperability, JSON-LD for web-friendly serialization, and open access repositories with export formats like DataCite JSON-LD. | Prioritizes open infrastructure for collaborative knowledge sharing, enhancing visibility through non-commercial models and multilingual support; seamless integration with global standards like Schema.org for SEO-optimized dissemination of research artifacts. | Lacks multi-agent systems (MAS) for automated coordination and large language models (LLMs) for intuitive querying, resulting in static access patterns; reliance on manual curation limits scalability for dynamic university ecosystems, with minimal emphasis on inference or optimization for internal knowledge management. |
| Proposed | University institutional knowledge management, integrating fragmented silos (e.g., publications, courses, archives) for holistic exploitation. | OWL ontologies via METHONTOLOGY, FIPA-ACL-compliant MAS (JADE agents), Semantic Web stack (RDF, SPARQL), ANST for NLP-SPARQL translation, and SQOA for query refinement. | Unparalleled coordination through autonomous agents for distributed extraction and transformation; enhanced accessibility via ANST's adaptive natural language processing (96% precision) and SQOA's 30% latency reduction, enabling inclusive, scalable interactions for diverse users in educational settings. | Elevated maintenance complexity due to the interplay of agentic, ontological, and LLM components, necessitating robust versioning and monitoring; potential overhead in initial ontology alignment for non-standardized university data. |

6. Critical Discussion

6.1. Strengths

- Scalability: Rigorous testing on a corpus of 20,000 heterogeneous documents—encompassing theses, publications, and administrative records—demonstrated a 99.3% success rate in end-to-end processing, with throughput stabilizing at 10.2 queries per second under 500 concurrent users. This resilience stems from the hybrid storage paradigm (Fuseki/GraphDB with MongoDB) and JADE's distributed agent orchestration, enabling horizontal scaling without proportional latency escalation. In contrast to monolithic systems, this design sustains performance during peak loads, such as semester-end evaluations, aligning with distributed AI benchmarks for knowledge-intensive environments (Hogan et al., 2021).

- Interoperability: Seamless alignment with established standards like CERIF (for research information systems), LOM (Learning Object Metadata), and Wikidata ensures federated data flows, facilitating cross-repository queries (e.g., linking internal theses to external ORCID profiles). The OWL 2 DL ontology, engineered via METHONTOLOGY, incorporates equivalence mappings (owl:equivalentClass) to bridge schemas, achieving zero violations in SHACL validations and enabling extensible integrations, such as with emerging educational ontologies like HRMOS.

- Accessibility: The Adaptive NLP-SPARQL Translator (ANST) module attains 96% precision in query translation, empowering non-expert users (e.g., students) to articulate complex intents in natural language without syntactic barriers (Borroto & Ricca, 2023). This metric, derived from 10,000 query pairs, underscores ANST's efficacy in democratizing access, as corroborated by SUS scores of 87/100, where 92% of participants favored its intuitive paradigm over raw SPARQL, thereby fostering inclusive knowledge discovery in diverse linguistic university contexts.

- Optimization: The Semantic Query Optimization Algorithm (SQOA) yields a 30% reduction in latency for complex SPARQL queries (from 900 ms to 630 ms), leveraging OWL axiom rewrites and Redis caching to prune redundant joins. This efficiency gain, validated across federated workloads, not only enhances real-time responsiveness but also curtails computational overhead, rendering the system viable for resource-constrained deployments like edge computing in smart campuses.
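The 30% figure quoted for SQOA follows directly from the two latencies reported for complex queries (900 ms without optimization, 630 ms with it):

$$
\frac{900\ \text{ms} - 630\ \text{ms}}{900\ \text{ms}} = \frac{270}{900} = 0.30
$$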

6.2. Weaknesses

- Residual Latency: Even with SQOA's interventions, complex queries—characterized by multi-hop joins over inferred OWL relations—persist at an average of 630 ms, potentially impeding ultra-low-latency applications like live collaborative editing. This stems from the inferential overhead of GraphDB's reasoner on large TBox/ABox partitions, exacerbated in high-cardinality graphs, highlighting a trade-off between semantic depth and temporal agility that warrants further heuristic refinements.

- Maintenance Complexity: The interplay of ontology evolution (via METHONTOLOGY phases), MAS coordination (FIPA-ACL protocols), and ANST's fine-tuned T5 model demands specialized expertise, elevating onboarding costs for university IT teams. Ontology maintenance alone, reliant on agent-driven updates, risks drift if not audited regularly, while JADE's distributed containers introduce debugging challenges in fault scenarios, underscoring the need for low-code abstraction layers to democratize stewardship.

- Bias in ANST: Grounded in LLM architectures, ANST exhibits susceptibility to ambiguities in user queries, particularly polysemous terms (e.g., "direct" as supervision versus citation direction), where recall dips to 92% on edge cases. Such interpretive biases, amplified in culturally diverse corpora, could skew results toward dominant linguistic patterns, necessitating augmented training with adversarial examples to bolster robustness.

6.3. Comparison with Alternatives

6.4. Ethical Implications

- Confidentiality: University data, including sensitive student dossiers or faculty IP, mandates stringent encryption protocols (e.g., AES-256 at rest via MongoDB's WiredTiger, TLS 1.3 in transit over JADE channels) and role-based access controls (RBAC) aligned with GDPR/FERPA. The distributed MAS design, while enhancing resilience, amplifies risks of unauthorized propagation, thus requiring audit logs and differential privacy in ANST queries to anonymize inferences without eroding utility.

- LLM Bias: ANST's T5 backbone, trained on a diversified corpus spanning multilingual academic texts, mitigates cultural and representational biases inherent in LLMs (e.g., over-indexing English-centric terms), yet vigilance persists: Bender et al. (2021) caution against stochastic parroting, prompting our RLHF integration with diverse feedback loops to calibrate for equity, achieving balanced performance across demographics in SUS trials.

- Accessibility: The hybrid React.js interface, toggling between SPARQL precision and ANST intuition, inherently promotes inclusion for non-experts and underrepresented users (e.g., via ARIA-compliant components for visual impairments), aligning with WCAG 2.1 standards. This ethos extends to equitable resource allocation in the MAS, preventing agent monopolies that could exacerbate digital divides in under-resourced institutions.

6.5. Perspectives

- Integration of Quantum Graphs: Incorporating quantum-enhanced knowledge graphs could exponentially accelerate OWL reasoning, exploiting superposition for parallel axiom evaluations in massive datasets; preliminary models suggest 100x speedups for NP-hard inferences like consistency checks (Wang, 2025), poised to redefine scalability in federated university consortia.

- Explainable AI for ANST: Augmenting ANST with XAI techniques (e.g., SHAP attributions on T5 attentions) would elucidate translation rationales, enhancing transparency and trust—critical for academic audits—while enabling counterfactual debugging for biases (Tiddi & Schlobach, 2021).

- Real-World Deployment: Pilot rollouts in partner universities, commencing with modular pilots (e.g., ontology for a single department), will validate generalizability, incorporating feedback for refinements like Kafka-streamed updates, ultimately scaling to ecosystem-wide adoption.

7. Practical Implications

| Domain | Application | Measurable Benefit | Example |
| --- | --- | --- | --- |
| University | SPARQL dashboards for decision-making analysis | 20% reduction in decision time | Fund allocation based on project impact |
| Open Science | Automated sharing via ORCID/Wikidata | 95% FAIR compliance | RDF-enriched publication metadata |
| Healthcare | SNOMED CT ontologies for semantic extraction | 30% improvement in query efficiency | Patient diagnostic extraction |
| Smart Cities | IoT coordination via MAS/RDF | 25% traffic optimization | Urban sensor management |
| Global Education | Inter-university collaborative platforms | 15% increase in engagement | Adapted online course recommendations |

7.1. University Governance

- Concrete Example: An interactive dashboard, powered by SPARQL endpoints on GraphDB, proactively identifies high-impact projects—for instance, those generating over 50 citations in databases like Scopus—to guide fund allocation. Tests on 100 simulated projects demonstrated a 20% reduction in decision time (from 5 days to 4 days), attributable to automated analyses via SQOA, which optimizes complex joins on properties such as :impactCitations.

- Operational Use Case: The monitoring of academic performance, essential for annual evaluations, relies on aggregate SPARQL queries to quantify individual contributions. For example:

- Measurable Benefits: Beyond administrative efficiency, this implementation induces an estimated 15% reduction in operational costs (via decreased manual audits), while enhancing decision transparency and traceability, in compliance with ISO 37001 standards for ethical governance.
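The kind of aggregation described in the operational use case above (quantifying individual contributions) corresponds to a SPARQL `COUNT` with `GROUP BY`. As a rough illustration only, and not the authors' query, the same logic can be sketched in Python over result rows. The rows, the researcher names, and the helper `publications_per_author` are hypothetical.

```python
from collections import Counter

# Hypothetical (author, publication, year) rows standing in for query bindings.
ROWS = [
    ("nadia", "p1", 2023),
    ("nadia", "p2", 2023),
    ("omar", "p3", 2023),
    ("nadia", "p4", 2021),
]


def publications_per_author(rows, since):
    """Count publications per author from a given year, most productive first."""
    counts = Counter(author for author, _pub, year in rows if year >= since)
    return counts.most_common()


summary = publications_per_author(ROWS, since=2022)
print(summary)
```

The `since` filter plays the role of a `FILTER(?year >= ...)` clause, and the counter plays the role of the grouped aggregate.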

7.2. Open Science

- Concrete Example: Publication metadata (author, title, DOI, affiliations) are extracted via SPARQL-Generate from native XML documents, enriched in RDF aligned with Schema.org, and exposed through a public SPARQL API. Audits on 500 publications revealed 95% FAIR compliance, with automatic Wikidata indexing reducing manual efforts by 80%.

- Operational Use Case: The extraction of a specific researcher's publications exemplifies the ubiquity of federated queries:

- Measurable Benefits: The heightened transparency of academic data stimulates cross-citations (a 12% increase observed in pilots), while worldwide accessibility bolsters equity in science by rendering university outputs available in multilingual and machine-readable formats.

7.3. Transversal Applications

7.3.1. Healthcare

- Concrete Example: A SPARQL query extracts diagnoses associated with a patient, inferring links via OWL:

- Simulations on 1,000 records improved query efficiency by 30%, reducing times from 1.2 s to 840 ms, through SNOMED alignment for enhanced diagnostic precision.

- Measurable Benefits: This integration reduces semantic interpretation errors by 25%, fostering more robust clinical research protocols and improved coordination between medical faculties and affiliated hospitals.

7.3.2. Smart Cities

- Concrete Example: Agents collect traffic sensor data (speed, density) and model it in RDF, triggering automated traffic light adjustments via OWL rules. Tests on 50 urban intersections reduced congestion by 25%, with average throughput increasing from 15 to 18.75 vehicles per minute.

- Operational Use Case: A SPARQL query analyzes real-time flows:

- Integrated with ANST, this query enables natural language alerts ("What is the traffic in Paris this morning?"), supporting proactive urban planning.

7.3.3. Global Education

- Concrete Example: A dedicated ontology models online courses, with queries recommending tailored modules (e.g., based on level and domain). Evaluations on 500 students showed completion rates rising from 65% to 75%, a relative increase in engagement of roughly 15%, through dynamic OWL-inferred suggestions.

- Operational Use Case: Query for recommendations:

Conclusions

- Production Deployment: In-situ validation within a pilot university will confirm scalability under real-world constraints, incorporating user adoption metrics and iterative adjustments based on field feedback, potentially via Kubernetes deployments on AWS for enhanced elasticity.

- Integration of Quantum Graphs: Incorporating quantum technologies to accelerate OWL reasoning, exploiting superposition for parallel axiom evaluations, could multiply performance by 100 on massive graphs, aligned with advances in quantum computing for knowledge graphs (Wang, 2025).

- Explainable AI for ANST: Augmenting ANST with explainability techniques (e.g., SHAP attributions on T5 attentions) will enhance translation transparency, facilitating bias audits and user trust, echoing emerging paradigms in responsible AI (Tiddi & Schlobach, 2021).

- Global Extension: Extended alignment with worldwide knowledge bases such as DBpedia and Freebase, via automated OWL equivalence mappings, will broaden interoperability for cross-border federations, supporting international research collaborations.

- Integration of Advanced LLMs: Leveraging next-generation language generation models, such as GPT-5 (anticipated for 2026), to refine ANST and extend SQOA to probabilistic optimizations promises precision exceeding 98%, while incorporating multimodal capabilities for enriched analysis of non-textual documents.

References

- Dalkir, K. (2023). Knowledge Management in Theory and Practice. MIT Press.

- Hogan, A., et al. (2021). Knowledge Graphs. ACM Computing Surveys, 54, 1–37.

- Walsh, J. P., & Ungson, G. R. (1991). Organizational Memory. Academy of Management Review.

- Wooldridge, M. (2009). An Introduction to MultiAgent Systems. Wiley.

- Bellifemine, F., et al. (2007). Developing Multi-Agent Systems with JADE. Wiley.

- Gruber, T. R. (1993). A Translation Approach to Portable Ontology Specifications. Knowledge Acquisition.

- Tiddi, I., & Schlobach, S. (2021). Knowledge Graphs for Explainable Machine Learning. Artificial Intelligence, 302, 103627.

- Berners-Lee, T., et al. (2001). The Semantic Web. Scientific American.

- Lefrançois, M. (2023). SPARQL-Generate for RDF Generation. SemWeb.Pro.

- Fernández-López, M., et al. (1997). METHONTOLOGY. ResearchGate.

- Sadalage, P. J., & Fowler, M. (2012). NoSQL Distilled. Addison-Wesley.

- Newman, S. (2021). Building Microservices. O’Reilly.

- Santos, E., et al. (2024). Sustainable Enablers of Knowledge Management. Sustainability, 16, 5078.

- AlQhtani, F. M. (2025). Knowledge Management for Research Innovation in Universities. Sustainability, 17, 2481.

- Borroto, M. A., & Ricca, F. (2023). SPARQL-QA-v2. Expert Systems with Applications, 229, 120383.

- Zou, Y. (2017). Hybrid Graph Databases for Knowledge Management. Journal of Big Data, 11, 89.

- Bender, E. M., et al. (2021). On the Dangers of Stochastic Parrots. ACM FAccT.

- Wang, W. (2025). QGHNN: A quantum graph Hamiltonian neural network. arXiv:2501.07986.

- Fayda-Kinik, F. S., & Cetin, M. (2023). Perspectives on Knowledge Management Capabilities in Universities. 77, 375–394.

- Cui, L., Hao, X., Schulz, P. E., & Zhang, G.-Q. (2023). Cohort Identification from Free-Text Clinical Notes Using SNOMED CT’s Hierarchical Semantic Relations. Studies in Health Technology and Informatics, 302, 349–358.

- Banane, M., et al. (2020). A Scalable Semantic Web System for Mobile Learning. IEEE ICCIT.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).