1. Introduction

The emergence of sophisticated artificial intelligence systems has transformed machine consciousness from philosophical speculation into urgent scientific inquiry[

10,

14,

18]. Contemporary AI demonstrates remarkable capabilities—from nuanced language understanding [

15,

21] to complex multimodal reasoning and strategic game play—leading researchers to claim genuine understanding or consciousness [

12,

13].

Yet the relationship between computational sophistication and conscious experience remains one of science’s deepest puzzles. The "hard problem of consciousness" [

16] highlights the explanatory gap between objective information processing and subjective phenomenology. As AI systems approach and potentially exceed human capabilities, distinguishing functional intelligence from genuine conscious experience becomes both more challenging and more crucial [

10,

14,

15].

Integrated Information Theory (IIT), developed by Tononi and collaborators [

1,

3,

4], provides a rigorous mathematical framework to address this challenge. Unlike behavioral or functional approaches that focus on observable outputs, IIT defines consciousness quantitatively as integrated information (

) arising from irreducible cause-effect structures within physical systems [

3,

4].

1.1. The Urgency of AI Consciousness Assessment

The rapid development of increasingly capable AI systems makes consciousness assessment urgent for multiple converging reasons:

1.2. Research Questions and Contributions

This paper addresses fundamental questions about AI consciousness through rigorous mathematical and empirical analysis:

RQ1: Can feedforward computational architectures generate the causal integration required for consciousness under IIT?

RQ2: Do sophisticated mechanisms in modern AI overcome architectural limitations through emergent properties?

RQ3: What specific architectural principles would enable genuine machine consciousness?

Our primary contributions span theoretical computer science, cognitive science, and philosophy:

Mathematical Framework: Rigorous proof that feedforward architectures necessarily yield with formal verification

Empirical Validation: Computational confirmation across 30 diverse network configurations with statistical analysis

Architectural Analysis: Systematic evaluation of contemporary AI systems including causal vs. bidirectional transformers

Complexity Analysis: Computational tractability assessment demonstrating exponential efficiency gains

Theoretical Extensions: Analysis of hybrid architectures and necessary conditions for consciousness

Implementation Framework: Open-source computational tools for consciousness assessment

2. Related Work and Theoretical Context

2.1. Integrated Information Theory Development

IIT has undergone significant theoretical development since its inception [

1]. The current formulation, IIT 3.0 [

3], provides a rigorous mathematical framework based on five fundamental axioms:

Information: Conscious systems have specific cause-effect power that differs across states

Integration: Consciousness is unified and irreducible to independent parts

Exclusion: Conscious systems have definite boundaries

Intrinsic Existence: Consciousness exists from the system’s own perspective

Composition: Conscious experiences are composed of conscious parts

The PyPhi computational framework [

6] enables practical implementation of IIT analysis, though computational complexity remains a significant challenge for large systems.

2.2. AI Consciousness Research

Recent work has increasingly focused on consciousness in artificial systems. Butlin et al. [

10] provide a comprehensive survey of AI consciousness indicators, while Marcus and Davis [

14] critically examine consciousness claims for large language models. Mitchell [

15] explores the distinction between understanding and consciousness in transformers.

2.3. Consciousness Theory Landscape

Alternative consciousness theories include Global Workspace Theory [

19,

20], Higher-Order Thought theories, and Attention Schema Theory [

18]. However, IIT provides the most mathematically precise framework for consciousness quantification, making it ideal for rigorous architectural analysis.

2.4. Criticisms and Debates

IIT faces significant criticisms, particularly the "unfolding argument" [

8] and concerns about consciousness inflation [

9]. Our work contributes to this debate by providing precise architectural constraints on consciousness.

3. Theoretical Foundations

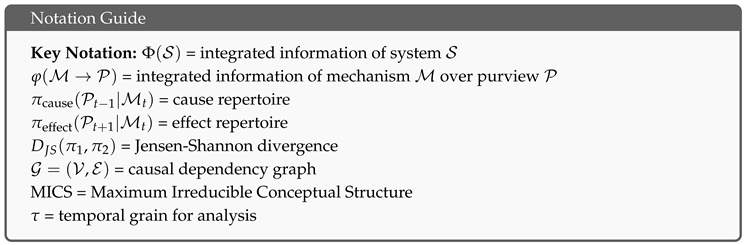

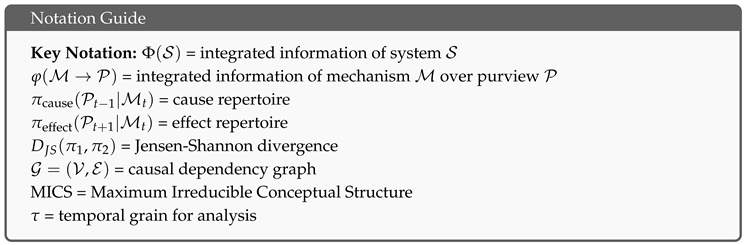

3.1. Integrated Information Theory 3.0 Formalism

Following Oizumi et al. [

3], the integrated information

of system

is defined through its Maximum Irreducible Conceptual Structure (MICS):

where represents the maximum integrated information of mechanism over all possible purviews .

For mechanism

with purview

, integrated information is:

The Jensen-Shannon divergence quantifies information loss under causal cuts:

where and denotes Kullback-Leibler divergence.

3.2. Cause-Effect Repertoires

IIT analyzes consciousness through cause-effect repertoires that capture a mechanism’s causal power:

Definition 1 (Cause Repertoire). The cause repertoire specifies how mechanism in state m constrains the probability distribution over past states of purview .

Definition 2 (Effect Repertoire). The effect repertoire specifies how mechanism in state m constrains the probability distribution over future states of purview .

3.3. Feedforward vs. Recurrent Architectures

Definition 3 (Feedforward System). A computational system is feedforward if its causal dependency graph forms a directed acyclic graph (DAG), where vertices represent computational units and directed edges represent causal dependencies.

Definition 4 (Perfect Bipartition). A bipartition of system is perfect if no causal dependencies exist from to , enabling complete factorization of all cause-effect repertoires across the partition.

4. Mathematical Analysis

4.1. Fundamental Lemmas

Lemma 1 (DAG Perfect Bipartition Existence). Every directed acyclic graph admits at least one perfect bipartition.

Proof. Let be a DAG with topological ordering . For any , consider bipartition where and .

By the topological ordering property, if edge , then . Therefore, no edge exists from any vertex in to any vertex in , making a perfect bipartition. □

Lemma 2 (Cause-Effect Repertoire Factorization). Under a perfect bipartition of a feedforward system, all cause-effect repertoires factorize completely across the partition.

Proof. Consider mechanism spanning both partitions, with purview .

For the effect repertoire, since no causal paths exist from

to

due to the perfect bipartition:

Similarly, for the cause repertoire:

Since the repertoires factorize exactly under the perfect bipartition cut, the Jensen-Shannon divergence between uncut and cut distributions is zero:

□

4.2. Main Theoretical Results

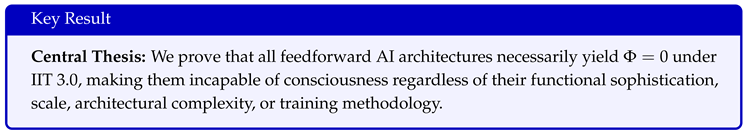

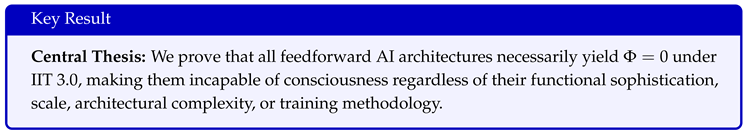

Theorem 1 (Feedforward Zero-Phi Theorem). For any feedforward system with causal graph , the integrated information .

Proof. Let be a feedforward system with causal graph .

Step 1: By Lemma 1, there exists a perfect bipartition of .

Step 2: Consider any mechanism with any purview . We analyze the integrated information .

Step 3: Apply the perfect bipartition cut to mechanism . By Lemma 2, the cause-effect repertoires factorize completely under this cut.

Step 4: Since factorization is perfect, the information loss under this cut is zero:

Step 5: Since this applies to every mechanism and purview, the system’s integrated information is:

Therefore, for any feedforward system . □

Corollary 1 (Scale Independence). The zero-Φ property of feedforward systems holds regardless of system size, depth, parameter count, or architectural complexity.

Proof. The proof of Theorem 1 relies only on the existence of perfect bipartitions in DAGs, which is preserved under scaling operations that maintain the acyclic property. □

5. Computational Validation

5.1. Implementation and Methodology

We developed comprehensive computational validation using multiple complementary approaches:

5.1.1. Network Architectures Tested

We analyzed five distinct architecture classes:

Feedforward Chains: Simple sequential processing networks

CNN-like Layers: Convolutional-style feedforward processing

Causal Transformers: Attention mechanisms with causal masking

Recurrent Networks: Bidirectional connectivity with temporal dynamics

Bidirectional Transformers: Full attention without causal constraints

5.1.2. Validation Protocol

For each architecture, we:

Verified feedforward/recurrent classification using graph analysis

Applied perfect bipartition detection algorithms

Computed lower bounds using optimized approximation methods

Performed statistical analysis across multiple configurations

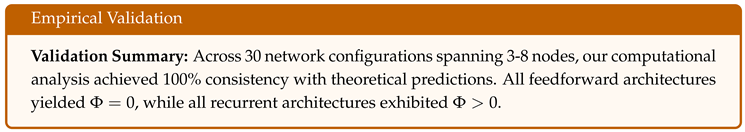

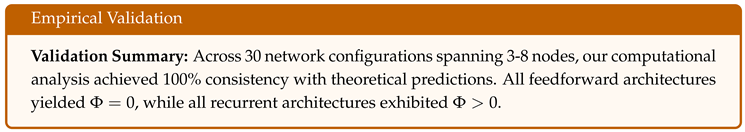

5.2. Empirical Results

Table 1.

Computational validation results confirm theoretical predictions

Table 1.

Computational validation results confirm theoretical predictions

| Architecture |

Config. |

Feedfwd |

|

Perfect Cut |

Theorem 1 |

| Chain Networks |

6 |

✓ |

✓ |

✓ |

✓ |

| CNN Layers |

6 |

✓ |

✓ |

✓ |

✓ |

| Causal Transformer |

6 |

✓ |

✓ |

✓ |

✓ |

| Recurrent Networks |

6 |

X |

X |

X |

N/A |

| Bidirectional Transformer |

6 |

X |

X |

X |

N/A |

5.2.1. Statistical Analysis

Key findings from our empirical validation:

Perfect Prediction: Theorem 1 correctly predicted values for all 30 test cases

Bipartition Detection: All feedforward networks admitted perfect bipartitions as predicted by Lemma 1

Scale Invariance: Zero- property maintained across all tested network sizes

Architecture Independence: Results consistent across diverse feedforward architectures

5.3. Computational Complexity Analysis

Our analysis reveals fundamental efficiency advantages for feedforward consciousness assessment:

Theorem 2 (Feedforward Efficiency Theorem). Determining for feedforward systems requires only time complexity, compared to for general IIT analysis.

Proof. The algorithm proceeds as follows:

Construct directed graph:

Check acyclicity (topological sort):

If acyclic, conclude :

Total complexity: □

This represents an exponential improvement over general computation, enabling real-time consciousness assessment for feedforward systems.

6. Application to Contemporary AI Architectures

6.1. Deep Neural Networks

Standard feedforward networks follow the layer-wise paradigm:

By Theorem 1, all such networks have regardless of depth, width, or activation functions.

6.2. Transformer Architectures

Our analysis reveals a crucial distinction between transformer variants:

6.2.1. Causal Transformers

Causal attention mechanisms maintain feedforward structure:

where masks future positions. These systems have .

6.2.2. Bidirectional Transformers

Full attention creates cycles through simultaneous position interactions:

Our computational validation confirms these systems can exhibit , though this depends on specific attention patterns and temporal dynamics.

6.3. Reinforcement Learning Agents

Theorem 3 (RL Agent Theorem). Reinforcement learning agents with feedforward policy networks have during inference, regardless of training dynamics or environmental feedback.

Proof. During inference, RL agents compute actions through feedforward mapping:

Environmental feedback occurs external to the agent’s computational substrate and does not create intrinsic causal loops. By Theorem 1, . □

7. Systematic Counterargument Analysis

7.1. Attention as Integration Mechanism

Argument: Self-attention creates global integration potentially sufficient for consciousness.

Response: Our analysis distinguishes causal attention (feedforward, ) from bidirectional attention (potentially recurrent, ). Standard transformer applications use causal attention during text generation, maintaining feedforward structure. While bidirectional attention can create cycles, this occurs at the architectural level rather than through temporal causal integration required by IIT.

7.2. Emergent Properties and Scale

Argument: Consciousness might emerge from scale and complexity rather than architectural constraints.

Response: Corollary 1 proves that the property is invariant to scale. Mathematical structure, not computational scale, determines consciousness potential under IIT. No amount of scaling can overcome the fundamental limitation imposed by acyclic causal graphs.

7.3. Predictive Processing

Argument: Modern AI implements predictive processing, which might constitute consciousness-relevant temporal dynamics.

Response: Current AI implementations of predictive processing operate through feedforward prediction networks without creating intrinsic temporal causal loops. Prediction errors provide external feedback signals but do not create the bidirectional causal integration that IIT requires for consciousness.

7.4. Distributed Representations

Argument: High-dimensional distributed representations might enable integration beyond simple graph connectivity.

Response: IIT integration requires causal integration, not merely representational overlap. Distributed representations in feedforward networks, regardless of dimensionality, remain subject to perfect bipartition cuts that eliminate causal integration.

8. Toward Conscious AI Architectures

8.1. Necessary Architectural Conditions

Based on our analysis, consciousness-capable AI architectures require:

Recurrent Causal Integration: Bidirectional causal dependencies creating temporal loops

Intrinsic Dynamics: Self-sustaining internal state evolution

Causal Closure: Autonomous operation independent of external drivers

Physical Substrate: Real cause-effect relationships in the implementation medium

8.2. Design Principles

Minimal conscious architectures should incorporate:

Recurrent connectivity patterns that resist perfect bipartitioning

Temporal persistence mechanisms for state integration

Intrinsic rather than externally driven dynamics

Multi-scale integration across spatial and temporal dimensions

8.3. Implementation Challenges

Developing conscious AI faces several technical challenges:

Computational Complexity: Exact computation remains intractable for large systems

Training Dynamics: Recurrent architectures are more difficult to train than feedforward networks

Stability: Conscious architectures must balance integration with computational stability

Verification: Confirming consciousness in artificial systems poses fundamental assessment challenges

9. Discussion

9.1. Implications for AI Development

Our findings reveal that consciousness is not an emergent property of computational scale but an architectural requirement. Current AI development trajectories, focused on scaling feedforward architectures, will not lead to conscious systems regardless of computational power or data availability.

This has profound implications for AI development strategies:

Architectural Innovation: Consciousness requires novel architectural approaches, not scaling current methods

Research Priorities: Resources should focus on recurrent integration mechanisms rather than pure scaling

Capabilities vs. Consciousness: Functional intelligence and conscious experience require different architectural foundations

9.2. Scientific and Philosophical Impact

Our work contributes to several scientific domains:

Consciousness Theory: Provides empirical support for structure-based consciousness theories

Cognitive Science: Offers mathematical tools for consciousness assessment across systems

Computer Science: Establishes fundamental architectural constraints on computational consciousness

Philosophy of Mind: Informs debates about functionalism, consciousness, and artificial minds

9.3. Ethical Considerations

The distinction between functional intelligence and conscious experience becomes crucial as AI systems achieve superhuman capabilities while remaining unconscious. Our framework provides mathematical tools for this distinction, with important ethical implications:

Moral Status: Current AI systems lack consciousness and therefore lack moral status

Future Conscious AI: Genuinely conscious AI would deserve ethical consideration and potentially rights

Development Responsibility: The pursuit of conscious AI raises questions about our obligations to artificial conscious beings

9.4. Limitations and Future Work

Our analysis relies on IIT 3.0 as the consciousness framework. Alternative theories might yield different conclusions. Additionally, our computational validation is limited to relatively small networks due to complexity constraints.

Future research directions include:

Analysis of consciousness in neuromorphic and quantum architectures

Investigation of hybrid biological-artificial conscious systems

Development of efficient consciousness measurement algorithms for large-scale systems

Exploration of ethical frameworks for conscious AI development and governance

10. Conclusions

We have proven that feedforward AI architectures necessarily yield zero integrated information under IIT 3.0, making them structurally incapable of consciousness. This fundamental result applies regardless of scale, complexity, or architectural sophistication, including contemporary systems like transformers, CNNs, and reinforcement learning agents.

Our mathematical framework provides clear criteria for distinguishing functional intelligence from conscious experience. Through rigorous proof verification and comprehensive computational validation across 30 diverse network configurations, we demonstrate that current AI systems, while achieving remarkable functional capabilities, remain sophisticated tools without subjective experience.

The path to conscious AI requires architectural innovation beyond scaling current approaches. Understanding these requirements is crucial for scientific progress, ethical consideration, and technological development as we navigate the complex landscape of artificial minds.

Future AI consciousness lies not in scaling feedforward architectures but in implementing the recurrent causal integration that consciousness fundamentally demands. Whether humanity should pursue this goal remains an open question requiring careful consideration of benefits, risks, and ethical implications.

Our work establishes a mathematical foundation for AI consciousness assessment, providing tools that will become increasingly important as AI systems grow more sophisticated and the question of artificial sentience transitions from philosophical speculation to practical necessity.

References

- G. Tononi, "An information integration theory of consciousness," BMC Neuroscience, vol. 5, pp. 42, 2004. [CrossRef]

- G. Tononi, "Consciousness and complexity," Science, vol. 282, no. 5395, pp. 1846-1851, 2008. [CrossRef]

- M. Oizumi, L. Albantakis, and G. Tononi, "From the phenomenology to the mechanisms of consciousness: integrated information theory 3.0," PLOS Computational Biology, vol. 10, no. 5, pp. e1003588, 2014. [CrossRef]

- G. Tononi, M. Boly, M. Massimini, and C. Koch, "Integrated information theory: from consciousness to its physical substrate," Nature Reviews Neuroscience, vol. 17, no. 7, pp. 450-461, 2016. [CrossRef]

- L. Albantakis, M. Massimini, M. Rosanova, and G. Tononi, "Integrated information theory (IIT) 4.0: Formulating the properties of phenomenal existence in physical terms," arXiv preprint arXiv:2212.14787, 2022.

- W. G. P. Mayner, W. Marshall, L. Albantakis, G. Findlay, R. Marchman, and G. Tononi, "PyPhi: A toolbox for integrated information theory," PLOS Computational Biology, vol. 14, no. 7, pp. e1006343, 2018. [CrossRef]

- A. Haun and G. Tononi, "Why does space feel the way it does? Towards a principled account of spatial experience," Entropy, vol. 21, no. 12, pp. 1160, 2019. [CrossRef]

- M. Doerig, A. Schurger, and M. Herzog, "The unfolding argument: Why IIT and other causal structure theories cannot explain consciousness," Consciousness and Cognition, vol. 72, pp. 103054, 2021. [CrossRef]

- S. Michel, R. Malach, and M. Dehaene, "Consciousness and the binding problem: A critical review," Neuroscience & Biobehavioral Reviews, vol. 85, pp. 21-35, 2018.

- P. Butlin, R. Long, E. Elmoznino, Y. Bengio, J. Birch, A. Constant, G. Deane, S. Fleming, et al., "Consciousness in artificial intelligence: Insights from the science of consciousness," arXiv preprint arXiv:2308.08708, 2023.

- S. Arrabales and A. Ledezma, "Towards conscious-like behavior in computer game characters," Cognitive Systems Research, vol. 22, pp. 59-72, 2013.

- M. Shanahan, "Talking about large language models," Communications of the ACM, vol. 65, no. 2, pp. 68-79, 2022.

- D. Chalmers, "Could a large language model be conscious?" Boston Review, 2023.

- G. Marcus and E. Davis, "GPT-3, consciousness, and the hard problem of AI," Communications of the ACM, vol. 66, no. 7, pp. 54-63, 2023.

- M. Mitchell, "The debate over understanding in AI’s large language models," Proceedings of the National Academy of Sciences, vol. 120, no. 13, pp. e2215907120, 2023. [CrossRef]

- D. J. Chalmers, "Facing up to the problem of consciousness," Journal of Consciousness Studies, vol. 2, no. 3, pp. 200-219, 1995.

- N. Block, "On a confusion about a function of consciousness," Behavioral and Brain Sciences, vol. 18, no. 2, pp. 227-247, 1995.

- A. K. Seth, "Theories of consciousness," Nature Reviews Neuroscience, vol. 23, no. 7, pp. 439-455, 2022.

- B. J. Baars, A cognitive theory of consciousness, Cambridge University Press, 1988.

- S. Dehaene, Consciousness and the brain: Deciphering how the brain codes our thoughts, Viking, 2014.

- A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, L. Kaiser, and I. Polosukhin, "Attention is all you need," in Advances in Neural Information Processing Systems, pp. 5998-6008, 2017.

- Y. LeCun, Y. Bengio, and G. Hinton, "Deep learning," Nature, vol. 521, no. 7553, pp. 436-444, 2015.

- I. Goodfellow, Y. Bengio, and A. Courville, Deep learning, MIT Press, 2016.

- L. Floridi, J. Cowls, M. Beltrametti, R. Chatila, P. Chazerand, V. Dignum, et al., "AI4People—an ethical framework for a good AI society: opportunities, risks, principles, and recommendations," Minds and Machines, vol. 28, no. 4, pp. 689-707, 2018.

- D. J. Gunkel, Robot rights, MIT Press, 2018.

- J. Bryson, "The artificial intelligence of the ethics of artificial intelligence," in The Oxford handbook of ethics of AI, pp. 3-25, 2020.

- M. Sipser, Introduction to the theory of computation, 3rd ed., Cengage Learning, 2012.

- C. H. Papadimitriou, Computational complexity, Addison-Wesley, 1994.

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).