Submitted: 02 October 2025

Posted: 16 October 2025


Abstract

Keywords:

1. Introduction

1.1. Background

1.2. Gap

1.3. Scope

2. Design and Method

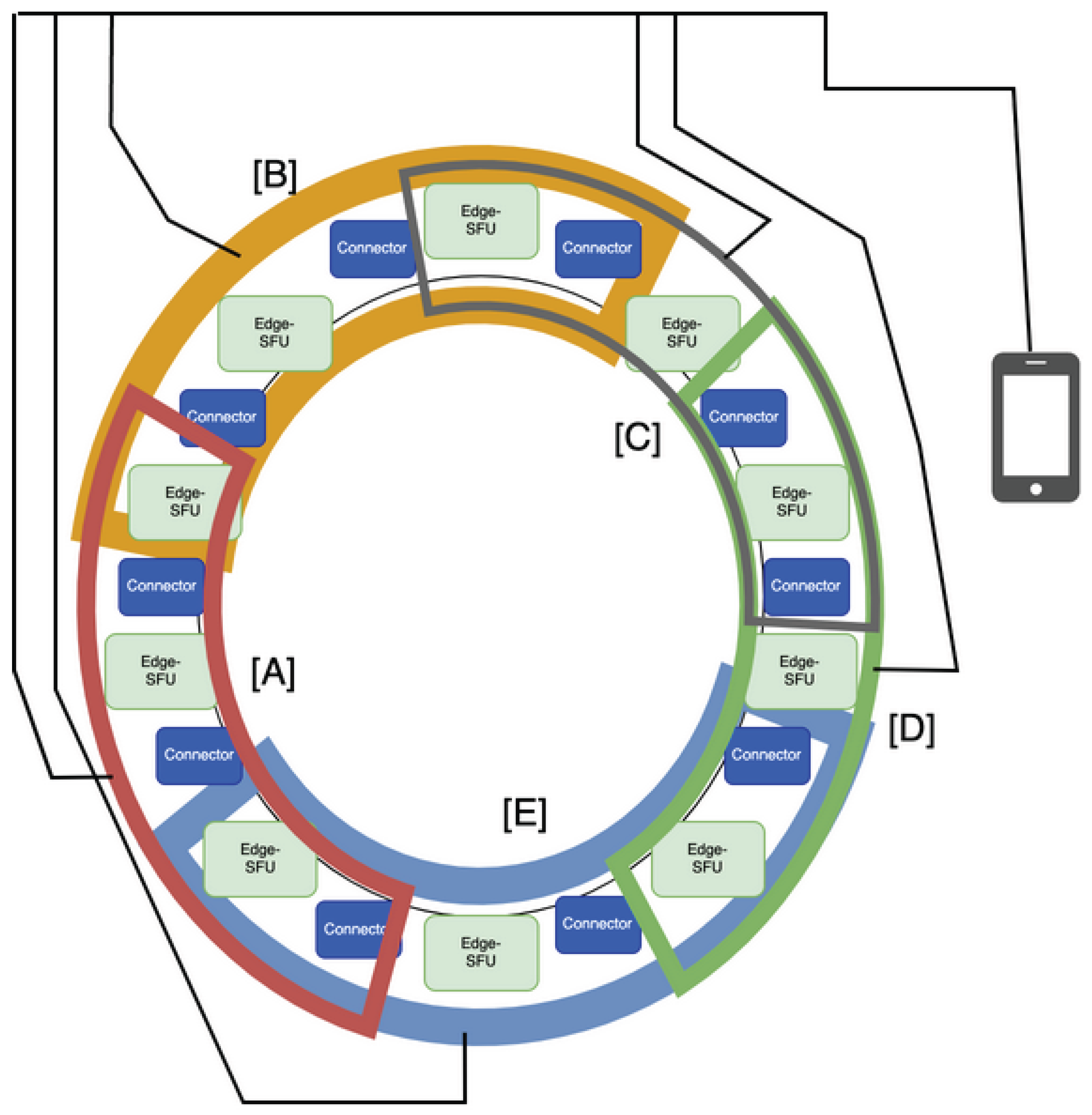

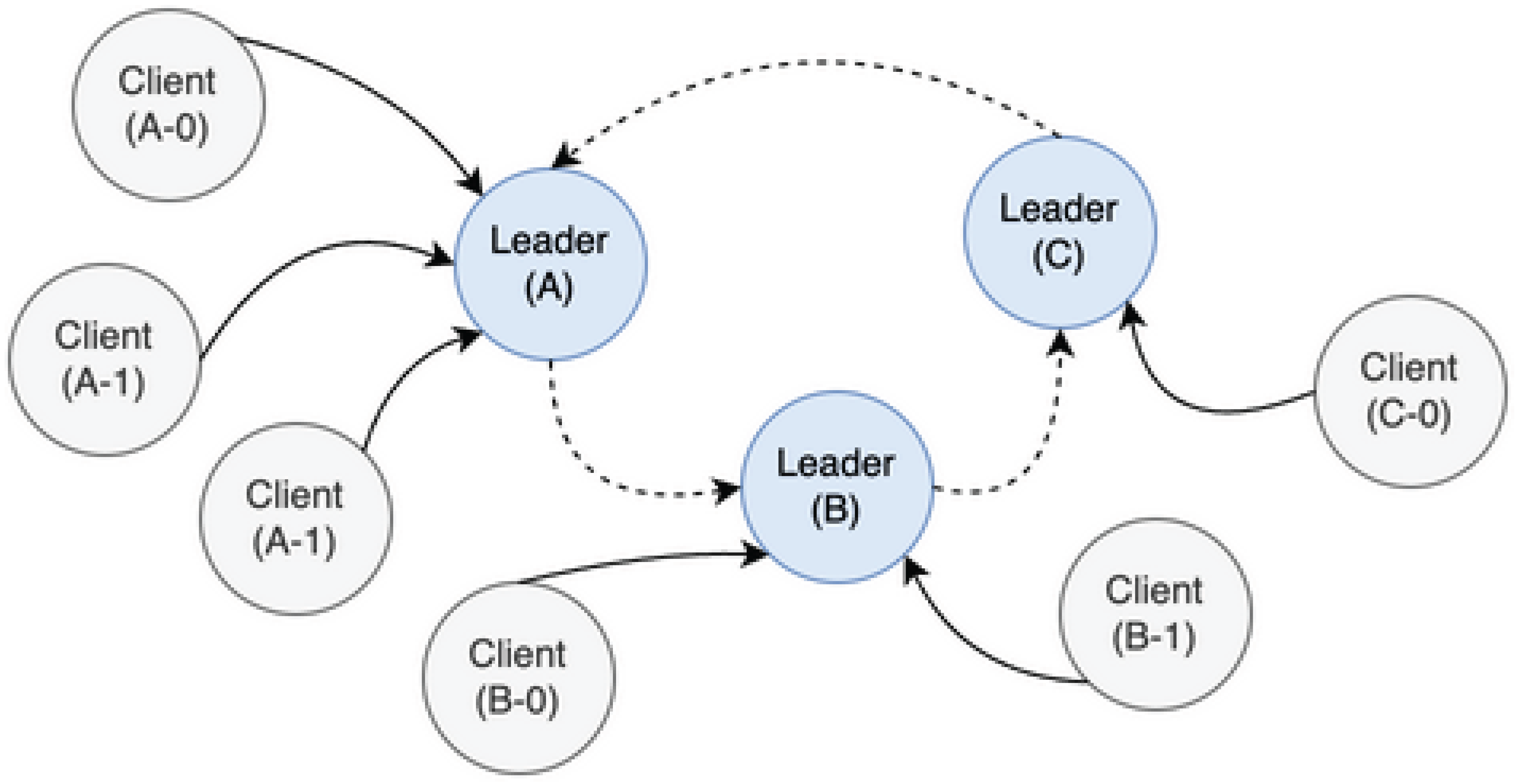

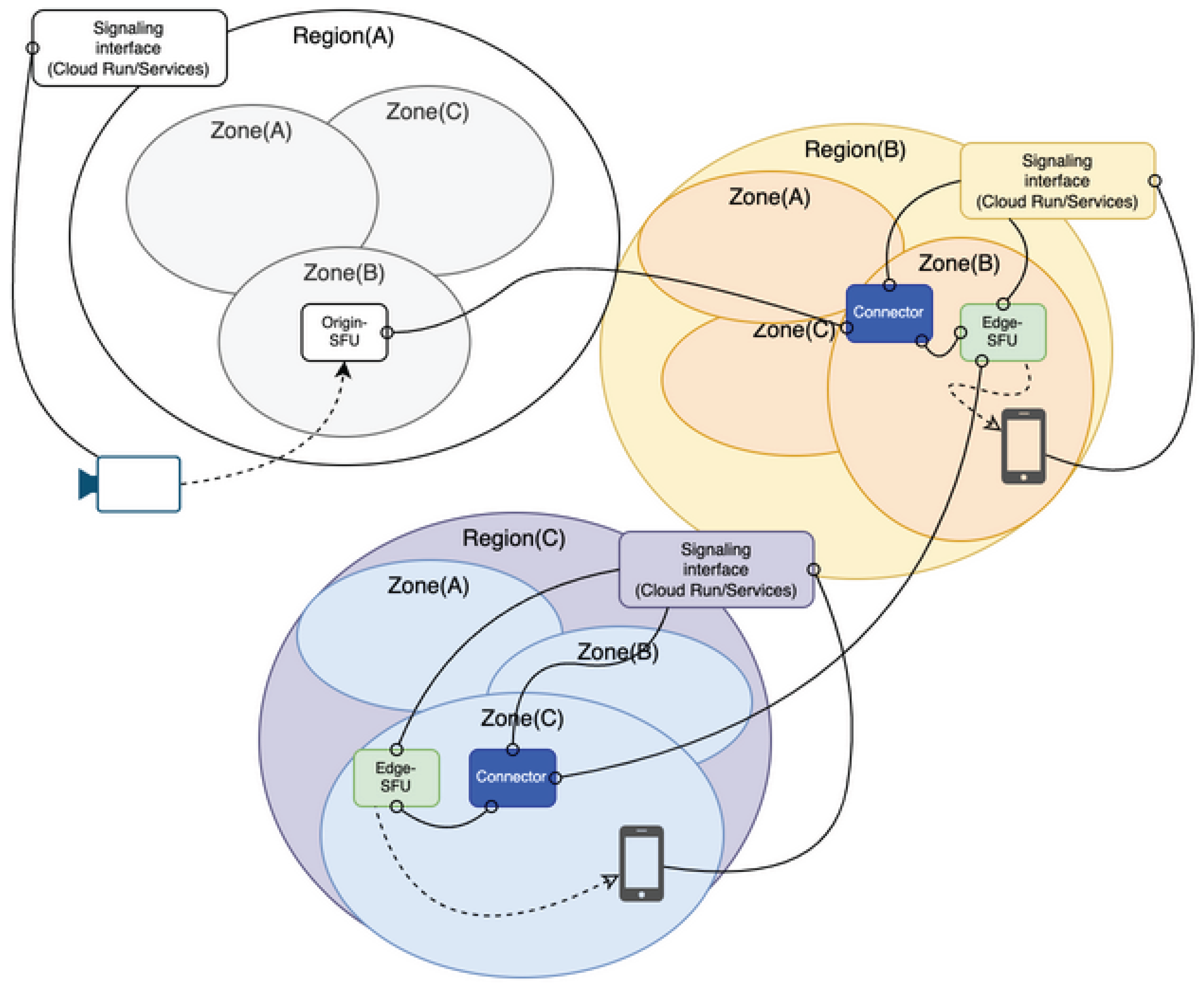

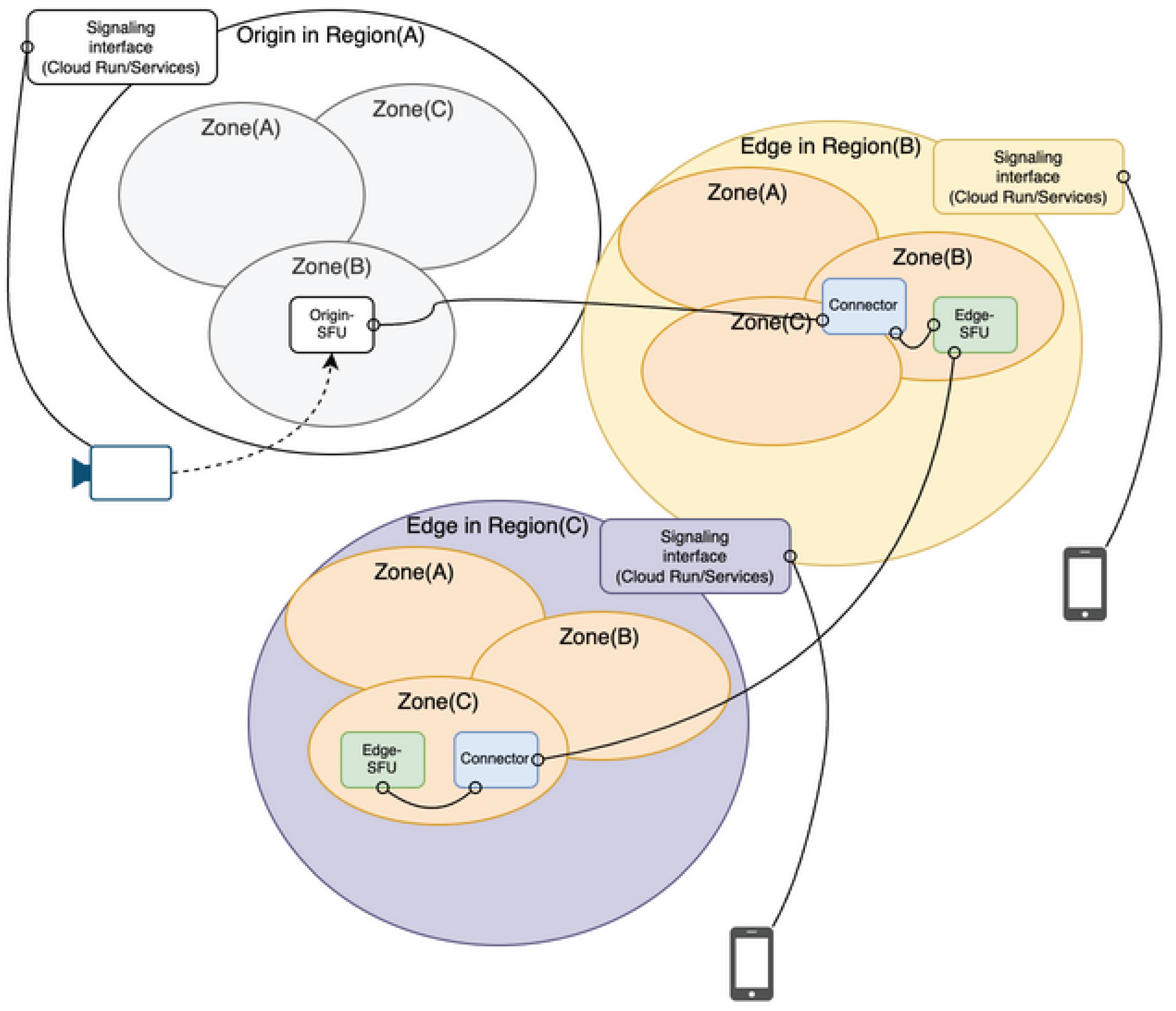

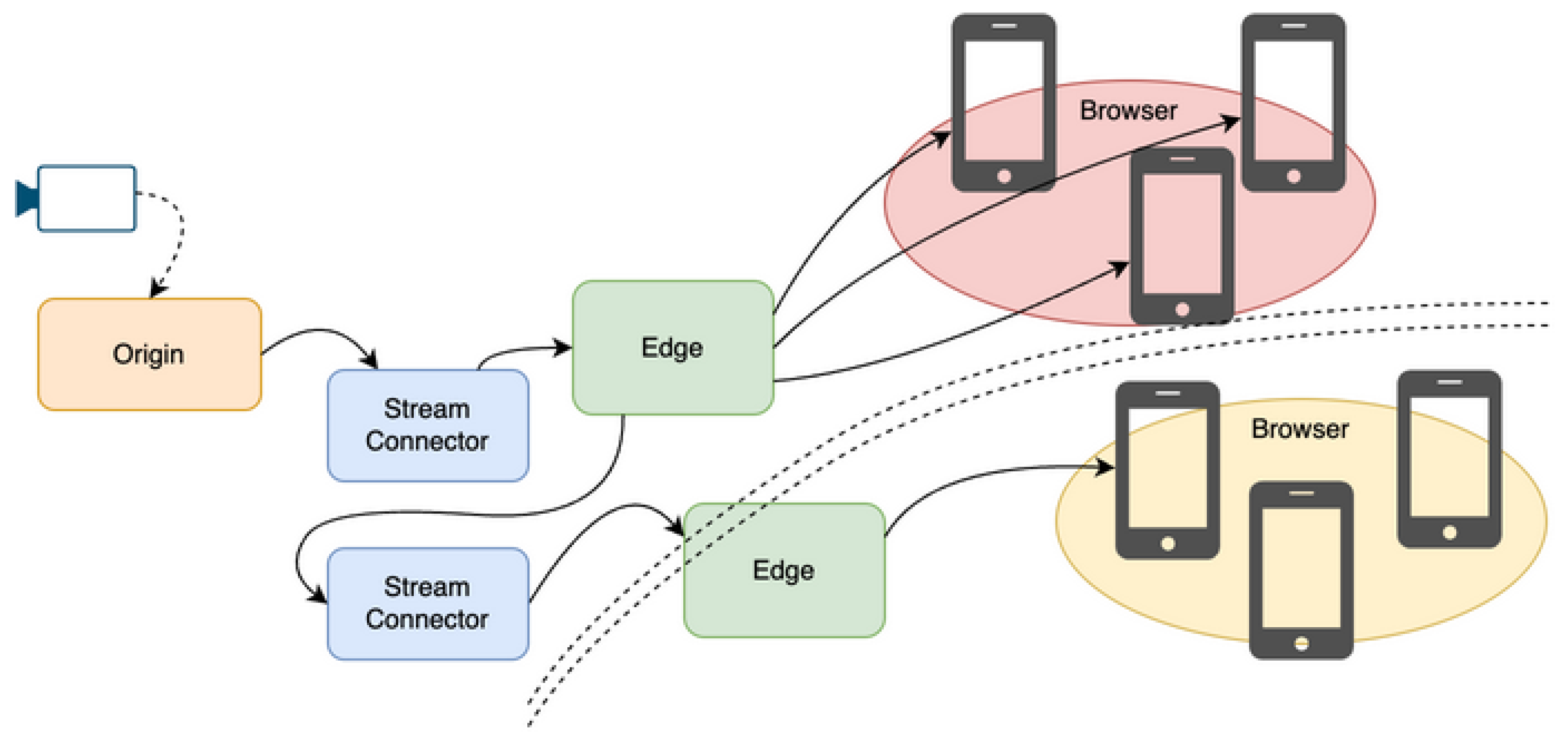

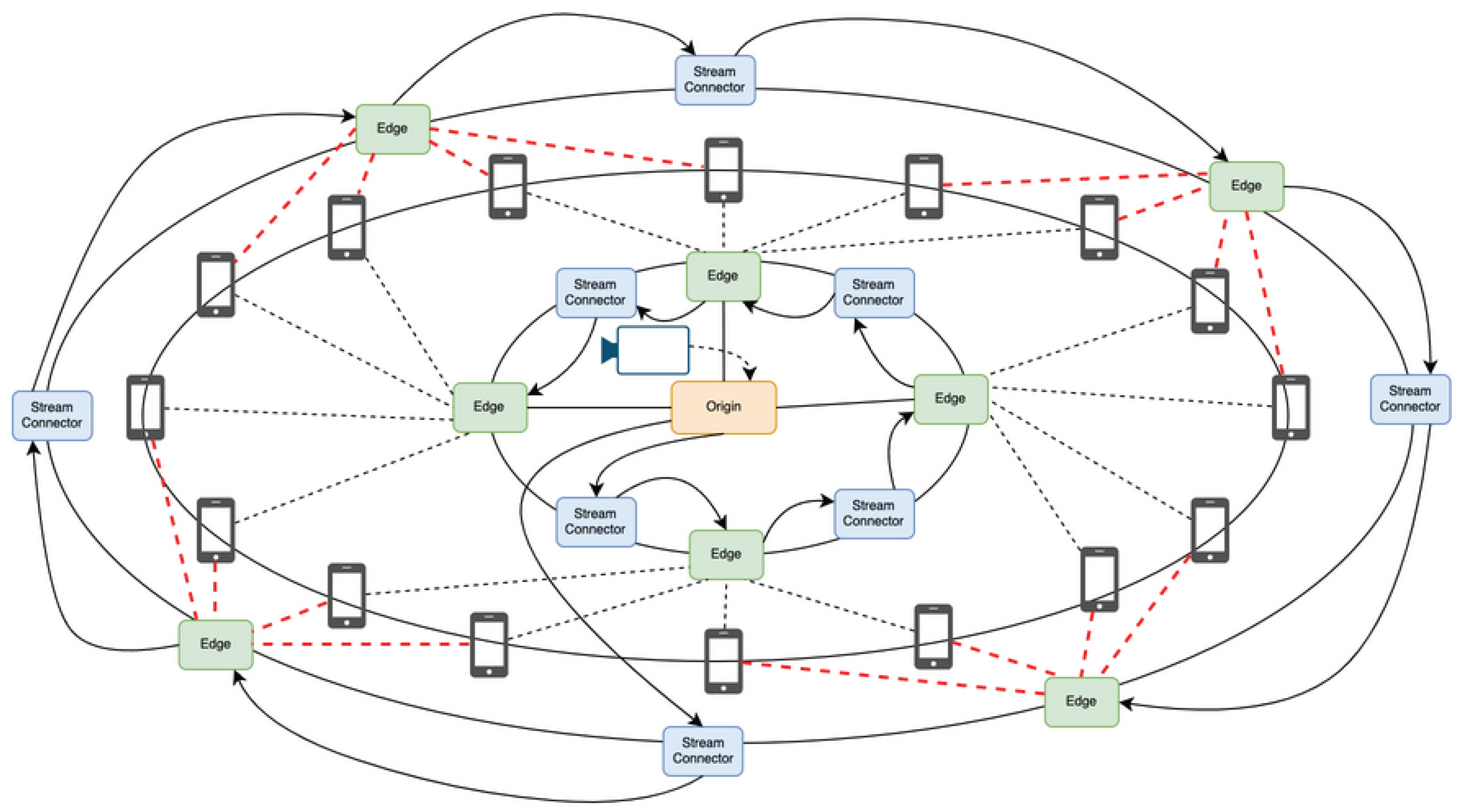

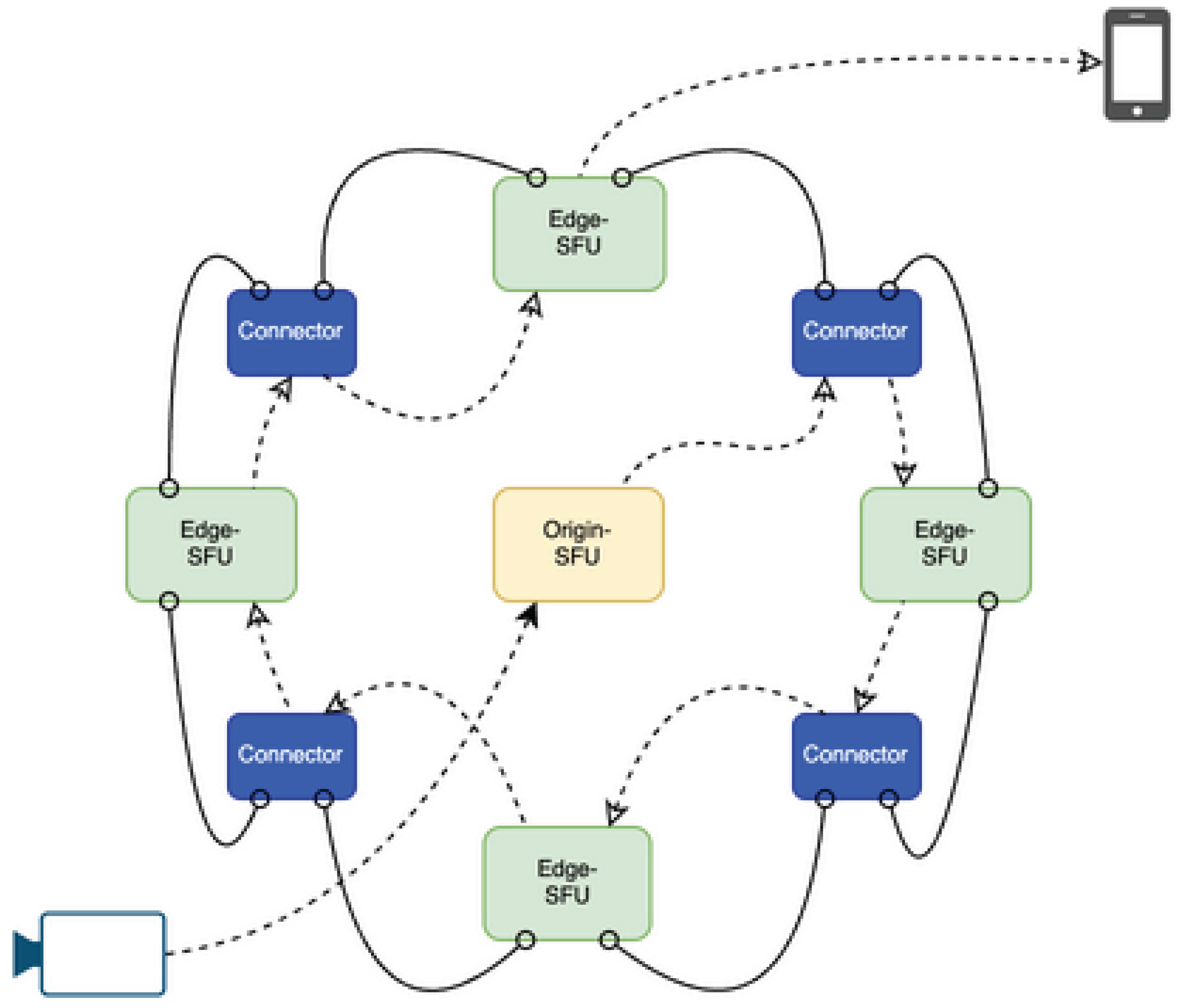

2.1. Self-Distributed Topology

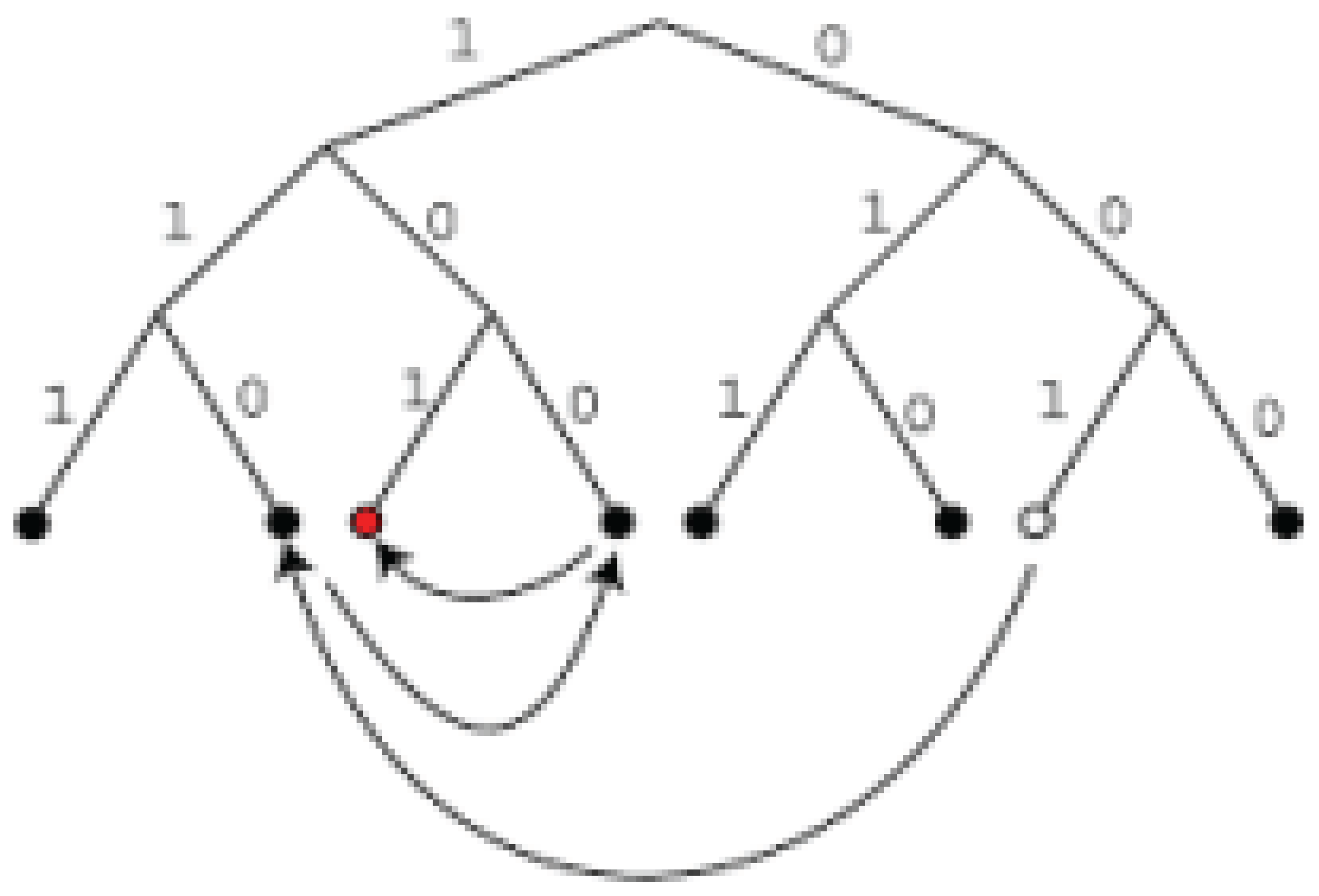

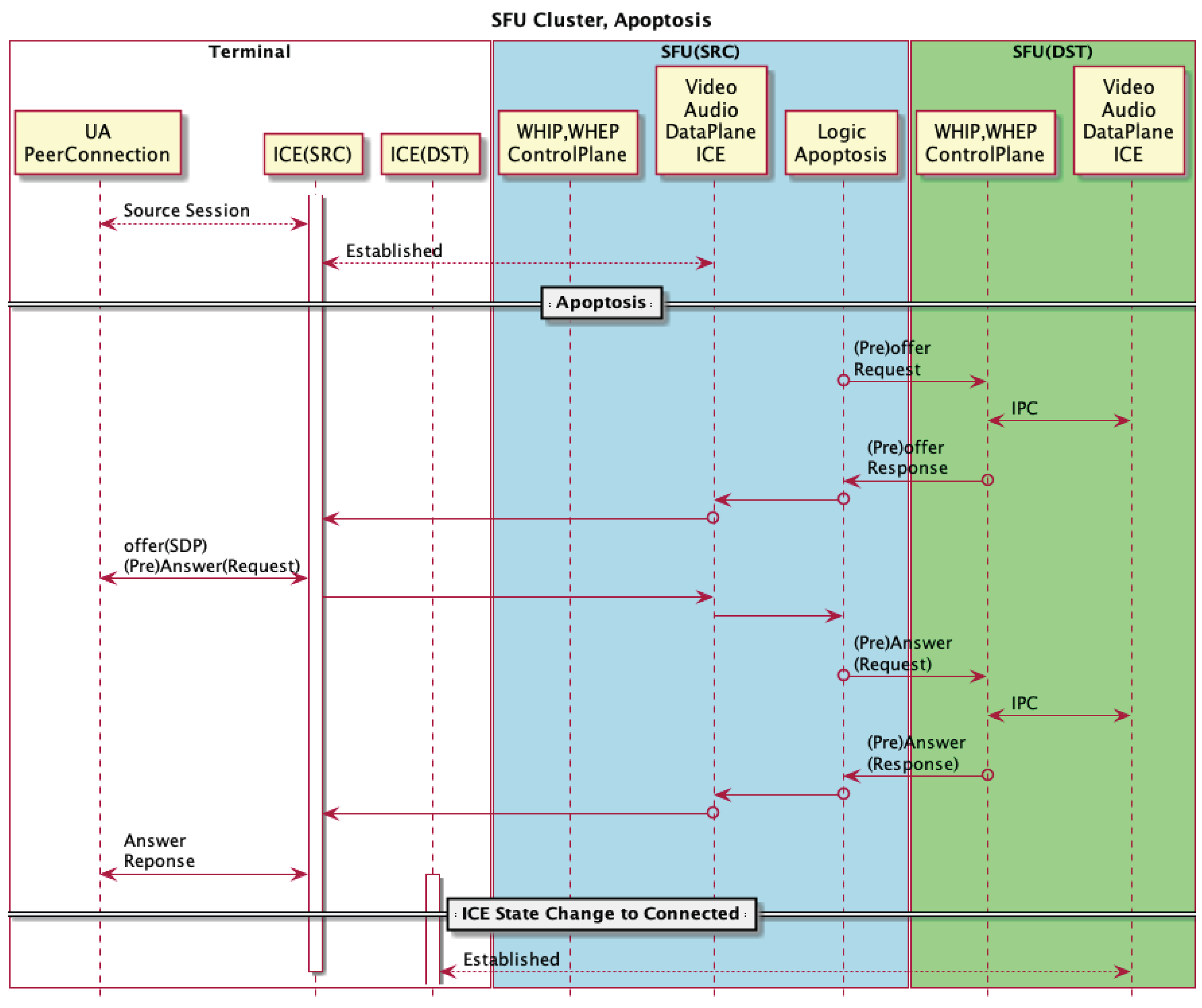

2.2. FSM-Based Protocols

2.3. HALT Metric

3. Implementation

3.1. Frameworks and Environment

3.2. Replication and Statistics Reporting

4. Evaluation / Observations

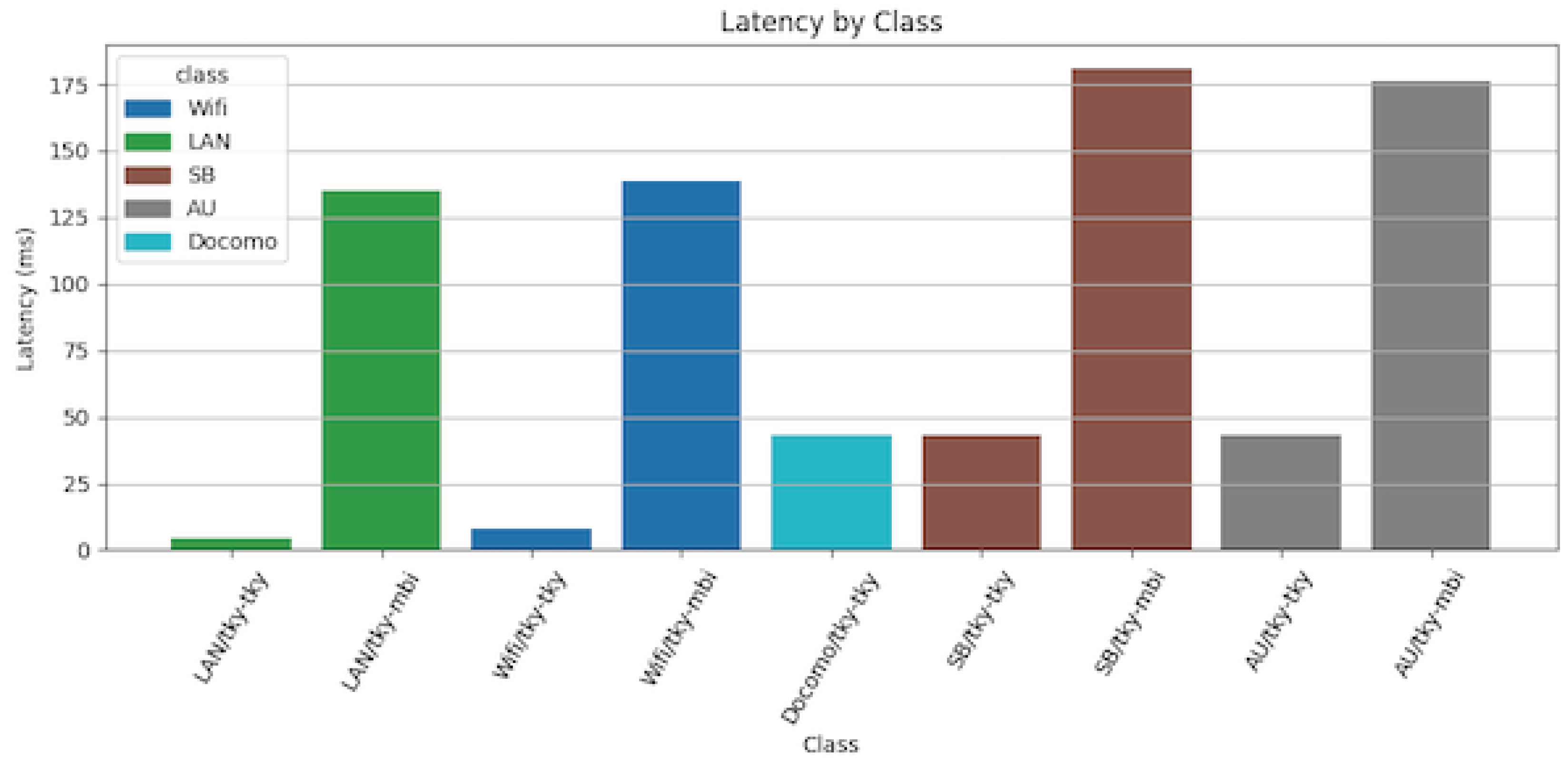

4.1. RTT Measurements

4.2. Effects of Edge Placement (Origin, Proxy)

4.3. Scope of Results

- Round-trip time (RTT) measurements were conducted in a limited set of deployment scenarios and summarized in Table 1.

- Effects of edge placement were observed in small-scale experiments, as illustrated in Figure 5.

- The focus is on highlighting qualitative behaviors such as reduced RTT variance and improved stability when edge nodes are closer to clients.
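As a worked check on the qualitative claim about edge placement, the Tokyo-Tokyo and Tokyo-Mumbai pairs in the RTT table reported in this paper can be differenced per access network. The snippet uses only values from that table; `inter_region_penalty` is an illustrative helper, not part of the authors' tooling. The Docomo row has no Tokyo-Mumbai measurement and is therefore omitted.

```python
# RTT values (ms) copied from the RTT measurement table in this paper.
RTT_MS = {
    "Enterprise LAN": {"Tokyo-Tokyo": 4.3, "Tokyo-Mumbai": 135.2},
    "Enterprise Wi-Fi": {"Tokyo-Tokyo": 7.8, "Tokyo-Mumbai": 138.5},
    "Mobile Softbank": {"Tokyo-Tokyo": 43.0, "Tokyo-Mumbai": 180.7},
    "Mobile AU": {"Tokyo-Tokyo": 43.0, "Tokyo-Mumbai": 176.2},
}


def inter_region_penalty(rtts: dict[str, dict[str, float]]) -> dict[str, float]:
    """Extra RTT (ms) added by serving a Tokyo client from Mumbai instead of Tokyo."""
    return {
        network: round(pair["Tokyo-Mumbai"] - pair["Tokyo-Tokyo"], 1)
        for network, pair in rtts.items()
    }


if __name__ == "__main__":
    for network, penalty in inter_region_penalty(RTT_MS).items():
        print(f"{network}: +{penalty} ms")
```

The inter-region penalty stays between roughly 131 and 138 ms for all four access types, while the access network alone accounts for about 4 to 43 ms of the Tokyo-Tokyo RTT. This is consistent with placing Edge-SFUs in the client's region.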

5. Discussion

5.1. Practical Implications

- Elimination of central bottlenecks by relying on self-distributed coordination.

- Lightweight statistics exchange to keep overhead minimal.

- Resilient operation under unpredictable and dynamic traffic surges.
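One way to keep statistics exchange lightweight is a fixed-size binary report. The sketch below is an assumption for illustration only: the fields (`node_id`, HALT in per-mille, RTT in microseconds) and the 10-byte layout are hypothetical and are not necessarily the format used in Listing 3.

```python
import struct
from dataclasses import dataclass

# Hypothetical wire layout, network byte order ("!" means no padding):
#   I  node_id        (uint32)
#   H  halt_permille  (uint16, HALT scaled from [0.0, 1.0] to 0..1000)
#   I  rtt_us         (uint32, RTT to the reporting neighbor in microseconds)
REPORT_FORMAT = "!IHI"
REPORT_SIZE = struct.calcsize(REPORT_FORMAT)  # 10 bytes


@dataclass(frozen=True)
class StatsReport:
    node_id: int
    halt: float    # residual capacity, 0.0 (saturated) to 1.0 (idle)
    rtt_ms: float

    def encode(self) -> bytes:
        # Clamp to the ranges the fixed-width fields can represent.
        halt_permille = max(0, min(1000, round(self.halt * 1000)))
        rtt_us = max(0, min(0xFFFFFFFF, round(self.rtt_ms * 1000)))
        return struct.pack(REPORT_FORMAT, self.node_id, halt_permille, rtt_us)

    @classmethod
    def decode(cls, payload: bytes) -> "StatsReport":
        node_id, halt_permille, rtt_us = struct.unpack(REPORT_FORMAT, payload)
        return cls(node_id, halt_permille / 1000, rtt_us / 1000)
```

A report of this shape costs 10 bytes per neighbor per interval; precision is limited to 0.001 for HALT and 1 µs for RTT, which is the trade-off a fixed layout makes for compactness.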

5.2. Limitations

- A large-scale Quality of Experience (QoE) evaluation has not yet been conducted.

- Additional research is required to confirm stability at production scale.

5.3. Distributed vs. Autonomously Distributed

- Distributed systems may still rely on controllers, coordinators, or managers.

- This creates bottlenecks:

  - Oversized statistics collection systems

  - High reporting costs
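The bottleneck argument can be made concrete by counting the reports that the busiest single component must ingest per reporting interval. The sketch assumes one report per node per interval, a dedicated central collector, and a fixed neighbor count; both parameters are illustrative, not measurements from this work.

```python
def busiest_node_load(num_nodes: int, neighbors_per_node: int) -> dict[str, int]:
    """Reports received per interval by the most loaded component.

    centralized:   a dedicated collector receives one report from each of the
                   num_nodes Edge-SFUs.
    neighbor_only: each node exchanges statistics only with its neighbors, so no
                   node receives more than neighbors_per_node reports (and never
                   more than the num_nodes - 1 other nodes that exist).
    """
    if num_nodes < 1 or neighbors_per_node < 0:
        raise ValueError("num_nodes must be >= 1 and neighbors_per_node >= 0")
    return {
        "centralized": num_nodes,
        "neighbor_only": min(neighbors_per_node, num_nodes - 1),
    }
```

For 1,000 Edge-SFUs with 8 neighbors each, the collector ingests 1,000 reports per interval, whereas no node in the neighbor-only scheme ingests more than 8. The total message count is not lower (n × k rather than n); the benefit is that load no longer concentrates on one component.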

6. Conclusions

6.1. Self-* Properties

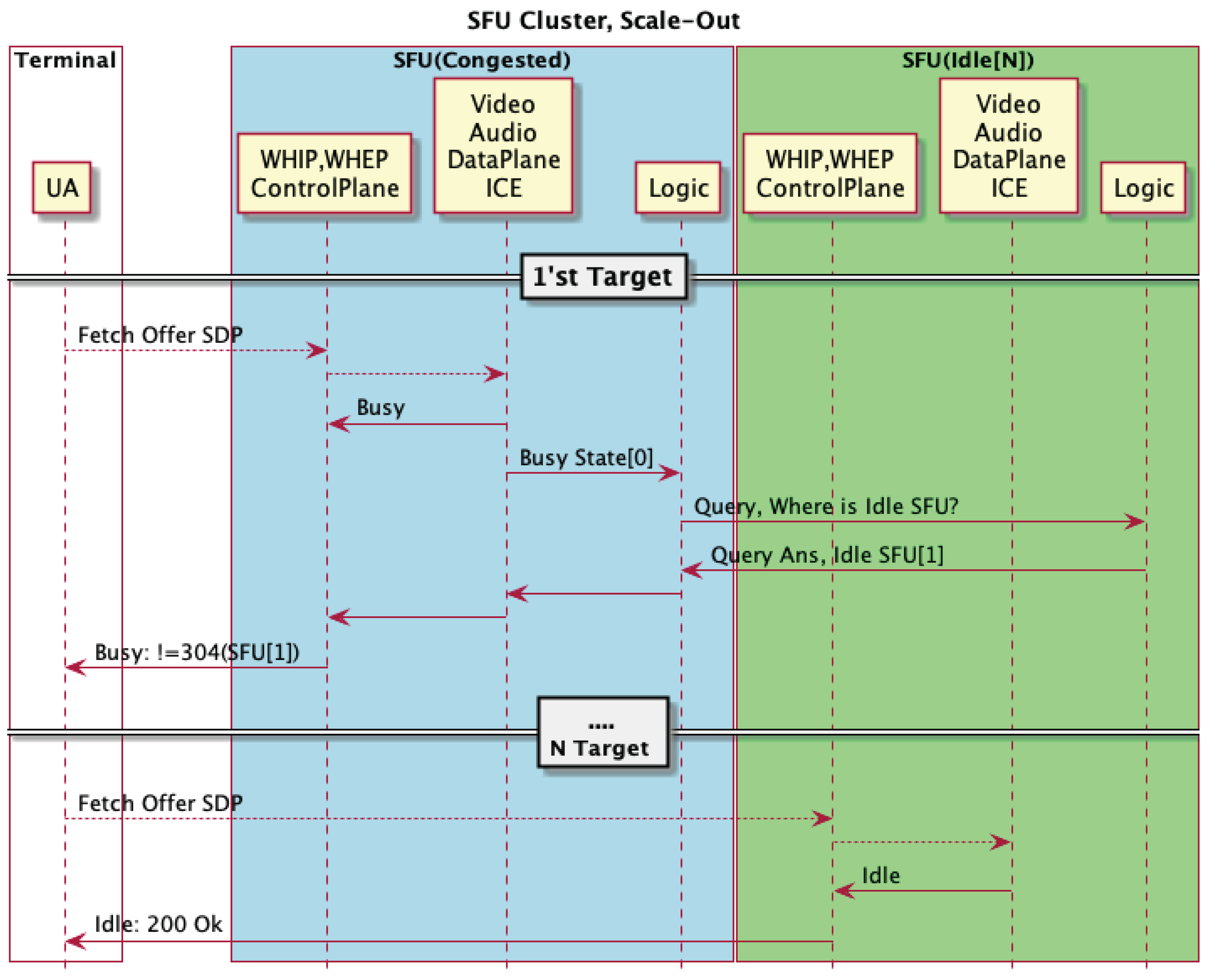

- Self-configuration: Edge-SFUs autonomously replicate and join clusters.

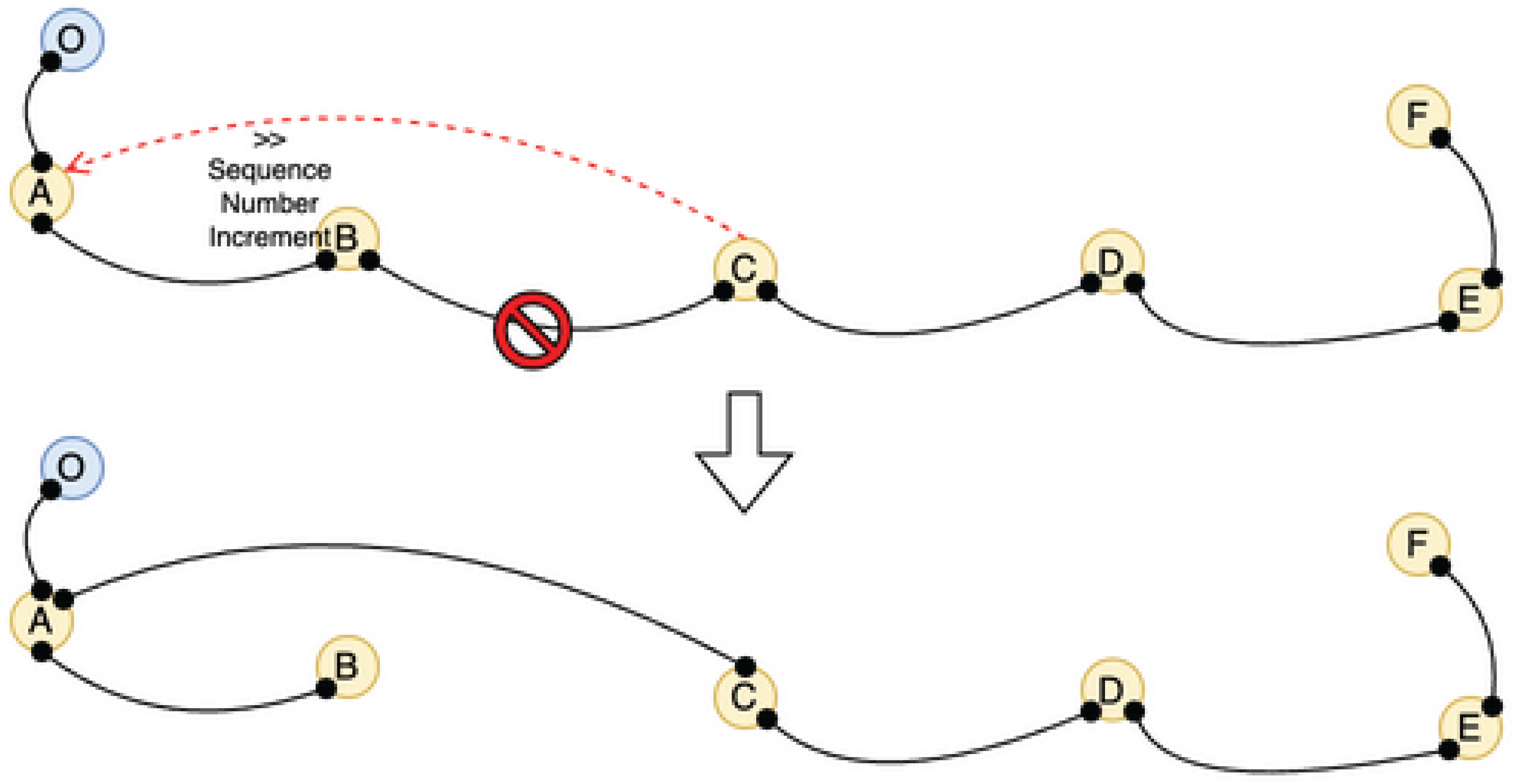

- Self-organization: routing and topology adapt dynamically to load and failures.

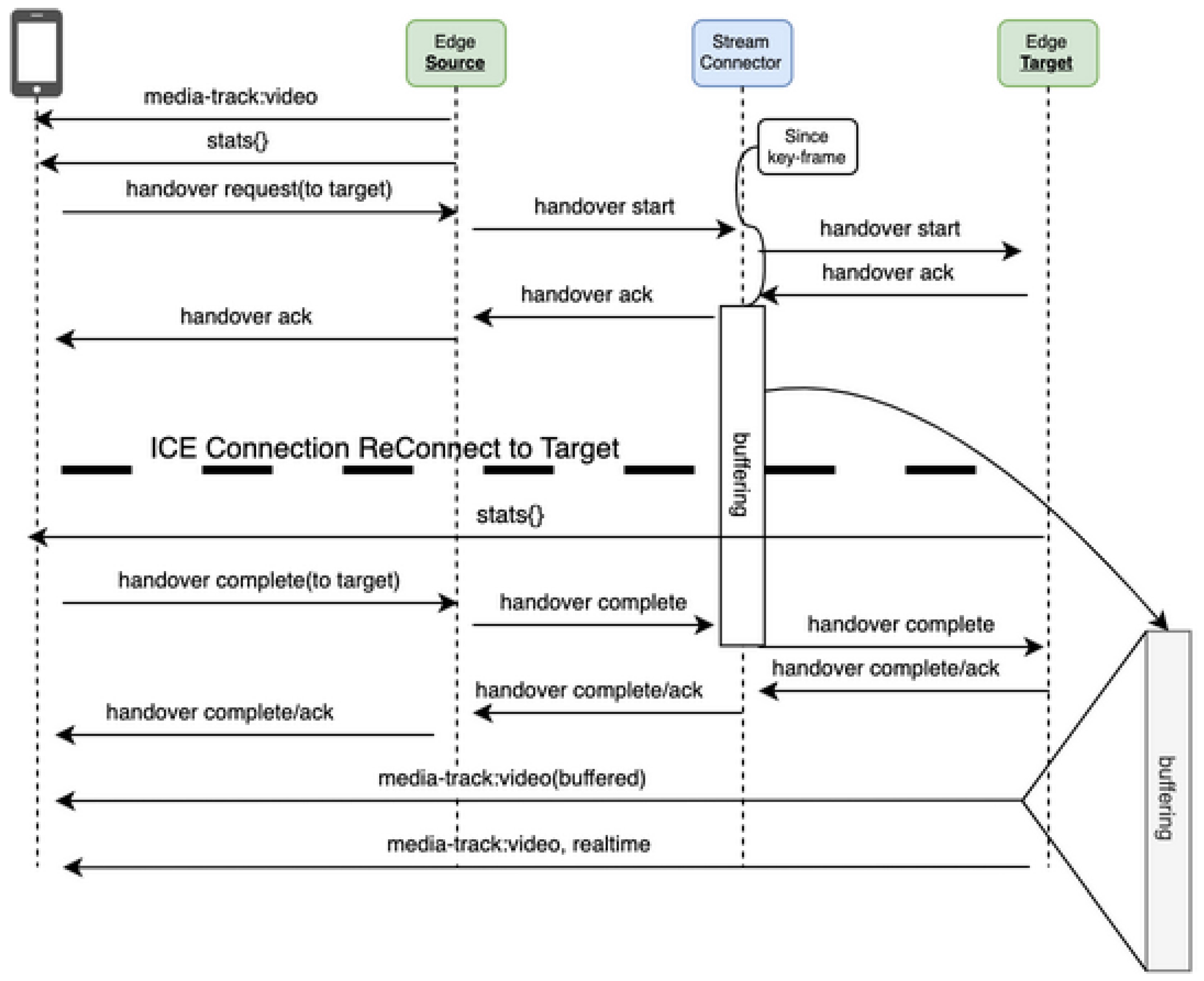

- Self-healing: nodes recover from crashes with seamless failover (Figure 15).

- Self-management/optimization: HALT-driven adaptive policies (e.g., DSCP prioritization) sustain efficiency.
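DSCP prioritization can be applied per socket. The sketch below marks UDP media sockets via `IP_TOS`, where the DSCP value occupies the upper six bits of the TOS byte. The audio EF (46) and video AF41 (34) code points follow the high-priority WebRTC recommendations in RFC 8837; the HALT threshold and the downgrade of video to AF42 (36) on a saturated node are hypothetical policy choices, since the mapping from HALT to DSCP is not specified here. `socket.IP_TOS` is available on Linux and macOS; other platforms may ignore or reject it.

```python
import socket

DSCP_EF = 46    # Expedited Forwarding (RFC 8837: audio, medium/high priority)
DSCP_AF41 = 34  # Assured Forwarding 41 (RFC 8837: interactive video, high priority)
DSCP_AF42 = 36  # Assured Forwarding 42 (same class, higher drop precedence)

HALT_LOW_WATERMARK = 0.2  # hypothetical: below this, the node is treated as saturated


def choose_dscp(kind: str, halt: float) -> int:
    """Hypothetical HALT-aware policy: audio always EF; video drops to AF42 when saturated."""
    if kind == "audio":
        return DSCP_EF
    if kind == "video":
        return DSCP_AF42 if halt < HALT_LOW_WATERMARK else DSCP_AF41
    raise ValueError(f"unknown media kind: {kind!r}")


def tos_byte(dscp: int) -> int:
    """DSCP sits in the upper 6 bits of the IPv4 TOS byte; the lower 2 bits are ECN."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in 0..63")
    return dscp << 2


def open_media_socket(kind: str, halt: float) -> socket.socket:
    """Create a UDP socket whose outgoing packets carry the chosen DSCP marking."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, tos_byte(choose_dscp(kind, halt)))
    return sock
```

Whether routers honor these markings depends on the network path; inside public clouds, DSCP is often rewritten or ignored.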

7. Latency and Connection Stability

8. Related Work

| Gossip [1] Quorum Consensus Figure 6 |

Reduces monitoring cost, but only at the observation layer, not at control. |

|---|---|

| ENTS [2] (Edge Task Scheduling) Figure 7, Table 3 |

Similar use case (video analytics) but focuses on centralized scheduling, not streaming. |

| DMSA [3] Decentralized Micro- services Table 4 |

Decentralizes monitoring but retains centralized discovery queries. |

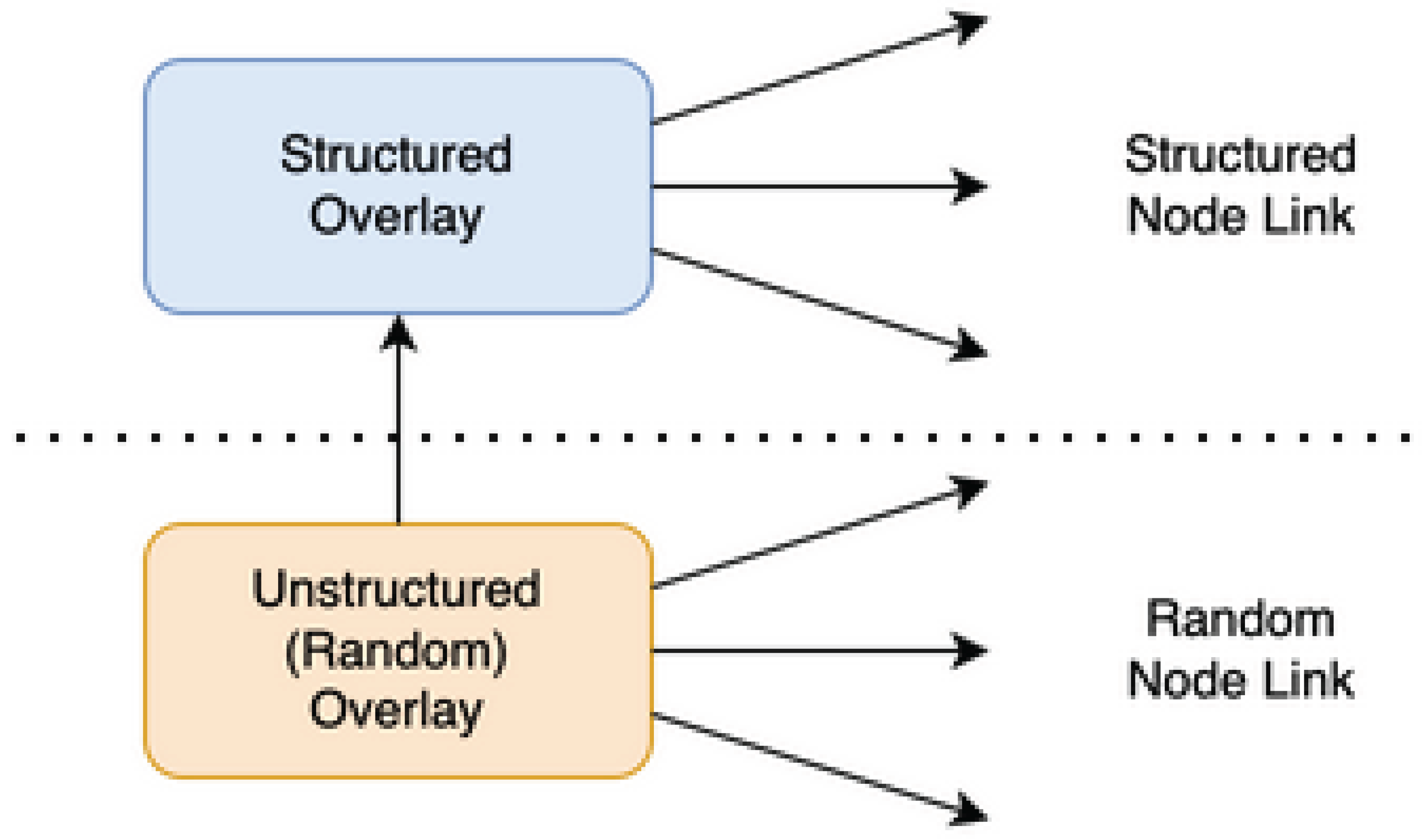

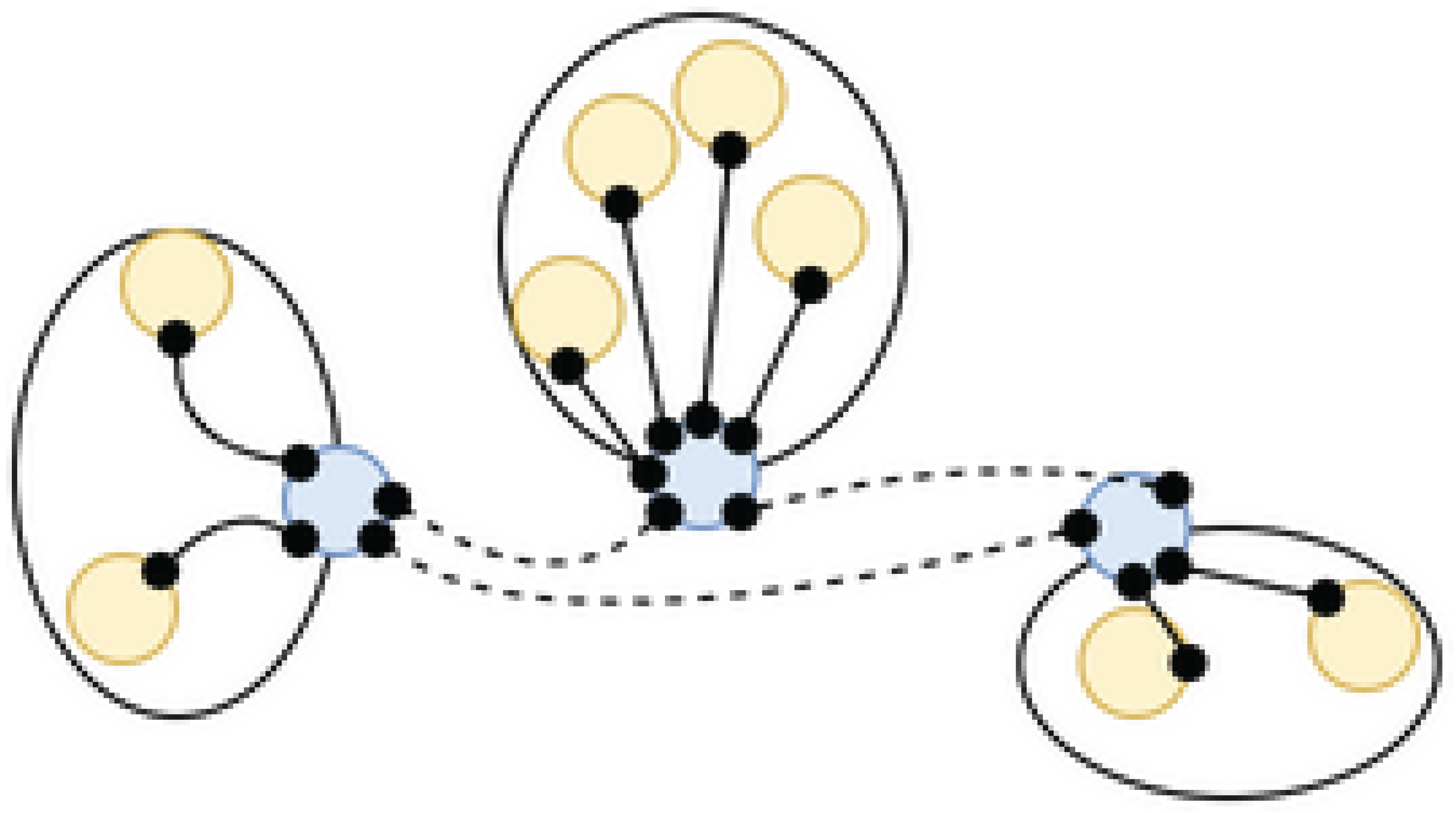

| P2P [12] Unstructured/Structured Figure 10 and Figure 11 |

Introduces hierarchical overlays with super-peers, which reintroduce centralization |

| Kademlia-based WebRTC, [6] Figure 8, Table 5 |

Efficient DHT, but requires global structure and is redundant for cloud-native environments (regions/zones already managed). |

| SDN-based Scalable conferencing, [5] |

Offloads SFU logic into P4 switches, skipping encryption/decryption, unsuitable for cloud cost-optimization |

| Enel Graph Propagation Scaling [7] |

Uses graph propagation with coordinators; unlike our fully autonomous FSM-based approach. |

| US20210218681A1 Flash Crowd Management In Real-Time Streaming [11] Table 6 |

Central servers predict and handle spikes, scaling at the cluster level. In contrast, our method uses HALT-driven autonomous Edge replication. |

| AWS Chime Media Pipelines [9] |

high abstraction and developer ease, but fully centralized, consuming extensive cloud resources. |

| Jitsi Conference Focus [10] |

similarly centralized, constrained by heavy backend control. |

| Item | ENTS | This Proposal |
|---|---|---|
| Use Case | Video Analysis: Data Stream Task | Video Delivery: WebRTC |
| Scheduling | Online Algorithm + Global Optimization | Scale-in/Scale-out via Local Decisions |

| Item | DMSA | This Proposal |
|---|---|---|
| Decentralization Depth | Depends on central list, API router nature | No central list needed, self-forming topology |
| Limit, Risk | Bottlenecks in reflecting changes to overall definition, redefinition of centralization | Minimal scale-in/out load |

| Item | Kademlia | This Proposal |
|---|---|---|
| Overview | Node search using XOR distance between node IDs | Selects the optimal node using only local custom metrics per region/zone VM |
| Placement | Irregular and skewed node placement | Nodes structured logically and physically by region, zone, etc. |
| Search | O(log n) based on XOR distance | Real-time determination from RTT and HALT values of neighboring nodes |
| FSM | DHT distributed management | Nodes/devices manage only neighbor statistics |
| Failure | DHT redundancy | Nodes/devices manage only nearby statistics |
| Delay | k-bucket maintenance, DHT refresh | Node-specific local metric updates |
| Adaptability | Unspecified number of P2P nodes | Simple mechanism adapted to structured cloud infrastructure (GCP, etc.) |
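The "Search" row can be illustrated with a local selection rule. This is a minimal sketch under stated assumptions: each node knows only its neighbors' RTT and HALT values, a neighbor qualifies if its HALT is at least a hypothetical `min_halt`, and among qualifying neighbors the lowest RTT wins, with higher HALT as the tie-breaker. The `Neighbor` type and the threshold are illustrative; Listing 2 may use a different rule.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Neighbor:
    name: str
    rtt_ms: float  # most recent RTT measured to this neighbor
    halt: float    # neighbor's reported residual capacity, 0.0 to 1.0


def select_neighbor(neighbors: list[Neighbor], min_halt: float = 0.3) -> Optional[Neighbor]:
    """Pick the lowest-RTT neighbor with enough spare capacity, using only local data.

    This is a single pass over the node's own neighbor table, with no global
    lookup structure. Returns None if no neighbor qualifies, in which case the
    caller could fall back to spawning a new Edge-SFU.
    """
    candidates = [n for n in neighbors if n.halt >= min_halt]
    if not candidates:
        return None
    # Sort key: lower RTT first; on equal RTT, prefer more residual capacity.
    return min(candidates, key=lambda n: (n.rtt_ms, -n.halt))
```

With neighbors at 4.3 ms (HALT 0.1), 7.8 ms (HALT 0.6), and 7.8 ms (HALT 0.9), the default threshold skips the nearly saturated 4.3 ms node and picks the 7.8 ms node with HALT 0.9.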

| Item | US20210218681A1 | This Proposal |
|---|---|---|
| Traffic Spike Prediction | Centralized: central server and management system | Strongly autonomous and distributed: each node independently decides and acts |
| Scaling Granularity | Scale-out at cluster level | Self-replication at local/neighboring Edge level |
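The per-node autonomy in the table above can be sketched as a small finite-state machine that each Edge-SFU evaluates against its own HALT value. The states, thresholds, and hysteresis band below are hypothetical and are not taken from the FSM-based protocols of Section 2.2; the point is only that scale-out and scale-in are decided locally, with no central server.

```python
from enum import Enum, auto


class EdgeState(Enum):
    ACTIVE = auto()       # serving clients normally
    REPLICATING = auto()  # low residual capacity: a nearby replica has been requested
    DRAINING = auto()     # surplus idle node: stop accepting clients, then retire
    RETIRED = auto()


class EdgeNodeFSM:
    """Hypothetical local scale-out/scale-in FSM driven only by the node's own HALT."""

    def __init__(self, scale_out_below: float = 0.2, scale_in_above: float = 0.8):
        # The gap between the two thresholds acts as hysteresis to avoid flapping.
        if not 0.0 <= scale_out_below < scale_in_above <= 1.0:
            raise ValueError("need 0 <= scale_out_below < scale_in_above <= 1")
        self.scale_out_below = scale_out_below
        self.scale_in_above = scale_in_above
        self.state = EdgeState.ACTIVE
        self.actions: list[str] = []  # side effects the node would perform

    def on_tick(self, halt: float, *, replica_joined: bool = False,
                sessions: int = 0, can_retire: bool = False) -> EdgeState:
        """Advance one step using only local observations.

        halt:           this node's current HALT value (0.0 saturated, 1.0 idle)
        replica_joined: a requested replica has joined the topology
        sessions:       client sessions still attached to this node
        can_retire:     node is surplus (e.g. not the last node in its zone)
        """
        if self.state is EdgeState.ACTIVE:
            if halt < self.scale_out_below:
                self.actions.append("spawn_replica")
                self.state = EdgeState.REPLICATING
            elif halt > self.scale_in_above and can_retire:
                self.actions.append("stop_accepting_clients")
                self.state = EdgeState.DRAINING
        elif self.state is EdgeState.REPLICATING:
            if replica_joined:
                self.state = EdgeState.ACTIVE
        elif self.state is EdgeState.DRAINING:
            if sessions == 0:
                self.actions.append("leave_topology")
                self.state = EdgeState.RETIRED
        return self.state
```

Because a node in `REPLICATING` waits for its replica to join before re-evaluating, repeated low-HALT ticks do not spawn duplicate replicas.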

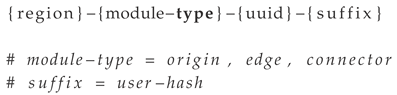

Listing 1: Naming system.

9. Implementation

10. Appendix

10.1. Code Listings

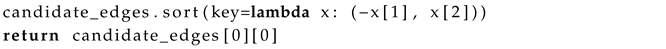

Listing 2: Neighbor Edge Node Selection Algorithm.

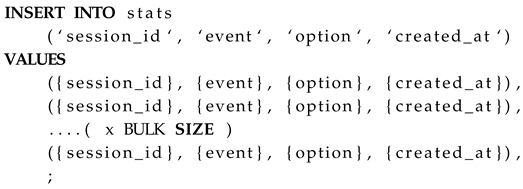

Listing 3: Statistics Report.

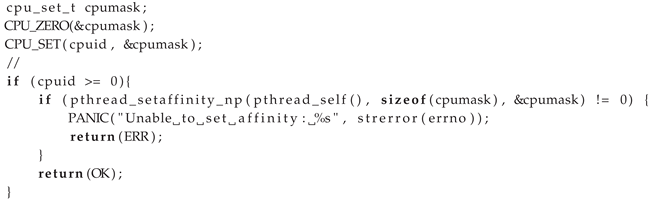

Listing 4: CPU-Core-Mask.
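As a generic illustration of the CPU core mask concept (not a reconstruction of Listing 4), a core mask is a bitmask with bit i set for each CPU core i that a process may run on; the hexadecimal form is what tools such as `taskset` accept. On Linux, Python's `os.sched_setaffinity` takes the set of cores directly. Which cores a media loop should be pinned to is an assumption left to the caller.

```python
import os


def core_mask(cores: set[int]) -> int:
    """Bitmask with bit i set for every core i in `cores`."""
    mask = 0
    for core in cores:
        if core < 0:
            raise ValueError(f"invalid core index: {core}")
        mask |= 1 << core
    return mask


def cores_from_mask(mask: int) -> set[int]:
    """Inverse of core_mask: recover the core indices from a bitmask."""
    return {i for i in range(mask.bit_length()) if (mask >> i) & 1}


def pin_current_process(cores: set[int]) -> bool:
    """Restrict this process to `cores` on Linux; returns False where unsupported."""
    if not hasattr(os, "sched_setaffinity"):
        return False
    os.sched_setaffinity(0, cores)  # pid 0 means the calling process
    return True
```

For example, dedicating cores 2 and 3 to a media loop gives `hex(core_mask({2, 3}))`, which is `"0xc"`.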

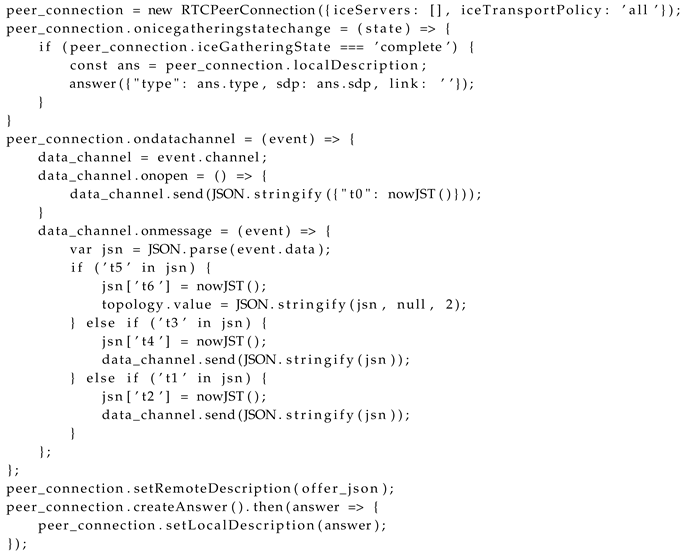

Listing 5: Sample RTT measurement.
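For reference, one standard way a WebRTC endpoint estimates RTT is from RTCP receiver reports, as defined in RFC 3550, Section 6.4.1: RTT = A - LSR - DLSR, where A is the arrival time of the report and LSR/DLSR are the "last SR" and "delay since last SR" fields, all in the 32-bit compact NTP format (units of 1/65536 s). Whether the measurement in Listing 5 uses this method is not stated; the sketch is illustrative.

```python
from typing import Optional

NTP_COMPACT_UNITS_PER_SECOND = 65536  # 16.16 fixed point: middle 32 bits of an NTP timestamp


def rtcp_rtt_seconds(arrival_compact: int, lsr: int, dlsr: int) -> Optional[float]:
    """Round-trip time from an RTCP receiver report block (RFC 3550, Section 6.4.1).

    arrival_compact: time A at which the report arrived, as the middle 32 bits
                     of the local NTP timestamp
    lsr:             'last SR timestamp' field from the report block
    dlsr:            'delay since last SR' field from the report block
    Returns None when no sender report has been received yet (LSR == 0).
    """
    if lsr == 0:
        return None
    # Modulo-2^32 arithmetic handles wraparound of the 32-bit compact timestamp.
    rtt_units = (arrival_compact - lsr - dlsr) & 0xFFFFFFFF
    return rtt_units / NTP_COMPACT_UNITS_PER_SECOND
```

For instance, with LSR = 0x00050000 (5.0 s), DLSR = 0x00008000 (0.5 s), and A = 0x00059000, the estimate is 0x1000 / 65536 = 0.0625 s.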

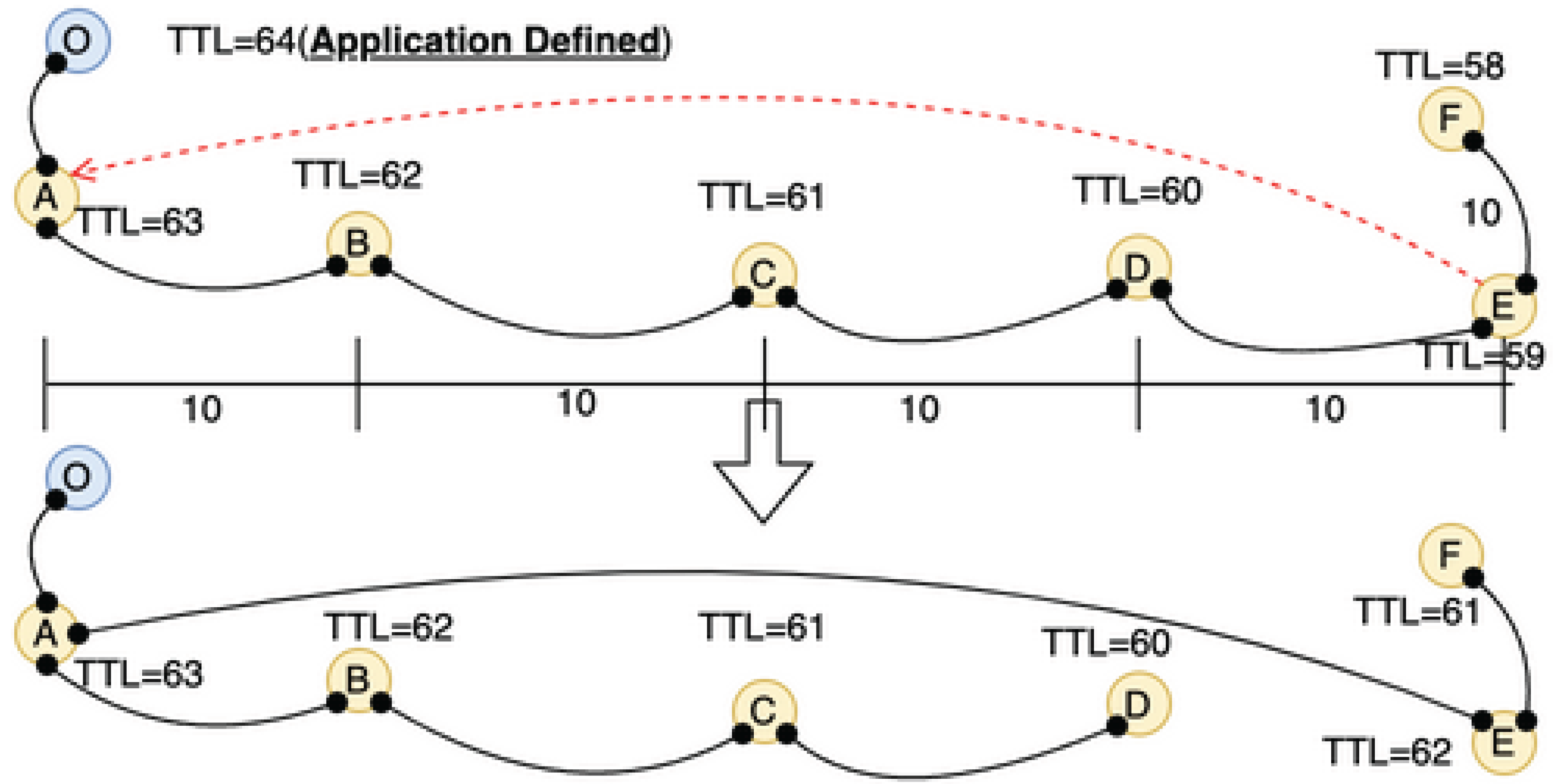

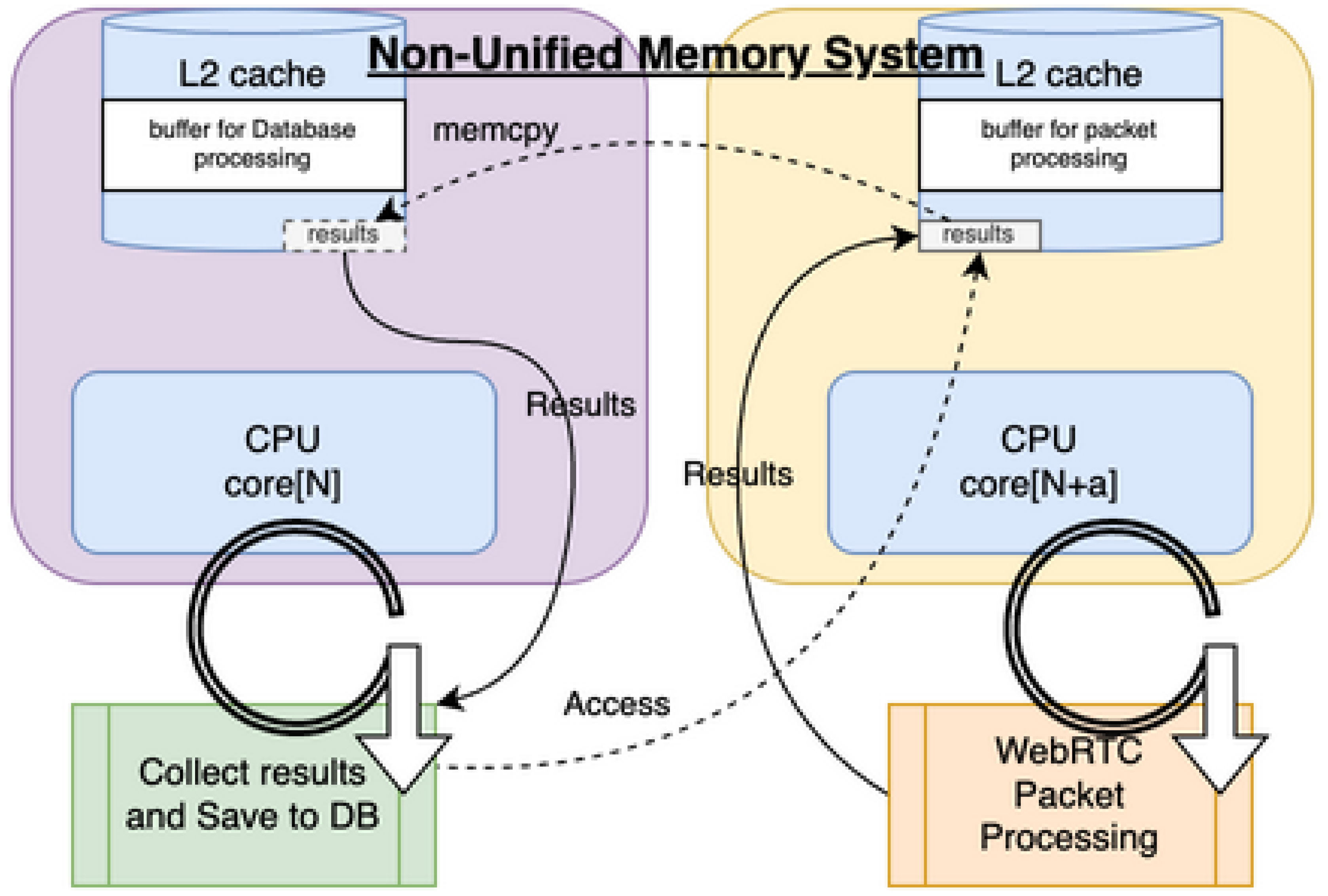

10.2. Cache Pollution Avoidance

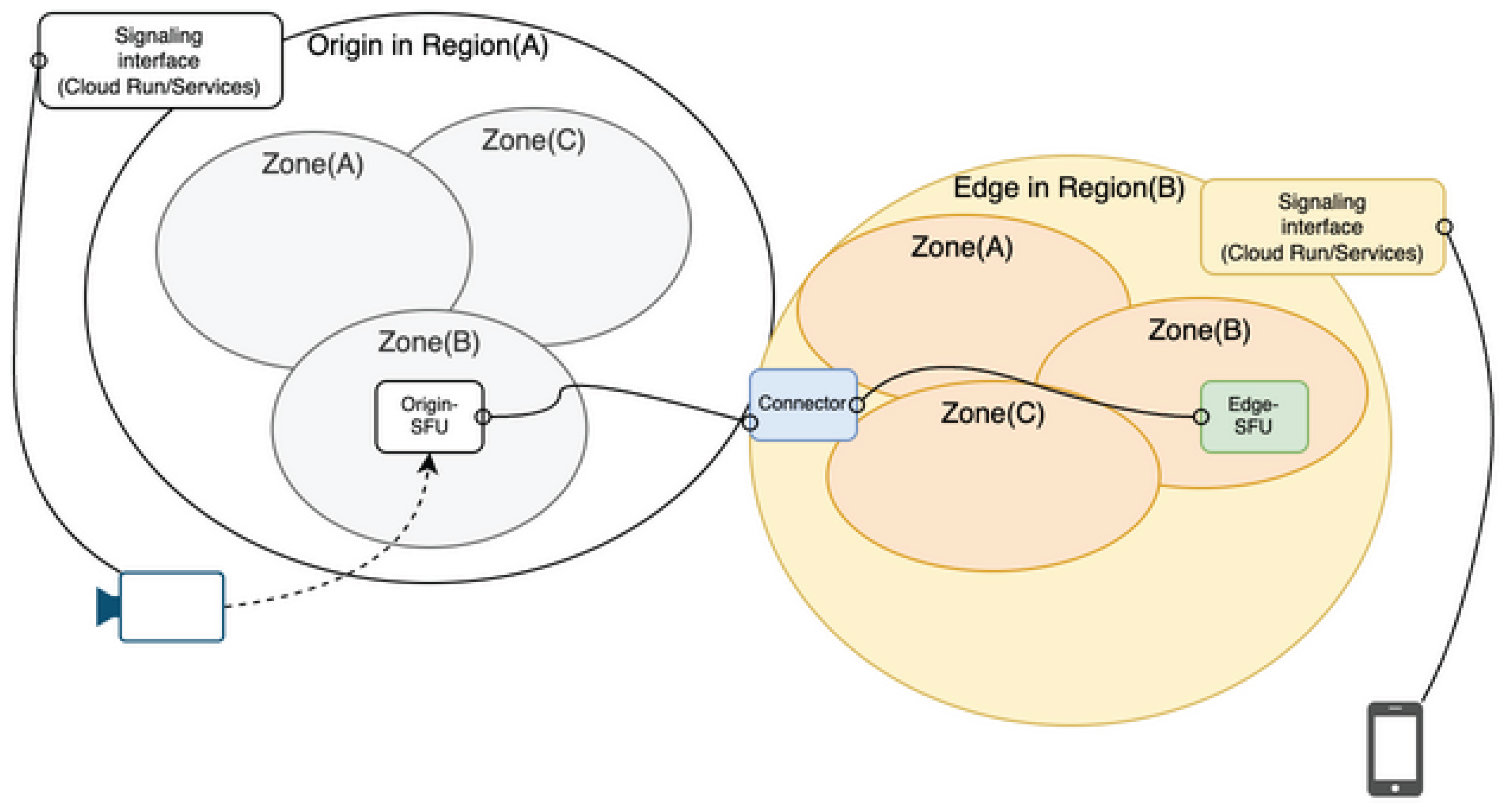

10.3. Origin-Edge Connectivity

- Origin-SFUs and Edge-SFUs connect through NAT traversal connector modules.

- Clients search for nearby Edge-SFUs; if none exist, a new Edge-SFU (B) is spawned near the client.

- The new Edge-SFU automatically connects to an existing Edge-SFU (A) and integrates into the topology.
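The connection procedure above can be sketched as follows. All names (`EdgeSFU`, `find_or_spawn_edge`, the `max_rtt_ms` threshold, zone strings) are hypothetical, and choosing the existing Edge-SFU (A) with the lowest client RTT as the attachment point is an assumption. Spawning and NAT-traversal connection are represented by simple records rather than real cloud or WebRTC API calls.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class EdgeSFU:
    name: str
    zone: str
    peers: list[str] = field(default_factory=list)  # Edge-SFUs this node is linked to


def find_or_spawn_edge(client_zone: str, client_rtt_ms: dict[str, float],
                       edges: list[EdgeSFU], max_rtt_ms: float = 20.0) -> EdgeSFU:
    """Return a nearby Edge-SFU for the client, spawning one if none is close enough.

    client_rtt_ms maps Edge-SFU name -> RTT measured by the client (hypothetical probe).
    """
    nearby = [e for e in edges if client_rtt_ms.get(e.name, float("inf")) <= max_rtt_ms]
    if nearby:
        return min(nearby, key=lambda e: client_rtt_ms[e.name])

    # No Edge-SFU within range: spawn a new one (B) in the client's zone.
    new_edge = EdgeSFU(name=f"edge-{client_zone}-{len(edges) + 1}", zone=client_zone)
    # B joins the topology by linking to an existing Edge-SFU (A), if any exists.
    anchor: Optional[EdgeSFU] = min(
        edges, key=lambda e: client_rtt_ms.get(e.name, float("inf")), default=None
    )
    if anchor is not None:
        new_edge.peers.append(anchor.name)
        anchor.peers.append(new_edge.name)
    edges.append(new_edge)
    return new_edge
```

A Tokyo client seeing 4.3 ms to an existing Tokyo Edge-SFU simply attaches to it, while a client whose best option is 135.2 ms away triggers a new Edge-SFU in its own zone that is linked back to the existing one.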

10.4. Improving Accuracy of Statistics – HALT Value

- We introduce HALT (Hardware Available Load Threshold) as a real metric.

- HALT measures the residual processing capacity remaining after each processing loop, incorporating CPU idle time, I/O wait time, throughput, and similar indicators.

- This gives each node a more accurate basis for distributed decision-making.
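The HALT formula is not given here, so the following is only a hypothetical sketch of how per-loop measurements could be folded into a single residual-capacity value. It assumes three normalized inputs per loop (CPU idle fraction, I/O wait fraction, and throughput relative to a provisioned maximum), an illustrative weighting, and exponential smoothing across loops; the weights, `alpha`, and `max_throughput` are all assumptions.

```python
from dataclasses import dataclass


def _clamp01(x: float) -> float:
    return max(0.0, min(1.0, x))


@dataclass
class HaltEstimator:
    """Hypothetical HALT estimator: 1.0 = fully available, 0.0 = no residual capacity."""
    max_throughput: float  # throughput this node is provisioned for (assumed known)
    w_cpu: float = 0.5     # illustrative weights; they sum to 1.0
    w_io: float = 0.2
    w_tput: float = 0.3
    alpha: float = 0.3     # EWMA smoothing factor applied to each new sample
    value: float = 1.0     # start by assuming an idle node

    def __post_init__(self) -> None:
        if self.max_throughput <= 0:
            raise ValueError("max_throughput must be positive")

    def sample(self, cpu_idle: float, io_wait: float, throughput: float) -> float:
        """Fold one loop's measurements into the smoothed HALT value and return it."""
        headroom_cpu = _clamp01(cpu_idle)
        headroom_io = 1.0 - _clamp01(io_wait)
        headroom_tput = 1.0 - _clamp01(throughput / self.max_throughput)
        instant = (self.w_cpu * headroom_cpu + self.w_io * headroom_io
                   + self.w_tput * headroom_tput)
        self.value = (1 - self.alpha) * self.value + self.alpha * instant
        return self.value
```

Smoothing keeps a single busy loop from triggering replication on its own; under a steady load the estimate converges to the per-loop value (0.26 for 20% CPU idle, 50% I/O wait, and 80% of maximum throughput with these weights).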

10.5. Oversized Statistics Systems

10.6. Handover

| Item | Overview |
|---|---|
| Mobile Device | UE (User Equipment) |
| Handover Origin | Currently connected Edge-SFU |
| Handover Destination | Target Edge-SFU |
| Connector | Stream-Connector used during handover |
| Buffer | Media (video) buffering during handover |
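The handover components in the table can be sketched as a buffered switch: while the Stream-Connector to the destination Edge-SFU is being established, media frames are held in a buffer, and once the UE is attached to the destination the buffer is flushed in order before live delivery resumes. The class, its methods, and the bounded buffer size are hypothetical; real frame transport is replaced by Python lists.

```python
from collections import deque
from typing import Optional


class HandoverSession:
    """Hypothetical handover of one UE from an origin Edge-SFU to a destination Edge-SFU."""

    def __init__(self, ue: str, origin: str, max_buffered: int = 300):
        self.ue = ue
        self.serving = origin
        self.destination: Optional[str] = None
        # Bounded buffer: if the handover stalls, the oldest frames are dropped first.
        self.buffer: deque[bytes] = deque(maxlen=max_buffered)
        self.delivered: list[tuple[str, bytes]] = []  # (serving Edge-SFU, frame)

    def start(self, destination: str) -> None:
        """Open the Stream-Connector to the destination and begin buffering."""
        self.destination = destination

    def on_frame(self, frame: bytes) -> None:
        if self.destination is not None:
            self.buffer.append(frame)  # handover in progress: hold the frame
        else:
            self.delivered.append((self.serving, frame))

    def complete(self) -> None:
        """UE is attached to the destination: switch, then flush buffered frames in order."""
        if self.destination is None:
            raise RuntimeError("no handover in progress")
        self.serving, self.destination = self.destination, None
        while self.buffer:
            self.delivered.append((self.serving, self.buffer.popleft()))
```

The bounded `deque` trades completeness for latency: a long handover loses its oldest frames rather than delaying live video indefinitely.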

11. AI Disclosure

Author Contributions

Funding

Conflicts of Interest

References

- Shashikant Ilager. A Decentralized and Self-Adaptive Approach for Monitoring Volatile Edge Environments. http://arxiv.org/abs/2405. 0780.

- Mingjin Zhang. ENTS: An Edge-native Task Scheduling System for Collaborative Edge Computing. http://arxiv.org/abs/2210. 0784.

- Yuang Chen. DMSA: A Decentralized Microservice Architecture for Edge Networks. https://arxiv.org/pdf/2501. 0088.

- Chmieliauskas. Evaluation of Uplink Video Streaming QoE in 4G and 5G Cellular Networks Using Real-World Measurements.

- Oliver Michel. Scalable Video Conferencing Using SDN Principles. https://arxiv.org/pdf/2503. 1164.

- Ryle Zhou. Decentralized WebRTC P2P Network Using Kademlia. https://arxiv.org/abs/2206. 0768.

- Dominik Scheinert. Enel: Context-Aware Dynamic Scaling of Distributed Dataflow Jobs using Graph Propagation. https://arxiv.org/pdf/2108. 1221.

- Shaher Daoud and Yanzhen Qu. A Comprehensive Study of DSCP Markings’ Impact on VoIP QoS in HFC Networks. https://aircconline.com/ijcnc/V11N5/11519cnc01.

- AWS Chime Media Pipelines. https://docs.aws.amazon.com/chime-sdk/latest/dg/media-pipelines.

- Jitsi. Jitsi Conference Focus. https://github.

- US20210218681A1. Flash crowd management in real-time streaming. https://patents.google. 2021.

- Tao Gu. A Hierarchical Semantic Overlay for P2P Search. https://arxiv.org/pdf/2003. 0500.

- Babaoglu. Self-star Properties in Complex Information Systems: Conceptual and Practical Foundations.


| Access Network and Route | RTT (ms) |
|---|---|
| Enterprise LAN, Tokyo-Tokyo | 4.3 |
| Enterprise LAN, Tokyo-Mumbai | 135.2 |
| Enterprise Wi-Fi, Tokyo-Tokyo | 7.8 |
| Enterprise Wi-Fi, Tokyo-Mumbai | 138.5 |
| Mobile Docomo, Tokyo-Tokyo | 43.0 |
| Mobile Softbank, Tokyo-Tokyo | 43.0 |
| Mobile Softbank, Tokyo-Mumbai | 180.7 |
| Mobile AU, Tokyo-Tokyo | 43.0 |
| Mobile AU, Tokyo-Mumbai | 176.2 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).