Submitted:

07 November 2025

Posted:

20 November 2025

Abstract

Keywords:

1. Introduction

1.1. Background and Motivation

1.2. Related Work

1.3. Contribution

- We offer a thorough examination of domains, datasets, and tasks specifically within the field of Sentiment Analysis (SA). Additionally, we provide insightful analyses derived from the compiled information.

- We examine both machine learning and deep learning algorithms that have played crucial roles in SA, including Support Vector Machines (SVM), Naive Bayes (NB), Convolutional Neural Networks (CNNs), and Recurrent Neural Networks (RNNs). We then present a comprehensive analysis of the advantages and disadvantages of these approaches in the context of SA.

- We delve into the challenges confronting Sentiment Analysis (SA) models, addressing issues such as the dynamic nature of language, context-dependent interpretations, and the prospect of constructing a knowledge graph representation for semantic analytics or establishing sentiment scores for each entity.

- Drawing on the findings of the reviewed SA publications, we succinctly outline the essential steps involved in constructing an SA system.

1.4. Paper Structure

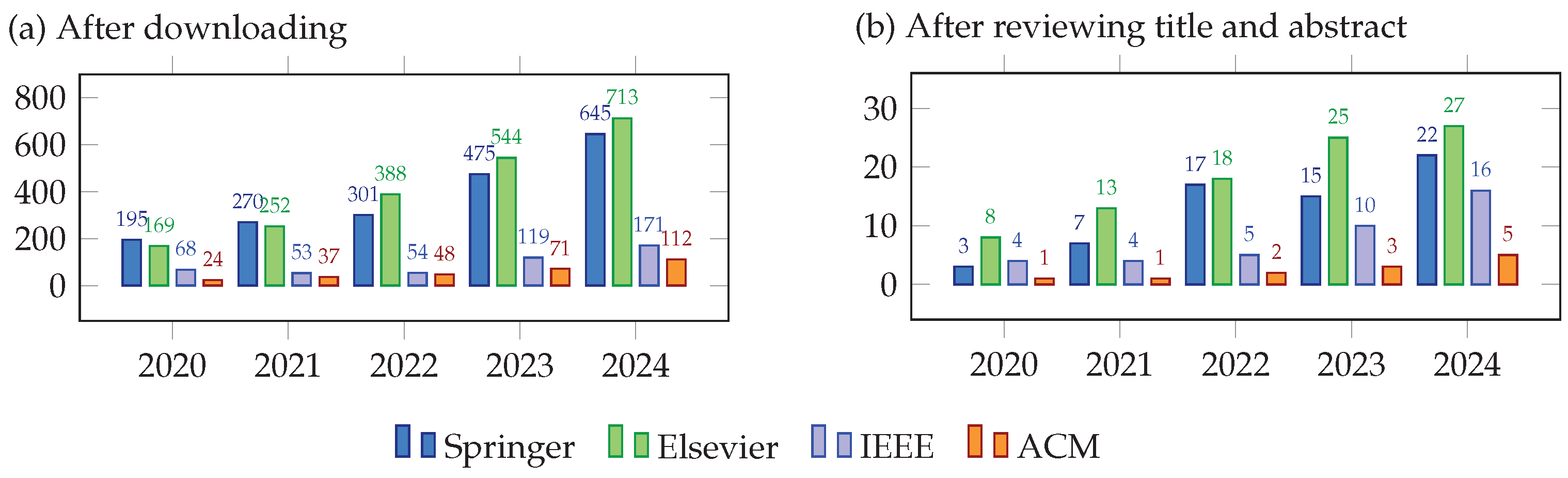

2. Review Methodology

- Published from 01/01/2020 to 30/11/2024.

- Written in English, with no restriction on geographical area or dataset language.

- The title, keywords, or abstract of each paper matches the query: ("Sentiment Analysis" OR "Opinion Mining") AND ("Machine Learning" OR "Deep Learning" OR Classification) AND (Datasets OR Applications). The Boolean search query was adapted to the form requirements of each library.
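The inclusion criteria above can be sketched as a simple filter over paper records. This is only an illustration under stated assumptions: the record fields (`title`, `abstract`, `keywords`, `published`, `language`) are hypothetical, and plain case-insensitive substring matching stands in for whatever matching each digital library actually performs.

```python
from datetime import date

# Inclusion window and keyword groups taken from the criteria listed above.
START, END = date(2020, 1, 1), date(2024, 11, 30)
KEYWORD_GROUPS = [
    ("sentiment analysis", "opinion mining"),
    ("machine learning", "deep learning", "classification"),
    ("datasets", "applications"),
]


def matches_query(text: str) -> bool:
    """AND across groups, OR within each group (case-insensitive substrings)."""
    lowered = text.lower()
    return all(any(term in lowered for term in group) for group in KEYWORD_GROUPS)


def is_eligible(paper: dict) -> bool:
    """Apply the date, language, and keyword criteria to one record.

    The dictionary keys are hypothetical field names, not a library schema.
    """
    searchable = " ".join(
        [paper["title"], paper["abstract"], " ".join(paper["keywords"])]
    )
    return (
        START <= paper["published"] <= END
        and paper["language"] == "English"
        and matches_query(searchable)
    )
```

Because the match is literal, a paper that only mentions "dataset" in the singular would be rejected by this sketch; a real search engine may stem or expand terms.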

3. Classification and Analysis

3.1. Overview

3.2. Domains

| Study | Dataset Source | Dataset Name/Feature | Algorithms | Future Developments |

|---|---|---|---|---|

| Domain: Finance (12 papers) | ||||

| Nti et al. [12] | Self+NO | Ghana Stock Exchange; Twitter | IKN-ConvLSTM (acc: 0.9831) | Data augmentation techniques, autoencoders to enhance. |

| Colasanto et al. [13] | 3rd+O | Financial Times | FinBERT, Monte Carlo method | N/A |

| Windsor et al. [14] | Self+NO | Twitter and Sina Weibo | MF-LSTM(R2: 0.9848) | Fusion strategies, modal structures, modality selections, etc. |

| Hajek et al. [15] | Self+NO | EarningsCast database | LSTM, feature: Emotion + FinBert(acc: 0.954) | N/A |

| Kaplan et al. [16] | Self+O | Dow Jones Newswires, Reuters, Bloomberg, Platts; Investing.com | CrudeBERT (acc: 0.98) | Trained on the full article content, extending economic models: inflation and interest rates. |

| Chen et al. [28] | 3rd+Self+O | Wind Financial Terminal; People’s Daily, Xinhua News Agency, Global Times, Sina Finance, Tencent Finance, Autohome | SEN-LSTM (R2: 0.63) | Use of Knowledge Graph in building the relationships in financial texts, and improve forecasting effects. |

| Meera et al. [39] | Self+NO | financial news headlines | Word2Vec-TFIDF + SVM(acc: 0.82) | hybrid feature extraction techniques with deep learning and ensemble models. |

| Mishev et al. [47] | 3rd+O | LexisNexis database; SemEval-2017 task | BART-Large (acc: 0.947) | Extend to Health, Legal, Business. |

| Parekh et al. [48] | Self+NO | Global Portal; Twitter | DL-GuesS (price prediction + sentiment analysis) | N/A |

| Ganglong et al. [53] | Self+NO | financial text | FinBERT + BiGRU + attention mechanism (acc: 0.95) | real-time retraining and error analysis. |

| Yekrangi et al. [49] | Self+NO | Pre-training dataset (328,326 tweets about cryptocurrencies and 140,000 tweets on the currency, stock and gold markets); sentiment dataset (16,452 items: 10,631 from stock markets, 4,821 financial headlines, 1,000 crypto) | Keras Embeddings + LSTM; Keras Embeddings + RNN (acc: 0.84) | N/A |

| Du et al. [58] | 3rd+O | SemEval 2017 Task 5; FiQA Task 1 | RoBERTa + XLNet (R2: 0.71) | a new technique for knowledge embeddings, and the effectiveness of different transformer architectures. |

| Domain: Product (17 papers) | ||||

| Iddrisu et al. [23] | 3rd+O | Twitter, Kaggle | TF-IDF + optimized SVM(acc: 0.99) | N/A |

| Patel et al. [24] | 3rd+O | Airline reviews gathered from Kaggle | BERT(acc: 0.83) | BERT variants and many more can be used for future development. |

| Ahmed et al. [34] | 3rd+O | SemEval (6055 reviews: Laptop, Restaurants); Product Review (1208 reviews on Amazon); MAMS (Food and services) | Embedded CNN + BiLSTM (Acc: 0.83, F1: 0.81) | improve the accuracy |

| Ye et al. [35] | 3rd+O | Official website of Douban Books (reviews of 28 Douban education books and 8050 reviews) | LDA BERT | N/A |

| Atandoh et al. [36] | 3rd+O | IMDB, Amazon reviews | B-MLCNN(acc: 0.95) | Identify the explicit polarity based on the contextual position of the text. |

| Gunawan et al. [37] | Self+NO | TripAdvisor | SVM(acc: 0.79) | N/A |

| Greeshma et al. [42] | 3rd+O | IMDb | BiGRU + GloVe + attention(acc: 0.98) | Addressing data biases. |

| Khushboo et al. [43] | 3rd+O | women’s clothing e-commerce reviews (23,486 rows and 10 feature variables) | DistilBERT (Acc: 0.96 for SC and 0.91 for PR) | spam detection, fraud detection, disease detection. |

| Kumar et al. [54] | Self+NO | amazon.com | DPTN + GSK (Acc: 0.95) | integrating self-attention representations. |

| Maroof et al. [55] | Self+NO | mobile app reviews | SVM, DL (Acc: 0.91, 0.92) | N/A. |

| Sherin et al. [56] | 3rd + O | Sentiment 140 dataset, T4SA dataset and Airline Twitter datasets | EAQ-FEE-enBi-LSTM-GRU (Acc: 0.95) | LLaMA’s capabilities in handling large-scale NLP tasks. |

| Rahman et al. [57] | Self + NO | Customer Surveys; Online Reviews; Chatbox Messages | BERT (Acc: 0.95) | N/A. |

| Perti et al. [59] | Self+NO | Twitter (14 Jan 2022 to 27 Dec 2022) of Mobile Phones, Laptops, and Electronic Devices | 4-Conv-NN-features+SVM(acc: 0.845) | Would be experimenting with BERT-based embeddings. |

| Gamal et al. [63] | 3rd+O | 4 datasets: IMDB, Cornell movies, Amazon and Twitter | NB, SGD, SVM, PA, ME, AdaBoost, MNB, BNB, RR and LR with two FE algorithms (n-gram and TF–IDF). Acc: 0.87-0.99 | experimenting with sarcasm detection and applying sentiment analysis to more domains and cross-domains. |

| Palak Baid et al. [64] | Self+NO | IMDB | NB, RF, K-NN (Acc: 0.81, 0.78, 0.55) | N/A |

| Ali et al. [65] | Self+NO | IMDB | MLP, CNN, LSTM, CNN-LSTM (Acc: 0.86, 0.87, 0.86, 0.89) | N/A |

| Nguyen et al. [66] | Self+NO | thegioididong.com; vatgia.com; tinhte.vn | Ontology | N/A |

| Domain: Health (11 papers) | ||||

| Bansal and Kumar [17] | Self+NO | Web scraping of 500 hospitals | Semantic method | N/A |

| Areeba and Elio [20] | 3rd+O | 10,000 tweets on COVID-19 vaccines from Kaggle | CT-BERT enhanced with convolutional layers (Acc: 0.875) | Integration with Diverse Data Sources, Real-Time Analysis, Enhanced Models |

| Colon-Ruiz et al. [29] | 3rd+O | Drugs.com | BERT embeddings + LSTM(F1: 0.947) | Explore new techniques in order to reduce the dependence on annotated corpora and the use of semantic features in order to improve the fine-tuning of these approaches. |

| Basiri et al. [30] | 3rd+O; Self+NO | Sentiment140 dataset for training; 8 datasets from 8 countries to classify (2020-01-24 to 2020-04-21) | Base learner (CNN, BiGRU, fastText, NBSVM, DistilBERT) + meta learner (XGBoost method), Acc: 0.858 | focusing on the opinions published by special communities or target societies to find their impact on the public sentiment and mood. |

| Meena et al. [31] | 3rd+O | Monkeypox Tweets (61379) | CNN-LSTM (acc: 0.94) | explore more robust techniques. |

| Suhartono et al. [32] | 3rd+O | Drugs.com | GloVe + BERT (acc: 0.8487) | N/A |

| Valarmathi et al. [40] | 3rd+O | Kaggle: covid-19-tweets45 | NeatText, TextBlob API, LSTM (Acc: 0.96) | N/A |

| Han et al. [50] | 3rd+Self+NO | SentiDrugs | PM-DBiGRU (acc: 0.78) | Explore a more effective model of aspect-level sentiment classification in the medical background. |

| Sweidan et al. [51] | Self+NO | AskaPatient, WebMD, DrugBank, Twitter, n2c2 2018, TAC 2017 | Ontology-XLNet + BiLSTM(acc: 0.98) | explore multilingual models, and would be investigating the semi-automated methods for building ontology in the context of sentiment analysis |

| Bengesi et al. [52] | Self+O | Twitter(self-build 500,000 tweets, 103 languages with keyword #monkey pox) | TextBlob annotation, Lemmatization, CountVectorizer, and SVM(acc: 0.93) | Word embeddings (example: doc2Vec) and text labeling (example: Azure Machine Learning) to improve the model’s performance Deep Learning and transformer algorithms. |

| Muhammad et al. [61] | Self+NO | Drugs.com | Bi-GRU + Capsule + Text-Inception + K-Max (Acc: 0.99) | N/A |

| Domain: Detection (7 papers) | ||||

| Chakravarthi et al. [9] | Self+NO | YouTube | T5-Sentence + CNN(F1: 0.75) | A larger dataset with further fine-grained classification and content analysis. |

| Balshetwar et al. [10] | 3rd+O | ISOT, LIAR | TF-IDF + Naïve Bayes, passive-aggressive and Deep Neural Network(Acc: 0.98) | Test on other datasets. |

| Rosenberg et al. [25] | Self+O | Tweets using keywords | BERT(acc: 0.69) | N/A |

| Spinde et al. [26] | Self+O | adfontesmedia.com; Twitter | fine-tuning XLNet (Acc: 0.95) | expand the dataset and analysis, including with additional concepts related to media bias. |

| Fazil et al. [44] | 3rd+O | 3 Twitter datasets: DS-1 (80000 tweets: abusive, hateful, spam, normal); DS-2 (24802 tweets: offensive, or neither); DS-3 (20148 tweets: hateful, offensive, normal, or undecided) | Multi-Channel CNN-BiLSTM: DS-1 (acc: 0.93); DS-2 (acc: 0.92, BERT acc: 0.94); DS-3 (acc: 0.86) | Proposed model over multilingual text, transformer-based language models. |

| Vadivu et al. [62] | 3rd+O | India twitter data; fakenews Net | fICS-DBN-DGCO (Acc: 0.89) | N/A |

| Nguyen et al. [67] | 3rd+Self+O | amazon.com; ebay.com | knowledge-based Ontology (Acc: 0.90) | N/A |

| Domain: Education (5 papers) | ||||

| Dake et al. [11] | Self+NO | University of Education, Winneba, Ghana | SVM (acc: 0.63) | N/A |

| Rakhmanov [27] | Self+NO | University of Nigeria (52,571 comments) | TF-IDF+RF (acc: 0.968); TF-IDF+ANN (acc: 0.96) | bigrams or trigrams can also be tested. |

| Zhai et al. [45] | Self+NO; 3rd+O | Education and Restaurant | Multi-AFM (acc: 0.946) | develop transformer-based models and use multi-class sentiment analysis. |

| Rajagukguk et al. [46] | Self+NO | Students’ feedback | BiLSTM (Acc: 0.9275) | N/A |

| Dang et al. [60] | Self+NO | USAL-UTH (10,000 Vietnamese customer comments); UIT-VSFC (16,000 Vietnamese students’ feedback) | CNN, LSTM, and SVM (acc: 0.93) | combine with pre-trained language models, leveraging transfer learning and domain adaptation. |

| Domain: Technology (3 papers) | ||||

| Yan et al. [19] | 3rd+O | Lap2014; Rest2014, 2015, 2016 | Accuracy: DE-CNN: 0.8489, O2-Bert: 0.8463; O2-Bert: 0.892, 0.8316, 0.8688 | Large Language Model and prompt engineering into TBSA tasks. |

| Muhamet et al. [22] | Self+NO | Collected 17.5 million tweets spanning 10 years (2012–2021) | BiLSTM with FastText (F1: 0.71) | Explore unsupervised and weakly supervised learning techniques. |

| Vasanth et al. [38] | Self+NO | IMDB, Youtube | Bert | use clustering approaches to construct clusters in order to have a better idea |

| Domain: Politics (3 papers) | ||||

| Bola et al. [21] | Self+O | Dataset of 2,000 Canadian maritime court | CNN + LSTM (Acc: 0.925) | Extend the model to other domains of law. Integrate pre-trained word embeddings. |

| Baraniak et al. [33] | Self+NO | 136.379 articles in English | BERT (Acc: 0.51) | Extending the size of the dataset. |

| Kevin et al. [41] | 3rd+O | | SVM (Acc: 0.72); DeBERTa (Acc: 0.71) | N/A |

| Domain: Management (1 paper) | ||||

| Capuano et al. [18] | Self+NO; 3rd+O | Analist Group; public datasets | HAN (Acc: 0.8) | integrated into a comprehensive CRM (customer relationship management). |

3.3. Tasks

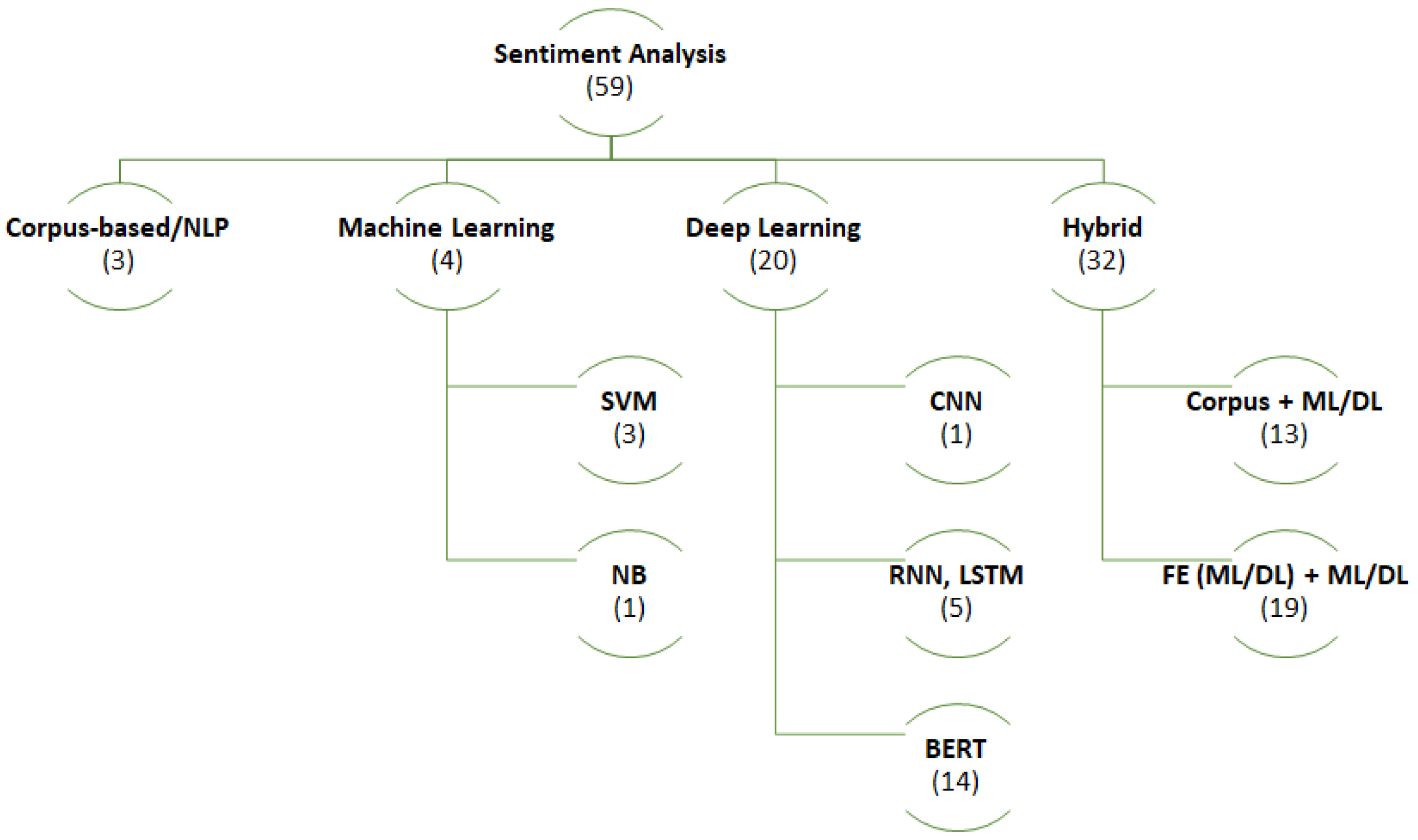

3.4. Models

3.4.1. Corpus-Based/NLP

3.4.2. Machine Learning

3.4.3. Deep Learning

3.4.4. Hybrid Models

3.4.5. Metrics of Sentiment Analysis

3.5. Datasets

3.6. Future Developments

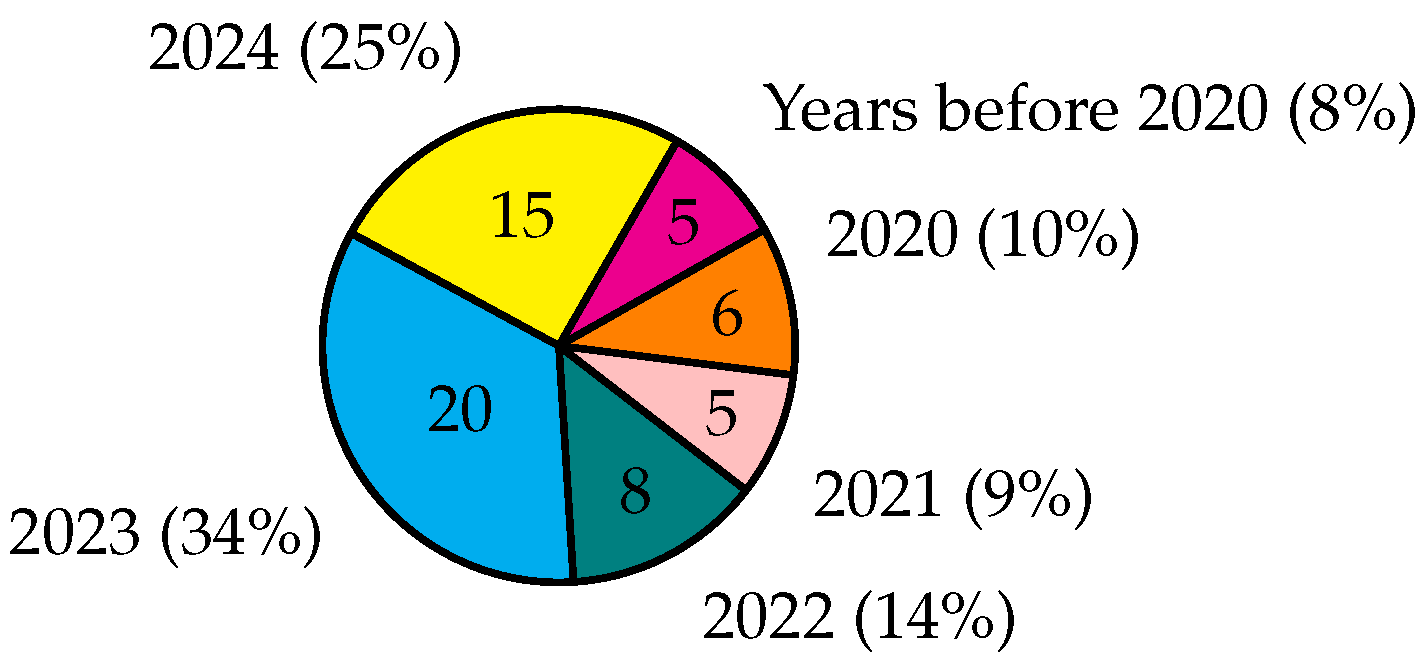

4. Results and Discussion

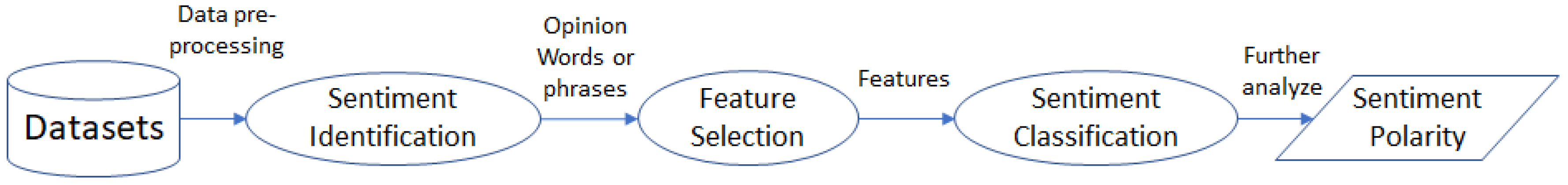

4.1. Process for Developing the SA System

- Cleaning the Twitter RTs, @, #, and the links from the sentences;

- Stemming (flies -> fly, is -> is) or lemmatization (is -> be);

- Converting the text to lower case;

- Cleaning all the non-letter characters, including numbers;

- Removing English stop words and punctuation;

- Eliminating extra white spaces;

- Decoding HTML to general text.
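The cleaning steps above can be sketched with the Python standard library alone. This is a minimal illustration, not the pipeline of any reviewed paper. Its assumptions: the stop-word list is a tiny hypothetical sample, whole @mentions and #hashtags are dropped rather than just the symbols, and stemming or lemmatization is omitted because it would require an external library. HTML entities are decoded first so that fragments such as `amp` are not left behind after non-letter characters are removed.

```python
import html
import re

# Tiny illustrative stop-word list (an assumption); real systems use a fuller one.
STOP_WORDS = {"a", "an", "the", "is", "are", "was", "to", "of", "and", "in", "on", "it", "this"}


def preprocess(text: str) -> str:
    """Clean one tweet-like string following the steps listed above."""
    text = html.unescape(text)                           # decode HTML entities
    text = re.sub(r"https?://\S+|www\.\S+", " ", text)   # remove links
    text = re.sub(r"\bRT\b", " ", text)                  # remove retweet marker
    text = re.sub(r"[@#]\w+", " ", text)                 # remove mentions and hashtags
    text = text.lower()                                  # lower-case
    text = re.sub(r"[^a-z\s]", " ", text)                # drop digits and punctuation
    tokens = [tok for tok in text.split() if tok not in STOP_WORDS]
    return " ".join(tokens)                              # split/join collapses extra spaces
```

For example, `preprocess("RT @user: LOVE this phone!!! &amp; 100% great http://t.co/abc #happy")` yields `"love phone great"`.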

4.2. Domains

4.3. Tasks

4.4. Models and Algorithms

4.5. Datasets

4.6. Future Developments

5. Conclusion

References

- Rodríguez-Ibánez, M.; Casánez-Ventura, A.; Castejón-Mateos, F.; Cuenca-Jiménez, P.M. A review on sentiment analysis from social media platforms. Expert Systems with Applications 2023, 223, 119862. [CrossRef]

- Hande, A.; Priyadharshini, R.; Chakravarthi, B.R. KanCMD: Kannada CodeMixed Dataset for Sentiment Analysis and Offensive Language Detection. In Proceedings of the Third Workshop on Computational Modeling of People’s Opinions, Personality, and Emotion’s in Social Media, Barcelona, Spain (Online), 2020; pp. 54–63.

- Wankhade, M.; Rao, A.C.S.; Kulkarni, C. A survey on sentiment analysis methods, applications, and challenges. Artificial Intelligence Review 2022, 55, 5731–5780. [CrossRef]

- G, V.; Chandrasekaran, D. Sentiment Analysis and Opinion Mining: A Survey. Int J Adv Res Comput Sci Technol 2012, 2.

- Bordoloi, M.; Biswas, S.K. Sentiment analysis: A survey on design framework, applications and future scopes. Artif Intell Rev 2023, pp. 1–56. [CrossRef]

- Ezhilarasan, M.; Govindasamy, V.; Akila, V.; Vadivelan, K. Sentiment Analysis On Product Review: A Survey. In Proceedings of the 2019 International Conference on Computation of Power, Energy, Information and Communication (ICCPEIC), 2019, pp. 180–192. [CrossRef]

- Zhang, L.; Wang, S.; Liu, B. Deep Learning for Sentiment Analysis: A Survey. CoRR 2018, abs/1801.07883, [1801.07883].

- Ngo, V.M.; Thorpe, C.; Dang, C.N.; Mckeever, S. Investigation, Detection and Prevention of Online Child Sexual Abuse Materials: A Comprehensive Survey. In Proceedings of the 2022 RIVF International Conference on Computing and Communication Technologies (RIVF), 2022, pp. 707–713. [CrossRef]

- Chakravarthi, B.R. Hope speech detection in YouTube comments. Soc Netw Anal Min 2022, 12, 75. [CrossRef]

- Balshetwar, S.V.; Rs, A.; R, D.J. Fake news detection in social media based on sentiment analysis using classifier techniques. Multimed Tools Appl 2023, pp. 1–31. [CrossRef]

- Dake, D.K.; Gyimah, E. Using sentiment analysis to evaluate qualitative students’ responses. Educ Inf Technol (Dordr) 2023, 28, 4629–4647. [CrossRef]

- Nti, I.K.; Adekoya, A.F.; Weyori, B.A. A novel multi-source information-fusion predictive framework based on deep neural networks for accuracy enhancement in stock market prediction. Journal of Big Data 2021, 8. [CrossRef]

- Colasanto, F.; Grilli, L.; Santoro, D.; Villani, G. BERT’s sentiment score for portfolio optimization: a fine-tuned view in Black and Litterman model. Neural Comput Appl 2022, 34, 17507–17521. [CrossRef]

- Windsor, E.; Cao, W. Improving exchange rate forecasting via a new deep multimodal fusion model. Appl Intell (Dordr) 2022, 52, 16701–16717. [CrossRef]

- Hajek, P.; Munk, M. Speech emotion recognition and text sentiment analysis for financial distress prediction. Neural Computing and Applications 2023, 35, 21463–21477. [CrossRef]

- Kaplan, H.; Weichselbraun, A.; Brasoveanu, A.M.P. Integrating Economic Theory, Domain Knowledge, and Social Knowledge into Hybrid Sentiment Models for Predicting Crude Oil Markets. Cognit Comput 2023, pp. 1–17. [CrossRef]

- Bansal, A.; Kumar, N. Aspect-Based Sentiment Analysis Using Attribute Extraction of Hospital Reviews. New Gener Comput 2022, 40, 941–960. [CrossRef]

- Capuano, N.; Greco, L.; Ritrovato, P.; Vento, M. Sentiment analysis for customer relationship management: an incremental learning approach. Applied Intelligence 2020, 51, 3339–3352. [CrossRef]

- Yan, Y.; Zhang, B.W.; Ding, G.; Li, W.; Zhang, J.; Li, J.J.; Gao, W. O2-Bert: Two-Stage Target-Based Sentiment Analysis. Cognitive Computation 2023. [CrossRef]

- Umair, A.; Masciari, E. Sentiment Analysis Using Improved CT-BERT_CONVLayer Fusion Model for COVID-19 Vaccine Recommendation. SN Computer Science 2024, 5, 931. [CrossRef]

- Abimbola, B.; Tan, Q.; De La Cal Marín, E.A. Sentiment analysis of Canadian maritime case law: a sentiment case law and deep learning approach. International Journal of Information Technology 2024, 16, 3401–3409. [CrossRef]

- Kastrati, M.; Kastrati, Z.; Shariq Imran, A.; Biba, M. Leveraging distant supervision and deep learning for twitter sentiment and emotion classification. Journal of Intelligent Information Systems 2024, 62, 1045–1070. [CrossRef]

- Iddrisu, A.M.; Mensah, S.; Boafo, F.; Yeluripati, G.R.; Kudjo, P. A sentiment analysis framework to classify instances of sarcastic sentiments within the aviation sector. International Journal of Information Management Data Insights 2023, 3. [CrossRef]

- Patel, A.; Oza, P.; Agrawal, S. Sentiment Analysis of Customer Feedback and Reviews for Airline Services using Language Representation Model. Procedia Computer Science 2023, 218, 2459–2467. [CrossRef]

- Rosenberg, E.; Tarazona, C.; Mallor, F.; Eivazi, H.; Pastor-Escuredo, D.; Fuso-Nerini, F.; Vinuesa, R. Sentiment analysis on Twitter data towards climate action. Results in Engineering 2023, 19. [CrossRef]

- Spinde, T.; Richter, E.; Wessel, M.; Kulshrestha, J.; Donnay, K. What do Twitter comments tell about news article bias? Assessing the impact of news article bias on its perception on Twitter. Online Social Networks and Media 2023, 37-38. [CrossRef]

- Rakhmanov, O. A Comparative Study on Vectorization and Classification Techniques in Sentiment Analysis to Classify Student-Lecturer Comments. Procedia Computer Science 2020, 178, 194–204. 9th International Young Scientists Conference in Computational Science, YSC2020, 05-12 September 2020. [CrossRef]

- Chen, L.; Kong, Y.; Lin, J. Trend Prediction Of Stock Industry Index Based On Financial Text. Procedia Computer Science 2022, 202, 105–110. International Conference on Identification, Information and Knowledge in the internet of Things, 2021. [CrossRef]

- Colon-Ruiz, C.; Segura-Bedmar, I. Comparing deep learning architectures for sentiment analysis on drug reviews. J Biomed Inform 2020, 110, 103539. [CrossRef]

- Basiri, M.E.; Nemati, S.; Abdar, M.; Asadi, S.; Acharya, U.R. A novel fusion-based deep learning model for sentiment analysis of COVID-19 tweets. Knowl Based Syst 2021, 228, 107242. [CrossRef]

- Meena, G.; Mohbey, K.K.; Kumar, S.; Lokesh, K. A hybrid deep learning approach for detecting sentiment polarities and knowledge graph representation on monkeypox tweets. Decision Analytics Journal 2023, 7. [CrossRef]

- Suhartono, D.; Purwandari, K.; Jeremy, N.; Philip, S.; Arisaputra, P.; Parmonangan, I. Deep neural networks and weighted word embeddings for sentiment analysis of drug product reviews. Procedia Computer Science 2023, 216, 664–671. [CrossRef]

- Baraniak, K.; Sydow, M. A dataset for Sentiment analysis of Entities in News headlines (SEN). Procedia Computer Science 2021, 192, 3627–3636. [CrossRef]

- Ahmed, Z.; Wang, J. A fine-grained deep learning model using embedded-CNN with BiLSTM for exploiting product sentiments. Alexandria Engineering Journal 2023, 65, 731–747. [CrossRef]

- Ye, L.; Yimeng, Y.; Wei, C. Analyzing Public Perception of Educational Books via Text Mining of Online Reviews. Procedia Computer Science 2023, 221, 617–625. [CrossRef]

- Atandoh, P.; Zhang, F.; Adu-Gyamfi, D.; Atandoh, P.H.; Nuhoho, R.E. Integrated deep learning paradigm for document-based sentiment analysis. Journal of King Saud University - Computer and Information Sciences 2023, 35. [CrossRef]

- Gunawan, L.; Anggreainy, M.; Wihan, L.; Santy, Lesmana, G.; Yusuf, S. Support vector machine based emotional analysis of restaurant reviews. Procedia Computer Science 2023, 216, 479–484. [CrossRef]

- Vasanth, K.; P., S.; Shete, V.; Ravi, C.N.; P, V. Dynamic Fusion of Text, Video and Audio models for Sentiment Analysis. Procedia Computer Science 2022, 215, 211–219. [CrossRef]

- George, M.; Murugesan, R. Improving sentiment analysis of financial news headlines using hybrid Word2Vec-TFIDF feature extraction technique. Procedia Computer Science 2024, 244, 1–8. 6th International Conference on AI in Computational Linguistics. [CrossRef]

- Valarmathi, B.; Gupta, N.S.; Karthick, V.; Chellatamilan, T.; Santhi, K.; Chalicheemala, D. Sentiment Analysis of Covid-19 Twitter Data using Deep Learning Algorithm. Procedia Computer Science 2024, 235, 3397–3407. International Conference on Machine Learning and Data Engineering (ICMLDE 2023). [CrossRef]

- Tanoto, K.; Gunawan, A.A.S.; Suhartono, D.; Mursitama, T.N.; Rahayu, A.; Ariff, M.I.M. Investigation of challenges in aspect-based sentiment analysis enhanced using softmax function on twitter during the 2024 Indonesian presidential election. Procedia Computer Science 2024, 245, 989–997. 9th International Conference on Computer Science and Computational Intelligence 2024 (ICCSCI 2024). [CrossRef]

- Greeshma, M.; Simon, P. Bidirectional Gated Recurrent Unit with Glove Embedding and Attention Mechanism for Movie Review Classification. Procedia Computer Science 2024, 233, 528–536. 5th International Conference on Innovative Data Communication Technologies and Application (ICIDCA 2024). [CrossRef]

- Taneja, K.; Vashishtha, J.; Ratnoo, S. Transformer Based Unsupervised Learning Approach for Imbalanced Text Sentiment Analysis of E-Commerce Reviews. Procedia Computer Science 2024, 235, 2318–2331. International Conference on Machine Learning and Data Engineering (ICMLDE 2023). [CrossRef]

- Fazil, M.; Khan, S.; Albahlal, B.M.; Alotaibi, R.M.; Siddiqui, T.; Shah, M.A. Attentional Multi-Channel Convolution With Bidirectional LSTM Cell Toward Hate Speech Prediction. IEEE Access 2023, 11, 16801–16811. [CrossRef]

- Zhai, G.; Yang, Y.; Wang, H.; Du, S. Multi-attention fusion modeling for sentiment analysis of educational big data. Big Data Mining and Analytics 2020, 3, 311–319. [CrossRef]

- Rajagukguk, S.A.; Prabowo, H.; Bandur, A.; Setiowati, R. Higher Educational Institution (HEI) Promotional Management Support System Through Sentiment Analysis for Student Intake Improvement. IEEE Access 2023, 11, 77779–77792. [CrossRef]

- Mishev, K.; Gjorgjevikj, A.; Vodenska, I.; Chitkushev, L.T.; Trajanov, D. Evaluation of Sentiment Analysis in Finance: From Lexicons to Transformers. IEEE Access 2020, 8, 131662–131682. [CrossRef]

- Parekh, R.; Patel, N.P.; Thakkar, N.; Gupta, R.; Tanwar, S.; Sharma, G.; Davidson, I.E.; Sharma, R. DL-GuesS: Deep Learning and Sentiment Analysis-Based Cryptocurrency Price Prediction. IEEE Access 2022, 10, 35398–35409. [CrossRef]

- Yekrangi, M.; Nikolov, N.S. Domain-Specific Sentiment Analysis: An Optimized Deep Learning Approach for the Financial Markets. IEEE Access 2023, 11, 70248–70262. [CrossRef]

- Han, Y.; Liu, M.; Jing, W. Aspect-Level Drug Reviews Sentiment Analysis Based on Double BiGRU and Knowledge Transfer. IEEE Access 2020, 8, 21314–21325. [CrossRef]

- Sweidan, A.H.; El-Bendary, N.; Al-Feel, H. Sentence-Level Aspect-Based Sentiment Analysis for Classifying Adverse Drug Reactions (ADRs) Using Hybrid Ontology-XLNet Transfer Learning. IEEE Access 2021, 9, 90828–90846. [CrossRef]

- Bengesi, S.; Oladunni, T.; Olusegun, R.; Audu, H. A Machine Learning-Sentiment Analysis on Monkeypox Outbreak: An Extensive Dataset to Show the Polarity of Public Opinion From Twitter Tweets. IEEE Access 2023, 11, 11811–11826. [CrossRef]

- Duan, G.; Yan, S.; Zhang, M. A Hybrid Neural Network Model for Sentiment Analysis of Financial Texts Using Topic Extraction, Pre-Trained Model, and Enhanced Attention Mechanism Methods. IEEE Access 2024, 12, 98207–98224. [CrossRef]

- Kumar, L.K.; Thatha, V.N.; Udayaraju, P.; Siri, D.; Kiran, G.U.; Jagadesh, B.N.; Vatambeti, R. Analyzing Public Sentiment on the Amazon Website: A GSK-Based Double Path Transformer Network Approach for Sentiment Analysis. IEEE Access 2024, 12, 28972–28987. [CrossRef]

- Maroof, A.; Wasi, S.; Jami, S.I.; Siddiqui, M.S. Aspect-Based Sentiment Analysis for Service Industry. IEEE Access 2024, 12, 109702–109713. [CrossRef]

- Sherin, A.; Jasmine Selvakumari Jeya, I.; Deepa, S.N. Enhanced Aquila Optimizer Combined Ensemble Bi-LSTM-GRU With Fuzzy Emotion Extractor for Tweet Sentiment Analysis and Classification. IEEE Access 2024, 12, 141932–141951. [CrossRef]

- Rahman, B.; Maryani. Optimizing Customer Satisfaction Through Sentiment Analysis: A BERT-Based Machine Learning Approach to Extract Insights. IEEE Access 2024, 12, 151476–151489. [CrossRef]

- Du, K.; Xing, F.; Cambria, E. Incorporating Multiple Knowledge Sources for Targeted Aspect-based Financial Sentiment Analysis. ACM Transactions on Management Information Systems 2023, 14, 1–24. [CrossRef]

- Perti, A.; Sinha, A.; Vidyarthi, A. Cognitive Hybrid Deep Learning-based Multi-modal Sentiment Analysis for Online Product Reviews. ACM Transactions on Asian and Low-Resource Language Information Processing 2023. [CrossRef]

- Dang, C.N.; Moreno-García, M.N.; De la Prieta, F.; Nguyen, K.V.; Ngo, V.M. Sentiment Analysis for Vietnamese – Based Hybrid Deep Learning Models 2023. pp. 293–303.

- Swaileh A. Alzaidi, M.; Alshammari, A.; Almanea, M.; Al-khawaja, H.A.; Al Sultan, H.; Alotaibi, S.; Almukadi, W. A Text-Inception-Based Natural Language Processing Model for Sentiment Analysis of Drug Experiences. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2024. [CrossRef]

- Vadivu, S.V.; Nagaraj, P.; Murugan, B.S. Opinion Mining on Social Media Text Using Optimized Deep Belief Networks. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2024. [CrossRef]

- Gamal, D.; Alfonse, M.; M. El-Horbaty, E.S.; M. Salem, A.B. Analysis of Machine Learning Algorithms for Opinion Mining in Different Domains. Machine Learning and Knowledge Extraction 2019, 1, 224–234. [CrossRef]

- Palak Baid, A.G.; Chaplot, N. Sentiment Analysis of Movie Reviews Using Machine Learning Techniques. International Journal of Computer Applications 2017.

- Nehal Mohamed Ali, M.; Youssif. Sentiment analysis for movies reviews dataset using deep learning models. International Journal of Data Mining and Knowledge Management Process 2019, 09, 19–27. [CrossRef]

- Nguyen, P.T.; Le, L.T.; Ngo, V.M.; Nguyen, P.M. Using Entity Relations for Opinion Mining of Vietnamese Comments. CoRR 2019, abs/1905.06647, [1905.06647].

- Nguyen, H.L.; Pham, H.T.N.; Ngo, V.M. Opinion Spam Recognition Method for Online Reviews using Ontological Features. CoRR 2018, abs/1807.11024, [1807.11024].

- Ngo, V.M.; Gajula, R.; Thorpe, C.; Mckeever, S. Discovering child sexual abuse material creators’ behaviors and preferences on the dark web. Child Abuse & Neglect 2024, 147, 106558. [CrossRef]

- Ngo, V.M.; Cao, T.H. Discovering Latent Concepts and Exploiting Ontological Features for Semantic Text Search. In Proceedings of the 5th International Joint Conference on Natural Language Processing, 2011, pp. 571–579.

- Cao, T.H.; Ngo, V.M. Semantic search by latent ontological features. New Generation Computing 2012, 30, 53–71. [CrossRef]

- Ngo, V.M.; Munnelly, G.; Orlandi, F.; Crooks, P. A Semantic Search Engine for Historical Handwritten Document Images. In Proceedings of the Linking Theory and Practice of Digital Libraries: 25th International Conference on Theory and Practice of Digital Libraries, TPDL 2021, Virtual Event, September 13–17, 2021, Proceedings. Springer Nature, 2021, Vol. 12866, p. 60. [CrossRef]

- Ngo, V.M.; Bolger, E.; Goodwin, S.; O’Sullivan, J.; Cuong, D.V.; Roantree, M. A Graph Based Raman Spectral Processing Technique for Exosome Classification. In Proceedings of the Artificial Intelligence in Medicine; Bellazzi, R.; et al., Eds. Springer, 2025, pp. 344–354. [CrossRef]

| Publisher | The number of literatures | ||

|---|---|---|---|

| with search | After | After reviewing | After reviewing |

| criteria | downloading | title and abstract | full text |

| Springer | 1,886 | 64 | 16 (Res: 14, Sur: 2) |

| Elsevier | 2,066 | 91 | 22 (Res: 21, Sur: 1) |

| IEEE | 465 | 39 | 14 (Res: 14, Sur: 0) |

| ACM | 292 | 12 | 5 (Res: 5, Sur: 0) |

| Total | 4,709 | 206 | 57 (Res: 54, Sur: 3) |

| Others with unlimited publisher and pub. year | 8 (Res: 5, Sur: 3) | ||

| Final Review | 65 (Res: 59, Sur: 6) | ||

| No | Study | Domains | Year | Task | Models and Accuracy |

|---|---|---|---|---|---|

| Springer | |||||

| 1 | Chakravarthi et al. [9] | Detection | 2022 | new-m | Corpus+DL, F1: 0.75 |

| 2 | Balshetwar et al. [10] | Detection | 2023 | new-m | Corpus+ML, Acc: 0.98 |

| 3 | Dake et al. [11] | Education | 2022 | comp | ML, Acc: 0.63 |

| 4 | Nti et al. [12] | Finance | 2021 | new-m | FE (DL)+DL, Acc: 0.98 |

| 5 | Colasanto et al. [13] | Finance | 2022 | impr | DL |

| 6 | Windsor et al. [14] | Finance | 2022 | new-m | DL, R2: 0.9848 |

| 7 | Hajek et al. [15] | Finance | 2023 | new-m | FE (DL)+DL, Acc: 0.954 |

| 8 | Kaplan et al. [16] | Finance | 2023 | new-m | DL, Acc: 0.98 |

| 9 | Bansal et al. [17] | Health | 2021 | new-m | Corpus-based/NLP |

| 10 | Capuano et al. [18] | Management | 2020 | impr | DL, Acc: 0.8 |

| 11 | Yan et al. [19] | Technology | 2023 | new-m | DL, Acc: 0.89 |

| 12 | Areeba et al. [20] | Health | 2024 | impr | DL, Acc: 0.87 |

| 13 | Bola et al. [21] | Politics | 2024 | new-m | FE (DL)+DL, Acc: 0.92 |

| 14 | Muhamet et al. [22] | Technology | 2024 | new-m | Corpus+DL, F1: 0.71 |

| Elsevier | |||||

| 1 | Iddrisu et al. [23] | Product | 2023 | impr | Corpus+ML, Acc: 0.99 |

| 2 | Patel et al. [24] | Product | 2023 | comp | DL, Acc: 0.83 |

| 3 | Rosenberg et al. [25] | Detection | 2023 | expe | DL, Acc: 0.69 |

| 4 | Spinde et al. [26] | Detection | 2023 | expe | FE (DL)+DL, Acc: 0.95 |

| 5 | Rakhmanov [27] | Education | 2020 | expe | Corpus+ML, Acc: 0.96 |

| 6 | Chen et al. [28] | Finance | 2022 | expe | DL, R2: 0.63 |

| 7 | Colon-Ruiz et al. [29] | Health | 2020 | comp | FE (DL)+DL, F1: 0.947 |

| 8 | Basiri et al. [30] | Health | 2021 | new-m | FE (DL)+DL, Acc: 0.858 |

| 9 | Meena et al. [31] | Health | 2023 | expe | FE (DL)+DL, Acc: 0.94 |

| 10 | Suhartono et al. [32] | Health | 2023 | comp | Corpus+DL, Acc: 0.84 |

| 11 | Baraniak et al. [33] | Politics | 2021 | expe | DL, Acc: 0.51 |

| 12 | Ahmed et al. [34] | Product | 2022 | expe | FE (DL)+DL, Acc: 0.83 |

| 13 | Ye et al. [35] | Product | 2023 | new-m | DL |

| 14 | Atandoh et al. [36] | Product | 2023 | new-m | FE (DL)+DL, Acc: 0.95 |

| 15 | Gunawan et al. [37] | Product | 2023 | expe | ML, Acc: 0.79 |

| 16 | Vasanth et al. [38] | Technology | 2022 | new-m | DL |

| 17 | Meera et al. [39] | Finance | 2024 | impr | Corpus+ML, Acc: 0.82 |

| 18 | Valarmathi et al. [40] | Health | 2024 | impr | Corpus+DL, Acc: 0.96 |

| 19 | Kevin et al. [41] | Politics | 2024 | expe | ML(Acc: 0.72), DL(Acc: 0.71) |

| 20 | Greeshma et al. [42] | Product | 2024 | new-m | Corpus+DL, Acc: 0.98 |

| 21 | Khushboo et al. [43] | Product | 2024 | comp | DL, Acc: 0.96 |

| IEEE | |||||

| 1 | Fazil et al. [44] | Detection | 2023 | new-m | FE (DL)+DL, Acc: 0.94 |

| 2 | Zhai et al. [45] | Education | 2020 | new-m | FE (DL)+DL, Acc: 0.946 |

| 3 | Rajagukguk et al. [46] | Education | 2023 | expe | DL, Acc: 0.927 |

| 4 | Mishev et al. [47] | Finance | 2020 | expe | DL, Acc: 0.947 |

| 5 | Parekh et al. [48] | Finance | 2022 | new-m | Corpus+DL |

| 6 | Yekrangi et al. [49] | Finance | 2023 | impr | FE (DL)+DL, Acc: 0.84 |

| 7 | Han et al. [50] | Health | 2020 | comp | DL, Acc: 0.78 |

| 8 | Sweidan et al. [51] | Health | 2021 | new-m | Corpus+DL, Acc: 0.98 |

| 9 | Bengesi et al. [52] | Health | 2023 | expe | Corpus+ML, Acc: 0.93 |

| 10 | Ganglong et al. [53] | Finance | 2024 | impr | FE (DL)+DL, Acc: 0.95 |

| 11 | Kumar et al. [54] | Product | 2024 | new-m | Corpus+DL, Acc: 0.95 |

| 12 | Maroof et al. [55] | Product | 2024 | comp | ML(Acc: 0.91) DL(Acc: 0.92) |

| 13 | Sherin et al. [56] | Product | 2024 | new-m | FE (DL)+DL(Acc: 0.94) |

| 14 | Rahman et al. [57] | Product | 2024 | comp | DL(Acc: 0.95) |

| ACM | |||||

| 1 | Du et al. [58] | Finance | 2023 | new-m | DL, R2: 0.71 |

| 2 | Perti et al. [59] | Product | 2023 | expe | FE (DL)+ML, Acc: 0.845 |

| 3 | Dang et al. [60] | Education | 2023 | new-m | FE (DL)+ML, Acc: 0.93 |

| 4 | Muhammad et al. [61] | Health | 2024 | new-m | Corpus+DL, Acc: 0.99 |

| 5 | Vadivu et al. [62] | Detection | 2024 | new-m | DL, Acc: 0.89 |

| Others | |||||

| 1 | Gamal et al. [63] | Product | 2018 | comp | Corpus+ML, Acc: 0.87-0.99 |

| 2 | Palak Baid et al. [64] | Product | 2017 | comp | ML, Acc: 0.81 |

| 3 | Ali et al. [65] | Product | 2019 | comp | FE (DL)+DL, Acc: 0.89 |

| 4 | Nguyen et al. [66] | Product | 2019 | new-m | Corpus-based/NLP, Acc: 0.84 |

| 5 | Nguyen et al. [67] | Detection | 2019 | new-m | Corpus-based/NLP, Acc: 0.90 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).