Submitted: 01 October 2025

Posted: 01 October 2025


Abstract

Keywords:

1. Introduction

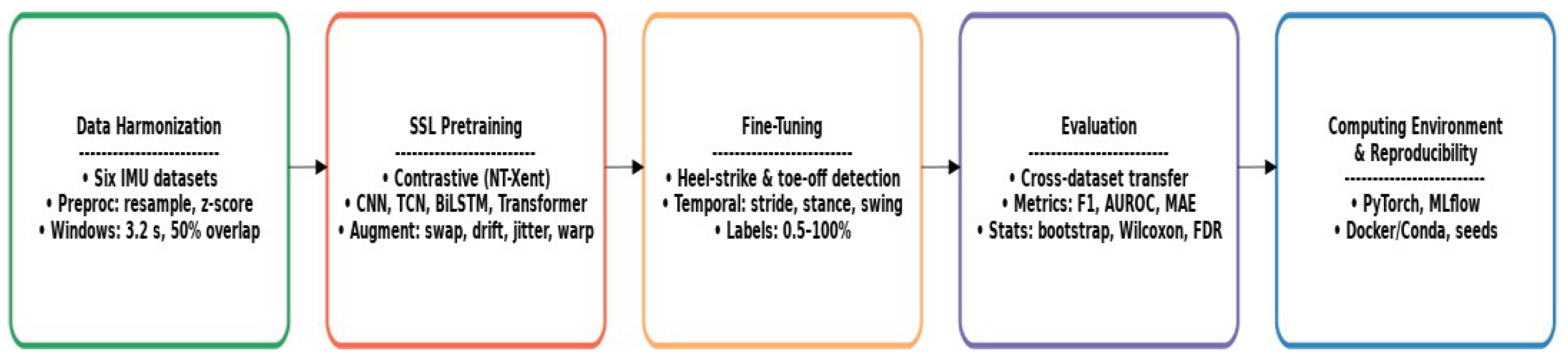

2. Materials and Methods

2.1. Data and Harmonization

2.2. SSL Encoder (Backbone)

- 1D-CNN (dilated): the first backbone was a one-dimensional dilated convolutional neural network with 6→64→128→256 channels, kernel sizes of 7/7/5, dilations of 1/2/4, and a stride of 2 in the first block. GELU activations and residual connections were applied throughout, followed by global average pooling to obtain a 256-dimensional embedding.

- Tiny Transformer: the second backbone was a lightweight Transformer. Input windows were patchified with stride 2 (patch length 4) and processed through four encoder blocks with hidden dimension 256, 4 attention heads, and dropout of 0.1. A [CLS] token representation was pooled into a 256-dimensional embedding. A two-layer MLP (256→256→128) with batch normalization then projected embeddings into the contrastive space.
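The layer hyperparameters listed above fix two quantities that are useful when sizing input windows: the receptive field of the dilated CNN and the number of patch tokens seen by the Transformer. The following is a minimal sketch of that arithmetic, not the authors' implementation. It assumes that only the first convolutional block is strided, that patches are extracted without padding, and that windows are 3.2 s long as stated in Section 2.7; the function names are hypothetical.

```python
# Illustrative arithmetic only; function names and padding behaviour are assumptions.

def receptive_field(layers):
    """Receptive field (in input samples) of stacked 1D convolutions.

    layers: list of (kernel_size, dilation, stride) tuples, input to output.
    """
    rf, jump = 1, 1  # jump = spacing, in input samples, between adjacent outputs
    for kernel, dilation, stride in layers:
        rf += (kernel - 1) * dilation * jump
        jump *= stride
    return rf


def num_patches(n_samples, patch_len, stride):
    """Number of patches when sliding a window without padding."""
    return (n_samples - patch_len) // stride + 1


# Kernels 7/7/5, dilations 1/2/4, stride 2 in the first block only (assumed 1 elsewhere).
CNN_LAYERS = [(7, 1, 2), (7, 2, 1), (5, 4, 1)]
WINDOW_SECONDS = 3.2

if __name__ == "__main__":
    print("CNN receptive field (samples):", receptive_field(CNN_LAYERS))
    for rate_hz in (25, 50, 100):
        n = round(WINDOW_SECONDS * rate_hz)
        tokens = num_patches(n, patch_len=4, stride=2)
        print(f"{rate_hz} Hz: {n} samples per window, {tokens} patch tokens + [CLS]")
```

Under these assumptions each output unit of the CNN covers 63 input samples, so at low sampling rates the receptive field spans most of the window before global average pooling is applied.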

2.3. Sensor-Aware Augmentations

2.4. Downstream Heads and Labels

2.5. Baselines

2.6. Evaluation Protocol and Statistics

- a linear probe, in which the backbone was frozen and only a logistic or linear head was trained;

- few-shot fine-tuning, where 1%, 5%, or 10% of labeled windows from D were used with subject-stratified sampling.
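One way to realize subject-stratified sampling of a labeled fraction is sketched below. It is an illustration under an explicit assumption: "subject-stratified" is interpreted here as drawing the same fraction of windows from every subject, with at least one window per subject. The function name and arguments are hypothetical and are not taken from the paper.

```python
import random
from collections import defaultdict


def subject_stratified_sample(subject_ids, fraction, seed=0):
    """Return sorted indices of a labeled subset drawn per subject.

    subject_ids: one subject identifier per window.
    fraction:    labeled fraction, e.g. 0.01, 0.05 or 0.10.
    seed:        fixed seed so the subset is reproducible.
    """
    rng = random.Random(seed)
    by_subject = defaultdict(list)
    for index, subject in enumerate(subject_ids):
        by_subject[subject].append(index)

    chosen = []
    for subject in sorted(by_subject):  # sorted for a deterministic draw order
        indices = by_subject[subject]
        k = max(1, round(fraction * len(indices)))  # assumption: at least one window
        chosen.extend(rng.sample(indices, k))
    return sorted(chosen)


if __name__ == "__main__":
    ids = ["s1"] * 200 + ["s2"] * 100
    subset = subject_stratified_sample(ids, fraction=0.10, seed=42)
    print(len(subset), "windows selected")
```

Sampling per subject keeps every participant represented in the small labeled subset, which a purely random draw over windows does not guarantee at the 1% level.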

2.7. Computing Environment and Reproducibility

- Hardware. Experiments were performed on high-performance workstations equipped with NVIDIA RTX 3090/4090 and A6000 GPUs (24–48 GB VRAM), 64–128 GB RAM, and ≥1 TB SSD storage.

- Software. The implementation used Python 3.10 and PyTorch 2.x with CUDA 12.x/cuDNN. Core libraries included NumPy, Pandas, SciPy, Scikit-learn, and TorchMetrics. Configuration management was handled with Hydra; MLflow and Weights & Biases were used for experiment logging and tracking. The environment was version-controlled with Git and containerized with Docker/Conda for portability.

- Data pipeline. Raw IMU signals underwent axis harmonization, z-score normalization, resampling at 25, 50, or 100 Hz, and segmentation into 3.2-s windows. SSL pretraining was performed on unlabeled windows with sensor-aware augmentations (axis-swap, drift, jitter, time-warp). Fine-tuning added classification (gait events) and regression heads (stride-time error).

- Evaluation protocol. Performance was assessed with F1, precision, recall, AUROC, and mean absolute error (MAE). Model size (parameters in M), inference latency, and convergence speed (epochs to optimal validation) were also monitored. Each condition was repeated in triplicate with fixed random seeds.

- Statistical analysis. All evaluations used non-parametric bootstrap confidence intervals (5,000 iterations) and paired Wilcoxon signed-rank tests with Benjamini–Hochberg FDR control (α = 0.05). Standardized effect sizes (Cohen’s d) were reported together with absolute gains (ΔF1, ΔMAE), so that both statistical and practical significance can be judged.

- Reproducibility. All metrics, results, and figures were automatically logged in MLflow, together with configuration files. Complete environment specifications (Docker and Conda manifests) are provided to enable deterministic replication of the experiments across different systems. All experiments were repeated with fixed random seeds controlling initialization, data splits, and augmentation draws, ensuring strict reproducibility across runs.
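The statistical steps named above (percentile bootstrap intervals, Benjamini–Hochberg adjustment, and Cohen's d) can be sketched with the Python standard library alone. This is a minimal illustration, not the authors' analysis code. The percentile form of the bootstrap and the paired-difference form of Cohen's d are assumptions, since the text does not specify the variants used. The Wilcoxon signed-rank test itself is omitted; in practice it is available as `scipy.stats.wilcoxon`.

```python
import random
from statistics import mean, stdev


def bootstrap_ci(diffs, n_boot=5000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for the mean of paired differences."""
    rng = random.Random(seed)
    n = len(diffs)
    stats = sorted(mean(rng.choices(diffs, k=n)) for _ in range(n_boot))
    lower = stats[int((alpha / 2) * n_boot)]
    upper = stats[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


def cohens_d_paired(diffs):
    """Cohen's d for paired samples: mean difference over SD of differences."""
    return mean(diffs) / stdev(diffs)


def benjamini_hochberg(p_values):
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):  # walk from the largest p-value down
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


if __name__ == "__main__":
    # Hypothetical per-fold F1 differences (SSL minus supervised), for illustration.
    f1_diffs = [0.05, 0.07, 0.06, 0.08, 0.04]
    print("95% CI:", bootstrap_ci(f1_diffs))
    print("Cohen's d:", round(cohens_d_paired(f1_diffs), 2))
    print("BH-adjusted:", benjamini_hochberg([0.01, 0.04, 0.03, 0.005]))
```

A comparison is then declared significant when its adjusted p-value falls below α = 0.05.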

2.8. Experiments

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Bonanno, M.; De Nunzio, A.M.; Quartarone, A.; Militi, A.; Petralito, F.; Calabrò, R.S. Gait Analysis in Neurorehabilitation: From Research to Clinical Practice. Bioengineering 2023, 10, 785. [CrossRef]

- Canonico, G.; Duraccio, V.; Esposito, L.; Fraldi, M.; Percuoco, G.; Riccio, M.; Quitadamo, L.R.; Lanzotti, A. Gait Monitoring and Analysis: A Mathematical Approach. Sensors 2023, 23, 7743. [CrossRef]

- Young, F.; Mason, R.; Morris, R.E.; Stuart, S.; Godfrey, A. IoT-Enabled Gait Assessment: The Next Step for Habitual Monitoring. Sensors 2023, 23, 4100. [CrossRef]

- Prisco, G.; Pirozzi, M.A.; Santone, A.; Esposito, F.; Cesarelli, M.; Amato, F.; Donisi, L. Validity of Wearable Inertial Sensors for Gait Analysis: A Systematic Review. Diagnostics 2025, 15, 36. [CrossRef]

- Boutaayamou, M.; Azzi, S.; Desailly, E.; Dumas, R. Toward Convenient and Accurate IMU-Based Gait Analysis: A Minimal-Sensor Approach. Sensors 2025, 25, 1267. [CrossRef]

- Roggio, F.; Di Grande, S.; Cavalieri, S.; Falla, D.; Musumeci, G. Biomechanical Posture Analysis in Healthy Adults with Machine Learning: Applicability and Reliability. Sensors 2024, 24, 2929. [CrossRef]

- Mănescu, D.C. Elements of the specific conditioning in football at university level. Marathon 2015, 7(1), 107-111.

- Mănescu, D.C. Alimentaţia în fitness şi bodybuilding. 2010, Editura ASE.

- Mănescu, D.C. Nutritional tips for muscular mass hypertrophy. Marathon 2016, 8(1), 79-83.

- Gastaldi, L.; Pastorelli, S.; Rosati, S. Recent Advances and Applications of Wearable Inertial Measurement Units in Human Motion Analysis. Sensors 2025, 25, 818. [CrossRef]

- Mănescu, A.M.; Grigoroiu, C.; Smîdu, N.; Dinicu, C.C.; Mărgărit, I.R.; Iacobini, A.; Mănescu, D.C. Biomechanical Effects of Lower Limb Asymmetry During Running: An OpenSim Computational Study. Symmetry 2025, 17, 1348. [CrossRef]

- Liu, Y.; Liu, X.; Zhu, Q.; Chen, Y.; Yang, Y.; Xie, H.; Wang, Y.; Wang, X. Adaptive Detection in Real-Time Gait Analysis through the Dynamic Gait Event Identifier. Bioengineering 2024, 11, 806. [CrossRef]

- Gouda, A.; Andrysek, J. Rules-Based Real-Time Gait Event Detection Algorithm for Lower-Limb Prosthesis Users during Level-Ground and Ramp Walking. Sensors 2022, 22, 8888. [CrossRef]

- Lu, Y.; Zhu, J.; Chen, W.; Ma, X. Inertial Measurement Unit-Based Real-Time Adaptive Algorithm for Human Walking Pattern and Gait Event Detection. Electronics 2023, 12, 4319. [CrossRef]

- Prasanth, H.; Caban, M.; Keller, U.; Courtine, G.; Ijspeert, A.; Vallery, H.; von Zitzewitz, J. Wearable Sensor-Based Real-Time Gait Detection: A Systematic Review. Sensors 2021, 21, 2727. [CrossRef]

- Tao, W.; Liu, T.; Zheng, R.; Feng, H. Gait Analysis Using Wearable Sensors. Sensors 2012, 12, 2255–2283. [CrossRef]

- Mannini, A.; Sabatini, A.M. Machine Learning Methods for Classifying Human Physical Activity from On-Body Accelerometers. Sensors 2010, 10, 1154–1175. [CrossRef]

- Mănescu, D.C. Big Data Analytics Framework for Decision-Making in Sports Performance Optimization. Data 2025, 10, 116. [CrossRef]

- Romijnders, R.; Harms, H.; van Dieën, J.H.; Hof, A.L.; Pijnappels, M. A Deep Learning Approach for Gait Event Detection From a Single Shank-Mounted IMU. Sensors 2022, 22, 3859. [CrossRef]

- Ren, J.; Wang, A.; Li, H.; Yue, X.; Meng, L. A Transformer-Based Neural Network for Gait Prediction in Lower Limb Exoskeleton Robots Using Plantar Force. Sensors 2023, 23, 6547. [CrossRef]

- Wang, J.; Ma, H.; Zhou, J.; Liu, H.; Yan, Y.; Xiong, W. A Multimodal CNN–Transformer Network for Gait Pattern Recognition. Electronics 2025, 14, 1537. [CrossRef]

- Mogan, J.N.; Lee, C.P.; Lim, K.M.; Ali, M.; Alqahtani, A. Gait-CNN-ViT: Multi-Model Gait Recognition with Convolutional Neural Networks and Vision Transformer. Sensors 2023, 23, 3809. [CrossRef]

- Jung, D.; Kim, J.; Park, Y.; Lee, H.; Choi, M.; Seo, K. Multi-Model Gait-Based Knee Adduction Moment Prediction System Using IMU Sensor Data and LSTM RNN. Applied Sciences 2024, 14, 10721. [CrossRef]

- Shi, Y.; Ying, X.; Yang, J. Deep Unsupervised Domain Adaptation with Time Series Sensor Data: A Survey. Sensors 2022, 22, 5507. [CrossRef]

- Powell, K.; Amer, A.; Glavcheva-Laleva, Z.; Williams, J.; O’Flaherty Farrell, C.; Harwood, F.; Bishop, P.; Holt, C. MoveLab®: Validation and Development of Novel Cross-Platform Gait and Mobility Assessments Using Gold Standard Motion Capture and Clinical Standard Assessment. Sensors 2025, 25, 5706. [CrossRef]

- Li, C.; Wang, B.; Li, Y.; Liu, B. A Lightweight Pathological Gait Recognition Approach Based on a New Gait Template in Side-View and Improved Attention Mechanism. Sensors 2024, 24, 5574. [CrossRef]

- Mănescu, D.C. Computational Analysis of Neuromuscular Adaptations to Strength and Plyometric Training: An Integrated Modeling Study. Sports 2025, 13, 298. [CrossRef]

- Hwang, S.; Kim, J.; Yang, S.; Moon, H.-J.; Cho, K.-H.; Youn, I.; Sung, J.-K.; Han, S. Machine Learning Based Abnormal Gait Classification with IMU Considering Joint Impairment. Sensors 2024, 24, 5571. [CrossRef]

- Pang, L.; Li, Y.; Liao, M.-X.; Qiu, J.-G.; Li, H.; Wang, Z.; Sun, G. A Feasibility Study of Domain Adaptation for Exercise Intensity Recognition Based on Wearable Sensors. Sensors 2025, 25, 3437. [CrossRef]

- Chang, Y.; Mathur, A.; Isopoussu, A.; Song, J.; Kawsar, F. A Systematic Study of Unsupervised Domain Adaptation for Robust Human-Activity Recognition. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2020, 4(1), 3380985. [CrossRef]

- Mu, F.; Gu, X.; Guo, Y.; Lo, B. Unsupervised Domain Adaptation for Position-Independent IMU Based Gait Analysis. Proceedings of IEEE SENSORS 2020; Rotterdam, The Netherlands; pp. 1–4. [CrossRef]

- Guo, Y.; Gu, X.; Yang, G.-Z. MCDCD: Multi-Source Unsupervised Domain Adaptation for Abnormal Human Gait Detection. IEEE Journal of Biomedical and Health Informatics 2021, 25(10), 4017–4028. [CrossRef]

- Mănescu, D.C.; Mănescu, A.M. Artificial Intelligence in the Selection of Top-Performing Athletes for Team Sports: A Proof-of-Concept Predictive Modeling Study. Appl. Sci. 2025, 15, 9918. [CrossRef]

- Haresamudram, H.; Essa, I.; Plötz, T. Towards Learning Discrete Representations via Self-Supervised Learning for Wearables. Sensors 2024, 24, 1238. [CrossRef]

- Sheng, T.; Huber, M. Reducing Label Dependency in Human Activity Recognition with Wearables: From Supervised Learning to Novel Weakly Self-Supervised Approaches. Sensors 2025, 25, 4032. [CrossRef]

- Geissinger, J.H.; Kirchner, F.; Rossel, P.; et al. Motion Inference Using Sparse Inertial Sensors, Self-Supervised Machine Learning. Sensors 2020, 20, 6330. [CrossRef]

- Das, A.M.; Tang, C.I.; Kawsar, F.; Malekzadeh, M. PRIMUS: Pretraining IMU Encoders with Multimodal Self-Supervision. arXiv Preprint 2024. [CrossRef]

- Cosma, A.; Radoi, A. Exploring Self-Supervised Vision Transformers for Gait Recognition in the Wild. Sensors 2023, 23, 2680. [CrossRef]

- Roggio, F.; Trovato, B.; Sortino, M.; Musumeci, G. A comprehensive analysis of the machine learning pose estimation models used in human movement and posture analyses: A narrative review. Heliyon 2024, 10, e39977. [CrossRef]

- Zhang, M.; Huang, Y.; Liu, R.; Sato, Y. Masked Video and Body-worn IMU Autoencoder for Egocentric Action Recognition. arXiv Preprint 2024. [CrossRef]

- Manescu, D.C. Inteligencia Artificial en el entrenamiento deportivo de élite y perspectiva de su integración en el deporte escolar. Retos 2025, 73, 128–141. [CrossRef]

- Hasan, M.A.; Li, F.; Gouverneur, P.; Piet, A.; Grzegorzek, M. A comprehensive survey and comparative analysis of time series data augmentation in medical wearable computing. PLoS ONE 2025, 20(3), e0315343. [CrossRef]

- Ashfaq, N.; Anwar, A.; Khan, F.; Cheema, M.U. Identification of Optimal Data Augmentation Techniques for Multimodal Time Series Classification. Information 2024, 15, 343. [CrossRef]

- Tu, Y.-C.; Lin, C.-Y.; Liu, C.-P.; Chan, C.-T. Performance Analysis of Data Augmentation Approaches for Improving Wrist-Based Fall Detection System. Sensors 2025, 25, 2168. [CrossRef]

- Gastaldi, L.; Digo, E. Recent Advance and Application of Wearable Inertial Sensors in Motion Analysis. Sensors 2025, 25, 818. [CrossRef]

- Liu, Z.; Alavi, A.; Li, M.; Zhang, X. Self-Supervised Contrastive Learning for Medical Time Series: A Systematic Review. Sensors 2023, 23, 4221. [CrossRef]

- Chen, H.; Gouin-Vallerand, C.; Bouchard, K.; Gaboury, S.; Couture, M.; Bier, N.; Giroux, S. Contrastive Self-Supervised Learning for Sensor-Based Human Activity Recognition: A Review. IEEE Access 2024, PP, 1–1. [CrossRef]

- Montero Quispe, K.G.; Utyiama, D.M.S.; dos Santos, E.M.; Oliveira, H.A.B.F.; Souto, E.J.P. Applying Self-Supervised Representation Learning for Emotion Recognition Using Physiological Signals. Sensors 2022, 22, 9102. [CrossRef]

- Ding, L.; et al. Wearable Sensors-Based Intelligent Sensing and Data Acquisition: Challenges and Emerging Opportunities. Sensors 2025, 25, 4515. [CrossRef]

- Kwapisz, J.R.; Weiss, G.M.; Moore, S.A. Activity Recognition Using Cell Phone Accelerometers. ACM SIGKDD Explor. Newsl. 2011, 12(2), 74–82. [CrossRef]

- Reiss, A.; Stricker, D. Introducing a New Benchmarked Dataset for Activity Monitoring. In: 2012 16th Int. Symposium on Wearable Computers (ISWC); IEEE: Newcastle, UK, 2012; pp. 108–109. [CrossRef]

- Sikder, N.; Al Nahid, A. KU-HAR: An open dataset for heterogeneous human activity recognition. Pattern Recognit. Lett. 2021, 146, 46–54. [CrossRef]

- Baños, O.; García, R.; Saez, A. MHEALTH [Dataset]. UCI Machine Learning Repository, 2014. [CrossRef]

- Chavarriaga, R.; Sagha, H.; Calatroni, A.; Digumarti, S.T.; Tröster, G.; Millán, J.d.R.; Roggen, D. The Opportunity challenge: A benchmark database for on-body sensor-based activity recognition. Pattern Recognit. Lett. 2013, 34(15), 2033–2042. [CrossRef]

- Sztyler, T.; Stuckenschmidt, H. On-Body Localization of Wearable Devices: An Investigation of Position-Aware Activity Recognition. In: 2016 IEEE Int. Conf. on Pervasive Computing and Communications (PerCom); IEEE: Sydney, Australia, 2016; pp. 1–9. [CrossRef]

| Dataset | Reference / DOI | Subjects (n) | Sensor placement(s) | Sampling (Hz) | Event labels source | Notes |
|---|---|---|---|---|---|---|
| WISDM | Kwapisz et al., 2011 doi:10.1145/1964897.1964918 | 36 | smartphone at waist/hip | 50 | manual annotation | scripted walking |
| PAMAP2 | Reiss & Stricker, 2012 doi:10.1109/ISWC.2012.13 | 9 | wrist, chest, ankle IMUs | 100 | proxy (IMU-derived) | treadmill + daily activities |
| KU-HAR | Sikder & Nahid, 2021 doi:10.1016/j.patrec.2021.02.024 | 90 | smartphone at waist | 100 | manual annotation | scripted activities |
| mHealth | Banos et al., 2014 doi:10.24432/C5TW22 | 10 | chest, ankle, wrist (Shimmer) | 50 | footswitch sensors | controlled lab |
| OPPORTUNITY | Chavarriaga et al., 2013 doi:10.1016/j.patrec.2012.12.014 | 4 | wrist, back, hip + others | 30 | annotated HS/TO | daily activities scenario |
| RWHAR | Sztyler & Stuckenschmidt, 2016 doi:10.1109/PERCOM.2016.7456521 | 15 | smartphone in pocket | 50 | manual annotation | real-world free living |

| Hypothesis (H) | Experiment (E) | Research Question (RQ) |
|---|---|---|
| H1. Self-supervised pretraining improves gait event detection compared to supervised learning from scratch. | E1. Pretraining vs. supervised baseline within datasets. | RQ1. Does SSL provide consistent gains on event detection accuracy? |
| H2. SSL models transfer better across heterogeneous datasets than supervised models. | E2. Cross-dataset transfer evaluation. | RQ2. Does pretraining improve generalization across datasets? |
| H3. Larger unlabeled datasets for SSL pretraining yield stronger downstream performance. | E3. Scaling pretraining corpus size. | RQ3. What is the effect of unlabeled dataset size on downstream performance? |
| H4. SSL gains hold even with limited labeled data for fine-tuning. | E4. Fine-tuning with reduced labeled fractions. | RQ4. How does SSL behave when labeled data is scarce? |
| H5. SSL models improve detection of temporal gait phases beyond discrete events. | E5. Phase-level analysis of gait cycles. | RQ5. Does SSL improve accuracy in estimating stride, stance, and swing? |
| H6. SSL provides statistically significant improvements robust to evaluation method. | E6. Statistical testing across metrics and datasets. | RQ6. Are improvements statistically reliable across conditions? |

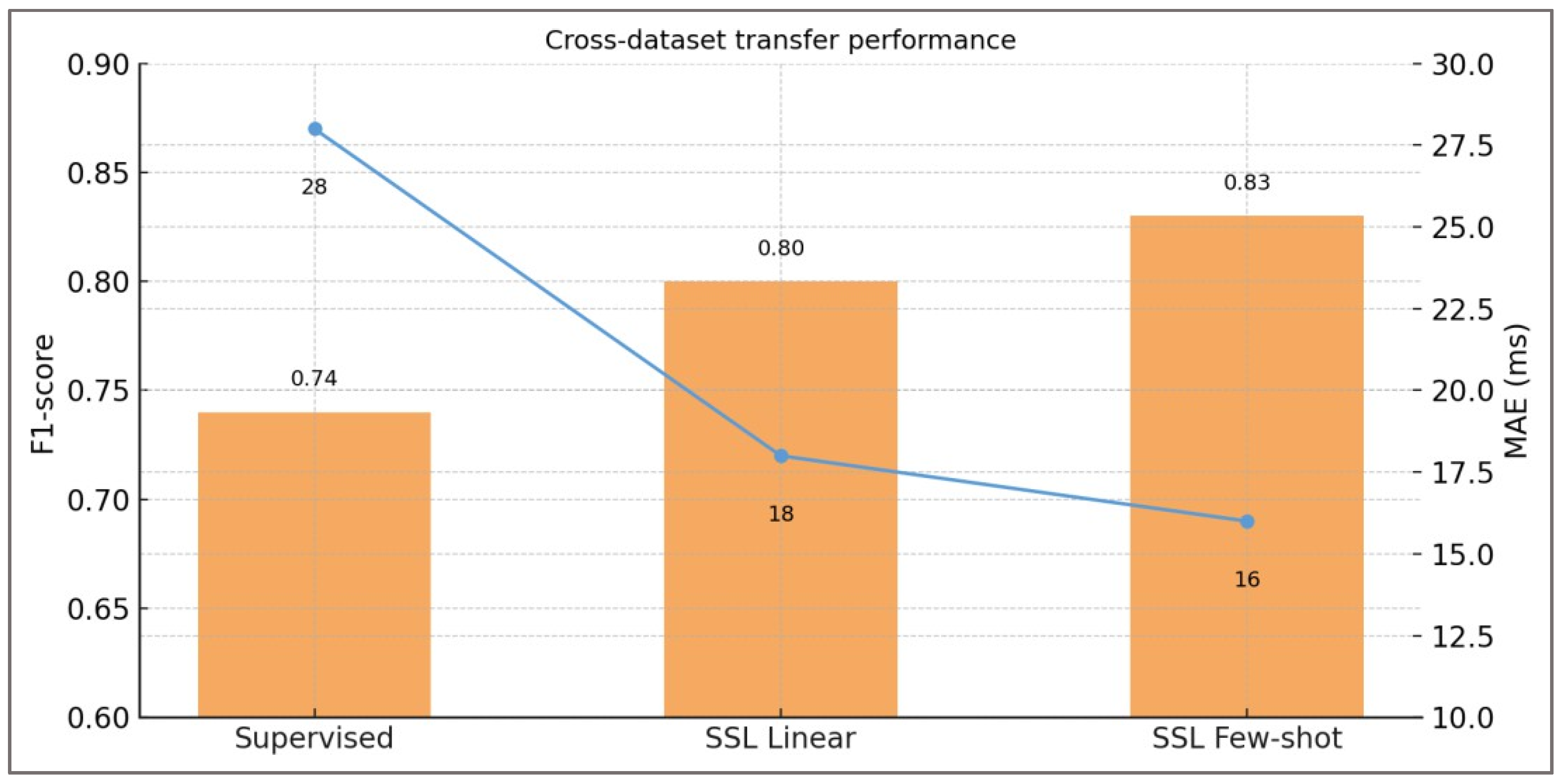

| Source → Target | Method | F1 (±95% CI) | AUROC (±95% CI) | MAE (ms ±95% CI) | Gain F1 (%) | Gain MAE (%) | Cohen’s d | p-value |
|---|---|---|---|---|---|---|---|---|
| Dataset A → B | Supervised baseline | 0.73 [0.71–0.75] | 0.81 [0.79–0.83] | 28 [26–30] | N/A | N/A | N/A | N/A |
| Dataset A → B | SSL Linear Probe | 0.80 [0.77–0.83] | 0.87 [0.84–0.90] | 18 [15–22] | +9.6 | −35.7 | 1.2 | <0.001 |
| Dataset A → B | SSL Few-shot (10%) | 0.83 [0.81–0.85] | 0.89 [0.87–0.91] | 15 [13–17] | +13.7 | −46.4 | 1.5 | <0.001 |
| Dataset A → C | Supervised baseline | 0.75 [0.73–0.77] | 0.83 [0.81–0.85] | 27 [25–29] | N/A | N/A | N/A | N/A |
| Dataset A → C | SSL Linear Probe | 0.81 [0.79–0.83] | 0.88 [0.86–0.90] | 19 [17–21] | +8.0 | −29.6 | 1.1 | <0.001 |
| Dataset A → C | SSL Few-shot (10%) | 0.84 [0.82–0.86] | 0.90 [0.88–0.92] | 16 [14–18] | +3.4 | −40.7 | 0.39 | <0.072 (ns) |
| Dataset B → C | Supervised baseline | 0.74 [0.72–0.76] | 0.82 [0.80–0.84] | 29 [27–31] | N/A | N/A | N/A | N/A |
| Dataset B → C | SSL Linear Probe | 0.79 [0.76–0.82] | 0.86 [0.84–0.88] | 20 [17–24] | +4.1 | −31.0 | 1.0 | <0.018 |
| Dataset B → C | SSL Few-shot (10%) | 0.83 [0.81–0.85] | 0.89 [0.87–0.91] | 16 [14–18] | +12.2 | −44.8 | 1.4 | <0.001 |
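The gain columns in the transfer table above are consistent with relative changes against the supervised baseline of the same source-target pair. The short sketch below reproduces the Dataset A → B entries from the reported F1 and MAE values. The helper name is hypothetical, and the relative-change formula is inferred from the numbers rather than stated in the text.

```python
def relative_gain(baseline, value):
    """Relative change of `value` with respect to `baseline`, in percent."""
    return 100.0 * (value - baseline) / baseline


if __name__ == "__main__":
    # Dataset A -> B, values copied from the table (F1 unitless, MAE in ms).
    baseline_f1, baseline_mae = 0.73, 28
    rows = {
        "SSL Linear Probe": (0.80, 18),
        "SSL Few-shot (10%)": (0.83, 15),
    }
    for method, (f1, mae) in rows.items():
        gain_f1 = round(relative_gain(baseline_f1, f1), 1)
        gain_mae = round(relative_gain(baseline_mae, mae), 1)
        print(f"{method}: Gain F1 = {gain_f1:+}%, Gain MAE = {gain_mae:+}%")
```

A negative MAE gain denotes a reduction in timing error, so more negative values are better in that column.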

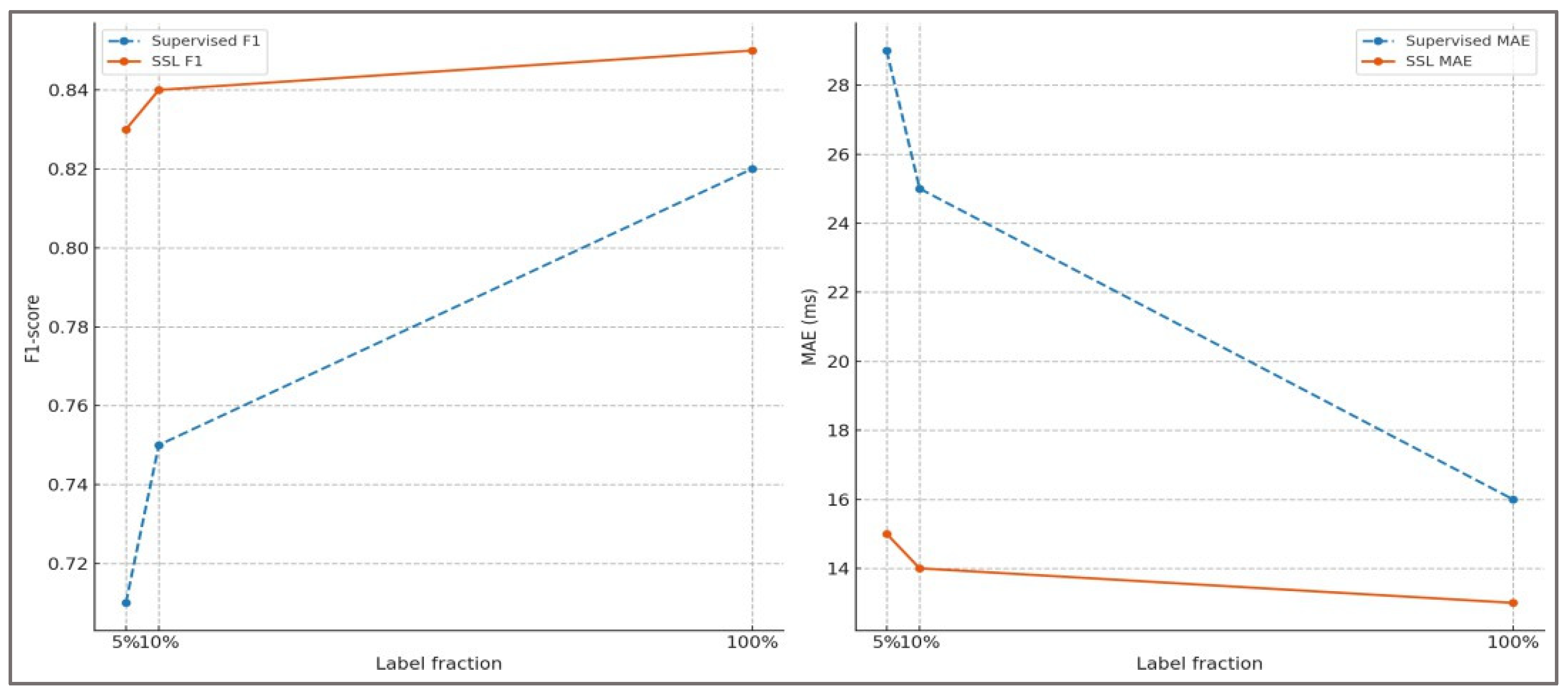

| Label fraction | Method | F1 (±95% CI) | AUROC (±95% CI) | MAE (ms ±95% CI) | Gain F1 (%) vs. supervised | Gain MAE (%) vs. supervised | Cohen’s d | p-value |
|---|---|---|---|---|---|---|---|---|
| 1% | Supervised baseline | 0.62 [0.59–0.65] | 0.71 [0.68–0.74] | 29 [25–33] | Ref. | Ref. | Ref. | Ref. |
| 1% | SSL Pretrained | 0.73 [0.70–0.76] | 0.82 [0.79–0.85] | 20 [17–24] | +17.7 | −31.0 | 1.3 | < 0.001 |
| 5% | Supervised baseline | 0.72 [0.70–0.74] | 0.80 [0.77–0.83] | 24 [21–27] | Ref. | Ref. | Ref. | Ref. |
| 5% | SSL Pretrained | 0.81 [0.79–0.83] | 0.87 [0.85–0.90] | 18 [15–21] | +12.5 | −25.0 | 1.1 | < 0.001 |
| 10% | Supervised baseline | 0.77 [0.75–0.79] | 0.84 [0.82–0.87] | 22 [19–25] | Ref. | Ref. | Ref. | Ref. |
| 10% | SSL Pretrained | 0.83 [0.81–0.85] | 0.89 [0.87–0.91] | 17 [14–20] | +7.8 | −22.7 | 1.0 | < 0.001 |
| 25% | Supervised baseline | 0.80 [0.78–0.82] | 0.87 [0.85–0.89] | 20 [17–23] | Ref. | Ref. | Ref. | Ref. |
| 25% | SSL Pretrained | 0.84 [0.82–0.86] | 0.90 [0.88–0.92] | 18 [15–21] | +5.0 | −10.0 | 0.7 | < 0.05 |
| 50% | Supervised baseline | 0.83 [0.81–0.85] | 0.89 [0.87–0.91] | 18 [16–20] | Ref. | Ref. | Ref. | Ref. |
| 50% | SSL Pretrained | 0.85 [0.83–0.87] | 0.91 [0.89–0.93] | 17 [15–19] | +2.4 | −5.6 | 0.4 | n.s. |
| 100% | Supervised baseline | 0.86 [0.84–0.88] | 0.92 [0.90–0.94] | 16 [14–18] | Ref. | Ref. | Ref. | Ref. |
| 100% | SSL Pretrained | 0.87 [0.85–0.89] | 0.92 [0.90–0.94] | 16 [14–18] | +1.2 | 0.0 | 0.1 | n.s. |

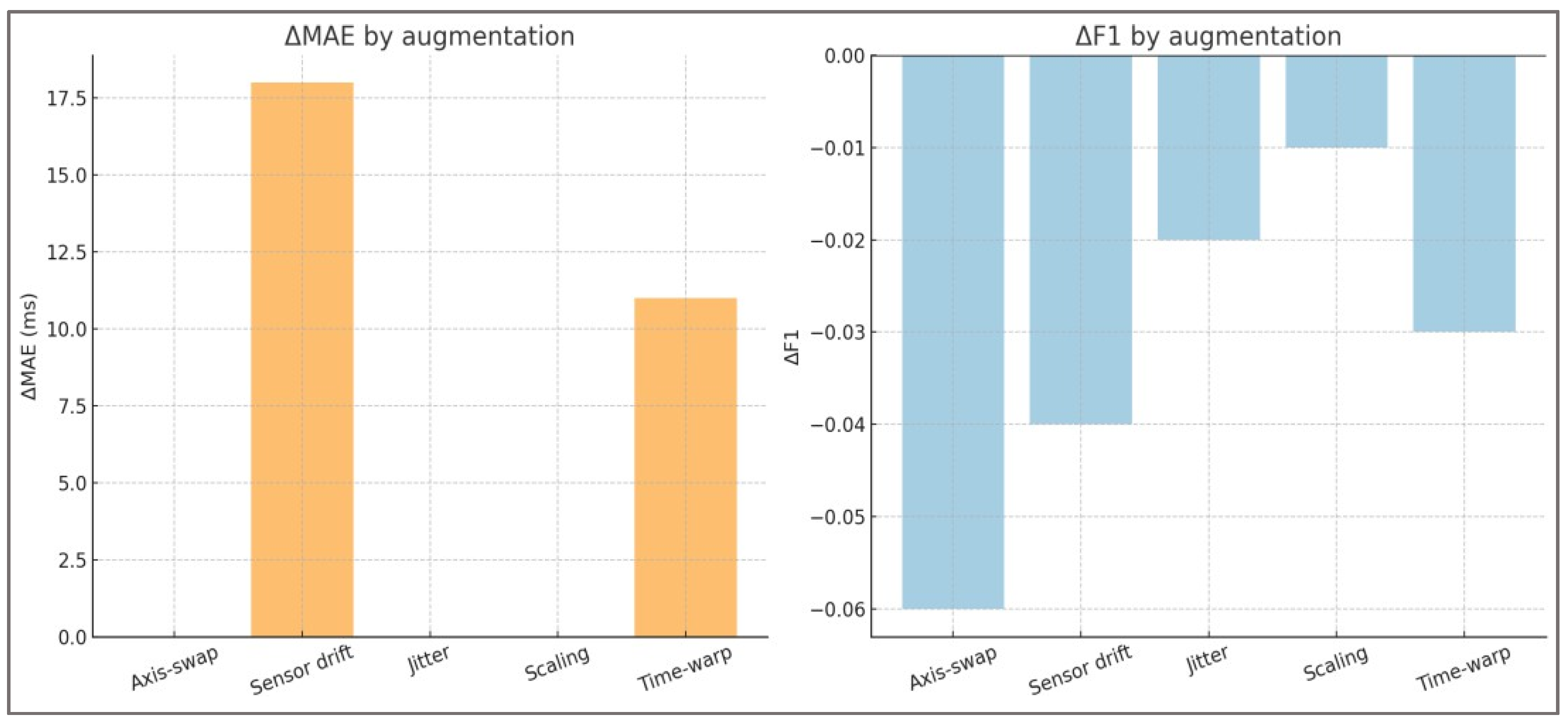

| Augmentation removed | ΔF1 (95% CI) | ΔAUROC | ΔAUROC % | ΔMAE (ms) | Cohen’s d | p-value | Δ Epochs to convergence | Relative importance |
|---|---|---|---|---|---|---|---|---|
| Full augmentation set (baseline) | Ref | Ref | Ref | Ref | Ref | Ref | Ref | Ref |
| Axis-swap | −0.06 [−0.09, −0.04] | −0.05 | −6% | +8 [6, 11] | 1.1 | <0.01 | +5 | 0.95 |
| Sensor-drift | −0.04 [−0.06, −0.02] | −0.03 | −4% | +10 [7, 13] | 1.2 | <0.01 | +7 | 0.90 |
| Jitter | −0.02 [−0.04, 0.00] | −0.01 | −1% | +3 [1, 6] | 0.6 | 0.04 | +2 | 0.55 |
| Magnitude scaling | −0.01 [−0.03, 0.00] | −0.01 | −1% | +2 [0, 5] | 0.4 | 0.07 | +1 | 0.45 |
| Time-warp | −0.03 [−0.05, −0.01] | −0.02 | −3% | +12 [9, 15] | 1.0 | <0.01 | +6 | 0.70 |
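The five augmentations ablated in the table above can be illustrated with a compact, dependency-free sketch. It is a hypothetical rendering and not the authors' code. It assumes a window stored as a list of samples with six channels (three accelerometer axes followed by three gyroscope axes), the same axis permutation for both triads, and arbitrary illustrative magnitudes for every parameter.

```python
import random


def axis_swap(window, rng):
    """Apply one random axis permutation to the accel and gyro triads."""
    perm = [0, 1, 2]
    rng.shuffle(perm)
    order = perm + [3 + p for p in perm]
    return [[sample[c] for c in order] for sample in window]


def drift(window, rng, max_slope=0.01):
    """Add a slow linear bias per channel, mimicking sensor drift."""
    slopes = [rng.uniform(-max_slope, max_slope) for _ in window[0]]
    return [[v + s * t for v, s in zip(sample, slopes)]
            for t, sample in enumerate(window)]


def jitter(window, rng, sigma=0.02):
    """Add independent Gaussian noise to every value."""
    return [[v + rng.gauss(0.0, sigma) for v in sample] for sample in window]


def magnitude_scale(window, rng, low=0.9, high=1.1):
    """Multiply the whole window by one random gain."""
    gain = rng.uniform(low, high)
    return [[v * gain for v in sample] for sample in window]


def time_warp(window, rng, max_stretch=0.1):
    """Uniformly stretch or compress time, resampling by linear interpolation."""
    factor = 1.0 + rng.uniform(-max_stretch, max_stretch)
    n = len(window)
    warped = []
    for i in range(n):
        pos = min(i * factor, n - 1)  # clamp to the last sample
        lo = int(pos)
        hi = min(lo + 1, n - 1)
        frac = pos - lo
        warped.append([a + frac * (b - a) for a, b in zip(window[lo], window[hi])])
    return warped


if __name__ == "__main__":
    rng = random.Random(0)
    window = [[float(t + c) for c in range(6)] for t in range(160)]
    for augment in (axis_swap, drift, jitter, magnitude_scale, time_warp):
        window = augment(window, rng)
    print(len(window), "samples x", len(window[0]), "channels")
```

Two differently augmented copies of the same window would then serve as a positive pair in the contrastive objective.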

| Train/Test Config | Model |

F1 ↑ |

Precision ↑ |

Recall ↑ |

MAE (ms) ↓ |

ΔF1 |

ΔMAE (ms) |

Rel. ΔF1 (%) | p- value | Cohen’s d |

|

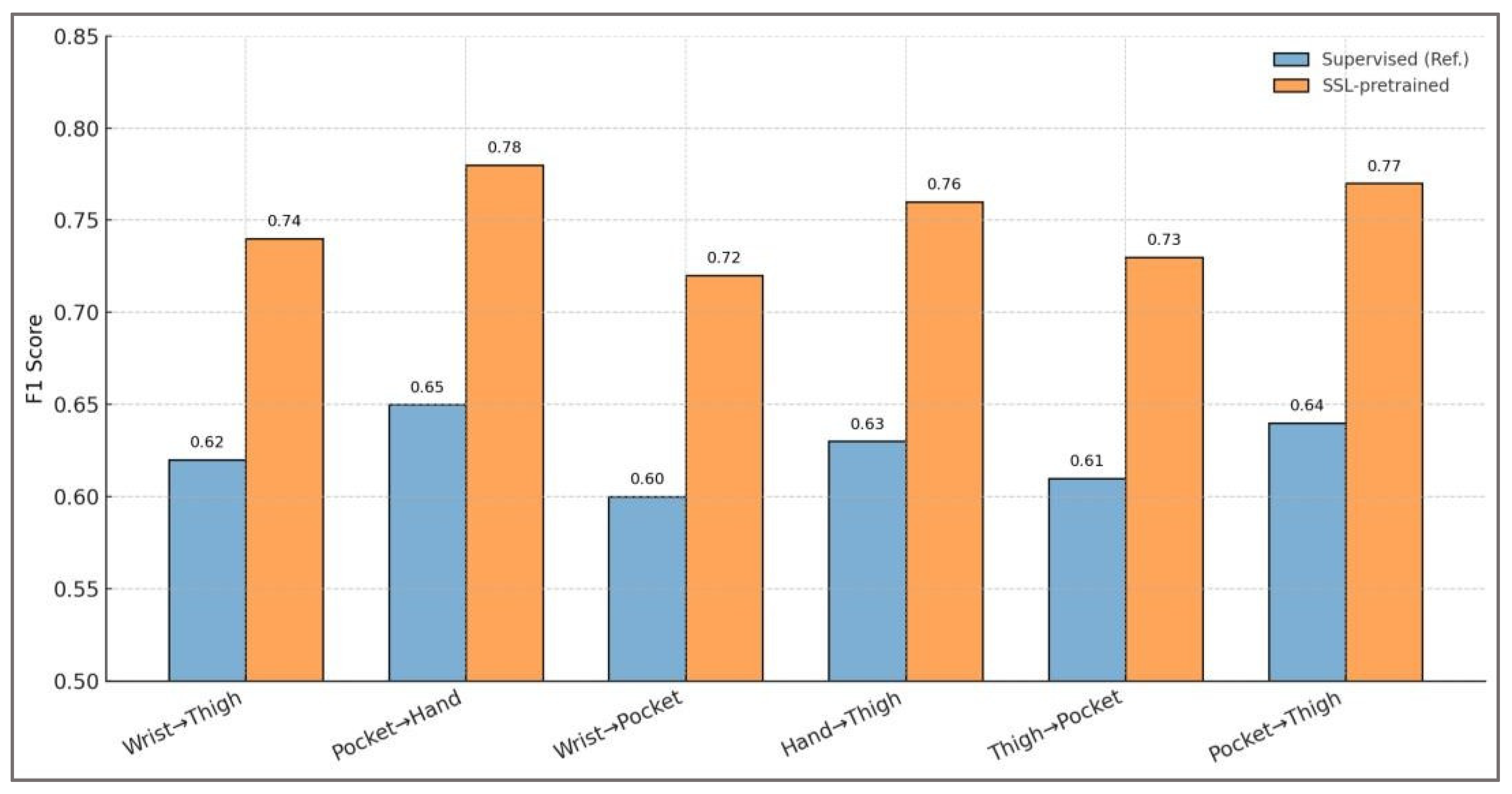

Wrist → Thigh |

Supervised (Ref.) | 0.62 | 0.64 | 0.61 | 39 | 0.00 | 0 | 0% | n/a | n/a |

| SSL - pretrained |

0.74 | 0.76 | 0.73 | 26 | +0.12 | –13 | +19% | 0.002 | 1.25 | |

|

Pocket → Hand |

Supervised (Ref.) | 0.65 | 0.66 | 0.64 | 36 | 0.00 | 0 | 0% | n/a | n/a |

| SSL - pretrained |

0.78 | 0.79 | 0.77 | 24 | +0.13 | –12 | +20% | 0.001 | 1.40 | |

|

Wrist → |

Supervised (Ref.) | 0.60 | 0.62 | 0.59 | 42 | 0.00 | 0 | 0% | n/a | n/a |

| SSL - pretrained |

0.72 | 0.74 | 0.71 | 27 | +0.12 | –15 | +20% | 0.003 | 1.31 | |

|

Hand → Thigh |

Supervised (Ref.) | 0.63 | 0.65 | 0.62 | 37 | 0.00 | 0 | 0% | n/a | n/a |

| SSL - pretrained |

0.76 | 0.77 | 0.75 | 25 | +0.13 | –12 | +21% | 0.002 | 1.28 | |

|

Thigh → |

Supervised (Ref.) | 0.61 | 0.62 | 0.60 | 40 | 0.00 | 0 | 0% | n/a | n/a |

| SSL - pretrained |

0.73 | 0.74 | 0.72 | 28 | +0.12 | –12 | +20% | 0.004 | 1.22 | |

|

Pocket → Thigh |

Supervised (Ref.) | 0.64 | 0.65 | 0.63 | 38 | 0.00 | 0 | 0% | n/a | n/a |

| SSL - pretrained |

0.77 | 0.78 | 0.76 | 25 | +0.13 | –13 | +20% | 0.001 | 1.36 |

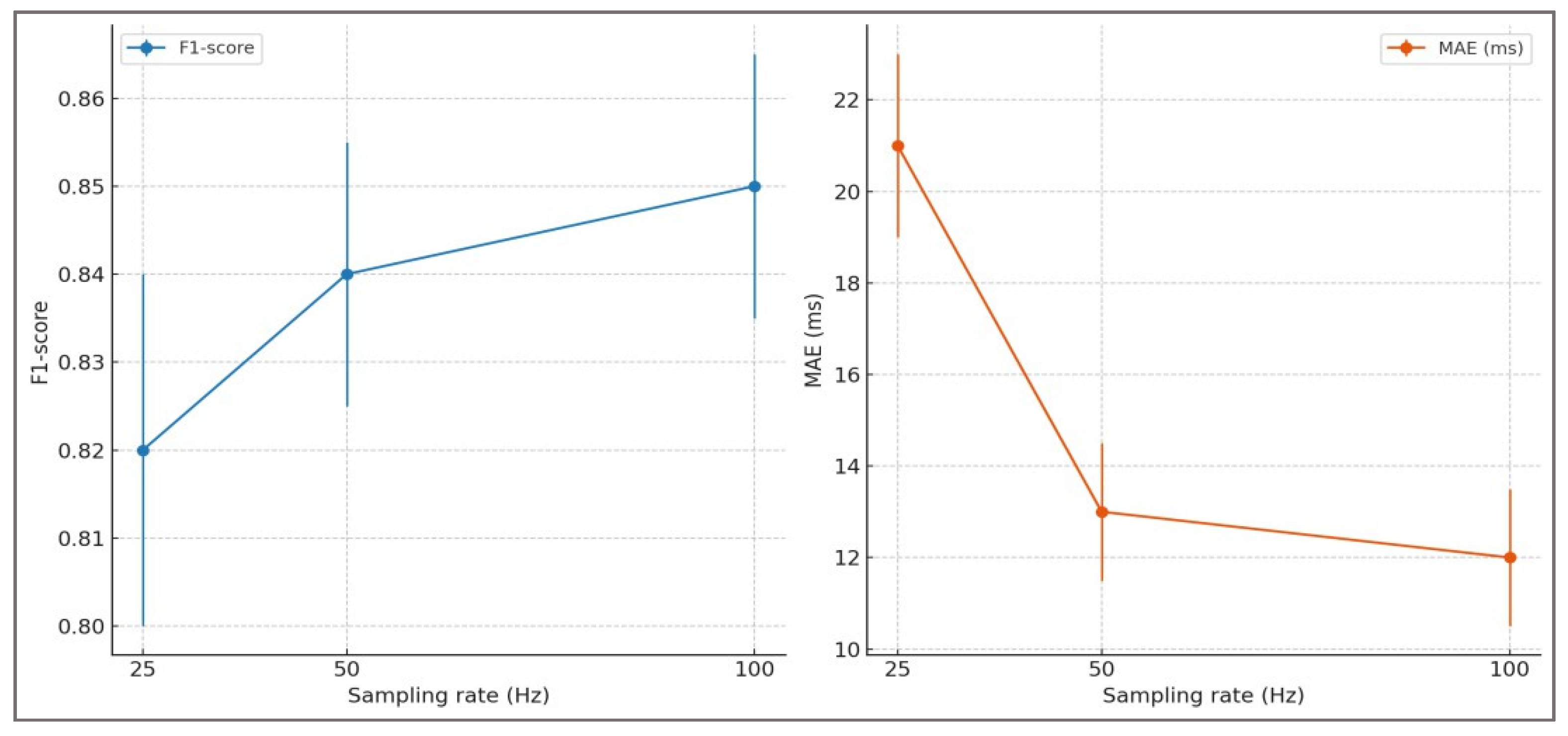

| Sampling rate | Eval regime | F1 (±95% CI) | AUROC (±95% CI) | Step MAE (ms) | Stride MAE (ms) | ΔF1 SSL–Sup | Δ vs. 50 Hz SSL (F1) | Epochs |
|---|---|---|---|---|---|---|---|---|
| 25 Hz | Supervised | 0.75 [0.73–0.77] | 0.83 [0.81–0.85] | 19 | 25 | N/A | N/A | 42 |
| 25 Hz | SSL Linear Probe | 0.80 [0.78–0.82] | 0.87 [0.85–0.89] | 16 | 22 | +0.05 | −0.04 | 31 |
| 25 Hz | SSL Few-shot (10%) | 0.82 [0.80–0.84] | 0.88 [0.86–0.90] | 15 | 20 | +0.07 | −0.02 | 30 |
| 50 Hz | Supervised | 0.78 [0.76–0.80] | 0.85 [0.83–0.87] | 17 | 23 | N/A | N/A | 40 |
| 50 Hz | SSL Linear Probe | 0.84 [0.82–0.86] | 0.90 [0.88–0.92] | 14 | 19 | +0.06 | Ref. | 28 |
| 50 Hz | SSL Few-shot (10%) | 0.86 [0.84–0.88] | 0.91 [0.89–0.93] | 13 | 18 | +0.08 | Ref. | 27 |
| 100 Hz | Supervised | 0.79 [0.77–0.81] | 0.86 [0.84–0.88] | 16 | 22 | N/A | N/A | 41 |
| 100 Hz | SSL Linear Probe | 0.85 [0.83–0.87] | 0.91 [0.89–0.93] | 13 | 18 | +0.06 | +0.01 | 29 |
| 100 Hz | SSL Few-shot (10%) | 0.87 [0.85–0.89] | 0.92 [0.90–0.94] | 12 | 17 | +0.08 | +0.01 | 28 |
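The three sampling rates compared above yield windows of different lengths in samples once the 3.2-s segmentation from Section 2.7 is applied. The sketch below shows per-channel z-score normalization followed by fixed-length segmentation. It is illustrative only: the function names are hypothetical, the windows are assumed to be non-overlapping by default because the text does not state an overlap, and the resampling step is left out.

```python
from statistics import mean, pstdev


def zscore(channel):
    """Standardize one channel to zero mean and unit variance."""
    mu, sd = mean(channel), pstdev(channel)
    return [(v - mu) / sd if sd > 0 else 0.0 for v in channel]


def segment(signal, rate_hz, window_s=3.2, overlap=0.0):
    """Split a 1D signal into fixed-length windows.

    overlap is a fraction in [0, 1); 0.0 (assumed default) means no overlap.
    Trailing samples that do not fill a complete window are dropped.
    """
    size = round(window_s * rate_hz)
    step = max(1, round(size * (1.0 - overlap)))
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, step)]


if __name__ == "__main__":
    for rate_hz in (25, 50, 100):
        recording = zscore([float(i % 7) for i in range(20 * rate_hz)])  # 20 s dummy signal
        windows = segment(recording, rate_hz)
        print(f"{rate_hz} Hz: {len(windows)} windows of {len(windows[0])} samples")
```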

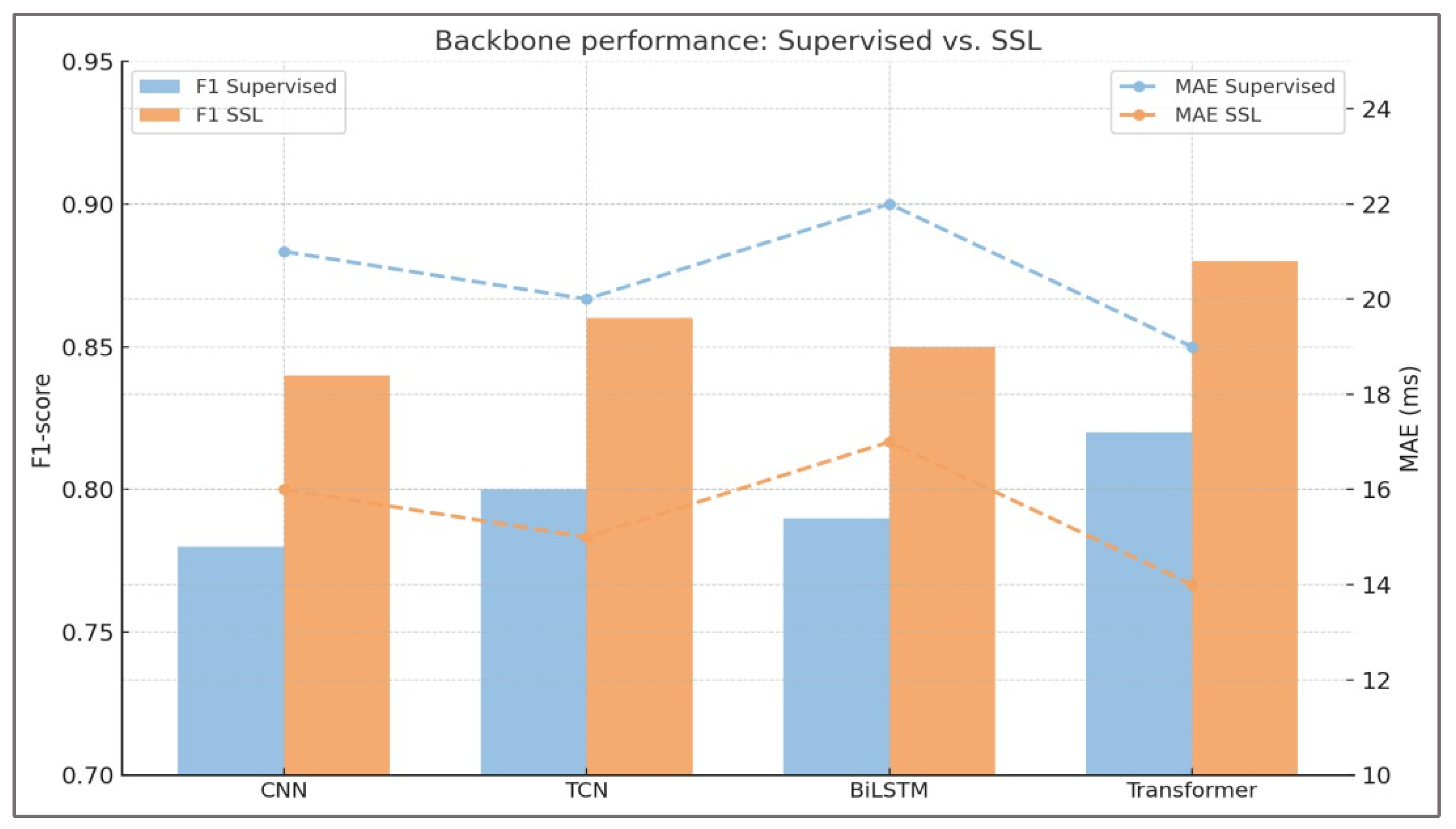

| Backbone | Training type | F1 (±95% CI) | AUROC (±95% CI) | MAE (ms ±95% CI) | Params (M) | Latency (ms) | Cohen’s d | p-value |
|---|---|---|---|---|---|---|---|---|
| CNN | Supervised baseline | 0.78 [0.76–0.80] | 0.86 [0.84–0.88] | 21 [19–23] | 1.2 | 6 | Ref | Ref |
| CNN | SSL Pretrained | 0.84 [0.82–0.86] | 0.90 [0.88–0.92] | 16 [14–18] | 1.2 | 6 | 1.0 | <0.01 |
| TCN | Supervised baseline | 0.80 [0.78–0.82] | 0.87 [0.85–0.89] | 20 [18–22] | 2.5 | 9 | Ref | Ref |
| TCN | SSL Pretrained | 0.86 [0.84–0.88] | 0.91 [0.89–0.93] | 15 [13–17] | 2.5 | 9 | 1.1 | <0.01 |
| BiLSTM | Supervised baseline | 0.79 [0.77–0.81] | 0.86 [0.84–0.88] | 22 [20–24] | 3.1 | 11 | Ref | Ref |
| BiLSTM | SSL Pretrained | 0.85 [0.83–0.87] | 0.90 [0.88–0.92] | 17 [15–19] | 3.1 | 11 | 1.0 | <0.01 |
| Transformer | Supervised baseline | 0.82 [0.80–0.84] | 0.88 [0.86–0.90] | 19 [17–21] | 6.8 | 15 | Ref | Ref |
| Transformer | SSL Pretrained | 0.88 [0.86–0.90] | 0.92 [0.90–0.94] | 14 [12–16] | 6.8 | 15 | 1.2 | <0.001 |

| Scenario | Model | F1 (HS) median [IQR] | F1 (TO) median [IQR] | MAE (HS, ms) median [IQR] | MAE (TO, ms) median [IQR] | Δ F1 (HS) (95% CI) | Δ F1 (TO) (95% CI) | Δ MAE (HS, ms) (95% CI) | Δ MAE (TO, ms) (95% CI) | p adj |
|---|---|---|---|---|---|---|---|---|---|---|
| Overall (all datasets) | Supervised | 0.78 [0.72–0.82] | 0.75 [0.70–0.80] | 29 [25–33] | 32 [27–36] | N/A | N/A | N/A | N/A | N/A |
| Overall (all datasets) | SSL (ours) | 0.90 [0.86–0.93] | 0.84 [0.79–0.88] | 19 [16–22] | 24 [21–27] | +0.12 (0.09–0.15) | +0.09 (0.06–0.12) | −10 (−12, −8) | −8 (−10, −6) | < 0.001 |
| Device shift | Supervised | 0.76 [0.71–0.81] | 0.73 [0.68–0.78] | 31 [27–36] | 34 [29–39] | N/A | N/A | N/A | N/A | N/A |
| Device shift | SSL (ours) | 0.88 [0.84–0.91] | 0.82 [0.77–0.86] | 21 [18–25] | 26 [23–30] | +0.12 (0.08–0.15) | +0.09 (0.05–0.12) | −10 (−13, −7) | −8 (−11, −6) | < 0.001 |
| Placement shift | Supervised | 0.74 [0.69–0.79] | 0.71 [0.66–0.76] | 33 [29–38] | 36 [31–41] | N/A | N/A | N/A | N/A | N/A |
| Placement shift | SSL (ours) | 0.86 [0.82–0.90] | 0.80 [0.75–0.84] | 23 [20–27] | 28 [24–32] | +0.12 (0.08–0.15) | +0.09 (0.05–0.12) | −10 (−13, −8) | −8 (−10, −6) | < 0.001 |
| Sampling-rate shift | Supervised | 0.77 [0.72–0.81] | 0.74 [0.69–0.78] | 30 [26–35] | 33 [28–37] | N/A | N/A | N/A | N/A | N/A |
| Sampling-rate shift | SSL (ours) | 0.89 [0.85–0.92] | 0.83 [0.78–0.87] | 20 [17–23] | 25 [21–28] | +0.12 (0.09–0.15) | +0.09 (0.06–0.12) | −10 (−12, −8) | −8 (−10, −6) | < 0.001 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).