Submitted: 22 September 2025

Posted: 24 September 2025


Abstract

Keywords:

1. Introduction

- Analyze the regulatory landscape and actor-specific obligations under the EU AI Act, focusing on the distribution of legal burdens across providers, deployers, importers, and related roles.

- Assess the limitations of ALTAI as a soft-law instrument, especially in traceability and enforceability.

- Review complementary governance frameworks (e.g., ISO/IEC 42001, NIST AI RMF) and identify gaps in their suitability for low-capacity actors.

- Identify structural asymmetries in the compliance ecosystem that call for proportionate, role-sensitive approaches.

2. Methodology

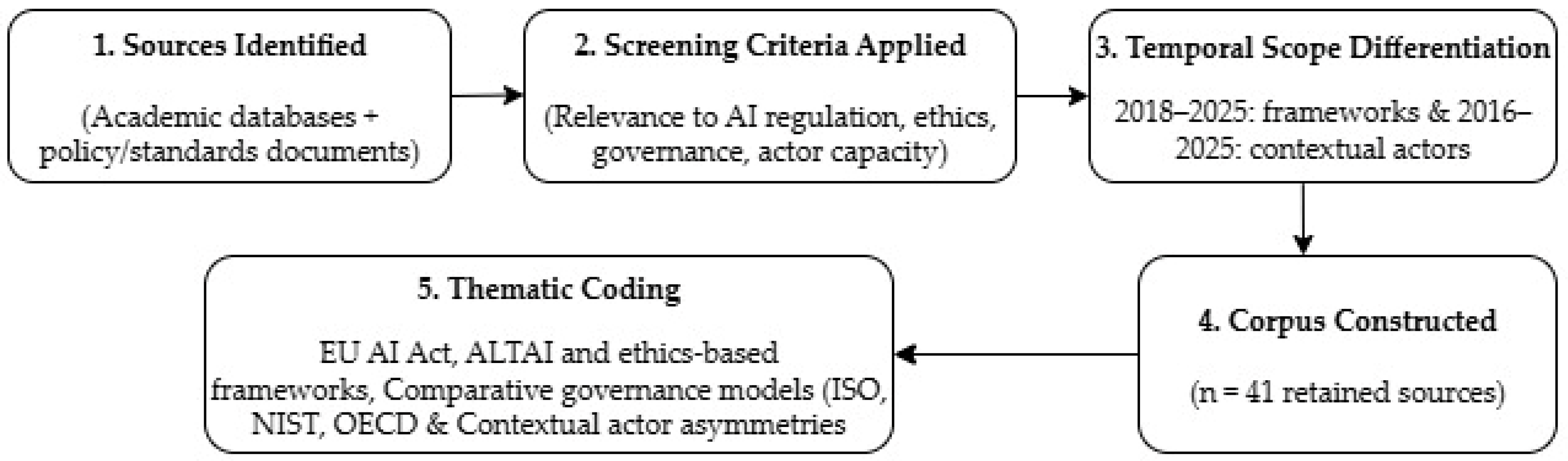

2.1. Research Design

- Source identification across academic and policy databases.

- Screening against predefined legal, ethical, and governance relevance criteria.

- Temporal scoping, distinguishing between recent frameworks and longer-standing actor constraints.

- Corpus construction and thematic coding.

2.2. Search Strategy

2.3. Inclusion and Exclusion Criteria

2.3.1. Inclusion Criteria

2.3.2. Exclusion Criteria

2.4. Temporal Scope of Sources

| Category | Oldest Source in Review | Temporal Scope Applied | Rationale |

|---|---|---|---|

| Governance & Ethics Frameworks (ALTAI, OECD, NIST, ISO) | OECD AI Principles (2019) | 2018–2025 | A rapidly evolving field; earlier sources predate the formalization of AI governance. |

| ALTAI (HLEG) | 2020 | 2018–2025 | Originated in the 2019 Ethics Guidelines; operationalised in 2020. |

| NIST AI RMF | Draft 2021, final 2023 | 2018–2025 | Framework development intensified post-2019; older sources are not directly relevant. |

| ISO/IEC 42001 | 2023 | 2018–2025 | Standard finalised in late 2023; included as a cutting-edge regulatory benchmark. |

| Contextual Actors (SMEs, Public Sector, Low-Capacity Actors) | Safa, Solms & Furnell (2016) | 2016–2025 | Structural constraints (resources, compliance capacity) are long-standing; older sources remain relevant. |
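The temporal scoping rule in the table above can be sketched as a simple screening filter. This is a minimal illustration rather than the authors' actual screening tool: the `Source` record, the category keys, and the function names are hypothetical, and only the year windows (2018–2025 and 2016–2025) come from the table.

```python
from dataclasses import dataclass

# Year windows taken from the temporal scope table; the category keys
# are hypothetical labels introduced for this sketch.
TEMPORAL_SCOPE = {
    "governance_frameworks": (2018, 2025),
    "contextual_actors": (2016, 2025),
}


@dataclass(frozen=True)
class Source:
    title: str
    year: int
    category: str  # must be one of the TEMPORAL_SCOPE keys


def in_scope(source: Source) -> bool:
    """Return True if the publication year lies inside the category window."""
    start, end = TEMPORAL_SCOPE[source.category]
    return start <= source.year <= end


def screen(sources: list) -> list:
    """Keep only the sources that pass the temporal scoping step."""
    return [s for s in sources if in_scope(s)]


if __name__ == "__main__":
    candidates = [
        Source("Safa, Solms & Furnell (2016)", 2016, "contextual_actors"),
        Source("OECD AI Principles (2019)", 2019, "governance_frameworks"),
        # Hypothetical record, used only to show an exclusion.
        Source("Hypothetical pre-2018 framework paper", 2015, "governance_frameworks"),
    ]
    for kept in screen(candidates):
        print(kept.title)
```

Keeping the windows in one dictionary means a change to the scoping rationale only has to be made in one place.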

2.5. Data Extraction and Synthesis

1. The EU AI Act (regulatory scope, obligations, proportionality).

2. Ethics-based frameworks (ALTAI and related governance checklists).

3. Comparative governance models (ISO/IEC 42001, NIST AI RMF, OECD AI Principles).

4. Contextual actor asymmetries (SMEs, public authorities, non-specialist deployers).

2.6. Limitations

3. Materials and Methods

3.1. The Regulatory Landscape: The EU AI Act

3.1.1. Objectives, Scope, and Risk-Based Classification

3.1.2. Unacceptable-Risk Systems and Implications for Compliance

3.1.3. Limited-Risk Systems and Implications for Compliance

3.1.4. Minimal-Risk Systems

3.1.5. High-Risk Systems and Implications for Compliance

3.1.6. General Purpose AI (GPAI)

- Transparency documentation on model capabilities, limitations, and risk areas (Article 53).

- Risk mitigation plans, including systemic and societal risk analysis (Article 55 for systemic-risk GPAI).

- Technical documentation detailing architecture, training, and dataset provenance (Article 53).

- Protocols for responsible open release, particularly for systemic-risk GPAI models (Article 55).
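The split between the Article 53 and Article 55 items listed above can be sketched as a small lookup. This is an illustrative sketch only: the function and constant names are hypothetical, the obligation wording is abbreviated from the list above, and the sketch assumes that the Article 55 items apply on top of the Article 53 items for systemic-risk models.

```python
# Obligations abbreviated from the list above; constant and function
# names are hypothetical and chosen only for this sketch.
BASELINE_GPAI = [
    "Transparency documentation on model capabilities, limitations, and risk areas",
    "Technical documentation detailing architecture, training, and dataset provenance",
]
SYSTEMIC_RISK_GPAI = [
    "Risk mitigation plans, including systemic and societal risk analysis",
    "Protocols for responsible open release",
]


def gpai_obligations(systemic_risk: bool) -> list:
    """Return (article, obligation) pairs for a GPAI model provider.

    Assumption: Article 55 items are additional to the Article 53 items.
    """
    obligations = [("Article 53", text) for text in BASELINE_GPAI]
    if systemic_risk:
        obligations += [("Article 55", text) for text in SYSTEMIC_RISK_GPAI]
    return obligations


if __name__ == "__main__":
    for article, text in gpai_obligations(systemic_risk=True):
        print(f"{article}: {text}")
```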

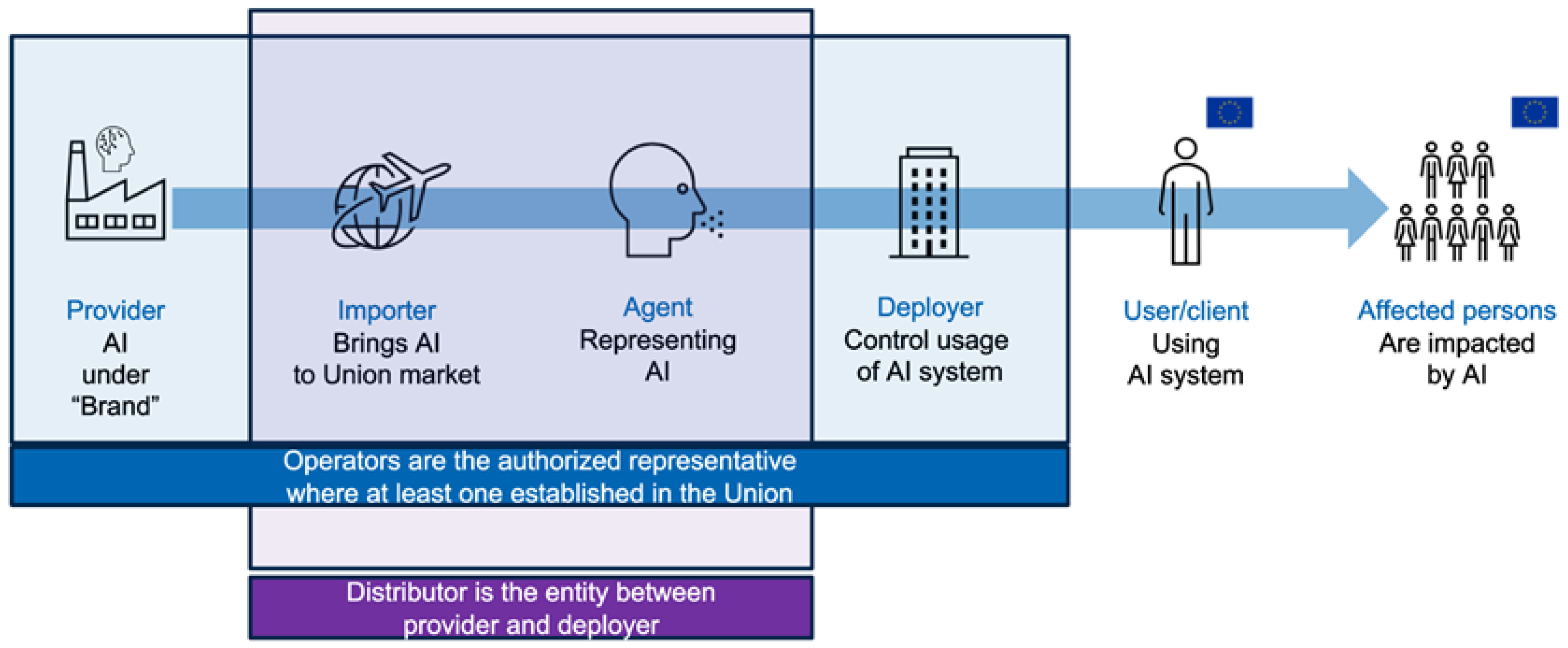

3.1.7. The AI Value Chain and Role-Specific Compliance

- Provider (Art. 3(3)): the natural or legal person who develops an AI system or has it developed, and places it on the market or puts it into service under their own name or trademark.

- Deployer (Art. 3(4)): a natural or legal person using an AI system in the course of their professional activities.

- Authorised Representative (Art. 3(5)): a legal entity established in the Union that is appointed in writing by a non-EU provider to act on their behalf concerning regulatory obligations.

- Importer (Art. 3(6)): any natural or legal person established in the Union who places an AI system on the Union market that originates from a third country.

- Distributor (Art. 3(7)): any natural or legal person in the supply chain, other than the provider or importer, who makes an AI system available on the Union market without modifying its properties.

- Product Manufacturer (Art. 3(8)): a manufacturer placing a product on the market or putting it into service under their own name, and who integrates an AI system into that product.

3.1.8. Summary of Role-Based Obligations under the EU AI Act

3.2. Ethics and Governance: The ALTAI Checklist

3.2.1. Origins and Principles

3.2.2. Role in EU Governance

3.2.3. Strengths and Normative Value

3.2.4. Sector-Specific Ethical Tensions

3.2.5. Limitations in Security and Enforceability

3.3. Comparative Compliance Frameworks

3.3.1. Audit-Oriented Frameworks

3.3.2. Design-Integrated Frameworks

3.3.3. Alignment and Gaps

3.4. Implementation Gaps and Legal Complexity

3.4.1. Challenges in Translating Regulation to Practice

3.4.2. Resource Asymmetry

3.4.3. Fragmentation and Contradictions: GDPR vs EU AI Act

3.4.4. Iterative Governance as a Low-Burden Strategy for SMEs

3.5. Framing Least Responsible AI Controls

3.5.1. Rationale and Structural Asymmetry

3.5.2. Idealism vs Legal Sufficiency

3.5.3. Enabling Compliance in Low-Capacity Settings

4. Results

5. Suggestions for Future Research

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Zhao, W.X.; Zhou, K.; Li, J.; Tang, T.; Wang, X.; Hou, Y.; Min, Y.; Zhang, B.; Zhang, J.; Dong, Z.; et al. A Survey of Large Language Models 2023.

- Iyelolu, T.V.; Agu, E.E.; Idemudia, C.; Ijomah, T.I. Driving SME Innovation with AI Solutions: Overcoming Adoption Barriers and Future Growth Opportunities. International Journal of Science and Technology Research Archive 2024, 7, 036–054.

- Jafarzadeh, P.; Vähämäki, T.; Nevalainen, P.; Tuomisto, A.; Heikkonen, J. Supporting SME Companies in Mapping out AI Potential: A Finnish AI Development Case. J Technol Transf 2024. [CrossRef]

- Golpayegani, D.; Pandit, H.J.; Lewis, D. Comparison and Analysis of 3 Key AI Documents: EU’s Proposed AI Act, Assessment List for Trustworthy AI (ALTAI), and ISO/IEC 42001 AI Management System. In Artificial Intelligence and Cognitive Science; Longo, L., O’Reilly, R., Eds.; Communications in Computer and Information Science; Springer Nature Switzerland: Cham, 2023; Vol. 1662, pp. 189–200 ISBN 978-3-031-26437-5.

- Madhavan, K.; Yazdinejad, A.; Zarrinkalam, F.; Dehghantanha, A. Quantifying Security Vulnerabilities: A Metric-Driven Security Analysis of Gaps in Current AI Standards 2025.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. BMJ 2021, n71. [CrossRef]

- Kalodanis, K.; Rizomiliotis, P.; Feretzakis, G.; Papapavlou, C.; Anagnostopoulos, D. High-Risk AI Systems—Lie Detection Application. Future Internet 2025, 17, 26.

- Li, Z. AI Ethics and Transparency in Operations Management: How Governance Mechanisms Can Reduce Data Bias and Privacy Risks. Journal of Applied Economics and Policy Studies 2024, 13, 89–93. [CrossRef]

- Rintamaki, T.; Pandit, H.J. Developing an Ontology for AI Act Fundamental Rights Impact Assessments 2024.

- Golpayegani, D. Semantic Frameworks to Support the EU AI Act’s Risk Management and Documentation. PhD Thesis, Trinity College, 2024.

- Bhouri, H. Navigating Data Governance: A Critical Analysis of European Regulatory Framework for Artificial Intelligence. Recent Advances in Public Sector Management 2025, 171.

- Kriebitz, A.; Corrigan, C.; Boch, A.; Evans, K.D. Decoding the EU AI Act in the Context of Ethics and Fundamental Rights. In The Elgar Companion to Applied AI Ethics; Edward Elgar Publishing, 2024; pp. 123–152 ISBN 1-80392-824-7.

- Sun, N.; Miao, Y.; Jiang, H.; Ding, M.; Zhang, J. From Principles to Practice: A Deep Dive into AI Ethics and Regulations. arXiv preprint arXiv:2412.04683 2024.

- Karami, A. Artificial Intelligence Governance in the European Union. Journal of Electrical Systems 2024, 20, 2706–2720. [CrossRef]

- Iyer, V.; Manshad, M.; Brannon, D. A Value-Based Approach to AI Ethics: Accountability, Transparency, Explainability, and Usability. Mercados y negocios 2025, 26, 3–12.

- Aiyankovil, K.G.; Lewis, D. Harmonizing AI Data Governance: Profiling ISO/IEC 5259 to Meet the Requirements of the EU AI Act. In Legal Knowledge and Information Systems; IOS Press, 2024; pp. 363–365.

- Ashraf, Z.A.; Mustafa, N. AI Standards and Regulations. Intersection of Human Rights and AI in Healthcare 2025, 325–352.

- Comeau, D.S.; Bitterman, D.S.; Celi, L.A. Preventing Unrestricted and Unmonitored AI Experimentation in Healthcare through Transparency and Accountability. npj Digital Medicine 2025, 8, 42.

- Scherz, P. Principles and Virtues in AI Ethics. Journal of Military Ethics 2024, 23, 251–263.

- Ho, C.-Y. A Risk-Based Regulatory Framework for Algorithm Auditing: Rethinking “Who,” “When,” and “What.” Tennessee Journal of Law and Policy 2025, 17. [CrossRef]

- NIST AI Risk Management Framework. NIST 2021.

- Janssen, M. Responsible Governance of Generative AI: Conceptualizing GenAI as Complex Adaptive Systems. Policy and Society 2025, puae040.

- Al-Omari, O.; Alyousef, A.; Fati, S.; Shannaq, F.; Omari, A. Governance and Ethical Frameworks for AI Integration in Higher Education: Enhancing Personalized Learning and Legal Compliance. Journal of Ecohumanism 2025, 4, 80–86.

- Proietti, S.; Magnani, R. Assessing AI Adoption and Digitalization in SMEs: A Framework for Implementation. arXiv preprint arXiv:2501.08184 2025.

- Thoom, S.R. Lessons from AI in Finance: Governance and Compliance in Practice. Int. J. Sci. Res. Arch. 2025, 14, 1387–1395. [CrossRef]

- Dimitrios, Z.; Petros, S. Towards Responsible AI: A Framework for Ethical Design Utilizing Deontic Logic. International Journal on Artificial Intelligence Tools 2025.

- Ajibesin, A.A.; Çela, E.; Vajjhala, N.R.; Eappen, P. Future Directions and Responsible AI for Social Impact. In AI for Humanitarianism; CRC Press, 2025; pp. 206–220.

- Meding, K. It’s Complicated. The Relationship of Algorithmic Fairness and Non-Discrimination Regulations in the EU AI Act 2025.

- Busch, F.; Geis, R.; Wang, Y.-C.; Kather, J.N.; Khori, N.A.; Makowski, M.R.; Kolawole, I.K.; Truhn, D.; Clements, W.; Gilbert, S.; et al. AI Regulation in Healthcare around the World: What Is the Status Quo? 2025.

- Palmiotto, F. The AI Act Roller Coaster: The Evolution of Fundamental Rights Protection in the Legislative Process and the Future of the Regulation. European Journal of Risk Regulation 2025, 1–24. [CrossRef]

- Liang, P. Leveraging Artificial Intelligence in Regulatory Technology (RegTech) for Financial Compliance. Applied and Computational Engineering 2024, 93, 166–171.

- Paolini E Silva, M.; Tamo-Larrieux, A.; Ammann, O. AI Literacy Under the AI Act: Tracing the Evolution of a Weakened Norm 2025.

- Babalola, O.; Adedoyin, A.; Ogundipe, F.; Folorunso, A.; Nwatu, C.E. Policy Framework for Cloud Computing: AI, Governance, Compliance and Management. Global J. Eng. Technol. Adv. 2024, 21, 114–126. [CrossRef]

- Gasser, U.; Almeida, V.A.F. A Layered Model for AI Governance. IEEE Internet Comput. 2017, 21, 58–62. [CrossRef]

- Heeks, R.; Renken, J. Data Justice for Development: What Would It Mean? Information Development 2018, 34, 90–102. [CrossRef]

- Safa, N.S.; Solms, R.V.; Furnell, S. Information Security Policy Compliance Model in Organizations. Computers & Security 2016, 56, 70–82. [CrossRef]

- Yeung, K.; Lodge, M. Algorithmic Regulation; Oxford University Press, 2019; ISBN 0-19-257543-0.

- Popa, D.M. Frontrunner Model for Responsible AI Governance in the Public Sector: The Dutch Perspective. AI Ethics 2024. [CrossRef]

- Wei, K.; Ezell, C.; Gabrieli, N.; Deshpande, C. How Do AI Companies “Fine-Tune” Policy? Examining Regulatory Capture in AI Governance. AIES 2024, 7, 1539–1555. [CrossRef]

- Arnold, Z.; Schiff, D.S.; Schiff, K.J.; Love, B.; Melot, J.; Singh, N.; Jenkins, L.; Lin, A.; Pilz, K.; Enweareazu, O.; et al. Introducing the AI Governance and Regulatory Archive (AGORA): An Analytic Infrastructure for Navigating the Emerging AI Governance Landscape. AIES 2024, 7, 39–48. [CrossRef]

- Singh, L.; Randhelia, A.; Jain, A.; Choudhary, A.K. Ethical and Regulatory Compliance Challenges of Generative AI in Human Resources. In Generative Artificial Intelligence in Finance; Chelliah, P.R., Dutta, P.K., Kumar, A., Gonzalez, E.D.R.S., Mittal, M., Gupta, S., Eds.; Wiley, 2025; pp. 199–214 ISBN 978-1-394-27104-7.

| Actor Type | High Risk | Limited Risk |

|---|---|---|

| Provider | Lifecycle risk management (Art. 9), data governance (Art. 10), technical documentation (Art. 11), logging capabilities (Art. 12), transparency and instructions (Art. 13), human oversight (Art. 14), robustness, accuracy, and cybersecurity (Art. 15), Provider Identification (Art. 16(b)), Quality Management System (Art. 17), Maintain Technical Documentation (Art. 18), Logging Obligations (Art. 19), Corrective Actions & Reporting (Art. 20), Conformity Assessment (Art. 43), EU Declaration of Conformity (Art. 47), CE Marking (Art. 48), Registration in the EU Database (Art. 49(1)), Demonstrate Compliance upon Authority Request (Art. 16(k)), Accessibility Compliance (Art. 16(l), Recital 80). | Ensure Users Are Informed When Interacting with AI Systems (Art. 50(1), Recital 132), Mark Synthetic Content as Artificially Generated or Manipulated in Machine-Readable Format (Art. 50(2), Recital 133). |

| Importer | Verify Conformity Assessment by Provider (Art. 23(1)(a)), Verify Technical Documentation Exists (Art. 23(1)(b)), Ensure CE Marking, Declaration of Conformity, and Instructions Are Present (Art. 23(1)(c)), Verify Appointment of Authorised Representative (Art. 23(1)(d)), Withhold Market Placement if Non-compliant or Falsified (Art. 23(2)), Inform Authorities if System Poses a Risk (Art. 23(2)), Importer Identity and Contact Information (Art. 23(3)), Ensure Storage and Transport Do Not Jeopardise Compliance (Art. 23(4)), Retain Key Documentation for 10 Years (Art. 23(5)), Provide Authorities with Documentation Upon Request (Art. 23(6)), Ensure Technical Documentation Availability (Art. 23(6)), Cooperate with Authorities in Risk Mitigation Actions (Art. 23(7)). | No obligations specified under the EU AI Act |

| Distributor | Verify CE Marking, EU Declaration, Instructions, and Upstream Compliance (Art. 24(1)), Withhold Market Availability if Non-compliant or Risky (Art. 24(2)), Inform Provider or Importer if Risk is Identified (Art. 24(2)), Ensure Storage and Transport Conditions Preserve Compliance (Art. 24(3)), Take or Ensure Corrective Action, Withdrawal, or Recall if Non-compliant (Art. 24(4)), Inform Provider, Importer, and Authorities if System Poses a Risk (Art. 24(4)), Provide Documentation on Compliance Actions to Authorities Upon Request (Art. 24(5)), Cooperate with Authorities in Risk Mitigation Actions (Art. 24(6)). | No obligations specified under the EU AI Act |

| Deployer | Perform a FRIA (Art. 27), Ensure Use in Accordance with the Instructions for Use (Art. 26(1)), Assign Competent Human Oversight (Art. 26(2)), Ensure Relevant and Representative Input Data Under Their Control (Art. 26(4)), Monitor Operation and Inform Provider or Authorities in Case of Risk or Serious Incident (Art. 26(5)), Retain System Logs for a Minimum of Six Months if Under Their Control (Art. 26(6)), Inform Workers and Representatives Prior to Workplace Deployment (Art. 26(7), Recital 92), Public Deployers Must Verify Registration in EU Database (Art. 26(8)), Use Art. 13 Information for DPIA Compliance Where Applicable (Art. 26(9)), Request Judicial or Administrative Authorisation Before Using Post-Remote Biometric ID Systems in Criminal Investigations (Art. 26(10), Recitals 94–95), Inform Natural Persons When Affected by Decisions from Annex III Systems (Art. 26(11)), Cooperate with Competent Authorities (Art. 26(12)). | Inform Individuals of Emotion Recognition or Biometric Categorisation Systems (Art. 50(3), Recital 132), Disclose Artificial Generation or Manipulation of Deepfake Content and Public-Facing AI-Generated Text (Art. 50(4), Recital 134). |

| Product Manufacturer | Ensure Prevention and Mitigation of Safety Risks from AI Components in Products, Including Autonomous Robots and Diagnostic Systems in High-Stakes Contexts like Health and Manufacturing (Recital 47), Ensure Safety of Non-High-Risk AI Systems via General Product Safety Regulation (EU) 2023/988 as a Complementary Safeguard (Recital 166). | No obligations specified under the EU AI Act |

| Authorised Representative (Agent) | Appointment by Written Mandate (Art. 22(1)), Task Performance as Mandated by Provider (Art. 22(2)), Provide Mandate Copy to Authorities Upon Request (Art. 22(3)), Verify EU Declaration and Technical Documentation (Art. 22(3)(a)), Retain Provider Contact Details and Compliance Documentation for 10 Years (Art. 22(3)(b)), Provide Information and Access to Logs to Authorities Upon Request (Art. 22(3)(c)), Cooperate with Authorities in Risk Mitigation (Art. 22(3)(d)), Ensure Registration Compliance or Verify Accuracy if Done by Provider (Art. 22(3)(e)), Accept Regulatory Contact on Provider's Behalf (Art. 22(3), final sentence), Terminate Mandate if Provider Breaches Obligations and Inform Authorities (Art. 22(4)). | No obligations specified under the EU AI Act |
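The role-by-risk-tier structure of the table above lends itself to a simple lookup keyed on (actor, tier). The following is a minimal sketch under stated assumptions: the `Role` and `RiskTier` enums, the `OBLIGATIONS` dictionary, and `obligations_for` are hypothetical names, and the dictionary holds only a short excerpt of the table's entries rather than the full list.

```python
from enum import Enum


class Role(Enum):
    PROVIDER = "provider"
    IMPORTER = "importer"
    DEPLOYER = "deployer"


class RiskTier(Enum):
    HIGH = "high"
    LIMITED = "limited"


# Excerpt only: a few entries copied from the table for illustration.
OBLIGATIONS = {
    (Role.PROVIDER, RiskTier.HIGH): [
        "Lifecycle risk management (Art. 9)",
        "Data governance (Art. 10)",
        "Technical documentation (Art. 11)",
    ],
    (Role.PROVIDER, RiskTier.LIMITED): [
        "Ensure users are informed when interacting with AI systems (Art. 50(1))",
        "Mark synthetic content as artificially generated (Art. 50(2))",
    ],
    (Role.IMPORTER, RiskTier.HIGH): [
        "Verify conformity assessment by provider (Art. 23(1)(a))",
        "Verify technical documentation exists (Art. 23(1)(b))",
    ],
    (Role.DEPLOYER, RiskTier.HIGH): [
        "Perform a FRIA (Art. 27)",
    ],
}


def obligations_for(role: Role, tier: RiskTier) -> list:
    """Return the listed obligations, or an empty list where the table
    records no obligations for that role and tier."""
    return OBLIGATIONS.get((role, tier), [])


if __name__ == "__main__":
    for item in obligations_for(Role.PROVIDER, RiskTier.LIMITED):
        print(item)
    # Importers have no limited-risk entries in the table.
    print(obligations_for(Role.IMPORTER, RiskTier.LIMITED))
```

A flat dictionary keeps the asymmetry visible: most of the entries sit in the high-risk column, and several roles have nothing at all under limited risk.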

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).