Submitted: 19 September 2025

Posted: 19 September 2025


Abstract

Keywords:

1. Introduction

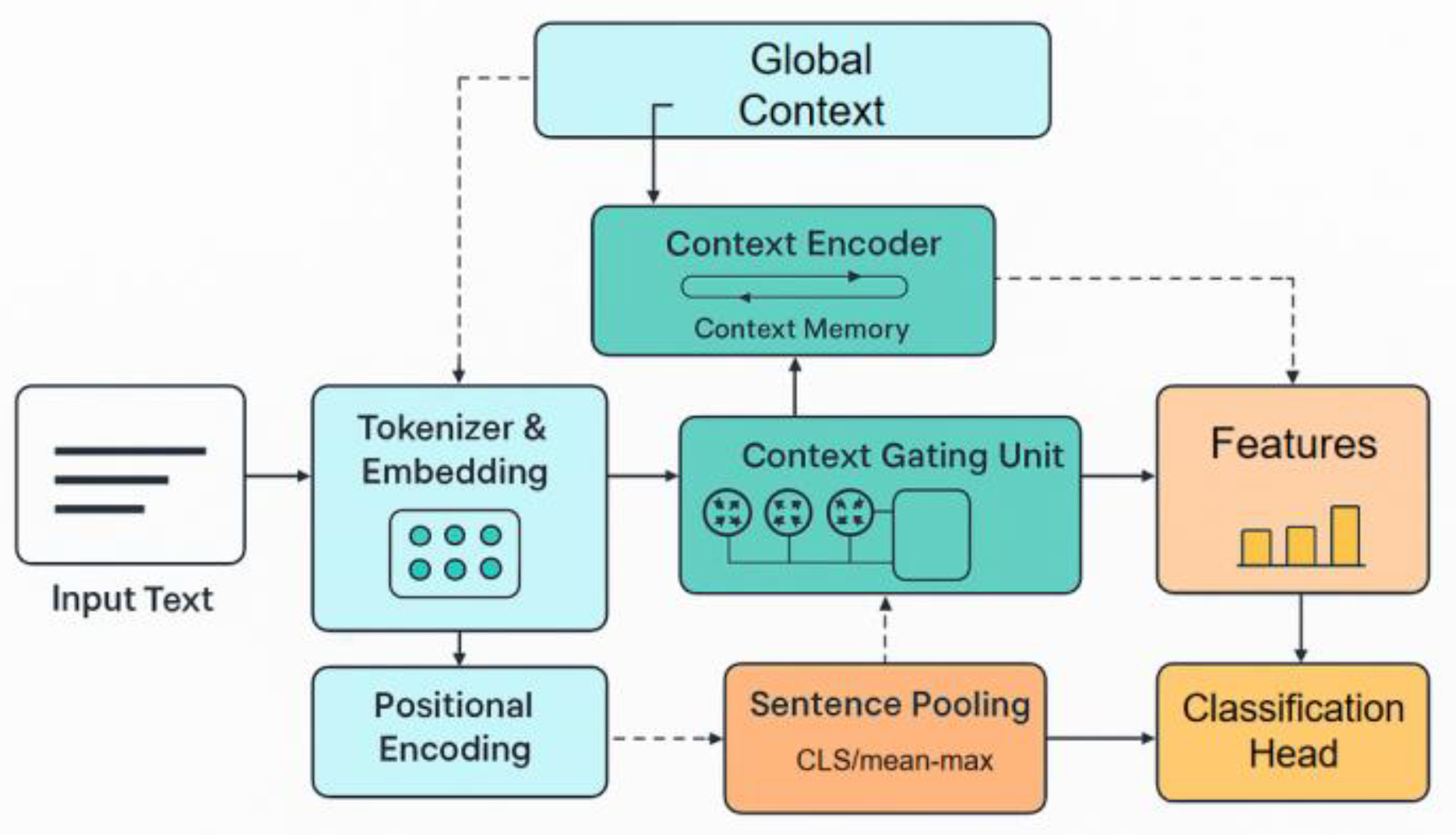

2. Proposed Approach

3. Performance Evaluation

3.1. Dataset

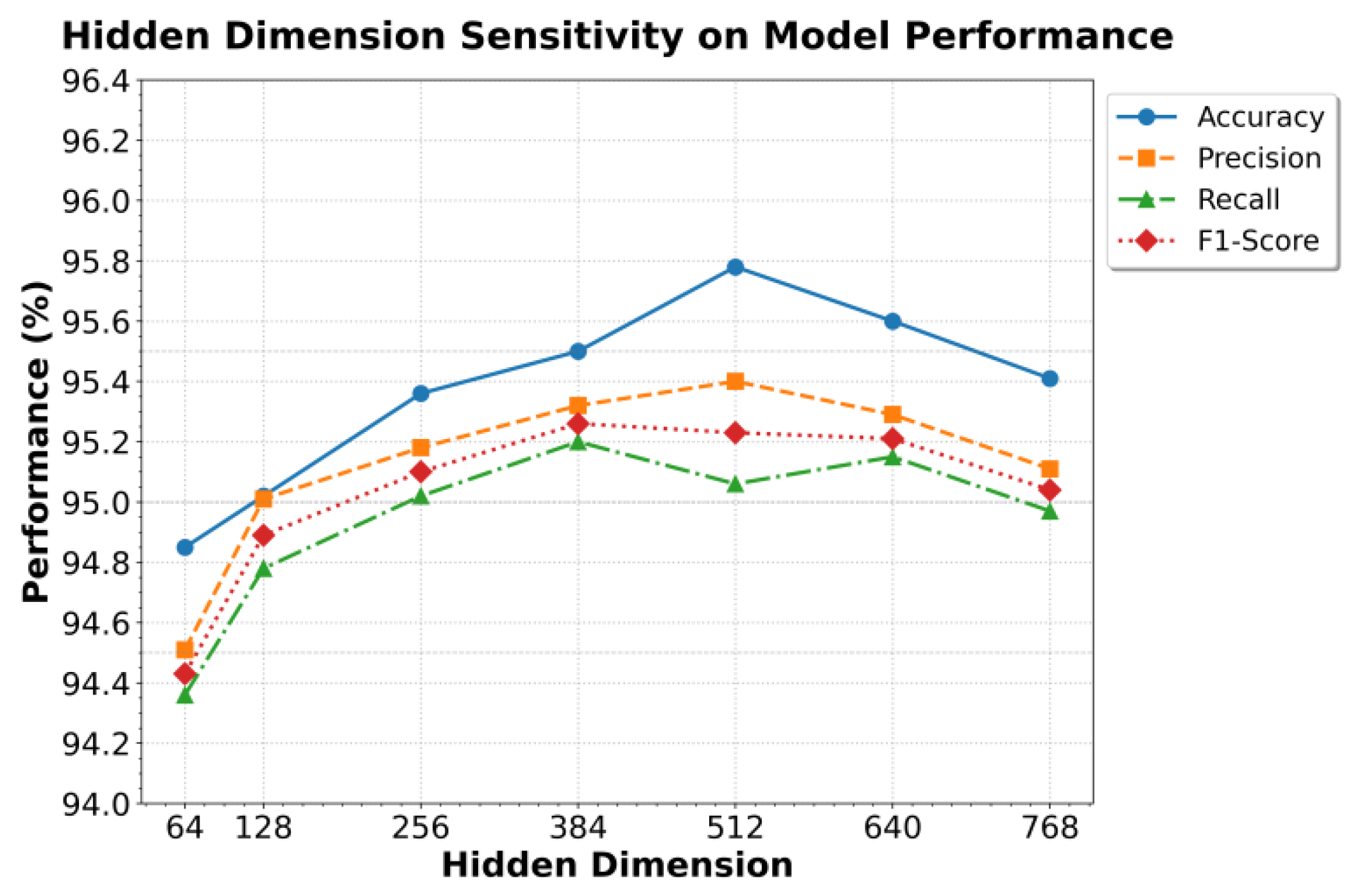

3.2. Experimental Results

4. Conclusion


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).