1. Introduction

Crude oil, as a critical strategic resource, plays a pivotal role in global energy security, economic stability, and industrial development [

2]. Its price fluctuations have far-reaching impacts on macroeconomic indicators such as inflation, employment, and trade balances [

3], as well as on micro-level decision-making in industries ranging from transportation to manufacturing [

4]. However, crude oil prices exhibit complex dynamics, including volatility clustering

1, nonlinear temporal dependencies, and sensitivity to geopolitical events, economic policies, and supply-demand shocks [

5,

6]. These characteristics pose significant challenges to accurate forecasting, making it a long-standing focus in energy economics and financial research. This macro–financial nexus has been documented across regimes and markets [

7,

8,

9,

10,

11].”

Accurate crude oil price forecasting is essential for multiple stakeholders. For governments, it informs energy policy formulation, strategic reserves management, and inflation control [IEA, 2022]. For energy companies, it supports investment decisions, risk hedging, and production planning [

12]. For financial markets, it guides the pricing of oil-related derivatives and portfolio optimization [

13]. Conversely, inaccurate forecasts may lead to market distortions, excessive speculation, or suboptimal policy responses [

14]. Thus, developing robust forecasting models remains a critical research priority. See also real-time evidence on forecastability [

11] and stock-market transmission [

9].

Crude oil prices are influenced by a complex interplay of factors, including supply-demand fundamentals such as OPEC production quotas, shale oil extraction costs, and global energy transitions [

15]; financialization through speculative activities in commodity markets that amplify price volatility [

16]; and geopolitical and macroeconomic shocks like conflicts in oil-producing regions, economic recessions, or policy shifts [

17]. These factors result in price dynamics that violate the assumptions of traditional linear models, such as homoskedasticity

2 and stationarity [

18]. Volatility clustering, for instance, indicates that past price fluctuations contain information about future volatility—a feature formalized by Engle [

19] through the Autoregressive Conditional Heteroskedasticity (ARCH) model. Additionally, nonlinear relationships between drivers and bidirectional temporal dependencies further complicate forecasting [

20]. Moreover, asymmetric/leverage effects motivate EGARCH/GJR/TARCH extensions [

21,

22,

23].”

To address these challenges, researchers have developed three main categories of forecasting models, each with distinct strengths and limitations. Early efforts relied on linear time series models such as Autoregressive Integrated Moving Average [

24] and its variants. While ARIMA captures linear temporal trends, it fails to model volatility clustering or nonlinearities [

25]. To address volatility, [

26] extended ARCH to Generalized ARCH (GARCH), which models conditional variance as a function of past squared residuals and volatility. GARCH and its variants remain widely used for volatility forecasting [

27], but struggle with complex nonlinear relationships and long-range temporal dependencies [

28].

With advances in computational power, machine learning models such as Support Vector Regression [

29] and Random Forest [

30] have been applied to capture nonlinear patterns. Deep learning (DL) models, particularly Recurrent Neural Networks (RNNs) and their variants, excel at modeling temporal sequences. Long Short-Term Memory (LSTM) networks [

31] address the vanishing gradient problem in RNNs, enabling long-term dependency learning, and are widely adopted for time series forecasting [

32]. Bidirectional LSTM (BiLSTM) [

33] further enhances performance by processing sequences in both directions, capturing bidirectional temporal relationships [

34]. However, standalone DL models often overlook volatility clustering, as they treat input data as homoscedastic [

35].

Recognizing the limitations of single-model approaches, hybrid models have emerged that combine statistical and ML/DL techniques. For example, GARCH-LSTM models integrate GARCH-derived volatility with LSTM to capture both volatility and temporal dependencies [

20]. Similarly, CNN-LSTM models apply convolutional layers to extract local features before feeding them into LSTM [

36]. While these hybrids outperform standalone models, gaps remain: (1) few incorporate bidirectional temporal learning to capture asymmetric dependencies; (2) nonlinear refinement remains limited, as traditional activation functions may not fully model complex high-dimensional nonlinearities [

37].

Notably, existing hybrid frameworks suffer from three critical gaps: (i) volatility modeling (e.g., GARCH) is often loosely coupled with sequential learning, failing to encode time-varying risk into temporal features; (ii) unidirectional LSTMs in models like GARCH-LSTM cannot capture forward-backward feedback loops—for example, how future geopolitical expectations influence current prices; (iii) nonlinear refinement relies on traditional activation functions, which underperform in approximating discontinuous jumps, such as the 2020 negative WTI prices caused by storage constraints.

Our GARCH–BiLSTM–KAN addresses these limitations by: (i) fusing GARCH-derived volatility as structured input to BiLSTM; (ii) leveraging bidirectional sequences to model expectation-driven feedback; and (iii) employing KAN’s spline-based nonlinearity to capture extreme market anomalies.

To this end, we propose a novel hybrid model—GARCH–BiLSTM–KAN—that integrates three components with complementary strengths: GARCH(1,1), which models time-varying volatility to enrich input features; BiLSTM, which captures bidirectional temporal dependencies in both returns and volatility; and the Kolmogorov–Arnold Network (KAN), a recently proposed neural architecture [

37] that uses univariate basis functions to model complex nonlinearities and refine BiLSTM outputs more efficiently than traditional networks.

Using daily WTI crude oil prices from 1986 to 2025, we compare GARCH–BiLSTM–KAN with benchmark models (e.g., GARCH, LSTM, GARCH-LSTM, CNN–LSTM–KAN). The contributions of this study are threefold: (1) Methodologically, we introduce a synergistic framework that unifies volatility modeling, bidirectional sequence learning, and advanced nonlinear refinement; (2) Empirically, we demonstrate superior forecasting performance via RMSE, MAE, and , validated by statistical tests over a 39-year dataset; (3) Practically, we provide a robust tool for energy market stakeholders to support risk management, policy formulation, and investment decision-making.

The remainder of the paper is organized as follows:

Section 2 details the methodology of the GARCH–BiLSTM–KAN model, including the mathematical formulations of each component and their integration.

Section 3 describes the data, including sources, preprocessing, and descriptive statistics.

Section 4 presents empirical results, comparing the proposed model with benchmark models.

Section 5 discusses the implications of the findings, while

Section 6 concludes with limitations and directions for future research. Throughout, the forecasting target is the one-step-ahead

shifted log-return (

Section 3); price-level forecasts are reconstructed for comparability across model families (

Section 4).

2. Methodology

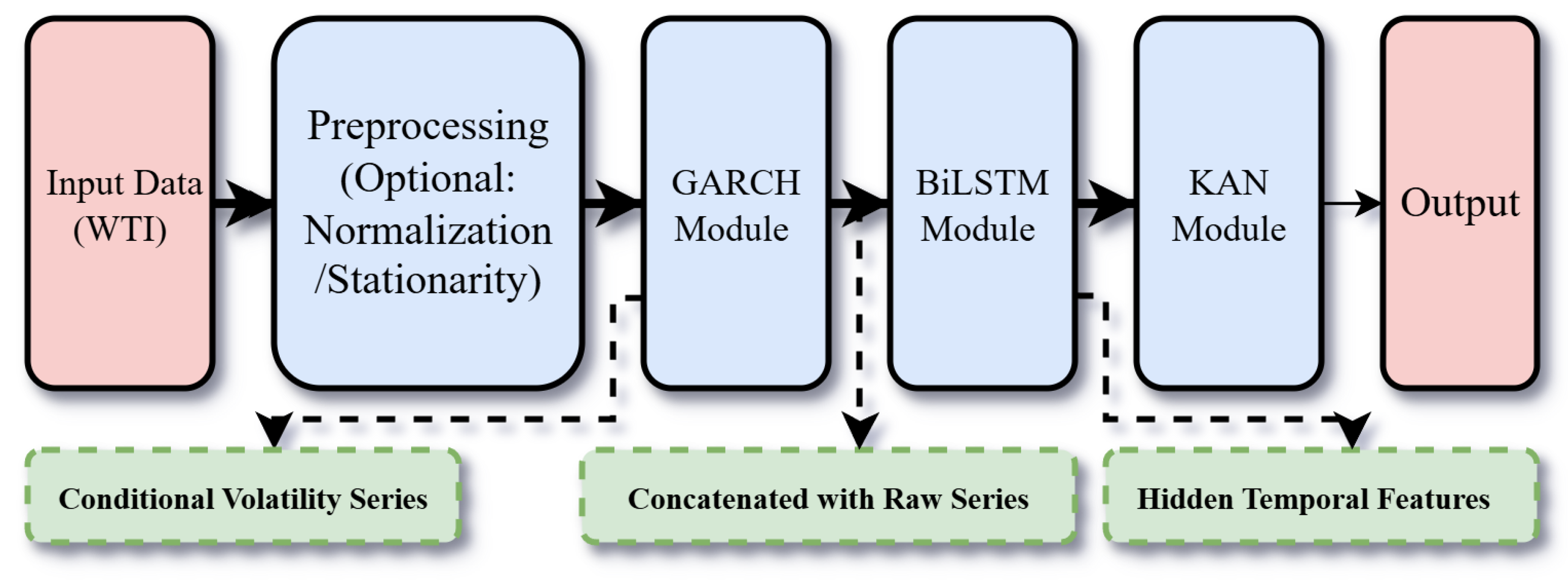

This section presents the methodological framework of the proposed GARCH–BiLSTM–KAN hybrid model (hereafter referred to as GBK-Net), which integrates three complementary components: GARCH for modeling time-varying volatility, BiLSTM for capturing bidirectional temporal dependencies, and KAN for refining complex nonlinear relationships. The architecture is designed to address the inherent challenges of financial time series, such as volatility clustering, temporal asymmetry, and nonlinear dynamics.

Figure 1 provides a schematic overview of the GBK-Net framework.

2.1. GARCH for Volatility Estimation

The Generalized Autoregressive Conditional Heteroskedasticity (GARCH) model, originally proposed by Bollerslev [

26], constitutes the foundational component for volatility estimation in the GBK-Net framework. Financial time series, particularly asset returns, often exhibit volatility clustering—a stylized fact where periods of high volatility are likely to be followed by high volatility, and low volatility by low volatility. This behavior violates the homoskedasticity assumption inherent in classical linear models. GARCH addresses this by modeling the conditional variance as a function of both past squared residuals and past conditional variances, allowing it to effectively capture time-varying volatility dynamics.

For a given time series of returns

, the GARCH

specification consists of two equations: the mean equation and the variance equation. The mean equation is defined as:

where

is the constant mean return, and

is the error term at time

t, which follows a conditional normal distribution

with

representing the information set up to time

.

The conditional variance

, which measures the volatility, is specified in the GARCH

variance equation as:

where

is the constant term,

are the ARCH coefficients capturing the impact of past squared residuals (news about volatility from the recent past), and

are the GARCH coefficients representing the persistence of volatility. To ensure the

positivity and

stationarity of the conditional variance, the parameters must satisfy:

In our hybrid model, we employ the GARCH (1,1) variant, which is parsimonious and widely documented to effectively capture volatility dynamics in financial data [

26,

27]. The estimated conditional volatility

from GARCH (1,1) serves as a critical input to the subsequent BiLSTM and KAN components, providing a structured measure of historical volatility that complements the raw return series. For completeness, asymmetric volatility alternatives include EGARCH, GJR-GARCH and TARCH [

21,

22,

23].”

2.2. BiLSTM for Bidirectional Temporal Learning

While GARCH models excel at volatility estimation, they are limited in capturing complex temporal dependencies, especially those involving long-range interactions and bidirectional relationships. To address this, we integrate a Bidirectional Long Short-Term Memory (BiLSTM) network, an extension of the LSTM architecture [

31], which is designed to model sequential data by preserving information from both past and future time steps.

LSTM networks overcome the vanishing gradient problem of traditional Recurrent Neural Networks (RNNs) through a gated cell structure, enabling them to learn long-term dependencies. Each LSTM cell contains three key gates: the forget gate, input gate, and output gate, which regulate the flow of information into and out of the cell state .

Forget Gate: Determines which information to discard from the cell state:

Input Gate: Controls the update of the cell state with new information:

Cell State Update: Combines the forget gate output and input gate output:

Output Gate: Determines the hidden state

based on the cell state:

where

is the sigmoid activation function, ∘ denotes element-wise multiplication,

is the input at time

t,

is the hidden state from the previous time step, and

W and

b are weight matrices and bias vectors, respectively.

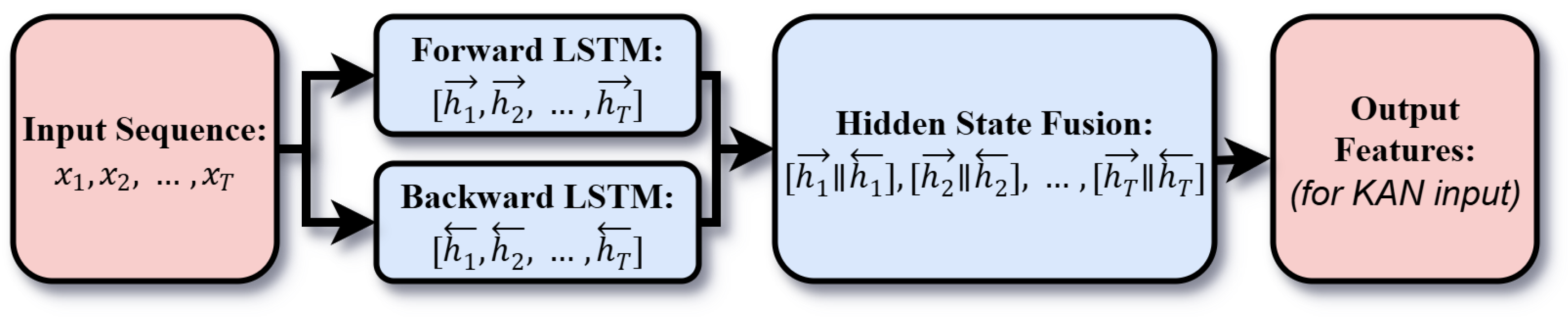

The BiLSTM (Bidirectional Long Short-Term Memory) architecture consists of two parallel LSTM networks: a forward LSTM that processes the sequence from past to future (

to

) and a backward LSTM that processes it from future to past (

to

). The hidden states from both directions are concatenated at each time step to form the final output, capturing both historical and future contextual information:

In our model, the input to the BiLSTM layer includes both the raw return series

and the GARCH-estimated volatility

, forming a multivariate input sequence:

A Lookback Window of 20 is used to construct input sequences, meaning each sample contains 20 time steps of historical data. At inference, inputs are sliding windows

. The BiLSTM encodes the within-window sequence bidirectionally; no information beyond time

t is used. The BiLSTM is configured with 32 hidden units in each direction (64 after concatenation) and 2 layers, trained using the Adam optimizer with a learning rate of 0.01 [

38]. This allows the BiLSTM to learn temporal patterns in both returns and volatility, with the bidirectional design enabling it to capture asymmetric dependencies. The output of the BiLSTM,

, is a high-dimensional representation of bidirectional temporal features, which is fed into the KAN layer for further refinement.

Figure 2 shows the schematic diagram of hidden state fusion process in BiLSTM.

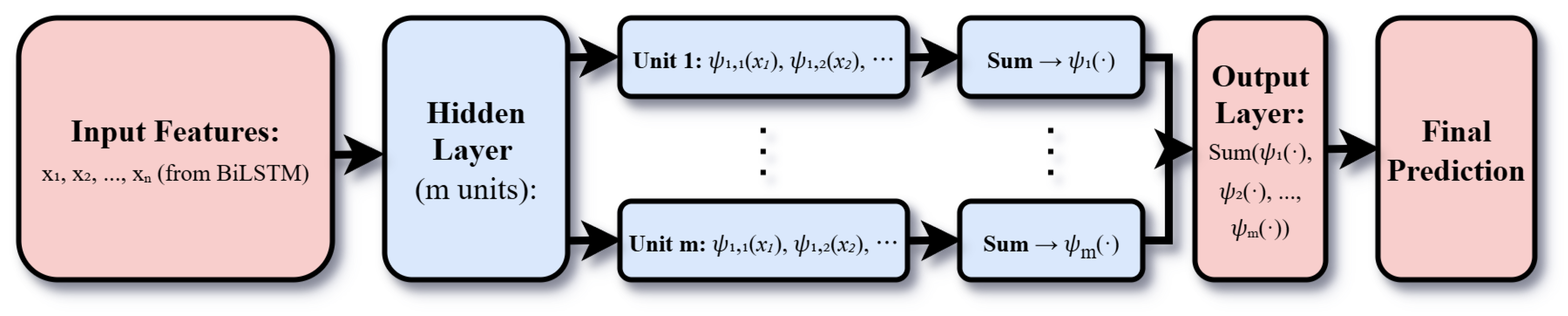

2.3. KAN for Nonlinear Refinement

Despite the strength of BiLSTM in modeling temporal dynamics, financial time series often exhibit highly nonlinear relationships that are challenging to capture with standard neural network architectures. To address this, we incorporate a Kolmogorov-Arnold Network (KAN) (Liu et al., 2024) [

37], a novel neural network paradigm that leverages the Kolmogorov-Arnold representation theorem [

39] to model complex nonlinear functions through a combination of univariate functions and linear operations.

Kolmogorov–Arnold Networks (KANs) differ from traditional feedforward neural networks in their internal transformation mechanism. In conventional neural networks, each layer typically applies a linear transformation followed by a nonlinear activation function. In contrast, KANs replace this with a structure based on univariate basis functions and linear combination.

Specifically, for a KAN with input dimension

d and output dimension

m, the transformation of an input vector

is defined as:

where

denotes the univariate basis function applied to the

i-th input for the

k-th output,

are trainable weights, and

are bias terms.

In this work, the KAN layer uses cubic B-spline basis functions with 5 knots per input dimension. Knot locations are initialized at empirical quantiles of each input feature and are fine-tuned jointly with the spline coefficients and output weights via backpropagation. We adopt Xavier initialization for weights and apply regularization to the spline coefficients; early stopping is used to prevent overfitting. This piecewise-polynomial parameterization provides local, stable nonlinear refinement of the BiLSTM features without invoking radial basis functions or Gaussian centers/widths. As a result, they reduce the number of learnable parameters compared to fully connected networks while preserving strong expressive power.

In our framework, the KAN receives the output of the BiLSTM layer,

, as input. The KAN has a width structure of

and uses cubic B-spline basis functions with 5 knots for

. These functions are selected due to their flexibility in approximating smooth nonlinearities and their high interpretability [

37].

The KAN is optimized using the Adam optimizer with a learning rate of 0.01. Its role is to enhance the modeling of residual nonlinear dependencies that are not fully captured by the GARCH and BiLSTM components. The output of the KAN, denoted

, represents the refined prediction after accounting for both temporal dependencies and nonlinear patterns.

Figure 3 shows the schematic diagram of the KAN model structure.

Our lightweight KAN head (width

) and mild regularization are also motivated by graph-theoretic sparsity notions. The maximum forcing number characterizes the smallest edge set ensuring structural uniqueness, while conditional matching-preclusion quantifies the least deletions that destroy perfect matchings [

40,

41]. By analogy, we seek the smallest set of active channels that preserves identifiability of nonlinear mappings—achieving parameter economy without sacrificing predictive fidelity.

2.4. Hybrid Model Integration of GARCH–BiLSTM–KAN

The GBK-Net hybrid model is integrated in a sequential manner, where each component’s output serves as input to the next, leading to the final prediction. For the implementation of this model, we adopt a one-step-ahead walk-forward prediction strategy: at each time point

t, the GBK-Net model is trained using data from the time interval

to predict the data at time

; all data standardization tools (scalers) are only fitted based on the training set data (i.e., the data from

used for model training at time

t), and the prediction results are inverted back to the original data scale before calculating the prediction error; unless otherwise specified, the prediction period is 1 day.

Table 1 summarizes the key parameters of each component in the GBK-Net hybrid model.

1. GARCH Preprocessing: The raw return series is first fed into the GARCH(1,1) model to estimate the conditional variance (volatility). This step transforms the univariate return series into a two-dimensional feature vector , enriching the input with volatility information.

2. BiLSTM Feature Extraction: The two-dimensional feature sequence is fed into the BiLSTM network, which processes it bidirectionally (forward and backward) to generate bidirectional temporal features . The network uses a Lookback Window of 20 to construct input sequences, with 32 hidden units per direction and 2 layers, trained using the Adam optimizer with a learning rate of 0.01.

3. KAN Refinement: The GBK-Net features are input to the KAN, which applies cubic B-spline basis functions (with 5 knots) to each feature dimension, followed by a linear combination to yield the final prediction . The KAN has a width structure of and uses the same Adam optimizer.

The sequential integration is theoretically motivated by the “hierarchical feature refinement” principle: GARCH first decomposes raw returns into predictable volatility patterns, reducing noise for subsequent layers. BiLSTM then encodes bidirectional dependencies—forward LSTMs capture supply-driven trends, while backward LSTMs model demand expectations. Finally, KAN refines these temporal features using cubic B-splines, which outperform ReLU in approximating non-smooth functions. Consequently, this pipeline ensures that volatility, temporal asymmetry, and nonlinearity are modeled as interdependent rather than isolated phenomena.

4. Training and Loss Function: The entire model is trained end-to-end for 100 epochs using the mean squared error (MSE) loss function, defined as:

where

is the true value and

is the model’s prediction. Three evaluation metrics are used to assess performance:

Root Mean Squared Error (RMSE):

Mean Absolute Error (MAE):

Coefficient of Determination ():

The model is trained on 80% of the data (7,877 samples) and tested on the remaining 20% (1,969 samples) using a time-series split to avoid data leakage from future to past. The price data are normalized using the MinMaxScaler with a feature range of , which improves training stability and convergence for the BiLSTM architecture.

The integration of GARCH, BiLSTM, and KAN is motivated by their complementary strengths: GARCH provides a statistically grounded measure of volatility, BiLSTM captures bidirectional temporal dependencies in both returns and volatility, and KAN refines these features by modeling residual nonlinearities. This hierarchical approach ensures that the model leverages both parametric (GARCH) and nonparametric (BiLSTM, KAN) techniques, making it robust to the diverse characteristics of financial time series, which single-model approaches often fail to address.

The hyperparameter settings are summarized in

Table 1.

From a robustness perspective, our design is informed by connectivity and restricted-connectivity results in high-dimensional interconnection networks. Studies on

k-ary

n-cubes,

m-ary hypercubes, and locally twisted cubes analyze minimal cut sets and extra/restricted edge-connectivity, offering a structural analogy for how performance degrades when feature channels or links are perturbed [

42,

43,

44,

45,

46]. Related results on leaf-sort graphs connect connectivity with matching preclusion, motivating our choice to keep a small but reliable set of pathways in the hybrid pipeline rather than many fragile ones [

47,

48].

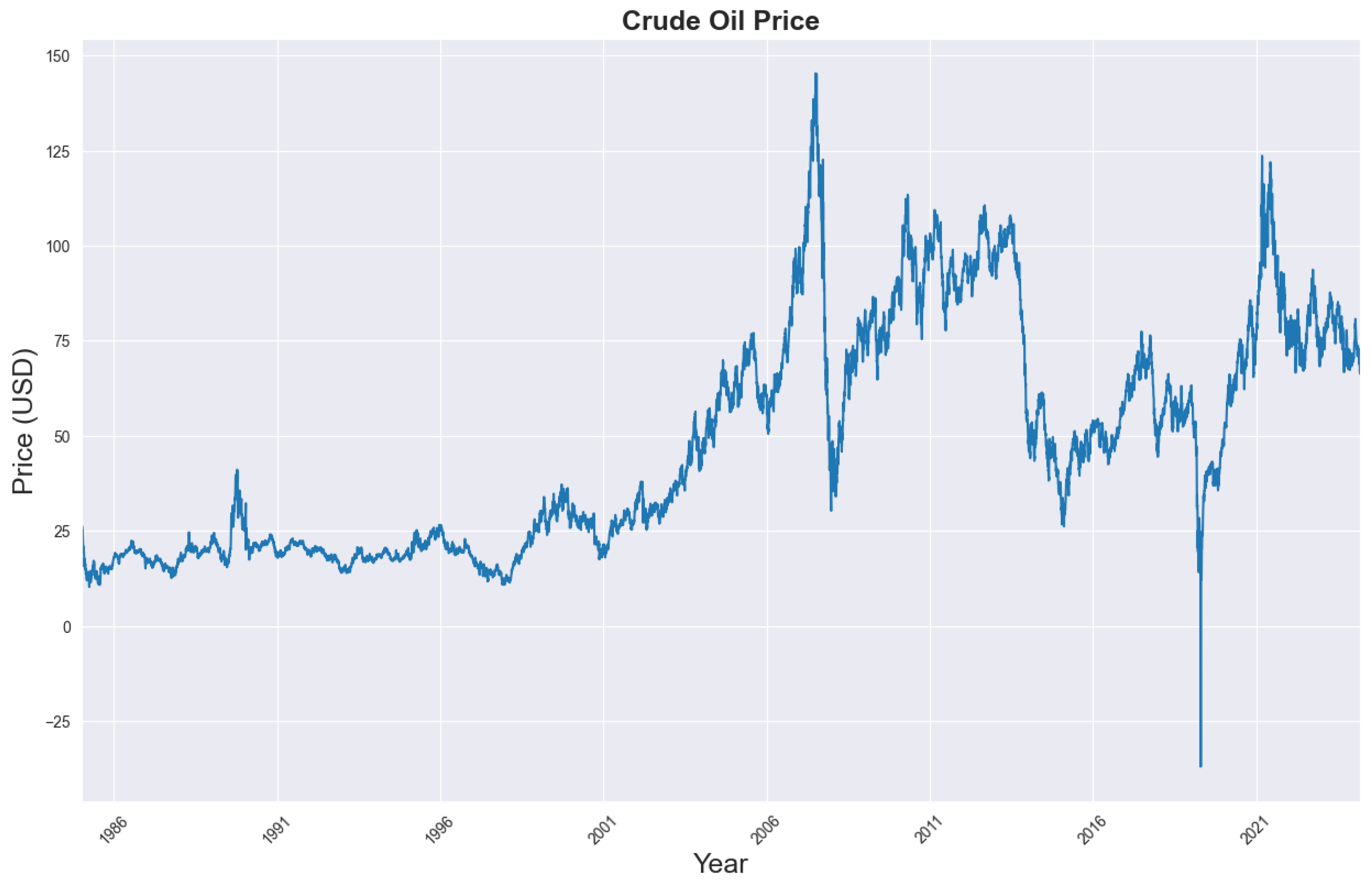

3. Data Description

This study uses daily price data of West Texas Intermediate (WTI) crude oil, a widely recognized benchmark for global oil markets (U.S. Energy Information Administration [EIA], 2023). WTI is favored for its high liquidity and frequent use in pricing agreements. The dataset covers 9,866 daily observations over 39 years, from January 2, 1986, to March 10, 2025. This period encompasses diverse market conditions, including economic expansions, recessions, geopolitical crises, and energy policy changes. Such variety is essential for evaluating the robustness of the proposed forecasting model. Historical evidence further underscores regime shifts and asymmetric macro effects [

7,

8].”

The raw WTI crude oil price data were obtained from the U.S. Energy Information Administration (EIA) database

3, a widely trusted source for energy market data (EIA, 2023). Additional validation was conducted using historical records from the Federal Reserve Economic Data (FRED) database

4, maintained by the Federal Reserve Bank of St. Louis, to ensure consistency.

To handle both non-stationarity and the 2020 negative prices, we first shift prices by a positive constant

C so that logarithms are well defined:

Daily

shifted log-returns are then computed as

Unless otherwise specified,

serves as the default target for model training and evaluation. All price-like inputs are normalized by a MinMaxScaler to

after the shift in (

19); metrics are reported on the original (unshifted) price scale after inverting the transformation during evaluation.

Additionally, all price data were normalized using Min–Max Normalization (MinMaxScaler) with a feature range of

to standardize input values. This standardization enhances the convergence speed of neural network components (BiLSTM and KAN) during training [

49].

Table 2 summarizes the key descriptive statistics of the WTI crude oil prices over the sample period. The mean price is

, with a standard deviation of

, indicating significant price volatility—a characteristic consistent with previous studies on energy commodities [

50]. The median price is

, slightly lower than the mean, suggesting a right-skewed distribution, which is typical of commodity prices influenced by supply shocks. The interquartile range (IQR) is calculated as:

which further highlights the substantial price variability over the period. Over the sample period, prices range from a minimum of

, observed during the 2020 COVID–19 crisis when storage constraints led to negative prices [

51], to a maximum of

during the 2008 global financial crisis, driven by supply concerns [

3].

Figure 4 presents the trend of WTI crude oil prices from 1986 to 2025, highlighting several notable periods. From 1986–1992, prices remained relatively stable, averaging around USD 20.00–30.00 per barrel, reflecting balanced supply–demand dynamics [

52]. Between 2000–2008, prices exhibited a sharp upward trend, peaking at USD 145.31 in 2008 due to rapid economic growth in emerging markets and geopolitical tensions in the Middle East [

53]. The 2014–2016 crash saw prices fall from over USD 100.00 to below USD 30.00, driven by a supply glut from U.S. shale oil production and OPEC’s decision to maintain output [

54]. In April 2020, during the COVID–19 pandemic, prices reached an unprecedented USD -36.98 as lockdowns triggered a collapse in demand [

55]. The post-2020 recovery was marked by a gradual rebound, influenced by economic reopening, supply chain disruptions, and geopolitical conflicts.

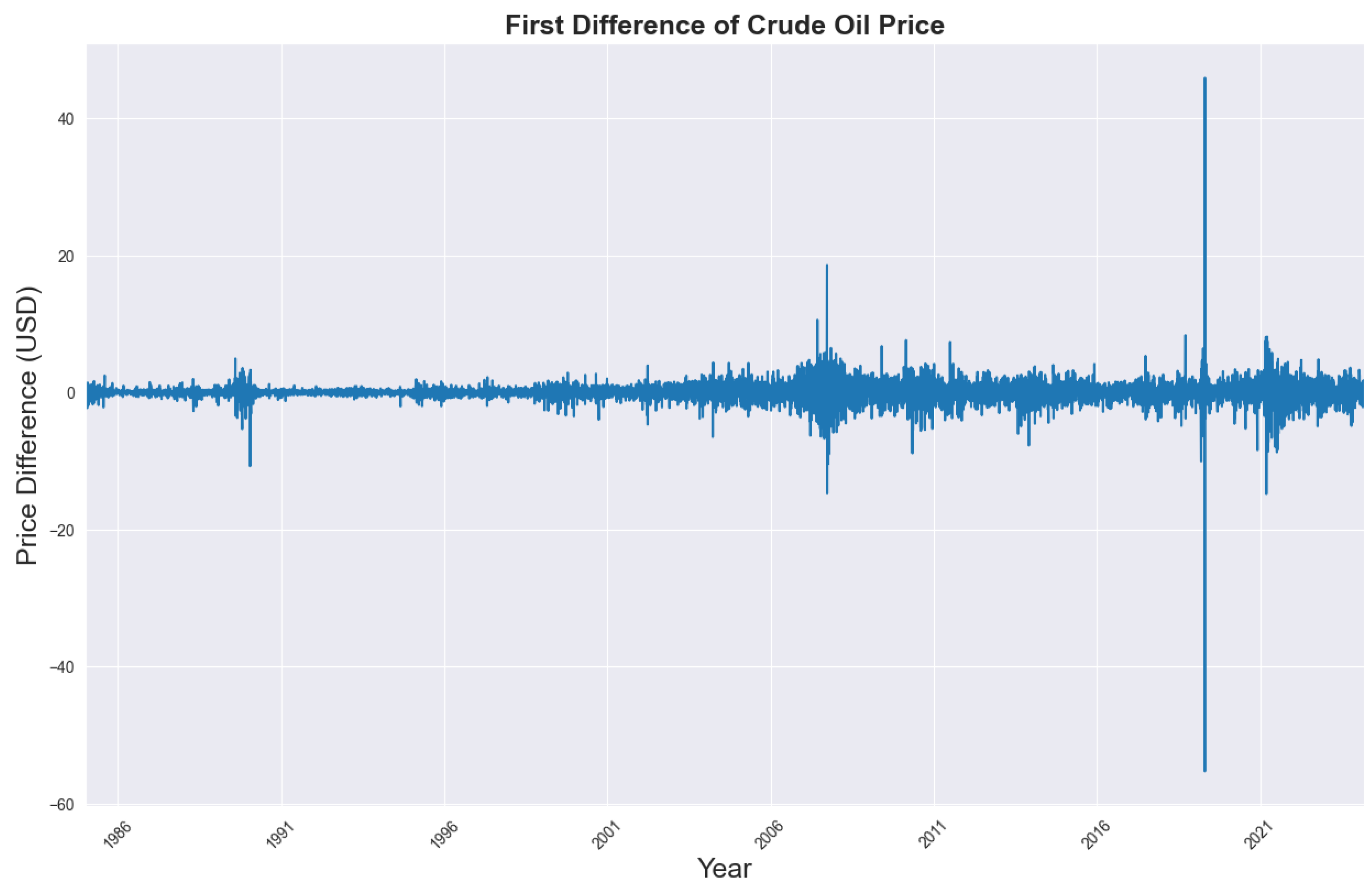

Figure 5 illustrates the first difference of WTI prices, representing daily price changes, and confirms the presence of volatility clustering—periods of high volatility (e.g., 2008, 2020) interspersed with relatively calm intervals—supporting the inclusion of volatility-modeling components such as GARCH in the GBK-Net hybrid framework [

26].

To evaluate the out-of-sample forecasting performance of GBK-Net, the dataset was split into a training set (80%, 7,877 observations, 1986–2016) and a testing set (20%, 1,969 observations, 2017–2025). This chronological split ensures that the model is trained exclusively on historical data and tested on more recent observations, enabling it to capture evolving market dynamics. The testing period includes major events such as the COVID–19 pandemic and the Russia–Ukraine conflict, providing a realistic assessment of the model’s robustness under changing macroeconomic and geopolitical conditions [

25].

4. Empirical Results

To systematically evaluate the predictive efficacy of the proposed GBK-Net, a comprehensive comparative analysis was conducted against multiple benchmark models, including traditional volatility models (GARCH, EGARCH), standalone deep learning architectures (LSTM), and hybrid configurations (LSTM-KAN, GARCH-LSTM, CNN-LSTM, CNN–LSTM–KAN).

Given the differences between the output forms of statistical models (GARCH/EGARCH, which predict a conditional mean of returns) and deep learning models (which output prices directly), we reconstruct all statistical-model forecasts to the same price target before scoring. We proceed in two steps.

so that

and log-returns are well defined even in the presence of negative prices.

If a model outputs the one-step-ahead

shifted log-return , we reconstruct price via

If a model outputs the

arithmetic return , we use

In all cases, evaluation metrics (RMSE, MAE,

) are computed on the original (unshifted) price scale by comparing

with

. By default we fit and report

shifted log-returns; arithmetic-return variants are included only where explicitly noted and are mapped back using (

24).

Three performance metrics—root mean squared error (RMSE), mean absolute error (MAE), and coefficient of determination (

)—were computed on the out-of-sample test set. The results are summarized in

Table 3 for direct comparison across models.

As shown in

Table 3, GBK-Net achieved the best performance across all evaluation metrics, registering the lowest RMSE (2.4876) and MAE (1.5156), along with the highest

value (0.9810). This indicates that the model explains approximately 98.10% of the variance in observed WTI crude oil prices, reflecting a strong fit to the underlying process. Among the hybrid alternatives, CNN–LSTM–KAN ranked second, with slightly higher errors (RMSE = 2.6047, MAE = 1.6464) and a marginally lower

(0.9792). The CNN-LSTM architecture, while effective at capturing local spatiotemporal patterns, produced comparatively inferior results (RMSE = 2.7639, MAE = 1.7693,

= 0.9765), underscoring the incremental value of integrating KAN for nonlinear feature refinement.

Figure 6.

Local Comparison of Prediction Results of Various Models on the Test Set

Figure 6.

Local Comparison of Prediction Results of Various Models on the Test Set

Beyond the top-performing GBK-Net, we examined standalone deep learning architectures and simpler hybrids. Standalone deep learning models exhibited comparatively diminished performance: the LSTM network achieved an RMSE of 2.8802, MAE of 1.8445, and of 0.9745, while the LSTM-KAN hybrid showed a marginal decline in predictive accuracy (RMSE = 2.9223, MAE = 1.8780, = 0.9738). This suggests that KAN’s nonlinear mapping relies on the prior integration of volatility modeling and bidirectional temporal learning components to achieve optimal performance. The GARCH-LSTM model, which combines volatility estimation with unidirectional sequence processing, demonstrated a higher error profile (RMSE = 3.0753, MAE = 1.9996) and lower (0.9710) compared with the proposed framework, highlighting the importance of bidirectional temporal dependency modeling for capturing asymmetric price dynamics.

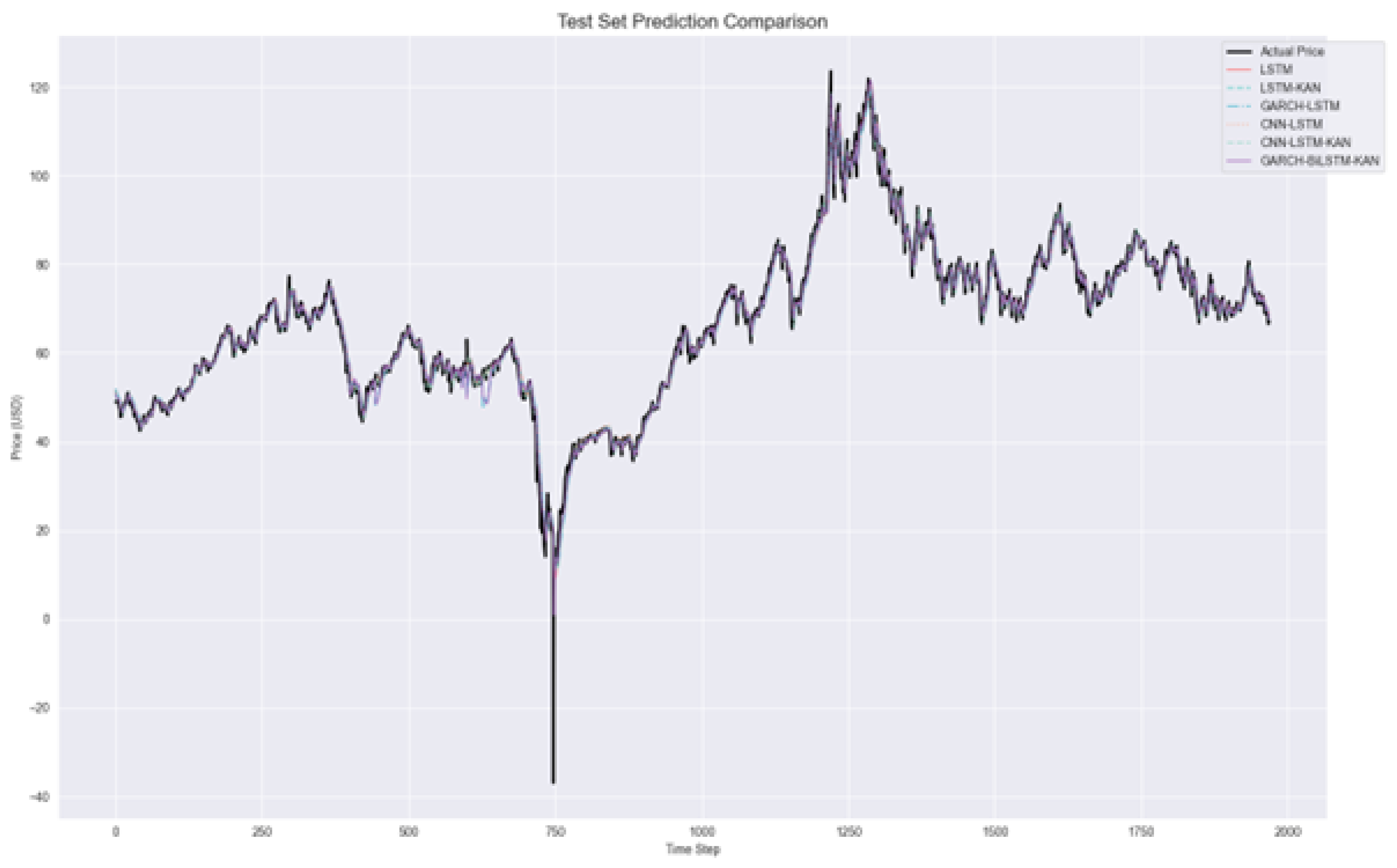

Figure 7.

Comparison of test set predictions among effective models (LSTM, LSTM-KAN, GARCH-LSTM, CNN-LSTM, CNN–LSTM–KAN, and GBK-Net) against the actual WTI crude oil prices. The curves closely overlap, highlighting the superior alignment of GBK-Net with observed prices.

Figure 7.

Comparison of test set predictions among effective models (LSTM, LSTM-KAN, GARCH-LSTM, CNN-LSTM, CNN–LSTM–KAN, and GBK-Net) against the actual WTI crude oil prices. The curves closely overlap, highlighting the superior alignment of GBK-Net with observed prices.

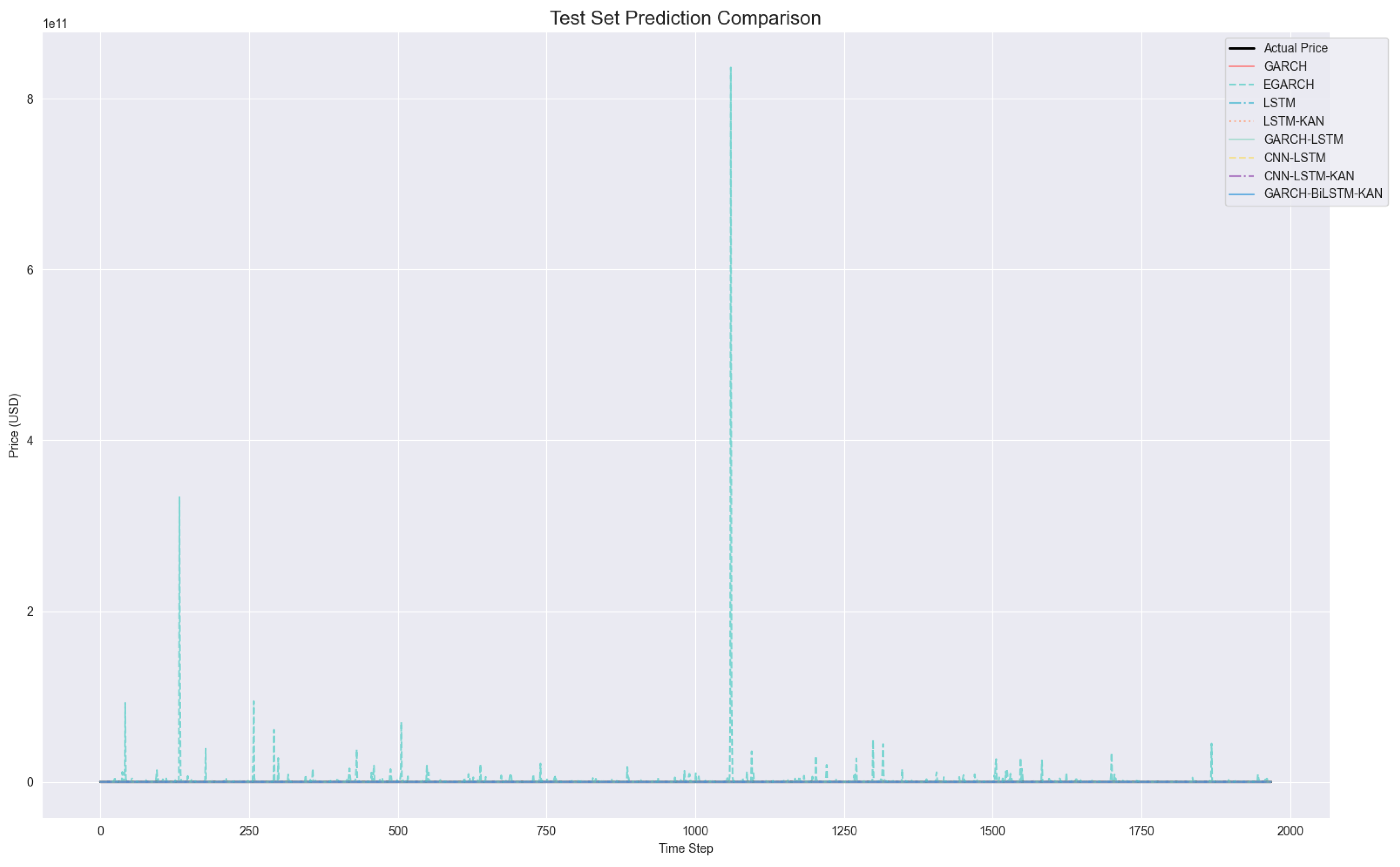

Figure 8.

Comparison of test set predictions including all models (GARCH, EGARCH, LSTM, LSTM-KAN, GARCH-LSTM, CNN-LSTM, CNN–LSTM–KAN, and GBK-Net). The extreme deviations of GARCH and EGARCH dominate the scale, illustrating their numerical instability and poor suitability for crude oil price forecasting.

Figure 8.

Comparison of test set predictions including all models (GARCH, EGARCH, LSTM, LSTM-KAN, GARCH-LSTM, CNN-LSTM, CNN–LSTM–KAN, and GBK-Net). The extreme deviations of GARCH and EGARCH dominate the scale, illustrating their numerical instability and poor suitability for crude oil price forecasting.

Due to these excessively large deviations and much lower

values, directly displaying GARCH and EGARCH results alongside other models in the same figures would obscure the finer prediction details and distort the comparative visualization of more effective models (e.g., GBK-Net, CNN–LSTM–KAN). Accordingly, We provide two complementary views:

Figure 8 includes all models (revealing scale dominance by GARCH/EGARCH), whereas

Figure 7 and the dual-panel

Figure 10 focus on effective models to expose fine-grained differences.

Visual corroboration of these quantitative results is provided in

Figure 6,

Figure 7,

Figure 8,

Figure 9 and

Figure 10.

Figure 6 presents a localized comparison of test set predictions, where GBK-Net forecasts exhibit close alignment with actual prices, in contrast to the substantial deviations observed in GARCH and EGARCH. This superior alignment is further evidenced in

Figure 7 and

Figure 8, which zooms into a sub-period of the test set, revealing the model’s ability to capture both short-term fluctuations and medium-term trends more effectively than CNN–LSTM–KAN and other LSTM-based architectures, which display noticeable lag effects and overshooting.

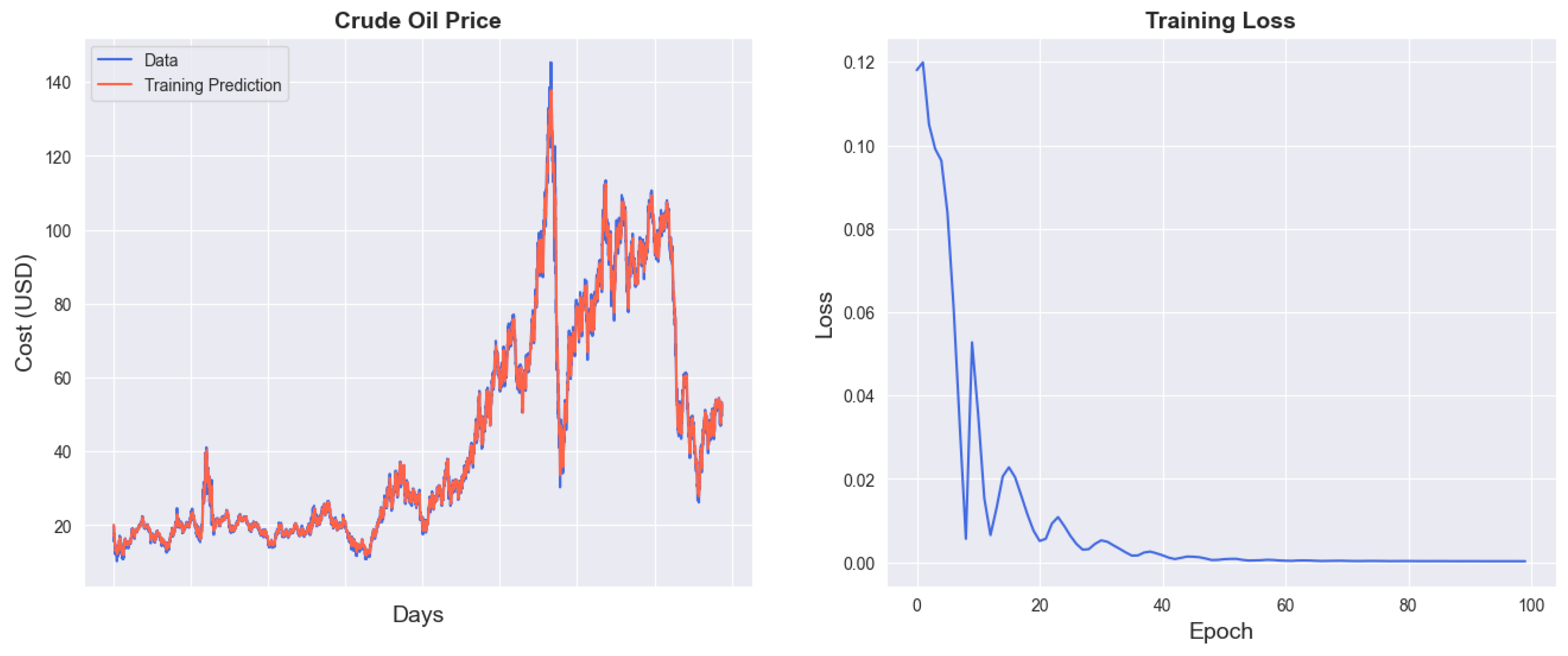

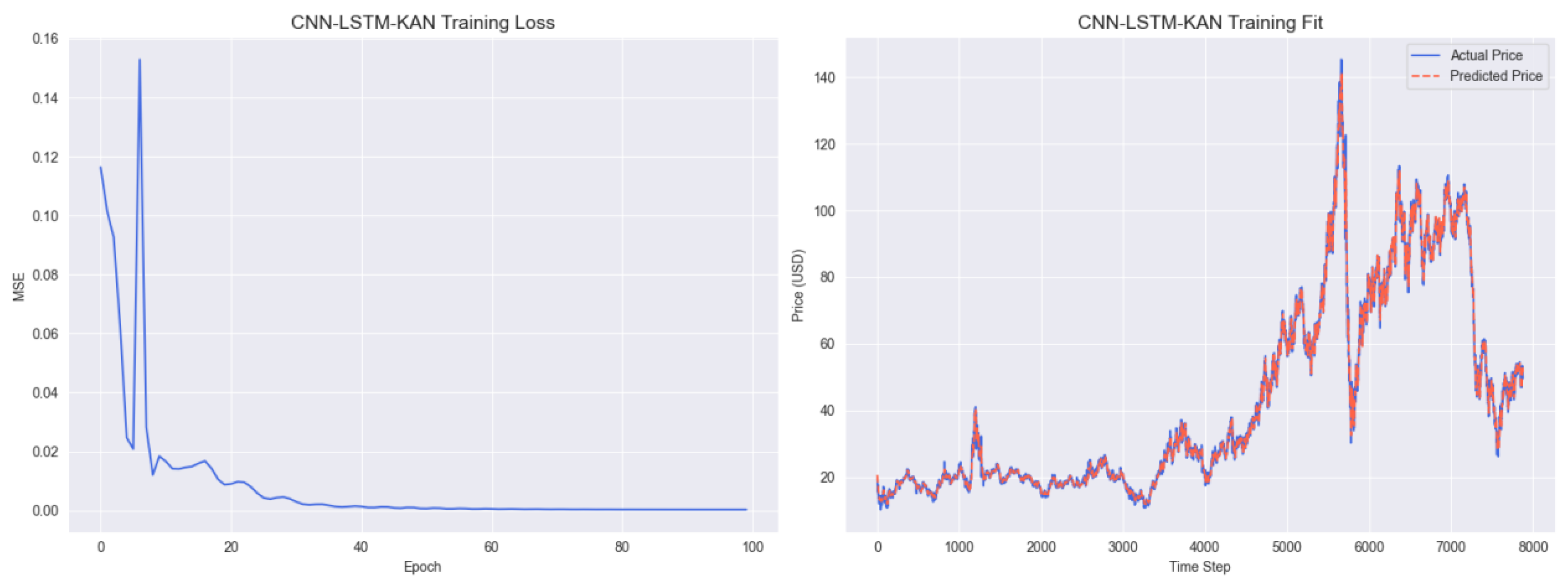

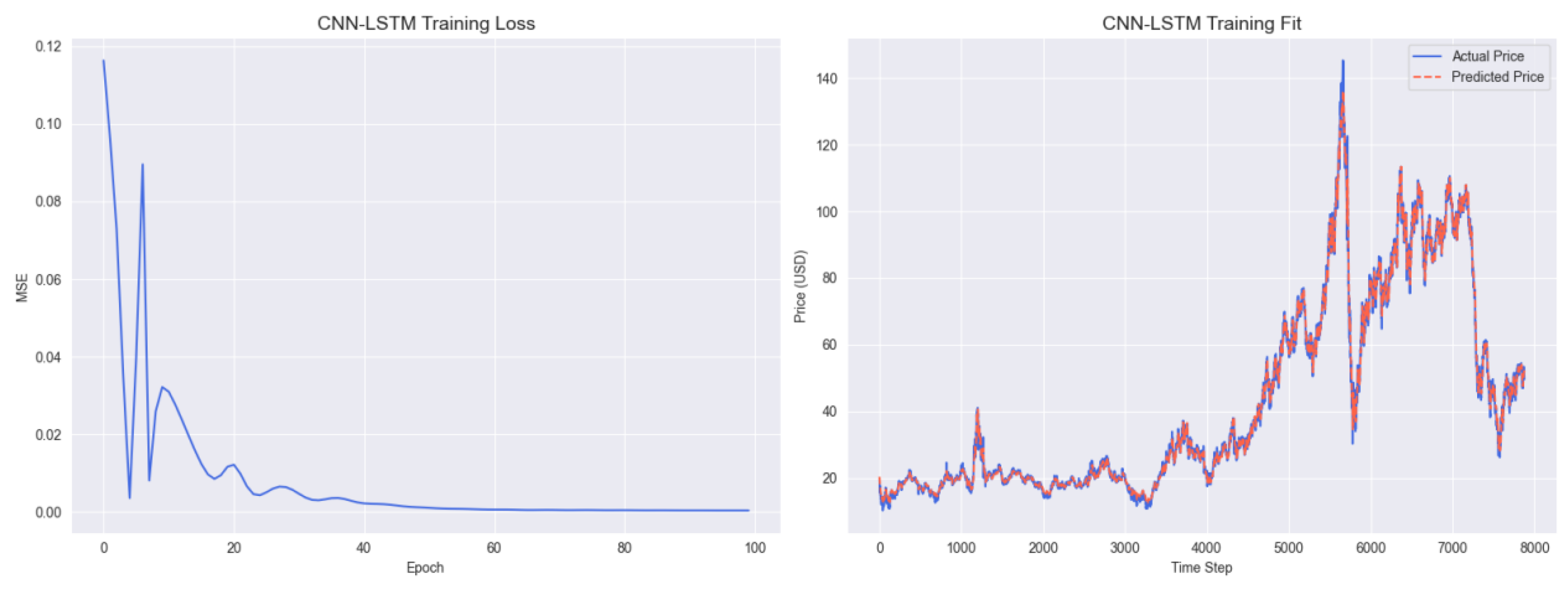

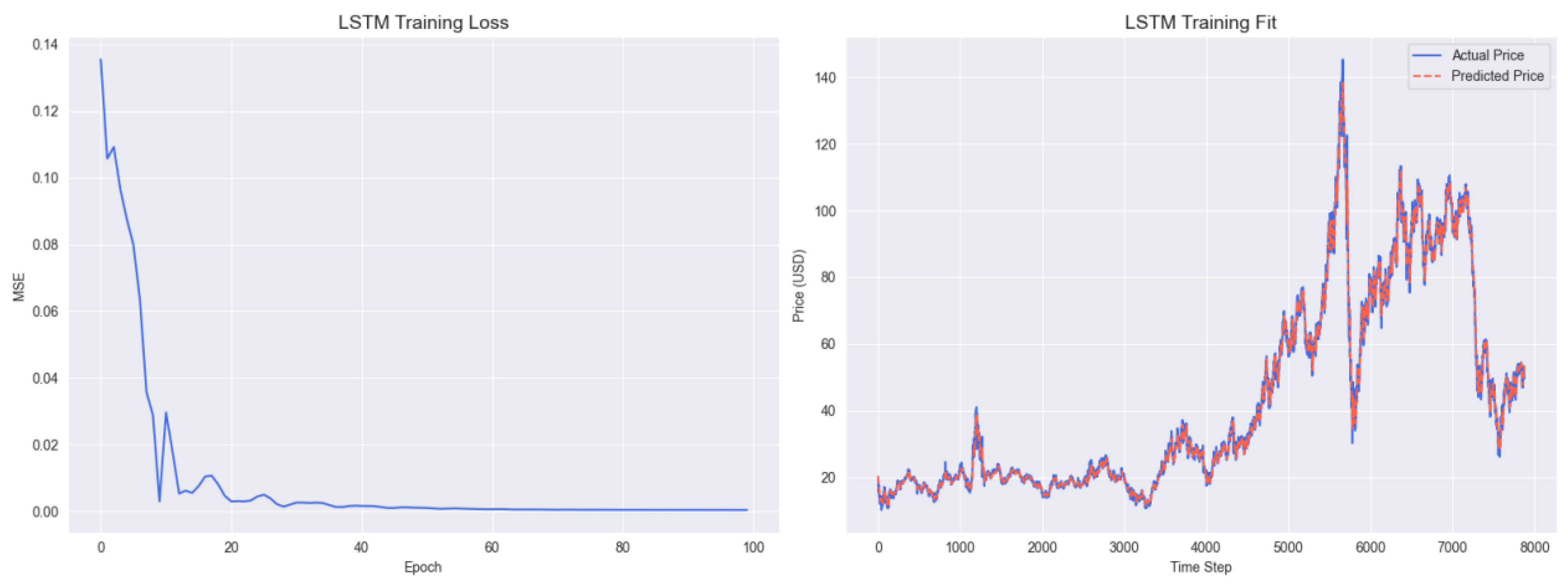

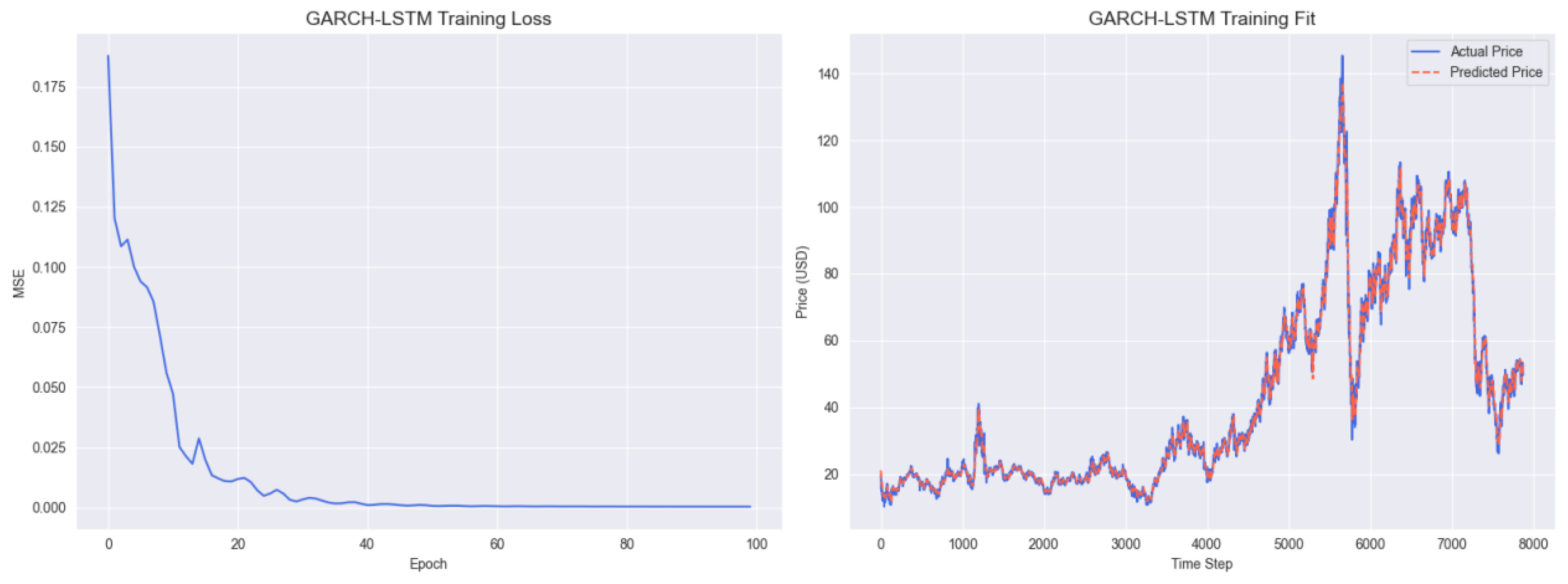

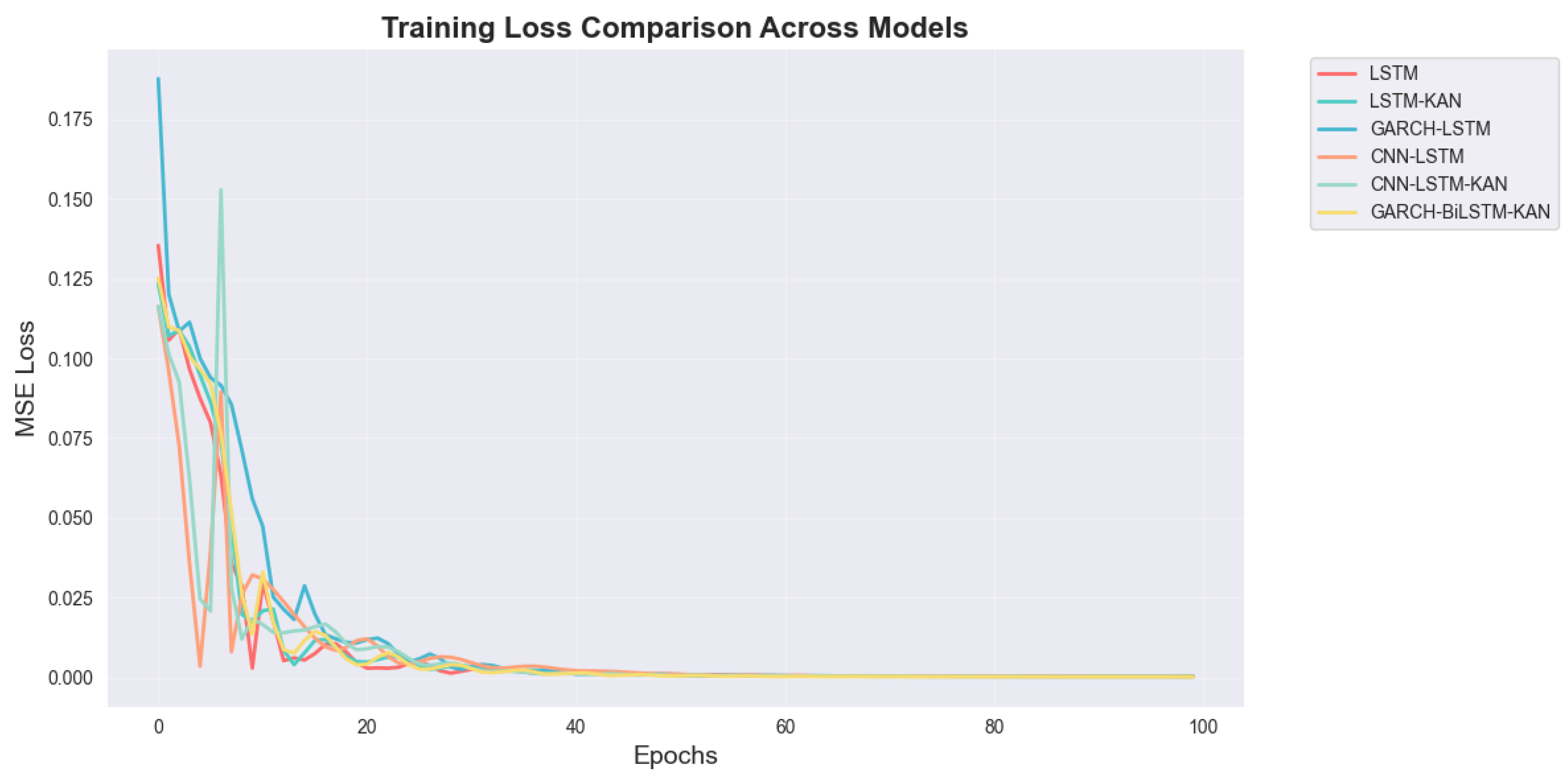

Training dynamics in

Figure 9 compare MSE loss over 100 epochs. All models descend steeply within the first 10–20 epochs and then flatten, indicating fast optimization. GBK-Net (GARCH–BiLSTM–KAN) and CNN–LSTM–KAN converge earlier with smaller oscillations, reflecting the stabilizing effect of combining volatility modeling with nonlinear refinement. Plain LSTM and CNN–LSTM show larger early-epoch jitter, and GARCH–LSTM reduces loss steadily but reaches its plateau later. By 50 epochs, training losses are already near zero for most models, so performance differences are better judged on the test set (

Table 3), where GBK-Net remains the top performer. Overall, the sequential integration of GARCH, BiLSTM, and KAN improves both convergence speed and training stability.

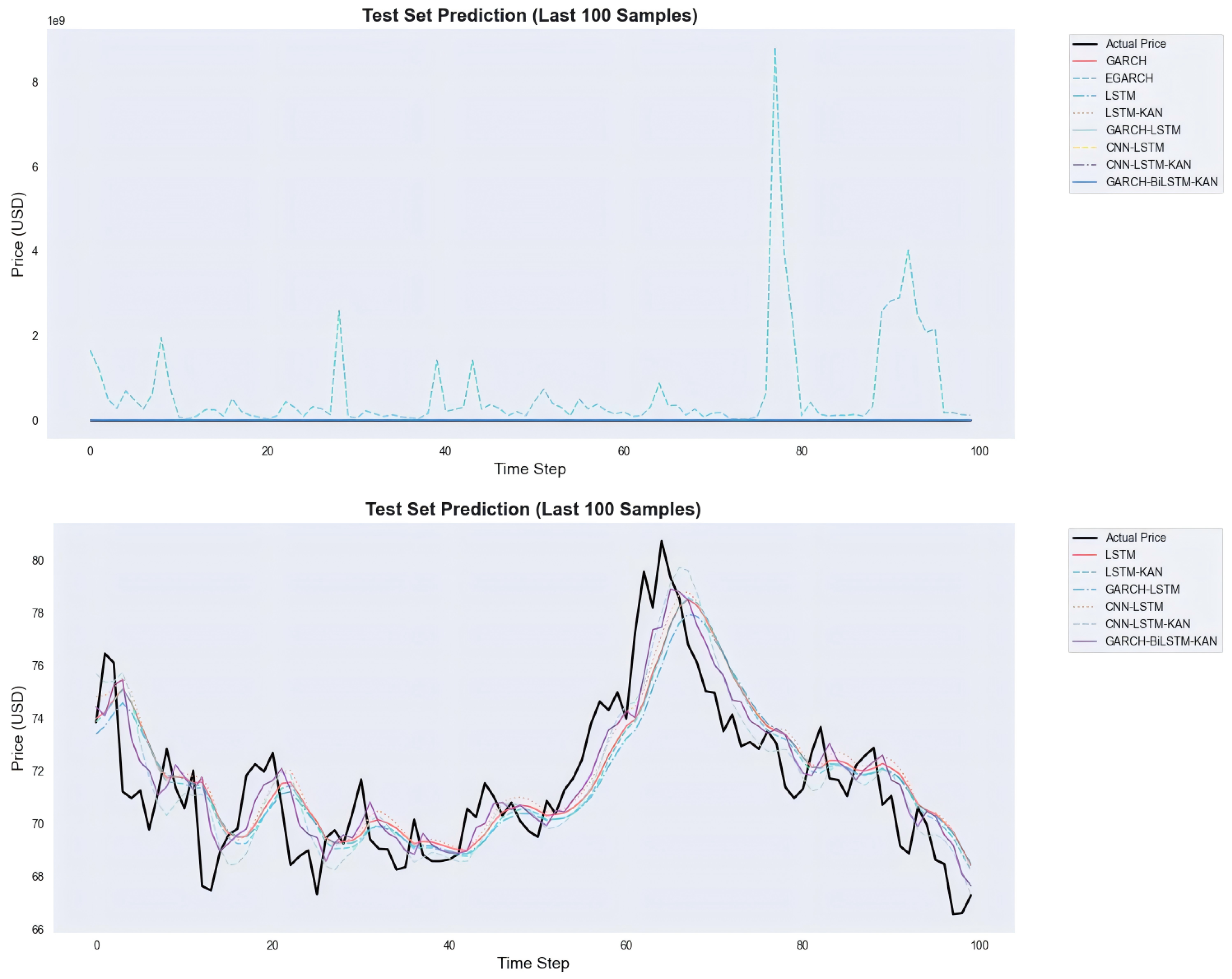

Figure 10 presents a zoomed-in view of the last 100 test samples in two panels, with the dual-panel design explicitly intended to resolve visual ambiguity caused by traditional volatility models: the top panel includes all models (GARCH, EGARCH, LSTM, LSTM-KAN, GARCH-LSTM, CNN-LSTM, CNN–LSTM–KAN, GBK-Net), where the y-axis scale is dominated by the extreme prediction spikes of GARCH/EGARCH—these spikes stem from numerical divergence and mismatched prediction targets, compressing the curves of other models into nearly indistinguishable lines, and this panel is designed to directly demonstrate the severe inadequacy of GARCH/EGARCH for crude oil price forecasting; the bottom panel excludes GARCH/EGARCH to focus solely on effective models, aiming to eliminate scale distortion and clearly reveal performance differences among competitive models: GBK-Net aligns closest with the actual price series, especially capturing the sharp upswing and subsequent correction (time steps 60–80) with smaller phase lag and less amplitude damping, while CNN–LSTM–KAN underestimates abrupt changes, plain LSTM/CNN-LSTM show noticeable lag, and GARCH-LSTM responds slowly near peaks.

Figure 10.

Last 100 test samples. Top—all models incl. GARCH/EGARCH; Bottom—effective models only.

Figure 10.

Last 100 test samples. Top—all models incl. GARCH/EGARCH; Bottom—effective models only.

In order to verify the contribution of GARCH volatility features, BiLSTM time-series modelling module, and KAN nonlinear fitting module to the forecasting performance of WTI crude oil price, this study designs three sets of core ablation experiments, using the original model "GARCH–BiLSTM–KAN" as a benchmark, and removing or replacing the target modules one by one by the "control single variable" principle to compare the forecasting accuracy (RMSE, MAE, ) and training efficiency of each model on the test set. The original model "GARCH–BiLSTM–KAN" is used as the baseline, and the target modules are removed or replaced one by one by the principle of "controlling a single variable", and the prediction accuracies (RMSE, MAE, and ) and training efficiencies of the models are compared with each other on the test set.

From the

Table 4, GBK - Net has the best performance in terms of metrics, indicating that its architecture integrating GARCH volatility, BiLSTM time-series modelling and KAN nonlinear fitting is able to effectively capture the complex characteristics of crude oil prices; the GARCH - KAN model with the ablation of BiLSTM, due to the loss of the time-series dependence capturing ability, the RMSE and MAE increase significantly, and

The GARCH - BiLSTM - FC model with KAN ablation has limited nonlinear fitting ability and inferior indexes than GBK - Net, which demonstrates the advantage of KAN in adapting to complex nonlinear relationships; the BiLSTM - KAN model with GARCH ablation has less prediction accuracy than GBK - Net due to missing volatility information, which demonstrates that the GARCH volatility model has less prediction accuracy than GBK - Net, which proves that the GARCH volatility model has less prediction accuracy than GBK - Net. Net, which proves that GARCH volatility can help identify the risk of price fluctuations. In conclusion, crude oil price prediction requires the integration of multi-module capabilities, and the fusion architecture of GBK-Net is more suitable for its characteristics.

Collectively, these empirical findings provide compelling evidence for the superiority of GBK-Net in crude oil price forecasting, demonstrating consistent superiority across both quantitative metrics and qualitative visual analyses. This validates the effectiveness of synergistically integrating volatility modeling, bidirectional temporal learning, and advanced nonlinear refinement to address the multifaceted characteristics of crude oil price dynamics.

5. Discussion

The empirical findings in

Section 4 underscore the superior performance of the

GARCH–BiLSTM–KAN hybrid model (GBK-Net) in forecasting WTI crude oil prices, consistently outperforming both traditional volatility models and alternative hybrid architectures across key evaluation metrics. This section discusses the theoretical and practical implications of these results, situates them within the existing literature, and identifies potential avenues for further investigation.

The outperformance of GBK-Net stems from the synergistic integration of its three components, each targeting a distinct characteristic of crude oil price dynamics. First, the GARCH(1,1) module captures volatility clustering—a defining feature of energy markets [

26]—by embedding historical volatility estimates into the input sequence, thus enriching the feature space and mitigating the homoskedasticity assumption inherent in many deep learning models [

35]. Second, the BiLSTM layer builds on this volatility-informed input by modeling bidirectional temporal dependencies, enabling the capture of asymmetric relationships between past and future price movements—an advantage over unidirectional LSTM or GARCH-LSTM configurations, which struggle with complex sequential asymmetries [

14]. Third, the KAN module refines these bidirectional features through cubic B-spline basis functions, efficiently modeling high-dimensional nonlinearities that standard activation functions may overlook [

37]. This hierarchical refinement—from volatility estimation to bidirectional sequence learning to nonlinear feature tuning—explains the model’s ability to account for

of price variance in the test set (

, see

Table 3).

These results contribute to the ongoing discussion on the relative merits of statistical versus machine learning (ML) approaches in time series forecasting. Statistical models such as GARCH and EGARCH performed poorly, with implausibly high error metrics and strongly negative

values, confirming their inability to capture the nonlinear and multifaceted nature of crude oil price dynamic [

28]. In contrast, standalone ML models (e.g., LSTM, LSTM-KAN) outperformed pure statistical methods but still fell short of GBK-Net, highlighting the necessity of explicitly modeling volatility rather than relying solely on sequential pattern extraction. Likewise, the GARCH-LSTM hybrid, while incorporating volatility estimation, underperformed GBK-Net, underscoring the added value of bidirectional temporal learning in modeling asymmetric price responses to shocks.

Consistent with recent studies advocating for hybrid frameworks that combine statistical rigor with ML flexibility [

20,

36], our findings extend the literature by

quantitatively demonstrating the incremental benefits of bidirectional temporal learning and advanced nonlinear refinement: incorporating BiLSTM yields a

improvement in

over GARCH-LSTM, while integrating KAN further improves

by

over CNN-LSTM. These quantified gains highlight the practical significance of the proposed architecture in high-volatility commodity markets.

Practically, the GARCH–BiLSTM–KAN (GBK-Net) model offers a robust tool for stakeholders in energy markets. For policymakers, its high accuracy enhances the reliability of energy policy simulations, strategic reserve planning, and inflation forecasts. Energy companies can leverage its volatility-aware predictions to improve risk hedging strategies and production planning, particularly during periods of market turbulence (e.g., the 2020 COVID–19 crisis or the 2022 Russia–Ukraine conflict, as observed in

Figure 9). Financial market participants, including traders and portfolio managers, may benefit from more precise pricing of oil derivatives and optimized asset allocation, reducing exposure to forecast errors [

13]. Notably, the model’s performance remains strong across diverse market conditions—from the 2008 financial crisis to the post-2020 recovery, encompassing both bull and bear phases—suggesting generalizability to both stable and volatile periods.

Our handling of extreme events and noisy inputs parallels graph-diagnosability frameworks. In

g-good-neighbor and comparison diagnosis models on Cayley/star-like networks, faults can be located under limited probes provided certain observability constraints hold [

56,

57]. This perspective supports our joint use of volatility cues (GARCH) and bidirectional temporal context (BiLSTM) to make anomalies identifiable rather than merely smoothed; the leaf-sort results that unify connectivity and diagnosability further justify coupling “risk (volatility) signals” with sequence features to maintain identifiability during shock periods [

58].

Despite its strengths, this study has limitations that warrant consideration. First, the analysis focuses exclusively on WTI crude oil; future research should validate the model’s performance on other benchmarks or related commodities such as Brent crude or natural gas to assess its broader applicability. Second, while KAN improves nonlinear modeling, its interpretability remains limited compared to parametric models. Exploring explainable AI techniques to unpack the KAN layer’s contributions—for example, identifying which basis functions drive specific price predictions to facilitate regulatory compliance and strategic transparency—could enhance trust among practitioners. Third, the model’s input features are restricted to historical prices and volatility. Incorporating external variables such as macroeconomic indicators, geopolitical risk indices, or OPEC production data may further improve predictive power, as these factors are known to influence oil prices [

15,

25], with Kilian [

15] emphasizing macroeconomic factors and Hyndman & Athanasopoulos [

25] focusing on forecasting methodologies.

Looking forward, attention-based fusion across heterogeneous exogenous signals may further enhance GBK-Net. Self-attention offers a principled way to model cross-type dependencies in heterogeneous networks (e.g., the Temporal Fusion Transformer for interpretable multi-horizon time-series forecasting [

59]), while multimodal pipelines in high-throughput sensing align and fuse image/sensor/text channels to improve robustness under distributional shift [

60,

61,

62]. In our setting, an attention-driven fusion block could integrate macroeconomic series, inventories, shipping indices, option-implied measures, and news sentiment, enabling context-conditioned regime detection that complements volatility cues from GARCH and temporal features from BiLSTM.

On the efficiency side, recent work in applied vision for agriculture demonstrates that lightweight deep networks can retain accuracy while sharply reducing parameters and energy cost—enabling field deployment and real-time inference [

63,

64]. Inspired by these designs, we plan a compute-aware GBK-Net variant—e.g., narrower BiLSTM widths, low-rank/sparse KAN spline expansions, and post-training quantization—to support on-premises risk systems and edge analytics without sacrificing forecast fidelity.

In summary, the GBK-Net model advances crude oil price forecasting by harmonizing volatility modeling, bidirectional temporal learning, and advanced nonlinear refinement. This is, to our knowledge, the first application of KAN in energy market forecasting. Its empirical success validates the utility of hybrid frameworks in addressing the complexities of energy markets, offering both theoretical insights and practical benefits for decision-making. Future work should focus on expanding the model’s scope, enhancing interpretability, and integrating additional predictive features to solidify its role as a leading forecasting tool in energy economics.

6. Conclusion

This study addresses the long-standing challenge of crude oil price forecasting by proposing a novel hybrid model, GARCH–BiLSTM–KAN, which synergistically integrates the strengths of volatility modeling, bidirectional temporal learning, and advanced nonlinear refinement. By systematically combining GARCH(1,1) for capturing time-varying volatility, BiLSTM for modeling bidirectional temporal dependencies, and KAN for refining complex nonlinear relationships, the proposed framework effectively navigates the multifaceted dynamics of crude oil prices—including volatility clustering, asymmetric temporal interactions, and high-dimensional nonlinearities—that have historically stymied single-model approaches.

Empirical results using 39 years of daily WTI crude oil prices (1986–2025) demonstrate the superiority of GARCH–BiLSTM–KAN over a range of benchmark models. With the lowest RMSE (2.49), MAE (1.52), and highest (0.98), the model outperforms traditional volatility models (GARCH, EGARCH), standalone deep learning architectures (LSTM), and alternative hybrids (GARCH-LSTM, CNN–LSTM–KAN). This consistent outperformance validates the value of its integrated design: GARCH enriches inputs with structured volatility information, BiLSTM captures asymmetric past-future relationships often missed by unidirectional models, and KAN refines residual nonlinearities beyond the capacity of conventional neural network activations.

The findings carry significant implications for both academia and practice. Theoretically, this research advances the field of energy economics by demonstrating how hybrid frameworks can harmonize parametric and nonparametric methods to address the complexities of financial time series—offering a new paradigm for modeling assets with volatile, nonlinear dynamics. Practically, the model provides a robust tool for governments, energy firms, and financial institutions: policymakers can leverage its accuracy for more informed energy policy and strategic reserve management; energy companies can enhance risk hedging and production planning, particularly during turbulent periods like the 2020 COVID–19 crisis or 2022 geopolitical tensions; and investors can improve derivative pricing and portfolio optimization.

Despite these contributions, limitations remain. The focus on WTI crude oil calls for validation across other benchmarks or energy commodities to confirm generalizability. Additionally, the model’s reliance on historical price and volatility data leaves room to incorporate external factors—such as macroeconomic indicators, geopolitical risk indices, or OPEC production quotas—that shape oil markets. Future work could also explore explainable AI techniques to unpack KAN’s nonlinear mappings, enhancing transparency for practical adoption.

In conclusion, the GARCH–BiLSTM–KAN model represents a significant step forward in crude oil price forecasting, blending statistical rigor with cutting-edge machine learning to tackle the inherent complexities of energy markets. Its success underscores the potential of hybrid approaches in financial time series analysis, offering both theoretical insights and actionable tools to navigate the uncertainties of global oil markets.