Submitted:

03 September 2025

Posted:

04 September 2025


Abstract

Keywords:

1. Introduction

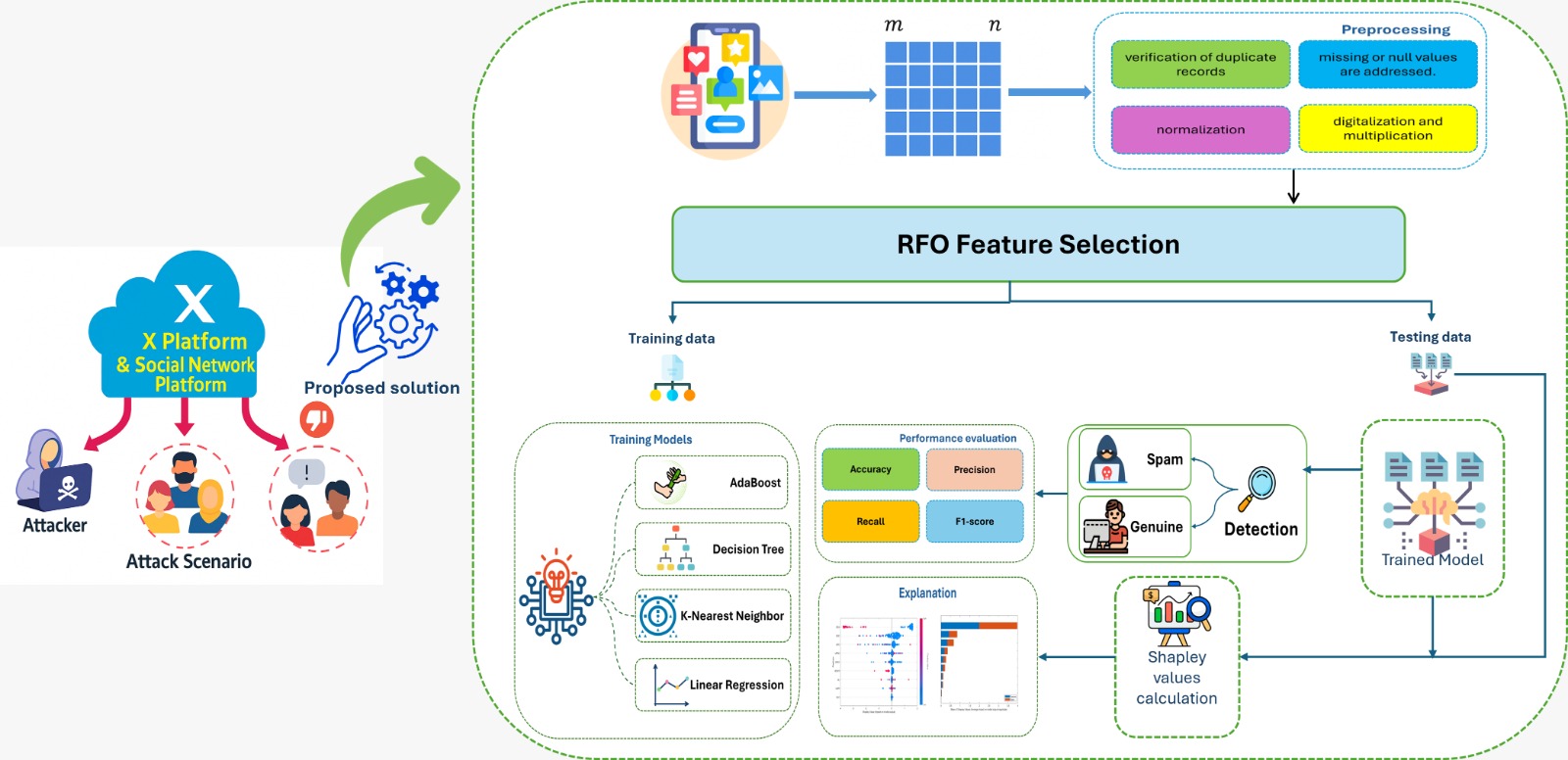

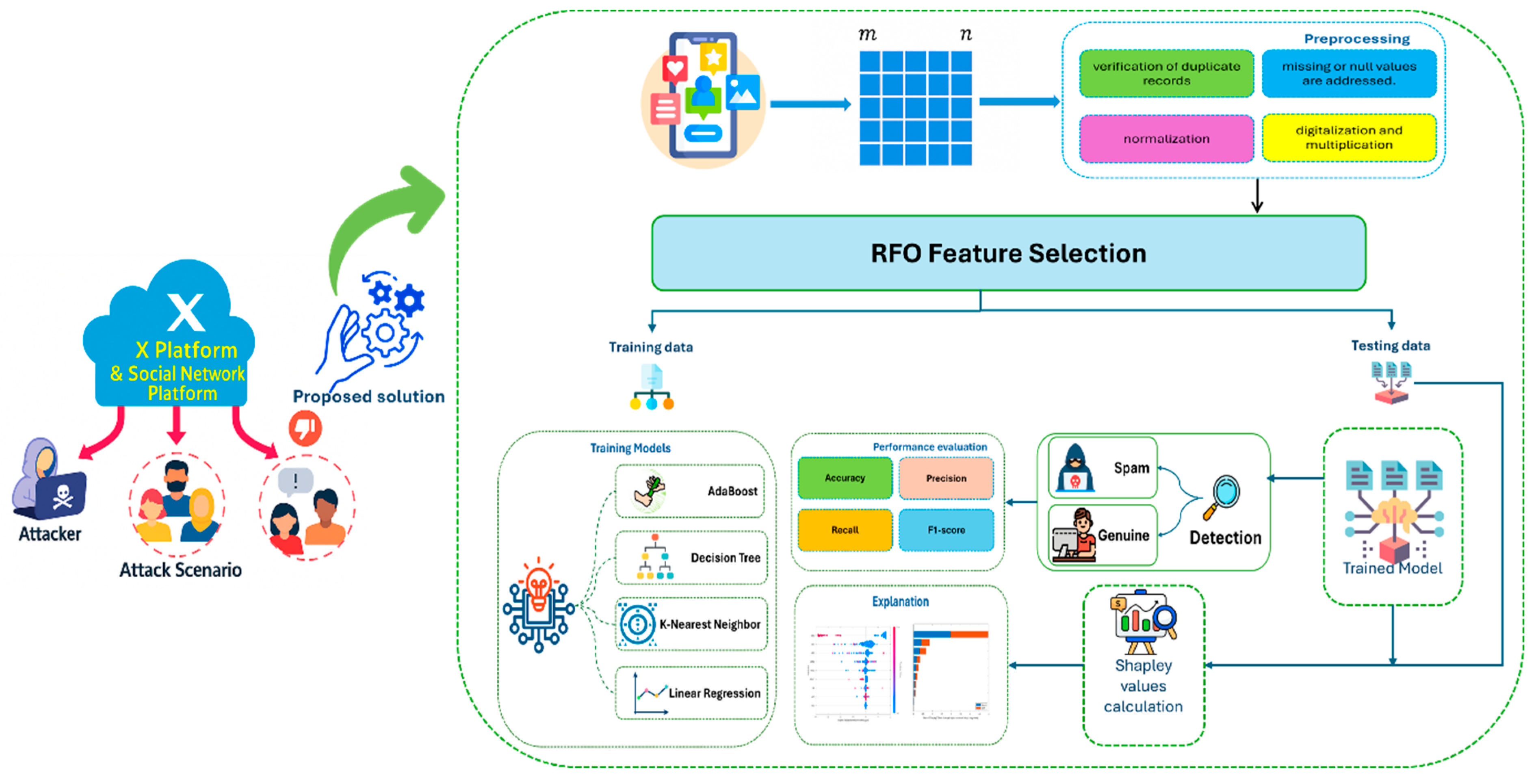

- An innovative XAI-powered ensemble learning model that significantly improves classification accuracy for X spam account detection.

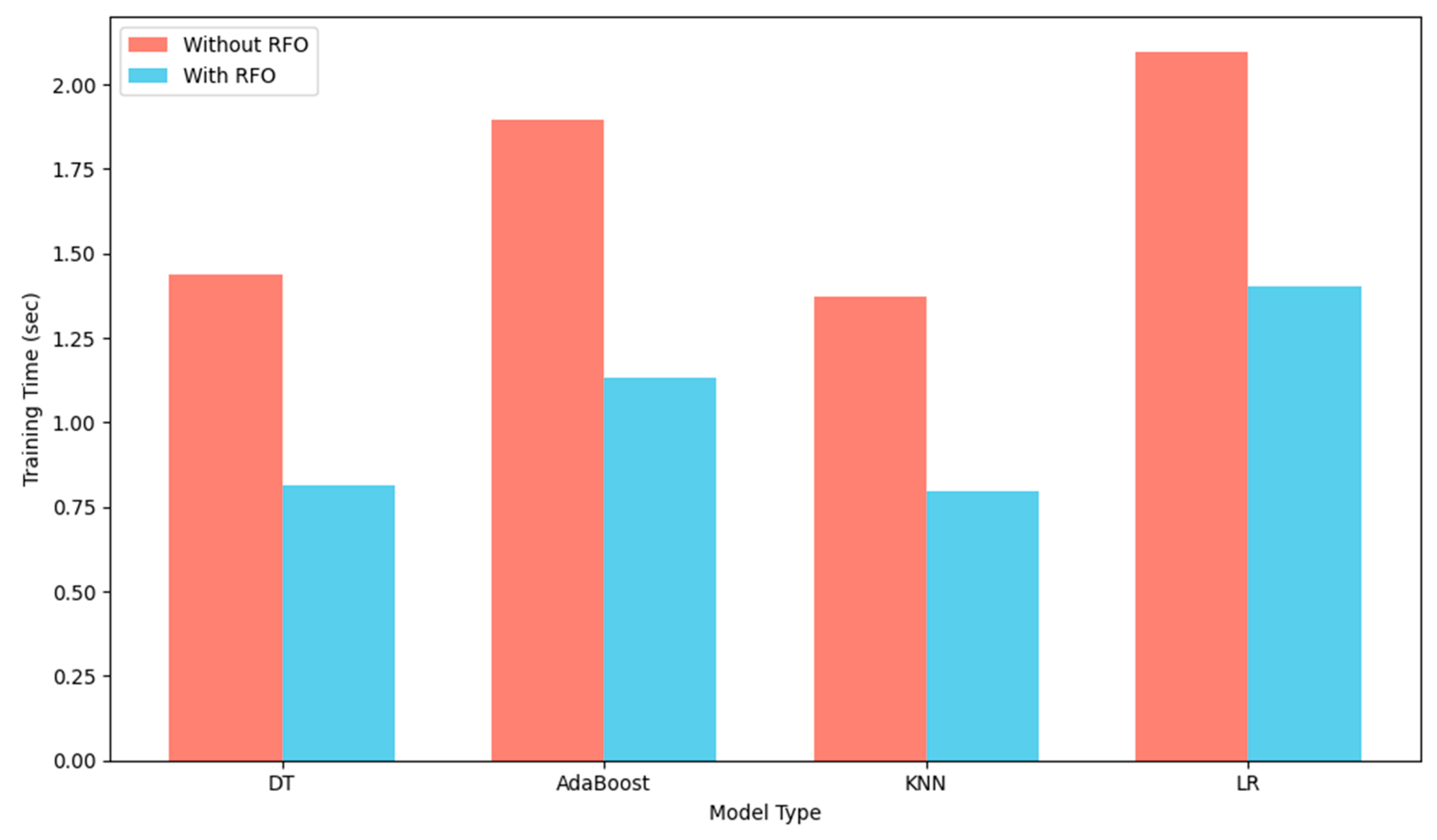

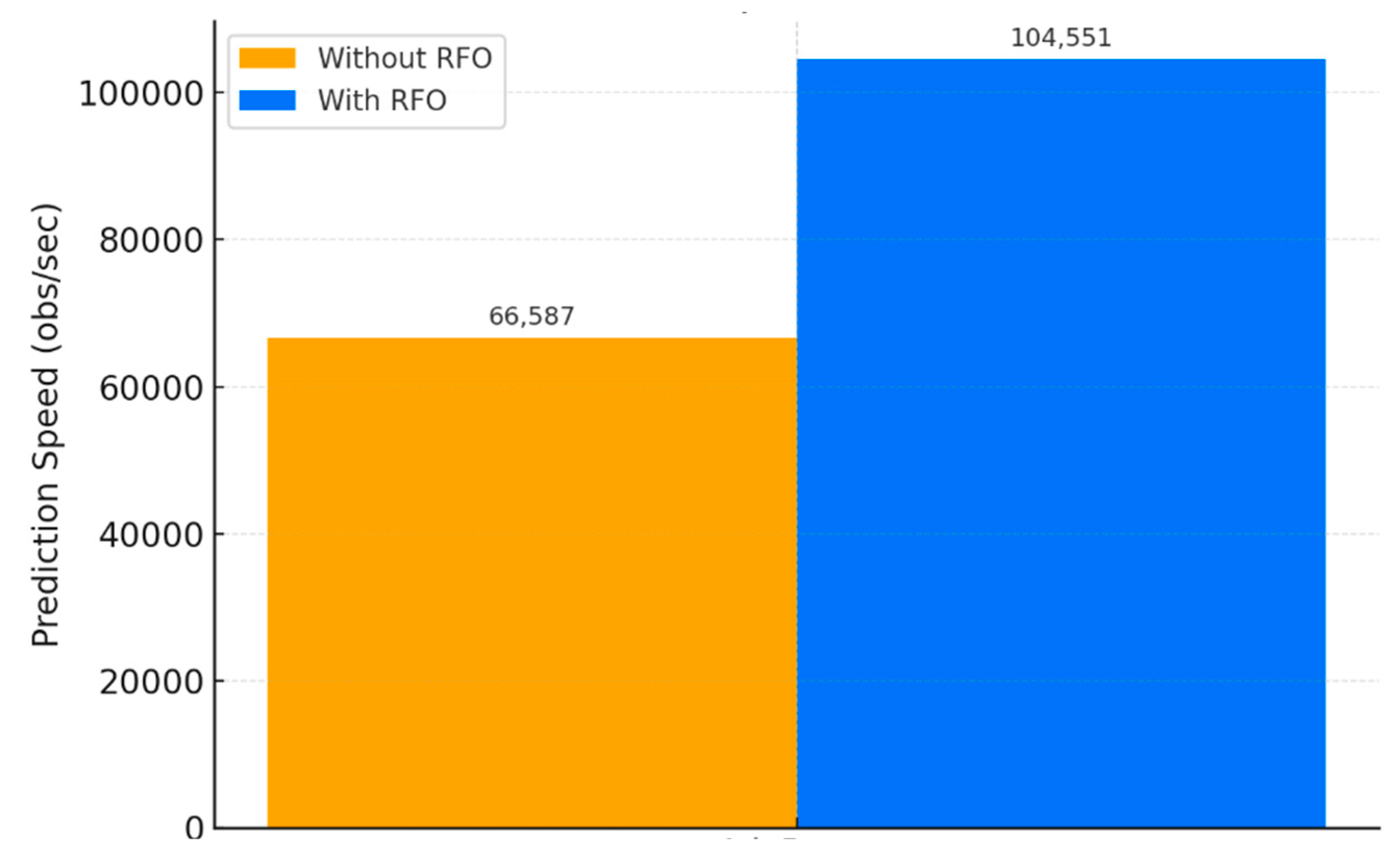

- A swarm-based, nature-inspired meta-heuristic method, the Rüppell's Fox Optimizer (RFO) algorithm, is used for feature selection. It is applied here to cybersecurity for the first time and keeps the system load lightweight.

- The proposed solution is experimentally evaluated on a real-world X dataset. The developed model significantly improves performance as measured by the confusion matrix, precision, recall, accuracy, F1-score, and the area under the curve (AUC).

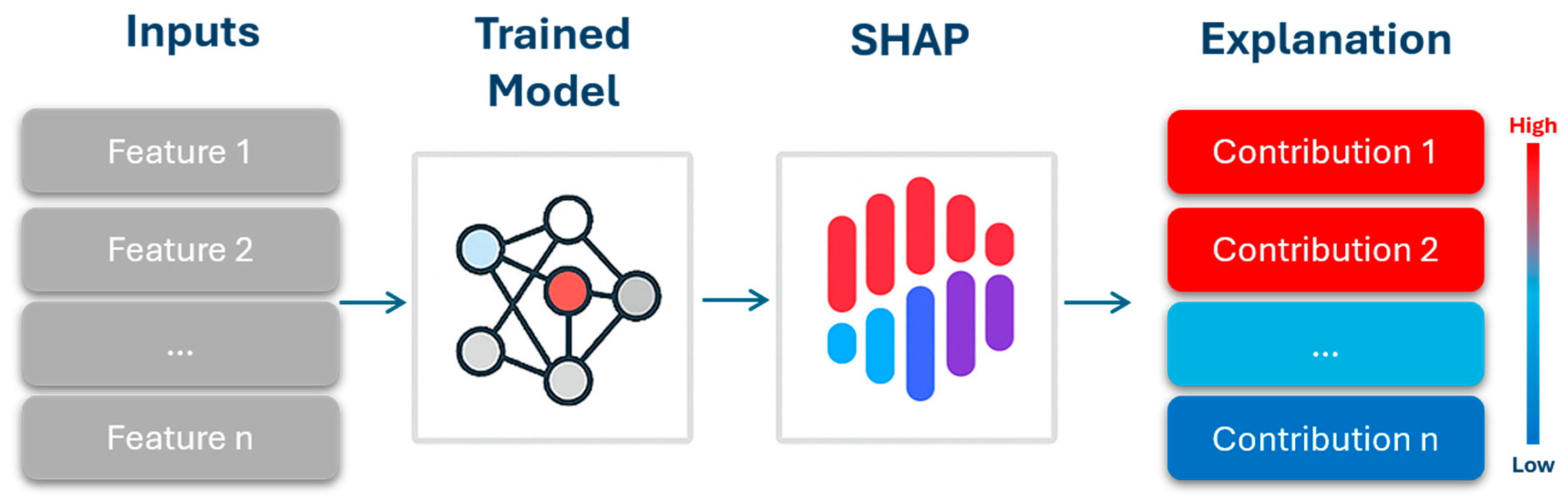

- The predictions made by the ML-driven spam detection model are interpreted by computing Shapley values with the SHAP methodology.

2. Background

2.1. Ensemble Model

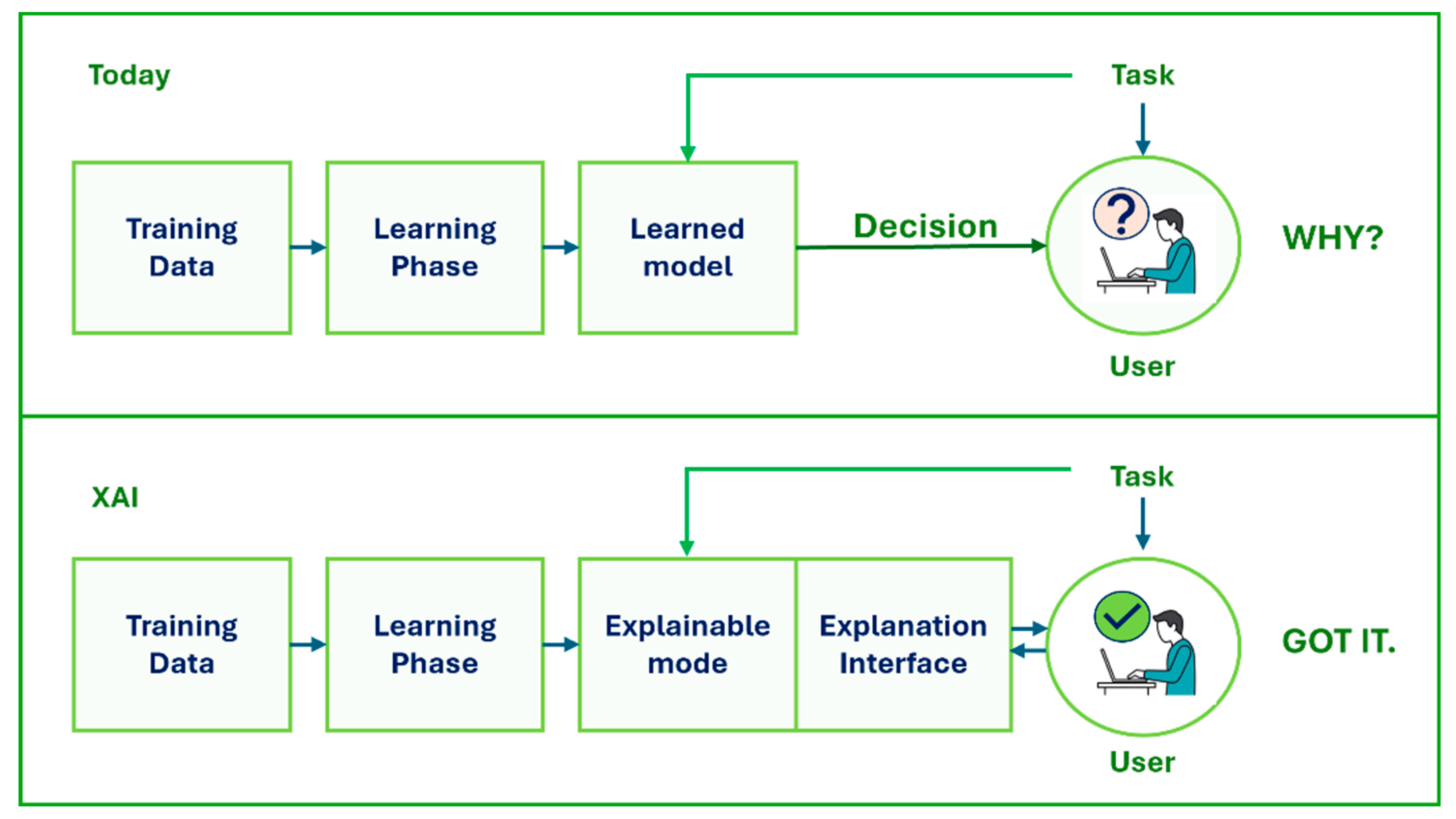

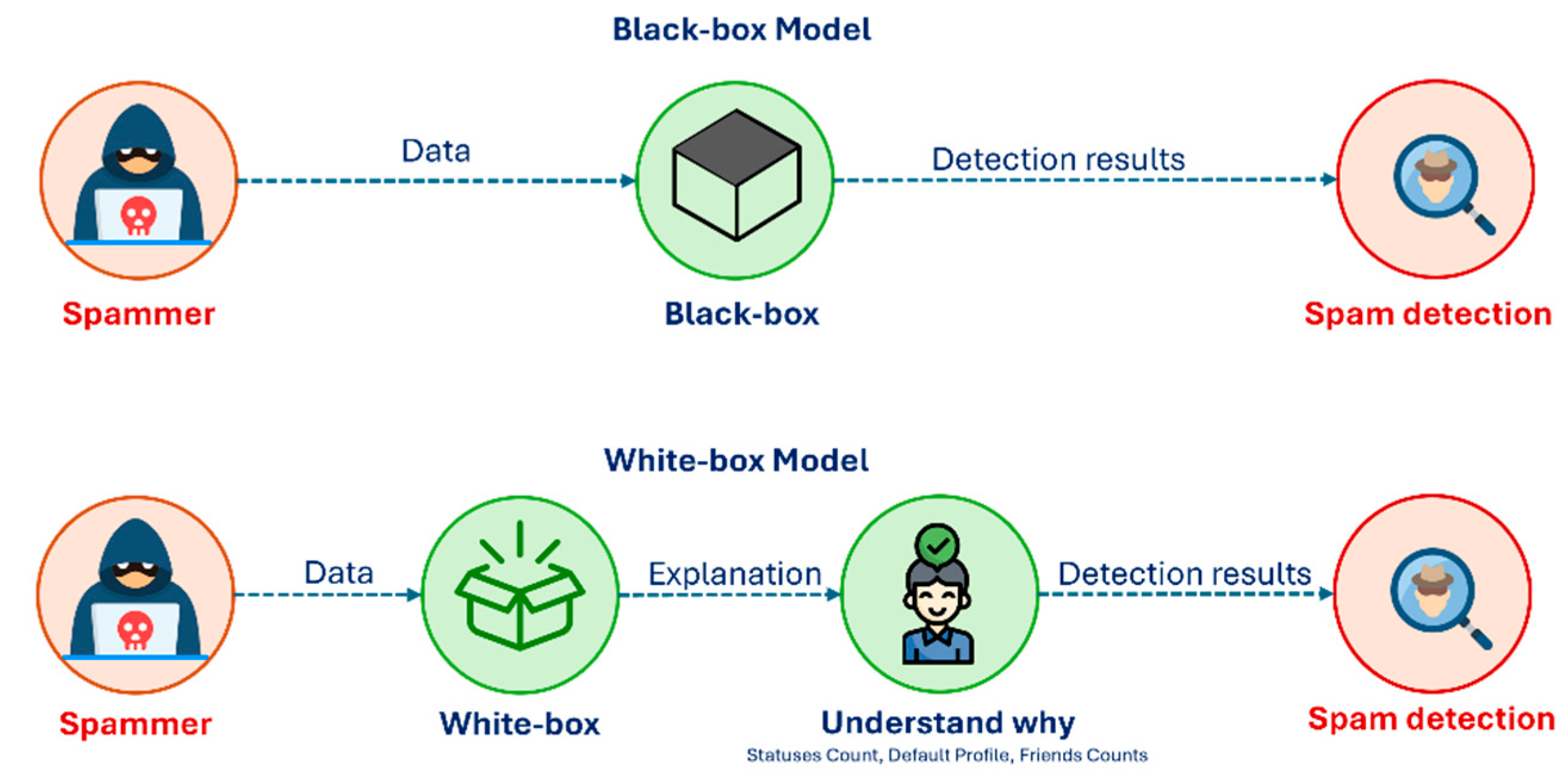

2.2. Explainable Artificial Intelligence

2.3. Machine Learning Algorithms

2.3.1. Decision Tree

2.3.2. K-Nearest Neighbors (KNN)

2.3.3. Logistic Regression (LR)

3. Literature Review

4. Methodology

4.1. Dataset Description

- User profile features: Several attributes measure an account's activity and reach. User-Statuses-Count (USC) is the number of Tweets (including retweets) the user has posted, which indicates how productive the account is on the platform. User-Followers-Count (UFLC) is the number of followers the account currently has, whereas User-Friends-Count (UFRC) is the number of users the account is following, also known as its "followings". User-Favourites-Count (UFC) is the total number of Tweets the user has liked (favorited) over the account's lifetime. User-Listed-Count (ULC) is the number of public lists of which the user is a member, and it measures the account's prominence among other users. Together, these numeric indicators capture an account's engagement and footprint on the platform, and including them enables the analysis of such patterns within the dataset.

- Tweet and profile indicators: The dataset also includes a number of binary (yes/no) attributes that describe tweet characteristics and profile configuration. Sensitive-Content-Alert (SCA) is a Boolean value that denotes whether the Tweet content, or the textual properties of the user, contains sensitive material. This may indicate spam, as spammer tweets often contain this type of material. Source-in-X (SITW) derives from the utility used to publish the Tweet, which is provided as an HTML-formatted string; here it denotes whether the tweet was posted through an official X client (YES) or a third-party source (NO). For instance, Tweets originating from the X website have a source value of web, whereas spammers may use specific third-party applications. User-Location (UL) is a categorical field that indicates whether the user has provided a location in the account profile (YES) or left it empty (NO). The actual location text is not used because it is free-form and difficult to parse, so this binary feature only records the existence of a profile location, which legitimate users commonly have. User-Geo-Enabled (UGE) is TRUE if the user has activated geotagging, meaning the account permits geographical coordinates to be attached to tweets. User-Default-Profile-Image (UDPI) is a Boolean variable that is TRUE when the user has not uploaded a profile image and still uses the default X profile picture. Notably, suspicious accounts typically use the default profile image and provide minimal personal information [42], so this trait may indicate a potential threat. Finally, ReTweet (RTWT) is a Boolean value that indicates whether the authenticating user has retweeted this Tweet.

- Class attribute: This attribute indicates whether an account is categorized as a spam account (TRUE) or a legitimate account (FALSE).
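The feature groups above can be turned into a numeric vector before training. The following is a minimal sketch, assuming each account arrives as a dictionary keyed by the abbreviations used in this section; the token sets, the field ordering, and the helper names `to_flag` and `encode_account` are illustrative assumptions, not part of the original pipeline.

```python
# Assumed spellings of the yes/no and TRUE/FALSE values (hypothetical).
TRUE_TOKENS = {"true", "t", "yes", "y", "1"}
FALSE_TOKENS = {"false", "f", "no", "n", "0"}

# Abbreviations taken from the dataset description; the ordering is an assumption.
COUNT_FIELDS = ("USC", "UFC", "ULC", "UFRC", "UFLC")
FLAG_FIELDS = ("SCA", "SITW", "UL", "UGE", "UDPI", "RTWT")


def to_flag(value):
    """Map a Boolean-like value (TRUE/FALSE, Yes/No, T/F, Y/N) to 1 or 0."""
    token = str(value).strip().lower()
    if token in TRUE_TOKENS:
        return 1
    if token in FALSE_TOKENS:
        return 0
    raise ValueError(f"Unrecognized flag value: {value!r}")


def encode_account(record):
    """Return (features, label) for one account record.

    Count attributes are cast to integers, binary attributes to 0/1,
    and the CLASS attribute becomes the label (1 = spam, 0 = legitimate).
    """
    counts = [int(record[name]) for name in COUNT_FIELDS]
    flags = [to_flag(record[name]) for name in FLAG_FIELDS]
    label = to_flag(record["CLASS"])
    return counts + flags, label


if __name__ == "__main__":
    example = {
        "USC": "120", "UFC": 4, "ULC": 0, "UFRC": 35, "UFLC": 12,
        "SCA": "FALSE", "SITW": "Yes", "UL": "N", "UGE": True,
        "UDPI": "T", "RTWT": "F", "CLASS": "TRUE",
    }
    print(encode_account(example))
```

Raising an error on unknown tokens, rather than silently defaulting to 0, is a design choice that makes unexpected encodings in the raw data visible early.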

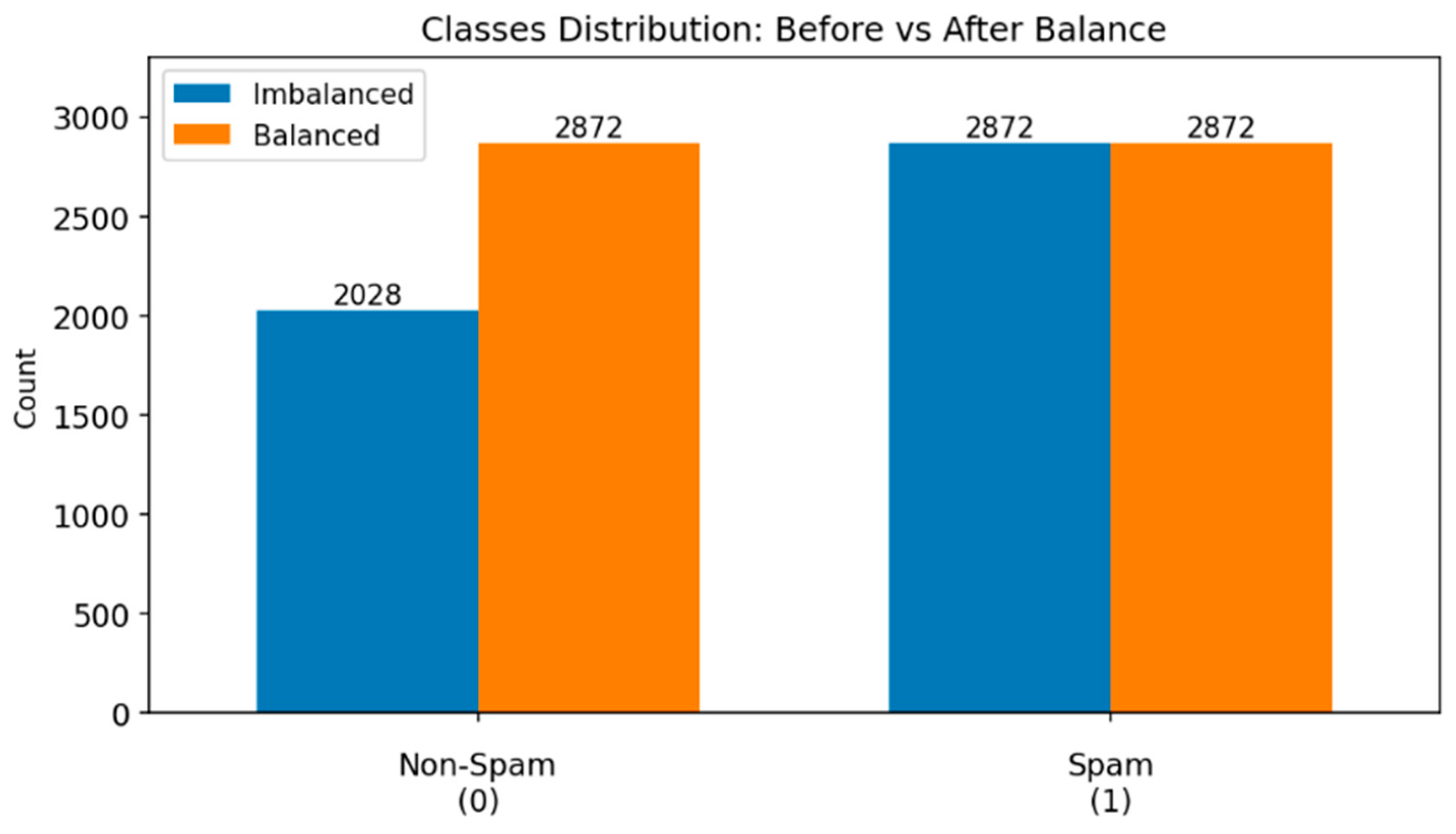

4.2. Data Preprocessing and Balancing

4.4. Feature Selection Approach

4.4.1. Rüppell Fox Optimization

4.4.2. Daylight and Night Behavioral Update Strategies

- Daylight mode (p≥0.5)


- Night mode (p<0.5)
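The switch between the two modes can be illustrated with a toy binary feature-selection loop. This is only a sketch of the control flow: a random value p is drawn for each agent, and p ≥ 0.5 selects one update branch while p < 0.5 selects the other. The two update rules, the greedy acceptance step, the function `two_mode_feature_search`, and the toy fitness below are all assumptions made for illustration; they do not reproduce the published RFO update equations.

```python
import random


def two_mode_feature_search(n_features, fitness, n_agents=8, n_iters=30, seed=0):
    """Toy binary feature-selection search with a p-driven mode switch.

    Hypothetical illustration only: the update rules are simple
    stand-ins, not the Rüppell's Fox Optimizer equations.
    """
    rng = random.Random(seed)
    # Each agent is a bit mask: 1 keeps a feature, 0 drops it.
    agents = [[rng.randint(0, 1) for _ in range(n_features)]
              for _ in range(n_agents)]
    best = max(agents, key=fitness)[:]

    for _ in range(n_iters):
        for i, agent in enumerate(agents):
            p = rng.random()
            if p >= 0.5:
                # "Daylight" branch (assumed rule): copy each bit from the
                # best mask found so far with probability 0.5.
                candidate = [b if rng.random() < 0.5 else a
                             for a, b in zip(agent, best)]
            else:
                # "Night" branch (assumed rule): flip one random bit.
                candidate = agent[:]
                j = rng.randrange(n_features)
                candidate[j] = 1 - candidate[j]

            # Greedy acceptance: keep the candidate if it is not worse.
            if fitness(candidate) >= fitness(agent):
                agents[i] = candidate
            if fitness(candidate) > fitness(best):
                best = candidate[:]
    return best


# Hypothetical target subset used only to give the toy search something to find.
TARGET_MASK = [1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0]


def toy_fitness(mask):
    """Count how many positions agree with the hypothetical target mask."""
    return sum(1 for m, t in zip(mask, TARGET_MASK) if m == t)


if __name__ == "__main__":
    print(two_mode_feature_search(len(TARGET_MASK), toy_fitness))
```

In a real wrapper-style feature selector, `toy_fitness` would be replaced by a score such as the validation accuracy of a classifier trained on the selected columns.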

4.5. Model Training

4.6. Model Evaluation

4.7. Implementation Environment

5. Results and Discussion

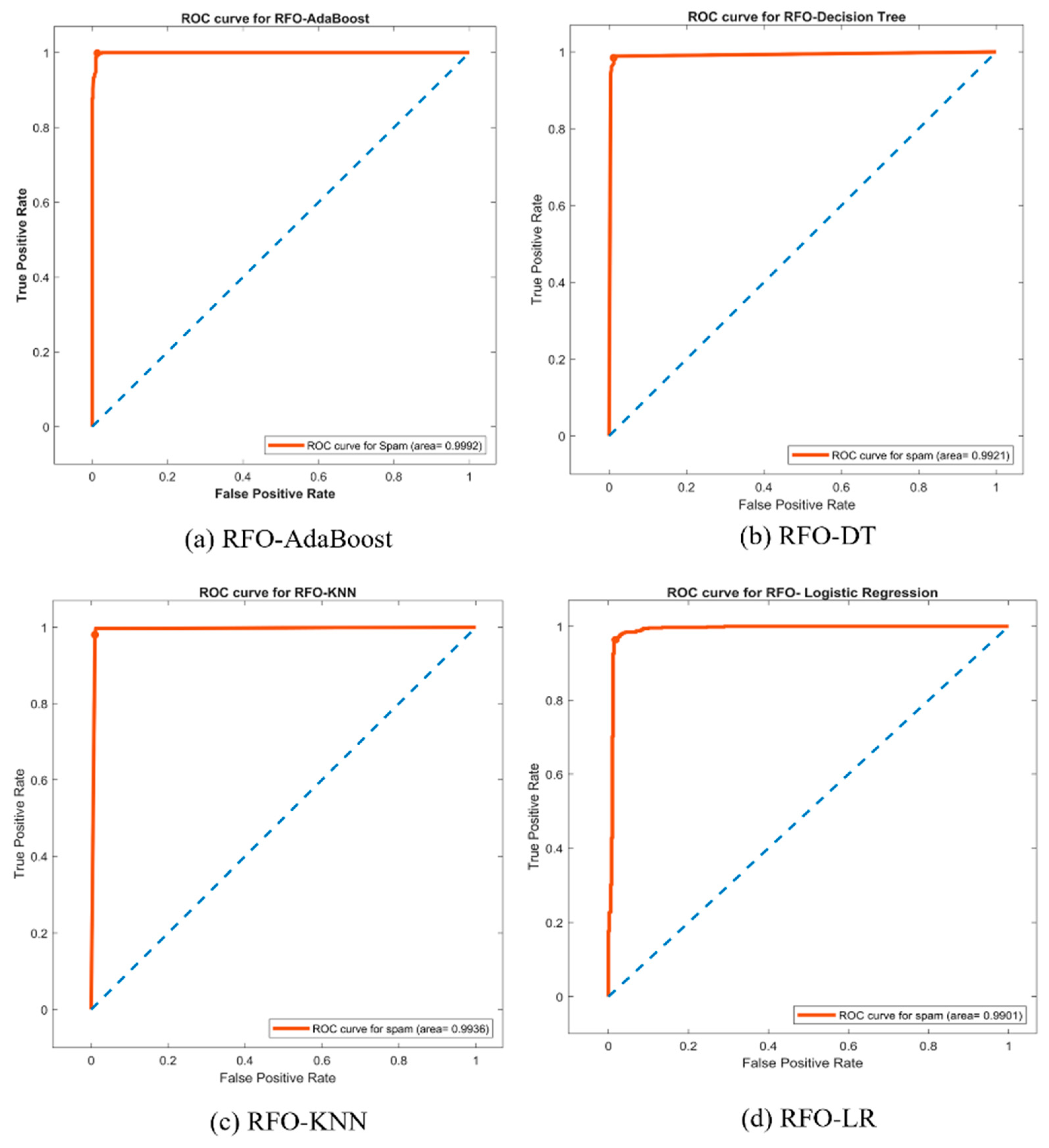

5.1. Evaluation of Performance with the ROC Curve

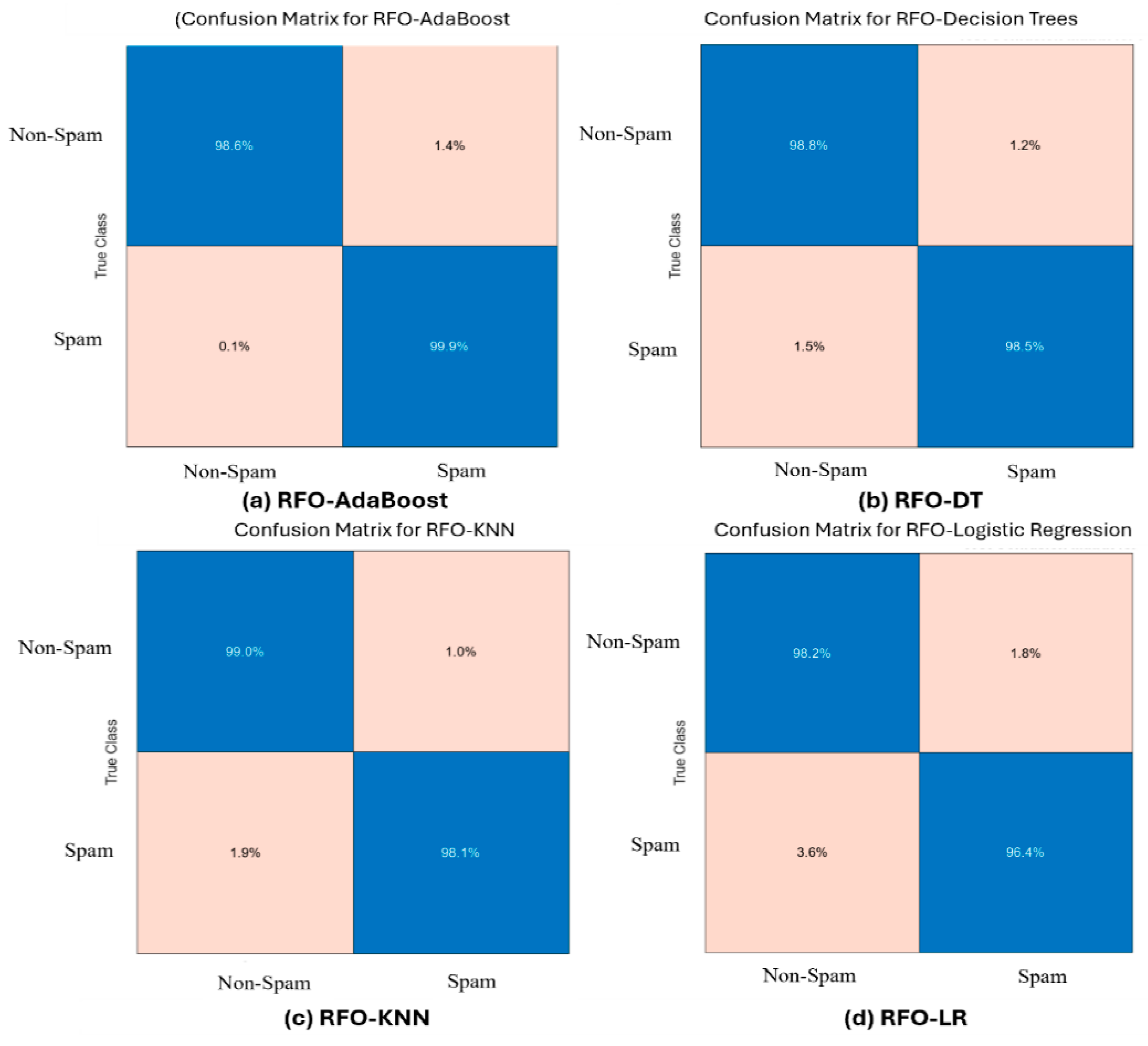

5.2. Performance Evaluation Using Confusion Matrix

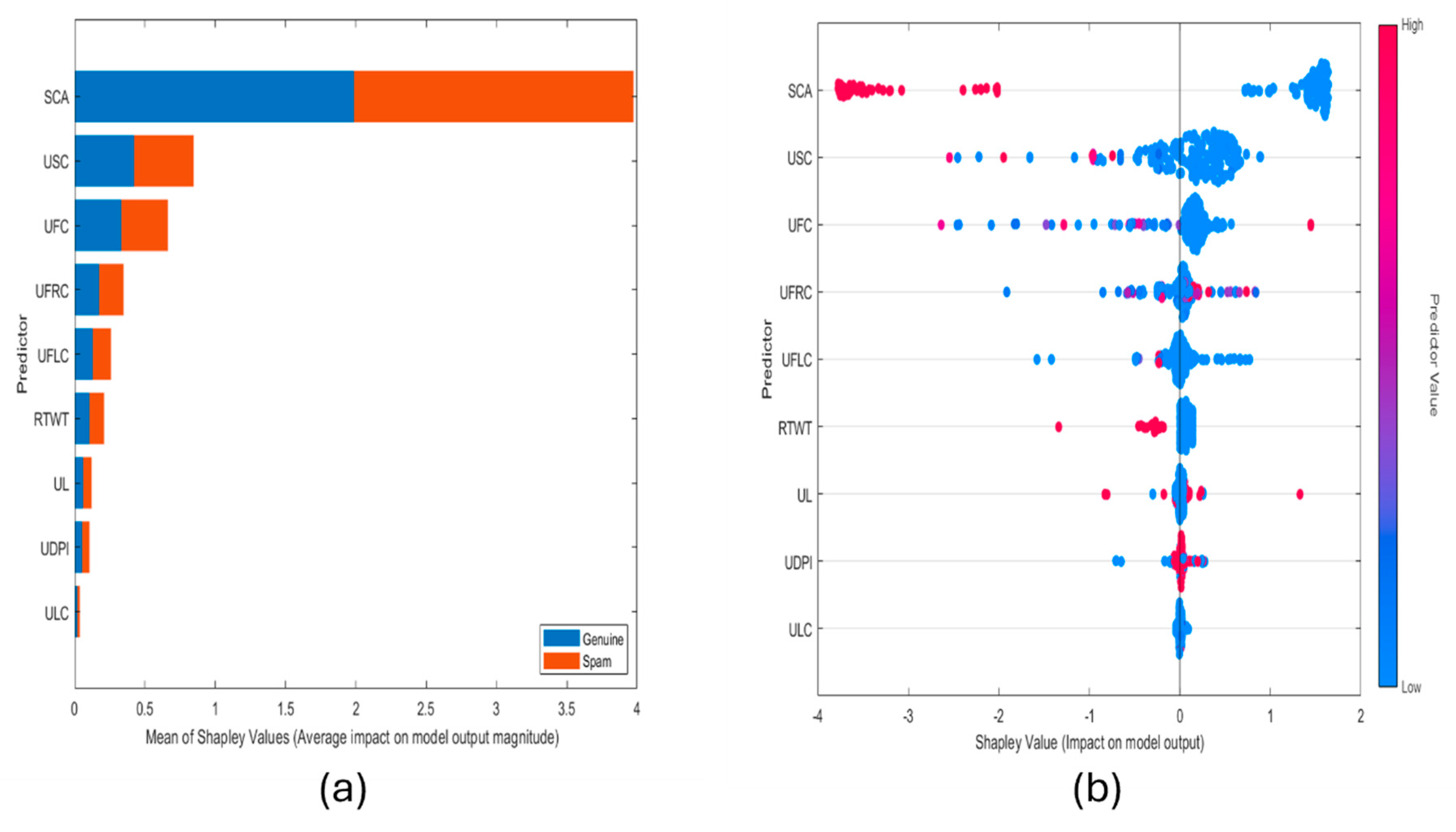

5.3. Explain Global Model Predictions Using Shapley Importance Plot

5.4. Explain Global Model Predictions Using Shapley Summary Plot

6. Conclusions

References

- G. Jethava and U. P. Rao, "Exploring security and trust mechanisms in online social networks: An extensive review," Computers & Security, p. 103790, 2024.

- D. Nevado-Catalán, S. Pastrana, N. Vallina-Rodriguez, and J. Tapiador, "An analysis of fake social media engagement services," Computers & Security, vol. 124, p. 103013, 2023.

- E. de Keulenaar, J. C. Magalhães, and B. Ganesh, "Modulating Moderation: A Genealogy of Objectionable Content on Twitter," MediArXiv. September, vol. 1, 2022.

- R. Murtfeldt, N. Alterman, I. Kahveci, and J. D. West, "RIP Twitter API: A eulogy to its vast research contributions," arXiv preprint arXiv:2404.07340, 2024.

- J. Song, S. Lee, and J. Kim, "Spam filtering in twitter using sender-receiver relationship," in Recent Advances in Intrusion Detection: 14th International Symposium, RAID 2011, Menlo Park, CA, USA, September 20-21, 2011. Proceedings 14, 2011: Springer, pp. 301-317.

- S. J. Delany, M. Buckley, and D. Greene, "SMS spam filtering: Methods and data," Expert Systems with Applications, vol. 39, no. 10, pp. 9899-9908, 2012.

- Z. Iman, S. Sanner, M. R. Bouadjenek, and L. Xie, "A longitudinal study of topic classification on twitter," in Proceedings of the International AAAI Conference on Web and Social Media, 2017, vol. 11, no. 1, pp. 552-555.

- H. Rashid, H. B. Liaqat, M. U. Sana, T. Kiren, H. Karamti, and I. Ashraf, "Framework for detecting phishing crimes on Twitter using selective features and machine learning," Computers and Electrical Engineering, vol. 124, p. 110363, 2025.

- S. B. S. Ahmad, M. Rafie, and S. M. Ghorabie, "Spam detection on Twitter using a support vector machine and users’ features by identifying their interactions," Multimedia Tools and Applications, vol. 80, no. 8, pp. 11583-11605, 2021.

- S. Bazzaz Abkenar, E. Mahdipour, S. M. Jameii, and M. Haghi Kashani, "A hybrid classification method for Twitter spam detection based on differential evolution and random forest," Concurrency and Computation: Practice and Experience, vol. 33, no. 21, p. e6381, 2021.

- Galli, V. La Gatta, V. Moscato, M. Postiglione, and G. Sperlì, "Explainability in AI-based behavioral malware detection systems," Computers & Security, vol. 141, p. 103842, 2024.

- T.-T.-H. Le, H. Kim, H. Kang, and H. Kim, "Classification and explanation for intrusion detection system based on ensemble trees and SHAP method," Sensors, vol. 22, no. 3, p. 1154, 2022.

- M. Braik and H. Al-Hiary, "Rüppell’s fox optimizer: A novel meta-heuristic approach for solving global optimization problems," Cluster Computing, vol. 28, no. 5, pp. 1-77, 2025.

- K. Kaczmarek-Majer et al., "PLENARY: Explaining black-box models in natural language through fuzzy linguistic summaries," Information Sciences, vol. 614, pp. 374-399, 2022.

- A. Khan, O. Chaudhari, and R. Chandra, "A review of ensemble learning and data augmentation models for class imbalanced problems: Combination, implementation and evaluation," Expert Systems with Applications, vol. 244, p. 122778, 2024.

- Mohammed and R. Kora, "A comprehensive review on ensemble deep learning: Opportunities and challenges," Journal of King Saud University-Computer and Information Sciences, vol. 35, no. 2, pp. 757-774, 2023.

- H.-J. Xing, W.-T. Liu, and X.-Z. Wang, "Bounded exponential loss function based AdaBoost ensemble of OCSVMs," Pattern Recognition, vol. 148, p. 110191, 2024.

- E. M. Ferrouhi and I. Bouabdallaoui, "A comparative study of ensemble learning algorithms for high-frequency trading," Scientific African, vol. 24, p. e02161, 2024.

- G. Paic and L. Serkin, "The impact of artificial intelligence: from cognitive costs to global inequality," The European Physical Journal Special Topics, 2025.

- Abusitta, M. Q. Li, and B. C. Fung, "Survey on Explainable AI: Techniques, challenges and open issues," Expert Systems with Applications, vol. 255, p. 124710, 2024.

- E. Borgonovo, E. Plischke, and G. Rabitti, "The many Shapley values for explainable artificial intelligence: A sensitivity analysis perspective," European Journal of Operational Research, vol. 318, no. 3, pp. 911-926, 2024.

- V. Selvakumar, N. K. Reddy, R. S. V. Tulasi, and K. R. Kumar, "Data-Driven Insights into Social Media Behavior Using Predictive Modeling," Procedia Computer Science, vol. 252, pp. 480-489, 2025.

- D. Mienye and N. Jere, "A survey of decision trees: Concepts, algorithms, and applications," IEEE Access, 2024.

- S. Mohammed, N. Al-Aaraji, and A. Al-Saleh, "Knowledge Rules-Based Decision Tree Classifier Model for Effective Fake Accounts Detection in Social Networks," International Journal of Safety & Security Engineering, vol. 14, no. 4, 2024.

- R. K. Halder, M. N. Uddin, M. A. Uddin, S. Aryal, and A. Khraisat, "Enhancing K-nearest neighbor algorithm: a comprehensive review and performance analysis of modifications," Journal of Big Data, vol. 11, no. 1, p. 113, 2024.

- M. Teke and T. Etem, "Cascading GLCM and T-SNE for detecting tumor on kidney CT images with lightweight machine learning design," The European Physical Journal Special Topics, pp. 1-16, 2025.

- Q. Ouyang, J. Tian, and J. Wei, "E-mail Spam Classification using KNN and Naive Bayes," Highlights in Science, Engineering and Technology, vol. 38, pp. 57-63, 2023.

- E. Bisong, "Logistic regression," in Building Machine Learning and Deep Learning Models on Google Cloud Platform: A Comprehensive Guide for Beginners, pp. 243-250, 2019.

- S. K. Sarker et al., "Email Spam Detection Using Logistic Regression and Explainable AI," in 2025 International Conference on Electrical, Computer and Communication Engineering (ECCE), 2025: IEEE, pp. 1-6.

- K. Bharti and S. Pandey, "Fake account detection in twitter using logistic regression with particle swarm optimization," Soft Computing, vol. 25, no. 16, pp. 11333-11345, 2021.

- S. B. Abkenar, M. H. Kashani, M. Akbari, and E. Mahdipour, "Learning textual features for Twitter spam detection: A systematic literature review," Expert Systems with Applications, vol. 228, p. 120366, 2023.

- Qazi, N. Hasan, R. Mao, M. E. M. Abo, S. K. Dey, and G. Hardaker, "Machine Learning-Based Opinion Spam Detection: A Systematic Literature Review," IEEE Access, 2024.

- N. H. Imam and V. G. Vassilakis, "A survey of attacks against twitter spam detectors in an adversarial environment," Robotics, vol. 8, no. 3, p. 50, 2019.

- E. Alnagi, A. Ahmad, Q. A. Al-Haija, and A. Aref, "Unmasking Fake Social Network Accounts with Explainable Intelligence," International Journal of Advanced Computer Science & Applications, vol. 15, no. 3, 2024.

- İ. Atacak, O. Çıtlak, and İ. A. Doğru, "Application of interval type-2 fuzzy logic and type-1 fuzzy logic-based approaches to social networks for spam detection with combined feature capabilities," PeerJ Computer Science, vol. 9, p. e1316, 2023.

- S. Ouni, F. Fkih, and M. N. Omri, "BERT-and CNN-based TOBEAT approach for unwelcome tweets detection," Social Network Analysis and Mining, vol. 12, no. 1, p. 144, 2022.

- F. E. Ayo, O. Folorunso, F. T. Ibharalu, I. A. Osinuga, and A. Abayomi-Alli, "A probabilistic clustering model for hate speech classification in twitter," Expert Systems with Applications, vol. 173, p. 114762, 2021.

- S. Liu, Y. Wang, C. Chen, and Y. Xiang, "An ensemble learning approach for addressing the class imbalance problem in Twitter spam detection," in Information Security and Privacy: 21st Australasian Conference, ACISP 2016, Melbourne, VIC, Australia, July 4-6, 2016, Proceedings, Part I 21, 2016: Springer, pp. 215-228.

- S. Gupta, A. Khattar, A. Gogia, P. Kumaraguru, and T. Chakraborty, "Collective classification of spam campaigners on Twitter: A hierarchical meta-path based approach," in Proceedings of the 2018 world wide web conference, 2018, pp. 529-538.

- P. Manasa et al., "Tweet spam detection using machine learning and swarm optimization techniques," IEEE Transactions on Computational Social Systems, 2022.

- R. Krithiga and E. Ilavarasan, "Hyperparameter tuning of AdaBoost algorithm for social spammer identification," International Journal of Pervasive Computing and Communications, vol. 17, no. 5, pp. 462-482, 2021.

- Ghourabi and M. Alohaly, "Enhancing spam message classification and detection using transformer-based embedding and ensemble learning," Sensors, vol. 23, no. 8, p. 3861, 2023.

- R. Kohavi, "A study of cross-validation and bootstrap for accuracy estimation and model selection," in IJCAI, 1995, vol. 14, no. 2: Montreal, Canada, pp. 1137-1145.

- R. E. Schapire, "Explaining AdaBoost," in Empirical Inference: Festschrift in Honor of Vladimir N. Vapnik: Springer, 2013, pp. 37-52.

- M. Djuric, L. Jovanovic, M. Zivkovic, N. Bacanin, M. Antonijevic, and M. Sarac, "The adaboost approach tuned by sns metaheuristics for fraud detection," in Proceedings of the International Conference on Paradigms of Computing, Communication and Data Sciences: PCCDS 2022, 2023: Springer, pp. 115-128.

- F. Jáñez-Martino, R. Alaiz-Rodríguez, V. González-Castro, E. Fidalgo, and E. Alegre, "A review of spam email detection: analysis of spammer strategies and the dataset shift problem," Artificial Intelligence Review, vol. 56, no. 2, pp. 1145-1173, 2023.

- B. Meriem, L. Hlaoua, and L. B. Romdhane, "A fuzzy approach for sarcasm detection in social networks," Procedia Computer Science, vol. 192, pp. 602-611, 2021.

- S. Liu, Y. Wang, J. Zhang, C. Chen, and Y. Xiang, "Addressing the class imbalance problem in twitter spam detection using ensemble learning," Computers & Security, vol. 69, pp. 35-49, 2017.

- K. Ameen and B. Kaya, "Spam detection in online social networks by deep learning," in 2018 international conference on artificial intelligence and data processing (IDAP), 2018: IEEE, pp. 1-4.

- S. Madisetty and M. S. Desarkar, "A neural network-based ensemble approach for spam detection in Twitter," IEEE Transactions on Computational Social Systems, vol. 5, no. 4, pp. 973-984, 2018.

- M. Ashour, C. Salama, and M. W. El-Kharashi, "Detecting spam tweets using character N-gram features," in 2018 13th International conference on computer engineering and systems (ICCES), 2018: IEEE, pp. 190-195.

| No | Input model features | Type | Value range |
|---|---|---|---|
| 1 | User Statuses Count (USC) | Integer | 0-99, 100-199, …, 1000000-1999999 |
| 2 | Sensitive Content Alert (SCA) | Boolean | TRUE (T) / FALSE (F) |
| 3 | User Favorites Count (UFC) | Integer | 0-9, 10-19, 20-29, …, 100000-1999999 |
| 4 | User Listed Count (ULC) | Integer | 0-9, 10-19, 20-29, …, 900-999 |
| 5 | Source in Twitter (SITW) | String | Yes (Y) / No (N) |
| 6 | User Friends Count (UFRC) | Integer | 0-9, 10-19, 20-29, …, 1000-99999 |
| 7 | User Followers Count (UFLC) | Integer | 0-9, 10-19, 20-29, …, 100000-1999999 |
| 8 | User Location (UL) | String | Yes (Y) / No (N) |
| 9 | User Geo Enabled (UGE) | Boolean | TRUE (T) / FALSE (F) |
| 10 | User Default Profile Image (UDPI) | Boolean | TRUE (T) / FALSE (F) |
| 11 | Re-Tweet (RTWT) | Boolean | TRUE (T) / FALSE (F) |
| 12 | Account-Suspender (CLASS) | Boolean | TRUE (T) / FALSE (F) |

| Optimal features | Selected features |
|---|---|
| Nine optimal features for detection models | SCA, UL, UDPI, RTWT, USC, UFC, ULC, UFRC, UFLC |

| Metric | Formula | Description |
|---|---|---|
| Accuracy | $\frac{TP + TN}{TP + TN + FP + FN}$ | The proportion of correctly identified cases relative to the total number of cases assessed. |
| F1-score | $\frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$ | The harmonic mean of recall and precision. |
| Recall | $\frac{TP}{TP + FN}$ | The proportion of actual positive (spam) cases that the trained model correctly identifies. |
| Precision | $\frac{TP}{TP + FP}$ | The proportion of correctly identified positive cases among all cases classified as positive. |
| AUC | | Evaluates the trained model based on the ROC curve, which illustrates the relationship between the false positive rate and the true positive rate across various thresholds. |
| Macro-average | | Unweighted average of the per-class metric values. |
| Micro-average | | Average of the designated metric computed from the combined predicted and actual values across all classes. |
| Weighted average | | Average of the per-class metric values, weighted by the frequency of occurrence (support) of each class. |

- True-Positive (TP): the number of accounts the model correctly predicted as spam.

- True-Negative (TN): the number of accounts the model correctly predicted as non-spam.

- False-Positive (FP): the number of non-spam accounts the model predicted as spam.

- False-Negative (FN): the number of spam accounts the model predicted as non-spam.

- For the averaged metrics, each per-class term is the metric value for a class, and its weight is the number of true instances (support) for that class.
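The relationship between the four confusion-matrix counts and the reported metrics can be sketched in a few lines. The snippet below is a minimal illustration using the standard definitions of precision, recall, F1-score, accuracy, and the macro and weighted averages; the function names and the example counts are hypothetical and are not taken from the experiments.

```python
def per_class_scores(tp, fp, fn):
    """Precision, recall and F1 for one class, given its TP, FP and FN counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


def binary_report(tp, tn, fp, fn):
    """Per-class and averaged metrics for a spam / non-spam classifier.

    Spam is treated as the positive class. For the non-spam class the
    roles swap: its true positives are TN, its false positives are FN,
    and its false negatives are FP.
    """
    spam = per_class_scores(tp, fp, fn)
    non_spam = per_class_scores(tn, fn, fp)
    support = {"spam": tp + fn, "non_spam": tn + fp}
    total = tp + tn + fp + fn

    accuracy = (tp + tn) / total
    # Macro average: unweighted mean of the per-class values.
    macro = {k: (spam[k] + non_spam[k]) / 2 for k in spam}
    # Weighted average: per-class values weighted by class support.
    weighted = {
        k: (support["spam"] * spam[k] + support["non_spam"] * non_spam[k]) / total
        for k in spam
    }
    return {
        "spam": spam,
        "non_spam": non_spam,
        "support": support,
        "accuracy": accuracy,
        "macro": macro,
        "weighted": weighted,
    }


if __name__ == "__main__":
    # Hypothetical counts chosen only to exercise the formulas.
    report = binary_report(tp=90, tn=45, fp=5, fn=10)
    for name, value in report.items():
        print(name, value)
```

For single-label classification, the micro-averaged precision, recall, and F1-score all coincide with accuracy, which is why the sketch does not compute them separately.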

| Metric/class | Precision (%) | Recall (%) | F1-score (%) | Support |
|---|---|---|---|---|
| Non-Spam | 99.80 | 98.62 | 99.20 | 507 |
| Spam | 99.03 | 99.86 | 99.44 | 718 |
| Overall | | | | 1225 |
| Accuracy | 99.35 | | | |
| Macro Avg | 99.41 | 99.24 | 99.32 | |
| Micro Avg | 99.34 | 99.34 | 99.34 | |
| Weighted Avg | 99.35 | 99.34 | 99.35 | |

| Models | Accuracy (%) | Precision (%) | Recall (%) | F1-score (%) |
|---|---|---|---|---|
| RFO-AdaBoost | 99.35 | 99.03 | 99.86 | 99.44 |
| RFO-DT | 98.69 | 99.16 | 98.47 | 98.81 |
| RFO-KNN | 98.45 | 99.30 | 98.05 | 98.67 |
| RFO-LR | 97.14 | 98.72 | 96.38 | 97.47 |

| Author | Dataset | Methodology | Feature selection | XAI | Performance results (%) |
|---|---|---|---|---|---|
| [35] | X Dataset (by X API) | IT2-M FIS, IT2-S FIS, IT1-M FIS, IT1-S FIS | ○ | ○ | Acc = 95.5, P = 95.7, F = 96.2, R = 96.7, AUC = 97.1 |
| [36] | SemCat-(2018) | TOBEAT leveraging BERT and CNN | ● | ○ | Acc = 94.97, P = 94.05, R = 95.88, F = 94.95 |
| [37] | X Dataset (by hatebase.org) | A clustering framework using probabilistic rules and fuzzy sentiment classification | ○ | ○ | Acc = 94.53, P = 92.54, R = 91.74, F = 92.56, AUC = 96.45 |
| [47] | X Dataset (Sem-Eval-2014 and Bamman) | Classification methodology based on (FL) | ● | ○ | Acc = 90.9, P = 95.7, R = 82.4, F = 87.4 |
| [48] | X Dataset (by [48]) | Ensemble learning technique with random oversampling (ROS), random undersampling (RUS), and fuzzy-based oversampling (FOS) | ○ | ○ | Mean P = 0.76-0.78, Mean F = 0.76-0.55, Mean FP = 0.11, TP = 0.74-0.43 |
| [39] | X Dataset (by X API) | Hierarchical meta-path based approach (HMPS) with feedback and default one-class classifier | ○ | ○ | P = 95.0, R = 90.0, F = 93.0, AUC = 92.0 |
| [49] | X Dataset (by X API) | Deep learning (DL) methodology utilizing a multilayer perceptron (MLP) algorithm | ○ | ○ | P = 92.0, R = 88.0, F = 89.0 |
| [34] | X Dataset (by www.unipi.it) | Ensemble-based XGBoost with Random Forest | ● | ● | Acc = 90, P = 91.0, R = 86.0, F = 89.0 |
| [50] | X Dataset (HSpam14 and 1KS10KN) | Ensemble method utilizing convolutional neural network models and a feature-based model | ○ | ○ | Acc = 95.7, P = 92.2, R = 86.7, F = 89.3 |
| [51] | X Dataset (by [49]) | LR, SVM, and RF utilizing various character N-gram features | ○ | ○ | P = 79.5, R = 79.4, F = 79.4 |
| Proposed RFO-AdaBoost | X Dataset (by [35]) | Nature-inspired optimization with ensemble learning approach | ● | ● | Acc = 99.35, P = 99.03, R = 99.86, F = 99.44 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).