Submitted: 01 September 2025

Posted: 02 September 2025


Abstract

Keywords:

Introduction

2. Literature Review

Methodology

- 1.

- Selection of Big Data Technologies

- ○

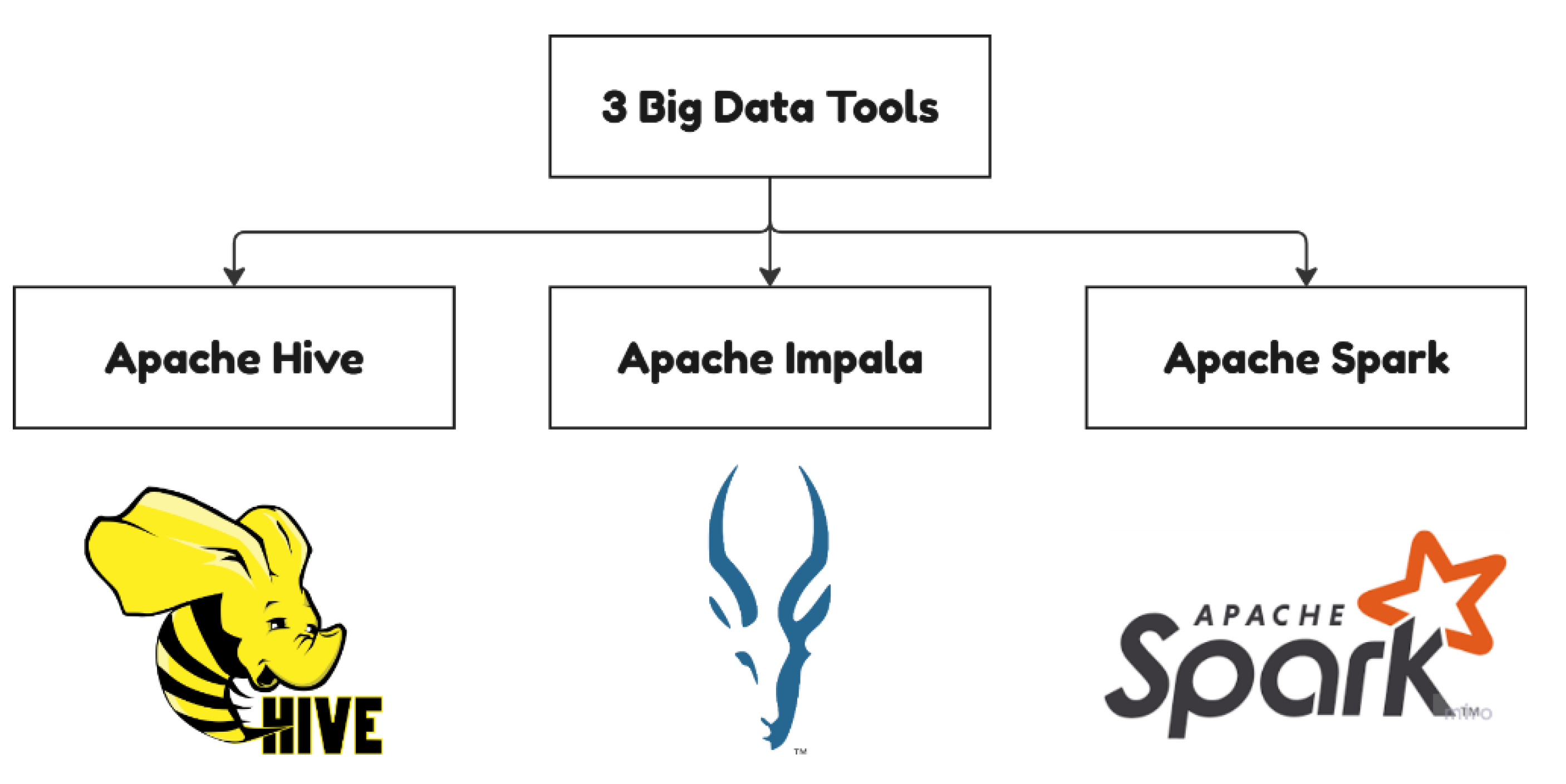

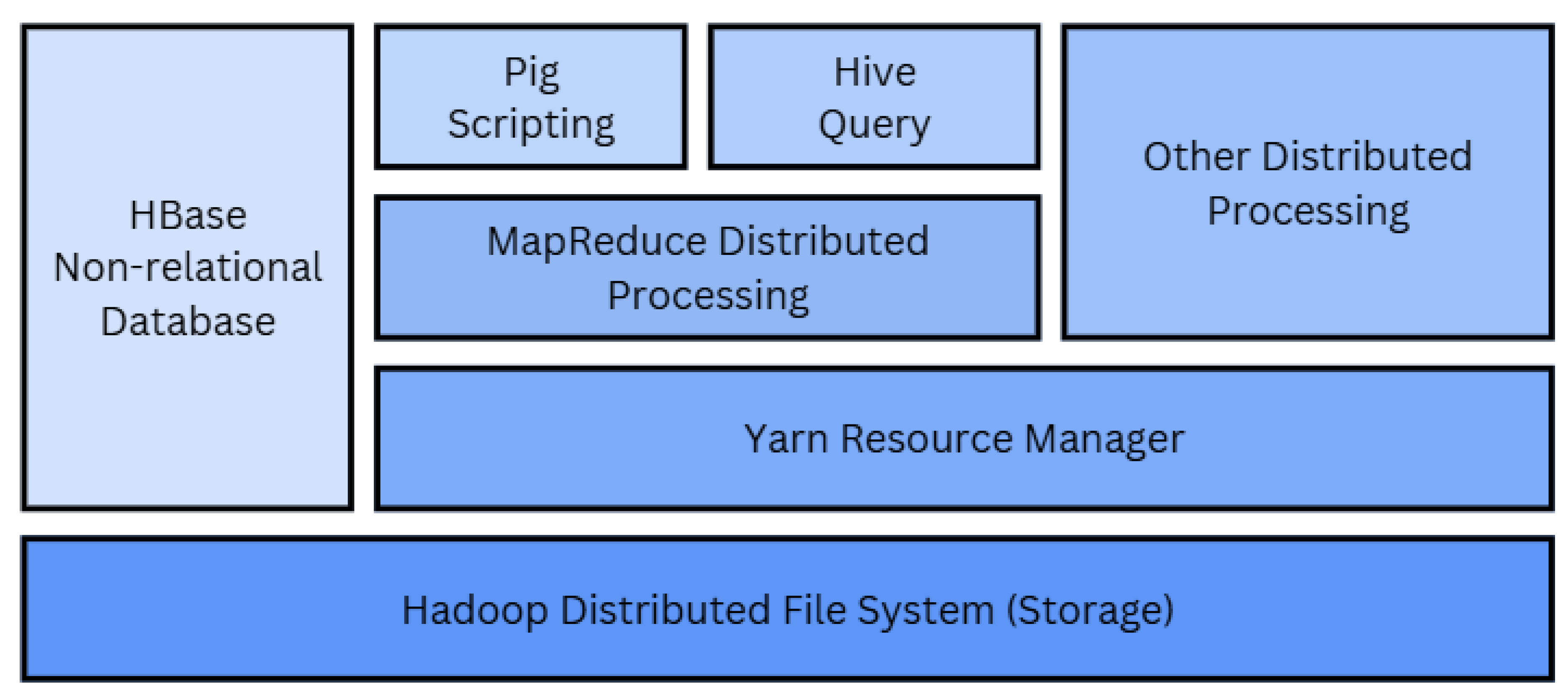

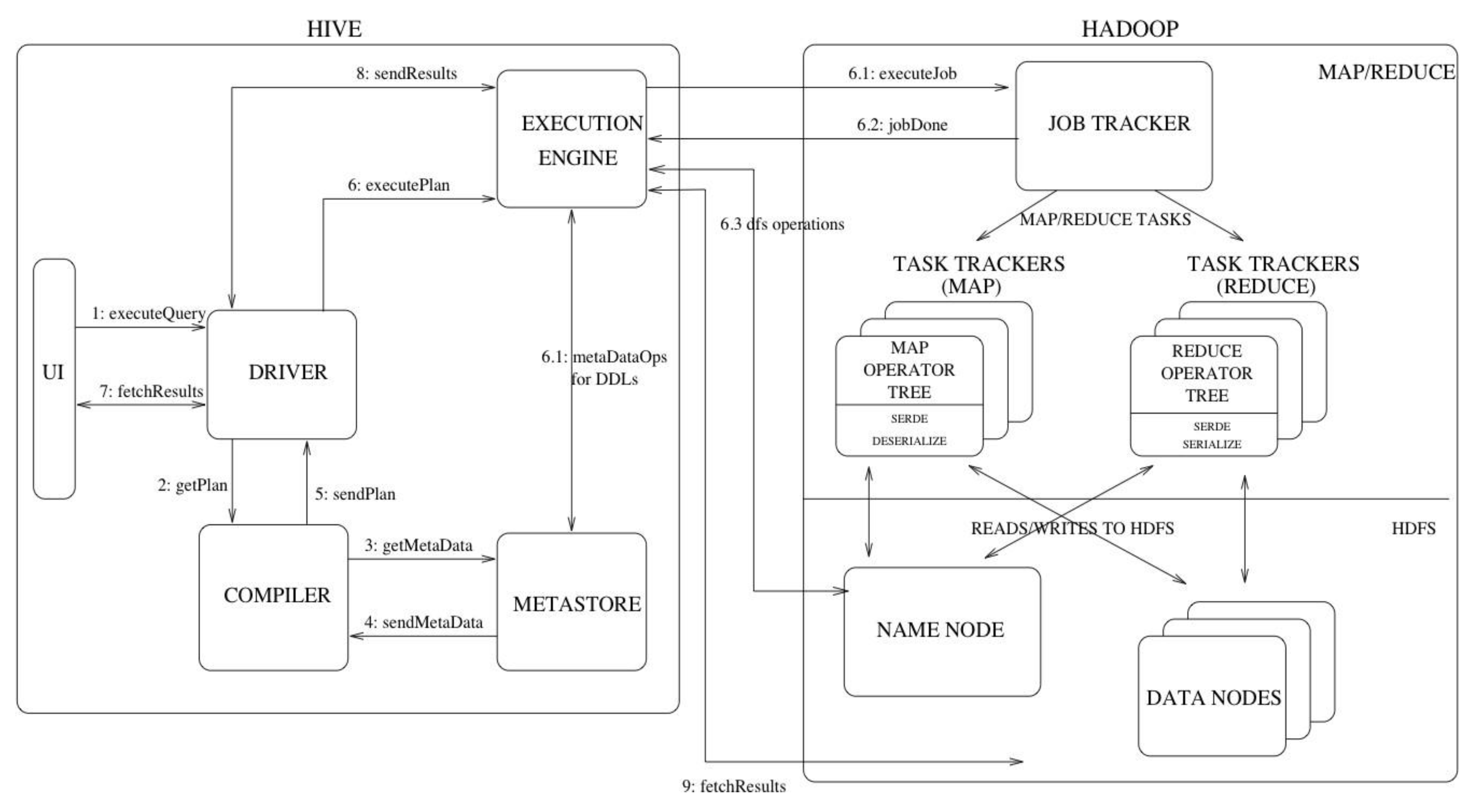

- Apache Hive – for query against datasets within HDFS using SQL-like syntax with MapReduce.

- ○

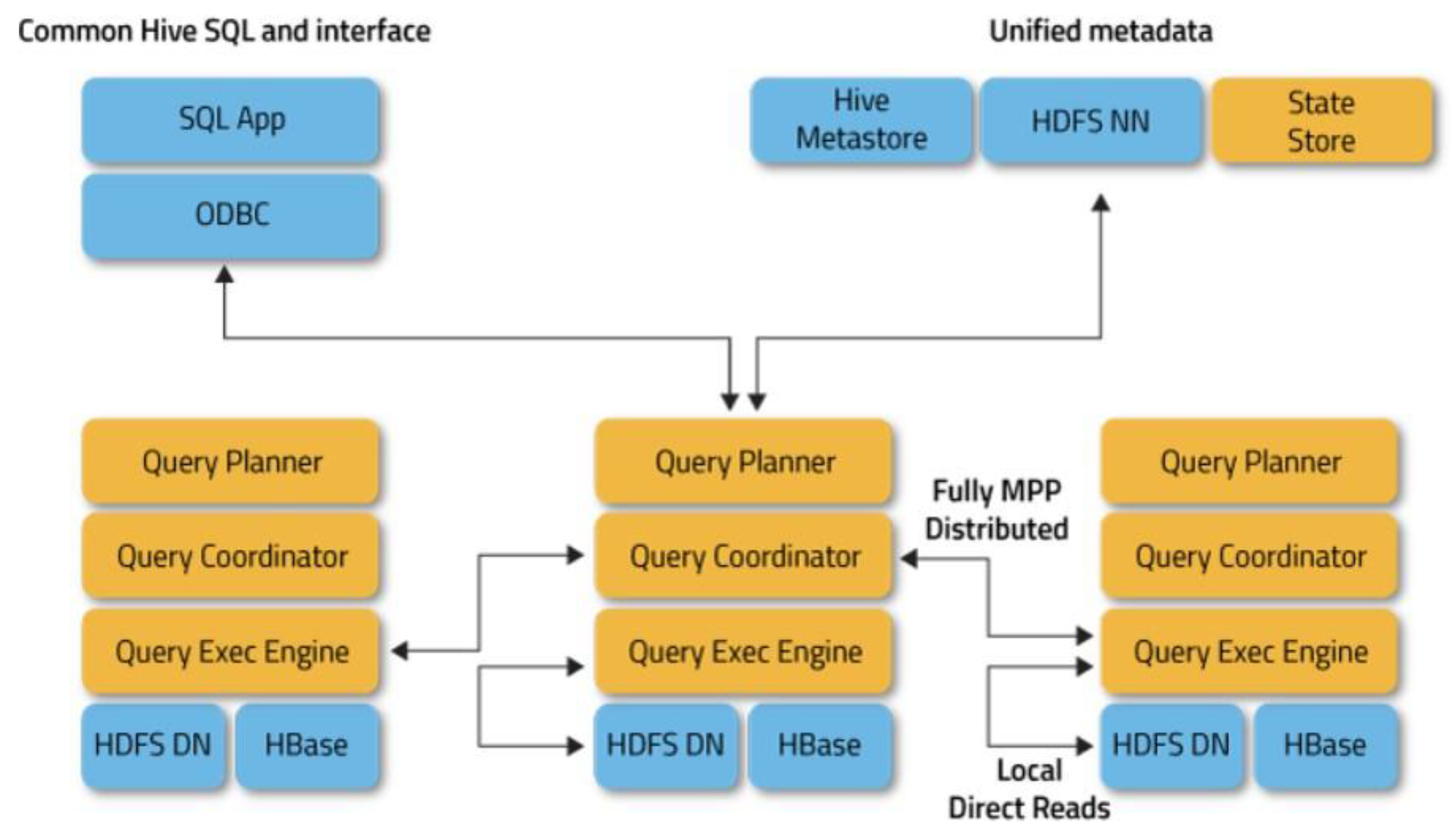

- Apache Impala – for providing SQL query processing with low-latency, low-latency, high-speed processing of query statements across a distributed Hadoop ecosystem.

- ○

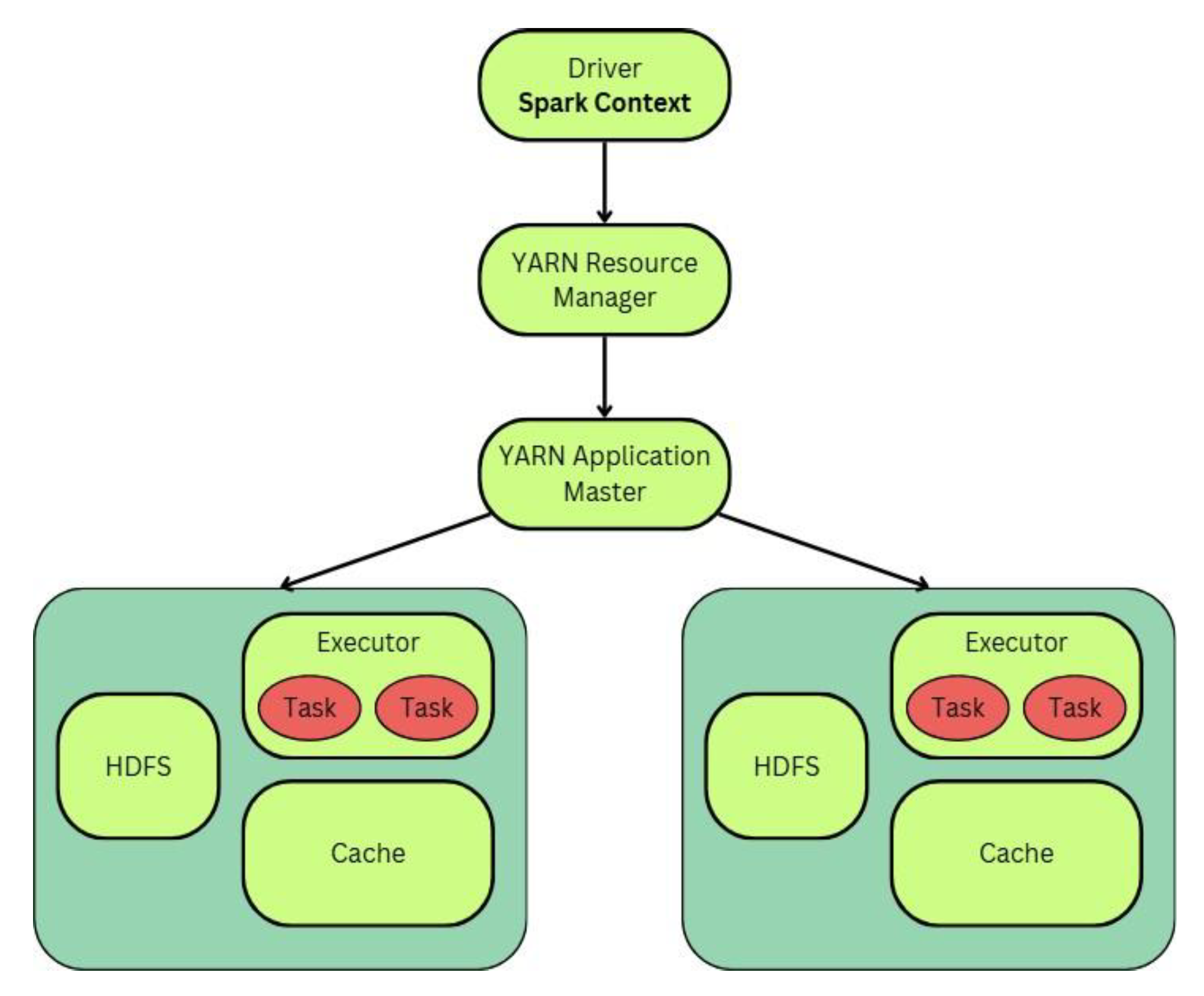

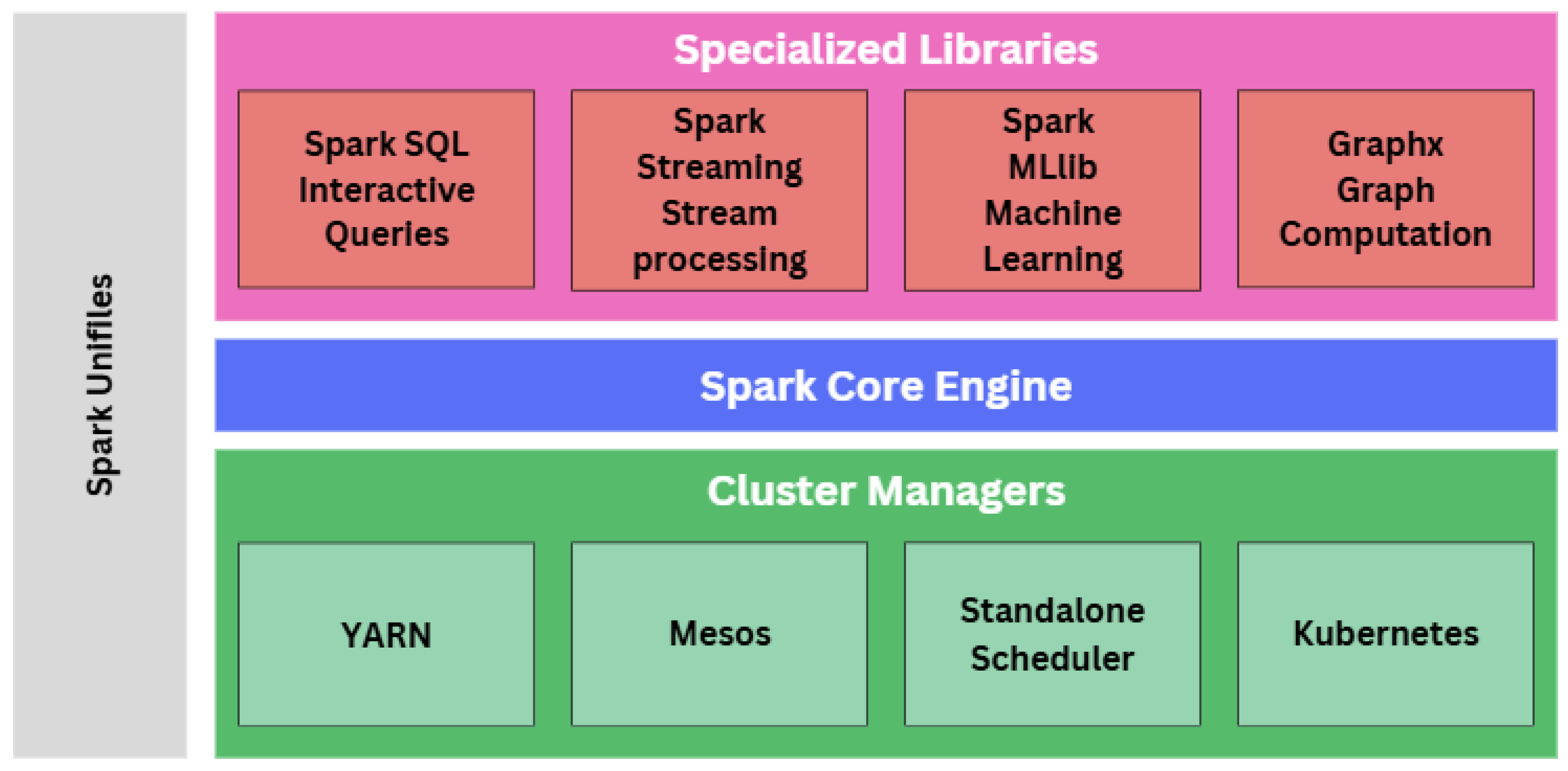

- Apache Spark – for distributed, in-memory processing of data stored across several distributed nodes and also for machine learning.
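Both Hive and Impala accept SQL-like statements over tables backed by HDFS files. The sketch below is a rough, local illustration of that query style. It uses Python's built-in `sqlite3` module as a stand-in engine, so it is not HiveQL or Impala SQL. The table name `transactions`, its columns and the sample rows are all hypothetical. It shows two query shapes of the kind the study describes:

- ranking payment methods by transaction count;
- summing revenue per product category, assuming `price` is a unit price.

```python
import sqlite3

# Hypothetical sample rows; sqlite3 only stands in for a Hive/Impala table.
ROWS = [
    ("I001", "C01", "Clothing", 2, 300.0, "Credit Card"),
    ("I002", "C02", "Shoes", 1, 600.0, "Cash"),
    ("I003", "C03", "Clothing", 1, 300.0, "Credit Card"),
    ("I004", "C04", "Books", 3, 15.0, "Debit Card"),
    ("I005", "C05", "Shoes", 2, 600.0, "Cash"),
    ("I006", "C06", "Clothing", 1, 300.0, "Credit Card"),
]


def run_queries(rows):
    """Return (payment-method ranking, revenue per category) for the given rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE transactions (invoice_no TEXT, customer_id TEXT, category TEXT, "
        "quantity INTEGER, price REAL, payment_method TEXT)"
    )
    conn.executemany("INSERT INTO transactions VALUES (?, ?, ?, ?, ?, ?)", rows)

    # Task: top payment methods by number of transactions.
    top_payments = conn.execute(
        "SELECT payment_method, COUNT(*) AS n FROM transactions "
        "GROUP BY payment_method ORDER BY n DESC, payment_method"
    ).fetchall()

    # Task: revenue per product category (assumes price is a unit price).
    revenue = conn.execute(
        "SELECT category, SUM(price * quantity) AS revenue FROM transactions "
        "GROUP BY category ORDER BY revenue DESC"
    ).fetchall()
    conn.close()
    return top_payments, revenue


if __name__ == "__main__":
    payments, revenue = run_queries(ROWS)
    print(payments)
    print(revenue)
```

The `GROUP BY ... ORDER BY` shape is what carries over to Hive and Impala. The engine differs: Hive compiles such a statement into MapReduce jobs, while Impala executes it with its own low-latency engine.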

- 2.

- Dataset Acquisition and Preprocessing

- ○

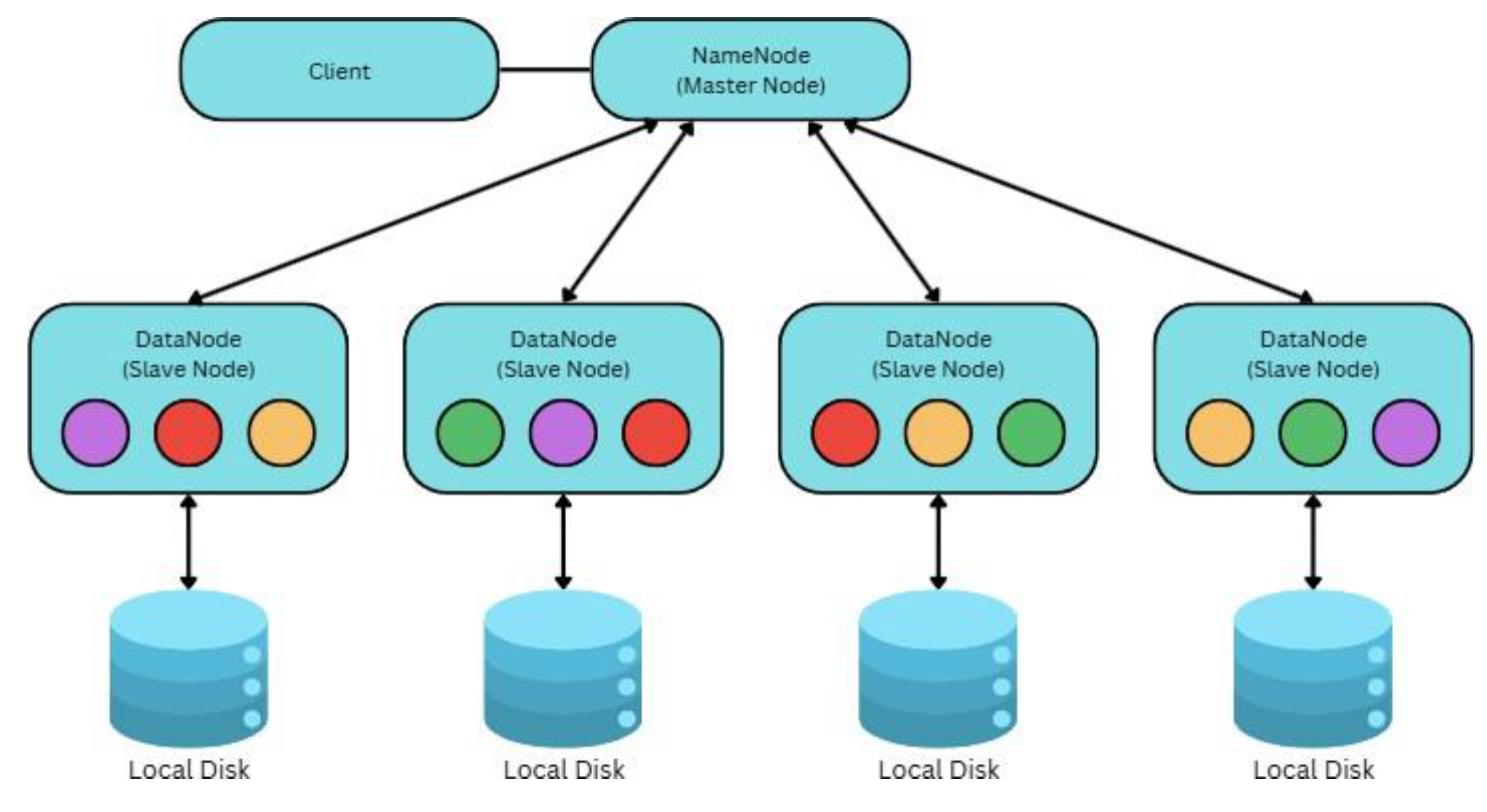

- Conversion: The dataset was initially in csv format, but we converted it to text format (.txt) to upload to Hadoop Distributed File System (HDFS).

- ○

- Categorization: Datasets were sorted into two categories:

- ○

- Demographics - customer status data (age, gender, customer id, etc.)

- ○

- Transaction - attribute data (price, quantity, category, method of payment, etc.).
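The conversion and categorization steps above can be sketched with Python's standard `csv` module. This is a minimal, hypothetical version:

- the column names are assumed to follow the Kaggle customer shopping dataset;
- the tab delimiter for the .txt output is our choice, not something the study specifies;
- the sample rows are made up.

Keeping `customer_id` in both outputs lets the two categories be joined again later.

```python
import csv
import io

# Assumed column layout with made-up rows (not the real dataset).
SAMPLE_CSV = """invoice_no,customer_id,gender,age,category,quantity,price,payment_method
I001,C01,Female,28,Clothing,2,300.0,Credit Card
I002,C02,Male,35,Shoes,1,600.0,Cash
"""

DEMOGRAPHIC_COLS = ["customer_id", "gender", "age"]
TRANSACTION_COLS = ["invoice_no", "customer_id", "category", "quantity", "price", "payment_method"]


def to_text_lines(rows, columns, sep="\t"):
    """Project each row onto `columns` and join the values with `sep`."""
    return [sep.join(row[c] for c in columns) for row in rows]


def split_dataset(csv_text):
    """Parse CSV text and return (demographics lines, transaction lines)."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    return (to_text_lines(rows, DEMOGRAPHIC_COLS),
            to_text_lines(rows, TRANSACTION_COLS))


if __name__ == "__main__":
    demographics, transactions = split_dataset(SAMPLE_CSV)
    # In practice these lines would be written to demographics.txt and
    # transactions.txt before being uploaded to HDFS.
    print("\n".join(demographics))
    print("\n".join(transactions))
```

The returned lines could then be written to .txt files and copied into HDFS, for example with `hdfs dfs -put`.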

3. Experimental Design

   - Batch processing tasks: We used Hadoop's MapReduce framework to run word-count operations that determined credit card usage.

   - SQL-like queries: We ran five SQL-like tasks (e.g. top payment methods, top product categories, revenue computation) on both Hive and Impala.

   - Non-SQL environment (Spark): We built a MapReduce pipeline in Java consisting of:

     - Mapper class – tokenized the input data into key-value pairs.
     - Reducer class – aggregated the mapped values for each key.
     - Driver class – configured and controlled job execution on the Spark cluster.
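The Mapper, Reducer and Driver classes above follow the classic MapReduce word-count pattern. The sketch below reproduces that pattern in plain Python so it runs without a cluster. It is not the study's Java code: the functions `mapper`, `shuffle`, `reducer` and `driver` are hypothetical stand-ins for those classes. It also assumes each record is a tab-separated transaction line whose sixth field is the payment method, so that counting that field yields credit card usage.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def mapper(line: str, field_index: int = 5, sep: str = "\t") -> Iterator[Tuple[str, int]]:
    """Emit (payment_method, 1) for one tab-separated transaction record."""
    fields = line.rstrip("\n").split(sep)
    if len(fields) > field_index:  # skip malformed or short lines
        yield fields[field_index], 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group mapped values by key, as the framework does between map and reduce."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reducer(key: str, values: List[int]) -> Tuple[str, int]:
    """Sum the counts emitted for a single key."""
    return key, sum(values)


def driver(lines: Iterable[str]) -> Dict[str, int]:
    """Run map, shuffle and reduce in sequence and collect the results."""
    mapped = (pair for line in lines for pair in mapper(line))
    return dict(reducer(k, v) for k, v in shuffle(mapped).items())


if __name__ == "__main__":
    records = [
        "I001\tC01\tClothing\t2\t300.0\tCredit Card",
        "I002\tC02\tShoes\t1\t600.0\tCash",
        "I003\tC03\tClothing\t1\t300.0\tCredit Card",
    ]
    print(driver(records))
```

The explicit `shuffle` step makes visible the grouping by key that Hadoop (or Spark's `reduceByKey`) performs between the map and reduce phases.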

4. Machine Learning Implementation

   - Decision tree regression was implemented with Spark MLlib and Python (in a Jupyter Notebook) to predict customer spending behavior.

   - Feature engineering: Preprocessing consisted of label encoding of categorical variables, feature selection, and normalization.
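The feature-engineering steps and the decision tree regression can be illustrated without a Spark cluster. The sketch below is not the study's MLlib pipeline. It hand-codes three pieces in plain Python:

- label encoding of categorical values;
- min-max normalization;
- a small regression tree that picks each split by the lowest summed squared error (a variance-style criterion) and predicts the mean target at each leaf.

The feature names, sample records and `max_depth` value are hypothetical. In PySpark the corresponding building blocks would be `StringIndexer`, `MinMaxScaler` and `DecisionTreeRegressor` from `pyspark.ml`.

```python
from typing import Dict, List, Optional, Sequence


def label_encode(values: Sequence[str]) -> List[int]:
    """Map each distinct category to an integer index (sorted for determinism)."""
    index = {v: i for i, v in enumerate(sorted(set(values)))}
    return [index[v] for v in values]


def min_max(values: Sequence[float]) -> List[float]:
    """Rescale values to [0, 1]; a constant column maps to 0.0."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [(v - lo) / span if span else 0.0 for v in values]


def sse(ys: Sequence[float]) -> float:
    """Sum of squared errors around the mean."""
    if not ys:
        return 0.0
    mean = sum(ys) / len(ys)
    return sum((y - mean) ** 2 for y in ys)


def build_tree(X: List[List[float]], y: List[float], depth: int = 0, max_depth: int = 2) -> Dict:
    """Recursively split on the (feature, threshold) pair with the lowest total SSE."""
    leaf = {"value": sum(y) / len(y)}
    if depth >= max_depth or len(set(y)) == 1:
        return leaf
    best: Optional[tuple] = None
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            left = [i for i, row in enumerate(X) if row[f] <= t]
            right = [i for i, row in enumerate(X) if row[f] > t]
            if not left or not right:
                continue
            cost = sse([y[i] for i in left]) + sse([y[i] for i in right])
            if best is None or cost < best[0]:
                best = (cost, f, t, left, right)
    if best is None:
        return leaf
    _, f, t, left, right = best
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([X[i] for i in left], [y[i] for i in left], depth + 1, max_depth),
        "right": build_tree([X[i] for i in right], [y[i] for i in right], depth + 1, max_depth),
    }


def predict(tree: Dict, row: List[float]) -> float:
    """Follow the splits down to a leaf and return its mean target value."""
    while "value" not in tree:
        tree = tree["left"] if row[tree["feature"]] <= tree["threshold"] else tree["right"]
    return tree["value"]


if __name__ == "__main__":
    # Hypothetical records: product category, customer age, amount spent.
    categories = ["Clothing", "Shoes", "Books", "Clothing", "Shoes", "Books"]
    ages = [25.0, 40.0, 60.0, 30.0, 45.0, 65.0]
    spend = [300.0, 600.0, 15.0, 320.0, 640.0, 20.0]

    X = [list(pair) for pair in zip(label_encode(categories), min_max(ages))]
    tree = build_tree(X, spend)
    print(predict(tree, [1, 0.1]))  # an unseen "Clothing" customer
```

Normalization does not change which splits a tree can find; it is shown only because the study lists it as a preprocessing step.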

Experimentation

- Evaluation Metrics

- Dataset

- Three Big Data Technologies

  - Hive

  - Impala

  - Spark

- Comparative Analysis

Discussion

Conclusion


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).