1. Introduction

The process of airdrops, regardless of their purpose (humanitarian aid, rescue materials, scientific research), is complex and requires strict coordination of multiple services, appropriate equipment, and adherence to required regulations. The success of such missions is influenced by many interdependent factors. The most important of these include:

- Accuracy in defining the drop zone, dependent on data from navigation systems (GPS, GLONASS, INS),
- Maneuverability, mainly dependent on the aerodynamics of the capsule, but also on its structural strength (acting g-forces),
- Achievable range, which allows for dropping the cargo from a safe distance, thereby minimizing potential losses while maintaining safety conditions,
- Achievable object speed, which in turn minimizes the time required to deliver the airdropped cargo,
- Flight profile, which allows for low-altitude flight,
- Weather conditions, mainly those whose effects cannot be predicted (atmospheric turbulence, wind shear, and gusts),
- All kinds of human factors, such as pilot or operator skills, or their availability.

Airdrops are mainly carried out using airplanes and unmanned aerial vehicles (UAVs). The primary goal is to deliver humanitarian aid (primarily medicines, specialized equipment, or other sensitive cargo) quickly to areas cut off from land transport routes or affected by natural disasters, armed conflict, or other crisis situations. Another specific type of mission involves the airdrop of resources such as underwater gliders, which are widely used in ocean observations [1]. In such cases, the proper water entry of the glider is crucial to prevent its destruction. For each of these types of operations, methods based on airdrop dynamics modeling and control optimization are known for both cargo loading flight operations and airdrop flight operations [2, 3].

An extremely important aspect of the cargo airdrop process is safety. Its assessment is complex, mainly due to the strong interconnections between human, machine, and environment, but also due to the high demands placed on such missions. One example of a method used for safety assessment is the improved System-Theoretic Process Analysis-Bayesian Network (STPA-BN) method. This is a system theory-based safety analysis method whose purpose is to understand how a lack of control over the system can lead to unsafe situations. The STPA-BN method enables real-time decision support for pilots by estimating the probability of losses in case of a single fault or failure. The authors of [4] propose a novel risk analysis method that integrates STPA and Bayesian Networks (BN) along with elements such as Noisy-OR gates, the Parent-Divorcing Technique (PDT), and sub-modeling for remote piloting operations. In quantitative safety assessment, Bayesian Networks model cause-and-effect relationships between events and are used to calculate probabilities.

The article [5] takes up the topic of airdropping heavy equipment (over 1 ton), often used in military and humanitarian missions. The authors highlight the operational complexity of such a process, characterized by strong human-machine-environment interaction. This complexity leads to difficulties in safety assessment and to numerous accidents.

In the presented article, we discuss safety specifically in the context of errors resulting from an improper spatial position of the cargo carrier at the moment of release. It is crucial for the aircraft or UAV carrying humanitarian aid or specialized equipment (firefighting, research) to remain outside the danger zone. Specifying the initial conditions V0, h0, θ0 for the cargo drop is therefore a very important issue. Doing so frees the carrier’s operator from the need to calculate, above all, the range of the cargo, thereby eliminating the so-called "human error" in this regard, and it enables real-time decision-making regarding the initial conditions for the cargo drop.

Cargo airdrop methods can be divided into several categories:

- Non-precision cargo airdrop systems
  - They are characterized by low accuracy, meaning the cargo does not always land at the intended point,
  - They are most commonly used during good visibility,
  - They require a low altitude and typically involve flying over the drop zone, which is not always possible and carries a high risk of mission failure, as well as danger to the pilot and aircraft.

- Precision cargo airdrop systems
  - Work on guided airdrop systems began in the early 1960s, utilizing a modified parabolic parachute [6],
  - They are equipped with Autonomous Guidance Units (AGU), whose elements include: a computer for calculating the flight trajectory, communication devices with antennas, a GPS receiver, temperature and pressure sensors, a LIDAR sensor, devices controlling the steering lines, and an operating panel,
  - They use appropriate devices that detect the wind profile and speed,
  - They allow for airdropping cargo from altitudes of over 9,000 meters with a drop accuracy of 25 to 150 meters [7],
  - They utilize advanced software that calculates the Launch Acceptability Region (LAR), i.e., the area from which a drop can be made to ensure the cargo hits the target,
  - One example is the Joint Precision Airdrop System (JPADS), which is designed for conducting precise airdrops from high altitudes and comes in a wide range of versions depending on cargo weight (from 90 kg to 4500 kg). Equipped with a wing-type gliding parachute, it can fly in any direction regardless of wind and change flight direction at any moment [8].

- Guided parachutes/parafoils
  - They are equipped with Autonomous Guidance Units (AGU) that allow for a change in flight trajectory, including adjusting the course mid-flight, avoiding obstacles, and precise maneuvering to reach the designated drop point,
  - They can be dropped from higher altitudes and greater distances from the drop point,
  - Ram-air parachutes (wing-type) are characterized by their maneuverability and ability to fly in any direction,
  - Round parachutes (modified) are less maneuverable than ram-air parachutes, but have the advantage of being cheaper to produce; they are used in systems like AGAS, where pneumatic muscle actuators are used for steering,
  - The smart guidance system of the Joint Precision Airdrop System (JPADS) autonomously calculates the correct drop point. To do this, it uses data from global positioning, weather models, and advanced mathematical operations, allowing it to reach the target on its own based on the received coordinates.

Determining the area where cargo should be airdropped to reach its target is crucial in precision airdrop technology and constitutes a highly complex task that requires considering many factors. The main elements influencing this process are: wind field modeling, the flight dynamics of the cargo with a parachute, algorithms for predicting the drop target, and error compensation with real-time correction. In practice, this process requires modeling the cargo’s flight trajectory from the point of release to impact, taking into account all aerodynamic forces and atmospheric conditions. This, in turn, involves advanced algorithms and measurement technologies that enable the determination of the optimal release point in the air so that the cargo lands within the intended, relatively small area on the ground. The literature [9] also uses the term Calculated Aerial Release Point (CARP). Considering all these factors, it is reasonable to use a system that allows the initial airdrop parameters to be determined precisely before the flight and, at the same time, ensures that the cargo reaches its designated target, taking into account the current flight parameters (altitude h0, velocity V0, and possibly the angle θ0 at which the drop is to occur). These requirements are met by the proposed inverse algorithm, which determines the required initial parameters, including V0, h0, and θ0, based on the desired landing location and target parameters. This approach effectively supports pre-flight planning and can be integrated with on-board systems to enhance precision in cargo delivery under varying flight and environmental conditions. The feasibility and efficiency of solving inverse problems using neural networks, even in complex, highly non-linear systems, has been confirmed in other fields such as robotics. For instance, in [10], inverse kinematics problems in 6-DOF manipulators were successfully addressed using MLP-based networks with additional segmentation and error correction strategies, resulting in both high accuracy and low computational cost.

To determine the search area for a dropped cargo, mainly in conditions of limited visibility (night, fog), a multifaceted approach is used, combining precise pre-drop calculations, cargo tracking methods, and advanced post-drop search methods. The main element of this process is forecasting the drop zone. In the initial stage, the probable drop zone is calculated based on precise navigation data of the aircraft (e.g., from a GNSS system) at the moment of cargo release. It is also possible to use tracking devices that can be integrated with the dropped cargo, such as locator beacons (e.g., ELT-type devices), GPS/GNSS trackers, radio beacons, or IoT sensors. However, navigation systems are known to be subject to various types of interference [11]. In the context of Global Navigation Satellite Systems, the main type is Radio Frequency Interference (RFI), including jamming [12]. Such interference can cause a complete loss of signal, data distortion, or reduced positioning precision. In each of these cases, there is a high risk that the cargo drop mission will fail. Eliminating this problem most often involves using alternative systems, such as visual odometry [13] or Simultaneous Localization And Mapping (SLAM) [14]. Both visual odometry and SLAM can operate independently of GNSS data, or they can rely on it to improve their performance. In addition, the biggest disadvantage of systems that use GPS/GNSS modules to define the cargo drop zone [15] is their power consumption. This can impact their functionality and operating time, and ultimately their usability. There are also places in the world where these satellite signals are not available, so systems that require GPS data cannot be used there. To address this, we can use the presented method for determining three parameters, rk, tk, and Vk, to define the impact point of the dropped cargo. Most importantly, the process of airdropping resources using the presented system does not require satellite navigation data. It relies on data generated before the planned mission. This system can also serve as pilot or operator support and can be activated when GPS data is unavailable.

Algorithms and models predicting the cargo’s flight trajectory and its potential drift also play a crucial role in forecasting the drop zone. In the presented research, only the impact point was determined, but this provides key information during a mission. For this purpose, the geometric-mass data of our capsule were used to calculate its range (rk), flight time (tk), and final impact velocity (Vk) using artificial neural networks. This minimizes the risk of improper carrier positioning in space (i.e., the dropped cargo failing to reach the designated location – ocean, earth).

Another aspect discussed regarding precision cargo airdrop systems is their reliability. These types of airdrops require highly advanced systems, which can be prone to failure. The article [16] focuses on the need to estimate the reliability of airdrop systems already at the design stage, which helps avoid costly and time-consuming field tests. If such an estimation is not possible, or if information from a damaged component is unavailable, the presented system can be used as support. These are typically situations where urgent cargo delivery is required and there is no time or opportunity to fix such failures.

Artificial neural networks (ANN) have very wide applications in scientific research. With the development of computational techniques, their use across a broad range of applications has become faster and more effective. They also constitute a valuable tool during the design of various types of systems, making them more efficient, reliable, and at the same time innovative. The following examples of artificial neural network applications refer exclusively to cargo airdrop systems. A review of the available literature indicates that, despite the widespread use of artificial intelligence, the number of scientific papers applying it to precision airdrops is small. In contrast, commercial development in this area is very active [17].

Research utilizing intelligent cargo airdrop systems covers a wide range of issues, aiming primarily to increase precision, but also the autonomy, reliability, and safety of the conducted mission. Such systems find application in areas such as intelligent control and guidance algorithms, trajectory modeling and prediction, reliability and safety, and multi-sensor data fusion. The authors of [18] indicate the possibility of applying intelligent control technology to increase the accuracy of precision airdrop systems (PADS). Their system uses a reinforcement learning control strategy based on the actor-critic (AC) architecture, operating in both windless and windy environments. The article [19] presents a trajectory planning model based on a backpropagation neural network (BPNN). A genetic algorithm (GA) is used as an optimization algorithm for landing point accuracy, providing a database verified by the Kane’s Equation (KE) model, on which the BPNN is trained, verified, and tested. The presented results show that the BPNN model exhibits the highest landing point precision among the three investigated models. Another example of artificial intelligence applied to unmanned aerial vehicles is an article by Chinese scholars [20], which uses a deep reinforcement learning method. Additionally, an Adaptive Priority Experience Replay Deep Double Q-Network (APER-DDQN) algorithm based on the Deep Double Q-Network (DDQN) was applied. An algorithm based on reinforcement learning was also used to generate effective maneuvers for a UAV agent to autonomously perform an airdrop mission in an interactive environment [21]. The training set sampling method is constructed based on the Prioritized Experience Replay (PER) method. The results confirm that, after training, the proposed algorithm was able to solve the turn-around and guidance problems. The issue of precision landing of an autonomous parafoil system using deep reinforcement learning is addressed in [22]. It should be noted that, as in the present article, a case where initial conditions are randomly selected is also analyzed there to test the effectiveness of the proposed system. Besides reinforcement learning, other artificial intelligence methods are also applied in cargo airdrop research, such as genetic algorithms [23, 24], particle swarm optimization [25, 26], and Bayesian Networks [16].

Neural networks (NNs) are computational models designed based on the principles observed in biological neural systems. These models have gained widespread application across numerous scientific and engineering disciplines due to their ability to process complex data structures efficiently. The extensive use of neural networks in engineering has been thoroughly reviewed in the literature, see, e.g., [27, 28]. The fundamental theoretical aspects of neural networks have been comprehensively discussed in various publications, such as [29, 30, 31].

Among the diverse types of neural networks, one type designed for addressing regression and inverse problems has been employed in this study. This type of network is particularly well-suited for learning complex input-output relationships by mapping a given set of input data onto an output function, formally expressed as $Y = f(X, w)$, where $X$ and $Y$ denote the input and output vectors, respectively, and $w$ represents the set of network parameters that determine its behavior.

In general, we can assume that there are two approaches to solving identification problems. The forward mode is based on the definition of an error function for the differences between the results of a numerical model and the results of an experiment (numerical or measurement). The solution is obtained by minimizing this function. This type of identification can be considered more general and stable and is therefore usually used in numerical validation. A neural network can approximate the relation between input X and output Y data in a simple way:

$$Y = f(X, w) \tag{1}$$

where:

X – input vector (e.g. control parameters, material parameters),

Y – output vector (e.g. observed system response, simulation result),

w – set of neural network parameters (weights and biases),

f(⋅) – function approximated by the neural network.

The inverse mode is based on the assumption that a well-defined and mathematically consistent inverse relationship exists between outputs and inputs. Once this relationship is rigorously formulated, retrieving the corresponding input parameters becomes a systematic procedure that can be executed efficiently and repeatedly with high reliability. In this approach, the neural network searches for a set of input parameters X based on an expected output Y:

$$X = g(Y, w) \tag{2}$$

where:

g(⋅) – the inverse relation approximated by a NN. Examples of the use of NNs in inverse analysis are often presented in the literature [32, 33, 34, 35].
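The forward-mode identification described above can be illustrated with a minimal sketch. It assumes a deliberately simple stand-in model (the drag-free range of a horizontally released body, not the capsule model of this paper), and the names `model_range` and `identify_velocity` are hypothetical. The unknown release velocity is recovered by minimizing a squared-error function with a golden-section search.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def model_range(v0, h0):
    """Stand-in forward model (assumption): drag-free range for a horizontal release."""
    return v0 * math.sqrt(2.0 * h0 / G)


def identify_velocity(r_observed, h0, lo=0.0, hi=200.0, tol=1e-8):
    """Find v0 in [lo, hi] that minimizes (model_range(v0, h0) - r_observed)**2."""
    def error(v0):
        return (model_range(v0, h0) - r_observed) ** 2

    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0  # inverse golden ratio
    a, b = lo, hi
    while b - a > tol:
        c = b - inv_phi * (b - a)
        d = a + inv_phi * (b - a)
        if error(c) < error(d):
            b = d  # the minimum lies in [a, d]
        else:
            a = c  # the minimum lies in [c, b]
    return 0.5 * (a + b)


# Synthetic "measurement" generated with a known velocity of 40 m/s
r_obs = model_range(40.0, 100.0)
print(identify_velocity(r_obs, 100.0))
```

In the inverse mode, this iterative search is replaced by a single evaluation of a trained mapping from outputs to inputs, which is what makes it attractive for repeated use.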

The research presented in this paper focuses on a non-precision cargo airdrop system, primarily due to two key requirements: low operational cost and the ability to operate without reliance on satellite navigation signals.

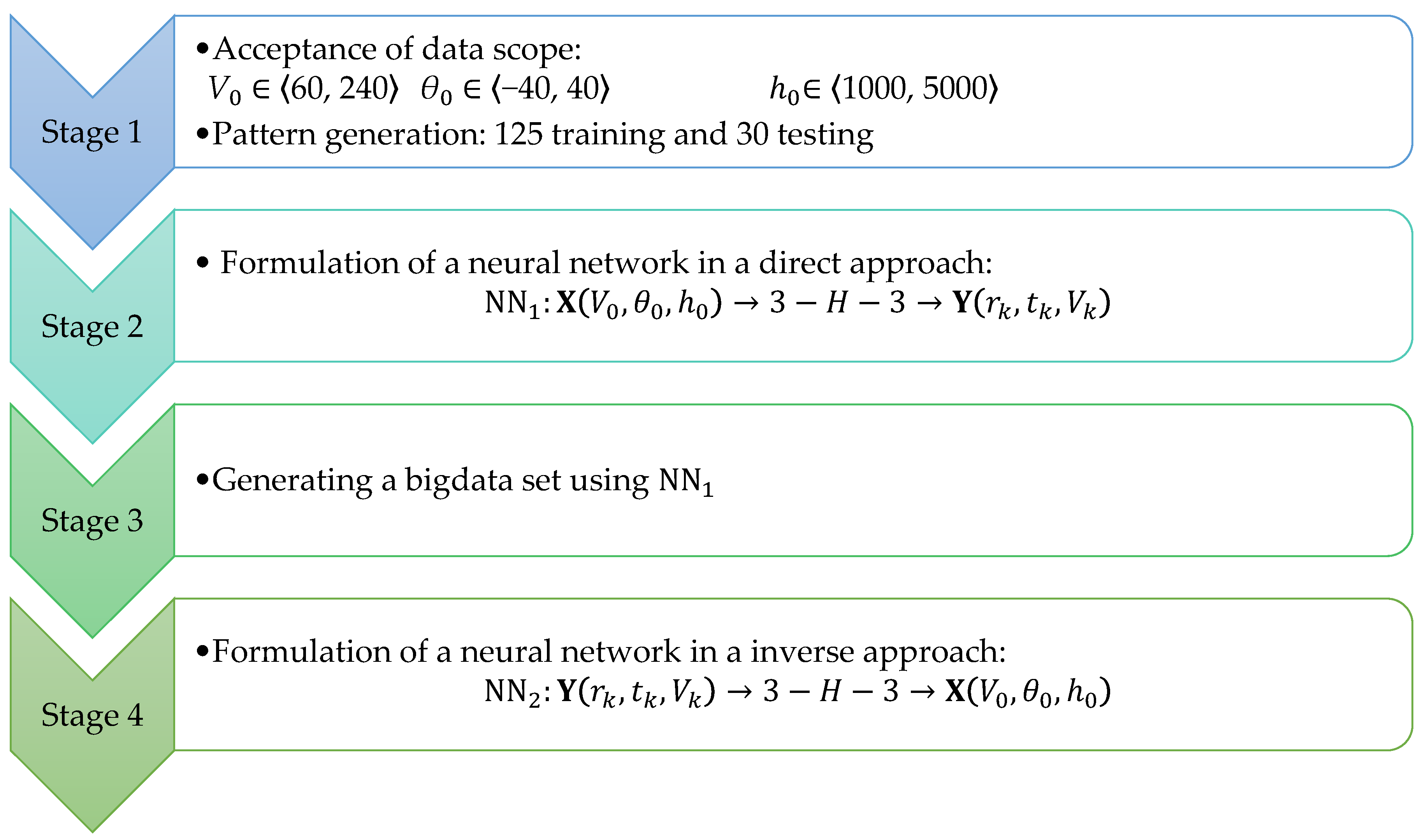

Two computational models were employed: a forward analysis and an inverse analysis, integrated into a computational framework (see Figure 1). In the first Stage, initial conditions of the free-fall cargo drop were assumed: initial drop velocity V0, drop angle θ0, and drop altitude h0. Based on these parameters, key outcome variables were calculated: the horizontal range of the cargo rk, flight time tk, and impact velocity Vk.

A comprehensive mathematical model of the capsule’s trajectory was used, incorporating aerodynamic forces and numerical integration of the equations of motion. This model provides a foundation for accurately determining drop parameters. In total, 125 base data sets were generated during this Stage, along with an additional 30 test sets.

The second Stage of the system involved the development of a neural network designed to perform forward prediction, that is, to compute the output values rk, tk, Vk based on arbitrary input data V0, θ0, h0 within a predefined range. In this Stage, a simple multilayer perceptron (MLP) network with backpropagation and a single hidden layer was implemented.

In the third Stage, a numerically structured dataset similar to that of the first stage was generated, but on a much larger scale — comprising 8800 sets of numerical samples. This dataset was generated using the neural network model developed in Stage two. The purpose of this step was to significantly reduce computation time. The total time required to generate the full dataset was approximately 2.3 seconds.

In the fourth Stage, a new neural network was formulated to perform the inverse task, which required a sufficiently large training dataset. The objective of this model was to infer the initial conditions V0, θ0 and h0 based on the desired output values rk, tk and Vk.

System validation was carried out by comparing randomly selected reference patterns — recalculated using the original numerical (hard) method — with the results generated by the proposed neural-based computational system. For further use, only the trained neural network model from Stage four would be necessary for the end user.
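The data flow between Stages 3 and 4 can be sketched as follows. This is only an illustration under stated assumptions: `surrogate_forward` is a drag-free closed-form stand-in for the trained Stage 2 network, `inverse_lookup` is a nearest-neighbour stand-in for the Stage 4 network, and the grid values are placeholders rather than the ranges used in the study. The point of the sketch is the role swap: outputs of the fast forward model become the features of the inverse task, and the inputs become its targets.

```python
import math
from itertools import product

G = 9.81  # gravitational acceleration, m/s^2


def surrogate_forward(v0, theta0_deg, h0):
    """Stand-in for the Stage 2 network (assumption): drag-free (r_k, t_k, V_k)."""
    theta = math.radians(theta0_deg)
    vx, vh = v0 * math.cos(theta), v0 * math.sin(theta)  # vh is positive upward
    t_k = (vh + math.sqrt(vh * vh + 2.0 * G * h0)) / G   # time to reach the ground
    v_k = math.sqrt(v0 * v0 + 2.0 * G * h0)              # from energy conservation
    return vx * t_k, t_k, v_k


def build_inverse_dataset(v_values, theta_values, h_values):
    """Stage 3 idea: evaluate the fast forward model on a grid and store
    (outputs, inputs) pairs so that outputs act as features for the inverse task."""
    return [(surrogate_forward(*x), x)
            for x in product(v_values, theta_values, h_values)]


def inverse_lookup(target, dataset):
    """Stand-in for the Stage 4 network: return the inputs whose stored outputs
    are closest to the target (unscaled Euclidean distance, for brevity)."""
    return min(dataset, key=lambda pair: math.dist(pair[0], target))[1]


# Placeholder grid (hypothetical values)
dataset = build_inverse_dataset(
    [20.0, 30.0, 40.0, 50.0, 60.0],
    [-10.0, -5.0, 0.0, 5.0, 10.0],
    [50.0, 100.0, 150.0, 200.0, 250.0],
)
target = surrogate_forward(40.0, 0.0, 100.0)
print(inverse_lookup(target, dataset))
```

A trained network interpolates between stored samples, whereas the lookup above can only return grid points; in practice the features would also be scaled before any distance or training step.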

2. Research Assumptions and Methods

Stage 1: Flight Assumptions and Capsule’s Mathematical Model

For the presented research, we adopt an aerodynamic capsule shape that allows for:

- Reduced air resistance – this advantage is extremely important because it minimizes friction and air turbulence around the capsule, allowing for faster and more controlled descent,
- Improved precision and trajectory prediction – aerodynamically shaped capsules are less susceptible to the influence of crosswinds and other atmospheric disturbances. Their flight path is more stable and easier to predict, which increases the chances of a precise airdrop,
- Increased stability during descent – allows the capsule to maintain a constant orientation in flight, reducing the risk of uncontrolled spinning, swaying, or tumbling. This is particularly important to avoid cargo damage,
- Reduced loads on the capsule’s structure and its contents. Laminar airflow around an aerodynamic shape minimizes the dynamic forces acting on the capsule, which reduces the risk of damage to its structure and contents, especially sensitive items such as precision measuring equipment or shock-sensitive packaged medications,
- More stable parachute opening and deployment if one is used. In the presented research, the use of a parachute was not considered. The capsule is dropped directly, e.g., from an airplane or an unmanned aerial vehicle,
- Increased descent speed, if desired, when rapid cargo delivery is a priority.

In summary, an aerodynamic shape is crucial for ensuring the safe, precise, and effective delivery of cargo in airdrop operations. This translates into minimizing the risk of damage and increasing the chances of mission success.

It should also be emphasized that damage to specialized measuring equipment or special medications during an airdrop involves high costs. These losses include not only the value of the equipment or medications themselves, but also potential delays in mission execution, loss of important research results, or the inability to provide immediate assistance in crisis situations. Therefore, ensuring the reliability and safety of airdrops is a priority, and the aerodynamic shape of the capsule is one of the factors that contributes to this.

To determine the range parameter, a mathematical model for the capsule under consideration had to be developed. To make this possible, several assumptions needed to be made:

- The capsule is a rigid solid, made of resistant materials that are not easily damaged,
- The mass of the capsule does not change with time,
- The capsule is an axisymmetric solid,
- The Earth occupies a fixed position in space,
- The planes of geometric, mass, and aerodynamic symmetry are coordinate planes of the capsule’s body-fixed frame,
- There is no wind (the wind speed is zero).

The motion of the capsule will be described by the equations of translational and rotational motion, well known from mechanics [36]. The equations of motion were derived from Newton’s second law, according to which the sum of external forces acting on an object in a chosen direction is equal to the rate of change of momentum in that direction. Thus, the vector equation of translational motion for the capsule’s center of mass can be written in the form [37]:

$$m\left(\frac{d\mathbf{V}}{dt} + \boldsymbol{\Omega} \times \mathbf{V}\right) = \mathbf{F} \tag{3}$$

where:

$\mathbf{F}$ represents the sum of all external forces in the body frame,

$\mathbf{V}$ represents the velocity of the capsule, expressed in body coordinates,

$\boldsymbol{\Omega}$ represents the angular velocity vector of the body frame with respect to the inertial frame, also expressed in body coordinates.

Newton’s second law for the rotational motion of the capsule is given by:

$$\mathbf{I}\frac{d\boldsymbol{\Omega}}{dt} + \boldsymbol{\Omega} \times \left(\mathbf{I}\boldsymbol{\Omega}\right) = \mathbf{M} \tag{4}$$

where:

$\mathbf{M}$ represents the sum of all external moments, expressed in the capsule body frame, and

$\mathbf{I}$ represents the moment of inertia matrix.

Most often, cargo is placed on standard aerial pallets such as ECDS (Enhanced Container Delivery System), Type V, or 463L and secured with nets. In our research, we assume that the cargo is airdropped in a special capsule.

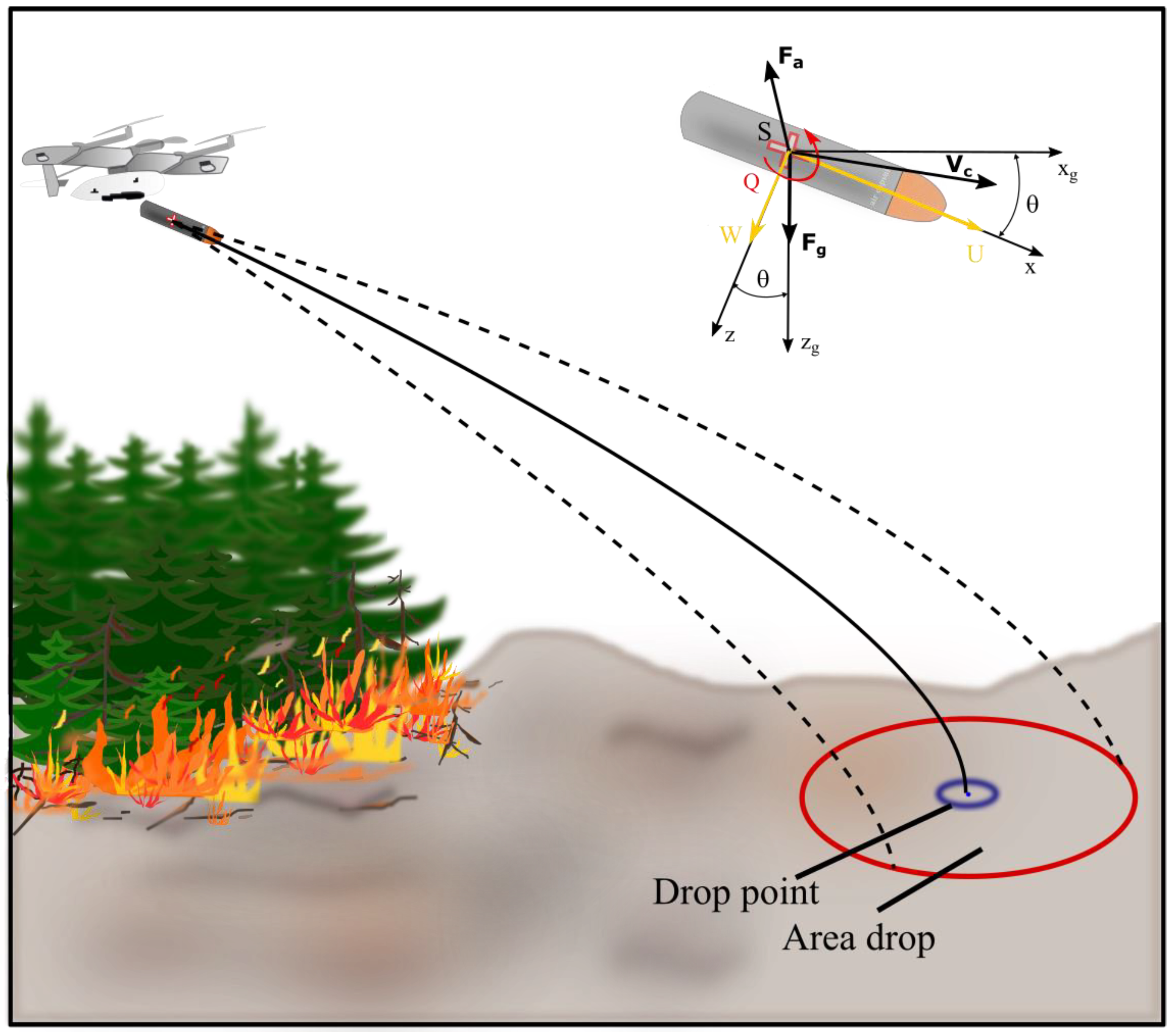

Figure 2 shows the reference system and the external forces that act on the capsule during flight.

We assume that the capsule’s motion occurs exclusively in the vertical plane. For such a case, considering equations (3) and (4), we can finally write the differential equations for the longitudinal dynamics of the capsule [38]:

$$\begin{aligned} m\left(\dot{U} + QW\right) &= F_x \\ m\left(\dot{W} - QU\right) &= F_z \\ I_y \dot{Q} &= M \end{aligned} \tag{5}$$

where:

$F_x$, $F_z$ are the resultant forces along the $x$ and $z$ body axes,

$M$ is the total pitching moment acting on the capsule,

$I_y$ is the moment of inertia about the pitch axis,

$m$ is the capsule mass,

$U$, $W$ are the components of the velocity vector of the capsule in relation to the air in the body-fixed coordinate system,

$Q$ is the pitch component of the angular velocity vector of the capsule body, and $\dot{U}$, $\dot{W}$, $\dot{Q}$ denote derivatives with respect to time.

Ultimately, the forces and the moment acting on the capsule, which appear in equation (5), can be written in the form:

where:

is the acceleration of gravity,

is the air density,

is the diameter of the capsule body,

is the characteristic surface (cross-sectional area of the capsule),

represents the velocity vector of the centre of capsule mass in relation to the air,

is the coefficient of the aerodynamic axial force,

is the coefficient of the aerodynamic normal force,

is the coefficient of the aerodynamic damping force,

is the coefficient of the aerodynamic tilting moment,

is the coefficient of the damping tilting moment.

The trajectory describes the position of the capsule’s center of mass as a function of time and external forces. Besides the gravitational force, the axial aerodynamic force has a significant impact on the shape of the capsule’s flight trajectory. It acts along the longitudinal axis of the capsule, opposite to the positive direction of that axis, which has a measurable impact on the range. The second component of the resultant aerodynamic force is the normal force, which is perpendicular to the longitudinal axis.

The primary source of difficulty in determining the aerodynamic forces and moments of a capsule is the determination of its aerodynamic characteristics. This concept refers to the coefficients of aerodynamic forces and moments acting on a capsule moving through the Earth’s atmosphere [39]. These coefficients, due to their dimensionless nature, allow for the comparison and evaluation of the aerodynamic properties of flight objects of different sizes [40]. The coefficient of the axial force depends on the nutation angle:

where:

represents zero pitch coefficient,

is pitch drag coefficient,

is the nutation angle. However, the normal force coefficient

mainly depends on the angle of attack

.

The aerodynamic characteristics of the considered capsule were determined experimentally, in a wind tunnel.

The trajectory of the capsule’s center of mass in the Earth-fixed coordinate system is obtained using appropriate transformations. Finally, for a capsule moving in the vertical plane, we get:

Numerical integration of the capsule’s flight trajectory was performed using the fourth-order Runge-Kutta algorithm. We thus obtained the capsule’s position for each moment in time.
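The integration step can be illustrated with a minimal sketch of the classical fourth-order Runge-Kutta scheme. It is applied here to a simplified point-mass model with quadratic drag, constant air density, and no wind, which is an assumption made for brevity and not the capsule model with wind-tunnel coefficients used in this work. All parameter values (`mass`, `area`, `cd`, `dt`) and function names are hypothetical.

```python
import math

G = 9.81     # gravitational acceleration, m/s^2
RHO = 1.225  # air density, kg/m^3 (assumed constant)


def derivatives(state, mass, area, cd):
    """Point-mass dynamics with quadratic drag opposing the velocity vector."""
    _, _, vx, vh = state
    speed = math.hypot(vx, vh)
    k = 0.5 * RHO * area * cd * speed / mass  # drag acceleration per unit velocity
    return (vx, vh, -k * vx, -G - k * vh)


def rk4_step(state, dt, *params):
    """One classical fourth-order Runge-Kutta step."""
    def shifted(s, k, f):
        return tuple(si + f * ki for si, ki in zip(s, k))

    k1 = derivatives(state, *params)
    k2 = derivatives(shifted(state, k1, dt / 2.0), *params)
    k3 = derivatives(shifted(state, k2, dt / 2.0), *params)
    k4 = derivatives(shifted(state, k3, dt), *params)
    return tuple(
        s + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def simulate_drop(v0, theta0_deg, h0, mass=15.0, area=0.03, cd=0.3, dt=0.001):
    """Integrate from release at height h0 > 0 to ground impact.

    Returns (range r_k, flight time t_k, impact speed V_k).
    theta0_deg is the release angle, positive upward.
    """
    theta = math.radians(theta0_deg)
    state = (0.0, h0, v0 * math.cos(theta), v0 * math.sin(theta))
    t = 0.0
    while True:
        new_state = rk4_step(state, dt, mass, area, cd)
        if new_state[1] <= 0.0:
            # Linear interpolation within the last step to the ground crossing
            frac = state[1] / (state[1] - new_state[1])
            x, _, vx, vh = (s + frac * (n - s) for s, n in zip(state, new_state))
            return x, t + frac * dt, math.hypot(vx, vh)
        state, t = new_state, t + dt


print(simulate_drop(40.0, 0.0, 100.0))
```

Setting `cd=0.0` reduces the model to the drag-free case, which offers a quick check against the closed-form projectile solution.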

In summary, the proposed system for determining initial parameters for the pilot or operator can be used as a supporting system for the precise cargo airdrop process. Incorrect determination of the initial airdrop conditions can result in mission failure or difficulties in its execution. Most importantly, the proposed system takes into account the dynamics of the dropped capsule, which reflects its actual behavior.

For the assumptions made, the range of the capsule can be determined in the form of:

where:

are the coordinates of the end point (cargo drop),

are the coordinates of the initial point.

It should be noted that the available literature lacks specific data on airdrop speeds for packages containing medicines, firefighting equipment, or measurement capsules. Therefore, a rather broad range of drop speeds for the carrier was adopted in the research, falling within the interval of m/s. Additionally, in the research, an airdrop angle for such cargo was adopted, which directly refers to the pitch angle of the object that will be transporting this cargo.

The total mass of the capsule, including its contents, was assumed not to exceed 15 kilograms. Meteorological conditions and the necessity of defining aerodynamic characteristics for each falling object type further complicate accurate delivery. From the standpoint of precision and reducing the risk of uncontrolled cargo damage upon impact, the drop altitude should be kept as low as possible. As indicated in

Table 1, the height of the cargo drop is within the range

. Moreover, the UAV’s velocity at the moment of release is a key factor affecting delivery accuracy. The presented studies assumed that

[

41].

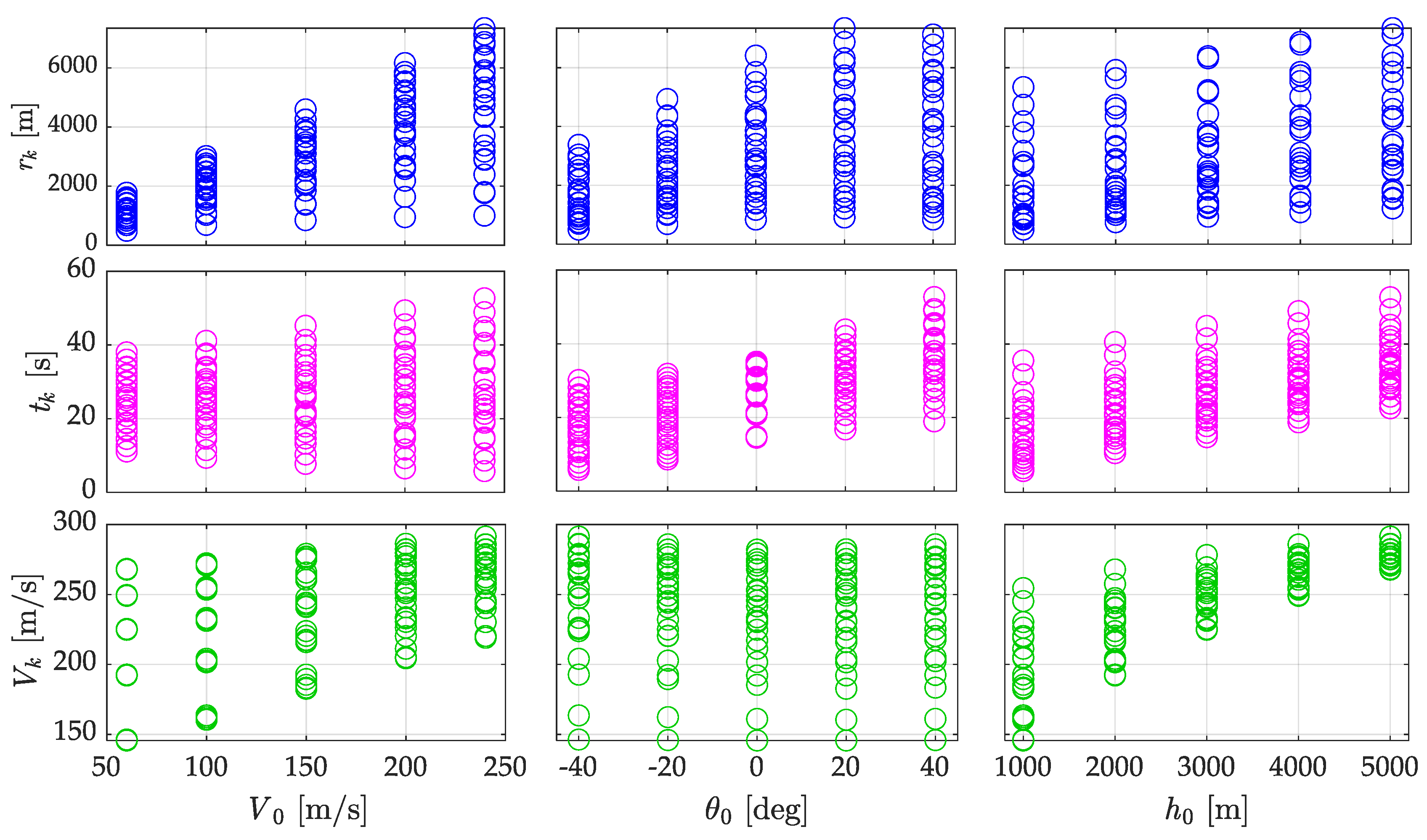

Table 1 presents the reference dataset consisting of 125 data samples used in the research process. It includes both the adopted input parameters — initial velocity, angle, and altitude — as well as the corresponding computed output values, namely horizontal range, flight time, and impact velocity. As indicated in footnote¹, the input parameters were divided into four distinct ranges, which allowed for their structured distribution in preparation for analysis and neural network training conducted in Stage 2.
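A structured input set of this size can be generated as a full-factorial grid. The sketch below assumes that the 125 samples correspond to five levels per input (five levels bound four ranges, and 5 × 5 × 5 = 125), which the description suggests but does not state outright. The numeric bounds are placeholders and are not the values from Table 1; `linspace` and `build_input_grid` are hypothetical helper names.

```python
from itertools import product


def linspace(start, stop, count):
    """Return `count` evenly spaced values from start to stop inclusive."""
    step = (stop - start) / (count - 1)
    return [start + i * step for i in range(count)]


def build_input_grid(v_bounds, theta_bounds, h_bounds, levels=5):
    """Full-factorial grid: `levels` values per input give levels**3 samples."""
    return list(product(
        linspace(*v_bounds, levels),
        linspace(*theta_bounds, levels),
        linspace(*h_bounds, levels),
    ))


# Placeholder bounds (hypothetical, not taken from Table 1)
grid = build_input_grid((20.0, 60.0), (-10.0, 10.0), (50.0, 250.0))
print(len(grid))  # 125
```

Each tuple `(V0, θ0, h0)` in `grid` would then be passed to the trajectory model to obtain the corresponding outputs.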

Figure 3 shows scatter plots illustrating the relationships between the three input variables of the model and their corresponding output parameters. The 3×3 grid layout enables a clear analysis of how each input variable influences the computed results. Distinct patterns are visible: for example, the horizontal range rk increases with both the initial velocity V0 and the drop altitude h0, while the effect of the drop angle θ0 appears more complex. The color scheme was selected to highlight each output variable: blue for rk, magenta for tk, and green for Vk.

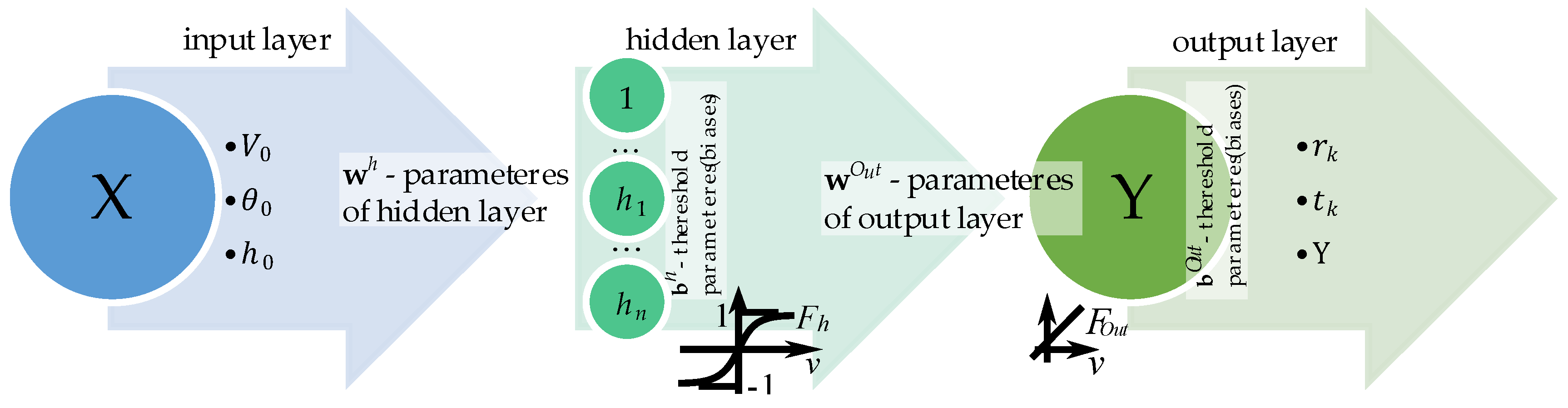

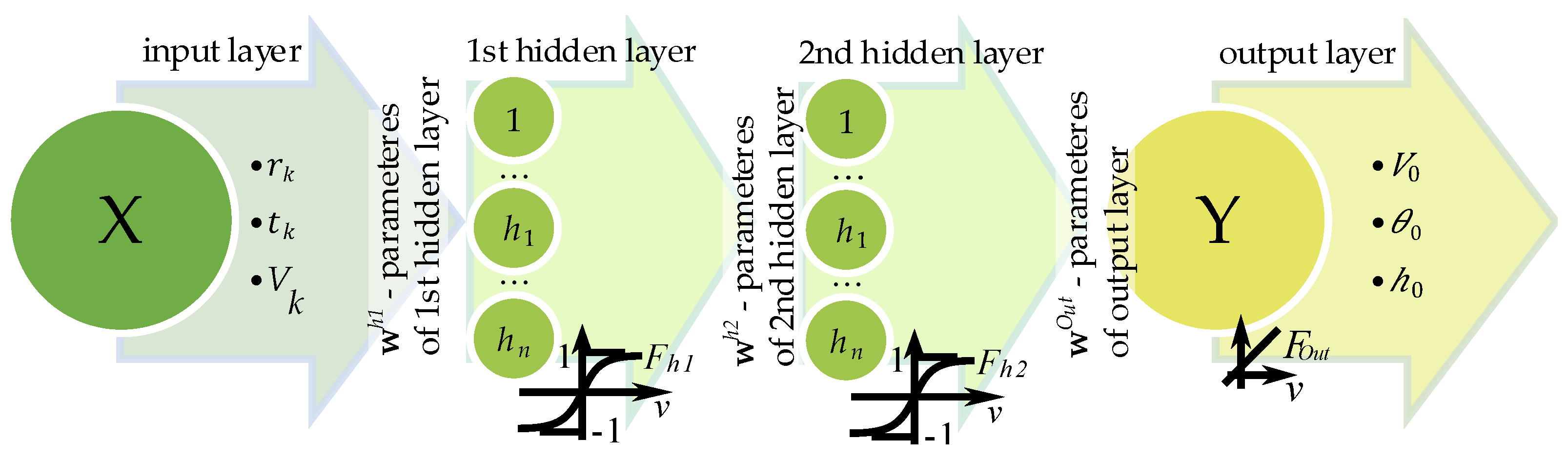

Stage 2: Simple Neural Network (NN1) in a Direct Approach

In this study, the same type of neural network, a backpropagation neural network, was applied in both Stage 2 and Stage 4 computations. Due to the structure of the training data, a 3 – H – 3 architecture was adopted in Stage 2, consisting of three neurons in the input layer, H neurons in the hidden layer, and three neurons in the output layer (Figure 3). In contrast, Stage 4 employed a deeper network with two hidden layers, following a 3 – H – H – 3 architecture (refer to Figure 4). The neurons in the input layer receive the input signals and transmit them to the hidden layers through synaptic weights. The hidden layer performs a nonlinear transformation of the input data, which enables the network to model complex nonlinear relationships between inputs and outputs. The output from the hidden layer is passed to the output layer, which generates the final result vector. The learning process is carried out using the backpropagation method, which minimizes the error function, typically by means of the gradient descent algorithm. During training, the derivatives of the error with respect to the weights are computed, and the weights are subsequently updated in the direction opposite to the gradient in order to reduce the prediction error. Neural networks of this type are commonly used for classification, regression, and pattern recognition tasks. A critical aspect of their design is the selection of the number of neurons in the hidden layer (H), which directly affects the network’s ability to generalize and to avoid overfitting. A network with a 3 – H – 3 architecture is an example of a multilayer perceptron (MLP). In general terms, the operation of the network can be expressed by the following formula:

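$$Y = f_{o}\left(w_{2}\, f_{h}\left(w_{1} X + b_{1}\right) + b_{2}\right) \qquad (12)$$

where:

X – input vector (e.g. control parameters, material parameters),

Y – output vector (e.g. observed system response, simulation result),

w, b – set of neural network parameters (weights and biases), with subscripts 1 and 2 denoting the hidden and output layers,

f_o(⋅), f_h(⋅) – activation functions of the output and hidden layer, respectively.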
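The mapping in Eq. (12) can be illustrated with a minimal NumPy sketch of one forward pass through a 3 – H – 3 network. The function name `mlp_forward`, the random weights, the choice H = 7, and the identity (linear) output activation are assumptions made for illustration only; the source does not state the output activation.

```python
import numpy as np

def mlp_forward(x, w1, b1, w2, b2):
    """Forward pass of a 3-H-3 perceptron.

    Hidden layer: tanh activation. Output layer: identity activation
    (an assumption, the paper does not specify it).
    """
    hidden = np.tanh(x @ w1 + b1)   # shape (n_samples, H)
    return hidden @ w2 + b2         # shape (n_samples, 3)

rng = np.random.default_rng(0)
H = 7                                        # hypothetical hidden-layer size
w1 = rng.normal(scale=0.5, size=(3, H))      # input -> hidden weights
b1 = np.zeros(H)                             # hidden biases
w2 = rng.normal(scale=0.5, size=(H, 3))      # hidden -> output weights
b2 = np.zeros(3)                             # output biases

# One already-scaled input vector (V0, theta0, h0), values are placeholders.
x = np.array([[0.2, -0.5, 0.9]])
y = mlp_forward(x, w1, b1, w2, b2)
print(y.shape)
```

With untrained random weights the output values carry no physical meaning; the sketch only shows how the three inputs are transformed into three outputs.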

At the beginning of Stage 2, the data computed in Stage 1 had to be appropriately preprocessed. Because the activation function used in the hidden layer, tanh(⋅), takes values in the range (−1, 1), the input data also needed to be scaled to this interval. Tanh is defined by the formula:

$$\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}} \qquad (13)$$

This function (13) is commonly used in neural networks due to its normalization properties and its ability to model nonlinear dependencies. For more complex problems, advanced activation functions may be applied, such as the Universal Activation Function (UAF), see e.g. [42]. The scaling factors and the adjusted data ranges are presented in Table 2.
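As an illustration of this preprocessing step, the sketch below maps a variable linearly onto [−1, 1] and back. A linear min-max mapping is an assumption; the actual scaling factors used in the study are those listed in Table 2, and the altitude values here are placeholders.

```python
import numpy as np

def scale_to_unit_interval(x, x_min, x_max):
    """Linearly map values from [x_min, x_max] onto [-1, 1]."""
    return 2.0 * (x - x_min) / (x_max - x_min) - 1.0

def unscale(x_scaled, x_min, x_max):
    """Inverse mapping from [-1, 1] back to physical units."""
    return (x_scaled + 1.0) * (x_max - x_min) / 2.0 + x_min

# Hypothetical drop altitudes in metres (not values from the study).
h0 = np.array([500.0, 2500.0, 4500.0])
h0_scaled = scale_to_unit_interval(h0, h0.min(), h0.max())
h0_back = unscale(h0_scaled, h0.min(), h0.max())
print(h0_scaled, h0_back)
```

The inverse mapping matters for the later stages, because the results are compared in the physical (unscaled) domain.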

To predict the resulting free-flight parameters of the air-dropped packages, a backpropagation neural network with a 3 – H – 3 architecture was implemented (Figure 4). This network is referred to as NN1 throughout the study.
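The training of such a network by backpropagation with gradient descent can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function `train_mlp`, the learning rate, the number of epochs, the linear output layer, and the synthetic training data are all hypothetical.

```python
import numpy as np

def train_mlp(x, t, hidden=7, lr=0.05, epochs=2000, seed=0):
    """Full-batch gradient descent for a network with one tanh hidden layer
    and a linear output layer. Returns the parameters and the MSE history."""
    rng = np.random.default_rng(seed)
    w1 = rng.normal(scale=0.5, size=(x.shape[1], hidden))
    b1 = np.zeros(hidden)
    w2 = rng.normal(scale=0.5, size=(hidden, t.shape[1]))
    b2 = np.zeros(t.shape[1])
    history = []
    for _ in range(epochs):
        a = np.tanh(x @ w1 + b1)              # hidden activations
        y = a @ w2 + b2                       # network output
        err = y - t
        history.append(float(np.mean(err ** 2)))
        # Backpropagation: derivatives of the MSE with respect to each parameter.
        g_y = 2.0 * err / err.size
        g_w2 = a.T @ g_y
        g_b2 = g_y.sum(axis=0)
        g_z = (g_y @ w2.T) * (1.0 - a ** 2)   # tanh'(z) = 1 - tanh(z)^2
        g_w1 = x.T @ g_z
        g_b1 = g_z.sum(axis=0)
        # Update in the direction opposite to the gradient.
        w1 -= lr * g_w1
        b1 -= lr * g_b1
        w2 -= lr * g_w2
        b2 -= lr * g_b2
    return (w1, b1, w2, b2), history

# Synthetic, already-scaled data standing in for the Stage 1 results.
rng = np.random.default_rng(1)
x = rng.uniform(-1.0, 1.0, size=(200, 3))
t = np.column_stack([x[:, 0] * x[:, 1], np.sin(x[:, 2]), 0.5 * x[:, 0] - 0.2 * x[:, 2]])
params, history = train_mlp(x, t)
print(f"MSE: {history[0]:.4f} -> {history[-1]:.4f}")
```

Plain gradient descent is used here only because it is the variant named in the text; practical implementations often rely on faster optimizers.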

The input layer consisted of a three-element vector representing the predefined flight assumptions: V0, θ0, h0. The number of neurons H in the hidden layer was determined adaptively. During the preliminary computational phase, multiple network configurations were evaluated, with H varying from 4 to 16. It was observed that for H ≥ 7, the learning and testing errors, measured using Mean Squared Error (MSE), satisfied the predefined accuracy threshold set by the authors. Consequently, this network architecture was selected for further analysis (see Figure 3). The MSE formula is expressed as follows:

$$\mathrm{MSE} = \frac{1}{N}\sum_{i=1}^{N}\left(t_{i} - y_{i}\right)^{2} \qquad (14)$$

where:

N – number of pairs of data, t_i – reference data, y_i – computed values.
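The error measure and the adaptive choice of H can be sketched as below. The helper `smallest_admissible_h`, the threshold, and the error values in the dictionary are placeholders chosen for illustration; they are not results from the study.

```python
import numpy as np

def mse(reference, computed):
    """Mean squared error between reference and computed values."""
    reference = np.asarray(reference, dtype=float)
    computed = np.asarray(computed, dtype=float)
    return float(np.mean((reference - computed) ** 2))

def smallest_admissible_h(errors_by_h, threshold):
    """Return the smallest H whose training and testing MSE both meet the threshold."""
    for h in sorted(errors_by_h):
        train_err, test_err = errors_by_h[h]
        if train_err <= threshold and test_err <= threshold:
            return h
    return None

# Placeholder (training MSE, testing MSE) pairs, NOT values from the paper.
errors_by_h = {4: (1e-2, 1e-2), 7: (1e-4, 1e-4), 16: (1e-4, 1e-4)}
chosen_h = smallest_admissible_h(errors_by_h, threshold=1e-3)
example_error = mse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
print(chosen_h, example_error)
```

Preferring the smallest admissible H keeps the number of parameters low, which is one common way to limit overfitting.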

Table 3 presents the Mean Percent Error (MPE) and Mean Squared Error (MSE, see Eq. (14)) for both the training and testing phases of the adopted network, evaluated in the normalized input space scaled to the range [–1, 1]. The results indicate a high-quality fit to the training dataset, confirming the network’s ability to accurately model the underlying functional relationships.

The trained neural network (NN1) serves a crucial role in the subsequent analytical phase, where it will be efficiently utilized to generate a comprehensive dataset. This dataset will then constitute the training set for NN2, which is designed to solve the inverse problem.

Stage 3: Generating a Big Data Set Using NN1

At this Stage, the formulated neural network NN1 was employed to solve the direct problem. To generate the dataset, the input variables V0, θ0, and h0 were once again used to compute the corresponding output set rk, tk, and Vk by means of the NN1 network. The adopted values were divided into 20 or 22 ranges.

In total, 8800 reference data sets were generated in this manner, with the entire computational process taking 0.218889 seconds. The range of the input values used, as well as the resulting output values, was consistent with those presented in Table 1.
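A dense input grid of this size can be built as in the following sketch. The numeric ranges and the assignment of 22 divisions to h0 are assumptions (the actual ranges are those of Table 1); only the total of 20 × 20 × 22 = 8800 combinations follows from the text.

```python
import numpy as np

# Hypothetical input ranges; the study's actual ranges are listed in Table 1.
v0 = np.linspace(100.0, 300.0, 20)       # initial velocity [m/s]
theta0 = np.linspace(-30.0, 30.0, 20)    # drop angle [deg]
h0 = np.linspace(500.0, 5000.0, 22)      # drop altitude [m]

# Cartesian product of the three axes: one row per (V0, theta0, h0) combination.
V, T, A = np.meshgrid(v0, theta0, h0, indexing="ij")
grid = np.column_stack([V.ravel(), T.ravel(), A.ravel()])
print(grid.shape)  # (8800, 3)
```

Each row of `grid` would then be scaled and passed through the trained NN1 to obtain the corresponding rk, tk, and Vk.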

Figure 5 presents an example of the data structure for the variable rk, shown as a function of the input parameters V0 and θ0. The plot illustrates how the generated dataset is distributed with respect to the original reference data in the input space. As shown, both datasets span the same input domain; however, the network-generated data exhibits a significantly higher density in the input space. This increased granularity provides a richer representation of the feature space, enabling more effective training of the inverse model (NN2). The clear continuity and smoothness of the generated surface also confirm the stability and generalization capability of the NN1 network across the considered input range.

3. Results

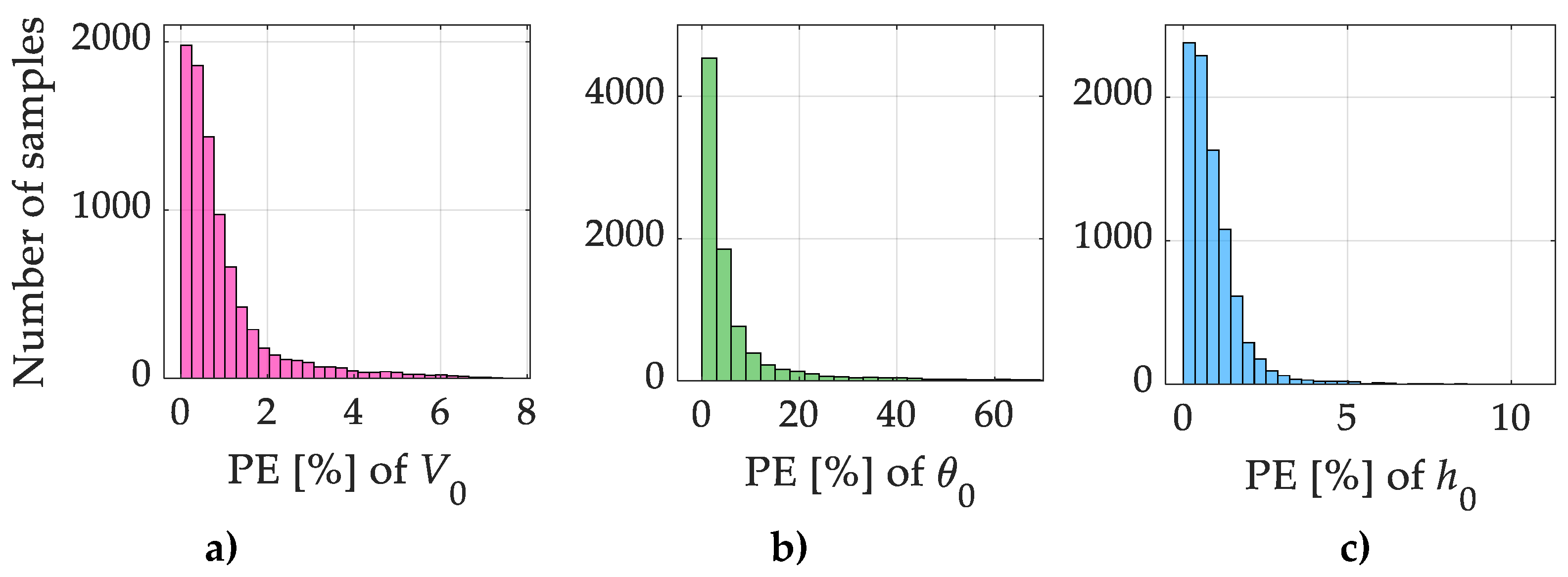

The primary objective of the analysis was to determine whether it is possible to reliably estimate the corresponding initial conditions (initial velocity, release angle, and drop height) based on the known and expected range of a dropped object. Two test scenarios were conducted, differing in their approach to defining the input data for the neural network NN2.

Case 1 – deterministic verification: in this scenario, all input variables of the network (range, flight time, and final velocity) are taken from the reference dataset (Stage 1), and the outputs generated by NN2 (V0.NN, θ0.NN, h0.NN) are compared to the corresponding values from the reference set to assess their accuracy. The errors are calculated by comparing the original data (Stage 1) with the outputs of the neural network NN2.

Case 2 – verification using random data sets: the input data for the network are sampled from predefined intervals based on a discrete uniform distribution. The results obtained from NN2 are verified by analyzing the final parameters after conducting simulations using the estimated initial conditions.

In all cases, the results are compared in the physical (unscaled) domain, which allows for a direct assessment of their practical applicability. It is assumed that, under real-world conditions, the range value is determined based on data acquired from a GPS system.

3.1. Case 1

In this test, six data sets were selected from the training set generated in Stage 1 for verification using the NN2 (3 – 6 – 6 – 3) network, which performs the inverse task. It should be noted that these data sets overlap with those used for training the NN1 network. As shown in Table 5, this approach allows for achieving relatively low errors. In the selected set, the highest relative percentage error (EP) was 14.47% and occurred in only one case, concerning the release angle.

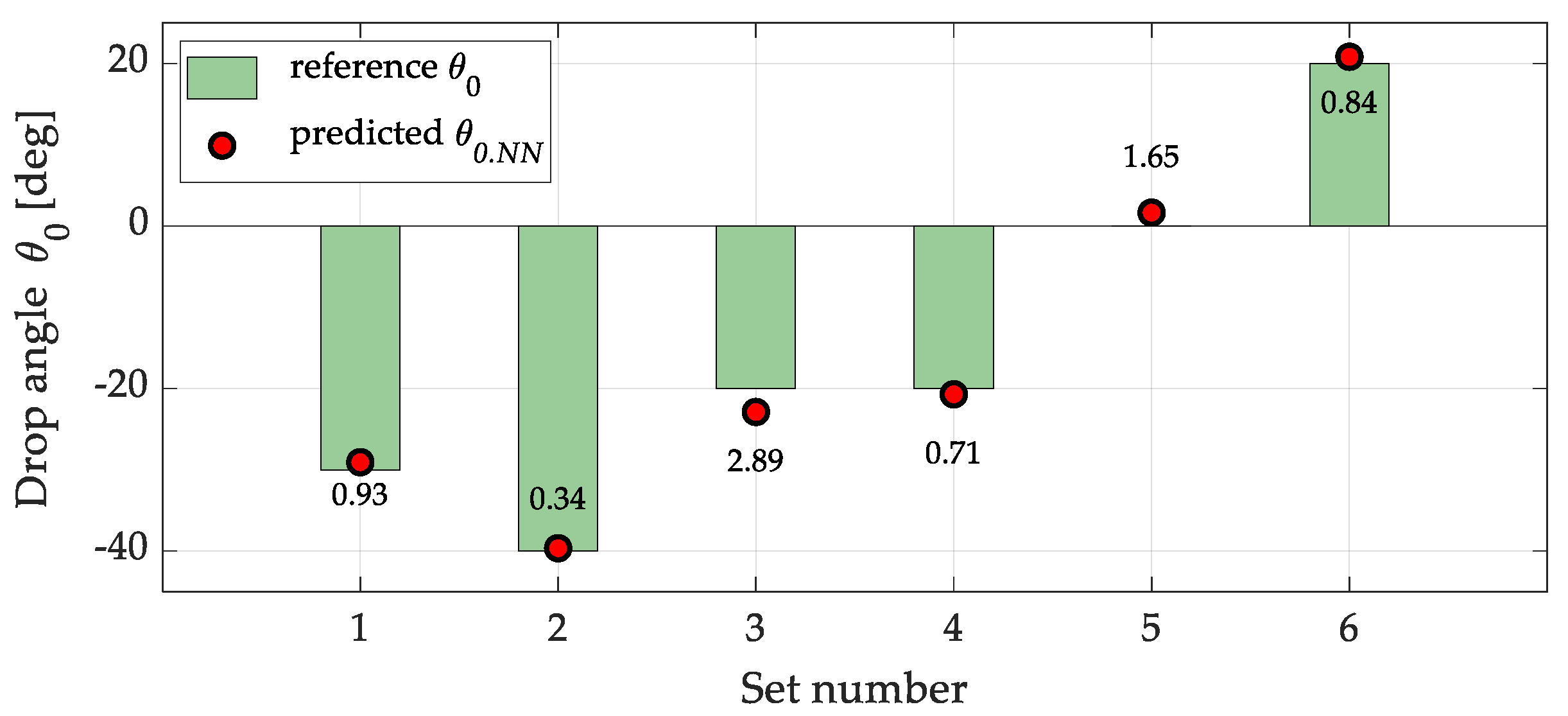

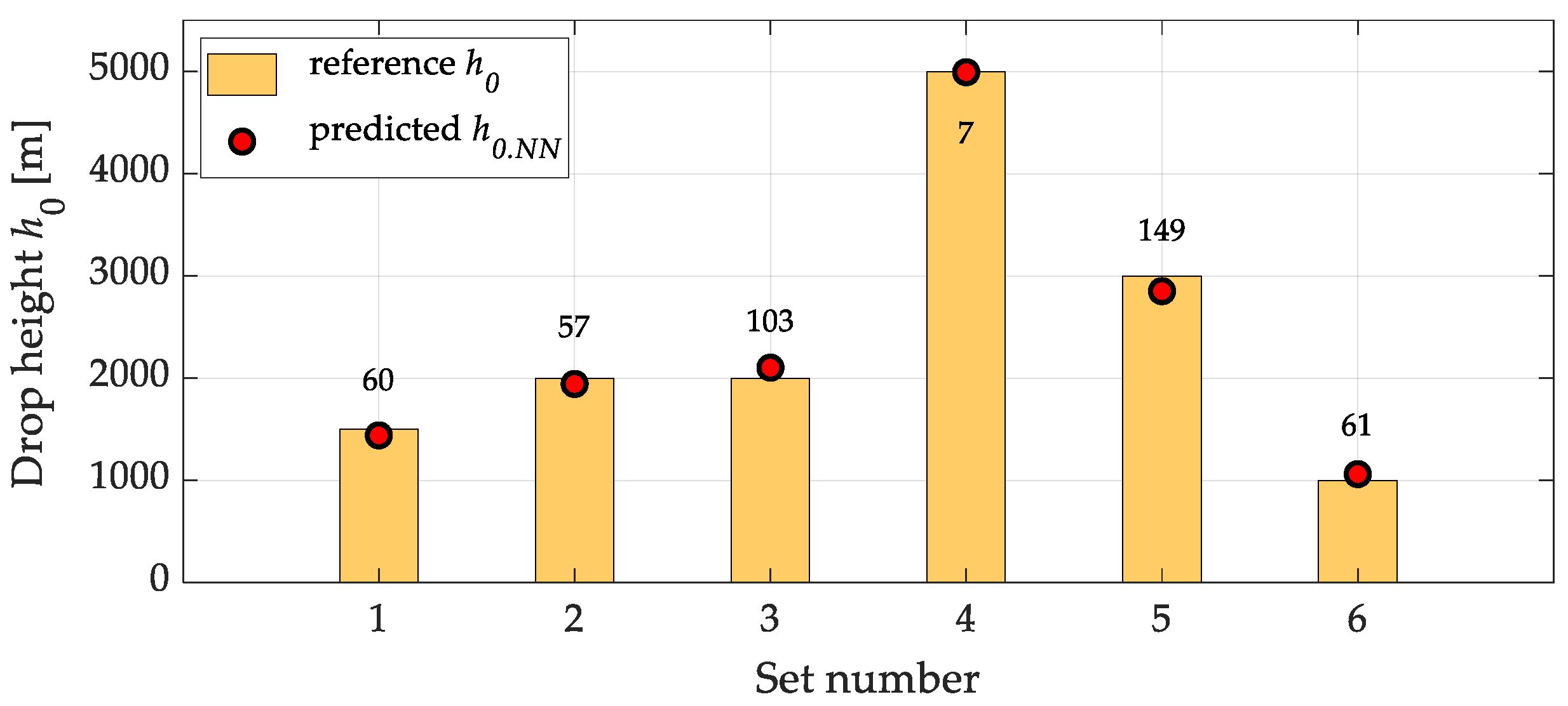

The results presented in Table 5 were grouped according to three analyzed parameters: initial speed, drop angle, and drop height. To further illustrate the neural network’s performance, a separate plot was generated for each of these groups, comparing the reference values with the network’s predicted values. For the initial speed plot (Figure 8), the discrepancies observed between the actual data and the neural network predictions are minimal, not exceeding a few units. This demonstrates the model’s high accuracy in estimating the initial parameters: V0.NN, θ0.NN, h0.NN.

For the drop angle plot (Figure 9), only minor discrepancies are observed. Despite the greater variability of this parameter in the data set, the predicted values remain close to the reference data, indicating good generalization capability of the model.

The drop height plot (Figure 10), on the other hand, shows that even for larger magnitudes (on the order of thousands of meters), the absolute errors remain relatively small. This indicates that the network can effectively reproduce the data across the entire range of drop heights.

In summary, the differences between the reference data and the predictions are minor in the context of the overall scale of the respective parameters, which confirms the high quality of the predictions generated by the applied neural network NN2.

3.2. Case 2

In the Type II test scenario (Case 2), 18 input data sets were generated for the inverse neural network (NN2). Each set included three key final parameters describing the course of an airdrop operation: the delivery range rk.rand, the fall time tk.rand, and the final velocity at touchdown Vk.rand. These data were generated randomly based on a discrete uniform distribution, meaning that each integer within a given interval had an equal probability of being selected. The applied intervals were as follows:

rk.rand ∈ ⟨492, 7347⟩ [m],

tk.rand ∈ ⟨5, 53⟩ [s],

Vk.rand ∈ ⟨145, 292⟩ [m/s].

The use of a uniform distribution ensures the absence of any preference toward specific regions of the input space, which is particularly important in analyses aimed at evaluating the model’s response across the full spectrum of possible operational scenarios, without implicit assumptions regarding their likelihood [47, 48].
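The sampling of the 18 test inputs from the intervals listed above can be sketched with NumPy's random generator. The seed and the independent sampling of the three variables are assumptions; the text does not state how the draws were implemented.

```python
import numpy as np

rng = np.random.default_rng(42)   # arbitrary seed, for reproducibility only
n_samples = 18

# endpoint=True makes the upper bound inclusive, so every integer in the
# closed interval is equally likely (discrete uniform distribution).
rk_rand = rng.integers(492, 7347, size=n_samples, endpoint=True)   # range [m]
tk_rand = rng.integers(5, 53, size=n_samples, endpoint=True)       # fall time [s]
vk_rand = rng.integers(145, 292, size=n_samples, endpoint=True)    # final velocity [m/s]

samples = np.column_stack([rk_rand, tk_rand, vk_rand])
print(samples.shape)  # (18, 3)
```

Because the three values are drawn independently in this sketch, some triples may not correspond to any physically feasible trajectory.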

Inverse problems—where the final parameters are known and the initial conditions are sought—are generally more challenging to solve than problems based on direct cause-effect relationships. In the classical forward approach (e.g., V0, θ0, h0 → rk, tk, Vk), a single set of input values typically leads to a unique solution. In the inverse direction (i.e., rk, tk, Vk → V0, θ0, h0 ), ambiguities may arise, as different initial conditions can produce similar final outcomes. Moreover, for certain combinations of final values, a physically feasible solution may not exist at all (e.g., an unrealistically short time relative to the range, or a negative drop height).

The data used in the training process (for both NN1 and NN2) do not uniformly cover the entire space of possible final parameters. This implies the existence of substantial regions within the input space for which the neural network was not previously exposed to reference data. Random sampling may inadvertently target such "gaps," increasing the risk of extrapolation beyond known regions, which can lead to inaccurate or unreliable results.

For each test sample, the minimum absolute difference (distance) between the randomly generated value and the closest value in the training dataset was calculated—separately for each parameter: rk, tk and Vk. This enabled an estimation of how "close" the test data were to the known (training) data.

The computational scheme illustrating the data flow in the analyzed scenario was as follows:

(rk.rand, tk.rand, Vk.rand) → NN2 → (V0.NN, θ0.NN, h0.NN) → simulation → (rk.new, tk.new, Vk.new)

The initial parameters predicted by the inverse neural network — V0.NN, θ0.NN, h0.NN — did not have assigned reference values. As a result, it was not possible to directly compare them with the real reference values. Their quality was assessed indirectly—by analyzing the resulting final values rk.new, tk.new, Vk.new obtained from a simulation based on the initial parameters returned by NN2.
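The indirect verification described here (target final values, inverse prediction, forward simulation, comparison) can be sketched as follows. Both models in this sketch are stand-ins and are assumptions: a drag-free point-mass trajectory replaces the study's flight simulation, and its closed-form inverse replaces NN2. All function names are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration [m/s^2]

def forward_vacuum(v0, theta0_deg, h0):
    """Stand-in forward model: drag-free point mass released at height h0."""
    vx = v0 * math.cos(math.radians(theta0_deg))
    vy = v0 * math.sin(math.radians(theta0_deg))
    tk = (vy + math.sqrt(vy ** 2 + 2.0 * G * h0)) / G   # time to reach the ground
    return vx * tk, tk, math.sqrt(v0 ** 2 + 2.0 * G * h0)

def inverse_vacuum(rk, tk, vk):
    """Stand-in for NN2: closed-form inverse of the drag-free model."""
    vx = rk / tk
    vy_final = -math.sqrt(vk ** 2 - vx ** 2)   # vertical velocity at touchdown (downward)
    vy0 = vy_final + G * tk
    h0 = 0.5 * G * tk ** 2 - vy0 * tk
    return math.hypot(vx, vy0), math.degrees(math.atan2(vy0, vx)), h0

def verify(target, inverse_model, forward_model):
    """Run target -> inverse -> forward and return the percentage errors."""
    initial = inverse_model(*target)
    new = forward_model(*initial)
    errors = tuple(100.0 * abs(n - t) / abs(t) for n, t in zip(new, target))
    return initial, new, errors

target = (2000.0, 15.0, 200.0)   # hypothetical (rk, tk, Vk)
initial, new, errors = verify(target, inverse_vacuum, forward_vacuum)
print(initial, errors)
```

With an exact inverse the errors vanish up to rounding; with a learned inverse such as NN2 the same comparison yields the non-zero errors analyzed in this section. The stand-in inverse also fails (square root of a negative number) when Vk is smaller than rk/tk, which is one example of a target with no feasible solution.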

Table 6 presents the data used in the Type II test scenario, in which the inverse neural network (NN2) receives randomly assigned values of the range variable (rk.rand) and is tasked with determining the initial conditions that lead to similar outcomes. The table compares these target values (rk.rand) with the corresponding values (rk.new) obtained through simulation, following the complete inverse prediction chain. Additionally, the table includes the percentage error, absolute error, and the minimum distance of each point from the nearest training sample (Min. Dev. of rk). The general formula for calculating the minimum deviation (Min. Dev.) of any variable x from the training set is given by:

$$\text{Min. Dev.}(x) = \min_{x_{i} \in T_{x}} \left| x_{\mathrm{rand}} - x_{i} \right|$$

where x_rand is the randomly generated test value and T_x denotes the set of training samples for the variable x.
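The minimum deviation of each test value from the training set can be computed as in this short sketch. The function name `min_deviation` and the sample values are hypothetical.

```python
import numpy as np

def min_deviation(x_rand, training_values):
    """Smallest absolute distance from each test value to any training value."""
    x_rand = np.atleast_1d(np.asarray(x_rand, dtype=float))
    training_values = np.asarray(training_values, dtype=float).ravel()
    # Pairwise distances: rows are test values, columns are training values.
    distances = np.abs(x_rand[:, None] - training_values[None, :])
    return distances.min(axis=1)

# Hypothetical range values in metres (not data from the study).
train_rk = np.array([500.0, 1200.0, 3000.0, 7000.0])
test_rk = np.array([520.0, 2000.0, 7347.0])
dev_rk = min_deviation(test_rk, train_rk)
print(dev_rk)  # distances of 20, 800 and 347 m
```

The measure is evaluated separately for each variable, so a sample can be close to the training data in rk while being far from it in tk or Vk.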

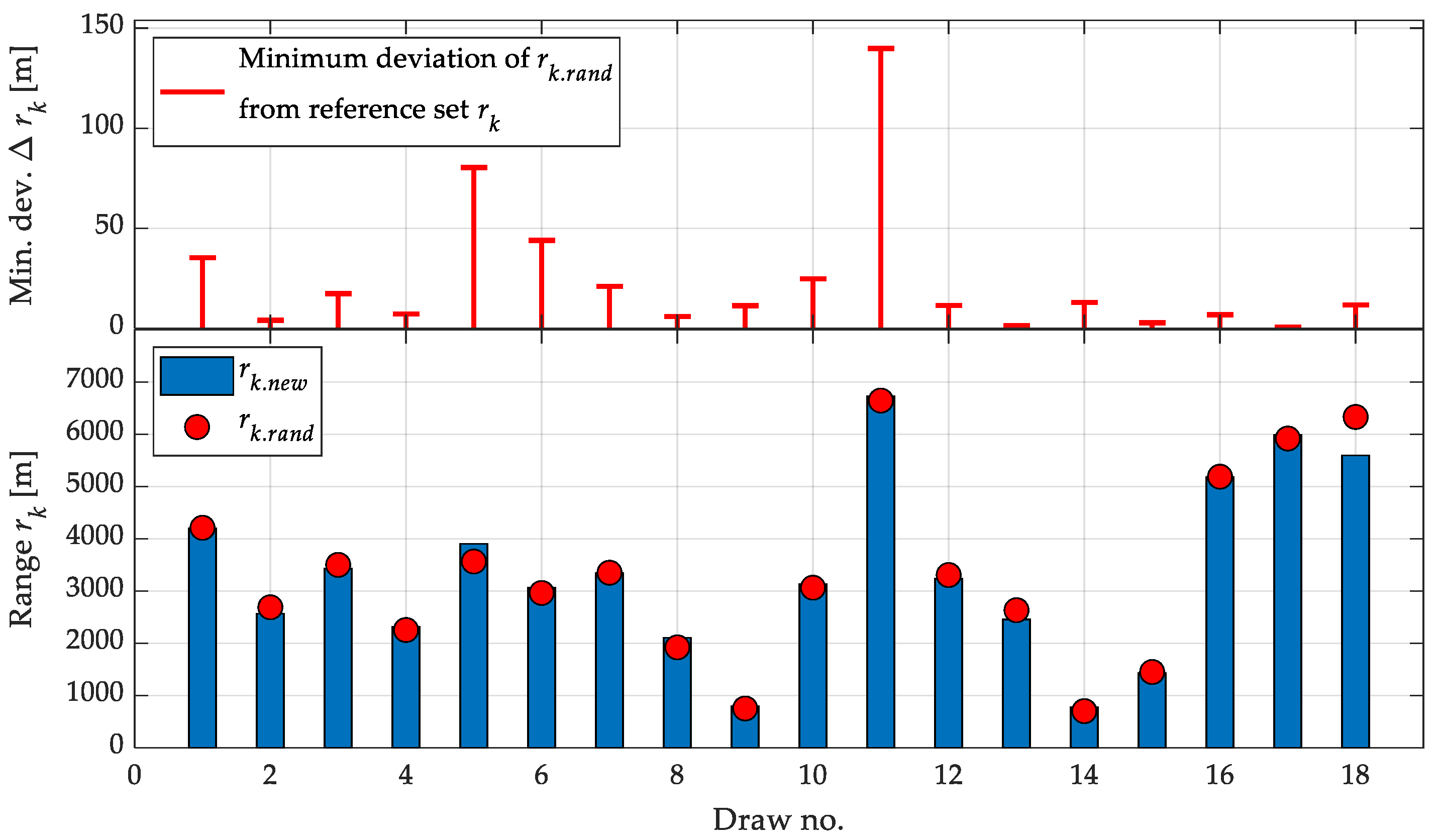


The data analysis indicates that the inverse neural network accurately reconstructs the parameters leading to the desired range in most cases. The average absolute error for the entire dataset is approximately 124.6 meters, while the average relative error is around 4.14%. Given the wide variability in range values (from several hundred to over 6000 meters), this can be considered a satisfactory result.

The best fitting results were obtained for test cases in which the minimum distance from the training data was small—below 10 meters (e.g., samples 1, 7 and 16). In contrast, the largest deviations occurred in cases where the test input data were significantly distant from the training points (e.g., sample 5: 80.4 meters from the nearest training sample and an error of 340.3 meters). Interestingly, some larger errors were also observed in samples with low Min. Dev. values, which may indicate local discontinuities or ambiguities in the inverse solution.

Figure 11 presents a graphical analysis of the performance accuracy of the inverse neural network in the Type II test scenario (Case 2). The lower plot illustrates a comparison between the randomly generated range values (rk.rand, red dots) and the corresponding rk.new values (bars) obtained through the inverse prediction chain. The horizontal axis represents the sample (draw) number, while the vertical axis shows the range value in meters.

The upper part of the plot illustrates the minimum distance (in meters) between each random sample rk.rand and the nearest training point from the original dataset. This provides insight into how far a given sample is from known training data, which may affect the prediction quality.

Table 7 presents a comparison between the randomly generated time-of-flight values (tk.rand), which served as input for Stage 4 of the inverse model, and the new reference values (tk.new) obtained through a two-step predictive process. The table also includes the absolute and percentage errors, as well as the minimum deviation of each sample from the nearest element in the training dataset (Δtk), allowing for an assessment of how far a given sample is from known cases within the network.

The results show that, for the majority of samples, the absolute differences between tk.rand and tk.new are small—typically below 1 second—indicating the model’s strong ability to reconstruct temporal parameters when the test data fall within the range represented by the training set. Exceptions to this pattern occur for samples located outside the main region of the training space, as confirmed by higher Δtk values. In such cases (e.g., samples 2, 8, and 18), the percentage errors exceeded 20%, with the maximum absolute deviation reaching 9.07 seconds (sample 18).

These results confirm that the inverse model exhibits high predictive accuracy when performing interpolation within well-represented regions of the training data space. However, in situations that require extrapolation into sparsely represented areas of the input space, the accuracy declines significantly, leading to increased prediction errors.

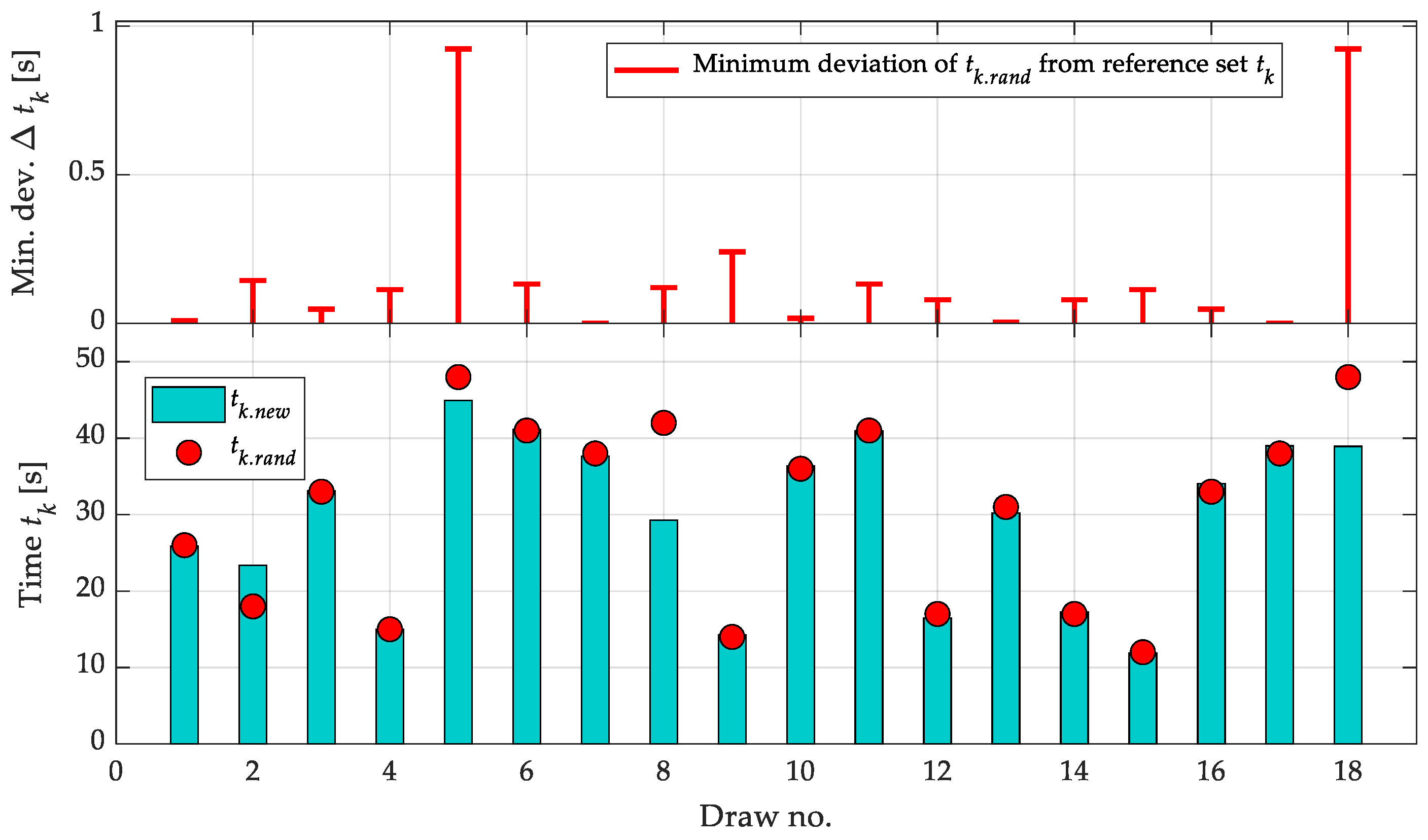

Figure 12 provides a graphical interpretation of the results presented in Table 7. The lower plot displays a comparison between the randomly generated time-of-flight values (tk.rand, red points) and the new reference values (tk.new, blue bars), obtained by the inverse model based on the predicted initial conditions. The horizontal axis corresponds to the sample (draw) number. The upper plot indicates the minimum deviation of each tk.rand value from the nearest training sample (Δtk), allowing for an estimation of how far each test sample deviates from the known range of the training data.

The plot reveals that, in most cases, the differences between tk.rand and tk.new are relatively small, indicating high-quality inverse prediction in the context of interpolation. However, in cases where tk.rand is located farther from the training points (e.g., samples 5, 8, and 18), significant prediction errors emerge—both in terms of absolute deviation and elevated Δtk values. This relationship confirms the strong influence of the representativeness of the training data on the effectiveness of inverse prediction.

Table 8 presents the results of a comparison between the randomly assigned values of the final contact velocity (Vk.rand) and their corresponding reference values (Vk.new), as determined by the inverse model. For each pair, the table also includes the relative and absolute errors, as well as the minimum deviation (Min. Dev. ΔVk) of the given random sample from the nearest value in the training dataset, providing a basis for assessing interpolation quality.

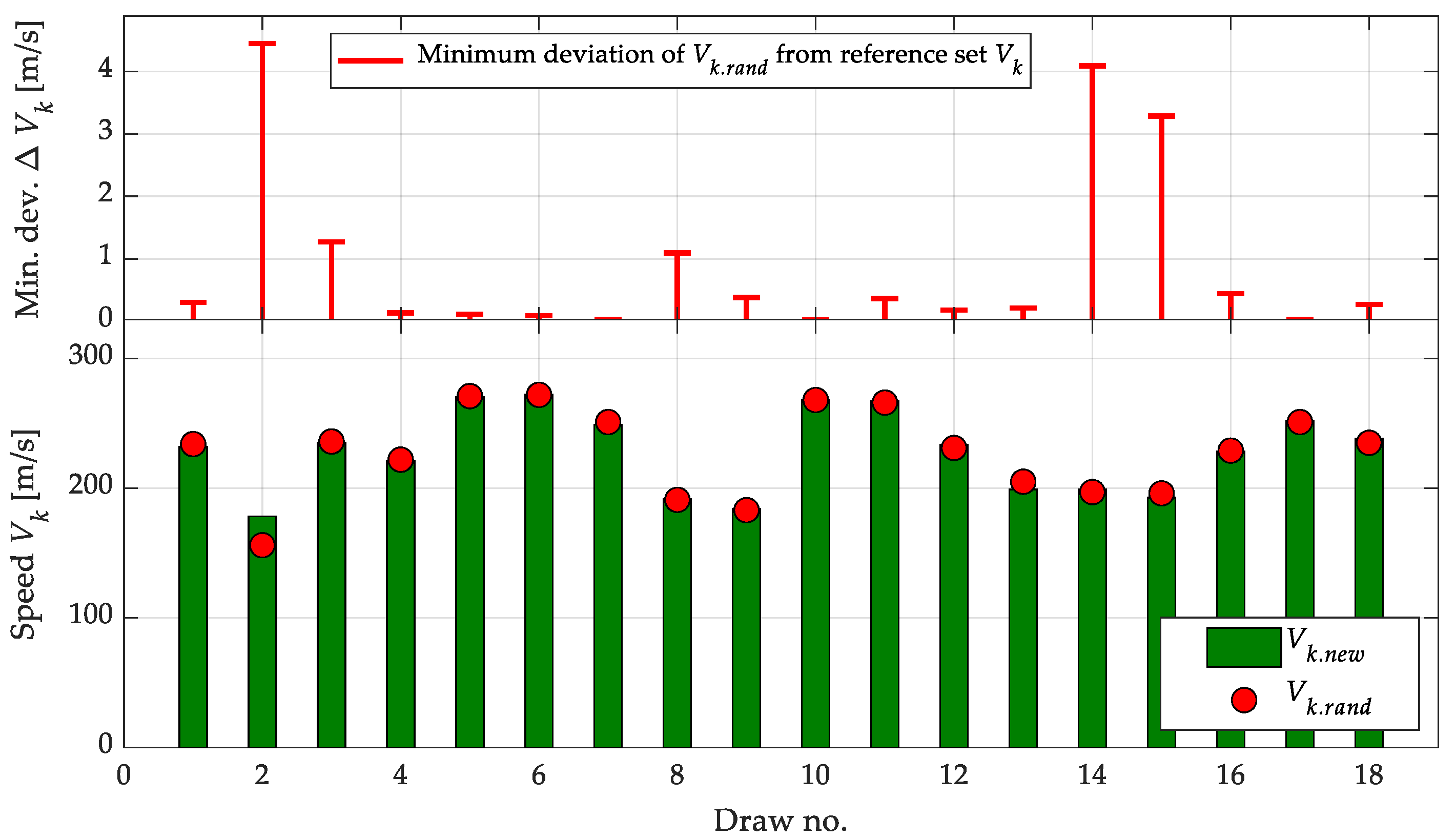

The analysis indicates that, for the vast majority of cases, the differences between Vk.rand and Vk.new are minimal—both the relative and absolute errors remain within a few percent. The largest deviations were observed in samples 2, 13, 14, and 15, where the relative error exceeded 3%, and Vk.rand was located farther from the reference data (e.g., Min. Dev. ΔVk = 4.45 m/s in row 2). In the remaining cases, the discrepancies fall within the range of measurement error or are negligible for practical applications.

The conclusions drawn from the table confirm the effectiveness of the inverse model’s predictions, provided that the training data space is sufficiently covered. The smaller the deviation from the existing training set, the higher the accuracy of the resulting predictions.

Figure 13 presents a comparison between the randomly generated final descent velocity values (Vk.rand, red dots) and their corresponding reference values (Vk.new, green bars), determined based on the predictions of the inverse model. The lower panel displays the values of both sets across 18 random samples, while the upper panel shows the minimum deviation of Vk.rand from the nearest point in the training dataset (ΔVk), indicating the degree of similarity between each test sample and the training data.

The plot indicates that, in most cases, the inverse model accurately reproduced Vk—the Vk.new values closely match Vk.rand, particularly where ΔVk was small. Noticeably larger prediction errors occurred only in a few instances (e.g., samples 1, 5, and 15), where the deviation from the training data was relatively substantial.

Table 9 presents the values of the initial conditions predicted by the inverse model, i.e., the initial velocity V0.NN, launch angle θ0.NN, and release altitude h0.NN, for each tested case. Since no direct reference data were available for these parameters, no quantitative error metrics are provided at this Stage. Nevertheless, these results constitute the core output of the neural prediction process and form the basis for the subsequent forward simulation and verification Stages.

Model NN2 demonstrates good performance in interpolation within regions that are well represented by the training data. However, the prediction quality significantly deteriorates for inputs located far from the training data space. The results presented in Case 2 confirm that the sampling density of the training set is crucial for the effectiveness of the inverse model.